Abstract

As research on biological unmanned aerial vehicle (UAV) swarm control has blossomed, professionals face increasing time and cognitive pressure in mastering the rapidly growing domain knowledge. Although recent general-purpose large language models (LLMs) may augment human cognitive capabilities, they still suffer from significant hallucination and interpretability issues in domain-specific applications. To address these challenges, this study designs and evaluates a domain-specific LLM for biological UAV swarm control using an enhanced Retrieval-Augmented Generation (RAG) framework. In particular, this study proposes an element-based chunking strategy to build the domain-specific knowledge base and develops novel hybrid retrieval and reranking modules to improve the classical RAG framework. This study also conducts both automatic and expert evaluations of the domain-specific LLM, demonstrating its advantages in accuracy, relevance, and human alignment.

1. Introduction

Large language models (LLMs) have attracted increasing attention in both academia and industry due to their remarkable performance in understanding and generating human-like text. These capabilities enable LLMs to assist in various knowledge-intensive tasks. However, applying general-purpose LLMs to complex domain-specific problems remains challenging: they often hallucinate and lack domain expertise [1,2]. These limitations become particularly evident in emerging interdisciplinary applications that demand high domain accuracy and professional interpretability.

A representative example is the domain of biological unmanned aerial vehicle (UAV) swarm control, where systems emulate behaviors observed in natural collectives to achieve decentralized coordination. For instance, bird flocks dynamically avoid collisions while maintaining cohesion [3]. Fish schools optimize group movement to evade predators [4]. Insect swarms efficiently allocate tasks through simple local rules [5]. Modeling and controlling such emergent behaviors require expertise to ensure effective coordination, communication, and decision-making among UAVs [6]. As the field expands, domain professionals face increasing cognitive burdens in keeping up with the rapidly evolving body of knowledge on swarm strategies, coordination algorithms, and their deployment in varied operational environments. This application scenario reveals a broader academic challenge in systematically adapting LLMs to achieve domain-accurate and hallucination-resilient performance in professional and interdisciplinary tasks.

Accordingly, this study proposes a domain-specific LLM tailored for biological UAV swarm control. This model leverages a domain-specific knowledge base and an enhanced Retrieval-Augmented Generation (RAG) framework [7] to provide accurate and professional solutions to domain experts. In particular, this study concentrates on the following three objectives:

- Designing an enhanced RAG framework with hybrid retrieval and reranking modules;

- Constructing a structured domain knowledge base by parsing multimodal documents using an element-based chunking strategy;

- Evaluating the proposed domain-specific LLM using both automatic metrics and expert feedback in the context of biological UAV swarm control.

This study highlights the potential of RAG with a domain knowledge base in augmenting general-purpose LLMs to address hallucination and interpretability issues in the field of biological UAV swarm control. By integrating RAG technology, the proposed model simplifies access to and comprehension of the extensive body of professional knowledge. By consolidating domain knowledge, the model also supports the development of biological UAV swarm systems and reduces cognitive and technical barriers for users, thereby accelerating innovation in this critical and rapidly evolving field.

The remainder of this paper is structured as follows. Section 2 reviews the relevant literature. Section 3 outlines the RAG framework and the proposed methodology. Section 4 describes the construction of the domain knowledge base. Section 5 presents the automatic experiments and expert evaluations of our domain-specific LLM. Finally, Section 6 concludes the paper with a summary of key findings and implications.

2. Related Work

This section surveys the current state of domain-specific LLMs and document chunking, which form the backbone of effective knowledge base development. This review sets the stage for the innovations introduced later in the paper.

2.1. Domain-Specific LLMs

With the continuous advancement of natural language processing technologies, general-purpose LLMs such as GPT-4o and DeepSeek have demonstrated exceptional capabilities in language tasks. However, as shown in Table 1, they still face limitations in terms of hallucinations and professional interpretability. To address these issues, domain-specific LLMs have emerged, focusing on specific industries and integrating professional knowledge to deliver more precise and specialized services.

Table 1.

Literature on LLM hallucination and interpretability.

Currently, there are two main frameworks for implementing domain-specific LLMs: fine-tuning and RAG. The fine-tuning framework further trains pre-trained models on domain-specific data; parameter-efficient methods such as Low-Rank Adaptation (LoRA) [11] improve the model’s adaptation to domain knowledge while reducing computational costs. However, this framework relies heavily on large amounts of labeled data and faces challenges such as limited generalizability and difficulty in integrating new knowledge [12].

In contrast, the RAG framework, with its efficiency and flexibility, has become a new direction for the development of domain-specific LLMs. RAG enhances content accuracy and timeliness by retrieving relevant information from local knowledge bases or the internet and combining it with a generative model to output answers. This framework is instrumental in scenarios requiring rapid responses to the latest developments and reduces the reliance on large amounts of labeled data.

Domain-specific LLMs have made significant breakthroughs in fields such as healthcare, law, and finance. For example, the WiNGPT2 project, by combining medical knowledge graphs, healthcare dialogue datasets, and professional fine-tuning methods, has significantly enhanced intelligent healthcare question-answering, diagnostic support, and treatment suggestions. Domain-specific LLMs in finance and law adopt comparable strategies to improve the professionalism and efficiency of their services. In the aviation industry, which is rich in complex and unstructured text data, labeled data for model training are scarce; the domain-specific AviationGPT leverages these domain data to offer versatile solutions for various NLP tasks with improved performance.

UAV swarms, with their enhanced capabilities and expanded coverage, are increasingly utilized in both civilian and military applications. Despite their potential, several challenges persist in UAV swarm control, including obstacle avoidance, formation control, and the need for real-time, adaptive, and independent operations based on local information [13,14,15]. Traditional methods often struggle with high computational complexity, slow response times, and reliance on global environmental data, making them unsuitable for large-scale and dynamic swarm operations. This is where domain-specific LLMs become indispensable. Unlike traditional UAV systems, which lack an integrated database, domain-specific LLMs are equipped with vast, specialized knowledge bases. These models can reference diverse information, from physics to swarm behavior, helping UAVs make well-informed decisions autonomously. This feature is particularly important in domains where human oversight is limited or impractical.

In summary, developing domain-specific LLMs for UAV swarm control is essential to enhance the performance, efficiency, and adaptability of large-scale UAV swarm control in complex and dynamic environments. These models are necessary tools to help people access domain-specific knowledge and generate solutions for complex UAV swarm control problems.

2.2. Document Chunking

Knowledge bases play an irreplaceable role in building domain-specific LLMs. Knowledge bases can be divided into structured and unstructured sources [16]. Structured knowledge sources, stored in tables or triples, are easy to query and process, while unstructured sources such as text documents and web pages are widely used in fields like healthcare and finance due to their flexibility and extensive coverage. However, with the surge in data, especially multimodal documents (e.g., PDFs, scanned documents), traditional question-answering systems solely focusing on text processing face significant challenges.

When domain-specific LLMs process documents with extensive content, their limited context window size often prevents them from grasping the overall meaning of the document [17]. To address this issue, text chunking techniques have been proposed, which break down long documents into smaller, more focused sections, enabling LLMs to process each part more accurately and build a more comprehensive and consistent understanding of the entire document.

Chunking is a technique for breaking large blocks of text into smaller, manageable segments, and it is crucial for improving the performance of domain-specific LLMs when querying vector databases. In particular, the RAG framework requires documents to be split into smaller chunks before vector embeddings are computed. This step improves the accuracy of content retrieval from the vector database by aligning the document’s structure with the model’s processing constraints.

The main goal of chunking is to strike a balance between preserving semantic coherence and minimizing noise within the embedded content. Proper chunking enables the retrieval of the most relevant document parts based on a user’s query. However, achieving this balance presents several challenges. On the one hand, if chunks are too large, they may include excessive irrelevant information, negatively impacting retrieval accuracy. On the other hand, if chunks are too small, essential contextual information might be lost, leading to incoherent or shallow responses. Thus, effective document chunking strategies are vital to ensure information completeness and relevance in the RAG framework.

There are several common methods for chunking, each with its strengths and limitations, suitable for different contexts:

- Fixed-size Chunking: In this method, text is divided into chunks of a predefined word count. It is a straightforward and computationally efficient approach, particularly in cases where semantic context is relatively uniform across the document. However, fixed-size chunking may fail to preserve important context or capture the logical structure of the document;

- Content-based Chunking: This approach divides text based on its inherent content, such as punctuation (e.g., periods or commas) or sentence boundaries. It is often used in combination with natural language processing tools like Natural Language Toolkit or spaCy for sentence segmentation. While content-based chunking can better respect natural language boundaries, it may still overlook document structure, which limits its utility in more complex tasks such as question-answering;

- Recursive Chunking: A more flexible and adaptive approach, recursive chunking applies chunking rules iteratively. For example, a document is first split by paragraph breaks (“\n” or “\r”), and each resulting chunk is checked against a predefined size. If a chunk is still too large, finer rules (e.g., sentence-level breaks) are applied to reduce its size further. Recursive chunking balances chunk size and context preservation, but designing precise rules that ensure effective segmentation across diverse document types remains a technical challenge;

- From Small to Large Chunking: This method involves generating chunks of varying sizes, from small to large, and storing them in a vector database with hierarchical relationships to support recursive search. While this technique allows the flexibility to handle different levels of granularity, it requires significantly more storage and may introduce redundancy due to the overlapping content across multiple chunk sizes;

- Special Structure Chunking: This approach involves creating custom chunkers tailored to specific document types (e.g., Markdown, LaTeX, or programming code). These chunkers aim to preserve the integrity of the document’s structure while breaking it into meaningful segments. Special structure chunking is ideal for handling highly structured content but requires significant effort to design chunkers for each document type and ensure they handle variations in format correctly.
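As a rough illustration of the first and third strategies, the sketch below implements fixed-size chunking (counted in whitespace-separated words, with optional overlap) and a simple recursive chunker. The separator list, the greedy re-merging of small neighbouring pieces, and all function names are assumptions chosen for illustration, not the element-based strategy proposed in this study.

```python
from typing import List, Sequence


def fixed_size_chunks(text: str, size: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of `size` words; neighbouring chunks share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # the last window already reached the end
            break
    return chunks


def recursive_chunks(text: str, max_words: int,
                     separators: Sequence[str] = ("\n\n", "\n", ". ", " ")) -> List[str]:
    """Split on the coarsest separator first and re-split only pieces that are still too long."""
    words = text.split()
    if len(words) <= max_words:
        return [text.strip()] if words else []
    if not separators:
        # No finer rule left: fall back to fixed-size splitting.
        return fixed_size_chunks(text, max_words)
    sep, finer = separators[0], separators[1:]
    pieces: List[str] = []
    for part in text.split(sep):
        pieces.extend(recursive_chunks(part, max_words, finer))
    # Greedily merge neighbouring pieces back together while they still fit.
    merged: List[str] = []
    for piece in pieces:
        if merged and len(merged[-1].split()) + len(piece.split()) <= max_words:
            merged[-1] = merged[-1] + sep + piece
        else:
            merged.append(piece)
    return merged


text = "Para one has five words.\n\nPara two is a bit longer than the first one."
print(recursive_chunks(text, 6))
```

The short first paragraph survives intact, while the longer one is pushed down to word-level splitting and then re-merged into pieces of at most six words, which mirrors the size-versus-context trade-off described above.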

Each chunking method has its advantages and trade-offs. The appropriate strategy depends on the specific task at hand, such as the complexity of the document or the computational limitations of the RAG system. Ongoing research is focused on improving document chunking techniques to enhance retrieval accuracy, maintain semantic integrity, and reduce the computational burden on domain-specific LLMs. Advances in combining document structure-aware chunking with efficient retrieval models hold great potential for developing smarter, more efficient question-answering systems powered by domain-specific LLMs.

3. Framework for Biological UAV Swarm Control Based on Retrieval-Augmented Generation (RAG)

To facilitate the understanding of our work, this section introduces the base model and details the methods for improving the model using hybrid retrieval and reranking.

3.1. The Base Model—Retrieval-Augmented Generation (RAG)

RAG represents a pivotal framework that enhances the quality and accuracy of domain-specific LLM outputs by integrating retrieval mechanisms with generative processes. When tasked with answering questions or generating text, RAG retrieves relevant information from a large document corpus and utilizes this retrieved knowledge to supplement the generative model. This method enables researchers to avoid retraining LLMs for every specific task, instead appending a dynamic knowledge base to provide additional context. Consequently, RAG is particularly well suited for knowledge-intensive tasks, reducing hallucinations and improving domain-specific outputs.

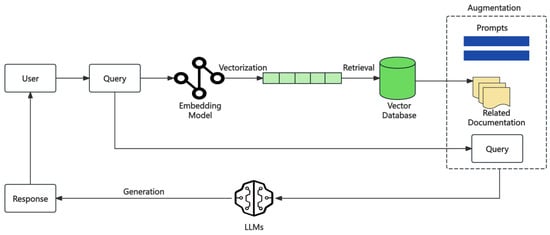

The classical RAG framework typically consists of three main stages: retrieval, augmentation, and generation. The whole process is shown in Figure 1. Based on the user’s query, relevant information is fetched from an external knowledge base. The query is transformed into a vector using an embedding model, and similarity searches are conducted within a vector database to identify the top K most relevant data points. Retrieval can also involve hybrid methods, combining keyword-based searches such as BM25 and vector-based searches like KNN, to enhance precision and recall. This hybrid approach compensates for limitations in each method, improving diversity and relevance. The retrieved relevant knowledge is then combined with the query and embedded into a predefined prompt template. This enriched input ensures that the generative model receives sufficient context for producing accurate and comprehensive outputs. The augmented prompt is subsequently fed into the base LLMs to generate the desired response, leveraging the enhanced contextual information.

Figure 1.

A classical RAG framework.
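The three stages can be sketched as a small pipeline. This is a minimal illustration under stated assumptions: the word-overlap `retrieve` function is a hypothetical stand-in for embedding-based similarity search, `PROMPT_TEMPLATE` is an invented example template, and `generate` is any callable that wraps an LLM (a black-box API call, for instance).

```python
from typing import Callable, List

# Hypothetical prompt template; real systems tune this wording carefully.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n"
    "Context:\n{context}\n\n"
    "Question: {query}\nAnswer:"
)


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Retrieval stand-in: rank chunks by word overlap with the query (no punctuation handling)."""
    q_words = set(query.lower().split())
    ranked = sorted(corpus, key=lambda c: len(q_words & set(c.lower().split())), reverse=True)
    return ranked[:k]


def augment(query: str, chunks: List[str]) -> str:
    """Augmentation: insert the retrieved chunks and the query into the prompt template."""
    context = "\n".join(f"- {c}" for c in chunks)
    return PROMPT_TEMPLATE.format(context=context, query=query)


def rag_answer(query: str, corpus: List[str], generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieval -> augmentation -> generation."""
    return generate(augment(query, retrieve(query, corpus, k)))


corpus = [
    "Boids use separation, alignment and cohesion rules.",
    "BM25 is a keyword ranking function.",
    "Fish schools evade predators collectively.",
]
# An identity "generator" makes the augmented prompt visible.
print(rag_answer("which rules do boids use", corpus, generate=lambda p: p, k=1))
```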

3.1.1. Retrieval Stage

When receiving a query input from a user, the retrieval stage aims to extract relevant information from an external knowledge base, which may be public or private. Information retrieval refers to the process by which users query and obtain relevant information from an information collection based on their needs. It is generally divided into broad information retrieval and narrow information retrieval. Common information retrieval models include the vector space model, the probabilistic retrieval model, and topic models.

The vector space model, proposed by Salton et al. in the 1970s and successfully applied in the SMART text retrieval system, is a classic similarity computation model [18]. It represents documents as vectors in a document space through feature selection and weight calculation, and document similarity is measured by calculating the similarity between vectors. The most commonly used vector space similarity metric for text processing is cosine similarity.
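A minimal sketch of the vector space model: each document becomes a sparse vector of TF-IDF weights (here tf × log(N/df), one of several common weighting variants, chosen as an assumption), and similarity is the cosine of the angle between two vectors.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf_vectors(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Map each tokenized document to a sparse {term: tf * log(N / df)} vector."""
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))  # document frequency per term
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append({t: c * math.log(n_docs / df[t]) for t, c in tf.items()})
    return vectors


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Cosine similarity of two sparse vectors; 0.0 if either vector is all zeros."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


docs = [
    ["swarm", "formation", "control"],
    ["swarm", "obstacle", "avoidance"],
    ["bird", "flock", "cohesion"],
]
vecs = tfidf_vectors(docs)
print(cosine(vecs[0], vecs[1]), cosine(vecs[0], vecs[2]))
```

The first two documents share the term "swarm" and therefore have a positive similarity, while the third shares no terms with the first and scores zero. A term occurring in every document receives zero weight under this IDF variant.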

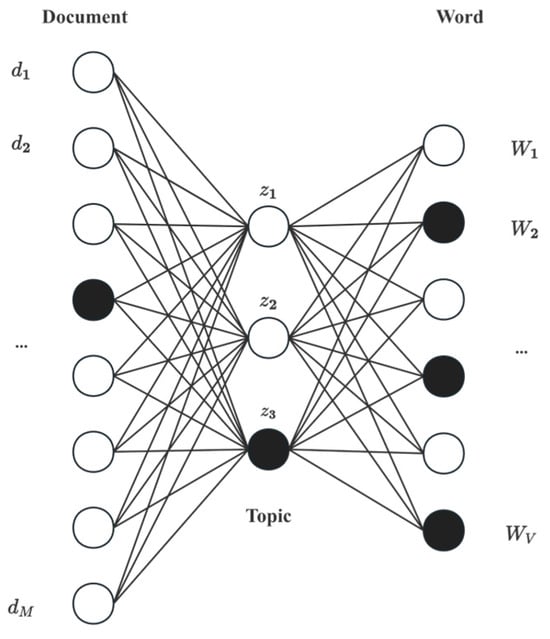

Topic models are statistical models that use unsupervised learning to cluster the implicit semantic structures of documents. They are primarily applied in semantic analysis and text mining and have been used in bioinformatics research. Topic models are typical bag-of-words models, as shown in Figure 2, and assume that a document is composed of multiple topics, with each topic being a set of words with no sequential relationship between words. A common topic model is Latent Dirichlet Allocation (LDA).

Figure 2.

Document–subject–word 3-layer model.

The probabilistic retrieval model is considered one of the most effective models in current information retrieval. Although it relies on probabilistic reasoning similar to Bayesian classification, its purpose is fundamentally different: rather than classifying query results, it ranks documents by their estimated relevance to the query.

Best Matching 25 (BM25), a classic probabilistic retrieval model, is widely applied in commercial search engines. BM25 builds on the term frequency-inverse document frequency (TF-IDF) model [19] and adds two adjustable parameters, k1 and b, which control “term frequency saturation” and “field length normalization”, respectively. These parameters adjust the influence of term frequency and document length on the weight calculation. Experimental evidence suggests that k1 = 1.2 and b = 0.75 produce reasonable results in most settings [20], and these values are commonly used as defaults.
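The roles of k1 and b can be seen by isolating BM25's term-frequency component, omitting the IDF factor. In this small sketch, using the standard BM25 form with the default k1 = 1.2 and b = 0.75, the weight rises from 1.0 at tf = 1 toward the ceiling k1 + 1 = 2.2 (saturation), and the same term frequency counts for less in a document longer than average (length normalization).

```python
def bm25_tf_weight(tf: float, doc_len: float, avgdl: float,
                   k1: float = 1.2, b: float = 0.75) -> float:
    """Term-frequency component of BM25 (the IDF factor is deliberately left out)."""
    return tf * (k1 + 1) / (tf + k1 * (1 - b + b * doc_len / avgdl))


# Saturation: for an average-length document, extra occurrences add less and less weight.
for tf in (1, 2, 5, 20, 100):
    print(tf, round(bm25_tf_weight(tf, doc_len=100, avgdl=100), 3))

# Length normalization: the same tf is worth less in a document twice the average length.
print(bm25_tf_weight(3, doc_len=200, avgdl=100), bm25_tf_weight(3, doc_len=100, avgdl=100))
```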

Currently, most retrieval methods in the RAG framework are based on vector similarity alone, and such a single retrieval path may limit the overall accuracy of the framework. Most efforts to improve accuracy instead focus on the generation stage, for example by adjusting LLM prompts or strengthening the generator. These efforts have a limited impact on overall accuracy, however, because if the context retrieved in the retrieval stage is irrelevant to the query, the answer will be inaccurate regardless of the generator. Therefore, finding the optimal retrieval method for the RAG framework remains an important research area.

3.1.2. Augmentation Stage

Augmentation is the key stage that integrates the retrieval and generation modules, playing a crucial role in the RAG framework. There are generally three main augmentation strategies: input-level fusion, output-level fusion, and intermediate-level fusion, which target different aspects of the generator:

- Input-level fusion: This method combines the retrieved documents with the original input to form a single sequence, which is then input into the generator. While effective, this approach is limited by the number of documents, as overly long sequences may exceed the model’s processing capacity. For domain-specific LLMs, input-level fusion can enhance zero-shot capabilities by automatically retrieving appropriate natural language prompts;

- Output-level fusion: During the prediction phase, the subsequent token distribution generated by the language model is combined with the distribution from the retrieved corpus. This approach is flexible and easy to integrate but may limit the model’s ability to perform deep reasoning;

- Intermediate-level fusion: By designing a semi-parameterized module, retrieval results are integrated into the internal layers of the generative model. This method may enhance performance but increases complexity and requires direct access to the model’s internals, which may not be feasible in practical applications.
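The first two strategies can be contrasted in a few lines. Input-level fusion is plain concatenation. For output-level fusion, the sketch assumes a kNN-LM-style scheme (one known instance of this strategy, not necessarily the one used in the works surveyed): each retrieved neighbour votes for the token that followed it, weighted by exp(−distance), and the resulting distribution is interpolated with the language model's own next-token distribution. The interpolation weight `lam` is an arbitrary illustrative value.

```python
from typing import List, Sequence

import numpy as np


def input_level_fusion(query: str, docs: List[str]) -> str:
    """Concatenate retrieved documents and the query into one generator input sequence."""
    return "\n\n".join(docs + [query])


def retrieval_distribution(neighbor_tokens: Sequence[int], neighbor_dists: Sequence[float],
                           vocab_size: int) -> np.ndarray:
    """kNN-LM-style distribution: each neighbour votes for its next token with weight exp(-dist)."""
    weights = np.exp(-np.asarray(neighbor_dists, dtype=float))
    p = np.zeros(vocab_size)
    np.add.at(p, np.asarray(neighbor_tokens), weights)  # accumulate votes, repeats included
    return p / p.sum()


def output_level_fusion(p_lm: np.ndarray, p_retrieval: np.ndarray, lam: float = 0.25) -> np.ndarray:
    """Interpolate next-token distributions: lam * p_retrieval + (1 - lam) * p_lm."""
    p = lam * p_retrieval + (1.0 - lam) * p_lm
    return p / p.sum()  # guard against floating-point drift


p_lm = np.array([0.7, 0.2, 0.1])  # toy 3-token vocabulary
p_ret = retrieval_distribution([2, 2, 1], [0.0, 0.0, 0.0], vocab_size=3)
print(output_level_fusion(p_lm, p_ret, lam=0.5))
```

Because fusion happens only on the output distribution, the generator itself is untouched, which reflects both the flexibility and the limited depth of interaction noted above.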

3.1.3. Generation Stage

In the RAG framework, the generator is another core component responsible for converting the retrieved information into natural and fluent text. Traditional language models inspire the design of the generator, but unlike traditional generative models, the generator in RAG improves the accuracy and relevance of the generated content by utilizing the retrieved information. In the RAG framework, the generator’s input includes not only traditional contextual information but also relevant text fragments obtained through the retriever. This framework enables the generator to understand the context behind the query, thereby generating informative responses. In practical applications, generators are often categorized into white-box and black-box models:

- White-box models: These models allow access to model parameters and mainly include encoder–decoder models and decoder-only models. Encoder–decoder models such as T5 and BART connect input and target tokens through cross-attention mechanisms. Decoder-only models process the concatenation of input and target tokens and build representations incrementally. White-box generators support parameter optimization and can adapt to different retrieval and augmentation methods;

- Black-box models: These generators, such as the GPT series, Codex, and Claude, do not allow access to their parameters. They only support submitting input queries and receiving responses, without internal structure modification or parameter updates. With black-box generators, improvement efforts therefore focus on the retrieval and augmentation process, enhancing performance by adding knowledge, guidance, or examples to the input to compensate for the inability to optimize model parameters directly.

The RAG framework has demonstrated advantages in addressing challenges associated with large-scale pre-trained models, such as hallucinations and insufficient domain knowledge. Researchers have leveraged the RAG framework in various domains to improve task-specific outcomes. However, the classical RAG framework suffers from limitations such as single-mode retrieval mechanisms, insufficient coverage, and simplistic ranking methods, which compromise answer quality. To address these issues, this paper proposes an enhanced RAG framework by incorporating hybrid retrieval and reranking mechanisms, aiming to enhance both retrieval and generation performance and thereby improve the overall effectiveness of the domain-specific LLMs.

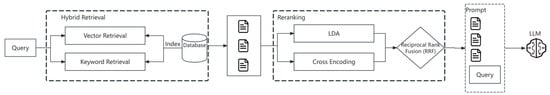

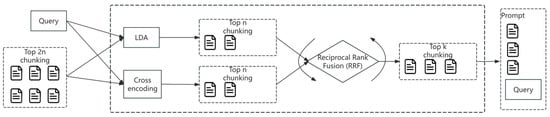

In particular, we introduce a hybrid retrieval module in the retrieval stage, using both vector-based retrieval and traditional keyword retrieval in parallel to improve overall retrieval performance. By combining vector and keyword retrieval, the system can better capture query intent and provide more precise and relevant search results. The process is shown in Figure 3.

Figure 3.

An enhanced RAG framework with hybrid retrieval and reranking.

Based on the retrieved documents, a reranking mechanism is introduced to optimize the final document ranking. First, an optimized LDA model is trained on the blocks of the knowledge base. The retrieved blocks are then input into both the LDA model and a cross-encoder, generating two separate ranking results. The LDA model captures the underlying thematic structure of the documents through topic modeling, while the cross-encoder better captures the contextual relationship between the query and the documents.

To integrate the multiple ranking results, we employ Reciprocal Rank Fusion (RRF). RRF combines the outputs of various ranking models by summing, for each document, the reciprocal of its rank in each list (offset by a smoothing constant), ultimately generating a combined ranking. This fusion method draws on the strengths of different ranking models and effectively improves the accuracy and diversity of the final ranking results.

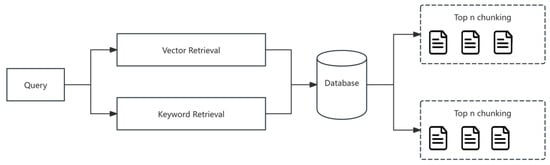

3.2. Hybrid Retrieval Based on Vectors and Keywords

In modern information retrieval architectures, vector retrieval plays a central role, particularly in the RAG framework. This technique segments documents from external knowledge bases into semantically coherent chunks and maps them into a multi-dimensional vector space. User queries undergo the same transformation, enabling precise identification of subtle semantic relationships between queries and documents. For example, the phrases “a cat chasing a mouse” and “a kitten hunting a mouse” exhibit high similarity in vector space, far surpassing their association with “I like eating ham”. This technique ensures accurate localization of relevant texts, providing enriched contextual support for generation models and improving answer accuracy. Figure 4 presents the basic mechanism of this hybrid retrieval.

Figure 4.

Hybrid retrieval.

However, in specific scenarios—such as retrieving names, product models, acronyms, or specific IDs—traditional keyword retrieval methods (e.g., BM25) demonstrate superior performance. These methods excel in exact matching tasks, particularly for precise searches involving specific entity names or numbers and for accurately capturing low-frequency but high-value terms.

Therefore, we propose introducing a hybrid retrieval module into the RAG framework, combining vector retrieval with keyword retrieval to leverage the strengths of both approaches while mitigating their weaknesses. This hybrid retrieval module integrates the advantages of the two techniques, constructing a more efficient and comprehensive retriever suitable for diverse and complex information retrieval needs.

In particular, we select the BM25 algorithm as the keyword retrieval method. BM25 is a probabilistic retrieval model in the field of information retrieval [20]. It belongs to the Okapi BM family and is primarily used to evaluate the relevance between documents and queries. Based on a probabilistic model, BM25 aims to enhance retrieval performance by measuring the degree of match between queries and documents. The core of the BM25 algorithm lies in calculating the relevance score between a query and a document. The primary formula for BM25 is shown below:

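$$\mathrm{Score}(D, Q) = \sum_{i=1}^{n} \mathrm{IDF}(q_i) \cdot \frac{f(q_i, D)\,(k_1 + 1)}{f(q_i, D) + k_1 \left(1 - b + b \cdot \dfrac{|D|}{\mathrm{avgdl}}\right)}$$

The primary objective of this algorithm is to compute the relevance score between document $D$ and query $Q$, denoted as $\mathrm{Score}(D, Q)$. The contribution of each term $q_i$ in query $Q$ (which contains $n$ terms) is calculated using the inverse document frequency $\mathrm{IDF}(q_i)$ and the term frequency $f(q_i, D)$. The inverse document frequency measures the importance of term $q_i$ within the entire document collection, while the term frequency represents the number of occurrences of term $q_i$ in document $D$; $|D|$ represents the total number of terms in document $D$, $\mathrm{avgdl}$ represents the average length of all documents in the document collection, and $k_1$ and $b$ are the tuning parameters introduced in Section 3.1.1. Table A1 in Appendix B provides descriptions of these parameters.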
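The BM25 score described here can be sketched over a tokenized corpus as follows. The IDF term is not specified in this section, so the sketch assumes the common smoothed variant ln((N − df + 0.5)/(df + 0.5) + 1), which stays positive; the toy corpus is invented for illustration.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: List[str], docs: List[List[str]],
                k1: float = 1.2, b: float = 0.75) -> List[float]:
    """Score every tokenized document against a tokenized query with Okapi BM25."""
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    df = Counter(t for d in docs for t in set(d))  # number of documents containing each term
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if tf[term] == 0:  # absent terms contribute nothing
                continue
            # Assumed IDF variant (smoothed, always positive).
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            norm = k1 * (1 - b + b * len(doc) / avgdl)  # document-length normalization
            score += idf * tf[term] * (k1 + 1) / (tf[term] + norm)
        scores.append(score)
    return scores


docs = [["uav", "swarm", "formation"], ["bird", "flock"], ["uav", "uav", "battery"]]
print(bm25_scores(["uav"], docs))
```

The third document, with two occurrences of the query term, outranks the first, and the document without the term scores zero.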

For the vector-based retriever, this study selects the K-Nearest Neighbors (KNN) algorithm [21]. In information retrieval, KNN builds on the vector space model, in which both documents and queries are represented as vectors [22], and the most relevant documents are retrieved by calculating the similarity between these vectors. A document $d$ and a query $q$ are represented as vectors $\mathbf{d}$ and $\mathbf{q}$, respectively. Similarity is typically measured through a distance or similarity function between the two vectors; this study chooses cosine similarity, calculated as follows:

$$\mathrm{sim}(\mathbf{d}, \mathbf{q}) = \frac{\mathbf{d} \cdot \mathbf{q}}{\lVert \mathbf{d} \rVert \, \lVert \mathbf{q} \rVert} = \frac{\sum_{i=1}^{m} d_i q_i}{\sqrt{\sum_{i=1}^{m} d_i^2}\,\sqrt{\sum_{i=1}^{m} q_i^2}}$$

where $m$ is the dimension of the vectors.

When a user query is input into the system, the two retrievers independently retrieve blocks from the knowledge base based on the query, each returning its Top-N most relevant blocks as candidates for subsequent reranking.
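The vector branch and the hand-off to reranking can be sketched as below: an exact (brute-force) KNN over block embeddings by cosine similarity, followed by a deduplicated union of the two Top-N candidate lists. The embeddings are assumed to come from some embedding model not specified here, the keyword list in the usage line is hypothetical, and large deployments often replace brute force with an approximate nearest-neighbour index.

```python
from typing import List

import numpy as np


def knn_top_n(query_vec: np.ndarray, block_vecs: np.ndarray, n: int) -> List[int]:
    """Exact KNN: indices of the n blocks with the highest cosine similarity to the query."""
    q = query_vec / np.linalg.norm(query_vec)
    blocks = block_vecs / np.linalg.norm(block_vecs, axis=1, keepdims=True)
    sims = blocks @ q  # cosine similarity of every block to the query
    return [int(i) for i in np.argsort(-sims, kind="stable")[:n]]


def merge_candidates(*ranked_lists: List[int]) -> List[int]:
    """Union of Top-N lists from several retrievers, deduplicated in first-seen order."""
    seen, pool = set(), []
    for ranked in ranked_lists:
        for block_id in ranked:
            if block_id not in seen:
                seen.add(block_id)
                pool.append(block_id)
    return pool


block_vecs = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])  # toy 2-d embeddings
vector_top = knn_top_n(np.array([1.0, 0.0]), block_vecs, n=2)
keyword_top = [2, 0]  # hypothetical Top-N from the BM25 retriever
print(merge_candidates(vector_top, keyword_top))
```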

3.3. Reranking Based on Topic Modeling and Cross-Encoding

To further enhance the relevance and accuracy of query results, a reranking module is incorporated after the initial retrieval process. In the classical RAG framework, the system might retrieve a large number of contextual blocks based on their similarity to the query. However, not all retrieved contexts are highly relevant to the query. The reranking module reorders and filters the retrieved blocks, discarding irrelevant or less important blocks and prioritizing the most relevant ones. This module improves the overall accuracy of the RAG process. Figure 5 represents the whole process.

Figure 5.

The process of reranking.

The reranking module in this study employs a hybrid algorithm that integrates Latent Dirichlet Allocation (LDA) topic modeling and cross-encoding techniques to perform a second-level ranking of the blocks retrieved in the search phase. The final ranking is determined using Reciprocal Rank Fusion (RRF).

This study selects LDA as the first ranking algorithm in the reranking module. LDA is a generative probabilistic model used to discover latent topics in a large collection of documents [23]. Its core idea is to connect words and documents through topics: each document is modeled as a mixture of several topics, and each topic is a probability distribution over words. In LDA, both the topic and word distributions are generated from Dirichlet distributions. The goal of model training is to infer the topic distribution for each document and the word distribution for each topic from the document collection.

The generative process of the LDA model follows the work of Blei et al. [24]: the joint distribution of the topic proportions $\theta$, the topic assignments $\mathbf{z}$, and the observed words $\mathbf{w}$, conditioned on the corpus-level parameters $\alpha$ and $\beta$, is defined as follows:

$$p(\theta, \mathbf{z}, \mathbf{w} \mid \alpha, \beta) = p(\theta \mid \alpha) \prod_{n=1}^{N} p(z_n \mid \theta)\, p(w_n \mid z_n, \beta)$$

In this formula, $\theta$ denotes the document-specific topic distribution, drawn from a Dirichlet prior parameterized by $\alpha$; $z_n$ represents the latent topic assignment for the $n$-th word; $w_n$ is the observed word at position $n$; $\beta$ defines the topic-word distributions shared across the corpus; and $N$ is the total number of words in the document. This formulation reflects the generative process of LDA: for each document, a topic distribution $\theta$ is sampled from the Dirichlet prior $\mathrm{Dir}(\alpha)$. For each word in the document, a topic $z_n$ is drawn from $\mathrm{Multinomial}(\theta)$, and the word $w_n$ is generated from the corresponding topic-specific distribution defined by $\beta$. Table A2 in Appendix B lists descriptions of each parameter.
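The generative process and its joint density can be made concrete with a small NumPy sketch. Here `alpha` is the Dirichlet prior over a document's topic proportions `theta`, `beta` is a (topics × vocabulary) matrix of topic-word distributions, and `z` and `w` are the topic assignments and word ids; the toy values are illustrative assumptions.

```python
import math

import numpy as np


def sample_lda_document(alpha: np.ndarray, beta: np.ndarray, n_words: int,
                        rng: np.random.Generator):
    """Draw one document from LDA's generative process; returns (theta, z, w)."""
    theta = rng.dirichlet(alpha)                       # theta ~ Dir(alpha)
    z = rng.choice(len(alpha), size=n_words, p=theta)  # z_n ~ Multinomial(theta)
    w = np.array([rng.choice(beta.shape[1], p=beta[k]) for k in z])  # w_n ~ Multinomial(beta[z_n])
    return theta, z, w


def log_joint(theta, z, w, alpha, beta) -> float:
    """log p(theta, z, w | alpha, beta) = log p(theta|alpha) + sum_n [log theta[z_n] + log beta[z_n, w_n]]."""
    log_p_theta = (math.lgamma(alpha.sum()) - sum(math.lgamma(a) for a in alpha)
                   + np.sum((alpha - 1) * np.log(theta)))  # Dirichlet log-density
    return float(log_p_theta + np.sum(np.log(theta[z])) + np.sum(np.log(beta[z, w])))


rng = np.random.default_rng(0)
alpha = np.array([1.0, 1.0])
beta = np.array([[0.7, 0.2, 0.1],   # topic 0 favours word 0
                 [0.1, 0.2, 0.7]])  # topic 1 favours word 2
theta, z, w = sample_lda_document(alpha, beta, n_words=20, rng=rng)
print(theta, log_joint(theta, z, w, alpha, beta))
```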

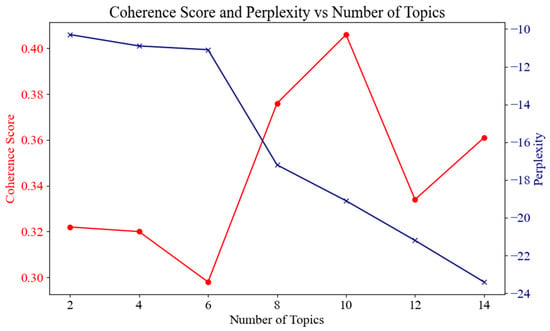

Before reranking can be performed, an LDA topic model for the specialized domain must be trained in advance. This study uses the blocks from the knowledge base as the training data for LDA. First, the blocks are preprocessed through tokenization, stop-word removal, punctuation removal, and other steps to obtain a clean vocabulary. Each block is then represented as a term frequency vector using the bag-of-words model. The topic distributions $\theta$ of the blocks and the word distributions $\beta$ of the topics are randomly initialized and then iteratively updated, through methods such as Gibbs sampling, until convergence. The model’s performance is evaluated using coherence and perplexity, the optimal number of topics K is selected [23], and the trained optimal LDA model is saved.
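A compact collapsed Gibbs sampler illustrates the training loop on blocks already converted to lists of word ids. Assumptions to note: this is the textbook collapsed sampler with a symmetric Dirichlet prior `eta` on the topic-word distributions (returned as `phi`), all hyperparameter values are illustrative, and the perplexity helper computes training-set perplexity, whereas model selection is usually done on held-out blocks. Topic coherence is not shown.

```python
import numpy as np


def lda_gibbs(docs, n_topics, vocab_size, alpha=0.1, eta=0.01, n_iter=200, seed=0):
    """Collapsed Gibbs sampling for LDA; returns (theta, phi) = block-topic and topic-word estimates."""
    rng = np.random.default_rng(seed)
    n_dk = np.zeros((len(docs), n_topics))   # words in block d assigned to topic k
    n_kw = np.zeros((n_topics, vocab_size))  # times word w is assigned to topic k
    n_k = np.zeros(n_topics)                 # total words assigned to topic k
    # Random initial topic assignment for every word token.
    z = [rng.integers(n_topics, size=len(doc)) for doc in docs]
    for d, doc in enumerate(docs):
        for w, k in zip(doc, z[d]):
            n_dk[d, k] += 1
            n_kw[k, w] += 1
            n_k[k] += 1

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Remove the current assignment before resampling it.
                n_dk[d, k] -= 1
                n_kw[k, w] -= 1
                n_k[k] -= 1
                # Full conditional: p(z_i = k | rest) ∝ (n_dk + alpha)(n_kw + eta) / (n_k + V * eta)
                p = (n_dk[d] + alpha) * (n_kw[:, w] + eta) / (n_k + vocab_size * eta)
                k = rng.choice(n_topics, p=p / p.sum())
                z[d][i] = k
                n_dk[d, k] += 1
                n_kw[k, w] += 1
                n_k[k] += 1

    theta = (n_dk + alpha) / (n_dk.sum(axis=1, keepdims=True) + n_topics * alpha)
    phi = (n_kw + eta) / (n_k[:, None] + vocab_size * eta)
    return theta, phi


def perplexity(docs, theta, phi) -> float:
    """Per-word perplexity exp(-log-likelihood / #words); lower is better."""
    log_lik = sum(np.log(theta[d] @ phi[:, w]) for d, doc in enumerate(docs) for w in doc)
    n_words = sum(len(doc) for doc in docs)
    return float(np.exp(-log_lik / n_words))


# Toy corpus: word ids 0-2 and 3-5 form two obvious themes.
docs = [[0, 1, 2, 0, 1, 2], [3, 4, 5, 3, 4, 5], [0, 1, 2, 1, 0], [3, 4, 5, 5, 4]]
theta, phi = lda_gibbs(docs, n_topics=2, vocab_size=6, n_iter=50)
print(perplexity(docs, theta, phi))
```

Selecting K would amount to repeating this fit for several topic counts and comparing coherence and perplexity, as the paragraph above describes.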

In the reranking module, the user’s input query and all blocks retrieved by the hybrid retrieval method are fed into the trained optimal LDA model. Their vocabulary is mapped to the topic space, generating a topic distribution $\theta_q$ for the query and $\theta_b$ for each block. Cosine similarity between $\theta_q$ and each $\theta_b$ is then computed, and the blocks are ranked accordingly. The LDA topic modeling approach captures the latent semantics of the query and the retrieved blocks, rather than being limited to surface-level word matching.
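Ranking with a trained model can be sketched as follows: infer a topic distribution for the query and for each retrieved block while holding the topic-word matrix `phi` fixed, then sort blocks by cosine similarity of topic distributions. The inference step here uses simple EM-style fixed-point updates, a swapped-in stand-in for Gibbs-based fold-in; out-of-vocabulary words are assumed to have been dropped beforehand, and the toy `phi` is invented.

```python
from typing import List, Sequence, Tuple

import numpy as np


def infer_topics(word_ids: Sequence[int], phi: np.ndarray,
                 alpha: float = 0.1, n_iter: int = 50) -> np.ndarray:
    """Estimate a topic distribution for unseen text with phi held fixed (EM-style updates)."""
    n_topics = phi.shape[0]
    theta = np.full(n_topics, 1.0 / n_topics)
    for _ in range(n_iter):
        resp = theta[:, None] * phi[:, list(word_ids)]  # (K, n_words): unnormalized p(z | w)
        resp /= resp.sum(axis=0, keepdims=True)
        theta = resp.sum(axis=1) + alpha               # expected topic counts plus smoothing
        theta /= theta.sum()
    return theta


def rank_by_topic_similarity(query_ids: Sequence[int], block_ids: List[Sequence[int]],
                             phi: np.ndarray) -> Tuple[List[int], List[float]]:
    """Return block indices sorted by cosine similarity between topic distributions."""
    theta_q = infer_topics(query_ids, phi)
    sims = []
    for ids in block_ids:
        theta_b = infer_topics(ids, phi)
        sims.append(float(theta_q @ theta_b / (np.linalg.norm(theta_q) * np.linalg.norm(theta_b))))
    order = sorted(range(len(block_ids)), key=lambda i: sims[i], reverse=True)
    return order, sims


phi = np.array([[0.45, 0.45, 0.05, 0.05],   # topic 0 favours words 0 and 1
                [0.05, 0.05, 0.45, 0.45]])  # topic 1 favours words 2 and 3
print(rank_by_topic_similarity([0, 1, 0], [[2, 3, 3], [0, 1]], phi))
```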

In addition to using LDA topic modeling for secondary ranking of the retrieved blocks, this study also introduces the CrossEncoder architecture [25]. By interactively encoding the query and candidate documents, the CrossEncoder generates high-quality relevance scores, thereby optimizing the ranking results. Unlike the Bi-Encoder architecture [26] used in the hybrid search, which encodes the query and block text independently, the CrossEncoder jointly encodes the query and document, allowing it to capture the complex interaction information between the two. Thus, the CrossEncoder can more precisely model the interaction between the query and block text, making it more effective in tasks that require fine-grained semantic matching.

In cross-encoding, the query and block text are concatenated into a single input sequence and encoded jointly by a pre-trained language model.

The specific process is as follows. First, the query $q$ and block text $d$ are concatenated into an input sequence of the form “[CLS] $q$ [SEP] $d$ [SEP]”, where [CLS] and [SEP] are special tokens marking the start of the sequence and the separators, respectively. The sequence is then fed into the pre-trained language model, whose stacked Transformer encoder layers produce a hidden-state representation for every token. Next, the hidden state of the [CLS] token is taken from the encoder output as the joint representation of the query and block text. Finally, this joint representation is passed through a simple feed-forward neural network (e.g., a linear layer) to compute the relevance score between the query and the block. The CrossEncoder can be expressed as follows:

$$\mathbf{h}_{[\mathrm{CLS}]} = \mathrm{Encoder}\big([\mathrm{CLS}]\; q\; [\mathrm{SEP}]\; d\; [\mathrm{SEP}]\big)_{[\mathrm{CLS}]}, \qquad s(q, d) = \mathrm{FFN}\big(\mathbf{h}_{[\mathrm{CLS}]}\big)$$

Here, $\mathrm{Encoder}(\cdot)$ denotes the pre-trained Transformer encoder, $\mathbf{h}_{[\mathrm{CLS}]}$ is the hidden state corresponding to the [CLS] token, and $\mathrm{FFN}(\cdot)$ is the feed-forward neural network that computes the relevance score $s(q, d)$.
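The data flow of the scoring function $s(q, d) = \mathrm{FFN}(\mathbf{h}_{[\mathrm{CLS}]})$ can be sketched without a real Transformer by passing the encoder in as a callable. Everything here is illustrative: whitespace tokens stand in for a subword tokenizer, the single linear layer plays the role of the FFN, and `toy_encoder` is a hypothetical stand-in whose [CLS] vector "sees" both segments. With a real model, a library such as sentence-transformers offers a `CrossEncoder` class whose `predict` method scores (query, passage) pairs; it is mentioned only as one practical option.

```python
from typing import Callable, List, Sequence

CLS, SEP = "[CLS]", "[SEP]"
Encoder = Callable[[List[str]], List[List[float]]]  # tokens -> one hidden vector per token


def build_pair_input(query: str, block: str) -> List[str]:
    """Concatenate as [CLS] q [SEP] d [SEP] (whitespace tokens for illustration)."""
    return [CLS, *query.split(), SEP, *block.split(), SEP]


def cross_encoder_score(query: str, block: str, encoder: Encoder,
                        weights: Sequence[float], bias: float = 0.0) -> float:
    """s(q, d) = FFN(h_[CLS]); the FFN here is a single linear layer."""
    hidden = encoder(build_pair_input(query, block))
    h_cls = hidden[0]  # hidden state at the [CLS] position
    return sum(w * h for w, h in zip(weights, h_cls)) + bias


def cross_encoder_rerank(query: str, blocks: Sequence[str], encoder: Encoder,
                         weights: Sequence[float], bias: float = 0.0) -> List[int]:
    """Return block indices sorted by descending relevance score."""
    scores = [cross_encoder_score(query, b, encoder, weights, bias) for b in blocks]
    return sorted(range(len(blocks)), key=lambda i: scores[i], reverse=True)


def toy_encoder(tokens: List[str]) -> List[List[float]]:
    """Hypothetical stand-in for a Transformer: [CLS] encodes query/block overlap and block length."""
    sep = tokens.index(SEP)
    q, d = set(tokens[1:sep]), set(tokens[sep + 1:-1])
    cls_vec = [float(len(q & d)), float(len(d))]
    return [cls_vec] + [[0.0, 0.0] for _ in tokens[1:]]


print(cross_encoder_rerank("swarm control", ["radio link", "swarm control law"],
                           toy_encoder, weights=[1.0, -0.1]))
```

The key design point the sketch preserves is that the score is computed from one joint sequence, so query and block tokens can interact inside the encoder, unlike a Bi-Encoder that embeds them separately.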

After obtaining the results of the two ranking methods above, this study applies the Reciprocal Rank Fusion (RRF) method [27] to generate the final reranked list. RRF aggregates multiple rankings by summing, for each document, the reciprocals of its (offset) ranks in the individual lists, so documents ranked higher in an individual list receive larger weights. Because it uses only rank positions rather than raw scores, RRF can fuse heterogeneous rankers such as a topic model and a cross-encoder whose scores are not directly comparable. RRF not only improves retrieval accuracy and relevance but also enhances coverage and robustness by incorporating diverse information sources [28].

The steps are as follows:

- (1) Obtain two independent rankings from the CrossEncoder method and the LDA topic model, denoted $R_{\mathrm{CE}}$ and $R_{\mathrm{LDA}}$, respectively;

- (2) Set a tuning parameter $k$, typically 60;

- (3) For each block $d$, compute its reciprocal rank $1/(k + r(d))$ in each ranking, where $r(d)$ is the block's position in that ranking;

- (4) Sum the reciprocal ranks of each block to obtain its RRF score;

- (5) Sort all blocks by RRF score in descending order and return the final reranked list, selecting the top K blocks as the final block set.

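The formula for the RRF algorithm is shown below (Table A3 in Appendix B summarizes parameters in this formula):

$$\mathrm{RRF}(d) = \frac{1}{k + r_{\mathrm{CE}}(d)} + \frac{1}{k + r_{\mathrm{LDA}}(d)}$$

where $r_{\mathrm{CE}}(d)$ and $r_{\mathrm{LDA}}(d)$ are the ranks of block $d$ in the CrossEncoder and LDA rankings, respectively, and $k$ is the tuning parameter.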
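The fusion steps can be sketched in a few lines of Python. This is a minimal illustration, assuming each ranking is a list of block IDs ordered best first (ranks are 1-based); the function name `reciprocal_rank_fusion` is hypothetical. A block that appears in only one ranking simply receives a single reciprocal-rank term.

```python
from typing import Dict, Hashable, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[Hashable]], k: int = 60,
                           top_k: int = 10) -> List[Hashable]:
    """Fuse ranked lists of block IDs: score(d) = sum over lists of 1 / (k + rank)."""
    scores: Dict[Hashable, float] = {}
    for ranking in rankings:
        for rank, block_id in enumerate(ranking, start=1):  # ranks are 1-based
            scores[block_id] = scores.get(block_id, 0.0) + 1.0 / (k + rank)
    # sorted() is stable, so tied blocks keep their first-seen order.
    fused = sorted(scores, key=lambda b: scores[b], reverse=True)
    return fused[:top_k]


ce_ranking = ["b1", "b2", "b3"]   # CrossEncoder order
lda_ranking = ["b3", "b1", "b2"]  # LDA topic-similarity order
print(reciprocal_rank_fusion([ce_ranking, lda_ranking], k=60, top_k=2))
```

Here `b1` (ranks 1 and 2) edges out `b3` (ranks 3 and 1), because $1/61 + 1/62 > 1/63 + 1/61$; the large constant $k = 60$ keeps any single list from dominating the fused order.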

With ongoing advancements, RAG is expected to play a critical role in further enhancing the application and capabilities of LLMs across diverse fields. By integrating high-quality retrieval and generation processes, RAG can significantly expand the utility of pre-trained models, providing accurate, context-rich, and domain-specific outputs while mitigating computational and data-related constraints.

4. Domain Knowledge Base Construction

We established a private database focused on biological UAV swarm control, incorporating data preprocessing and element-based chunking to optimize recall accuracy. For this database, we selected the Moka Massive Mixed Embedding (M3E) model as the embedding model.

4.1. Data Collection

We crawled professional literature from Google Scholar using keywords including “biological UAV swarm control”, “biological swarm intelligence”, and “swarm autonomous control”, collecting 500 papers per keyword. Crawling stopped at this depth because few further results were relevant.

4.2. Data Preprocessing

To ensure the relevance and quality of the dataset, we preprocessed the crawled literature. Outdated, incomplete, and unreadable papers were dropped, and papers lacking the key terms (“UAV”, “biological”, “swarm”) were excluded. After removing duplicate and irrelevant results, we obtained 1320 professional documents as the private knowledge base.
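The key-term filter and duplicate removal can be sketched as follows. The text does not state whether a paper must contain any or all of the key terms, so the sketch exposes this as a hypothetical `require_all` flag; matching is a simple case-insensitive substring check, and duplicates are detected by normalized title. These are illustrative assumptions, not the study's exact rules.

```python
import re
from typing import Dict, List, Sequence

KEY_TERMS = ("UAV", "biological", "swarm")


def normalize_title(title: str) -> str:
    """Lower-case and collapse punctuation/whitespace so near-identical titles match."""
    return re.sub(r"\W+", " ", title).strip().lower()


def filter_papers(papers: Sequence[Dict[str, str]], key_terms: Sequence[str] = KEY_TERMS,
                  require_all: bool = False) -> List[Dict[str, str]]:
    seen = set()
    kept = []
    for paper in papers:
        text = (paper.get("title", "") + " " + paper.get("abstract", "")).lower()
        hits = [term.lower() in text for term in key_terms]
        if not (all(hits) if require_all else any(hits)):
            continue  # drop papers without the key terms
        key = normalize_title(paper.get("title", ""))
        if key in seen:
            continue  # drop overlapping (duplicate) results
        seen.add(key)
        kept.append(paper)
    return kept


papers = [{"title": "Swarm control of UAVs"}, {"title": "Swarm control of UAVs!"},
          {"title": "Protein folding"}]
print([p["title"] for p in filter_papers(papers)])
```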

4.3. Element-Based Chunking Strategy

Chunking is the process of breaking long texts into smaller segments. It is necessary when working with embedding models because it helps optimize the recall accuracy of content retrieved from vector databases. When content is embedded, its length (whether it is a single sentence, a complete paragraph, or a whole document) influences the system’s outcome.

On the one hand, when embedding a sentence, the resulting vector focuses on the specific meaning of that sentence. Comparing embeddings at the sentence level makes it easier to identify relationships, but this process may overlook the broader contextual information found within the paragraph or document. On the other hand, when embedding a complete paragraph or document, the embedding process must account for the entire context as well as the relationships between sentences and phrases within the text. This strategy generates a more comprehensive vector representation, capturing the text’s broader meaning and themes.

However, larger inputs can introduce noise or dilute the importance of individual sentences or phrases, making accurate matches harder to find during query indexing. Chunk size is therefore a trade-off between specific meaning (short chunks) and broader context (long chunks). Given that these documents are predominantly text-based, this study adopted an unstructured chunking strategy with a granularity of 512 tokens, which aligns with base model constraints and handles unstructured text effectively. Documents are processed with an unstructured framework that identifies and extracts the elements within each paper. Because some of these elements are too short to serve as chunks on their own, chunks are generated from the elements as follows:

- (1) If an element’s text is shorter than 512 characters, attempt to merge it with the subsequent element;

- (2) Repeat the merge until the desired length is reached, without splitting any individual element;

- (3) If a heading element is encountered, start a new chunk;

- (4) If a table or image element is encountered, start a new chunk and save the entire table or image in it.
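The four chunking rules can be sketched as a single pass over the extracted elements. The element-type labels (`"Title"`, `"NarrativeText"`, `"Table"`, `"Image"`) and the `Element` class are hypothetical, modeled loosely on the categories that document-partitioning tools emit. Following step (1), length is measured in characters; an element longer than the limit becomes its own chunk rather than being split, per step (2).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Element:
    kind: str  # hypothetical labels: "Title", "NarrativeText", "Table", "Image", ...
    text: str


def chunk_elements(elements: List[Element], max_chars: int = 512) -> List[str]:
    """Merge consecutive elements into chunks of at most about max_chars characters."""
    chunks: List[str] = []
    current: List[str] = []
    current_len = 0

    def flush() -> None:
        nonlocal current, current_len
        if current:
            chunks.append("\n".join(current))
        current, current_len = [], 0

    for el in elements:
        if el.kind in ("Table", "Image"):
            flush()                 # step (4): tables/images start a new chunk...
            chunks.append(el.text)  # ...and are saved whole
            continue
        if el.kind == "Title":
            flush()                 # step (3): a heading starts a new chunk
        elif current and current_len + 1 + len(el.text) > max_chars:
            flush()                 # merging would overflow; never split an element
        current.append(el.text)     # steps (1)-(2): keep merging short elements
        current_len += len(el.text) + (1 if len(current) > 1 else 0)  # +1 for "\n"
    flush()
    return chunks


doc = [Element("Title", "Intro"), Element("NarrativeText", "a" * 300),
       Element("NarrativeText", "b" * 300), Element("Table", "<table>"),
       Element("Title", "Methods"), Element("NarrativeText", "c" * 10)]
print([len(c) for c in chunk_elements(doc)])
```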

4.4. Embedding Model

For the vectorization of the knowledge base, this paper uses the Moka Massive Mixed Embedding (M3E) model released by MokaAI as the embedding model. M3E is designed as an all-in-one text embedding model that converts natural language into dense vectors. It supports both homogeneous sentence-similarity judgments and heterogeneous text retrieval, so a single model can serve both use cases in this system. These retrieval capabilities help improve query understanding and document indexing, thereby improving the accuracy and efficiency of search.

5. Experiment and Evaluation

In this section, we evaluate our domain-specific LLM through both automatic evaluation and expert evaluation. Because no benchmark dataset exists for biological UAV swarm control, we first conducted an automatic evaluation of our enhanced RAG framework on a public professional dataset using objective metrics. We then built a private dataset on biological UAV swarm control and conducted expert evaluations of the LLM-generated answers in terms of subjective accuracy, relevance, and human alignment [29].

5.1. Experiment in Public Professional Domain

5.1.1. Experimental Dataset

To validate the effectiveness of our enhanced RAG framework, we evaluated it on a well-known open dataset from a professional domain: the LeCaRDv2 legal case retrieval dataset [30]. This dataset is recognized in the professional domain for its comprehensive case collection and meticulous construction. It includes 800 queries and 55,192 candidates extracted from 4.3 million case files. All cases have been annotated by multiple experts specializing in criminal law, whose expertise ensures the accuracy and reliability of the annotations.

Detailed statistics of the LeCaRDv2 dataset and other popular public professional case retrieval datasets are shown in Table 2. Among them, LeCaRDv1, CAIL 2019-SCM, CAIL 2022-LCR, and COLIEE 2020 provide a limited number of candidate cases for each query. In contrast, COLIEE 2021 and LeCaRDv2 require determining relevant documents from the entire corpus, making the task more challenging. Compared to LeCaRDv1, LeCaRDv2 is closer to real-world retrieval tasks, requiring models to demonstrate both efficiency and effectiveness. The statistical data show that LeCaRDv2 is currently the largest Chinese public professional case retrieval dataset, with tens of thousands of annotated data points.

Table 2.

Basic description of the public professional dataset.

5.1.2. Data Preprocessing and LDA Modeling

We used the “candidates” data from the public dataset as the training data for the LDA model. Training begins with preprocessing, including tokenization, stop-word removal, and stemming, to construct a high-quality training corpus. The preprocessed text is then fed into the LDA model, and the key hyperparameter, the number of topics, is selected using coherence and perplexity as evaluation metrics. Coherence measures how semantically related the words within the same topic are; higher coherence indicates more interpretable topics. Perplexity measures the model’s fit and generalization ability; values that are too extreme in either direction can signal overfitting or underfitting. Figure 6 shows the coherence–perplexity trade-off during training.

As shown in Figure 6, with 10 topics the trained LDA model achieves both high coherence and moderate perplexity. We therefore select the 10-topic LDA model as the optimal model and save it for use in the RAG framework.

Figure 6.

Variation of coherence and perplexity with the number of topics on the public benchmark dataset.
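Topic coherence can be computed in several ways, and the variant used in these experiments is not specified here. As an illustration only, the sketch below assumes UMass coherence, which scores a topic's top words by their document co-occurrence: $\sum_{m=2}^{M}\sum_{j<m} \log\frac{D(w_m, w_j) + 1}{D(w_j)}$, where $D(\cdot)$ counts documents containing all given words. Values closer to zero indicate words that co-occur more consistently.

```python
import math
from typing import Iterable, Sequence


def umass_coherence(top_words: Sequence[str], docs: Iterable[Iterable[str]]) -> float:
    """UMass coherence of one topic's top words (ordered by descending probability)."""
    doc_sets = [set(doc) for doc in docs]

    def doc_freq(*words: str) -> int:
        return sum(1 for s in doc_sets if all(w in s for w in words))

    score = 0.0
    for m in range(1, len(top_words)):
        for j in range(m):
            d_j = doc_freq(top_words[j])
            if d_j == 0:
                raise ValueError(f"top word {top_words[j]!r} never occurs in the corpus")
            score += math.log((doc_freq(top_words[m], top_words[j]) + 1) / d_j)
    return score


corpus = [["swarm", "flock"], ["swarm", "flock"], ["swarm"], ["radio"]]
print(umass_coherence(["swarm", "flock"], corpus), umass_coherence(["swarm", "radio"], corpus))
```

Averaging this score over all topics of each candidate model, alongside held-out perplexity, gives the kind of curve shown in Figure 6.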

5.2. Experimental Setup

5.2.1. Experimental Environment

The training and evaluation experiments in this study were conducted on Linux servers rented from the Autodl platform, configured as follows: a single NVIDIA L20 GPU with 48 GB of VRAM; a 20-core virtual CPU (Intel(R) Xeon(R) Platinum 8457C); and 100 GB of memory. The servers are located in Ningxia, China.

5.2.2. Parameter Settings

In this study, the number of chunks recalled by the hybrid search (keyword search combined with vector search) is set to Top-N = 10. The k-value for reranking with Reciprocal Rank Fusion is set to 60, and the final number of recalled chunks is set to Top-K = 10. The batch size for model performance evaluation is 4.

5.2.3. Evaluation Metrics

To accurately measure the performance of our proposed LLM, this study uses widely adopted metrics such as recall, F1 Score, BLEU score, and Cosine Similarity.

Recall measures the proportion of actual positive samples that are correctly predicted as positive. For the comparison of two strings, recall is calculated as follows:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

TP (True Positive) is the number of characters or words in the generated text that correctly match the reference text, and FN (False Negative) is the number of characters or words in the reference text that fail to be matched. Recall therefore reflects the model’s sensitivity, that is, how much of the reference content it recovers.

F1 Score combines precision and recall into a single measure of the model’s overall performance. Comparing two texts first requires counting True Positives (TPs), False Positives (FPs), and False Negatives (FNs); the F1 Score is then calculated as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

In text generation, TP is the number of correctly matched characters or words, FP is the number of characters or words in the generated text that do not match the reference, and FN is the number of characters or words in the reference that should have been matched but were not.
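Recall and F1 can be computed with a short function. The matching procedure is not specified in the text, so this sketch assumes bag-of-token overlap (a multiset intersection via `collections.Counter`), a common choice in question-answering evaluation. It works on word lists or on character lists, e.g., `list(text)` for Chinese answers.

```python
from collections import Counter
from typing import Sequence, Tuple


def overlap_prf(generated: Sequence[str], reference: Sequence[str]) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from multiset token overlap."""
    gen, ref = Counter(generated), Counter(reference)
    tp = sum((gen & ref).values())  # matched tokens, counting repeats at most min(count) times
    fp = sum(gen.values()) - tp     # generated tokens with no match in the reference
    fn = sum(ref.values()) - tp     # reference tokens that were never matched
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


p, r, f1 = overlap_prf("the swarm avoids collisions".split(),
                       "the swarm maintains cohesion and avoids collisions".split())
print(p, r, f1)
```

All four generated words appear in the reference, so precision is 1.0, while recall is 4/7 and F1 is 8/11.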

BLEU (Bilingual Evaluation Understudy) is a key evaluation metric that measures the n-gram precision of generated text against one or more references, which makes it suitable for tasks with multiple acceptable outputs [31]. Its core idea is to compare the overlap of n-grams between the candidate and reference translations: the higher the overlap, the better the translation quality. An n-gram is a sequence of n consecutive words or characters; n = 1, 2, and 3 give unigrams, bigrams, and trigrams, respectively. BLEU was one of the first widely adopted automatic evaluation metrics for machine translation. Unigram precision measures word-level accuracy, while higher-order n-grams measure the fluency of sentence translation. In practice, n typically ranges from 1 to 4, and the log precisions are combined as a weighted average.

The formula for BLEU calculation is as follows:

$$\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$$

BP is a brevity penalty that discourages overly short translations, defined as follows:

$$\mathrm{BP} = \begin{cases} 1, & c > r \\ e^{\,1 - r/c}, & c \le r \end{cases}$$

Here, $c$ is the length of the candidate translation and $r$ is the length of the shortest reference translation. $p_n$ is the modified (clipped) n-gram precision, and $w_n$ is the weight of the n-grams, usually uniform, i.e., $w_n = 1/N$ for every $n$. A higher BLEU score indicates greater similarity between the generated and reference translations.
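A sentence-level BLEU following these formulas fits in a short function. This is a sketch, not a replacement for an established implementation: it uses uniform weights $w_n = 1/N$, clips each n-gram count by its maximum count in any reference, takes $r$ as the shortest reference length as stated above, and returns 0 when any $p_n$ is zero (no smoothing). Tokens may be words or characters.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: Sequence[str], references: List[Sequence[str]], max_n: int = 4) -> float:
    """Sentence-level BLEU with uniform weights and no smoothing."""
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngrams(candidate, n)
        total = sum(cand.values())
        if total == 0:
            return 0.0  # candidate too short to contain any n-gram of this order
        max_ref = Counter()
        for ref in references:
            max_ref |= ngrams(ref, n)  # element-wise max of counts across references
        clipped = sum(min(count, max_ref[g]) for g, count in cand.items())
        if clipped == 0:
            return 0.0  # log(0) is undefined; unsmoothed BLEU is zero
        log_sum += (1.0 / max_n) * math.log(clipped / total)
    c = len(candidate)
    r = min(len(ref) for ref in references)  # shortest reference, as in the text
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_sum)


ref = "the swarm keeps a stable formation".split()
print(bleu(ref, [ref]))                                              # identical texts
print(bleu("the cat".split(), ["the cat sat on the mat".split()], max_n=1))
```

In the second call the unigram precision is perfect, but the candidate (2 tokens) is much shorter than the reference (6 tokens), so the brevity penalty $e^{1-6/2} = e^{-2}$ dominates the score.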

Cosine Similarity measures the similarity between two vectors as the cosine of the angle between them; it ranges from −1 to 1 and indicates how closely the vectors’ directions align. In string comparison, the strings are first represented as vectors, and the Cosine Similarity is calculated as follows:

$$\cos(\mathbf{A}, \mathbf{B}) = \frac{\mathbf{A} \cdot \mathbf{B}}{\lVert \mathbf{A} \rVert \, \lVert \mathbf{B} \rVert}$$

where $\mathbf{A}$ and $\mathbf{B}$ are the vector representations of the two strings; the numerator is their dot product and the denominator is the product of their norms. The closer the Cosine Similarity value is to 1, the more similar the two strings are.

Together, these metrics assess the question-answering system’s text generation from complementary perspectives. In the experiments, they are used to compare generated texts with reference texts, providing an objective evaluation of answer quality and a basis for further optimization.

5.3. Results and Analysis

5.3.1. Main Experiment Results

To validate the effectiveness of our enhanced RAG framework, we compared it with a baseline model without RAG and with the classical RAG framework. Our framework improves on the classical RAG with hybrid retrieval and reranking, whereas the classical RAG uses only single vector retrieval and feeds the retrieved results directly to the baseline model as context together with the query. This section presents results on the public benchmark dataset above and evaluates performance with the four metrics introduced in the previous section.

As shown in Table 3, our enhanced RAG framework outperforms both the classical RAG and the baseline model on all evaluation metrics, with particularly large gains in recall and F1 Score. The improvement in recall suggests that our enhanced RAG framework is more effective in retrieving relevant information, likely due to the introduction of the hybrid retrieval strategy. Hybrid retrieval combines the advantages of vector retrieval and keyword search, enabling more comprehensive coverage of the retrieval space, which in turn improves recall.

Table 3.

A comparison of experimental results for the public benchmark dataset.

In terms of F1 Score, our enhanced RAG framework also shows a significant improvement. F1 Score considers both precision and recall, and our framework performs well in both aspects. In addition to hybrid retrieval, this improvement may also be attributed to the introduction of the reranking module. By using cross-encoding and LDA topic modeling, the initial retrieval results were reassessed, allowing for more accurate identification of high-quality retrieval results, thereby enhancing the overall F1 Score.

The improvements in Cosine Similarity and BLEU scores also demonstrate the advantages of our enhanced RAG framework in semantic matching and generation quality. The increase in Cosine Similarity indicates that the generated answers are semantically closer to the reference answers in vector space, while the rise in BLEU scores reflects better-quality generated text with higher overlap with the reference answers. However, the gains in these two metrics over the classical RAG are relatively modest. One possible reason is the introduction of BM25, a non-vector-based retrieval method: some of the chunks it retrieves may have low vector similarity to the query, which limits the improvement in Cosine Similarity.

5.3.2. Ablation Experiment

We also conducted a series of ablation experiments to assess the importance of individual modules in our enhanced RAG framework. By progressively removing or disabling parts of the system while controlling the other variables, and observing the resulting changes in performance, we can determine the contribution of each component to overall performance. This helps explain how the system works and identifies the most critical components to guide future optimization. Specifically, we performed ablations on the system’s hybrid retrieval and reranking modules.

As shown in Table 4, the following experimental configurations were tested:

Table 4.

A comparison of ablation experimental results for the public professional dataset.

Baseline (w/o RAG): The baseline model without RAG;

+KNN: The baseline with KNN vector retrieval, i.e., the classical RAG;

+KNN + Rerank: The baseline with KNN vector retrieval and the reranking module;

+BM25: The combination of the baseline model and the traditional BM25 keyword search method, where the results retrieved by keyword search are directly used as context without vector retrieval;

+BM25 + Rerank: BM25 retrieval of the initial results, followed by the reranking module for secondary filtering;

+Hybrid Search: Parallel use of vector retrieval and keyword search without adding the reranking module;

Our RAG: The optimized RAG proposed in this study, incorporating both hybrid retrieval and reranking modules for dual optimization of retrieval performance.

Note that without the reranking module, hybrid retrieval returns Top-2N chunks directly as context. To keep the number of context chunks equal across all experimental groups, we set Top-N to 5 for the RAG framework without reranking. When the retrieval module uses a single retrieval method without reranking, Top-N is set to 10; when it uses a single retrieval method with reranking, Top-N is set to 20 and Top-K remains 10. The overall control principle is that every group passes the same number of chunks (10) to the baseline model.
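The chunk-count bookkeeping for the ablation groups can be checked with simple arithmetic. The sketch assumes, as the Top-2N remark implies, that each retrieval method contributes N chunks; the configuration names mirror Table 4, the Top-N/Top-K values come from the paragraph above and Section 5.2.2, and the helper name `context_chunks` is hypothetical.

```python
from typing import Optional


def context_chunks(num_methods: int, top_n: int, rerank_top_k: Optional[int] = None) -> int:
    """Chunks passed to the LLM: N per retrieval method, optionally cut to Top-K by reranking."""
    candidates = num_methods * top_n
    return candidates if rerank_top_k is None else min(rerank_top_k, candidates)


# (number of retrieval methods, Top-N, Top-K after reranking or None)
ABLATION_SETTINGS = {
    "+KNN": (1, 10, None),
    "+KNN + Rerank": (1, 20, 10),
    "+BM25": (1, 10, None),
    "+BM25 + Rerank": (1, 20, 10),
    "+Hybrid Search": (2, 5, None),
    "Our RAG": (2, 10, 10),
}

for name, setting in ABLATION_SETTINGS.items():
    print(f"{name}: {context_chunks(*setting)} chunks")
```

Every configuration yields 10 context chunks, which is what allows the groups in Table 4 to be compared fairly.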

After BM25 retrieval is added to the baseline model, performance improves on all metrics, suggesting that providing reference materials to the baseline model is an effective approach. However, the improvement from BM25, a traditional keyword search method, is noticeably smaller than that from vector retrieval. Adding the reranking module on top of BM25 further improves the question-answering results.

When combining KNN and BM25 for hybrid retrieval, the model shows significant improvement in all metrics compared to using a single retrieval method, indicating that the hybrid search method, combining vector and keyword retrieval, can more comprehensively cover the retrieval space and thus improve the retrieval effectiveness.

Finally, our enhanced RAG framework performs best in almost all metrics, suggesting that introducing both hybrid retrieval and reranking modules significantly enhances the model’s overall performance.

In summary, by removing modules one at a time, the ablation study reveals each module’s contribution to the model’s performance: both the hybrid retrieval strategy and the reranking module play a key role.

5.3.3. Parameter Sensitivity Analysis

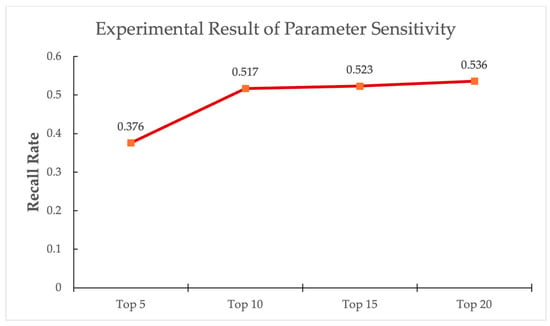

The comparison experiments and ablation studies above show that the hybrid retrieval and reranking modules substantially improve the model’s performance on question-answering tasks. In addition, as specified in Section 5.2.2, the retrieval quantity Top-N for hybrid retrieval and the final Top-K selected by reranking are two parameters that also affect final performance. This section therefore performs a sensitivity analysis on these hyperparameters, which control the number of recalled chunks, to understand their impact on question-answering performance.

In this experiment, Top-N and Top-K are set to the same value. Because hybrid retrieval returns 2N candidates (N from each retrieval method), the reranking step always discards half of the initial chunks, regardless of the parameter value. Recall is used as the evaluation metric.

As shown in Figure 7, recall improves gradually on both datasets as Top-N/Top-K increases. When Top-N/Top-K is small (e.g., Top 5), recall is relatively low, possibly because the few candidates produced by retrieval and reranking do not cover all relevant information. As Top-N/Top-K increases, more candidates enter the reranking process, which improves retrieval coverage and accuracy and thus recall. However, beyond a certain point (e.g., Top 20), the gains in recall diminish, indicating diminishing returns from adding more candidates. A plausible explanation is that when too many chunks are recalled, the LLM receives more information overall but a smaller proportion of it is useful for answering the query, which limits further improvement in answer quality.

Figure 7.

The result of parameter sensitivity analysis.

Additionally, because baseline models have limited context windows, the number of recalled chunks cannot be increased indefinitely. Excessively long contexts also slow down the LLM’s response generation: in our experiments, execution time increased as the Top-N/Top-K values increased.

In conclusion, through the parameter sensitivity analysis of Top-N/Top-K values, we can deduce that an appropriate increase in the number of candidate results in retrieval and reranking can improve the model’s recall rate. However, excessively large Top-N/Top-K values have limited effectiveness in improving model performance. Therefore, in practical applications, the appropriate Top-N/Top-K values should be selected based on the specific task requirements to strike a balance between model performance and computational resources.

5.4. Expert Evaluation on Specialized UAV Domain

5.4.1. Face Validity with Typical Cases

Face validity is commonly used to evaluate how well a model appears to perform its intended function [32]. To establish the face validity of our enhanced model and contextualize its evaluation, we designed typical cases according to suggestions from domain experts. These cases include questions such as “On what sensory information do swarm entities base their decisions, and how does this relate to biological organisms?”, “How many neighbors are taken into account?”, “What is the speed of behavior propagation throughout the swarm?”, and “Are there known cases of swarm instability?”. The details of these case questions, along with both answers from our enhanced model and raw model, are presented in Appendix A.

On closer inspection, the raw model’s answers were misaligned with recent domain-specific research and misinterpreted some key findings. For example, for the question “Are there known cases of swarm instability?”, the raw model’s definition of “swarm instability” does not match its meaning in the context of UAV swarms:

“Swarm instability is a phenomenon that has been observed in some natural systems, but it is not a well-established concept with clear definitions or criteria.”

In contrast, the enhanced model provides a more precise definition:

“Swarm instability refers to a situation where a group of individuals, whether they are insects, animals, or even autonomous robots, exhibit chaotic or unpredictable behavior that leads to a breakdown in the overall structure or coordination of the swarm. This concept is most commonly associated with swarms of insects like bees or ants, but it can also apply to other contexts, such as robotic swarms.”

Examples of swarm instability provided by the raw model further illustrate its inaccuracy. The raw model’s example is not only vague but also mischaracterizes adaptive behaviors as instability:

“One example of a system that has been studied for swarm instability is the flocking behavior of birds. In some cases, the flock may become disorganized and exhibit erratic behavior, such as flying in random directions or changing altitude. This behavior has been attributed to swarm instability, but it is not clear whether this is a general phenomenon or specific to certain types of flocks or conditions.”

In contrast, the enhanced model provides precise and relevant examples of swarm instability, supported by reliable references (see Appendix A), demonstrating its alignment with domain-specific research and applicability to biological UAV swarm control. Two key examples highlight this improvement:

“Robotic Swarms: In the context of robotics, swarm instability can occur when a group of robots fails to achieve its intended collective behavior due to communication errors, environmental changes, or unexpected interactions. For example, a robotic swarm designed to search for objects in a disaster area might become unstable if one or more robots fail to communicate effectively with the others 3.

Fish Schools: Fish schools can sometimes become unstable, leading to the formation of ‘schools within schools’ or the breakdown of the overall school structure. This can happen due to environmental changes, predation, or other factors that disrupt the school’s normal patterns 4.”

Unlike the raw model, which conflates adaptive behaviors with instability, the enhanced model accurately identifies scenarios where coordination fails and provides concrete, evidence-based illustrations. This improvement underscores the enhanced model’s superior ability to capture domain-specific nuances, making it a more reliable tool for applications in biological UAV swarm control.

Another case demonstrates the raw model’s tendency to hallucinate. For the question “How many neighbors are taken into account in UAV swarm control?” the raw model fails to provide a concrete answer, instead offering an oversimplified inference.

“A larger number of neighbors can lead to faster convergence and more stable behavior, but may also require more computational resources and may be less effective in dealing with large swarms.”

In contrast, the enhanced model delivers a precise and evidence-based response, supported by the professional literature (see Appendix A), demonstrating its alignment with domain-specific research. The enhanced model explains the following:

“The number of neighbors considered in UAV swarm control varies depending on the algorithm, communication constraints, and specific application requirements. 1. Algorithm Type: Topological Neighborhood: Fixed number of nearest neighbors (e.g., 3–8), regardless of distance. Inspired by biological systems like bird flocks or fish schools 1…2. Common Ranges: Many practical implementations use 4–6 neighbors for balance between cohesion and computational efficiency…3. Design Factors: Communication Bandwidth: Limits the number of neighbors to prevent overload; Scalability: Smaller fixed numbers (e.g., 3–5) ensure efficient computation in large swarms… Typical Answer: …UAV swarms often consider 3 to 8 neighbors, with 4–6 being a common range in many algorithms. The exact number is tailored to the system’s design goals and constraints.”

This response provides a specific range and contextualizes it within the constraints of UAV swarm design, drawing on biological inspiration to justify the choice of neighbors. Unlike the raw model, which oversimplifies the problem, the enhanced model addresses both technical and biological aspects, making it a more reliable tool for applications in biological UAV swarm control.

These cases provide initial evidence of the face validity of our enhanced model, where the aim of domain accuracy and professional interpretability is better met by the enhanced model. To further evaluate its effectiveness and compare it with the raw model, we conducted an expert evaluation using a larger question set covering a comprehensive range of swarm intelligence topics. This evaluation seeks precise and informed judgments from domain experts to validate the model’s performance.

5.4.2. Expert Evaluation Procedure

Building upon the improvements in domain accuracy and interpretability for biological swarm control demonstrated in the above cases, we conducted a structured expert evaluation to ensure naturalness and comprehensiveness [29].

We invited ten experts specializing in UAV swarm intelligence, each with expertise in at least one of five key topics: decision-making, path planning, control, communication, and application [33]. The evaluation involved 50 questions, evenly distributed across the five topics (i.e., 10 questions per category). To ensure an unbiased assessment, experts used an anonymous online evaluation system and compared two sets of answers (our model versus the raw model) in a blind format, without knowing which model had generated each response. Experts rated responses along three key dimensions (outlined in Table 5): accuracy, relevance, and human alignment, using a 5-point Likert scale (1 = Very Dissatisfied, 5 = Very Satisfied). Before the formal assessment, participants received detailed evaluation criteria to ensure consistent judgments; these criteria were also displayed alongside the scoring interface to reinforce understanding.

Table 5.

Expert evaluation criteria.

The performance comparison between our model and the raw model was conducted across three evaluation dimensions (accuracy, relevance, and human alignment) and was categorized into five domains: decision-making, path planning, control, communication, and application. Multivariate Analysis of Variance (MANOVA) procedures were conducted to assess the performance differences according to expert evaluation results.

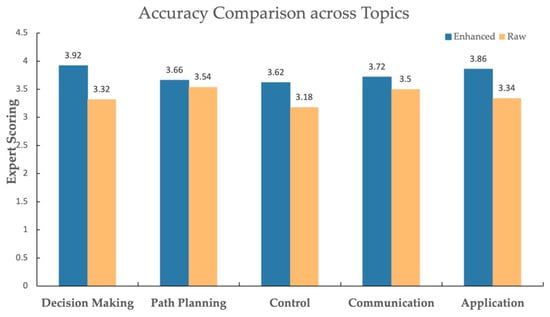

5.4.3. Result

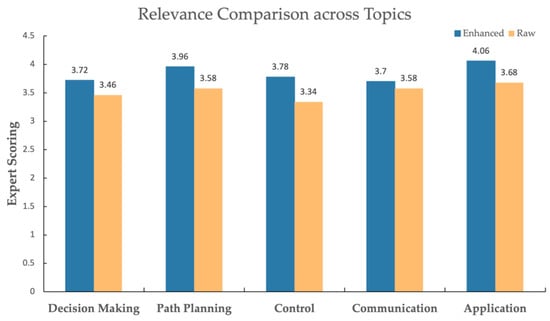

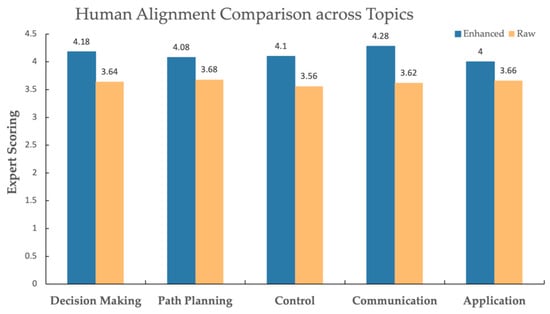

Our domain-specific LLM with the enhanced RAG framework outperforms the baseline model (without RAG) in accuracy, relevance, and human alignment across multiple layers of UAV swarm intelligence. Notably, our model achieves significantly higher scores on all three criteria in the control layer (Figure 8, Figure 9 and Figure 10), indicating its capability to generate precise, context-relevant, and ethically aligned responses for UAV swarm control tasks. Specifically, the MANOVA result indicates that the enhanced model significantly outperforms the raw model across the three evaluation criteria (F(3, 496) = 17.52, p < 0.001). The accuracy of our model (M = 3.76, SD = 0.79) is significantly higher than that of the raw model (M = 3.38, SD = 0.79; t(498) = 5.38, p < 0.001). Similarly, the relevance score of our model (M = 3.84, SD = 0.90) significantly exceeds that of the raw model (M = 3.53, SD = 0.85; t(498) = 4.04, p < 0.001). Finally, the enhanced model also shows stronger human alignment (M = 4.13, SD = 0.98) than the raw model (M = 3.63, SD = 0.85; t(498) = 6.05, p < 0.001).
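As a consistency check, the reported t statistics can be recomputed from the summary statistics with a pooled-variance (Student's) independent-samples t-test. This sketch assumes two independent groups of 250 ratings each, an inference from the reported t(498) degrees of freedom rather than a figure stated in the text; the function name is ours.

```python
import math


def pooled_t_from_stats(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t (pooled variance) from summary stats."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Reported (mean, SD) for the enhanced vs raw model, plus the reported t.
reported = {
    "accuracy": ((3.76, 0.79), (3.38, 0.79), 5.38),
    "relevance": ((3.84, 0.90), (3.53, 0.85), 4.04),
    "human alignment": ((4.13, 0.98), (3.63, 0.85), 6.05),
}
N_PER_GROUP = 250  # assumption inferred from df = 498

for name, ((m1, s1), (m2, s2), t_reported) in reported.items():
    t, df = pooled_t_from_stats(m1, s1, N_PER_GROUP, m2, s2, N_PER_GROUP)
    print(f"{name}: t({df}) = {t:.2f} (reported {t_reported})")
```

Under this assumption the recomputed values are about 5.38, 3.96 and 6.09. The small gaps for relevance and human alignment are most likely due to the means and SDs being rounded to two decimals, since a 0.01 change in a mean difference shifts t by roughly 0.13 here.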

Figure 8.

A comparison of expert evaluation on accuracy.

Figure 9.

A comparison of expert evaluation on relevance.

Figure 10.

A comparison of expert evaluation on human alignment.

To assess whether model performance varies across question domains, another MANOVA was conducted on accuracy, relevance, and human alignment. The results show no significant multivariate effect of the topic, F(12, 1304.65) = 1.29, p = 0.218 (Wilks’ Lambda). Follow-up one-way ANOVAs also indicate no significant univariate differences across topics in accuracy (F(4, 495) = 1.32, p = 0.261), relevance (F(4, 495) = 2.18, p = 0.070), or human alignment (F(4, 495) = 0.30, p = 0.879). Additionally, there is no significant interaction between model type and question topic, F(12, 1291.42) = 1.09, p = 0.362, based on Wilks’ Lambda, indicating that the enhanced model’s advantage over the raw model is stable across different topics.
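The fractional denominator degrees of freedom above come from Rao's F approximation to Wilks' Lambda. The sketch below implements that standard approximation; the function names are ours, and the inputs (three dependent variables, 500 ratings in total, hence error df of 498, 495 and 490 for the model-type, topic and 2 × 5 interaction models) are our assumptions, chosen to be consistent with the F(4, 495) univariate tests.

```python
import math


def rao_wilks_df(p, df_hyp, df_err):
    """Degrees of freedom of Rao's F approximation to Wilks' Lambda.

    p      -- number of dependent variables
    df_hyp -- hypothesis degrees of freedom of the tested effect
    df_err -- error degrees of freedom
    """
    w = df_err + df_hyp - (p + df_hyp + 1) / 2
    denom = p**2 + df_hyp**2 - 5
    t = math.sqrt((p**2 * df_hyp**2 - 4) / denom) if denom > 0 else 1.0
    df1 = p * df_hyp
    df2 = w * t - (p * df_hyp - 2) / 2
    return df1, df2, t


def rao_f_from_lambda(wilks_lambda, p, df_hyp, df_err):
    """Approximate F statistic (and its dfs) for a given Wilks' Lambda."""
    df1, df2, t = rao_wilks_df(p, df_hyp, df_err)
    root = wilks_lambda ** (1 / t)
    return (1 - root) / root * df2 / df1, df1, df2


for label, df_hyp, df_err in [("model type", 1, 498),
                              ("topic", 4, 495),
                              ("model x topic", 4, 490)]:
    df1, df2, _ = rao_wilks_df(3, df_hyp, df_err)
    print(f"{label}: F({df1}, {df2:.2f})")
```

With these inputs the function reproduces the reported degrees of freedom, (3, 496), (12, 1304.65) and (12, 1291.42), which supports reading the analyses as a one-way model-type MANOVA, a one-way topic MANOVA and a two-way model × topic MANOVA.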

Figure 8, Figure 9 and Figure 10 compare model performance in terms of accuracy, relevance, and human alignment across the five topics. The enhanced LLM consistently outperforms the raw model in all domains, in line with the absence of a model-by-topic interaction. In particular, the enhanced model provides more precise and contextually appropriate responses while adhering more closely to ethical standards, societal norms, and user expectations.

6. Conclusions, Limitations, and Future Directions

This study designs and evaluates a domain-specific LLM with an enhanced RAG framework for biological UAV swarm control. In particular, the enhanced RAG framework uses hybrid retrieval, combining vector search with traditional keyword search for context retrieval, and introduces a reranking module to further improve retrieval accuracy. In addition, this study proposes an element-based document chunking strategy, which accounts for document structure and preserves the information in each element, to build an effective domain knowledge base for biological UAV swarm control. Finally, this study conducts automatic and expert evaluations on both public benchmark datasets and a private UAV domain dataset to evaluate our domain-specific LLM.
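The element-based chunking idea can be sketched as follows. This is our illustration, not the authors' implementation: we assume documents have already been parsed into typed elements (the `Element` class and its `kind` labels are hypothetical), and we assume chunks are cut at section titles and at a hypothetical `max_chars` budget, but never inside an element. Each chunk is prefixed with its section heading so that, for example, a table separated from its surrounding text still carries its context.

```python
from dataclasses import dataclass


@dataclass
class Element:
    kind: str  # hypothetical labels, e.g. "Title", "NarrativeText", "ListItem", "Table"
    text: str


def element_chunks(elements, max_chars=1000):
    """Group parsed elements into chunks without ever splitting an element.

    A title closes the current chunk and becomes the heading prefixed to every
    chunk of its section. Within a section, a chunk is closed when adding the
    next element would push its body (heading excluded) past max_chars; an
    element longer than max_chars ends up in a chunk of its own.
    """
    chunks = []
    heading, body, body_size = "", [], 0

    def flush():
        if body:
            prefix = heading + "\n" if heading else ""
            chunks.append(prefix + "\n".join(e.text for e in body))

    for el in elements:
        if el.kind == "Title":
            flush()
            heading, body, body_size = el.text, [], 0
            continue
        if body and body_size + len(el.text) > max_chars:
            flush()
            body, body_size = [], 0
        body.append(el)
        body_size += len(el.text)
    flush()
    return chunks
```

Unlike fixed-size chunking, no table or list item is cut in half, which is the property the element-based strategy is meant to preserve.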

This study has several limitations. First, it focuses on designing domain-specific LLMs by introducing the RAG framework, leaving room for improving the base model itself, for example through pre-training or fine-tuning. Second, incorporating domain knowledge into the base model via internalized learning could improve question-answering performance, and the quality and richness of the knowledge base for biological UAV swarm control could be further extended. Third, a more comprehensive comparison with alternative module algorithms and other recent RAG variants in professional domains, such as Self-RAG [34] and Retrieval-Augmented Fine-Tuning (RAFT) [35], would enhance the generalizability of this study.

Notwithstanding these limitations, this study enriches domain specialization techniques for LLMs and underscores the potential of RAG with a domain knowledge base to augment general-purpose LLMs in the field of biological UAV swarm control: (1) Improving the performance of the RAG framework: Our enhancement focuses primarily on enriching retrieval methods and introducing a reranking module. Alongside vector search, the framework performs keyword search in parallel. After initial context retrieval, a reranking module re-evaluates similarity using topic modeling and a cross-encoder model, validating the relevance between context and queries. The final chunk ranking is obtained through reciprocal rank fusion and passed to the base model together with the query. This optimization improves retrieval accuracy, providing the domain-specific LLM with accurate reference material and enabling it to answer questions more effectively. (2) Proposing a domain-specific knowledge base construction method: Our main contribution here is a new document chunking scheme. For complex, unstructured documents, the study employs an element-based chunking method, which preserves element information more completely than traditional fixed-size chunking. (3) Evaluating on both public and private datasets to gain comprehensive insight into biological UAV swarm control: This study conducts automatic and expert evaluation experiments on a public benchmark dataset and a self-built biological UAV swarm control dataset. The results demonstrate that our domain-specific LLM outperforms the base model. Additionally, ablation experiments analyze the effectiveness of individual modules, clarifying their advantages on professional knowledge-intensive tasks.
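The rank-fusion step in (1) can be illustrated with reciprocal rank fusion (RRF), the standard technique we assume the fusion step refers to. In this sketch each chunk's fused score is the sum of 1 / (k + rank) over the ranked lists it appears in. The constant k = 60 is the commonly used default and an assumption here, and which lists are fused (the vector and keyword hits, the topic-model and cross-encoder rerankings, or both) is not specified in the text, so the function simply fuses whatever ranked lists it is given. The chunk IDs are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of chunk IDs with reciprocal rank fusion.

    Each chunk scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1; a list that omits a chunk contributes nothing.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by chunk ID for determinism.
    return sorted(scores, key=lambda c: (-scores[c], c))


vector_hits = ["c3", "c1", "c7"]
keyword_hits = ["c1", "c9", "c3"]
reranker_hits = ["c1", "c3", "c9"]
print(reciprocal_rank_fusion([vector_hits, keyword_hits, reranker_hits]))
# ['c1', 'c3', 'c9', 'c7']
```

Because RRF uses only ranks, it needs no calibration between the very different score scales of vector similarity, keyword matching and a cross-encoder.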

Author Contributions

Conceptualization, J.-X.H. and L.C.; funding acquisition, J.-X.H.; methodology, L.C. and L.M.; investigation, L.C. and L.M.; data curation, L.C.; supervision, J.-X.H.; writing—original draft preparation, L.M.; writing—review and editing, J.-X.H., L.C. and L.M. All authors have read and agreed to the published version of the manuscript.

Funding

The work described in this paper was partially supported by grants from the National Natural Science Foundation of China (Nos. 72472008, 71974010).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Appendix A.1

This appendix contains all sample case questions and their answers from the raw model and our enhanced model.

| Case question 1. On what sensory information do swarm entities base their decisions, and how does this relate to biological organisms? |

| Raw model’s response to question 1: Swarm entities, such as insects or birds, often make decisions based on sensory information from their surroundings. For example, an insect might use its sense of vision to detect food sources or potential threats, or a bird might use its sense of smell to locate a mate or identify a predator. In some cases, swarm entities may also use information from the actions or behaviors of other members of their swarm. For example, a bird might follow the lead of other birds in a flock, or an insect might rely on the signals or behaviors of its fellow insects to make decisions about where to go or what to do. This approach to decision-making is similar to that used by some biological organisms, such as social insects or fish. For example, some species of ants rely on chemical signals from other ants to coordinate their activities and make decisions about where to forage for food. Similarly, some fish use visual cues, such as the movement of other fish or the shape of the water column, to navigate and make decisions about where to go. Overall, swarm entities and other biological organisms often rely on a combination of sensory information from their surroundings and the actions and behaviors of other members of their group to make decisions about how to behave. |

| Enhanced model’s response to question 1: Swarm entities base their decisions on various sensory information that allows them to interact with their environment and each other. This sensory information can include, but is not limited to, the following:

Notes:

|

| Case question 2. How many neighbors are taken into account in UAV swarm control? |

| Raw model’s response to question 2: In UAV swarm control, the number of neighbors that are taken into account can vary depending on the specific application and control algorithm. Generally, the number of neighbors is a hyperparameter that can be set by the controller. For example, in a decentralized control algorithm, the controller may use a fixed number of neighbors or may dynamically adjust the number of neighbors based on the current state of the swarm. In a centralized control algorithm, the number of neighbors may be set to a fixed value or may be adjusted based on the current state of the swarm. The number of neighbors can affect the performance of the control algorithm, as it determines the level of cooperation between the UAVs in the swarm. A larger number of neighbors can lead to faster convergence and more stable behavior, but may also require more computational resources and may be less effective in dealing with large swarms. A smaller number of neighbors can be more efficient, but may require more careful tuning and may be more susceptible to outliers and other disturbances in the swarm. |

| Enhanced model’s response to question 2: The number of neighbors considered in UAV swarm control varies depending on the algorithm, communication constraints, and specific application requirements. Here’s a concise breakdown:
1. Algorithm Type:
- Topological Neighborhood: Fixed number of nearest neighbors (e.g., 3–8), regardless of distance. Inspired by biological systems like bird flocks or fish schools¹.
- Metric Neighborhood: All neighbors within a defined radius. The actual count depends on swarm density and communication range².
2. Common Ranges:
- Many practical implementations use 4–6 neighbors for balance between cohesion and computational efficiency.
- Some systems dynamically adjust based on swarm density or task demands (e.g., collision avoidance vs. formation keeping)³.
3. Design Factors:

Notes:

|

| Case question 3. What is the speed of behavior propagation throughout the swarm? |

| Raw model’s response to question 3: The speed of behavior propagation throughout a swarm can vary depending on the specific swarm and the behavior being propagated. In general, the speed of behavior propagation can be influenced by factors such as the size of the swarm, the density of the swarm, the communication channels used by the swarm, and the behavior being propagated itself. For example, in some swarms, behavior may propagate quickly due to high density and efficient communication channels. In other swarms, behavior may propagate more slowly due to lower density or less efficient communication channels. It is also worth noting that the speed of behavior propagation can be influenced by external factors such as environmental changes or disturbances. Overall, the speed of behavior propagation throughout a swarm can be difficult to predict and can vary significantly depending on the specific swarm and situation. |

| Enhanced model’s response to question 3: The speed of behavior propagation throughout a UAV swarm can depend on several factors, including the communication protocol used, the size of the swarm, and the characteristics of the swarm dynamics. In general, the speed of behavior propagation is typically much faster than the speed of information dissemination. For example, in a decentralized control algorithm, the behavior of each UAV is determined locally and then broadcast to its neighboring UAVs, which can propagate the behavior to other neighboring UAVs quickly¹. However, the speed of behavior propagation can be limited by several factors, such as the communication bandwidth, the network latency, and the computational resources available to the UAVs². For example, if the communication bandwidth is low, the behavior of UAVs may not propagate quickly enough to respond to changes in the swarm dynamics. If the network latency is high, the behavior of UAVs may not propagate quickly enough to respond to changes in the swarm dynamics in real-time³. Overall, the speed of behavior propagation is an important consideration in UAV swarm control, as it determines how quickly the swarm can adapt to changes in the environment and how effectively the controller can coordinate the behavior of the UAVs.

Notes:

|

| Case question 4. Are there known cases of swarm instability? |