1. Introduction

The increasing proliferation of unmanned aerial systems (UASs), ranging from small consumer drones to larger tactical platforms, has led to a surge in both legitimate and malicious applications. While drones offer substantial benefits across various sectors, including agriculture, logistics, security, and emergency response, their misuse by criminal and terrorist actors presents significant security concerns. Reports of drones being used for smuggling contraband, conducting unauthorized surveillance, interfering with critical infrastructure, and executing targeted attacks have underscored the urgency of developing effective countermeasures [

1,

2,

3]. Law enforcement agencies and security forces face the challenge of detecting, tracking, identifying, and mitigating these threats in a rapidly evolving technological landscape.

Despite the availability of numerous counter-drone solutions on the market [

4,

5,

6,

7], no widely accepted standardized methods exist for assessing their performance. This lack of standardization complicates the efforts of acquisition agencies, law enforcement entities, and other stakeholders tasked with selecting and deploying appropriate counter-drone technologies. Without objective, comparable data, end users struggle to match specific operational requirements with the capabilities of available counter-drone systems. As a result, the selection and procurement process remains fragmented, often leading to suboptimal solutions that may fail to address real-world threats effectively.

To bridge this gap, the COURAGEOUS project [

8] has developed a pre-standard document in the form of a CENELEC Workshop Agreement (CWA). This document, designated CWA 18150 [

9], outlines a standardized test methodology for assessing the performance of counter-drone systems under controlled and relevant operational conditions. The methodology defines a set of evaluation scenarios designed to replicate real-world operational challenges, allowing for fair qualitative and quantitative comparisons of different counter-drone solutions. By adopting this standardized framework, security agencies, procurement bodies, and industry stakeholders can better understand system capabilities and limitations, ultimately improving the selection and deployment of counter-drone technologies.

The purpose of this paper is to provide a structured overview of the CWA 18150 document, serving as both an abstract and an introduction to the standardized test methodology it defines. This paper details the key aspects of performance assessment, including risk analysis, test environments, operational requirements, and evaluation metrics. It aims to offer a comprehensive understanding of the framework developed under the COURAGEOUS project and to facilitate its broader adoption within the security and defense communities. The full CWA document, which contains detailed methodology and implementation guidelines, can be accessed at

https://www.cencenelec.eu/media/CEN-CENELEC/CWAs/ICT/cwa-18150.pdf (accessed on 22 April 2025).

2. Related Work

2.1. Counter-Drone

The technical development of new counter-drone solutions is a common subject of research in the counter-drone area. The projects H2020-ALFA [

10], H2020-ALADDIN [

11], and H2020-SafeShore [

12] are noteworthy examples, as they all drone detection systems created for particular use cases. The creation of an effective drone detection system is generally fraught with two major challenges. First, regardless of the sensor technology employed, drone platforms typically have a very small cross-section and detection baseline [

13]. In fact, drones employ common radio transmission frequencies, have a small sound signature (from a relevant distance), a small visual/infrared signature, a small RADAR cross section, etc. Furthermore, it is challenging to eliminate false positives because the signatures of many drones and birds are fairly similar [

14].

RADAR [

15], acoustics [

16], visual [

17], infrared [

18] (thermal and short-wave), radio spectrum sensing [

19], lidar [

20], and other sensing modalities can be employed to tackle the drone detection problem. Nevertheless, due to the difficulty of solving the problem under real-world operating conditions, the majority of current solutions rely on a combination of various sensing techniques to solve the drone detection problem [

13] and combine conventional detection and tracking techniques [

21] that come from computer vision to accomplish multi-sensor tracking.

The incorporation of counter-drone solutions and practices into standard operating procedures for law enforcement agencies is also a crucial factor to take into account. The projects SkyFall [

22] and DroneWise [

23] are pertinent in this context; they examine various counter-drone systems, integrate the best available systems in a law enforcement organization training, and provide a number of useful end-user-focused measures to enhance the response to UAS terrorist attacks.

2.2. Standard Testing for Counter-Drone Solutions

In terms of standard test methodology development for counter-drone solutions, there have been multiple developments in the past. To state the main problem: two opposing needs can be found regarding the creation of standardized test techniques for assessing drone detection systems’ performance [

13]. In order to identify the limitations of the system being tested, it is necessary to carefully regulate the test settings because drone detection systems typically rely on intricate data fusion and the processing of sensor data. This first need calls for repeatable tests in controlled environments. However, since drone detection systems must function around the clock and in all weather situations, it is necessary to evaluate how well they operate in a variety of environmental settings. This second need calls for the evaluation of the systems in a non-controlled operational environment. Since these constraints conflict with one another, a standardized test methodology must carefully balance these two categories of needs. Therefore, the goal is to identify a validation approach that meets the needs of the platform developers for a quantitative statistically meaningful validation as well as the end users’ requirement for a qualitative operational validation of the system [

13].

In the realm of robotics, for example, the National Institute of Standards and Technology (NIST) in the United States has previously recommended such qualitative and quantitative validation approaches [

24]. Based on the work performed at NIST, a first qualitative and quantitative validation methodology was presented in [

25] and validated in [

26]. This methodology was applied for the first time in the framework of standardized counter-drone testing in the years 2017 and 2018 [

27] in the framework of the SafeShore project [

12]. In that period, the National Nuclear Security Administration of the United States Department of Energy also conducted research in the United States to build a counter-drone testing and evaluation methodology [

28]. Although it never actually became a standard, the test approach outlined defines test techniques, performance measurements, UAS types tested, critical variables, and the required data analysis to reliably assess the capabilities of counter-drone technology.

The absence of a standard test method started becoming problematic from around the year 2020, when multiple counter-drone solutions became available on the market, and the performance capabilities of these systems became harder and harder to differentiate for end users. Law enforcement agencies and other government actors conducted various counter-drone equipment tests in critical infrastructure protection scenarios [

29], but the problem is that these trials are highly costly for all actors involved, and results are not easily transferable to other environments.

This challenge has led to multiple standardization efforts across international organizations. EUROCAE WG-115 [

30] has been actively developing standards such as ED-286, which provides an Operational Services and Environment Definition (OSED) for counter-drone operations in controlled airspace, and ED-322, which sets System Performance and Interoperability Requirements for Non-Cooperative UAS Detection Systems. These efforts focus specifically on airport environments and happen in close coordination between Europe (EUROCAE) and the United States, where the Federal Aviation Administration (FAA) has initiated Special Committee SC-238 to establish comprehensive evaluation guidelines for counter-drone technologies. By working together, EUROCAE WG-115 and FAA SC-238 aim to ensure the safe integration of counter-drone systems within existing aviation structures while maintaining effective detection and mitigation capabilities.

NATO is another key player in establishing counter-drone test procedures. (i) Establishing a counter-drone community; (ii) policy, concepts, doctrine, tactics, techniques, and procedures; (iii) standardization; and (iv) research, development, and operation activities are among the topics being worked on by the NATO counter-drone Working Group. The annual TIE exercises, which are designed to promote interoperability among various counter-drone assets, are a significant example [

31].

In Germany, DIN is working on DIN 5452-9 [

32], which focuses on drone detection, as well as an attachment that defines conformance test procedures. These efforts aim to create a robust testing framework that allows for systematic evaluation of counter-drone effectiveness. Similarly, the UK’s National Protective Security Authority (NPSA) is developing a “counter-drone Testing and Evaluation Standard”, which aims to provide a structured methodology for performance assessment.

The International Standardization Organization ISO is also contributing to the standardization landscape with ISO/CD 16746 [

33], which provides guidance to end-users on the deployment of counter-drone equipment, and ISO/CD 16747 [

34], which supports manufacturers in producing effective and compliant counter-drone solutions. These standards seek to harmonize best practices and technical specifications, ensuring consistency and interoperability across different jurisdictions.

These initiatives demonstrate the global recognition of the need for standardized test methodologies. However, disparities in national regulations and differing operational requirements present ongoing challenges. The CWA 18150 developed under the COURAGEOUS project aims to maximize the usage of work being carried out in many countries and complements these efforts by providing a structured approach to performance evaluation while ensuring compatibility with emerging international standards. By aligning with these ongoing standardization initiatives, CWA 18150 seeks to provide acquisition agencies and security stakeholders with a reliable framework for assessing and comparing counter-drone technologies in a transparent and repeatable manner.

2.3. Organization of This Paper

The remainder of this paper is organized as follows. In order to provide a clear grasp of the problem,

Section 3 provides an analysis of incidents related to UAS and an analysis of identified gaps in counter-drone capabilities. To further analyze the current playing field,

Section 4 investigates the current technologies and methods used for counter-drone operations. Based on the groundwork in the previous sections,

Section 5 proposes 10 standard counter-drone scenarios. These standard scenarios will serve as a guideline throughout the whole standardization process. For each of the standard scenarios, a risk analysis is performed, as explained in

Section 6. In a parallel line of work and through discussions with end-users of counter-drone solutions, a set of

operational needs for counter-drone coverage were devised in

Section 7, together with a series of

counter-drone system performance requirements and metrics. Based on all this input, a counter-drone System Evaluation Method was developed in

Section 8. This methodology was validated during three large-scale trials in Greece, Belgium and Spain, as discussed in

Section 9.

Section 10 concludes this paper by investigating and discussing the results obtained and by identifying remaining gaps and directions for future research.

3. Incidents Analysis and Identification of Gaps

3.1. Incident and Threat Analysis

The increasing proliferation of drones has led to a rise in security concerns globally. Unauthorized drone activities have been reported in various environments, ranging from airports to government facilities, often leading to operational disruptions or security breaches. In order to obtain a good overview of the problem, a systematic review of more than 200 drone-related incidents in the European territory was performed, analyzing recent drone incidents, categorizing threat actors, and highlighting the gaps in counter-drone measures:

Targeted Locations: Airports, government buildings, military installations, and critical infrastructure remain prime targets for unauthorized drone activities.

Incident Types: The most common incidents include unauthorized surveillance, smuggling, near-miss collisions with manned aircraft, and weaponized drone deployments.

Operational Environments: Dense urban areas present unique challenges due to radio frequency (RF) congestion and limited line-of-sight detection capabilities.

Technological Capabilities: Advances in commercial drone technology, including autonomous navigation and payload adaptability, have outpaced current counter-drone countermeasures.

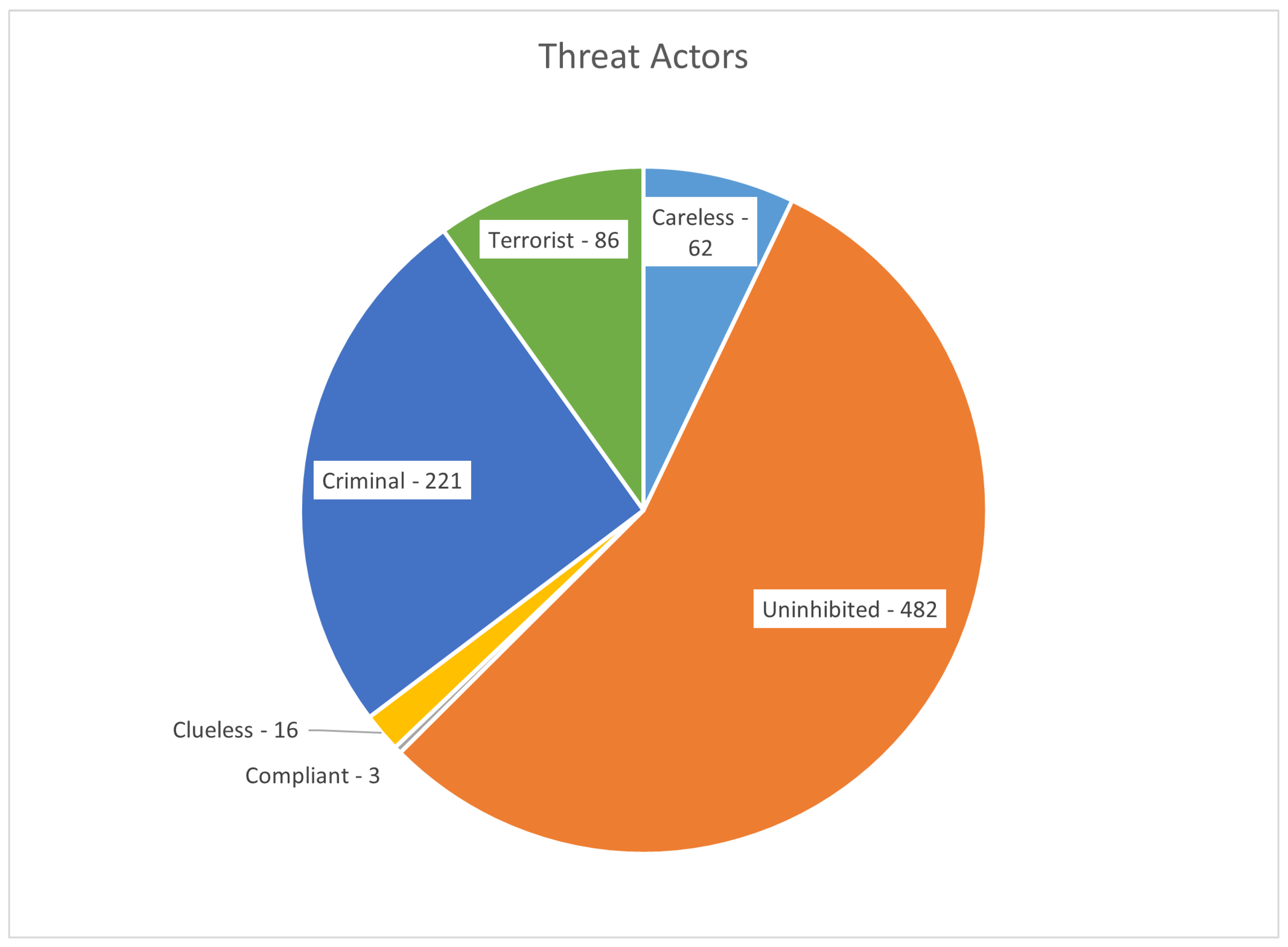

The analysis of drone-related incidents reveals that the majority of cases involve uninhibited operators, followed by criminal activities.

Figure 1 categorizes these threat actors into six main groups:

Uninhibited (55%): Operators who frequently violate regulations but do not necessarily have malicious intent.

Criminal (25%): Drones used for illicit activities such as smuggling contraband.

Terrorist (10%): Drones employed in attacks or reconnaissance by hostile entities.

Careless (7%): Operators who occasionally breach airspace restrictions unintentionally.

Clueless (2%): Operators unaware of regulations, often leading to airspace violations.

Compliant (0.3%): Law-abiding drone users who adhere to regulations.

The data indicate that while regulatory violations are common, a significant number of incidents involve the intentional misuse of drones for criminal or terrorist purposes.

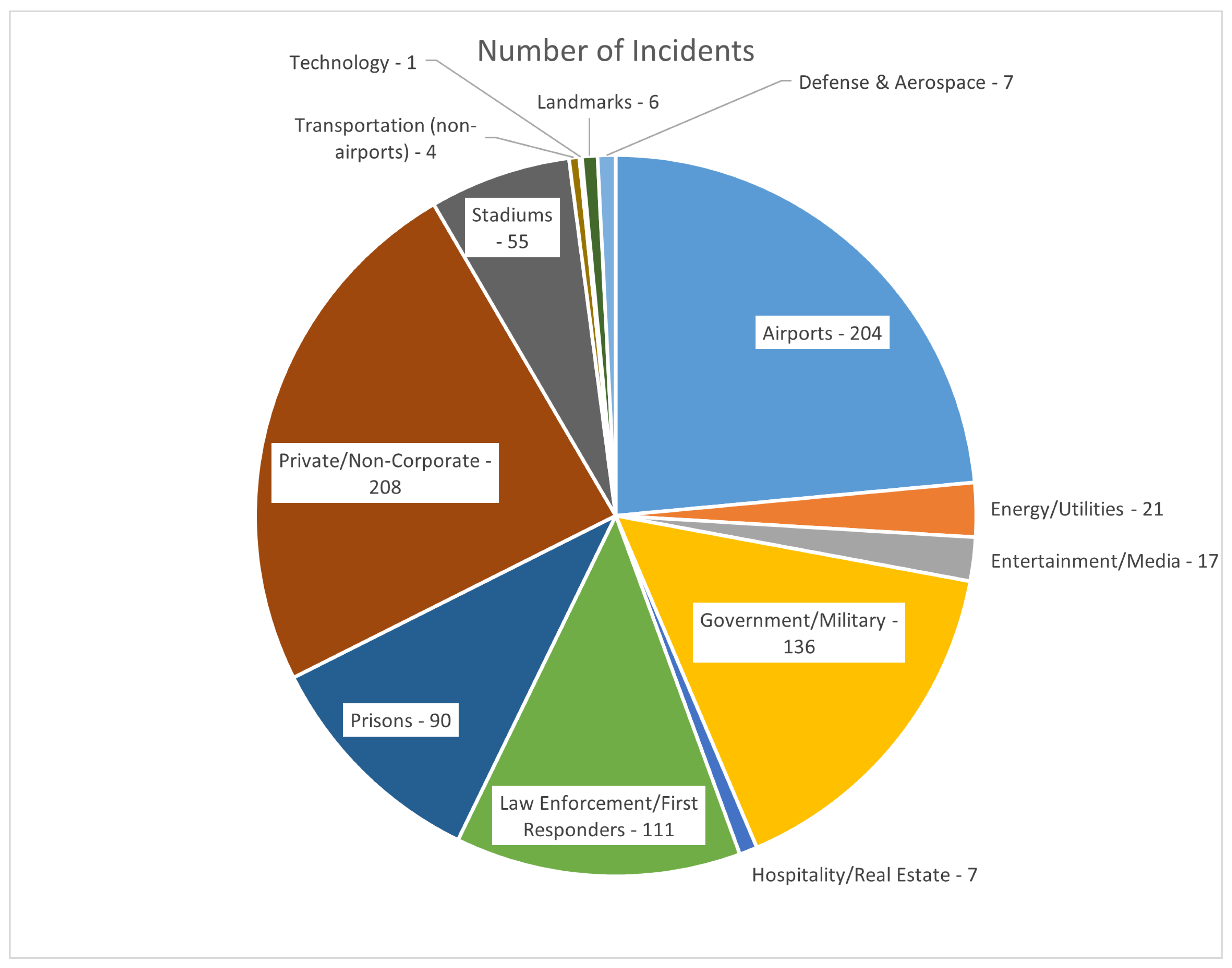

Drone-related incidents have been observed across various sectors.

Figure 2 provides an overview of the distribution of incidents across different environments:

Private/Non-Corporate (24%): Drones flown over residential areas, often leading to privacy violations.

Airports (24%): Incidents causing flight delays and safety concerns.

Government/Military (16%): Unauthorized surveillance or potential attacks.

Law Enforcement/First Responders (13%): Interference with emergency operations.

Prisons (10%): Drones used for smuggling contraband.

Stadiums (6%): Uncontrolled drone operations during events.

The high occurrence of incidents in airports and private properties suggests an urgent need for robust counter-drone strategies.

3.2. Gap Analysis for Counter-Drone Measures

Despite advancements in drone detection and mitigation, several gaps remain unaddressed.

Legislation governing counter-drone operations varies significantly across jurisdictions, creating ambiguity regarding permissible countermeasures. Additionally, free-access areas, such as public spaces and private properties, pose enforcement difficulties due to privacy and regulatory limitations. Furthermore, there is no unified response framework for handling drone incursions.

Despite the availability of various DTI technologies, only 5% of the reported incidents indicate the presence of an operational detection system. Furthermore, these systems exhibit reduced efficacy in RF-dense environments, adverse weather conditions, and scenarios involving multiple airborne objects.

While off-the-shelf drones account for the majority of incidents, the increasing prevalence of modified and custom-built UAVs presents a growing concern. Such drones often utilize non-standard communication protocols, rendering conventional RF-based detection methods less effective.

A significant proportion of incidents involve drones carrying undefined payloads, raising uncertainties regarding their intent and threat level. Distinguishing between benign and hostile drones remains a major challenge, especially in areas where multiple UAS operate simultaneously.

A unified incident reporting mechanism is lacking across member states, hindering data-driven policy formulation and response coordination. The absence of structured information-sharing frameworks limits the development of proactive countermeasures.

4. Review of Current Counter-Drone Frameworks

The field of counter-drone has seen significant advancements, driven by the increasing use of drones for both commercial and military applications. This section provides an overview of the existing detection, tracking, and identification (DTI) technologies and their combinations in counter-drone solutions.

4.1. Technologies for Detect, Track, and Identify (DTI) in Counter-Drone Solutions

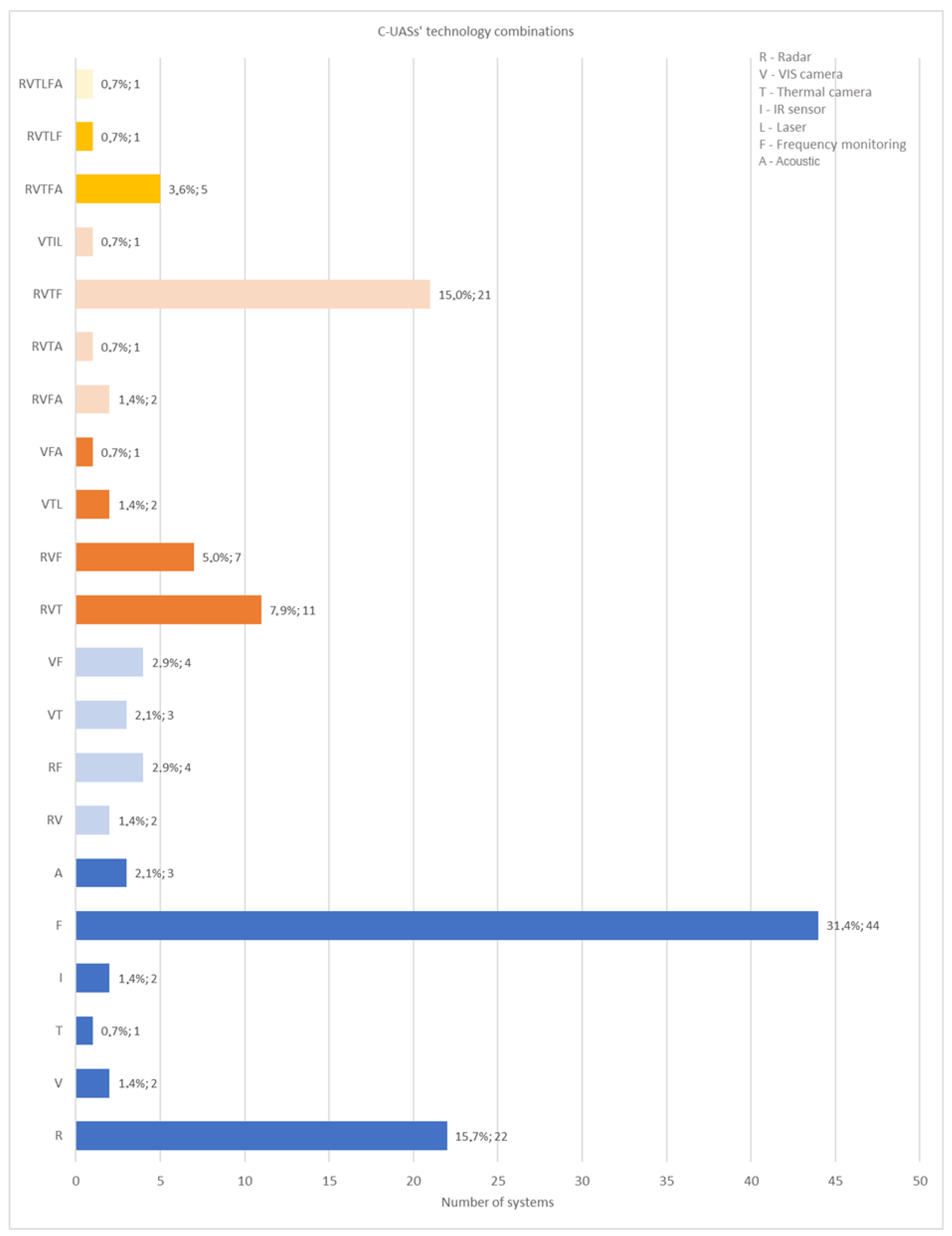

For this study on counter-drone technologies, information on 260 anti-drone systems was initially collected. This initial dataset was downscaled to 144 systems based on the level of detail and the appropriateness of the data that could be obtained for the systems. These counter-drone solutions utilize multiple technologies for detecting, tracking, and identifying drones.

Figure 3 illustrates the distribution of these technologies. The most widely used methods include the following [

35].

Microwave radars: Employed in 55% of the systems, radars detect drones through active radio wave emissions and reflections. Among their principal advantages is their capability to operate independently of lighting conditions, enabling continuous operation both day and night. Radars also maintain functionality under moderate adverse weather conditions, although performance may be degraded in cases of heavy rain, fog, or snow. A significant strength lies in their ability to provide not only detection but also precise measurements of range, radial velocity, and, in some configurations, altitude. Furthermore, microwave radars are capable of wide-area surveillance, especially when integrated with mechanically or electronically scanned antenna arrays. However, radars exhibit certain limitations. The detectability of small drones, often characterized by minimal radar cross-sections, remains challenging, particularly when using lower-frequency radars. Atmospheric attenuation, especially at higher frequencies, can significantly reduce detection ranges in adverse weather. Moreover, radars are susceptible to electromagnetic interference, both accidental and deliberate (e.g., jamming), which can compromise their reliability. Lastly, as active emitters, radar systems can themselves be detected by adversaries, posing operational security risks.

Visible light (VIS) cameras: Used in 47% systems, VIS cameras enable optical detection and visual tracking, very often as a secondary sensing modality to support the identification of the drone threat or to provide a visual cue for the operators. Visible light (VIS) cameras are widely employed in detection and identification tasks due to their ability to capture detailed imagery that is easily interpretable by human operators and compatible with automated image recognition algorithms. They offer the advantage of high spatial resolution, enabling the identification of small targets at considerable distances, provided that appropriate optics are used. VIS cameras are passive sensors and thus do not reveal their position, making them suitable for covert operations. Nevertheless, their effectiveness is highly dependent on ambient illumination. In low-light conditions, such as nighttime or heavy cloud cover, their detection capability diminishes markedly unless supplemented with artificial illumination, which itself compromises operational discretion. Additionally, environmental factors such as haze, fog, and precipitation can significantly impair VIS camera performance, reducing both detection probability and identification accuracy.

Thermal imaging cameras: Integrated into 35% of systems, these cameras detect drones based on infrared signatures. Thermal imaging cameras provide a complementary detection capability by exploiting differences in infrared radiation emitted by objects of varying temperatures. They enable effective surveillance in complete darkness and can detect drones based on the thermal signatures of their motors, batteries, and other heat-generating components. Thermal cameras are less sensitive to visual obstructions like smoke or camouflage, enhancing their utility in degraded visual environments. However, their performance can be affected by environmental conditions such as heavy rain, dense fog, or high ambient temperatures, which reduce thermal contrast. Furthermore, thermal cameras generally offer lower spatial resolution compared to VIS cameras, complicating the task of precise identification. Their field of view is often narrower when designed for long-range detection, necessitating careful integration into multi-sensor systems for comprehensive coverage.

Frequency monitoring devices: Present in 64% systems, these devices detect and analyze radio frequency emissions from drones and their controllers. Frequency monitoring devices constitute a passive detection technology based on the interception and analysis of radio frequency (RF) communications between drones and their operators. Their key advantage lies in their ability to detect drones beyond visual or radar line of sight, as RF signals can propagate through certain obstacles. Additionally, some systems can exploit RF fingerprints or decode transmitted telemetry to infer the type and status of a drone. Frequency monitoring is inherently stealthy, posing no risk of revealing the presence of the detection system. Nevertheless, the technique is limited to drones actively emitting signals within monitored frequency bands. Drones operating autonomously, with pre-programmed routes and no active communication links, remain undetectable. Moreover, urban environments saturated with RF signals present significant challenges, including high false alarm rates and difficulty in accurate localization.

Acoustic sensors: Used in 10% systems, these sensors capture and analyze the sound signatures of drone propellers. Acoustic sensors offer another passive method for drone detection, relying on the identification of characteristic sound signatures generated by drone rotors and motors. Their advantages include independence from electromagnetic spectrum constraints and the ability to detect drones at relatively short ranges without line of sight, depending on environmental conditions. Acoustic arrays can be compact and rapidly deployable, making them suitable for mobile operations. However, acoustic detection suffers from severe limitations. Environmental noise, such as wind, vehicular traffic, or industrial machinery, can mask drone signatures and lead to missed detections or false alarms. Additionally, the effective detection range of acoustic systems is limited, typically to a few hundred meters under ideal conditions, which may be insufficient for early threat warning in many operational scenarios.

Infrared (IR) sensors and lidar systems: These are less commonly used, with 2% and 4% of implementations, respectively. Infrared (IR) sensors, distinct from thermal cameras in this context, typically refer to simpler, often single-pixel or low-resolution devices detecting changes in infrared radiation across a scene. They are advantageous for detecting warm objects against cooler backgrounds and can serve as effective triggers for cuing higher-resolution systems. Like thermal cameras, they operate independently of visible light, providing nighttime capability. However, their lack of imaging capacity constrains their utility primarily to detection rather than classification or identification tasks. Furthermore, IR sensors share similar vulnerabilities to environmental factors such as fog and precipitation, which attenuate infrared radiation and degrade performance. Finally, lidar systems, employing laser light to measure distances to objects, offer high-precision three-dimensional mapping and object detection capabilities. Lidar can provide exact spatial positioning of detected objects, an advantage in cluttered environments where other sensors may struggle with false positives. It is especially effective for short- to medium-range applications where detailed scene understanding is critical. Nonetheless, lidar systems are active emitters and thus can potentially be detected by adversaries. Their performance is also sensitive to atmospheric conditions, with fog, rain, and snow, causing signal scattering and attenuation. Moreover, eye-safety considerations constrain the power of lidar emissions, potentially limiting operational range in certain contexts.

The prevalence of these technologies indicates a trend toward multi-sensor fusion for enhanced reliability and robustness.

Recent Trends in Data Fusion Algorithms for Detection and Tracking The field of sensor fusion for detection and tracking, especially in counter-drone applications, is rapidly evolving through both AI-based methods and enhancements of classical techniques. The objective is to increase system robustness, reduce false alarms, and provide continuous operational capability under varying environmental conditions. Key algorithmic approaches currently influencing the domain include the following.

Multi-Sensor Data Fusion with AI Augmentation

Recent C-UAS systems increasingly employ AI-enhanced sensor fusion, where outputs from multiple heterogeneous sensors (e.g., radar, EO/IR cameras, acoustic arrays, RF detectors) are merged using machine learning models. Neural-network-based fusion algorithms, particularly Convolutional Neural Networks for image-based inputs and Recurrent Neural Networks or Transformers for time-series sensor data, are used to correlate and refine detection and tracking information.

These methods train models on multimodal datasets to recognize complex threat patterns, compensate for sensor-specific weaknesses, and provide probabilistic threat classification. AI helps handle missing or inconsistent data, dynamically weighting sensor contributions depending on contextual factors like weather or terrain.

Kalman Filter and Its Modern Extensions

The Kalman Filter and its variants, such as the Extended Kalman Filter and the Unscented Kalman Filter, remain fundamental in tracking moving objects. In C-UAS systems, Kalman-based methods are used to estimate drone positions and velocities by fusing inputs from radar, EO/IR, and RF systems.

Recent trends include Adaptive Kalman Filters, where the process and measurement noise covariances are adjusted online based on sensor reliability indicators. Additionally, Interacting Multiple Model Filters are employed to switch between different motion models (e.g., hover, fast maneuver, ballistic drop) depending on drone behavior.

Bayesian Data Fusion

Bayesian approaches, especially Bayesian Networks and Particle Filters, are prominent for managing uncertainty in detection and tracking. These algorithms model the probability distribution over possible drone states and update these probabilities as new sensor observations are received.

Modern particle filter implementations incorporate multi-hypothesis tracking to handle multiple drones and ambiguous observations, reducing misidentification rates. Bayesian fusion is also applied when combining conflicting sensor outputs, allowing soft evidence integration rather than binary decisions.

Dempster–Shafer Theory for Evidence Fusion

The Dempster–Shafer Theory of Evidence is increasingly used to fuse outputs from heterogeneous sensors in a way that explicitly models ignorance and conflicts between sensor reports.

This method provides belief intervals rather than point estimates, improving decision-making under uncertainty. It is particularly valuable when sensor reliability varies dynamically, such as in RF-denied or visually degraded environments.

Graph-Based and Topological Fusion

Emerging methods involve representing multi-sensor outputs as nodes and edges in a graph, allowing Graph Neural Networks or topological data analysis to find consistent tracking paths and correlations between detections.

Graph-based fusion is especially effective in complex scenarios with swarming drones or dense urban environments, where traditional filters struggle due to frequent occlusions and sensor dropout.

4.2. Combination of Technologies in Counter-Drone Solutions

Modern counter-drone solutions often integrate multiple detection technologies to improve detection probability and tracking accuracy.

Figure 4 presents the percentage distribution of different technological combinations within counter-drone solutions. The breakdown is as follows:

Single-technology systems: Fifty-three percent of counter-drone solutions rely on one detection method, primarily frequency monitoring or radar-based detection.

Two-technology systems: nine percent of solutions combine two methods, such as radar and VIS cameras.

Three-technology systems: Fifteen percent of solutions incorporate three distinct technologies, enhancing multi-modal detection.

Four or more technology systems: Twenty-three percent of solutions employ four or more technologies, typically integrating radars, cameras, RF monitoring, and additional sensors.

The trend toward multi-technology systems highlights the need for robust data fusion methodologies to effectively mitigate false positives and negatives.

Countermeasures and Mitigation Techniques

Upon the detection and identification of a drone threat, various mitigation techniques can be deployed:

Electronic Countermeasures: These include RF jamming, spoofing, and protocol manipulation to disrupt drone communications or take control of the aircraft.

Kinetic Solutions: Physical neutralization methods, such as net guns, interceptor drones, and high-energy laser weapons, are deployed in scenarios requiring the immediate elimination of threats.

Directed Energy Systems: Microwave-based and laser-based directed energy weapons provide non-contact neutralization by disabling drone electronics or structure.

4.3. Challenges and Considerations in Counter-Drone Technology Selection

Selecting an appropriate counter-drone system requires the consideration of multiple factors, including the following.

Detection range: Different technologies exhibit varying effectiveness depending on range constraints and environmental conditions.

Weather resistance: Radar and frequency monitoring are more resilient to adverse weather than optical and infrared-based systems.

False alarms: Acoustic sensors and frequency monitoring devices may generate false detections due to ambient noise or RF interference.

Deployment feasibility: Mobile, vehicular, and fixed installations require different logistical and operational considerations.

Integration with existing systems: Many counter-drone solutions must interface with existing security frameworks, requiring compatibility with software and hardware components.

Regulatory constraints: National and international regulations govern the use of certain detection and mitigation techniques, affecting system deployment.

Operational Environments: Urban settings present challenges due to RF congestion and the presence of obstacles, whereas open-field environments offer improved detection and engagement opportunities.

Advancements in UAS Technology: The increasing use of autonomous drones, encrypted communications, and low-observable designs necessitates the continuous evolution of counter-drone methodologies.

The current landscape of counter-drone technologies reflects an ongoing arms race between drone capabilities and counter-drone solutions. Future advancements in artificial intelligence, sensor fusion, and autonomous countermeasure deployment will play a critical role in enhancing the resilience and effectiveness of counter-drone frameworks.

5. Development of Standard Scenarios

5.1. The Need for Standard Scenarios

To ensure a comprehensive assessment of counter-drone capabilities, standardized scenarios must be developed. These scenarios provide a structured framework for testing detection, tracking, and identification (DTI) performance across diverse threat conditions. Without such standardization, comparisons between different counter-drone solutions remain inconsistent, hindering technological advancements and operational readiness. The establishment of well-defined scenarios enables researchers, security agencies, and policymakers to benchmark system effectiveness, identify limitations, and refine countermeasure strategies.

Standardization also supports regulatory efforts, helping define clear operational requirements and legal constraints for counter-drone deployment. By ensuring that different counter-drone technologies are tested under comparable conditions, authorities can establish baseline performance metrics and guidelines. Furthermore, standardized scenarios aid in developing training programs for law enforcement agencies, ensuring personnel are well-prepared to handle UAS threats effectively.

In addition to enhancing technical evaluations, standardized scenarios facilitate collaboration across international security agencies and industry stakeholders. By defining common testing parameters, organizations can share data and insights, driving collective improvements in counter-drone technologies and response strategies. The increasing complexity of drone threats underscores the need for a unified approach, making scenario standardization a crucial step in advancing counter-drone capabilities.

5.2. Methodology for Creating Standard Scenarios

The development of standardized scenarios within the COURAGEOUS project followed a systematic methodology incorporating multiple data sources and expert validations. The process involved four key steps.

Literature Review: An extensive review of existing counter-drone frameworks, threat assessments, and prior studies was conducted to identify recurring challenges and gaps in current methodologies.

Incident Analysis: A comprehensive analysis of past UAS-related security incidents was performed to extract relevant parameters influencing scenario design, such as environmental conditions, UAS characteristics, and operational constraints.

Stakeholder Input: Law enforcement agencies (LEAs) and other end users provided valuable insights through structured questionnaires and workshops, ensuring that the scenarios aligned with real-world security concerns.

Iterative Validation: The initial set of scenarios was refined through iterative discussions with experts and field trials, ensuring applicability across diverse operational contexts.

In each of these steps, a broad set of factors was considered, including drone size, speed, flight altitude, payload type, and operational intent. By incorporating these elements, the resulting scenarios comprehensively address the spectrum of UAS threats.

Beyond the methodological approach, statistical models and simulations were used to analyze potential drone threats under different conditions. Advanced modeling techniques, including Monte Carlo simulations, were applied to predict UAS behavior and potential countermeasure effectiveness. This data-driven approach ensures that the developed scenarios not only reflect historical incidents but also anticipate emerging threats in the rapidly evolving UAS landscape.

5.3. Overview of Standard Scenarios

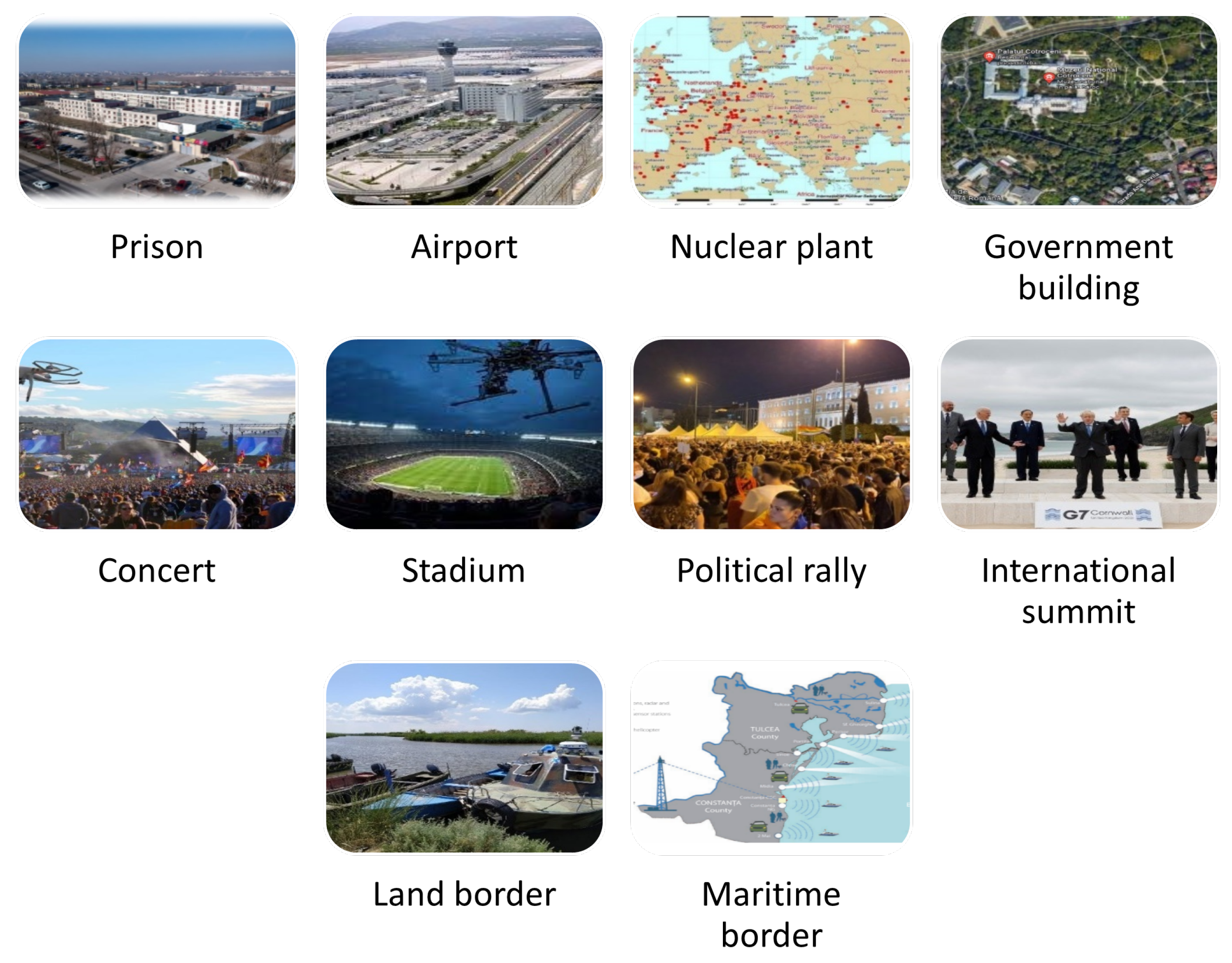

The standard scenarios developed within the COURAGEOUS framework are categorized into three main groups [

36]:

Sensitive Sites/Critical National Infrastructure,

Public Spaces Protection/Events, and

Border Protection (Land–Maritime). Each scenario reflects a plausible threat event, outlining key operational conditions and adversarial tactics.

The ten standardized scenarios are shown in

Figure 5 and can be described as follows.

Prison—Unauthorized drone activity attempting to smuggle contraband into a correctional facility.

Airport—A rogue UAS operating in restricted airspace, posing a collision risk to manned aircraft.

Nuclear Plant—Surveillance or potential sabotage by a hostile drone over critical energy infrastructure.

Government Building—Unauthorized UAS activity threatening national security at government premises.

Stadium—A drone attempting to disrupt a large public event, such as a sports match.

Outdoor Concert—Aerial surveillance or potential attack during a high-profile entertainment event.

Outdoor Political Rally—A drone deployed for surveillance or direct attack during a political gathering.

International Summit—Hostile UAS presence at a high-security diplomatic event.

Land Border—Surveillance or smuggling activity using drones to bypass border controls.

Maritime Border—UAS-assisted illegal crossing or surveillance in maritime security zones.

Each scenario is designed with specific parameters, including environmental conditions, drone capabilities, and the nature of the security challenge. These structured descriptions enable precise testing and evaluation of counter-drone performance under realistic threat conditions.

By standardizing these scenarios, a common reference framework is established that enhances interoperability, facilitates comparative assessments, and improves the overall effectiveness of counter-drone solutions. The scenarios provide a foundation for controlled testing environments and real-world field trials, helping refine counter-drone response strategies and operational protocols.

However, it is obvious that these standard scenarios do not cater to all use cases. This is why an open architecture was used in the development of the CWA pre-standard, enabling any user to come up with new scenarios based on the existing templates. Future research will also continue to adapt these scenarios based on evolving threats and technological advancements, ensuring that the standardized framework remains relevant and effective in countering UAS threats.

6. Risk Analysis and Risk Assessment Metrics

The risk assessment of potential UAS threats is crucial to ensuring effective mitigation strategies. A comprehensive risk assessment must consider the dynamic and evolving nature of UAS threats, which range from accidental airspace incursions to deliberate attacks. The risk evaluation process involves understanding the threat landscape, assessing vulnerabilities in existing defense mechanisms, and defining appropriate metrics to quantify risks and system performance.

This section presents the contributions in developing a systematic methodology for risk analysis in counter-drone applications, introducing a structured approach to evaluating threats posed by drones, and provides a set of standardized risk assessment metrics tailored to different operational contexts. By leveraging empirical data, expert inputs, and analytical models, the framework enhances the ability to quantify and respond to drone-related risks effectively.

6.1. Methodological Approach to Risk Assessment

The COURAGEOUS project adopted a multi-layered methodological approach to define and evaluate the risks associated with UAS threats. The process involved the identification of key risk factors through literature analysis, historical incident evaluation, and end-user consultations. A structured questionnaire was disseminated among stakeholders, including law enforcement agencies (LEAs) and security professionals, to systematically assess the likelihood and impact of various UAS threat scenarios.

The risk assessment model integrates two primary indicators:

Likelihood: The probability of an adversarial UAS operating within a given scenario.

Impact: The potential consequences of an unauthorized UAS action, taking into account physical, financial, and operational damages.

By combining these factors, a comprehensive risk matrix was developed. This matrix allows for the classification of drone threats into different risk categories, facilitating prioritization and resource allocation for countermeasures. The methodology further considers the role of UAS capabilities, such as payload capacity, flight endurance, and autonomy, in shaping the threat landscape.

The risk posed by UAS can be categorized into multiple dimensions, including technical, operational, and strategic risks. Technical risks involve limitations in detection, tracking, and neutralization capabilities, while operational risks stem from the complexity of deploying counter-drone systems in urban and contested environments. Strategic risks pertain to policy, regulatory, and legal challenges that influence the effectiveness of counter-drone solutions.

A structured risk assessment framework is required to systematically evaluate the probability and impact of UAS threats. The Specific Operations Risk Assessment (SORA) methodology is commonly used to analyze these risks, providing a step-by-step approach to evaluate operational safety and mitigation strategies. SORA identifies risk factors such as the likelihood of drone incursions, the potential consequences of an attack, and the effectiveness of existing countermeasures.

A core innovation in the framework is the establishment of a dynamic risk evaluation model that continuously updates based on real-time threat intelligence and evolving UAS technology trends. This ensures that the risk assessment process remains relevant and adaptable to emerging threats.

6.2. Risk Assessment Metrics

To effectively quantify risk levels, several key performance indicators (KPIs) must be defined. Detection probability measures the ability of a counter-drone system to identify unauthorized drones within a defined range and timeframe. The false alarm rate quantifies the occurrence of erroneous detections that may lead to operational inefficiencies. Response time evaluates the system’s ability to detect, track, and neutralize a threat before it reaches its target. The mitigation success rate reflects the effectiveness of countermeasures in neutralizing hostile drones. Additionally, environmental adaptability assesses system performance under varying weather and geographical conditions.

The risk analysis for each scenario (as presented in the previous section) is derived from an aggregation of expert evaluations and empirical data. Each scenario is assigned a total risk score based on the weighted sum of likelihood and impact assessments. The highest risk scenario identified was the Prison Scenario, as it concerns a scenario in an urban environment, where it is impossible to exert a continuous total control. The lowest risk scenario was the International Summit, as for this type of scenario, multiple counter-drone tools can be employed and a strict control can be enforced.

The project also introduces Key Risk Indicators (KRIs), which serve as benchmark values for assessing UAS threats. These indicators include (among others):

Environmental complexity and operational constraints.

The technical capabilities of adversarial UAS.

The effectiveness of existing counter-drone measures against varying drone technologies.

The frequency of drone incursions in specific operational environments.

The ability of counter-drone operators to respond effectively to identified threats.

The results of this analysis directly contribute to refining counter-drone strategies. By mapping risk scores to operational response levels, security agencies can optimize resource allocation and response protocols. Furthermore, the adoption of standardized risk assessment methodologies fosters interoperability among European security forces, enhancing cross-border collaboration in drone threat mitigation.

7. Performance Requirements

7.1. Operational Needs and Performance Requirements

Operational needs define the essential capabilities required to counter unauthorized UAS incursions. These requirements are derived from standardized scenarios encompassing diverse operational environments, such as urban centers, airports, prisons, and maritime borders. Key operational parameters include early warning systems, real-time tracking, multi-sensor integration, and seamless interoperability with existing security infrastructure.

A critical challenge in defining operational needs is the lack of universally accepted technical standards. Consequently, operational requirements must be structured to ensure compatibility with evolving threats and emerging technologies. The ability to accurately detect, track, and identify threats remains the cornerstone of an effective counter-drone strategy, with priority considerations given to minimizing false alarms and maximizing detection range.

7.2. Methodology for Operational Needs Definition

To systematically capture the operational needs for counter-drone operations, an approach with structured requirements definitions was employed. The methodology adheres to best practices in system engineering and requirements management, ensuring consistency, traceability, and usability for subsequent system development phases. The approach follows a sequential process:

Operational Needs Definition: Identification of core operational requirements based on stakeholder input and analysis of real-world threat scenarios.

System Procurement Considerations: Translation of operational needs into system-level requirements to guide the selection and development of counter-drone technologies.

Validation and Iteration: Continuous refinement of requirements based on testing, feedback, and evolving operational challenges.

This iterative framework ensures that the operational needs remain aligned with real-world constraints while accommodating technological advancements and policy developments.

To standardize the requirements formulation, the IEEE 29148 process terminology was adopted. This standard is widely recognized across European partners and has demonstrated effectiveness in prior security and defense-related projects. The prioritization of requirements follows a structured classification to facilitate clear decision-making:

Shall: High-priority requirements that must be met for the project to achieve its core objectives.

Should: Medium-priority requirements that are desirable but not essential, allowing for trade-offs if necessary.

May: Low-priority requirements that provide additional value but do not impact the fundamental operational capability of the system.

This classification enables end users to systematically articulate their needs while ensuring technical partners can effectively translate these requirements into implementable system specifications.

To facilitate clarity and traceability, operational needs were documented using a standardized template. Each requirement is uniquely identified and categorized based on its significance and intended application. The structured format includes

Requirement Number: A unique identifier for tracking and reference.

Requirement Name: A concise descriptor summarizing the need.

Description: A detailed explanation, including the rationale and operational context.

Importance: The assigned prioritization level (Shall, Should, May).

This approach ensures that all stakeholders—ranging from system operators to technology developers—have a common understanding of the operational expectations and constraints.

The structured requirements framework encompasses a comprehensive range of operational needs, particularly focusing on detection, tracking, and identification (DTI) capabilities. Given the diversity of deployment environments, requirements are tailored to address specific scenarios, such as:

General Operational Needs: Baseline capabilities applicable across multiple deployment contexts.

Scenario-Specific Needs: Custom requirements adapted to distinct security environments (e.g., airports, government buildings, public events).

Integration Needs: Interoperability with existing security infrastructure and command-and-control systems.

By linking operational needs with specific deployment contexts, the approach ensures that the defined requirements remain practical and actionable.

7.3. Performance Requirements and Metrics

Performance requirements translate operational needs into quantifiable metrics. They are influenced by multiple operational factors, including the size and speed of target drones, the complexity of the operational environment, and the response time needed to neutralize threats. Detection capabilities must be comprehensive, covering a wide range of altitudes and distances while maintaining high accuracy rates. The system should effectively discriminate between drones and other airborne objects to minimize false alarms. Tracking performance must ensure the continuous and stable monitoring of drone movements, even in scenarios where multiple UAS are operating simultaneously.

Identification accuracy is another critical requirement, as distinguishing between different drone models and payload types is essential for assessing threat levels. This involves integrating multispectral imaging, radio frequency (RF) analysis, and machine-learning-based classification. Furthermore, mitigation effectiveness is a key factor in assessing counter-drone performance. Whether employing electronic countermeasures, physical interception, or other mitigation strategies, the system must neutralize threats reliably while minimizing collateral risks.

The COURAGEOUS framework focuses on the DTI aspect and categorizes all DTI requirements into the following.

Detection Performance: The system must identify drones across various size classifications and operational altitudes.

Tracking and Identification: The continuous tracking of detected UAS with high positional accuracy, distinguishing between cooperative and non-cooperative drones.

System Interoperability: Compatibility with forensic analysis tools and integration with existing security networks, including air traffic management (ATM) and U-space services.

Robustness: Resistance to environmental factors such as adverse weather conditions and electromagnetic interference.

Performance evaluation necessitates the development of objective metrics to assess system effectiveness across different operational contexts. Typical metrics to assess performance requirements include detection range, tracking accuracy, response time, and system resilience against environmental perturbations. Detection probability measures the likelihood of successfully identifying a drone within a given range, while the false alarm rate assesses the frequency of incorrect detections. The system’s response time is a crucial metric, as rapid threat engagement is necessary to prevent unauthorized drone activity. Tracking accuracy is evaluated based on the system’s ability to maintain a drone’s position data over time, ensuring continuous monitoring and predictive analysis of its trajectory. Additionally, environmental adaptability examines how well a system performs under varying weather conditions, electromagnetic interference, and urban congestion. System resilience against countermeasures, such as drone evasive maneuvers and signal-jamming resistance, is also considered a vital performance parameter. The integration of artificial intelligence (AI) and advanced data fusion techniques further enhances counter-drone performance, allowing for improved decision-making and automation in real-time threat scenarios.

7.4. Counter-Drone Evaluation Framework

The evaluation of counter-drone solutions requires a structured framework to assess their effectiveness, reliability, and operational suitability. This framework provides a standardized approach for documenting, reviewing, and comparing test results across various counter-drone solutions, facilitating informed decision-making for the development, procurement, and deployment of such technologies. The COURAGEOUS project proposes a structured evaluation methodology for assessing counter-drone effectiveness.

An effective evaluation framework must ensure objectivity through quantitative and qualitative metrics, maintain repeatability by establishing test conditions that allow consistent assessments, and be adaptable to different operational environments and evolving threats. Additionally, transparency in documentation is crucial for stakeholders and regulatory bodies to ensure compliance and trust in the evaluation process.

The proposed framework comprises a standardized testbed for performance validation, incorporating real-world scenarios to benchmark system capabilities. Evaluation methodologies include

Scenario-based testing: Simulating realistic threats in controlled environments to analyze system responses.

Quantitative metrics assessment: Measuring system performance against predefined benchmarks, such as detection accuracy and latency.

Comparative analysis: Establishing a common baseline for evaluating different counter-drone solutions under identical testing conditions.

Continuous improvement cycle: The iterative refinement of requirements and metrics based on empirical test results.

The methodology for evaluating counter-drone performance consists of multiple stages. Initially, clear evaluation goals must be defined, focusing on aspects such as detection accuracy, response time, and mitigation effectiveness. Following this, test scenarios should be carefully selected to represent a range of operational conditions, including urban, rural, and maritime environments, ensuring that the system’s adaptability is thoroughly tested.

Once scenarios are established, performance metrics are employed to measure key capabilities, such as detection probability, tracking accuracy, and neutralization success rate. Data collection and analysis play a crucial role in this process, as information is gathered from sensor logs, operator feedback, and forensic assessments to ensure a comprehensive evaluation. Comparative benchmarking is then used to establish performance baselines, enabling an objective comparison between different counter-drone systems under identical conditions.

This evaluation framework provides a transparent mechanism for stakeholders to evaluate and compare counter-drone technologies and make informed procurement decisions. The systematic approach outlined in this paper underscores the necessity of a structured framework for counter-drone development and assessment. By aligning operational needs with performance requirements and employing rigorous evaluation methodologies, the proposed methodology aims to enhance the efficacy and reliability of counter-drone benchmarking technologies.

8. Performance Evaluation and Validation

8.1. Evaluation Metrics

The proposed evaluation methodology is designed to be objective-driven, meaning that it focuses on predefined operational goals and performance criteria. It is structured around scenario-based testing, in which DTI systems are assessed under conditions that closely resemble real-world environments. These scenarios include sensitive sites and critical infrastructure such as airports, power plants, and military installations; public spaces such as stadiums and mass gatherings; and border security environments where drones may be used for illicit activities. Each scenario incorporates environmental variables such as weather conditions, signal interference, and varying drone behaviors, ensuring a comprehensive assessment of system performance.

The evaluation framework employs clearly defined performance metrics, as defined in

Table 1, to enable standardized comparisons between different DTI solutions. In this evaluation of detection, classification, and tracking algorithms, the performance is commonly assessed through four fundamental outcomes: True Positives (TPs), False Positives (FPs), False Negatives (FNs), and True Negatives (TNs).

True Positives (TPs) refer to the number of instances in which the system correctly identifies a target or event of interest as present. In the context of drone detection, a true positive occurs when a drone is correctly detected and classified as such. The TPs are calculated as the duration of the ground truth trajectories in which there was at least a single identified track associated with the truth.

False Positives (FPs) denote instances where the system incorrectly identifies a non-target as a target. In detection systems, a false positive corresponds to a false alarm, where an object (e.g., a bird, vehicle, or atmospheric phenomenon) is mistakenly classified as a drone. The value for the FP corresponds to the duration of the tracked trajectories in which there was no associated truth, but the track was identified as positive.

False Negatives (FNs) represent the number of cases where the system fails to detect or classify a true target. A false negative occurs when an actual drone is present but the system does not detect or recognize it. The value for the FN corresponds to the duration of the truth trajectories in which there was not an identified track associated with the truth.

True Negatives (TNs) are the instances where the system correctly identifies the absence of a target. In drone detection, a true negative occurs when no drone is present, and the system appropriately reports the absence of a threat.

These four outcomes form the basis for calculating key performance metrics such as the Precision and the F1 Score, which are critical for a comprehensive assessment of system effectiveness under operational conditions.

8.2. Evaluation Methodology

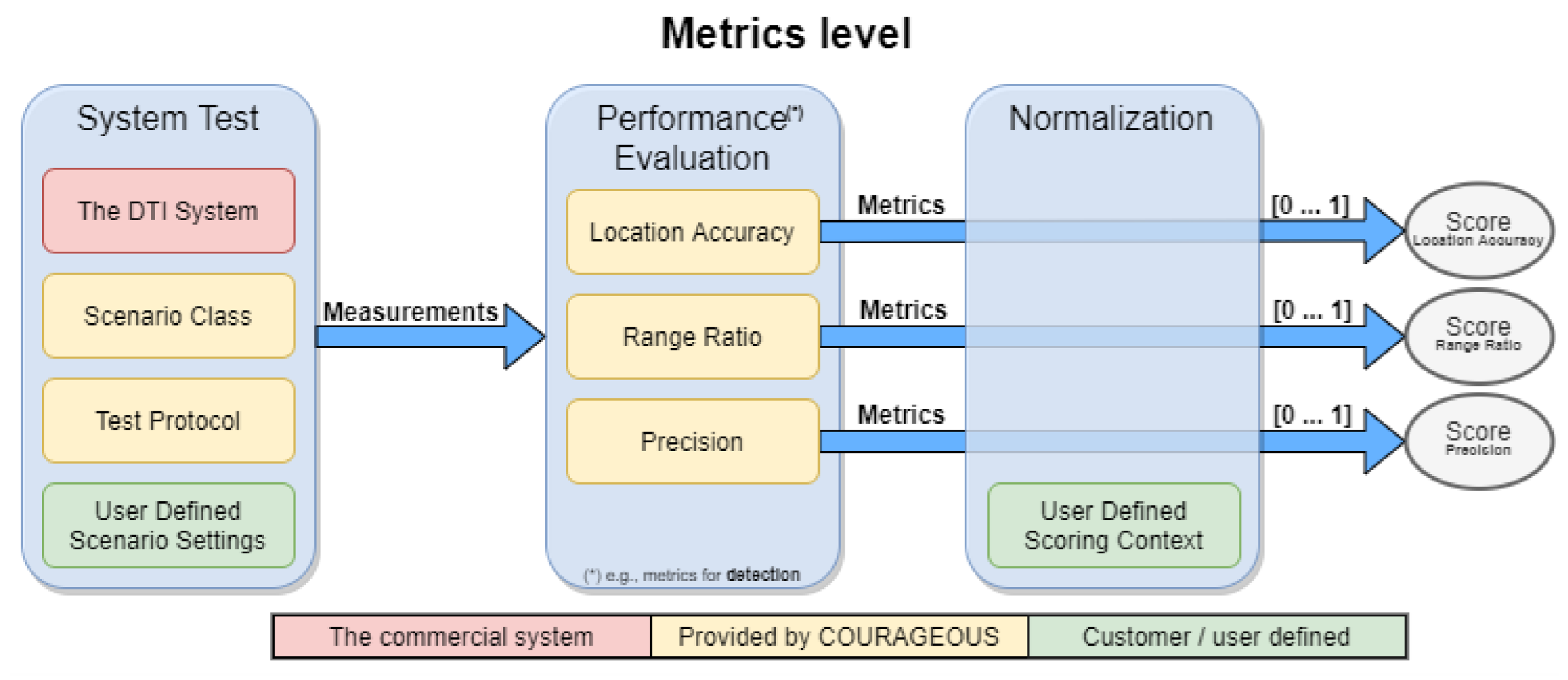

To maintain fairness and objectivity, the methodology follows a comparative performance evaluation approach. All tested systems undergo identical scenarios under controlled conditions, eliminating external biases that could influence the results. Therefore, the outputs from system tests are normalized into a score ranging from 0 (worst) to 1 (best), facilitating consistent comparisons across different DTI systems. The metric evaluation process is illustrated in

Figure 6.

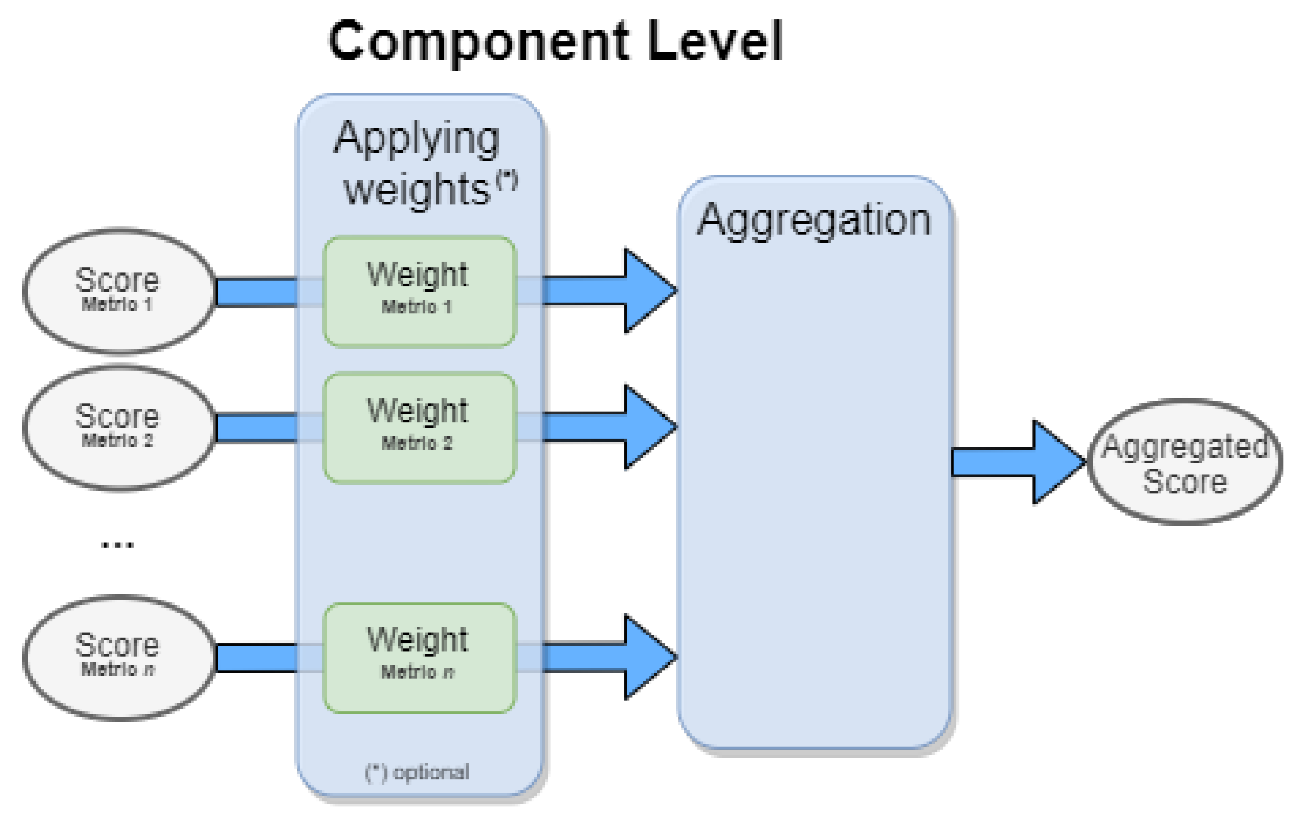

The component level integrates normalized metric scores into functional categories such as detection, tracking, and identification. Each component aggregates the relevant metric scores and applies user-defined weights based on operational priorities. This step provides an overall evaluation score for each functional component, forming the basis for system-level assessment. The process is visualized in

Figure 7.

The combination of metric and component evaluations ensures that system performance is assessed holistically, accounting for individual function effectiveness and overall operational utility. This structured methodology supports data-driven decision-making in selecting optimal DTI solutions for specific use cases. The methodology ensures that results are not only accurate and repeatable but also actionable, enabling decision-makers to make informed choices when selecting DTI solutions. For a more detailed description of the test methodology, including the experimental setup and specific evaluation procedures, refer to [

37].

9. Validation

Ensuring the reliability and applicability of the test methodology requires a structured validation process. Validation is conducted in two key stages: simulation-based verification and operational field trials. Simulation-based verification serves as the initial step, allowing for controlled testing of different scenarios in a virtual environment. This stage is crucial for refining performance metrics, optimizing detection algorithms, and identifying potential system limitations before real-world deployment. By simulating a wide range of conditions, including adverse weather, electronic interference, and varying drone speeds, this approach provides a preliminary assessment of system robustness and adaptability.

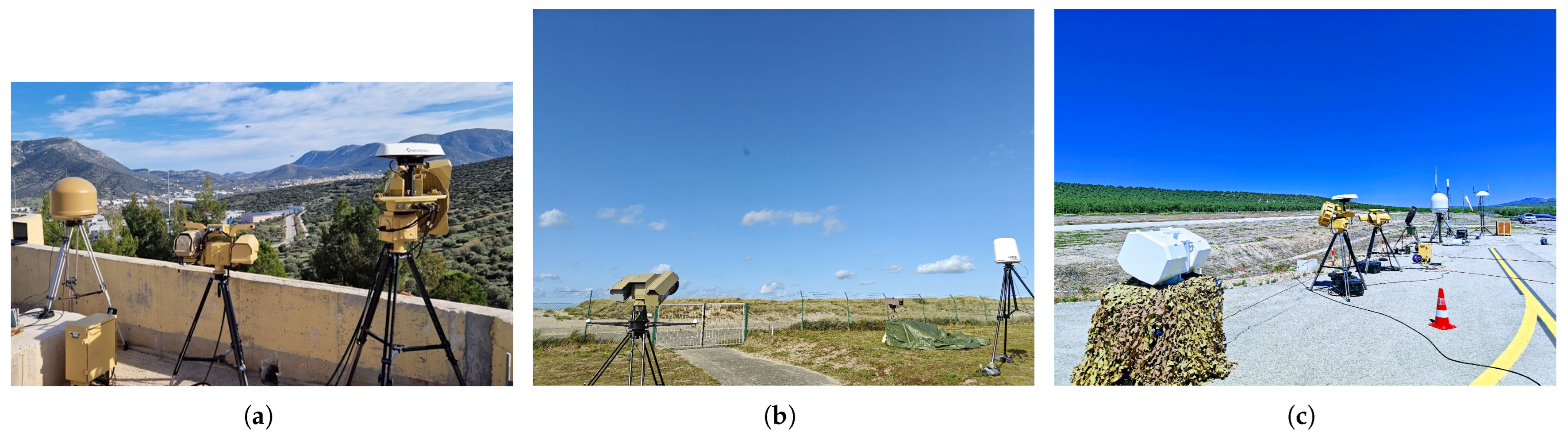

Following successful simulation-based verification, the methodology underwent validation through real-world operational field trials. These trials are conducted in diverse environments to evaluate system adaptability under actual deployment conditions and in different geographical contexts. Therefore, field trials were organized in Greece, Belgium, and Spain, as depicted in

Figure 8.

During the trials, different types of drones were used to test the performance of the DTI systems. The ground truth used to measure this performance was the drone trajectory recorded by GPS logger devices installed on board the drones. In addition, the telemetry data registered by the drone autopilot was also logged to have an additional source of information in case any data inconsistencies were detected after processing the logs.

The counter-drone companies provided their data in a standard data format that was designed specifically to log the information from the DTI systems during their operation. For this data format, a structured format has been chosen as opposed to, e.g., a table, as data scopes can vary. For instance, the version of the format is global to the document, whereas the elevation of a point is specific to a single data point. Using a structured format also allows easily extending it without breaking backwards compatibility. JSON has been chosen due to its simplicity and the number of libraries available for writing and parsing data. This data format is open source and can be found at

https://grvc.us.es/courageous/ (accessed on 22 April 2025).

A range of Custom Off-The-Shelf and homebuilt drones were used during the trials. All the drones were equipped with GPS loggers and were able to fly pre-programmed flight paths. A range of flight operations were planned based upon the scenarios described above, cycling through the available drones and combining several changing parameters such as altitude and speed. In addition, there were also blind free-flight missions carried out by police units in order to simulate more erratic flight movements.

Given the output of the tested DTI systems as well as the corresponding ground truth, a comparative analysis of the collected data and ground truth was performed. In a first step, data pre-processing was performed through time window filtering, transformation of coordinate systems and selection of areas of interest. In a second step, the detection tracks are associated with the ground truth, and finally, the resulting performance metrics are calculated and normalized. These metrics are used to generate an aggregated score separately per tested DTI system. These data were then compared to the data provided by the counter-drone companies participating in the trials.

These field trials allowed for the assessment of DTI performance in uncontrolled settings, where factors such as urban density, environmental noise, and unpredictable drone behavior influence results. Additionally, operational trials provide empirical performance benchmarks, ensuring that the test methodology remains relevant and practical for real-world applications. The iterative nature of this validation process allows for continuous improvements, enabling refinements based on field observations and feedback from end-users.

By combining simulation-based verification with live operational trials, the validation methodology ensures that the testing framework is both scientifically rigorous and operationally relevant. The integration of these two stages provides a holistic evaluation approach, mitigating risks associated with system deployment and ensuring that performance assessments remain accurate and actionable. For a detailed discussion of the validation process, including specific trial designs and performance analysis methods, refer to [

38].

10. Conclusions

This paper presented a standardized evaluation methodology for counter-drone solutions, developed within the framework of the COURAGEOUS project. The increasing threat posed by unauthorized drone activity, coupled with the lack of widely accepted performance assessment methods, necessitates a rigorous and repeatable testing framework for counter-drone technologies.

The proposed methodology defines a set of standardized test scenarios designed to reflect real-world operational challenges across diverse environments, including critical infrastructure protection, public event security, and border surveillance. A comprehensive evaluation framework was developed, integrating both qualitative and quantitative performance metrics. These metrics, including detection accuracy, tracking stability, response time, and false alarm rates, ensure a structured and objective assessment of counter-drone capabilities.

To validate the methodology, extensive field trials were conducted in multiple locations, including Greece, Belgium, and Spain. These trials demonstrated the applicability and robustness of the evaluation framework, confirming its ability to differentiate system performance across various operational conditions. The integration of structured data logging and standardized reporting formats further enhances the transparency and repeatability of the assessment process.

The presented result is a pre-standard, which is open and free for everyone to consult. However, more work is required to transform this pre-standard into a full standard, which will be the subject of future research. More research is indeed required for refining standardized testing protocols, particularly in addressing evolving threats posed by increasingly autonomous and stealthy drones. Moreover, the limitation adopted in the presented work of only considering the DTI aspect of the counter-drone kill chain is highly artificial and cannot be maintained in the future. Indeed, from a client’s perspective, counter-drone tools need to provide holistic solutions to the UAS incursion problem, and this does mean incorporating a clear link between detection, tracking, identification and neutralization/interception and—not to be forgotten—forensics and training. Future research should thus focus on linking DTI and neutralization aspects, expanding test scenarios, and enhancing interoperability with existing security infrastructures. By aligning with ongoing international standardization initiatives, the COURAGEOUS framework provides a critical step toward the objective benchmarking and certification of counter-drone technologies, ultimately supporting more informed decision-making for security and defense stakeholders.

Author Contributions

Conceptualization, methodology, writing—review and editing, validation, formal analysis, D.D., P.P., A.K., K.B., M.Ż., R.R., S.S., A.M., J.v.d.P., I.M., A.O., C.C. and C.P.; writing—original draft preparation, project administration, funding acquisition, G.D.C. All authors have read and agreed to the published version of the manuscript.

Funding

This project has received funding from the European Union’s Internal Security Fund Police under Grant Agreement 101034655 (COURAGEOUS).

Data Availability Statement

The datasets presented in this article are not readily available because the performance data of counter-drone tools that protect critical infrastructure aer security-sensitive data and cannot be shared. However, the CEN/CENELEC Workshop agreement, which is a pre-standard document that extensively details the presented work and includes a detailed methodology and implementation guidelines for assessing the performance of drone-drone solutions, can be accessed freely at

https://www.cencenelec.eu/media/CEN-CENELEC/CWAs/ICT/cwa-18150.pdf (accessed on 22 April 2025).

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| C-UAS | COunter Unmanned Aerial Systems |

| CWA | CENELEC Workshop Agreement |

| DIN | Deutsches Institut für Normung |

| DTI | Detection, Tracking, Identification |

| EUROCAE | European Organisation for Civil Aviation Equipment |

| FAA | Federal Aviation Administration |

| KPI | Key Performance Indicator |

| KRI | Key Risk Indicators |

| LEA | Law enforcement agency |

| NATO | North Atlantic Treaty Organization |

| ISO | International Standardization Organisation |

| NIST | National Institute of Standards and Technology |

| NPSA | National Protective Security Authority |

| OSED | Operational Services and Environment Definition |

| RF | Radio Frequency |

| UAS | Unmanned Aerial Systems |

References

- Ashok, V.; Kumar, N.; Sarkar, S.; Degadwala, S. Security Threats of Unmanned Aerial Vehicles; Springer International Publishing: Cham, Switzerland, 2023; pp. 133–164. [Google Scholar] [CrossRef]

- Buric, M.; De Cubber, G. Counter Remotely Piloted Aircraft Systems. MTA Rev. 2017, 27, 9–18. [Google Scholar] [CrossRef]

- Austria, V.B.B.; Francisco, R.; Hassan, R.A. Assessing the Threat: Autonomous Commercial Drones and Its Potential for Mass Civilian Casualty Attacks. In Proceedings of the International Research and Innovation Summit (IRIS) 2024, San Jose del Monte, Philippines, 16–19 April 2024; Volume 2. [Google Scholar] [CrossRef]

- Kang, H.; Joung, J.; Kim, J.Y.; Kang, J.; Cho, Y.S. Protect Your Sky: A Survey of Counter Unmanned Aerial Vehicle Systems. IEEE Access 2020, 8, 168671–168710. [Google Scholar] [CrossRef]

- González-Jorge, H.; Aldao, E.; Fontenla-Carrera, G.; Veiga-López, F.; Balvís, E.; Ríos-Otero, E. Counter Drone Technology: A Review. Preprints 2024. [Google Scholar] [CrossRef]

- Sirohi, H.S.; Khairnar, C.N.; Kumar, P.; Kumar, A. A Comprehensive Review of Modern Counter-Drone Technologies: Trends, Challenges, and Future Directions. Int. J. Sci. Technol. Eng. 2024, 12, 4405–4418. [Google Scholar] [CrossRef]

- Wang, J.; Liu, Y.; Song, H. Counter-Unmanned Aircraft System(s) (C-UAS): State of the Art, Challenges, and Future Trends. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 4–29. [Google Scholar] [CrossRef]

- De Cubber, G.; Petsioti, P.; Roman, R.; Mohamoud, A.; Maza, I.; Church, C. The COURAGEOUS Project Efforts Towards Standardized Test Methods for Assessing the Performance of Counter-Drone Solutions. In Proceedings of the 11th Biennial Symposium on Non-Lethal Weapons, Brussels, Belgium, 22–25 May 2023; p. 44. [Google Scholar]

- CEN Workshop Agreement—CWA 18150—Unmanned Aircraft Systems—Counter UAS—Testing Methodology. European Standardization Committee. 2024. Available online: https://www.cencenelec.eu/media/CEN-CENELEC/CWAs/ICT/cwa-18150.pdf (accessed on 22 April 2025).

- Van den Broek, B.; Van der Velde, J.; Van den Baar, M.; Nijsten, L.; Van Heijster, R. Automatic Threat Evaluation for Border Security and Surveillance. In Counterterrorism, Crime Fighting, Forensics, and Surveillance Technologies III; SPIE: Bellingham, WA, USA, 2019; Volume 11166, pp. 113–122. [Google Scholar] [CrossRef]

- Baptista, M.; Fernandes, L.; Chaves, P. Tracking and Classification of Aerial Objects. In Intelligent Transport Systems. From Research and Development to the Market Uptake; Martins, A., Ferreira, J., Kocian, A., Eds.; INTSYS Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer: Cham, Switzerland, 2020; Volume 310. [Google Scholar] [CrossRef]

- De Cubber, G.; Shalom, R.; Coluccia, A.; Borcan, O.; Chamrád, R.; Radulescu, T.; Izquierdo, E.; Gagov, Z. The SafeShore System for the Detection of Threat Agents in a Maritime Border Environment. In Proceedings of the IARP Workshop on Risky Interventions and Environmental Surveillance, Les Bon Villers, Belgium, 18–19 May 2017. [Google Scholar]

- Doroftei, D.; De Cubber, G. Qualitative and Quantitative Validation of Drone Detection Systems. In Proceedings of the International Symposium on Measurement and Control in Robotics (ISMCR2018), Mons, Belgium, 26–28 September 2018. [Google Scholar]

- Coluccia, A.; Fascista, A.; Schumann, A.; Sommer, L.; Ghenescu, M.; Piatrik, T.; De Cubber, G.; Nalamati, M.; Kapoor, A.; Saqib, M.; et al. Drone-vs-Bird Detection Challenge at IEEE AVSS2019. In Proceedings of the 2019 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Taipei, Taiwan, 18–21 September 2019; pp. 1–7. [Google Scholar]

- Li, C.J.; Ling, H. An Investigation on the Radar Signatures of Small Consumer Drones. IEEE Antennas Wirel. Propag. Lett. 2017, 16, 649–652. [Google Scholar] [CrossRef]

- Mezei, J.; Molnar, A. Drone Sound Detection by Correlation. In Proceedings of the 2016 IEEE 11th International Symposium on Applied Computational Intelligence and Informatics, Timisoara, Romania, 12–14 May 2016; pp. 509–518. [Google Scholar] [CrossRef]

- Rozantsev, A. Vision-Based Detection of Aircrafts and UAVs. Master’s Thesis, EPFL, Lausanne, Switzerland, 2017. [Google Scholar] [CrossRef]

- Andrai, P.; Radii, T.; Mutra, M.; Ivoevi, J. Nighttime Detection of UAVs Using Thermal Infrared Camera. Transp. Res. Procedia (INAIR) 2017, 28, 183–190. [Google Scholar] [CrossRef]

- Sit, Y.L.; Nuss, B.; Basak, S.; Orzol, M.; Wiesbeck, W.; Zwick, T. Real-Time 2D+Velocity Localization Measurement of a Simultaneous-Transmit OFDM MIMO Radar Using Software Defined Radios. In Proceedings of the European Radar Conference (EuRAD), London, UK, 5–7 October 2016; pp. 21–24. [Google Scholar]

- De Haag, M.U.; Bartone, C.G.; Braasch, M.S. Flight-Test Evaluation of Small Form-Factor Lidar and Radar Sensors for SUAS Detect-and-Avoid Applications. In Proceedings of the 2016 IEEE/AIAA 35th Digital Avionics Systems Conference (DASC), Sacramento, CA, USA, 25–29 September 2016; pp. 1–11. [Google Scholar] [CrossRef]

- Enescu, V.; De Cubber, G.; Cauwerts, K.; Sahli, H.; Demeester, E.; Vanhooydonck, D.; Nuttin, M. Active Stereo Vision-Based Mobile Robot Navigation for Person Tracking. Integr. Comput.-Aided Eng. 2006, 13, 203–222. [Google Scholar] [CrossRef]

- Unmanned Airspace. EU Framework Agreement for Counter Drone Devices Features Skywall Net Capture Solution. 2021. Available online: https://www.unmannedairspace.info/counter-uas-systems-and-policies/eu-framework-agreement-for-counter-drone-devices-features-skywall-net-capture-solution/ (accessed on 22 April 2025).

- DroneWise Consortium. DroneWISE Project. Available online: https://dronewise-project.eu/ (accessed on 28 March 2023).

- Jacoff, A. Guide for Evaluating, Purchasing, and Training with Response Robots Using DHS-NIST-ASTM International Standard Test Methods. In Standard Test Methods for Response Robots; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2014. [Google Scholar]

- Doroftei, D.; Matos, A.; Silva, E.; Lobo, V.; Wagemans, R.; De Cubber, G. Operational Validation of Robots for Risky Environments. In Proceedings of the 8th IARP Workshop on Robotics for Risky Environments, Lisbon, Portugal, 28–29 January 2015. [Google Scholar]

- De Cubber, G.; Doroftei, D.; Balta, H.; Matos, A.; Silva, E.; Serrano, D.; Govindaraj, S.; Roda, R.; Lobo, V.; Marques, M. Operational Validation of Search and Rescue Robots. In Search and Rescue Robotics—From Theory to Practice; InTech: London, UK, 2017. [Google Scholar] [CrossRef]

- Doroftei, D.; De Cubber, G. Using a Qualitative and Quantitative Validation Methodology to Evaluate a Drone Detection System. Acta IMEKO 2019, 8, 20–27. [Google Scholar] [CrossRef]

- Kouhestani, C.; Woo, B.; Birch, G. Counter Unmanned Aerial System Testing and Evaluation Methodology. In Proceedings of the SPIE 10184, Sensors, and Command, Control, Communications, and Intelligence (C3I) Technologies for Homeland Security, Defense, and Law Enforcement Applications XVI, Anaheim, CA, USA, 10–11 April 2017; Volume 1018408. [Google Scholar] [CrossRef]

- Church, C. Interpol Drone Countermeasure Exercise Report; Interpol: Lyon, France, 2022. [Google Scholar]

- EUROCAE. New Working Group: WG-115/Counter UAS (C-UAS). 2019. Available online: https://www.eurocae.net/new-working-group-wg-115-counter-uas-c-uas/ (accessed on 22 April 2025).

- NATO. NCI Agency Holds NATO’s Live-Testing Counter-Drone Exercise. 2022. Available online: https://www.ncia.nato.int/about-us/newsroom/nci-agency-holds-natos-livetesting-counterdrone-exercise.html (accessed on 22 April 2025).

- Kretschmann, T. A Standard for C-UAS—DIN 5452-9: Improving the quality of systems and creating a level playing field. In Security 2024; DIN: Berlin, Germany, 2024. [Google Scholar]

- ISO/CD 16746; Unmanned Aircraft Systems—Counter UAS — Quality and Safety for Users. International Standardisation Organisation: Geneva, Switzerland, 2025. Available online: https://www.iso.org/standard/84801.html (accessed on 22 April 2025).

- ISO/CD 16747; Unmanned Aircraft Systems— Counter UAS—Quality and Safety for Manufacturers. International Standardisation Organisation: Geneva, Switzerland, 2025. Available online: https://www.iso.org/standard/84802.html (accessed on 22 April 2025).

- Brewczyński, K.D.; Życzkowski, M.; Cichulski, K.; Kamiński, K.A.; Petsioti, P.; De Cubber, G. Methods for Assessing the Effectiveness of Modern Counter Unmanned Aircraft Systems. Remote Sens. 2024, 16, 3714. [Google Scholar] [CrossRef]

- Petsioti, P.; Życzkowski, M.; Brewczyński, K.; Cichulski, K.; Kamiński, K.; Razvan, R.; Mohamoud, A.; Church, C.; Koniaris, A.; De Cubber, G.; et al. Methodological Approach for the Development of Standard C-UAS Scenarios. Open Res. Eur. 2024, 4, 240. [Google Scholar] [CrossRef]