Abstract

Unmanned Aircraft Systems (UASs) have undergone rapid development over recent years, but have also become vulnerable to security attacks and the volatile external environment. Ensuring that UASs perform safely and securely no matter how the environment changes is challenging. Runtime Verification (RV) is a lightweight formal verification technique that can be used to monitor UAS performance to guarantee safety and security, while reactive synthesis is a methodology for automatically synthesizing a correct-by-construction controller. This paper addresses the problem of generating and designing a secure UAS controller by introducing a unified monitor and controller synthesis method based on RV and reactive synthesis. First, we introduce a novel methodological framework, in which RV monitors are applied to guarantee various UAS properties, with the reactive controller mainly focusing on the handling of tasks. Then, we propose a specification pattern to represent different UAS properties and generate RV monitors. In addition, a detailed priority-based scheduling method to schedule monitor and controller events is proposed. Furthermore, we design two methods based on specification generation and re-synthesis to solve the problem of task generation using metrics for reactive synthesis. To help users design secure UAS controllers more efficiently with our method, we develop a domain-specific language (UAS-DL) for modeling UASs. Finally, we use F Prime to implement our method and conduct experiments on a joint simulation platform. The experimental results show that our method can generate secure UAS controllers, guarantee greater UAS safety and security, and require less synthesis time.

1. Introduction

Unmanned Aerial Vehicles (UAVs), commonly referred to as drones, serve as the operational core of Unmanned Aircraft Systems (UASs). These autonomous or remotely piloted platforms integrate advanced sensors, Global Positioning Systems (GPSs), and lightweight composite materials to enable cross-domain applications in agriculture, cinematography, infrastructure diagnostics, environmental surveillance, and military operations. Their functional superiority stems from critical attributes, including real-time data transmission capabilities, cost-efficiency compared to manned alternatives, operational autonomy, and exceptional adaptability to complex environments.

With the rapid development of UAS technology, safety and security risks have also escalated. Despite integrating advanced technologies, UASs remain vulnerable to faults caused by unpredictable state transitions and external interference. Attackers exploit system hardware, software, and networks to compromise confidentiality, integrity, and availability through techniques including malicious code injection, authentication bypass, GPS spoofing, and DDoS attacks [1]. For instance, Iranian forces successfully captured a U.S. UAV in 2011 by executing GPS spoofing on the RQ-170 [2]. He et al. [3] describe a physical attack on the Inertial Measurement Unit (IMU) of a UAS by ultrasonic waves, which is a typical side-channel attack. Michael Hooper et al. [4] implemented a buffer overflow attack on the Parrot Bebop 2. Moreover, complex network architectures, flexible physical environments, and excessive access interfaces may lead to more vulnerabilities and attack vectors when manufacturers attempt to improve the quality of drones. Attackers can exploit these vulnerabilities to cause sensitive data leakage, system hijacking, and even instant drone crashes [5].

Runtime Verification (RV) [6] is a lightweight formal verification technique that attempts to determine whether a target system’s runtime behavior satisfies or violates specified properties based on the current execution path. Unlike model checking, RV technology relies solely on the observed execution path, thereby avoiding the state space explosion inherent in verifying complex systems through model checking. These characteristics make RV particularly suitable for security protection in resource-constrained UASs [7]. Runtime Enforcement (RE) is a technique to ensure that a program will respect a given set of properties by adopting enforcement operations. When abnormal behavior occurs, RE dynamically intervenes to maintain critical security properties [8].

Reactive synthesis [9] is a formal methodology for automatically generating correct-by-construction reactive systems from temporal logic specifications. The synthesized system is typically formalized as an automaton that strictly satisfies the given specifications, where the input of the automaton represents the environmental variables acquired through UAV sensors (e.g., position, obstacle detection) and the output of the automaton dictates actuator-driven actions (e.g., trajectory adjustments, taking a photograph). Our synthesis algorithm builds upon the Generalized Reactivity (1) (GR(1)) game [10,11], which can be used in multi-robot systems [12,13].

Through reactive synthesis, reactive controllers can be constructed for a UAS. By scheduling these controller events, a UAV can accomplish certain tasks. At the same time, RV monitors can also be deployed on a UAV to detect threats. However, when a monitor detects a threat and takes corresponding countermeasures (i.e., enforcement operations) to defend against it, these countermeasures may affect the scheduling of controller events, and even lead to the failure of UAV controller scheduling. In addition, GR(1) specifications have difficulty describing quantitative tasks, which limits the areas in which GR(1)-based task design can be applied. Furthermore, due to the lack of a domain-specific language for UASs based on RV and reactive synthesis, traditional languages have proved inefficient for standardizing the design of the UAS atomic propositions used in specifications. The designed monitor and controller specifications also lack portability and reusability.

To address the problems above, we expanded the work in [5] and designed the Unified Monitor and Controller Synthesis (UMCS) method for securing complex UASs. The main contributions can be briefly summarized as follows:

- We designed a UMCS framework based on RV and reactive synthesis. Within this framework, monitor and controller events are classified, prioritized, and scheduled based on their priorities to secure the control of the UAV.

- We designed a regular expression to express the task specification with metrics, and proposed methods based on specification generation and re-synthesis to achieve task generation with metrics in controller synthesis.

- Based on our UMCS framework, we designed the Unmanned Aircraft System Description Language (UAS-DL). This language provides a standardized, easy-to-use programming interface to facilitate users in configuring UAS resources and designing UAV tasks and monitors.

2. Related Work

Our work integrates methodologies from UAV control, UAV security, and domain-specific language design. This research builds upon established frameworks in RV and reactive synthesis. In this section, we analyze these foundational influences.

2.1. On UAV Control

Current UASs exhibit limited autonomy, relying heavily on human–machine interaction. Since the middle of 2009, the US Air Force has designated in-service UAVs as “Remotely Piloted Aircraft” to differentiate them from truly autonomous UASs [14]. Researchers consequently pursue technologies that enhance UAV autonomy, aiming to reduce manual control burdens, dependency on robust communication links, and mission decision latency. Pachter et al. [15] conceptualized autonomous control as “highly” automated operation in unstructured environments. Boskovic et al. [16] expanded this to include real-time perception, information processing, and control adaptation. Distinct from traditional automatic control systems with predefined responses, autonomous control emphasizes self-determination [17], representing an evolutionary advancement in control theory.

The multi-UAV collaborative task allocation problem is a complex combinatorial optimization problem within task assignment and resource allocation. This problem focuses on optimal task-to-UAV assignments under resource, platform, and temporal constraints to maximize system-wide efficiency. Vincent et al. analyzed cooperative search strategies for UAVs operating in hazardous environments [18]. Current solutions for the multi-task allocation problem include network flow optimization [19,20] and mixed-integer linear programming [21]. Recent advances in distributed UAV control have spurred interest in decentralized approaches; for example, Akselrod et al. applied hierarchical Markov decision processes for distributed sensor management [22]. Game theory and deep reinforcement learning also emerge as prominent methodologies [12,13,23,24,25,26].

However, existing works prioritize task execution over security considerations like communication jamming or sensor spoofing. Our methodology generates a correct-by-construction controller while maintaining UAV safety/security properties.

2.2. On UAV Security

In recent years, commercial drones and smart cars have suffered from many malicious attacks, resulting in several traffic accidents. The core issue in this paper is therefore the automatic generation of UAS security behaviors, for which studies on UAS vulnerabilities and the corresponding attack chains are essential and fundamental. Most UASs consist of several components: the control unit, the dynamics module, the navigation module, the sensor module, the communication module, etc. The authors of [1,27] introduced confidentiality, integrity, and availability from the perspective of information security. Confidentiality means that the system forbids unauthorized access or interception of data; integrity is the property that protects the system from jamming by malicious data; and availability refers to a timely response to legitimate requests. Vrizlynn [28] and Leela [29] divided attack vectors into physical attacks and remote attacks.

RV is a technology that checks if the system’s execution satisfies the given properties [6]. In [7,30], the authors implemented an RV monitor on a UAS using LTL formulas to describe the security requirements, using Bayesian Networks and the FPGA hardware platform. Schneider et al. proposed a method that combines traditional RV, enforcement, and control prediction to automatically generate security enforcement code, in order to compensate for the lack of protection capabilities of RV theory against dynamic environmental uncertainties [31].

However, using RV to describe and detect safety and security threats to UAVs and to take countermeasures against these threats may lead to task failure. In contrast, our method schedules monitor and controller events based on their priorities and parallelizability, and guarantees more UAV safety and security properties.

2.3. On GR(1)-Based Domain Specific Language

LTLMop [32] is software for specifying and synthesizing robot missions on 2D maps. The tool comes with a graphical map editor and allows specifications to be expressed in the GR(1) subset of LTL or structured English [33]. Spectra is a new specification language for reactive systems, specifically tailored for the context of reactive synthesis. Spectra comes with designated Spectra Tools, a set of analyses, including a synthesizer to obtain correct-by-construction implementations, several means for executing the resulting controller, and additional analyses aimed at helping engineers write higher-quality specifications [34].

The structured English of LTLMop and Spectra can be used to design GR(1) specifications conveniently. However, the GR(1) specification also restricts the expressivity of the language for describing safety and security properties, and these languages cannot support the construction of propositions from low-level resources. In contrast, our UAS-DL supports the input of UAV properties in LTL/MTL and the construction of atomic propositions from the software and hardware resources of UAVs.

3. Preliminaries

3.1. Temporal Logic

Linear Temporal Logic (LTL), proposed by Pnueli [35], can be used to describe the specifications for UAVs. Compared to alternative temporal logics, such as CTL and CTL*, LTL is not only sufficient to express the complex specifications for UAVs, but also possesses an efficient synthesis algorithm [36]. Extensions of LTL, such as LTL3, Signal Temporal Logic (STL) [37], Metric Temporal Logic (MTL) [38], and Discrete-Time MTL (DT-MTL) [39], can also be used for RV [6] of real-time control systems to describe more complex properties. Before introducing RV and reactive synthesis, we first present the syntax and semantics of LTL.

3.1.1. LTL Syntax

Given a countable set of Boolean variables (propositions) , without loss of generality, we assume that all variables are Boolean. The general case in which a variable ranges over arbitrary finite domains can be reduced to the Boolean case. LTL formulas are constructed as follows:

Based on the fundamental syntax, some frequently used Boolean constants and derivative operators can be defined as follows: First, list the constants:

and propositional logic operators:

and temporal operators:

3.1.2. LTL Semantics

Given an infinite sequence composed by subsets of and a position , where denote the i-th element of and . The satisfaction relationship ⊨ between , i and an LTL formula is defined as follows:

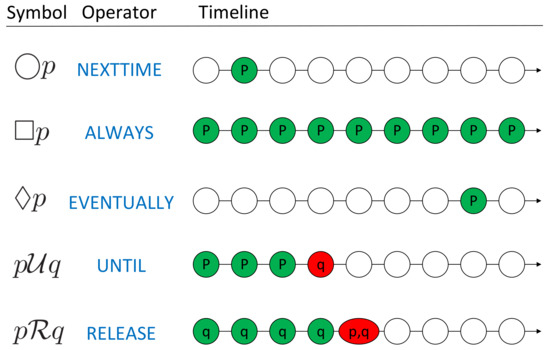

Intuitively, the formula () with the next operator means is True in the next position of the sequence. with the until operator indicate that will be True somewhere in the future, and must be maintained as True until is True. And () with always and () with eventually express the properties that will always hold True in the sequence and will be True at least once in the future, respectively. Another kind of LTL formula in the form of () is also quite common in the specifications for UAVs. It means that will be True infinite times, and is usually used to express the goal of systems which need to be achieved repeatedly. A pictorial representation of commonly used LTL temporal operators is shown in Figure 1, where p and q are atomic propositions and green/red circular nodes represent the occurrence of propositions.

Figure 1. Pictorial representation of LTL temporal operators.

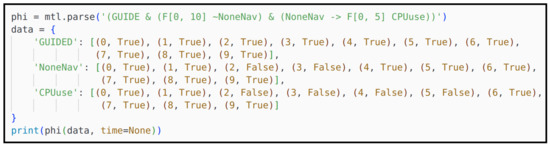
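To make the semantics concrete, the following minimal Python sketch evaluates LTL formulas over an ultimately periodic ("lasso") sequence, i.e., a finite prefix followed by a loop that repeats forever. The tuple-based formula encoding and the names `holds`, `eventually`, and `always` are assumptions made for this illustration and are not part of the tooling described in this paper.

```python
def eventually(f):
    # Derived operator: "eventually f" is "True until f".
    return ("until", ("true",), f)


def always(f):
    # Derived operator: "always f" is "not eventually not f".
    return ("not", eventually(("not", f)))


def holds(formula, prefix, loop):
    """Check whether the word prefix . loop^omega satisfies formula at position 0."""
    if not loop:
        raise ValueError("loop must contain at least one position")
    trace = list(prefix) + list(loop)
    n = len(trace)

    def succ(i):
        # The last position of the loop wraps around to the first loop position.
        return i + 1 if i + 1 < n else len(prefix)

    def sat(f):
        op = f[0]
        if op == "true":
            return [True] * n
        if op == "ap":
            return [f[1] in step for step in trace]
        if op == "not":
            return [not v for v in sat(f[1])]
        if op == "or":
            left, right = sat(f[1]), sat(f[2])
            return [a or b for a, b in zip(left, right)]
        if op == "next":
            inner = sat(f[1])
            return [inner[succ(i)] for i in range(n)]
        if op == "until":
            # Least fixpoint of: U(i) = right(i) or (left(i) and U(succ(i))).
            left, right = sat(f[1]), sat(f[2])
            result = [False] * n
            changed = True
            while changed:
                changed = False
                for i in reversed(range(n)):
                    value = right[i] or (left[i] and result[succ(i)])
                    if value and not result[i]:
                        result[i] = True
                        changed = True
            return result
        raise ValueError(f"unknown operator: {op}")

    return sat(formula)[0]


p, q = ("ap", "p"), ("ap", "q")
prefix, loop = [{"p"}], [{"q"}, set()]      # {p} ({q} {})^omega
print(holds(("until", p, q), prefix, loop))        # True
print(holds(always(eventually(q)), prefix, loop))  # True: q recurs in the loop
print(holds(always(p), prefix, loop))              # False: p fails inside the loop
```

Restricting the input to lasso-shaped sequences keeps the check finite while still covering formulas about infinite behavior, such as goals that must be achieved repeatedly.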

3.1.3. Metric Temporal Logic

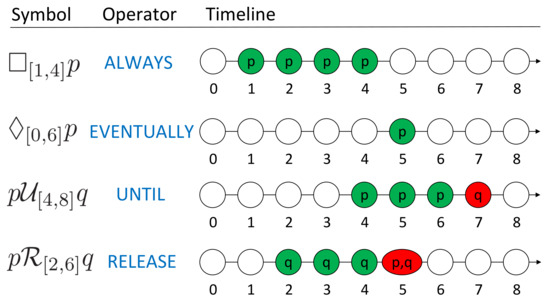

For requirements that express specific time bounds, we use a variant of LTL that adds these time bounds, called MTL [38]. In MTL, each temporal operator is accompanied by lower and upper time bounds that express the time period during which the operator must hold. Specifically, MTL includes the operators $\Box_{[i,j]}$, $\Diamond_{[i,j]}$, and $\mathcal{U}_{[i,j]}$, where the temporal operator applies in the time between time i and time j. For an event that occurs at a discrete time in a UAS, we can use discrete-time MTL [39] to describe its properties. Similarly, a pictorial representation of the MTL temporal operators is shown in Figure 2.

Figure 2. Pictorial representation of MTL temporal operators.
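As a rough illustration of time-bounded operators in discrete time, the sketch below checks "always within [t+lo, t+hi]" and "eventually within [t+lo, t+hi]" on a finite list of Boolean samples. The function names and the three-valued result (`True`, `False`, or `None` when the observed samples do not yet cover the whole window) are assumptions of this example, not the DT-MTL semantics of [39] verbatim.

```python
from typing import Optional, Sequence


def _window(values: Sequence[bool], t: int, lo: int, hi: int) -> Sequence[bool]:
    if t < 0 or lo < 0 or hi < lo:
        raise ValueError("require t >= 0 and 0 <= lo <= hi")
    return values[t + lo : t + hi + 1]


def always_within(values: Sequence[bool], t: int, lo: int, hi: int) -> Optional[bool]:
    """Verdict for 'always in [t+lo, t+hi]' on the samples observed so far."""
    window = _window(values, t, lo, hi)
    if not all(window):
        return False          # a violation has already been observed
    return True if len(window) == hi - lo + 1 else None


def eventually_within(values: Sequence[bool], t: int, lo: int, hi: int) -> Optional[bool]:
    """Verdict for 'eventually in [t+lo, t+hi]' on the samples observed so far."""
    window = _window(values, t, lo, hi)
    if any(window):
        return True           # a witness has already been observed
    return False if len(window) == hi - lo + 1 else None


altitude_ok = [True, True, False, True]      # hypothetical sampled proposition
print(always_within(altitude_ok, 0, 0, 1))   # True
print(always_within(altitude_ok, 0, 0, 2))   # False
print(always_within(altitude_ok, 3, 0, 2))   # None: window not fully observed
```

Returning `None` for an incomplete window reflects the fact that a runtime monitor only sees a finite prefix of the execution.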

3.2. Runtime Verification

Runtime Verification [6] is a lightweight formal verification technique that serves as an effective complement to traditional approaches, such as model checking and testing. The most important feature of RV is that it focuses on the actual runtime operation of the monitored system, enabling timely adjustments when it detects anomalous UAV behaviors that violate the established properties of the UAS. This mechanism helps to avoid UAV mission failures and to prevent behavioral malfunctions.

In this paper, we define a UAV execution fault as a deviation between the observed current behavior and the expected behavior of the UAS. In other words, we monitor the execution violations of the UAV’s established properties, which are typically identified by deviations between the current system state and the expected state. Such execution faults may ultimately lead to mission failures. Martin Leucker and Christian Schallhart have provided a widely accepted definition in the runtime verification domain through their research [6].

In RV for UAVs, we abstract a run of the UAS as an infinite sequence of consecutive system states. We then employ a monitor to check whether the runtime execution satisfies the established UAV properties by generating verdicts (typically Boolean values of True/False). Formally, we denote a UAV property as $\varphi$, where $[\![\varphi]\!]$ represents the set of valid executions given by $\varphi$. Thus, the RV violation/satisfaction problem of the UAV physical system can be abstracted as verifying whether the execution $w$ belongs to $[\![\varphi]\!]$, i.e., mathematically determining whether a given word is included in a formal language. This approach reduces the verification complexity significantly. We follow the definition of an RV monitor by Martin Leucker and Christian Schallhart in their seminal work [6]:

Definition 1 (Monitor). A monitor is a device that reads a finite trace and yields a certain verdict.

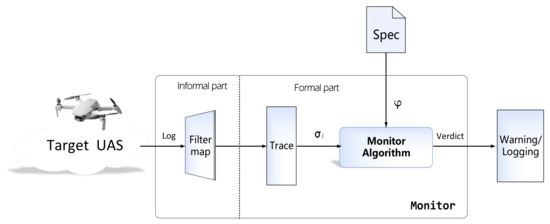

The RV monitor architecture for UAS is illustrated in Figure 3. The monitor is an independent algorithmic component connected to the target system via a broadcast bus. A system execution trace generally comprises a series of formally defined state data, which serve as the monitoring input. These states can be extracted and filtered through a system operation semi-formal mapping of our Monitor-Oriented Programming (MOP) tool. Ultimately, the monitor outputs the verdict, indicating whether the current UAV trace complies with the established specifications.

Figure 3. RV monitor in UAV verification architecture.

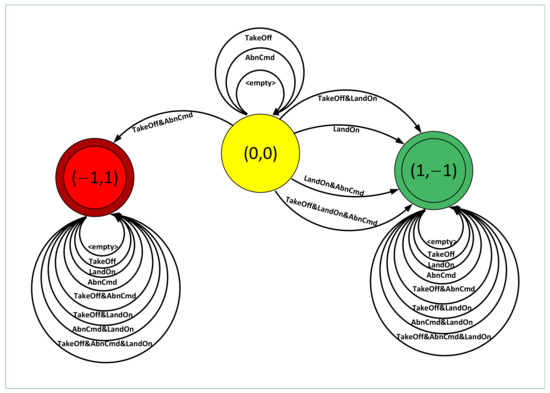
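The monitor of Definition 1 can be pictured as a small state machine that consumes one observed state at a time and reports a verdict. The sketch below is a minimal, assumed example for the property "whenever `low_battery` is observed, the next state must contain `rtl`". The proposition names, the class `NextStepMonitor`, and the verdict strings are hypothetical and are not the output of the MOP tool described above.

```python
class NextStepMonitor:
    """Monitor for: always (low_battery -> next rtl), over a finite trace."""

    def __init__(self):
        self.state = "ok"

    def step(self, event):
        # event is the set of propositions that hold in the observed state.
        if self.state == "violated":
            return "violation"            # a violation is irrevocable
        if self.state == "expect_rtl" and "rtl" not in event:
            self.state = "violated"
            return "violation"
        self.state = "expect_rtl" if "low_battery" in event else "ok"
        return "inconclusive"             # no violation observed so far


def verdict(trace):
    """Feed a finite trace to a fresh monitor and return the last verdict."""
    monitor = NextStepMonitor()
    result = "inconclusive"
    for event in trace:
        result = monitor.step(event)
    return result


print(verdict([{"guided"}, {"low_battery"}, {"rtl"}]))     # inconclusive
print(verdict([{"guided"}, {"low_battery"}, {"guided"}]))  # violation
```

Because a safety property of this kind can only be refuted on a finite trace, the sketch never reports satisfaction. It reports either a violation or that no violation has been seen yet.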

3.3. Reactive Synthesis

Given the system model and specifications in the form of LTL formulas, our goal is to synthesize a controller that generates behavior strategies satisfying the given specifications, if the formulas are realizable [25]. The synthesis algorithm treats the problem as a two-player game between the UAS and its environment, and synthesizes the winning strategies for the UAV in the form of an automaton by solving a $\mu$-calculus formula over the game structure [36].

3.3.1. GR(1) Specification

The standard approach to LTL synthesis involves constructing a Büchi automaton from the LTL formula followed by conversion to an equivalent deterministic Rabin automaton. This two-stage conversion process induces a double-exponential state blow-up. To circumvent this prohibitive complexity, a special restriction of LTL, called GR(1) specification, is taken into consideration [36].

For a UAV in the multi-UAV system, its specification $\varphi$ consists of the following six parts based on the atomic proposition set $AP = X \cup Y$, where $X$ and $Y$ are the sets of environment and UAV propositions, respectively:

- $\varphi_i^e$ and $\varphi_i^s$ are propositional logic formulas without temporal operators which are defined on $X$ and $Y$, respectively. They describe the initial conditions of the environment’s and UAV’s behaviors, respectively.

- $\varphi_t^e$ is a conjunction of several subformulas in the form of $\Box B_i$, where $B_i$ is a Boolean formula defined on $X \cup Y \cup \bigcirc X$, and $\bigcirc X = \{\bigcirc x \mid x \in X\}$. $\varphi_t^e$ limits the relation between the next behaviors of the environment and the current state.

- $\varphi_t^s$ is a conjunction of several subformulas in the form of $\Box B_i$, where $B_i$ is a Boolean formula defined on $X \cup Y \cup \bigcirc X \cup \bigcirc Y$, and $\bigcirc Y = \{\bigcirc y \mid y \in Y\}$. $\varphi_t^s$ limits the relation between the next behaviors of the UAV and the current state, as well as the next behaviors of the environment.

- $\varphi_g^e$ and $\varphi_g^s$ are conjunctions of several subformulas in the form of $\Box\Diamond B_i$, where $B_i$ is a Boolean formula defined on $X \cup Y$. They describe the final goals of the environment’s and UAV’s behaviors, respectively.

The formula $\varphi = (\varphi_e \Rightarrow \varphi_s)$ is called a Generalized Reactivity (1) (GR(1)) formula [36]. Intuitively speaking, $\varphi_e$ (i.e., $\varphi_i^e \wedge \varphi_t^e \wedge \varphi_g^e$) constrains the possible environments and $\varphi_s$ (i.e., $\varphi_i^s \wedge \varphi_t^s \wedge \varphi_g^s$) limits the UAV’s behaviors, so the specification $\varphi$ defines the rules of the UAV’s behaviors under given environments.

Although GR(1) specifications possess strictly weaker expressive power than full LTL (some LTL formulas cannot be expressed in the fragment), this fragment remains sufficiently expressive for characterizing most practical system requirements, particularly in reactive system design [36].

3.3.2. Controller Synthesis

The GR(1) synthesis algorithm achieves cubic-time complexity, $O(n^3)$, for strategy automaton construction, where n denotes the size of the state space [36].

The synthesis algorithm [36] resolves a two-player adversarial game between the environment and the UAV, in which the initial states of the environment and the UAV are limited by the initial conditions $\varphi_i^e$ and $\varphi_i^s$, respectively, and the transition constraints $\varphi_t^e$ and $\varphi_t^s$ determine the state transitions. The game’s winning condition is specified by the GR(1) formula $\varphi_g^e \Rightarrow \varphi_g^s$ over the goal constraints. The UAV is said to be winning if, no matter how the environment changes within its constraints, the UAV can always find a way to proceed and the resulting path of the game complies with the winning condition. The synthesis algorithm finds such a winning strategy for the UAV. As described in [36], a game structure can be obtained on the basis of the specifications, and then the strategy for the UAV can be synthesized (if possible) by using the $\mu$-calculus over the game structure.
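The fixpoint flavor of this game solving can be illustrated on a much simpler case. The sketch below is not the GR(1) algorithm of [36]. It solves only a plain safety game, computing the largest set of states from which the UAV can stay inside a safe set forever, by iterating a controllable-predecessor operator. The game encoding (state, then environment input, then UAV output, then next state) and all state names are assumptions of this example.

```python
def controllable_predecessors(game, target):
    """States where, for every environment input, some UAV output leads into target."""
    return {
        state
        for state, env_moves in game.items()
        if all(
            any(nxt in target for nxt in sys_moves.values())
            for sys_moves in env_moves.values()
        )
    }


def safety_winning_region(game, safe):
    """Greatest fixpoint: shrink the safe set until the UAV can always stay inside it."""
    region = set(safe) & set(game)
    while True:
        shrunk = region & controllable_predecessors(game, region)
        if shrunk == region:
            return region
        region = shrunk


# Hypothetical game: game[state][environment input][UAV output] = next state.
game = {
    "cruise": {"clear": {"forward": "cruise"},
               "obstacle": {"forward": "crash", "hover": "hold"}},
    "hold": {"clear": {"forward": "cruise"},
             "obstacle": {"hover": "hold"}},
    "trap": {"clear": {"forward": "trap"},
             "obstacle": {"forward": "crash"}},
    "crash": {"clear": {"hover": "crash"},
              "obstacle": {"hover": "crash"}},
}
print(sorted(safety_winning_region(game, {"cruise", "hold", "trap"})))
# ['cruise', 'hold']
```

Here `trap` is removed because the environment can force a crash from it, even though `trap` itself belongs to the safe set. The GR(1) algorithm nests several fixpoints of this kind to handle the recurring goals.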

The strategy synthesized by the algorithm is represented in the form of the automaton $A = (X, Y, Q, Q_0, \delta, \gamma)$:

- $X$ is the set of input (environment) propositions,

- $Y$ is the set of output (UAV) propositions,

- $Q$ is the set of states,

- $Q_0 \subseteq Q$ is the set of initial states,

- $\delta: Q \times 2^X \rightarrow 2^Q$ is the transition relation,

- $\gamma: Q \rightarrow 2^Y$ is the state labeling function, where $\gamma(q)$ is the set of UAV propositions that are True in state q.

Given an admissible input sequence satisfying the environmental constraint $\varphi_e$, the strategy automaton enables the UAV to generate a discrete motion trajectory, which can guide the UAV’s regional navigation decisions while dynamically activating or deactivating specific actions.
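Executing such a strategy automaton amounts to a table lookup per step. The following sketch assumes, for simplicity, a deterministic transition function (one successor per state and input valuation) rather than a general transition relation. The state names, the `obstacle` input, and the region labels are hypothetical.

```python
def run_strategy(initial, delta, label, inputs):
    """Follow the automaton along the inputs and collect the UAV propositions per step."""
    state = initial
    outputs = []
    for observed in inputs:
        key = (state, frozenset(observed))
        if key not in delta:
            # The environment left the admissible behavior assumed by the strategy.
            raise ValueError(f"inadmissible input {sorted(observed)} in state {state}")
        state = delta[key]
        outputs.append(label[state])
    return outputs


# Hypothetical two-waypoint patrol: stay in place while an obstacle is sensed.
delta = {
    ("at_p1", frozenset()): "at_p2",
    ("at_p1", frozenset({"obstacle"})): "at_p1",
    ("at_p2", frozenset()): "at_p1",
    ("at_p2", frozenset({"obstacle"})): "at_p2",
}
label = {"at_p1": {"P1"}, "at_p2": {"P2"}}

print(run_strategy("at_p1", delta, label, [set(), {"obstacle"}, set()]))
# [{'P2'}, {'P2'}, {'P1'}]
```

An input valuation with no matching transition is reported as an error, which mirrors the fact that the strategy only guarantees correct behavior for admissible input sequences.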

4. Unified Monitor and Controller Synthesis Framework

A UAS typically consists of a UAV and a Ground Control Station (GCS). The actions of the UAV can therefore be considered as the system, while the inputs from the UAV sensors and the commands from the GCS are considered as the environment. The communication between the UAV and the GCS can be abstracted into the process of the UAV sensing data from its environment.

For simple UAS implementations requiring monitoring of limited safety/security properties, we propose a modular architecture to enhance reusability, in which the UAV specifications are decoupled into two distinct components: Monitor and Actuator [5].

The Monitor module, grounded in RV theory, performs real-time compliance checking of the UAV executions against predefined properties through data stream analysis. Conversely, the Actuator module fulfills functional requirements by executing dynamic control actions.

All outputs from the Monitor will be set as sensor inputs to the Actuator. However, this design introduces controller synthesis complexity that escalates exponentially with the number of monitor propositions. Here, we consider an experiment against a risky command attack as an example:

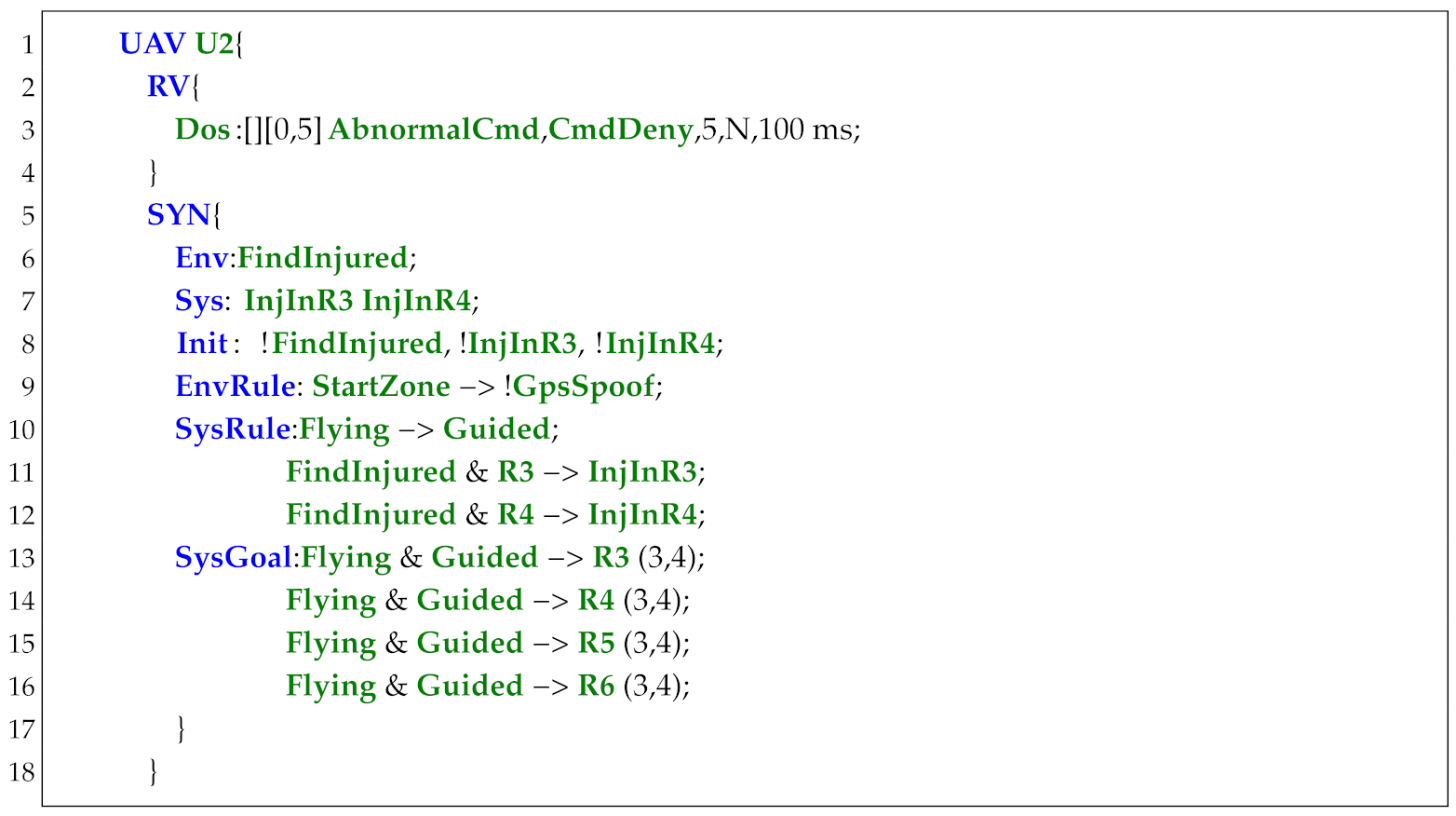

Example 1.

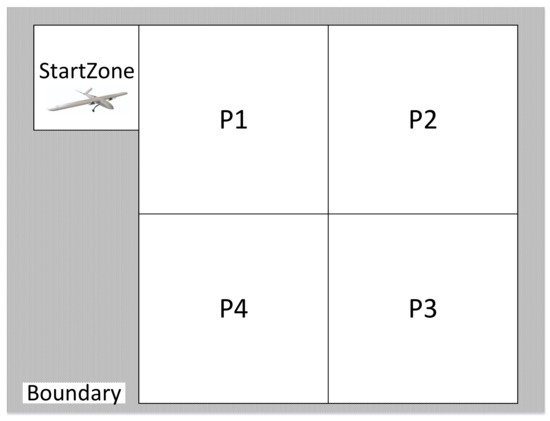

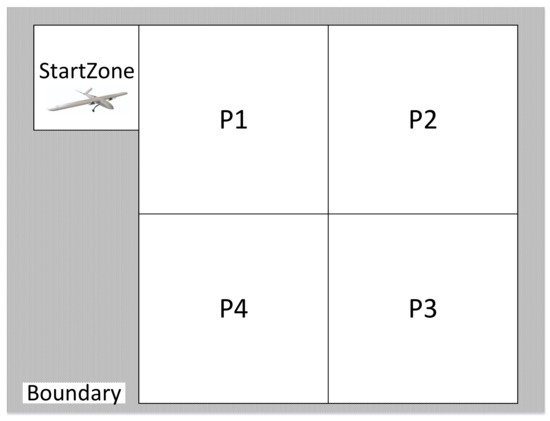

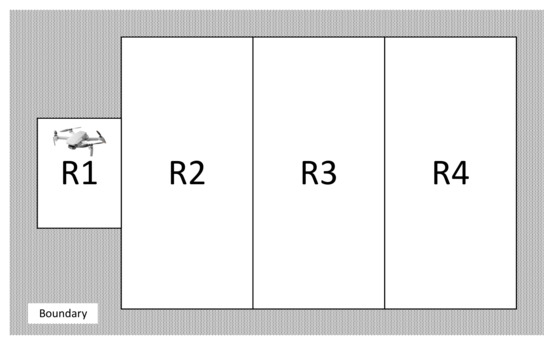

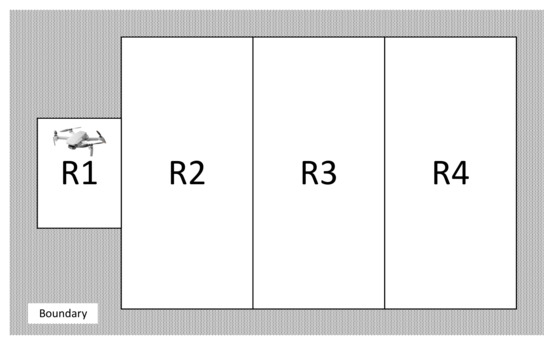

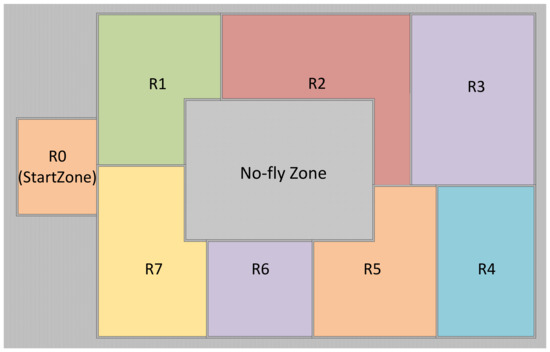

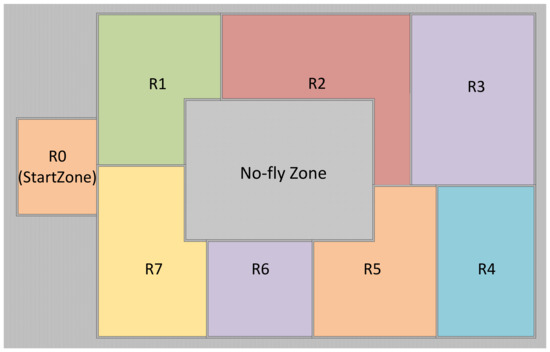

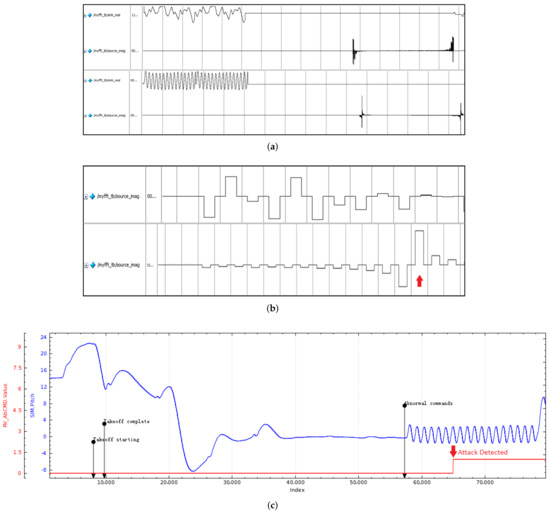

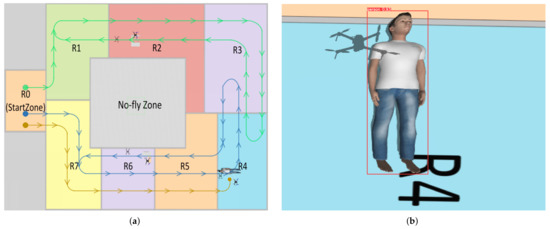

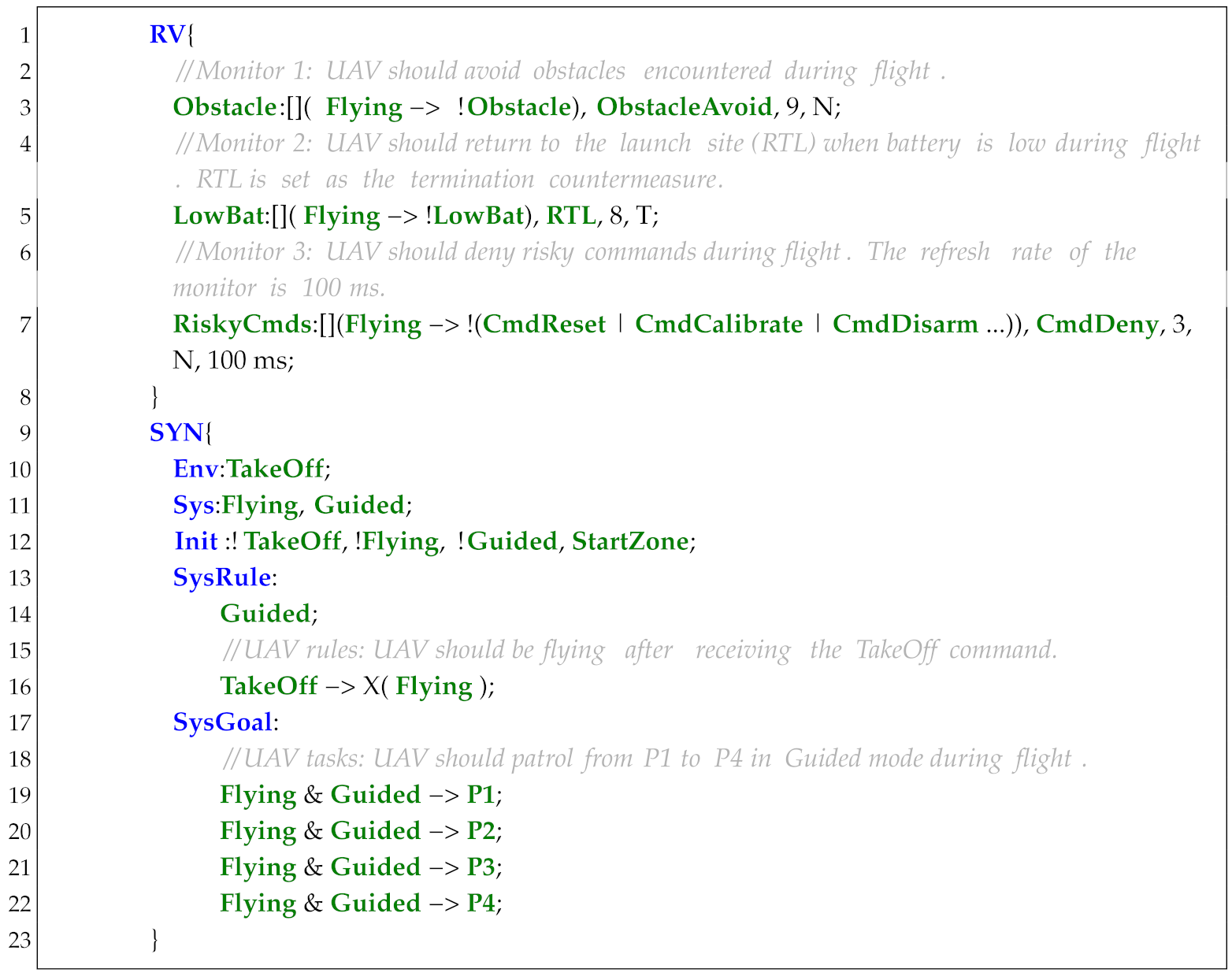

The UAV takes off from the StartZone in Figure 4 and patrols waypoints P1–P4 in Guided mode. The formal specification is defined in Listing 1, with three runtime monitors deployed: Monitor 1 is designed to avoid obstacles (line 7), Monitor 2 is designed to monitor the power consumption and change to Return To Launch (RTL) mode when the power is low (lines 9–10), and Monitor 3 is designed to defend against multi-class risky command attacks (lines 12–13). Although secure controllers can be synthesized by manually integrating security properties with task specifications, this approach exhibits three critical limitations:

Figure 4. The workspace of the UAS model.

Listing 1. Specification of Example 1.

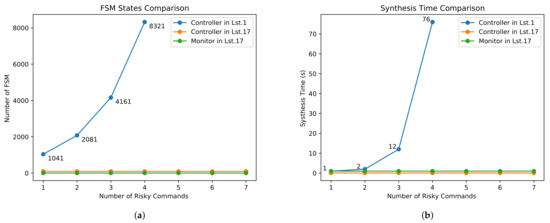

Limitation 1: Integrating UAV property monitor specifications with task specifications [5] leads to exponential growth in controller synthesis complexity. Concurrently, the LTL fragment supported by GR(1) inherently restricts the expressiveness of monitorable properties. Table 1 quantifies this relationship, showing how the state number of the controller’s Finite State Machine (FSM) and the synthesis time grow as the number of risky commands in Monitor 3 (line 12) increases. When more than four risky commands are monitored, synthesis fails to complete within an acceptable time frame (>15 min). Furthermore, GR(1)’s LTL subset cannot express temporal properties requiring metric constraints (e.g., time-bounded MTL formulae) or the standard LTL properties that RV monitors support. In addition, due to the lack of a priority mechanism in action scheduling, some high-priority actions may not be scheduled in time.

Table 1. Synthesis time and FSM state number of Example 1.

Limitation 2: Traditional GR(1)-based task specifications lack metric task generation capabilities. This manifests in two critical constraints: (a) inability to bound action execution frequencies (e.g., enforcing ≤3 retries for landing procedures), and (b) mandatory infinite recurrence of all UAV goals. These limitations significantly restrict the controller’s applicability in real-world scenarios requiring quantitative task design.

Limitation 3: The lack of a domain-specific language for UASs based on formal methods leads to difficulties in designing secure controllers for UAVs. Mapping information from various software and hardware resources into Application Programming Interfaces (APIs) compatible with the atomic propositions of GR(1) specifications is challenging. Employing different programming languages to develop monitor and controller specifications separately further reduces the efficiency of UAV specification design, while generating code with compromised portability and usability.

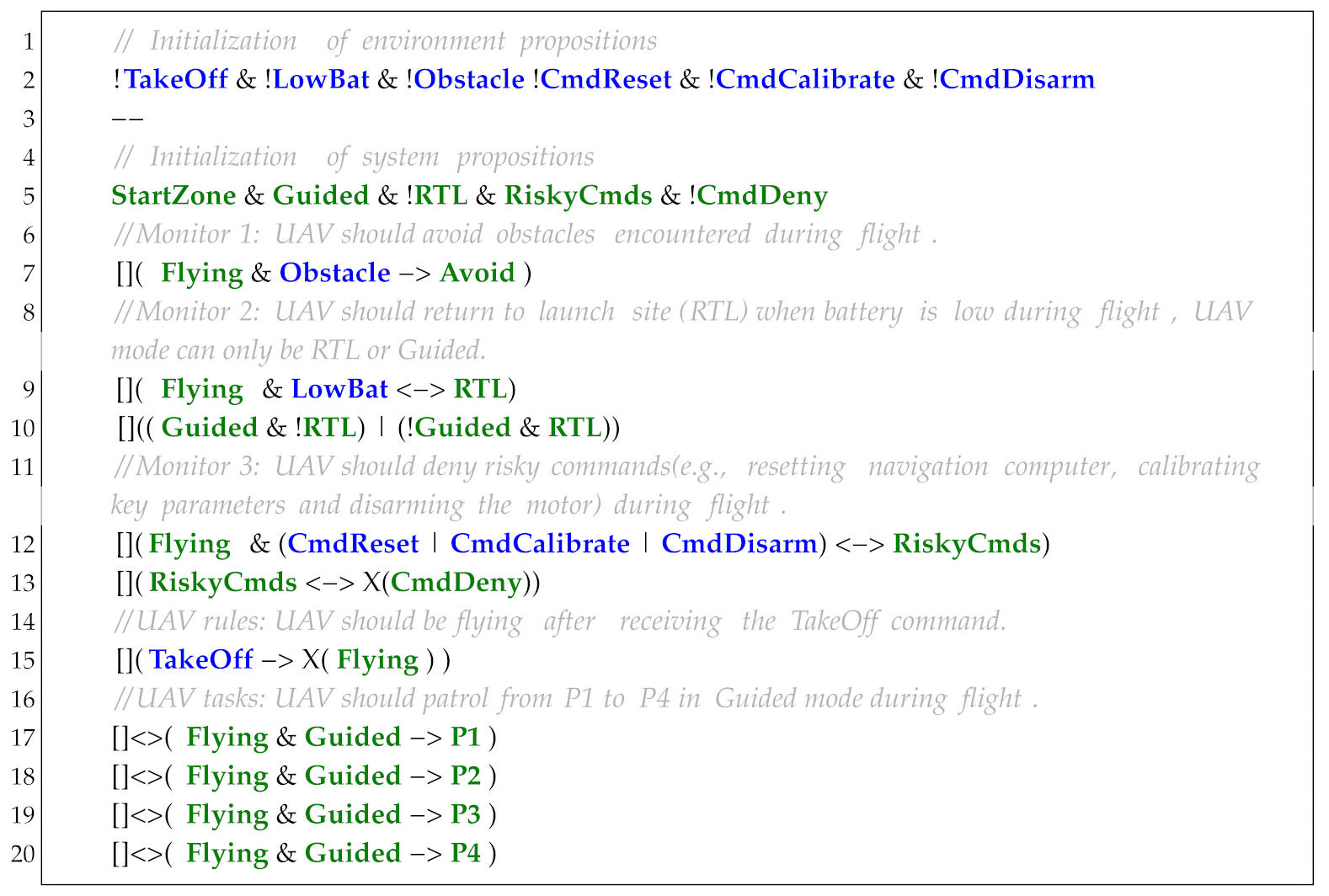

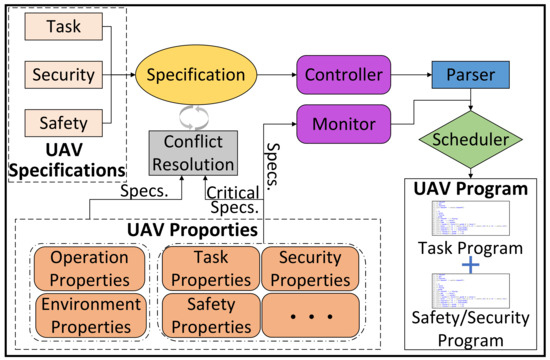

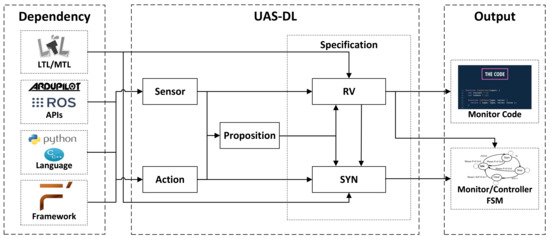

To solve these limitations, we propose a unified monitor and controller synthesis framework for a UAS secure controller (Figure 5). First, we propose a specification pattern to describe frequently used UAS properties (e.g., task, safety, and security constraints) (Section 5). In addition, in order to help users design various resource interfaces, task specifications, and monitored UAS properties, we design the domain-specific language (UAS-DL) (Section 7). Based on the predefined UAV properties and UAS-DL, a novel monitor-controller synthesis methodology is developed. This approach integrates RV with reactive synthesis techniques to generate a secure controller for UASs (Section 4). Depending on the task requirements, the runtime monitor outputs are fed into the controller synthesizer’s environmental constraints. Monitor countermeasures are also classified as critical/non-critical based on their impact on controller event scheduling. Critical countermeasures (which influence scheduling) and their corresponding monitor outputs are mapped to the system constraint outputs and the environmental constraint inputs, respectively. Non-critical monitors operate autonomously for real-time UAV property monitoring without disrupting controller event scheduling. The scheduler prioritizes both monitor countermeasures and controller actions/motions according to their urgency and parallelization capabilities (Section 6.2). We develop two methods for task generation with metrics to enhance the operational applicability of synthesized UAV controllers (Section 6.3). The framework was implemented via NASA’s component-based F′ framework, with validation conducted on a Gazebo/ArduPilot/ROS (Robot Operating System) co-simulation platform (Section 8). The experimental results demonstrate the following advantages:

Figure 5. Unified monitor and controller synthesis framework.

Advantage 1: By classifying and handling RV monitor specifications based on their impact on the scheduling of controller events (instead of embedding all monitor specifications in the controller specifications), we can use more formal languages (e.g., LTL, STL, and MTL) to express the safety and security properties. This enhances the expressiveness of specifications while reducing both the controller’s synthesis time and the number of states in the generated automata. In addition, by dividing events into multiple categories and making the monitor and controller events loosely coupled, our priority-based scheduling method improves the system’s response speed and supports the deployment of additional monitors and the design of more sophisticated tasks.

Advantage 2: In practice, due to the limited resources of UAVs, few goals are required to be fulfilled infinitely often; most need only to be fulfilled once or a finite number of times. To address this, we design a regular expression to express task specifications with metrics. We then propose methods based on specification generation and re-synthesis to achieve task generation with metrics in GR(1)-based controller synthesis. This approach enables users to design correct-by-construction UAV tasks with enhanced flexibility and precision through formal methods.

Advantage 3: Based on F Prime (F′), a component-based open-source framework [40], we design a domain-specific language (UAS-DL) that provides a programming platform for formal specification design of a UAS. UAS-DL supports embedding code in UAVs’ commonly used languages, such as C/C++, Python, and ArduPilot commands. The language enables (1) extraction of software/hardware resource information from UAS, (2) design of propositional interfaces and monitor/task specifications, and (3) implementation of advanced features, including task metrics and event priorities. Components developed with standardized interfaces through UAS-DL further demonstrate high reusability and portability.
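The idea of scheduling monitor countermeasures and controller events by priority can be pictured with a small priority queue. The sketch below is only an assumed illustration, not the scheduling method of Section 6.2: it ignores parallelizability, treats a lower number as more urgent, and uses hypothetical event names and a hypothetical `EventScheduler` class.

```python
import heapq
import itertools


class EventScheduler:
    """Dispatch events by priority; ties are served in submission order."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()   # tie-breaker that keeps FIFO order

    def submit(self, priority, name):
        # Assumption of this sketch: a lower number means a more urgent event.
        heapq.heappush(self._heap, (priority, next(self._order), name))

    def drain(self):
        while self._heap:
            _, _, name = heapq.heappop(self._heap)
            yield name


scheduler = EventScheduler()
scheduler.submit(2, "controller: go to P1")
scheduler.submit(0, "countermeasure: switch to RTL")
scheduler.submit(1, "controller: take photo")
scheduler.submit(0, "countermeasure: reject risky command")
dispatched = list(scheduler.drain())
print(dispatched)
```

In this run, both countermeasures are dispatched before the controller events, and the two countermeasures keep their submission order.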

5. UAS Properties and Specification Patterns

5.1. System Structure of UAS

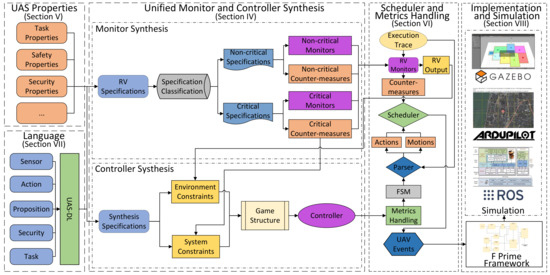

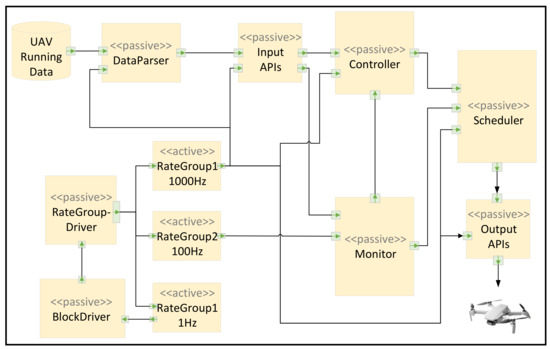

A UAS is generally divided into two components: the UAV and the GCS. The GCS can be a Remote Controller (RC), a smart mobile device, a computer, or even a military base, establishing bidirectional communication with the UAV to enable remote state monitoring and action control. UAVs are of various types, including fixed-wing crafts, rotorcrafts, and others. In addition to remote human operation, UAVs can operate in autonomous or semi-autonomous modes. Autonomous UAVs sense the environment using active or passive perception systems, make decisions through real-time mission planning algorithms, and command actuators to execute specific behaviors for achieving desired goals. Functionally, most UASs consist of four layers, as illustrated in Figure 6 [41]:

Figure 6. System structure of UAS.

- Perception layer. UAVs are equipped with a variety of sensors: positioning and navigation are implemented by the Global Navigation Satellite System (GNSS) and IMU, where GNSS provides absolute positioning and IMU enables relative positioning and attitude estimation. Environment perception relies on radar, cameras, and the Automatic Dependent Surveillance-Broadcast (ADS-B) system for state broadcasting and collision avoidance. Sensor fusion methods integrate these data streams to construct environmental models and detect anomalies through statistical property analysis [42].

- Execution layer. This layer comprises an application sublayer (high-level command parsing) and an action sublayer (low-level actuation). The onboard control system (e.g., Pixhawk or APM) processes perception and control inputs, generating actuator commands via algorithms such as the Strapdown Inertial Navigation System (SINS), Kalman filtering, and PID control. The action sublayer translates these commands to drive the physical actuators to execute the dynamic motions [43].

- Control layer. The GCS serves as the UAS command center, monitoring real-time flight states (position, altitude, battery), payload status, and sensor feeds. It supports short-term decision-making (e.g., obstacle avoidance) and long-term mission planning (e.g., task rescheduling), with commands transmitted via MAVLink or similar protocols. The GCS usually integrates four modules: communication (data uplink/downlink), information display (GUI for operators), data storage (flight logs), and video processing (real-time analytics) [44].

- Transmission layer. UAVs establish heterogeneous networks through Air-to-Ground (AG, e.g., 4G/5G), Air-to-Air (AA, e.g., swarm coordination), and satellite links. These networks prioritize low-latency command delivery and high-bandwidth data transmission (e.g., HD video streaming).

5.2. Specification Patterns for UAS

UAS vulnerabilities span multiple critical domains, including the perception layer (e.g., sensor spoofing), the execution layer (e.g., actuator hijacking), the control layer (e.g., command injection), and the transmission layer (e.g., man-in-the-middle attacks). To address these, a pattern-based framework is designed to formally model both safety properties (e.g., collision-free trajectory compliance) and security properties (e.g., encrypted data integrity). This framework enables the synthesis of runtime-enforced controllers through temporal logic constraints. A specification pattern within this framework comprises six components [5]:

- Input is the user-defined proposition that provides the interface between the real-world environment and the abstract model of UAS. In real UAS control software (e.g., ArduPilot), inputs can be events parsed from flight log files.

- Property defines safety/security requirements constraining UAS behaviors, typically expressed as shall not statements (e.g., shall not enter no-fly zones).

- Specification formalizes properties using temporal logic formulas.

- Output is the monitor’s verdict, which can be fed into the environment constraint of the GR(1) synthesizer.

- Countermeasure is the enforcement operation a UAS should take when the properties are violated, which can be fed into the system constraint of the GR(1) synthesizer.

- Priority quantifies the urgency of an event on a scale from 1 (low) to 10 (high). For example, triggering RTL mode, which terminates the current mission (priority 5), is assigned priority 9.
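As a minimal sketch, the six components listed above could be held in a single record such as the following. The class name, field names, the plain-text formula syntax, and the example values are illustrative assumptions; they are not taken from UAS-DL or from the tables in this paper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SpecificationPattern:
    """Hypothetical container for one specification pattern."""
    inputs: Tuple[str, ...]   # user-defined propositions (interface to the environment)
    prop: str                 # natural-language "shall not" requirement
    specification: str        # temporal logic formula, kept as plain text in this sketch
    output: str               # proposition carrying the monitor's verdict
    countermeasure: str       # enforcement operation taken on violation
    priority: int             # urgency from 1 (low) to 10 (high)

    def __post_init__(self):
        # Enforce the 1..10 priority scale described in the text.
        if not 1 <= self.priority <= 10:
            raise ValueError("priority must be between 1 and 10")


# Illustrative instance (all values are assumptions, not a row of Tables 3-7).
no_fly = SpecificationPattern(
    inputs=("in_no_fly_zone",),
    prop="The UAV shall not enter no-fly zones",
    specification="G !in_no_fly_zone",
    output="no_fly_violation",
    countermeasure="RTL",
    priority=9,
)
```

Keeping the record immutable makes it safe to share one pattern between the monitor generator and the scheduler.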

We therefore analyze the threats and summarize a specification pattern for each corresponding property. For each threat, we introduce one possible countermeasure and give the property specification derived from that protective mechanism. The definitions and descriptions of the input and output interfaces of UAS are shown in Table 2.

Table 2.

Definitions and descriptions of input, output, and countermeasure interfaces of UAS.

5.3. Properties of UAS

Based on the property patterns, the corresponding properties are designed by analyzing the safety/security threats and task specifications of UAS. Various taxonomies are studied and discussed from different perspectives. We have detailed the classification of properties in another paper [41]. However, this study adopts methodological simplifications to enhance conceptual clarity in modeling UAV behaviors. Specifically, some three-dimensional operational dynamics are abstracted into two-dimensional representations, with spatial constraints and motion primitives intentionally reduced in parametric complexity. Real-world UAV applications inherently involve multi-domain environmental interactions and adaptive response mechanisms. Such implementation-specific considerations include, but are not limited to, aerodynamic disturbances, sensor noise compensation, and dynamic obstacle avoidance, which lie beyond the theoretical scope of this foundational investigation. The current abstraction preserves essential behavioral equivalences between control strategies and physical implementations, deliberately excluding practical engineering constraints to maintain analytical focus on core operational principles. Some specific examples are demonstrated in the following sections.

5.3.1. Security Properties of UAS

UAS security threats can be classified into three categories (hardware threats, software threats, and cyber-physical threats) and 13 specific threats [41]. The following are three representative threats among the 13.

Signal Traffic Blocking. As shown in Table 3, signal analysis and processing are restricted by the limited resources in embedded systems, such as UAS. Massive and continuous data sent to UAS can easily overwhelm and exhaust these resources. DoS or DDoS attacks on UAS are fatal and difficult to defend against. Because the packets sent to UAS are valid and consume too many resources, the system will become overloaded and fail to respond to normal requests. Gabriel Vasconcelos et al. [45] performed an experiment to evaluate the impact of DoS attacks on the AR.Drone 2.0 using three attack tools: LOIC, Netwox, and Hping3. Directional radio frequency interference is a simple way to jam the target UAS by emitting signals with specific directions, power levels, and frequencies.

Table 3.

Specification pattern : signal traffic blocking.

Control Commands Spoofing. As shown in Table 4, this attack typically results from logic flaws or insufficient authorization. For example, the DJI Phantom III was totally hijacked by hackers during GeekPwn 2015. The attackers cracked the signals of the BK5811 chip mounted on the Phantom III’s RC controller and exploited design vulnerabilities in the chip to generate malicious commands.

Table 4.

Specification pattern : control commands spoofing.

Sensor Spoofing. As shown in Table 5, data from sensors are the most important input for the UAS. Beyond the control flow, attackers can also interfere with or spoof sensor data to degrade system performance. For example, GPS spoofing is the most common attack and can be categorized into autonomous spoofing and forwarding spoofing. Researchers have studied multiple cases in [29]. Countermeasures may include mechanisms to verify consistency among multiple sensors regarding the following: (1) motion speed (e.g., the coordinates of the UAV cannot change from Beijing to New York within a few seconds); (2) time synchronization between GPS and NTP [46]; and (3) directional consistency of the GPS and electronic compass.
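The motion-speed consistency check in item (1) can be illustrated with a short sketch. It assumes GPS fixes are given as (latitude, longitude, timestamp in seconds) and that the UAV has a known maximum ground speed; the function names and the 30 m/s default are hypothetical choices, not values from this paper.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def gps_jump_suspicious(prev_fix, curr_fix, max_speed_mps=30.0):
    """Return True if the implied ground speed exceeds max_speed_mps.

    Each fix is (lat_deg, lon_deg, t_seconds). The speed limit is an assumed
    platform parameter.
    """
    lat1, lon1, t1 = prev_fix
    lat2, lon2, t2 = curr_fix
    dt = t2 - t1
    if dt <= 0:
        return True  # non-increasing timestamps are treated as suspicious here
    return haversine_m(lat1, lon1, lat2, lon2) / dt > max_speed_mps


# Beijing to New York within five seconds is physically impossible.
beijing = (39.9042, 116.4074, 0.0)
new_york = (40.7128, -74.0060, 5.0)
print(gps_jump_suspicious(beijing, new_york))
```

A verdict of True from such a check could serve as the monitor output that triggers the countermeasure of the pattern.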

Table 5.

Specification pattern : sensor spoofing.

5.3.2. Safety Properties of UAS

As shown in Table 6, unlike security threats that are mainly caused by attackers, safety threats primarily originate from the environment and the UAV’s intrinsic limitations, such as battery consumption, maximum flight speed, system memory, CPU utilization, and obstacle avoidance. For example, to prevent the UAV from being unable to return due to low battery charge, we can set its minimum battery level to 50% during flight.

Table 6.

Specification pattern : safety constraint

5.3.3. Task Properties of UAS

As shown in Table 7, the UAV monitor-property pattern can be applied to design basic tasks following the principle (i.e., triggering specific actions when predefined conditions are satisfied). For instance, during high-altitude airdrop operations targeting injured personnel, the UAV altitude must remain below 30 meters (m) to ensure delivery accuracy within a 1-meter radius of the target coordinates. This constraint is critical because atmospheric disturbances above 30 m altitude can induce unacceptable trajectory deviations.

Table 7.

Specification pattern : task constraint.

6. Scheduling Algorithm and Task Generation with Metrics

To achieve accurate monitoring and control of UAS, our UMCS framework must address two fundamental challenges: the unified scheduling of multiple UAV events and the quantifiable design of GR(1)-based UAV tasks. To overcome these challenges, we introduce two novel approaches: priority-based scheduling and task generation with metrics based on specification generation and re-synthesis.

6.1. Priority-Based Scheduling

To coordinate RV monitor countermeasures with controller actions/motions, we implement a priority-based scheduling architecture.

Scheduler Fundamentals

The scheduling mechanism requires three core definitions:

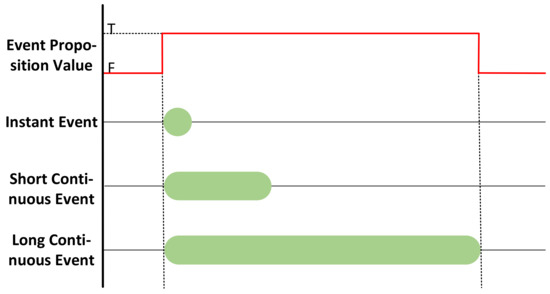

1. Event Categories: The scheduler needs to manage controller-generated actions/motions and monitor-generated countermeasures, collectively termed events. As illustrated in Table 8, we classify events into three types based on execution time, interruptibility, and termination characteristics:

- Instant events require short execution time (typically within one scheduling cycle), exhibit non-interruptible characteristics, and feature autonomous termination. These events are atomic operations that either: (1) execute in parallel when resource-independent, or (2) undergo sequential scheduling during resource conflicts. Example implementations include CmdDeny and ObstacleAvoid.

- Short continuous events require extended duration with interruptible execution and self-termination capability. Termination triggers occur upon either: (1) natural task completion, or (2) proposition value transition to False (e.g., Move and RTL operations).

- Long continuous events require persistent duration with interruptible execution. Termination triggers occur only upon the proposition value transition to False, as exemplified by the UAV being in Guide and Hover modes.

Table 8.

Event characteristics.


| Event Categories | Execution Time | Interruptible? | Automatic Termination? |
|---|---|---|---|
| Instant Event | Short | No | Yes |
| Short Continuous Event | Long | Yes | Yes |
| Long Continuous Event | Long | Yes | No |
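The three event categories and their characteristics can be encoded directly, as in the sketch below. The type and attribute names are assumptions made for illustration; the category assignments of the example events follow the descriptions given above.

```python
from dataclasses import dataclass
from enum import Enum


@dataclass(frozen=True)
class EventTraits:
    long_running: bool      # execution time: False = short, True = long
    interruptible: bool     # can a higher-priority event suspend it?
    auto_terminates: bool   # does it end on its own?


class EventCategory(Enum):
    INSTANT = EventTraits(long_running=False, interruptible=False, auto_terminates=True)
    SHORT_CONTINUOUS = EventTraits(long_running=True, interruptible=True, auto_terminates=True)
    LONG_CONTINUOUS = EventTraits(long_running=True, interruptible=True, auto_terminates=False)


# Example events named in the text, mapped to their categories.
EXAMPLES = {
    "CmdDeny": EventCategory.INSTANT,
    "ObstacleAvoid": EventCategory.INSTANT,
    "Move": EventCategory.SHORT_CONTINUOUS,
    "RTL": EventCategory.SHORT_CONTINUOUS,
    "Guide": EventCategory.LONG_CONTINUOUS,
    "Hover": EventCategory.LONG_CONTINUOUS,
}
```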

Figure 7.

Execution time of event.

Table 9.

Examples of common events.

2. Event Parallelizability: Events that can execute concurrently are classified as parallelizable; all others are non-parallelizable. Non-parallelizability arises from either (a) shared resource allocation (software/hardware) or (b) behavioral incompatibility. For instance, Rising and Falling events conflict over a shared resource (the engine), whereas the UAV flight modes Hover and RTL are behaviorally incompatible because their control objectives conflict.
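The two sources of non-parallelizability can be checked mechanically. The following sketch assumes each event declares the resources it uses and that behaviorally incompatible pairs are listed explicitly; the table contents (including the hypothetical TakePhoto event) and the function name are illustrative assumptions.

```python
# Resources used by each event (assumed for illustration).
RESOURCES = {
    "Rising": {"engine"},
    "Falling": {"engine"},
    "TakePhoto": {"camera"},  # hypothetical event, not from the paper
}

# Pairs of events whose control objectives conflict.
INCOMPATIBLE = {frozenset({"Hover", "RTL"})}


def parallelizable(a, b, resources=RESOURCES, incompatible=INCOMPATIBLE):
    """Return True if events a and b may run concurrently."""
    shared = resources.get(a, set()) & resources.get(b, set())
    if shared:
        return False  # (a) shared resource allocation
    if frozenset({a, b}) in incompatible:
        return False  # (b) behavioral incompatibility
    return True


print(parallelizable("Rising", "Falling"))    # False: both need the engine
print(parallelizable("Hover", "RTL"))         # False: conflicting objectives
print(parallelizable("Rising", "TakePhoto"))  # True
```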

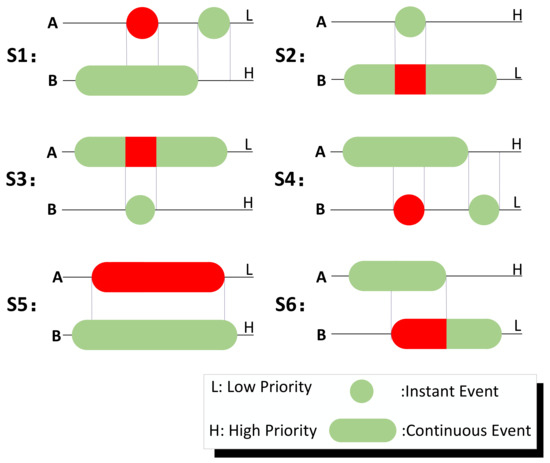

Under ideal conditions where task specifications incorporate comprehensive parallelizability analysis (particularly for action–motion interactions) and the controller is automatically synthesized, all events within each controller state should exhibit parallelizability. Our research specifically examines parallelizability interactions between the controller and RV monitor. Given the inherent parallelizability of instant events in our architecture, the core investigation focuses on the parallelizability between instant and continuous events. The implemented Priority-Based Preemptive Scheduling (PBPS) mechanism addresses six fundamental concurrency patterns shown in Figure 8, where:

- A = Controller-generated event

- B = Monitor-generated countermeasure

- Executing = Active state

- Blocked = Resource contention state

- Priority levels: Low (L) vs. High (H)

Figure 8.

Main scenarios of non-parallelizable events.

In S2 and S3, high-priority instant events can preempt low-priority continuous events. For instance, when movement and obstacle avoidance conflict over resources, letting a high-priority instant obstacle-avoidance event preempt a low-priority continuous movement is both necessary and feasible. Such preemptions typically do not cause task failure, so these events should be classified as non-critical countermeasures.

In S4 and S6, high-priority controller events block low-priority monitor events, which delays their execution; this is permissible in non-critical scenarios. Consider a UAV in high-speed flight that prioritizes a mission-critical task over a low-priority energy-saving alert: the deceleration countermeasure can be deferred until the task completes.

In S1 and S5, high-priority monitor events interfering with low-priority controller events risk task failure. These require designation as critical events through synthesizer specification integration to resolve non-parallelizability issues, or alternatively as termination events aborting current tasks for emergency procedures.

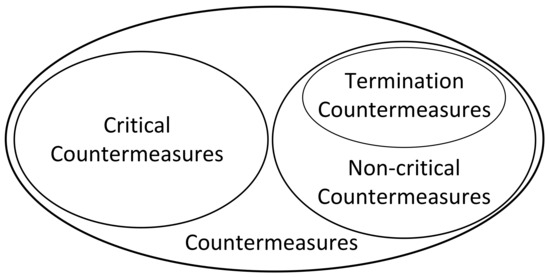

3. Countermeasures Categories: To optimize non-parallelizable event scheduling efficiency, we divide the monitor countermeasures into the following three types, as shown in Figure 9:

- Critical countermeasures: Task-conflicting events

- Non-critical countermeasures:

  - Termination type: Task-aborting events

  - Deferrable type: Temporarily suppressible events

Figure 9.

Countermeasures categories.

Critical countermeasures refer to those that block or delay the scheduling of controller events. S1 and S5 in Figure 8 are typical scenarios of critical countermeasures, which are generally non-parallelizable continuous countermeasures with higher priority than that of the controller events. The corresponding specifications of critical countermeasures need to be added to the task specification. The added specifications are generally composed of the trigger condition (e.g., ) and predefined operational specifications. For example, when the UAV mode is switched to RTL under GPS attacks, the synthesizer can automatically add the trigger condition specification () and predefined operations for countermeasures Hover.

Non-critical countermeasures refer to those that can be parallelized with the controller events or terminate the execution of UAV tasks. These countermeasures are not added to the system proposition of the task specification. For example, CmdDeny is a typical non-critical countermeasure, as it generally does not affect the execution of other events.

Termination countermeasures are a subset of non-critical countermeasures that terminate UAV task execution. Since their occurrence causes task failure, they require immediate termination of existing controller event scheduling. Common examples include RTL and EmergLanding (Emergency Landing). Conversely, non-termination countermeasures refer to non-critical countermeasures, excluding termination ones.

For each event, non-parallelizable parameters should be configured as shown in Table 10. The non-parallelizable events of Move include RTL, EmergLanding, ObstacleAvoid, and Hover (numbers in parentheses indicate priority levels). Critical events are automatically determined by the synthesizer based on event parallelizability or manually set. Termination events (e.g., RTL (7) and EmergLanding (8)) must be manually defined. A high-priority termination event will override a scheduled low-priority one. For instance, if a UAV executing RTL (7) due to a DoS attack encounters a mechanical fault requiring EmergLanding (8), the latter terminates RTL. ObstacleAvoid, a high-priority instant event, can interrupt low-priority termination or controller events. Hover, a continuous event with the same priority as Move, is classified as critical, and its corresponding specifications must be added to the task specification.

Table 10.

Demo non-parallelizable events table.
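The override rule for termination events can be sketched in a few lines. Events are represented as (name, priority) pairs; keeping the active event on a priority tie is an assumption of this sketch, since the text only covers the strictly higher case.

```python
def resolve_termination(active, incoming):
    """Return the termination event that should be in effect.

    active:   (name, priority) of the termination event being executed, or None
    incoming: (name, priority) of a newly triggered termination event
    """
    if active is None or incoming[1] > active[1]:
        return incoming  # a higher-priority termination event overrides
    return active        # otherwise the current one continues (assumed for ties)


# RTL (7) triggered by a DoS attack, then a mechanical fault requires EmergLanding (8).
current = resolve_termination(None, ("RTL", 7))
current = resolve_termination(current, ("EmergLanding", 8))
print(current)  # ('EmergLanding', 8)
```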

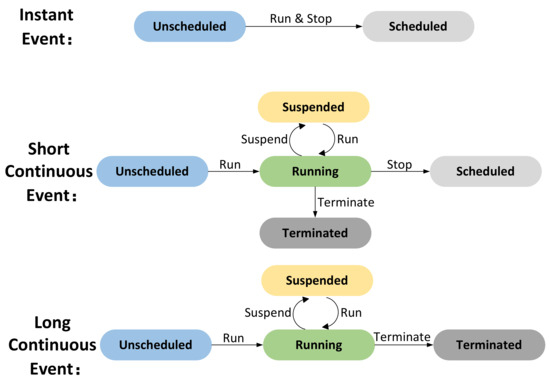

4. Event States: For efficient scheduling, we define five states: Unscheduled, Running, Suspended, Scheduled, and Terminated.

As shown in Figure 10, the initial state of each event is Unscheduled. The scheduler handles events differently based on their types: (1) An unscheduled instant event transitions to Scheduled immediately after it runs. (2) An unscheduled short continuous event enters the Running state when scheduled. A Running event can be suspended by a higher-priority non-parallelizable event, moving to Suspended, and resumes Running once that event completes. If its proposition value becomes False, it transitions to the Terminated state; once it completes, it transitions to the Scheduled state. (3) A long continuous event behaves similarly but has no Scheduled state; its termination depends solely on its proposition value.

Figure 10.

Event states of instant and continuous events.
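The state transitions described for the three event types can be written as a small transition table. This is a sketch only: the trigger names ("schedule", "preempt", "resume", "proposition_false", "complete") are assumptions introduced here, and only transitions stated in the text are included.

```python
from enum import Enum, auto


class State(Enum):
    UNSCHEDULED = auto()
    RUNNING = auto()
    SUSPENDED = auto()
    SCHEDULED = auto()
    TERMINATED = auto()


# Transitions shared by short and long continuous events.
_CONTINUOUS = {
    (State.UNSCHEDULED, "schedule"): State.RUNNING,
    (State.RUNNING, "preempt"): State.SUSPENDED,
    (State.SUSPENDED, "resume"): State.RUNNING,
    (State.RUNNING, "proposition_false"): State.TERMINATED,
}

TRANSITIONS = {
    # An instant event runs within one cycle and is then recorded as Scheduled.
    "instant": {(State.UNSCHEDULED, "schedule"): State.SCHEDULED},
    # A short continuous event can additionally complete on its own.
    "short": {**_CONTINUOUS, (State.RUNNING, "complete"): State.SCHEDULED},
    # A long continuous event has no Scheduled state.
    "long": dict(_CONTINUOUS),
}


def next_state(kind, state, trigger):
    """Look up the successor state; reject transitions the text does not describe."""
    try:
        return TRANSITIONS[kind][(state, trigger)]
    except KeyError:
        raise ValueError(
            f"no transition for {kind} event in {state.name} on {trigger!r}"
        ) from None
```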

6.2. Operating Principle of the Scheduler

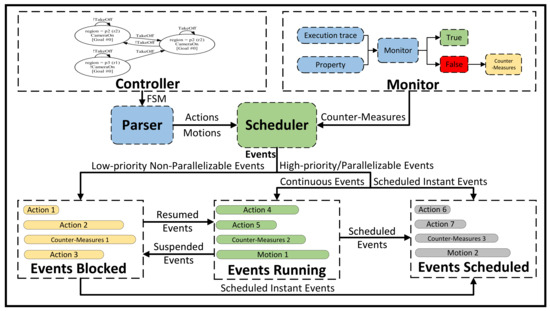

Figure 11 illustrates the scheduler’s operational schematic. Before task execution, the UAV requires user-defined specifications for its controller, including task objectives, safety, and security constraints. General UAV properties (e.g., operational modes, environmental interactions) can also be predefined through specifications.

Figure 11.

Schematic diagram of the scheduler.

These specifications generate monitors, with critical ones (e.g., safety constraints) and other predefined properties (e.g., operational/environmental rules) being sent to the synthesizer alongside UAV specifications for conflict resolution and controller synthesis. Both controller events (via the parser) and monitor events are scheduled by the scheduler to produce the UAV’s task execution and safety/security enforcement.

6.2.1. Implementation of the Parser

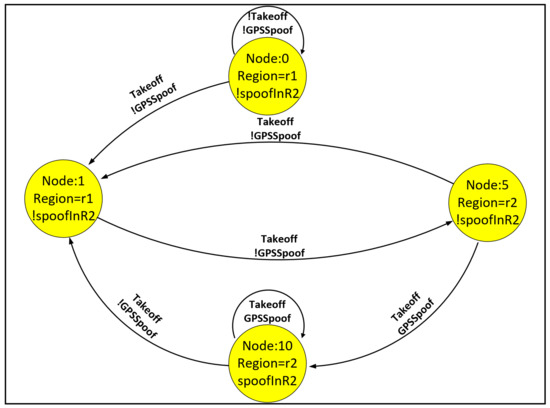

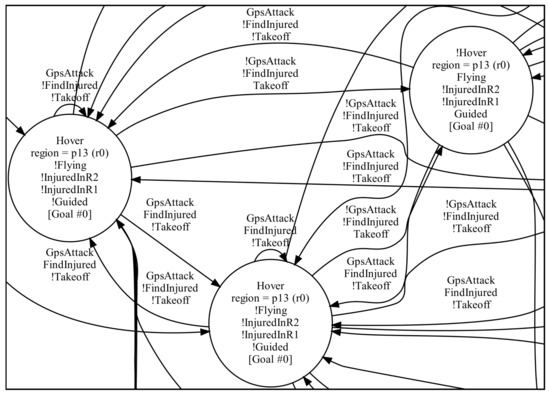

The parser is designed to translate the synthesized FSM of the controller into event flows. Figure 12 illustrates a segment of the FSM, composed of nodes and edges. Nodes represent the UAV’s position and motion states, while edges denote sensor-triggered transitions. By monitoring sensor states, the UAV transitions between states and executes corresponding events. To manage position changes, the external action Move is designed to control UAV movement.

Figure 12.

Demo FSM.

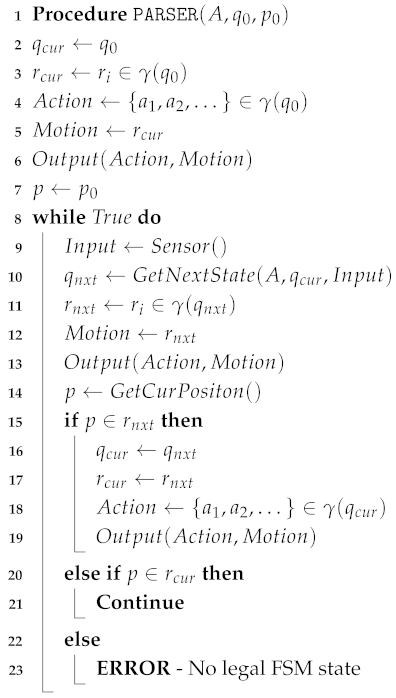

The parser converts the FSM into event flows based on real-time sensor states. Leveraging modifications to the classical algorithm in [25], we propose Algorithm 1 for FSM-to-event-flow parsing, which feeds the scheduler with structured event sequences.

| Algorithm 1: Parsing an FSM into event flow |

|

Initially, the UAV is placed at position (line 1) in region i such that (line 3), where is the initial automaton state satisfying . Furthermore, based on all other propositions , the appropriate UAV actions and motions are output (line 6).

At each step, the UAV senses its environment and determines the values of the binary inputs . Based on these inputs and its current state, it chooses a successor state (line 10) and extracts the next region (line 11), where it should go according to . Then, it outputs the new motion to the scheduler to drive it toward the next region.

If the UAV enters the next region in this step (line 15), the execution changes the current automaton state and region (lines 16–17), extracts the appropriate actions (line 18), and outputs them to the scheduler to activate/deactivate actions (line 19). If the UAV is neither in the current region nor in the next region, which could happen only if the environment violates its assumptions, then the execution is stopped with an error (line 23) [25].

6.2.2. Implementation of the Scheduler

Figure 13 shows the basic structure of the scheduler. The scheduler is composed of five components:

- Parser, which parses the FSM of the Controller into event flows for the scheduler;

- Scheduler, which coordinates actions and motions from the Controller and countermeasures from the Monitor based on priority;

- Events Running, a set recording currently executing events;

- Events Blocked, a set containing unscheduled or suspended events;

- Events Scheduled, a set listing all scheduled events.

Figure 13.

Schematic of scheduler operation.

The Scheduler schedules events and records their states in different event sets based on their priorities and parallelizability. Events with high priority or parallelizable events can run directly. If an event is instant, it transitions from Unscheduled to Scheduled and is added to the scheduled event set. Otherwise, if it is a continuous event, it transitions from Unscheduled to Running and joins the running event set.

Similarly, low-priority non-parallelizable events and suspended events in the running event set are moved to the blocked event set to await scheduling. When events in the blocked event set are scheduled, instant events are added to the scheduled event set, while continuous events are added to the running event set.

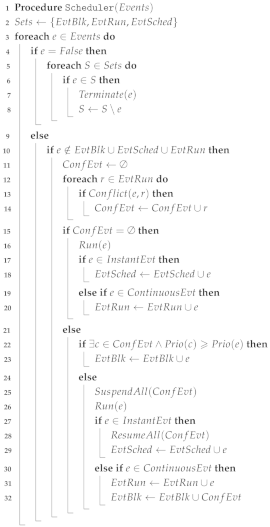

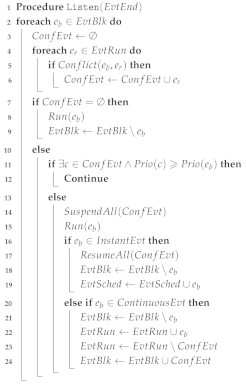

Algorithm 2 shows the processing procedure of non-termination events for the scheduler. The scheduler takes all event states as input. First, it periodically checks the value of each event (line 1). When the value of event e is False, it indicates that the event has not occurred or has finished; thus, the running event is terminated and the event is removed from the event set (lines 3–8). If the value of e is True (line 9), the event e is either occurring or already running. The scheduler then checks for conflicting events with e in the running set (lines 12–14). If no conflicts exist, event e is executed (lines 15–16), with instant events added to the scheduled set and continuous events added to the running set (lines 17–20). If the conflict set is non-empty, the scheduler checks for higher-priority events in the conflict set. If such events exist, e is moved to the blocked set (lines 21–23). Otherwise, all conflicting events are suspended, and e is executed (lines 24–26). For instant events, the scheduler resumes the suspended events and adds e to the scheduled set (lines 27–29). For continuous events, e is added to the running set, and the suspended events are moved to the blocked set (lines 30–32).
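The following sketch mirrors the prose description of the handling of non-termination events; it is not a transcription of Algorithm 2. It assumes each event carries a priority, an instant/continuous flag, and a set of names of events it conflicts with. The class and method names, the example priorities, the hypothetical EnergySave event, and the choice to clear an event from every set when its value is False are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    name: str
    priority: int
    instant: bool
    conflicts: frozenset = frozenset()  # names of non-parallelizable events


@dataclass
class Scheduler:
    running: set = field(default_factory=set)
    blocked: list = field(default_factory=list)
    scheduled: set = field(default_factory=set)

    def handle(self, event, value):
        """Process one event whose proposition currently has the given value."""
        if not value:
            # Not occurring or finished: terminate and forget the event.
            self.running.discard(event)
            self.scheduled.discard(event)
            if event in self.blocked:
                self.blocked.remove(event)
            return
        if event in self.running or event in self.scheduled or event in self.blocked:
            return  # already handled in an earlier cycle
        conflicts = {r for r in self.running
                     if r.name in event.conflicts or event.name in r.conflicts}
        if any(c.priority > event.priority for c in conflicts):
            self.blocked.append(event)  # wait for the higher-priority event
            return
        if event.instant:
            # Conflicting events are suspended only while the instant event
            # runs and resume immediately, so the running set is unchanged.
            self.scheduled.add(event)
        else:
            # Suspended conflicts wait in the blocked set.
            self.running -= conflicts
            self.blocked.extend(sorted(conflicts, key=lambda c: -c.priority))
            self.running.add(event)


# Illustrative run (priorities are assumed values).
move = Event("Move", 5, False, frozenset({"ObstacleAvoid", "Hover"}))
avoid = Event("ObstacleAvoid", 8, True)
hover = Event("Hover", 5, False)
save = Event("EnergySave", 2, False, frozenset({"Move"}))

s = Scheduler()
s.handle(move, True)   # runs directly
s.handle(save, True)   # blocked by higher-priority Move
s.handle(avoid, True)  # instant event preempts Move briefly
s.handle(hover, True)  # equal priority: Move is suspended, Hover runs
```

Note that, following the text, an event is blocked only by strictly higher-priority conflicts, so an equal-priority event such as Hover preempts Move in this sketch.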

Events in the blocked set monitor the status of events in the running event set to determine their activation timing. Algorithm 3 illustrates this monitoring mechanism. At the end of each event, a completion signal is sent, serving as input to the algorithm (line 1). To optimize efficiency, events in the blocked set are ordered by descending priority and insertion time; higher-priority events added earlier are placed at the front of the queue. For each blocked event , the algorithm traverses the running set to identify non-parallelizable conflicts confEVT (lines 4–6). If confEVT is empty, is executed and removed from the blocked set (lines 7–9). If conflicts exist, the algorithm checks for higher-priority events in confEVT (lines 10–12). If such events do not exist, all conflicting events are suspended, and is executed (lines 13–15). For instant events , the algorithm resumes suspended conflicts, removes from the blocked set, and schedules it (lines 16–19). For continuous events, is moved to the running set, while conflicting events are added to the blocked set (lines 20–24).

Short continuous events terminate automatically upon completion, transitioning from the running to scheduled set (this process is omitted here for simplicity).

The handling of termination events is similar to that of non-termination events. The key difference lies in the input: termination events do not require interaction with the controller’s FSM (as terminating all low-priority scheduling tasks inherently halts their execution), so the input solely comprises countermeasures from the monitor. This process is straightforward and omitted here for brevity.

| Algorithm 2: Handling of non-termination events in Scheduler |

|

| Algorithm 3: Listening process of the blocked events |

|

6.3. Task Generation with Metrics

In UAS task design, goal specifications are the core element. Our UAV task design is based on the GR(1) specification, in which the system goal always requires at least one goal specification. When the system goal contains multiple goal specifications, these goals are fulfilled in turn, infinitely often (when they can be fulfilled). In practice, however, the limited resources of a UAV mean that goals can seldom be fulfilled infinitely often; many goals need to be fulfilled only once or a finite number of times. To solve this problem, we propose two methods, based on specification generation and re-synthesis.

Due to the complexity of the GR(1) specification, it is difficult to put forward a general method to solve the problem of task generation with metrics without limiting the expressivity of the GR(1) specification. Therefore, we design a regular expression for the system goal specification with the metric of fulfilment times, i.e., the expression indicates that will eventually be executed when are True. In this expression, is a composition of environment/system propositions and symbols (¬, ∧, ∨, →, ↔, and ), and is a composition of system propositions and symbols (¬, ∧, ∨, →, ↔, and ). For example, the task “When UAV takes off, goes to region R2 or R3” can be expressed as . According to our experience, there does not seem to be a significant loss in expressivity as most UAV task specifications that we encountered can be either directly expressed or translated to this format.

Under normal conditions, an action/motion whose value is True is being executed, and one whose value is False is not. Based on the concept of “Counters” introduced in [34] for GR(1) specifications, we give two methods to generate counter variables that track the number of occurrences of an action/motion and thereby calculate its execution times. If the fulfilment times of an action exceed 1, we need to count the number of its transitions from True to False. If the fulfilment times are 1, we only need to test whether the value of the action is True.
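The counting rule can be illustrated on a recorded trace of an action's Boolean value. The sketch below is a plain offline counter rather than a GR(1) encoding, and the function names are assumptions.

```python
def count_completed_executions(trace):
    """Count True -> False transitions in a sequence of Boolean values."""
    count = 0
    previous = False
    for value in trace:
        if previous and not value:
            count += 1  # the action just finished one execution
        previous = value
    return count


def fulfilled_once(trace):
    """For a fulfilment count of 1, it suffices that the value was ever True."""
    return any(trace)


print(count_completed_executions([False, True, True, False, True, False]))  # 2
print(count_completed_executions([False, True, True, True]))                # 0
```

The second call shows why a value that stays True cannot be counted by transitions alone: no True-to-False change is ever observed.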

6.3.1. Task Generation with Metrics Based on Specification Generation

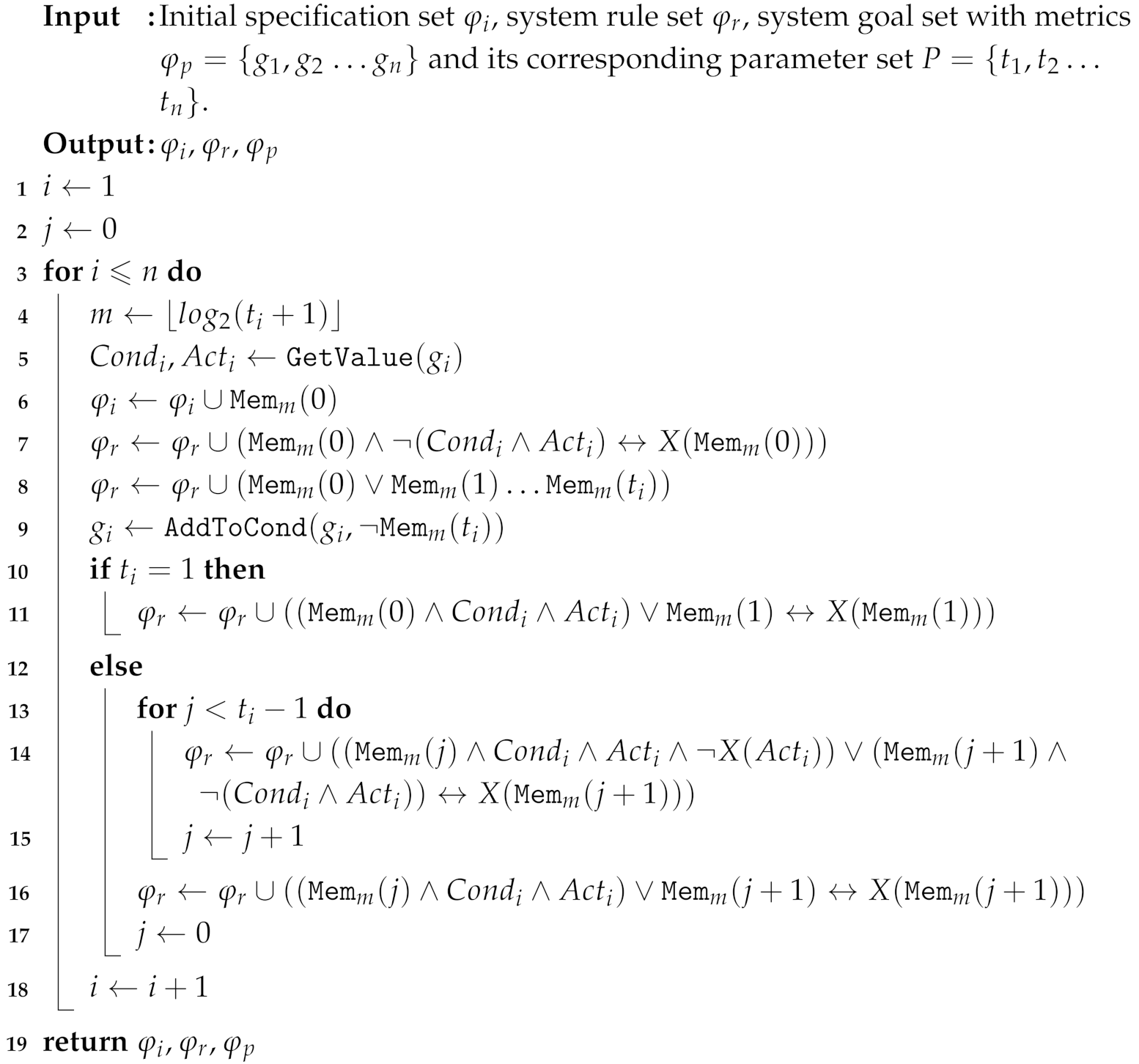

Based on our regular expression, we design Algorithm 4, which automatically generates auxiliary specifications that record the execution times of goal specifications with metrics.

We use an example to illustrate the basic idea of this method:

Example 2.

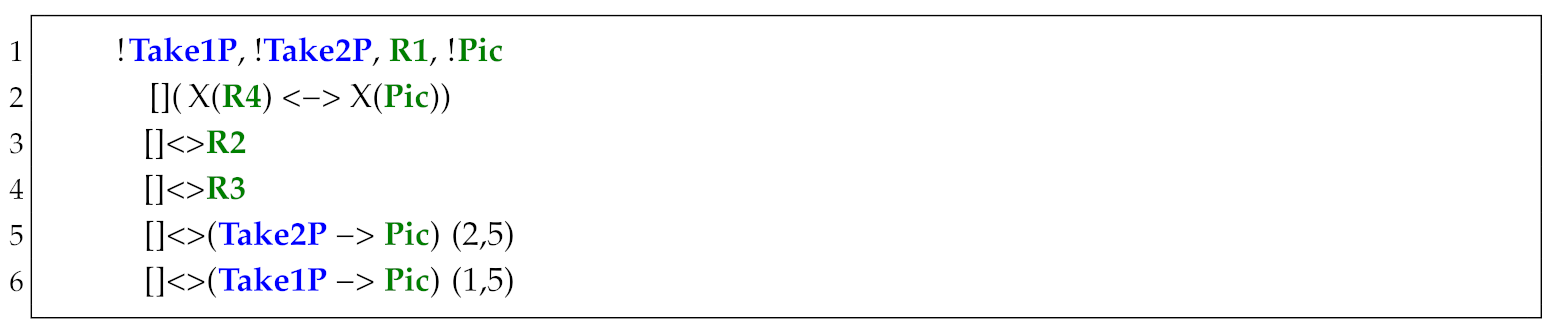

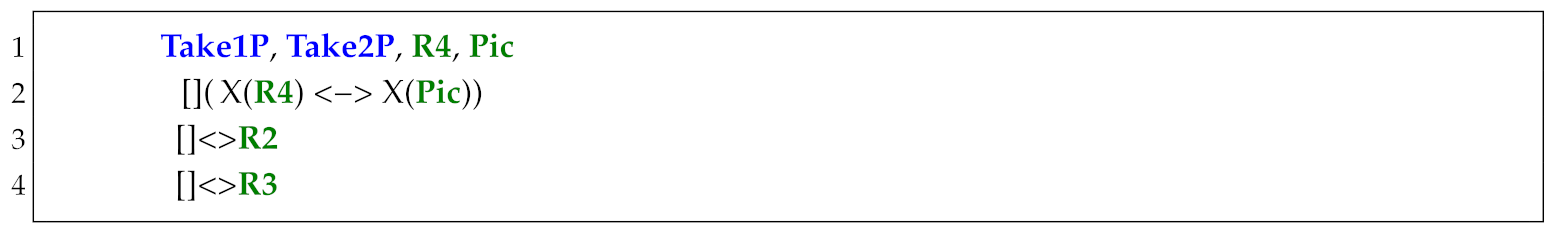

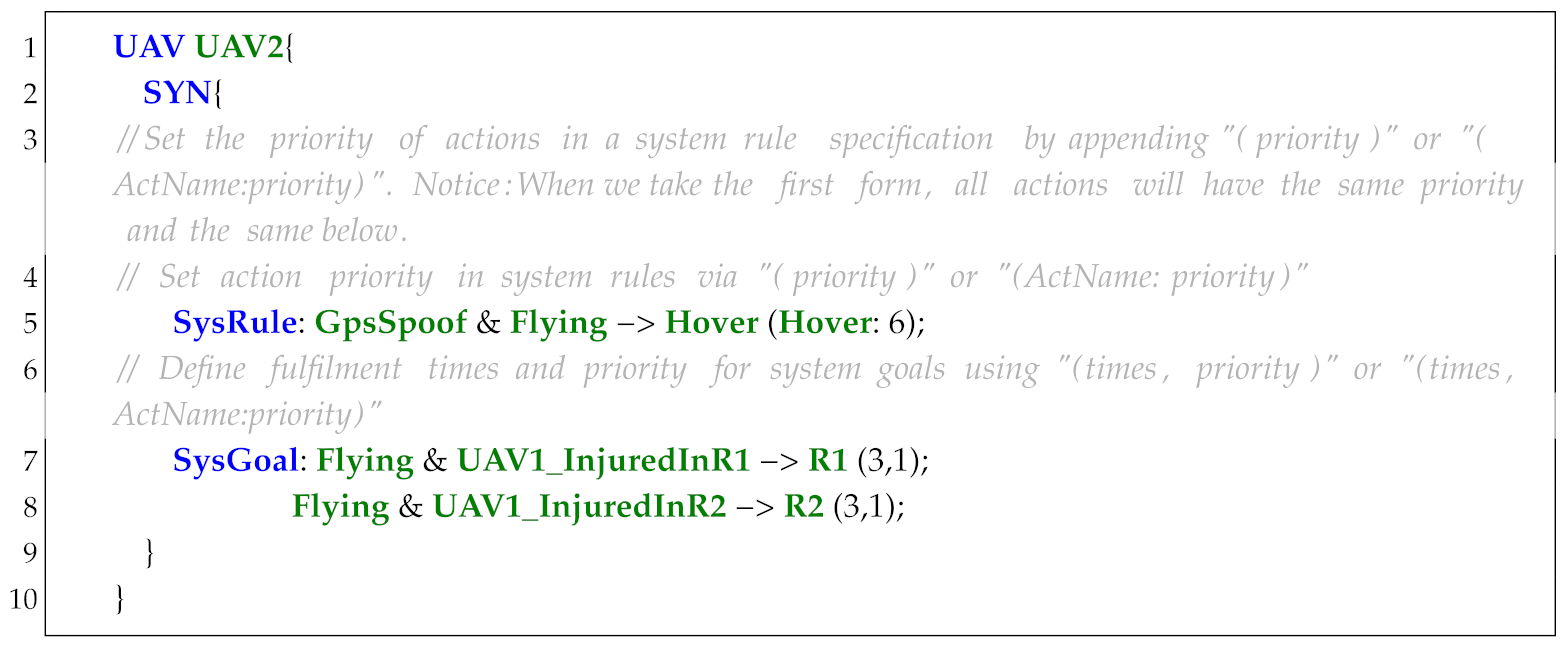

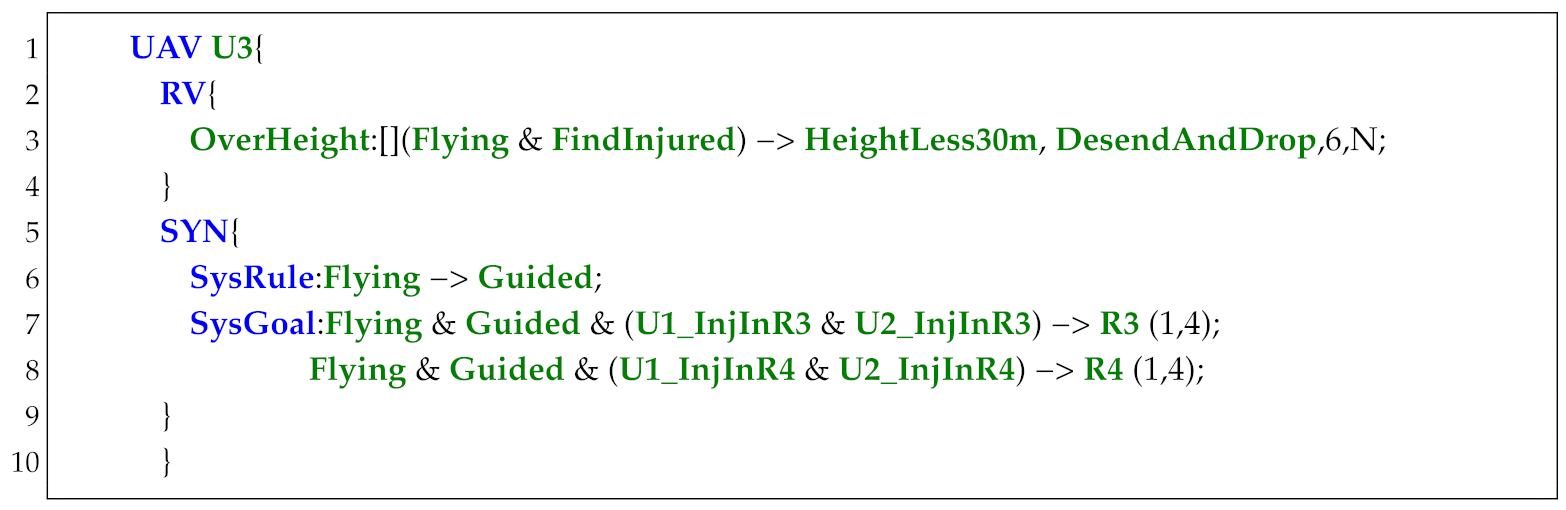

In Figure 14, the UAV takes off from R1 and patrols R2 and R3 infinitely often. The UAV can take 1 picture each time when visiting R4. When instructions Take1P or Take2P are , UAV should take 1 or 2 pictures from R4. The original specification is shown below, where "(2,5)" denotes the action Pic’s fulfillment times is 2 and priority level is 5 (Listing 2).

Figure 14.

Demo map.

| Listing 2. Original specification with parameters. |

|

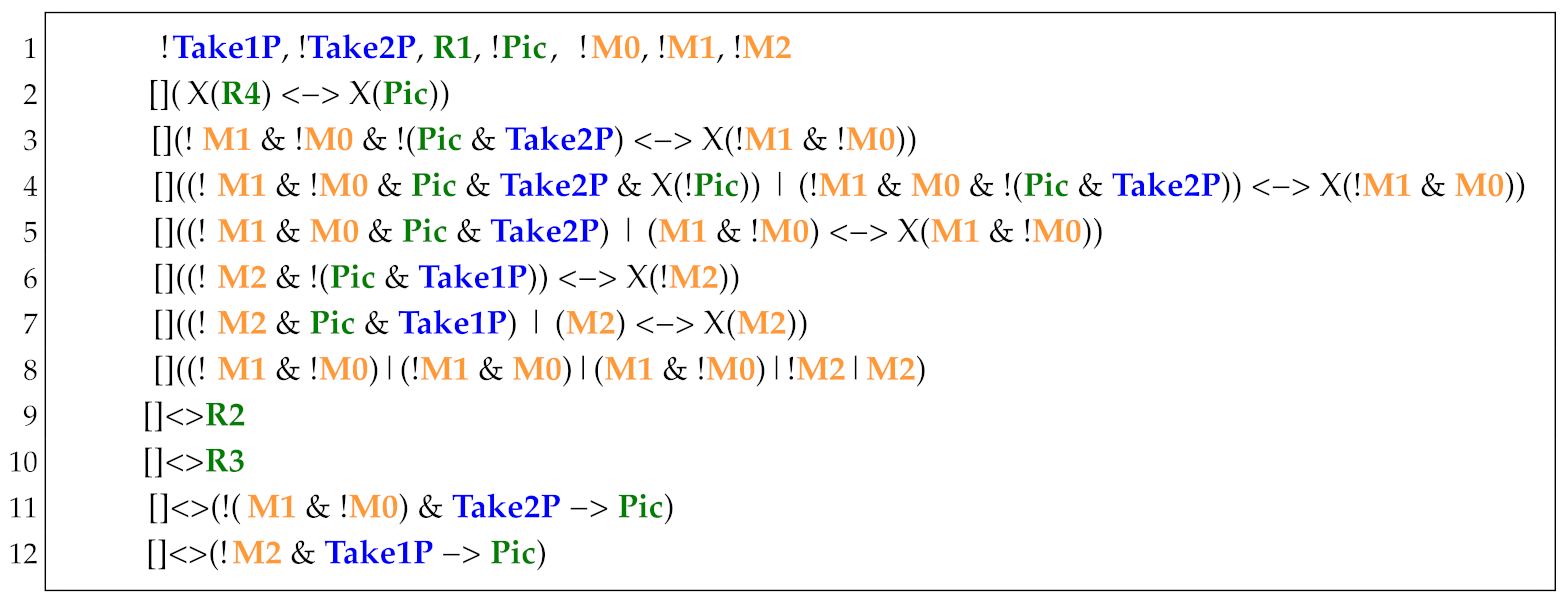

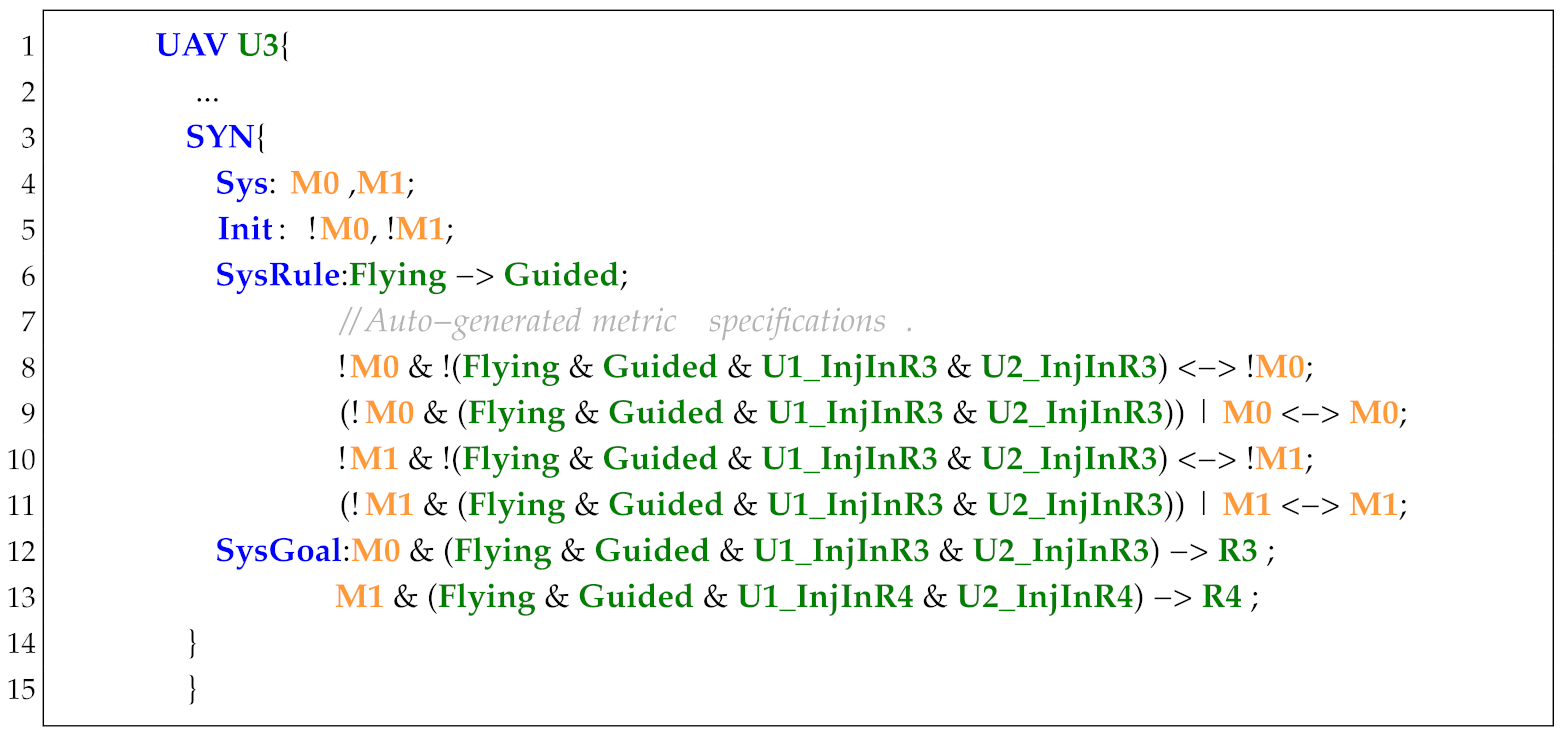

To solve the problem of task generation with metrics, we can generate the specification as shown in Listing 3. The added propositions M0, M1, and M2 are used to record the fulfillment times of the system goal specifications with metrics and to deactivate these goals when they are fulfilled for certain times. The specification contains added propositions that are generated by the auxiliary specification.

| Listing 3. New specification with generated specifications. |

|

| Algorithm 4: Specification generation algorithm |

|

To obtain these generated specifications, in Algorithm 4, we first calculate the number of memory propositions (line 4)—m memory propositions can store at most fulfilment times. Thus, the minimal value of m is .

Then, we extract and from each goal by using function (line 5). In line 6, we add the memory propositions with initial value 0 to ; function can generate m propositions with the value of n (e.g., in Listing 3, : , : ).
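As a hedged illustration of the counting capacity of memory propositions, the sketch below assumes a plain binary encoding in which m propositions represent the values 0 to 2^m − 1, so that a goal with metric n needs the smallest m satisfying 2^m − 1 ≥ n. The encoding, the bit order (index 0 as the least significant bit), and the function names are assumptions of this sketch and may differ from Algorithm 4.

```python
def memory_bits(n):
    """Smallest m such that m Boolean propositions can count from 0 up to n."""
    if n < 1:
        raise ValueError("the fulfilment count must be at least 1")
    return n.bit_length()  # equals ceil(log2(n + 1)) for n >= 1


def encode(value, m, prefix="M"):
    """Truth assignment of m memory propositions representing value in binary."""
    if not 0 <= value < 2 ** m:
        raise ValueError("value does not fit into m propositions")
    return {f"{prefix}{i}": bool((value >> i) & 1) for i in range(m)}


print(memory_bits(2))  # 2
print(memory_bits(1))  # 1
print(encode(2, 2))    # {'M0': False, 'M1': True}
```

Under this assumption, a goal with metric 2 needs two propositions and a goal with metric 1 needs one.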

In lines 7–8, we add to specifications that:

- Memory proposition values remain False when conditions/actions are False (line 7).

- Limit the range of values of memory propositions (line 8).

In line 9, we replace the of with the conjunction of the original conditions and using .

By checking the truth table of the generated specification, we find that when is True ( is not fulfilled for times), the value matches the original specification. When is False ( is fulfilled for times), the value of the generated specification becomes always True (i.e., this goal specification is deactivated).

In lines 11 to 22, we add some specifications that can record the variations in .

For (lines 11–13), we test if . If satisfied, the memory proposition changes from 0 to 1 and remains constant.

For (line 14), we verify if one of the following conditions is satisfied:

- Variation occurs: Values of (j) and are True in the current state and will be True in the next state.

- Condition remains: Values of and .

When satisfied, increments to or remains constant at .

The specification (line 16) is similar to the specification in line 14, with the key difference being the last memory value tracking of only requires rather than variation of from True to False in the next state.

In Listing 3, specifications in lines 3–5 and lines 6–7 are generated to record the fulfillment times of the goal in lines 11 and 12, respectively. By adding these specifications, when we give instructions Take2P or Take1P to UAV, it can go to R4 to take two or one pictures and then patrol R2 and R3 infinitely often.

6.3.2. Task Generation with Metrics Based on Re-synthesis

Because the specification-generation method adds memory propositions, which increases controller complexity and may cause synthesis to fail, we propose an alternative. It relies on the observation that removing system goals from a specification does not change its synthesizability: we use an external counter to record the metric n of each system goal, remove the goals that have been fulfilled, and re-synthesize a new controller until all goals with metrics are fulfilled. This approach avoids adding memory propositions to the original specification through three key steps:

- Step 1. During controller operation, maintain a counter that decreases by when state changes under , until (indicating the goal is fulfilled n times);

- Step 2. Remove the fulfilled goal specification, refresh the initial states with the current states, then re-synthesize the modified specification to obtain a new controller;

- Step 3. Iterate Steps 1–2 until all system goal specifications with metrics are removed.

We show the basic procedure of this method in Algorithm 5. Algorithm 5 requires the specifications S, synthesized FSM A of S, FSM A’s transition function , FSM state Q, system goal set , metric goal set , corresponding parameter set , and memory state set . The memory state set M is used to store the occurrence state of .

In lines 5–6, we extract and from each goal by using the function GetValue. In lines 7–8, if the fulfilment times of the current goal is 1, we test whether the propositions in state Q satisfy via IsTrue. If True, the fulfilment times are reset to 0.

In lines 10–16, if the fulfilment times of exceed 1, we check if Q satisfies . If True, is considered to have occurred under , and is set to 1; otherwise, if has occurred, its fulfilment times decrease by 1 and is set to 0.

In lines 17–25, we first check if any counter in P reaches 0 (indicating eliminable goals). For each such case, we remove the corresponding elements from , M, S, and P (lines 18–23), then refresh the initial specifications of S using the current state Q via RefreshInit (line 24), and finally, re-synthesize the modified S to generate a new FSM A (line 25). Finally, the state Q is updated via the transition function (lines 26–27).
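The counter logic described above can be sketched outside the synthesizer. The version below simplifies each goal to a single action proposition and takes the re-synthesis step as a caller-supplied callback, since the actual synthesizer interface is not specified here; MetricGoal, update_goal, monitor_step, and the callback signature are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MetricGoal:
    action: str             # proposition whose occurrences are counted
    remaining: int          # fulfilment times still required
    occurred: bool = False  # memory state: action seen True, not yet counted


def update_goal(goal, state):
    """Update one goal from the current state; return True once it is fulfilled."""
    active = state.get(goal.action, False)
    if goal.remaining == 1:
        if active:
            goal.remaining = 0        # the last occurrence only needs to be True
    elif goal.remaining > 1:
        if active:
            goal.occurred = True      # remember that the action is executing
        elif goal.occurred:
            goal.remaining -= 1       # a True -> False change completes one occurrence
            goal.occurred = False
    return goal.remaining == 0


def monitor_step(goals, state, resynthesize):
    """Process one controller state; re-synthesize when a goal is fulfilled."""
    fulfilled = []
    for goal in goals:
        if update_goal(goal, state):
            fulfilled.append(goal)
    if fulfilled:
        goals[:] = [g for g in goals if g.remaining > 0]  # drop fulfilled goals
        resynthesize(goals, state)  # new controller starting from the current state
    return fulfilled


# Example: Pic must be fulfilled twice.
calls = []
goals = [MetricGoal("Pic", 2)]
for state in ({"Pic": True}, {"Pic": False}, {"Pic": True}):
    monitor_step(goals, state, lambda g, s: calls.append(dict(s)))
print(len(calls), goals)  # 1 []
```

In this run the callback fires once, on the second occurrence of Pic, after which no goals with metrics remain.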

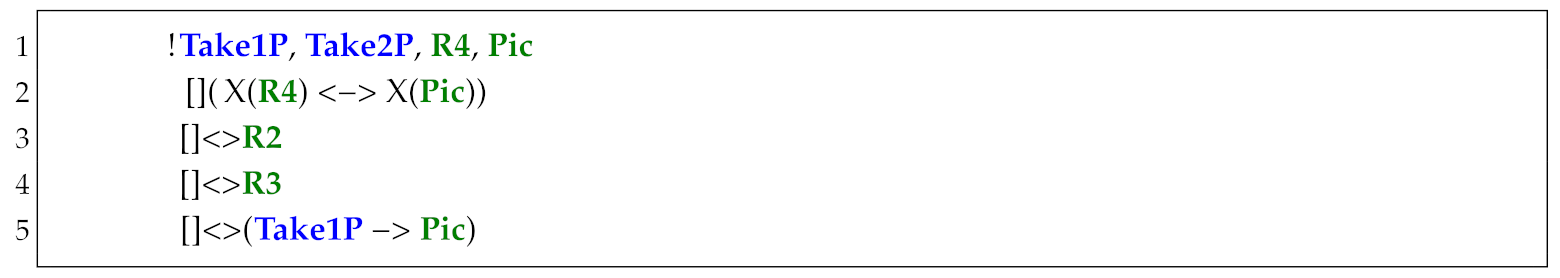

We use the specification in Listing 2 as an example. When first sending the instruction Take2P to the UAV, the UAV will visit R4 twice to capture two pictures while patrolling R2 and R3. During the second visit to R4, the value of action Pic becomes True, and the corresponding counter for goal Take2P decrements to 0 (indicating goal fulfillment). This triggers re-synthesis of a new controller by removing the specification for Take2P and updating the initial specification with the current state, as shown in Listing 4.

| Algorithm 5: Re-synthesis algorithm |

|

| Listing 4. The specification to re-synthesize when goal Take2P is fulfilled. |

|

Subsequently, after sending the instruction Take1P, the UAV visits R4 once to capture one picture, followed by another re-synthesis (specification in Listing 5). Table 11 demonstrates the reduction in FSM size after two re-synthesis processes, attributable to the elimination of fulfilled goal specifications.

Table 11.

The controller size after using the re-synthesis method.

| Listing 5. The specification to re-synthesize when goal Take1P is fulfilled. |

|

6.3.3. Advantages and Disadvantages of the Two Methods

As shown in Table 12, both methods exhibit distinct advantages and limitations.

Table 12.

Advantages and disadvantages of the two methods.

The method based on specification-generation is suitable for scenarios with limited parameters and restricted parameter value ranges. This approach requires only a single synthesis iteration, which produces a more complex automaton structure while simplifying the scheduling process, albeit at the potential cost of higher computational resource consumption.

In contrast, the re-synthesis-based method imposes fewer constraints on both parameter quantity and value ranges. However, this flexibility necessitates multiple synthesis iterations and a more complex scheduling process.

6.3.4. Limitations

Our methods address the finite execution of goal specifications via regular expressions, yet three key limitations persist in practical design:

Limitation 1: Uncountable fulfilment times. To count the occurrence of some Actions/Motions, we need to detect transitions of Actions/Motions values from True to False. However, if certain Actions/Motions remain persistently True while their fulfilment times exceed 1, counting failures occur. This problem arises when system goals contain only goals with metrics, and other completed goals prevent the last one from being countable (e.g., values remain True indefinitely).

A naive solution involves adding specifications like to force state transitions. While synthesis of such specifications may enable counting through cyclic behavior (e.g., moving between regions in Figure 14), it risks triggering unintended Actions/Motions. For instance, suppose a UAV is designed to take off from R1 and visit R2 twice; the original system goal specification is uncountable (the UAV takes off from R1 and then stays in R2 indefinitely). Adding a goal specification to force the UAV to leave makes this goal countable, but it introduces unnecessary complexity and unreasonable behaviors. Fundamentally, unreasonable fulfilment time assignments exacerbate this issue. A pragmatic approach is to terminate tasks when solitary goal actions with metrics stagnate in True states, rather than awaiting improbable transitions.
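The counting problem in Limitation 1 can be made concrete with a small sketch. It assumes, as stated above, that one fulfilment is registered whenever an Action/Motion value changes from True to False; the function name `count_completions` and the list-of-Booleans trace format are illustrative assumptions.

```python
from typing import Iterable


def count_completions(trace: Iterable[bool]) -> int:
    """Count True -> False transitions in a Boolean trace of one Action/Motion."""
    count = 0
    previous = False
    for value in trace:
        if previous and not value:
            count += 1  # a falling edge marks one completed occurrence
        previous = value
    return count


# The UAV enters and leaves the region twice: both visits are counted.
visits = count_completions([False, True, False, True, False])

# The UAV enters the region and stays there: no falling edge ever occurs,
# so the occurrence cannot be counted (the situation in Limitation 1).
stuck = count_completions([False, True, True, True])
```

In the second trace the counter never advances, which is why terminating the task is more practical than waiting for a transition that may never happen.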

Limitation 2: Strict fulfilment times. When setting the fulfilment times of a goal to n, our methods guarantee that its Actions/Motions will execute at least n times if conditions are satisfied. However, we cannot ensure execution occurs exactly n times, primarily due to the following:

- Propositions of may appear in other specifications, potentially altering their values

- Infeasibility of strict finite fulfilment for certain goals (e.g., in Figure 14, a UAV required to visit regions R1 and R3 four times cannot simultaneously visit R2 exactly twice)

For the first case, the specification generation method can be augmented with:

If synthesizable, this enforces precise n-time execution.

For the second case, similar specifications like may cause unsynthesizability; thus, assigning rational metrics may ease this problem.

Limitation 3: Conditional Dependency Problem. When the Condition of goal specification B depends on the Actions/Motions of goal specification A (i.e., propositions in B’s condition also exist in A’s action set), if goal A’s fulfilment times m are less than goal B’s times n, this may prevent B from being fulfilled n times.

To mitigate this:

- Avoid propositional dependencies through modular specification design

- Enforce the constraint m ≥ n for dependent goals

- Implement dependency checking via:

For instance, if UAV’s goal B (n = 5) depends on goal A (m = 3), either increase m or decouple the dependencies.
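One possible way to automate such a dependency check is sketched below. This is an illustration rather than the check used in the paper: the `MetricGoal` record and its field names are hypothetical, and a dependency is assumed to exist whenever a proposition in B's condition also appears in A's action set.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class MetricGoal:
    """Hypothetical record for a goal specification with a metric."""
    name: str
    condition_props: FrozenSet[str]  # propositions used in the Condition
    action_props: FrozenSet[str]     # propositions used in the Actions/Motions
    times: int                       # required fulfilment times


def dependency_violations(goals: List[MetricGoal]) -> List[Tuple[str, str]]:
    """Return (A, B) pairs where B depends on A but A has fewer fulfilment times."""
    violations = []
    for a in goals:
        for b in goals:
            if a is b:
                continue
            depends = bool(b.condition_props & a.action_props)
            if depends and a.times < b.times:
                violations.append((a.name, b.name))
    return violations


goal_a = MetricGoal("A", frozenset(), frozenset({"Pic"}), times=3)
goal_b = MetricGoal("B", frozenset({"Pic"}), frozenset({"Alarm"}), times=5)
report = dependency_violations([goal_a, goal_b])  # A (m = 3) cannot support B (n = 5)
```

A non-empty report signals that either m should be increased or the shared proposition should be removed from B's condition.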

7. Modeling and Description of UAS

7.1. UAS Modeling

The purpose of synthesis is to construct a reactive controller for the UAV, i.e., generating execution trajectories satisfying given temporal specifications. To achieve this, we formalize a system model comprising UAVs and their environment through GR(1) game theory.

Consider a multi-UAV system with agents . For any UAV , other UAVs are treated as its dynamic environmental components, which means that each UAV can obtain dynamic information from both the environment and other UAVs. We then construct the Position, Action, and Sensor models for the multi-UAV system as follows (see Table 13) [5]:

Table 13.

Propositions set for system model.

Position Model. We assume the workspace is a planar polygon, denoted by a position coordinate set Z partitioned into finite convex polygonal zones , where for and . The Boolean proposition is True if UAV is in zone . These propositions are mutually exclusive; that is, exactly one element in is True at any step.
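The mutual exclusion constraint of the Position Model can be checked at runtime with a few lines of Python. The sketch assumes that the zone propositions of one UAV are available as a dictionary from zone name to Boolean value; the function name and zone names are hypothetical.

```python
from typing import Dict


def exactly_one_zone(zone_props: Dict[str, bool]) -> bool:
    """True if exactly one zone proposition of a UAV holds at this step."""
    return sum(1 for value in zone_props.values() if value) == 1


valid = exactly_one_zone({"R1": False, "R2": True, "R3": False})   # UAV is in R2 only
invalid = exactly_one_zone({"R1": True, "R2": True, "R3": False})  # two zones at once
```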

Action Model. UAVs must execute action sequences to satisfy system functionalities, including “patrolling”, “target navigation”, and “alarm triggering”. These actions are denoted by , and is True if UAV executes action such as “patrolling”. Here, we define as the behaviors set of .

Sensor Model. The environment is perceived through UAV sensors (e.g., GPS, LiDAR). We abstract the sensors as a set of binary environment propositions . UAV can receive data from sensor , which holds True if it is triggered (e.g., LiDAR senses surrounding obstacles).

In summary, we define , , and as the sets of propositions , , and , respectively, as well as . Based on and , the multi-UAV system model can be given with the sets of behaviors and sensors.

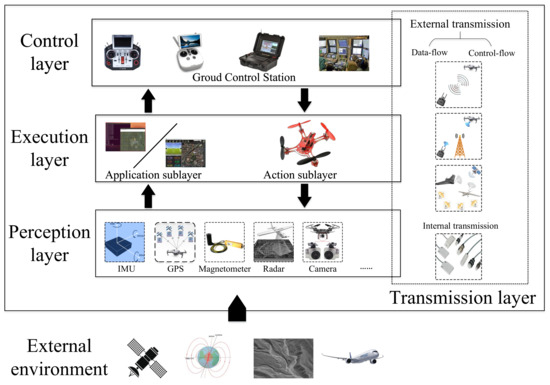

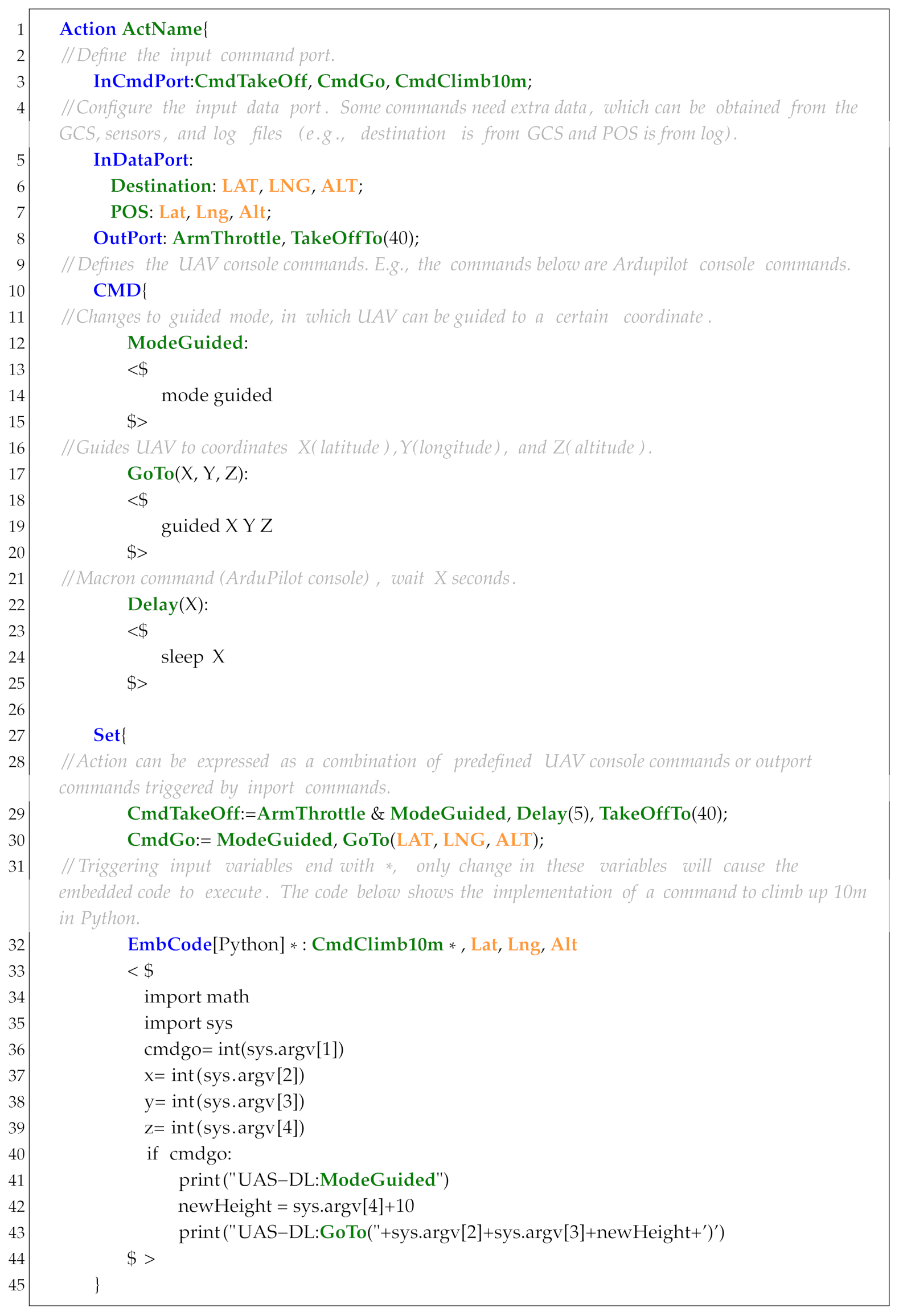

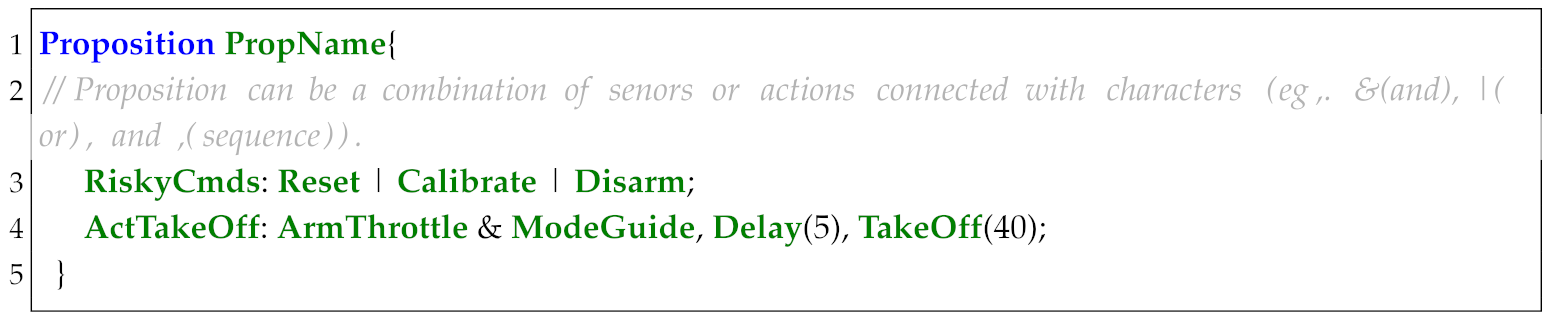

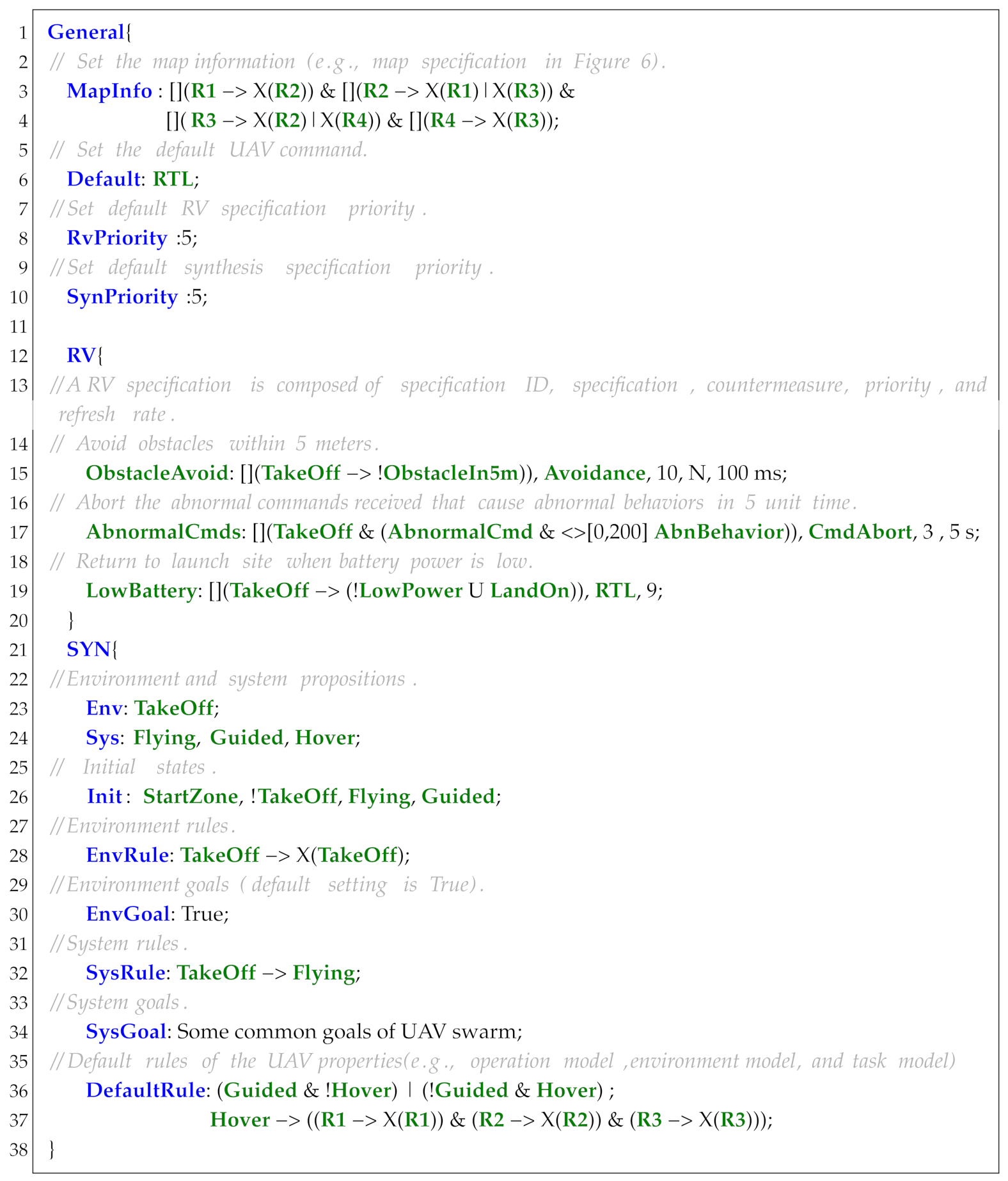

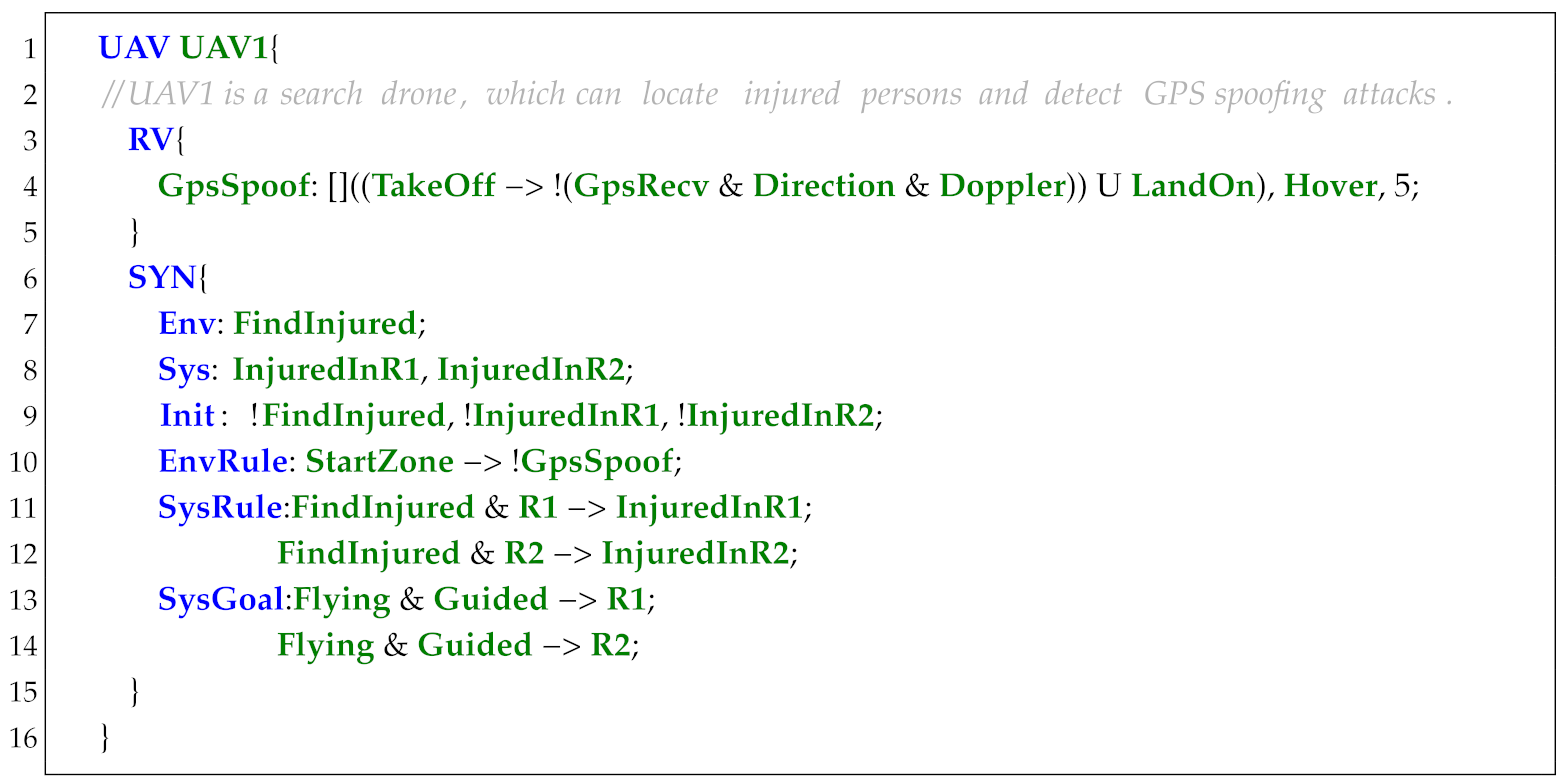

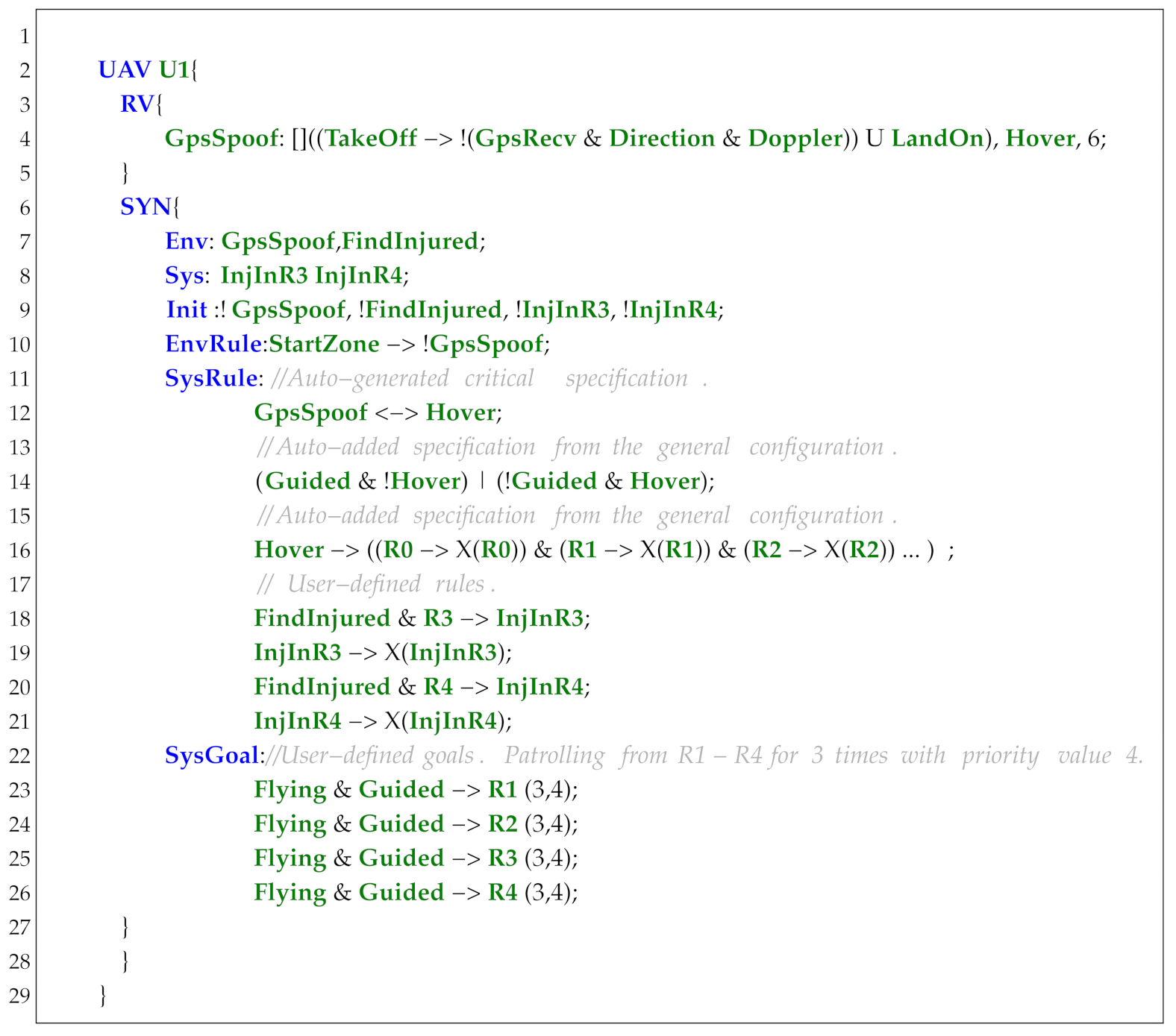

7.2. Unmanned Aircraft System Description Language

To help users flexibly configure hardware and software resources and design the UAS properties and tasks of a multi-UAV system, we borrowed the concept of domain-specific languages (DSLs) and designed UAS-DL on top of our framework and UAS model.

Figure 15 illustrates the architecture of UAS-DL. A core feature lies in its capability to abstractly model heterogeneous UAS hardware/software resources as atomic propositions, which are directly applicable to the formal verification of UAS properties and task specifications.

Figure 15.

Architecture of UAS-DL.

The UAS-DL framework comprises four core components:

- Sensor: Sensor information extracted from APIs of simulation platforms (e.g., ArduPilot and ROS)

- Action: Actuator commands derived through Sensor data processing

- Proposition: Propositional logic composed of the propositions defined in Sensor and Action

- Specification: Specifications describing UAV priorities and tasks in LTL/MTL, containing:

  - RV: Runtime verification monitor specification describing UAV priorities

  - SYN: GR(1) synthesis specification mainly describing UAV tasks

The RV specifications can be converted into monitors in the form of code or FSM, while the SYN specifications can be synthesized into the FSM controller.

The simplified syntax of UAS-DL (Table 14) comprises five core components, which correspond to the four parts in the UAS-DL architecture (among them, the GeneralSetting and the UAVSetting correspond to the Specification):

- Sensor: Sensor definition composed of the keywords “InPort”, “OutPort”, “VAR”, and “Set”

- Action: Action definition composed of the keywords “InCmdport”, “InDataport”, “OutPort”, etc.

- Proposition: Proposition definition composed of propositions defined by Sensor or Action

- GeneralSetting: Global constraints in LTL/MTL specification

- UAVSetting: UAV constraints in LTL/MTL specification

Production rules are printed in bold, and printable contents are enclosed in “quotation marks”. We use words with the suffix ID (e.g., PpID for proposition ID) to denote element names. Multiplicity is defined using the following:

- *: Zero or more repetitions

- +: At least one occurrence

- ?: Optional element (zero or one instance)

To demonstrate UAS-DL syntax, we use the highlighting conventions:

- Sensor, VAR, Set (UAS-DL keywords in blue)

- SenName, POS (user-defined identifiers in green)

- Lat, Lng (keywords predefined in another language, e.g., F Prime Prime (FPP) [40], in orange)

- "// Sensor initialization" (code comments in gray)

In the following sections, we will give several examples to demonstrate the usage of each part of the UAS-DL.

Table 14.

Syntax of UAS-DL.

| UAS-DL syntax: | ||

| UAS-DL | ::= | Sensor Action* Proposition* GeneralSetting UAVSetting+ |

| Basic definitions: | ||

| Number | ::= | (’0’ .. ’9’) + (’.’ (’0’ .. ’9’)+)? |

| ID...PpID, Param, Atomic | ::= | [A-Za-z0-9]+ |

| Message | ::= | [A-Za-z0-9’∼]+ |

| Code | ::= | ∼[]+ |

| Url | ::= | [A-Za-z0-9./]+ |

| Basic syntax: | ||

| TimeInterval | ::= | ’[’ Number ’:’ Number ’]’ |

| Expression | ::= | Expression (’&’ | ’|’ | ’,’) Expression | Expr (’>’ | ’<’ | ’’ | ’’ | ’=’) Expr |

| Expr | ::= | Expr (’*’ | ’/’) Expr | Expr (’+’ | ’−’) Expr | Number | (ID | Message) | ’(’ Expr ’)’ |

| LTL | ::= | "True" | "False" | Atomic | ’(’ LTL ’)’ | LTL (’&’ | ’|’ | ’’ | ’’) LTL | (’!’ | ’∼’) LTL |

| | (’[]’ | ’G’ | ’’ | ’F’ | ’X’ | "Next") LTL | LTL ’U’ LTL | ||

| MTL | ::= | "True" | "False" | Atomic | ’(’ MTL ’)’ | MTL (’&’ | ’|’ | ’’ | ’’) MTL| (’!’ | ’∼’) MTL |

| | (’[]’ | ’G’ | ’’ | ’F’ ) TimeInterval? MTL | (’X’ | "Next") MTL | MTL ’U’ TimeInterval? MTL | ||

| GR1 | ::= | "True" | "False" | Atomic | Shared | ’(’ GR1 ’)’ | GR1 (’&’ | ’|’ | ’’ | ’’) GR1 | (’!’ | ’∼’) GR1 |

| | (’[]’ | ’G’ | ’’ | ’F’ | ’X’ | "Next") GR1 | ||

| SpecParm | ::= | ’(’ Param? (’,’ Param)? | (ParamID:Param (’,’ ParamID:Param)*) ’)’ |

| SpecExp | ::= | GR1SpecParm? |

| Map | ::= | "MapInfo" ’:’ (Url | GR1) ’;’ |

| Default | ::= | "Default" ’:’ ModeID ’;’ |

| RvPriority | ::= | "RvPriority" ’:’ Number ’;’ |

| SYNPriority | ::= | "SynPriority" ’:’ Number ’;’ |

| EnvParms | ::= | "Env" ’:’ Atomic (’,’ Atomic)+ ’;’ |

| SysParms | ::= | "Sys" ’:’ Atomic (’,’ Atomic)+ ’;’ |

| Init | ::= | "Init:" (((’!’ | ’∼’)? Atomic) (’,’ ((’!’ | ’∼’)? Atomic))* SpecParm? ’;’)* |

| EnvRule | ::= | "EnvRule:" (GR1 ’;’)* |

| EnvGoal | ::= | "EnvGoal:" (GR1 ’;’)* |

| SysRule | ::= | "SysRule:" (SpecExp ’;’)* |

| SysGoal | ::= | "SysGoal:" (SpecExp ’;’)* |

| Monitor | ::= | (MonitorID ’:’ (LTL | MTL) (’,’ CounterMeasureID)? (’,’ Number)? (’,’ Number)? |

| (’,’ ’N’|’C’|’T’)? (’,’ Number ’s’|’ms’)?’;’)* | ||

| RV | ::= | "RV" ’{’ Monitor ’}’ |

| SYN | ::= | "SYN" ’{’ Default? EnvParms? SysParms? Init? EnvRule? EnvGoal? SysRule? SysGoal? ’}’ |

| Sensor syntax: | ||

| Sensor | ::= | "Sensor" SenID ’{’ |

| ("InPort" ’:’ InID ’:’LogID (’,’ LogID)* ’;’)? | ||

| "OutPort" ’:’ OutID (’,’ OutID)* ’;’ | ||

| ("VAR" ’:’ VarID (’,’ VarID)* ’;’)? | ||

| "Set" ’{’ (SetID ":=" Expression ’;’ | ’EmbCode’ ’[’ CodeID ’]’ (’*’ | ’+’)? | ||

| ’:’ (LogID(’*’)? (’,’ LogID(’*’)?)*)? "" Code ";")+ ’}’ | ||

| ’}’ | ||

| Action syntax: | ||

| Action | ::= | "Action" ActID ’{’ |

| "InCmdport" ’:’ CmdID (’,’ CmdID)* ’;’ | ||

| ("InDataport" ’:’ (DataID ’:’LogID (’,’ LogID)* ’;’)*)? | ||

| ("OutPort" ’:’ OutID (’,’ OutID)* ’;’)* | ||

| ("VAR" ’:’ VarID (’,’ VarID)* ’;’)? | ||

| ("CMD" ’{’ (CmdID ’:’ " Code ";")* ’}’)* | ||

| "Set" ’{’ (SetID ":=" Expression ’;’ | "EmbCode" ’[’ CodeID ’]’ (’*’ | ’+’)? | ||

| ’:’ (CmdID | LogID)(’*’)? (’,’ (CmdID | LogID)(’*’)?)* "" Code ";")+ ’}’ | ||

| ’}’ | ||

| Proposition syntax: | ||

| Proposition | ::= | "Proposition" PropsID ’{’ (PropID ’:’ PpID ((’,’ | ’&’ | ’|’) PpID)* ’;’)+ ’}’ |

| GeneralSetting syntax: | ||

| GeneralSetting | ::= | "General" ’{’Map Default RvPriority? SYNPriority? RV? SYN? ’}’ |

| UAVSetting syntax: | ||

| UAVSetting | ::= | "UAV" UAVID ’{’ Default? RV? SYN? ’}’ |
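To make the notation in Table 14 more tangible, the following sketch transcribes two of the simplest productions, Number and TimeInterval, into Python regular expressions. It is only an illustration of how the grammar reads, not part of the UAS-DL toolchain; the function name `parse_time_interval` and the tolerance for whitespace around tokens are assumptions.

```python
import re
from typing import Tuple

# Number ::= ('0'..'9')+ ('.' ('0'..'9')+)?
NUMBER = r"[0-9]+(?:\.[0-9]+)?"

# TimeInterval ::= '[' Number ':' Number ']'
TIME_INTERVAL = re.compile(rf"\[\s*({NUMBER})\s*:\s*({NUMBER})\s*\]")


def parse_time_interval(text: str) -> Tuple[float, float]:
    """Parse a TimeInterval such as '[0:5]' into a (lower, upper) pair."""
    match = TIME_INTERVAL.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"not a TimeInterval: {text!r}")
    return float(match.group(1)), float(match.group(2))


bounds = parse_time_interval("[1.5:10]")  # lower and upper bound of an MTL operator
```

Because Number requires at least one digit before the optional fractional part, an input such as `[.5:1]` is rejected.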

7.2.1. Sensor

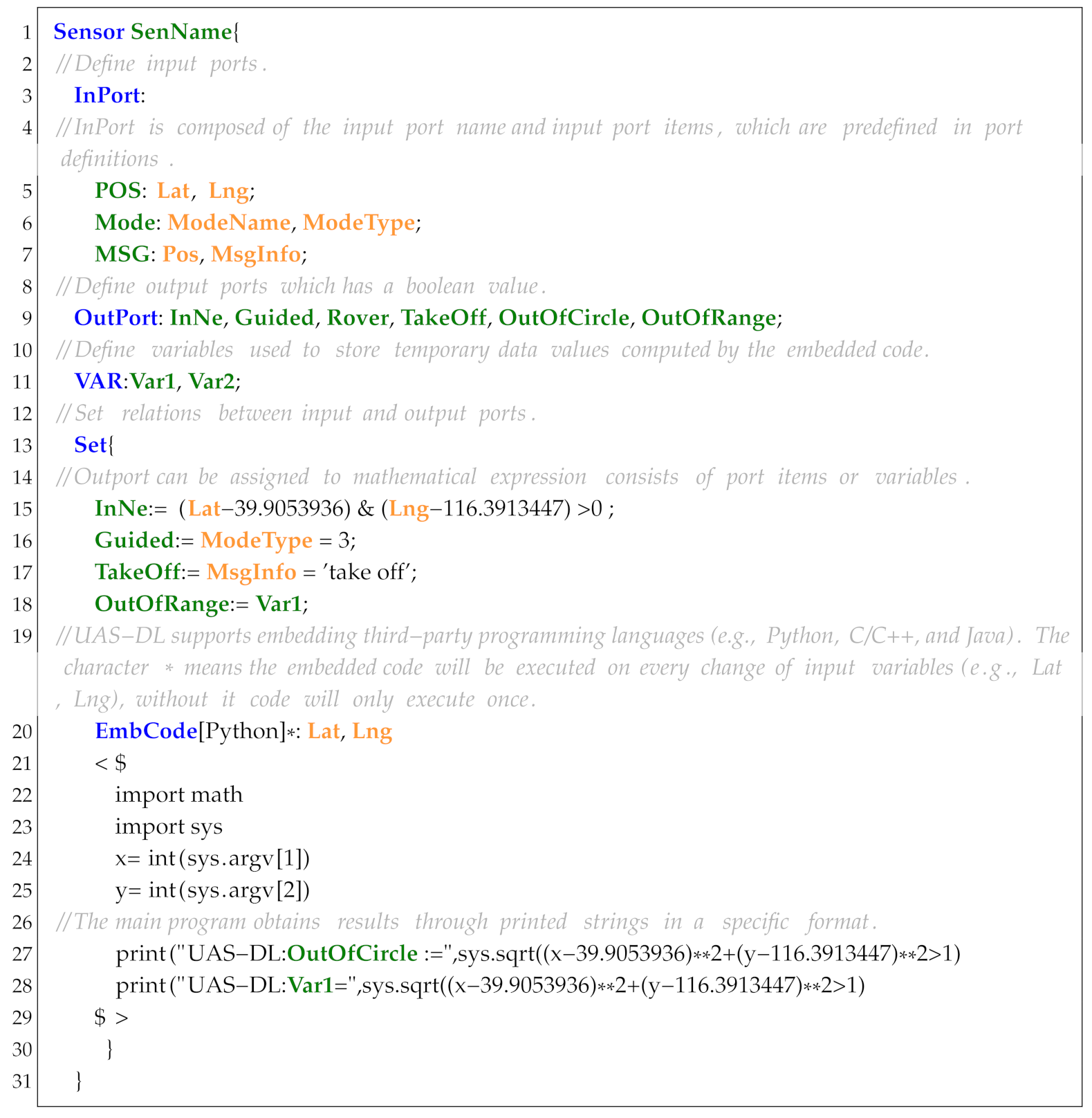

Sensor is designed to process and convert the extracted UAS running trajectories (e.g., UAS logs) into Boolean expressions that can be directly used in propositions and specifications. In order to be compatible with the F’ development framework, the syntax of Sensor is designed to support fast conversion into F’ components. As shown in Listing 6, a sensor starts with the keyword Sensor followed by a sensor name. Additional keywords include InPort, OutPort, VAR, Set, and EmbCode.

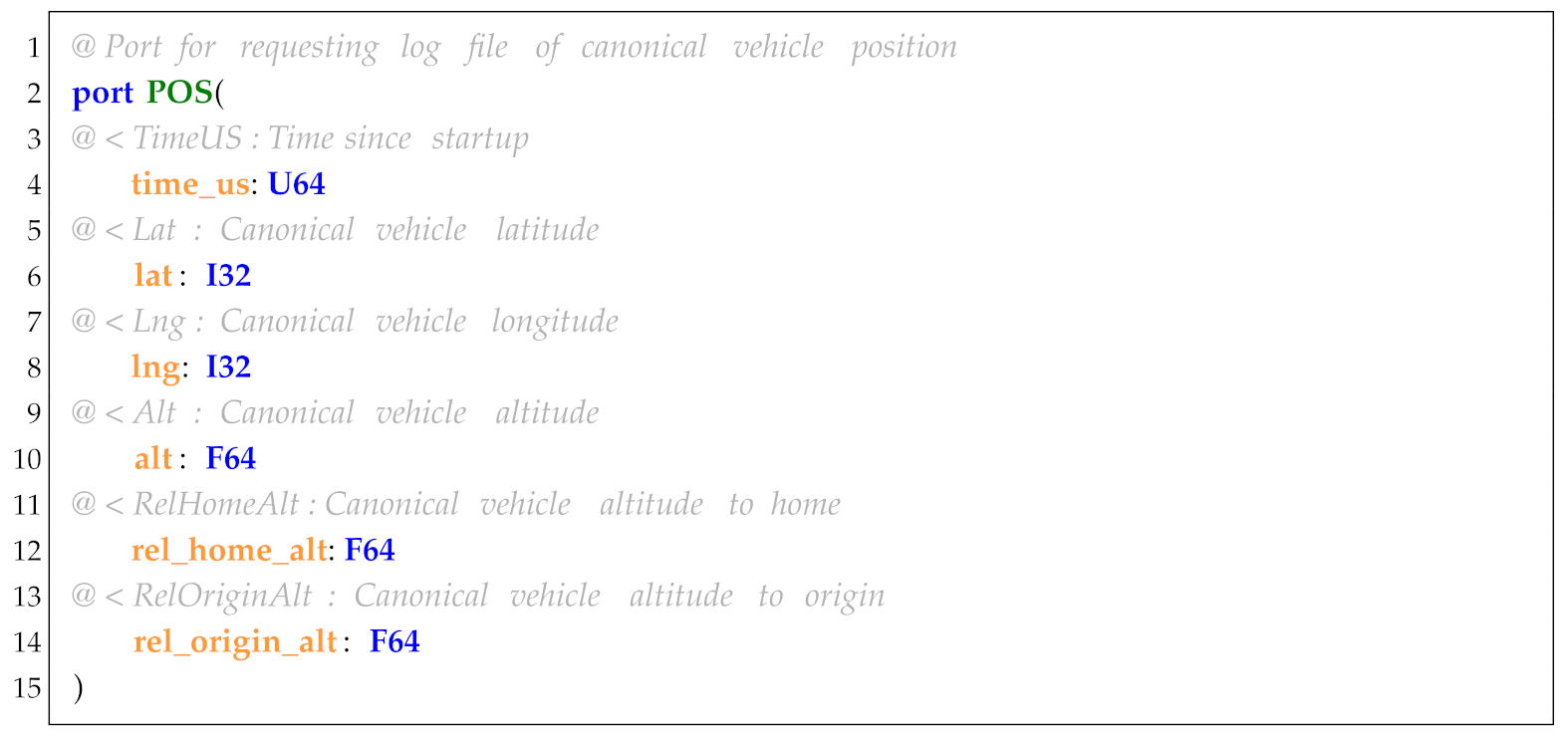

InPort is used to define the input hardware and software resources, which are mainly obtained from the sensors, ROS topics, and UAS logs. For convenience, we first define the commonly used software and hardware resources of UAS-DL in FPP. Listing 7 shows position information defined in FPP based on data extracted from ArduPilot logs. Using this predefined port information, we can conveniently define the input variables. For example, to define the latitude and longitude variables (Listing 6, line 5), we analyze the UAS logs to identify related entries (e.g., GPS sensor recordings), define the log items (Lat, Lng) in FPP, and finally reference these predefined log data.

| Listing 6. Demo code of the keyword Sensor. |

|

| Listing 7. Demo code of port in FPP. |

|

OutPort is used to define the output variables that can serve as input variables for other components. For example, the output port variables (Listing 6, line 9) denote the following: InNe: whether the UAV is in the northeast of the origin; Guided: whether the flight mode is set to Guided; Rover: whether the flight mode is set to Rover; TakeOff: whether the UAV has taken off; OutOfCircle/OutOfRange: whether the UAV is within or outside a circular range.
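The kind of conversion a Sensor performs, from raw log values to Boolean outputs, can be sketched in Python as follows. This is not the content of Listing 6: the origin coordinates, the radius, the haversine distance, and the exact definitions of InNe and OutOfCircle used here are assumptions chosen only to illustrate the idea.

```python
import math
from typing import Dict

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, used for the distance estimate


def haversine_m(lat1: float, lng1: float, lat2: float, lng2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lng2 - lng1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def sensor_outputs(lat: float, lng: float, origin_lat: float, origin_lng: float,
                   radius_m: float) -> Dict[str, bool]:
    """Map one (Lat, Lng) log entry to Boolean output-port values."""
    return {
        # assumed meaning: the UAV lies to the north and east of the origin
        "InNe": lat > origin_lat and lng > origin_lng,
        # assumed meaning: the UAV is farther from the origin than radius_m
        "OutOfCircle": haversine_m(lat, lng, origin_lat, origin_lng) > radius_m,
    }


outputs = sensor_outputs(0.001, 0.001, 0.0, 0.0, radius_m=100.0)
```

The resulting Boolean values are what the propositions and specifications consume; the numeric log data never reaches the monitor or the controller directly.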

VAR is used to define the intermediate constants computed by the embedded code. For example, variable Var1 (Listing 6, line 11) stores the value generated in the embedded code (Listing 6, line 28).