Identification of Salt Marsh Vegetation in the Yellow River Delta Using UAV Multispectral Imagery and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

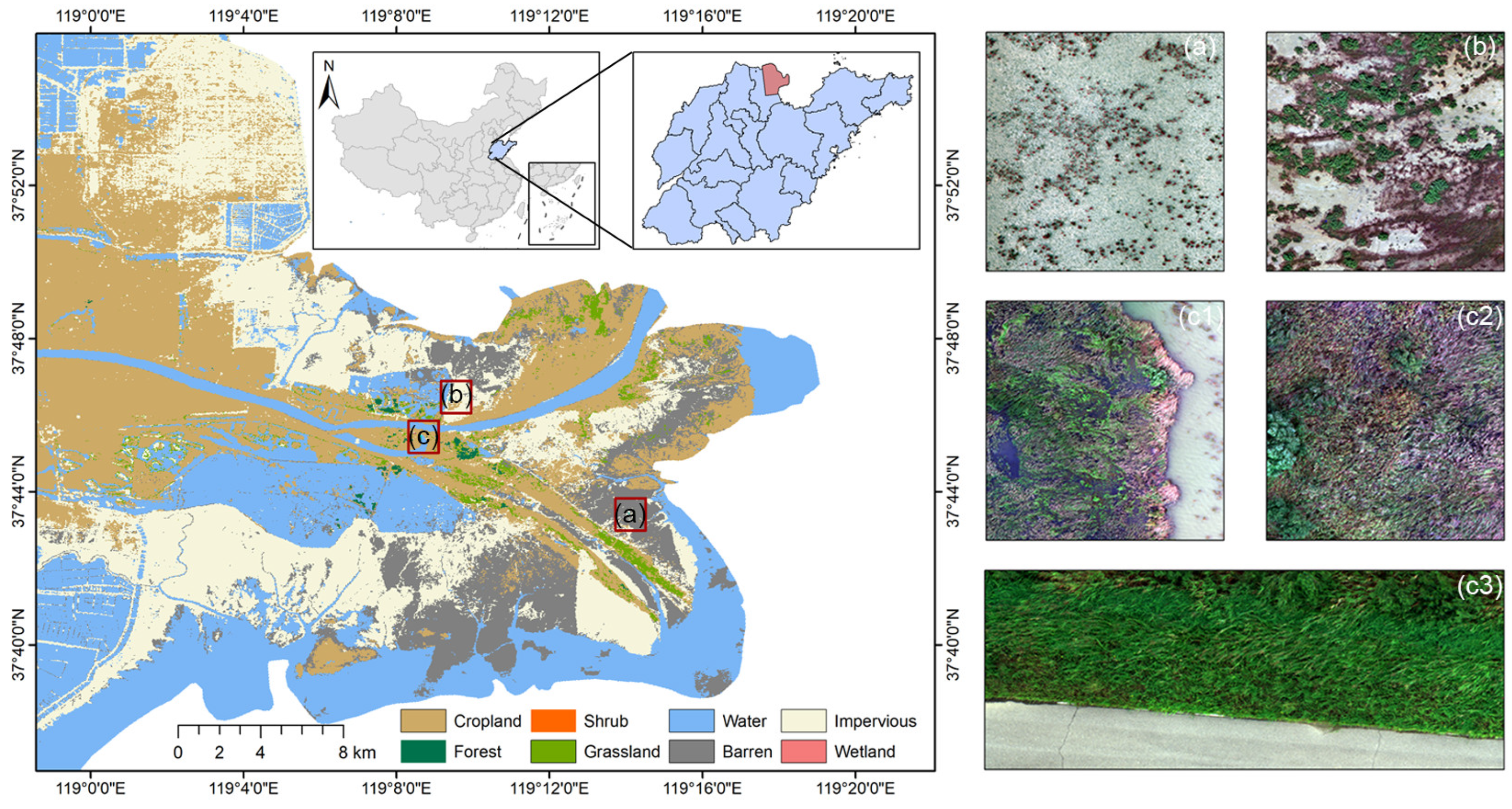

2.1. Study Area

2.2. Data Acquisition and Preprocessing

2.3. Construction of the Sample Dataset

2.4. Vegetation Indices
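A minimal Python sketch of four indices that are commonly computed from UAV multispectral bands (green, red, red edge, near-infrared). The choice of NDVI, GNDVI, NDRE and OSAVI, the function names, and the pixel values are illustrative assumptions, not this study's exact feature set. Inputs are assumed to be surface reflectance between 0 and 1.

```python
def safe_ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero (e.g. no-data pixels)."""
    return numerator / denominator if denominator != 0 else 0.0


def vegetation_indices(green: float, red: float, red_edge: float, nir: float) -> dict:
    """Compute common normalized-difference indices for one pixel (hypothetical helper)."""
    return {
        # Normalized Difference Vegetation Index
        "NDVI": safe_ratio(nir - red, nir + red),
        # Green NDVI: the green band replaces the red band
        "GNDVI": safe_ratio(nir - green, nir + green),
        # Normalized Difference Red Edge index
        "NDRE": safe_ratio(nir - red_edge, nir + red_edge),
        # Optimized Soil-Adjusted Vegetation Index with the usual 0.16 soil term
        "OSAVI": safe_ratio(nir - red, nir + red + 0.16),
    }


# Hypothetical reflectances for a single vegetated pixel
for name, value in vegetation_indices(green=0.08, red=0.05, red_edge=0.20, nir=0.45).items():
    print(f"{name}: {value:.3f}")
```

In practice the same formulas are applied band-wise to whole orthomosaics; the scalar version keeps the arithmetic visible.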

2.5. Jeffries–Matusita (JM) Distance
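A minimal Python sketch of the JM distance for a single feature, assuming each class is normally distributed. For two univariate Gaussians the Bhattacharyya distance is B = (μ1 − μ2)² / (4(σ1² + σ2²)) + ½ ln[(σ1² + σ2²) / (2σ1σ2)], and JM = 2(1 − e^(−B)), which ranges from 0 (inseparable) to 2 (fully separable). Some authors use the square-root form, with range 0 to √2, and the study may have used the multivariate form over several features; the function name and sample values here are hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence


def jm_distance(class_a: Sequence[float], class_b: Sequence[float]) -> float:
    """Jeffries-Matusita distance between two classes for one feature.

    Assumes Gaussian class distributions. Each sequence needs at least two
    values and a non-zero variance. Returns a value in [0, 2].
    """
    m1, m2 = mean(class_a), mean(class_b)
    v1, v2 = variance(class_a), variance(class_b)  # sample variances
    # Bhattacharyya distance between two univariate Gaussians
    b = (m1 - m2) ** 2 / (4 * (v1 + v2)) + 0.5 * math.log((v1 + v2) / (2 * math.sqrt(v1 * v2)))
    return 2 * (1 - math.exp(-b))


# Hypothetical NDVI samples for two vegetation classes
reed_ndvi = [0.62, 0.65, 0.60, 0.66, 0.63]
suaeda_ndvi = [0.30, 0.35, 0.28, 0.33, 0.31]
print(round(jm_distance(reed_ndvi, suaeda_ndvi), 3))
```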

2.6. Machine Learning Algorithms

2.6.1. Random Forest

2.6.2. XGBoost

2.7. Deep Learning Algorithms

2.7.1. U-Net

2.7.2. SegNet

2.8. Accuracy Evaluation
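A minimal sketch of three metrics commonly used to compare classifiers: overall accuracy (OA), Cohen's kappa, and per-class F1 score, all derived from a confusion matrix. Which metrics this study reported is an assumption here, as are the function name and the two-class example matrix.

```python
from typing import List, Sequence, Tuple


def accuracy_metrics(cm: Sequence[Sequence[int]]) -> Tuple[float, float, List[float]]:
    """Overall accuracy, Cohen's kappa and per-class F1 from a square confusion matrix.

    cm[i][j] is the number of pixels of reference class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    oa = sum(cm[i][i] for i in range(k)) / total
    row_sums = [sum(cm[i]) for i in range(k)]                       # reference totals
    col_sums = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted totals
    # Agreement expected by chance from the marginal totals
    pe = sum(row_sums[i] * col_sums[i] for i in range(k)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    f1 = []
    for i in range(k):
        precision = cm[i][i] / col_sums[i] if col_sums[i] else 0.0  # user's accuracy
        recall = cm[i][i] / row_sums[i] if row_sums[i] else 0.0     # producer's accuracy
        f1.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return oa, kappa, f1


# Hypothetical two-class confusion matrix (rows: reference, columns: prediction)
print(accuracy_metrics([[50, 10], [5, 35]]))
```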

3. Results

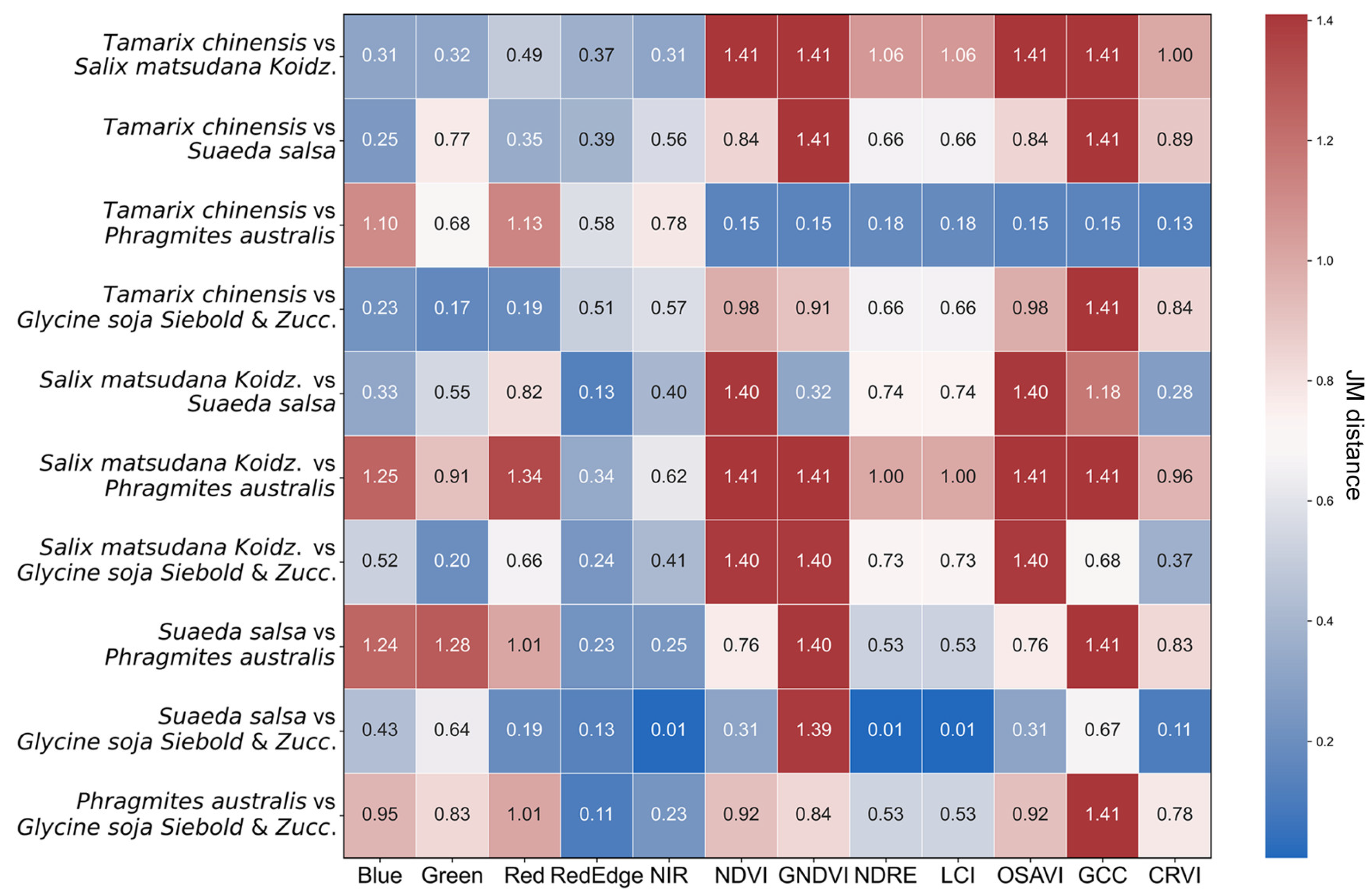

3.1. Spectral Characteristics and Divisibility Analysis of Wetland Vegetation Types

3.2. Feature Importance Ranking

3.3. Deep Learning Training Parameter Analysis

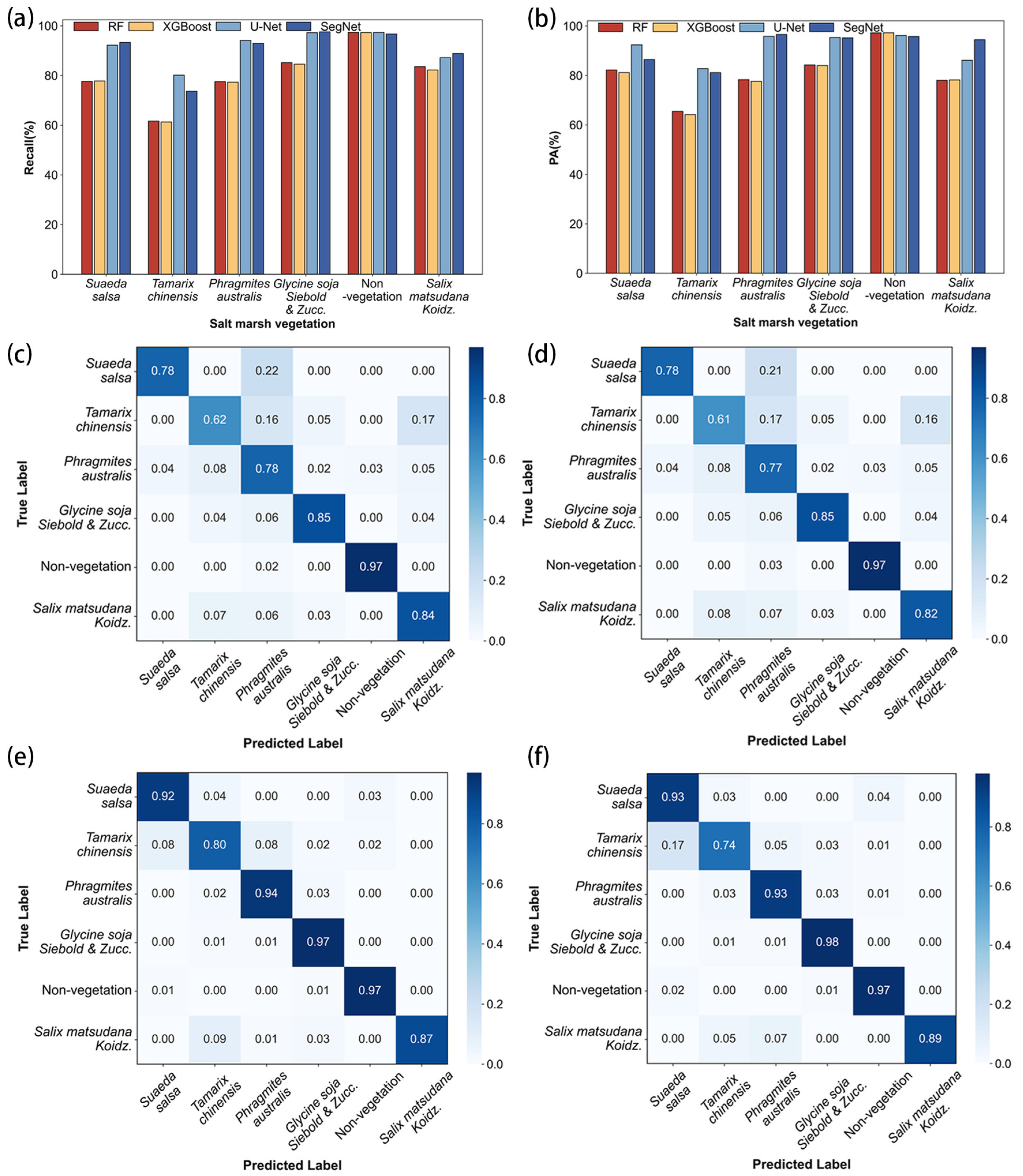

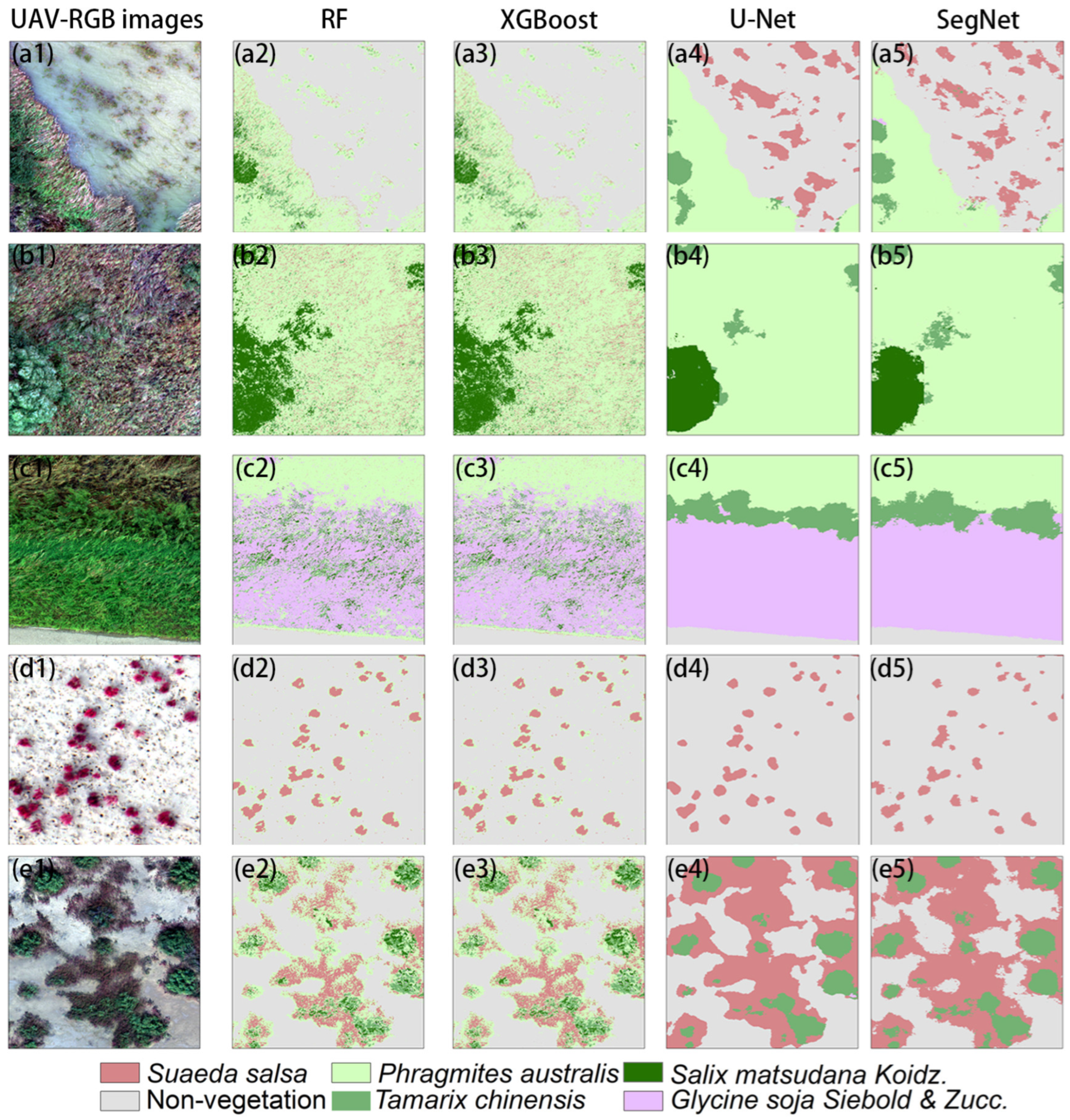

3.4. Comparison of Classification Accuracy Between Machine Learning and Deep Learning Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Class | Class Description | UAV Image Example (RGB) |
|---|---|---|
| Suaeda salsa | Grows mainly in the mid- and low-tide zones, with variable vegetation cover. |  |
| Tamarix chinensis | Grows in moist saline-alkali soils; growing season from April to November. |  |
| Phragmites australis | Distributed along shorelines; growing season from April to October. |  |
| Glycine soja Siebold & Zucc. | Common in low-lying wetland areas with dense shrubs or Phragmites australis reed beds; growing season from March to October. |  |
| Salix matsudana Koidz. | Common in arid lands and wetlands; rapid growth period from June to July. |  |

| Salix matsudana Koidz. | Common in arid lands or wetlands, with a rapid growth period from June to July. |  |

| Class | Deep Learning: Labeled Pixels | Deep Learning: Percentage (%) | Machine Learning: Labeled Pixels | Machine Learning: Percentage (%) |
|---|---|---|---|---|
| Suaeda salsa | 3,973,631 | 14.71 | 69,398 | 6.87 |
| Tamarix chinensis | 2,618,854 | 9.69 | 257,240 | 25.48 |
| Phragmites australis | 7,790,032 | 28.84 | 109,569 | 10.85 |
| Glycine soja Siebold & Zucc. | 6,606,232 | 24.45 | 103,266 | 10.23 |
| Salix matsudana Koidz. | 175,038 | 0.65 | 154,217 | 15.28 |
| Non-vegetation | 5,851,443 | 21.66 | 315,908 | 31.29 |
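The percentages in the labeled-sample table follow from dividing each class's labeled pixel count by the column total. The short Python sketch below recomputes them from the counts in the table; the dictionary and function names are illustrative.

```python
# Labeled pixel counts per class, copied from the labeled-sample table
DL_PIXELS = {
    "Suaeda salsa": 3_973_631,
    "Tamarix chinensis": 2_618_854,
    "Phragmites australis": 7_790_032,
    "Glycine soja Siebold & Zucc.": 6_606_232,
    "Salix matsudana Koidz.": 175_038,
    "Non-vegetation": 5_851_443,
}
ML_PIXELS = {
    "Suaeda salsa": 69_398,
    "Tamarix chinensis": 257_240,
    "Phragmites australis": 109_569,
    "Glycine soja Siebold & Zucc.": 103_266,
    "Salix matsudana Koidz.": 154_217,
    "Non-vegetation": 315_908,
}


def class_percentages(counts: dict) -> dict:
    """Share of each class in a labeled set, in percent, rounded to two decimals."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


for label, counts in (("Deep learning", DL_PIXELS), ("Machine learning", ML_PIXELS)):
    print(label, sum(counts.values()), class_percentages(counts))
```

Running it reproduces every percentage in the table from totals of 27,015,230 (deep learning) and 1,009,598 (machine learning) labeled pixels. It also makes the class imbalance explicit: Salix matsudana Koidz. accounts for 0.65% of the deep learning labels but 15.28% of the machine learning samples.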

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bai, X.; Yang, C.; Fang, L.; Chen, J.; Wang, X.; Gao, N.; Zheng, P.; Wang, G.; Wang, Q.; Ren, S. Identification of Salt Marsh Vegetation in the Yellow River Delta Using UAV Multispectral Imagery and Deep Learning. Drones 2025, 9, 235. https://doi.org/10.3390/drones9040235

Bai X, Yang C, Fang L, Chen J, Wang X, Gao N, Zheng P, Wang G, Wang Q, Ren S. Identification of Salt Marsh Vegetation in the Yellow River Delta Using UAV Multispectral Imagery and Deep Learning. Drones. 2025; 9(4):235. https://doi.org/10.3390/drones9040235

Chicago/Turabian Style: Bai, Xiaohui, Changzhi Yang, Lei Fang, Jinyue Chen, Xinfeng Wang, Ning Gao, Peiming Zheng, Guoqiang Wang, Qiao Wang, and Shilong Ren. 2025. "Identification of Salt Marsh Vegetation in the Yellow River Delta Using UAV Multispectral Imagery and Deep Learning" Drones 9, no. 4: 235. https://doi.org/10.3390/drones9040235

APA Style: Bai, X., Yang, C., Fang, L., Chen, J., Wang, X., Gao, N., Zheng, P., Wang, G., Wang, Q., & Ren, S. (2025). Identification of Salt Marsh Vegetation in the Yellow River Delta Using UAV Multispectral Imagery and Deep Learning. Drones, 9(4), 235. https://doi.org/10.3390/drones9040235