Estimating Stratified Biomass in Cotton Fields Using UAV Multispectral Remote Sensing and Machine Learning

Abstract

1. Introduction

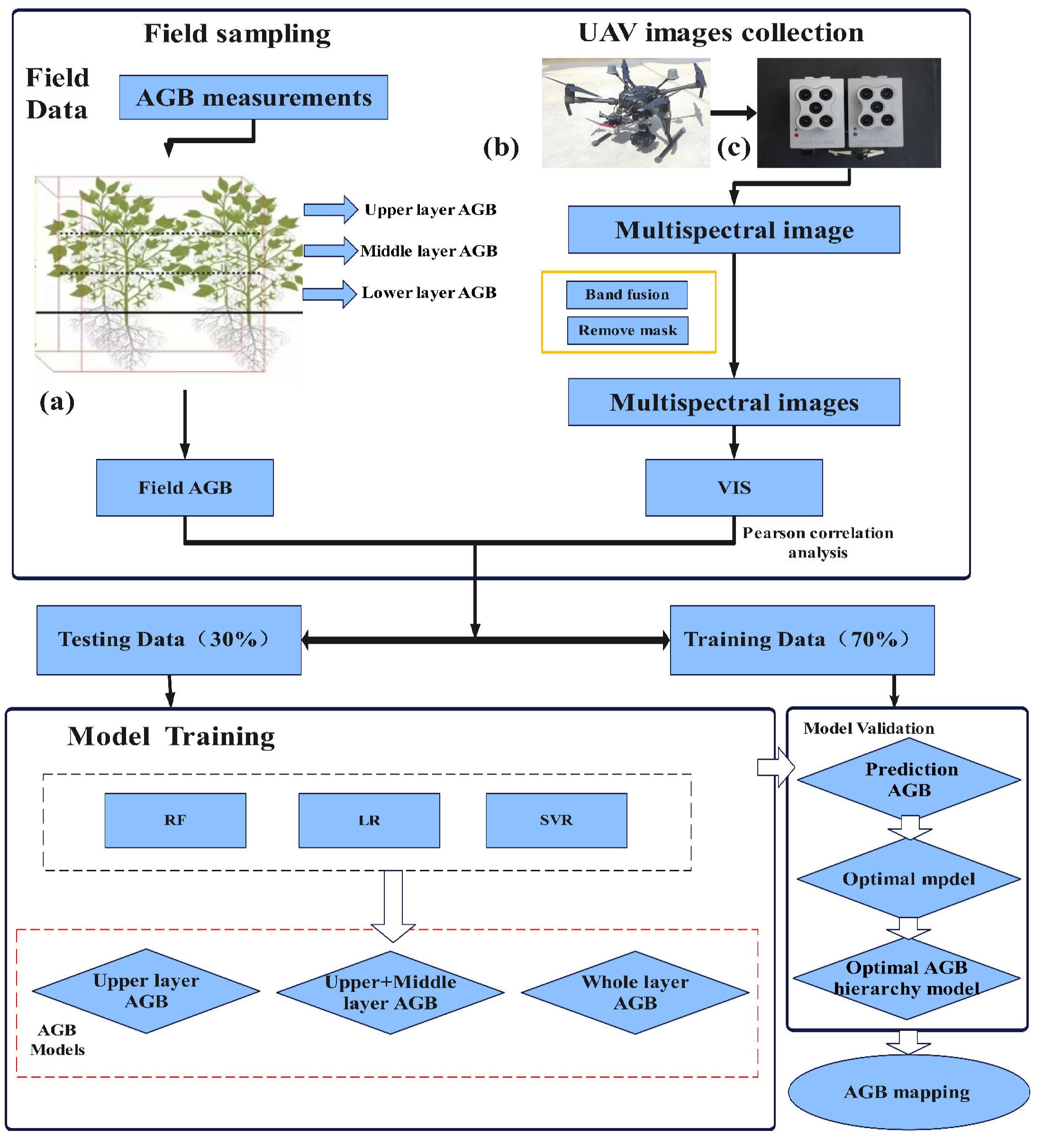

2. Materials and Methods

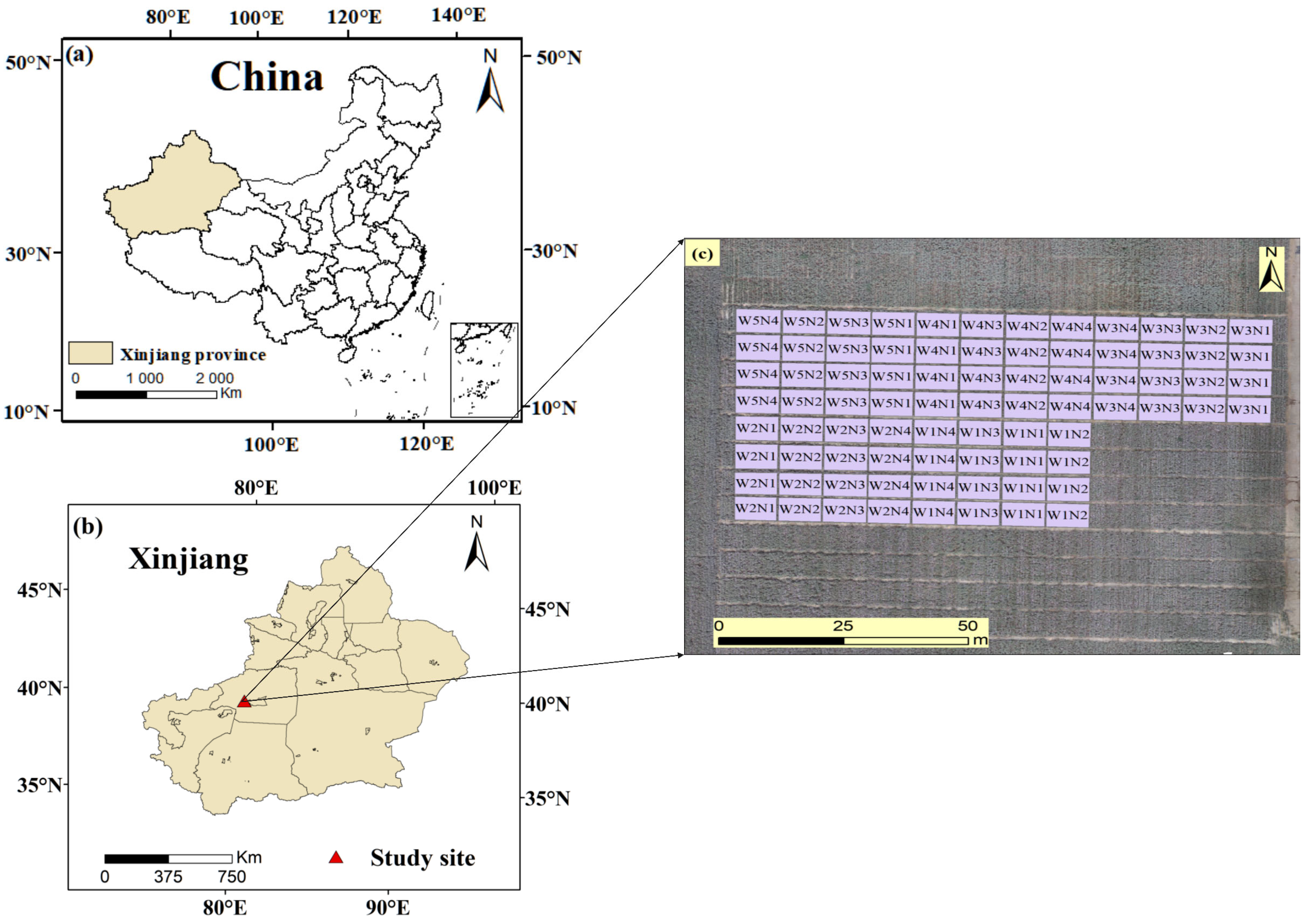

2.1. Overview of the Study Area

2.2. Experimental Design

2.3. UAV Data Acquisition

2.4. Ground-Truth Data Collection

2.5. Vegetation Indices and Feature Extraction

2.5.1. Spectral Image Acquisition

2.5.2. Spectral Image Preprocessing

2.5.3. Spectral Index Processing

2.5.4. Spectral Index Extraction

2.6. Model Selection

2.7. Statistical Analysis

3. Results

3.1. Cotton Biomass Analysis

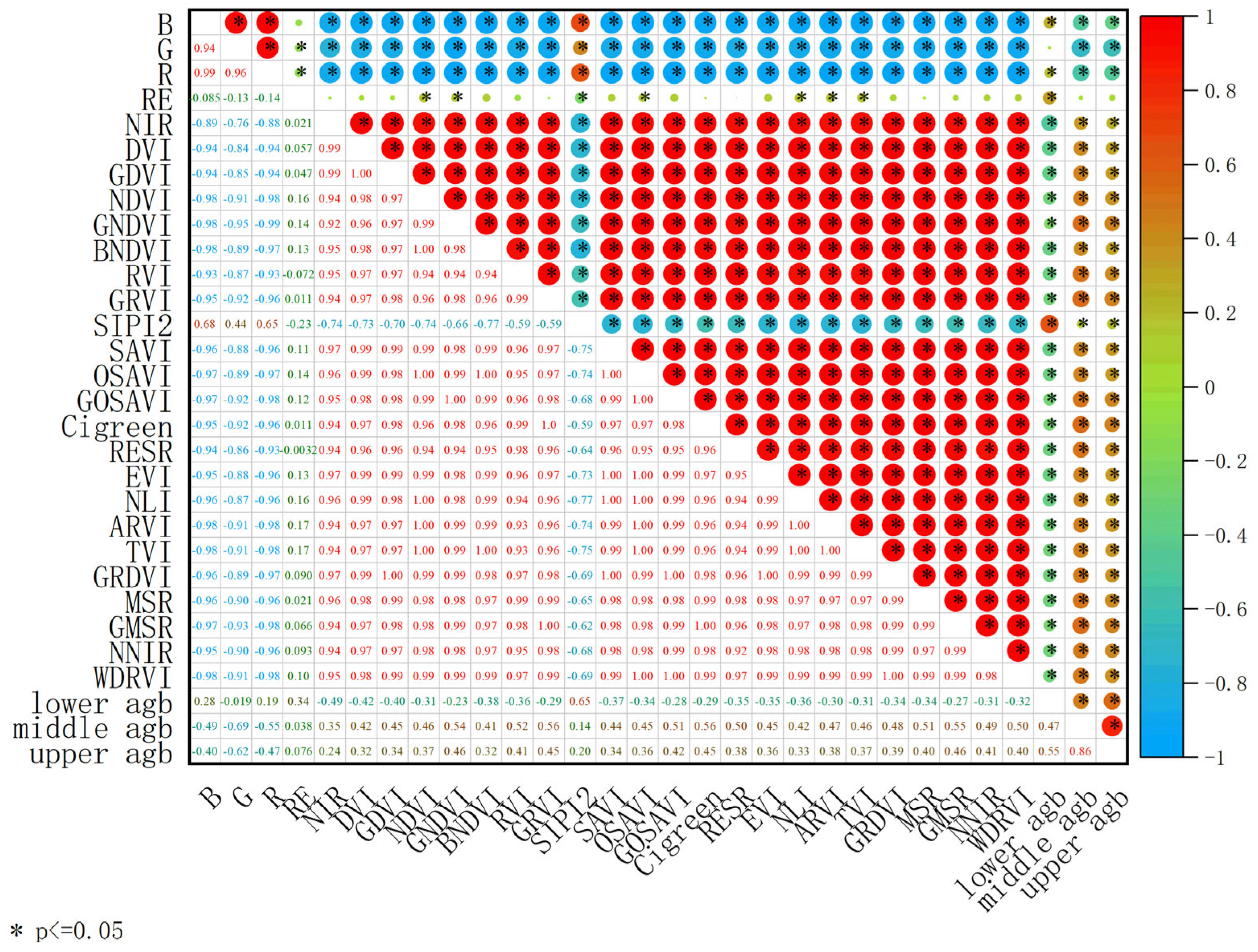

3.2. Pearson Correlation Analysis of Cotton AGB

3.3. Estimating Cotton Biomass at Different Growth Stages Using Vegetation Indices

3.3.1. Data Division

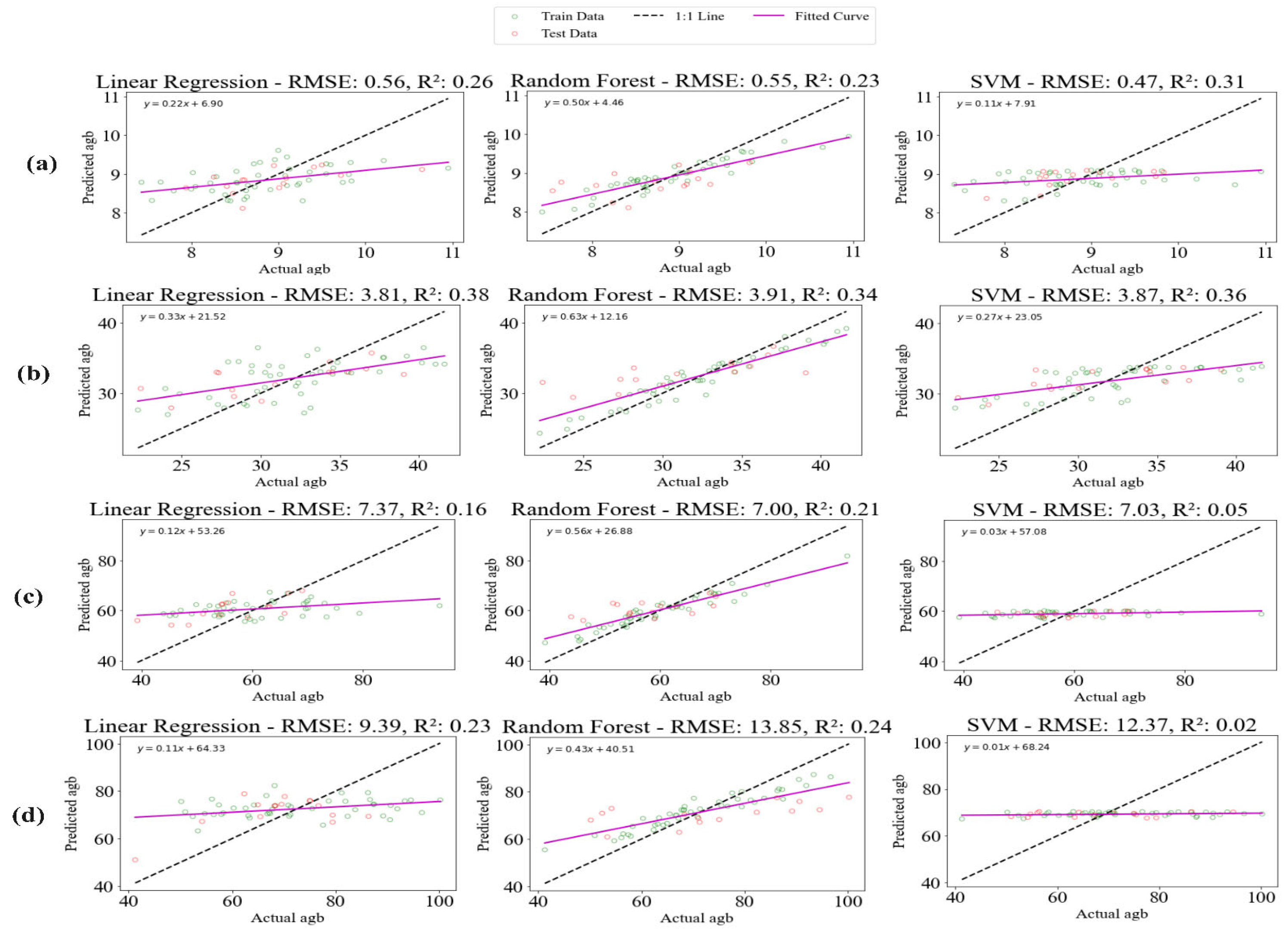

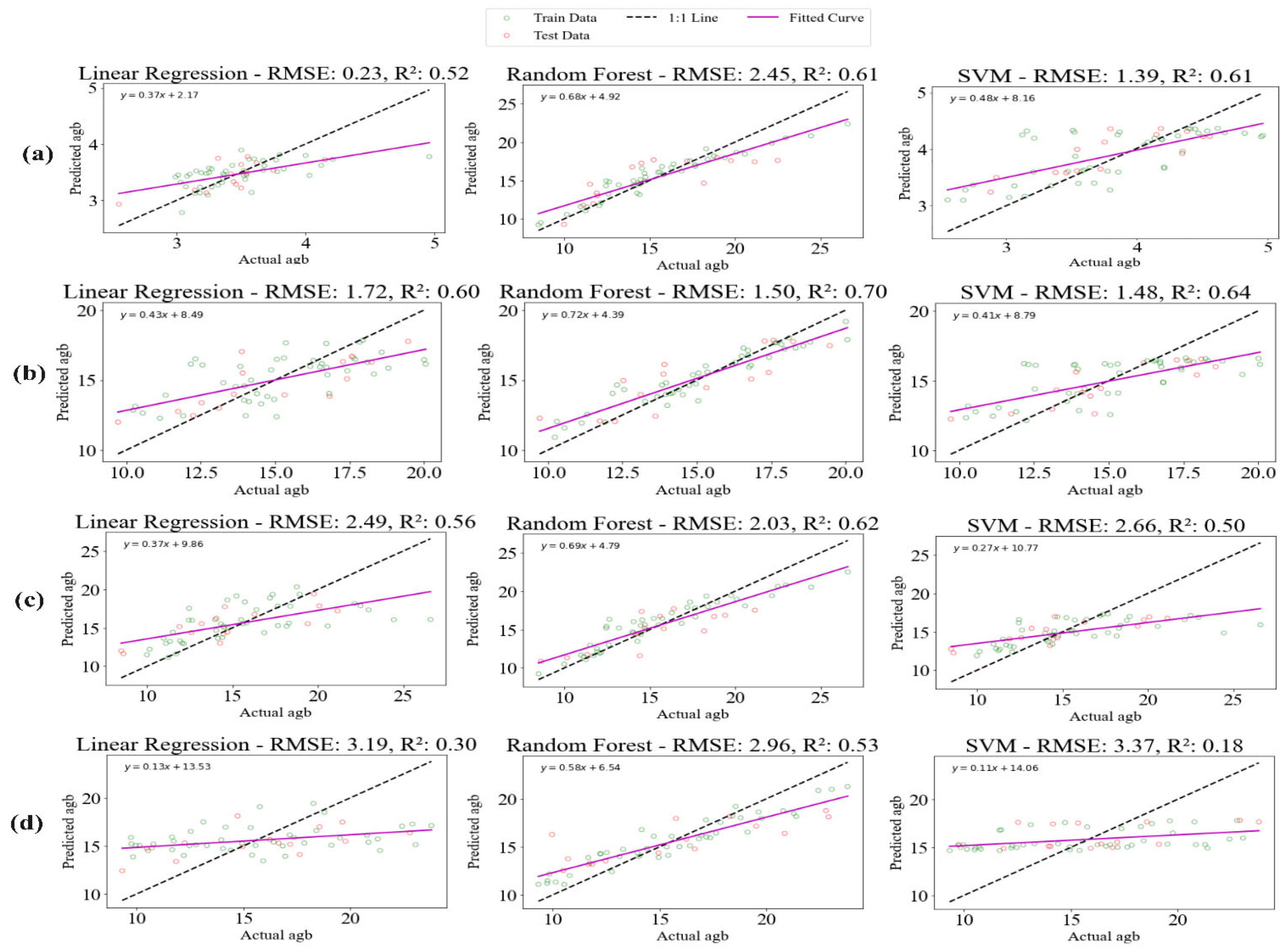

3.3.2. Construction of Upper-, Middle-, and Lower-Layer Inversion Models

3.3.3. Construction of Upper- and Middle-Layer Inversion Models

3.3.4. Construction of Upper-Layer Inversion Model

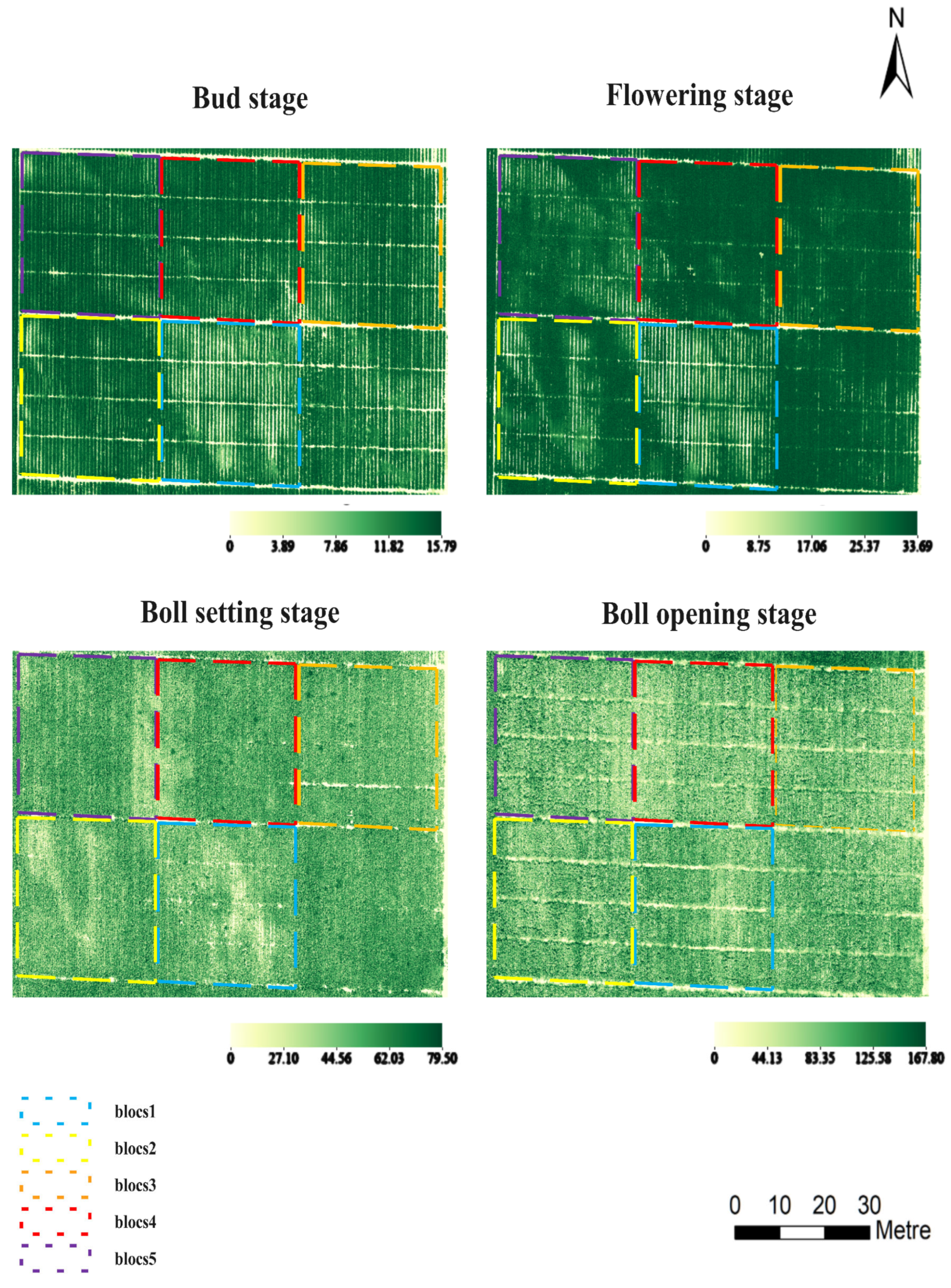

3.4. Above-Ground Biomass Inversion Mapping

4. Discussion

4.1. Estimation of AGB in Vertical Distribution of Cotton Based on Spectral Characteristics

4.2. Advantages of Constructing AGB Estimation Model for Upper and Middle Layers of Cotton

4.3. Differences in and Advantages of the New Model

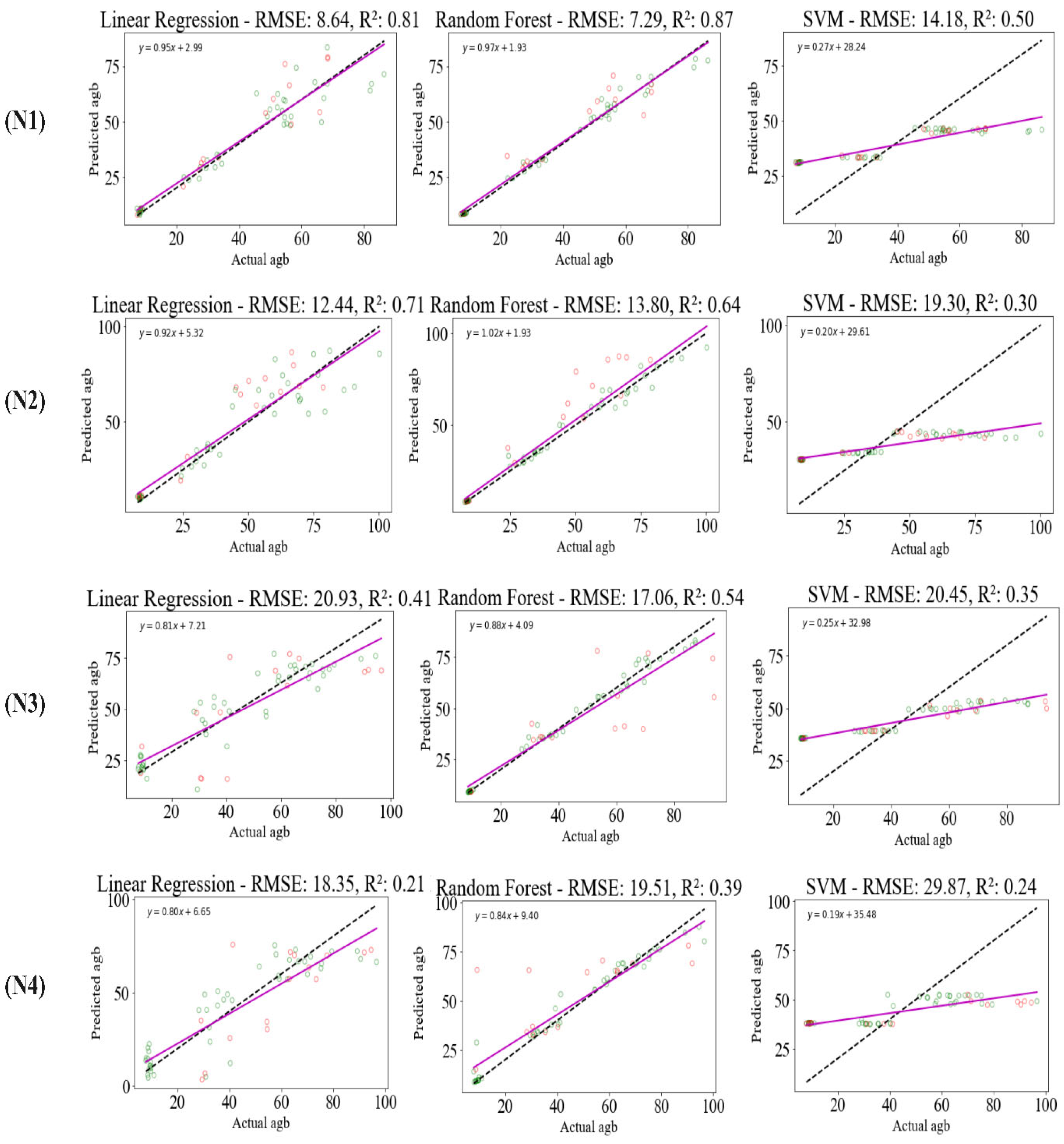

4.4. Effect of Machine Learning Algorithms on Cotton AGB Estimation Models at Different Layers

4.5. Effect of Different N Treatments on the Model

4.6. Directions for Improving the AGB Estimation Model

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.

- Liao, Z.; He, B.; Quan, X.; van Dijk, A.I.J.M.; Qiu, S.; Yin, C. Biomass estimation in dense tropical forest using multiple information from single-baseline P-band PolInSAR data. Remote Sens. Environ. 2019, 221, 489–507.

- Zhao, L.; Chen, E.; Li, Z.; Zhang, W.; Fan, Y. A New Approach for Forest Height Inversion Using X-Band Single-Pass InSAR Coherence Data. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5206018.

- Packalen, P.; Strunk, J.; Packalen, T.; Maltamo, M.; Mehtätalo, L. Resolution dependence in an area-based approach to forest inventory with airborne laser scanning. Remote Sens. Environ. 2019, 224, 192–201.

- Zhang, Y.; Shao, Z. Assessing of Urban Vegetation Biomass in Combination with LiDAR and High-resolution Remote Sensing Images. Int. J. Remote Sens. 2020, 42, 964–985.

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

- Xu, L.; Zhou, L.; Meng, R.; Zhao, F.; Lv, Z.; Xu, B.; Zeng, L.; Yu, X.; Peng, S. An improved approach to estimate ratoon rice aboveground biomass by integrating UAV-based spectral, textural and structural features. Precis. Agric. 2022, 23, 1276–1301.

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.

- Bareth, G.; Bendig, J.; Tilly, N.; Hoffmeister, D.; Aasen, H.; Bolten, A. A Comparison of UAV- and TLS-derived Plant Height for Crop Monitoring: Using Polygon Grids for the Analysis of Crop Surface Models (CSMs). Photogramm. Fernerkund. Geoinf. 2016, 2016, 85–94.

- Jay, S.; Baret, F.; Dutartre, D.; Malatesta, G.; Héno, S.; Comar, A.; Weiss, M.; Maupas, F. Exploiting the centimeter resolution of UAV multispectral imagery to improve remote-sensing estimates of canopy structure and biochemistry in sugar beet crops. Remote Sens. Environ. 2019, 231, 110898.

- Togeiro de Alckmin, G.; Lucieer, A.; Rawnsley, R.; Kooistra, L. Perennial ryegrass biomass retrieval through multispectral UAV data. Comput. Electron. Agric. 2022, 193, 106574.

- Walter, J.D.C.; Edwards, J.; McDonald, G.; Kuchel, H. Estimating Biomass and Canopy Height with LiDAR for Field Crop Breeding. Front. Plant Sci. 2019, 10, 1145.

- Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 10.

- Yin, J.; Feng, Q.; Liang, T.; Meng, B.; Yang, S.; Gao, J.; Ge, J.; Hou, M.; Liu, J.; Wang, W.; et al. Estimation of Grassland Height Based on the Random Forest Algorithm and Remote Sensing in the Tibetan Plateau. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 178–186.

- Güner, Ş.T.; Diamantopoulou, M.J.; Poudel, K.P.; Çömez, A.; Özçelik, R. Employing artificial neural network for effective biomass prediction: An alternative approach. Comput. Electron. Agric. 2022, 192, 106596.

- Mansaray, L.R.; Zhang, K.; Kanu, A.S. Dry biomass estimation of paddy rice with Sentinel-1A satellite data using machine learning regression algorithms. Comput. Electron. Agric. 2020, 176, 105674.

- Wan, L.; Zhang, J.; Dong, X.; Du, X.; Zhu, J.; Sun, D.; Liu, Y.; He, Y.; Cen, H. Unmanned aerial vehicle-based field phenotyping of crop biomass using growth traits retrieved from PROSAIL model. Comput. Electron. Agric. 2021, 187, 106304.

- Yang, B.; Wu, X.; Hao, J.; Xu, D.; Liu, T.; Xie, Q. Estimation of wood failure percentage under shear stress in bamboo-wood composite bonded by adhesive using a deep learning and entropy weight method. Ind. Crops Prod. 2023, 197, 116617.

- Chen, M.; Yin, C.; Lin, T.; Liu, H.; Wang, Z.; Jiang, P.; Ali, S.; Tang, Q.; Jin, X. Integration of Unmanned Aerial Vehicle Spectral and Textural Features for Accurate Above-Ground Biomass Estimation in Cotton. Agronomy 2024, 14, 1313.

- Oteng-Frimpong, R.; Karikari, B.; Sie, E.K.; Kassim, Y.B.; Puozaa, D.K.; Rasheed, M.A.; Fonceka, D.; Okello, D.K.; Balota, M.; Burow, M.; et al. Multi-locus genome-wide association studies reveal genomic regions and putative candidate genes associated with leaf spot diseases in African groundnut (Arachis hypogaea L.) germplasm. Front. Plant Sci. 2023, 13, 107644.

- Boiarskii, B. Comparison of NDVI and NDRE Indices to Detect Differences in Vegetation and Chlorophyll Content. J. Mech. Contin. Math. Sci. 2019, 4, 20–29.

- Yang, Z.; Yu, Z.; Wang, X.; Yan, W.; Sun, S.; Feng, M.; Sun, J.; Su, P.; Sun, X.; Wang, Z.; et al. Estimation of Millet Aboveground Biomass Utilizing Multi-Source UAV Image Feature Fusion. Agronomy 2024, 14, 701.

- Hernandez, A.; Jensen, K.; Larson, S.; Larsen, R.; Rigby, C.; Johnson, B.; Spickermann, C.; Sinton, S. Using Unmanned Aerial Vehicles and Multispectral Sensors to Model Forage Yield for Grasses of Semiarid Landscapes. Grasses 2024, 3, 84–109.

- Cao, Y.; Li, G.L.; Luo, Y.K.; Pan, Q.; Zhang, S.Y. Monitoring of sugar beet growth indicators using wide-dynamic-range vegetation index (WDRVI) derived from UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105331.

- Yang, Q.; Shi, L.; Han, J.; Zha, Y.; Zhu, P. Deep convolutional neural networks for rice grain yield estimation at the ripening stage using UAV-based remotely sensed images. Field Crops Res. 2019, 235, 142–153.

- Putra, A.N.; Kristiawati, W.; Mumtazydah, D.C.; Anggarwati, T.; Annisa, R.; Sholikah, D.H.; Okiyanto, D.; Sudarto. Pineapple biomass estimation using unmanned aerial vehicle in various forcing stage: Vegetation index approach from ultra-high-resolution image. Smart Agric. Technol. 2021, 1, 100025.

- Shen, Y.; Yan, Z.; Yang, Y.; Tang, W.; Sun, J.; Zhang, Y. Application of UAV-Borne Visible-Infared Pushbroom Imaging Hyperspectral for Rice Yield Estimation Using Feature Selection Regression Methods. Sustainability 2024, 16, 632.

- Guo, X.-Y.; Li, K.; Shao, Y.; Lopez-Sanchez, J.M.; Wang, Z.-Y. Inversion of Rice Height Using Multitemporal TanDEM-X Polarimetric Interferometry SAR Data. Spectrosc. Spectr. Anal. 2020, 40, 878–884.

- Killeen, P.; Kiringa, I.; Yeap, T.; Branco, P. Corn Grain Yield Prediction Using UAV-Based High Spatiotemporal Resolution Imagery, Machine Learning, and Spatial Cross-Validation. Remote Sens. 2024, 16, 683.

- Niu, Q.; Feng, H.; Zhou, X.; Zhu, J.; Yong, B.; Li, H. Combining UAV visible light and multispectral vegetation indices for estimating SPAD value of winter wheat. Trans. Chin. Soc. Agric. Mach. 2021, 52, 183–194.

- Rozenstein, O.; Haymann, N.; Kaplan, G.; Tanny, J. Validation of the cotton crop coefficient estimation model based on Sentinel-2 imagery and eddy covariance measurements. Agric. Water Manag. 2019, 223, 105715.

- Shammi, S.A.; Huang, Y.; Feng, G.; Tewolde, H.; Zhang, X.; Jenkins, J.; Shankle, M. Application of UAV Multispectral Imaging to Monitor Soybean Growth with Yield Prediction through Machine Learning. Agronomy 2024, 14, 672.

- Liu, S.; Jin, X.; Bai, Y.; Wu, W.; Cui, N.; Cheng, M.; Liu, Y.; Meng, L.; Jia, X.; Nie, C.; et al. UAV multispectral images for accurate estimation of the maize LAI considering the effect of soil background. Int. J. Appl. Earth Obs. Geoinf. 2023, 121, 103383.

- Deery, D.M.; Rebetzke, G.J.; Jimenez-Berni, J.A.; Condon, A.G.; Smith, D.J.; Bechaz, K.M.; Bovill, W.D. Ground-Based LiDAR Improves Phenotypic Repeatability of Above-Ground Biomass and Crop Growth Rate in Wheat. Plant Phenomics 2020, 2020, 8329798.

- Jiang, Y.; Wu, F.; Zhu, S.; Zhang, W.; Wu, F.; Yang, T.; Yang, G.; Zhao, Y.; Sun, C.; Liu, T. Research on Rapeseed Above-Ground Biomass Estimation Based on Spectral and LiDAR Data. Agronomy 2024, 14, 1610.

- Tan, H.; Kou, W.; Xu, W.; Wang, L.; Wang, H.; Lu, N. Improved Estimation of Aboveground Biomass in Rubber Plantations Using Deep Learning on UAV Multispectral Imagery. Drones 2025, 9, 32.

| Date of Flight | Date of Field Sampling | Growth Stage |
|---|---|---|
| 06-12 | 06-12 | Bud stage |
| 07-08 | 07-08 | Flowering stage |
| 08-09 | 08-09 | Boll setting stage |
| 09-02 | 09-02 | Boll opening stage |

| Channel No. | Channel Name | Central Wavelength (nm) | FWHM (nm) |
|---|---|---|---|
| 1 | Blue1 | 444 | 28 |
| 2 | Blue2 | 475 | 32 |
| 3 | Green1 | 531 | 14 |
| 4 | Green2 | 560 | 27 |
| 5 | Red1 | 650 | 16 |
| 6 | Red2 | 668 | 14 |
| 7 | Red Edge1 | 705 | 10 |
| 8 | Red Edge2 | 717 | 12 |
| 9 | Red Edge3 | 740 | 18 |
| 10 | NIR | 842 | 57 |

| Vegetation Index | Calculation Formula | Reference |
|---|---|---|
| Normalized Difference Vegetation Index (NDVI) | | [20] |
| Normalized Red-Edge Vegetation Index (NNIR) | | [21] |
| Ratio Vegetation Index (RVI) | | [22] |
| Difference Vegetation Index (DVI) | | [23] |
| Wide Dynamic Range Vegetation Index (WDRVI) | | [24] |
| Green Vegetation Index (GRVI) | | [22] |
| Green Normalized Difference Vegetation Index (GNDVI) | | [25] |
| Blue Normalized Difference Vegetation Index (BNDVI) | | [25] |
| Green Difference Vegetation Index (GDVI) | | [26] |
| Enhanced Vegetation Index (EVI) | | [27] |
| Structure Insensitive Pigment Index 2 (SIPI2) | | [28] |
| Soil-Adjusted Vegetation Index (SAVI) | | [29] |
| Optimized Soil-Adjusted Vegetation Index (OSAVI) | | [29] |
| Green Optimized Soil-Adjusted Vegetation Index (GOSAVI) | | [30] |
| Green Chlorophyll Index (CIGreen) | | [27] |
| Red Edge Simple Ratio (RESR) | | [31] |
| Atmospherically Resistant Vegetation Index (ARVI) | (NIR − 2 ∗ R + B)/(NIR + B) | [32] |
| Triangular Vegetation Index (TVI) | | [27] |
| GreenRed Difference Vegetation Index (GRDVI) | | [30] |
| Modified Simple Ratio (MSR) | | [33] |
| Green Modified Simple Ratio (GMSR) | | [30] |
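
As an illustration of how an index in this table can be evaluated per pixel, the following is a minimal sketch of the ARVI expression exactly as it is printed in the table, (NIR − 2R + B)/(NIR + B). The function name `arvi_as_tabulated`, the `eps` guard, and the sample reflectance values are assumptions introduced here, not part of the study. Note that the commonly cited form of ARVI uses NIR + 2R − B in the denominator, so the tabulated expression should be checked against the cited source before use.

```python
import numpy as np


def arvi_as_tabulated(nir, red, blue, eps=1e-12):
    """Evaluate (NIR - 2*R + B) / (NIR + B), the ARVI expression as printed in the table.

    Inputs are reflectance values (scalars or arrays of equal shape).
    Pixels whose denominator is numerically zero are returned as NaN.
    """
    nir, red, blue = (np.asarray(a, dtype=float) for a in (nir, red, blue))
    denom = nir + blue
    # Replace near-zero denominators with NaN so no division by zero occurs.
    safe_denom = np.where(np.abs(denom) < eps, np.nan, denom)
    return (nir - 2.0 * red + blue) / safe_denom


# Hypothetical reflectance values for three pixels (not data from the study).
nir = np.array([0.50, 0.42, 0.00])
red = np.array([0.10, 0.08, 0.00])
blue = np.array([0.05, 0.04, 0.00])
print(arvi_as_tabulated(nir, red, blue))
```

Which of the sensor's two red and two blue channels enter the formula is not stated in the table, so the band assignment is left to the caller.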

| Growth Stage | Layer | Sample Size | Mean (g/cm²) | Min (g/cm²) | Max (g/cm²) | Standard Deviation | Coefficient of Variation |
|---|---|---|---|---|---|---|---|
| Bud stage | upper | 60 | 3.46 | 2.55 | 4.96 | 0.37 | 0.11 |
| Bud stage | middle | 60 | 3.05 | 2.24 | 3.60 | 0.35 | 0.11 |
| Bud stage | lower | 60 | 2.37 | 1.60 | 3.38 | 0.37 | 0.15 |
| Flowering stage | upper | 60 | 15.09 | 9.72 | 20.06 | 2.61 | 0.17 |
| Flowering stage | middle | 60 | 12.34 | 8.52 | 17.59 | 2.09 | 0.17 |
| Flowering stage | lower | 60 | 4.50 | 1.56 | 9.32 | 1.46 | 0.33 |
| Boll setting stage | upper | 60 | 15.46 | 8.50 | 26.59 | 3.90 | 0.25 |
| Boll setting stage | middle | 60 | 11.49 | 7.60 | 20.88 | 2.46 | 0.21 |
| Boll setting stage | lower | 60 | 32.47 | 18.51 | 48.50 | 6.82 | 0.21 |
| Boll opening stage | upper | 60 | 15.63 | 9.34 | 23.79 | 4.00 | 0.26 |
| Boll opening stage | middle | 60 | 11.20 | 5.73 | 17.63 | 2.61 | 0.23 |
| Boll opening stage | lower | 60 | 45.02 | 21.90 | 65.41 | 9.76 | 0.22 |
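
The last column of this table appears to be the coefficient of variation, that is, the standard deviation divided by the mean. The sketch below recomputes it for three upper-layer rows copied from the table; the helper name `coefficient_of_variation` is an assumption made for illustration. Because the tabulated mean and standard deviation are already rounded, the recomputed ratio can differ from the printed value in the second decimal place.

```python
def coefficient_of_variation(mean, std):
    """Return std / mean; the mean must be non-zero."""
    if mean == 0:
        raise ValueError("mean must be non-zero")
    return std / mean


# (growth stage, layer, mean, standard deviation, tabulated coefficient)
rows = [
    ("Bud stage", "upper", 3.46, 0.37, 0.11),
    ("Flowering stage", "upper", 15.09, 2.61, 0.17),
    ("Boll setting stage", "upper", 15.46, 3.90, 0.25),
]

for stage, layer, mean, std, tabulated in rows:
    cv = coefficient_of_variation(mean, std)
    print(f"{stage:<20s} {layer:<6s} recomputed={cv:.3f} tabulated={tabulated:.2f}")
```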

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, Z.; Fan, S.; Li, Y.; Tang, Q.; Bao, L.; Zhang, S.; Sarsen, G.; Guo, R.; Wang, L.; Zhang, N.; et al. Estimating Stratified Biomass in Cotton Fields Using UAV Multispectral Remote Sensing and Machine Learning. Drones 2025, 9, 186. https://doi.org/10.3390/drones9030186
