AUP-DETR: A Foundational UAV Object Detection Framework for Enabling the Low-Altitude Economy

Highlights

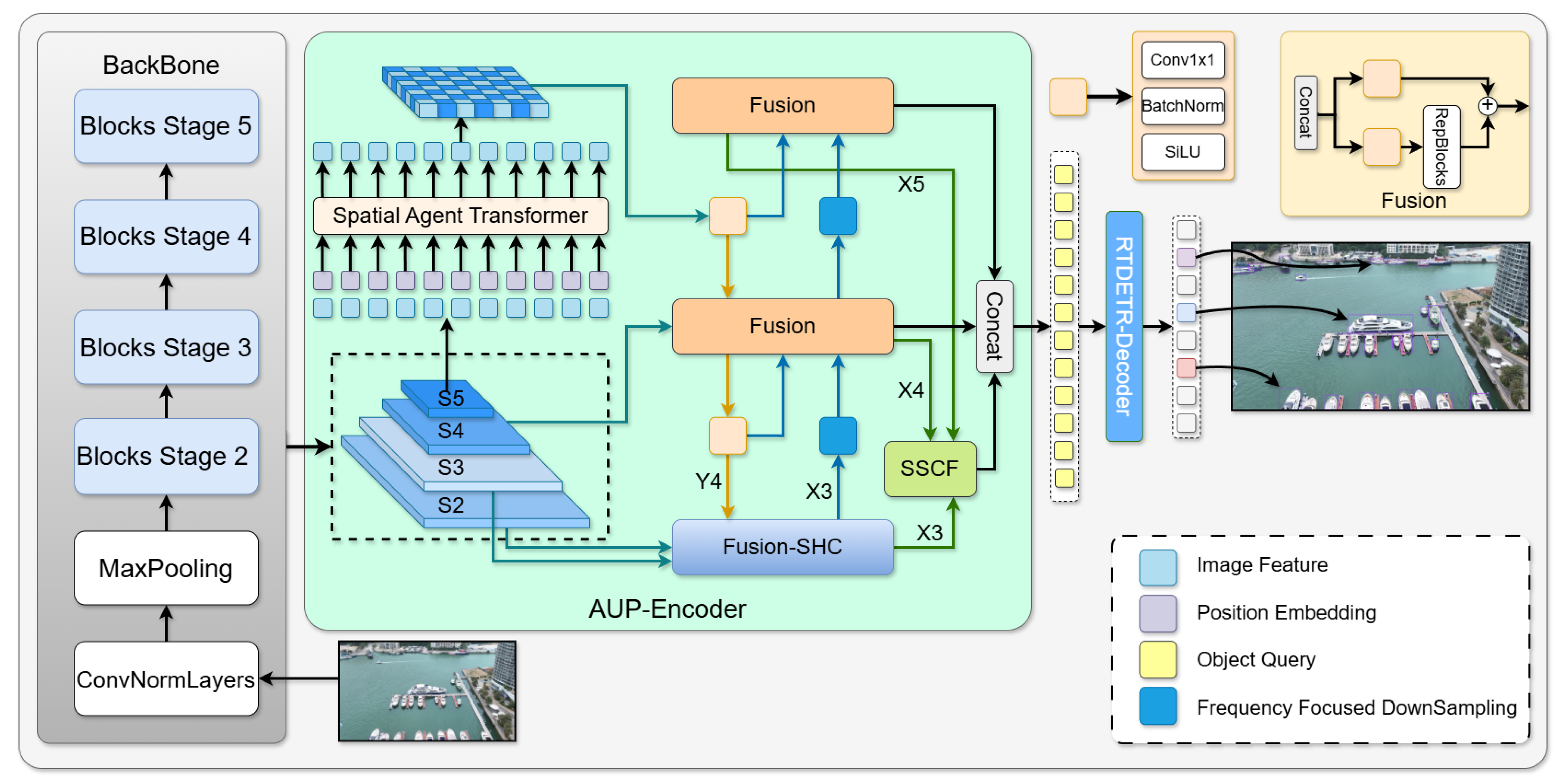

- We propose AUP-DETR, a novel end-to-end detection framework for UAVs. Its specialized modules for multi-scale feature fusion and global context modeling raise mAP50 on the UCA-Det dataset by 4.41 percentage points over the baseline (from 65.27% to 69.68%).

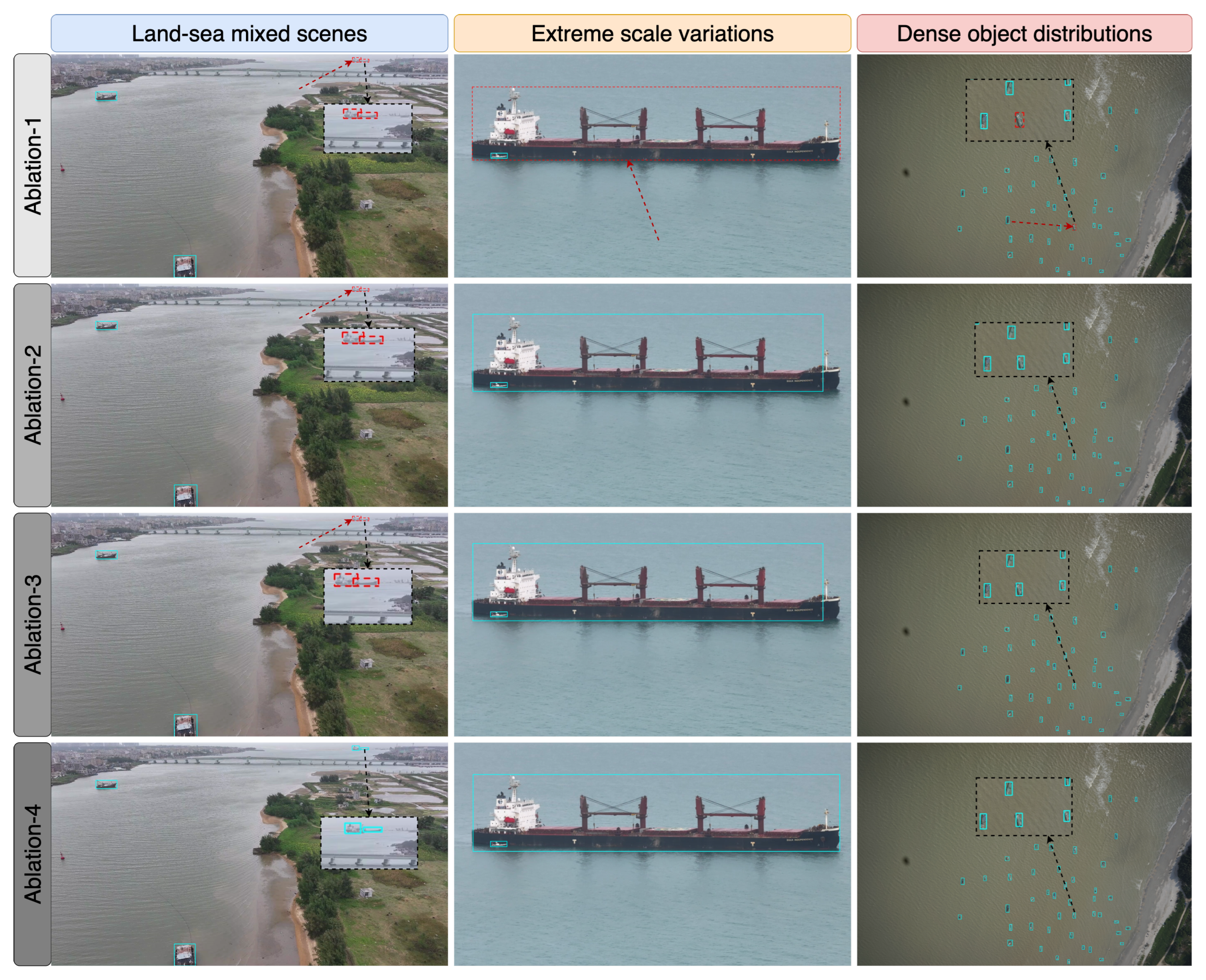

- We constructed the UCA-Det dataset, a new large-scale dataset specifically for UAV perception in complex urban port environments, filling a gap left by existing datasets that lack land–sea mixed scenes, extreme scale variations, and dense object distributions.

- This work provides a robust and efficient perception solution that is critical for enabling UAV autonomy in challenging real-world applications, such as automated logistics and intelligent infrastructure inspection within the low-altitude economy.

- Together, the high-performance AUP-DETR model and the UCA-Det dataset establish a new, challenging benchmark that can facilitate future academic and applied research on perception in complex low-altitude environments.

Abstract

1. Introduction

- We introduce the UCA-Det (Urban Coastal Aerial Detection) dataset, a new large-scale dataset for object detection in urban port environments from a UAV perspective. It addresses the shortcomings of existing aerial datasets by providing numerous images that feature land–sea mixed scenes, extreme scale variations, and dense object distributions.

- We propose AUP-DETR (Aerial Urban Port Detector). This framework is specifically designed to efficiently process high-resolution aerial images while maintaining high accuracy.

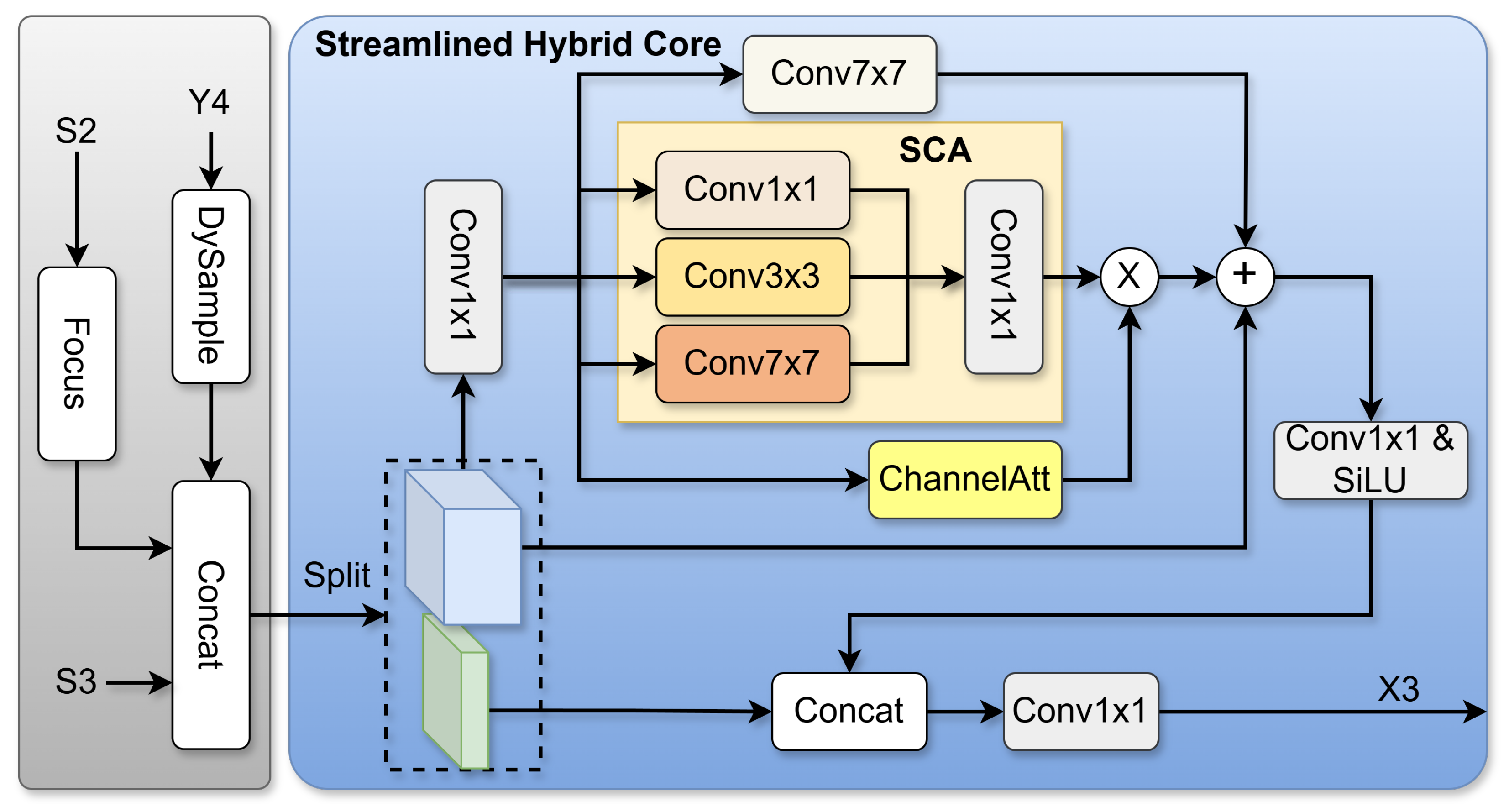

- We designed the Fusion with Streamlined Hybrid Core (Fusion-SHC), a lightweight module. Its purpose is to significantly enhance small aerial object representation through the efficient fusion of low-level spatial details and high-level semantics.

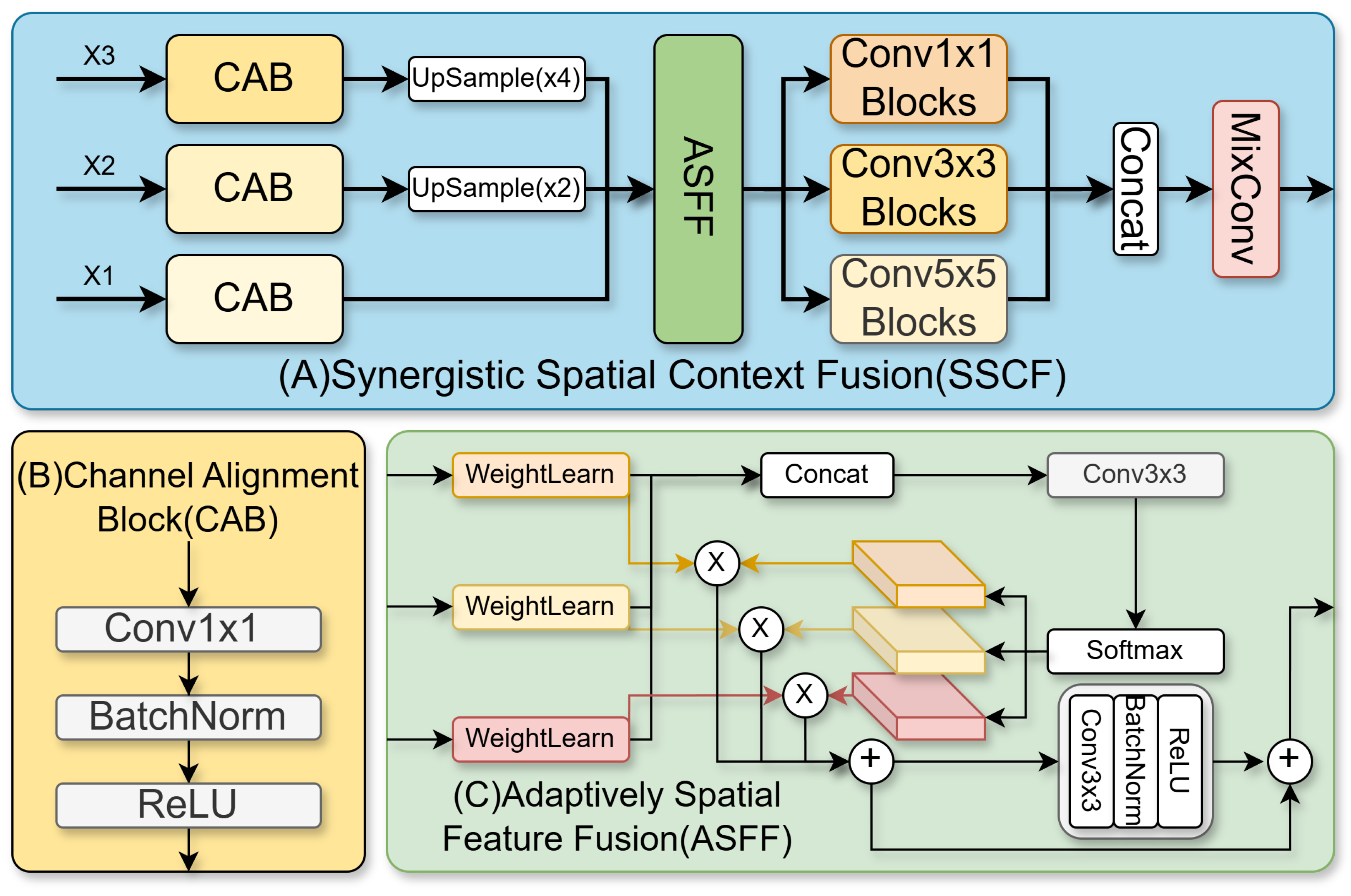

- We introduce the Synergistic Spatial Context Fusion (SSCF) module. It adaptively integrates features from all scales to generate an information-rich and highly unified representation for the final detection head.

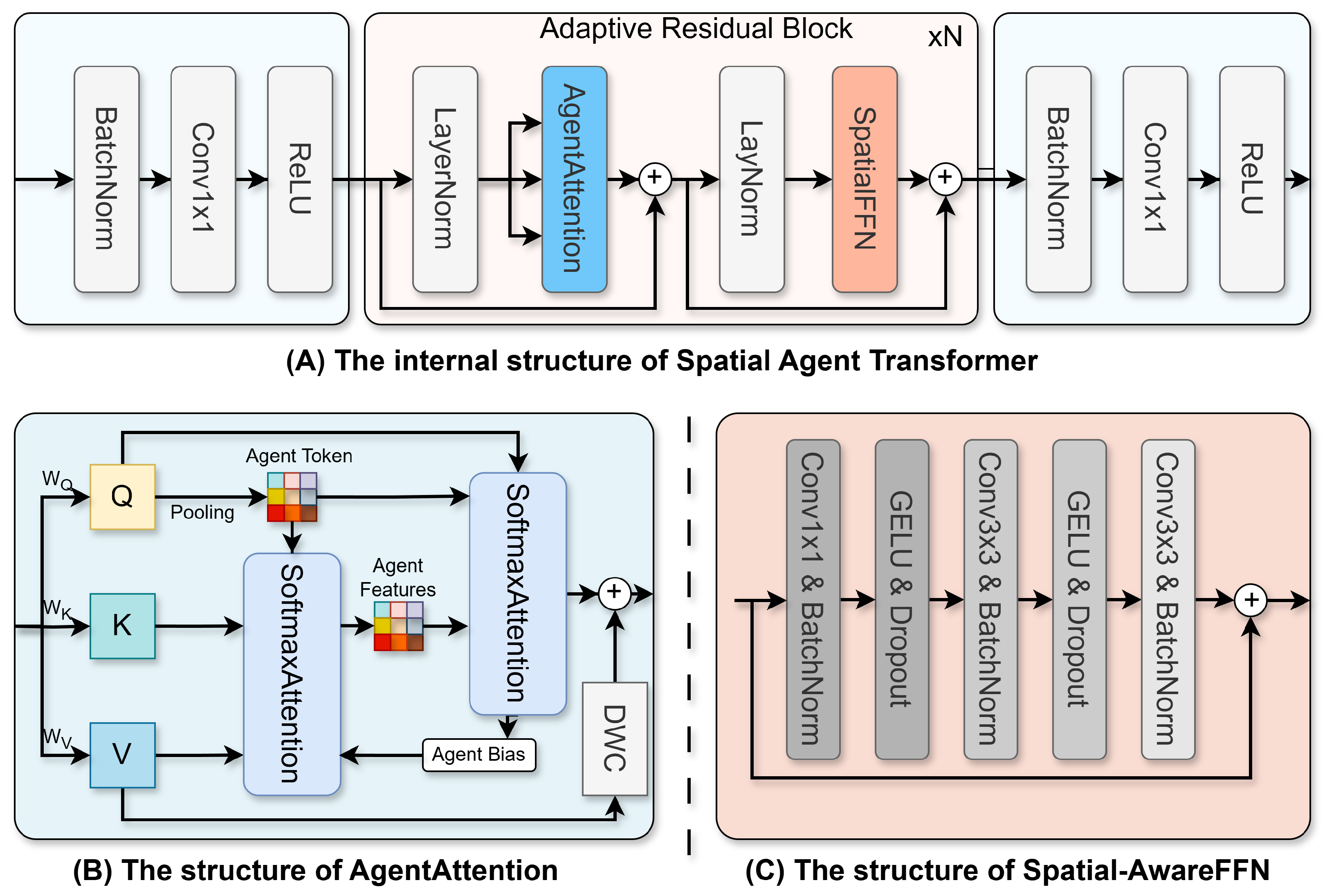

- We propose the Spatial Agent Transformer (SAT), which models global context and long-range dependencies. This capability is crucial for distinguishing heterogeneous targets, such as ships and vehicles, in complex scenes.
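
The idea of adaptively integrating features from several scales, as described for SSCF above, can be illustrated with a softmax-weighted fusion at a single spatial location. This is only a minimal sketch in the spirit of learned spatial fusion [32], not the authors' SSCF implementation. The function names, the three-scale setup, and the logit values are illustrative assumptions.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_scales(features, logits):
    """Fuse per-scale feature vectors at one spatial location.

    features: list of equal-length vectors, one per scale, assumed to be
              already resized to a common resolution.
    logits:   one fusion logit per scale (hypothetical; a real network
              would predict these with a small learned layer).
    """
    weights = softmax(logits)
    dim = len(features[0])
    fused = [sum(w * f[c] for w, f in zip(weights, features)) for c in range(dim)]
    return fused, weights


# Hypothetical 2-channel features from three pyramid levels at one pixel.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fused, weights = fuse_scales(features, logits=[0.0, 0.0, 0.0])
print(weights, fused)
```

With equal logits every scale contributes one third. Raising one logit shifts the fused vector toward that scale, which is the sense in which such a fusion is adaptive.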

2. Related Works

2.1. General-Purpose Object Detection

2.2. UAV Object Detection

3. Methods

3.1. Basic Components of AUP-DETR

3.2. Fusion with Streamlined Hybrid Core (Fusion-SHC)

3.3. Synergistic Spatial Context Fusion (SSCF)

3.4. Spatial Agent Transformer (SAT)

4. Experiments and Discussion

4.1. The Datasets

4.2. Experimental Setup

4.2.1. Experimental Environment Configuration

4.2.2. Evaluation Metrics

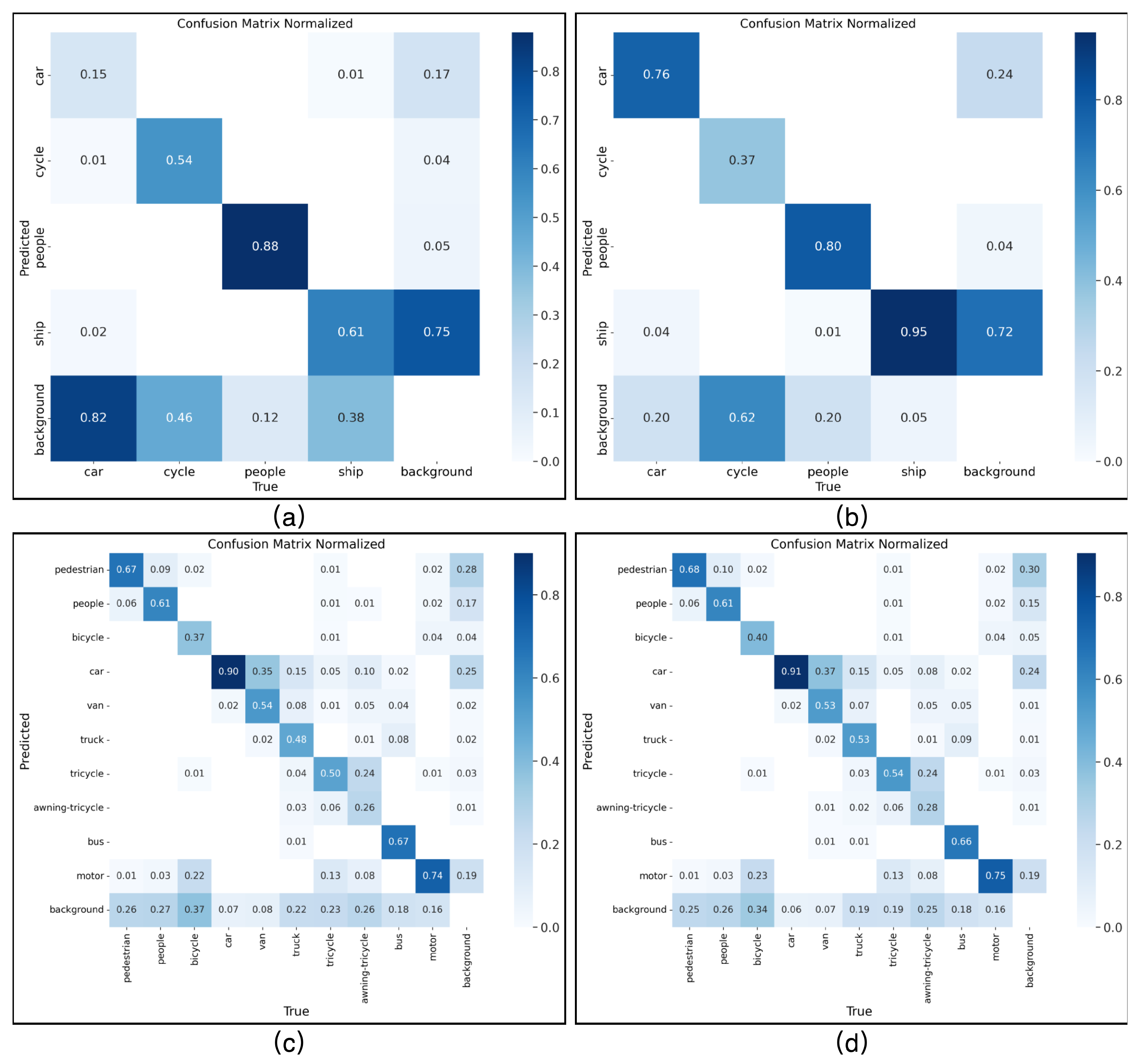
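
The result tables in this paper report precision (P), recall (R), and mAP50. As a reminder of how precision and recall at an IoU threshold of 0.5 are commonly computed, the following sketch matches predictions to ground-truth boxes greedily for one class in one image. It assumes the standard definitions and may differ from the exact evaluation protocol used by the authors. The helper names and the example boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thr=0.5):
    """preds: list of (score, box); gts: list of boxes.

    Predictions are visited in descending score order and each one is
    matched to the best still-unmatched ground-truth box.
    """
    matched = set()
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            v = iou(box, gt)
            if v > best_iou:
                best_j, best_iou = j, v
        if best_j >= 0 and best_iou >= iou_thr:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp
    fn = len(gts) - tp
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall


# Hypothetical example: two objects, three detections (one spurious).
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
p, r = precision_recall(preds, gts)
print(p, r)
```

mAP50 extends this by sweeping the confidence threshold, integrating the resulting precision-recall curve per class, and averaging over classes.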

4.3. Ablation Experiment

4.4. Evaluation of the Number of Adaptive Residual Blocks in SAT

4.5. Evaluation of the Number of Attention Heads in SAT

4.6. A Comparison of the Results for Different Datasets

4.6.1. The Results on UCA-Det

4.6.2. The Results on VisDrone

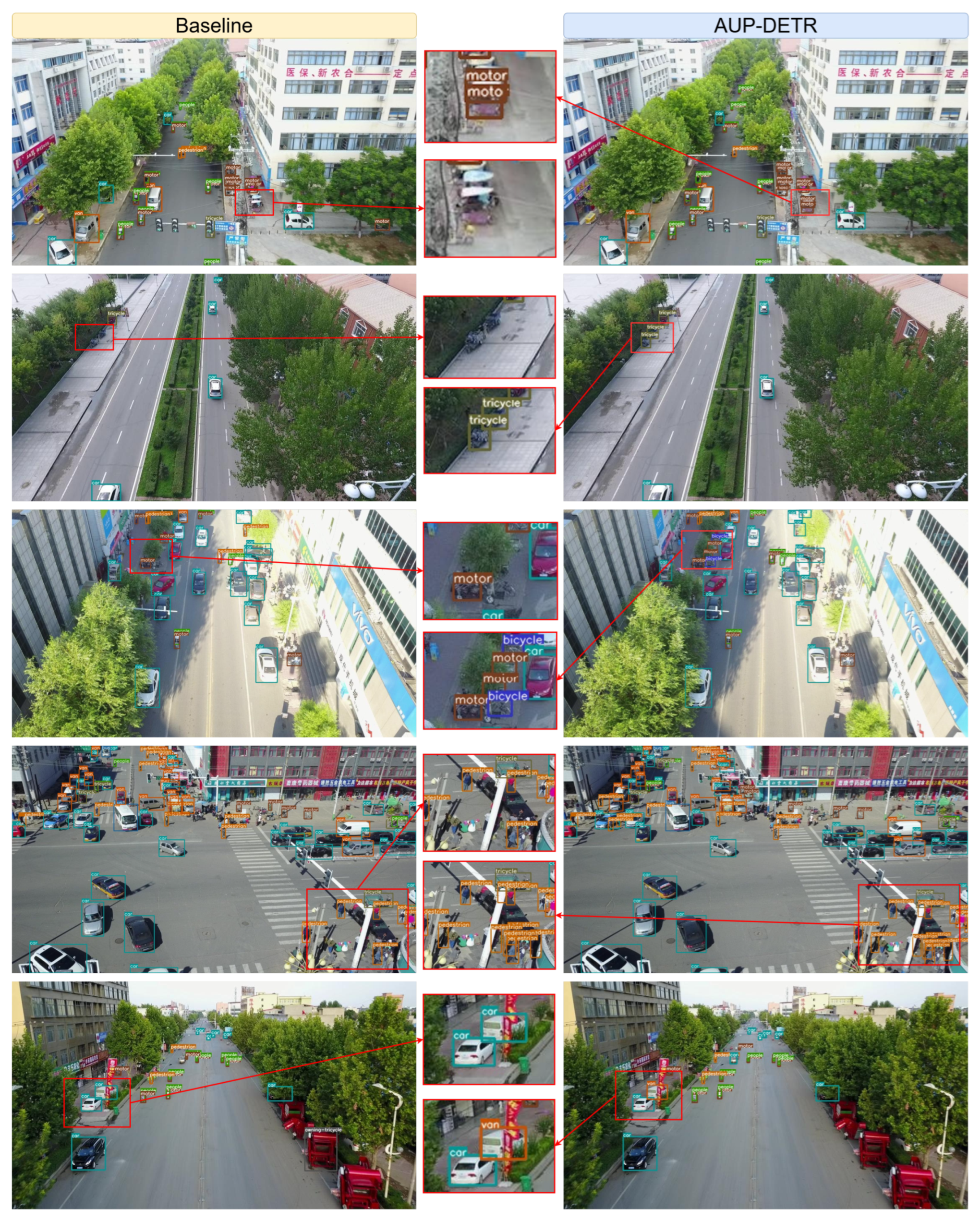

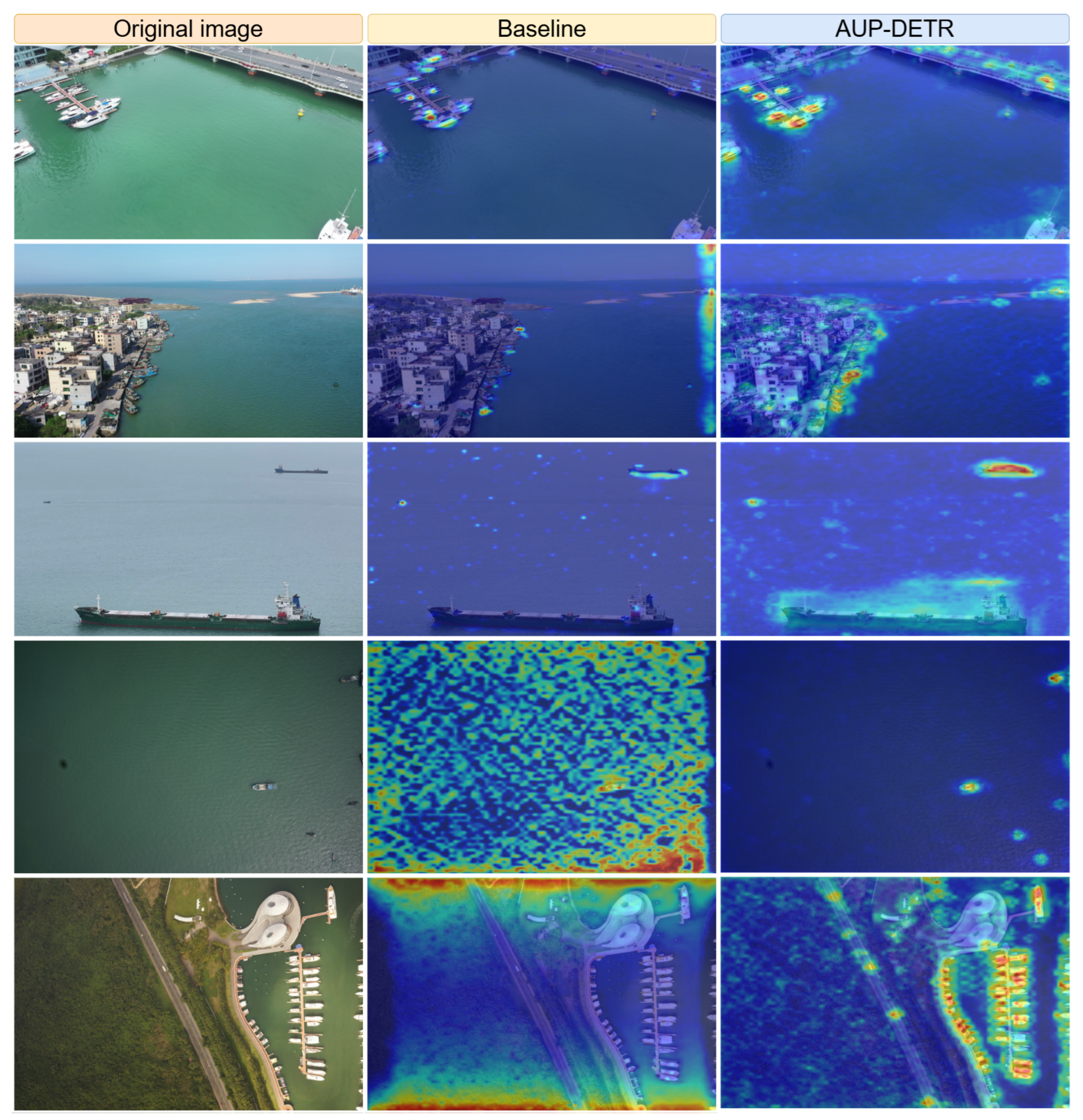

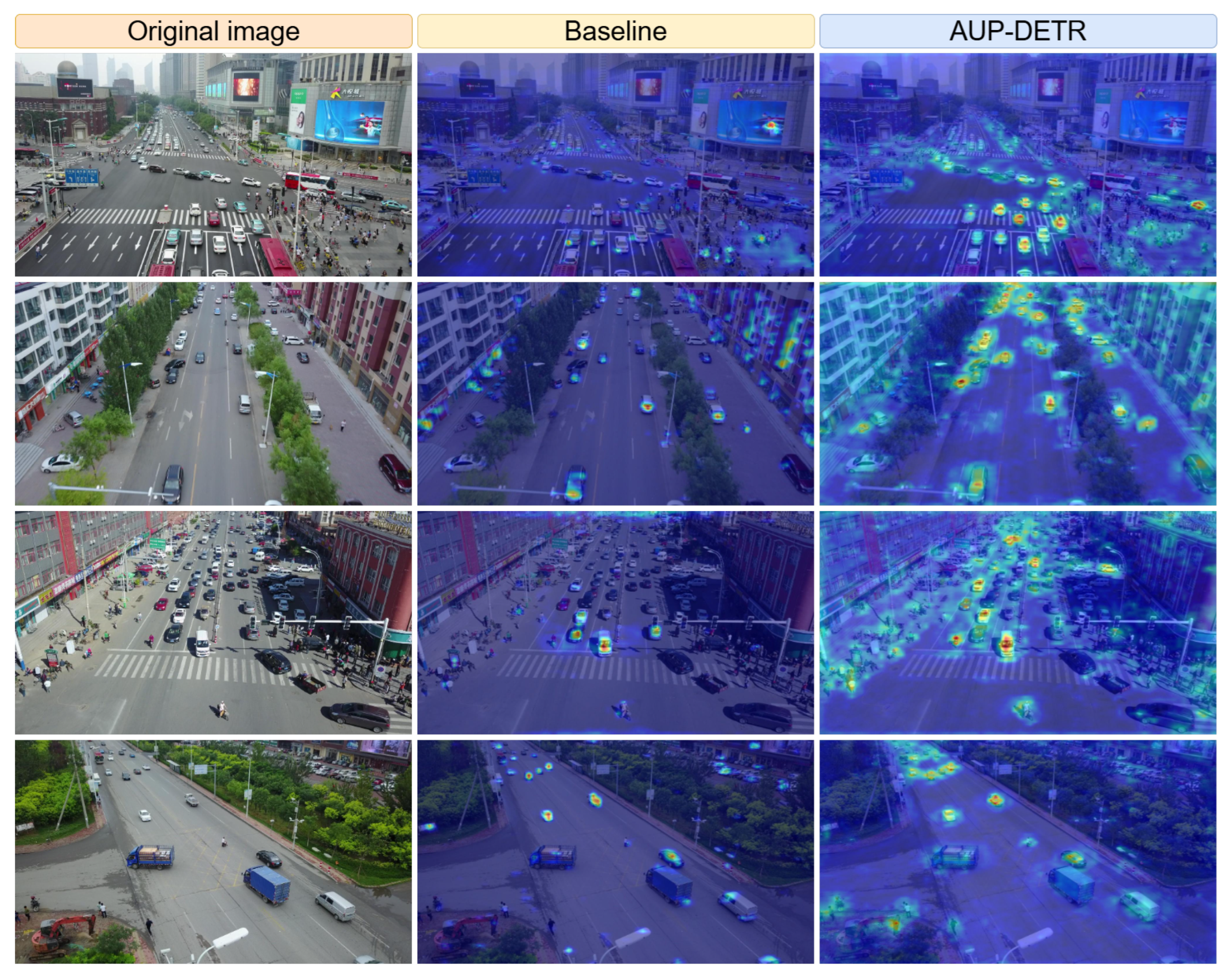

4.7. Visual Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Jiang, Y.; Li, X.; Zhu, G.; Li, H.; Deng, J.; Han, K.; Shen, C.; Shi, Q.; Zhang, R. Integrated sensing and communication for low altitude economy: Opportunities and challenges. IEEE Commun. Mag. 2025. Early Access.

- [2] Zhou, Y. Unmanned aerial vehicles based low-altitude economy with lifecycle techno-economic-environmental analysis for sustainable and smart cities. J. Clean. Prod. 2025, 499, 145050.

- [3] Huang, C.; Fang, S.; Wu, H.; Wang, Y.; Yang, Y. Low-altitude intelligent transportation: System architecture, infrastructure, and key technologies. J. Ind. Inf. Integr. 2024, 42, 100694.

- [4] Song, Y.; Zeng, Y.; Yang, Y.; Ren, Z.; Cheng, G.; Xu, X.; Xu, J.; Jin, S.; Zhang, R. An overview of cellular ISAC for low-altitude UAV: New opportunities and challenges. IEEE Commun. Mag. 2025. Early Access.

- [5] Bai, Y.; Zhao, H.; Zhang, X.; Chang, Z.; Jäntti, R.; Yang, K. Toward autonomous multi-UAV wireless network: A survey of reinforcement learning-based approaches. IEEE Commun. Surv. Tutor. 2023, 25, 3038–3067.

- [6] Wang, J.; Zhou, K.; Xing, W.; Li, H.; Yang, Z. Applications, evolutions, and challenges of drones in maritime transport. J. Mar. Sci. Eng. 2023, 11, 2056.

- [7] Leng, J.; Ye, Y.; Mo, M.; Gao, C.; Gan, J.; Xiao, B.; Gao, X. Recent advances for aerial object detection: A survey. ACM Comput. Surv. 2024, 56, 1–36.

- [8] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- [9] Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- [10] Ding, J.; Xue, N.; Xia, G.S.; Bai, X.; Yang, W.; Yang, M.Y.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; et al. Object detection in aerial images: A large-scale benchmark and challenges. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7778–7796.

- [11] Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399.

- [12] Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The unmanned aerial vehicle benchmark: Object detection and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386.

- [13] Jiao, Z.; Wang, M.; Qiao, S.; Zhang, Y.; Huang, Z. Transformer-based Object Detection in Low-Altitude Maritime UAV Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4210413.

- [14] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- [15] Hosang, J.; Benenson, R.; Schiele, B. Learning non-maximum suppression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4507–4515.

- [16] Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- [17] Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

- [18] Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- [19] Zhang, H.; Liu, K.; Gan, Z.; Zhu, G.N. UAV-DETR: Efficient end-to-end object detection for unmanned aerial vehicle imagery. arXiv 2025, arXiv:2501.01855.

- [20] Hua, W.; Chen, Q. A survey of small object detection based on deep learning in aerial images. Artif. Intell. Rev. 2025, 58, 162.

- [21] Liu, C.; Gao, G.; Huang, Z.; Hu, Z.; Liu, Q.; Wang, Y. YOLC: You only look clusters for tiny object detection in aerial images. IEEE Trans. Intell. Transp. Syst. 2024, 25, 13863–13875.

- [22] Ye, T.; Qin, W.; Li, Y.; Wang, S.; Zhang, J.; Zhao, Z. Dense and small object detection in UAV-vision based on a global-local feature enhanced network. IEEE Trans. Instrum. Meas. 2022, 71, 2515513.

- [23] Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- [24] Bashir, S.M.A.; Wang, Y. Small object detection in remote sensing images with residual feature aggregation-based super-resolution and object detector network. Remote Sens. 2021, 13, 1854.

- [25] Zhang, L.; Xing, Z.; Wang, X. Background instance-based copy-paste data augmentation for object detection. Electronics 2023, 12, 3781.

- [26] Qian, W.; Yang, X.; Peng, S.; Zhang, X.; Yan, J. RSDet++: Point-based modulated loss for more accurate rotated object detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7869–7879.

- [27] Han, W.; Li, J.; Wang, S.; Wang, Y.; Yan, J.; Fan, R.; Zhang, X.; Wang, L. A context-scale-aware detector and a new benchmark for remote sensing small weak object detection in unmanned aerial vehicle images. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102966.

- [28] Li, X.; Diao, W.; Mao, Y.; Gao, P.; Mao, X.; Li, X.; Sun, X. OGMN: Occlusion-guided multi-task network for object detection in UAV images. ISPRS J. Photogramm. Remote Sens. 2023, 199, 242–257.

- [29] Min, X.; Zhou, W.; Hu, R.; Wu, Y.; Pang, Y.; Yi, J. LWUAVDet: A lightweight UAV object detection network on edge devices. IEEE Internet Things J. 2024, 11, 24013–24023.

- [30] Ye, T.; Qin, W.; Zhao, Z.; Gao, X.; Deng, X.; Ouyang, Y. Real-time object detection network in UAV-vision based on CNN and transformer. IEEE Trans. Instrum. Meas. 2023, 72, 2505713.

- [31] Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3974–3983.

- [32] Liu, S.; Huang, D.; Wang, Y. Learning spatial fusion for single-shot object detection. arXiv 2019, arXiv:1911.09516.

- [33] Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- [34] Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning what you want to learn using programmable gradient information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 1–21.

- [35] Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- [36] Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- [37] PaddlePaddle Authors. PaddleDetection, Object Detection and Instance Segmentation Toolkit Based on PaddlePaddle. 2019. Available online: https://github.com/PaddlePaddle/PaddleDetection (accessed on 24 November 2025).

- [38] Yang, C.; Huang, Z.; Wang, N. QueryDet: Cascaded sparse query for accelerating high-resolution small object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 13668–13677.

- [39] Yang, F.; Fan, H.; Chu, P.; Blasch, E.; Ling, H. Clustered object detection in aerial images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8311–8320.

- [40] Xu, C.; Ding, J.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.S. Dynamic coarse-to-fine learning for oriented tiny object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7318–7328.

- [41] Tang, S.; Zhang, S.; Fang, Y. HIC-YOLOv5: Improved YOLOv5 for small object detection. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 6614–6619.

- [42] Roh, B.; Shin, J.; Shin, W.; Kim, S. Sparse DETR: Efficient end-to-end object detection with learnable sparsity. arXiv 2021, arXiv:2111.14330.

| Hyperparameter | Value |
|---|---|
| Total Epochs | 400 |
| Patience | 20 |
| Batch Size | 4 |
| Input Image Size | 640 × 640 |
| Optimizer | AdamW |
| Initial Learning Rate | 0.0001 |
| Weight Decay | 0.0001 |
| Warmup Steps | 2000 |
| Warmup Momentum | 0.8 |
| Horizontal Flip | 0.5 |
| Mosaic | 1 |
| Mixup | 0.2 |
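
The hyperparameters listed above can be collected into a single configuration object, as sketched below. The field names are hypothetical and do not come from the authors' code. The linear warmup shape and the reading of "Patience" as an early-stopping window (training stops when the validation metric has not improved for 20 epochs) are assumptions, since the table gives only the values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Values transcribed from the hyperparameter table; names are illustrative."""
    epochs: int = 400
    patience: int = 20
    batch_size: int = 4
    image_size: int = 640
    optimizer: str = "AdamW"
    lr0: float = 1e-4
    weight_decay: float = 1e-4
    warmup_steps: int = 2000
    warmup_momentum: float = 0.8
    fliplr: float = 0.5
    mosaic: float = 1.0
    mixup: float = 0.2


def warmup_lr(step, cfg):
    """Learning rate at a given step, assuming linear warmup from 0 to lr0."""
    if step >= cfg.warmup_steps:
        return cfg.lr0
    return cfg.lr0 * step / cfg.warmup_steps


def should_stop(history, patience):
    """Early stopping check (assumed semantics of 'Patience').

    history holds one validation score per finished epoch. Returns True
    when the best score is at least `patience` epochs old.
    """
    if len(history) <= patience:
        return False
    best_epoch = max(range(len(history)), key=history.__getitem__)
    return len(history) - 1 - best_epoch >= patience


cfg = TrainConfig()
print(warmup_lr(1000, cfg), warmup_lr(5000, cfg))
```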

| Ablation | Fusion-SHC | SSCF | SAT | P (%) | R (%) | mAP95 (%) | mAP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | ✗ | ✗ | ✗ | 77.08 | 60.57 | 34.84 | 65.27 | 21.3 | 72.5 |
| 2 | ✓ | ✗ | ✗ | 66.47 | 56.27 | 33.14 | 60.02 | 21.1 | 71.3 |
| 3 | ✓ | ✓ | ✗ | 78.56 | 57.39 | 35.10 | 63.15 | 21.6 | 82.6 |
| 4 | ✓ | ✓ | ✓ | 82.24 | 63.74 | 37.80 | 69.68 | 22.7 | 83.6 |
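
The 4.41-point mAP50 gain quoted in the Highlights follows directly from rows 1 and 4 of the ablation table. The short sketch below recomputes the differences against the baseline row for each configuration. The dictionary layout and key names are illustrative, and the numbers are transcribed from the table.

```python
# Rows transcribed from the ablation table (row 1 is the baseline).
ABLATION = {
    1: {"mAP50": 65.27, "mAP95": 34.84, "params_M": 21.3, "flops_G": 72.5},
    2: {"mAP50": 60.02, "mAP95": 33.14, "params_M": 21.1, "flops_G": 71.3},
    3: {"mAP50": 63.15, "mAP95": 35.10, "params_M": 21.6, "flops_G": 82.6},
    4: {"mAP50": 69.68, "mAP95": 37.80, "params_M": 22.7, "flops_G": 83.6},
}


def delta_vs_baseline(row, key, base=1):
    """Difference between a configuration and the baseline, rounded to 2 decimals."""
    return round(ABLATION[row][key] - ABLATION[base][key], 2)


for row in (2, 3, 4):
    print(row, {k: delta_vs_baseline(row, k) for k in ABLATION[row]})
```

In these numbers, rows 2 and 3 sit below the baseline in mAP50, and only the full combination exceeds it, at a cost of 1.4 M additional parameters and 11.1 additional GFLOPs.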

| ARB Layer | P (%) | R (%) | mAP95 (%) | mAP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| 1 | 81.52 | 60.92 | 34.66 | 63.92 | 22.1 | 83.1 |
| 2 | 82.24 | 63.74 | 37.80 | 69.68 | 22.7 | 83.6 |
| 3 | 76.29 | 61.01 | 36.07 | 66.66 | 23.4 | 84.1 |
| 4 | 72.02 | 61.27 | 35.81 | 65.93 | 24.0 | 84.6 |
| 5 | 70.00 | 61.95 | 34.58 | 65.01 | 24.6 | 85.0 |
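
To read the trade-off in the table above, it helps to look at the marginal cost of each additional Adaptive Residual Block (ARB). The following sketch computes the parameter and FLOP increments between consecutive rows and picks the setting with the highest mAP50. The tuple layout is an illustrative choice, and the values are transcribed from the table.

```python
# (ARB layers, mAP50 %, Params M, FLOPs G) transcribed from the table.
ARB = [
    (1, 63.92, 22.1, 83.1),
    (2, 69.68, 22.7, 83.6),
    (3, 66.66, 23.4, 84.1),
    (4, 65.93, 24.0, 84.6),
    (5, 65.01, 24.6, 85.0),
]

# Setting with the highest mAP50.
best_layers = max(ARB, key=lambda r: r[1])[0]

# Increment in (Params M, FLOPs G) when going from n-1 to n blocks.
marginal = [
    (cur[0], round(cur[2] - prev[2], 1), round(cur[3] - prev[3], 1))
    for prev, cur in zip(ARB, ARB[1:])
]

print(best_layers)
print(marginal)
```

Each extra block adds roughly 0.6 to 0.7 M parameters and about 0.5 GFLOPs, while mAP50 peaks at two blocks and declines afterwards.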

| Attention Head | P (%) | R (%) | mAP95 (%) | mAP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| 2 | 68.83 | 60.75 | 35.21 | 62.61 | 22.7 | 83.6 |
| 4 | 74.11 | 59.74 | 34.78 | 62.98 | 22.7 | 83.6 |
| 8 | 82.24 | 63.74 | 37.80 | 69.68 | 22.7 | 83.6 |
| 16 | 75.20 | 57.51 | 34.73 | 61.31 | 22.7 | 83.6 |
| 32 | 64.26 | 60.07 | 33.37 | 61.10 | 22.7 | 83.6 |
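
The table above shows identical Params and FLOPs for every head count. One plausible explanation, assuming SAT uses standard multi-head attention in which a fixed embedding width is split evenly across heads, is that the projection sizes do not depend on the number of heads. The sketch below counts the projection parameters under that assumption. The embedding width of 256 is hypothetical and is not stated in this section.

```python
def mha_params(d_model, num_heads):
    """Parameter count of standard multi-head attention projections (with biases).

    Returns (total_params, head_dim). Assumes d_model is split evenly
    across heads, which is the usual convention.
    """
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    head_dim = d_model // num_heads
    inner = num_heads * head_dim  # always equals d_model
    qkv = 3 * (d_model * inner + inner)  # query, key, value projections
    out = inner * d_model + d_model      # output projection
    return qkv + out, head_dim


D_MODEL = 256  # hypothetical embedding width, for illustration only
counts = {h: mha_params(D_MODEL, h) for h in (2, 4, 8, 16, 32)}
for heads, (params, head_dim) in counts.items():
    print(heads, params, head_dim)
```

Under this assumption only the per-head width changes (128 down to 8 for a width of 256), so the accuracy differences in the table would reflect how the representation is partitioned rather than model size.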

| Model | P (%) | R (%) | mAP95 (%) | mAP50 (%) | APS (%) | APM (%) | APL (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv8-M [33] | 73.42 | 52.60 | 31.42 | 55.73 | 18.0 | 35.7 | 56.8 | 25.9 | 78.9 |
| YOLOv8-L [33] | 73.02 | 49.39 | 32.12 | 55.87 | 19.5 | 36.5 | 60.0 | 43.7 | 165.2 |
| YOLOv8-X [33] | 60.53 | 48.32 | 31.11 | 53.98 | 18.3 | 35.0 | 51.8 | 68.2 | 257.8 |
| YOLOv9-S [34] | 78.02 | 44.34 | 26.09 | 48.60 | 13.4 | 30.9 | 40.4 | 7.2 | 26.9 |
| YOLOv9-M [34] | 60.88 | 51.57 | 29.95 | 54.10 | 16.5 | 35.2 | 55.0 | 20.1 | 76.8 |
| YOLOv10-M [35] | 65.74 | 45.45 | 31.08 | 53.66 | 17.4 | 37.7 | 48.8 | 15.4 | 59.1 |
| YOLOv10-L [35] | 74.63 | 47.79 | 29.98 | 53.23 | 16.1 | 34.3 | 53.4 | 24.4 | 120.4 |
| YOLOv10-X [35] | 68.74 | 50.97 | 30.79 | 55.21 | 20.2 | 35.5 | 50.5 | 29.5 | 160.4 |
| YOLOv11-N [36] | 56.55 | 35.67 | 21.18 | 40.20 | 9.5 | 25.6 | 40.4 | 2.6 | 6.5 |
| YOLOv11-S [36] | 56.31 | 50.38 | 29.69 | 53.48 | 16.8 | 34.3 | 54.6 | 9.4 | 21.5 |
| YOLOv11-M [36] | 67.14 | 52.83 | 30.77 | 56.06 | 19.1 | 34.8 | 57.4 | 20.1 | 68.0 |
| YOLOv11-L [36] | 65.40 | 56.50 | 33.22 | 59.86 | 19.3 | 39.8 | 54.8 | 25.3 | 86.9 |
| YOLOv11-X [36] | 62.62 | 57.78 | 33.82 | 59.10 | 20.0 | 40.6 | 64.7 | 57.0 | 194.9 |
| RT-DETR-R18 [18] | 66.96 | 65.96 | 35.22 | 66.62 | 29.3 | 37.1 | 35.8 | 26.1 | 67.6 |
| RT-DETR-R50 [18] | 71.06 | 54.95 | 35.40 | 62.84 | 28.2 | 38.9 | 36.4 | 42.0 | 129.6 |
| UAV-DETR-R18 [19] | 77.08 | 60.57 | 34.84 | 65.27 | 29.1 | 36.0 | 41.4 | 21.3 | 72.5 |
| UAV-DETR-R50 [19] | 78.79 | 61.00 | 36.90 | 64.91 | 31.4 | 39.2 | 37.3 | 44.6 | 161.4 |
| AUP-DETR (Ours) | 82.24 | 63.74 | 37.80 | 69.68 | 32.0 | 38.0 | 42.3 | 22.7 | 83.6 |
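
One way to read the accuracy and cost columns of the comparison above together is to extract the Pareto front: the models for which no other model is both cheaper in FLOPs and more accurate in mAP50. The sketch below does this for the FLOPs and mAP50 columns. The function name is illustrative, the choice of these two columns is an assumption about what matters most, and the values are transcribed from the table.

```python
# (model, FLOPs G, mAP50 %) transcribed from the comparison table.
MODELS = [
    ("YOLOv8-M", 78.9, 55.73), ("YOLOv8-L", 165.2, 55.87),
    ("YOLOv8-X", 257.8, 53.98), ("YOLOv9-S", 26.9, 48.60),
    ("YOLOv9-M", 76.8, 54.10), ("YOLOv10-M", 59.1, 53.66),
    ("YOLOv10-L", 120.4, 53.23), ("YOLOv10-X", 160.4, 55.21),
    ("YOLOv11-N", 6.5, 40.20), ("YOLOv11-S", 21.5, 53.48),
    ("YOLOv11-M", 68.0, 56.06), ("YOLOv11-L", 86.9, 59.86),
    ("YOLOv11-X", 194.9, 59.10), ("RT-DETR-R18", 67.6, 66.62),
    ("RT-DETR-R50", 129.6, 62.84), ("UAV-DETR-R18", 72.5, 65.27),
    ("UAV-DETR-R50", 161.4, 64.91), ("AUP-DETR", 83.6, 69.68),
]


def pareto_front(models):
    """Models not dominated in (lower FLOPs, higher mAP50), ordered by FLOPs."""
    front = []
    best_map = float("-inf")
    # Sort by cost; on equal cost, visit the more accurate model first.
    for name, flops, map50 in sorted(models, key=lambda m: (m[1], -m[2])):
        if map50 > best_map:
            front.append(name)
            best_map = map50
    return front


front = pareto_front(MODELS)
print(front)
```

On these two columns, AUP-DETR is the most accurate point on the front, and every model with a larger FLOP budget in the table reaches a lower mAP50.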

| Model | mAP95 (%) | mAP50 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|
| YOLOv8-M [33] | 24.6 | 40.7 | 25.9 | 78.9 |
| YOLOv8-L [33] | 26.1 | 42.7 | 43.7 | 165.2 |
| YOLOv8-X [33] | 29.1 | 46.7 | 68.2 | 257.8 |
| YOLOv9-S [34] | 22.7 | 38.3 | 7.2 | 26.9 |
| YOLOv9-M [34] | 25.2 | 42.0 | 20.1 | 76.8 |
| YOLOv10-M [35] | 24.5 | 40.5 | 15.4 | 59.1 |
| YOLOv10-L [35] | 26.3 | 43.1 | 24.4 | 120.4 |
| YOLOv10-X [35] | 29.6 | 47.1 | 29.5 | 160.4 |
| YOLOv11-N [36] | 18.8 | 31.9 | 2.6 | 6.5 |
| YOLOv11-S [36] | 23.0 | 38.7 | 9.4 | 21.5 |
| YOLOv11-M [36] | 25.9 | 43.1 | 20.1 | 68.0 |
| YOLOv11-L [36] | 29.4 | 47.2 | 25.3 | 86.9 |
| YOLOv11-X [36] | 31.0 | 49.2 | 57.0 | 194.9 |
| PP-YOLOE-P2-Alpha-l [37] | 30.1 | 48.9 | 54.1 | 111.4 |
| QueryDet [38] | 28.3 | 48.1 | 33.9 | 212.0 |
| ClusDet [39] | 26.7 | 50.6 | 30.2 | 207.0 |
| DCFL [40] | – | 32.1 | 36.1 | 157.8 |
| HIC-YOLOv5 [41] | 26.0 | 44.3 | 9.4 | 31.2 |
| DETR [9] | 24.1 | 40.1 | 60.0 | 187.0 |
| Deformable DETR [16] | 27.1 | 42.2 | 40.0 | 173.0 |
| Sparse DETR [42] | 27.3 | 42.5 | 40.9 | 121.0 |
| RT-DETR-R18 [18] | 26.7 | 44.6 | 26.1 | 67.6 |
| RT-DETR-R50 [18] | 28.4 | 47.0 | 42.0 | 129.6 |
| UAV-DETR-EV2 [19] | 28.7 | 47.5 | 13.0 | 42.9 |
| UAV-DETR-R18 [19] | 29.8 | 48.8 | 21.3 | 72.5 |
| UAV-DETR-R50 [19] | 31.5 | 51.1 | 44.6 | 161.4 |
| AUP-DETR (Ours) | 29.9 | 48.5 | 22.7 | 83.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, J.; Liu, X.; Li, X.; Hu, Y. AUP-DETR: A Foundational UAV Object Detection Framework for Enabling the Low-Altitude Economy. Drones 2025, 9, 822. https://doi.org/10.3390/drones9120822
