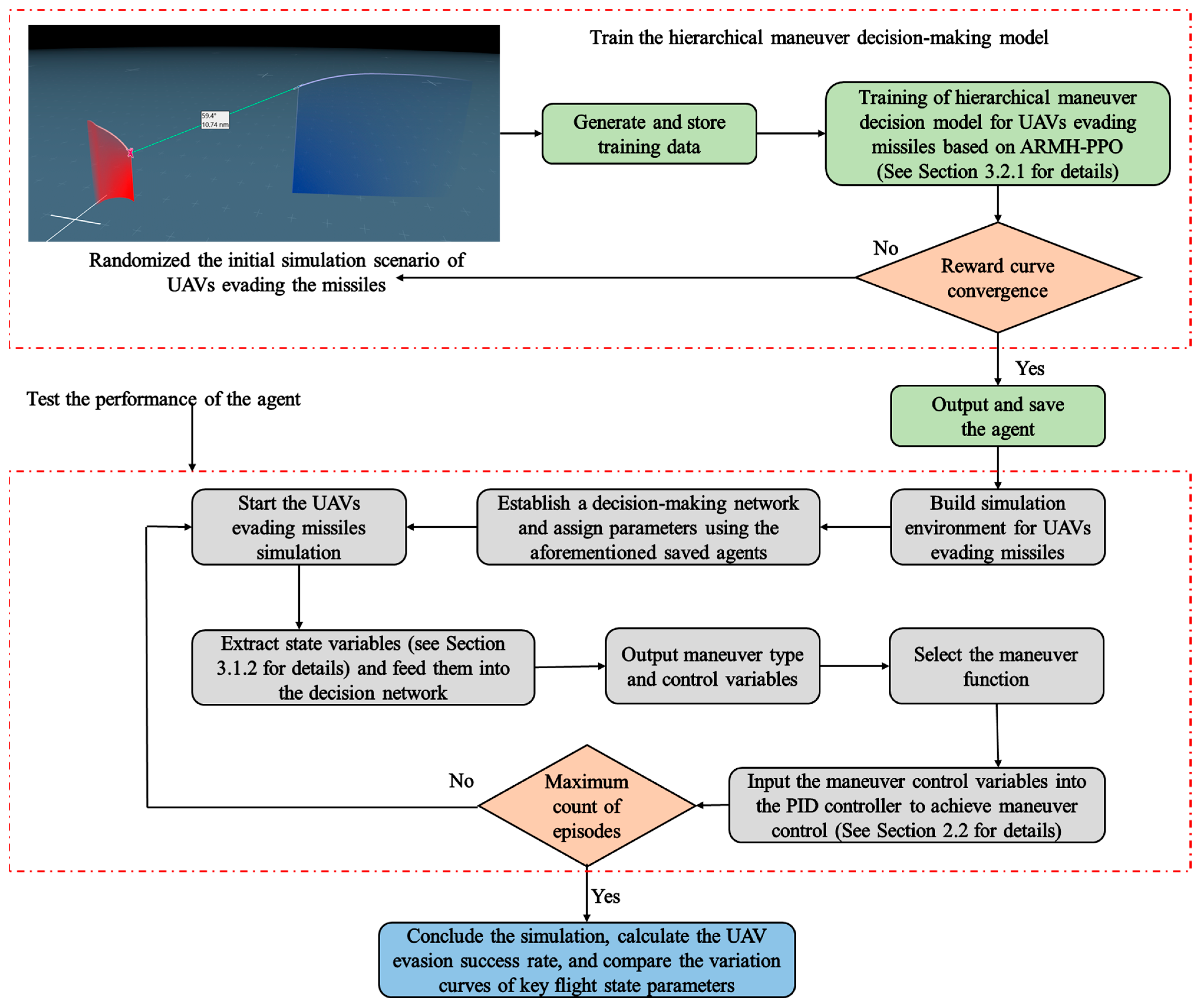

Because the ARMH-PPO algorithm extends the basic PPO framework, this section first reviews the fundamental structure of PPO and then introduces the key improvements made in ARMH-PPO.

3.1.1. The Basic Structure of the PPO Algorithm

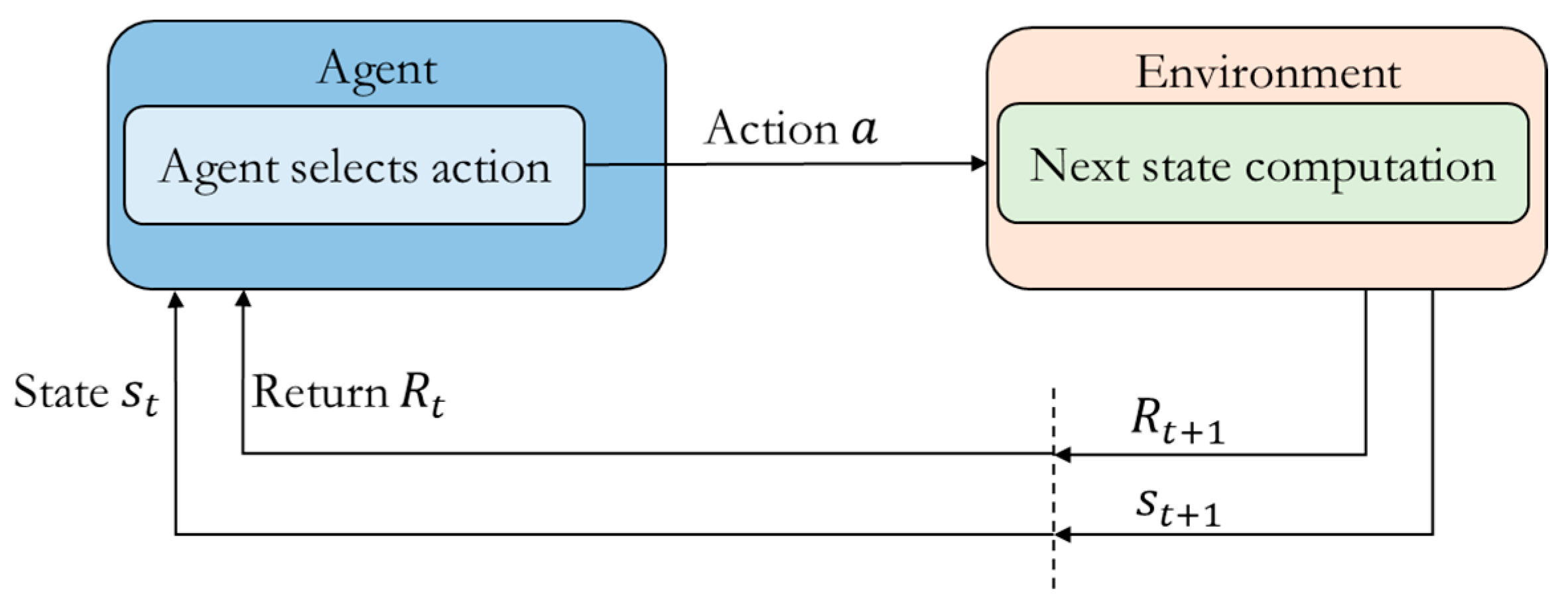

Reinforcement learning (RL) involves five main components: the agent, the environment, the state $S$, the action $A$, and the reward $R$. At time step $t$, the agent generates action $a_t$ and interacts with the environment. After executing the action, the agent's state transitions from $s_t$ to $s_{t+1}$, and the agent receives a reward $r_t$ from the environment. Through this process, the agent continually updates its policy while interacting with the environment, and after a sufficient number of interactions it acquires an optimized action policy. The agent-environment interaction process is illustrated in Figure 4.
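The interaction loop described above can be sketched in a few lines of Python. This is only an illustration: the toy environment `ToyEnv`, its reward rule, and the random policy are hypothetical stand-ins and are not part of the paper's UAV simulation.

```python
import random


class ToyEnv:
    """Hypothetical 1-D environment: the state is an integer position and
    the episode ends when the position reaches +3 or -3."""

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 or +1; the reward is 1 at the right boundary, else 0
        self.state += action
        done = abs(self.state) >= 3
        reward = 1.0 if self.state >= 3 else 0.0
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Roll out one episode and return the transitions (s_t, a_t, r_t, s_{t+1})."""
    trajectory = []
    s = env.reset()
    for _ in range(max_steps):
        a = policy(s)                   # agent chooses a_t from s_t
        s_next, r, done = env.step(a)   # environment returns s_{t+1} and r_t
        trajectory.append((s, a, r, s_next))
        s = s_next
        if done:
            break
    return trajectory


random.seed(0)
traj = run_episode(ToyEnv(), lambda s: random.choice([-1, 1]))
```

An RL algorithm would use the collected transitions to improve the policy before the next rollout.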

The computational process of RL is a continual search for better policies, where a policy is a mapping from states to actions. The following formula gives the probability of selecting action $a$ in state $s$:

$$\pi(a \mid s) = P(A_t = a \mid S_t = s)$$

By employing RL algorithms, our objective is to maximize the action value of the corresponding state:

Traditional RL methods store state values and action values in tabular form. This allows the required values to be looked up quickly for simple problems, but it does not scale to complex scenarios. Deep learning integrates feature learning into the model, giving it self-learning capability and robustness to environmental changes, which makes it suitable for nonlinear models. However, deep learning cannot achieve unbiased estimation of the regularities underlying the data, and it requires large labeled datasets and repeated computation to reach high prediction accuracy. For complex nonlinear problems, it is therefore feasible to combine deep learning with RL into deep reinforcement learning (DRL) algorithms.

PPO is one of the most widely used DRL algorithms today. Building upon the trust region policy optimization (TRPO) algorithm, it optimizes the following clipped surrogate objective function:

$$L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta), 1-\varepsilon, 1+\varepsilon\right)\hat{A}_t\right)\right]$$

Here, $\varepsilon$ is the clipping parameter, and $r_t(\theta)$ is the probability ratio between the new and old policies introduced by importance sampling, calculated as follows:

$$r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{old}}(a_t \mid s_t)}$$

$\hat{A}_t$ is the estimate of the advantage function, which indicates how much the value of choosing action $a_t$ exceeds the average value under the current state $s_t$. The advantage function is calculated as follows:

$$A(s_t, a_t) = Q(s_t, a_t) - V(s_t)$$

Since the true value of the advantage function is unknown, it must be estimated. References [28,29] use the state value function $V(s_t)$ and generalized advantage estimation (GAE) to estimate the advantage function. Reference [30] employs finite-horizon estimators (FHE) for the same purpose. Considering the characteristics of sample sequences and network update mechanisms, this paper uses GAE to estimate the action advantage function, calculated as follows:

$$\hat{A}_t = \sum_{l=0}^{T-t-1} (\gamma\lambda)^{l}\,\delta_{t+l}$$

where $\gamma$ is the discount factor and $\lambda$ is the GAE parameter. When $\lambda = 1$, the estimated advantage functions in GAE and FHE are equivalent. $\delta_t$ is the one-step temporal-difference error, calculated as follows:

$$\delta_t = r_t + \gamma V(s_{t+1}) - V(s_t)$$
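As a rough illustration of how GAE accumulates one-step temporal-difference errors, the following Python sketch computes truncated GAE over a single trajectory. The function name, argument layout, and default values of `gamma` and `lam` are assumptions made for this example, not settings reported in the paper.

```python
def gae_advantages(rewards, values, gamma=0.99, lam=0.95):
    """Truncated GAE for one trajectory.

    rewards: [r_0, ..., r_{T-1}]
    values:  [V(s_0), ..., V(s_T)]  (one more entry than rewards)
    """
    T = len(rewards)
    # One-step TD errors: delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
    deltas = [rewards[t] + gamma * values[t + 1] - values[t] for t in range(T)]
    advantages = [0.0] * T
    running = 0.0
    # Backward recursion: A_t = delta_t + gamma * lam * A_{t+1}
    for t in reversed(range(T)):
        running = deltas[t] + gamma * lam * running
        advantages[t] = running
    return advantages
```

With `lam=1.0` the first advantage equals the discounted return plus the bootstrapped terminal value minus `V(s_0)`, which is the finite-horizon estimate; with `lam=0.0` each advantage reduces to the one-step TD error.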

Incorporating policy entropy into the optimization function of the PPO algorithm [30,31,32] enhances the agent's ability to explore unknown policies. Policy entropy is defined as follows:

$$H\left(\pi_\theta(\cdot \mid s_t)\right) = -\mathbb{E}_{a \sim \pi_\theta(\cdot \mid s_t)}\left[\log \pi_\theta(a \mid s_t)\right]$$

The optimization function with the added policy entropy term is as follows:

$$L^{CLIP+H}(\theta) = \hat{\mathbb{E}}_t\left[L_t^{CLIP}(\theta) + \alpha H\left(\pi_\theta(\cdot \mid s_t)\right)\right]$$

where $L_t^{CLIP}(\theta)$ is the per-step clipped surrogate term of the PPO objective and $\alpha$ is the temperature coefficient.

Reference [33] proposes an improved PPO algorithm that constructs an objective function integrating policy network optimization, value network optimization, and policy entropy. It employs a parameter-sharing mechanism between the policy and value networks to enhance algorithmic efficiency. Experimental results demonstrate that the proposed algorithm inherits the advantages of TRPO while offering simpler implementation and greater generalization capability. Drawing upon the parameter-sharing mechanism and the objective function construction of that work, this paper establishes the following objective function based on the policy network optimization function constructed above:

$$L(\theta) = \hat{\mathbb{E}}_t\left[L_t^{CLIP}(\theta) - c_1 L_t^{VF}(\theta) + \alpha H\left(\pi_\theta(\cdot \mid s_t)\right)\right]$$

Here, $c_1$ is the weight of the value loss, and $L_t^{VF}(\theta)$ denotes the squared-error loss, which is the optimization objective of the value network. It is calculated as follows:

$$L_t^{VF}(\theta) = \left(V_\theta(s_t) - V_t^{targ}\right)^2$$
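A minimal Python sketch of a combined PPO loss of this kind (clipped policy term, squared-error value term, and entropy bonus) is given below. The function name and the default values of `clip_eps`, `value_coef`, and `entropy_coef` are illustrative assumptions; the paper's own coefficient values are not stated in this section.

```python
import math


def ppo_loss(logp_new, logp_old, advantages, values, returns, entropies,
             clip_eps=0.2, value_coef=0.5, entropy_coef=0.01):
    """Scalar loss to minimize, averaged over a batch of samples.

    logp_new / logp_old: log-probabilities of the taken actions under the
    new and old policies; values / returns: value predictions and targets.
    """
    n = len(advantages)
    policy_term = value_term = entropy_term = 0.0
    for i in range(n):
        ratio = math.exp(logp_new[i] - logp_old[i])          # importance ratio
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Pessimistic (minimum) of the unclipped and clipped surrogates
        policy_term += min(ratio * advantages[i], clipped * advantages[i])
        value_term += (values[i] - returns[i]) ** 2          # squared error
        entropy_term += entropies[i]
    # The objective is maximized, so the loss is its negative
    objective = policy_term - value_coef * value_term + entropy_coef * entropy_term
    return -objective / n
```

In practice this quantity would be computed on tensors by an automatic differentiation framework; plain Python lists are used here only to keep the arithmetic visible.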

Assume that PPO updates its networks using trajectory segments with a fixed length of $T$ time steps. The workflow is then as follows. First, in each episode, each of the $N$ actors collects data over $T$ time steps. Next, the agent loss function is constructed from these data and optimized using the Adam optimizer [34] for $k$ epochs. Then, in the subsequent episode, the updated network parameters are used to generate the action policy, and the cycle repeats. The pseudocode for PPO is shown in Algorithm 1.

| Step | Algorithm 1: PPO, actor-critic style |
| --- | --- |
| 1 | for episode = 1, 2, … do |
| 2 | for actor = 1, 2, …, $N$ do |
| 3 | Run policy $\pi_{\theta_{old}}$ in the environment for $T$ time steps; |
| 4 | Compute the advantage estimates $\hat{A}_1, \ldots, \hat{A}_T$ using GAE; |
| 5 | end for |
| 6 | Optimize the loss function using the Adam optimizer to obtain new network parameters $\theta$; |
| 7 | Update the parameters of the policy network and value network: $\theta_{old} \leftarrow \theta$; |
| 8 | end for |

3.1.2. ARMH-PPO Algorithm

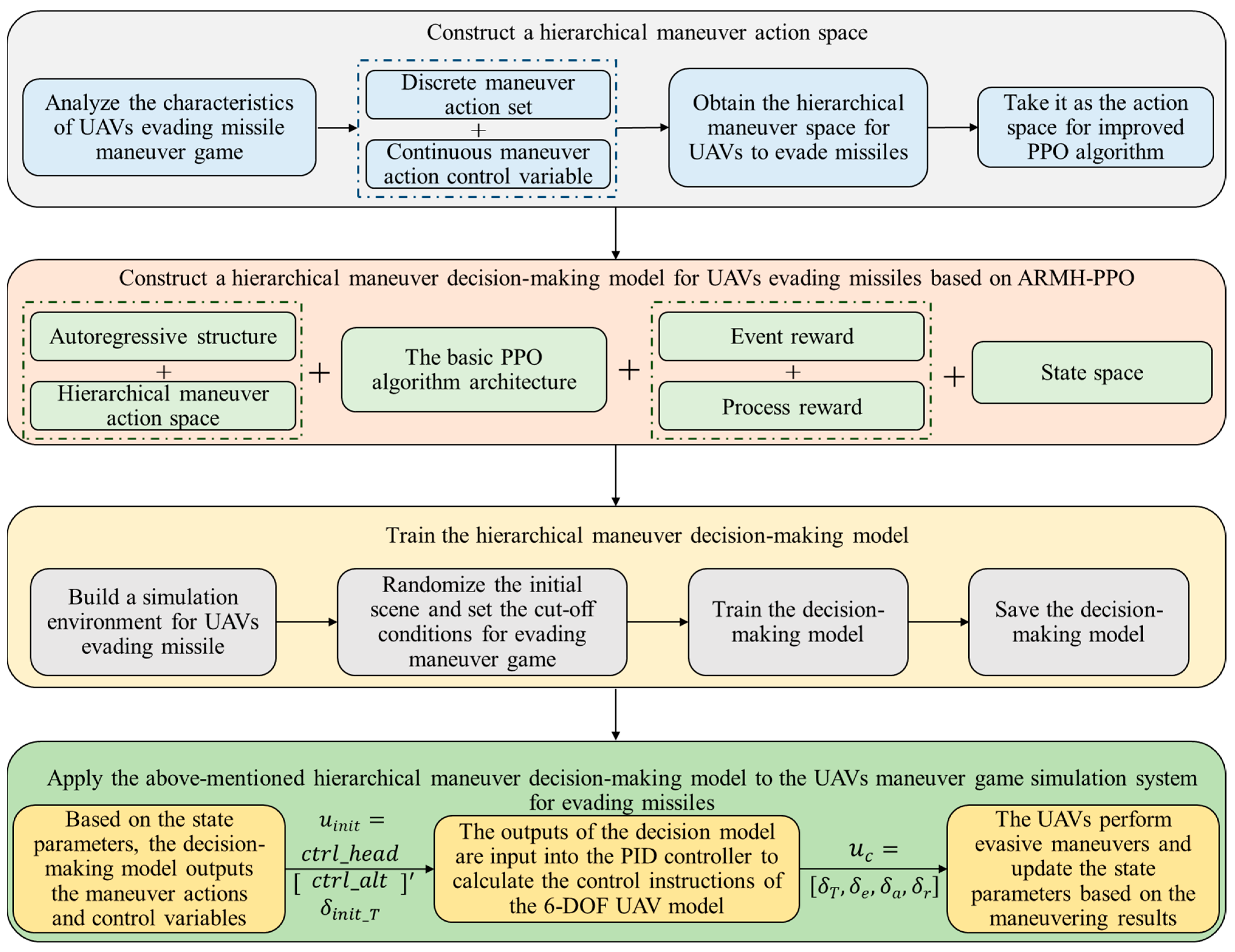

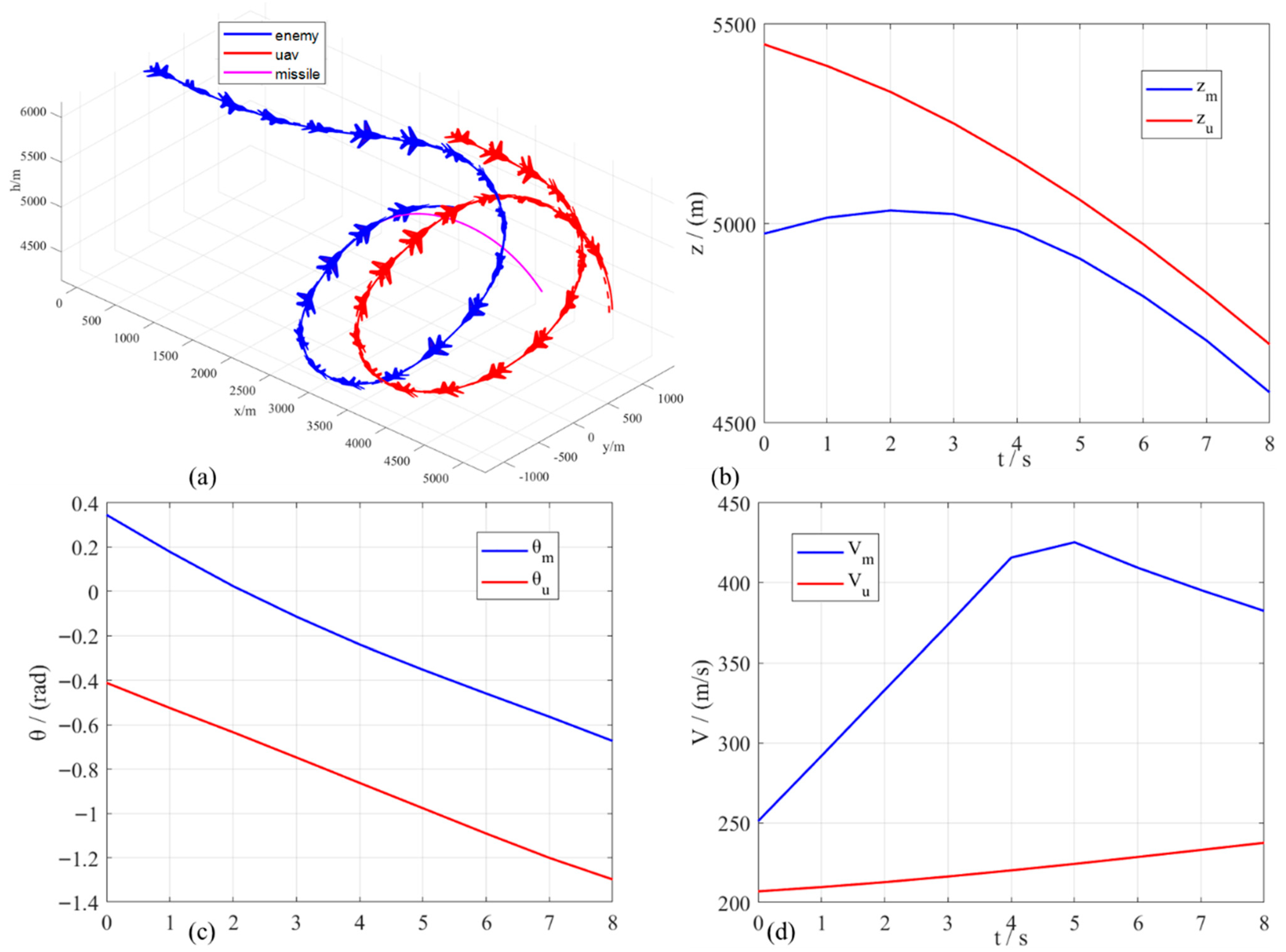

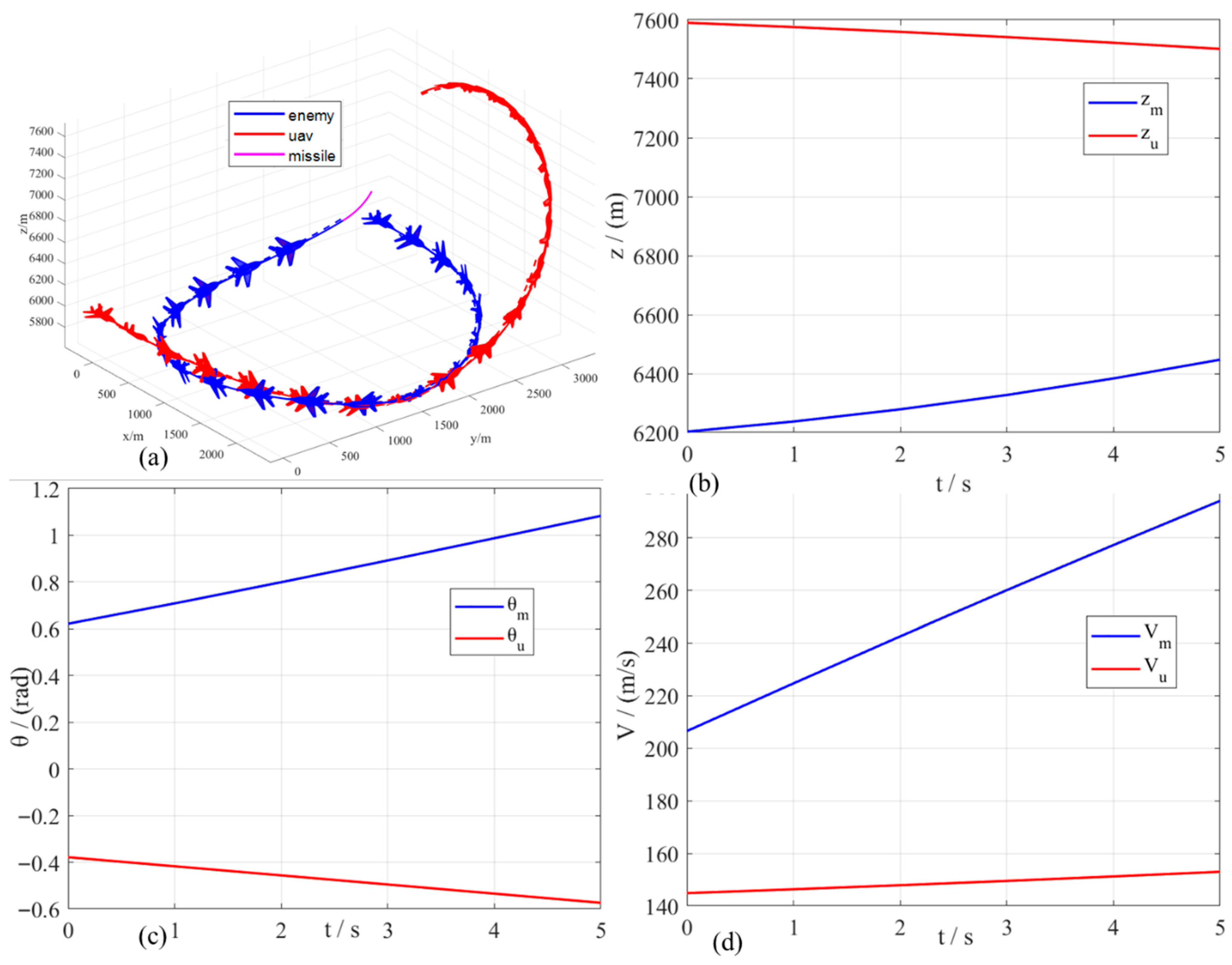

Building upon the fundamental architecture of the PPO algorithm and considering the characteristics of UAV evasion maneuvers against missiles as well as the requirements of actual missions, targeted improvements are made to the PPO algorithm. These modifications encompass the action space, state space, reward function, and network architecture.

- (1) Hierarchical maneuver action space

The action space of classical DRL algorithms encompasses types such as discrete action space, continuous action space, multidimensional discrete action space, and hybrid action space. Drawing on action space modeling methods for air-to-air mission agents, we model the action space for UAV missile evasion. Common approaches to air-to-air mission action space modeling include the following:

The action space in air-to-air missions is inherently continuous. Some studies use the deflections of the aircraft control stick and throttle as maneuver control variables and solve for them with intelligent algorithms. In the AlphaDogfight competition, Lockheed Martin employed stick and throttle deflections as agent action parameters, solving for them with a DRL algorithm that outputs deflections for the elevator, rudder, aileron, and throttle [35]. References [36,37] selected tangential overload, normal overload, and roll angle as the agent's action control variables. Since this paper employs a 6-DOF UAV dynamics model, the action space modeling method from [35] could be adapted, with the proposed algorithm solving for the UAV's maneuver control variables. However, this modeling method has a shortcoming: because existing flight control knowledge is not integrated into the algorithm, the algorithm must learn both maneuver strategies and flight control, so its decision-making performance is tightly coupled with the UAV platform. This hinders stable aircraft control and makes agent training difficult to converge.

To improve agent training efficiency, some studies discretize the action space for air-to-air missions. This approach first constructs a maneuver action set, from which the agent selects the optimal maneuver at each decision step. Reference [38] proposes a matrix game algorithm for autonomous maneuver decision-making in air-to-air missions. The discrete action space constructed in that work comprises seven fundamental maneuvers: constant velocity, maximum acceleration climb, maximum acceleration descent, maximum G-load left turn, maximum G-load right turn, maximum G-load climb, and maximum G-load dive. These basic maneuvers are simple to implement, and the agent can achieve complex maneuvers by combining them. However, they primarily consider the extreme cases of flying at maximum acceleration and maximum G-load, which do not match the actual course of an air-to-air mission.

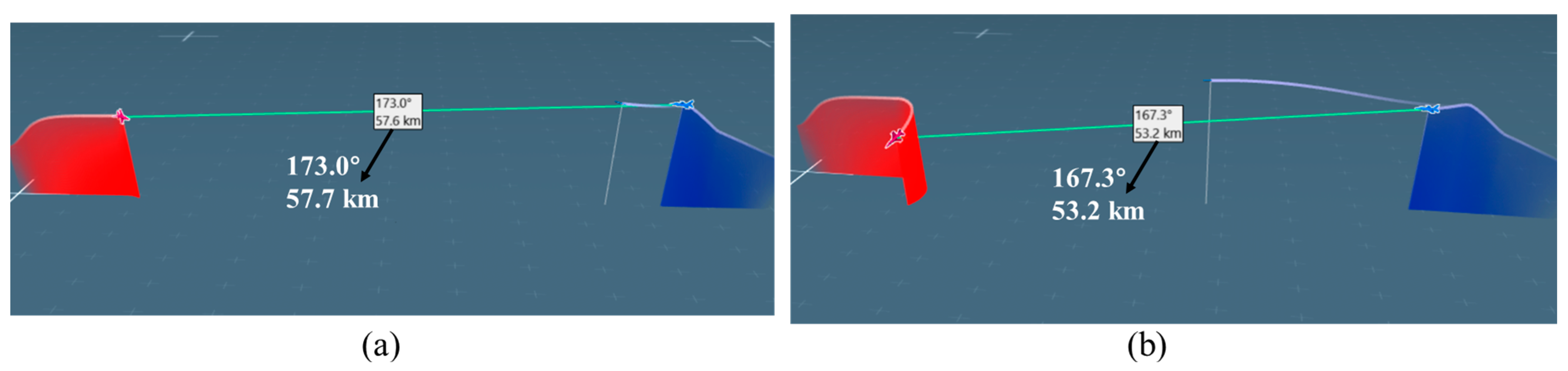

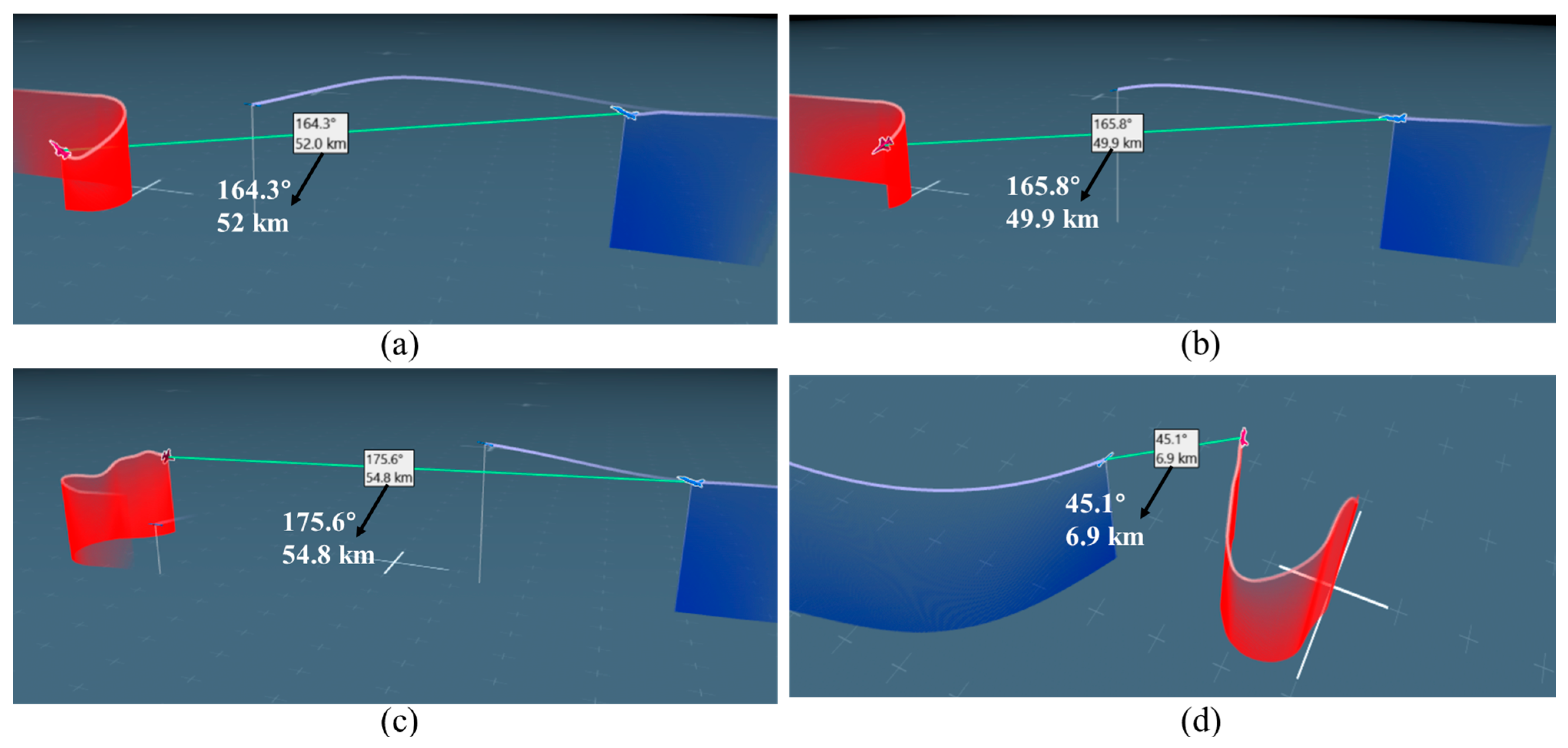

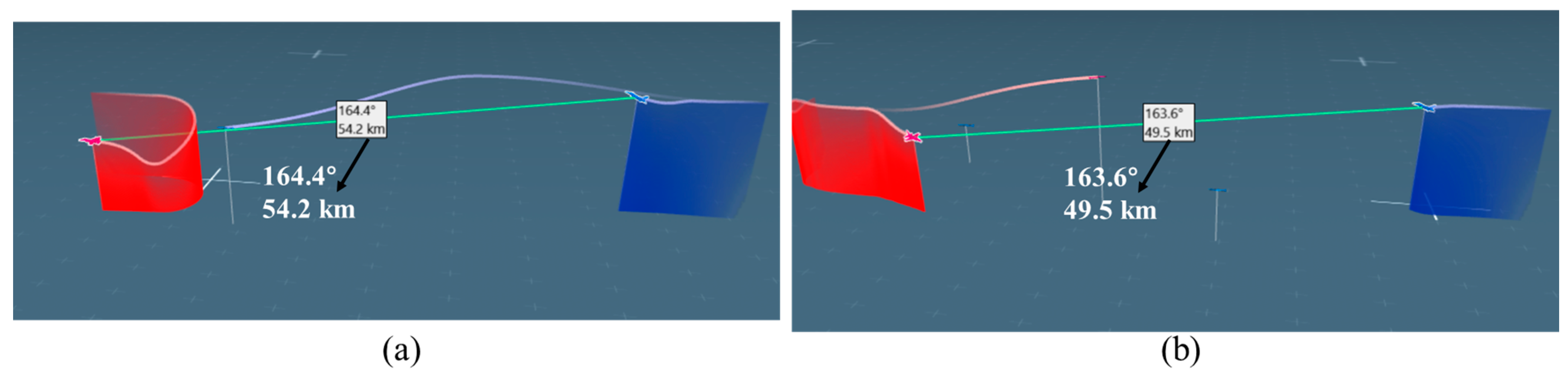

Based on the aforementioned research, and considering both the practical requirements of UAV evasion maneuvers against missiles and the need for multi-platform transferability of decision models, this paper constructs a hierarchical maneuver action space as shown in Table 3. Since this maneuver space incorporates both a discrete action set and continuous maneuver control variables, it constitutes a hybrid action space. As shown in Table 3, the discrete action set comprises eight maneuvers [39]: pull-up, turn, sharp turn, barrel roll, level flight, S-maneuver, climb, and dive. The pull-up maneuver is introduced to prevent the UAV from breaching minimum altitude restrictions, while the dive maneuver is employed to lure the missile into lower altitude layers, where increased aerodynamic drag dissipates the missile's kinetic energy and thereby mitigates the threat. Based on these eight fundamental maneuvers, the agent combines different actions to drive the UAV through complex evasive maneuvers.

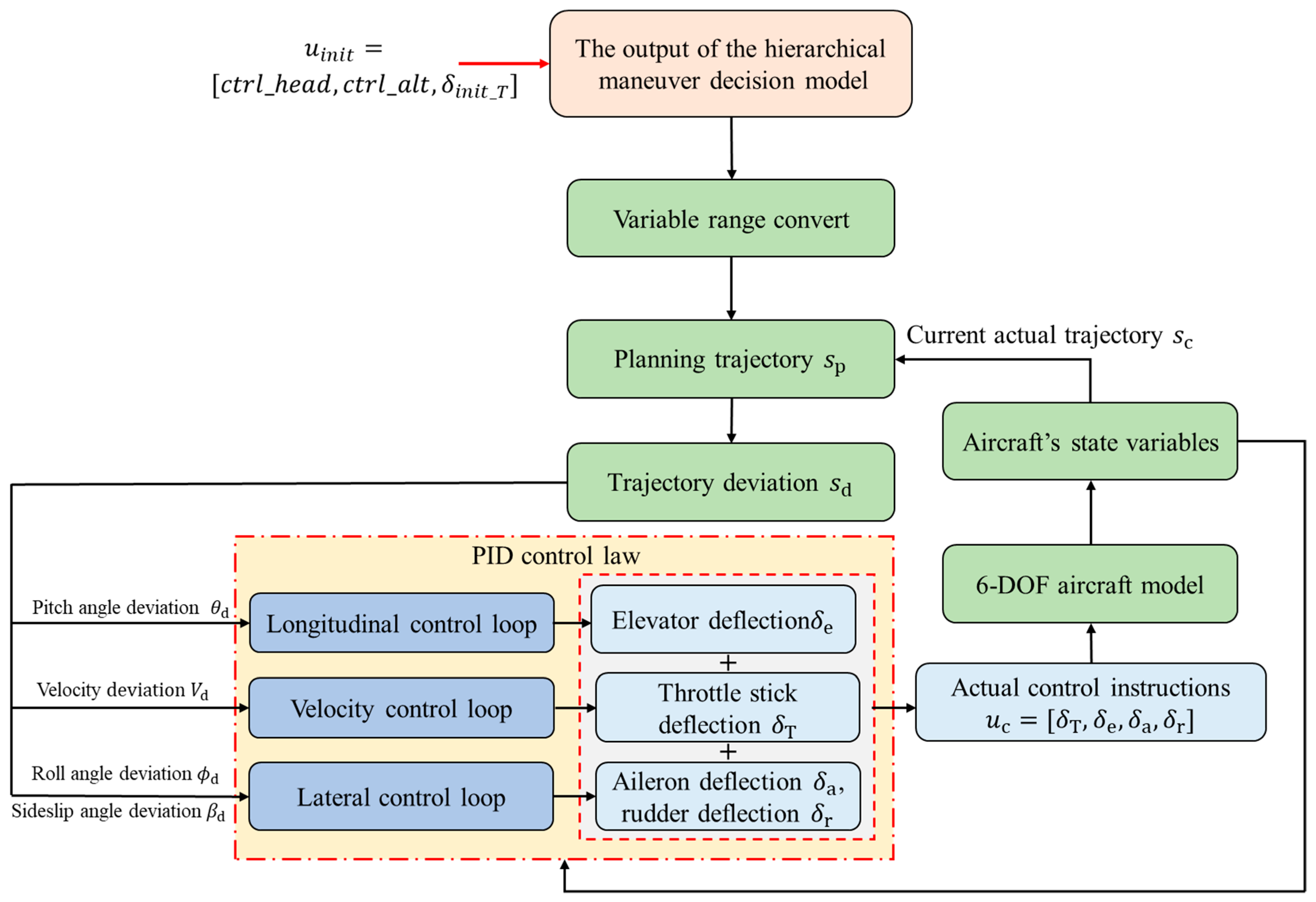

The control variables in the action space are heading, altitude, and throttle stick deflection. During training episodes, at each decision point, the hierarchical maneuver decision model for UAV missile evasion selects optimized values of the continuous maneuver control variables based on the current state features and the chosen maneuver type. The ranges of the continuous maneuver control variables are shown in Table 4; these ranges are normalized. First, the agent solves for the heading, altitude, and throttle stick control variables via the evasion maneuver decision algorithm. Next, a transformation function converts these into planned heading, altitude, and throttle stick deflection values. Then, a PID controller [27] solves for the elevator, rudder, aileron, and throttle stick deflections. Finally, the resulting stick and throttle deflections are input to the 6-DOF UAV motion model to drive the execution of evasive maneuvers.
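The chain from a normalized control variable to a control-surface command can be sketched as follows. Everything specific here is an assumption made for illustration: the normalized range of [-1, 1], the linear transformation, the altitude limits, and the PID gains are hypothetical and are not taken from Table 4 or from the controller in [27].

```python
def denormalize(u, low, high):
    """Map a normalized command u in [-1, 1] to the physical range [low, high]."""
    u = max(-1.0, min(1.0, u))  # clip to the normalized range
    return low + 0.5 * (u + 1.0) * (high - low)


class PID:
    """Textbook PID controller with output saturation."""

    def __init__(self, kp, ki, kd, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        # No derivative contribution on the first call
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.out_min, min(self.out_max, out))


# Hypothetical altitude channel: policy output 0.5 -> planned altitude -> elevator command
target_alt = denormalize(0.5, low=1000.0, high=11000.0)   # metres (assumed limits)
alt_pid = PID(kp=0.001, ki=0.0, kd=0.0)
elevator_cmd = alt_pid.update(target_alt, measured=8000.0, dt=0.02)
```

Keeping the policy output in a normalized range and leaving surface deflections to the controller is what lets the decision model stay independent of a particular airframe.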

This paper constructs a hierarchical maneuver space to decouple the decision algorithm from the simultaneous learning of flight control and evasive maneuver strategies. This approach not only reduces the complexity of evasive maneuver decision-making but also addresses the issue of limited model transferability across platforms. Furthermore, by normalizing the range of maneuver control inputs, the approach prevents extreme scenarios where the aircraft frequently operates at maximum acceleration and overload due to control values exceeding preset limits. This ensures the UAV’s maneuvering actions align with real-world capabilities.

- (2) State space

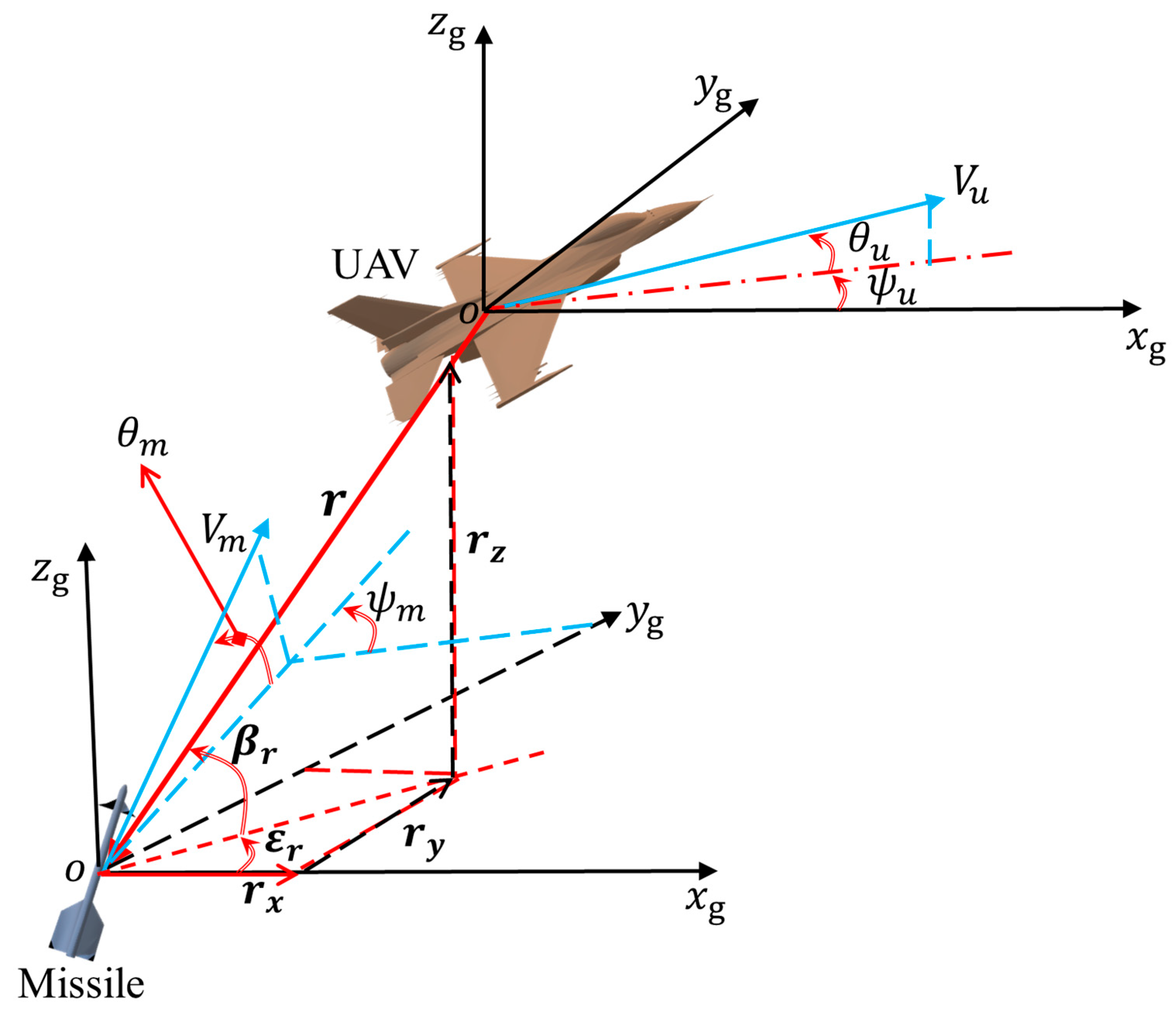

During UAV missile evasion, the state space in DRL is constructed by extracting partial UAV state variables and the relative motion characteristics between the missile and UAV. To capture the dynamic evolution of the evasion maneuvering game between the UAV and missile, the following 10 state variables are employed to construct the algorithm's state space [40]: the relative distance between the UAV and missile, the missile's elapsed flight time, the relative heading angle, the UAV's velocity, the UAV's altitude, the UAV's yaw angle, the missile's velocity, the missile's altitude, the rate of change of the missile-to-UAV LOS inclination angle, and the rate of change of the LOS declination angle.

To eliminate the adverse effects of the differing ranges of these 10 state variables on training performance, each state variable is normalized before being input to the hierarchical maneuver decision model for training. This ensures consistent input parameter ranges for the model. The expressions for normalizing each state variable are as follows.

Here, the index identifies the state variable type and follows the same order as the state variables listed in the state space; the remaining terms denote the state variable currently being normalized, its minimum and maximum values, and its normalized value, respectively.
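One common way to normalize a state variable from its minimum and maximum values is min-max scaling. The sketch below assumes that form and a target range of [0, 1]; the paper's exact expression and target range are not recoverable from this section, and the function name and example bounds are hypothetical.

```python
def normalize_state(values, mins, maxs):
    """Min-max normalize each state variable to [0, 1].

    values, mins, maxs are sequences in the same order as the state variables.
    Values outside [min, max] are clipped to the range boundary.
    """
    normalized = []
    for v, lo, hi in zip(values, mins, maxs):
        if hi <= lo:
            raise ValueError("each maximum must exceed its minimum")
        scaled = (v - lo) / (hi - lo)
        normalized.append(min(1.0, max(0.0, scaled)))
    return normalized


# Hypothetical example: UAV altitude in [0, 10000] m and velocity in [100, 500] m/s
state = normalize_state([5000.0, 300.0], mins=[0.0, 100.0], maxs=[10000.0, 500.0])
```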

- (3) Reward function

The reward function plays a crucial role in DRL algorithms. The inherent reward for the UAV missile evasion problem is given only at the end of each episode, based on the outcome of that episode. Such rewards are overly sparse, making it difficult to guide the agent toward an optimal strategy. To address reward sparsity, ref. [41] employs reward shaping, which introduces process rewards to guide the agent's training. Based on [39,42], a reward function for the UAV missile evasion problem is designed as shown in Table 5.

This paper categorizes rewards into event-based and process-based rewards. Event-based rewards are sparse, granted only upon the occurrence of specific events. Process-based rewards are dense and calculated at every time step. This category includes UAV altitude, missile-to-UAV relative distance, missile-to-UAV LOS declination angle, and LOS inclination angle. The UAV altitude reward reflects the aircraft’s potential energy advantage. This reward incentivizes the agent to execute climb maneuvers, thereby accumulating higher terminal energy. This approach enables the UAV to prioritize its own safety while leveraging its energy advantage, providing essential support for subsequent air-to-air missions. The calculation method for the UAV altitude reward is as follows.

In this expression, the two altitude terms denote the UAV's altitude at time $t$ and its initial altitude, respectively, and the coefficient is a positive constant used primarily to adjust the value range of the altitude reward function.

The relative distance between the missile and the UAV is updated at each time step. When the relative distance is less than or equal to the missile's kill radius, the UAV is hit by the missile, and the agent receives a penalty. When the relative distance exceeds the kill radius, the agent receives a reward proportional to the distance between the UAV and the missile. This incentivizes the agent to execute tailing or tangential maneuvers, thereby increasing the relative distance or slowing the rate of approach and thus enhancing the UAV's survivability. The calculation method for the missile-UAV relative distance reward is as follows.

In this expression, the two distance terms denote the relative distance between the UAV and the missile at time $t$ and the initial relative distance, respectively, and the coefficient is a positive constant used primarily to adjust the value range of the relative distance reward function.

The LOS declination angle between the missile and the UAV is the angle between the horizontal projection of the LOS vector and the horizontal coordinate axis. As the LOS declination angle increases, the missile is forced to turn to keep tracking the UAV, which consumes missile energy and raises the UAV's chance of evading it. The reward for this parameter therefore increases with the LOS declination angle, which guides the agent toward turning maneuvers. The calculation method for the LOS declination angle reward between the missile and UAV is as follows [43].

In this expression, the two angle terms denote the LOS declination angle between the UAV and the missile at time $t$ and the initial LOS declination angle, respectively, and the two coefficients are positive constants used primarily to adjust the value range of the LOS declination angle reward function.

The LOS inclination angle between the missile and the UAV is the angle between the LOS vector and the horizontal plane. An increased LOS inclination angle induces the missile to follow the maneuver, which consumes missile energy and disrupts its stable tracking conditions. When the LOS inclination angle is negative and its absolute value increases, the agent receives a higher reward for this parameter. This encourages the agent to execute a dive maneuver, which accelerates missile energy depletion through the greater air resistance at low altitudes while also disrupting the seeker's tracking conditions with ground clutter. The calculation method for the LOS inclination angle reward between the missile and UAV is as follows [43].

In this expression, the two angle terms denote the LOS inclination angle between the UAV and the missile at time $t$ and the initial LOS inclination angle, respectively, and the two coefficients are positive constants used primarily to adjust the value range of the LOS inclination angle reward function.

It should be noted that the positive coefficients in the reward functions described above serve to eliminate the impact of differences in the value ranges of the individual reward functions on the overall reward function. References [39,43] provide detailed introductions and experimental analyses regarding the setting of these coefficients, and this paper adopts their coefficient-setting methods.

By combining the event reward values from Table 5 with the process reward functions described above, the overall event reward and the overall process reward are computed separately. On this basis, a temporal decay factor is designed, drawing on exploratory learning theory. This factor is multiplied by the overall process reward, and the result is added to the overall event reward to yield the algorithm's total reward. The calculation method for the overall event reward is as follows.

The overall event reward is the reward received by the agent after the event that terminates the current training episode occurs. Its eight component rewards correspond, in order, to the following events: UAV landing, UAV hit by missile, UAV speed below the set minimum speed, missile landing, missile speed below the set minimum speed, UAV close-range evasion of the missile, maximum simulation time exceeded, and missile loss of target. Not all eight events necessarily occur in a given training episode. A reward is assigned only when the triggering condition of its event is met; the rewards for all other events are set to zero for that episode.
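The rule that only triggered events contribute to the overall event reward can be sketched as below. The sketch assumes the overall event reward is the sum of the triggered event rewards; the event keys and all numeric values are hypothetical placeholders and do not reproduce Table 5.

```python
# Hypothetical event rewards (placeholder values, NOT taken from Table 5)
EVENT_REWARDS = {
    "uav_landed": -10.0,
    "uav_hit_by_missile": -10.0,
    "uav_below_min_speed": -10.0,
    "missile_landed": 10.0,
    "missile_below_min_speed": 10.0,
    "close_range_evasion": 10.0,
    "max_sim_time_exceeded": 10.0,
    "missile_lost_target": 10.0,
}


def event_reward(triggered):
    """Sum the rewards of the events whose trigger condition was met.

    Events that did not occur contribute zero to the total.
    """
    triggered = set(triggered)
    unknown = triggered - set(EVENT_REWARDS)
    if unknown:
        raise KeyError(f"unknown events: {sorted(unknown)}")
    return sum(EVENT_REWARDS[name] for name in triggered)
```

For example, an episode that ends with the missile losing its target after a close-range evasion would collect only those two entries, and an episode with no terminal event would collect zero.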

The calculation method for the overall process reward is as follows.

At each time step, the process rewards described above are first assigned different values based on the relative posture between the UAV and the missile. The total process reward for that time step is then obtained by summing all process rewards.

The calculation method for the overall algorithm reward is as follows.

In this expression, the temporal decay factor is designed to minimize undue human influence on the agent during strategy exploration, and $n$ is the current training episode count of the UAV evasion maneuver decision-making model. The two weight coefficients, one for event rewards and one for process rewards, are constrained through their sum and adjust the influence of the two reward types on the agent's training. The attenuation coefficient of process rewards controls how quickly the influence of process rewards on the agent's policy search decays.

Since event rewards are sparse, relying solely on them to guide model training would hinder convergence and prevent the agent from learning effective strategies. Process rewards integrate expert knowledge into training and guide the agent toward optimized strategies during the early iterations. In the later stages of training, the agent has developed a certain capacity for strategy optimization, so the role of process rewards, and with it the influence of expert experience, diminishes. The agent then relies primarily on event rewards to guide its autonomous exploration of strategies.

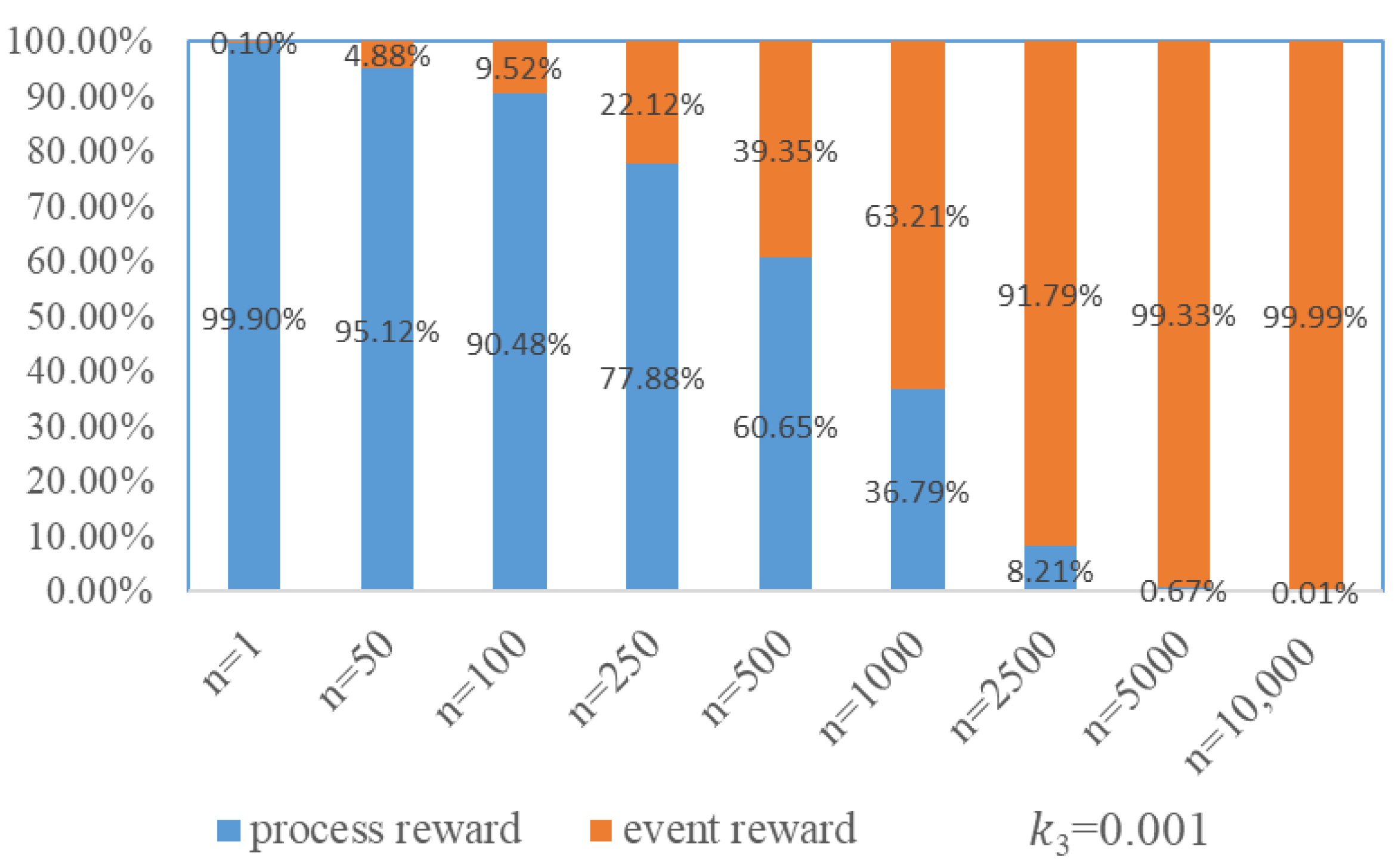

When

= 0.001, as

n increases, the proportion of event rewards and process rewards in the overall algorithmic reward varies with the count of training episodes, as shown in

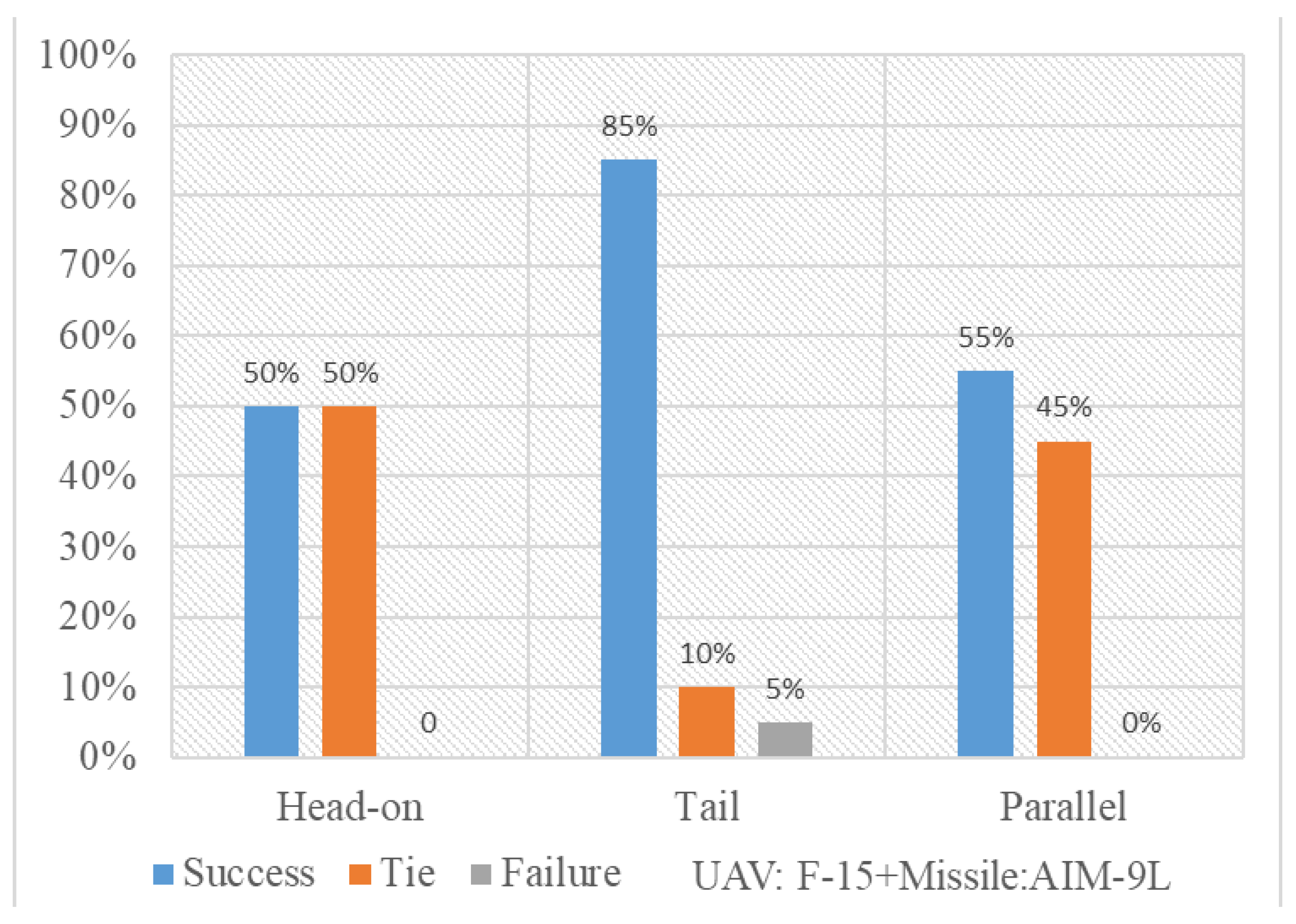

Figure 5. During the initial phase of training the evasive maneuver decision-making model, event rewards account for a smaller proportion. At this stage, expert experience primarily guides the agent in searching for maneuver strategies. As training progresses, when the model reaches 1000 training episodes, event rewards constitute 63.21% of the total. At this point, the role of expert experience in guiding the agent’s search for maneuver strategies diminishes, and the agent increasingly relies on autonomous exploration to search for maneuver strategies. By the 2500th episode, event rewards reach 91.79%. At this point, the guiding influence of process rewards on the agent’s maneuver strategy search further diminishes. This helps mitigate the limitation where the agent’s learning process is overly influenced by expert knowledge, which would otherwise confine its autonomous maneuver decision-making capabilities to the level of expert knowledge. Furthermore, the guiding role of process rewards during the early training phase prevents the agent from engaging in excessive blind exploration, which is beneficial for accelerating the convergence of the UAV evasive maneuver decision-making model training process.
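The two percentages quoted above are numerically consistent with a process-reward share that decays as exp(-0.001 n), leaving an event-reward share of 1 - exp(-0.001 n). The sketch below assumes exactly that form; it is inferred from the reported numbers and is not a transcription of the paper's reward formula, and the function name is hypothetical.

```python
import math


def reward_shares(n, decay=0.001):
    """Return (event_share, process_share) at training episode n,
    assuming the process share decays as exp(-decay * n)."""
    process_share = math.exp(-decay * n)
    return 1.0 - process_share, process_share


for n in (0, 1000, 2500):
    event_share, process_share = reward_shares(n)
    print(f"n={n}: event {event_share:.2%}, process {process_share:.2%}")
# n=0: event 0.00%, process 100.00%
# n=1000: event 63.21%, process 36.79%
# n=2500: event 91.79%, process 8.21%
```

A larger decay value would shift weight toward event rewards sooner, and a smaller one would keep the expert-designed process rewards influential for longer.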

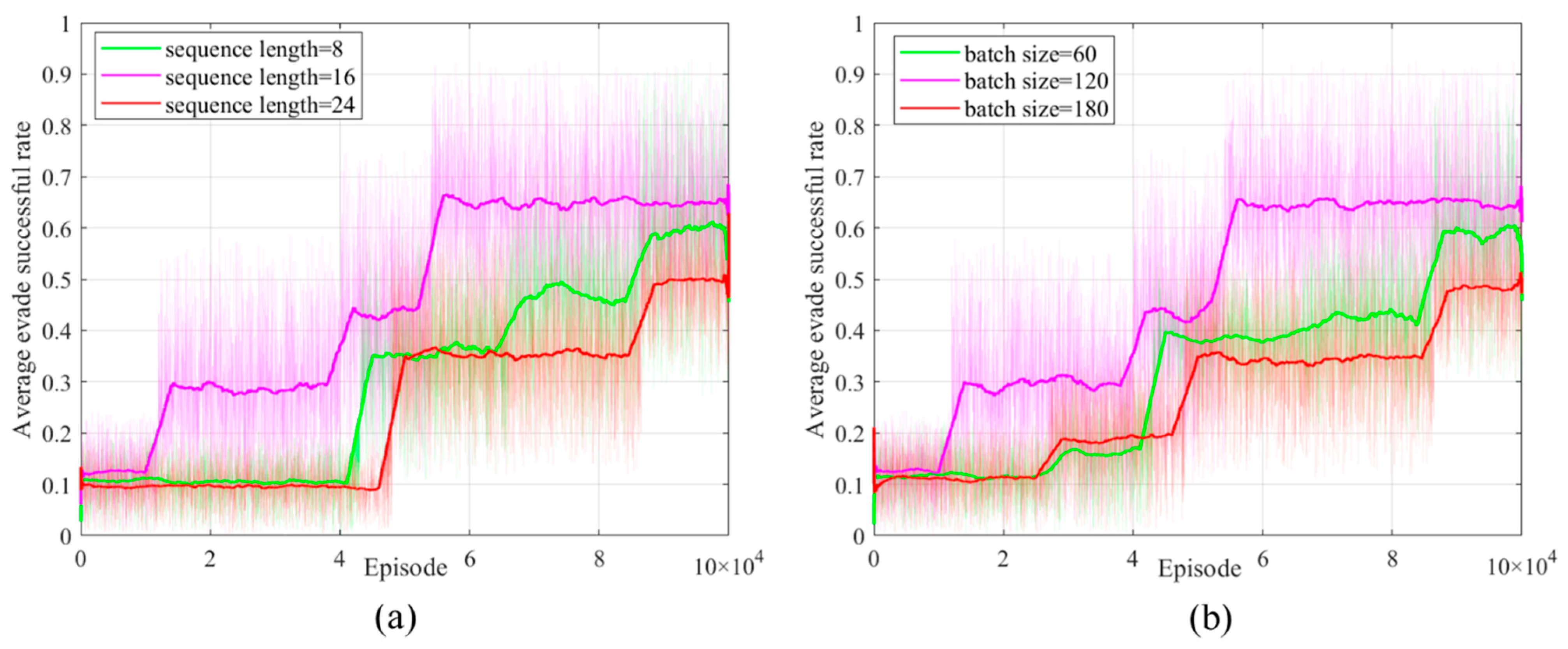

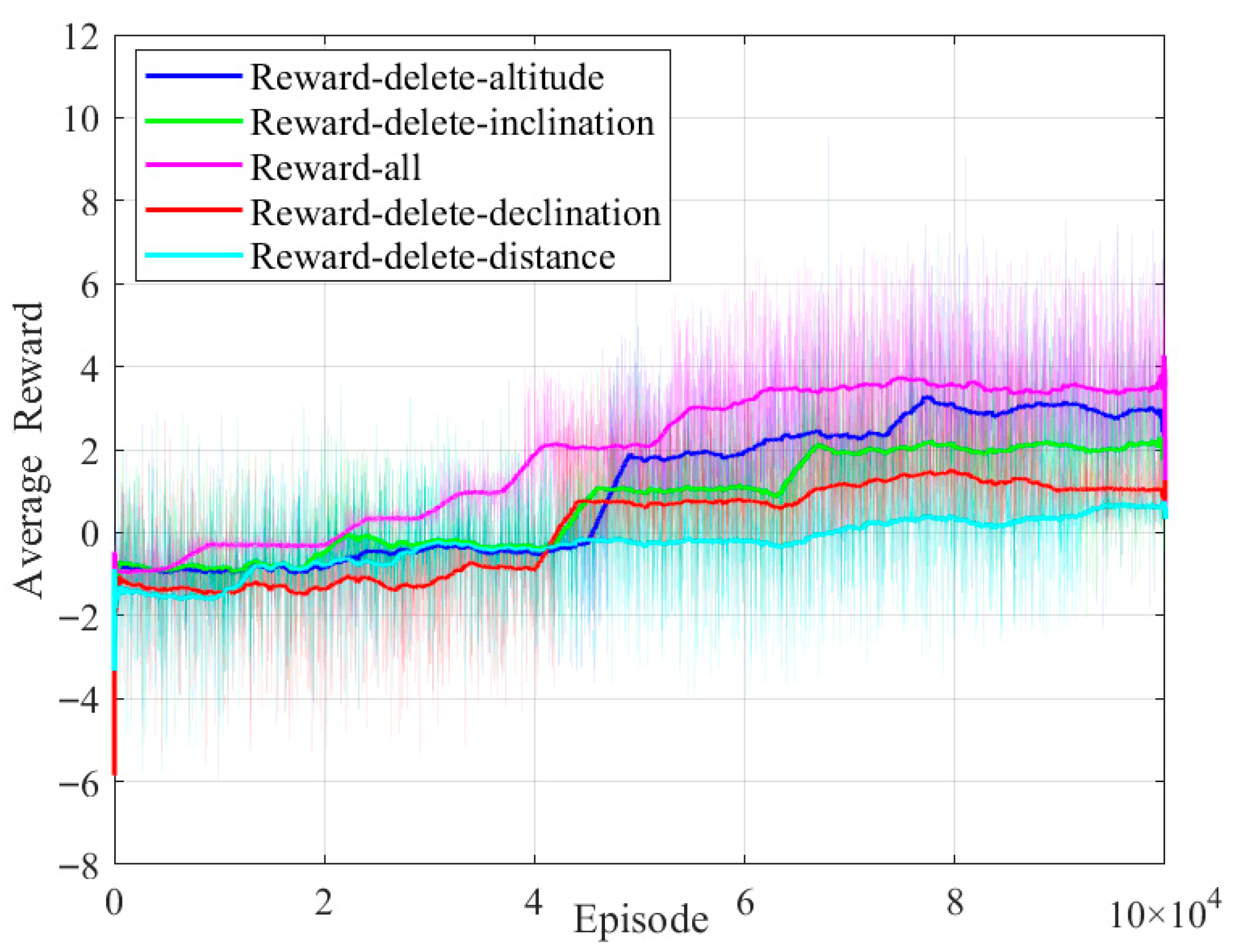

Next, comparative experiments are conducted on the values of the two reward weight coefficients and the process reward attenuation coefficient to determine their optimal settings. During training of the UAV evasion maneuver decision-making model, the variation of the average reward with the number of training episodes is analyzed to support the choice of these parameters.

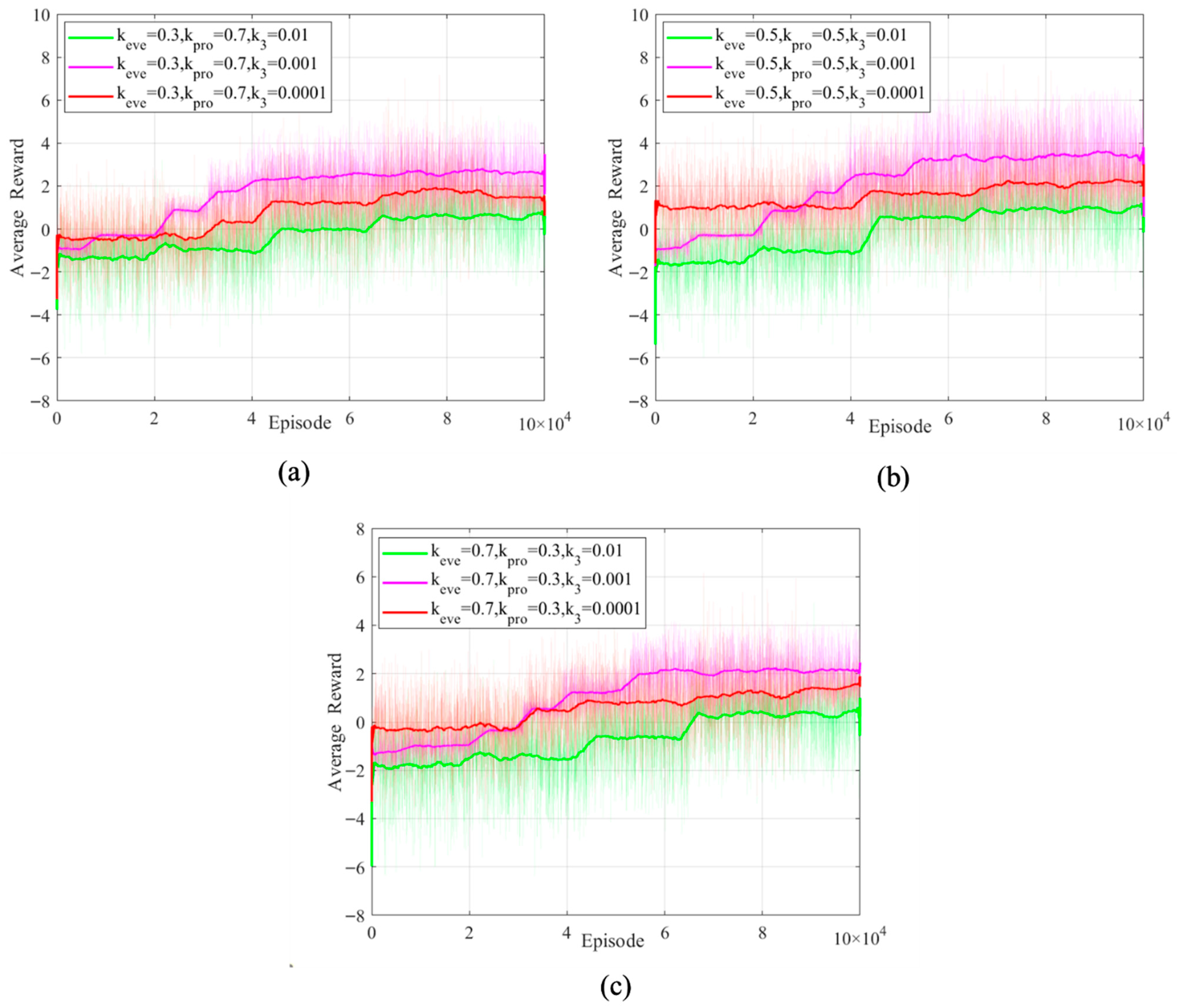

Figure 6 illustrates the curve depicting the average reward value’s change.

Among the tested combinations of reward weight coefficients, the combination shown in Figure 6b achieves a higher maximum average reward than the other two. With the reward weight coefficients fixed, three settings of the process reward attenuation coefficient are compared. Under the first, the agent achieves the lowest average reward. Under the second, the agent ranks second in average reward during the early training phase and first in the late training phase. Under the third, the agent achieves the highest average reward during the early training phase, but the growth of the average reward slows markedly in the later phase. This is primarily because that setting favors expert knowledge guidance early in training, which also tends to confine the agent's evasive maneuver decision-making to expert-level capability and limits its ability to explore new strategies. Based on this analysis, the reward weight coefficients and the process reward attenuation coefficient are set accordingly.

- (4) Network architecture

By adopting the PPO algorithm framework from

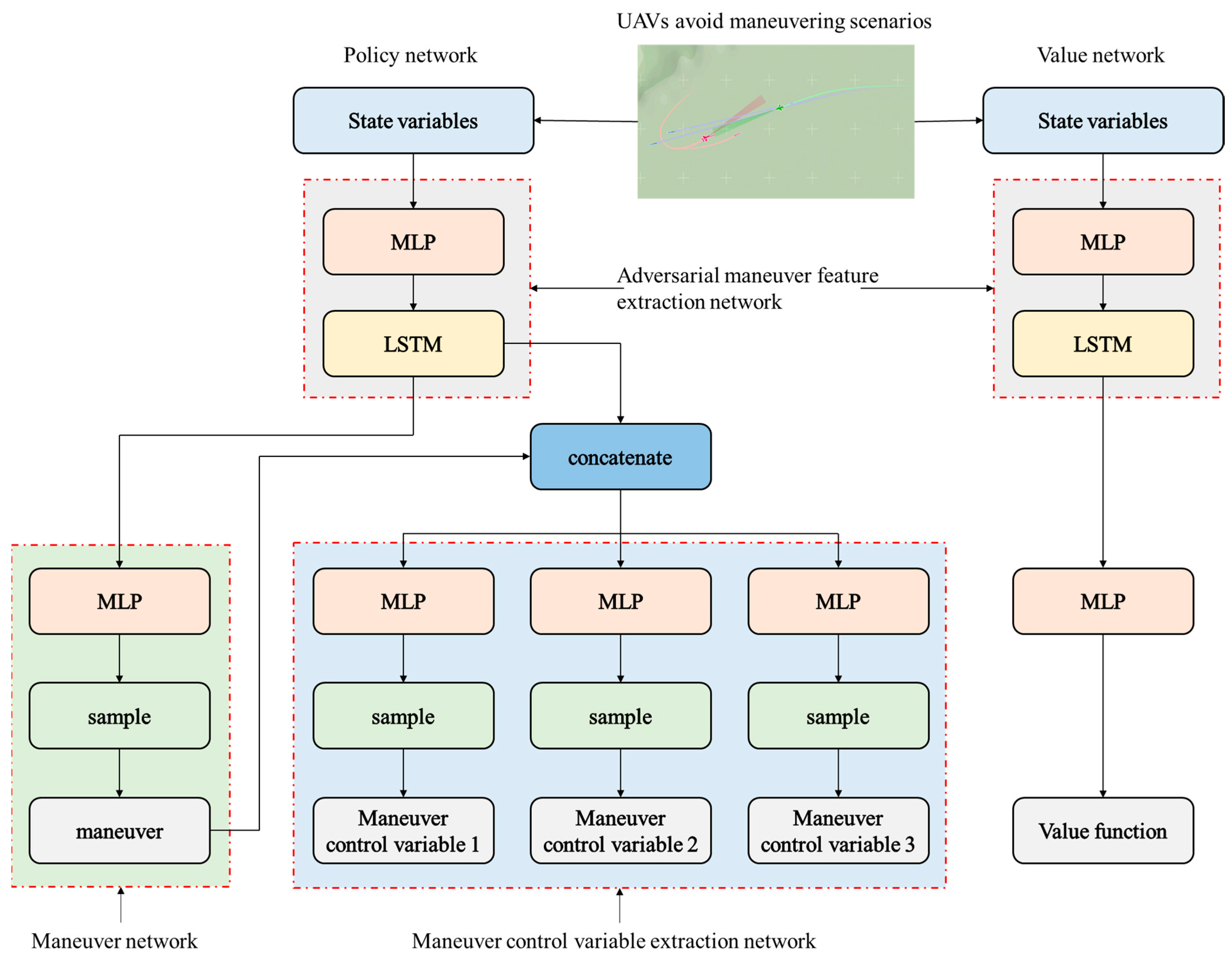

Section 3.1.1 and the state space, action space, and reward function design methods proposed in this section, this paper presents an improved PPO algorithm, namely the ARMH-PPO algorithm. Its main structure is illustrated in

Figure 7. The ARMH-PPO algorithm retains most settings of the PPO algorithm and adopts an actor-critic structure comprising a policy network and a value network. The policy network outputs evasive maneuvers based on the current state, while the value network evaluates the current state and outputs an estimate of the state value function.

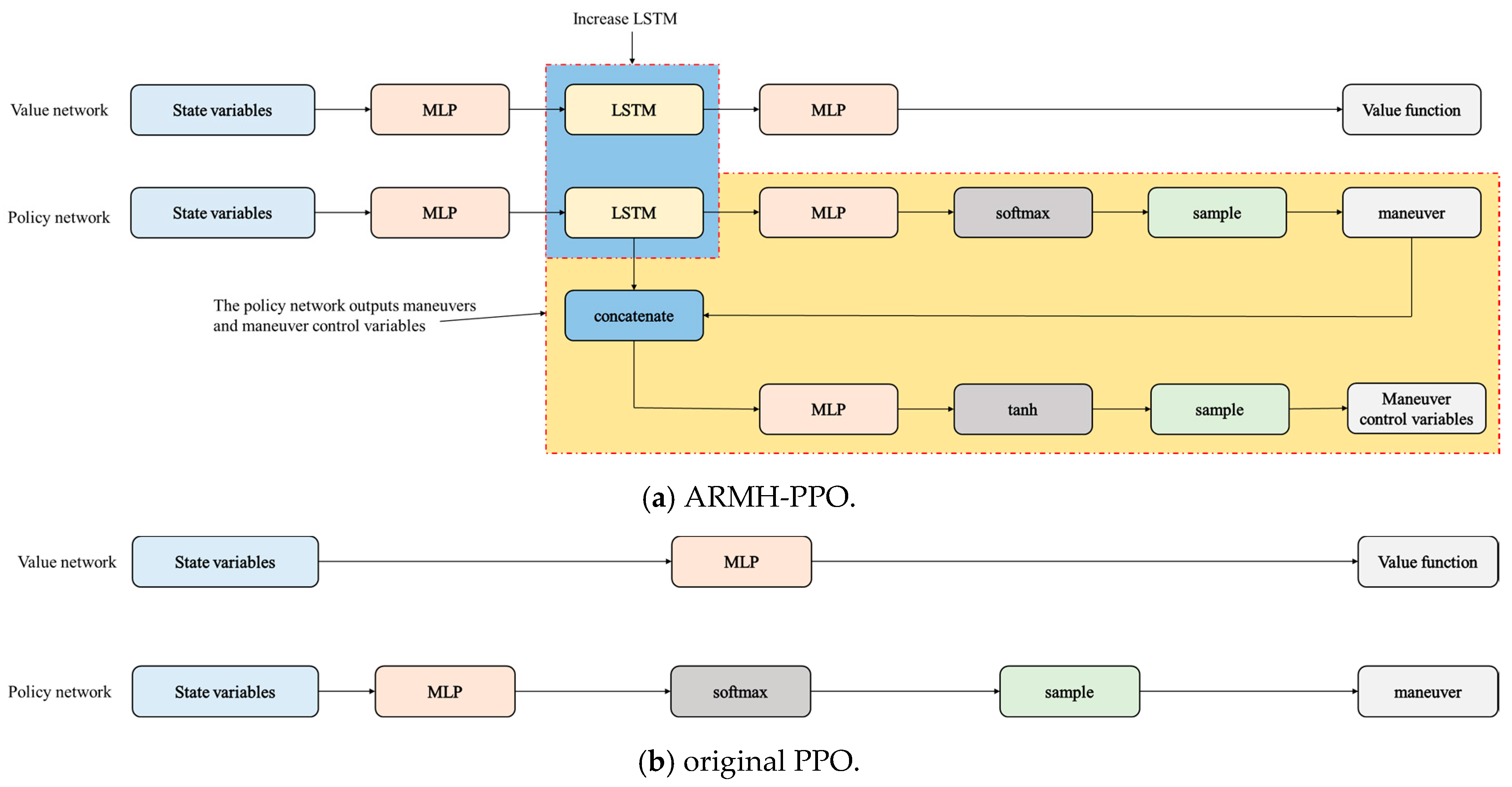

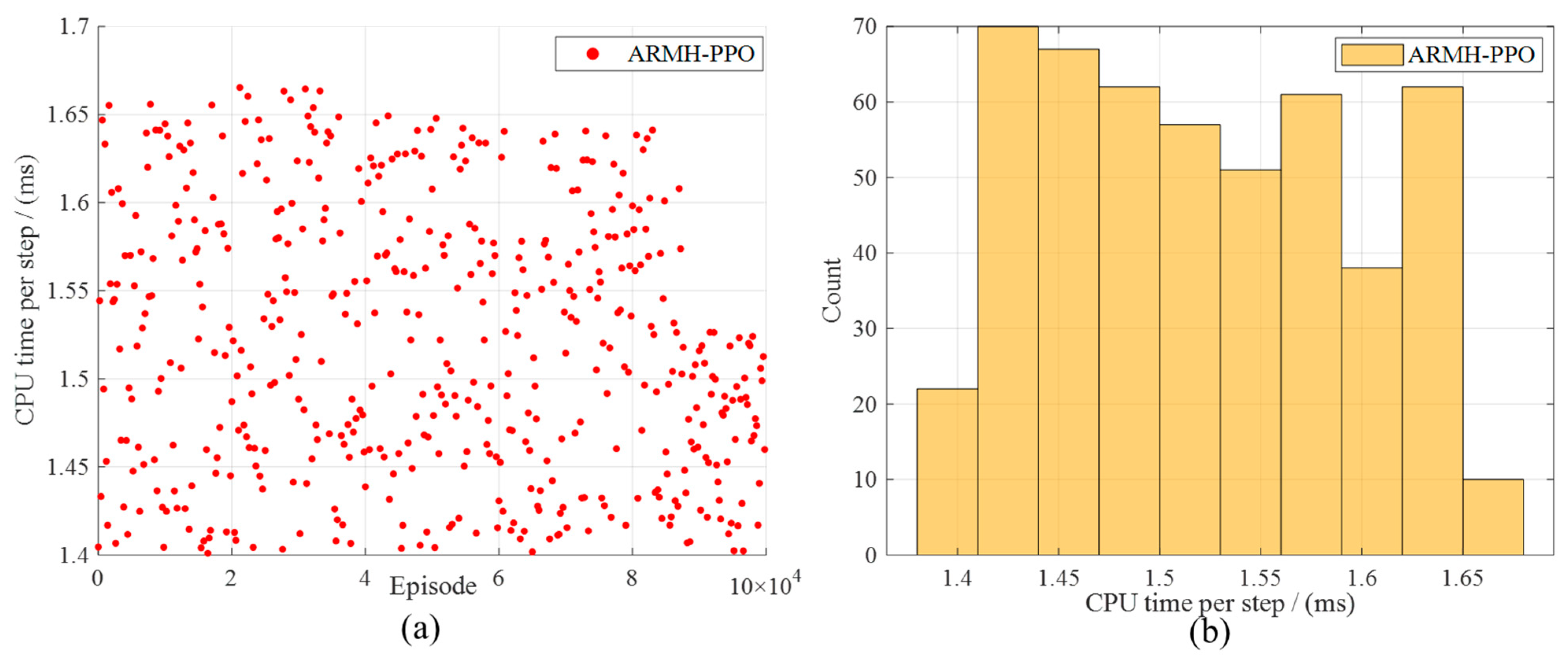

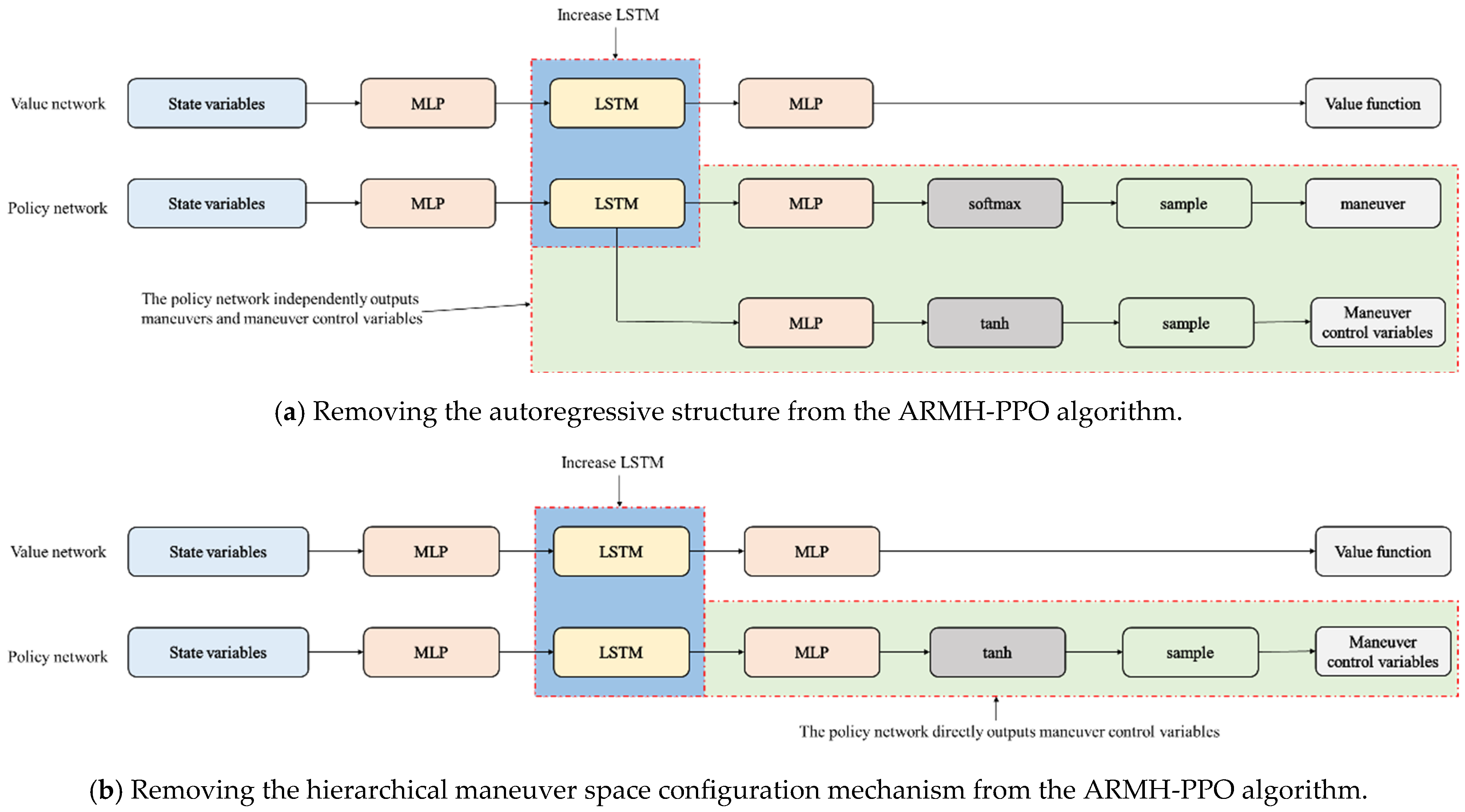

Figure 8 compares the original PPO algorithm architecture with the ARMH-PPO algorithm structure. Compared to the original PPO algorithm, the ARMH-PPO algorithm introduces the following key improvements:

(1) LSTM networks are incorporated into the policy network and value network. UAV evasion maneuver decision-making is a sequential decision problem, and LSTM outperforms fully connected networks in processing time series data [44]. LSTM is therefore employed to process observations during the evasion maneuver game and extract features from the game time series, as illustrated by the adversarial feature extraction network in Figure 7.

(2) An autoregressive structure is adopted so that the improved PPO algorithm can handle both the discrete actions and the continuous control variables of the hierarchical maneuver action space. The traditional PPO algorithm applies only to a single type of action space, that is, either continuous actions or discrete actions. Decision models built on it struggle to address evasive maneuver decision problems across different UAV and missile platforms. To handle the hierarchical maneuver action space proposed in this paper, and to capture the relationship between maneuver types and maneuver control variables and thereby improve the stability of UAV evasive maneuvers, the policy network is modified. The improved policy network comprises a maneuver action network and a maneuver control variable network, as illustrated in Figure 7. The maneuver action network outputs selection probabilities for each action in the discrete action set, and the maneuver is obtained by sampling. The maneuver control variable network adopts an autoregressive-like structure. It takes as inputs the adversarial features extracted by the LSTM and the sampled maneuver. First, subnetworks separately output the means of the continuous control variables. Then, a normal distribution is constructed from these means and the variances of the maneuver control variables. Finally, the maneuver control variables are sampled from this distribution. In the ARMH-PPO algorithm, a complete action $a$ comprises the maneuver type $m$ and the corresponding maneuver control variable $x$. Since different maneuvers have distinct control variable ranges, maneuver control variables are strongly correlated with maneuver types. To reinforce this relationship, an autoregressive form is adopted in which the maneuver type is an explicit input to the maneuver control variable network. Thus, the relationship between maneuver types and control variables is defined as follows:

$$x = f(z, m)$$

Here, $z$ represents the evasive maneuver adversarial features extracted by the LSTM network, $m$ denotes the sampled maneuver type, $x$ is the corresponding control variable for the maneuver, and $f$ is the function represented by the maneuver control variable network. Since the maneuver type $m$ is itself an output of the policy network, the policy network uses its own output as input, which resembles the autoregressive text generation approach in Chinese information processing. Borrowing the concept of autoregression, the algorithm is therefore said to generate maneuvers autoregressively. In Section 4.2.2, the effectiveness of the autoregressive structure is validated through ablation experiments.
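The two-stage sampling described in this subsection (first a maneuver type, then control variables conditioned on it) can be sketched in plain Python. This is a simplified illustration, not the paper's network: the linear heads, the one-hot encoding of the maneuver, the tanh-bounded means, the fixed log standard deviations, and the feature size are all assumptions, and the LSTM feature vector `z` is replaced by a hand-written list.

```python
import math
import random

MANEUVERS = ["pull-up", "turn", "sharp turn", "barrel roll",
             "level flight", "S-maneuver", "climb", "dive"]
CONTROLS = ["heading", "altitude", "throttle"]


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(z, maneuver_weights, control_weights, log_std, rng):
    """Sample (m, x): the maneuver index m from a categorical head, then the
    control variables x from a Gaussian head conditioned on (z, m)."""
    # Maneuver head: one logit per maneuver, linear in the features z
    logits = [sum(w * f for w, f in zip(row, z)) for row in maneuver_weights]
    probs = softmax(logits)
    m = rng.choices(range(len(MANEUVERS)), weights=probs, k=1)[0]

    # Autoregressive step: feed the sampled maneuver back in as a one-hot input
    one_hot = [1.0 if i == m else 0.0 for i in range(len(MANEUVERS))]
    head_input = list(z) + one_hot

    # Control head: tanh-bounded mean per control variable, fixed std
    x = []
    for row, ls in zip(control_weights, log_std):
        mean = math.tanh(sum(w * f for w, f in zip(row, head_input)))
        x.append(rng.gauss(mean, math.exp(ls)))
    return m, x


rng = random.Random(0)
z = [0.2, -0.1, 0.4, 0.0]  # stand-in for LSTM features
maneuver_weights = [[rng.uniform(-1, 1) for _ in z] for _ in MANEUVERS]
control_weights = [[rng.uniform(-1, 1) for _ in range(len(z) + len(MANEUVERS))]
                   for _ in CONTROLS]
m, x = sample_action(z, maneuver_weights, control_weights, [-1.0] * 3, rng)
```

Because the control head sees the sampled maneuver, the same features `z` can yield different control-variable distributions for, say, a dive and a climb, which is the dependency the autoregressive form is meant to capture.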