1. Introduction

In recent years, through the approach of manned–unmanned teaming, unmanned aerial vehicles (UAVs) have been playing increasingly important roles in various fields, such as emergency rescue, maritime enforcement, agricultural irrigation, environmental monitoring, traffic management, public safety, and healthcare [1]. This expanded reliance, however, imposes heightened demands on human operators. Prolonged operation and sustained attention can easily lead to operators’ mental fatigue, resulting in slower reaction time, spatial disorientation, impaired judgment, and reduced control precision. These factors then result in increased probabilities of operational errors and accidents, thereby posing a serious threat to overall system safety [2].

The operational risks are exacerbated in dynamic operational scenarios, such as sudden weather changes, signal interference, or equipment failure, which can compromise UAV stability and endurance. A recent study based on the human factors analysis and classification system (HFACS) analyzed 77 UAV accidents and found that up to 55% of the leading causes were operator decision errors [3]. Such errors often occurred when operators faced an unexpected hazard and took manual control or overrode automation under uncertainty, sometimes exacerbating the situation instead of recovering from it. Additionally, Ghasri and Maghrebi analyzed 138 UAV incident records across Australia and found that operators’ loss of awareness is a critical factor [4]. Currently, the Civil Aviation Safety Authority (CASA) regulations require operators to hold a remote pilot licence to fly drones heavier than 2 kg, which indicates a proactive regulatory approach to human factors. Similar crashes were identified and analyzed in [5,6].

According to the National Transportation Safety Board (NTSB) investigation, spatial disorientation and the operator’s stress may lead to incorrect operations, ultimately causing the accident. The NTSB concluded that the accident might have been avoidable if timely intervention had been implemented to help the pilot regain a normal cognitive state and operate correctly. Therefore, to mitigate such risks, it is essential for operators to maintain a high level of cognitive readiness, enabling them to anticipate hazards and sustain situational awareness. Consequently, the real-time monitoring and assessment of the operator’s cognitive state is crucial for facilitating timely interventions, enhancing decision-making, and ultimately ensuring mission efficacy and safety.

Cognitive state, defined as the internal state generated during an individual’s interaction with external objects [7], effectively reflects an operator’s control capacity. Therefore, real-time assessment of cognitive state holds significant practical importance, as it enables timely intervention to prevent serious flight safety incidents [8]. Current methodologies for cognitive state recognition largely follow a dichotomy, relying on either non-physiological or physiological signals. The former, which involves modalities such as text, voice, facial expressions, and gestures, constitutes the foundation of most existing studies [9,10,11]. Nevertheless, this approach is inherently limited in reliability, as it is susceptible to deliberate concealment, where individuals may voluntarily mask their facial cues or modulate their tone. In comparison, physiological signals offer superior reliability due to their involuntary nature, which prevents subjects from deliberately masking them [12].

Electroencephalography (EEG) has emerged as the preferred non-invasive modality for cognitive state assessment among all available physiological signals, recognized for its reliability and effectiveness. This recognition stems from its ability to directly capture the brain’s electrical activity, a property that has led to its widespread application in the field of cognitive state research [13,14,15]. With the advancement of sensor networks [16,17], intelligent sensing systems [18,19,20], and energy-efficient biomedical systems [21,22], EEG-based methods have become feasible across varied application domains. There is a growing recognition that the performance of evaluation models is highly dependent on the quality of the input EEG signal. This has spurred the parallel development of advanced EEG pre-processing and artifact-removal pipelines tailored for portable, low-intrusiveness systems [23,24,25,26,27]. Such robust pre-processing is paramount for the practical deployment of cognitive monitoring in operational environments like UAV control. Consequently, a significant body of work now utilizes these quality-assured signals as the foundation for reliable cognitive state evaluation.

Yang et al. [28] introduce a real-time emotion detection algorithm for UAV operators, leveraging 2D feature maps and CNN analysis. The approach transforms 1D EEG signals into 2D representations via Differential Entropy (DE) extraction, 2D mapping, and sparse computation; the CNN then exploits its superior capability for automatic deep feature learning to decipher the embedded emotional information, accomplishing successful three-state classification. Shi et al. [29] propose a multi-source domain adaptation method for EEG-based emotion recognition. It first transfers multiple source domains to the target domain individually to mitigate interference from diverse EEG sources; then employs a feature processing mechanism to progressively disentangle domain-specific interference factors like physiological differences and emotional fluctuations; finally, introduces a domain-specific classifier based on a Long Short-Term Memory network to capture temporal dependencies and enhance the model’s capacity for complex feature expression.

Jiang et al. [30] adopted an attention-based multi-scale feature fusion network (AM-MSFFN) and achieved state-of-the-art performance in EEG-based emotion recognition. Specifically, the classification accuracy for both arousal and valence dimensions exceeded 99% on the DEAP dataset. By integrating multi-scale feature extraction and the attention mechanism, this model effectively addressed subject-specific variations and noise interference, thereby mitigating the generalization issue. Tang et al. [31] proposed a graph representation learning framework, which can automatically construct and optimize the graph structure of EEG signals for classification. This model demonstrated outstanding performance in both subject-dependent and subject-independent experiments, exhibiting strong generalization ability. Xie et al. [32] converted EEG signals into time-frequency images and applied a convolutional neural network, achieving a high classification accuracy of 88.83% in the automatic sleep staging task.

Although EEG-based cognitive state assessment has proven to be effective, EEG signals exhibit limitations, such as non-stationarity, instability, and nonlinearity. Coupled with differences in subjects’ head shapes, brain patterns, and habits, EEG signals become highly complex, significantly impacting the generalization capability and accuracy of assessment methods [14]. In contrast, brain functional connectivity quantifies the strength and directionality of functional connections between different brain regions. It thereby provides deeper insight into information flow and integration within the brain and offers better accuracy [33].

Cross-subject generalization is also crucial for addressing the aforementioned issue of individual differences. Due to the significant inter-subject variability inherent in EEG signals, models trained on data from one subject often fail to generalize effectively to others, substantially limiting the practical deployment of cognitive state assessment models. To mitigate this, researchers have proposed various cross-subject approaches. For instance, Xiong et al. [34] adopted a multi-source domain adaptation method to reduce interference from diverse EEG sources, while Zhang et al. [35] combined source microstate analysis with style transfer mapping, achieving performance in cross-subject emotion recognition that surpassed traditional features such as differential entropy. Nevertheless, existing methods still exhibit limitations: most approaches relying on raw EEG signals or conventional features may not adequately capture the fundamental characteristic of inter-regional neural coordination within the brain.

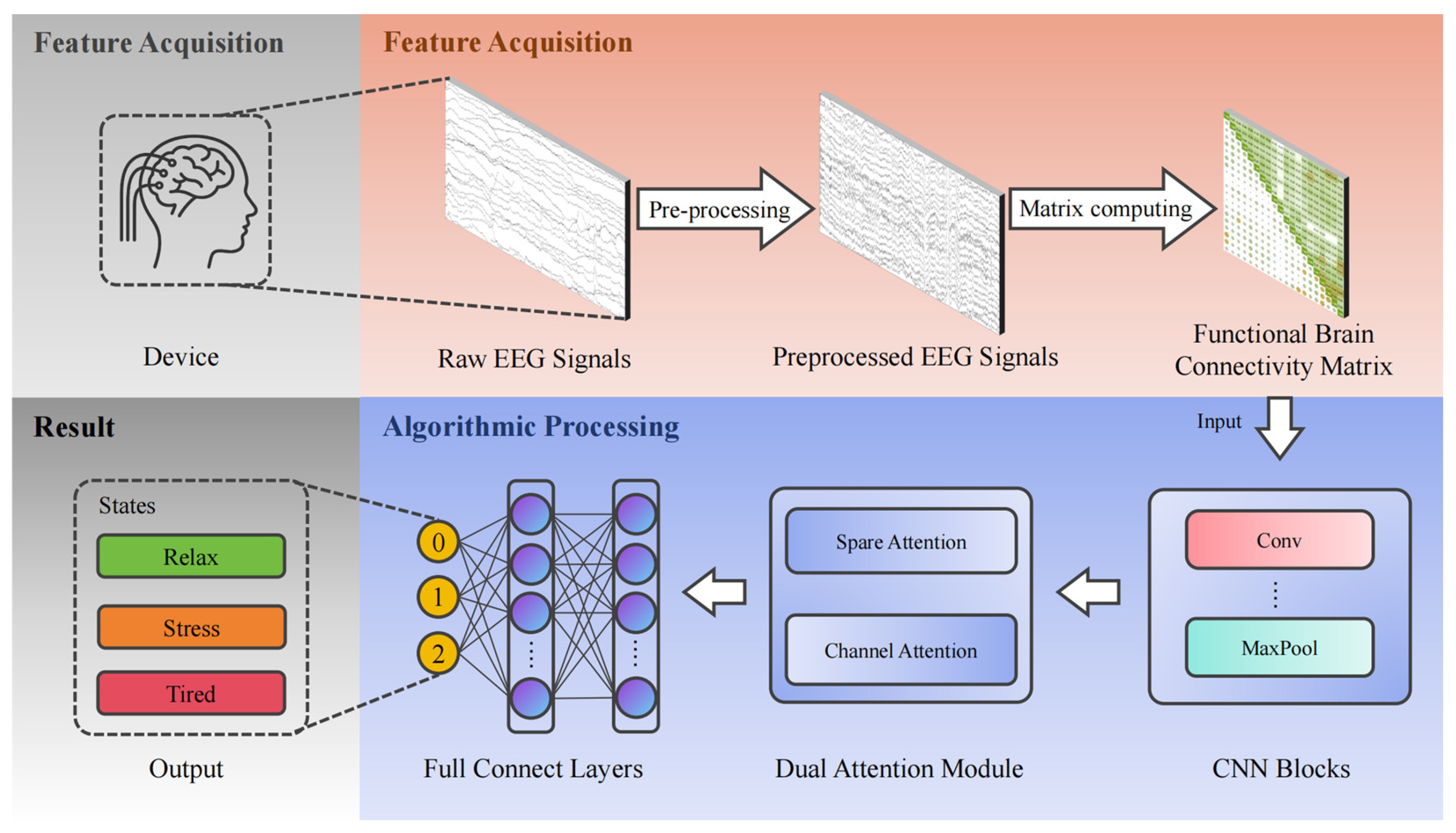

Therefore, this paper addresses the aforementioned concerns and establishes a UAV operator’s cognitive state evaluation model, namely the dual attention module-based convolutional neural network (DAM-CNN). Specifically, this study adopts brain functional connectivity for evaluation rather than standard EEG signals, providing richer spatial, frequency, and inter-regional brain activation information. In addition, this study proposes a dual-attention mechanism that integrates a Position Attention Module (PAM) and a Channel Attention Module (CAM) to capture contextual dependencies. The model is then empirically validated across different subjects to demonstrate its generalization. Experimental verification shows that the recognition accuracy of this method is significantly improved, demonstrating its practical value for promoting dynamic human–UAV collaboration and ensuring smooth interaction. The detailed cognitive state assessment workflow is presented in Figure 1.

2. Model Establishment

2.1. Cognitive State Classification Process

During EEG signal acquisition, we cannot directly obtain usable brain functional connectivity matrices. It is usually necessary to filter clutter from the raw signals, select the required electrical signals, and finally compute the correlation coefficient matrix from the processed signals. This matrix is then used as the dataset input for training a CNN model, yielding a trained classifier.

Electrodes were positioned based on the international 10–20 system using a 20-channel Ag/AgCl active EEG system. The electrodes used were: Fp1, Fpz, Fp2, F7, F3, Fz, F4, F8, T7, C3, Cz, C4, T8, P7, P3, Pz, P4, P8, O1, and O2. Throughout the acquisition step, the electrode impedance was maintained below 5 kΩ, as per the specifications of the Winfull Instruments Co., Ltd. (Shanghai, China) system. This threshold is within the established standard for high-quality EEG recordings, ensuring a strong signal-to-noise ratio and the overall reliability of the acquired neural data. The captured EEG signals will record voltage readings from the aforementioned 20 electrodes across N sampling points, ultimately forming a 20 × N matrix.

It is important to note that during the EEG signal acquisition process, data corruption may occur due to poor electrode contact, which can manifest as significant baseline drift or various artifacts. Additionally, electrooculographic and electromyographic artifacts originating from eye blinks or muscle movements can introduce substantial signal noise. Therefore, preprocessing is an essential step to enhance the overall data quality. For this study, we utilized the EEGLab toolbox (Swartz Center for Computational Neuroscience, San Diego, CA, USA) for the preprocessing of the EEG data, which included procedures for electrode localization, data filtering, interpolation of faulty channels, baseline correction, rejection of artifact-contaminated segments, and finally, artifact removal via Independent Component Analysis (ICA). This study used ICLabel (Swartz Center for Computational Neuroscience, San Diego, CA, USA) to pre-classify all components. These automated labels were then verified through manual review. Any discrepancies between the automated classification and the manual inspection were resolved in favor of the manual assessment. This strategy ensures both the consistency of a standardized pipeline and the accuracy of expert oversight. The detailed processing workflow is depicted in Figure 2.

After EEG signal preprocessing, brain functional connectivity was studied by computing the correlation between 20 EEG electrodes, providing data support for subsequent cognitive state assessment modeling. This paper employs the Pearson correlation coefficient for functional connectivity construction, as it is a well-established and effective metric for assessing linear synchrony between EEG signals from different brain regions, as demonstrated in numerous cognitive state evaluation studies [36,37,38,39]. The Pearson method suits the objectives of this study because it provides a robust measure of linear dependencies in the time-domain signals, which indicate coordinated neural activity and give a broader, more integrated view of the brain network coordination relevant to the sustained cognitive states involved in UAV operation. The Pearson correlation coefficient between the i-th and j-th electrodes is calculated as follows:

$$r_{ij} = \frac{\sum_{t=1}^{T}\left(x_i(t) - \bar{x}_i\right)\left(x_j(t) - \bar{x}_j\right)}{\sqrt{\sum_{t=1}^{T}\left(x_i(t) - \bar{x}_i\right)^2}\,\sqrt{\sum_{t=1}^{T}\left(x_j(t) - \bar{x}_j\right)^2}}$$

where $x_i(t)$ represents the EEG time-domain signal of the i-th electrode at the t-th sampling point, $\bar{x}_i$ is the mean of that signal over the window, and $T$ represents the total number of sampling points. The correlation coefficients between all 20 electrodes form a brain functional connectivity matrix, serving as the input for the cognitive state classification model.
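The connectivity computation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function name `connectivity_matrix` and the synthetic 20 × 1250 window are assumptions introduced only for the example.

```python
import numpy as np

def connectivity_matrix(eeg: np.ndarray) -> np.ndarray:
    """Pearson functional connectivity for one EEG window.

    eeg has shape (n_channels, n_samples); the result has shape
    (n_channels, n_channels). A channel with zero variance would
    produce a division by zero and is assumed not to occur here.
    """
    centered = eeg - eeg.mean(axis=1, keepdims=True)  # remove each channel's mean
    cov = centered @ centered.T                       # unnormalized covariance
    norm = np.sqrt(np.diag(cov))                      # per-channel root sum of squares
    return cov / np.outer(norm, norm)

# Synthetic stand-in for a 20-electrode window (hypothetical data).
rng = np.random.default_rng(0)
window = rng.standard_normal((20, 1250))
fc = connectivity_matrix(window)
```

The result is symmetric with a unit diagonal, and it agrees with `np.corrcoef(window)`, which could be used directly instead.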

2.2. CNN Model Establishment

Common models for state classification using EEG signals include EEGNet and ShallowConvNet [40,41]. The EEGNet architecture is based on depth-wise separable convolution, sequentially comprising a temporal convolutional layer, a spatial convolutional layer (depth-wise convolution), and a feature mixing layer (pointwise convolution), supplemented with normalization constraints and dropout regularization. Although EEGNet has a compact structure, its performance remains notably sensitive to several key hyperparameters. For new datasets, a certain degree of hyperparameter tuning is still required to achieve optimal performance. The ShallowConvNet architecture consists of two convolutional layers (temporal convolution followed by spatial convolution), a square nonlinear activation function ($f(x) = x^2$), an average pooling layer, and a logarithmic nonlinear function. Specifically designed for oscillatory signal classification by extracting features related to log-band power, the ShallowConvNet architecture consequently possesses certain inherent limitations.

Common analytical methods for brain functional connectivity matrices include Support Vector Machine (SVM) [42,43,44], Random Forests (RF) [45], and Convolutional Neural Networks (CNN) [46]. While SVM is often suitable for small-sample learning scenarios, it typically involves complex parameter configuration and exhibits poor performance with large datasets, including prolonged training times and insufficient robustness. RF requires constructing numerous random trees, consuming substantial computational resources and resulting in extended prediction durations during the inference phase. In contrast, CNNs feature relatively fewer parameters, demonstrate faster training speeds, and maintain strong generalization capabilities, while also possessing a degree of robustness to small shifts in the input matrix owing to the approximate translation invariance conferred by weight sharing and pooling. Therefore, this study selects a CNN-based model for classifying brain functional connectivity matrices, with SVM, RF, EEGNet, and ShallowConvNet all serving as baseline models for comparison purposes.

Using the entire signal over a period as input is overly complex, and hinders effective feature extraction. This paper uses brain functional connectivity matrices between 20 electrodes within a time window as feature inputs. This reduces data volume and at the same time better reflects activity relationships between brain regions.

Let the original brain functional connectivity matrix be:

where N is the number of electrodes (N = 20 in this paper). After normalization, we obtain the normalized matrix

. To meet CNN input requirements, reshape it into a 4D tensor (batch size, channels, height, width):

The final dataset contains N samples:

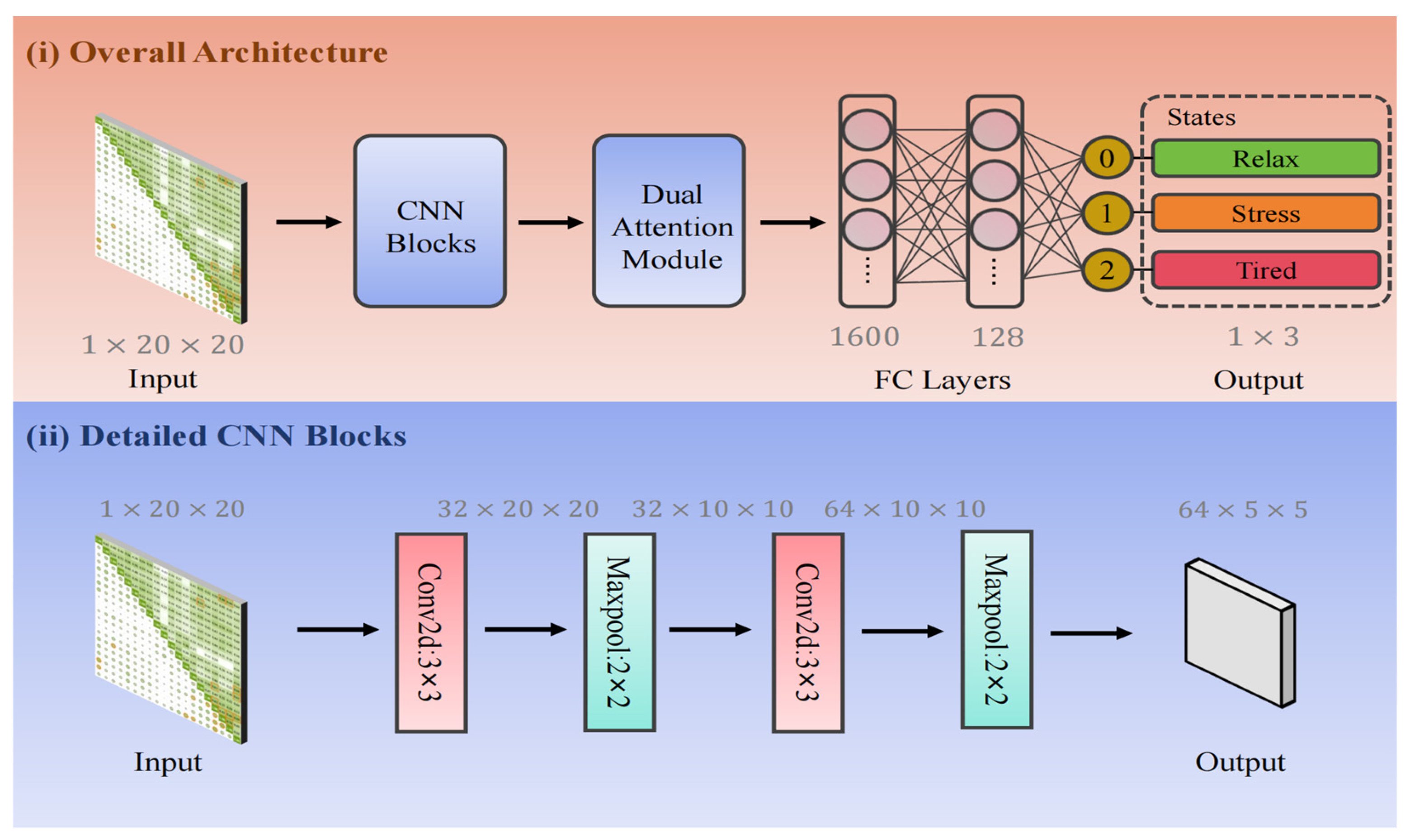

We first input these N samples into the CNN’s convolutional feature extraction layers, consisting of two convolutional and pooling layers:

, are the convolutional kernel weights for the first and second layers, respectively. Both layers use 3 × 3 kernels to simulate functional interactions between neighboring brain regions. ∗ is the 2D convolution. , are the biases for the first and second convolutional operations. Both pooling layers employ max pooling with a kernel size of 2. The final output is
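To make the effect of the two convolution and pooling stages concrete, the following sketch traces the spatial size of a 20 × 20 connectivity matrix through them. The padding and stride are not stated in the text, so `padding` is left as a parameter, and the pooling stride is assumed equal to the pooling kernel size; all function names are illustrative.

```python
def conv_out(size: int, kernel: int = 3, padding: int = 0, stride: int = 1) -> int:
    """Output side length of a square 2D convolution."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size: int, kernel: int = 2) -> int:
    """Output side length of max pooling (stride assumed equal to kernel size)."""
    return size // kernel

def trace_shapes(size: int = 20, padding: int = 0) -> list:
    """Side lengths after each of the two conv + pool stages, starting from the input."""
    shapes = [size]
    for _ in range(2):
        size = conv_out(size, padding=padding)
        shapes.append(size)
        size = pool_out(size)
        shapes.append(size)
    return shapes

no_padding = trace_shapes(20, padding=0)    # unpadded 3 x 3 convolutions
same_padding = trace_shapes(20, padding=1)  # convolutions that preserve the size
```

Under these assumptions, the feature map passed to the fully connected layers would be 3 × 3 per channel without padding, or 5 × 5 per channel with a padding of 1.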

After obtaining the feature output Z, classification is performed through fully connected layers. In this paper, the fully connected component first flattens the input tensor into a one-dimensional vector and then applies two linear transformation layers to achieve progressive dimensionality reduction and final classification. However, the standard CNN architecture alone yields suboptimal classification performance. This limitation arises because meaningful information is not uniformly distributed across the functional connectivity matrix; it tends to be concentrated in specific regions, while numerous irrelevant or redundant connections degrade the model’s effectiveness and generalization capability [47]. To address this, we introduce an attention mechanism and construct a CNN framework incorporating a Dual Attention Module, yielding the DAM-CNN architecture. This integration enables the CNN model to dynamically and selectively concentrate on the most critical neurophysiological features while suppressing non-essential ones, thereby enhancing the model’s representational power. The complete architecture of the proposed model is presented in Figure 3.

2.3. Dual Attention Module (DAM)

To model long-range functional connectivity between brain regions, a self-attention mechanism is introduced. Self-attention is an internal attention mechanism that associates different positions within a single sequence, encoding sequence data based on importance scores [48]. It is popular for improving long-range dependency modeling [49]. The attention function maps a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, with weights assigned based on the compatibility function between the query and the corresponding key.

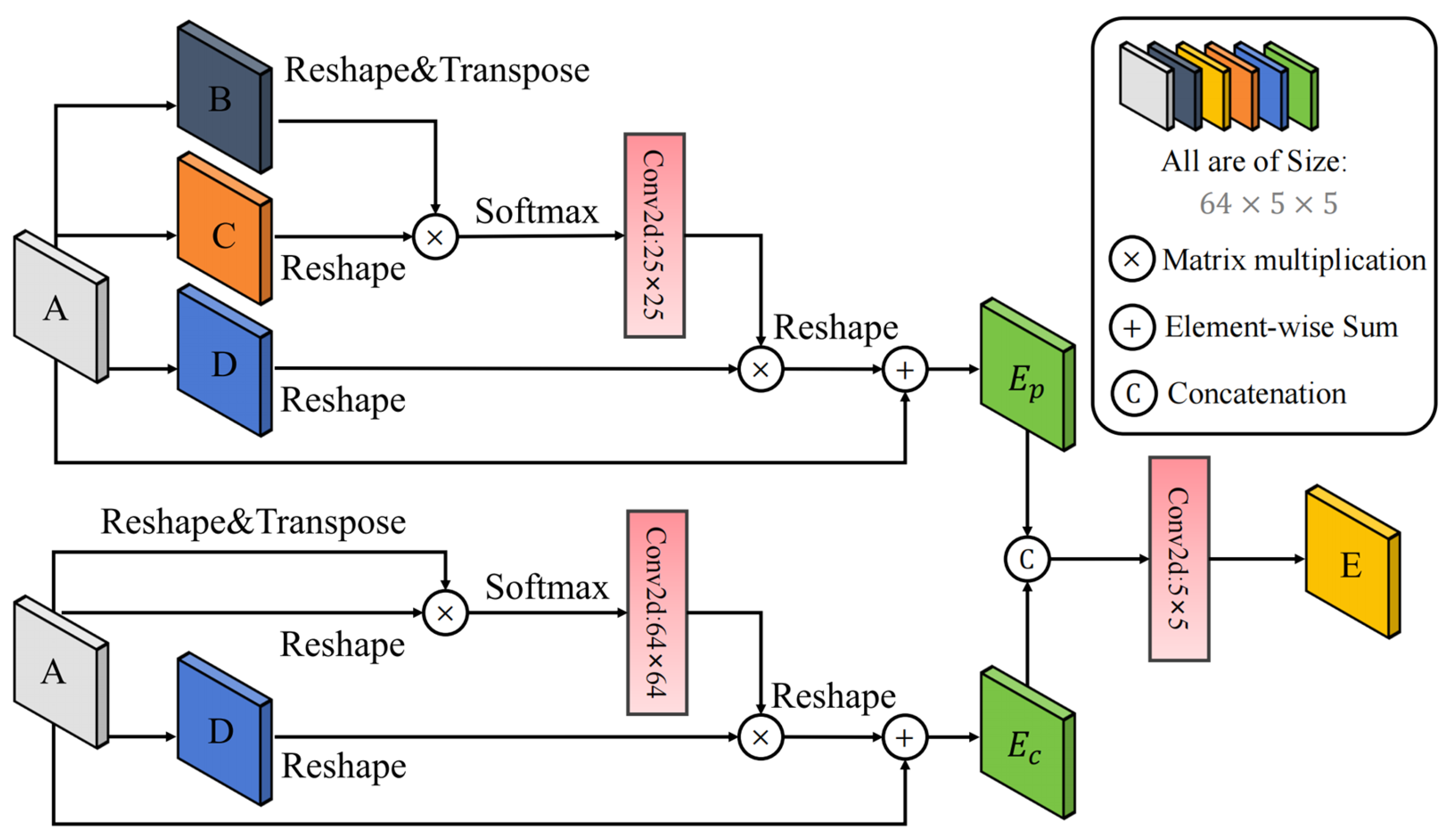

The DAM combines a Position Attention Module (PAM) and a Channel Attention Module (CAM), both built on self-attention, and was introduced to enhance feature representation in segmentation tasks. PAM learns spatial interdependencies of features, while CAM models channel interdependencies. Together, they capture contextual dependencies over local features, which improved segmentation results and motivates their use for classification here.

Taking the position attention module (upper part of Figure 4) as an example, we first apply a convolutional layer to obtain dimension-reduced features. These features are then input to the Position Attention Module, generating new features containing long-range spatial context through three steps: First, a spatial attention matrix is generated, modeling spatial relationships between any two electrodes. Next, matrix multiplication is performed between this attention matrix and the original features. Finally, an element-wise summation is performed between the resulting matrix and the original features to obtain the final representation reflecting long-range context. The output for position attention features is given by:

$$E_j = \alpha \sum_{i=1}^{n} \left(s_{ji} D_i\right) + A_j, \qquad s_{ji} = \frac{\exp\left(B_i \cdot C_j\right)}{\sum_{i=1}^{n} \exp\left(B_i \cdot C_j\right)}$$

where $A$ is the input, $B = \omega_B \cdot A$, $C = \omega_C \cdot A$, $D = \omega_D \cdot A$, $s_{ji}$ is the entry of the spatial attention matrix that measures the influence of position i on position j, $n$ is the number of spatial positions, and $\alpha$ is a scale parameter.
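The three steps of the position attention module can be illustrated with a small NumPy sketch. It assumes the attention weights are a softmax over the inner products $B_i \cdot C_j$ and expresses the 1 × 1 convolutions $\omega_B$, $\omega_C$, $\omega_D$ as plain matrix products; the names `w_b`, `w_c`, `w_d` and all shapes are hypothetical, chosen only for the example.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def position_attention(A, w_b, w_c, w_d, alpha):
    """Position attention over a feature map A of shape (channels, H, W).

    w_b, w_c: (reduced_channels, channels); w_d: (channels, channels).
    """
    c, h, w = A.shape
    flat = A.reshape(c, h * w)        # one column per spatial position
    B = w_b @ flat                    # dimension-reduced features
    C = w_c @ flat
    D = w_d @ flat
    energy = B.T @ C                  # energy[i, j] = B_i . C_j
    S = softmax(energy, axis=0)       # step 1: attention matrix, normalized over i
    context = D @ S                   # step 2: column j = sum_i S[i, j] * D_i
    E = alpha * context + flat        # step 3: element-wise sum with the input
    return E.reshape(c, h, w)

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 5, 5))
w_b = rng.standard_normal((2, 4))
w_c = rng.standard_normal((2, 4))
w_d = rng.standard_normal((4, 4))
out = position_attention(A, w_b, w_c, w_d, alpha=0.0)
```

With `alpha=0.0` the module reduces to the identity, so the attention branch only contributes as the scale parameter moves away from zero.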

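Simultaneously, long-range contextual information along the channel dimension is captured by the Channel Attention Module. The process of capturing channel relationships is similar to PAM, except the channel attention matrix is computed in the channel dimension. The output for channel attention features is given by:

$$E_j = \beta \sum_{i=1}^{K} \left(x_{ji} A_i\right) + A_j, \qquad x_{ji} = \frac{\exp\left(A_i \cdot A_j\right)}{\sum_{i=1}^{K} \exp\left(A_i \cdot A_j\right)}$$

where $A$ is the input, $x_{ji}$ is the entry of the channel attention matrix that measures the influence of channel i on channel j, $K$ is the number of feature channels, and $\beta$ is a weight learned starting from 0.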

Finally, outputs from both attention modules are aggregated strategically to obtain contextually enhanced feature representations.
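The channel attention branch and the final aggregation can be sketched in the same style. The channel attention weights are assumed to be a softmax over inner products between flattened channel maps, and the two branch outputs are combined by element-wise summation; the text does not specify the aggregation operator, so `fuse` is an assumption, as are all names below.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def channel_attention(A: np.ndarray, beta: float) -> np.ndarray:
    """Channel attention over a feature map A of shape (channels, H, W)."""
    c, h, w = A.shape
    flat = A.reshape(c, h * w)        # one row per channel
    energy = flat @ flat.T            # energy[i, j] = A_i . A_j
    X = softmax(energy, axis=0)       # channel attention matrix, normalized over i
    context = X.T @ flat              # row j = sum_i X[i, j] * A_i
    E = beta * context + flat         # residual connection to the input
    return E.reshape(c, h, w)

def fuse(pam_out: np.ndarray, cam_out: np.ndarray) -> np.ndarray:
    """Aggregate both branches; element-wise sum is an assumed choice."""
    return pam_out + cam_out

rng = np.random.default_rng(1)
A = rng.standard_normal((4, 5, 5))
cam_out = channel_attention(A, beta=0.0)   # beta starts at 0, so this equals A
fused = fuse(A, cam_out)                   # A stands in for the PAM output here
```

Because `beta` starts at 0, the branch initially passes its input through unchanged and learns how much channel context to mix in during training.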

3. Experiment and Analysis

3.1. Subjects

With ethics approval obtained from the Medical and Experimental Animal Ethics Committee of Northwestern Polytechnical University (Project ID: 20250201), this study recruited 10 subjects aged between 22 and 24. Written informed consent was obtained from all participants. Participant selection followed a standardized protocol, and all participants had to meet the following requirements:

- (1) All participants have normal hearing.
- (2) All participants have normal or corrected-to-normal vision and are free from color vision deficiencies.
- (3) All participants have adequate sleep before experiments.
- (4) All participants are asked to avoid strenuous exercise before experiments.
- (5) All participants are in good health, with no history of mental, intellectual, or neurological illness, and no physical disabilities.
- (6) All participants are right-handed.
- (7) All participants are computer-literate.
- (8) All participants are highly educated.
- (9) All participants are capable of independently completing experimental tasks.

Notably, the participant pool was defined by the target application, that is, the operation of a UAV swarm, which is typically performed by personnel with similar training and experience levels, leading to a degree of inherent homogeneity in the user population. Hence, in order to ensure participant proficiency with the experimental task, all individuals completed a structured training course prior to data collection. This consisted of two hours of supervised practice using the UAV swarm simulation, continuing until each participant successfully achieved a predefined performance benchmark by completing a human–UAV teaming collaboration task. Although participants were not professionally certified operators, this targeted training protocol ensured they possessed the necessary operational competence to perform the experimental tasks reliably. Consequently, the cognitive load responses measured during the study reflect valid reactions to the task demands. The specific details and scores of the participants are shown in Table 1.

The overall experiment for each participant lasted 12 min, and experimental sessions were randomly scheduled across morning, midday, and evening time periods.

3.2. Experimental Task and Procedure

The experiment required participants to complete two tasks: a primary task involving the operation of 50 UAVs to complete a cruising mission, and a secondary task consisting of mental arithmetic exercises. This arithmetic task is a well-established, validated, and standardized method in the neuroergonomics literature for inducing different levels of cognitive load [50,51,52]. Additionally, it ensures reproducibility for the incremental manipulation of working memory and attentional demand, which is essential for isolating specific neural correlates of cognitive states.

The primary task simulated the operational demands on UAV operators, requiring participants to complete the mission within 2 min. This 2 min duration was chosen to balance several critical factors, as it was designed to mitigate participant fatigue that could confound EEG signals across multiple task repetitions, while also ensuring a sufficient time window for stable data acquisition to capture sustained cognitive engagement. Through extensive testing, this specific duration was established as the optimal period that allowed participants to meaningfully engage with the swarm guidance task while simultaneously managing the arithmetic distractor, thereby successfully inducing the targeted high-workload state.

The 50 UAVs were divided into 4 groups, and participants were required to verbally command these 4 groups to avoid radar detection while following designated routes. The secondary task comprised three difficulty levels, each requiring participants to complete 5 sets of random two-digit arithmetic problems within a fixed time limit. With each increasing difficulty level, the allotted time decreased by 5 s. The varying difficulty levels of the secondary task were designed to apply different levels of pressure on participants and objectively reflect their cognitive state through calculation accuracy rates.

Prior to the experiment, the researcher set the secondary task difficulty by determining the available time for each arithmetic problem. Participants familiarized themselves with interaction methods in the simulated task and practiced the cruising procedure. After completing preparation, participants wore EEG caps. Once the task began, participants commanded 4 UAV groups to cruise along designated routes. The system recorded group number, frequency, and timestamp whenever UAVs deviated from the prescribed route or failed to avoid radar detection. During primary task execution, the researcher randomly initiated secondary tasks during selected periods while simultaneously recording EEG signals. When secondary tasks were activated, participants needed to maintain normal UAV trajectory while performing mental calculations of two-digit arithmetic expressions displayed on the left side of the screen, entering answers via keyboard within the specified time limit. Arithmetic expressions were presented at equal time intervals.

Upon secondary task completion, the experimental program automatically calculated participants’ response accuracy rates and printed primary task error reports. Researchers then terminated EEG recording, and participants completed NASA-TLX questionnaires. Objective scores were calculated based on mental arithmetic accuracy and primary task error rates, while subjective scores were derived from NASA-TLX questionnaire results. Here, the NASA-TLX questionnaire was not used as a direct input feature for the proposed model. Instead, it served ground-truth validation and experimental design purposes. Specifically, the NASA-TLX scores were collected to confirm that the designed tasks (UAV guidance, mental arithmetic, and their combination) successfully induced different levels of subjective cognitive load in the participants. In addition, the task performance and NASA-TLX scores were integrated to compute a composite cognitive state rating. This approach ensures that the final cognitive state labels (i.e., Low, Medium, High load) were not based solely on task efficacy, which can be ambiguous, but were also grounded in the participants’ subjective experiences. The NASA-TLX questionnaire was therefore instrumental in creating a more robust and valid ground truth for model training. After all 10 participants completed their experiments, the final state ratings were sorted in descending order and equally divided into three groups, each assigned a distinct cognitive state label.

Researchers compiled all EEG signal files with corresponding cognitive state labels into a complete dataset for model training and testing.

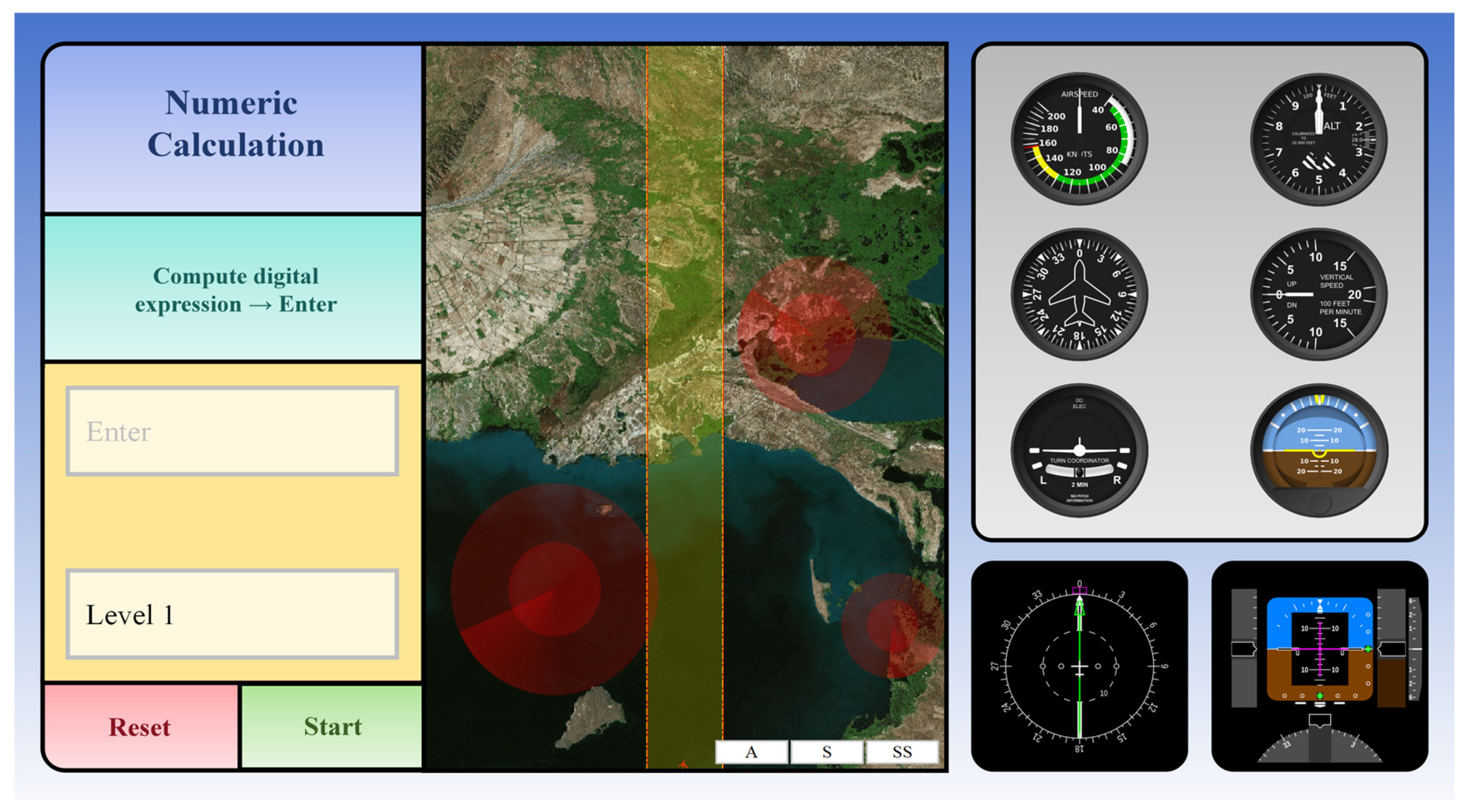

3.3. Experimental Interface

The experimental interface layout is structured in the following configuration: The central area prominently displays the UAV navigation map, with red sections clearly indicating radar detection zones and yellow areas representing designated UAV route boundaries. The right section presents multiple types of situational awareness information, while the left side features a dedicated digital arithmetic module. This particular module contains a green indicator box where target arithmetic expressions for calculation will be displayed after experiment initiation. Mental arithmetic difficulty is selected by the experimenter using an orange drop-down menu, with task initiation and system reset controlled via the green “start” and red “restart” buttons, respectively. Participants are required to input their mental calculation results in the yellow entry box positioned below the display area and must confirm their answers by pressing the “Enter” key on the computer keyboard. The complete detailed layout is illustrated in Figure 5.

3.4. Results and Analysis

Following preprocessing of the acquired EEG signals, the data were segmented using a 5 s sliding window with a 0.5 s step size. For each temporal window, pairwise Pearson correlation coefficients were computed across all 20 electrode signals to construct functional connectivity matrices.
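The segmentation step can be illustrated by computing the sample ranges of each window. The sampling rate is not stated in this section, so `fs` is a parameter and the 250 Hz value in the example is purely hypothetical, as are the function name and the 2 min recording length.

```python
def sliding_windows(n_samples: int, fs: float, window_s: float = 5.0, step_s: float = 0.5):
    """Return (start, stop) sample indices for each full window in a recording."""
    win = int(round(window_s * fs))    # window length in samples
    step = int(round(step_s * fs))     # hop between consecutive windows
    return [(s, s + win) for s in range(0, n_samples - win + 1, step)]

# Example: a 2 min recording at an assumed sampling rate of 250 Hz.
fs = 250
windows = sliding_windows(n_samples=120 * fs, fs=fs)
n_windows = len(windows)
```

Each `(start, stop)` pair selects one 20 × T slice of the recording, from which a single functional connectivity matrix is computed. Consecutive windows overlap by 4.5 s, so neighboring samples are strongly correlated.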

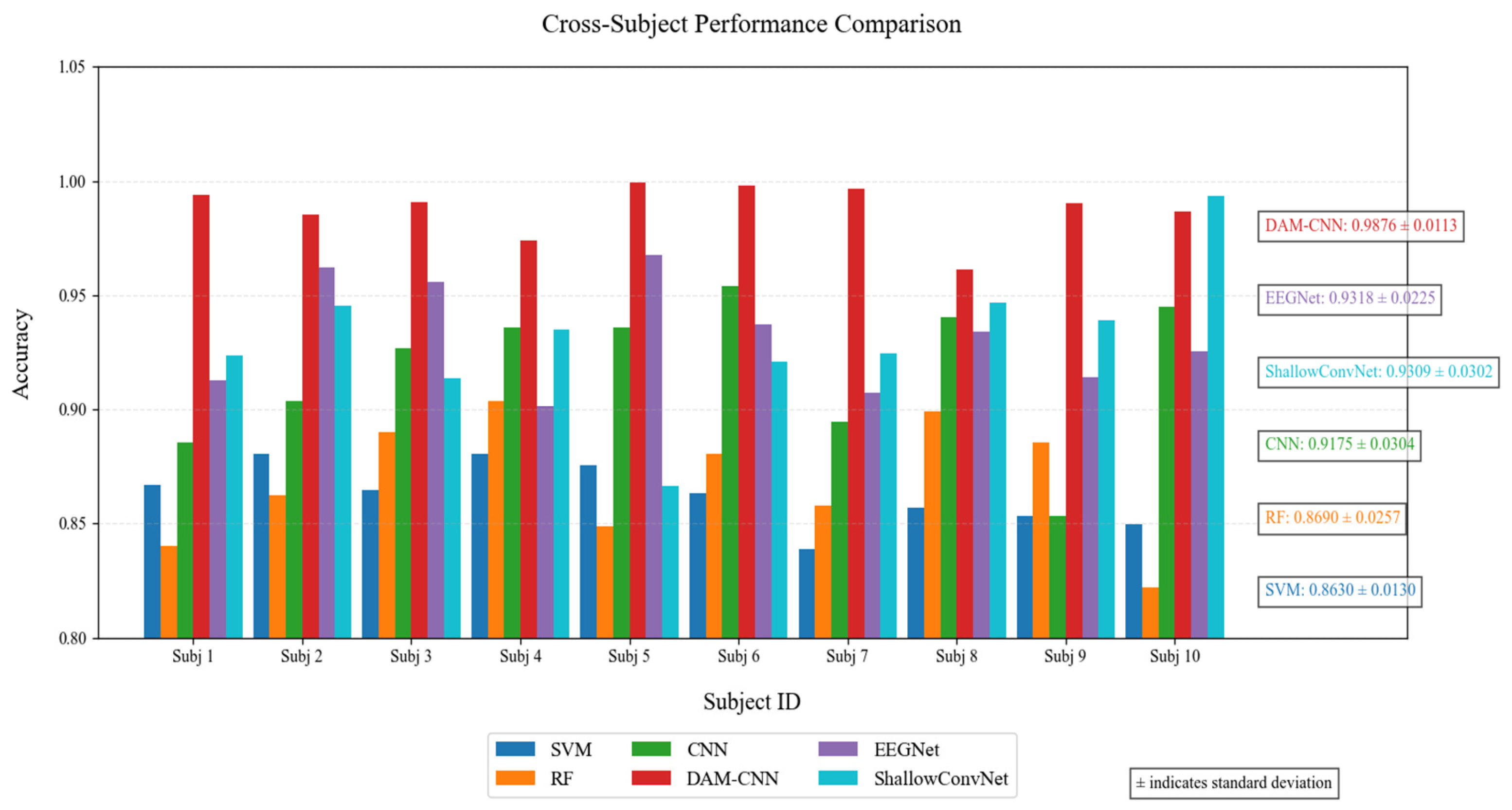

For the baseline models, classification was implemented using the preprocessed EEG signals as input. For the DAM-CNN model, the functional connectivity matrices served as input. We selected two traditional machine learning methods (SVM and RF) along with three deep learning approaches (CNN, EEGNet, and ShallowConvNet) as baseline models. This study specifically designed the cross-subject validation to test generalization within this realistic operational context through leave-one-subject-out cross-validation. A comparative analysis was performed between these five baseline models and the proposed model in terms of average accuracy and robustness, as illustrated in Figure 6.
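Leave-one-subject-out cross-validation can be sketched as a generator that holds out all samples of one subject per fold. The function name and the toy subject IDs are illustrative; model training and evaluation are omitted.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    subject_ids[k] is the subject that sample k belongs to, so no sample
    from the held-out subject ever appears in the training indices.
    """
    for held_out in sorted(set(subject_ids)):
        train = [k for k, s in enumerate(subject_ids) if s != held_out]
        test = [k for k, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test

# Toy example: five samples from three subjects.
subject_ids = [1, 1, 2, 2, 3]
folds = list(leave_one_subject_out(subject_ids))
```

With 10 subjects this yields 10 folds, and the reported mean accuracy and variance would be computed over the per-fold test accuracies.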

On this dataset, the proposed DAM-CNN method achieved a mean three-class classification accuracy of 98.76% across 10 subjects. The traditional machine learning methods, SVM and RF, yielded mean accuracies of 86.30% and 86.90%, respectively. The deep learning methods EEGNet, and ShallowConvNet attained mean accuracies of 93.18%, and 93.09% across 10 subjects, while the CNN approach employed by Yang et al. (using raw EEG signals as input) [

28] achieved a mean accuracy of 91.75% on the 10-subject dataset. Under identical experimental conditions, the proposed method demonstrated accuracy improvements of 12.46%, 11.86%, 5.58%, and 5.67% relative to the four baseline models across 10 subjects, representing a 7.01% mean accuracy improvement compared to Yang et al.’s model [

28]. This validates that the cross-subject cognitive state assessment framework based on brain functional connectivity effectively processes EEG signals while fully leveraging task context information. As clearly shown in

Figure 7, model accuracy varies across different subjects. We calculated the accuracy variance across 10 subjects: SVM exhibited a variance of 0.0130, RF 0.0257, EEGNet 0.0225, ShallowConvNet 0.0302, Yang et al.’s CNN method 0.0304, and the proposed DAM-CNN model 0.0113. Comparatively, the variances were reduced by 0.0017, 0.0144, 0.0112, 0.0189, and 0.0191, respectively. These results clearly demonstrate that DAM-CNN exhibits superior stability across different subjects, indicating enhanced generalization capability. The specific test metrics for each model are shown in

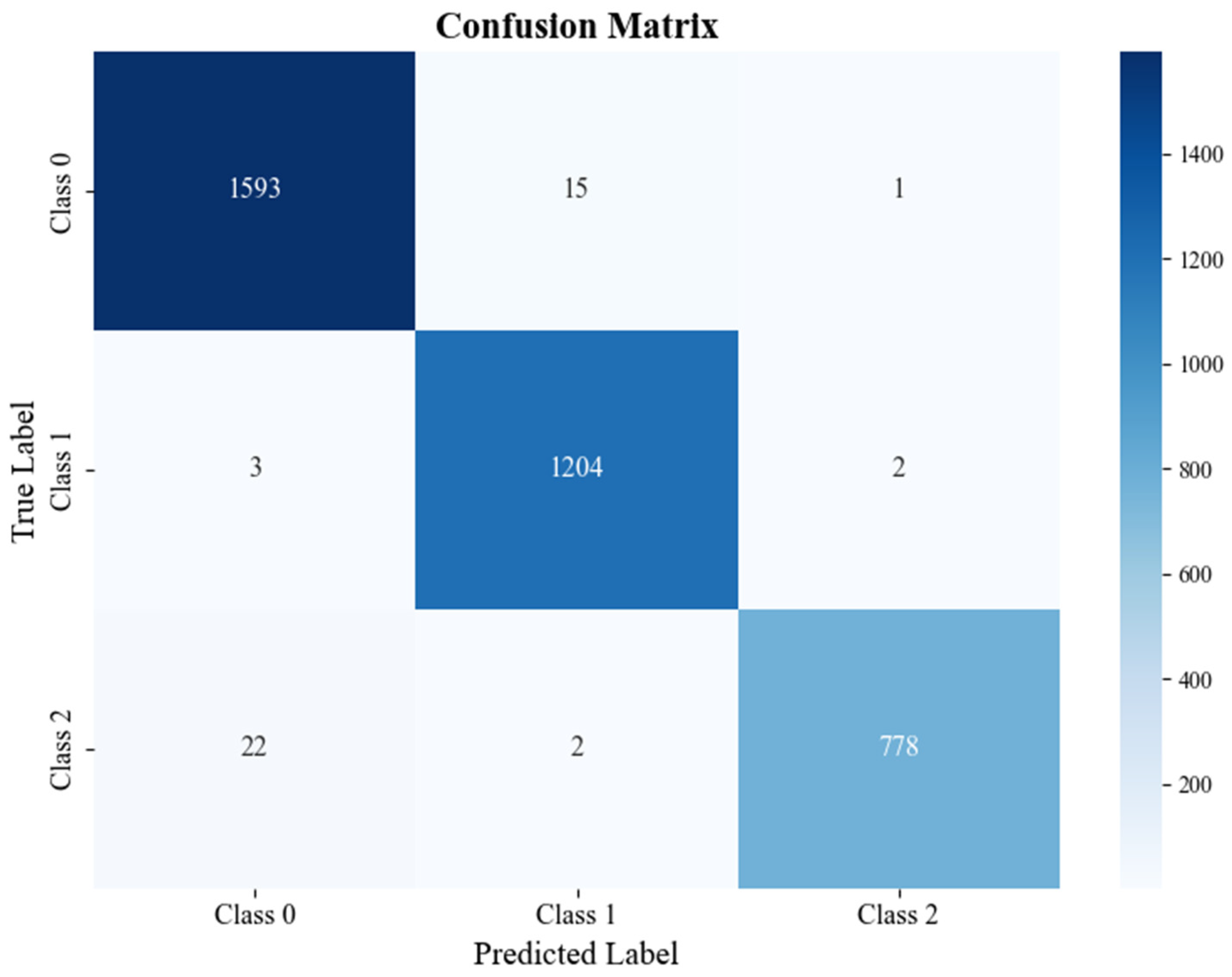

Table 2. Meanwhile, we calculated the overall prediction results of the DAM-CNN model on the 10-subject dataset and plotted the corresponding confusion matrix, as shown in

Figure 8.
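The reported gains follow directly from the mean accuracies and variances quoted above. The short script below recomputes them as plain differences; only the dictionary names are introduced here.

```python
# Mean accuracies (%) and across-subject variances as reported in the text.
dam_cnn_acc, dam_cnn_var = 98.76, 0.0113
baseline_acc = {"SVM": 86.30, "RF": 86.90, "EEGNet": 93.18,
                "ShallowConvNet": 93.09, "CNN (Yang et al.)": 91.75}
baseline_var = {"SVM": 0.0130, "RF": 0.0257, "EEGNet": 0.0225,
                "ShallowConvNet": 0.0302, "CNN (Yang et al.)": 0.0304}

# Accuracy gain in percentage points and variance reduction, per baseline.
acc_gain = {name: round(dam_cnn_acc - acc, 2) for name, acc in baseline_acc.items()}
var_reduction = {name: round(var - dam_cnn_var, 4) for name, var in baseline_var.items()}

for name in baseline_acc:
    print(f"{name}: +{acc_gain[name]:.2f} points, variance -{var_reduction[name]:.4f}")
```

These are absolute differences in percentage points, not relative improvements. For example, the gain over SVM is 98.76 − 86.30 = 12.46 points.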

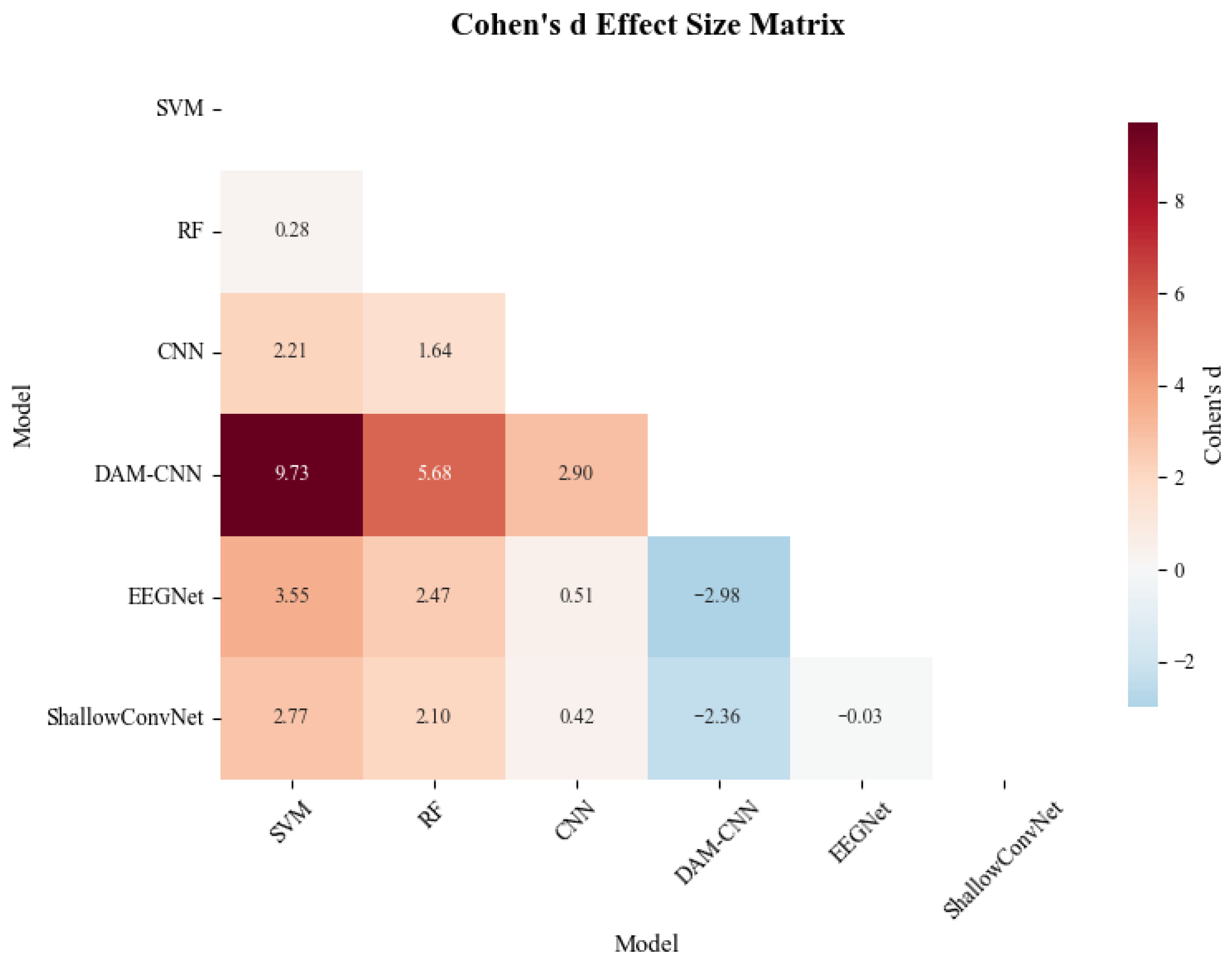

The application of neurophysiological monitoring, particularly through EEG, holds significant promise for enhancing safety and performance in aviation and unmanned systems operations [

58,

59,

60]. Unlike subjective self-reports or performance metrics alone, EEG provides an objective, continuous, and real-time window into an operator’s cognitive state. This capability is crucial in high-stakes environments where cognitive overload, fatigue, or a loss of situational awareness can lead to critical errors. The key advantages driving this research include the potential for real-time cognitive state assessment, which could enable adaptive systems to mitigate overload by simplifying interfaces or reallocating tasks. Furthermore, it facilitates a shift towards proactive safety paradigms by identifying cognitive degradation before it results in an operational failure, and it provides a quantitative basis for personalized training by revealing individual neurocognitive responses to complex scenarios. As depicted in

Figure 9, the effect-size matrix quantifies the magnitude of the performance differences between the proposed DAM-CNN and the baseline models. DAM-CNN achieves very large effect sizes relative to SVM (d = 9.73), RF (d = 5.68), and CNN (d = 2.90). Conversely, EEGNet and ShallowConvNet show large negative effect sizes (−2.98 and −2.36, respectively) relative to DAM-CNN, indicating that both perform worse than the proposed model. The matrix therefore corroborates that DAM-CNN outperforms every baseline by a large margin, consistent with the accuracy and variance analyses presented earlier. Our work directly contributes to this evolving field by developing a robust framework for cross-subject cognitive state assessment, a necessary step toward the practical deployment of such brain-aware systems in real-world aviation contexts.
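For readers who wish to compute a comparable effect size, a minimal sketch is given below. It assumes that d denotes Cohen's d with a pooled sample standard deviation, which the text does not state explicitly. The per-subject accuracies are hypothetical placeholders, not the data of this study.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(a, b):
    """Cohen's d of sample a versus sample b, using the pooled sample SD."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var)


# Hypothetical per-subject accuracies (NOT the study's results).
proposed_acc = [0.90, 0.92, 0.88, 0.91, 0.89]
baseline_acc = [0.80, 0.83, 0.78, 0.82, 0.79]

d = cohens_d(proposed_acc, baseline_acc)
print(f"d = {d:.2f}")  # d > 0 when the first sample has the higher mean
```

The sign of d depends only on the order of the arguments, which is why the same comparison can appear as a positive value in one cell of an effect-size matrix and as a negative value in the mirrored cell.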

Traditional EEG-based cognitive state assessment methods suffer from excessive data volume, numerous interfering factors, and insufficient feature representation in deep learning frameworks, and they fail to adequately capture inter-electrode correlations. These limitations often lead to inaccurate assessment of operators’ cognitive states, making it difficult to promptly adjust their operational readiness. In contrast, the cognitive state classification model developed in this study determines operators’ cognitive states consistently and accurately by capturing the functional connectivity relationships between electrodes. This approach provides reliable support for real-time UAV mission operations and can enhance operators’ decision-making accuracy and the fluency of system interaction, thereby facilitating dynamic human–UAV collaboration and seamless task execution.
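To make the notion of inter-electrode functional connectivity concrete, the following sketch builds a connectivity matrix from multichannel signals. It assumes Pearson correlation as the connectivity measure, which may differ from the measure used in this study. The function names and the toy signals are illustrative only.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length signals (non-zero variance assumed)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def connectivity_matrix(channels):
    """Symmetric electrode-by-electrode correlation matrix.

    channels: list of equal-length sample lists, one list per electrode.
    """
    n = len(channels)
    matrix = [[1.0] * n for _ in range(n)]  # each electrode correlates 1.0 with itself
    for i in range(n):
        for j in range(i + 1, n):
            matrix[i][j] = matrix[j][i] = pearson(channels[i], channels[j])
    return matrix


# Toy three-electrode recording (illustrative values, not real EEG).
eeg = [
    [0.0, 1.0, 2.0, 3.0],
    [0.0, 2.0, 4.0, 6.0],  # rises together with electrode 0
    [3.0, 2.0, 1.0, 0.0],  # falls as electrode 0 rises
]
fc = connectivity_matrix(eeg)
for row in fc:
    print([round(v, 3) for v in row])
```

Each entry of the resulting matrix summarizes how strongly two electrodes co-vary over the analysis window, so the matrix can be passed to a convolutional network as a single-channel image. With NumPy available, `numpy.corrcoef` applied to a channels-by-samples array yields the same matrix.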

Notably, the performance of the proposed architecture might be affected by a more diverse operator population. Specifically, age diversity could introduce variability in baseline neural rhythms and cognitive processing speed, potentially requiring model adaptation for different age cohorts. Varying fatigue levels might act as a confounding factor, altering EEG signatures in ways that could be misclassified as other cognitive states. In addition, differences in operational skill could affect the cognitive load experienced for the same task, meaning that our model may need to be conditioned on expertise level to achieve optimal accuracy in a heterogeneous group.

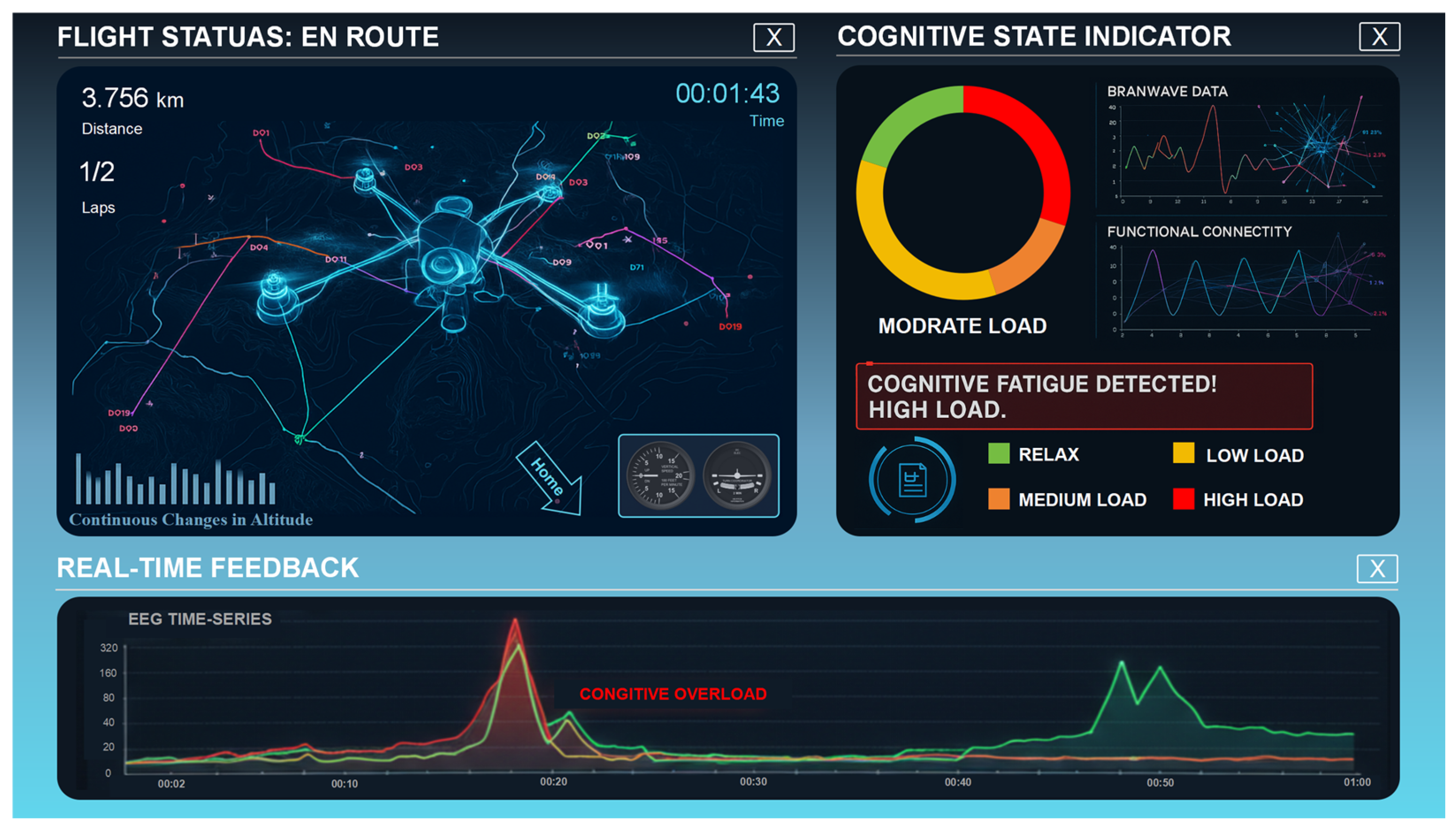

The ultimate goal of this work is to integrate the proposed EEG-based cognitive state evaluation model directly into the UAV operational loop. Because UAV operators work in a more stable environment than pilots, and because EEG signal acquisition is non-intrusive, this integration is relatively straightforward. We envision operators wearing a lightweight EEG headset that transmits brainwave signals to the ground control station software. The built-in DAM-CNN model would then process the brain functional connectivity data in real time, providing a continuous assessment of the operator’s cognitive state, which could be visualized on the console interface via a simple, intuitive indicator. In an advanced autonomous supervision framework, this real-time cognitive readout could trigger adaptive system responses, such as simplifying the interface during high mental workload, issuing alerts for cognitive fatigue, or even suggesting a transfer of control to a secondary operator to prevent performance degradation and enhance overall mission safety and efficacy. In subsequent research, new software will be developed specifically for the UAV console. This program will adapt the interface and modulate the amount of information displayed based on the cognitive state classification results, thereby aligning the human–machine interaction with the operator’s current cognitive capacity. When necessary, the system will issue warnings that prompt the operator to suspend operations at an appropriate time, notify relevant personnel to intervene promptly, or even assume control of the UAV to execute avoidance maneuvers and prevent safety-critical incidents. A sample demonstration of the implementation is shown in

Figure 10 below.
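The envisioned closed loop can be sketched as a small decision rule that maps a stream of classifier outputs to console responses. Everything in this sketch is hypothetical: the state labels, the response names, the confidence threshold, and the persistence window are illustrative assumptions rather than the design of the planned software.

```python
from dataclasses import dataclass
from enum import Enum


class CognitiveState(Enum):  # hypothetical labels; the real classes may differ
    NORMAL = "normal"
    HIGH_WORKLOAD = "high_workload"
    FATIGUE = "fatigue"


@dataclass
class Assessment:
    state: CognitiveState
    confidence: float  # classifier probability for the predicted state


def choose_response(history, min_confidence=0.8, persistence=3):
    """Pick a console response from recent assessments (oldest first).

    Low-confidence assessments are discarded; a response is triggered only
    when the last `persistence` confident assessments agree on one state.
    """
    confident = [a.state for a in history if a.confidence >= min_confidence]
    recent = confident[-persistence:]
    if len(recent) < persistence or len(set(recent)) != 1:
        return "no_action"  # not enough consistent evidence to act on
    if recent[0] is CognitiveState.HIGH_WORKLOAD:
        return "simplify_interface"
    if recent[0] is CognitiveState.FATIGUE:
        return "alert_and_suggest_handover"
    return "no_action"


stream = [Assessment(CognitiveState.FATIGUE, 0.9) for _ in range(3)]
print(choose_response(stream))
```

Requiring several consistent, confident assessments before acting is one simple way to keep a single misclassified EEG window from triggering an alert or an interface change.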

4. Conclusions

To achieve a more robust and accurate assessment of cognitive states in unmanned system operators, this study transforms EEG signals into brain functional connectivity matrices, which highlight the association strength between neural activities in different cerebral regions. By constructing a specialized convolutional neural network model integrated with a Dual Attention Module (DAM), we improved the accuracy of EEG-based cognitive state classification while reducing the variance in accuracy across subjects. These findings establish a technical foundation for smooth and efficient human–UAV interaction in future operational scenarios.

The findings of this study have direct implications for enhancing UAV operational reliability, safety assurance, and the development of adaptive autonomy. By enabling a robust, cross-subject, and real-time assessment of the operator’s cognitive state, our DAM-CNN model provides a critical missing data stream for next-generation human–machine systems. This capability contributes to operational reliability by allowing fatigue, overload, or cognitive decline to be detected early, before it leads to performance errors. From a safety assurance perspective, real-time cognitive monitoring acts as a safeguard that creates an opportunity for proactive intervention. Such intervention could range from alerting the operator and supervisory personnel to having the system temporarily simplify the interface or assume lower-level control tasks to prevent mishaps. Furthermore, this work lays a foundation for sophisticated human–machine teaming architectures. The reliable cognitive state output can serve as a key input for adaptive autonomous systems, enabling a UAV’s level of automation and information presentation to dynamically align with the operator’s current cognitive capacity. Ultimately, this paves the way for more resilient and collaborative human–machine teams, and the model can also be integrated into future training and evaluation frameworks to objectively assess and enhance operator proficiency under various cognitive demands.

However, this study has certain limitations. The number of participants was limited, their ages were concentrated within a narrow range, the genders were imbalanced, and their physical conditions were relatively homogeneous, all of which constrain our ability to comprehensively validate the model’s accuracy and robustness. Although the model does not require parameter recalibration for different subjects, retraining is still necessary. In future research, we will expand both the sample size and the diversity of participants, and we will develop more robust models that generalize effectively across subjects after a single training run.