Refined Extraction of Sugarcane Planting Areas in Guangxi Using an Improved U-Net Model

Highlights

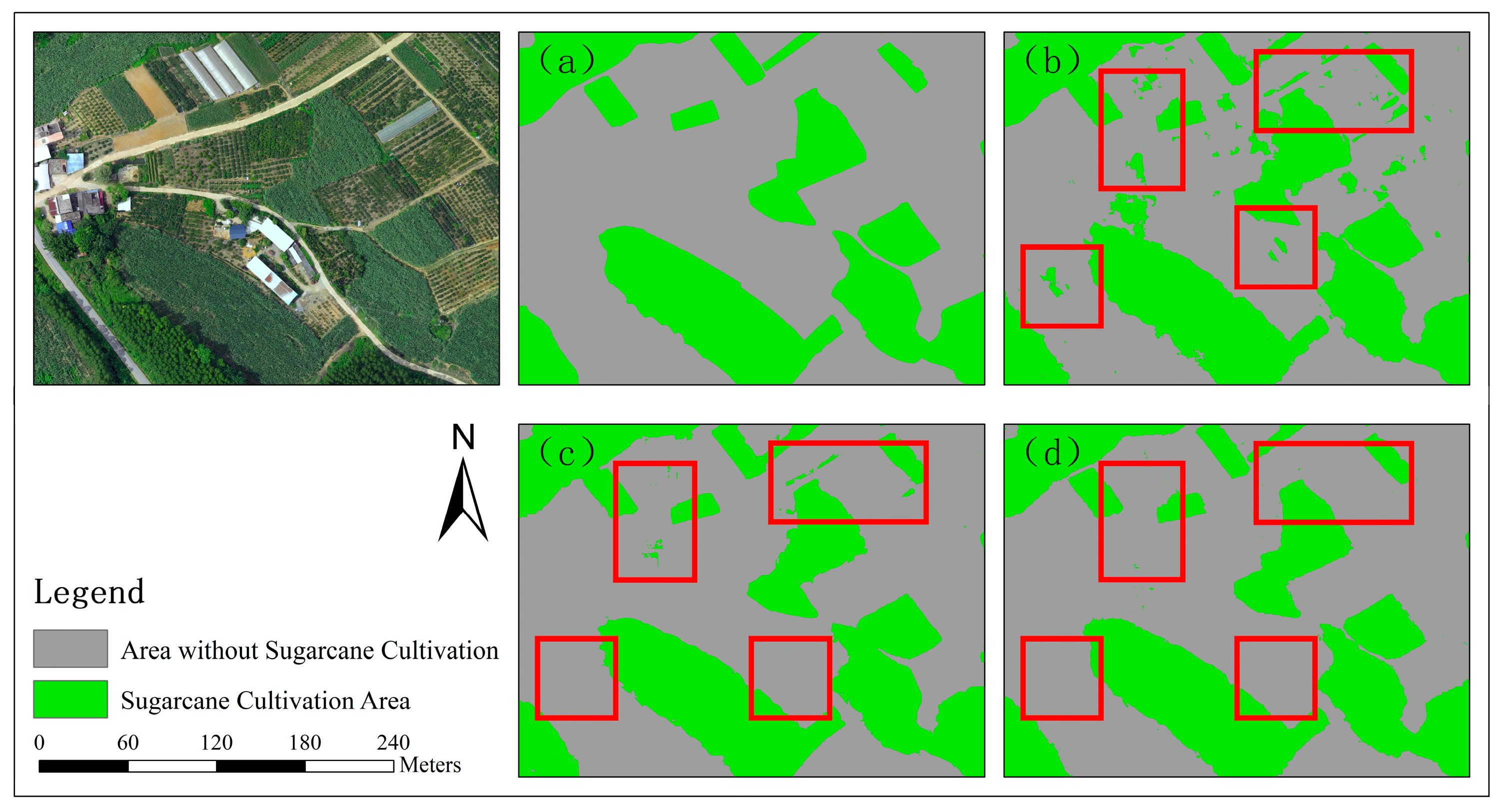

- The proposed RCAU-Net model, which integrates ResNet50, CBAM, and ASPP modules, achieved an overall accuracy of 97.19% and a mean Intersection over Union (mIoU) of 94.47%, substantially improving the accuracy of sugarcane extraction from UAV imagery.

- The model effectively suppresses misclassification of spectrally similar crops, minimizes internal holes in large continuous patches, reduces false extractions in small or boundary regions, and produces results with smoother and more accurate boundaries.

- The model developed in this study enables high-precision, large-scale sugarcane monitoring, providing critical support for sugar industry supply security and smart agricultural management.

- The framework offers a transferable solution for the refined extraction of similar economic crops, facilitating efficient and accurate UAV remote sensing applications in agriculture.

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area Overview

2.2. Image Data Acquisition

2.3. Dataset

2.3.1. Data Annotation

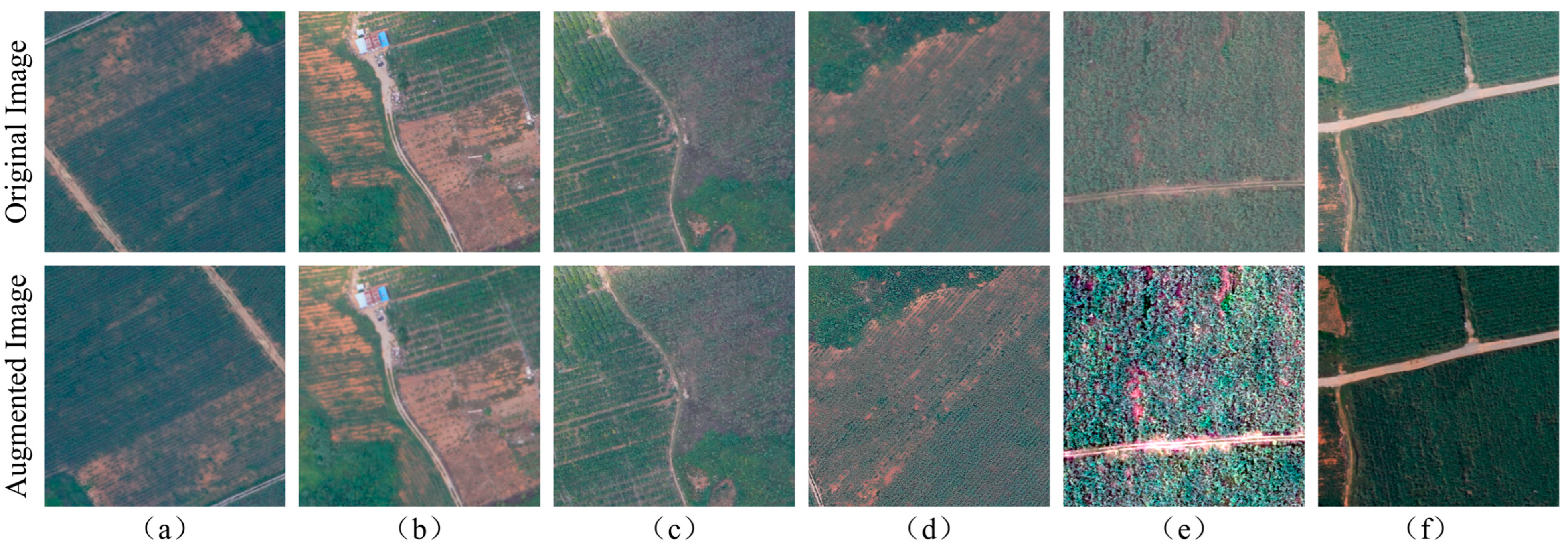

2.3.2. Data Augmentation

2.4. Method

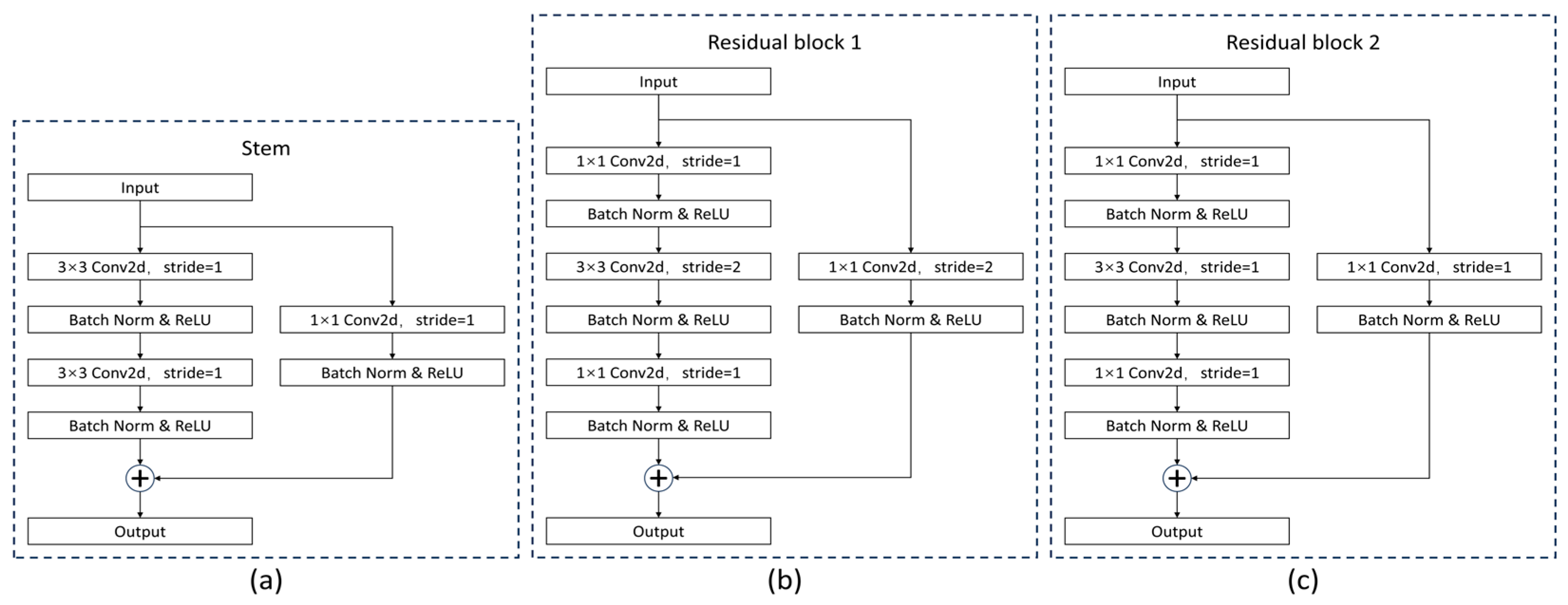

2.4.1. Residual Block

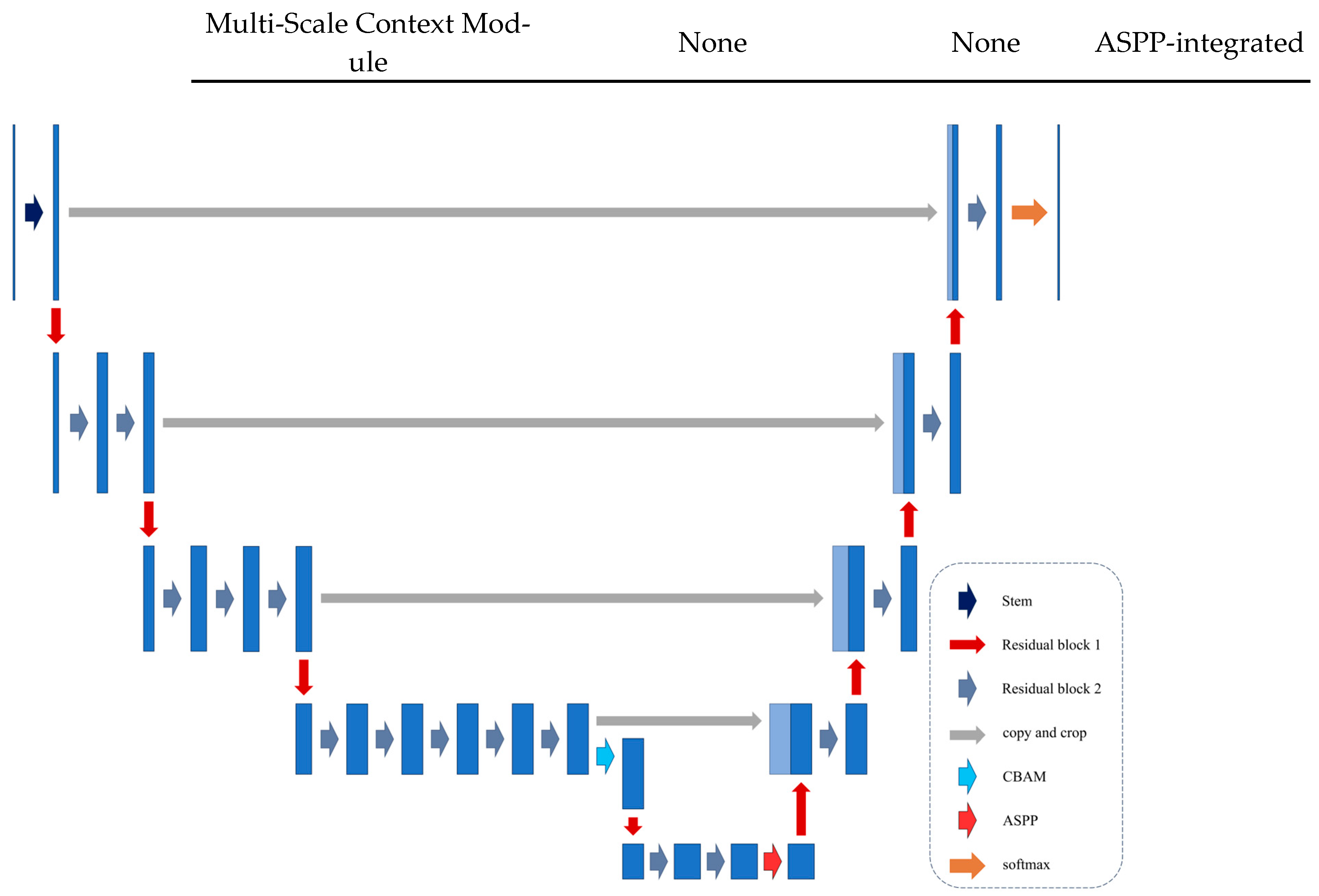

- Stem module: an input adaptation layer, inspired by the basic block, that adjusts the channel dimensions.

- Residual block 1: downsamples with a stride-2 convolution instead of max-pooling, which minimizes information loss.

- Residual block 2: maintains the spatial resolution in non-downsampling layers.
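The two block types listed above can be illustrated with a deliberately small one-dimensional sketch. It is not the authors' implementation: the function names, the kernel values, the 1×1 strided shortcut, and the omission of normalization layers are all assumptions made for illustration.

```python
def conv1d(x, kernel, stride=1):
    """1-D convolution with zero padding (odd kernel); stride 1 keeps the length."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    out = []
    for start in range(0, len(x), stride):
        window = padded[start:start + len(kernel)]
        out.append(sum(w * v for w, v in zip(kernel, window)))
    return out


def relu(x):
    return [max(0.0, v) for v in x]


def residual_block(x, kernel1, kernel2, stride=1):
    """y = ReLU(F(x) + shortcut(x)).

    stride=2: the first convolution halves the resolution (no max-pooling).
    stride=1: the resolution is preserved and the shortcut is the identity.
    """
    main = relu(conv1d(x, kernel1, stride=stride))
    main = conv1d(main, kernel2, stride=1)
    if stride == 1:
        shortcut = list(x)
    else:
        # Hypothetical 1x1 strided projection so both paths have equal length.
        shortcut = conv1d(x, [1.0], stride=stride)
    return relu([m + s for m, s in zip(main, shortcut)])


signal = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
identity = [0.0, 1.0, 0.0]
same_res = residual_block(signal, identity, identity, stride=1)  # length 8
half_res = residual_block(signal, identity, identity, stride=2)  # length 4
```

Because the shortcut carries the input forward unchanged, the block still passes a signal even when the convolution weights are zero, which is the property that eases gradient flow in deep encoders.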

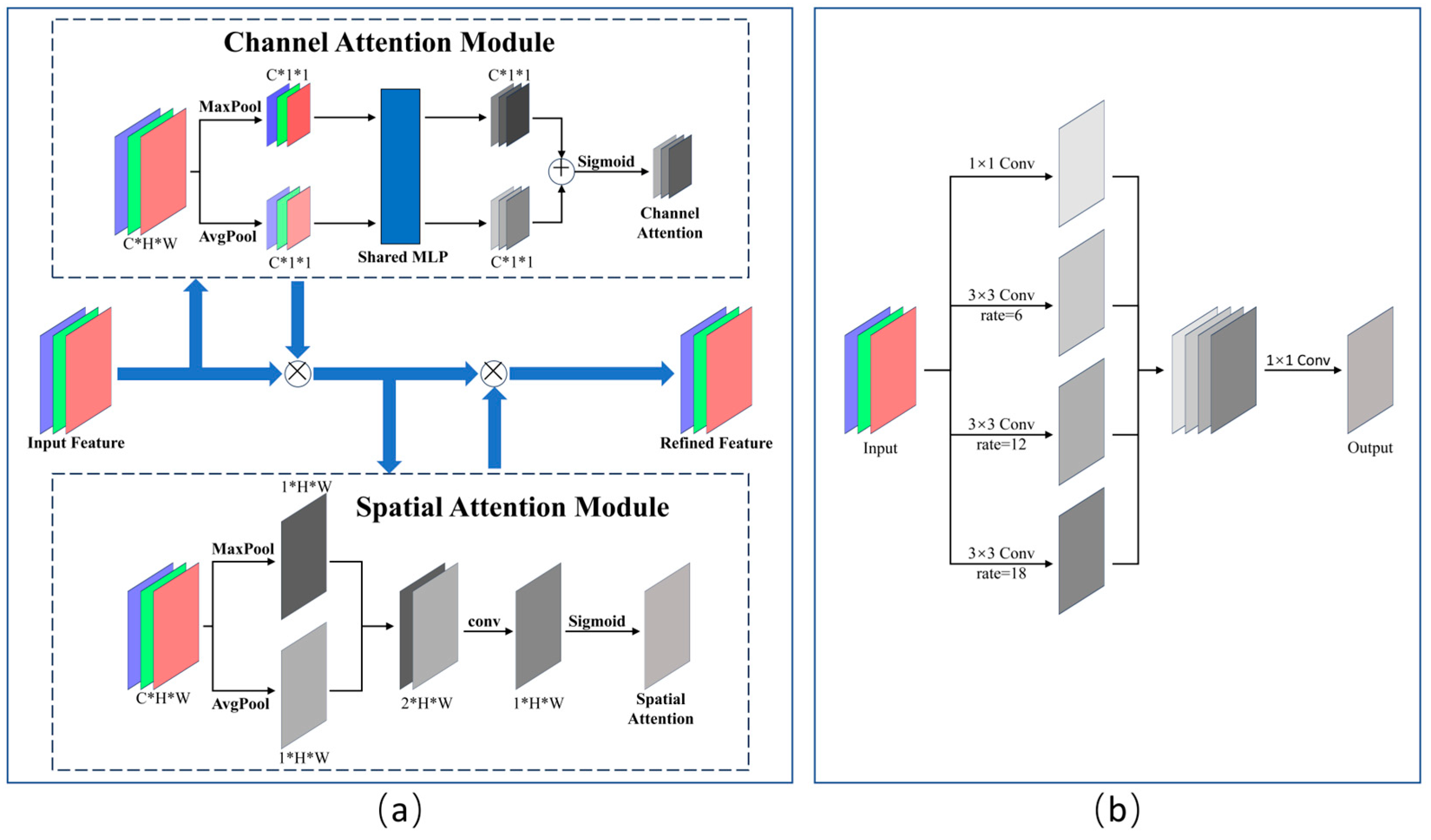

2.4.2. Convolutional Block Attention Module (CBAM)
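As a rough illustration of the channel-attention half of CBAM as it is usually formulated (average- and max-pooled channel descriptors passed through a shared two-layer MLP, summed, and squashed by a sigmoid), the sketch below uses plain nested lists. The weights `w1` and `w2`, the toy feature map, and all function names are hypothetical; the spatial-attention half is omitted, and nothing here reflects where or how the module is placed in RCAU-Net.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def shared_mlp(vec, w1, w2):
    """Two-layer MLP with ReLU, applied identically to both pooled descriptors."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(fmap, w1, w2):
    """fmap: list of C channels, each an H x W nested list. Returns C weights in (0, 1)."""
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    mx = [max(max(row) for row in ch) for ch in fmap]
    from_avg = shared_mlp(avg, w1, w2)
    from_max = shared_mlp(mx, w1, w2)
    return [sigmoid(a + m) for a, m in zip(from_avg, from_max)]


def apply_channel_attention(fmap, weights):
    """Rescale every channel by its attention weight."""
    return [[[wgt * v for v in row] for row in ch] for ch, wgt in zip(fmap, weights)]


# Toy input: channel 0 is active, channel 1 is empty (illustrative values).
fmap = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
w1 = [[1.0, 0.0]]    # C=2 -> hidden=1
w2 = [[1.0], [0.0]]  # hidden=1 -> C=2
weights = channel_attention(fmap, w1, w2)
refined = apply_channel_attention(fmap, weights)
```

With these toy weights the active channel receives a weight close to 1, while the empty channel receives the neutral value 0.5.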

2.4.3. Atrous Spatial Pyramid Pooling (ASPP)

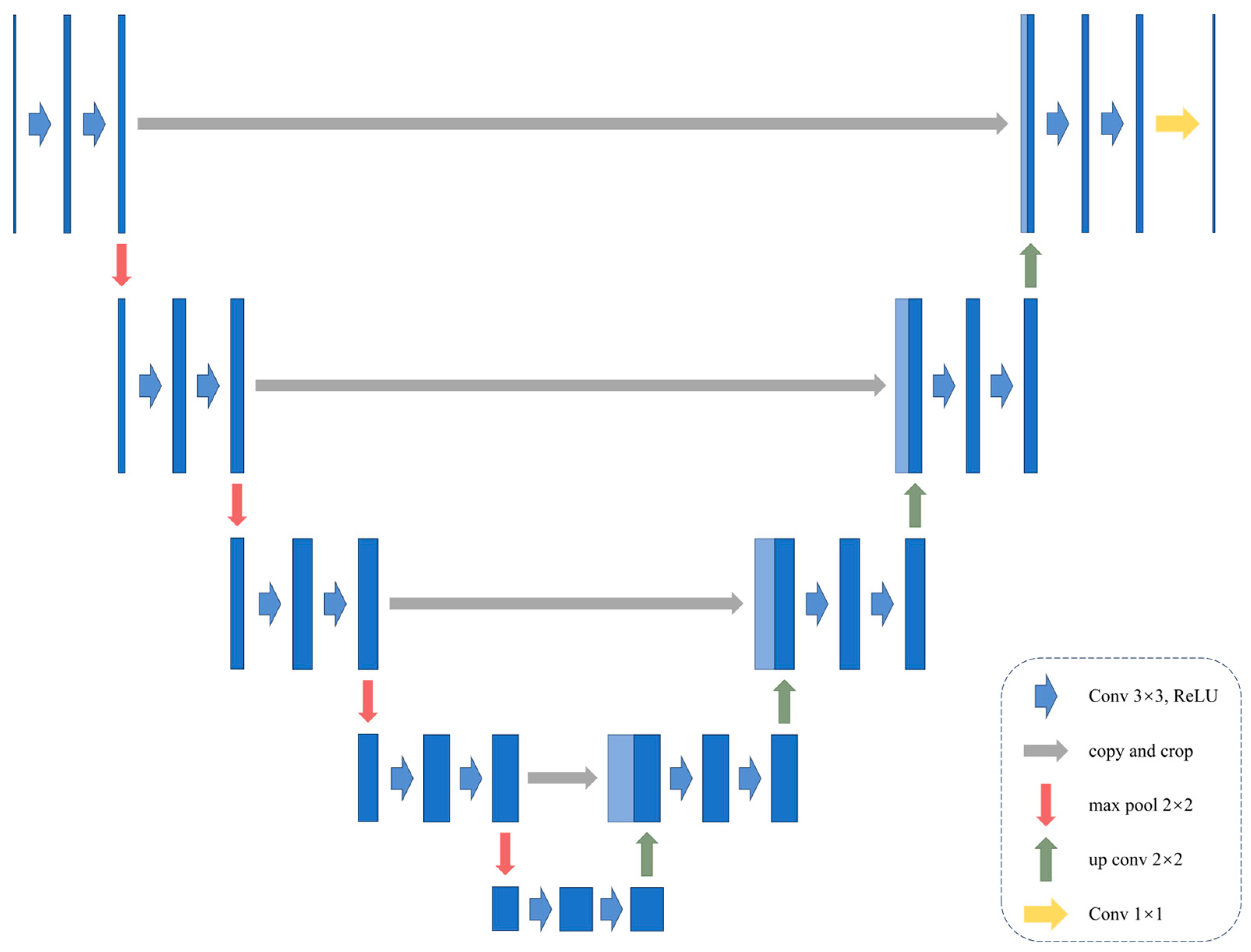
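The idea behind ASPP, parallel atrous (dilated) convolutions that view the same input at several scales, can be sketched in one dimension. The dilation rates `(1, 2, 4)`, the averaging used to fuse the branches (a stand-in for concatenation followed by a 1×1 convolution), and the function names are assumptions for illustration, not the configuration used in the paper.

```python
def atrous_conv1d(x, kernel, rate):
    """Dilated 1-D convolution with zero padding; output length equals input length."""
    half = (len(kernel) // 2) * rate
    out = []
    for i in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i - half + j * rate
            if 0 <= idx < len(x):
                acc += w * x[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, rate):
    """Span covered by one dilated kernel: (k - 1) * rate + 1."""
    return (kernel_size - 1) * rate + 1


def aspp1d(x, kernel, rates=(1, 2, 4)):
    """Run parallel atrous branches and fuse them by averaging."""
    branches = [atrous_conv1d(x, kernel, r) for r in rates]
    return [sum(vals) / len(branches) for vals in zip(*branches)]


impulse = [0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
box = [1.0, 1.0, 1.0]
rate2 = atrous_conv1d(impulse, box, rate=2)
fused = aspp1d(impulse, box)
```

A 3-tap kernel covers 3, 5, and 9 samples at rates 1, 2, and 4 without adding any weights, which is how the module gathers multi-scale context at constant parameter cost per branch.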

2.4.4. RCAU-Net

2.5. Experimental Setup

2.6. Accuracy Evaluation Metrics

- TP (True Positive): correctly predicted positive samples;

- FP (False Positive): negative samples misclassified as positive;

- TN (True Negative): correctly predicted negative samples;

- FN (False Negative): positive samples misclassified as negative.

- (1) Overall Accuracy (OA)

- (2) Recall

- (3) Mean Intersection over Union (mIoU)

- (4) Kappa Coefficient
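The metrics named above can be computed from the four confusion counts using their standard textbook definitions, as in the following sketch. Two points are assumptions rather than statements from the paper: recall is computed for the positive class only, and mIoU is taken as the mean of the IoU of the positive and negative classes. The function name and the example counts are illustrative.

```python
def segmentation_metrics(tp, fp, tn, fn):
    """Standard binary-segmentation metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    oa = (tp + tn) / total                       # overall accuracy
    recall = tp / (tp + fn)                      # positive-class recall
    iou_pos = tp / (tp + fp + fn)                # IoU of the positive class
    iou_neg = tn / (tn + fn + fp)                # IoU of the negative class
    miou = (iou_pos + iou_neg) / 2
    # Chance agreement: product of row and column marginals for each class.
    pe = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    return {"OA": oa, "Recall": recall, "mIoU": miou, "Kappa": kappa}


# Illustrative counts only; these are not results from the study.
example = segmentation_metrics(tp=40, fp=10, tn=45, fn=5)
```

Kappa discounts the agreement expected by chance, so it is lower than OA whenever the two classes could be matched partly by luck.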

3. Results

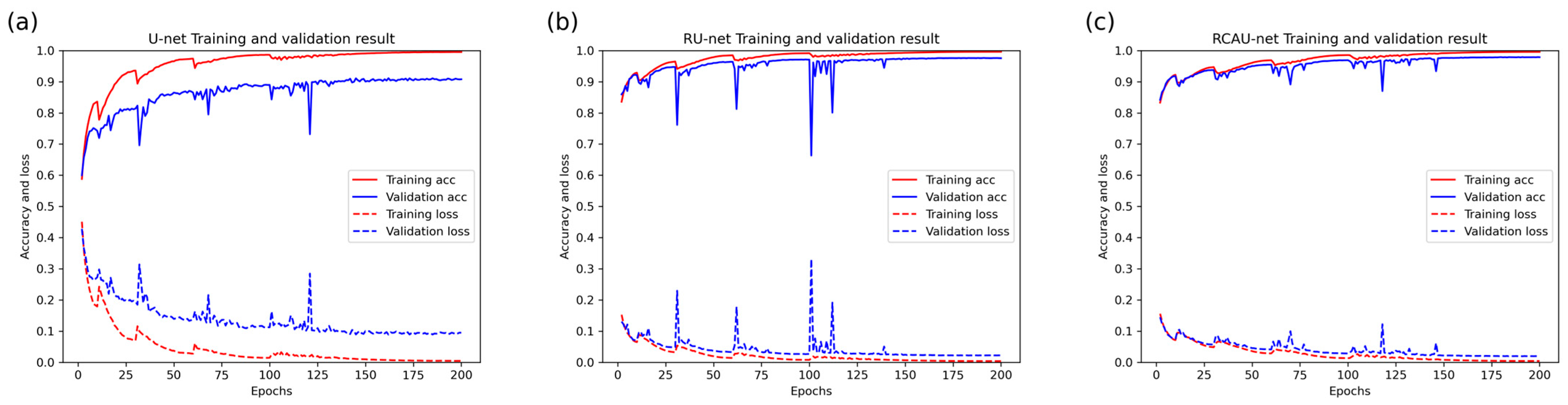

3.1. Comprehensive Performance Evaluation of Progressive Model Enhancements

3.2. Visualization and Quantitative Analysis of Results

4. Conclusions

- CBAM intensifying focus on critical features;

- ASPP fusing multi-scale contextual information;

- Residual blocks alleviating gradient vanishing.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| No. | Location | Latitude–Longitude Range | Number of Orthomosaic Scenes | Acquisition Period | Area per Scene (km²) |
|---|---|---|---|---|---|
| 1 | Wuning Town, Wuming District, Nanning | 108°10′1″ E~108°10′55″ E, 23°7′55″ N~23°8′56″ N | 2 | January & February 2023 | 2.88 |
| 2 | Siyang/Jiao’an Town Border, Shangsi County, Fangchenggang | 107°56′28″ E~107°57′29″ E, 22°7′1″ N~22°7′34″ N | 2 | February & March 2023 | 1.80 |
| 3 | Xinhe Town, Jiangzhou District, Chongzuo | 107°14′31″ E~107°15′22″ E, 22°32′10″ N~22°33′00″ N | 1 | February 2023 | 2.25 |
| 4 | Changping Township, Fusui County, Chongzuo | 107°52′23″ E~107°53′31″ E, 22°42′14″ N~22°43′08″ N | 1 | April 2023 | 3.20 |
| 5 | Laituan Town, Jiangzhou District, Chongzuo | 107°31′19″ E~107°32′31″ E, 22°25′08″ N~22°26′13″ N | 2 | March & April 2023 | 4.41 |
| 6 | Luwo Town, Wuming District, Nanning | 108°17′40″ E~108°18′16″ E, 23°15′00″ N~23°15′35″ N | 1 | April 2023 | 1 |

| Feature/Component | U-Net | RU-Net | RCAU-Net |
|---|---|---|---|
| Encoder Backbone | Standard convolutional blocks | ResNet50-based | ResNet50-based |
| Core Building Block | Double convolution and ReLU | Residual blocks | Residual blocks |
| Attention Mechanism | None | None | CBAM-integrated |
| Multi-Scale Context Module | None | None | ASPP-integrated |

| Parameter | Specification |
|---|---|
| CPU | Xeon Gold 6430 |
| GPU | RTX 4090 (24 GB VRAM) |
| CUDA Version | 12.4 |
| Input Size | (512, 512, 3) |
| Epochs | 200 |
| Batch Size | 16 |
| Optimizer | Adam |
| Gradient Clipping (clipvalue) | 0.5 |
| Learning Rate Schedule | Warm-Up Cosine Decay |
| Warm-up Phase | 2 cycles |
| Initial LR | 1 × 10⁻⁶ |
| Maximum LR | 1 × 10⁻⁴ |
| Minimum LR | 1 × 10⁻⁶ |
| Early Stopping | 20 epochs |
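The warm-up cosine decay schedule in the table above can be sketched as a plain function of the epoch index. The initial, maximum, and minimum learning rates and the 200-epoch budget come from the table; reading the "2 cycles" warm-up as two epochs of linear warm-up, and updating the rate once per epoch rather than per batch, are assumptions, as is the function name.

```python
import math


def warmup_cosine_lr(epoch, total_epochs=200, warmup_epochs=2,
                     initial_lr=1e-6, max_lr=1e-4, min_lr=1e-6):
    """Linear warm-up from initial_lr to max_lr, then cosine decay to min_lr."""
    if epoch < warmup_epochs:
        # Assumed interpretation: warm-up lasts `warmup_epochs` epochs.
        return initial_lr + (max_lr - initial_lr) * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


schedule = [warmup_cosine_lr(e) for e in range(201)]
```

Under these assumptions the rate starts at 1 × 10⁻⁶, peaks at 1 × 10⁻⁴ when the warm-up ends, and returns to 1 × 10⁻⁶ at the final epoch.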

| Confusion Matrix | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | TP | FN |
| Actual Negative | FP | TN |
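The four cells of the confusion matrix above can be tallied pixel by pixel from a predicted mask and a reference mask, as in this small sketch. Encoding the positive class (sugarcane) as 1 and everything else as 0, along with the function name and the toy masks, are assumptions for illustration.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, TN, FN for two equally shaped nested lists of 0/1 labels."""
    tp = fp = tn = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == 1 and t == 1:
                tp += 1      # predicted positive, actually positive
            elif p == 1 and t == 0:
                fp += 1      # predicted positive, actually negative
            elif p == 0 and t == 0:
                tn += 1      # predicted negative, actually negative
            else:
                fn += 1      # predicted negative, actually positive
    return tp, fp, tn, fn


# Toy 2 x 3 masks (illustrative only).
pred_mask = [[1, 1, 0], [0, 1, 0]]
true_mask = [[1, 0, 0], [1, 1, 0]]
counts = confusion_counts(pred_mask, true_mask)
```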

| Model | Precision | OA | F1 Score | Recall | mIoU | Kappa |
|---|---|---|---|---|---|---|
| U-Net | 0.9049 | 0.8999 | 0.8988 | 0.8956 | 0.8445 | 0.8379 |
| RU-Net | 0.9570 | 0.9552 | 0.9547 | 0.9530 | 0.9219 | 0.9195 |
| RCAU-Net | 0.9731 | 0.9719 | 0.9716 | 0.9699 | 0.9447 | 0.9419 |
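The step-by-step gains implied by the table above can be read off with simple subtraction. The sketch below only re-uses the tabulated values; the dictionary layout and the helper name `gain` are illustrative choices.

```python
# Values copied from the results table (fractions, not percentages).
results = {
    "U-Net":    {"Precision": 0.9049, "OA": 0.8999, "F1": 0.8988,
                 "Recall": 0.8956, "mIoU": 0.8445, "Kappa": 0.8379},
    "RU-Net":   {"Precision": 0.9570, "OA": 0.9552, "F1": 0.9547,
                 "Recall": 0.9530, "mIoU": 0.9219, "Kappa": 0.9195},
    "RCAU-Net": {"Precision": 0.9731, "OA": 0.9719, "F1": 0.9716,
                 "Recall": 0.9699, "mIoU": 0.9447, "Kappa": 0.9419},
}


def gain(metric, model, baseline):
    """Absolute difference in a metric between two models, rounded to 4 decimals."""
    return round(results[model][metric] - results[baseline][metric], 4)


miou_backbone = gain("mIoU", "RU-Net", "U-Net")        # residual encoder vs. plain U-Net
miou_modules = gain("mIoU", "RCAU-Net", "RU-Net")      # adding CBAM and ASPP
miou_total = gain("mIoU", "RCAU-Net", "U-Net")
oa_total = gain("OA", "RCAU-Net", "U-Net")
```

Read this way, most of the mIoU improvement over U-Net comes with the ResNet50-based encoder, and the CBAM and ASPP modules add a smaller further gain.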

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yue, T.; Ling, Z.; Tang, Y.; Huang, J.; Fang, H.; Ma, S.; Tang, J.; Chen, Y.; Huang, H. Refined Extraction of Sugarcane Planting Areas in Guangxi Using an Improved U-Net Model. Drones 2025, 9, 754. https://doi.org/10.3390/drones9110754
