1. Introduction

In recent years, the technological revolution in geospatial data acquisition has been significantly driven by the adoption of Unmanned Aerial Vehicles (UAVs), also known as drones [1]. These tools have transformed the way topographic and photogrammetric surveying is conducted, particularly in urban environments, by allowing the capture of high-resolution images quickly and at considerably lower costs than traditional methods, such as total stations or the Global Navigation Satellite System (GNSS) [2].

In architecture and construction, UAV integration has improved workflows that previously relied on costly equipment and lengthy survey times. Although traditional surveying systems are still in use, they have some notable disadvantages [3]. These include safety risks and limited accessibility in hard-to-reach areas, longer turnaround times and higher operating costs, as well as difficulties in surveying complex geometries [4]. By contrast, UAVs provide a safer and more cost-effective solution, enabling faster and less expensive data collection [2].

The ability to conduct scheduled autonomous flights, together with the use of specialized software for image processing, has facilitated the acquisition of accurate and up-to-date information for decision making [5]. Among the main products generated from these images are orthomosaics, Digital Surface Models (DSMs), Digital Terrain Models (DTMs), and dense point clouds (DPCs), all of which are valuable for urban planning, design, and management [6]. Compared with satellite imagery, UAV-based photogrammetry offers near-real-time data with higher spatial detail.

The quality of the photogrammetric products generated by UAVs is highly dependent on proper flight planning. Parameters such as flight height, image overlap, camera tilt, and flight pattern directly influence the spatial resolution of the images and the accuracy of the generated models [7]. The flight height influences the level of detail captured; overlap ensures the continuity necessary for three-dimensional (3D) reconstruction; camera tilt and flight pattern determine the coverage and quality of the information obtained [8].

In urban contexts, such as a medium-sized Latin American city taken as a representative case, the correct selection and optimization of these parameters is essential to maximize the visual quality of orthomosaics and reduce processing times. Recent studies such as those by Jara Valdebenito [5] and Cruz Toribio [6] demonstrate that an appropriate flight configuration not only improves the quality of the generated products but also reduces the demand for computational resources.

In this context, this study aims to analyze the impact of different flight parameters on the quality of photogrammetric products generated with UAVs in a consolidated urban environment with typical lots. To this end, a comparative experiment is conducted in the city of Cuenca (Ecuador), evaluating different configurations of flight height, frontal overlap, lateral overlap, camera tilt, and flight pattern, to establish guidelines for more efficient flight planning in terms of both time and quality. Highlights of this work are listed as follows:

Investigating how different UAV flight configurations influence the visual quality and efficiency of urban orthomosaic generation.

Analyzing various flight parameters such as flight height, image overlap, camera angle, and flight pattern.

Providing guidelines for optimizing photogrammetric missions in dense urban environments.

The subsequent sections of this paper are organized as follows: Section 2 summarizes state-of-the-art works related to UAV flights, digital photogrammetry, flight parameters, and orthomosaic visual quality and processing time. The research methodology, evaluation metrics, and experiments are presented in Section 3. Section 4 presents the experimental results, the statistical analysis of relationships between flight parameters, and a discussion of the optimization of UAV flight parameters. Conclusions are given in Section 5.

2. Related Work

2.1. UAV in Construction and Urban Planning

UAVs have transitioned from their initial military applications to become indispensable tools across diverse civilian, industrial, and commercial sectors. Their ability to capture high-resolution geospatial data has significantly improved workflows in architecture, civil engineering, and urban planning [9,10,11]. This technological shift addresses long-standing challenges in data acquisition, offering high levels of efficiency and detail.

UAVs are particularly well-suited for urban surveys, especially in challenging or extensive terrains, due to their capacity for autonomous, high-precision flights and detailed imagery acquisition [2]. Beyond simple image capture, UAVs enable the creation of comprehensive 3D models, digital twins, and site analyses that are crucial for every stage of a construction project, from initial planning and design to progress monitoring and final inspection. The integration of UAV technology streamlines data collection, leading to improved efficiency, accuracy, and safety in project planning and execution, and significantly reducing the need for dangerous manual inspections [12,13].

Multi-rotor UAVs, in particular, offer significant advantages in urban environments due to their superior maneuverability, stability, and Vertical Takeoff and Landing (VTOL) capabilities. These characteristics enable precise hovering and accurate trajectory control, which are beneficial for capturing thorough information in areas characterized by complex urban canyons, low-rise buildings, and relatively flat topography [14,15]. Their ability to navigate confined spaces and perform close-range inspections makes them ideal for tasks such as facade inspection, structural assessment, and interior mapping when equipped with appropriate sensors [16]. This adaptability facilitates the acquisition of homogeneous, high-quality data, crucial for generating reliable cartographic products and precise 3D models [17].

Meanwhile, fixed-wing UAVs are primarily employed for vast areas due to their higher operational speeds and longer endurance [18]. Emerging research highlights their growing role in urban mapping, regional infrastructure monitoring, and environmental surveys, particularly when integrated with advanced sensors for thermal, multispectral, or Light Detection and Ranging (LiDAR) data acquisition [19,20,21]. Their speed and endurance make them suitable for large-scale projects, complementing the capabilities of multi-rotor systems by providing a broader contextual overview [18].

Moreover, legislation regarding UAV flights within urban environments has also been studied in Europe and the USA. For example, the European Union Aviation Safety Agency (EASA) adopted a harmonized, risk-based regulatory approach that classifies operations into three categories (low, medium, and high risk) and regulates flight region, time, and altitude to ensure safe and efficient access to urban airspace under the U-space concept [22]. Meanwhile, the Federal Aviation Administration (FAA) proposed UAV Traffic Management (UTM) regulation to integrate UAVs safely into existing airspace, under which UAVs must be managed in real time to avoid collisions and ensure safe operations in urban areas [23]. Furthermore, both EASA and FAA legislation regulate how UAV operators must handle personal data collected during flights for privacy and data protection [24].

Recent advancements in multi-rotor technology include enhanced obstacle avoidance systems, Real-Time Kinematic (RTK) and Post-Processed Kinematic (PPK) GPS integration for improved absolute positioning precision without extensive Ground Control Points (GCPs), and extended flight times, further improving their applicability in complex urban settings [25,26,27]. The growing sophistication of multi-rotor platforms allows for rapid deployment and data acquisition, making them ideal for time-sensitive construction progress monitoring, volumetric calculations, and post-disaster assessments [1,28].

The choice between multi-rotor and fixed-wing UAVs often depends on the project’s scale, required detail, and operational environment, with hybrid systems emerging to combine the advantages of both [29]. In this study, multi-rotor UAVs are selected for urban photogrammetric surveys, considering the cost-effectiveness and high-quality requirements of urban mapping.

2.2. Digital Photogrammetry Applied to UAVs

Digital photogrammetry is a well-established geomatic technique that leverages principles of geometry and computer vision, deriving accurate 3D information about objects and surfaces from 2D images [30]. In the context of UAV operations, photogrammetry relies on the acquisition of multiple overlapping images, which are then processed using sophisticated Structure-from-Motion and Multi-View Stereo algorithms [31,32]. These algorithms are fundamental to reconstructing scenes from unordered image collections, making them highly suitable for UAV-based data.

Structure-from-Motion (SfM) algorithms are designed to automatically identify common feature points across a series of overlapping images. The robustness of SfM allows for the processing of images captured from various angles and heights, in settings such as complex urban environments and high-relief terrains [33]. Through a robust bundle adjustment process, SfM simultaneously determines the camera’s precise position and orientation for each image, as well as the coordinates of the observed features [34,35]. This initial output, typically a sparse point cloud, serves as the geometric foundation for subsequent detailed processing steps.

Following SfM, Multi-View Stereo (MVS) algorithms perform dense image matching and depth estimation, leveraging the known camera positions and orientations to reconstruct a high-quality 3D model of the scene. This process typically involves projecting pixels from multiple images into 3D space and identifying corresponding points to build a dense representation [36]. These dense point clouds serve as the foundation for subsequent processing steps, leading to the generation of a variety of accurate digital models, such as Digital Surface Models (DSMs), Digital Terrain Models (DTMs), and high-resolution orthomosaics [37,38]. For example, SfM and MVS can be used to create detailed 3D models of historical monuments, statues, and facades, documenting sites that are undergoing restoration or are at risk of environmental degradation [39].

The ultimate quality and performance of these photogrammetric products are contingent upon several critical factors. These include the resolution of the acquired images, the spatial distribution of the viewpoints during the flight, the radiometric quality of the imagery, and the meticulous calibration of the UAV’s onboard sensors [40,41]. The strategic use of GCPs remains crucial for achieving high absolute precision and for georeferencing the derived models to a specific coordinate system. While advancements in direct georeferencing reduce the number of GCPs required, GCPs provide an independent position check and anchor the model to real-world coordinates, minimizing error propagation [42,43].

Recent studies emphasize the importance of robust camera calibration, including focal length, principal point, lens distortions, and the camera’s position and orientation, as well as self-calibration techniques [44,45]. Therefore, it is necessary to calibrate the optimal parameters for the UAV flights in order to improve the quality of output products.

2.3. Flight Parameters

Effective planning of photogrammetric UAV flights requires careful selection of parameters that directly affect both the quality of the final products and the efficiency of the data acquisition and processing workflow. These parameters must be optimized to achieve the desired level of detail and accuracy while maintaining operational feasibility.

- Flight Height: Flight height, defined as the vertical distance between the UAV and the ground, is a primary determinant of spatial resolution. Lower altitudes provide higher-resolution images and finer Ground Sample Distance (GSD), yielding more detailed geometric information. However, they also increase the number of images required to cover a given area, enlarging dataset size and processing time [12]. For flat urban areas, a balance between resolution and efficiency is recommended [14]. Best practices suggest selecting height according to the specific mapping objectives, the sensor characteristics, and the target GSD [46]. For instance, in dense urban areas, flights at lower altitudes help preserve the desired GSD and minimize occlusions, while combining nadir and oblique imagery can capture facades and courtyard walls effectively [47].

- Image Overlap: Image overlap refers to the percentage of scene coverage common between adjacent photographs. High overlap ensures continuity and redundancy, which are essential for robust feature matching and depth calculation in SfM/MVS workflows [48]. Recommended values generally exceed 75% for forward overlap and 70% for lateral overlap [31]. Zhong [49] further highlights that optimal overlap ranges between 60% and 80% depending on terrain complexity and urban morphology. Recent developments explore adaptive overlap strategies, dynamically adjusting to real-time variations in topography or building heights to prevent redundant data collection in flat areas while ensuring sufficient coverage for vertical surfaces [33,50].

- Camera Tilt: Camera tilt, defined as the orientation angle of the sensor relative to the ground, significantly influences the type of information captured. Nadir images are optimal for horizontal surfaces, yielding high planimetric accuracy in orthomosaics and precise mapping of rooftops and ground features [15]. Conversely, oblique imagery captures vertical structures, enhancing 3D city models, architectural documentation, and building inspections [51]. While their contribution is less critical in uniformly low-rise areas, oblique views still enrich model completeness and visualization by integrating facade and contextual details [14]. Increasingly, UAVs integrate multi-camera systems, enabling simultaneous nadir and oblique acquisitions to produce more geometrically consistent urban models [52,53]. For dense environments or street-canyon contexts, oblique captures with tilt angles between 30° and 45° significantly improve vertical reconstruction and mitigate incomplete facades [54].

- Flight Pattern: The flight pattern, or trajectory followed by the UAV, determines coverage uniformity, acquisition speed, and data processing effectiveness. Grid patterns, which comprise parallel flight lines with planned overlap, are the most widely used, providing homogeneous coverage and facilitating accurate orthomosaic and dense point cloud generation [55]. In contrast, linear or unidirectional paths without systematic overlap often result in gaps, making them unsuitable for precise mapping [56]. Advanced planning tools now propose adaptive flight paths that account for terrain relief, building heights, and environmental conditions (e.g., wind), thereby minimizing redundancy and maximizing coverage [57,58]. For specialized applications, such as cultural heritage documentation or detailed architectural surveys, circular or spiral trajectories around specific structures enable complete multi-angle coverage [59].

Since flight parameters such as altitude and overlap directly determine GSD, redundancy, and spatial completeness of the imagery, they are decisive for both the visual quality of orthomosaics and the computational efficiency of the workflow. Their optimization is therefore critical for balancing accuracy and processing costs in urban photogrammetric surveys.
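The dependence of GSD and image spacing on flight height and overlap can be illustrated with a minimal sketch. The camera values below (a 13.2 mm × 8.8 mm sensor, 8.8 mm focal length, and 5472 × 3648 pixel images) are nominal figures assumed for illustration and are not taken from this paper; the function names are hypothetical, and the model assumes nadir images over flat ground with the short image side aligned with the flight direction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    """Nominal camera geometry (assumed values, not taken from the paper)."""
    sensor_width_mm: float = 13.2
    sensor_height_mm: float = 8.8
    focal_length_mm: float = 8.8
    image_width_px: int = 5472
    image_height_px: int = 3648


def gsd_cm(height_m: float, cam: Camera = Camera()) -> float:
    """Ground sample distance in cm/pixel for a nadir image over flat ground."""
    return (cam.sensor_width_mm * height_m * 100.0) / (
        cam.focal_length_mm * cam.image_width_px
    )


def footprint_m(height_m: float, cam: Camera = Camera()) -> tuple[float, float]:
    """Ground footprint (across-track, along-track) of one image in metres."""
    gsd_m = gsd_cm(height_m, cam) / 100.0
    return gsd_m * cam.image_width_px, gsd_m * cam.image_height_px


def spacing_m(height_m: float, frontal: float, lateral: float,
              cam: Camera = Camera()) -> tuple[float, float]:
    """Distance between exposures and between flight lines, in metres.

    `frontal` and `lateral` are overlap fractions (e.g. 0.70 for 70%).
    """
    across, along = footprint_m(height_m, cam)
    return along * (1.0 - frontal), across * (1.0 - lateral)


if __name__ == "__main__":
    for h in (60, 90):
        base, line = spacing_m(h, frontal=0.70, lateral=0.65)
        print(f"H={h} m: GSD={gsd_cm(h):.2f} cm/px, "
              f"exposure spacing={base:.1f} m, line spacing={line:.1f} m")
```

Under these assumed camera values, the GSD is roughly 1.6 cm/pixel at 60 m and roughly 2.5 cm/pixel at 90 m, and raising either overlap shortens the corresponding spacing, which is why lower flights and higher overlaps produce more images for the same area.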

2.4. Orthomosaic Visual Quality and Processing Time

In architecture and construction projects, both the visual quality of orthomosaics and the efficiency of the processing workflow are fundamental to informed decision making [9]. High-quality orthomosaics (characterized by sharp details, accurate color representation, minimal distortions, and precise geometric fidelity) provide reliable visual and metric information for site analysis, progress monitoring, as-built documentation, and stakeholder communication. Optimizing flight parameters to capture sharp, high-resolution imagery while minimizing processing times significantly enhances productivity and the overall quality of derived geospatial products [14].

This approach differs from studies that prioritize absolute georeferencing accuracy primarily through the extensive use of Ground Control Points (GCPs). Instead, emphasis is placed on achieving high visual quality and operational efficiency, thereby simplifying and accelerating both data acquisition and processing, often with reduced reliance on GCPs [31]. While GCPs undoubtedly improve absolute accuracy by anchoring the photogrammetric model to a known coordinate system and reducing systematic errors, their establishment and surveying are time- and labor-intensive.

Recent advances in direct georeferencing using high-precision Global Navigation Satellite System/Inertial Measurement Unit (GNSS/IMU) technology onboard UAVs are reducing the need for dense GCP networks, particularly in applications where relative precision, visual clarity, and rapid deployment are paramount [60]. For instance, mapping a 2 km² construction site at 2 cm GSD might traditionally require 12–15 well-distributed GCPs, demanding approximately 3–4 h of fieldwork for placement and surveying. With RTK/PPK GNSS systems integrated with high-grade IMUs, this requirement may be reduced to only 2–3 checkpoints, cutting field time by more than 70% [61]. Processing efficiency is also enhanced: datasets that might require around 4 h for aerial triangulation without GNSS/IMU support can often be processed in about 90 min when precise exterior orientation is available, as the need for extensive tie-point searches and full bundle adjustments is diminished [62].
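As a quick arithmetic check of the processing figures quoted above, the relative saving can be computed directly. This is only an illustrative sketch; the helper name `percent_reduction` is hypothetical.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before


# Aerial triangulation: about 4 h without GNSS/IMU support vs. about 90 min with it.
processing_saving = percent_reduction(before=4 * 60, after=90)
print(f"Processing time reduction: {processing_saving:.1f}%")
```

With the quoted values, aerial triangulation time drops by 62.5%, which is of the same order as the field-time saving of more than 70% cited for GCP placement.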

Processing time for orthomosaic generation is inherently dependent on factors such as survey area, number of images, target resolution, and available computational resources. The computational demands of SfM/MVS workflows (particularly during dense point cloud generation and orthomosaic creation) are considerable, often requiring substantial RAM, CPU/GPU power, and storage capacity [38]. To address these challenges in large-scale projects, cloud-based platforms and parallel computing techniques are increasingly employed. These solutions accelerate turnaround times by distributing computational loads across multiple servers, while granting access to high-performance infrastructure without the need for significant hardware investments [63,64].

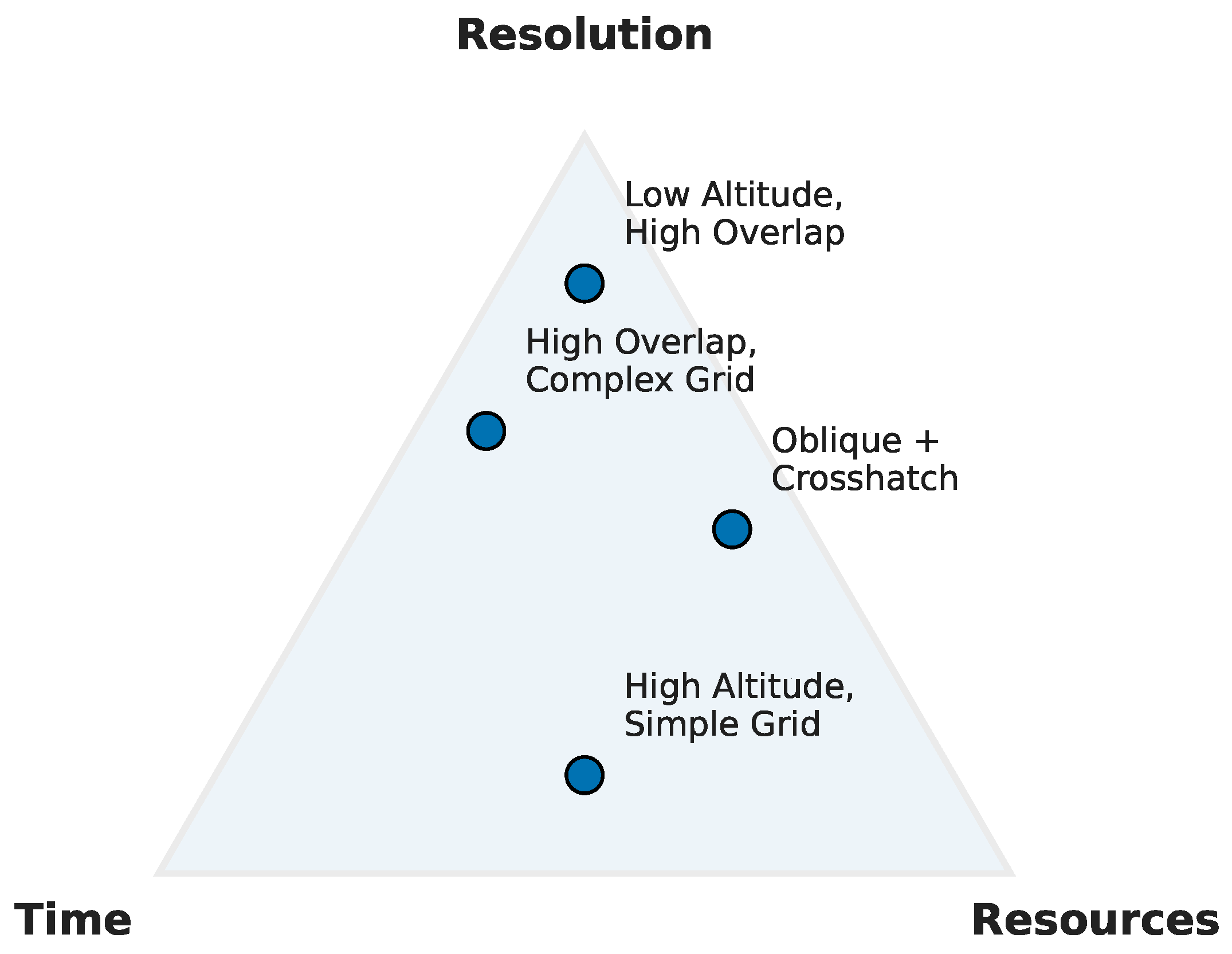

As shown in Figure 1, a triangular trade-off exists between resolution, processing time, and computational resources in urban photogrammetric surveys. Flight parameters mediate this trade-off: higher overlaps, complex flight patterns, and oblique imagery enhance resolution and model completeness but increase processing demands, whereas higher altitudes and simple grid missions reduce time and computational load at the expense of resolution and facade detail.

Continuous advances in photogrammetric software, such as optimized image matching algorithms, faster bundle adjustment routines, and hardware-accelerated workflows, continue to improve the quality of orthomosaics and reduce processing times. These advances are making UAV photogrammetry increasingly accessible and practical, enabling applications ranging from rapid response mapping to daily construction monitoring.

3. Methodology

3.1. Definition of the Study Area and Selection of the Typical Lot

The study was conducted in a consolidated urban area of Cuenca, Ecuador, specifically in the southern sector of the El Batán parish, in the Ex CREA area. As shown in Figure 2a, the selected polygon corresponds to a complete block bounded by Avenida México, Avenida Unidad Nacional, Calle Colombia, Calle Argentina, and Calle Padua, covering an area of approximately 1.5 hectares. This area was selected for its representative urban morphology, predominantly flat topography, and the coexistence of residential and commercial uses.

Figure 2b shows the interior of the study block, where 33 properties were identified with varying typologies in terms of shape, size, and land use. To characterize a “typical lot”, the geovisor (GeoViewer) of the Cuenca Municipal Decentralized Autonomous Government (GAD) was used. This tool provides detailed cadastral information for each lot, such as the property code (cadastral key), frontage, depth, land area, and built-up area. These data were tabulated and statistically analyzed to determine the average values representative of the block’s properties.

The results indicate that the typical lot has an average frontage of 13.60 m, a depth of 20.90 m, and an area of 301.88 m². These dimensions coincide with the predominant land subdivision and occupation typologies described by Hermida [65], who analyzes the morphotypological patterns of urbanization in intermediate Andean cities such as Cuenca (Ecuador).

The choice of this typical lot is justified by its representativeness of the urban configuration in consolidated sectors of the city. This approach allows the results derived from the photogrammetric analysis to be extrapolated to a significant number of similar urban situations, favoring their application in planning, urban design, and architectural survey projects in comparable contexts.

3.2. Parametric Sweep

Flights were carried out using a DJI Phantom 4 PRO V2, selected to enhance orthomosaic visual quality and operational efficiency. Its aerial-optimized f/2.8 wide-angle lens with a 24 mm equivalent focal length captures sharp, vivid photos at high resolution. A six-camera navigation system constantly calculates the relative speed and distance between the aircraft and surrounding objects, and an infrared sensing system measures the distance between the aircraft and any obstacles using infrared 3D scanning. Flight autonomy is further supported by dual-band satellite positioning (GPS and GLONASS) and redundant IMUs and compasses, which enhance the reliability and efficiency of flight. Because of these built-in functions, GCPs and other georeferencing factors were not considered when evaluating flight efficiency.

In order to identify the optimal parameters for photogrammetric surveys with UAVs in medium-sized urban areas of approximately 1.5 ha, an experiment based on a parametric sweep was designed, comprising 96 flight combinations. The methodology included systematic flight planning, standardized image processing, and multi-criteria evaluation of the products, emphasizing orthomosaic visual quality and operational efficiency.

Each flight plan was identified by a structured nomenclature that encodes the parameters used: flight height (H60, H90), frontal overlap (TF70, TF75, TF80), lateral overlap (TL65, TL70, TL75, TL80), camera angle (A45, A90), flight pattern type (TN: normal; TG: grid), and number of photographs obtained (e.g., F35). For example, the code “01_H60_TF70_TL65_A90_TN_F35” represents the first flight at 60 m altitude, with 70% frontal overlap, 65% lateral overlap, a 90° camera angle, a normal flight pattern, and 35 images captured. The parameters of all 96 UAV flights are shown in Table 1 and Table 2.
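The size of the sweep follows from the parameter levels listed in the nomenclature: 2 heights × 3 frontal overlaps × 4 lateral overlaps × 2 camera angles × 2 patterns = 96 combinations. The sketch below enumerates them and builds the code prefix for each flight. The iteration order is an assumption chosen so that the first entry matches the example code; the actual numbering is the one given in Table 1 and Table 2. The photograph-count suffix is omitted because it is only known once a flight has been planned or flown.

```python
from itertools import product

# Parameter levels taken from the nomenclature described in the text.
HEIGHTS_M = (60, 90)
FRONTAL_OVERLAPS = (70, 75, 80)
LATERAL_OVERLAPS = (65, 70, 75, 80)
CAMERA_ANGLES = (90, 45)       # order assumed for illustration
PATTERNS = ("TN", "TG")        # TN: normal, TG: grid


def flight_codes() -> list[str]:
    """Return one code prefix per parameter combination (hypothetical ordering)."""
    combos = product(HEIGHTS_M, FRONTAL_OVERLAPS, LATERAL_OVERLAPS,
                     CAMERA_ANGLES, PATTERNS)
    return [
        f"{index:02d}_H{h}_TF{tf}_TL{tl}_A{angle}_{pattern}"
        for index, (h, tf, tl, angle, pattern) in enumerate(combos, start=1)
    ]


codes = flight_codes()
print(len(codes))   # 96
print(codes[0])     # 01_H60_TF70_TL65_A90_TN
```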

All flights were planned using Dronelink software with the Growth subscription, which defined the polygon of the study area and automatically configured the parameter combinations. During planning, Dronelink provided estimates of flight time and number of photographs, which were later compared with actual field values. Weather conditions were checked before each take-off, and flights were carried out only on windless, sunny days.

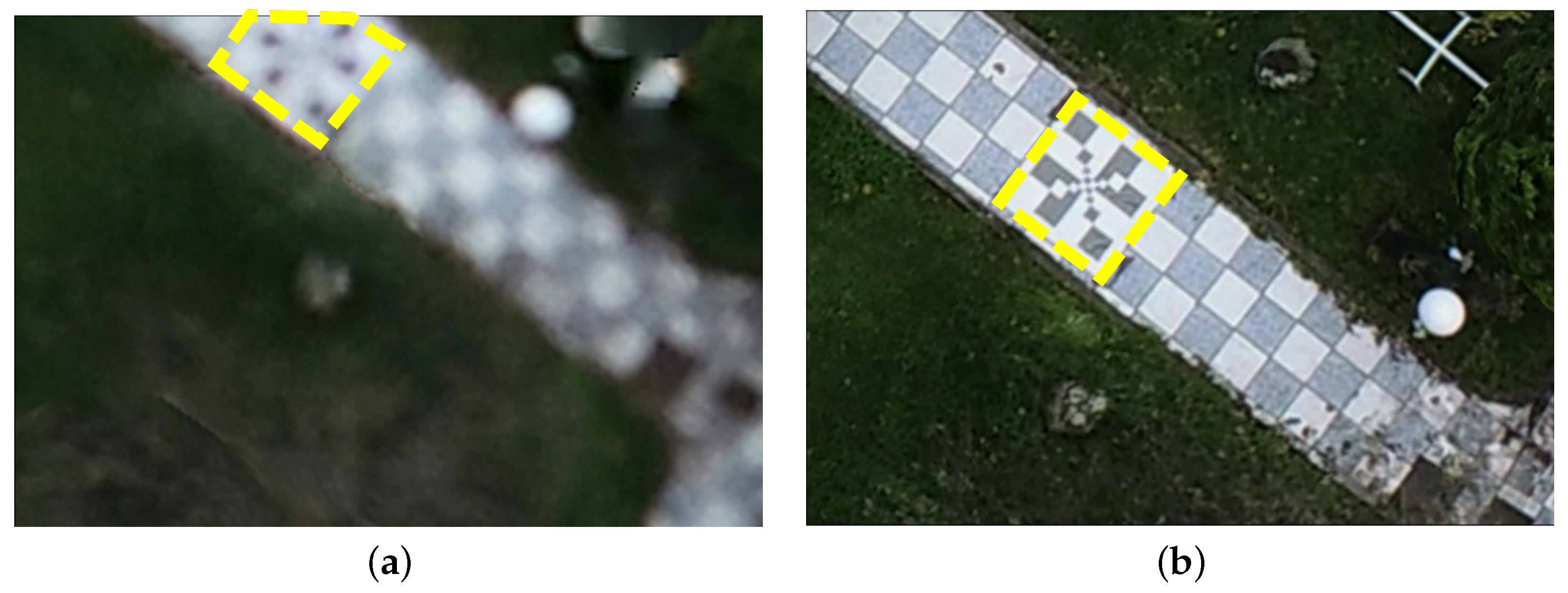

The information from each flight was systematized into a structured database that includes operational variables such as flight time and distance traveled, processing times such as aerial triangulation and orthomosaic generation, and three visual quality indicators derived from the generated orthomosaic. The latter were evaluated using a control target located in the study area, as shown in Figure 3a, with a 1 m × 1 m black-and-white grid used to evaluate geometric precision and sharpness in each orthomosaic. Total operating times were obtained by summing the flight, aerial triangulation, and orthomosaic generation phases, allowing the overall performance to be evaluated for each configuration.

For each orthomosaic, the sharpness with which the internal subdivisions of the 1 m × 1 m target could be distinguished was evaluated. The target is composed of black and white patterns of decreasing sizes (50 cm, 25 cm, 12.5 cm, and 6.25 cm), as shown in Figure 3b. This analysis provided an objective measure of the level of visual resolution achieved in each configuration.
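One possible way to represent a row of such a database is sketched below. The class and field names are hypothetical, and the numeric values in the usage example are placeholders rather than measured results; the only logic taken from the text is that the total operating time is the sum of the flight, aerial triangulation, and orthomosaic generation phases.

```python
from dataclasses import dataclass


@dataclass
class FlightRecord:
    """One row of the flight database (field names are illustrative)."""
    code: str
    flight_min: float
    triangulation_min: float
    orthomosaic_min: float

    @property
    def total_min(self) -> float:
        """Total operating time: flight + aerial triangulation + orthomosaic."""
        return self.flight_min + self.triangulation_min + self.orthomosaic_min


# Placeholder values for illustration only, not results from the study.
record = FlightRecord("example", flight_min=6.0,
                      triangulation_min=10.0, orthomosaic_min=14.0)
print(record.total_min)
```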

3.3. Image Processing

After completing the flights, the image set from each experimental configuration was organized. Images were stored in individual folders using the standardized nomenclature described previously, ensuring traceability and consistency.

Each set of images was aerially triangulated using iTwin Capture Modeler Master software (version 24.01.07.4760), which was chosen because it integrates more closely with the geovisor and the UAV system used in this investigation than other platforms such as Pix4D and Agisoft Metashape. This process involved automatically estimating camera interior and exterior orientation parameters and establishing geometric correspondences between consecutive images. Processing consistency was ensured by maintaining the software’s default settings.

From the aerial triangulation results, orthomosaics were generated for each of the 96 flight configurations. Orthorectification was also performed using iTwin Capture Modeler Master, preserving the original capture resolution and exporting the products in the georeferenced .TIF format. Each orthomosaic was stored in its corresponding folder using the flight nomenclature, enabling direct association with experimental parameters and evaluation metrics.

Moreover, all UAV image processing and orthomosaic generation were carried out on a workstation running Windows 10, equipped with an Intel Core i7-12700 CPU (20 threads), 128 GB of DDR4 RAM at 3600 MHz, a 2 TB storage unit, and an NVIDIA RTX A2000 graphics card (6 GB VRAM). This configuration provided the computational capacity required for efficient image processing and ensured reproducible conditions across all experiments.

3.4. Evaluation of the Quality of Orthomosaics

The evaluation of the generated orthomosaics focused on three key criteria: (i) accuracy in locating the control target within the orthomosaic, (ii) level of detail visible on the target, and (iii) overall orthomosaic visual quality. These criteria were applied both to compare different flight configurations and to quantify the impact of each parameter combination on the photogrammetric results.

3.4.1. Target Location Accuracy

Each orthomosaic generated in .TIF format was loaded into CivilCAD 2025 software, where the target previously installed in the field was located. It is important to note that this process was not intended to metrically georeference the product, but only to locate the image on the print layout and evaluate the consistency of the target’s relative location. All orthomosaics were exported to PDF under consistent conditions (fixed scale and letter format), which allowed for standardized visual comparison.

Based on the relative location of the target within the layout, a qualitative rating between 1 and 3 was assigned:

This evaluation identified variations attributable to errors in the orthomosaic’s georeferencing due to different parameter combinations. Although this study does not address georeferencing or ground control points, the shifting of the obtained images is evident.

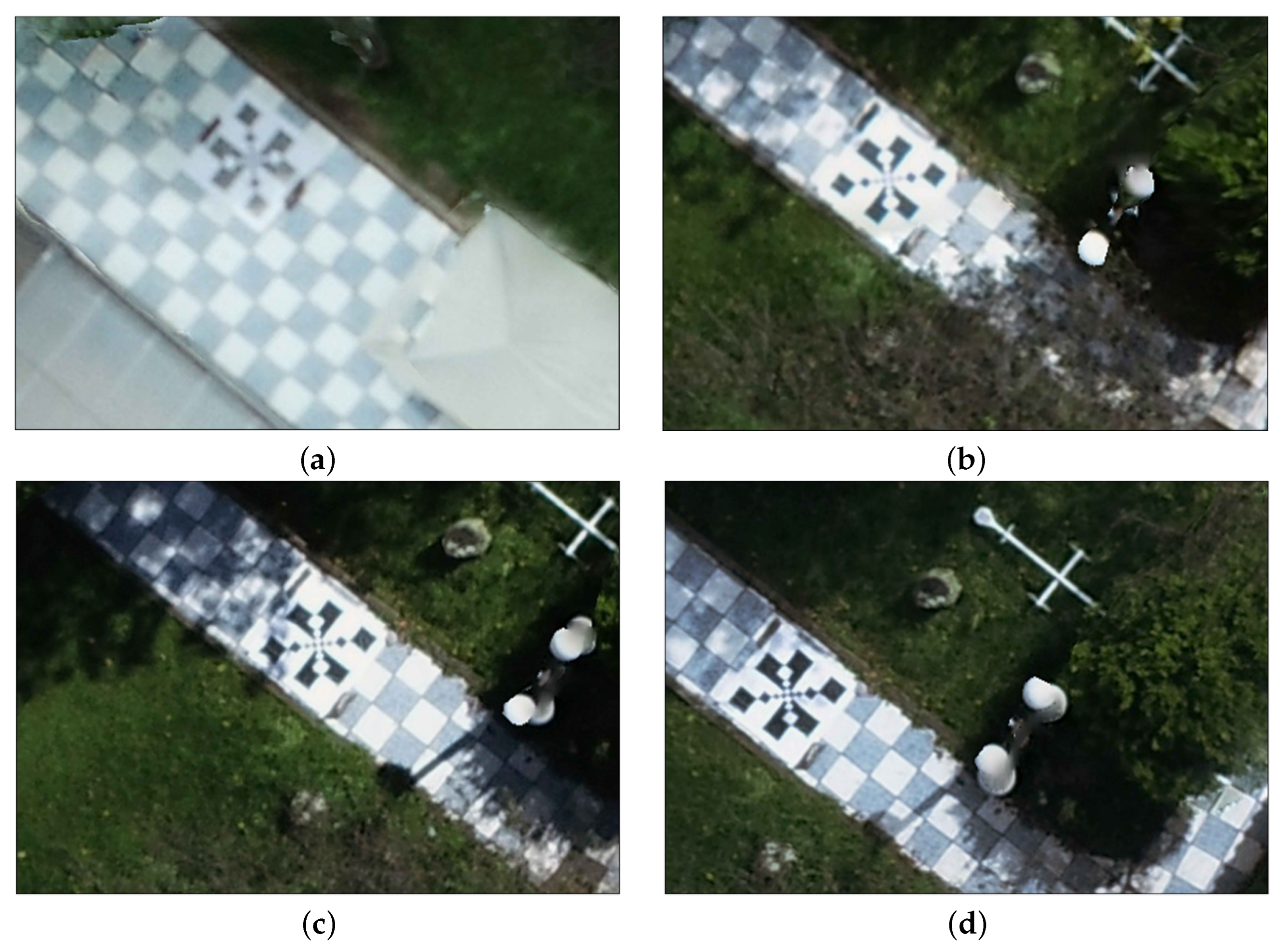

3.4.2. Level of Visual Detail on the Target

A rating scale of 1 to 5 was applied, based on the smallest clearly distinguishable subdivision size:

1: Only the general outline of the target is visible, as shown in Figure 5.

Figure 5. Only the general outline visible.

2: The 50 cm × 50 cm subdivision is clearly visible, as shown in Figure 6a.

3: The 25 cm × 25 cm subdivision is clearly visible, as shown in Figure 6b.

4: The 12.5 cm × 12.5 cm subdivision is clearly visible, as shown in Figure 6c.

5: The 6.25 cm × 6.25 cm subdivision is clearly visible, as shown in Figure 6d.

Figure 6. Examples of visual detail on the target. (a) 50 × 50 cm subdivision visible. (b) 25 × 25 cm subdivision visible. (c) 12.5 × 12.5 cm subdivision visible. (d) 6.25 × 6.25 cm subdivision visible.

This scale allowed us to quantify the degree of effective resolution achieved by each combination of flight height, overlap, camera angle, and flight pattern.

3.4.3. General Quality of the Orthomosaic

Finally, a comprehensive visual evaluation of each orthomosaic was performed, considering the sharpness, homogeneity, absence of aberrations, and overall consistency of the product. This analysis considered typical effects such as geometric distortions, blurred areas, or inconsistencies due to poor overlapping.

A qualitative rating of 1 to 5 was assigned using the following criteria. 1: Significant visual deficiencies, although the area of interest can still be distinguished, as shown in Figure 7a. 2: Severe aberrations affecting readability, as shown in Figure 7b. 3: Moderate aberrations, as shown in Figure 7c. 4: Small aberrations, as shown in Figure 7d. 5: High quality without visible aberrations, as shown in Figure 7e.

These three metrics were subsequently used in a multi-criteria analysis to determine the optimal flight configurations, in terms of balance between visual quality and efficiency in orthomosaic processing time.

4. Results

Once the qualification stage was completed, a comparative analysis of the results obtained from the 96 flights was conducted, considering not only the scores assigned to each orthomosaic but also the associated operational variables, such as flight time, number of photographs captured, and processing time.

Table 3 and Table 4 summarize the results obtained from the 96 UAV flights. The first column corresponds to the flight identifier, which is directly related to the classification presented in Table 1 and Table 2. The second column reports the number of photographs captured during each mission, while the third column provides the flight time as recorded by Dronelink. The fourth column shows the total image processing time obtained in the Bentley iTwin platform, including both aerial triangulation and orthomosaic generation. The fifth, sixth, and seventh columns correspond to the Likert-scale evaluations of location accuracy, target quality, and overall orthomosaic quality, respectively (as defined in Section 3.4). Finally, the last column presents the overall rating, computed as the average of the three individual quality measures, as given in Equation (1).

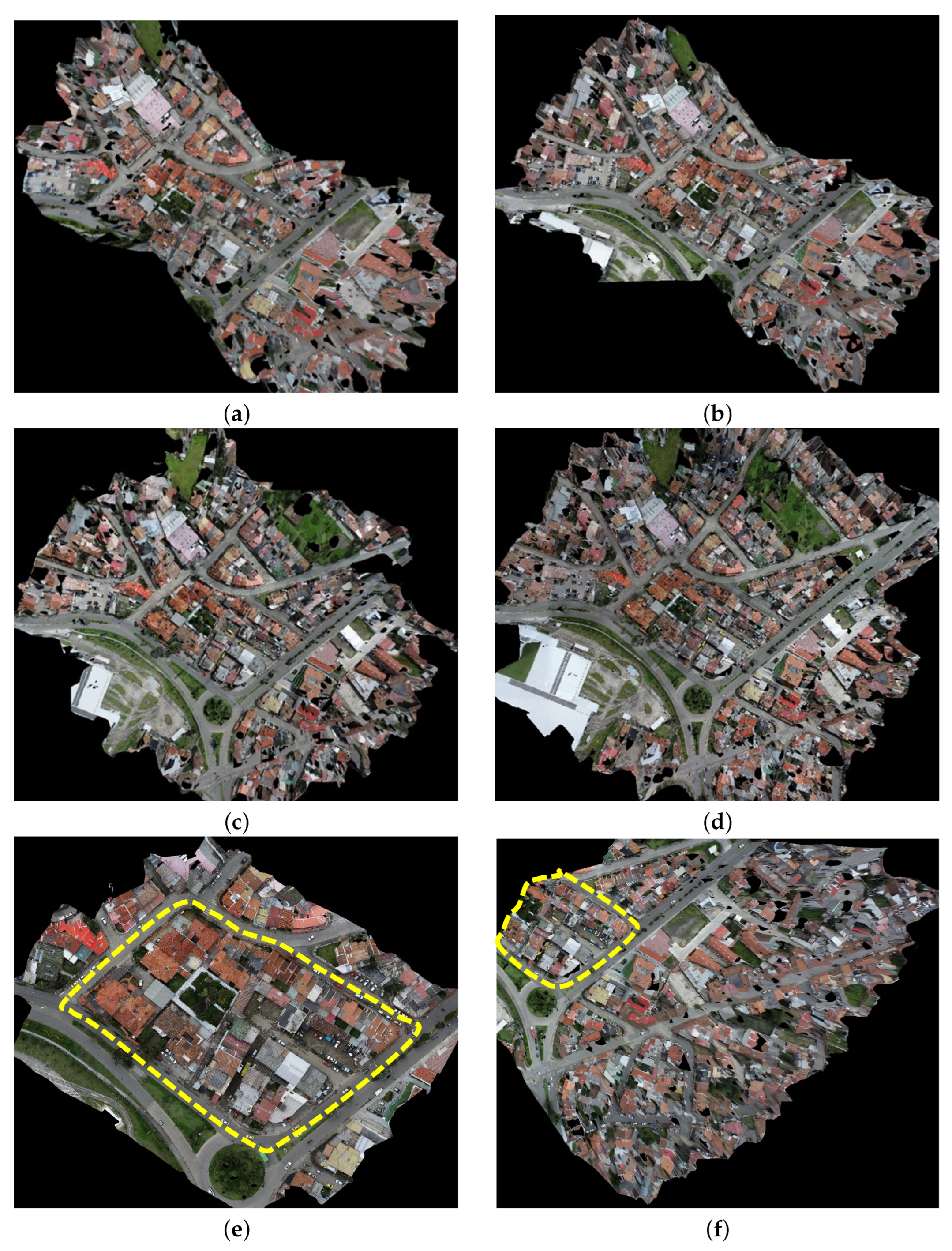
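The overall rating described in the text, an unweighted average of the three quality measures, can be sketched as follows. The function and argument names are hypothetical and simply mirror the three criteria of Section 3.4.

```python
from statistics import fmean


def overall_rating(location: float, detail: float, quality: float) -> float:
    """Unweighted mean of the three quality scores, as described in the text."""
    return fmean((location, detail, quality))


print(overall_rating(5, 5, 5))   # a flight rated 5 on every measure
print(overall_rating(1, 1, 1))   # a flight rated 1 on every measure
```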

As an introduction to the analysis of the results, two flights are described: one rated 5/5 and one rated 1/5.

The orthomosaic rated 5/5 is shown in Figure 7e. It shows the entire study area in good quality, with scores of 5, 5, and 5 for the three quality measures.

The orthomosaic rated 1/5 is shown in Figure 7f. It does not show the entire study area because the 45° camera angle caused the camera to capture a large area outside the study area; the three quality measures were scored 1, 1, and 1.

The relationships between flight parameters, orthomosaic visual quality, and operational efficiency are analyzed and discussed below, based on a statistical analysis of the results in Table 3 and Table 4.

4.1. Relationship Between Flight Parameters and Orthomosaic Visual Quality

4.1.1. Flight Height

Figure 8 shows that the highest scores were concentrated in flights taken at a height of 60 m, although with greater dispersion. In contrast, at 90 m, more homogeneous but generally lower scores were observed. This suggests that capturing at lower heights allows for greater detail and precision, resulting in better-quality orthomosaics, albeit at a higher computational cost. The correlation coefficient between flight height and overall rating was −0.469 (p < 0.001), indicating a moderate, statistically significant negative relationship.
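A correlation coefficient of this kind can be computed as sketched below. The text does not name the coefficient used, so the Pearson product-moment form is an assumption here, and the height and rating pairs are hypothetical rather than the study data.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical (flight height in m, overall rating) pairs, not the study data.
heights = [60, 60, 60, 90, 90, 90]
ratings = [5.0, 4.0, 3.0, 3.0, 2.0, 2.0]
r = pearson_r(heights, ratings)
print(round(r, 3))
```

A negative value means that ratings tend to fall as flight height increases, which is the direction reported for the 96 flights.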

4.1.2. Frontal Overlap

Contrary to the expectation that greater frontal overlap implies better results, the data in Figure 9a show that the highest-scoring flights were concentrated at overlaps of 70% and 75%. In contrast, an overlap of 80% did not necessarily offer better results and in some cases produced lower scores. This indicates the existence of a threshold beyond which increasing the overlap does not improve the visual quality of the product and may instead generate unnecessary redundancy. The correlation coefficient between frontal overlap and overall rating was −0.259 (p = 0.011), indicating a weak but statistically significant negative relationship.
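The link between a correlation coefficient and its p-value can be sketched as follows. The sample size n = 96 comes from the number of flights reported in the text. The two-sided Student t-test with n − 2 degrees of freedom is an assumption, since the source does not name the test. The tail area is obtained by numerical integration so that only the standard library is needed.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Probability density of Student's t distribution."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(r: float, n: int, steps: int = 2000) -> float:
    """Two-sided p-value for H0: no correlation, given r (|r| < 1) and n."""
    df = n - 2
    t = abs(r) * math.sqrt(df / (1.0 - r * r))
    # Simpson's rule (steps must be even) for the area between 0 and |t|.
    h = t / steps
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    return max(0.0, 1.0 - 2.0 * area)


# n = 96 is taken from the text; the t-test itself is an assumption.
p_frontal = two_sided_p(-0.259, 96)
p_lateral = two_sided_p(0.126, 96)
print(f"{p_frontal:.3f} {p_lateral:.3f}")
```

Under these assumptions, r = −0.259 with 96 flights gives a two-sided p-value of roughly 0.01, in line with the reported value, while a coefficient as small as 0.126 is not significant at the 5% level.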

4.1.3. Lateral Overlap

Figure 9b reveals that lateral overlap is less directly related to orthomosaic visual quality. Flights with overlaps of 65%, 70%, 75%, and 80% all included cases that achieved the highest rating. These results suggest that greater lateral overlap does not consistently improve the quality of the generated product. The correlation coefficient between lateral overlap and overall rating was 0.126 (p = 0.218), indicating almost no correlation and no statistical significance.

4.1.4. Camera Angle

Figure 9c shows that the best scores were obtained with perpendicular (nadir, 90°) camera angles. This configuration ensures that the images are centered and aligned with the target surface, minimizing geometric distortions. Conversely, the use of oblique angles (such as 45°) led to the capture of imagery outside the defined area, generating distortions and decreasing the quality of the orthomosaics obtained. The correlation coefficient between camera angle and overall rating was 0.706 (p < 0.001), indicating a relatively strong, statistically significant positive relationship.

4.1.5. Number of Photographs

Figure 9d shows that a greater number of photographs is generally associated with better orthomosaic visual quality but does not guarantee it. While a low number of images is generally associated with low ratings, there is high dispersion among flights with many photographs, suggesting that other factors, such as the quality of the overlap or the camera angle, can have a more significant impact on the final product quality. The correlation coefficient between the number of photographs and overall rating was 0.609 (p < 0.001), indicating a fairly strong, statistically significant positive relationship.

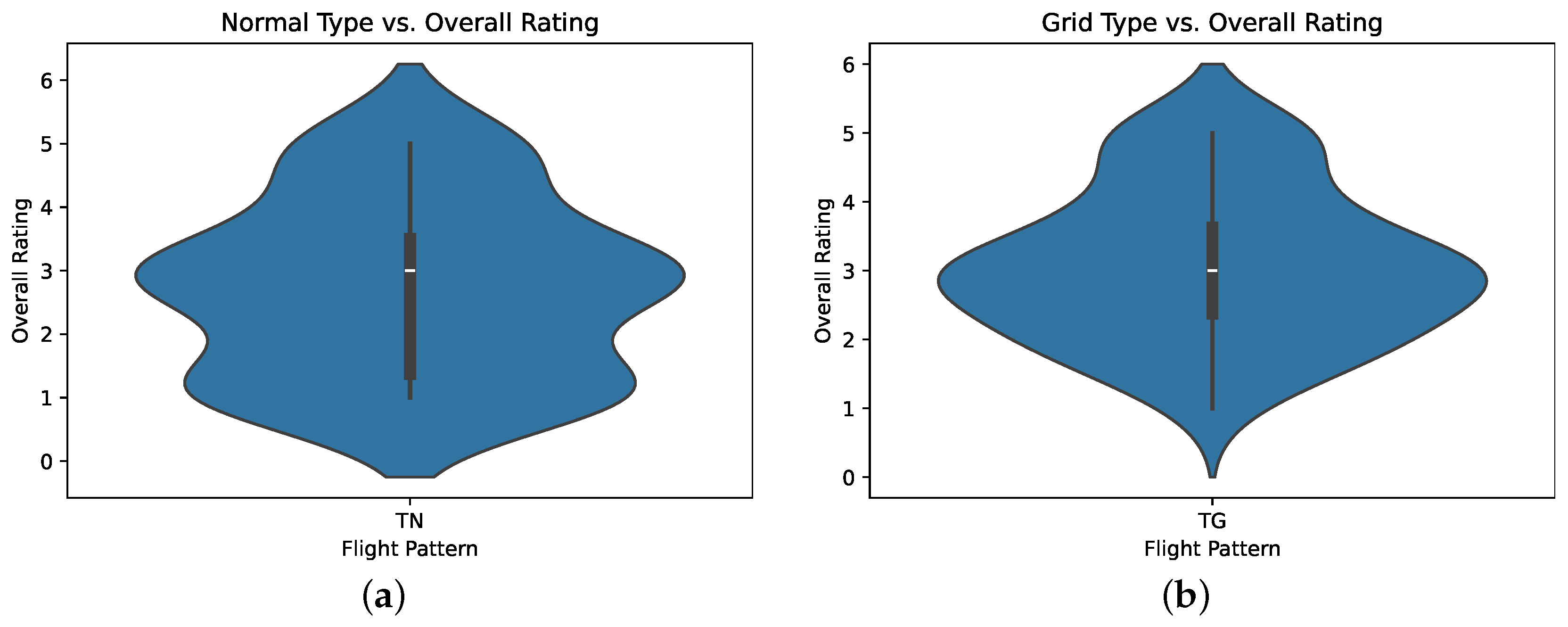

4.1.6. Flight Pattern

Figure 10 shows that flights performed with a grid-type pattern obtained slightly higher scores than flights carried out with a normal pattern. This is attributed to the greater number of photographs, which translates into a greater amount of detail in the orthomosaics. However, the correlation coefficient between flight pattern and overall rating was 0.148 (p = 0.149), indicating almost no relationship between these two factors and no statistical significance.
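Because flight pattern is a two-level category, a correlation with a numeric rating requires some numeric coding of the pattern. The sketch below assumes a point-biserial coefficient, which is equivalent to Pearson's r with the category coded as 0 and 1. Treating "grid" as the positive level is an assumption chosen to match the reported positive sign, and the sample values are hypothetical.

```python
import math
from typing import Sequence


def point_biserial(groups: Sequence[str], values: Sequence[float],
                   positive: str) -> float:
    """Correlation between a two-level category and a numeric score."""
    ones = [v for g, v in zip(groups, values) if g == positive]
    zeros = [v for g, v in zip(groups, values) if g != positive]
    n1, n0, n = len(ones), len(zeros), len(values)
    if n1 == 0 or n0 == 0:
        raise ValueError("both categories must be present")
    mean_all = sum(values) / n
    # Population standard deviation of all scores.
    sd_all = math.sqrt(sum((v - mean_all) ** 2 for v in values) / n)
    if sd_all == 0:
        raise ValueError("undefined for constant scores")
    diff = sum(ones) / n1 - sum(zeros) / n0
    return diff / sd_all * math.sqrt(n1 * n0 / (n * n))


# Hypothetical patterns and ratings, not the study data.
patterns = ["normal", "normal", "normal", "grid", "grid", "grid"]
ratings = [3.0, 4.0, 3.0, 4.0, 5.0, 4.0]
r_pb = point_biserial(patterns, ratings, positive="grid")
print(round(r_pb, 3))
```

With this coding, a positive coefficient means that the level coded as 1 (here, grid flights) tends to score higher.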

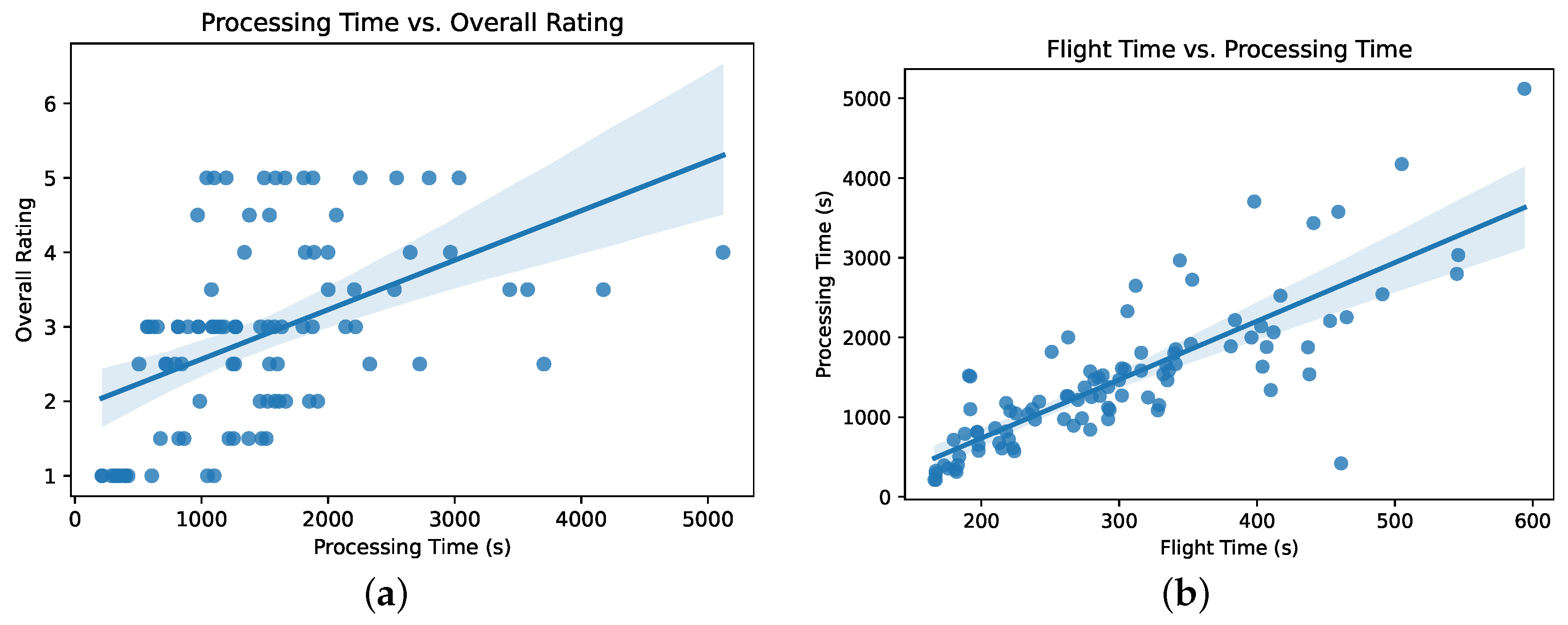

4.2. Relationship Between Operational Efficiency and Orthomosaic Visual Quality

Figure 11a shows that, beyond a certain range, longer processing times do not necessarily translate into better scores. The highest average score was achieved in the processing range of 1041 to 2798 s (approximately 17 to 46 min), while times greater than 3700 s (more than 1 h) were associated with moderate or low scores. This reinforces the idea that efficiency in data capture and processing should not be sacrificed in favor of excessive data capture. Overall, the correlation coefficient between processing time and overall rating was 0.481 (p < 0.001), indicating a moderate, statistically significant positive relationship.
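The comparison of average scores across processing-time ranges can be sketched as a simple binning step. The bin edges below reuse the thresholds quoted in the text (1041, 2798, and 3700 s). The remaining edges, the function name, and the sample values are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Bin = Tuple[float, float]


def mean_rating_by_bin(times_s: Sequence[float], ratings: Sequence[float],
                       edges_s: Sequence[float]) -> Dict[Bin, float]:
    """Average rating of the flights whose processing time falls in each bin."""
    buckets: Dict[Bin, List[float]] = defaultdict(list)
    for t, r in zip(times_s, ratings):
        # Bins are half-open: lower edge included, upper edge excluded.
        for lo, hi in zip(edges_s, edges_s[1:]):
            if lo <= t < hi:
                buckets[(lo, hi)].append(r)
                break
    return {b: sum(v) / len(v) for b, v in buckets.items()}


# Hypothetical processing times (s) and overall ratings, not the study data.
times = [600, 1500, 2500, 3000, 4200]
scores = [2.0, 5.0, 4.0, 3.0, 3.0]
edges = [0, 1041, 2798, 3700, float("inf")]
by_bin = mean_rating_by_bin(times, scores, edges)
for (lo, hi), avg in sorted(by_bin.items()):
    print(f"{lo}-{hi} s: mean rating {avg:.2f}")
```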

4.3. Relationship Between Flight Parameters and Operational Efficiency

4.3.1. Flight Time

Figure 11b shows that a longer flight time is related to a longer processing time, although other parameters also play a role. A longer flight covers a greater distance and therefore captures more photographs, which increases the processing time. The correlation coefficient between flight time and processing time was 0.811 (p < 0.001), indicating a very strong, statistically significant positive relationship.

4.3.2. Flight Height

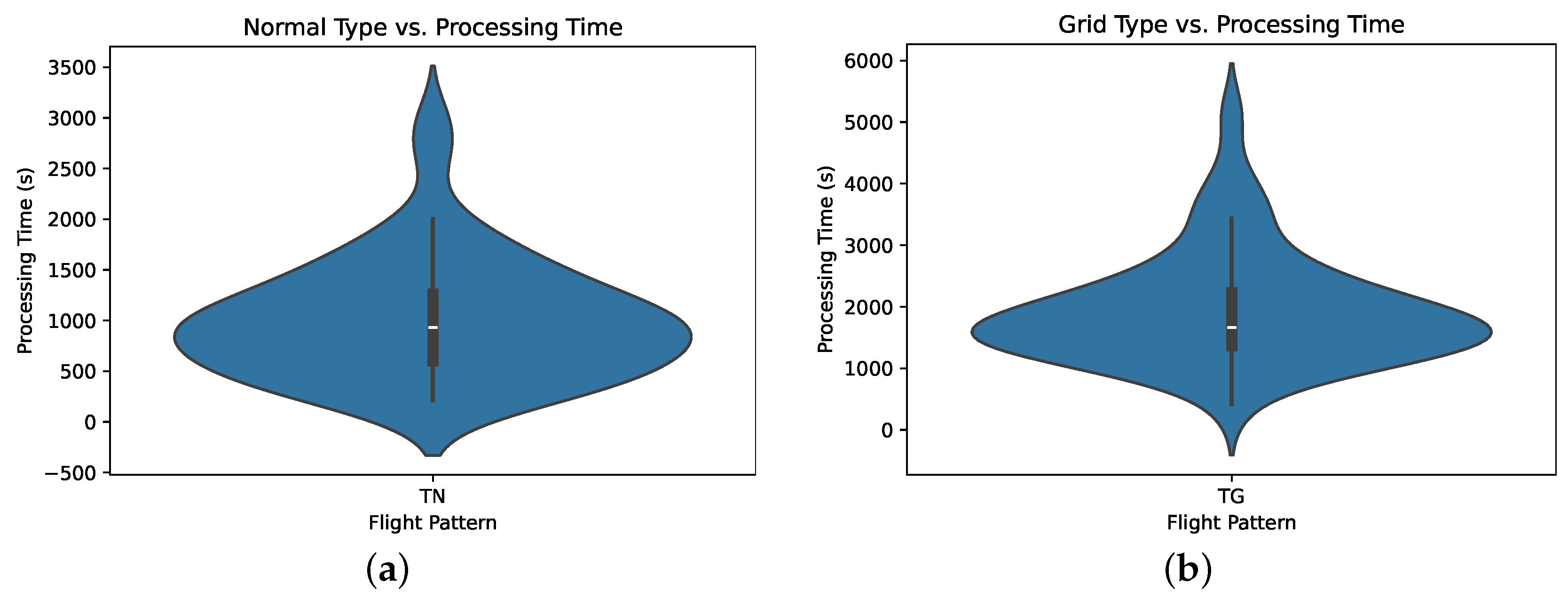

The data presented in Figure 12 confirm that processing time tends to increase at lower flight heights, such as 60 m. This is because flying at lower heights requires capturing a greater number of photographs to cover the same area, which increases the software’s workload. In contrast, at 90 m, shorter and more consistent processing times were recorded due to the smaller number of photographs generated. The correlation coefficient between flight height and processing time was −0.478 (p < 0.001), indicating a moderate, statistically significant negative relationship.
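The reason lower flights need more photographs can be made concrete with a rough footprint calculation. The sketch assumes a nadir pinhole camera over flat terrain and ignores edge margins and turns. The sensor size, focal length, survey area, and 75% overlaps are hypothetical values chosen for illustration, not the equipment or settings used in the study.

```python
def photo_count(area_m2: float, height_m: float, frontal: float, lateral: float,
                sensor_w_mm: float, sensor_h_mm: float, focal_mm: float) -> float:
    """Rough number of nadir photos needed to tile a flat area."""
    # Ground footprint of one image from similar triangles (pinhole model).
    foot_w = sensor_w_mm / focal_mm * height_m  # across-track, metres
    foot_h = sensor_h_mm / focal_mm * height_m  # along-track, metres
    # Each new photo only adds the non-overlapping part of its footprint.
    new_area = foot_w * (1.0 - lateral) * foot_h * (1.0 - frontal)
    return area_m2 / new_area


# Hypothetical camera (13.2 x 8.8 mm sensor, 8.8 mm lens) and survey area.
camera = dict(sensor_w_mm=13.2, sensor_h_mm=8.8, focal_mm=8.8)
n60 = photo_count(10_000.0, 60.0, frontal=0.75, lateral=0.75, **camera)
n90 = photo_count(10_000.0, 90.0, frontal=0.75, lateral=0.75, **camera)
ratio = n60 / n90
print(f"60 m: {n60:.1f}, 90 m: {n90:.1f}, ratio: {ratio:.2f}")
```

Under this simplified model the photo count scales with the inverse square of the height, so flying at 60 m instead of 90 m requires about (90/60)² = 2.25 times as many images for the same area and overlaps, whatever camera is assumed.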

4.3.3. Flight Pattern

Figure 13 shows that normal flights tend to require less processing time than grid flights. This difference is primarily due to the greater number of images captured in grid flights, which increases the computational effort. Although both types present similar times in certain sections of the graph, a clear trend toward longer processing times is observed for grid flights at the highest values. The correlation coefficient between flight pattern and processing time was 0.531 (p < 0.001), indicating a moderately strong, statistically significant positive relationship.

5. Conclusions

The statistical analysis of the results provides insights into balancing image quality and operational efficiency when defining UAV flight parameters for urban photogrammetric surveys. Flight parameter configurations should be defined according to the type of cartographic product required and the specific environmental conditions. Based on the experimental results and comparative analysis, the following conclusions can be drawn:

When the objective is to cover large areas and the level of detail in the orthomosaic is not a priority, higher flight heights are recommended, as they reduce the number of captured images and shorten processing times. However, maximum height is constrained by national aviation regulations. In contrast, lower flight heights contribute to greater detail and accuracy, resulting in higher-quality orthomosaics, albeit at the cost of increased computational requirements.

The statistical analysis shows little correlation between frontal and lateral overlaps and the overall quality rating. Similarly, the influence of flight pattern on orthomosaic visual quality is not statistically significant. Therefore, it is advisable to adjust these parameters according to the specific conditions of each UAV survey.

In contrast, three factors show strong correlations with orthomosaic visual quality: camera angle, processing time, and number of photographs. Quality improves significantly when using a nadir (90°) camera angle, because orthomosaic generation benefits from a vertical view aligned with the ground surface. There are also clear positive trends between the number of photographs, processing time, and product quality within a certain range. Thus, it is recommended to capture between 60 and 100 photographs to maintain quality while limiting processing demands.

The analysis also confirms that flight time is strongly related to processing time: longer flights generate larger datasets, which increase computational requirements. Grid flight patterns demand more processing time than normal patterns, although they produce slightly higher-quality results.

For the specific case of surveying a typical lot of approximately 300 m² in the city of Cuenca (Ecuador), the optimal combination of parameters was a flight height of 60 m, 70% frontal overlap, 80% lateral overlap, a nadir (90°) camera angle, and a grid flight pattern. These parameters can also be applied in other cases involving plots with similar characteristics.

Author Contributions

Conceptualization, J.L.-R. and E.J.R.; methodology, J.L.-R.; software, J.L.-R. and C.C.; validation, C.C. and J.L.-R.; formal analysis, J.L., M.B.-R. and G.A.; investigation, C.C. and J.L.-R.; resources, C.C. and J.L.-R.; data curation, C.C. and J.L.-R.; writing—original draft preparation, C.C. and J.L.; writing—review and editing, E.J.R., J.L., M.B.-R. and G.A.; visualization, J.L. and C.C.; supervision, J.L.-R.; project administration, J.L.-R.; funding acquisition, M.B.-R. and G.A. All authors have read and agreed to the published version of the manuscript.

Funding

Marcelo Becerra-Rozas is supported by DI Iniciación PUCV 2025/PUCV/039.725/2025.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

Jingwei Liu is supported by Concurso Postdoctorado UC 2024/UC/Pr-211-24. The authors are grateful to the editor and anonymous reviewers for their constructive comments and valuable suggestions to improve the quality of the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| UAV | Unmanned Aerial Vehicle |
| GNSS | Global Navigation Satellite System |
| DSM | Digital Surface Model |
| DTM | Digital Terrain Model |
| DPC | Dense Point Cloud |
| 3D | Three-dimensional |
| VTOL | Vertical Takeoff and Landing |
| RTK | Real-Time Kinematic |
| PPK | Post-Processed Kinematic |
| SfM | Structure-from-Motion |
| MVS | Multi-View Stereo |
| GSD | Ground Sample Distance |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).