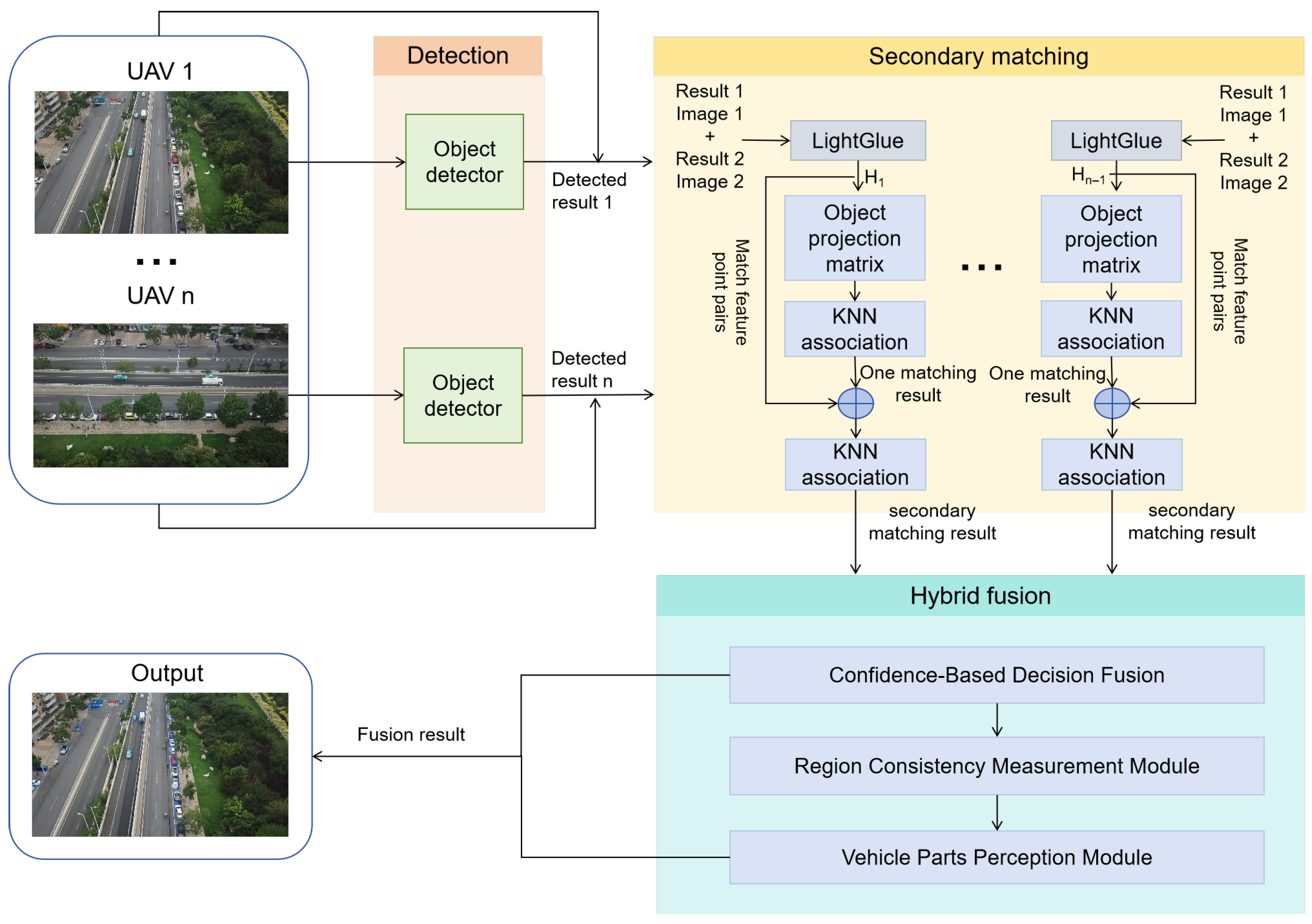

2.2. Secondary Matching Method

The secondary matching method proposed in this paper builds on the LightGlue-based projection matching approach. However, using LightGlue alone for projection matching may result in misalignment, causing the prompted location on the host UAV’s image to deviate from the true position. When the misalignment is slight, the prompted location is partially shifted relative to the actual object position. When the misalignment is severe, the prompted location may be completely displaced and point to an entirely wrong position. To address this issue, we propose a secondary matching method that integrates both object information and background context to improve matching accuracy and robustness.

In cross-drone target association, we employed an image rotation strategy to enhance the quality of feature matching [10]. Specifically, each image was rotated by integer multiples of 90° (0°, 90°, 180°, and 270°) to determine the optimal matching angle under different viewpoints. LightGlue was then applied at each rotation angle to extract and match feature points across the two views (Table S1). To improve computational efficiency, we retained only the rotation angle that yielded the highest number of feature points and used it as the benchmark for subsequent matching. Additionally, we utilized the Random Sample Consensus (RANSAC) algorithm (Table S1) to exclude false matches and ensure the robustness of the matching results [18].
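The rotation selection step can be sketched as follows. This is a minimal illustration, not the authors' implementation: `count_matches` is a hypothetical callable standing in for "rotate the image by this angle, run LightGlue, and count the resulting feature points", and the sketch assumes the count being maximized is the number of matched points.

```python
from typing import Callable, Sequence, Tuple

ROTATIONS = (0, 90, 180, 270)  # integer multiples of 90 degrees, as in the text


def best_rotation(count_matches: Callable[[int], int],
                  angles: Sequence[int] = ROTATIONS) -> Tuple[int, int]:
    """Return (angle, count) for the rotation that yields the most matches.

    count_matches is a hypothetical stand-in for running the feature
    matcher on the image rotated by the given angle.
    """
    counts = {angle: count_matches(angle) for angle in angles}
    # max() keeps the first angle on ties, so 0 degrees wins a tie.
    angle = max(angles, key=lambda a: counts[a])
    return angle, counts[angle]


# Toy usage with made-up match counts per rotation.
fake_counts = {0: 120, 90: 45, 180: 310, 270: 60}
angle, n_matches = best_rotation(fake_counts.__getitem__)
```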

The matched feature points are used to compute the projection transformation matrix from view B to view A. This matrix maps pixel coordinates in view B to the corresponding projected pixel coordinates in view A, as shown in (1).
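For reference, a planar projective transformation of this kind is conventionally written in homogeneous coordinates as below. The symbols $H$, $(x_B, y_B)$, $(x_A, y_A)$, $h_{ij}$, and the scale factor $s$ are notation assumed here for illustration and may differ from the notation of Equation (1).

$$
s \begin{bmatrix} x_A \\ y_A \\ 1 \end{bmatrix}
= H \begin{bmatrix} x_B \\ y_B \\ 1 \end{bmatrix},
\qquad
x_A = \frac{h_{11} x_B + h_{12} y_B + h_{13}}{h_{31} x_B + h_{32} y_B + h_{33}},
\quad
y_A = \frac{h_{21} x_B + h_{22} y_B + h_{23}}{h_{31} x_B + h_{32} y_B + h_{33}}
$$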

Using this transformation matrix, the detection results under view B are projected into view A to obtain the potential object set. After projection, results whose center coordinates are less than 0 or exceed the width or height of the image are considered to lie outside the common field of view and are deleted. The Euclidean distances between each object center in the view A detection results and each object center in the potential object set are used as the matching criterion. These distances are calculated sequentially, as shown in (2).

Equation (2) yields the distance cost matrix, of size m × n, where m and n are the numbers of objects in the two sets being compared. Each element of the matrix is the Euclidean distance between the i-th object center in the first set and the j-th object center in the second set.
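The projection, field-of-view filtering, and cost matrix construction described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the function names, the row-major 3 × 3 list representation of the transformation matrix, and the choice of view A detections as rows are all hypothetical, not taken from the paper.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix3 = Sequence[Sequence[float]]  # assumed 3x3, row-major


def project_point(H: Matrix3, p: Point) -> Point:
    """Apply a 3x3 projective transformation to one pixel coordinate."""
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def project_and_filter(H: Matrix3, centers_b: Sequence[Point],
                       width: float, height: float) -> List[Tuple[int, Point]]:
    """Project view B centers into view A and drop those outside the image.

    Returns (index in centers_b, projected center) so that each kept
    projection can be traced back to its pre-projection detection.
    """
    kept = []
    for idx, center in enumerate(centers_b):
        px, py = project_point(H, center)
        if 0 <= px <= width and 0 <= py <= height:
            kept.append((idx, (px, py)))
    return kept


def distance_cost_matrix(centers_a: Sequence[Point],
                         projected: Sequence[Point]) -> List[List[float]]:
    """Euclidean distance between every view A center and every projection."""
    return [[math.dist(a, p) for p in projected] for a in centers_a]


# Toy usage: a pure translation by (+10, -5) stands in for the real matrix.
H = [[1, 0, 10], [0, 1, -5], [0, 0, 1]]
kept = project_and_filter(H, [(100, 50), (2, 2), (300, 400)], 640, 480)
cost = distance_cost_matrix([(113, 49), (310, 395)], [p for _, p in kept])
```

Keeping the original index alongside each projected center makes it straightforward to recover the pre-transformation view B coordinates later, which the unmatched projection set requires.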

The K-Nearest Neighbor (KNN) method (Table S1) is used for matching [19], with a distance threshold of 200 pixels. A match is considered valid only if the Euclidean distance between the centers of two objects is less than this threshold. The pseudocode for the algorithm is shown in Algorithm 1.

Three sets are obtained after matching: the matching set, which pairs each matched object from the view A results with its matched object in the potential object set; the unmatched set, which contains the unmatched object coordinates from the view A results; and the unmatched projection set, which pairs each unmatched object coordinate in the potential object set with the corresponding object coordinate in view B before transformation.

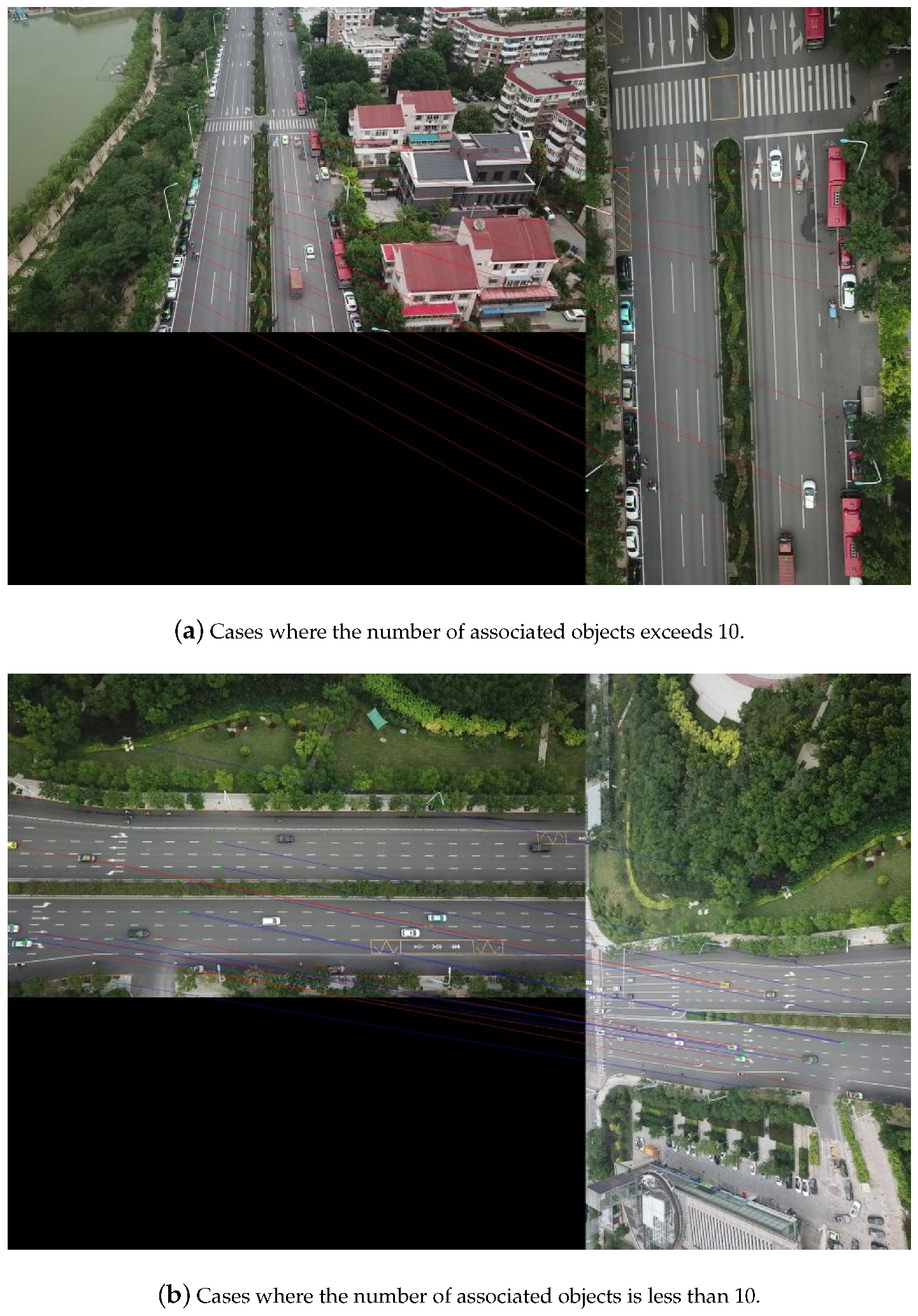

To mitigate the impact of projection misalignment, the matched result obtained from the LightGlue-based projection matching method is combined with the pre-projection view B coordinates of its projected objects to form a new set of matched objects across the two views. The center positions of corresponding objects in the two views are treated as pairs of matching points. If the number of matched object coordinate pairs is sufficient (greater than 10), a new transformation matrix is computed directly, using only the object location information. If the number of matched objects is insufficient, the 10 closest feature point pairs from the background features matched by LightGlue are selected and combined with the matched object points to jointly compute the new transformation matrix, as shown in Figure 3, where red lines connect matched object pairs and blue lines connect matched background feature points. Incorporating matched object information imposes additional object-level constraints on the transformation, yielding more accurate projections. Moreover, this strategy is not limited by the number of matched objects: when object information is insufficient, the transformation matrix can still be reliably estimated by combining object and background features (Supplementary S3).
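The choice of point pairs for the second estimate can be sketched as below. This is a hedged illustration: the text does not define how "closest" is measured for background feature pairs, so the sketch takes a caller-supplied score per background pair (lower means closer) rather than assuming a definition. The names `select_point_pairs`, `MIN_OBJECT_PAIRS`, and `N_BACKGROUND` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Pair = Tuple[Point, Point]  # (point in view B, corresponding point in view A)

MIN_OBJECT_PAIRS = 10  # "sufficient" means more than 10 matched object pairs
N_BACKGROUND = 10      # number of background pairs added when objects are scarce


def select_point_pairs(object_pairs: Sequence[Pair],
                       background_pairs: Sequence[Pair],
                       background_scores: Sequence[float]) -> List[Pair]:
    """Pick the point pairs used to re-estimate the transformation matrix.

    background_scores[i] is an assumed closeness score for
    background_pairs[i]; how it is computed is left to the caller.
    """
    if len(object_pairs) > MIN_OBJECT_PAIRS:
        # Enough objects: use object centers only.
        return list(object_pairs)
    # Too few objects: add the N_BACKGROUND best-scoring background pairs.
    ranked = sorted(range(len(background_pairs)),
                    key=lambda i: background_scores[i])
    chosen = [background_pairs[i] for i in ranked[:N_BACKGROUND]]
    return list(object_pairs) + chosen
```

The returned pairs would then be passed to a homography estimator of the reader's choice; the text does not state which estimator is used for this second pass.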

After computing the new transformation matrix, the distance cost matrix between the two object sets is recalculated. The KNN algorithm is then applied for object association, yielding three sets of object information: the matched object set, the unmatched object set, and the unmatched projected object set. These three sets form the foundation for the subsequent fusion process.

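Algorithm 1. Pseudo-code of KNN-based object association algorithm

- Require: distance cost matrix
- Ensure: matched indices list; unmatched indices for each of the two object sets
- Initialize lists: candidate pairs and matched indices, both empty
- Initialize maps: forward map and backward map, both empty
- // Phase 1: Find K-Nearest Neighbors
- for each row i of the distance cost matrix do
  - take row i of the distance cost matrix
  - select the indices of the top k smallest values in that row
  - for each j in the selected indices do
    - if the distance between i and j is below the threshold then
      - Append the pair (i, j) to the candidate pairs
    - end if
  - end for
- end for
- // Phase 2: Forward matching (B to A)
- for each pair in the candidate pairs do
  - if its view B object has no forward match yet, or this pair has a smaller distance than the current forward match, then
    - store this pair as the forward match of that object
  - end if
- end for
- // Phase 3: Backward matching (A to B)
- for each pair in the candidate pairs do
  - if its view A object has no backward match yet, or this pair has a smaller distance than the current backward match, then
    - store this pair as the backward match of that object
  - end if
- end for
- // Phase 4: Mutual verification
- for each entry in the forward map do
  - look up the backward match of the view A object in that entry
  - if the backward match points back to the same view B object then
    - Append the pair to the matched indices list
  - end if
- end for
- // Identify unmatched objects
- collect the indices of the first object set that do not appear in the matched indices list
- collect the indices of the second object set that do not appear in the matched indices list
- return the matched indices list and the two unmatched indices lists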
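A runnable sketch of the four phases of Algorithm 1 is given below. It is one possible reading of the pseudocode, not the authors' code: the value of k is not stated in the text, so `k=3` is a hypothetical default, and rows and columns of the cost matrix simply stand for the two object sets without assuming which one is view A.

```python
from typing import Dict, List, Sequence, Tuple


def knn_associate(cost: Sequence[Sequence[float]], k: int = 3,
                  threshold: float = 200.0
                  ) -> Tuple[List[Tuple[int, int]], List[int], List[int]]:
    """Mutual K-nearest-neighbor association on a distance cost matrix."""
    m = len(cost)
    n = len(cost[0]) if m else 0

    # Phase 1: for every row, keep its k nearest columns under the threshold.
    candidates: List[Tuple[int, int, float]] = []
    for i in range(m):
        nearest = sorted(range(n), key=lambda j: cost[i][j])[:k]
        for j in nearest:
            if cost[i][j] < threshold:
                candidates.append((i, j, cost[i][j]))

    # Phase 2: best row for each column.
    best_for_col: Dict[int, Tuple[int, float]] = {}
    for i, j, d in candidates:
        if j not in best_for_col or d < best_for_col[j][1]:
            best_for_col[j] = (i, d)

    # Phase 3: best column for each row.
    best_for_row: Dict[int, Tuple[int, float]] = {}
    for i, j, d in candidates:
        if i not in best_for_row or d < best_for_row[i][1]:
            best_for_row[i] = (j, d)

    # Phase 4: keep a pair only if both directions agree.
    matches = [(i, j) for j, (i, _) in best_for_col.items()
               if best_for_row[i][0] == j]

    matched_rows = {i for i, _ in matches}
    matched_cols = {j for _, j in matches}
    unmatched_rows = [i for i in range(m) if i not in matched_rows]
    unmatched_cols = [j for j in range(n) if j not in matched_cols]
    return matches, unmatched_rows, unmatched_cols


# Toy usage: two clear matches, one row and one column left unmatched.
cost = [[5.0, 300.0, 150.0],
        [140.0, 8.0, 400.0],
        [250.0, 260.0, 500.0]]
matches, unmatched_rows, unmatched_cols = knn_associate(cost, k=2)
```

The mutual check in the last phase is what prevents one object from being claimed by two partners: column 2 in the toy matrix is within the threshold of row 0, but row 0 prefers column 0, so the pair is rejected.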

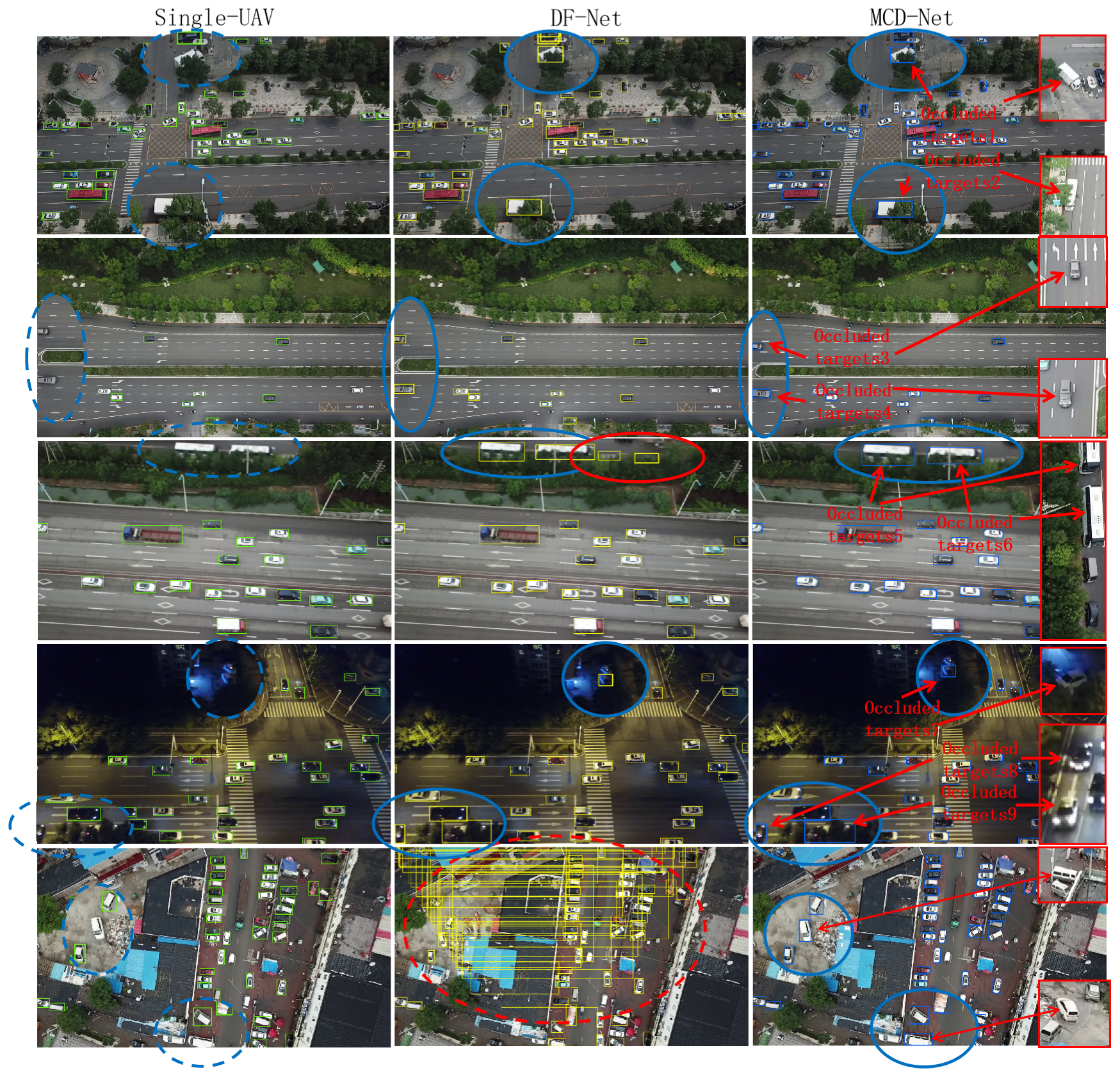

2.3. Hybrid Fusion

For the unmatched projection set, there are two cases. In the first, the projection deviates, so the pixel region in view A delineated by the projected position does not correspond to the object region in view B. In the second, the projection is correct and the pixel region in view A delineated by the projected position does contain an object, but that object may not have been detected because of occlusion. To address these problems, we design a hybrid fusion method to screen the results. Confidence-Based Decision Fusion is performed first to conduct a preliminary screening of the three sets. Then, the consistency measurement module is used to process the set of unmatched projections, thereby excluding non-corresponding results. Finally, the Vehicle Parts Perception module is used to classify the potential object areas in view A to reduce false detections and missed detections. The final result is displayed on the selected host view. The following sections introduce the specific modules of hybrid fusion.

2.3.1. Confidence-Based Decision Fusion

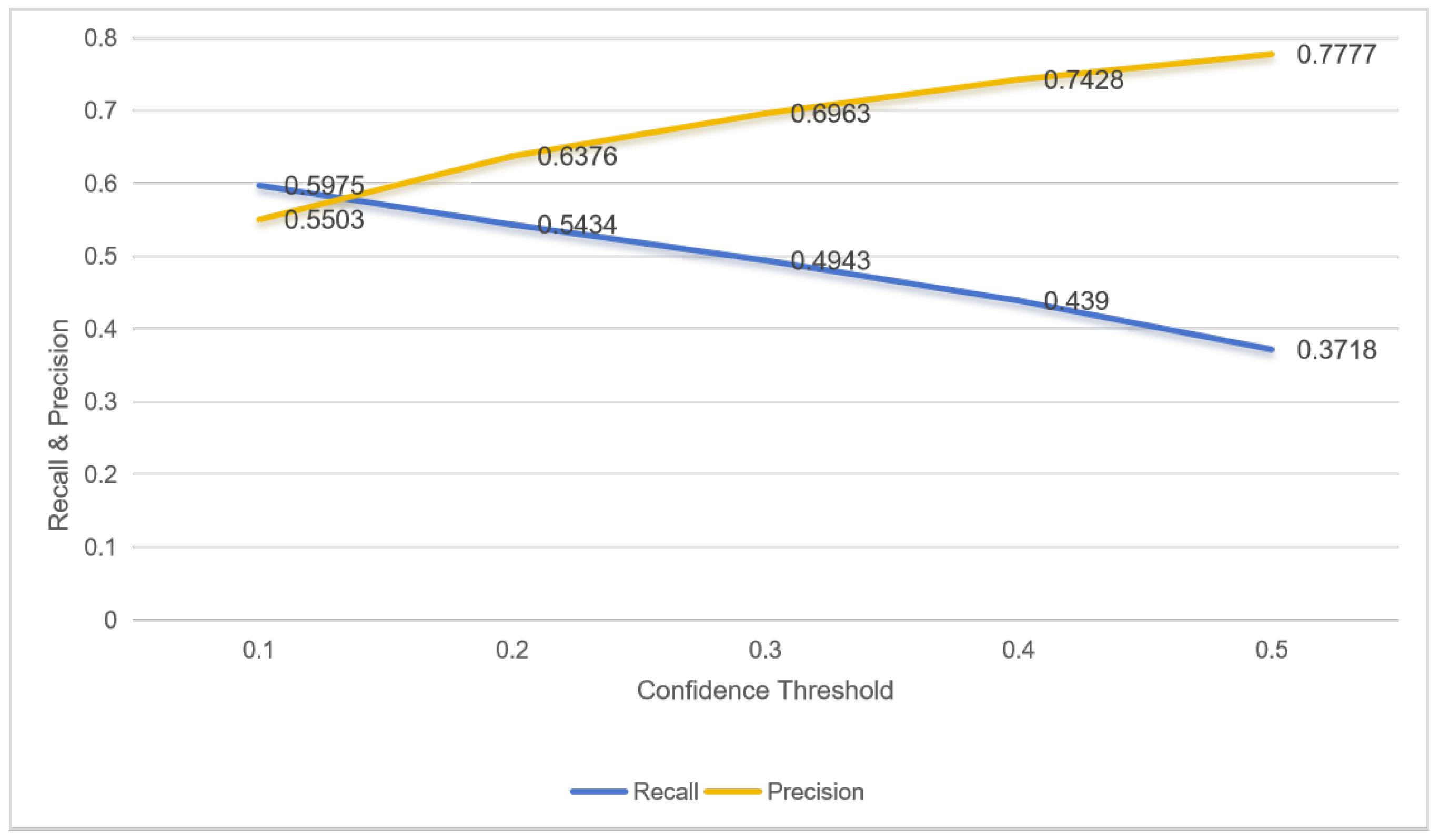

In detection tasks, a confidence threshold is typically set, and only results above this threshold are considered reliable. ByteTrack suggests that low-confidence detection results may contain information about occluded objects [20]. This paper verifies this on the MDMT dataset using a single-UAV detection task, by varying the confidence threshold and calculating the recall and precision of occluded objects in the results. The trends of these two metrics with changing confidence thresholds are shown in Figure 4. As can be seen from the figure, the precision of occluded objects increases as the confidence threshold rises, while the recall decreases. This indicates that using a lower confidence threshold can indeed capture more information about occluded objects, but at the same time introduces more false detections.

Because this paper targets multi-UAV tasks, detection results can be obtained from multiple sources. To preserve these low-confidence results more reasonably while reducing the risk of false detections, this paper proposes Confidence-Based Decision Fusion, which applies different confidence thresholds to filter the results from single-UAV and multi-UAV detections, respectively.

For the unmatched set and the unmatched projection set, which contain single-UAV results, we set a base confidence threshold conf1 and delete results below conf1. The unmatched projection set is further filtered by the subsequent modules.

For the matched object set, which contains objects that both UAVs detect and match, we set a lower confidence threshold conf2 (conf2 < conf1). Because the same object may exhibit different characteristics under different viewpoints, the confidence score for object detection in one viewpoint might be low due to occlusion or other factors. However, when observed from another viewpoint, the object may be largely unoccluded, resulting in a higher confidence score. Moreover, even if the object is occluded in both viewpoints, leading to low confidence scores, the integrated judgment combining information from both viewpoints is significantly more reliable than relying solely on a low-confidence result from a single viewpoint. Since the final result will be overlaid onto viewpoint A for display, view A results in the matched set with medium confidence above conf2 are directly retained.
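The two-threshold rule can be sketched as follows. This is an illustrative reading only: the values `conf1=0.5` and `conf2=0.2` are hypothetical placeholders (the text does not give them here), and applying conf2 to the view A confidence of each matched pair is an assumption about how the matched set is filtered.

```python
from typing import List, Sequence, Tuple


def fuse_by_confidence(matched: Sequence[Tuple[float, float]],
                       unmatched_a: Sequence[float],
                       unmatched_proj: Sequence[float],
                       conf1: float = 0.5,
                       conf2: float = 0.2
                       ) -> Tuple[List[int], List[int], List[int]]:
    """Return the indices kept in each of the three sets.

    matched holds (view A confidence, view B confidence) per matched pair;
    the other two sequences hold one single-view confidence per detection.
    """
    if not conf2 < conf1:
        raise ValueError("conf2 must be lower than conf1")
    # Matched by both UAVs: the lower threshold conf2 applies (assumed to be
    # checked against the view A confidence, since results are shown on A).
    kept_matched = [i for i, (conf_a, _) in enumerate(matched) if conf_a > conf2]
    # Single-UAV results: the base threshold conf1 applies.
    kept_a = [i for i, c in enumerate(unmatched_a) if c >= conf1]
    kept_proj = [i for i, c in enumerate(unmatched_proj) if c >= conf1]
    return kept_matched, kept_a, kept_proj


# Toy usage with made-up confidence scores.
kept_matched, kept_a, kept_proj = fuse_by_confidence(
    matched=[(0.3, 0.8), (0.1, 0.9)],
    unmatched_a=[0.6, 0.4],
    unmatched_proj=[0.55, 0.2],
)
```

In the toy data, the first matched pair survives with a view A confidence of only 0.3, which would have been discarded under the single-UAV threshold.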

2.3.2. Region Consistency Measurement Module

Due to inaccuracies in projection transformation, pixel coordinate mapping across different viewpoints often fails to ensure that corresponding points align with the exact same real-world location. To address this, we leverage the computation of image feature similarity to determine whether regions before and after projection correspond to the same physical location. However, under different viewpoints, image appearance may be affected by complex factors such as illumination changes, scale variations, and occlusion, leading to changes in visual features. Traditional feature extraction methods (e.g., color histograms and structural similarity) often struggle to handle these challenges effectively. While trained neural networks can manage certain complex scenarios to some extent, their performance heavily depends on the quality and diversity of the training data, which limits their stability and generalization capability.

To address this, we introduce the Contrastive Language–Image Pretraining (CLIP) model as the image feature extractor [21]. The image encoder of CLIP is based on the Vision Transformer architecture. Through image–text contrastive learning, it maps visual features into a multimodal semantic space aligned with text, enabling a deep understanding of image content. Moreover, since CLIP is pretrained on large-scale cross-modal data, it possesses strong feature representation capability and zero-shot transfer ability. As a result, its image feature extraction is highly stable and can be applied directly without fine-tuning.

Leveraging these advantages, we design a Region Consistency Measurement module (RCM module) based on CLIP’s image encoder. The process is as follows. First, based on the unmatched projection set, we extract the corresponding image patches from viewpoint A and viewpoint B. These image patches are then fed into CLIP’s image encoder, which outputs one 512-dimensional feature vector for each of the two regions. Subsequently, these feature vectors undergo L2 normalization so that the cosine similarity computation depends solely on the direction of the vectors, not on their magnitudes. This step is critical for obtaining accurate and scale-invariant similarity measurements. Finally, the cosine similarity between the two feature vectors is computed as shown in (3). A similarity threshold of 0.9 is set; if the calculated similarity exceeds this threshold, the two regions are considered to correspond to the same physical area and are passed to the next step for further verification to confirm the presence of an object.
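The normalization and similarity test can be sketched in plain Python. This is a minimal sketch that operates on feature vectors already extracted by an image encoder; the encoder call itself is omitted, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

SIMILARITY_THRESHOLD = 0.9  # threshold stated in the text


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity, computed as the dot product of unit vectors."""
    if len(u) != len(v):
        raise ValueError("feature vectors must have the same length")
    un, vn = l2_normalize(u), l2_normalize(v)
    return sum(a * b for a, b in zip(un, vn))


def same_region(u: Sequence[float], v: Sequence[float],
                threshold: float = SIMILARITY_THRESHOLD) -> bool:
    """True if the two regions are judged to show the same physical area."""
    return cosine_similarity(u, v) > threshold
```

Because both vectors are normalized first, rescaling either feature vector leaves the similarity unchanged, which is the scale invariance the paragraph above refers to.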

The RCM module proposed in this paper provides an effective solution for addressing the issue of misaligned regions across multiple viewpoints. Based on the CLIP pretrained model, this module can determine projection discrepancies by calculating the semantic similarity between images without requiring additional training. Compared to traditional methods, this approach enhances the system’s adaptability in complex scenarios.

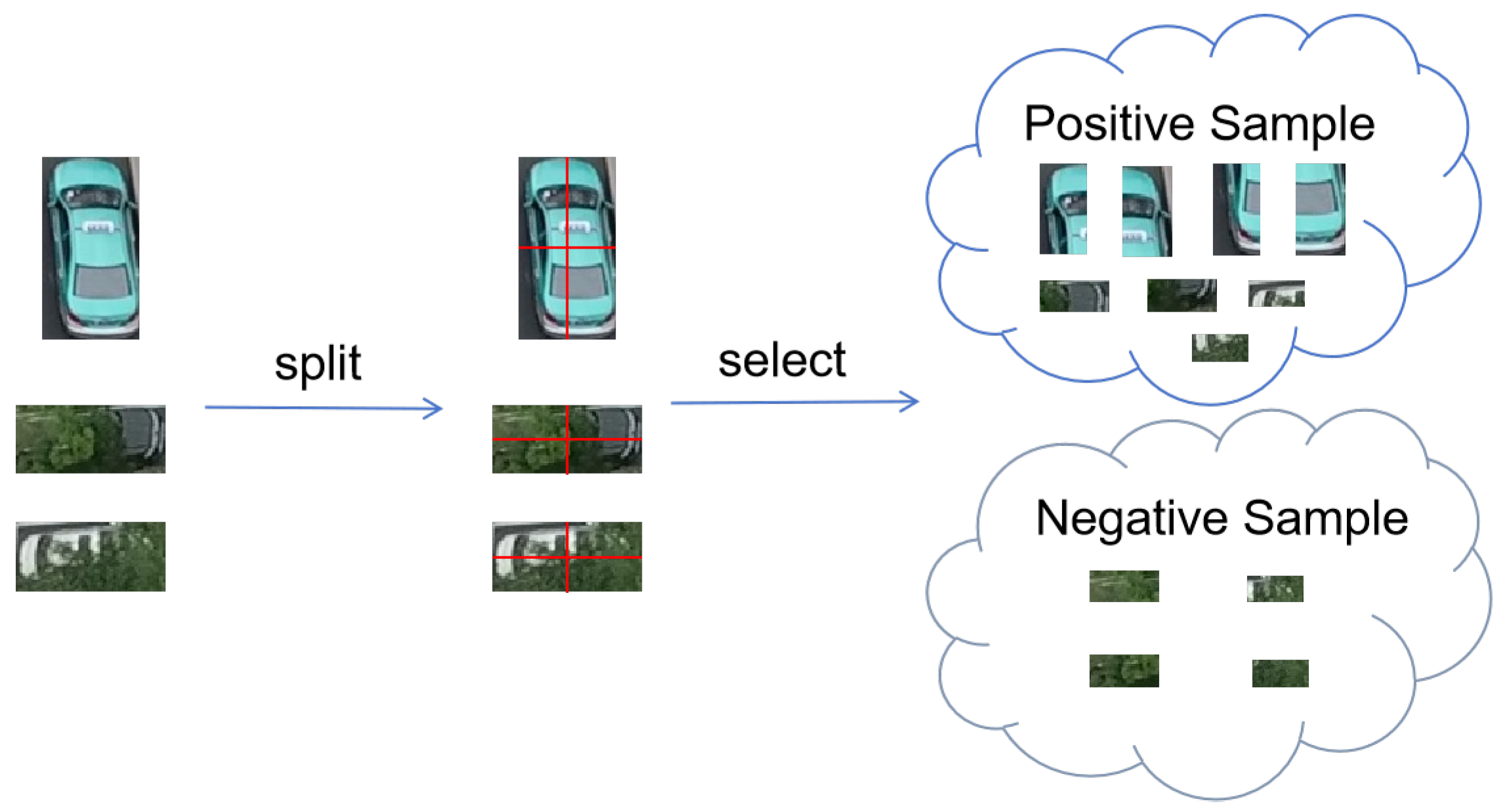

2.3.3. Vehicle Parts Perception Module

Objects occlusion will seriously affect the performance of UAV detection algorithms. While making masks for occluded objects can improve the network’s ability to perceive occlusions [

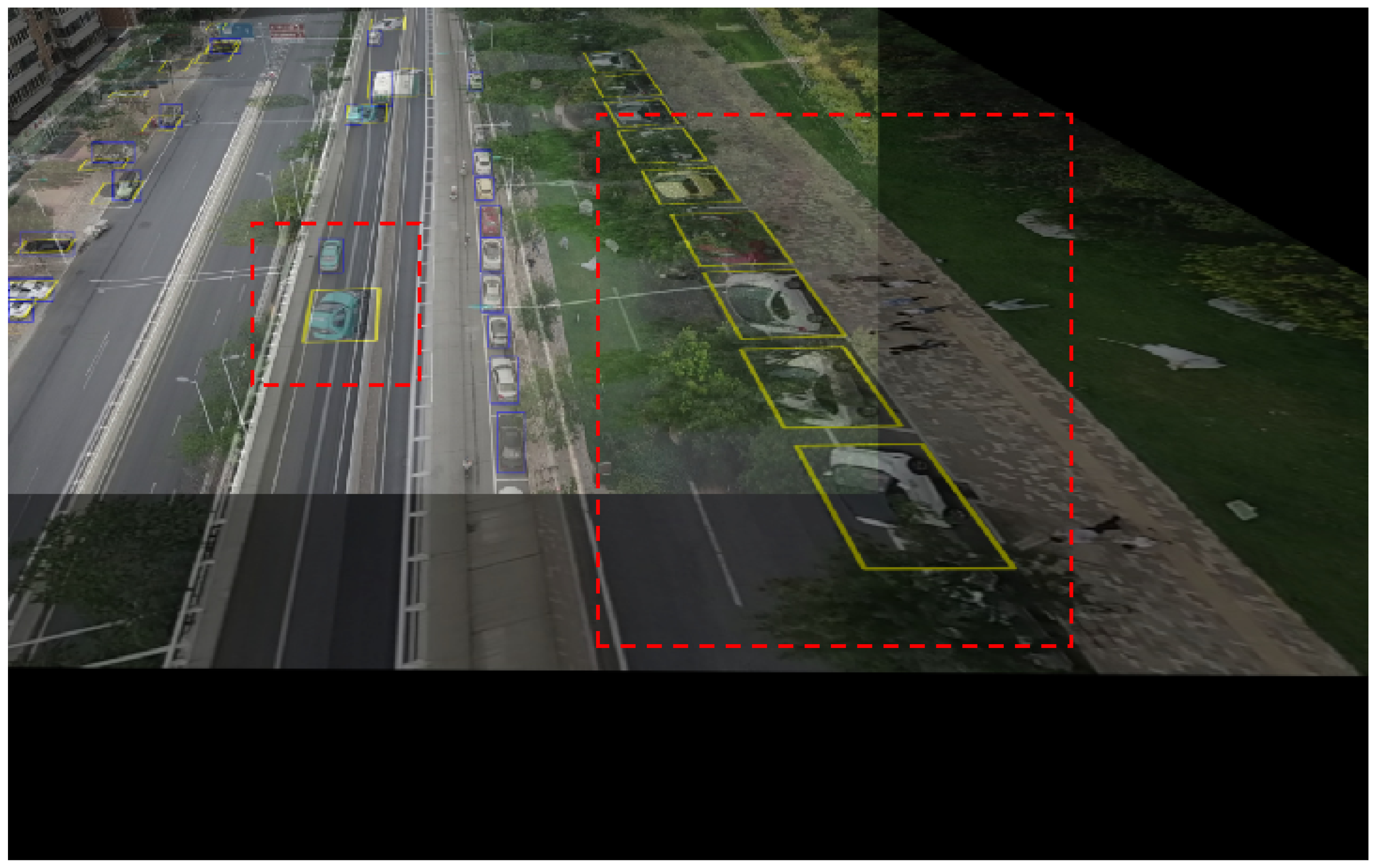

22], fine annotations take a lot of time and cannot cover all occlusion cases. We observed that when splitting images of occluded vehicles, some of the resulting image blocks are often unoccluded. For example, in the case shown in

Figure 5, although the overall occlusion rate exceeds fifty percent, after dividing the image into four quadrants and examining each block individually, it becomes evident that the two left blocks consist almost entirely of background information, while the two right blocks retain relatively intact features of vehicle parts.

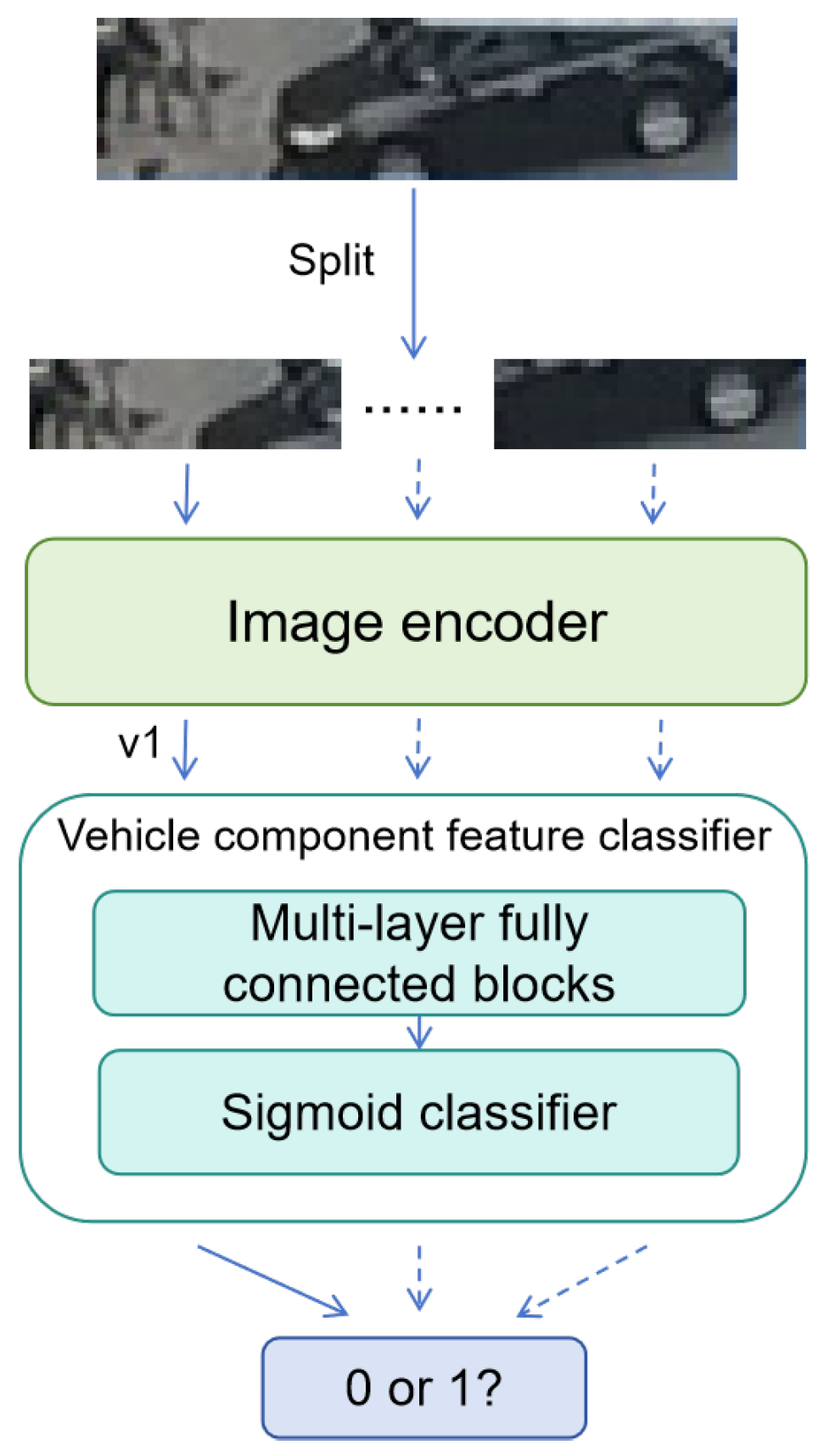

To avoid requiring the detection network to directly handle the complex problem of occlusion, we propose a Vehicle Parts Perception module (VPP module) to perceive vehicle part features based on local semantic characteristics. This method divides the candidate region image blocks that pass the region consistency measurement module into several smaller patches, then extracts semantic features from each patch and classifies them according to vehicle part characteristics. Because specific vehicle parts (e.g., windows, wheels) are often too small under the UAV’s perspective, which makes annotation and detection challenging, the method does not classify specific part types but instead determines whether a region contains vehicle parts. By analyzing local information, this approach confirms the presence of an object without relying on a complete, holistic view of the entire object.

The framework of the VPP module is illustrated in Figure 6. First, multiple image patches are extracted from each potential object region to cover different locations. This approach ensures that even if parts of the object are occluded, characteristic features indicating its presence can still be captured. Next, the image encoder of CLIP is used to extract feature vectors from each image patch. These feature vectors contain rich semantic information, which benefits the subsequent classification task. Finally, the feature vectors are fed into a vehicle part feature classifier for classification.

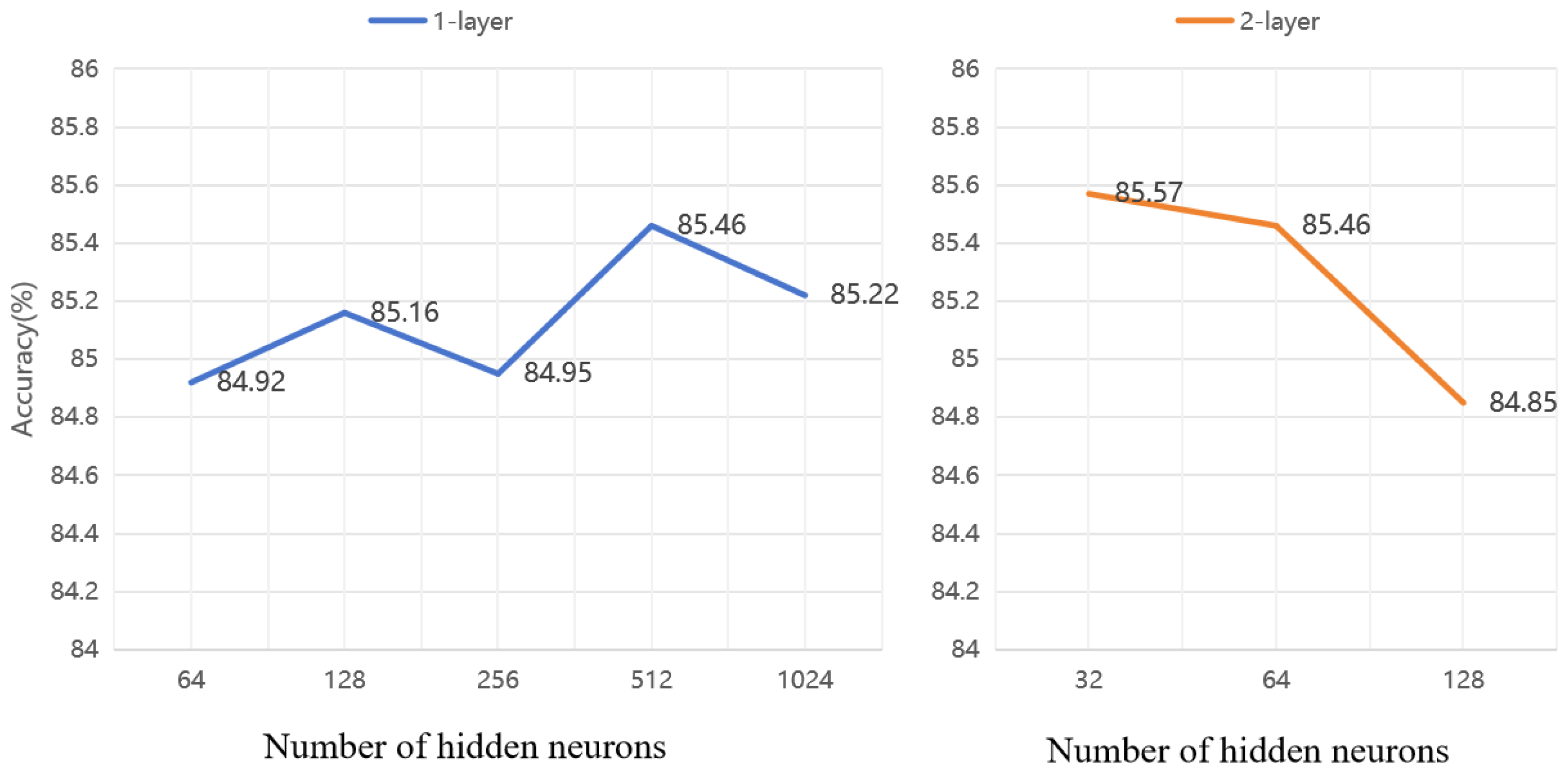

The classifier consists of a two-layer fully connected block with the following structure. The input layer receives a 512-dimensional feature vector, consistent with the dimensionality of the image features extracted by CLIP. The two hidden layers contain 512 and 32 neurons, respectively, enhancing the model’s expressive capacity. The output layer produces two classes, corresponding to the positive sample (vehicle part present) and the negative sample (no vehicle part present). Cross-entropy loss is used as the classification loss for label prediction, as shown in (4), which compares the output of the classifier with the label y. Considering the occlusion issue, if at least one image patch within a region is classified as a positive sample, the region is deemed to contain an object.
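The patch-level decision rule can be sketched as below. This is an illustrative sketch under stated assumptions: the text only says that a region is divided into several smaller patches, so the fixed 2 × 2 quadrant split mirrors the Figure 5 example rather than a confirmed design, and `is_vehicle_part` is a hypothetical stand-in for "encode the patch with CLIP and run the classifier".

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def split_into_quadrants(box: Box) -> List[Box]:
    """Split a region into four patches (assumed 2x2 layout)."""
    x0, y0, x1, y1 = box
    xm, ym = (x0 + x1) // 2, (y0 + y1) // 2
    return [(x0, y0, xm, ym), (xm, y0, x1, ym),
            (x0, ym, xm, y1), (xm, ym, x1, y1)]


def region_contains_object(box: Box,
                           is_vehicle_part: Callable[[Box], bool]) -> bool:
    """A region counts as an object if at least one patch is positive."""
    return any(is_vehicle_part(patch) for patch in split_into_quadrants(box))


# Toy usage: a stand-in classifier that fires only on the right-hand
# patches, as in the half-occluded vehicle example.
patches = split_into_quadrants((0, 0, 100, 60))
found = region_contains_object((0, 0, 100, 60), lambda p: p[0] >= 50)
```

Using `any` makes the rule tolerant of occlusion: the two background-only patches on the left do not veto the positive patches on the right.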

We simplify the complex occlusion problem by transforming it into a vehicle part classification task on image patches. This approach not only reduces the time cost associated with fine-grained annotation, but also enhances the robustness and accuracy of the system. Meanwhile, the integration of multi-view information helps to narrow down the potential object regions, which provides an effective prerequisite for the classifier. Together, these steps overcome the challenges posed by occlusion to a certain extent and improve the overall performance of object detection.