Online Multi-AUV Trajectory Planning for Underwater Sweep Video Sensing in Unknown and Uneven Seafloor Environments

Highlights

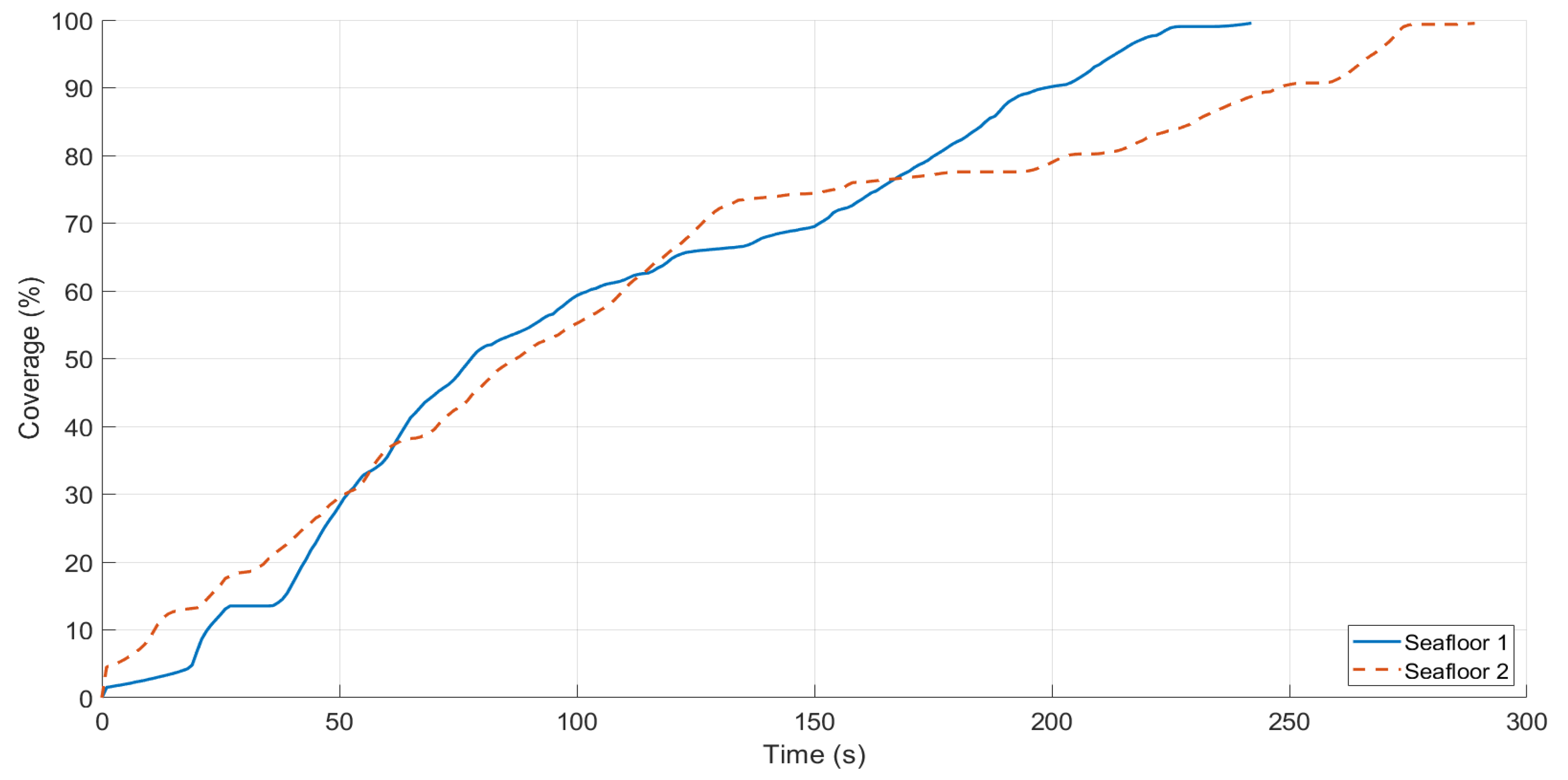

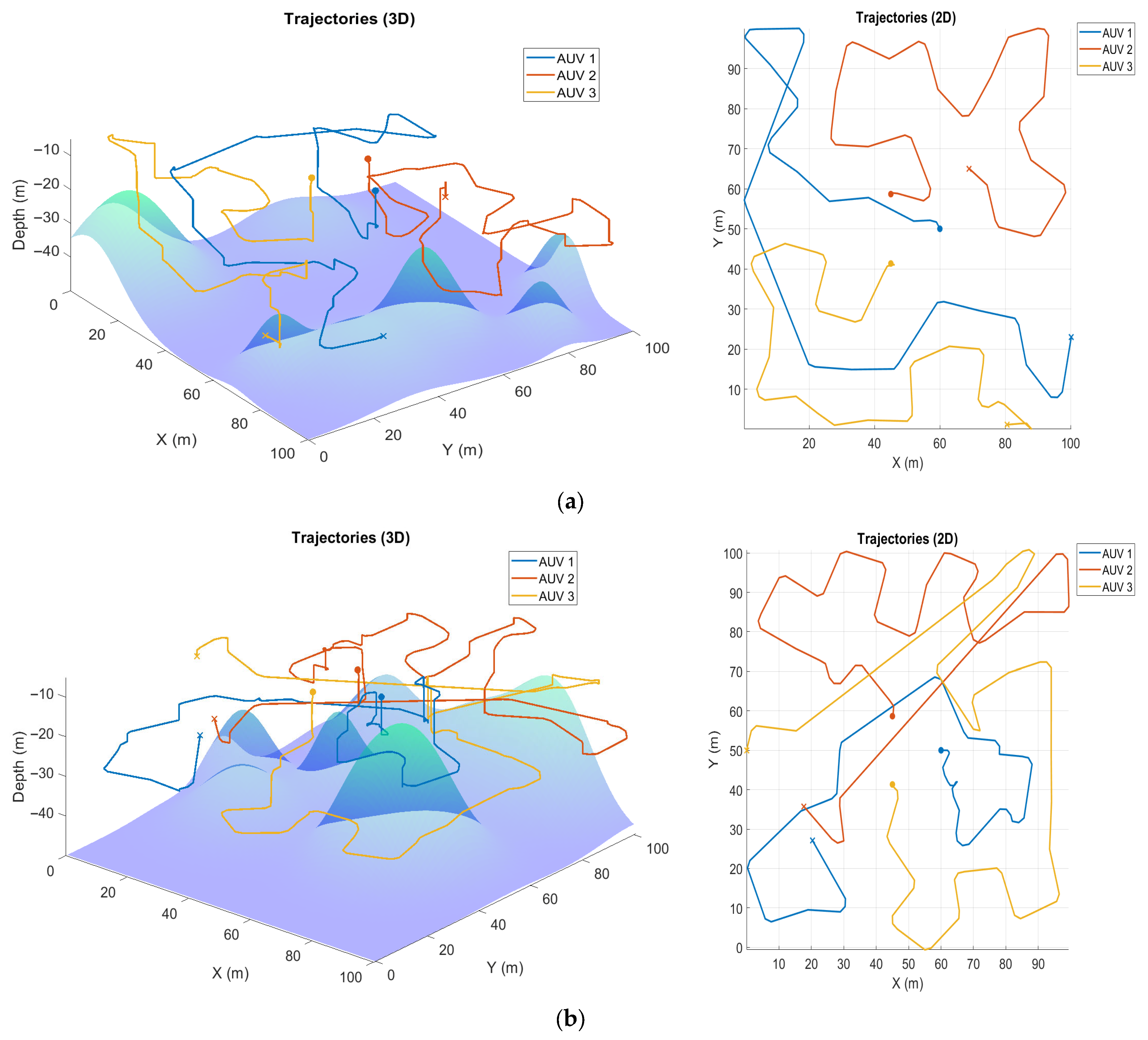

- The proposed online multi-AUV method achieves reliable sweep video coverage of unknown and uneven seafloors while maintaining safety margins and adapting to terrain occlusions.

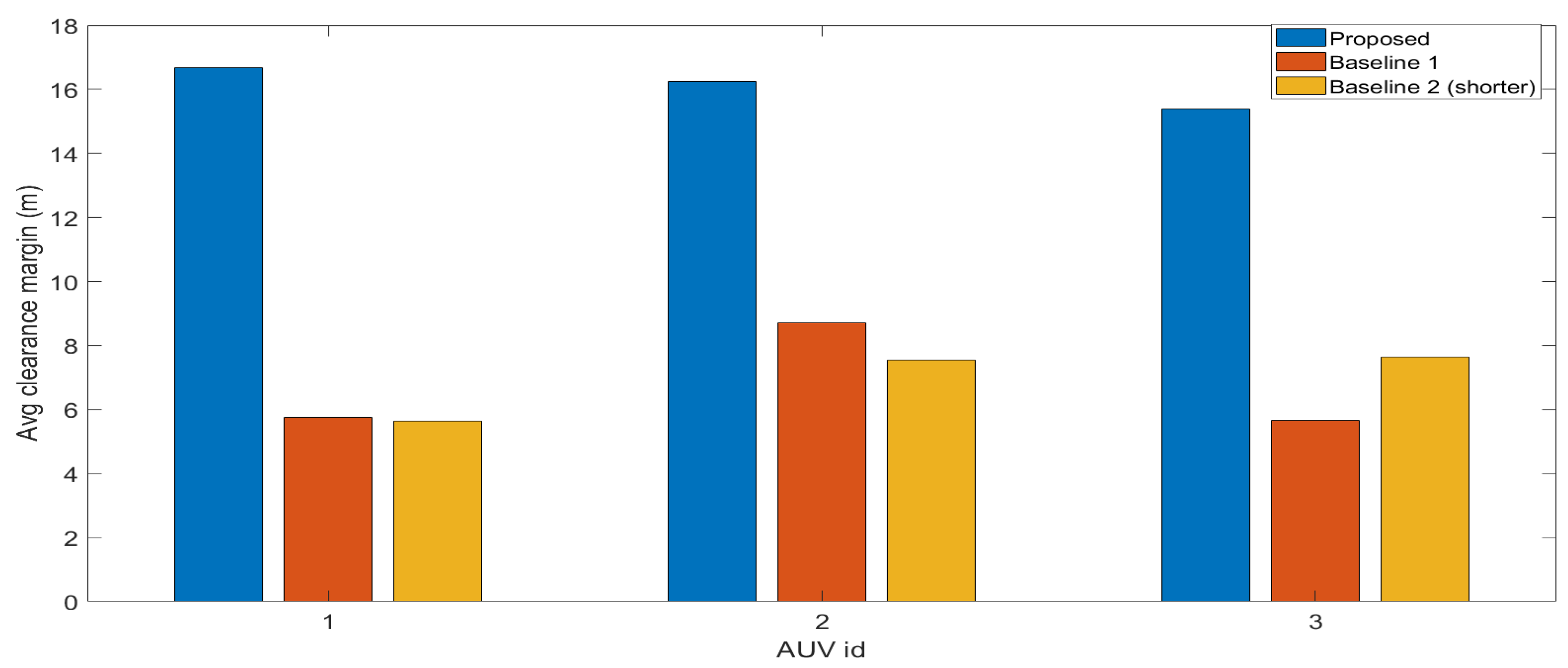

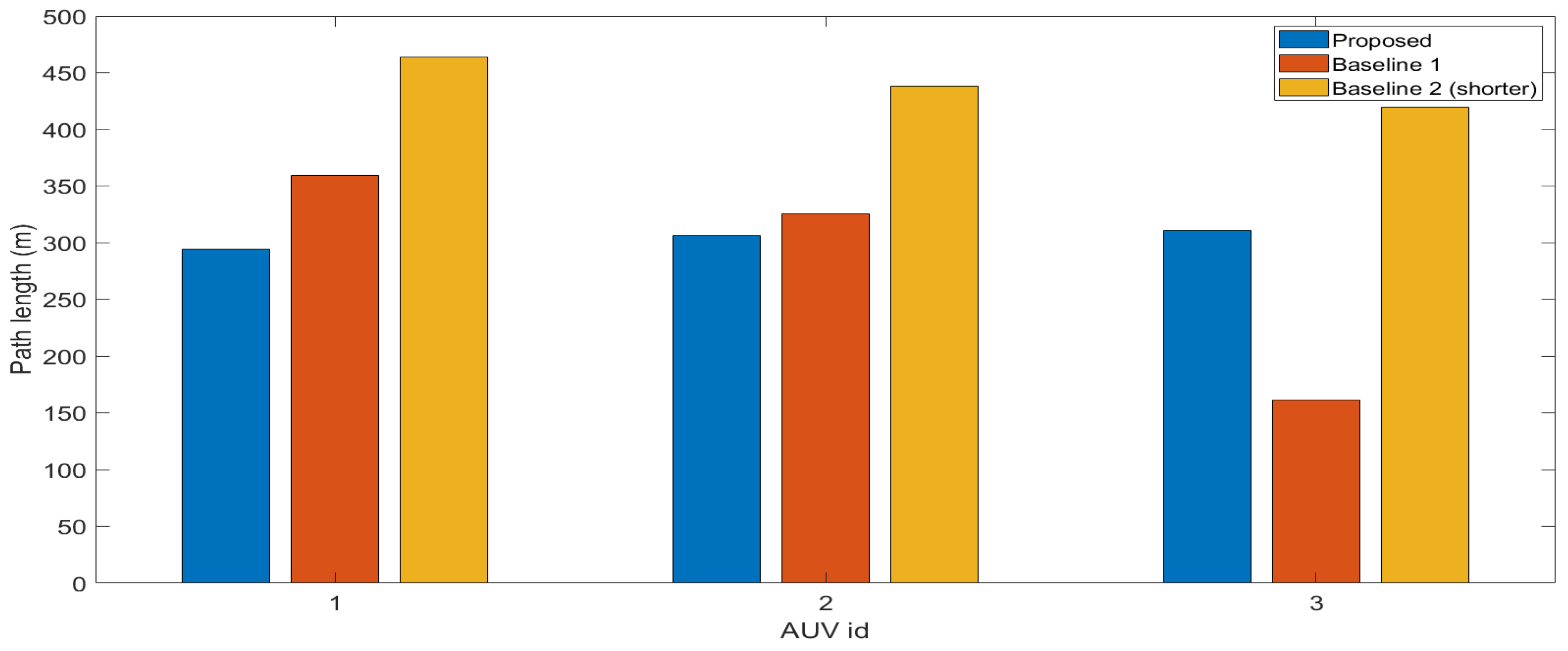

- Benchmarking against lawnmower strategies shows that the proposed approach provides higher coverage, safer trajectories, and more efficient mapping under challenging terrain conditions.

- The proposed method offers an effective solution for occlusion-aware underwater sensing missions over unknown and uneven seafloor environments where fixed-pattern approaches are inadequate.

- The framework can be extended to larger multi-AUV systems and real-world deployments, enabling more efficient video sensing applications.

Abstract

1. Introduction

1.1. Contribution

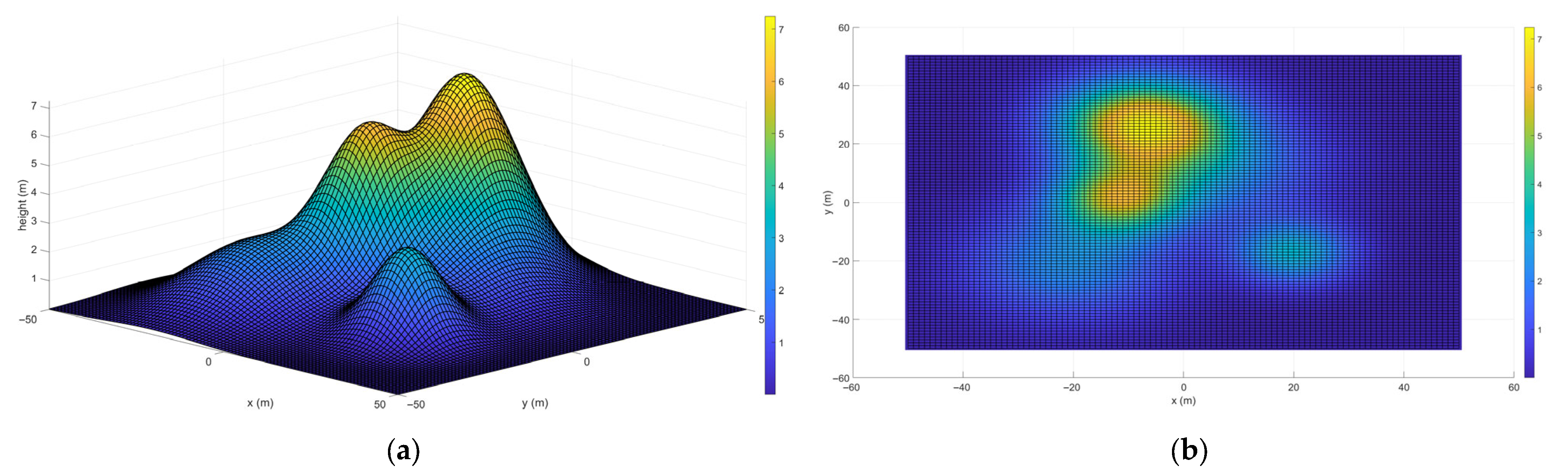

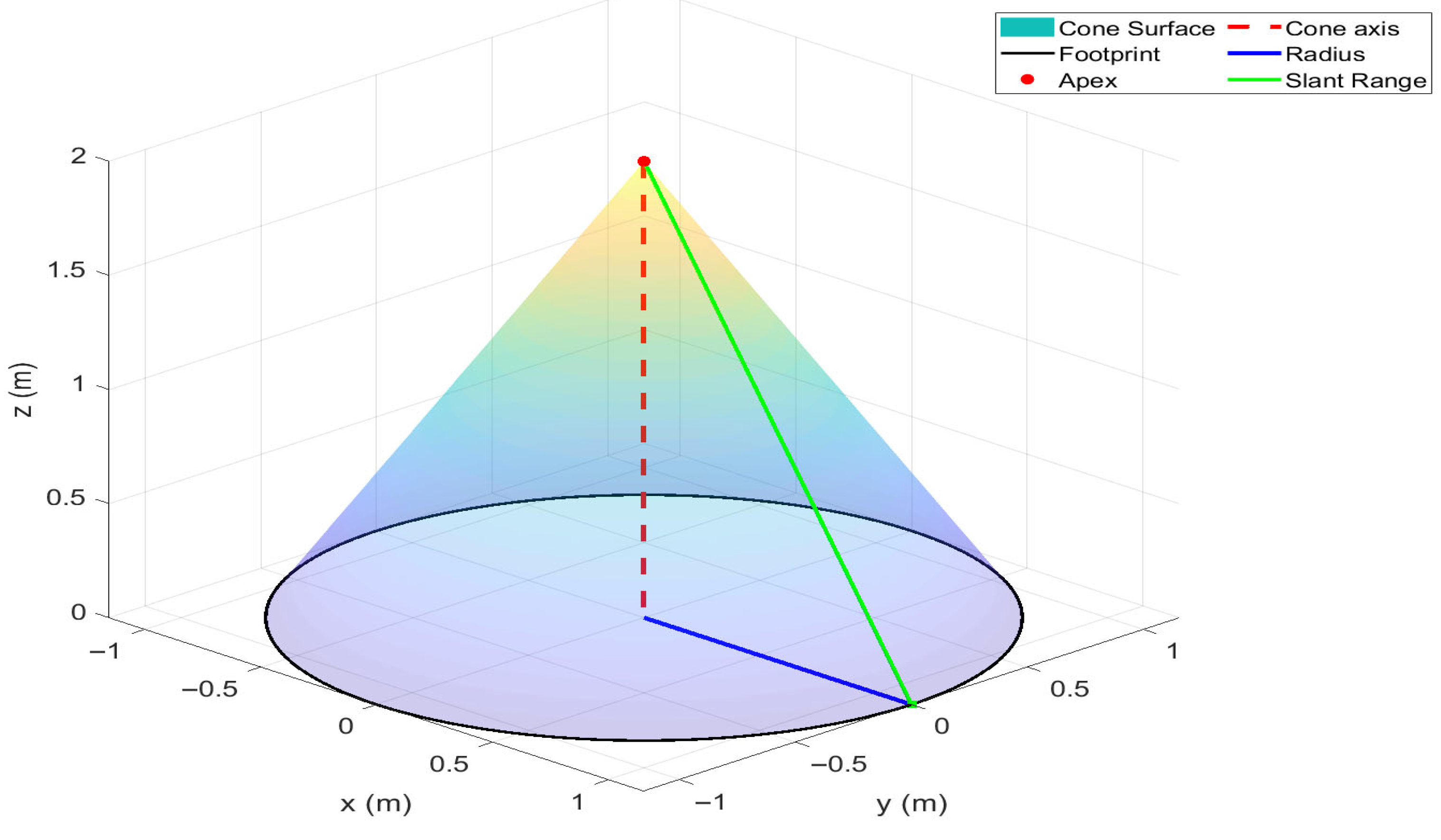

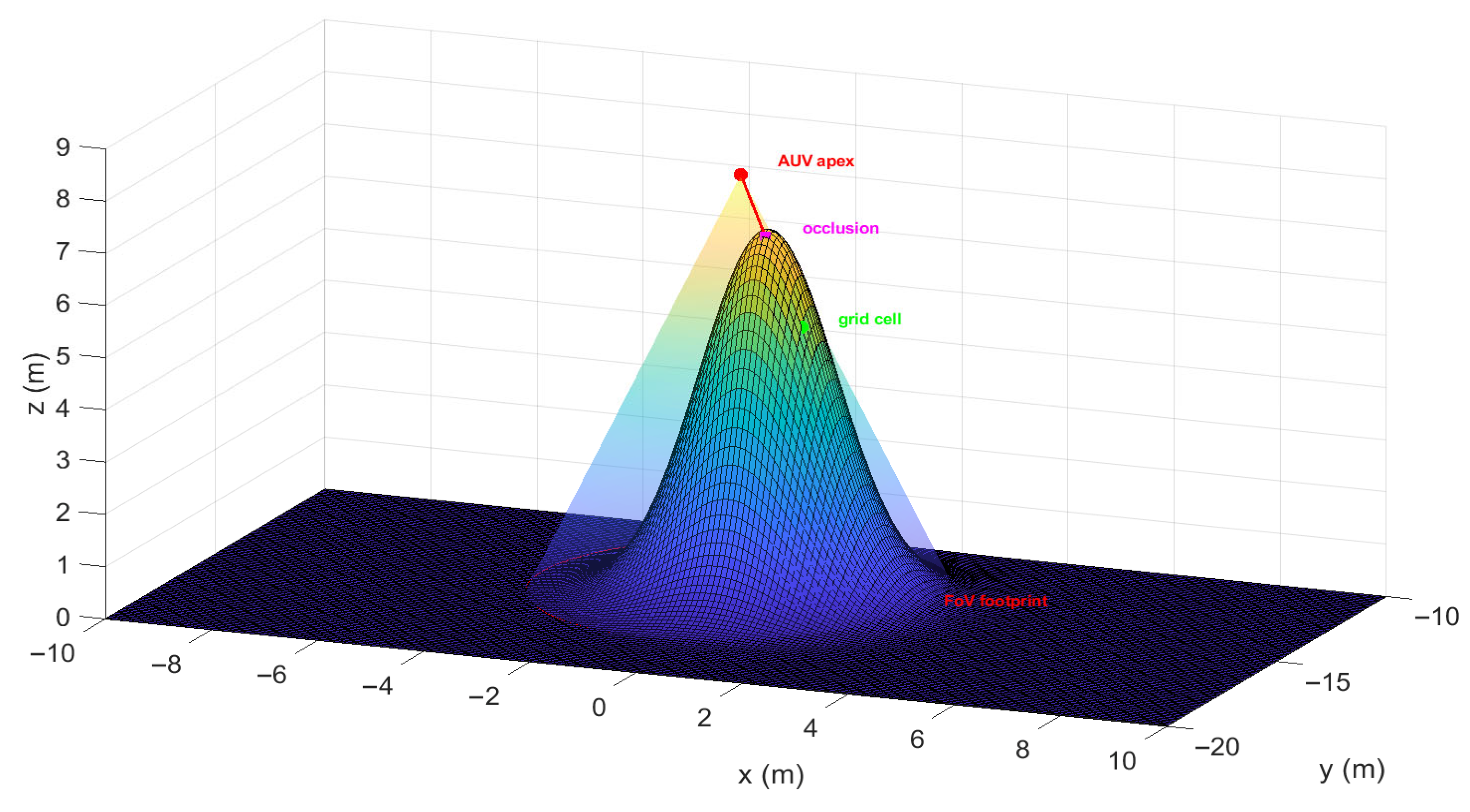

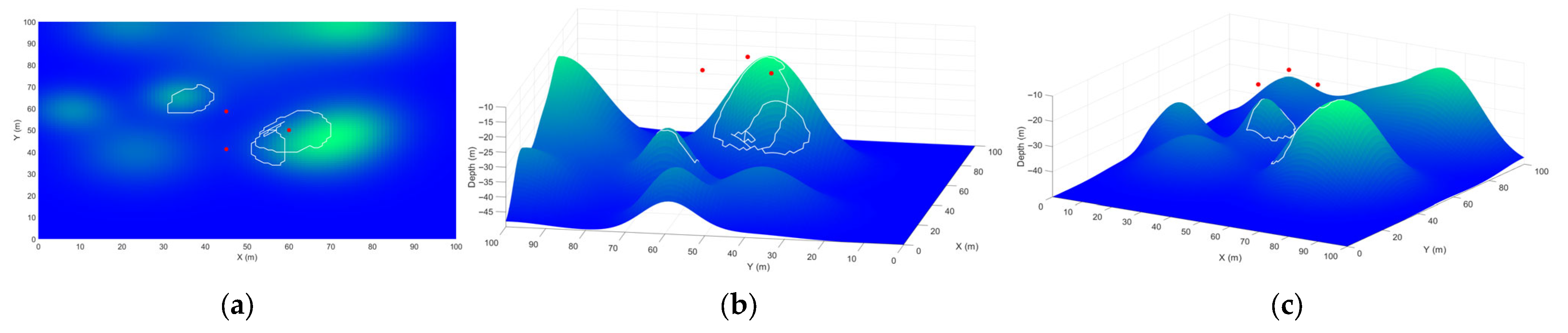

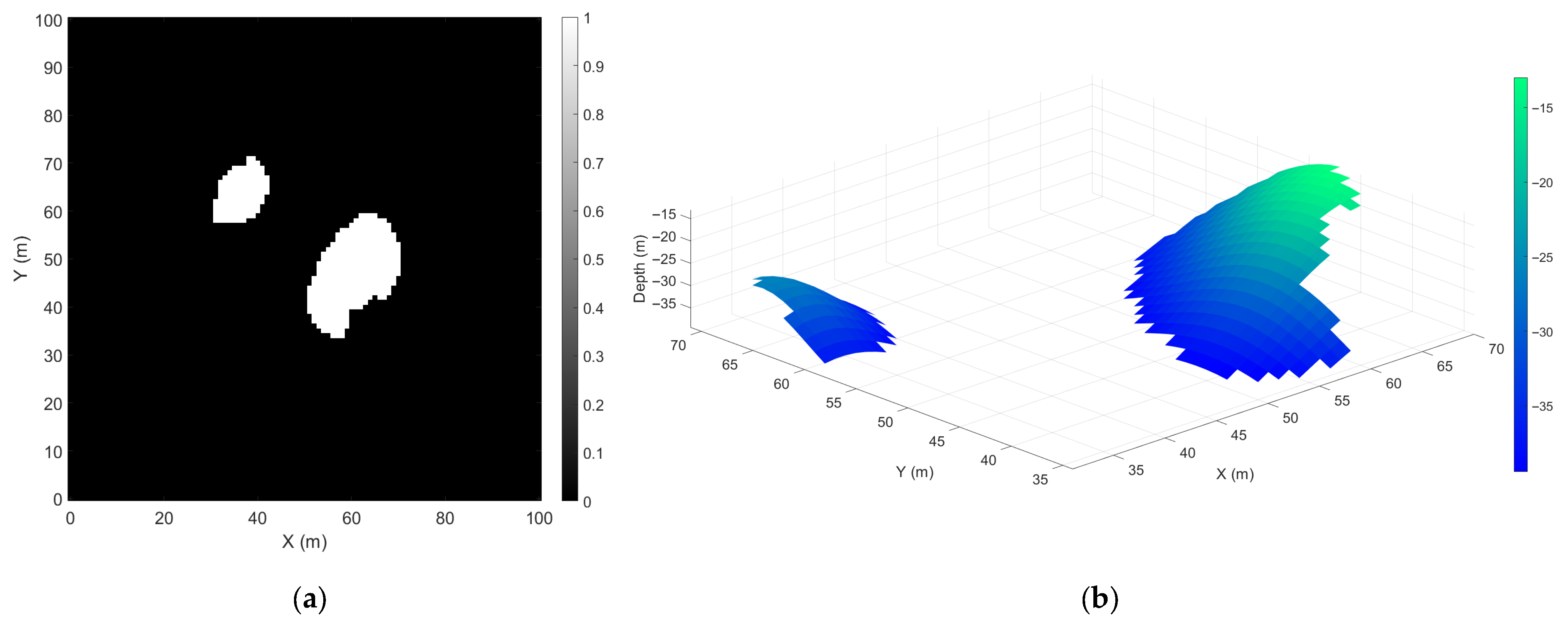

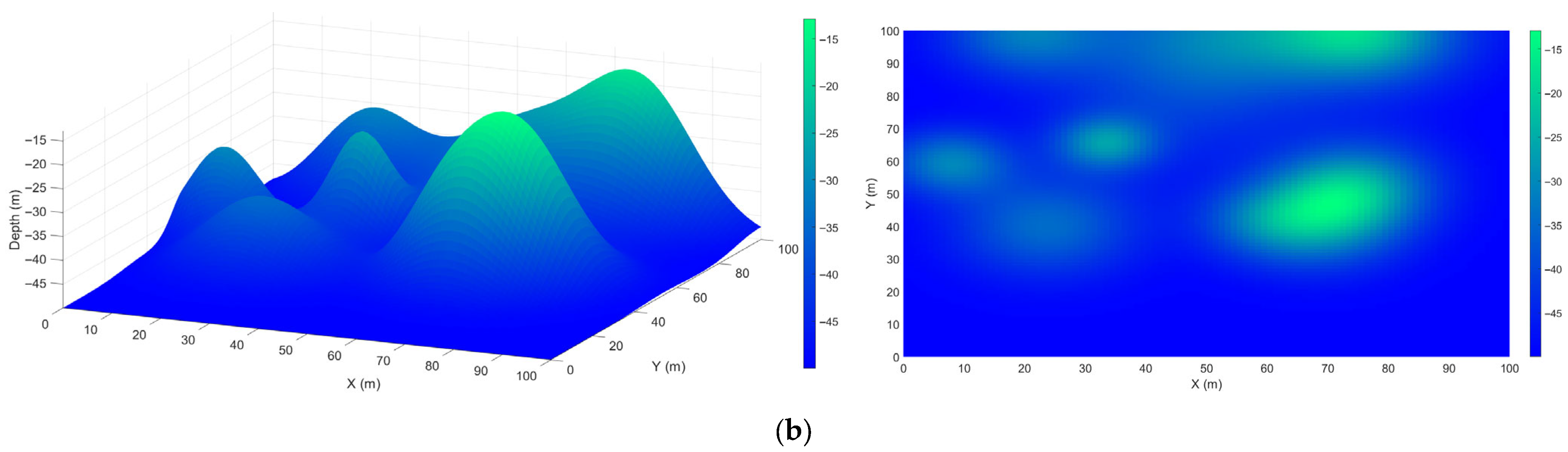

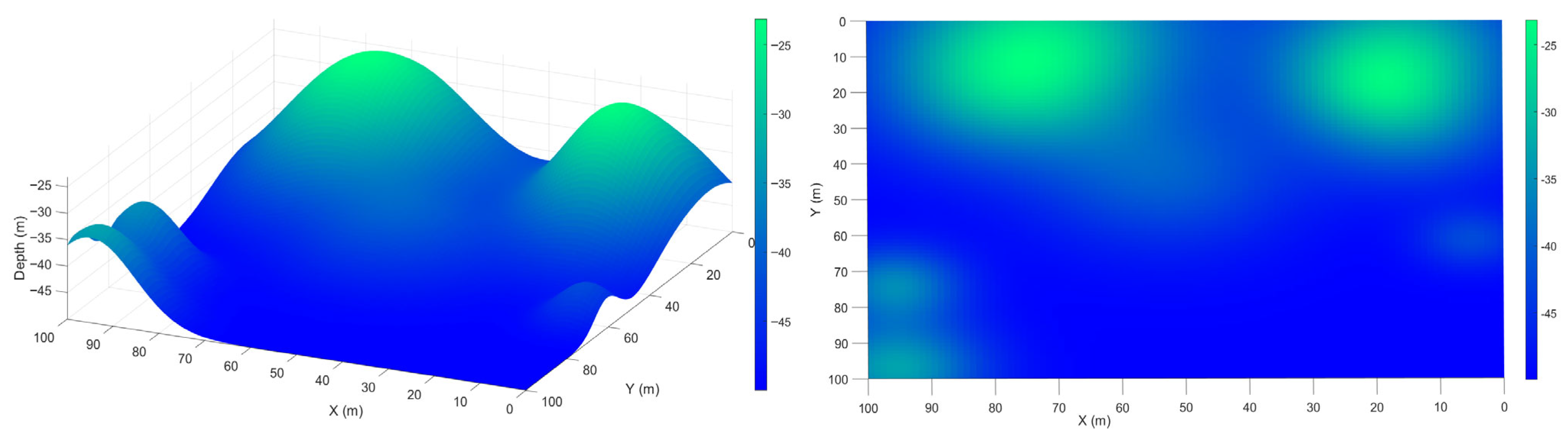

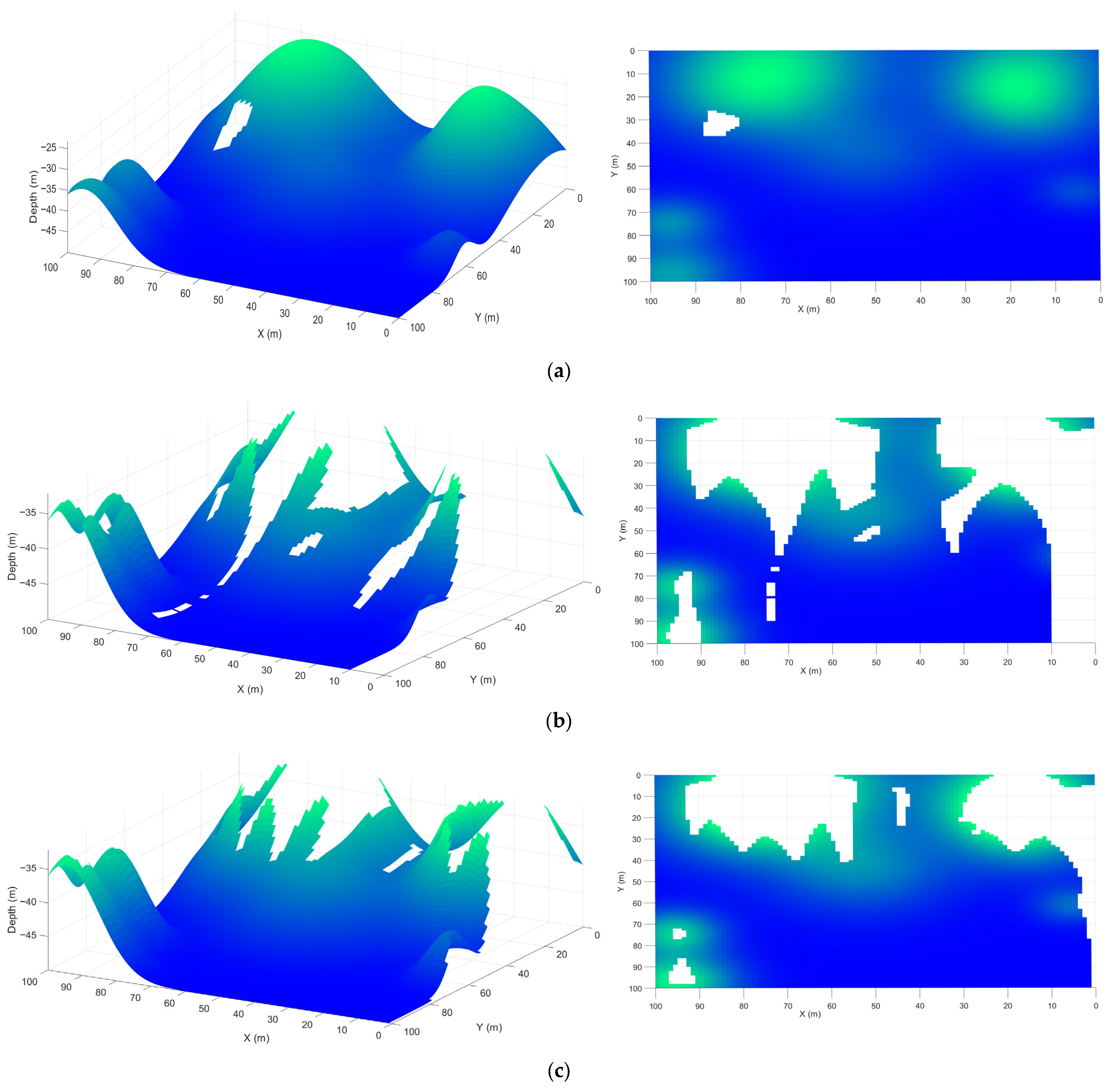

- Online occlusion-aware multi-AUV coverage framework: An online method for sweep coverage of unknown and uneven seafloors in video sensing tasks. The environment is modeled as a 2.5D elevation grid in which depths are progressively revealed through sensing. An occlusion-aware sensor model ensures that only cells that lie within the FoV and are actually visible (not occluded by terrain) contribute to both the terrain estimate and the coverage map, enabling safe, terrain-aware coverage expansion.
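The occlusion-aware sensing idea in the first contribution can be illustrated with a minimal Python sketch. It is not the authors' sensor model: it assumes elevations measured upward (z up), a downward-looking camera approximated by a cone with half-angle `half_angle` and maximum range `max_range`, an AUV positioned over the grid, and a line-of-sight test that samples the ray from the AUV to each cell centre against the 2.5D grid. The names `line_of_sight` and `sense` are hypothetical.

```python
import math

import numpy as np


def line_of_sight(terrain, cell_size, auv, cell, samples_per_cell=2):
    """Return True if the ray from the AUV to the centre of `cell` clears the terrain.
    Assumes the AUV's (x, y) lies over the grid, so every sample maps to a valid cell."""
    ax, ay, az = auv
    ti, tj = cell
    tx, ty = (ti + 0.5) * cell_size, (tj + 0.5) * cell_size
    tz = terrain[ti, tj]
    n = max(1, int(samples_per_cell * math.hypot(tx - ax, ty - ay) / cell_size))
    for k in range(1, n):  # interior samples only (the endpoints are the AUV and the target)
        s = k / n
        x, y, z = ax + s * (tx - ax), ay + s * (ty - ay), az + s * (tz - az)
        i, j = int(x // cell_size), int(y // cell_size)
        if (i, j) != (ti, tj) and terrain[i, j] > z:
            return False  # terrain rises above the ray: the target cell is occluded
    return True


def sense(terrain, elev_est, covered, cell_size, auv, half_angle, max_range):
    """Hypothetical occlusion-aware sensing step. `terrain` is the simulator's ground
    truth; only cells inside the FoV cone, within range and in line of sight are copied
    into the elevation estimate and marked covered. Returns the number of new cells."""
    ax, ay, az = auv
    newly_covered = 0
    for i in range(terrain.shape[0]):
        for j in range(terrain.shape[1]):
            dz = az - terrain[i, j]
            if dz <= 0:
                continue  # cell surface at or above the vehicle
            horiz = math.hypot((i + 0.5) * cell_size - ax, (j + 0.5) * cell_size - ay)
            if horiz > dz * math.tan(half_angle) or math.hypot(horiz, dz) > max_range:
                continue  # outside the FoV cone or beyond sensing range
            if line_of_sight(terrain, cell_size, auv, (i, j)):
                elev_est[i, j] = terrain[i, j]
                if not covered[i, j]:
                    covered[i, j] = True
                    newly_covered += 1
    return newly_covered


# Usage: flat floor with a tall ridge at row 10; the AUV hovers over row 2.
terrain = np.zeros((20, 5))
terrain[10, :] = 8.0
elev_est = np.full(terrain.shape, np.nan)  # NaN marks still-unknown elevation
covered = np.zeros(terrain.shape, dtype=bool)
n = sense(terrain, elev_est, covered, 1.0, (2.5, 2.5, 10.0), math.radians(80), 100.0)
print(n)  # rows 0-10 are seen; rows behind the ridge stay unknown
```

Sampling the ray at half-cell spacing keeps the test short; an exact grid traversal (for example a 2D DDA) would avoid skipping thin obstacles.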

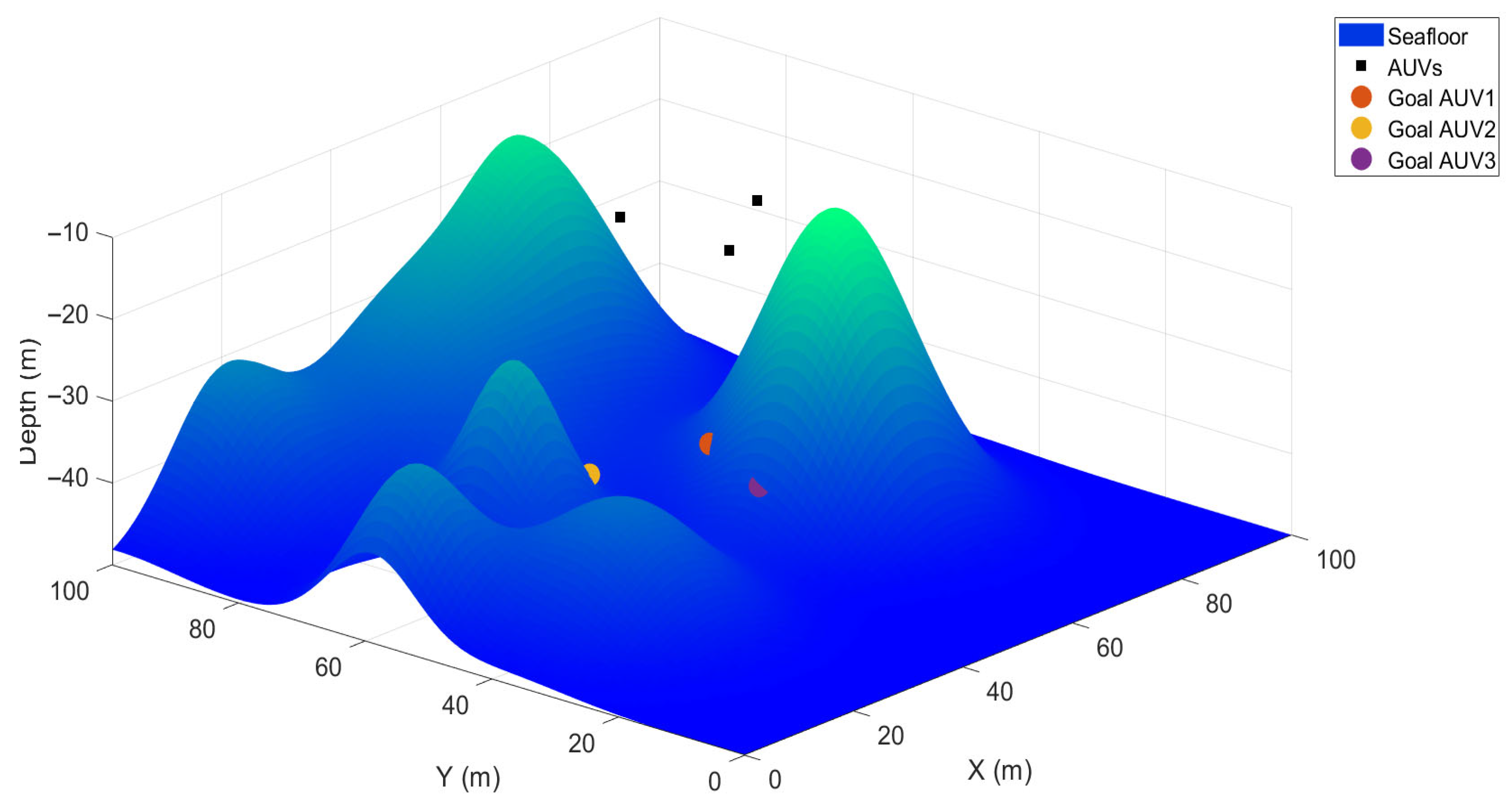

- Goal generation and assignment: Frontier cells, located at the boundary between explored and unexplored regions, are extracted as candidate goals. Each AUV is assigned a nearby unallocated frontier using a greedy nearest-frontier rule with a spacing constraint; this distributes the fleet without centralized optimization and requires only pose, goal, and map broadcasts. Safe goal altitudes are then computed from the nearest known terrain estimates, ensuring that the FoV footprint remains within sensing range and that the terrain clearance and depth limits are satisfied.

- Adaptive trajectory tracking and termination: A short-horizon MPC generates trajectories that balance goal progress, terrain-clearance safety, and actuation limits. Each AUV keeps its current goal until it comes within the goal-reaching tolerance, after which it is reassigned. The mission terminates once the global coverage ratio exceeds a predefined threshold.
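The goal-generation and assignment contribution can be sketched as follows, under stated assumptions rather than the paper's exact definitions: a frontier is taken to be an uncovered cell with at least one covered 4-neighbour; AUVs without a goal pick, in index order, the nearest frontier that is not already allocated and lies at least `min_spacing` from every goal currently held; and the goal height is the nearest known terrain elevation plus a desired altitude clamped to a clearance/range band, then to a depth band. All function and parameter names (`min_spacing`, `h_des`, `h_clear`, `h_range`, `z_lo`, `z_hi`) are hypothetical.

```python
import math

import numpy as np

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def frontier_cells(covered):
    """Uncovered cells that touch at least one covered cell (4-connectivity)."""
    nx, ny = covered.shape
    frontiers = []
    for i in range(nx):
        for j in range(ny):
            if not covered[i, j] and any(
                0 <= i + di < nx and 0 <= j + dj < ny and covered[i + di, j + dj]
                for di, dj in NEIGHBOURS
            ):
                frontiers.append((i, j))
    return frontiers


def assign_goals(positions, goals, frontiers, cell_size, min_spacing):
    """Greedy nearest-frontier assignment with a goal-spacing constraint.
    positions: {auv_id: (x, y)}; goals: {auv_id: (i, j) or None}, updated in place."""
    def centre(c):
        return ((c[0] + 0.5) * cell_size, (c[1] + 0.5) * cell_size)

    for k in sorted(positions):
        if goals.get(k) is not None:
            continue  # the AUV keeps its current goal until it is reached
        held = {g for g in goals.values() if g is not None}
        taken = [centre(g) for g in held]
        best, best_d = None, math.inf
        for f in frontiers:
            fx, fy = centre(f)
            if f in held or any(math.hypot(fx - gx, fy - gy) < min_spacing for gx, gy in taken):
                continue  # already allocated, or too close to another AUV's goal
            d = math.hypot(fx - positions[k][0], fy - positions[k][1])
            if d < best_d:
                best, best_d = f, d
        goals[k] = best  # stays None if no admissible frontier exists
    return goals


def goal_height(elev_est, cell, h_des, h_clear, h_range, z_lo, z_hi):
    """Nearest known terrain elevation + altitude clamped to [h_clear, h_range],
    then clamped to the depth band [z_lo, z_hi]. Assumes at least one known cell."""
    known = np.argwhere(~np.isnan(elev_est))
    d2 = ((known - np.asarray(cell)) ** 2).sum(axis=1)
    ni, nj = known[int(np.argmin(d2))]
    h = min(max(h_des, h_clear), h_range)
    return float(min(max(elev_est[ni, nj] + h, z_lo), z_hi))


# Usage: the first two rows have been sensed at 30 m depth (z up, metres).
covered = np.zeros((6, 6), dtype=bool)
covered[:2, :] = True
elev_est = np.full((6, 6), np.nan)
elev_est[:2, :] = -30.0
fronts = frontier_cells(covered)
goals = assign_goals({0: (1.0, 0.5), 1: (1.0, 1.0)}, {0: None, 1: None}, fronts, 1.0, 2.5)
print(goals, goal_height(elev_est, goals[1], 5.0, 2.0, 8.0, -100.0, -10.0))
```

Because each AUV only needs the other vehicles' current goals to apply the spacing check, a rule of this kind is consistent with distributing the fleet through pose, goal, and map broadcasts rather than a centralized optimizer.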

1.2. Related Work

1.3. Article Organization

2. Materials and Methods

2.1. System Model

2.2. Problem Statement

2.3. Proposed Solution

Algorithm 1. Occlusion-Aware Multi-AUV Sweep Coverage

| Step | Operation |
|---|---|
| Inputs | Number of AUVs; grid; camera parameters; safety parameters; target coverage; control limits; prediction horizon; sampling period; footprint scale; goal-reaching tolerance |
| Outputs | Coverage map; elevation estimate; coverage history; hitting time |
| 1 | Init: … for … |
| 2 | while … do |
| 3 | Sense and update (per AUV): |
| 4 | for each … do |
| 5 | if … then |
| 6 | …; … |
| 7 | end if |
| 8 | end for |
| 9 | Frontiers: |
| 10 | … |
| 11 | Assign goals and altitude: |
| 12 | for each AUV where … do |
| 13 | …, s.t. … |
| 14 | if … exists then |
| 15 | … |
| 16 | … |
| 17 | …; … |
| 18 | … |
| 19 | … |
| 20 | end if |
| 21 | end for |
| 22 | Track and trigger: |
| 23 | for each AUV with … do |
| 24 | solve MPC over the prediction horizon; apply the first input |
| 25 | if … then |
| 26 | end if |
| 27 | end for |
| 28 | … |
| 29 | end while |
| 30 | Return: …; …; … |
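For the track-and-trigger steps of Algorithm 1, the sketch below replaces the paper's MPC solver with an exhaustive search over a small discretised input set. That is enough to show the receding-horizon structure: predict over the horizon with a vehicle model, score goal progress, terrain clearance, and control effort, apply only the first input, release the goal once it is within tolerance, and stop the mission once the coverage ratio reaches the target. The kinematic model (surge speed, yaw rate, heave rate, z up), the cost weights, the input sets, and all function names are assumptions, not values or APIs from the paper.

```python
import itertools
import math

import numpy as np


def step(state, u, r, w, dt):
    """Hypothetical kinematic model: state (x, y, z, psi); surge u, yaw rate r, heave w."""
    x, y, z, psi = state
    psi += r * dt
    return (x + u * math.cos(psi) * dt, y + u * math.sin(psi) * dt, z + w * dt, psi)


def mpc_first_input(state, goal, terrain_height, horizon=3, dt=1.0,
                    u_set=(0.5, 1.0), r_set=(-0.3, 0.0, 0.3), w_set=(-0.5, 0.0, 0.5),
                    h_clear=2.0, w_goal=1.0, w_clear=100.0, w_effort=0.1):
    """Exhaustive-search MPC over a discretised input set (a stand-in for a continuous
    solver); the bounded input sets play the role of actuation limits."""
    inputs = list(itertools.product(u_set, r_set, w_set))
    best_cost, best_first = math.inf, None
    for seq in itertools.product(inputs, repeat=horizon):
        s, cost = state, 0.0
        for u, r, w in seq:
            s = step(s, u, r, w, dt)
            cost += w_goal * math.dist(s[:3], goal)                # goal progress
            margin = s[2] - terrain_height(s[0], s[1]) - h_clear
            cost += w_clear * max(0.0, -margin) ** 2               # clearance violation
            cost += w_effort * (r * r + w * w)                     # actuation effort
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first  # receding horizon: only the first input is applied


def goal_reached(state, goal, tol):
    return math.dist(state[:3], goal) <= tol


def mission_complete(covered, target_ratio):
    """Terminate once the global coverage ratio reaches the target."""
    return float(np.mean(covered)) >= target_ratio


def flat(x, y):
    return -30.0  # hypothetical flat seafloor at 30 m depth


# Usage: track a goal at the vehicle's own depth until it is within tolerance.
state, goal = (0.0, 0.0, -20.0, 0.0), (5.0, 0.0, -20.0)
for it in range(10):
    u, r, w = mpc_first_input(state, goal, flat)
    state = step(state, u, r, w, 1.0)
    if goal_reached(state, goal, tol=1.0):
        break  # goal reached: release it so the AUV can be reassigned
print(it + 1, state[:3])
```

Exhaustive search grows as (number of input combinations)^horizon, so it is only practical for very short horizons; a gradient-based or sampling-based solver would be the usual choice in practice.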

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUV | Autonomous Underwater Vehicle |
| FoV | Field of View |
| MPC | Model Predictive Control |
| LoS | Line of Sight |
| CPP | Coverage Path Planning |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Almuzaini, T.S.; Savkin, A.V. Online Multi-AUV Trajectory Planning for Underwater Sweep Video Sensing in Unknown and Uneven Seafloor Environments. Drones 2025, 9, 735. https://doi.org/10.3390/drones9110735
