1. Introduction

In recent years, unmanned aerial vehicles (UAVs) have played a significant role in various military operations, including the Russia–Ukraine conflict, and have become a pivotal force in modern warfare [1]. Owing to their affordability, rapid deployment, and high maneuverability, UAVs have been widely applied in surveillance [2], search [3], communication [4], and transportation [5]. Nevertheless, an individual UAV is inherently limited by restricted payload capacity, short endurance, and constrained onboard functionality [6], making it challenging to accomplish diverse missions in complex environments. In contrast, multiple UAVs can achieve enhanced flexibility, robustness, and broader task coverage through cooperative sensing, distributed decision-making, and task allocation [7], thus becoming a research frontier in intelligent control and swarm intelligence.

With the rapid development of deep reinforcement learning (DRL) [8], multi-agent deep reinforcement learning (MADRL) has been increasingly applied to UAV encirclement tasks, aiming to overcome the limitations of traditional rule-based and model predictive control approaches in high-dimensional, nonlinear, and adversarial environments [9]. Early studies such as Value-Decomposition Networks (VDN) [10] and QMIX [11] enabled multi-agent cooperation through value function decomposition, which proved effective in discrete action spaces. However, these approaches cannot be directly applied to physical systems with continuous control inputs, such as UAVs. To address this issue, Lowe et al. proposed Multi-Agent Deep Deterministic Policy Gradient (MADDPG) [12], which incorporates the centralized training and decentralized execution (CTDE) paradigm. This framework allows multiple agents to efficiently perform cooperative learning and dynamic coordination in continuous action spaces. Owing to these advantages, MADDPG has shown strong potential in UAV swarm control, formation, and encirclement. Subsequently, methods such as COMA [13] and MAPPO [14] were developed to improve credit assignment and stability; nevertheless, MADDPG remains one of the most representative and practical policy gradient methods in continuous UAV control.

Encirclement (pursuit–evasion) is a representative multi-agent cooperative control problem with critical applications in battlefield target interception, counter-UAV operations, and sensitive area defense [15]. The encirclement task requires a UAV swarm to jointly capture a maneuverable target in complex scenarios, which places high demands on maneuverability, task allocation, and global coordination. For example, Du et al. [16] applied curriculum learning with multirotor UAVs to achieve cooperative encirclement of intruders in urban airspace. Fang et al. [17] investigated the interception of a high-speed evader by distributed encircling UAVs, confirming the advantages of decentralized control in high-speed environments. Zhang et al. [18] proposed the GM-TD3 algorithm for intercepting high-speed targets with multiple fixed-wing UAVs in obstacle-rich environments, further enhancing stability and coordination.

In complex environments, a UAV swarm must achieve mission objectives while avoiding collisions with both environmental obstacles and teammates. Traditional obstacle avoidance methods, such as geometric approaches, artificial potential fields, and optimization-based control, are often limited in handling high-dimensional continuous state–action spaces and are prone to local optima in unstructured environments [19]. In contrast, MADRL-based methods have demonstrated stronger adaptability and flexibility. Xiang and Xie [20] introduced coupled rules in adversarial tasks, significantly improving the success rate of UAV swarms. Li et al. [21] proposed a dual-layer experience replay mechanism to enhance policy generalization. Zhao et al. [22] combined meta-learning with MADDPG and proposed the MW-MADDPG framework to strengthen group decision-making. Cao and Chen [23] incorporated attention mechanisms into MADDPG, accelerating convergence and improving decision accuracy. Xie et al. [24] addressed obstacle avoidance for multirotor UAV formations in environments with static and dynamic obstacles, demonstrating the adaptability of DRL in diverse scenarios.

In addition to task complexity, UAV platform characteristics are also critical to cooperative control. Multirotor UAVs, while offering hovering and high maneuverability, are limited in range and speed, making them unsuitable for high-speed encirclement. By contrast, fixed-wing UAVs have distinct advantages in endurance and velocity, making them more suitable for long-range cruising and fast target interception [25]. However, fixed-wing UAVs also introduce nonlinear and coupled challenges due to constraints such as minimum speeds, minimum turning radii, and inability to hover [26]. To address these challenges, DRL methods have been increasingly applied to fixed-wing UAV decision-making and control. For example, Yan et al. [27] proposed the PASCAL algorithm, which integrates curriculum learning with MADRL to achieve collision-free formation flight of large-scale fixed-wing UAV swarms. Yan et al. [28] further enhanced efficiency and scalability by employing attention-based DRL with parameter sharing. Zhuang et al. [29] studied penetration strategies for high-speed fixed-wing UAVs, validating the effectiveness of DRL in maneuvering tasks. Li et al. [30] combined DQN with PID control for autonomous longitudinal landing, demonstrating the potential of DRL in low-level flight dynamics control.

Despite these advances, several limitations remain. First, many studies focus on single-task scenarios, such as those that involve only encirclement or only obstacle avoidance, whereas real-world missions typically require UAVs to simultaneously execute multiple tasks, including encirclement, obstacle avoidance, and formation maintenance. Second, although curriculum learning has shown promise in improving training efficiency and convergence in MADRL [31], its application to fixed-wing UAV cooperative missions remains underexplored, particularly in terms of systematically integrating composite tasks with dynamic constraints.

Based on the above background, this paper proposes a composite deep reinforcement learning method with curriculum learning to address the challenges faced by fixed-wing UAV swarms in simultaneously executing encirclement and obstacle avoidance tasks in complex environments. The main contributions are summarized as follows:

Task modeling: A cooperative multi-agent fixed-wing UAV environment is developed, integrating encirclement and obstacle avoidance with realistic fixed-wing dynamics, maneuverable multirotor evaders, and laser-based local perception;

Method design: A composite MADDPG framework enhanced with curriculum learning is introduced, employing progressively staged tasks and reward optimization to accelerate convergence and enhance policy generalization;

Performance validation: Comparative experiments are conducted in a simulation environment, evaluating metrics such as encirclement duration, capture success rate, and obstacle avoidance safety rate, thereby verifying the effectiveness and superiority of the proposed method in complex scenarios.

2. Problem Formulation

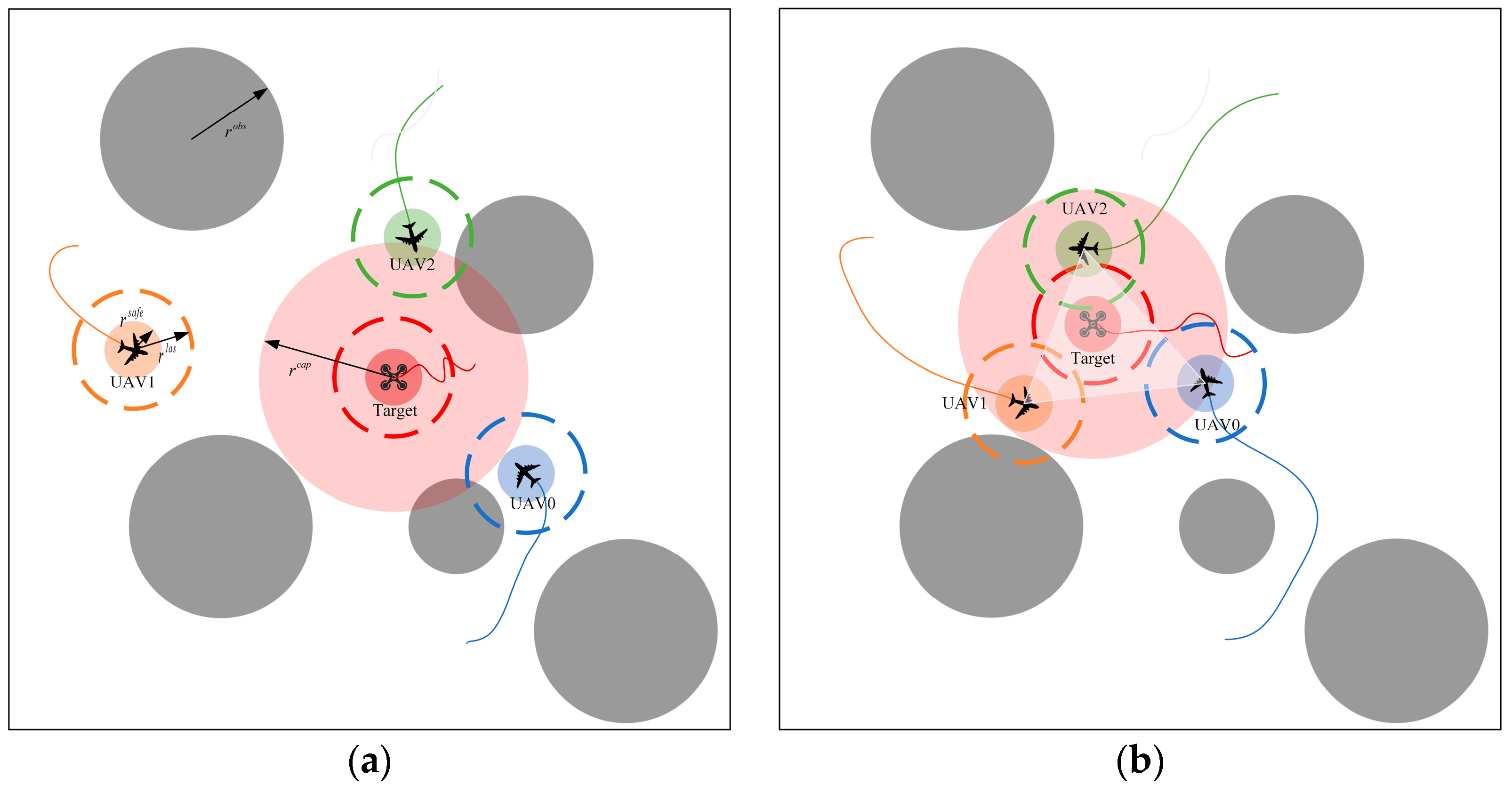

This paper investigates multi-agent deep reinforcement learning (MADRL) for encirclement tasks in obstacle-rich environments, focusing on a scenario where multiple fixed-wing UAVs encircle a multirotor UAV. At the beginning of each episode, the positions and orientations of the UAVs, as well as the positions and radii of the obstacles, are randomly initialized. The scenario setup is illustrated in Figure 1a. The pursuit is considered successful only if all fixed-wing encircling UAVs (UAVs_e), without colliding or leaving the bounded area, keep the multirotor target UAV (UAV_t) within the capture range for a specified number of steps, as illustrated in Figure 1b.

The environment is modeled as a rectangular area with dimensions $L_x \times L_y$. The UAVs_e swarm consists of $N$ agents, where the position of the $i$-th fixed-wing UAV is $\mathbf{p}_i$ and its safety radius is denoted as $r_s$. The UAV_t is defined as the target, whose position is represented as $\mathbf{p}_t$ with a capture radius $r_c$.

The environment also contains multiple clusters of trees and restricted zones, abstracted as static circular obstacles. The total number of obstacles is $M$, where the center of the $j$-th obstacle is denoted as $\mathbf{c}_j$ and its radius as $r_j$.

The perception of each UAV is modeled using laser beams. A total of $K$ beams are employed, with a detection range of $d_{\max}$. The direction of the $k$-th beam is denoted as $\theta_k$, and its detected distance is given by $d_k$.

2.1. UAV Modeling

Establishing the UAV motion equations involves coordinate transformations. The ground coordinate system $O_g x_g y_g z_g$ is fixed on the Earth, defined according to the North-East-Down convention, with its origin located at the takeoff point. The $x_g$ axis points north, the $z_g$ axis points vertically downward, and the $y_g$ axis is perpendicular to the $x_g z_g$ plane, pointing east.

The trajectory coordinate system $O_k x_k y_k z_k$ has its origin at the UAV’s center of mass. The $x_k$ axis is aligned with the velocity direction, $z_k$ lies in the vertical plane containing $x_k$, perpendicular to $x_k$, and points downward, while $y_k$ is perpendicular to the $x_k z_k$ plane and points to the right.

The primary forces acting on a fixed-wing UAV originate from engine thrust and aerodynamic forces. Aerodynamic forces depend on the deflection angles of ailerons, rudder, and elevator, as well as the UAV’s airspeed and body shape. Neglecting the three-dimensional attitude of the fixed-wing UAV, the acceleration in the trajectory coordinate system is simplified as:

where the flight path angle $\gamma$ is defined as the angle between the $x_k$ axis and the $x_g y_g$ plane, positive upward; thrust $T$ is generated by the engine along the body $x_b$ axis; drag $D$ opposes the velocity direction; gravity $G$ has magnitude $mg$ and points vertically downward; lift $L$ is generated by the wings and is perpendicular to the incoming airflow; lateral force $Y$ is generated by banking and yaw control surfaces. The coordinate system together with the force and attitude angles is illustrated in Figure 2.
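As a sketch only, the simplified acceleration described above can be written out under two assumptions that the text does not state explicitly: thrust acts along the velocity direction (the body and velocity frames coincide), and the trajectory-frame $z$ axis points downward. With $m$ the mass, $T$ thrust, $D$ drag, $L$ lift, $Y$ lateral force, and $\gamma$ the flight path angle (all symbols here are illustrative notation), one consistent form is:

$$
\begin{bmatrix} a_x \\ a_y \\ a_z \end{bmatrix}
= \frac{1}{m}
\begin{bmatrix} T - D - mg\sin\gamma \\ Y \\ mg\cos\gamma - L \end{bmatrix}
$$

Gravity contributes $-mg\sin\gamma$ along the velocity direction and $mg\cos\gamma$ along the downward normal, while lift acts against the downward normal, which is why it enters with a negative sign.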

In the simplified model, the velocity coordinate system coincides with the body coordinate system, and the flight altitude is assumed low, with the ground approximated as a plane. The transformation matrix from the trajectory coordinate system to the ground coordinate system is given by:

where the heading angle $\psi$ is defined as the angle between the projection of the $x_k$ axis onto the $x_g y_g$ plane and the $x_g$ axis, positive to the right, and $R_x$, $R_y$, $R_z$ are the rotation matrices about the $x$, $y$, and $z$ axes, respectively.

The UAV velocity can be expressed as:
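As an illustration, and assuming the trajectory-to-ground transformation is the yaw-then-pitch rotation $R_z(\psi) R_y(\gamma)$ applied to a velocity of magnitude $V$ along the trajectory $x$ axis, the North-East-Down velocity components can be sketched as follows. The function name `ned_velocity` and its argument names are hypothetical.

```python
import math


def ned_velocity(speed, gamma, psi):
    """Ground-frame (North-East-Down) velocity of a point mass.

    speed: airspeed magnitude V
    gamma: flight path angle in radians, positive when climbing
    psi:   heading angle in radians, measured from north, positive toward east
    """
    v_north = speed * math.cos(gamma) * math.cos(psi)
    v_east = speed * math.cos(gamma) * math.sin(psi)
    v_down = -speed * math.sin(gamma)  # climbing gives a negative down-rate
    return v_north, v_east, v_down
```

For level flight due north the whole speed appears in the first component; a pure climb appears as a negative third component because the ground $z$ axis points downward.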

Since the $z_g$ axis in the ground coordinate system points downward, which is opposite to the figure coordinate system $O_f x_f y_f z_f$ used for visualization in which the $z_f$ axis points upward, the final plotting requires the following transformation:

For multirotor UAVs, the kinematic equations in the figure coordinate system are directly employed for modeling.

To reduce computational complexity and highlight the core design of the control algorithm, all UAV dynamic models in this study are based on simplified assumptions, without considering actuator dynamics, wind field effects, or six-degree-of-freedom (6 DoF) coupling.

2.2. Constraint Settings and State Calculation

In reality, the magnitude of forces and the payload capacity of UAVs are limited, so velocity cannot change abruptly. In this study, the motion space is defined in terms of acceleration, and the acceleration vector is constrained as:

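$$
\mathbf{a} = \mathrm{clip}\left(\mathbf{a}_{\text{cmd}},\, \mathbf{a}_{\min},\, \mathbf{a}_{\max}\right)
$$

where $\mathbf{a}_{\text{cmd}}$ is the commanded acceleration and $\mathrm{clip}(\cdot)$ denotes the interval clipping function, which saturates the value to the boundary if it exceeds the upper or lower limits. The maximum and minimum accelerations along the three axes differ for fixed-wing UAVs, while they are identical for multirotor UAVs. The simplified computation of acceleration limits is given by:

where $\Delta t$ denotes the time step, $k_t$ the time scaling coefficient, $v_{\max}$ and $v_{\min}$ the maximum and minimum UAV speeds, respectively, and $R_{\min}$ the minimum turning radius.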

For multirotor UAVs, the minimum speed is 0. For fixed-wing UAVs, a certain forward flight speed is required, so the velocity range is limited. The speed magnitude is constrained as:

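$$
\lVert \mathbf{v} \rVert = \mathrm{sat}\left(\lVert \mathbf{v} \rVert,\, v_{\min},\, v_{\max}\right)
$$

where $\mathrm{sat}(\cdot)$ is the velocity saturation operator that limits the speed magnitude within $[v_{\min}, v_{\max}]$. The discrete-time position update is given by: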
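The constraint pipeline in this subsection (clip the acceleration, integrate the velocity, saturate the speed, update the position) can be sketched as below. This is a minimal illustration, not the paper's implementation: it treats all vectors in a single frame, uses a forward-Euler step with the updated velocity, and the names `clip` and `constrained_step` are hypothetical.

```python
import math


def clip(value, low, high):
    """Saturate a scalar to the interval [low, high]."""
    return max(low, min(high, value))


def constrained_step(pos, vel, acc_cmd, acc_min, acc_max, v_min, v_max, dt):
    """Advance one time step under acceleration and speed limits."""
    # 1) Clip each commanded acceleration component to its own limits.
    acc = [clip(a, lo, hi) for a, lo, hi in zip(acc_cmd, acc_min, acc_max)]
    # 2) Integrate the velocity over one time step.
    vel = [v + a * dt for v, a in zip(vel, acc)]
    # 3) Saturate the speed magnitude while keeping the direction.
    speed = math.sqrt(sum(v * v for v in vel))
    if speed > 0.0:  # direction is undefined at zero speed, so leave it unchanged
        scale = clip(speed, v_min, v_max) / speed
        vel = [v * scale for v in vel]
    # 4) Update the position with the saturated velocity.
    pos = [p + v * dt for p, v in zip(pos, vel)]
    return pos, vel
```

Setting `v_min = 0` reproduces the multirotor case, while a positive `v_min` prevents a fixed-wing agent from slowing below its minimum forward speed.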

2.3. Laser-Based Environment Perception

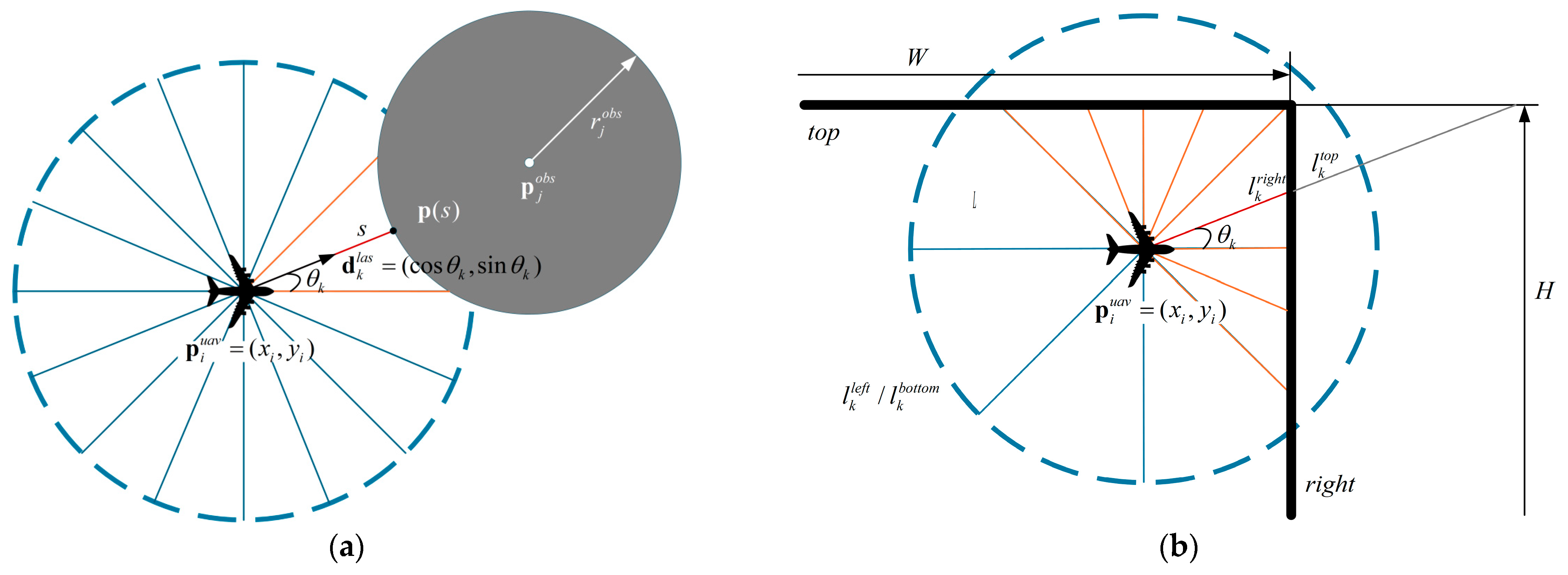

The core of radar-based detection is to acquire spatial information by analyzing the interaction between electromagnetic waves and targets or the environment. In this work, to ensure computational efficiency while maintaining reasonable positioning accuracy, the radar beam propagation and environmental obstacles are geometrically simplified, allowing real-time computation of beam lengths at different angles.

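The simplified model abstracts the radar-emitted electromagnetic waves as straight rays of length $d_{\max}$, divided into $K$ beams. Figure 3a shows the intersections of laser rays with a circular obstacle. Using analytic geometry, the intersection points between the $k$-th ray and the surface of the $j$-th obstacle are obtained by solving the corresponding system of Equation (9):

$$
\begin{cases}
\mathbf{q} = \mathbf{p} + t\,\mathbf{u}_k \\
\lVert \mathbf{q} - \mathbf{c}_j \rVert^2 = r_j^2
\end{cases}
\qquad (9)
$$

where $\mathbf{q}$ denotes the intersection point coordinates between the UAV laser ray and the circular obstacle, $\mathbf{p}$ is the UAV position, $\mathbf{c}_j$ and $r_j$ are the center and radius of the obstacle, $\mathbf{u}_k$ is the direction vector of the laser, and $t$ is the parameterized distance along the ray.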

For a rectangular environment with radar-detectable boundaries, the intersections of the ray with the top, bottom, left, and right boundaries are evaluated individually:

As an example, Figure 3b illustrates the intersection points with the top and right boundaries.

Since a ray may be intercepted by nearby obstacles first, the minimum distance is taken as the real-time ray length:
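The perception model of this subsection can be sketched in a few lines: each ray is intersected with every circular obstacle and with the four walls, and the shortest distance, capped at the detection range, is kept. The sketch assumes a 2D area $[0, \text{width}] \times [0, \text{height}]$, unit direction vectors, and beams spread evenly over 360°; these choices and all function names are illustrative assumptions.

```python
import math


def ray_circle_distance(origin, direction, center, radius):
    """Distance along a unit-direction ray to a circle, or inf if it misses."""
    ox, oy = origin[0] - center[0], origin[1] - center[1]
    dx, dy = direction
    b = ox * dx + oy * dy                    # half the linear coefficient in t
    c = ox * ox + oy * oy - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return math.inf                      # the ray line misses the circle
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):         # nearer root first
        if t >= 0.0:
            return t
    return math.inf                          # the circle lies behind the origin


def ray_boundary_distance(origin, direction, width, height):
    """Distance to the walls of [0, width] x [0, height] from a point inside."""
    x, y = origin
    dx, dy = direction
    best = math.inf
    if dx > 0.0:
        best = min(best, (width - x) / dx)   # right wall
    elif dx < 0.0:
        best = min(best, -x / dx)            # left wall
    if dy > 0.0:
        best = min(best, (height - y) / dy)  # top wall
    elif dy < 0.0:
        best = min(best, -y / dy)            # bottom wall
    return best


def laser_scan(origin, heading, n_beams, max_range, obstacles, width, height):
    """Beam lengths for n_beams rays; obstacles is a list of (center, radius)."""
    lengths = []
    for k in range(n_beams):
        angle = heading + 2.0 * math.pi * k / n_beams
        direction = (math.cos(angle), math.sin(angle))
        hits = [ray_circle_distance(origin, direction, c, r) for c, r in obstacles]
        wall = ray_boundary_distance(origin, direction, width, height)
        lengths.append(min([max_range, wall] + hits))
    return lengths
```

Because every candidate distance is computed in closed form, the cost per scan is proportional to the number of beams times the number of obstacles, which keeps the perception step cheap enough for training.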

3. Proposed Method

This section first introduces the basic modeling approach for multi-UAV collision avoidance, i.e., the Partially Observable Markov Game (POMG), and defines the state space, action space, and reward function within this framework. Subsequently, the application mechanism of Multi-Agent Deep Deterministic Policy Gradient (MADDPG), a representative framework in MADRL, is presented for collaborative pursuit and obstacle avoidance tasks.

Furthermore, the concept of Curriculum Learning (CL) is incorporated, employing a graded environment difficulty design and dynamic task scheduling to significantly enhance training stability and the generalization capability of learned policies. Based on this idea, a curriculum-learning-enhanced composite multi-agent deep reinforcement learning algorithm (MADRL_CL) is proposed.

This method not only effectively addresses collision avoidance and pursuit challenges of fixed-wing UAVs in highly dynamic target and complex obstacle environments, but also demonstrates advantages in collaboration efficiency and learning convergence.

3.1. MADRL

3.1.1. POMG

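The multi-UAV collaborative obstacle avoidance and pursuit problem is a typical cooperative MADRL task. It is commonly modeled as a POMG [32], formally defined as an 8-tuple:

$$
\langle \mathcal{N}, \mathcal{S}, \mathcal{A}, \mathcal{O}, \mathcal{R}, \mathcal{P}, \mathcal{Z}, \gamma \rangle
$$

where $\mathcal{N}$ denotes the set of agents; $\mathcal{S}$ is the joint state space; $\mathcal{A}$ is the joint action space; $\mathcal{O}$ is the joint observation space; $\mathcal{R}$ is the joint reward function; $\mathcal{P}$ is the state transition probability function; $\mathcal{Z}$ is the observation function; and $\gamma$ is the discount factor.

At each time step $t$, each agent $i$ receives a local observation $o_i^t$ from the environment and selects an action $a_i^t$ according to its policy. All agents act simultaneously without sequential dependencies. The joint action $\mathbf{a}^t$ drives the state transition $s^t \to s^{t+1}$, and the team receives a shared reward $r^t$. The objective is to find an optimal policy $\pi^*$ that maximizes the expected cumulative return $J(\pi)$ given the environment dynamics:

$$
\pi^* = \arg\max_{\pi} J(\pi), \qquad J(\pi) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{T} \gamma^{t} r^{t}\right]
$$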

3.1.2. State Space and Action Space

The state space of the $i$-th UAV is defined as $s_i = (s_{\text{env}}, s_{\text{uav}})$, where the environment state $s_{\text{env}}$ includes the boundaries and obstacle positions, and the UAV state $s_{\text{uav}}$ contains the UAV's position and velocity.

Since the environment is partially observable, the environment state is perceived by the UAV through laser-based detection, the states of other UAVs_e are obtained via inter-UAV communication, and the UAV_t’s state is provided by the ground station.

The observation of the $i$-th UAV_e is given by:

$$
o_i = \left[\, o_{\text{self}},\; o_{\text{team}},\; o_{\text{goal}},\; o_{\text{laser}} \,\right]
$$

where self-observation $o_{\text{self}}$ represents the UAV’s position and velocity; team observation $o_{\text{team}}$ represents the positions of other UAVs; goal observation $o_{\text{goal}}$ represents the distance and relative angle to the UAV_t; laser observation $o_{\text{laser}}$ represents the lengths of rays emitted in multiple directions.

The observation of the UAV_t is:

where enemy observation $o_{\text{enemy}}$ denotes the distances from each UAV_e to the target.

The action space of fixed-wing UAVs is defined in the trajectory coordinate system as:

The action space of the multirotor UAV is defined in the figure coordinate system as:

Furthermore, since different state variables may have different dimensions and ranges, directly using them can lead to unstable or inefficient learning. In deep reinforcement learning, state and observation spaces are normalized as a preprocessing step, calculated as:

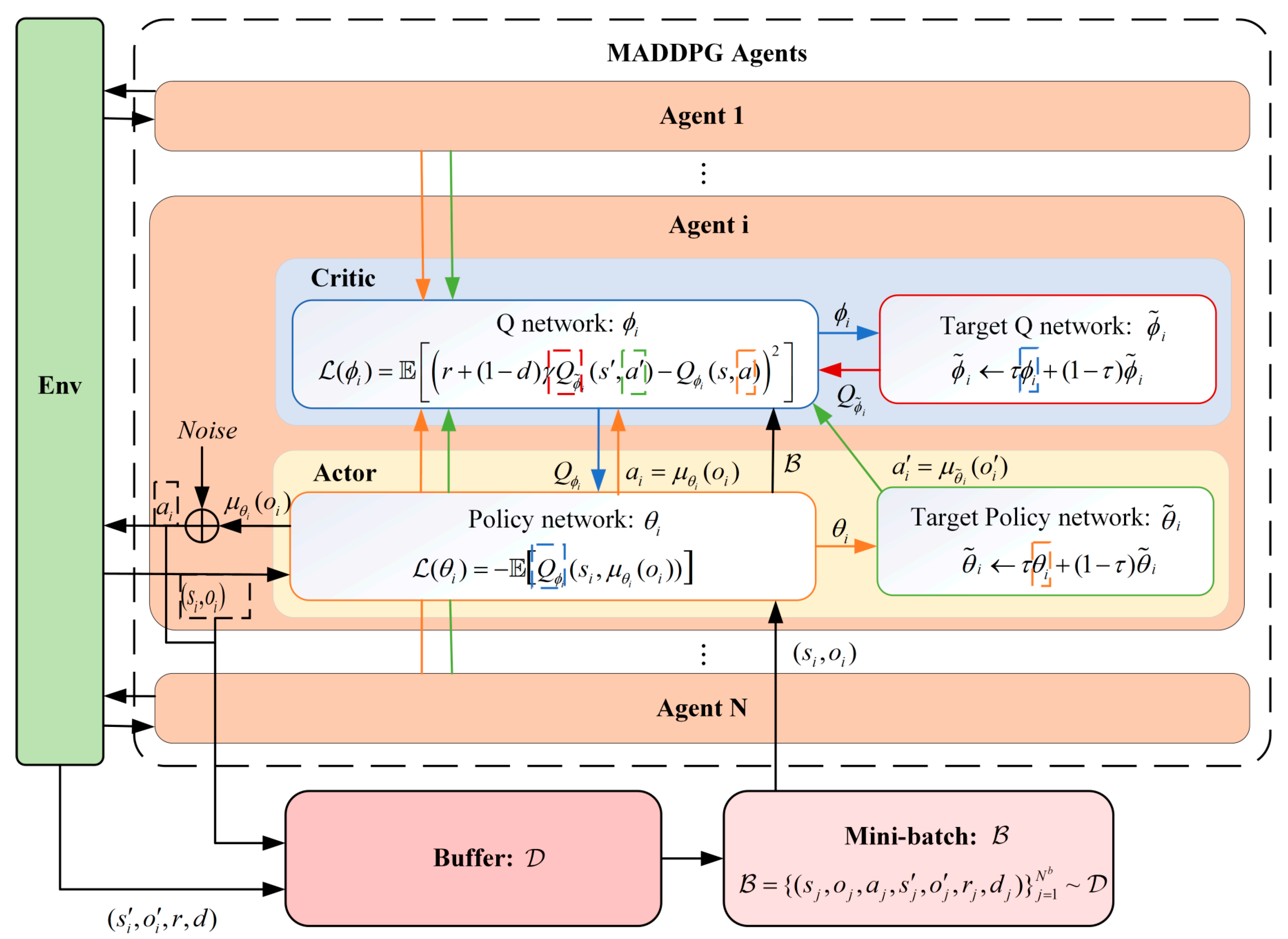

3.1.3. MADDPG

MADDPG is a typical actor–critic based multi-agent reinforcement learning method, extending single-agent DDPG to multi-agent scenarios. The core idea is that each agent’s Critic can access information from all other agents, enabling centralized training with decentralized execution (CTDE). Specifically, during training, a centralized Critic with global observation guides the Actor’s learning to improve stability, while during execution, only the Actor with local observation is used, aligning with real-world conditions.

The Actor network, i.e., the policy network, is defined as $a_i = \mu_i(o_i; \theta_i^{\mu})$, indicating that the Actor selects action $a_i$ based solely on its own observation $o_i$. The Critic network evaluates the value $Q_i(\mathbf{o}, \mathbf{a}; \theta_i^{Q})$ using the joint observation $\mathbf{o}$ and joint action $\mathbf{a}$ of all agents.

Since the update relies on bootstrapping, directly using the online network parameters to compute target values would couple the target function with the network parameters being updated. This can lead to instability or divergence during training. To mitigate this, a target network mechanism is introduced. The target Actor and target Critic networks are updated via a soft update:

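$$
\theta_i^{Q'} \leftarrow \tau\,\theta_i^{Q} + (1-\tau)\,\theta_i^{Q'}, \qquad
\theta_i^{\mu'} \leftarrow \tau\,\theta_i^{\mu} + (1-\tau)\,\theta_i^{\mu'}
$$

where $\tau$ is the update rate, and $\theta_i^{Q'}$ and $\theta_i^{\mu'}$ denote the parameters of the target Q-network and target policy network, respectively.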

The Critic network of agent

is updated by minimizing the temporal-difference (TD) loss:

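$$
\mathcal{L}(\theta_i^{Q}) = \mathbb{E}_{(\mathbf{o}, \mathbf{a}, r_i, \mathbf{o}', d) \sim \mathcal{D}}\left[\left(Q_i(\mathbf{o}, \mathbf{a}; \theta_i^{Q}) - y_i\right)^2\right], \qquad
y_i = r_i + \gamma\,(1-d)\,Q_i'\left(\mathbf{o}', \mathbf{a}'; \theta_i^{Q'}\right)\Big|_{a_j' = \mu_j'(o_j')}
$$

where $\mathcal{D}$ is the experience replay buffer, and $d$ indicates episode termination. This minimizes the mean squared error between the predicted value and the TD target, enabling the Critic to learn accurate value estimates.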
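The two update rules described above, the soft target update and the TD target with a termination flag, reduce to a few lines of arithmetic. The sketch below uses plain Python lists in place of network parameters so that it stays framework-independent; the function names are hypothetical and the parameter vectors are stand-ins for real network weights.

```python
def td_target(reward, next_q, done, gamma):
    """One-step TD target; bootstrapping is switched off on terminal transitions."""
    return reward + gamma * (1.0 - float(done)) * next_q


def critic_loss(q_values, targets):
    """Mean squared TD error over a minibatch."""
    errors = [(q - y) ** 2 for q, y in zip(q_values, targets)]
    return sum(errors) / len(errors)


def soft_update(target_params, online_params, tau):
    """Move each target parameter a fraction tau toward its online counterpart."""
    return [tau * w + (1.0 - tau) * w_t
            for w, w_t in zip(online_params, target_params)]
```

With a small update rate the target parameters change slowly, so the TD target stays nearly fixed while the online Critic is being fitted to it, which is the decoupling the target network is meant to provide.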

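Simultaneously, the Actor network is optimized using the deterministic policy gradient:

$$
\nabla_{\theta_i^{\mu}} J = \mathbb{E}_{(\mathbf{o}, \mathbf{a}) \sim \mathcal{D}}\left[\nabla_{\theta_i^{\mu}} \mu_i(o_i)\, \nabla_{a_i} Q_i(\mathbf{o}, \mathbf{a}; \theta_i^{Q})\Big|_{a_i = \mu_i(o_i)}\right]
$$

During action selection, exploration noise is added in a decaying manner during training, while deterministic outputs are used during evaluation. The final action executed in the training phase is:

$$
a_i = \mu_i(o_i; \theta_i^{\mu}) + \epsilon\,\mathcal{N}
$$

where $\mathcal{N}$ is a random noise within a certain range and $\epsilon$ is the decay scaling factor.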
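A minimal sketch of decaying exploration noise follows. The text does not specify the noise distribution or the decay schedule, so the uniform noise in $[-1, 1]$, the exponential decay with a floor, and the names `decayed_scale` and `noisy_action` are all assumptions made for illustration.

```python
import random


def decayed_scale(initial, final, decay, episode):
    """Exponentially decayed noise scale with a lower bound (assumed schedule)."""
    return max(final, initial * decay ** episode)


def noisy_action(mu, scale, low, high, rng):
    """Add scaled uniform noise to a deterministic action and clip to bounds."""
    return [max(low, min(high, m + scale * rng.uniform(-1.0, 1.0))) for m in mu]
```

During evaluation the same function can be called with `scale = 0.0`, which returns the clipped deterministic action unchanged.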

The overall process is illustrated in Figure 4.

3.2. Curriculum Learning Strategy Design

During the pursuit process, the core challenge for fixed-wing UAVs compared to multirotor UAVs lies in their flight characteristics: they cannot hover or fly backward when encountering obstacles. Determining how to perform deceleration and turning control in advance based on radar detection distances becomes a critical challenge for deep reinforcement learning algorithms.

Due to the size of the pursuit area, obstacle distribution, and dynamic interference from other UAVs, collisions may occur, potentially terminating training episodes prematurely. If highly complex environments are used at the initial stage, UAVs may adopt local suboptimal strategies such as hovering in place to avoid severe collision penalties. Frequent collisions during exploration also hinder the search for global optimal solutions, significantly increasing training difficulty. Therefore, a progressively increasing task and environment complexity strategy is more reasonable, allowing UAV agents to gradually optimize their parameters and eventually master effective pursuit control policies.

To incrementally enhance task difficulty and generalization, we employ a CL mechanism to design the pursuit task in a staged manner. By gradually introducing obstacles, target mobility, and spatial constraints, the stability and convergence efficiency of training are effectively improved.

The task is divided into four curriculum levels, Level 0 to Level 3:

Level 0: basic environment, no obstacles, stationary target, small initial position range, large capture radius;

Level 1: introduces obstacles, enlarges the initial range, reduces the capture radius;

Level 2: enables the UAV_t to move, further enlarges the initial range, reduces the capture radius again;

Level 3: the initial range almost covers the entire scene, with the smallest capture radius.

To reduce training steps, a performance-driven curriculum scheduler is introduced. This mechanism dynamically adjusts task difficulty based on task performance and training progress. The system adaptively increases the curriculum level according to the pursuit success rate over a recent window of episodes, calculated as:

$$
\eta = \frac{1}{W} \sum_{e=1}^{W} \mathbb{1}\left[\text{episode } e \text{ is successful}\right]
$$

where $W$ is the number of recent episodes in the sliding window, and an episode is considered successful if all UAVs_e enter the UAV_t’s capture range and remain within it for a specified number of steps. If any UAV_e leaves the capture range, the step count is reset to zero.
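The success criterion above amounts to a consecutive-step counter that resets whenever any pursuer is outside the capture range. The sketch below assumes the per-step distances from each UAV_e to the UAV_t are already available and ignores collisions; the function names are hypothetical.

```python
def update_capture_counter(distances, capture_radius, counter):
    """Increment the counter if every pursuer is inside the capture range, else reset."""
    if all(d <= capture_radius for d in distances):
        return counter + 1
    return 0


def episode_success(distance_log, capture_radius, required_steps):
    """True if the pursuers hold the capture range for required_steps consecutive steps."""
    counter = 0
    for distances in distance_log:
        counter = update_capture_counter(distances, capture_radius, counter)
        if counter >= required_steps:
            return True
    return False
```

Requiring consecutive steps, rather than a cumulative count, means a fixed-wing pursuer that overshoots the target and flies out of range cannot be credited for a fly-by.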

To prevent premature level promotion, a dual-condition mechanism is adopted. Level switching occurs only if both the performance threshold and training quantity conditions are satisfied. The threshold condition requires a minimum experience: the number of episodes in the current stage must be at least twice the check window, and the cumulative steps must exceed twice the replay buffer capacity. The pseudocode is shown in Algorithm 1.

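| Algorithm 1: MADDPG_CL (Multi-Agent Deep Deterministic Policy Gradient with Curriculum Learning) |
| Input: Level = 0, number of agents $N$, hyperparameters. |
| Output: Environmental rewards, task success rate. |
| 1. | Initialize replay buffer $\mathcal{D}$ |
| 2. | for each agent $i = 1, \dots, N$ do |
| 3. | Initialize actor, critic, target actor, target critic networks with parameters $\theta_i^{\mu}$, $\theta_i^{Q}$, $\theta_i^{\mu'}$, $\theta_i^{Q'}$ |
| 4. | end for |
| 5. | for each episode do |
| 6. | Initialize environment and agent states |
| 7. | for each time step $t$ do |
| 8. | Select action $a_i$ for each agent from its observation $o_i$ via actor network |
| 9. | Environment updates, returning reward $r$ and next state $s'$ |
| 10. | Store transition $(s, a, r, s', d)$ into $\mathcal{D}$ |
| 11. | if all UAVs_e are within the capture range then increment the capture step count, otherwise reset it |
| 12. | end if |
| 13. | if the episode has terminated then break |
| 14. | end if |
| 15. | $s \leftarrow s'$ |
| 16. | for each agent $i = 1, \dots, N$ do |
| 17. | Sample a minibatch from $\mathcal{D}$ |
| 18. | Update the critic by minimizing the TD loss |
| 19. | Update the actor using the deterministic policy gradient |
| 20. | Soft-update the target networks |
| 21. | end for |
| 22. | end for |
| 23. | Compute the success rate over the sliding window |
| 24. | if the success rate threshold and the minimum experience conditions are satisfied then |
| 25. | Level $\leftarrow$ Level + 1 |
| 26. | end if |
| 27. | end for |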
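The dual-condition promotion rule described in this subsection can be sketched as a small scheduler. The class below is an illustration rather than the authors' code: the class name, the `threshold` parameter, and the choice to count cumulative steps per stage (the text does not say whether they are counted per stage or overall) are assumptions.

```python
from collections import deque


class CurriculumScheduler:
    """Promote the curriculum level when performance and experience both suffice."""

    def __init__(self, window, threshold, buffer_capacity, max_level=3):
        self.window = window
        self.threshold = threshold
        self.buffer_capacity = buffer_capacity
        self.max_level = max_level
        self.level = 0
        self._reset_stage()

    def _reset_stage(self):
        self.results = deque(maxlen=self.window)  # sliding window of 0/1 outcomes
        self.stage_episodes = 0
        self.stage_steps = 0

    def success_rate(self):
        return sum(self.results) / len(self.results) if self.results else 0.0

    def record_episode(self, success, steps):
        """Log one finished episode and return the (possibly promoted) level."""
        self.results.append(1 if success else 0)
        self.stage_episodes += 1
        self.stage_steps += steps
        enough_experience = (
            self.stage_episodes >= 2 * self.window
            and self.stage_steps > 2 * self.buffer_capacity
        )
        if (self.level < self.max_level and enough_experience
                and self.success_rate() >= self.threshold):
            self.level += 1
            self._reset_stage()  # start the new stage with fresh statistics
        return self.level
```

Resetting the window after each promotion prevents successes earned at an easier level from counting toward the next promotion.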

3.3. Reward Function Design

The reward function is the core mechanism guiding agents to learn effective pursuit strategies. It needs to account for multiple aspects of UAV behavior, including approaching the target, collision avoidance, and cooperative encirclement. In pursuit tasks, the primary capture reward is typically sparse, so shaping dense intermediate rewards is introduced to provide continuous feedback and prevent learning stagnation.

To keep the sparse and dense rewards within a reasonable scale, the terminal discounted reward is computed as:

where $\gamma$ is the discount factor, $T$ is the episode length, $r_{\text{sparse}}$ represents the sparse terminal reward, and $r_{\text{dense}}$ represents the continuous reward.

The terminal discounted reward also adjusts the relative weighting of different behavioral rewards and differentiates the reward logic between UAVs_e and UAV_t, forming an adversarial learning framework. The per-step reward $r_t$ is defined as:

$$
r_t = \sum_{k} \lambda_k\, \hat{r}_k
$$

where $\lambda_k$ is a reward coefficient that can be positive or negative, and $\hat{r}_k$ is the normalized reward for each behavior.

3.3.1. Reward Function for Encircling UAVs

In collision avoidance and pursuit tasks, multiple UAVs_e need to autonomously plan optimal trajectories to efficiently encircle the UAV_t. This requires simultaneously satisfying safety constraints, time-optimality, and cooperative encirclement objectives. The total reward for UAVs_e consists of collision penalty, distance reward, distance rate reward, encirclement reward, completion reward, and time penalty, formulated as:

The components are defined as follows:

Collision penalty: $\lambda_{\text{col}}$ is the collision penalty coefficient, and $I_{\text{col}}$ is the collision indicator.

Distance reward: $\lambda_{d}$ is the distance reward coefficient, and $d_i$ is the current distance between the UAV_e and the UAV_t.

Distance rate reward: $\lambda_{v}$ is the rate coefficient, and $\Delta d_i$ is the distance change from the previous step.

Encirclement reward: $S_1$, $S_2$, $S_3$ are the areas of the triangles formed by the UAV_t and any two UAVs_e, and $S$ is the area of the triangle formed by the three UAVs_e.

Time penalty: $\lambda_{t}$ is the negative sum of the other positive reward coefficients, and $I_{t}$ is the time step indicator.

Capture reward: $\lambda_{\text{cap}}$ is the reward coefficient for entering the capture radius.

Completion reward: $\lambda_{\text{done}}$ is the sparse reward coefficient for completing the encirclement objective when all UAVs_e remain within the capture radius for the required steps.
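The geometric quantities behind the encirclement reward can be computed with the shoelace formula. The exact reward expression is not reproduced here, so the ratio below is only one plausible way to combine the areas: it equals 1 when the target lies inside the triangle of three pursuers and falls below 1 as the target moves outside. The function names are hypothetical.

```python
def triangle_area(a, b, c):
    """Area of a 2D triangle from its vertices (shoelace formula)."""
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def encirclement_ratio(pursuers, target):
    """Pursuer-triangle area divided by the sum of the three target sub-triangles."""
    p1, p2, p3 = pursuers
    whole = triangle_area(p1, p2, p3)
    parts = (triangle_area(target, p1, p2)
             + triangle_area(target, p2, p3)
             + triangle_area(target, p3, p1))
    if parts == 0.0:
        return 0.0  # degenerate case: all four points coincide or are collinear
    return whole / parts
```

The identity used here is that the three sub-triangle areas sum to the pursuer-triangle area exactly when the target is inside or on the boundary of that triangle.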

3.3.2. Reward Function for Target UAV

The reward mechanism for the UAV_t is adversarial to the UAVs_e, aiming to avoid encirclement and perform autonomous collision avoidance. The total reward is formulated as:

The components are defined as follows:

where $\lambda_{\text{enc}}$ is the encirclement penalty coefficient, $\lambda_{\text{cen}}$ is the center guidance reward coefficient, and $\lambda_{\text{col}}$ is the collision penalty coefficient.

4. Experiments and Results

The experiments were conducted in a custom UAV simulation environment built on Python 3.10.17 and Gymnasium 1.1.1, incorporating dynamics models for both fixed-wing and multirotor UAVs.

In terms of computational performance, all experiments were carried out on a laptop equipped with an Intel Core Ultra 9 275HX (2.70 GHz) processor (Intel, Santa Clara, CA, USA), an NVIDIA RTX 5060 GPU with CUDA acceleration (NVIDIA, Santa Clara, CA, USA), and 32 GB of RAM. During training, the GPU utilization was approximately 80%, with CPU usage around 10% and memory consumption of about 3 GB. Under these hardware conditions, the average training efficiency was approximately 10,000 episodes per 6 h, and the complete curriculum training of 40,000 episodes took about 24 h in total.

4.1. Parameter Settings

Table 1 summarizes the parameters of the environment, UAVs, and onboard radar sensors, balancing scenario realism with computational feasibility.

To validate the rationality of the adopted simplified model parameters, a comparative analysis was conducted based on the standard Coordinated Turn Model. The governing equations are given as:

$$
R = \frac{V^2}{g \tan\phi}, \qquad n = \frac{1}{\cos\phi}
$$

where $V$ is the flight speed, $R$ is the turning radius, $g$ is the gravitational acceleration, $\phi$ is the roll angle, and $n$ is the load factor.

Considering the maneuvering characteristics of small fixed-wing UAVs, when the flight speed is within 20–32 m/s and the maximum lateral acceleration is 2.5 m/s², the corresponding roll angle is approximately 14.3°, and the load factor is $n \approx 1.03$, which represents a light-load coordinated turn. This parameter range is consistent with typical small fixed-wing UAVs, whose load factors during level coordinated turns usually range between 1.0 and 1.4. These results demonstrate that the simplified point-mass model adopted in this study provides reasonable dynamic consistency and physical interpretability for planar flight tasks.
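The figures quoted above can be reproduced from the standard coordinated-turn relations, in which the lateral acceleration equals $g \tan\phi$. The sketch assumes $g = 9.81$ m/s²; the function names are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed value)


def roll_angle_deg(lateral_acc):
    """Roll angle of a level coordinated turn with the given lateral acceleration."""
    return math.degrees(math.atan(lateral_acc / G))


def load_factor(lateral_acc):
    """Load factor n = 1 / cos(phi), written without evaluating phi explicitly."""
    return math.sqrt(1.0 + (lateral_acc / G) ** 2)


def turn_radius(speed, lateral_acc):
    """Turn radius from R = V^2 / a_lat."""
    return speed ** 2 / lateral_acc
```

With a lateral acceleration of 2.5 m/s², these give a roll angle of about 14.3° and a load factor of about 1.03, and the turn radius ranges from 160 m at 20 m/s to about 410 m at 32 m/s.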

The hyperparameters of MADDPG are given in Table 2, with most values adopted from common practices in multi-agent reinforcement learning. The learning rate and discount factor were tuned through preliminary experiments to ensure stable convergence. The parameter settings of the four curriculum levels are listed in Table 3, where task complexity is progressively increased by adjusting UAV initial positions, obstacle density, and target maneuverability. The coefficients of the reward function are detailed in Section 3.3. All experiments were conducted with fixed random seeds to ensure both randomness and reproducibility.

4.2. Experimental Results

A collision failure is determined if any UAV approaches an obstacle, another UAV, or the environment boundary closer than the predefined safety radius. An encirclement step is considered complete when all UAVs_e enter the UAV_t’s capture range without collision and form a surrounding trend, as illustrated in Figure 1b. Within the maximum number of steps allowed per episode, if the UAVs_e swarm successfully completes the required number of encirclement steps, the pursuit is regarded as successful. During training, several issues may arise, including constraints imposed by the environment boundaries, collisions with obstacles, collisions among UAVs, and convergence to local optima. These issues are partially illustrated in Figure 5.

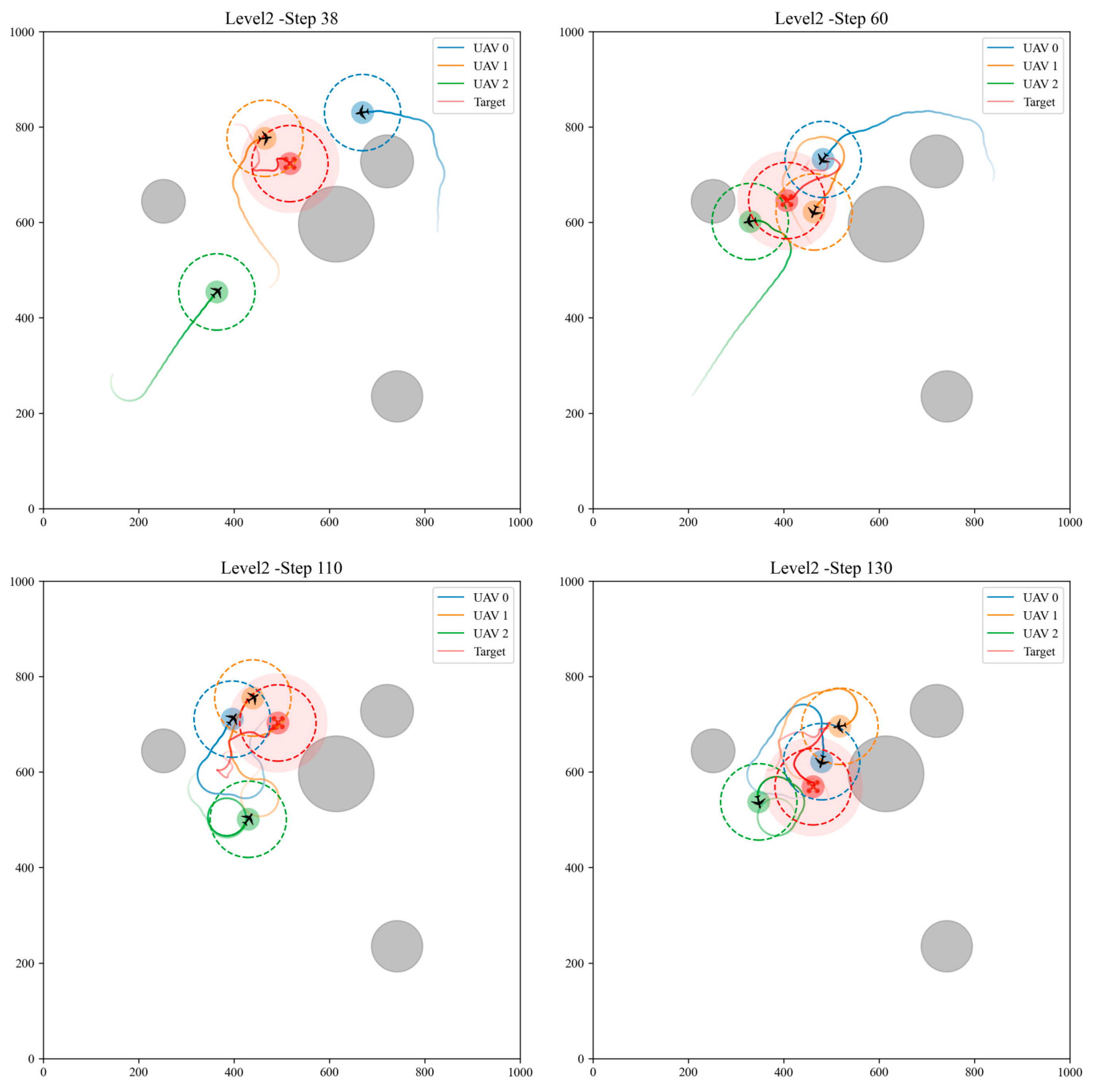

To demonstrate the learning effect of the pursuit task more intuitively, we plotted successful pursuit scenarios at different training stages. The results are autonomously generated by the trained neural network policies, and GIF animations are provided in the Supplementary Materials. To ensure clarity, the illustrations for Level 0 and Level 1 are displayed until the final step of task completion, as shown in Figure 6. In Level 0, UAVs_e enter the capture range and circle around the UAV_t. In Level 1, the UAVs_e avoid obstacles while approaching the UAV_t.

The illustrations for Level 2 and Level 3 show successful pursuit after exceeding 10 capture steps, with early termination. The Level 2 results are shown in

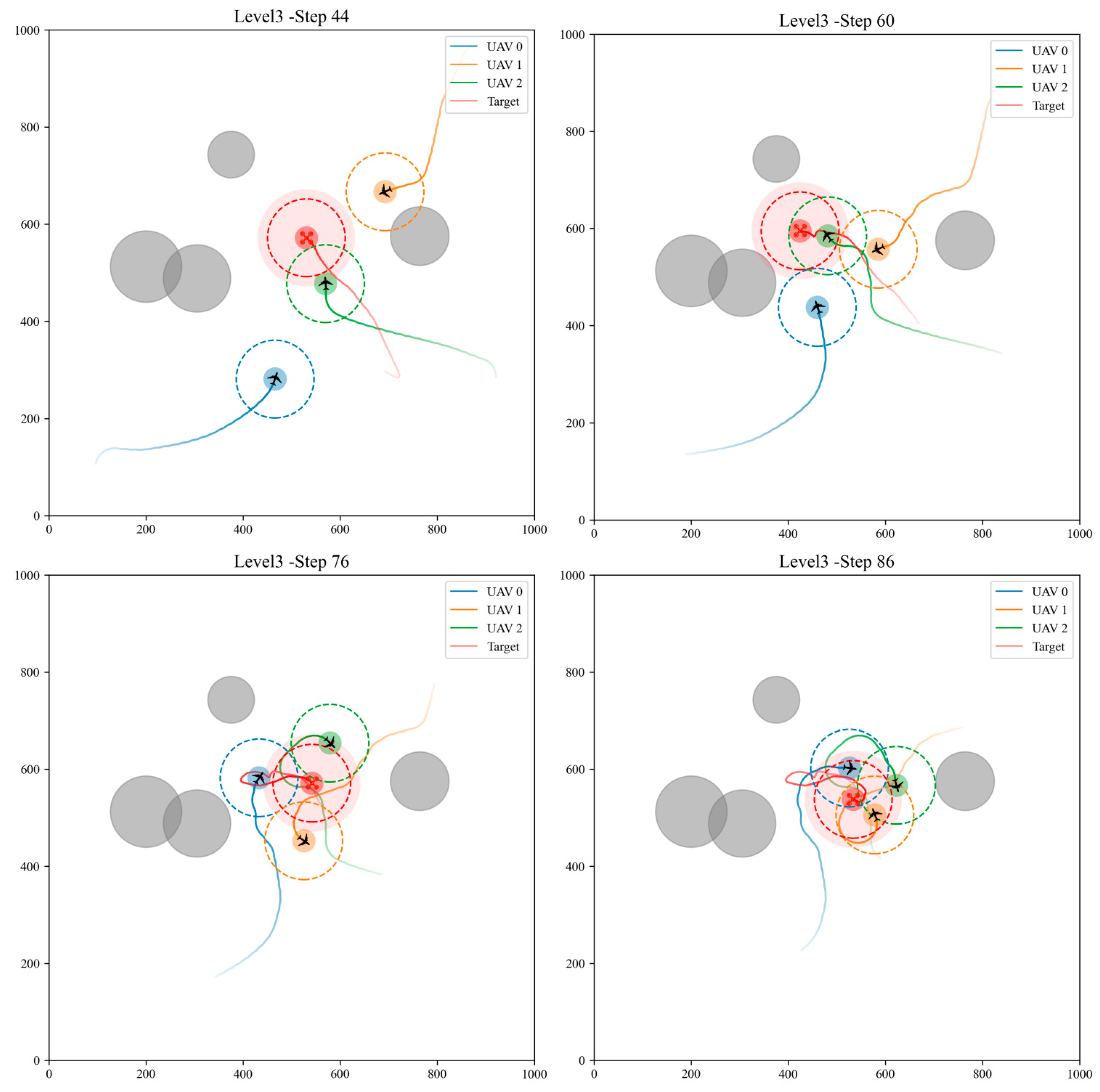

Figure 7, and Level 3 results in

Figure 8. In Level 2, the fixed-wing UAVs can turn in time to pursue when the multirotor moves backward. In Level 3, even when initial positions cover the entire scene, UAVs can still successfully complete the pursuit task.

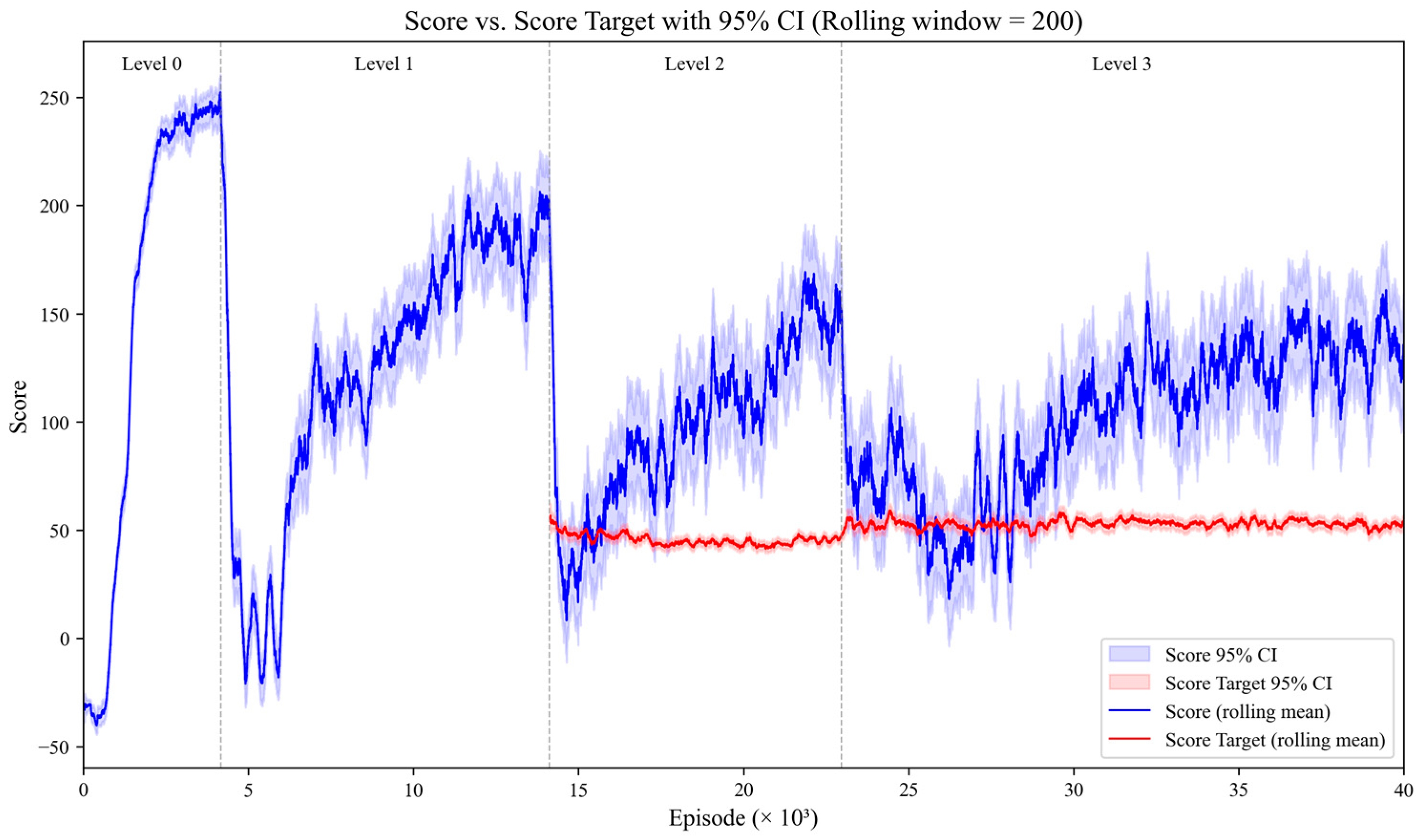

The reward function evolution during training is shown in Figure 9. In Level 0, the UAVs_e first learn to fly within the environment boundaries and successfully encircle a stationary UAV_t. After entering Level 1, obstacles are introduced in the environment, resulting in negative rewards for collisions. UAVs gradually learn obstacle avoidance behavior. In Level 2, the UAV_t is capable of motion, making early-stage pursuit difficult and rewards relatively low; through learning, UAVs_e gradually master target identification and tracking strategies. Level 3 further enlarges the initial generation range and reduces the capture area, significantly increasing task difficulty. The final reward stabilizes around 125 points. As the task level increases, the required time for pursuit increases, and cumulative rewards decrease due to time penalties.

In experimental evaluation, 1000 episodes were conducted for each level, calculating capture success rates for every 100 episodes. To ensure robustness, error bars are included. The results are shown in Figure 10, illustrating differences in performance under different levels and capture step requirements. Overall, the success rate remains above 70%, with failures mainly due to collisions with obstacles, teammates, or the UAV_t. Other failures are mostly due to not completing the pursuit within the limited steps. In obstacle-free, stationary target scenarios, the success rate exceeds 95%. With increasing capture step requirements, success rates slightly decline. In the most complex Level 3 scenario (global random initial positions, random initial headings, maximum steps 200, capture range within 100 m), the single-step encirclement success rates exceed 80%, and the 10-step success rates remain around 70%, demonstrating the method's effectiveness and robustness in complex environments.

5. Conclusions

This paper addresses the challenges of fixed-wing UAVs performing encirclement and obstacle avoidance tasks in complex environments, and proposes a curriculum-based composite deep reinforcement learning method. A multi-agent fixed-wing UAV collaborative task environment is constructed, integrating pursuit and obstacle avoidance, while fully accounting for UAV dynamic constraints, multirotor target UAV maneuverability, and local perception in obstacle-rich scenarios, thereby validating the effectiveness of the proposed approach.

Experimental results demonstrate that the composite MADDPG_CL framework effectively mitigates issues such as local optima and slow convergence during training, substantially enhancing policy stability and generalization. The multirotor target UAVs are also controlled via deep reinforcement learning, exhibiting strong maneuvering and adversarial capabilities that often induce collisions for the encircling UAVs, further increasing task complexity. In obstacle-free environments, fixed-wing encircling UAVs complete encirclement tasks efficiently, achieving success rates exceeding 95%. Even in complex environments with moving targets and random initial conditions, success rates remain around 70%. The proposed method also demonstrates robust performance in collision avoidance and extended capture duration.

Overall, this study provides a novel solution for intelligent collaborative control of fixed-wing UAV swarms in high-risk, complex environments. Although the UAVs_e used in the current training are homogeneous, the training framework itself supports heterogeneity in both parameters and dynamics models. Moreover, training results with UAV_t demonstrate that the same neural network can adapt to cooperative tasks involving different types of UAVs. Future work will focus on more realistic scenarios, incorporating communication constraints, sensor noise, and dynamic obstacles, and will explore transfer learning and imitation learning to further enhance the adaptability and robustness of the framework in real-world systems. Validation experiments will be conducted on the PX4-SITL platform and the Gazebo 6-DOF simulation environment to achieve seamless integration and testing of the algorithms within a high-fidelity flight control system.