1. Introduction

With the rapid development of unmanned aerial vehicle (UAV) technology, UAVs can quickly reach locations that are inaccessible to other means of transportation, owing to their high mobility and flexibility. In areas with insufficient network coverage, UAVs can also serve as mobile communication relay stations. Consequently, UAV systems have been widely used for traffic monitoring, forest rescue, precision agriculture, disaster emergency response, and aerial remote sensing. However, their performance in complex scenarios is severely restricted by limited onboard computing resources, processing power, and battery capacity, as well as by the communication latency and stability issues that arise in demanding real-time tasks.

In recent years, Internet of Things (IoT) technology has developed rapidly. The IoT connects a large number of devices to collect and process device data [1], and these numerous devices require substantial storage and computing resources [2]. Traditional cloud computing uses remote cloud servers to provide storage and computation for IoT devices. With the rapid development of mobile terminals and IoT devices, however, computation-intensive tasks have emerged, and transmitting these tasks to remote cloud servers can cause traffic congestion and time delays. Mobile edge computing (MEC) has emerged in response to these problems [3].

By offloading computation-intensive tasks from IoT devices to nearby MEC servers, MEC leverages proximate storage and computational resources, which effectively mitigates the network congestion and latency inherent in the traditional cloud computing paradigm [4]. Among MEC technologies, computation offloading can effectively reduce the processing delays of mobile UAVs and lower device energy consumption [5]. In dynamic MEC networks, reinforcement learning (RL) algorithms have been extensively explored for solving computation offloading problems [6]. Applying MEC to unmanned systems and offloading computing tasks to edge nodes can effectively expand the computing power of UAVs, reduce energy consumption, and improve response efficiency; this technology is key to overcoming the resource bottleneck of UAVs [7].

Because training RL algorithms is computationally demanding, it typically requires powerful cloud servers, to which drones must disclose sensitive data. Attackers can easily obtain this sensitive information and use it to infer the value function of the RL algorithm. Since the value function encodes the drone's private action preferences in a given state, this inference leads to privacy leakage [8,9,10].

Existing research introduces differential privacy (DP) to mitigate this leakage, but DP degrades the performance of the offloading strategy. Moreover, the extent of this performance loss is unknown until the offloading strategy has been trained, so introducing DP can cause significant performance loss and waste computational power and resources. Establishing the relationship between the privacy budget and the performance loss before training is therefore an important research issue, yet existing research has not established this quantitative relationship. These limitations hinder the application of RL-based computation offloading methods in practical tasks.

To this end, we propose a UAV-centric, privacy-preserving, RL-based computation offloading approach that adopts local differential privacy (LDP) to perturb the decision trajectories of UAVs. To ensure that the RL-based approach still converges, we do not simply add LDP noise to the decision trajectories. Instead, we model the computation offloading problem as a finite-horizon Markov decision process (FH-MDP) and add LDP noise to randomize the frequency of each state–action pair in the decision trajectories. Finally, we provide a regret bound for the proposed approach. The contributions of this paper are summarized as follows:

1. We propose a privacy-preserving computation offloading approach for multi-UAV MEC from a UAV-centric perspective. To achieve the desired UAV performance, we establish the relationship between the privacy budget and the performance of the offloading policy.

2. To protect the privacy of the UAVs' decision trajectories, we first model the computation offloading problem as a finite-horizon Markov decision process. Then, an LDP mechanism (either a Gaussian perturbation mechanism or a Randomized Response mechanism) is employed to perturb the counts of state–action pairs in each decision trajectory.

3. We theoretically derive the regret bound and show that the Gaussian perturbation mechanism achieves (ε, δ)-LDP and the Randomized Response mechanism achieves ε-LDP. We then conduct experiments to evaluate the effectiveness of the proposed approach.

The remainder of this paper is structured as follows. Section 2 reviews related works. Section 3 introduces the system model and problem formulation. The proposed approach is detailed in Section 4. Theoretical analysis and experimental evaluation are presented in Section 5 and Section 6, respectively. Supplementary discussions are provided in Section 7, and Section 8 concludes the paper.

2. Related Works

Currently, many papers have proposed methods for optimizing computation offloading strategies, which can be roughly divided into two categories. The first category comprises algorithms based on optimization problems. For example, Yan et al. [11] studied the optimization of computation offloading strategies, constructed a potential game in an End-Edge-Cloud Computing (EECC) environment in which each user device selfishly minimizes its own cost, and developed two potential game-based algorithms. He et al. [12] used a queuing model to characterize all computing nodes in an environment and established a mathematical model of the scenario. They transformed the offloading decision of the target mobile device into three multi-variable optimization problems to study the trade-off between cost and performance. Zhao et al. [13] formulated partial task offloading as a dynamic long-term optimization problem to minimize task delays. They used Lyapunov stochastic optimization to decouple long-term delay minimization from the stability constraints, transforming the problem into a per-slot scheduling problem that meets the system's time-dependent stability requirements. Zhang et al. [14] formulated a joint optimization problem of offloading decisions, resource allocation, and trajectory planning, in which dynamic terminal users (TUs) move according to a Gauss–Markov stochastic model. The goal was to maximize the minimum secure computing capacity of the TUs, and a Joint Dynamic Programming and Bidding (JDPB) algorithm was proposed to solve the problem. Liu et al. [15] introduced cooperative transmission and a reconfigurable intelligent surface (RIS) to jointly optimize user association, beamforming, power allocation, and the task partitioning between local computation and offloading. They exploited the implicit relationships among these variables and transformed them into explicit forms for optimization. Yang et al. [16] minimized the total energy consumption of all devices by jointly optimizing the binary offloading modes, CPU frequencies, offloading power, offloading time, and intelligent reflecting surface (IRS) phase shifts. Greedy and penalty-based algorithms were proposed to solve the resulting challenging nonconvex and discontinuous problem.

However, traditional optimization-based algorithms typically assume that the environment is known or static and require accurate system models, such as channel models. Dynamic changes in the environment require remodeling and re-solving, and problems with high-dimensional or continuous action spaces or nonlinear structure are difficult to solve and computationally expensive. RL, by contrast, handles high-dimensional state and action spaces by learning autonomously through interaction with the environment. It adapts better to dynamic and uncertain environments, can discover strategies that are difficult to obtain analytically, and does not require a precise model of the environment, relying instead on data-driven learning [17]. Therefore, applying RL to train computation offloading decision strategies is a common approach in current research.

Many papers have proposed RL methods for optimizing computation offloading strategies. Huang et al. [18] introduced a deep reinforcement learning (DRL) framework that dynamically adjusts task offloading and wireless resource allocation according to changing channel conditions, simplifying the optimization process. Lei et al. [19] developed a Q-learning and DRL framework that balances immediate rewards with long-term goals to minimize time delay and energy cost in mobile edge computing. Wang et al. [20] proposed a task offloading method based on meta-reinforcement learning, which can quickly adapt to new environments with a small number of gradient updates and samples; they combined a first-order approximation with a clipped surrogate objective and customized sequence-to-sequence (seq2seq) neural networks to model the offloading strategy. Feng et al. [21] developed a distributed offloading algorithm using deep Q-learning to reduce latency and manage energy cost in the edge components of the Internet of Vehicles. Liu et al. [22] applied Markov chain-based reinforcement learning to reduce task completion times under energy constraints and designed a cooperative task migration algorithm. These works use RL and other approaches to optimize system latency and energy cost in computation offloading, but they do not consider privacy protection.

Recent works aim to preserve users' offloading preferences [23], locations [24], and usage patterns [25] in RL-based computation offloading approaches. To provide strict and provable privacy guarantees, DP is adopted to mitigate privacy leakage [9]. Privacy protection in computation offloading can be divided into two main areas. (1) User data privacy protection: Cui et al. [26] proposed a lightweight certificateless edge-assisted encryption scheme (CL-EAED) for offloading computation-intensive tasks to edge servers, which ensures that edge-assisted processing does not expose sensitive information, prevents data leakage, and improves the efficiency and security of task offloading. A security-aware resource allocation algorithm was proposed for multimedia in MEC [27], focusing on data confidentiality and integrity to minimize latency and energy cost while ensuring security. (2) Privacy protection of offloading strategies: Xu et al. [28] developed a two-stage optimization strategy for offloading in IoT that maximizes resource utilization while minimizing time delay, balancing privacy and performance. Wang et al. [24] proposed a disturbance region determination mechanism and an offloading strategy generation mechanism, which adaptively selects a suitable disturbance region according to a customized privacy factor and then generates an optimal offloading strategy based on the disturbed location within that region. However, introducing privacy protection degrades policy performance, and in the above studies this performance loss cannot be estimated in advance. To address this limitation, we propose our approach and compare it with existing solutions in Table 1.

3. System Model and Problem Formulation

3.1. Network Model

In this paper, the network model of multi-UAV MEC is shown in Figure 1, which contains M UAVs, J edge computing servers (ECSs), and a cloud server (CS). In this system, each UAV uploads its own decision trajectory to the cloud server. The decision trajectory of the m-th UAV consists of a sequence of states s, actions a, and rewards r in chronological order; formally, $\tau_m = \{(s_t, a_t, r_t)\}_{t=1}^{H}$, where H is the horizon. Note that the decision trajectory may contain sensitive UAV information from which the UAV's preferences and location can be deduced. The cloud server adopts an RL algorithm to train the offloading policy and then deploys the trained policy to the UAVs. In time slot t, the task of the m-th UAV is described by five variables $(D_m^t, f_m, f_j, B_m^t, T_m^t)$, where $D_m^t$ is the size of the task in bits, $f_m$ is the CPU frequency of the m-th UAV, $f_j$ is the CPU frequency of ECS j, $B_m^t$ is the remaining battery capacity of the drone, and $T_m^t$ is the deadline of the task. We assume that all UAVs have the same CPU frequency and that all ECSs have the same CPU frequency [23]. The UAV then makes its decision based on its own offloading policy. In this paper, we consider a full offloading model, in which each task is either processed entirely on the UAV or offloaded entirely to an ECS.
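As an illustration only, the per-slot task description and a UAV's decision trajectory could be held in simple Python containers such as the ones below. The class and field names (`Task`, `Trajectory`, `size_bits`, and so on), the battery unit, and the 0/1 action encoding are hypothetical choices for this sketch, not part of the paper.

```python
from collections.abc import Hashable
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Task:
    """Task of one UAV in a time slot (hypothetical field names)."""
    size_bits: float    # task size in bits
    f_uav_hz: float     # CPU frequency of the UAV
    f_ecs_hz: float     # CPU frequency of the target ECS
    battery: float      # remaining battery capacity (unit assumed, e.g. joules)
    deadline_s: float   # task deadline in seconds


@dataclass
class Trajectory:
    """Decision trajectory: (state, action, reward) triples in time order."""
    steps: list[tuple[Hashable, int, float]] = field(default_factory=list)

    def append(self, state: Hashable, action: int, reward: float) -> None:
        self.steps.append((state, action, reward))

    def __len__(self) -> int:
        return len(self.steps)


# Usage: one UAV records two decisions (0 = local, 1 = offload; assumed encoding).
traj = Trajectory()
traj.append(("battery_high",), 1, 0.4)
traj.append(("battery_low",), 0, 0.7)
```

Keeping the trajectory as an ordered list of triples matters later: the perturbation mechanisms count state–action pairs and consecutive transitions, which requires the chronological order to be preserved.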

3.2. Cost Model

There are two kinds of costs: time delay and energy cost. Because the cloud server has abundant energy, the energy consumption and latency of training the offloading policy on it are not taken into account. If the m-th UAV processes its task locally, the local time delay $T_m^{\mathrm{loc}}$ is the time taken by its onboard CPU to complete the computation:

$$T_m^{\mathrm{loc}} = \frac{D_m^t\, d}{f_m}. \tag{1}$$

Here, the local time delay is proportional to the total computational workload, i.e., the product of the task size $D_m^t$ and the number of CPU cycles d required per bit, and inversely proportional to the UAV's processing speed $f_m$. A larger or more complex task takes longer, while a faster CPU reduces the delay. According to [29], the local energy consumption $E_m^{\mathrm{loc}}$ is given by

$$E_m^{\mathrm{loc}} = \kappa f_m^2\, D_m^t\, d, \tag{2}$$

where κ is the effective capacitance coefficient, which depends on the CPU architecture [30]. The local energy consumption is thus proportional to the square of the CPU frequency ($f_m^2$) and to the total number of CPU cycles ($D_m^t d$). If the m-th UAV decides to offload its task to ECS j, the offloading time delay $T_{m,j}^{\mathrm{off}}$ is the sum of two components: (1) the time taken to transmit the task data to the ECS over the wireless channel and (2) the time for the ECS to execute the task:

$$T_{m,j}^{\mathrm{off}} = \frac{D_m^t}{R_{m,j}^t} + \frac{D_m^t\, d}{f_j}. \tag{3}$$

Here, the first term is the transmission delay, which depends on the task size $D_m^t$ and the achievable transmission rate $R_{m,j}^t$. The second term is the remote computation delay at the ECS, analogous to the local time delay but using the ECS's CPU frequency $f_j$. Based on [29], the energy cost of offloading $E_{m,j}^{\mathrm{off}}$ from UAV m to ECS j is given by

$$E_{m,j}^{\mathrm{off}} = p\, \frac{D_m^t}{R_{m,j}^t}, \tag{4}$$

where p is the transmission power of the m-th UAV. That is, the offloading energy cost is the product of the transmission power p and the transmission time ($D_m^t / R_{m,j}^t$).
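The four delay and energy expressions above can be checked numerically with a short Python sketch. The CPU frequencies (1 GHz and 3 GHz), the task size (100 KB), and the rate (5 KB/s) come from the experimental settings in Section 6; the cycles-per-bit value d = 1000, the capacitance coefficient κ = 1e-28, the transmit power p = 0.5 W, and 1 KB = 1000 bytes are assumptions made only for this example.

```python
def local_delay(size_bits, cycles_per_bit, f_uav_hz):
    """Local delay: total CPU cycles divided by the UAV CPU frequency."""
    return size_bits * cycles_per_bit / f_uav_hz


def local_energy(size_bits, cycles_per_bit, f_uav_hz, kappa):
    """Local energy: kappa * f^2 * total CPU cycles."""
    return kappa * f_uav_hz ** 2 * size_bits * cycles_per_bit


def offload_delay(size_bits, cycles_per_bit, rate_bps, f_ecs_hz):
    """Offloading delay: transmission time plus execution time at the ECS."""
    return size_bits / rate_bps + size_bits * cycles_per_bit / f_ecs_hz


def offload_energy(size_bits, rate_bps, tx_power_w):
    """Offloading energy: transmit power times transmission time."""
    return tx_power_w * size_bits / rate_bps


size = 100e3 * 8               # 100 KB in bits (1 KB = 1000 bytes assumed)
rate = 5e3 * 8                 # 5 KB/s in bits per second
d, kappa, p = 1000, 1e-28, 0.5  # assumed values, not taken from the paper

print(local_delay(size, d, 1e9), local_energy(size, d, 1e9, kappa))
print(offload_delay(size, d, rate, 3e9), offload_energy(size, rate, p))
```

Under these assumed values, the 5 KB/s link makes the 20 s upload dominate, so local execution (0.8 s, 0.08 J) is cheaper than offloading (about 20.27 s, 10 J); with a much faster link the transmission terms shrink and the trade-off can reverse.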

3.3. Privacy Threat

We consider an honest-but-curious cloud server that correctly executes the RL algorithm to train the offloading policy but may leak the UAVs' decision trajectories to potential adversaries. Once an adversary acquires a UAV's decision trajectories, it can use techniques such as inverse reinforcement learning (IRL) to extract the UAV's offloading preferences and usage patterns from them [9,23]. This private information can then be used to determine whether a specific UAV is present in the current area, potentially compromising UAV location privacy. Privacy concerns related to this issue have been discussed extensively in [24] with a heuristic privacy metric, and a similar privacy metric was considered in the healthcare IoT [31] and in mobile blockchain networks [32]. However, these approaches primarily ensure privacy from the perspective of offloading behavior under a trusted cloud server and do not address the privacy risks posed by an honest-but-curious cloud server. A previous work [33] introduced a DP-based privacy-aware offloading approach, but it fails to preserve UAV privacy during the training of the offloading policy. Similarly to this paper, existing work [34] has proposed LDP-based privacy-aware offloading approaches; however, it cannot anticipate the impact of the privacy budget on the performance of the offloading policy before training.

3.4. Problem Formulation

In this section, we formulate an FH-MDP $\mathcal{M} = (\mathcal{S}, \mathcal{A}, p, r, H)$, where $\mathcal{S}$ is the state space, $\mathcal{A}$ is the action space, $p(\cdot \mid s, a)$ is the transition distribution of the next state given the current state–action pair, r is a reward distribution with mean $\bar{r}(s, a)$, and H is the horizon. In this context, the offloading policy $\pi = (\pi_1, \ldots, \pi_H)$ is a sequence of mappings from states to actions, $\pi_h: \mathcal{S} \to \mathcal{A}$. Under the LDP privacy guarantee, we aim to find the optimal offloading policy $\pi^*$ that minimizes the total cost C of time delay and energy consumption for all UAVs, where $\pi^*$ acts greedily with respect to $Q_h^*(s, a)$, the optimal expected cumulative reward of a state–action pair, which can be derived from the Bellman equations [35]. The objective is to minimize a weighted total cost that balances the time delay and energy consumption of the system. The cost function is defined as

$$C = \sum_{m=1}^{M} \Big[\omega\, \mathcal{N}(T_m) + (1 - \omega)\, \mathcal{N}(E_m)\Big], \tag{5}$$

where $T_m$ and $E_m$ represent the composite delay and energy cost of the m-th UAV, respectively, each consisting of the local or the offloading component depending on the chosen action. $\mathcal{N}(\cdot)$ is a normalization function that scales the delay and energy values to a comparable range, and the weight $\omega \in [0, 1]$ allows the system to prioritize either low latency (ω close to 1) or energy efficiency (ω close to 0).
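A minimal sketch of the weighted, normalized cost follows. The paper does not state which normalization it uses, so min–max scaling with known bounds is an assumption here, as are the function names and the example numbers.

```python
def min_max(x, lo, hi):
    """Min-max normalization into [0, 1]; lo and hi are assumed known bounds."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return (x - lo) / (hi - lo)


def total_cost(delays, energies, omega, delay_range, energy_range):
    """Weighted normalized delay/energy cost summed over all UAVs.

    omega close to 1 favours low latency; omega close to 0 favours energy.
    """
    if not 0.0 <= omega <= 1.0:
        raise ValueError("omega must lie in [0, 1]")
    return sum(
        omega * min_max(t, *delay_range) + (1.0 - omega) * min_max(e, *energy_range)
        for t, e in zip(delays, energies)
    )


# Two UAVs; delays in seconds and energies in joules (illustrative numbers).
print(total_cost([5.0, 10.0], [1.0, 2.0], 0.5, (0.0, 10.0), (0.0, 4.0)))  # 1.125
```

Normalizing before weighting keeps ω meaningful: without it, whichever quantity has the larger numeric range (seconds versus joules) would dominate the sum regardless of ω.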

Based on the formulated FH-MDP, the state, action, and reward of this paper are defined as follows:

1. State: The state , where is the transmission rate and . If , the task of the m-th UAV has been terminated, either because the drone's remaining battery capacity has been exhausted or because the task has reached its deadline. If , the task of the m-th UAV has been completed; otherwise, it is still being processed.

2. Action: The action . If , the m-th UAV processes its task locally; otherwise, it offloads its task to the ECS.

3. Reward: The reward is the total cost of all UAVs. Formally, .

The above defines a standard FH-MDP for the computation offloading problem. However, as discussed in the privacy threat model (Section 3.3), directly using the original decision trajectory for policy training on the cloud server introduces privacy risks. To address this issue, we introduce an LDP constraint: each drone perturbs its local trajectory before uploading it, and the cloud server learns the offloading policy solely from these perturbed trajectories. Section 4 elaborates on the LDP perturbation mechanism and the corresponding offloading policy learning mechanism under this privacy guarantee.

4. Proposed Approach

4.1. Overview

In the proposed approach, the core idea is to add LDP noise to the decision trajectory of each UAV during policy training. However, naively injecting LDP noise into the decision trajectory would disrupt its temporal coherence and prevent the algorithm from converging. Hence, following [36], we adopt a Gaussian perturbation mechanism and a Randomized Response mechanism to randomize the frequency of each state–action pair in the decision trajectory. The honest-but-curious cloud server then uses the perturbed decision trajectories to train the offloading policy. Overall, the proposed approach consists of two parts, namely the LDP perturbation mechanism and the offloading policy learning mechanism, which are detailed below.

4.2. LDP Perturbation Mechanism

During training, each UAV uses an LDP mechanism to perturb its decision trajectory locally for privacy preservation and then uploads the perturbed decision trajectory to the cloud server. In this paper, we provide two LDP perturbation mechanisms, the Gaussian perturbation mechanism and the Randomized Response mechanism, which are detailed in Algorithm 1 and Algorithm 2, respectively.

4.2.1. Gaussian Perturbation Mechanism

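Algorithm 1 takes as input the decision trajectory $\tau_m$ of the m-th UAV and the LDP parameters (ε, δ) and c. For each state–action pair $(s, a) \in \mathcal{S} \times \mathcal{A}$ (Line 1), the cumulative reward $R_m(s, a)$ and the visiting frequency $N_m(s, a)$ of the state–action pair are calculated by Equations (6) and (7), respectively (Line 2):

$$R_m(s, a) = \sum_{t=1}^{H} r_t\, \mathbb{1}\{(s_t, a_t) = (s, a)\} \tag{6}$$

and

$$N_m(s, a) = \sum_{t=1}^{H} \mathbb{1}\{(s_t, a_t) = (s, a)\}, \tag{7}$$

where $\mathbb{1}\{\cdot\}$ is the indicator function. Then, the noises $\eta_r$ and $\eta_n$ are generated independently from a Gaussian distribution with standard deviation σ (Line 3), where σ is calibrated to the privacy parameters as in [36]. The perturbed cumulative reward $\tilde{R}_m(s, a) = R_m(s, a) + \eta_r$ and the perturbed visiting frequency $\tilde{N}_m(s, a) = N_m(s, a) + \eta_n$ are then calculated (Lines 4–5). Moreover, for each next state s', the visiting frequency of the transition (s, a, s') in the trajectory is calculated as follows (Line 7):

$$N_m(s, a, s') = \sum_{t=1}^{H-1} \mathbb{1}\{(s_t, a_t, s_{t+1}) = (s, a, s')\}. \tag{8}$$

Then, the perturbed $\tilde{N}_m(s, a, s')$ is calculated with an independent Gaussian noise $\eta_p$ (Lines 8–9). Finally, the perturbed cumulative reward $\tilde{R}_m(s, a)$, the perturbed visiting frequency $\tilde{N}_m(s, a)$ of the state–action pair, and the perturbed visiting frequency $\tilde{N}_m(s, a, s')$ of the transition are returned (Line 12).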

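Algorithm 1: Gaussian perturbation mechanism.

Input: decision trajectory $\tau_m$; Parameters: (ε, δ), c
1: for each $(s, a) \in \mathcal{S} \times \mathcal{A}$ do
2: Calculate $R_m(s, a)$ and $N_m(s, a)$ via Equations (6) and (7);
3: Sample $\eta_r, \eta_n \sim \mathcal{N}(0, \sigma^2)$ independently;
4: $\tilde{R}_m(s, a) \leftarrow R_m(s, a) + \eta_r$;
5: $\tilde{N}_m(s, a) \leftarrow N_m(s, a) + \eta_n$;
6: for each $s' \in \mathcal{S}$ do
7: Calculate $N_m(s, a, s')$ via Equation (8);
8: Sample $\eta_p \sim \mathcal{N}(0, \sigma^2)$;
9: $\tilde{N}_m(s, a, s') \leftarrow N_m(s, a, s') + \eta_p$;
10: end for
11: end for
12: return $\tilde{R}_m(s, a)$, $\tilde{N}_m(s, a)$, $\tilde{N}_m(s, a, s')$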
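A minimal Python sketch of the Gaussian perturbation step follows. It assumes a trajectory given as a list of (state, action, reward) triples and finite state and action sets. It uses the classical Gaussian-mechanism calibration σ = c·sqrt(2 ln(1.25/δ))/ε, with c treated as the sensitivity; this is an assumption, since the exact σ of [36] is not reproduced in the text. Noise is added for every (s, a) and (s, a, s'), including unvisited ones, as the loops in Lines 1 and 6 require; otherwise the set of reported keys alone would reveal which pairs were visited.

```python
import math
import random
from collections import Counter


def gaussian_sigma(epsilon, delta, c):
    """Classical Gaussian-mechanism noise scale (assumed calibration)."""
    return c * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def gaussian_perturb(trajectory, states, actions, epsilon, delta, c, rng):
    """Return noisy R(s,a), N(s,a), and N(s,a,s') for one UAV trajectory."""
    sigma = gaussian_sigma(epsilon, delta, c)
    reward_sum, visits, transitions = Counter(), Counter(), Counter()
    for t, (s, a, r) in enumerate(trajectory):
        reward_sum[(s, a)] += r      # Line 2: cumulative reward, Eq. (6)
        visits[(s, a)] += 1          # Line 2: visiting frequency, Eq. (7)
        if t + 1 < len(trajectory):  # Line 7: transition count (needs a next state)
            transitions[(s, a, trajectory[t + 1][0])] += 1
    noisy_r, noisy_n, noisy_p = {}, {}, {}
    for s in states:                 # Lines 1-11: every pair gets fresh noise
        for a in actions:
            noisy_r[(s, a)] = reward_sum[(s, a)] + rng.gauss(0.0, sigma)
            noisy_n[(s, a)] = visits[(s, a)] + rng.gauss(0.0, sigma)
            for s_next in states:
                noisy_p[(s, a, s_next)] = (
                    transitions[(s, a, s_next)] + rng.gauss(0.0, sigma)
                )
    return noisy_r, noisy_n, noisy_p


traj = [(0, 1, 0.5), (1, 0, 1.0), (0, 1, 0.2)]
r_t, n_t, p_t = gaussian_perturb(traj, [0, 1], [0, 1], 1.0, 1e-5, 1.0, random.Random(0))
```

Passing an explicit `random.Random` instance keeps experiments reproducible. With a very large ε the noise becomes negligible and the outputs approach the exact counts, which is a convenient sanity check.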

4.2.2. Randomized Response Mechanism

The core of the Randomized Response mechanism is to perturb the binary indicators in the decision trajectory through biased Bernoulli sampling, where the Bernoulli distributions are parameterized by the privacy budget ε. The detailed algorithm is shown in Algorithm 2.

| Algorithm 2: Randomized Response mechanism. |

- Input:

, Parameters: - 1:

for

do - 2:

for do - 3:

Let - 4:

Sample - 5:

Update , where , - 6:

Sample - 7:

Update - 8:

if then - 9:

for do - 10:

Let - 11:

Sample - 12:

Update - 13:

end for - 14:

end if - 15:

end for - 16:

end for - 17:

return , ,

|

At the beginning of Algorithm 2, the input is the decision trajectory of the m-th UAV. The algorithm iterates through each time step t of the trajectory (Line 2). At each time step, it first checks whether the current state–action pair matches the target pair using the indicator function (Line 3).

The perturbation process consists of three main parts. (1) Reward perturbation (Lines 4–5): when the state–action pair matches, the algorithm samples a Bernoulli variable with a probability proportional to the actual reward value. This sampling probability is designed to balance authenticity against random noise, and the perturbed reward is then updated through a linear transformation that keeps the estimate unbiased. (2) Visit count perturbation (Lines 6–7): similarly, the algorithm samples from a Bernoulli distribution whose probability reflects whether the state–action pair was actually visited, producing a noisy visit record that preserves its statistical properties. (3) Transition count perturbation (Lines 8–14): for state transitions, the algorithm also considers the next state and perturbs the transition indicator, which is crucial for preserving the Markov property in the learning model.

The key parameters k and c are derived from the privacy budget so that the expected value of each perturbed quantity equals its true value, thus providing unbiased estimates under local differential privacy. The Bernoulli parameter is obtained from the privacy budget through a fixed transformation, thus ensuring the ε-LDP guarantee.
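The sketch below shows classical binary randomized response with the standard debiasing step, applied to a reward in [0, 1] through an intermediate Bernoulli draw. It illustrates the principle described above (biased Bernoulli sampling followed by an unbiasing linear transformation) and is not Algorithm 2 itself: the keep probability e^ε/(1+e^ε) and the debiasing constants are assumptions standing in for the paper's parameters k and c, whose exact forms are not given here.

```python
import math
import random


def rr_bit(bit, epsilon, rng):
    """Binary randomized response: keep the bit w.p. e^eps / (1 + e^eps)."""
    keep_prob = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return bit if rng.random() < keep_prob else 1 - bit


def debias(report, epsilon):
    """Unbiased estimate of the original bit from a single RR report."""
    e = math.exp(epsilon)
    return (report * (e + 1.0) - 1.0) / (e - 1.0)


def privatize_reward(reward, epsilon, rng):
    """Reward in [0, 1]: Bernoulli(reward) draw, then randomized response."""
    bit = 1 if rng.random() < reward else 0
    return debias(rr_bit(bit, epsilon, rng), epsilon)


rng = random.Random(42)
samples = [privatize_reward(0.3, 1.0, rng) for _ in range(100_000)]
print(sum(samples) / len(samples))  # close to 0.3 on average
```

Each individual output is very noisy (it takes only the two values e^ε/(e^ε−1) and −1/(e^ε−1)), but its expectation equals the true reward, so aggregating many privatized reports recovers accurate averages. This is the same unbiasedness property the text attributes to k and c.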

It should be noted that, according to [

36], the noises

,

, and

satisfy

and

The above requirements can be satisfied by several perturbation mechanisms, such as the Gaussian, Laplace, Randomized Response, and bounded noise mechanisms; what distinguishes these mechanisms are their regret and privacy guarantees. It follows from the above definition that these four functions increase with m and decrease with ε. Moreover, for a given local differential privacy mechanism, these four strictly positive functions can be calculated explicitly, as shown in Section 5.
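To make the monotonicity claim concrete, the sketch below assumes that the noise accumulated in an aggregated count is a sum of n independent N(0, σ²) draws, with σ given by the classical Gaussian calibration (an assumption, not the paper's exact constants). A standard Gaussian tail bound then gives, with probability at least 1 − β, |noise| ≤ σ·sqrt(2n ln(2/β)). This bound grows with the number of aggregated terms and shrinks as ε grows.

```python
import math


def noise_bound(n_terms, epsilon, delta, c, beta):
    """Bound on |sum of n_terms i.i.d. N(0, sigma^2)| holding w.p. >= 1 - beta."""
    sigma = c * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon
    # Tail bound P(|X| > t) <= 2 exp(-t^2 / (2 n sigma^2)), solved for t.
    return sigma * math.sqrt(2.0 * n_terms * math.log(2.0 / beta))


for eps in (0.5, 1.0, 2.0):
    print(eps, [round(noise_bound(n, eps, 1e-5, 1.0, 0.05), 1) for n in (10, 100, 1000)])
```

Each row grows with n (at a square-root rate), and moving down the rows (larger ε) divides every entry by the same factor. This is the qualitative behaviour that the four bound functions are required to have.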

4.3. Offloading Policy Learning Mechanism

In the offloading policy learning mechanism, the policy is updated by a modified Bellman equation, as detailed in Algorithm 3. The inputs of the algorithm are the privacy parameters and the outputs of Algorithm 1. For each UAV, the privatized reward and the privatized transition function are calculated by Equations (13) and (14), respectively (Line 2), as follows:

and

where

is a parameter for precision selection and

. Based on Proposition 4 of [36], to achieve the

LDP, the

and

, as well as

and

, should meet the following constraints, respectively:

and

where

. Then, the modified Bellman equation is shown as follows to update the

Q function (Line 3).

where

is a bias compensation term to ensure convergence under LDP noise. Therefore, the offloading policy is calculated by (Line 3)

Algorithm 3: Offloading policy learning mechanism.

Input: outputs of Algorithm 1; Parameters: privacy parameters
1: for each UAV m do
2: Compute the privatized reward and the privatized transition function via Equation (13) and Equation (14), respectively;
3: According to Equations (17) and (18), update the offloading policy;
4: Send the offloading policy to the m-th UAV. After executing the offloading policy, the m-th UAV collects its decision trajectory;
5: The m-th UAV sends back a privatized version of the trajectory via Algorithm 1;
6: end for
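The sketch below does not implement Equations (13)–(18) themselves. It illustrates the generic pattern of optimistic LDP RL methods in the spirit of [36]: privatized sums and counts are turned into reward and transition estimates, a count-based bonus term is added (playing a role analogous to the bias compensation term), and the Q function is computed by finite-horizon backward induction. The 1/sqrt(n) bonus, the count floor, the clipping, the function names, and the convention that rewards lie in [0, 1] with larger meaning better (e.g., one minus a normalized cost) are all assumptions of this sketch.

```python
import numpy as np


def privatized_estimates(R_tilde, N_tilde, P_tilde, n_floor=1.0):
    """Noisy sums/counts -> reward and transition estimates.

    R_tilde, N_tilde: shape (S, A); P_tilde: shape (S, A, S).
    Flooring the noisy counts at n_floor (assumed) avoids dividing by values
    that the noise has pushed close to or below zero.
    """
    n = np.maximum(N_tilde, n_floor)
    r_hat = np.clip(R_tilde / n, 0.0, 1.0)
    p_pos = np.maximum(P_tilde, 0.0)
    totals = p_pos.sum(axis=2, keepdims=True)
    num_states = P_tilde.shape[2]
    safe = np.where(totals > 0, totals, 1.0)
    # Normalize each row; fall back to uniform if all noisy counts were <= 0.
    p_hat = np.where(totals > 0, p_pos / safe, 1.0 / num_states)
    return r_hat, p_hat, n


def optimistic_backward_induction(r_hat, p_hat, n, horizon, bonus_scale=1.0):
    """Finite-horizon value iteration with a count-based bonus (assumed form)."""
    num_states, _ = r_hat.shape
    V = np.zeros(num_states)
    policy = np.zeros((horizon, num_states), dtype=int)
    for h in reversed(range(horizon)):
        bonus = bonus_scale / np.sqrt(n)        # larger where data are scarce
        Q = r_hat + bonus + p_hat @ V           # p_hat @ V has shape (S, A)
        Q = np.minimum(Q, horizon - h)          # cap at the remaining steps
        policy[h] = Q.argmax(axis=1)
        V = Q.max(axis=1)
    return policy, V


# Toy check: action 1 in state 0 earns reward 1, and every action returns to state 0.
R = np.array([[0.0, 10.0], [0.0, 0.0]])
N = np.full((2, 2), 10.0)
P = np.zeros((2, 2, 2))
P[:, :, 0] = 10.0
r_hat, p_hat, n = privatized_estimates(R, N, P)
policy, V = optimistic_backward_induction(r_hat, p_hat, n, horizon=3, bonus_scale=0.0)
```

In the toy example (noise-free counts, bonus switched off) the policy always chooses action 1 in state 0, and V[0] equals the horizon. With real LDP counts, the bonus keeps the estimates optimistic wherever the noisy counts are small.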

Finally, the cloud server sends the updated offloading policy to the m-th UAV, which collects a new decision trajectory and sends back a privatized version via Algorithm 1.

Our perturbation mechanism adds LDP noise to the frequencies of state–action pairs, which maintains the convergence of reinforcement learning while preserving privacy. The Markov property relies on the independence of state transitions, and because the frequency perturbation is applied to aggregate statistics, it does not destroy the Markov property of individual state transitions. According to the theory of Garcelon et al. [36], as long as the perturbed reward and transition probability estimates are asymptotically consistent and are updated via the modified Bellman equation, the RL algorithm converges under the LDP condition. In

Section 5, we prove that the regret bound is

, which theoretically guarantees the convergence of the algorithm in the long run.

5. Theoretical Analysis

In this section, we give the formal proof of LDP and regret bound of the proposed approach. The derivation of the regret bound implies the convergence of the algorithm under LDP perturbations. That is, when the number of training rounds is large enough, the policy performance will be close to the optimal policy. Firstly, we prove that the Gaussian perturbation mechanism has the LDP guarantee through Theorem 1.

Theorem 1. Given and , and , the Gaussian perturbation mechanism can achieve -LDP.

Proof. Based on Proposition 10 in [36], we assume that for the two trajectories

,

, and the perturbed trajectories

and

.

For a given vector

, and since the Gaussian distribution is symmetric,

, we have

Considering the squared term and applying the Cauchy–Schwarz inequality, we have

where

, where

c is a constant value. Then, for

,

should satisfy

and

. Therefore,

, we have

The same goes for

and

. Then, because

,

, and

are independent, for any two trajectories

and

, we have

Thus, by choosing , it holds that for , and so we can conclude that the Gaussian mechanism is -LDP. □
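As a numerical sanity check on a guarantee of the (ε, δ) type stated in Theorem 1, the sketch below uses the standard privacy-loss argument for a single Gaussian-perturbed quantity with sensitivity Δ. For neighbouring inputs, the privacy loss L = ln(p(x)/p'(x)) is Gaussian with mean μ = Δ²/(2σ²) and variance 2μ, and P[L > ε] ≤ δ is a sufficient condition for (ε, δ)-DP. The classical calibration used here (valid for ε < 1) is an assumption and may differ from the constants in Theorem 1.

```python
import math


def gaussian_sigma(epsilon, delta, sensitivity):
    """Classical calibration sigma = Δ * sqrt(2 ln(1.25/δ)) / ε (assumed)."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def privacy_loss_tail(epsilon, sigma, sensitivity):
    """P[L > epsilon] for the Gaussian privacy loss L ~ N(mu, 2 mu), mu = Δ^2 / (2 σ^2)."""
    mu = sensitivity ** 2 / (2.0 * sigma ** 2)
    z = (epsilon - mu) / (sensitivity / sigma)   # standardize: std of L is Δ/σ
    return 0.5 * math.erfc(z / math.sqrt(2.0))   # upper tail of N(0, 1)


delta = 1e-5
for eps in (0.1, 0.5, 0.9):
    sigma = gaussian_sigma(eps, delta, sensitivity=1.0)
    print(eps, round(sigma, 2), privacy_loss_tail(eps, sigma, 1.0) <= delta)
```

For each ε the tail probability stays below δ, while an under-calibrated σ (for example σ = 0.1 with Δ = 1) makes the tail close to 1, i.e., no meaningful guarantee.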

Then, we prove that the Randomized Response mechanism has the LDP guarantee through Theorem 2.

Theorem 2. For and the parameter , Algorithm 2 is .

Proof. Like the proof of Theorem 1, consider two trajectories , , the perturbed trajectory and .

For a given

, and for each

, define

belongs to

because

, and we have

where

.

Then, for a given

, since

, the above formula can be simplified as

where

. Therefore, through the inequality (

24), we get

The same is true for and . From this, it can be concluded that when , the formula based on the Randomized Response mechanism is LDP. □
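For a single binary indicator, the ε-LDP property of randomized response reduces to a one-line likelihood-ratio bound. The derivation below assumes the classical keep probability e^ε/(1+e^ε), which may differ from the exact parameterization of Algorithm 2; x and x' denote any two possible true bits, and y denotes the reported bit.

$$
\Pr[y \mid x] =
\begin{cases}
\dfrac{e^{\epsilon}}{1+e^{\epsilon}}, & y = x,\\[6pt]
\dfrac{1}{1+e^{\epsilon}}, & y \neq x,
\end{cases}
\qquad
\max_{x,\,x',\,y} \frac{\Pr[y \mid x]}{\Pr[y \mid x']}
= \frac{e^{\epsilon}/(1+e^{\epsilon})}{1/(1+e^{\epsilon})}
= e^{\epsilon}.
$$

Because the ratio never exceeds e^ε, every output is at most e^ε times more likely under one input than under any other, which is exactly the ε-LDP condition for one indicator.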

Then, we prove the regret bound of the proposed approach. Before proving, we provide the definition of regret.

Definition 1. Given the finite-horizon Markov decision process (FH-MDP) in Section 3.4, the regret in this paper measures the performance of the offloading policy and is defined as the cumulative difference between and for all UAVs:

Theorem 3. For any number of states , actions , and , the lower regret bound of the proposed approach satisfies , where is the FH-MDP in this paper.

Proof. We consider an FH-MDP with states and actions. Our FH-MDP is a -ary tree with states, in which each node has child nodes. We use to denote the leaf nodes of this tree. Each leaf node yields a reward of 1 or 0 with equal probability. In addition, we assume that there exists a unique action and leaf such that , for a chosen , and when , , .

We assume that

,

is the depth of the tree, and the depth of the leaf node is b-1 or b-2. Here, we assume that all leaf nodes

are at

. So the number of leaf nodes is

. Thus, our value function is as follows for a policy

:

Therefore, according to Definition 1, the regret is given as follows:

where we define that

Thus, is a function of the history observed by the algorithm, and is the optimal state–action pair.

Considering the LDP setting, the history can be written as

where

is a local privacy protection mechanism satisfying

-LDP and

is the history trajectory. Thus

is a function of

. And according to [

37], we have

and because of the privacy mechanism

, we also have

We set and , and is a special case when .

According to Equations (

30) and (

31), we have

Therefore, according to [

36], we get

and

Therefore, combining Equations (

33) and (

34),

Here, we set that

is the expectation of the random variable

, and then there is

Thus, according to Jensen’s inequality, we have

So when

, we have

Therefore, we have

and

where there is

that

, because

O is a finite random variable. Finally, we can prove that there exists an FH-MDP such that its regret is

. □

6. Experiment Evaluation

6.1. Experimental Settings

In this section, we conduct experiments to evaluate the convergence and regret of the proposed approach. The experiments are simulated in Python and tested on drones. Following [38], we set the number of UAVs to

and the number of ECSs to

. For each UAV, the discount factor

is selected from

, the task size

is selected from {100 KB, 300 KB}, and the transmission rate is selected from {2 KB/s, 5 KB/s}. The CPU frequency of the

m-th UAV

and

j-th ECS

are set to 1 GHz and 3 GHz, respectively, while the number of CPU cycles required to complete each bit of the task is

. The effective capacitance coefficient

is set to

. These parameters were chosen based on the performance of commercial drones and edge servers. For example, the task sizes {100 KB, 300 KB} represent a single image frame or sensor data packet, while the CPU frequencies (1 GHz for UAVs and 3 GHz for edge servers) are consistent with typical embedded processors, such as the ARM Cortex-A78, and with lightweight edge servers. The transmission rates simulate realistic wireless channel conditions over 4G/5G links. Then, to train the offloading policy, the number of episodes is set to 7500, and there are

learning steps in each episode.
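A quick back-of-the-envelope calculation shows what the stated settings imply for the transmission delay (task size divided by rate) described in Section 3.2, and for the speed-up of remote execution. Only the listed task sizes, rates, and CPU frequencies are used. Protocol overhead is ignored, which is a simplifying assumption.

```python
from itertools import product

TASK_SIZES_KB = (100, 300)       # task sizes from the settings above
RATES_KB_PER_S = (2, 5)          # transmission rates from the settings above
F_UAV_GHZ, F_ECS_GHZ = 1.0, 3.0  # CPU frequencies from the settings above


def upload_time_s(size_kb, rate_kb_per_s):
    """Pure transmission time of a task, ignoring protocol overhead."""
    return size_kb / rate_kb_per_s


for size, rate in product(TASK_SIZES_KB, RATES_KB_PER_S):
    print(f"{size} KB at {rate} KB/s: {upload_time_s(size, rate):.0f} s upload")

# Remote execution takes F_UAV_GHZ / F_ECS_GHZ = 1/3 of the local execution time.
print(F_UAV_GHZ / F_ECS_GHZ)
```

The four configurations give upload times of 50 s, 20 s, 150 s, and 60 s. These values set the scale of the offloading delay that the learned policy must trade off against local execution.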

Finally, we design five experiments:

(1) We adopt the average loss to evaluate the convergence of the proposed approach under varying task sizes and privacy budgets, using the Gaussian perturbation mechanism and the Randomized Response mechanism, respectively.

(2) We report the regret of the proposed approach under different transmission rates and privacy budgets, using the Gaussian perturbation mechanism and the Randomized Response mechanism, respectively.

(3) We compare our approach with a scheme without privacy protection to analyze the performance cost of adding LDP perturbation.

(4) We vary the discount factor, with the task size and transmission rate fixed, under the Gaussian perturbation mechanism to evaluate the impact of the discount factor on the approach.

(5) We compare our approach with other RL-based privacy protection schemes to assess its advantages.

6.2. Experimental Results

In this section, we use the average loss to jointly evaluate user-related performance metrics, namely time delay and energy consumption. This metric, defined by Equation (5), is a weighted sum of time delay and energy consumption and directly reflects performance at the user level. Furthermore, we use regret to measure the cumulative performance gap between the offloading policy learned under the privacy mechanism and the optimal offloading policy.
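The two metrics can be made concrete with a minimal sketch, assuming per-episode losses are available both for the learned policy and for a reference optimal policy. The function names and sample numbers are hypothetical, and the formal regret in Section 5 may be defined over value functions rather than raw episode losses.

```python
from itertools import accumulate


def running_average_loss(episode_losses):
    """Average loss after each episode k: the mean of the first k + 1 episode losses."""
    return [total / (k + 1) for k, total in enumerate(accumulate(episode_losses))]


def cumulative_regret(policy_losses, optimal_losses):
    """Regret after K episodes: sum over k <= K of (learned loss - optimal loss).

    Losses are costs (lower is better), so each gap is non-negative when the
    reference policy is truly optimal.
    """
    if len(policy_losses) != len(optimal_losses):
        raise ValueError("loss sequences must have the same length")
    gaps = (learned - best for learned, best in zip(policy_losses, optimal_losses))
    return list(accumulate(gaps))


if __name__ == "__main__":
    learned = [5.0, 3.0, 2.5, 2.2, 2.1]  # hypothetical per-episode losses
    optimal = [2.0, 2.0, 2.0, 2.0, 2.0]  # hypothetical optimal-policy losses
    print(running_average_loss(learned))
    print(cumulative_regret(learned, optimal))
```

Because each term of the regret is a non-negative gap, the regret curve can only rise; a converging policy shows up as a flattening of that curve rather than a decrease.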

6.2.1. Convergence Evaluation of the Approach

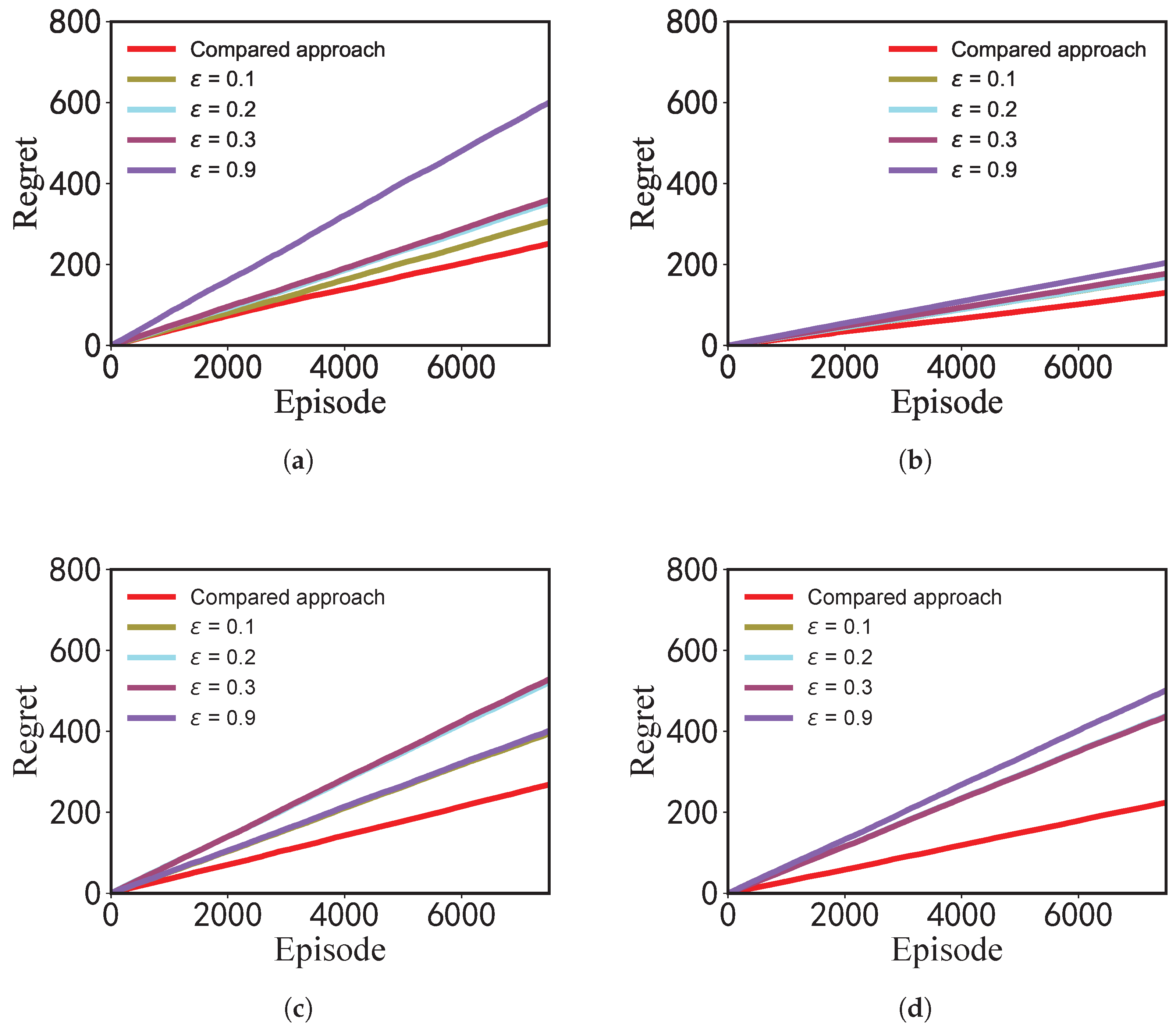

The convergence of the proposed approach based on the Gaussian perturbation mechanism and the Randomized Response mechanism is shown in Figure 2. The privacy parameters

are set to

, respectively, and the task sizes are set to 100 KB and 300 KB. The experimental results show the following: (1) The average loss of the offloading strategy decreases as the number of iterations increases until the algorithm converges. (2) For both task sizes, the algorithm based on the Gaussian perturbation mechanism converges at around 500 iterations, whereas the algorithm based on the Randomized Response mechanism converges at around 300 iterations; that is, the Randomized Response variant converges faster. (3) Convergence slows as the task size increases, indicating that the task size affects the convergence speed. (4) The offloading policy based on the Randomized Response mechanism incurs a smaller performance loss than that based on the Gaussian perturbation mechanism.

Although larger task sizes slow convergence, the algorithm still converges within a reasonable number of iterations. Therefore, the proposed approach is robust and adaptable across different task sizes and privacy parameters.

6.2.2. Regret Value Evaluation of the Approach

The regret of the proposed approach based on the Gaussian perturbation mechanism and the Randomized Response mechanism is shown in Figure 3. The privacy parameters

are set to

, respectively, and the transmission rate p is set to 2 KB/s and 5 KB/s. We observe the following: (1) The regret increases with the number of iterations, as the deviation between the performance of the trained policy and that of the optimal policy accumulates; this accumulated deviation can be estimated quantitatively. (2) The regret generally decreases slightly as the wireless transmission rate increases. (3) For the same transmission rate, the regret is strongly affected by the privacy parameter. (4) The approach based on the Randomized Response mechanism has a smaller regret than the approach based on the Gaussian perturbation mechanism.

6.2.3. Comparison with Non-Private RL Baseline

To explicitly evaluate the performance loss imposed by the LDP perturbation mechanism on offloading policy learning, we compare our approach with a non-private reinforcement learning baseline [39] (the red “Compared approach” curves in Figure 2 and Figure 3). We adapted the states and actions defined in [39].

Figure 2 shows that the non-private baseline achieves the lowest average loss and the fastest convergence, as expected. Our LDP-based approach incurs a slightly higher loss and slightly slower convergence, especially under strict privacy budgets.

Figure 3 shows that the non-private baseline maintains the lowest regret throughout training. The regret of our LDP-based approach increases as the privacy requirement becomes stricter, but remains within an acceptable range.

Adding LDP perturbation therefore incurs a controllable and quantifiable performance loss, which is the inherent cost of strict privacy guarantees. This loss can be estimated from our theoretical analysis and tuned through the privacy budget, making our approach practical in environments with varying privacy requirements.
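To illustrate how the privacy budget controls the injected noise, and hence the performance loss, the following minimal sketch uses the classical Gaussian mechanism, whose noise scale is σ = Δ₂·sqrt(2·ln(1.25/δ))/ε for 0 < ε < 1. The calibration, the L2 sensitivity value, and the dictionary representation of state–action counts are assumptions; Algorithm 1 may calibrate its noise differently.

```python
import math
import random


def gaussian_sigma(epsilon, delta, l2_sensitivity):
    """Noise scale of the classical Gaussian mechanism (Dwork and Roth).

    This calibration gives (epsilon, delta)-DP for 0 < epsilon < 1.
    """
    if not 0.0 < epsilon < 1.0:
        raise ValueError("classical calibration assumes 0 < epsilon < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return l2_sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def perturb_counts(counts, epsilon, delta, l2_sensitivity, rng=random):
    """Add i.i.d. Gaussian noise to every state-action count before upload.

    `counts` should list every pair in the domain, including zero counts;
    otherwise the set of keys itself would leak which pairs were visited.
    """
    sigma = gaussian_sigma(epsilon, delta, l2_sensitivity)
    return {pair: c + rng.gauss(0.0, sigma) for pair, c in counts.items()}


if __name__ == "__main__":
    # Hypothetical counts of (state, action) pairs in one UAV trajectory.
    counts = {("s0", "local"): 3, ("s0", "offload"): 1,
              ("s1", "local"): 0, ("s1", "offload"): 2}
    for eps in (0.1, 0.5, 0.9):
        print(eps, round(gaussian_sigma(eps, 1e-5, 1.0), 2))
    print(perturb_counts(counts, 0.5, 1e-5, 1.0, rng=random.Random(0)))
```

Since σ is inversely proportional to ε, halving the privacy budget doubles the noise standard deviation, which is consistent with the larger loss observed under stricter budgets.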

6.2.4. The Impact of Different Discount Factors on the Approach

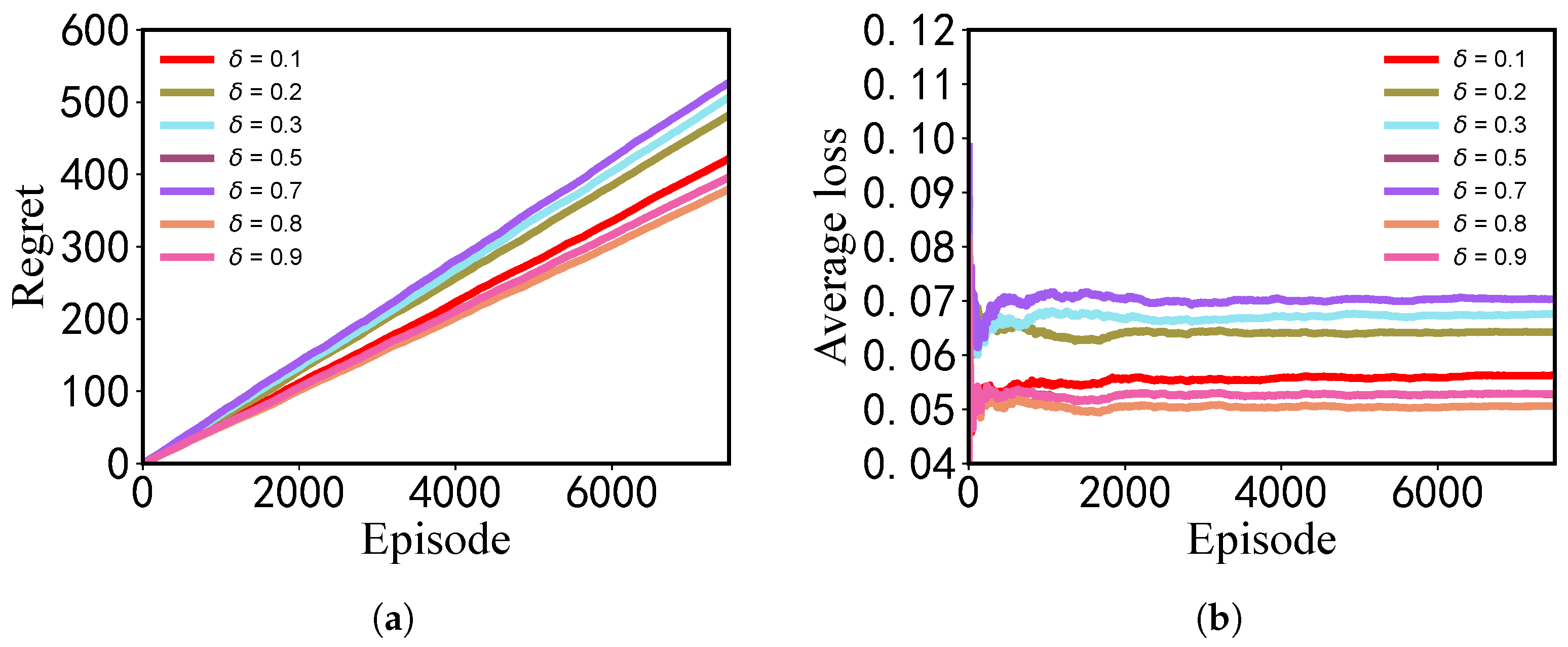

The impact of different discount factors on the approach under the Gaussian perturbation mechanism is shown in Figure 4. The discount factor

is set to

, the task size

is set to 100 KB, and the transmission rate p is set to 2 KB/s. The figure shows the following: (1) The regret increases with the number of iterations for every discount factor, but its magnitude differs across discount factors: the regret is smallest when

and largest when

. (2) For all discount factors, the average performance loss fluctuates strongly during the first 2000 iterations and stabilizes thereafter. Consistent with the regret results, the average performance loss is smallest when

and largest when

.
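The discount factor determines how strongly future losses weigh on each offloading decision. A short sketch of the discounted cumulative loss follows; the constant per-step loss and the γ values are hypothetical and are not the values used in Figure 4.

```python
def discounted_loss(step_losses, gamma):
    """Discounted cumulative loss sum_t gamma^t * c_t, computed by backward recursion."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    total = 0.0
    for c in reversed(step_losses):
        total = c + gamma * total  # c_t + gamma * (discounted loss from t + 1 onward)
    return total


if __name__ == "__main__":
    losses = [1.0] * 10              # hypothetical constant per-step loss
    for gamma in (0.5, 0.9, 0.99):   # hypothetical discount factors
        print(gamma, round(discounted_loss(losses, gamma), 3))
```

With a constant loss, the discounted sum approaches 1/(1 − γ) for long horizons, so larger γ values make the learner account for more future steps when choosing whether to offload.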

6.2.5. Impact of Privacy Mechanisms on Network Performance

While the LDP perturbation mechanism proposed in this paper effectively protects the privacy of drone offloading preferences, it also affects network performance in three ways:

1. Communication overhead: Since each drone must perturb its trajectory locally before uploading, the mechanism adds a small amount of computational and communication overhead. However, experiments show that this overhead is acceptable.

2. Convergence speed: Privacy noise slows down the convergence of policy learning, especially when

is small, as shown in Figure 2.

3. Policy performance loss: The LDP noise perturbs the estimated state–action frequencies and thus affects the learned offloading policy.

However, through the theoretical analysis in Section 5, we establish the regret bound

, which quantifies this performance loss. Together with the strict privacy guarantees of the LDP mechanisms, this bound allows the privacy–performance trade-off to be tuned, making the approach suitable for practical drone applications.
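The third effect above, noise in the estimated state–action frequencies, can be illustrated with a minimal sketch: perturbed counts from several UAVs are summed, negative values are clipped to zero, and the result is normalized into a frequency estimate. Clip-and-normalize is one common post-processing choice that we assume here for illustration; the estimator in the paper may differ. Because it only post-processes already-privatized reports, it does not weaken their LDP guarantee.

```python
from collections import defaultdict


def aggregate_reports(reports):
    """Sum noisy state-action counts reported by several UAVs."""
    totals = defaultdict(float)
    for report in reports:
        for pair, value in report.items():
            totals[pair] += value
    return dict(totals)


def estimate_frequencies(noisy_counts):
    """Clip negative noisy counts to zero and normalize them into a distribution.

    This is post-processing of privatized data, so the LDP guarantee is unchanged.
    """
    clipped = {pair: max(value, 0.0) for pair, value in noisy_counts.items()}
    total = sum(clipped.values())
    if total == 0.0:
        # All mass was clipped away: fall back to a uniform estimate.
        return {pair: 1.0 / len(clipped) for pair in clipped}
    return {pair: value / total for pair, value in clipped.items()}


if __name__ == "__main__":
    reports = [  # hypothetical perturbed reports from three UAVs
        {("s0", "local"): 2.7, ("s0", "offload"): -0.4},
        {("s0", "local"): 1.1, ("s0", "offload"): 1.9},
        {("s0", "local"): 3.2, ("s0", "offload"): 0.6},
    ]
    print(estimate_frequencies(aggregate_reports(reports)))
```

Clipping introduces a small bias toward zero for rarely visited pairs; aggregating over more UAVs reduces the relative impact of the noise because the true counts grow faster than the noise standard deviation.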

6.2.6. Comparison with Another RL Baseline

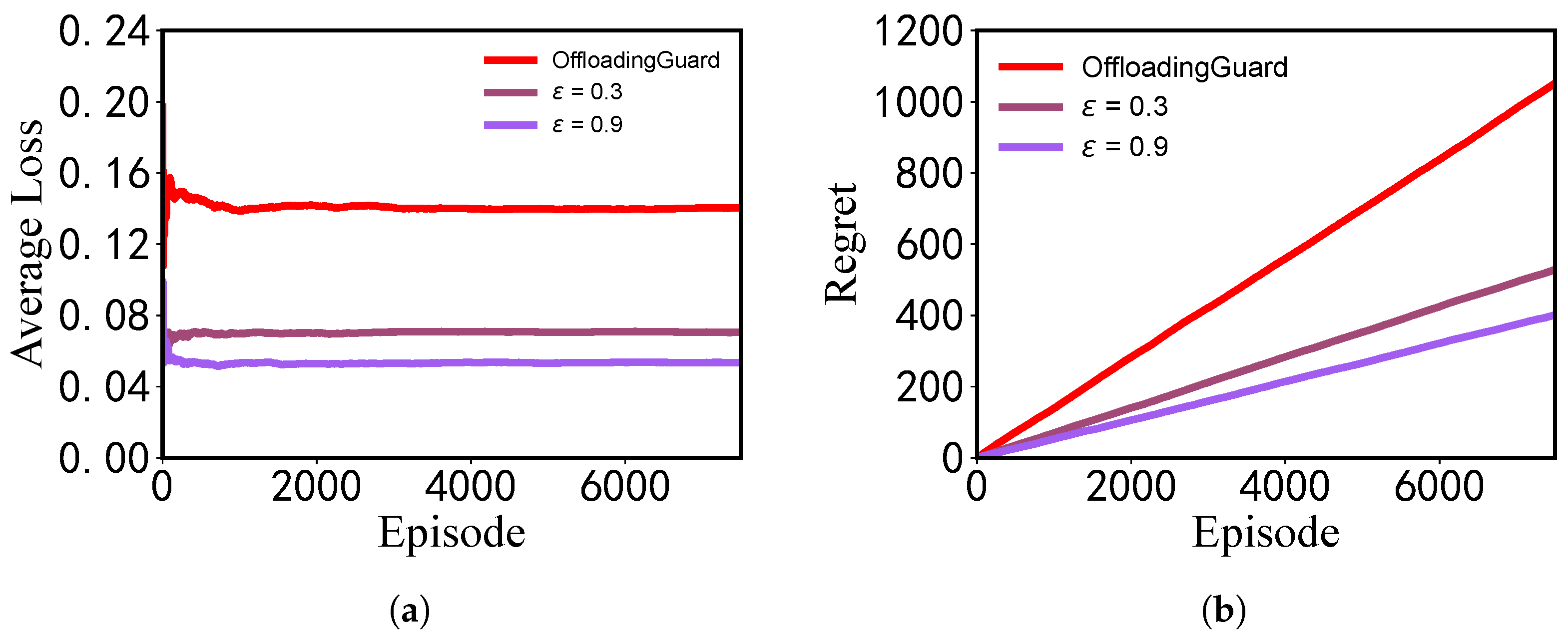

We compare our approach with OffloadingGuard [33]. In this experiment, we use the Randomized Response mechanism in our approach, set the privacy parameter

to

, and set the task size to

KB. The convergence performance of the two methods is compared in Figure 5.

The experiment shows the following: (1) Our approach achieves a lower average loss than OffloadingGuard and converges faster as the number of iterations increases. (2) The regret of both methods increases with the number of iterations, but our approach has a smaller regret than OffloadingGuard, indicating that the privacy mechanism in our approach incurs a smaller performance loss on the offloading strategy. Overall, our approach outperforms OffloadingGuard in this experiment.

Another key advantage of our approach is that it theoretically establishes a quantitative relationship between the privacy perturbation mechanism and the performance loss of the offloading strategy. This allows the privacy budget to be chosen before training, reducing unnecessary resource waste.

In practical applications, privacy protection must be balanced against algorithm performance. Our experimental results verify the effectiveness and robustness of the approach under different wireless link conditions and privacy parameters.

7. Discussion

7.1. Partial Offloading

Our research focuses on a full (binary) offloading model, in which each computing task is executed either entirely on the drone or entirely on an edge computing server. However, partial offloading, in which the drone and an edge server each execute part of a task, is a more efficient and versatile approach in MEC [40]. In this section, we discuss the feasibility of extending our approach to support partial offloading.

Extending our approach to partial offloading involves the following changes: (1) Action space: The action space is generalized from a binary choice to a continuous offloading fraction. For example, the action can be redefined as the proportion of the task offloaded to the edge server, with the remainder executed locally. (2) Cost model: The time delay and energy cost functions need to be restructured. Because the local and offloaded parts are processed in parallel, the total time delay of a task is the maximum of the local computation delay and the offloading delay, while the energy consumption is the sum of the local computation energy and the offloading transmission energy. (3) State space: The state space must contain more detailed information about the current computational load of the drone and the edge server.
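The restructured cost model in item (2) can be sketched as follows, assuming the local and offloaded parts run in parallel, that the offloading delay is upload time plus edge execution time, and that the UAV's energy is its local computation energy plus transmission energy. All parameter values are toy numbers, and the grid search over the fraction x is a hypothetical stand-in for a learned continuous policy, not part of the proposed approach.

```python
def partial_offload_cost(x, task_bits, cycles_per_bit, f_uav_hz, f_ecs_hz,
                         rate_bps, kappa, tx_power_w):
    """Delay (s) and UAV energy (J) when a fraction x of the task is offloaded.

    Delay is the maximum of the local branch and the offloading branch (they run
    in parallel); energy is local computation energy plus transmission energy.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("offloading fraction x must lie in [0, 1]")
    local_cycles = (1.0 - x) * task_bits * cycles_per_bit
    t_local = local_cycles / f_uav_hz
    e_local = kappa * f_uav_hz ** 2 * local_cycles
    t_tx = x * task_bits / rate_bps                     # upload the offloaded part
    t_edge = x * task_bits * cycles_per_bit / f_ecs_hz  # edge execution of that part
    delay = max(t_local, t_tx + t_edge)
    energy = e_local + tx_power_w * t_tx
    return delay, energy


def best_fraction(w_delay, steps=100, **params):
    """Grid search over x in {0, 1/steps, ..., 1} for the lowest weighted loss."""
    def loss(x):
        delay, energy = partial_offload_cost(x, **params)
        return w_delay * delay + (1.0 - w_delay) * energy
    return min((i / steps for i in range(steps + 1)), key=loss)


if __name__ == "__main__":
    params = dict(task_bits=1000, cycles_per_bit=10, f_uav_hz=1e4, f_ecs_hz=1e5,
                  rate_bps=1000, kappa=1e-12, tx_power_w=0.1)  # toy values
    for x in (0.0, 0.5, 1.0):
        print(x, partial_offload_cost(x, **params))
    print("delay-optimal x:", best_fraction(1.0, **params))
```

With the delay weight set to 1, the best fraction is the grid point closest to where the local and offloading branches finish at the same time, which is why partial offloading can beat both binary extremes.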

The core privacy protection mechanisms of our approach remain highly applicable: (1) LDP perturbation mechanism: The LDP perturbation mechanism (Algorithms 1 and 2) operates on the frequencies of state–action pairs in the decision trajectory. When the action space is extended to continuous fractions, the perturbation mechanism remains essentially unchanged, provided the fractions are quantized into a finite set of levels so that state–action frequencies remain well defined. In principle, the same LDP guarantees would then still hold. (2) Offloading policy learning mechanism: The offloading policy learning mechanism (Algorithm 3) needs to be adapted to the expanded action space, possibly using deep RL techniques.
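Randomized-response style perturbation requires a finite domain of state–action pairs, which is why a continuous offloading fraction would have to be discretized. As a stand-in, the sketch below uses generalized (k-ary) randomized response with its standard unbiased frequency estimator; whether this matches Algorithm 2 is an assumption, and the domain of (state, offloading level) pairs is hypothetical. Each single report satisfies ε-LDP; perturbing many steps of the same trajectory composes the budget, which this sketch does not account for.

```python
import math
import random
from collections import Counter


def krr_perturb(value, domain, epsilon, rng=random):
    """k-ary randomized response.

    Keep the true value with probability e^eps / (e^eps + k - 1); otherwise report
    one of the other k - 1 values uniformly. Any two inputs produce a given report
    with probabilities whose ratio is at most e^eps, so each report is eps-LDP.
    """
    k = len(domain)
    p_keep = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p_keep:
        return value
    return rng.choice([v for v in domain if v != value])


def krr_estimate(reports, domain, epsilon):
    """Unbiased estimate of the true frequency of each domain value."""
    n, k = len(reports), len(domain)
    e = math.exp(epsilon)
    p = e / (e + k - 1)    # probability of reporting the true value
    q = 1.0 / (e + k - 1)  # probability of reporting one specific other value
    counts = Counter(reports)
    return {v: (counts[v] / n - q) / (p - q) for v in domain}


if __name__ == "__main__":
    # Hypothetical finite domain of (state, quantized offloading level) pairs.
    domain = [(s, a) for s in ("s0", "s1") for a in (0.0, 0.5, 1.0)]
    rng = random.Random(7)
    true_pairs = [domain[i % 2] for i in range(10_000)]  # only two pairs occur
    reports = [krr_perturb(v, domain, 1.0, rng) for v in true_pairs]
    print(krr_estimate(reports, domain, 1.0))
```

A finer quantization enlarges k, which lowers the probability of reporting the true pair and increases estimation variance at a fixed ε; this is one concrete form of the privacy–utility trade-off for the partial-offloading extension.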

7.2. Malicious Servers

Our approach protects the privacy of offloading policy training in an RL algorithm under the assumption of honest-but-curious servers. However, malicious servers may interfere with the algorithm's execution or steal sensitive data. To address such servers, the RL training process can be deployed in a trusted execution environment (TEE). TEEs provide secure computation through memory isolation within an independent processing environment, offering hardware-based security and integrity protection. Mature TEE solutions, such as ARM TrustZone and Intel SGX, are widely available and together support multiple CPU architectures. Although our solution does not directly design protection mechanisms against malicious servers, by leveraging TEEs and other mature technologies we can deploy it on potentially malicious servers while maintaining the same functionality and performance and without changing the core structure of our algorithm, thereby extending our approach to malicious-server scenarios [41].

7.3. Practical Use

The proposed UAV-centric privacy-preserving computation offloading scheme offers significant practical advantages in scenarios where UAVs handle privacy-sensitive tasks. It addresses a critical vulnerability: if an honest-but-curious server discloses offloading strategies, attackers controlling edge computing servers (ECSs) could exploit this information to lure UAVs into offloading sensitive tasks to compromised ECSs. In public safety surveillance, the approach prevents the exposure of UAV patrol routes and deters the induced offloading of high-resolution video footage. In precision agriculture, it safeguards crop-data offloading policies and prevents leaks of yield-related information. For infrastructure inspection, it prevents the disclosure of defect-data offloading preferences and blocks unauthorized transfers of vulnerability logs. In emergency relay scenarios, it prevents leakage of rescue-zone offloading strategies and thereby helps prevent voice data breaches. By perturbing the frequencies of state–action pairs with local differential privacy (LDP) mechanisms, the proposed method protects offloading strategies and substantially reduces the risk of such malicious induction. This provides essential protection for the future large-scale deployment of UAV-assisted edge computing networks.

8. Conclusions

In this paper, we propose a UAV-centric privacy-preserving offloading approach via RL. Unlike existing works, the proposed approach adds LDP noise to randomize the frequency of each state–action pair in the decision trajectories. We provide two perturbation mechanisms, the Gaussian perturbation mechanism and the Randomized Response mechanism, and prove that they achieve the -LDP and -LDP guarantees, respectively. Furthermore, we theoretically derive the regret bound of this approach as , which establishes a quantitative relationship between the privacy budget and the performance loss of the offloading strategy before the strategy is trained. Experiments verify the convergence and efficiency of our approach under different task sizes, transmission rates, and privacy parameters. Theoretical analysis and experimental results demonstrate that our approach provides a feasible and practical solution for protecting drone privacy in real-world MEC applications, enabling system designers to balance privacy and performance according to specific mission requirements.

However, our work has some limitations. Our current system model assumes full (binary) task offloading, whereas partial offloading is often more efficient and more widely applicable. Furthermore, combining other privacy-preserving techniques with our offloading approach to achieve better privacy–utility trade-offs is another promising direction.

Author Contributions

Conceptualization, D.W.; methodology, C.G.; software, C.G.; validation, D.W. and C.G.; formal analysis, C.G.; investigation, D.W.; writing—original draft preparation, C.G.; writing—review and editing, D.W.; visualization, K.L. and W.L.; supervision, D.W.; funding acquisition, D.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (No. 62302363), the Major Research Plan of the National Natural Science Foundation of China (Grant No. 92267204), the Fundamental Research Funds for the Central Universities (Project No. ZYTS25072), and the Open Foundation of Key Laboratory of Cyberspace Security, Ministry of Education of China (No. KLCS20240404).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhou, Y.; Liu, L.; Wang, L.; Hui, N.; Cui, X.; Wu, J.; Peng, Y.; Qi, Y.; Xing, C. Service-aware 6G: An intelligent and open network based on the convergence of communication, computing and caching. Digit. Commun. Netw. 2020, 6, 253–260.

- Javanmardi, S.; Nascita, A.; Pescapè, A.; Merlino, G.; Scarpa, M. An integration perspective of security, privacy, and resource efficiency in IoT-Fog networks: A comprehensive survey. Comput. Netw. 2025, 270, 111470.

- Mao, Y.; You, C.; Zhang, J.; Huang, K.; Letaief, K.B. Mobile Edge Computing: Survey and Research Outlook. arXiv 2017, arXiv:1701.01090.

- Cai, Q.; Zhou, Y.; Liu, L.; Qi, Y.; Pan, Z.; Zhang, H. Collaboration of Heterogeneous Edge Computing Paradigms: How to Fill the Gap Between Theory and Practice. IEEE Wirel. Commun. 2024, 31, 110–117.

- Zhao, M.; Zhang, R.; He, Z.; Li, K. Joint Optimization of Trajectory, Offloading, Caching, and Migration for UAV-Assisted MEC. IEEE Trans. Mob. Comput. 2025, 24, 1981–1998.

- Waqar, N.; Hassan, S.A.; Mahmood, A.; Dev, K.; Do, D.; Gidlund, M. Computation Offloading and Resource Allocation in MEC-Enabled Integrated Aerial-Terrestrial Vehicular Networks: A Reinforcement Learning Approach. IEEE Trans. Intell. Transp. Syst. 2022, 23, 21478–21491.

- Saeedi, I.D.I.; Al-Qurabat, A.K.M. A comprehensive review of computation offloading in UAV-assisted mobile edge computing for IoT applications. Phys. Commun. 2025, 102810.

- Lan, W.; Chen, K.; Li, Y.; Cao, J.; Sahni, Y. Deep Reinforcement Learning for Privacy-Preserving Task Offloading in Integrated Satellite-Terrestrial Networks. IEEE Trans. Mob. Comput. 2024, 23, 9678–9691.

- Li, J.; Ou, W.; Ouyang, B.; Ye, S.; Zeng, L.; Chen, L.; Chen, X. Revisiting Location Privacy in MEC-Enabled Computation Offloading. IEEE Trans. Inf. Forensics Secur. 2025, 20, 4396–4407.

- Wei, D.; Xi, N.; Ma, X.; Shojafar, M.; Kumari, S.; Ma, J. Personalized Privacy-Aware Task Offloading for Edge-Cloud-Assisted Industrial Internet of Things in Automated Manufacturing. IEEE Trans. Ind. Inform. 2022, 18, 7935–7945.

- Ding, Y.; Li, K.; Liu, C.; Li, K. A Potential Game Theoretic Approach to Computation Offloading Strategy Optimization in End-Edge-Cloud Computing. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 1503–1519.

- He, Z.; Xu, Y.; Zhao, M.; Zhou, W.; Li, K. Priority-Based Offloading Optimization in Cloud-Edge Collaborative Computing. IEEE Trans. Serv. Comput. 2023, 16, 3906–3919.

- Zhao, W.; Shi, K.; Liu, Z.; Wu, X.; Zheng, X.; Wei, L.; Kato, N. DRL Connects Lyapunov in Delay and Stability Optimization for Offloading Proactive Sensing Tasks of RSUs. IEEE Trans. Mob. Comput. 2024, 23, 7969–7982.

- Zhang, Y.; Kuang, Z.; Feng, Y.; Hou, F. Task Offloading and Trajectory Optimization for Secure Communications in Dynamic User Multi-UAV MEC Systems. IEEE Trans. Mob. Comput. 2024, 23, 14427–14440.

- Liu, Z.; Li, Z.; Gong, Y.; Wu, Y. RIS-Aided Cooperative Mobile Edge Computing: Computation Efficiency Maximization via Joint Uplink and Downlink Resource Allocation. IEEE Trans. Wirel. Commun. 2024, 23, 11535–11550.

- Yang, Y.; Gong, Y.; Wu, Y. Intelligent-Reflecting-Surface-Aided Mobile Edge Computing With Binary Offloading: Energy Minimization for IoT Devices. IEEE Internet Things J. 2022, 9, 12973–12983.

- Li, K.; Wang, X.; He, Q.; Yang, M.; Huang, M.; Dustdar, S. Task Computation Offloading for Multi-Access Edge Computing via Attention Communication Deep Reinforcement Learning. IEEE Trans. Serv. Comput. 2023, 16, 2985–2999.

- Huang, L.; Bi, S.; Zhang, Y.A. Deep Reinforcement Learning for Online Computation Offloading in Wireless Powered Mobile-Edge Computing Networks. IEEE Trans. Mob. Comput. 2020, 19, 2581–2593.

- Lei, L.; Xu, H.; Xiong, X.; Zheng, K.; Xiang, W.; Wang, X. Multiuser Resource Control With Deep Reinforcement Learning in IoT Edge Computing. IEEE Internet Things J. 2019, 6, 10119–10133.

- Wang, J.; Hu, J.; Min, G.; Zomaya, A.Y.; Georgalas, N. Fast Adaptive Task Offloading in Edge Computing Based on Meta Reinforcement Learning. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 242–253.

- Feng, T.; Wang, B.; Zhao, H.; Zhang, T.; Tang, J.; Wang, Z. Task Distribution Offloading Algorithm Based on DQN for Sustainable Vehicle Edge Network. In Proceedings of the 2021 IEEE 7th International Conference on Network Softwarization (NetSoft), Tokyo, Japan, 28 June–2 July 2021; IEEE: New York, NY, USA, 2021; pp. 430–436.

- Liu, C.; Tang, F.; Hu, Y.; Li, K.; Tang, Z.; Li, K. Distributed Task Migration Optimization in MEC by Extending Multi-Agent Deep Reinforcement Learning Approach. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 1603–1614.

- Wei, D.; Zhang, J.; Shojafar, M.; Kumari, S.; Xi, N.; Ma, J. Privacy-Aware Multiagent Deep Reinforcement Learning for Task Offloading in VANET. IEEE Trans. Intell. Transp. Syst. 2023, 24, 13108–13122.

- Wang, Z.; Sun, Y.; Liu, D.; Hu, J.; Pang, X.; Hu, Y.; Ren, K. Location Privacy-Aware Task Offloading in Mobile Edge Computing. IEEE Trans. Mob. Comput. 2024, 23, 2269–2283.

- Gan, S.; Siew, M.; Xu, C.; Quek, T.Q.S. Differentially Private Deep Q-Learning for Pattern Privacy Preservation in MEC Offloading. In Proceedings of the ICC 2023—IEEE International Conference on Communications, Rome, Italy, 28 May–1 June 2023; IEEE: New York, NY, USA, 2023; pp. 3578–3583.

- Cui, X.; Tian, Y.; Zhang, X.; Lin, H.; Li, M. A Lightweight Certificateless Edge-Assisted Encryption for IoT Devices: Enhancing Security and Performance. IEEE Internet Things J. 2025, 12, 2930–2942.

- Li, Z.; Hu, H.; Huang, B.; Chen, J.; Li, C.; Hu, H.; Huang, L. Security and performance-aware resource allocation for enterprise multimedia in mobile edge computing. Multimed. Tools Appl. 2020, 79, 10751–10780.

- Xu, X.; He, C.; Xu, Z.; Qi, L.; Wan, S.; Bhuiyan, M.Z.A. Joint Optimization of Offloading Utility and Privacy for Edge Computing Enabled IoT. IEEE Internet Things J. 2020, 7, 2622–2629.

- Min, M.; Xiao, L.; Chen, Y.; Cheng, P.; Wu, D.; Zhuang, W. Learning-Based Computation Offloading for IoT Devices With Energy Harvesting. IEEE Trans. Veh. Technol. 2019, 68, 1930–1941.

- Ji, T.; Luo, C.; Yu, L.; Wang, Q.; Chen, S.; Thapa, A.; Li, P. Energy-Efficient Computation Offloading in Mobile Edge Computing Systems With Uncertainties. IEEE Trans. Wirel. Commun. 2022, 21, 5717–5729.

- Wang, K.; Chen, C.; Tie, Z.; Shojafar, M.; Kumar, S.; Kumari, S. Forward Privacy Preservation in IoT-Enabled Healthcare Systems. IEEE Trans. Ind. Inform. 2022, 18, 1991–1999.

- Nguyen, D.C.; Pathirana, P.N.; Ding, M.; Seneviratne, A. Privacy-Preserved Task Offloading in Mobile Blockchain with Deep Reinforcement Learning. IEEE Trans. Netw. Serv. Manag. 2020, 17, 2536–2549.

- Pang, X.; Wang, Z.; Li, J.; Zhou, R.; Ren, J.; Li, Z. Towards Online Privacy-preserving Computation Offloading in Mobile Edge Computing. In Proceedings of the IEEE INFOCOM 2022-IEEE Conference on Computer Communications, Virtual, 2–5 May 2022; IEEE: New York, NY, USA, 2022; pp. 1179–1188.

- You, F.; Yuan, X.; Ni, W.; Jamalipour, A. Learning-Based Privacy-Preserving Computation Offloading in Multi-Access Edge Computing. In Proceedings of the GLOBECOM 2023-2023 IEEE Global Communications Conference, Kuala Lumpur, Malaysia, 4–8 December 2023; IEEE: New York, NY, USA, 2023; pp. 922–927.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

- Garcelon, E.; Perchet, V.; Pike-Burke, C.; Pirotta, M. Local Differential Privacy for Regret Minimization in Reinforcement Learning. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021), Online, 6–14 December 2021; pp. 10561–10573.

- Bubeck, S.; Cesa-Bianchi, N. Regret Analysis of Stochastic and Nonstochastic Multi-armed Bandit Problems. Found. Trends Mach. Learn. 2012, 5, 1–122.

- Tao, M.; Li, X.; Feng, J.; Lan, D.; Du, J.; Wu, C. Multi-Agent Cooperation for Computing Power Scheduling in UAVs Empowered Aerial Computing Systems. IEEE J. Sel. Areas Commun. 2024, 42, 3521–3535.

- Ren, Y.; Sun, Y.; Peng, M. Deep Reinforcement Learning Based Computation Offloading in Fog Enabled Industrial Internet of Things. IEEE Trans. Ind. Inform. 2021, 17, 4978–4987.

- Mao, Y.; Zhang, J.; Letaief, K.B. Dynamic Computation Offloading for Mobile-Edge Computing With Energy Harvesting Devices. IEEE J. Sel. Areas Commun. 2016, 34, 3590–3605.

- Wang, W.; Ji, H.; He, P.; Zhang, Y.; Wu, Y.; Zhang, Y. WAVEN: WebAssembly Memory Virtualization for Enclaves. In Proceedings of the 32nd Annual Network and Distributed System Security Symposium, NDSS 2025, San Diego, CA, USA, 24–28 February 2025.

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).