TMRGBT-D2D: A Temporal Misaligned RGB-Thermal Dataset for Drone-to-Drone Target Detection

Abstract

1. Introduction

2. Related Works

2.1. RGBT Object Detection

2.1.1. Pre-Fusion

2.1.2. Mid-Fusion

2.1.3. Post-Fusion

2.2. Drone-to-Drone Detection

2.3. Drone-to-Drone Datasets

3. The Proposed Dataset

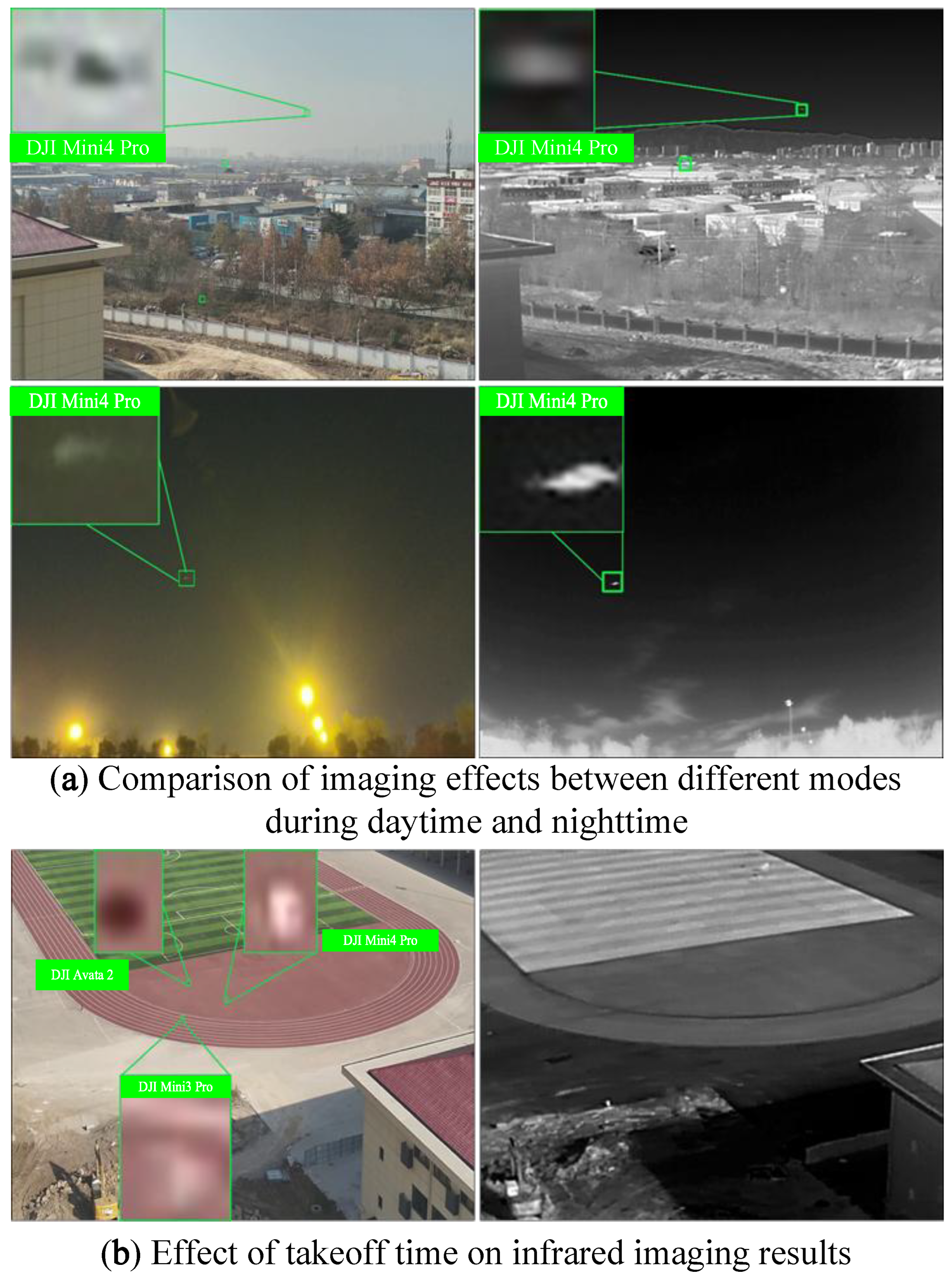

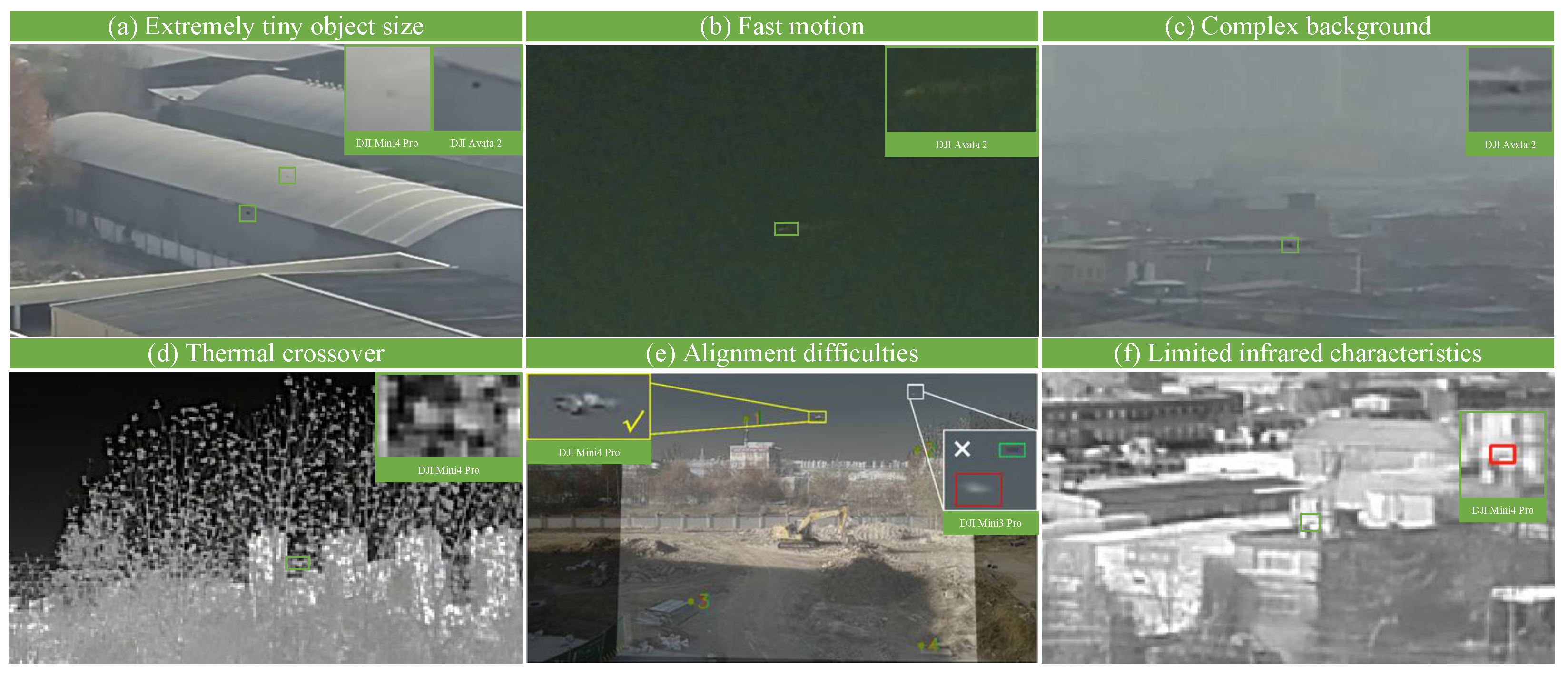

3.1. Data Collection and Annotations

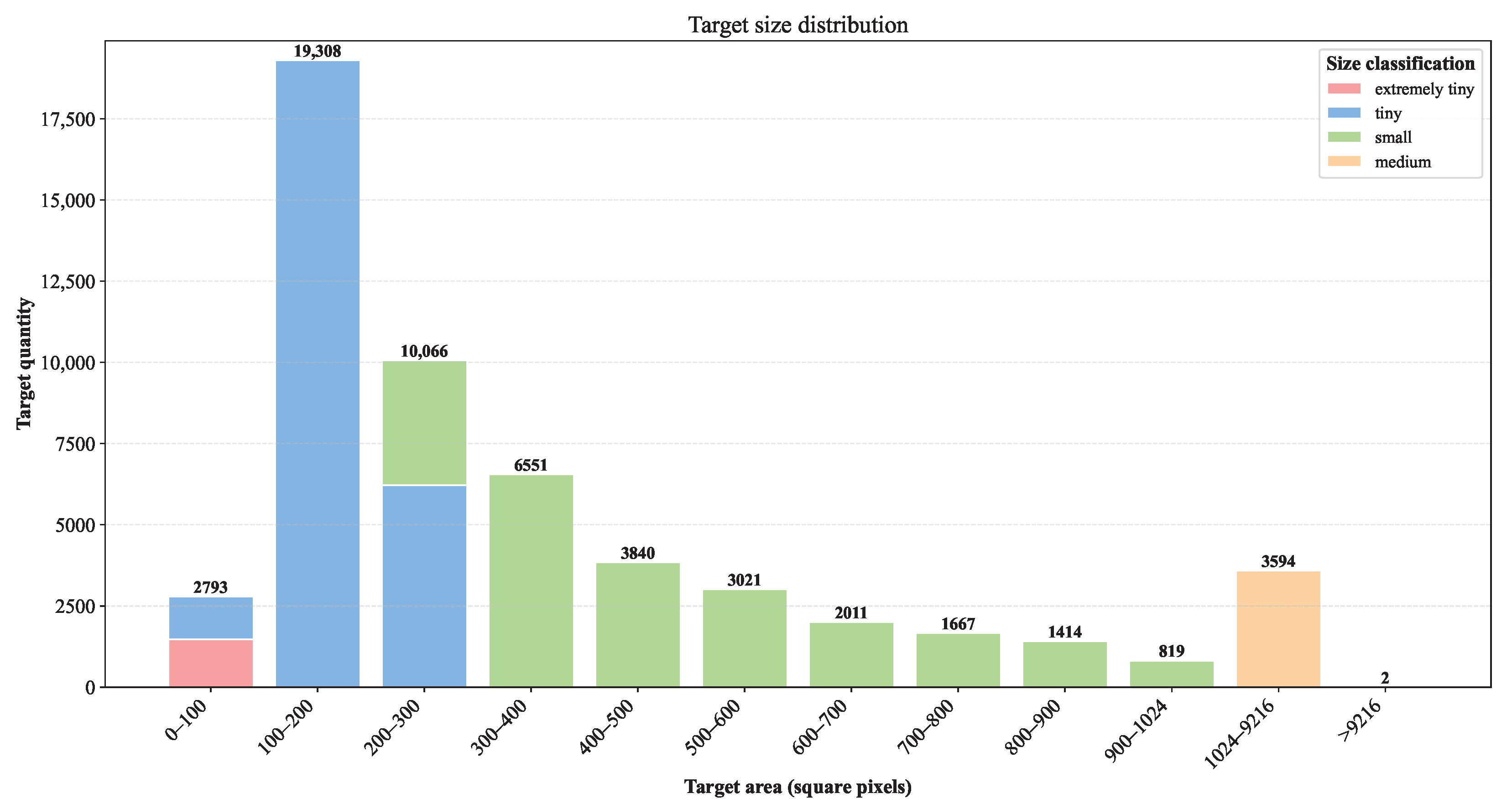

3.2. Dataset Properties and Statistics

- Extremely small targets (1–64 px²): 1469.

- Tiny targets (64–256 px²): 26,854.

- Small targets (256–1024 px²): 23,167.

- Medium targets (1024–9216 px²): 3594.

- Large targets (≥9216 px²): 2.

- Extremely small targets (1–64 px²): 1234.

- Tiny targets (64–256 px²): 31,538.

- Small targets (256–1024 px²): 6059.

- Medium targets (1024–9216 px²): 406.
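The size categories above can be reproduced with a simple area test. The sketch below is a hypothetical illustration, not the authors' tooling: it assumes the ranges are bounding-box areas in pixels (width × height) and treats each range as half-open, [lower, upper), because the text does not say which category a box lying exactly on a boundary belongs to. `SIZE_BINS`, `size_category` and `histogram` are illustrative names. The last lines simply total the counts reported in each of the two lists.

```python
from collections import Counter

# Hypothetical size bins: (name, inclusive lower bound, exclusive upper bound),
# with bounds taken from the lists above and assumed to be areas in px².
SIZE_BINS = [
    ("extremely_small", 1, 64),
    ("tiny", 64, 256),
    ("small", 256, 1024),
    ("medium", 1024, 9216),
    ("large", 9216, float("inf")),
]


def size_category(width: float, height: float) -> str:
    """Return the size bucket of a box given its width and height in pixels."""
    area = width * height
    for name, lower, upper in SIZE_BINS:
        if lower <= area < upper:
            return name
    raise ValueError(f"box area {area} is below the smallest bin")


def histogram(boxes):
    """Count boxes per size bucket; `boxes` is an iterable of (width, height)."""
    return Counter(size_category(w, h) for w, h in boxes)


# Totals of the two lists above, summed from the reported counts.
first_list = [1469, 26854, 23167, 3594, 2]
second_list = [1234, 31538, 6059, 406]
print(sum(first_list), sum(second_list))  # 55086 39237
```

Under the half-open assumption an 8 × 8 box (64 px²) is counted as tiny rather than extremely small; a closed upper bound would flip that case, so the boundary convention should be checked against the released annotations.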

4. Experiments

4.1. Experiments Setup

4.2. Baseline Results

4.3. The Impact of Temporal Information

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Pan, L.; Song, C.; Gan, X.; Xu, K.; Xie, Y. Military Image Captioning for Low-Altitude UAV or UGV Perspectives. Drones 2024, 8, 421.

- Ahirwar, S.; Swarnkar, R.; Bhukya, S.; Namwade, G. Application of Drone in Agriculture. Int. J. Curr. Microbiol. Appl. Sci. 2019, 8, 2500–2505.

- Raivi, A.M.; Huda, S.M.A.; Alam, M.M.; Moh, S. Drone Routing for Drone-Based Delivery Systems: A Review of Trajectory Planning, Charging, and Security. Sensors 2023, 23, 1463.

- Hassanalian, M.; Abdelkefi, A. Classifications, applications, and design challenges of drones: A review. Prog. Aerosp. Sci. 2017, 91, 99–131.

- Yuan, S.; Yang, Y.; Nguyen, T.H.; Nguyen, T.M.; Yang, J.; Liu, F.; Li, J.; Wang, H.; Xie, L. MMAUD: A Comprehensive Multi-Modal Anti-UAV Dataset for Modern Miniature Drone Threats. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 2745–2751.

- Yang, B.; Matson, E.T.; Smith, A.H.; Dietz, J.E.; Gallagher, J.C. UAV Detection System with Multiple Acoustic Nodes Using Machine Learning Models. In Proceedings of the 2019 Third IEEE International Conference on Robotic Computing (IRC), Naples, Italy, 25–27 February 2019; pp. 493–498.

- Xiao, Y.; Zhang, X. Micro-UAV Detection and Identification Based on Radio Frequency Signature. In Proceedings of the 2019 6th International Conference on Systems and Informatics (ICSAI), Shanghai, China, 2–4 November 2019; pp. 1056–1062.

- Wang, B.; Li, Q.; Mao, Q.; Wang, J.; Chen, C.L.P.; Shangguan, A.; Zhang, H. A Survey on Vision-Based Anti Unmanned Aerial Vehicles Methods. Drones 2024, 8, 518.

- Wang, Q.; Tu, Z.; Li, C.; Tang, J. High performance RGB-Thermal Video Object Detection via hybrid fusion with progressive interaction and temporal-modal difference. Inf. Fusion 2025, 114, 102665.

- Tang, L.; Deng, Y.; Ma, Y.; Huang, J.; Ma, J. SuperFusion: A Versatile Image Registration and Fusion Network with Semantic Awareness. IEEE/CAA J. Autom. Sin. 2022, 9, 2121–2137.

- Wang, D.; Liu, J.; Fan, X.; Liu, R. Unsupervised Misaligned Infrared and Visible Image Fusion via Cross-Modality Image Generation and Registration. In Proceedings of the IJCAI, Vienna, Austria, 23–29 July 2022; pp. 3508–3515.

- Xie, H.; Zhang, Y.; Qiu, J.; Zhai, X.; Liu, X.; Yang, Y.; Zhao, S.; Luo, Y.; Zhong, J. Semantics lead all: Towards unified image registration and fusion from a semantic perspective. Inf. Fusion 2023, 98, 101835.

- Li, H.; Liu, J.; Zhang, Y.; Liu, Y. A Deep Learning Framework for Infrared and Visible Image Fusion Without Strict Registration. Int. J. Comput. Vis. 2023, 132, 1625–1644.

- Zhang, L.; Zhu, X.; Chen, X.; Yang, X.; Lei, Z.; Liu, Z. Weakly Aligned Cross-Modal Learning for Multispectral Pedestrian Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5126–5136.

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; So Kweon, I. Multispectral Pedestrian Detection: Benchmark Dataset and Baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Wanchaitanawong, N.; Tanaka, M.; Shibata, T.; Okutomi, M. Multi-Modal Pedestrian Detection with Large Misalignment Based on Modal-Wise Regression and Multi-Modal IoU. In Proceedings of the 2021 17th International Conference on Machine Vision and Applications (MVA), Aichi, Japan, 25–27 July 2021; pp. 1–6.

- Tu, Z.; Li, Z.; Li, C.; Tang, J. Weakly Alignment-Free RGBT Salient Object Detection With Deep Correlation Network. IEEE Trans. Image Process. 2022, 31, 3752–3764.

- He, M.; Wu, Q.; Ngan, K.N.; Jiang, F.; Meng, F.; Xu, L. Misaligned RGB-Infrared Object Detection via Adaptive Dual-Discrepancy Calibration. Remote Sens. 2023, 15, 4887.

- Wang, K.; Lin, D.; Li, C.; Tu, Z.; Luo, B. Alignment-Free RGBT Salient Object Detection: Semantics-guided Asymmetric Correlation Network and A Unified Benchmark. IEEE Trans. Multimed. 2024, 26, 10692–10707.

- Song, K.; Xue, X.; Wen, H.; Ji, Y.; Yan, Y.; Meng, Q. Misaligned Visible-Thermal Object Detection: A Drone-Based Benchmark and Baseline. IEEE Trans. Intell. Veh. 2024, 9, 7449–7460.

- Shen, J.; Chen, Y.; Liu, Y.; Zuo, X.; Fan, H.; Yang, W. ICAFusion: Iterative cross-attention guided feature fusion for multispectral object detection. Pattern Recognit. 2024, 145, 109913.

- Yuan, M.; Wei, X. C2Former: Calibrated and Complementary Transformer for RGB-Infrared Object Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5403712.

- Neubeck, A.; Van Gool, L. Efficient Non-Maximum Suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 850–855.

- Chen, Y.T.; Shi, J.; Ye, Z.; Mertz, C.; Ramanan, D.; Kong, S. Multimodal object detection via probabilistic ensembling. In Proceedings of the Computer Vision—ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part IX. Springer: Cham, Switzerland, 2022; pp. 139–158.

- Li, J.; Ye, D.H.; Chung, T.; Kolsch, M.; Wachs, J.; Bouman, C. Multi-target detection and tracking from a single camera in Unmanned Aerial Vehicles (UAVs). In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 4992–4997.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Ashraf, M.W.; Sultani, W.; Shah, M. Dogfight: Detecting Drones from Drones Videos. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7063–7072.

- Zheng, Y.; Chen, Z.; Lv, D.; Li, Z.; Lan, Z.; Zhao, S. Air-to-Air Visual Detection of Micro-UAVs: An Experimental Evaluation of Deep Learning. IEEE Robot. Autom. Lett. 2021, 6, 1020–1027.

- Guo, H.; Zheng, Y.; Zhang, Y.; Gao, Z.; Zhao, S. Global-Local MAV Detection Under Challenging Conditions Based on Appearance and Motion. IEEE Trans. Intell. Transp. Syst. 2024, 25, 12005–12017.

- Lyu, Y.; Liu, Z.; Li, H.; Guo, D.; Fu, Y. A Real-time and Lightweight Method for Tiny Airborne Object Detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 3016–3025.

- Zhou, X.; Yang, G.; Chen, Y.; Li, L.; Chen, B.M. VDTNet: A High-Performance Visual Network for Detecting and Tracking of Intruding Drones. IEEE Trans. Intell. Transp. Syst. 2024, 25, 9828–9839.

- Hao, H.; Peng, Y.; Ye, Z.; Han, B.; Zhang, X.; Tang, W.; Kang, W.; Li, Q. A High Performance Air-to-Air Unmanned Aerial Vehicle Target Detection Model. Drones 2025, 9, 154.

- Huang, M.; Mi, W.; Wang, Y. EDGS-YOLOv8: An Improved YOLOv8 Lightweight UAV Detection Model. Drones 2024, 8, 337.

- Cheng, Q.; Wang, Y.; He, W.; Bai, Y. Lightweight air-to-air unmanned aerial vehicle target detection model. Sci. Rep. 2024, 14, 2609.

- Zuo, G.; Zhou, K.; Wang, Q. UAV-to-UAV Small Target Detection Method Based on Deep Learning in Complex Scenes. IEEE Sens. J. 2025, 25, 3806–3820.

- Rozantsev, A.; Lepetit, V.; Fua, P. Detecting Flying Objects Using a Single Moving Camera. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 879–892.

- Chu, Z.; Song, T.; Jin, R.; Jiang, T. An Experimental Evaluation Based on New Air-to-Air Multi-UAV Tracking Dataset. In Proceedings of the 2023 IEEE International Conference on Unmanned Systems (ICUS), Hefei, China, 13–15 October 2023; pp. 671–676.

- Wang, Y.; Huang, Z.; Laganière, R.; Zhang, H.; Ding, L. A UAV to UAV tracking benchmark. Knowl.-Based Syst. 2023, 261, 110197.

- Tu, Z.; Wang, Q.; Wang, H.; Wang, K.; Li, C. Erasure-based Interaction Network for RGBT Video Object Detection and A Unified Benchmark. arXiv 2023, arXiv:2308.01630.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning what you want to learn using programmable gradient information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2025; pp. 1–21.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 16965–16974.

- Feng, Y.; Huang, J.; Du, S.; Ying, S.; Yong, J.H.; Li, Y.; Ding, G.; Ji, R.; Gao, Y. Hyper-YOLO: When Visual Object Detection Meets Hypergraph Computation. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 2388–2401.

- Zhang, J.; Lei, J.; Xie, W.; Fang, Z.; Li, Y.; Du, Q. SuperYOLO: Super Resolution Assisted Object Detection in Multimodal Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605415.

- Ye, Z.; Peng, Y.; Liu, W.; Yin, W.; Hao, H.; Han, B.; Zhu, Y.; Xiao, D. An Efficient Adjacent Frame Fusion Mechanism for Airborne Visual Object Detection. Drones 2024, 8, 144.

| Name | Data Scale | Target Type | Data Type | Modality | Characteristics |
|---|---|---|---|---|---|
| FL | 14 videos, 38,948 images | Multi-object | Video | Single-channel grayscale | Lacks color information, which limits the use of color features; well suited to fast target detection/tracking |
| NPS | 50 videos, 70,250 images | Multi-object | Video | Visible light | Irregular motion, small size, occlusion, dynamic background |
| Det-Fly | 13,000 images | Single-object | Image | Visible light | Diverse perspectives; a single drone type limits generalization |
| AOT | 164 h video, 5.9 M images | Multi-object | Video | Single-channel grayscale | Largest aviation vision dataset; contains depth information for depth estimation |
| ARD-MAV | 60 videos, 106k+ images | Multi-object | Video | Visible light | Complex scenarios: background clutter, occlusion, small targets |
| UAVfly | 10,281 images | Single-object | Image | Visible light | Diverse geography; single drone type; large targets |
| MOT-FLY | 16 videos, 11k+ images | Multi-object | Video | Visible light | Focus on civil multi-UAV tracking; extensive rural and urban coverage |
| U2U | 54 videos, 25k images | Single-object | Video | Visible light | Fixed-wing UAVs; includes level flight, steep dives, and maneuvers |

| Model | Visible P (%) | Visible R (%) | Visible AP50 (%) | Visible mAP50–95 (%) | Infrared P (%) | Infrared R (%) | Infrared AP50 (%) | Infrared mAP50–95 (%) | Visible+Infrared P (%) | Visible+Infrared R (%) | Visible+Infrared AP50 (%) | Visible+Infrared mAP50–95 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5 | 80.3 | 43.0 | 50.5 | 30 | 95.5 | 80.8 | 88.2 | 60.9 | 89.8 | 57.4 | 65.9 | 41.5 | 2.2 | 5.8 |
| YOLOv8 | 82.0 | 43.0 | 51.9 | 28.5 | 94.0 | 82.3 | 89.1 | 61.7 | 90.6 | 57.9 | 66.7 | 42.4 | 2.7 | 6.8 |
| YOLOv9 | 83.0 | 42.8 | 50.7 | 27.2 | 95.7 | 80.6 | 87.9 | 60.1 | 88.5 | 57.8 | 65.5 | 40 | 1.7 | 6.4 |
| YOLOv11 | 82.4 | 43.7 | 51.5 | 28.1 | 94.5 | 82.3 | 88.7 | 60.8 | 90.1 | 58.0 | 66.6 | 41.9 | 2.6 | 6.3 |
| Hyper-YOLO | 80.8 | 43.6 | 52.0 | 29.1 | 96.5 | 82.0 | 89.5 | 62.1 | 88.1 | 58.8 | 67.2 | 43.0 | 3.6 | 9.5 |
| RT-DETR | 85.0 | 52.3 | 60.1 | 30.2 | 97.6 | 86.0 | 90.3 | 68.9 | 92.3 | 65.8 | 73.5 | 48.7 | 42.8 | 130.5 |
| SuperYOLO | – | – | – | – | – | – | – | – | 95.9 | 72.0 | 76.3 | 40.3 | 7.7 | 14.0 |
| ICAFusion | – | – | – | – | – | – | – | – | 96.4 | 80.8 | 85.2 | 52.5 | 120.2 | 55.1 |
| ADCNet | – | – | – | – | – | – | – | – | 96.1 | 70.6 | 73.5 | 40.6 | 100.2 | 59.8 |
| ProbEn3 | – | – | – | – | – | – | – | – | – | – | 82.6 | 45.3 | 180.4 | 70.9 |
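The table above reports both AP50 and mAP 50–95. A minimal sketch, assuming COCO-style evaluation (ten IoU thresholds from 0.50 to 0.95 in steps of 0.05), shows why the two metrics can diverge sharply on a dataset dominated by tiny targets: the same 2-pixel offset costs far more IoU on a 10 × 10 box than on a 100 × 100 box. The helper names (`iou`, `thresholds_passed`, `map_50_95`) are hypothetical, and this is not the evaluation code used to produce the table.

```python
# Sketch of AP50 vs. mAP50-95 sensitivity, assuming COCO-style IoU thresholds.
# Boxes are (x1, y1, x2, y2) in pixels.


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# The ten thresholds 0.50, 0.55, ..., 0.95, rounded to avoid float drift.
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred, gt):
    """How many IoU thresholds a prediction would clear as a true positive."""
    v = iou(pred, gt)
    return sum(v >= t for t in THRESHOLDS)


def map_50_95(ap_per_threshold):
    """mAP50-95 as the plain mean of the per-threshold AP values."""
    if len(ap_per_threshold) != len(THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold")
    return sum(ap_per_threshold) / len(ap_per_threshold)


# The same 2-pixel horizontal offset on a tiny and on a large target.
tiny_gt, tiny_pred = (0, 0, 10, 10), (2, 0, 12, 10)
big_gt, big_pred = (0, 0, 100, 100), (2, 0, 102, 100)
print(round(iou(tiny_pred, tiny_gt), 3), thresholds_passed(tiny_pred, tiny_gt))  # 0.667 4
print(round(iou(big_pred, big_gt), 3), thresholds_passed(big_pred, big_gt))  # 0.961 10
```

The offset tiny box still counts as a hit at IoU 0.50, so it helps AP50, but it fails six of the ten thresholds that mAP 50–95 averages over, whereas the large box clears all ten.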

| Model | Visible P (%) | Visible R (%) | Visible AP50 (%) | Visible mAP50–95 (%) | Infrared P (%) | Infrared R (%) | Infrared AP50 (%) | Infrared mAP50–95 (%) | Visible+Infrared P (%) | Visible+Infrared R (%) | Visible+Infrared AP50 (%) | Visible+Infrared mAP50–95 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5 | 80.3 | 43.0 | 50.5 | 30 | 95.5 | 80.8 | 88.2 | 60.9 | 89.8 | 57.4 | 65.9 | 41.5 | 2.2 | 5.8 |
| YOLOv5-EAFF | 82.6 | 43.4 | 52.1 | 31 | 95.9 | 81.8 | 89.6 | 61.7 | 90.2 | 58.5 | 67.2 | 42.6 | 2.3 | 6.6 |
| YOLOv8 | 82.0 | 43.0 | 51.9 | 28.5 | 94.0 | 82.3 | 89.1 | 61.7 | 90.6 | 57.9 | 66.7 | 42.4 | 2.7 | 6.8 |
| YOLOv8-EAFF | 83.4 | 43.6 | 52.7 | 29.1 | 96.1 | 84.4 | 90.3 | 63.1 | 91.2 | 58.9 | 68.9 | 43.6 | 2.7 | 7.6 |
| YOLOv11 | 82.4 | 43.7 | 51.5 | 28.1 | 94.5 | 82.3 | 88.7 | 60.8 | 90.1 | 58.0 | 66.6 | 41.9 | 2.6 | 6.3 |
| YOLOv11-EAFF | 83.7 | 44.4 | 53.3 | 29.2 | 96.3 | 84.9 | 90.5 | 63.5 | 91.7 | 59.0 | 69.1 | 43.8 | 2.6 | 7.2 |
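The effect of the -EAFF variants (which, judging by the name and Section 4.3, appear to add the adjacent-frame fusion mechanism of Ye et al., Drones 2024) can be read off directly from the Visible+Infrared columns of the table above. The sketch below copies those values and computes EAFF minus baseline per column; `FUSION`, `COLUMNS` and `eaff_gain` are hypothetical names, and the numbers are transcribed from the table, not recomputed from any model.

```python
# Visible+Infrared results transcribed from the table above.
# Columns: P, R, AP50, mAP50-95 (all %), Params (M), FLOPs (G).
FUSION = {
    "YOLOv5": (89.8, 57.4, 65.9, 41.5, 2.2, 5.8),
    "YOLOv5-EAFF": (90.2, 58.5, 67.2, 42.6, 2.3, 6.6),
    "YOLOv8": (90.6, 57.9, 66.7, 42.4, 2.7, 6.8),
    "YOLOv8-EAFF": (91.2, 58.9, 68.9, 43.6, 2.7, 7.6),
    "YOLOv11": (90.1, 58.0, 66.6, 41.9, 2.6, 6.3),
    "YOLOv11-EAFF": (91.7, 59.0, 69.1, 43.8, 2.6, 7.2),
}
COLUMNS = ("P", "R", "AP50", "mAP50-95", "Params", "FLOPs")


def eaff_gain(base):
    """Per-column difference (EAFF variant minus baseline), rounded to 0.1."""
    baseline, eaff = FUSION[base], FUSION[base + "-EAFF"]
    return {col: round(e - b, 1) for col, e, b in zip(COLUMNS, eaff, baseline)}


for base in ("YOLOv5", "YOLOv8", "YOLOv11"):
    print(base, eaff_gain(base))
```

On these numbers, EAFF raises fused AP50 by 1.3 to 2.5 points and mAP 50–95 by 1.1 to 1.9 points, at a cost of at most 0.1 M parameters and 0.8 to 0.9 GFLOPs.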

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hao, H.; Peng, Y.; Ye, Z.; Han, B.; Tang, W.; Kang, W.; Zhang, X.; Li, Q.; Liu, W. TMRGBT-D2D: A Temporal Misaligned RGB-Thermal Dataset for Drone-to-Drone Target Detection. Drones 2025, 9, 694. https://doi.org/10.3390/drones9100694
