Abstract

Trajectory planning for logistics UAVs in complex environments faces a key challenge: balancing global search breadth with fine constraint accuracy. Traditional algorithms struggle to simultaneously manage large-scale exploration and complex constraints, and lack sufficient modeling capabilities for multi-UAV systems, limiting cluster logistics efficiency. To address these issues, we propose a GWOP algorithm based on dual-layer fusion of GWO and GRPO and incorporate a graph attention network (GAT). First, CEC2017 benchmark functions evaluate GWOP convergence accuracy and balanced exploration in multi-peak, high-dimensional environments. A hierarchical collaborative architecture, “GWO global coarse-grained search + GRPO local fine-tuning”, is used to overcome the limitations of single-algorithm frameworks. The GAT model constructs a dynamic “environment–UAV–task” association network, enabling environmental feature quantification and multi-constraint adaptation. A multi-factor objective function and constraints are integrated with multi-task cascading decoupling optimization to form a closed-loop collaborative optimization framework. Experimental results show that in single UAV scenarios, GWOP reduces flight cost (FV) by over 15.85% on average. In multi-UAV collaborative scenarios, average path length (APL), optimal path length (OPL), and FV are reduced by 4.08%, 14.08%, and 24.73%, respectively. In conclusion, the proposed method outperforms traditional approaches in path length, obstacle avoidance, and trajectory smoothness, offering a more efficient planning solution for smart logistics.

1. Introduction

With the rapid development of e-commerce retail, higher demands have been placed on express delivery, driving the evolution of smart logistics from “automated warehousing” to “full-chain drone logistics.” Drones have experienced significant growth due to their advantages in flexible low-altitude deployment and strong coverage in remote areas. The market is projected to grow at an annual rate of 55%, expanding from USD 544 million in 2022 to USD 18.311 billion by 2030 [1]. Application scenarios include urban distributed warehouse departures, time-windowed multi-point deliveries, and agricultural supply transportation in complex rural terrains. However, the large-scale adoption of logistics drones heavily depends on adaptive trajectory planning that balances delivery cost and safety. Compared to scenarios such as infrastructure inspection [2], agricultural pest control [3], and disaster search and rescue [4], logistics drone applications impose stricter constraints, necessitating further optimization of traditional trajectory planning.

The core challenge in logistics drone trajectory planning is to find the optimal path from start to destination under multiple constraints. Due to the complexity of logistics environments, classical algorithms [5,6] often fall into local optima. To address this, [7] integrated a triple-improved adaptive neighborhood search into the A* algorithm, introducing a 5-neighborhood search mechanism to enhance planning efficiency. Ref. [8] proposed an improved Dubins-RRT* algorithm to incorporate curvature constraints, enabling adaptation to multiple delivery waypoints. In recent years, meta-heuristic algorithms have become dominant due to their ability to handle complex constraints and high-dimensional spaces.

For example, [2] introduced a cooperative coverage path optimization method based on particle swarm optimization (PSO), achieving parallel optimization of path efficiency, image quality, and computational complexity. Ref. [9] combined GWO with differential evolution (DE) to propose HGWODE, optimizing trajectory length and smoothness. Ref. [10] enhanced adaptability in dynamic environments by introducing roulette selection and Levy flight strategies, improving path length optimization by 82.4%. Ref. [4] proposed a heuristic cross-search and rescue optimization (HC-SAR), enhancing drone conflict avoidance in 3D threat environments through real-time correction and B-spline interpolation. Ref. [11] integrated reinforcement learning (RL) with the artificial bee colony algorithm (ABC), dynamically adjusting search dimensions to improve path accuracy.

In multi-drone collaboration, hybrid and learning-based frameworks have gained attention. Ref. [12] proposed a hybrid algorithm combining improved Golden Jackal Optimization (MGJO) and Dynamic Window Approach (DWA), reducing local optima occurrences in large-scale scenarios from six to two. Ref. [13] introduced an improved Marine Predators Algorithm (MMPA), enhancing convergence speed by 40% through adaptive parameters and Cauchy mutation. Ref. [14] proposed a Multi-Agent Cooperative Navigation System (MACNS) based on Graph Neural Networks (GNNs), integrating PPO for dynamic traffic coordination. Ref. [15] proposed a RL-based Multi-Strategy Cuckoo Search (RLMSCS), reducing threat cost by 21.1% through dynamic parameter adjustment. Ref. [16] explored learning-based adaptive information path planning (AIPP), while [14] proposed GPPO, combining GNN and PPO to improve multi-agent coordination. These studies demonstrate the growing effectiveness of learning-enhanced algorithms in UAV logistics.

The Gray Wolf Optimization (GWO) algorithm, introduced in 2014, simulates wolf pack hierarchy to achieve rapid convergence in UAV trajectory planning. Ref. [17] proposed BGWO with a beetle antenna strategy, improving benchmark function accuracy by 35%. Ref. [3] introduced NAS-GWO, integrating Gaussian mutation and spiral functions to reduce cost values by 13.48%. Ref. [18] proposed RGWO with adaptive inertia weights and escape mechanisms to enhance search efficiency.

Despite its strengths, GWO faces limitations in complex urban environments: (1) It relies solely on α/β/δ wolves for global search without a hierarchical framework of “coarse-grained exploration + fine-grained optimization”. (2) It lacks dynamic coupling between “environmental features” and “UAV tasks,” limiting adaptability. (3) It struggles with multi-dimensional modeling in cooperative operations, requiring a shift from “feasible” to “high-quality” solutions.

To address these issues, this study proposes a hierarchical collaboration framework combining the GAT feature input layer with the GRPO decision-making strategy. By integrating GWO with Group Relative Policy Optimization (GRPO) and incorporating GAT-based dynamic association decoupling and multi-task cascading optimization, the framework enhances global search and real-time strategy adaptation. It supports dynamic task priority changes [19] and sudden obstacle avoidance [20] without reinitializing the population, showing promise in smart logistics [21] and collaborative inspection [22]. Compared to hybrid methods like GWO-DE [9] and RL-ACO [23], the GWOP framework contributes the following mechanisms:

- Two-layer Fusion mechanism: A two-layer fusion architecture integrating “GWO global search + GRPO strategy optimization” is proposed to overcome the performance separation between “global search” and “local convergence” inherent in single algorithms. By leveraging the α/β/δ wolf cooperation mechanism in GWO for large-scale space exploration, combined with GRPO’s reward normalization and fine trajectory adjustment via KL constraints, a hierarchical collaboration of “coarse-grained search + fine-grained optimization” is achieved. This provides an effective framework for addressing path planning challenges in complex environments.

- GAT Feature Correlation Modeling: We construct a dynamic “environment–UAV” correlation network. By adaptively allocating attention weights, the network captures in real time the correlation strength between the dynamic distribution of environmental obstacles and logistics tasks. Integrated with GAT-based graph convolution quantization and global adaptive parallel decoupling strategies, it quantifies dynamic environmental features, enabling seamless switching between static map pre-planning and online dynamic obstacle avoidance.

- CEC2017 test model: The improved double-layer fusion GWOP algorithm was evaluated against multiple other algorithms using the CEC2017 test functions, and its adaptability was demonstrated through analyses of convergence accuracy and fitness value. The algorithm’s strengths in balancing exploration across multimodal and high-dimensional problems were examined, leading to the conclusion that the GWOP algorithm exhibits superior performance in complex optimization environments.

- Modeling of target constraint conditions: Considering the actual flight scenarios of logistics drones in a comprehensive manner, a multi-factor objective function and corresponding constraint conditions are established to enable dynamic strategy adjustment under multiple constraints. This approach addresses the limitations of traditional algorithms in terms of “coarse feature extraction and reliance on static models” within complex environments, thereby advancing path planning from “physical environment adaptation” to “task semantic-driven decision-making.”

- Multi-task Cascading Decoupling Optimization: By integrating GAT association decoupling and GRPO gradient optimization, the issues of “task conflict” and “resource imbalance” in multi-logistics UAV collaborative operations are resolved. This achieves collaborative optimization across “path length—obstacle avoidance effect—trajectory smoothness,” integrating architectural diversity and breaking the limitation of “single-index optimization” in traditional algorithms. It promotes the evolution of intelligent logistics route planning from “feasible solutions” to “high-quality solutions.”

The remainder of this paper is organized as follows. Section 2 outlines the working process of the classic Gray Wolf Optimizer (GWO) algorithm. Section 3 introduces the proposed double-layer fusion GWOP algorithm, which integrates GWO and GRPO and further incorporates the GAT attention mechanism and the B-spline trajectory smoothing technique. Section 4 describes the real-world unmanned aerial vehicle (UAV) flight environment and experimental procedures, and evaluates the performance of multiple algorithms on the CEC2017 test functions. Section 5 establishes the mathematical model of the objective function and constraint conditions for the UAV flight environment, on which comparative experiments are conducted between the improved double-layer GWOP algorithm and the classic GWO algorithm. Following the initial results, six additional classical algorithms are introduced to assess the robustness of the proposed method. Section 6 presents a discussion and comparative analysis of the experimental outcomes for the improved double-layer GWOP algorithm and the other classical algorithms. Finally, Section 7 summarizes the study and gives recommendations for future research.

2. GWO Trajectory Planning Algorithm

The Gray Wolf Optimizer (GWO) is a population-based optimization algorithm inspired by the hunting behavior and social hierarchy of gray wolves. The algorithm categorizes individuals into four roles: alpha (α), beta (β), delta (δ), and omega (ω), as illustrated in Figure 1, and models the entire process of hunting, encircling, and attacking prey. It balances global exploration and local exploitation through the iterative adjustment of the coefficients A and C, where the range of A shrinks over the iterations and C is a random coefficient in the range [0, 2].

Figure 1.

GWO algorithm framework.

The process of the Gray Wolf Optimizer (GWO) algorithm is outlined in Algorithm 1. Its key parameters are the population size $N$, the dimensionality $d$, the maximum number of iterations $T_{\max}$, and the search-space bounds $lb$ and $ub$. During the iterative process, a new position is constructed using the control coefficient $a$, which decreases with each iteration, along with the random coefficients A and C. After boundary constraints are applied to the newly generated position, the three leading roles (α, β, δ) are updated based on their fitness values.

| Algorithm 1: GWO Algorithm Workflow |

| Input: population size, dimensionality, maximum number of iterations, search-space bounds |

| Output: global optimal solution; optimal fitness value |

Initialize the position of each gray wolf. With the population size set to $N$, the initial position of each gray wolf is generated as follows.

$$X_i(0) = lb + r_i \odot (ub - lb), \quad i = 1, 2, \ldots, N \tag{1}$$

In the formula, $i$ represents the index of an individual within the gray wolf population. $lb$ and $ub$ denote the lower and upper bound vectors of the search space, respectively, and $\mathbb{R}^d$ signifies the d-dimensional real number space to which they belong. $r_i$ indicates the random vector of the $i$-th gray wolf, whose elements are uniformly distributed in the interval [0, 1]. The symbol $\odot$ denotes element-wise multiplication.

The fitness of each gray wolf in the initial population is calculated to determine the “leadership hierarchy” of the population, that is, the positions of the top three individuals: the best (α), the second-best (β), and the third-best (δ) wolves.

$$X_\alpha(0) = \arg\min_{X_i(0)} f\big(X_i(0)\big), \quad X_\beta(0) = \arg\min_{X_i(0),\ i \neq \alpha} f\big(X_i(0)\big), \quad X_\delta(0) = \arg\min_{X_i(0),\ i \notin \{\alpha, \beta\}} f\big(X_i(0)\big) \tag{2}$$

In the formula, $X_\alpha(0)$, $X_\beta(0)$, and $X_\delta(0)$ represent the position vectors of the α, β, and δ wolves at the initial time $t = 0$, respectively. $X_i(0)$ denotes the position vector of the $i$-th gray wolf at the initial time. $\arg\min$ traverses all gray wolves by index $i$, and $f(X_i(0))$ represents the fitness value of the $i$-th gray wolf. The traversal range for β excludes the gray wolf already selected as α, while the traversal range for δ excludes the gray wolves already selected as α and β. The fitness function $f$ is evaluated to determine the fitness value of each individual’s position. To balance global exploration and local exploitation, a dynamic adjustment model for the control parameter is introduced as follows.

$$a(t) = 2\left(1 - \frac{t}{T_{\max}}\right) \tag{3}$$

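In the formula, $t$ denotes the current number of iterations, $a(t)$ represents the control parameter at iteration t, and $T_{\max}$ indicates the maximum number of iterations of the algorithm. The positions of the α, β, and δ wolves are used to continuously update the positions of the remaining wolves [24] as follows.

$$D_\alpha(t) = \left| C_1 \odot X_\alpha(t-1) - X_i(t-1) \right|, \quad D_\beta(t) = \left| C_2 \odot X_\beta(t-1) - X_i(t-1) \right|, \quad D_\delta(t) = \left| C_3 \odot X_\delta(t-1) - X_i(t-1) \right|, \quad C = 2 r_2 \tag{4}$$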

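In the formula, $D_\alpha(t)$, $D_\beta(t)$, and $D_\delta(t)$ represent the distance vectors between the $i$-th gray wolf and the α, β, and δ wolves at iteration t, respectively. $X_\alpha(t-1)$, $X_\beta(t-1)$, and $X_\delta(t-1)$ denote the position vectors of the α, β, and δ wolves at iteration t − 1. $X_i(t-1)$ represents the position vector of the $i$-th gray wolf at iteration t − 1. The symbol $\odot$ stands for the element-wise product, and $|\cdot|$ denotes the absolute value operation. Since $r_2$ represents random numbers in the range [0, 1], $C = 2 r_2$, drawn independently as $C_1$, $C_2$, and $C_3$, represents random coefficient vectors in the range [0, 2]. The candidate location model [25] is calculated as follows.

$$X_1(t) = X_\alpha(t-1) - A_1 \odot D_\alpha(t), \quad X_2(t) = X_\beta(t-1) - A_2 \odot D_\beta(t), \quad X_3(t) = X_\delta(t-1) - A_3 \odot D_\delta(t), \quad A = 2 a(t)\, r_1 - a(t) \tag{5}$$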

In the formula, the distance vectors, the leader position vectors, and the symbol $\odot$ have the same meanings as in Formula (4) above, and $a(t)$ has the same meaning as in Formula (3) above. $X_1(t)$, $X_2(t)$, and $X_3(t)$ represent the candidate position vectors guided by the leader wolves α, β, and δ, respectively. Since $r_1$ represents a random number with a value range of [0, 1], $A = 2 a(t)\, r_1 - a(t)$, drawn independently as $A_1$, $A_2$, and $A_3$, represents the direction control coefficients with a value range of $[-a(t), a(t)]$. By combining the guidance of the leader wolves with random disturbances, the average of the three candidate positions is used to update the new position of the gray wolf [26,27].

$$X_i(t) = \frac{X_1(t) + X_2(t) + X_3(t)}{3} \tag{6}$$

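After the position of the gray wolf has been updated, boundary constraint processing is performed.

$$X_{i,j}(t) = \min\big(\max\big(X_{i,j}(t),\ lb_j\big),\ ub_j\big), \quad j = 1, 2, \ldots, d \tag{7}$$

In the formula, $j$ represents the dimension index, $lb_j$ and $ub_j$ denote the lower and upper bounds of the $j$-th dimension of the search space, respectively, and $X_{i,j}(t)$ represents the $j$-th dimensional component of the position of the $i$-th gray wolf at iteration t. The best three solutions are reselected by calculating the fitness of the new population.

$$\{X_\alpha(t),\ X_\beta(t),\ X_\delta(t)\} = \underset{i \in \{1, \ldots, N\}}{\operatorname{best3}}\ f\big(X_i(t)\big) \tag{8}$$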

In the formula, $X_\alpha(t)$, $X_\beta(t)$, and $X_\delta(t)$ denote the position vectors of the α, β, and δ wolves in generation t, respectively, and $i$ denotes the individual index of the gray wolves. $\operatorname{best3}$ represents the selection of the three individuals with the best fitness values. $X_i(t)$ represents the position vector of the $i$-th gray wolf at iteration t, and $f(X_i(t))$ represents the fitness value of the $i$-th gray wolf at iteration t. Finally, the global optimal solution is output.

$$X^{*} = X_\alpha(T_{\max}), \qquad f^{*} = f\big(X_\alpha(T_{\max})\big) \tag{9}$$

In the formula, $X^{*}$ denotes the global optimal solution, $f^{*}$ represents the optimal fitness value, $T_{\max}$ signifies the maximum number of iterations, and $X_\alpha(T_{\max})$ indicates the position of the α-wolf at the final iteration.
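The GWO steps described in this section (initialization, leader selection, coefficient update, candidate averaging, and boundary handling) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the implementation used in the experiments: the function name `gwo`, its default parameters, the linear decay of the control parameter, the clamping of out-of-bound components, and the best-so-far bookkeeping are all choices made for this sketch.

```python
import random


def gwo(fitness, lb, ub, n_wolves=20, max_iter=100, seed=0):
    """Minimize `fitness` over the box [lb, ub]; returns (best_position, best_value)."""
    rng = random.Random(seed)
    dim = len(lb)
    # Initialization: X_i = lb + r * (ub - lb), with r ~ U[0, 1] per dimension.
    wolves = [[lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(dim)]
              for _ in range(n_wolves)]
    best = min(wolves, key=fitness)
    for t in range(max_iter):
        # Leadership hierarchy: alpha, beta, delta are the three fittest wolves.
        leaders = sorted(wolves, key=fitness)[:3]
        a = 2.0 * (1.0 - t / max_iter)  # control parameter, decreasing from 2 toward 0
        updated = []
        for x in wolves:
            position = []
            for j in range(dim):
                candidates = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a  # direction coefficient in [-a, a]
                    C = 2.0 * rng.random()          # random coefficient in [0, 2]
                    D = abs(C * leader[j] - x[j])   # distance to this leader
                    candidates.append(leader[j] - A * D)
                value = sum(candidates) / 3.0       # average of the three candidates
                position.append(min(max(value, lb[j]), ub[j]))  # boundary clamp
            updated.append(position)
        wolves = updated
        # Track the best solution seen so far (a bookkeeping choice of this sketch).
        best = min(wolves + [best], key=fitness)
    return best, fitness(best)
```

For example, `gwo(lambda x: sum(v * v for v in x), [-5.0] * 3, [5.0] * 3)` minimizes a three-dimensional sphere function inside the box [-5, 5]. The sketch requires at least three wolves so that all three leader roles can be filled.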

3. Double-Layer GWOP Fusion Algorithm Design

3.1. GAT Layer Attention Mechanism

The graph attention network (GAT) is a neural network built upon graph structures, and its algorithmic flow is outlined in Algorithm 2. By learning association weights between nodes adaptively, GAT captures the relationships between each node and its neighbors. It applies a linear transformation to the node features and uses Softmax normalization to perform weighted aggregation of neighbor information. Furthermore, GAT enhances the representation of key feature information by concatenating the outputs of multiple attention heads.

| Algorithm 2: GAT Attention Mechanism Workflow |

The weight matrix , attention coefficient vector , and parameter vector of each attention head [28] are initialized as follows.

In the formula, represents the real number field, denotes the feature dimension of the node, indicates the initial feature dimension of the node, and represents the dimension of the weight matrix . K represents the number of attention heads. For each attention head k, the initial feature is linearly transformed using the weight matrix .

In the formula, represents the initial node feature matrix, denotes the number of batch nodes, and indicates the node feature matrix after transformation. To capture the “UAV-obstacle” obstacle avoidance association [29] and achieve a better trajectory, by combining the attention vector with dynamic calculation, the feature correlation coefficient [30,31] after concatenating neighboring node changes is computed as follows:

In the formula, represents the attention vector of the k attention head, and denotes the activation function. represents the feature change in node j under the k attention head, as well as the feature change in its neighbors under the same attention head. denotes the feature concatenation operation. To address the issue that “different neighbors have different effects on the current node,” for each node i, the neighbor attention coefficient is normalized using the following function [32]:

In the formula, denotes the set of neighbors for node i, and represents the normalized attention weight assigned by node i to its j neighbor under the k attention head. By incorporating the characteristics of unmanned aerial vehicles (UAVs) into the “nearest obstacle distance” feature, and subsequently aggregating the flight path features of UAVs, adaptive aggregation of neighboring features can be achieved [33].

In the formula, denotes that node i is under the k attention head, aggregating the neighbor features , which signifies the weighted summation operation; represents the output feature of node i under the k attention head, and ELU refers to the exponential linear unit, primarily utilized to break the dependency of linear features.

In the formula, α represents a constant. The complementary modes of different attention heads capture nodes by utilizing multi-attention head concatenation to output the node matrix Z:

In the formula, denotes the output feature matrix of the k attention head, Concat refers to the feature concatenation operation, and represents the feature dimension after concatenation. Finally, by quantifying the prediction error of the model, overfitting can be prevented, enabling GAT to progressively learn more characteristic information about dangerous obstacles:

In the formula, denotes the predicted loss for the specified batch; Z signifies the features of the model’s output nodes, and target indicates the true value labels of the nodes. represents the regularization coefficient; denotes the set of model parameters, refers to the regularization term, and represents the total loss. stands for the learning rate, and represents the gradient of the loss function with respect to parameter , which is primarily used to characterize the current changing trend of the loss function with respect to the parameter.
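The attention steps described in this subsection (linear transformation, attention scoring over concatenated features, Softmax normalization over neighbors, ELU-activated aggregation, and multi-head concatenation) can be illustrated with a small pure-Python sketch. It follows the commonly used GAT layer formulation and is not the paper's trained model: the function names, the LeakyReLU slope of 0.2, the ELU constant of 1.0, and the adjacency-list input format are assumptions, and training (loss, regularization, gradient step) is omitted.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def matvec(W, h):
    return [sum(w * v for w, v in zip(row, h)) for row in W]


def gat_head(H, neighbors, W, a):
    """One attention head.

    H: list of node feature vectors; neighbors[i]: non-empty list of node indices
    attended to by node i; W: F' x F weight matrix; a: attention vector of length 2F'.
    """
    Z = [matvec(W, h) for h in H]  # linear transformation of every node
    out = []
    for i, nbrs in enumerate(neighbors):
        # Raw score e_ij = LeakyReLU(a . [z_i || z_j]) for each neighbor j.
        scores = [leaky_relu(sum(p * q for p, q in zip(a, Z[i] + Z[j]))) for j in nbrs]
        m = max(scores)  # subtract the maximum for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alphas = [e / total for e in exps]  # normalized attention weights
        # Weighted sum of neighbor features, followed by the ELU activation.
        agg = [sum(al * Z[j][k] for al, j in zip(alphas, nbrs)) for k in range(len(W))]
        out.append([elu(v) for v in agg])
    return out


def gat_layer(H, neighbors, heads):
    """Concatenate the outputs of K heads; `heads` is a list of (W, a) pairs."""
    per_head = [gat_head(H, neighbors, W, a) for W, a in heads]
    return [sum((head[i] for head in per_head), []) for i in range(len(H))]
```

With an all-zero attention vector every neighbor receives the same weight, so each head reduces to an ELU-activated mean of the transformed neighbor features, which is a convenient sanity check.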

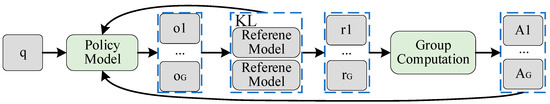

3.2. Group Relative Policy Optimization

The GRPO (Group Relative Policy Optimization) algorithm is illustrated in Figure 2. Its design eliminates the Critic model, thereby avoiding the cost of training a separate value network that limits traditional reinforcement learning methods at large scale. The core idea of GRPO is to derive a baseline by comparing multiple sampled outputs within a group against one another, so that advantages can be estimated without a value network.

Figure 2.

GRPO algorithm framework.

The GRPO algorithm process is outlined in Algorithm 3, starting from the initial policy. In the nested loop, a batch is first sampled from the dataset D, and G outputs are sampled for each item in the batch using the old policy to compute their rewards. The objective function is then constructed from the group-relative advantage function, and the policy parameters are updated along its gradient.

| Algorithm 3: GRPO Algorithm Workflow |

The GRPO objective function primarily comprises three components: the sampling ratio of old and new policies, the policy clipping objective, and the KL divergence regularization term. In contrast to the classic PPO algorithm, the GRPO algorithm does not require a value network and predominantly employs group sampling to achieve efficient advantage estimation. The continuous update model of the strategy is realized by enhancing the reward-penalty mechanism within the objective function [34] as follows:

In the above formula, denotes the objective function value of the GRPO model; E represents the mathematical expectation for sampling from the problem distribution and the output group generated by the old strategy. signifies sampling the input based on the task probability. indicates generating G candidate outputs from the old strategy q for each sampled . refers to averaging the G candidate outputs within each group. and represent the strategy clipping objective and the KL divergence regularization term, respectively [35].

In the above formula, denotes the clipping threshold, and signifies the threshold limit of the strategy amplitude. represents the weight of the regularization term, primarily serving to balance strategy improvement and reference constraints. refers to the reference strategy, and indicates the KL divergence between strategy and the reference strategy . and represent the sampling ratio of the old and new strategies and the estimated intra-group advantage, respectively:

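$$\rho_i = \frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{old}}(o_i \mid q)}, \qquad \hat{A}_i = \frac{r_i - \operatorname{mean}(r_1, \ldots, r_G)}{\operatorname{std}(r_1, \ldots, r_G)}$$

In the above formula, $\pi_\theta(o_i \mid q)$ denotes the probability of the current strategy generating $o_i$, and $\pi_{\theta_{old}}(o_i \mid q)$ signifies the probability of the old strategy generating $o_i$ prior to the update. When $\rho_i > 1$, the new strategy is more likely to generate $o_i$; when $\rho_i < 1$, the new strategy reduces the generation probability of $o_i$. $r_i$ represents the original reward for the $i$-th output, $\operatorname{mean}(\cdot)$ denotes the average of all rewards within the group, and $\operatorname{std}(\cdot)$ signifies the standard deviation of all rewards within the group. When $\hat{A}_i > 0$, the output performs better than the group average; when $\hat{A}_i < 0$, the output performs worse than the group average.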
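The group-relative advantage and the clipped objective described above can be illustrated as follows. This is a sketch under assumptions, not the paper's training code: the function names, the default clipping threshold of 0.2, the KL weight of 0.04, the small `eps` added to the standard deviation, the use of the population (not sample) standard deviation, and the choice to pass per-output KL estimates in as precomputed numbers are all hypothetical.

```python
import math


def group_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group: (r_i - mean) / (std + eps)."""
    mean = sum(rewards) / len(rewards)
    var = sum((r - mean) ** 2 for r in rewards) / len(rewards)
    std = math.sqrt(var)
    return [(r - mean) / (std + eps) for r in rewards]


def grpo_objective(logp_new, logp_old, rewards, kl_to_ref, clip_eps=0.2, beta=0.04):
    """Group-averaged clipped surrogate minus a KL penalty (to be maximized)."""
    adv = group_advantages(rewards)
    total = 0.0
    for lp_new, lp_old, a, kl in zip(logp_new, logp_old, adv, kl_to_ref):
        ratio = math.exp(lp_new - lp_old)  # new/old probability ratio
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * a, clipped * a) - beta * kl
    return total / len(rewards)
```

Because the advantage is centered on the group mean, no value network is needed; an output is reinforced only to the extent that it beats the other outputs sampled for the same input.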

3.3. Double-Layer GWOP Algorithm Design

The two-layer fusion GWOP algorithm integrates the combination process of GWO and GRPO, with the introduction of the GAT attention mechanism, as illustrated in the overall fusion framework shown in Figure 3. Through the three-dimensional raster coding environment depicted in the upper left of the figure, the representation of digital spatial grid information is completed [36]. By meticulously recording the performance of each trajectory algorithm in 3D route planning, fast search and accurate evaluation of the optimal route planning algorithm are achieved. The top right corner of the figure presents the fundamental framework of the Gray Wolf Optimization (GWO). Building upon this, the GAT attention mechanism in the bottom right corner and the GRPO algorithm are fused to form a comprehensive integration process of “GWO group search + GRPO strategy optimization + GAT graph structure perception.” Finally, the output results are applied to the attitude control of the UAV in the bottom right corner, providing an effective solution for real-time path planning of logistics UAVs in unknown environments.

Figure 3.

Double-Layer GWOP fusion algorithm framework.

The process of the GWOP fusion algorithm is outlined in Algorithm 4. First, the GRPO objective is integrated into the GWO fitness calculation. On this basis, the GAT attention mechanism is incorporated to establish the association of key feature information, serving as the fitness constraint term for the GWO algorithm. The complete optimal strategy is then obtained via bidirectional collaborative feedback between the GAT attention weights and the GWO strategies.

| Algorithm 4: Double-Layer GWOP Fusion Algorithm Workflow |

The GWO “wolf pack” corresponds to a set of candidate strategies for logistics drones, each defined by a vector of strategy parameters. Under any selected strategy parameter vector, the logistics UAV executes dynamic trajectory planning and goods distribution. The trajectory performance under that strategy is evaluated using the GRPO objective, and the best-performing “α wolf” then guides the evolution of the population. By integrating the two-tier architecture of GWO and GRPO, the GWOP algorithm realizes a high-quality global/local search strategy. The initialization of the GWOP model [37] is as follows:

In the formula, denotes the parameter vector of the reinforcement learning strategy, represents the real number field, and dim signifies the dimension of the strategy parameters. represents the strategy parameters of the i individual in the population. denotes the lower bound of the parameters, denotes the upper bound of the parameters, represents the random vector where each element follows a uniform distribution in (0,1). signifies element-wise multiplication. represents the weight matrix of the k attention head, represents the attention coefficient vector of the k attention head, k denotes the index of the attention head, and K represents the total number of attention heads. The reward value model in the fitness evaluation of GRPO [38] is as follows:

In the formula, denotes the original reward of the i sampling, and denotes the standardized reward of the i sampling. G represents the number of samplings for the same type of action, represents the arithmetic mean of the rewards for G samplings, and represents the standard deviation of the rewards for G samplings. The fitness of the i gray wolf individual in the modified GRPO objective function is [39,40,41]:

In the formula, denotes the task objective function, denotes the objective term, denotes the balance coefficient, denotes the graph structure constraint term, and G denotes the graph structure data. E represents the expectation operation, q represents the environmental state, and represents the state distribution; o represents the actions performed by the agent in state q, and represents the action distribution of the old policy. G denotes the number of action samples in the same state, and denotes the sum of for G action samples. represents the penalty coefficient of KL divergence, and represents KL divergence. denotes the parameters of the current candidate strategy, and denotes the parameters of the old strategy; represents the probability difference in the output action o between the new and old strategies in state q, represents the clipping coefficient, and indicates clipping the input value to the interval . The model for converting the maximization objective of GRPO into the minimization objective of GWO is:

In the formula, denotes the performance evaluation value of GRPO for strategy , and denotes the fitness function value of the i individual in GWO. Coefficients of the GWO update mechanism [42,43]:

In the formula, t denotes the current number of iterations, and denotes the maximum number of iterations. and represent uniformly distributed random numbers within the range (0, 1). represents the bounding coefficient, and represents the direction coefficient [44].

In the formula, denotes the original feature vector of node i, denotes the weight matrix of the k attention map, denotes the updated feature vector of node i, and represents the node set of the graph. represents the original attention coefficient between nodes i and j, represents the inner product operation between nodes, denotes the feature concatenation operation, and represents the edge set of the graph. represents the normalized attention weight of node i to neighbor j, denotes the exponential transformation of the original attention coefficient, represents the sum of the exponential attention coefficients for all neighbors of the node i, and denotes the neighbor set of node i. represents the new feature vector after node i aggregates the features of its neighbors, and denotes the weighted summation operation. represents the containment coefficient, which measures the distance from the Wolf pack and is used for candidate strategy updates [45]:

In the formula, , , , and respectively denote the current optimal strategy, suboptimal strategy, third optimal strategy, and the parameter vector of the current individual. represents element-wise multiplication. , , and respectively denote the parameter difference vectors of the α, β, and δ wolf. , , and respectively denote the candidate parameter vectors of the α, β, and δ wolf. denotes the final candidate strategy that integrates the three leader-guided strategies. The values of , , and are dynamically adjusted by the output of GAT [46]:

In the formula, denotes the control coefficient for the position update of the gray wolf, a denotes the basic control parameter of GWOP, denotes the scaling factor, represents the attention weight function calculated by the GAT model for node, where i is the current node and j is a neighboring node. The greedy update and convergence model [47] is as follows:

In the formula, denotes the set of learning parameters of GAT, denotes the learning rate, and denotes the gradient of the loss function with respect to . represents the task-specific loss, represents the balance coefficient, and represents the GWOP guiding loss. denotes the new position of the i gray wolf individual, and denotes the fitness function corresponding to the new position of the i gray wolf individual. represents the current position of the i gray wolf individual, and represents the fitness function corresponding to the current position of the i gray wolf individual.
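Two of the coupling steps described in this subsection, converting the GRPO maximization objective into a GWO minimization fitness and the greedy acceptance of new positions, can be sketched as follows. The exact conversion used in the paper is not recoverable from the text, so plain negation is an assumption of this sketch, as are the function names `to_fitness` and `greedy_step` and the `score` callback that stands in for the GRPO evaluation of a strategy.

```python
def to_fitness(grpo_score):
    """GRPO maximizes its objective while GWO minimizes fitness.

    Negation is the conversion assumed in this sketch.
    """
    return -grpo_score


def greedy_step(positions, candidates, score):
    """Keep each candidate strategy only if it lowers the fitness of its wolf.

    `score` is a hypothetical callback returning the GRPO evaluation of a
    strategy parameter vector (higher is better).
    """
    kept = []
    for current, candidate in zip(positions, candidates):
        if to_fitness(score(candidate)) < to_fitness(score(current)):
            kept.append(candidate)
        else:
            kept.append(current)
    return kept
```

The greedy rule guarantees that the fitness of each individual never gets worse from one iteration to the next, at the cost of rejecting exploratory moves that do not pay off immediately.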

3.4. B-Spline Trajectory Curve

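When B-spline trajectory curves are applied to the delivery routes of logistics drones, given the knot vector $U = \{u_0, u_1, \ldots, u_m\}$, the degree-$p$ basis function is defined recursively as follows:

$$N_{i,0}(u) = \begin{cases} 1, & u_i \le u < u_{i+1} \\ 0, & \text{otherwise} \end{cases}, \qquad N_{i,p}(u) = \frac{u - u_i}{u_{i+p} - u_i} N_{i,p-1}(u) + \frac{u_{i+p+1} - u}{u_{i+p+1} - u_{i+1}} N_{i+1,p-1}(u)$$

In the formula, $u_i$ represents the values of the non-decreasing knot sequence, and $N_{i,p}(u)$ indicates the degree-$p$ B-spline basis function, where $i$ is the index of the basis function and $p$ is the degree of the basis function; a term whose denominator is zero is taken to be zero. For control vertices $P_i \in \mathbb{R}^d$, where $d$ is the dimension, the B-spline curve is the weighted sum of the control vertices:

$$C(u) = \sum_{i=0}^{n} N_{i,p}(u) P_i$$

In the above formula, $P_i$ represents the control vertices, $C(u)$ is the B-spline curve function, and $n + 1$ is the total number of control vertices; $u$ is the parameter variable and serves as the input for the B-spline curve. The minimum smooth model of the B-spline curve is as follows: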

In the above formula, represents the objective of the trajectory curve, and are the weight coefficients of the curve; optimizes the B-spline curve, and is the second derivative of the B-spline curve with respect to the parameter ; is the parametric curve, is the square of the norm of the difference between the curves and , is the penalty for the deviation of the curve from the ideal trajectory , and is the penalty for the “non-smoothness” of the curve.
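The B-spline construction described in this subsection can be illustrated with a short sketch. It assumes the standard Cox-de Boor recursion, a clamped uniform knot vector, and a discrete second-difference penalty as a stand-in for the smoothness term; the helper names (`bspline_basis`, `clamped_knots`, `bspline_curve`, `smoothness_cost`) and the sample control vertices are hypothetical and do not come from the paper.

```python
import numpy as np

def bspline_basis(i, p, u, knots):
    """Cox-de Boor recursion for the i-th degree-p basis function at u."""
    if p == 0:
        return 1.0 if knots[i] <= u < knots[i + 1] else 0.0
    left = right = 0.0
    d1 = knots[i + p] - knots[i]
    d2 = knots[i + p + 1] - knots[i + 1]
    if d1 > 0:  # terms with a zero denominator are treated as zero
        left = (u - knots[i]) / d1 * bspline_basis(i, p - 1, u, knots)
    if d2 > 0:
        right = (knots[i + p + 1] - u) / d2 * bspline_basis(i + 1, p - 1, u, knots)
    return left + right

def clamped_knots(n_ctrl, p):
    """Clamped uniform knot vector on [0, 1]; requires n_ctrl >= p + 1."""
    inner = np.linspace(0.0, 1.0, n_ctrl - p + 1)
    return np.concatenate([np.zeros(p), inner, np.ones(p)])

def bspline_curve(ctrl, p, n_samples=50):
    """Sample the curve as a basis-weighted sum of the control vertices."""
    ctrl = np.asarray(ctrl, dtype=float)
    knots = clamped_knots(len(ctrl), p)
    # Sample the half-open interval [0, 1), where the basis functions sum to one.
    us = np.linspace(0.0, 1.0, n_samples, endpoint=False)
    weights = np.array([[bspline_basis(i, p, u, knots) for i in range(len(ctrl))]
                        for u in us])
    return weights @ ctrl, weights

def smoothness_cost(points):
    """Sum of squared second differences, a discrete proxy for curvature."""
    second_diff = points[2:] - 2.0 * points[1:-1] + points[:-2]
    return float(np.sum(second_diff ** 2))

# Hypothetical control vertices: four equally spaced points on a straight line.
ctrl = np.array([[0, 0, 0], [1, 1, 1], [2, 2, 2], [3, 3, 3]], dtype=float)
curve, weights = bspline_curve(ctrl, p=3)
cost = smoothness_cost(curve)
```

For collinear, equally spaced control vertices the sampled curve is a straight line, so the smoothness penalty is numerically zero; bending the control polygon increases it.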

4. Experiment and Evaluation

4.1. Experimental Scenario

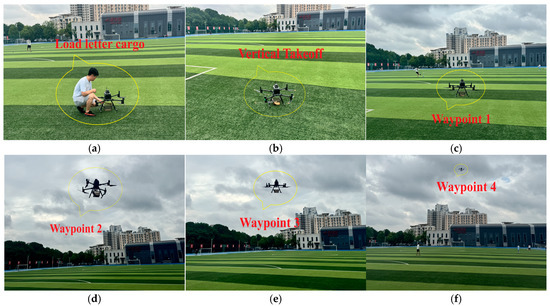

To verify the effectiveness of the double-layer GWOP algorithm, which integrates GWO and GRPO, in dynamic route planning for logistics UAVs, the entire flight experiment, from loading and takeoff to route planning, was recorded, as shown in Figure 4. Specifically, Figure 4a illustrates the loading of envelope goods into the UAV logistics box; Figure 4b shows the vertical takeoff of the logistics UAV; and Figure 4c–f depict the successive stages of the logistics UAV's distribution route planning and flight.

Figure 4.

Trajectory Planning and Delivery Process of Logistics UAV: (a) Load letter cargo; (b) Vertical Takeoff; (c) Waypoint 1; (d) Waypoint 2; (e) Waypoint 3; (f) Waypoint 4.

4.2. Experimental Results Analysis

The CEC2017 test functions are listed in Table 1. Among these, F1 to F10 are shifted and rotated basic functions, used primarily to evaluate convergence performance on unimodal and multimodal landscapes. F11 to F20 are hybrid functions, designed mainly to assess the algorithm's ability to optimize multiple sub-problems jointly. F21 to F25 are composition functions, which superpose multiple sub-functions with local distortion and are intended to test robustness and global search capability in highly complex landscapes.

Table 1.

CEC2017 test functions.

4.3. CEC2017 Test

4.3.1. CEC2017 Test Data

The results of the improved GWOP algorithm and seven other algorithms, namely GWO, PSO, SSA, DBO, NGO, TSO, and JSOA, on the CEC2017 test function data are shown in Table 2. The obtained data results include the minimum value Min, the mean value Mean, and the standard deviation Std.

Table 2.

Data obtained from different algorithms in the basic function.

4.3.2. CEC2017 Test Results

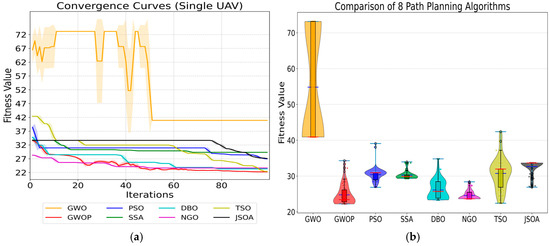

The results of the improved GWOP algorithm and seven other algorithms (GWO, PSO, SSA, DBO, NGO, TSO, and JSOA) on the CEC2017 test functions are presented in Figure 5. The convergence curves for the unimodal and multimodal functions F1 to F10 show that the improved two-layer fused GWOP algorithm achieves better convergence performance and lower fitness values than the original GWO algorithm. Moreover, the GWOP algorithm consistently obtains the lowest fitness values among all the compared algorithms, including PSO, SSA, DBO, NGO, TSO, and JSOA. Furthermore, GWOP demonstrates superior fitness accuracy on the hybrid functions F11 to F20 and the composition functions F21 to F25.

Figure 5.

CEC2017 test results.

4.4. Experimental Procedure

The path planning of the logistics UAV involves four experiments, as shown in Figure 6. Experiment 1: The improved double-layer fusion GWOP algorithm is compared with the classical GWO and GRPO algorithms under a single UAV scenario. Experiment 2: Building upon Experiment 1, seven classical 3D trajectory planning algorithms (GWO, PSO, SSA, DBO, NGO, TSO, and JSOA) are compared with the improved two-layer fusion GWOP algorithm to determine whether GWOP outperforms them. Experiment 3: The single UAV scenario from Experiment 1 is extended to multiple UAVs to compare the GWOP, GWO, and GRPO algorithms. Experiment 4: The single UAV scenario from Experiment 2 is similarly extended to multiple UAVs to compare GWOP with the other seven path planning algorithms.

Figure 6.

Experimental Procedure of the Double-Layer Fusion GWOP Algorithm.

5. UAV Trajectory Planning Model Design

5.1. Design of UAV Flight Environment

An objective model is constructed by integrating three key factors: shortest trajectory distance [48], maximum smoothness, and minimum collision risk:

In this formula, , , and denote the weight coefficients corresponding to trajectory length, smoothness, and collision risk, respectively. Based on the specific requirements of trajectory planning for delivery tasks, this study sets the values of , , and .

Logistics unmanned aerial vehicle minimum trajectory distance model:

In the formula, represents the three-dimensional coordinates of the waypoint of the unmanned aerial vehicle’s trajectory planning, and represents the Euclidean distance between two points, which is . In the formula, indicates the total number of waypoints along the trajectory.

The maximum smoothness model of logistics drones:

In the formula, represents the turning angle at the waypoint of the drone’s flight path. Then, the angle between the two vectors needs to satisfy , where and .

Minimum collision risk model for logistics drones in flight among obstacles:

In the formula, represents the summation over all obstacles, represents the index of a single obstacle, represents the set of all obstacles; represents the collision risk between a single planning point and a single obstacle, represents the shortest distance from the trajectory point to the obstacle o, and represents a very small positive number.
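The three cost terms and their weighted combination can be sketched as follows. The exact functional forms and weight values are not recoverable from the text, so this is an illustration under stated assumptions: smoothness is measured as the sum of turning angles, collision risk as the sum of inverse distances to obstacle surfaces, obstacles are simplified to spheres (the experiments use columns), and the weights `w_len`, `w_smooth`, and `w_risk` are hypothetical. Consecutive waypoints are assumed to be distinct.

```python
import numpy as np

def path_length(waypoints):
    """Sum of Euclidean distances between consecutive waypoints."""
    return float(np.sum(np.linalg.norm(np.diff(waypoints, axis=0), axis=1)))

def turning_angles(waypoints):
    """Angle between consecutive segment vectors at each interior waypoint."""
    seg = np.diff(waypoints, axis=0)
    a, b = seg[:-1], seg[1:]
    cos = np.sum(a * b, axis=1) / (
        np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1))
    return np.arccos(np.clip(cos, -1.0, 1.0))

def collision_risk(waypoints, centers, radii, eps=1e-6):
    """Assumed risk: inverse distance from each waypoint to each obstacle surface."""
    dist = np.linalg.norm(
        waypoints[:, None, :] - centers[None, :, :], axis=2) - radii[None, :]
    dist = np.maximum(dist, 0.0)  # waypoints inside an obstacle get the maximum risk
    return float(np.sum(1.0 / (dist + eps)))

def objective(waypoints, centers, radii, w_len, w_smooth, w_risk):
    """Weighted sum of length, smoothness, and collision-risk terms."""
    return (w_len * path_length(waypoints)
            + w_smooth * float(np.sum(turning_angles(waypoints)))
            + w_risk * collision_risk(waypoints, centers, radii))

# Hypothetical scenario: one right-angle turn and one spherical obstacle.
path = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 0.0]])
centers = np.array([[5.0, 5.0, 0.0]])
radii = np.array([1.0])
cost = objective(path, centers, radii, w_len=1.0, w_smooth=0.5, w_risk=2.0)
```

The small constant `eps` plays the role of the very small positive number in the text: it keeps the risk term finite when a waypoint touches an obstacle.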

5.2. Construct UAV Flight Constraint Model

5.2.1. Load Constraint

The payload capacity of an unmanned aerial vehicle (UAV) in delivery operations is determined by the difference between its maximum takeoff weight and its empty weight. To account for the payload limitations inherent in logistics UAVs used for cargo transportation, a payload constraint model is formulated:

In the formula, represents the payload, represents the maximum takeoff weight, represents the empty weight of the drone, and represents the upper limit of the maximum payload of the drone.

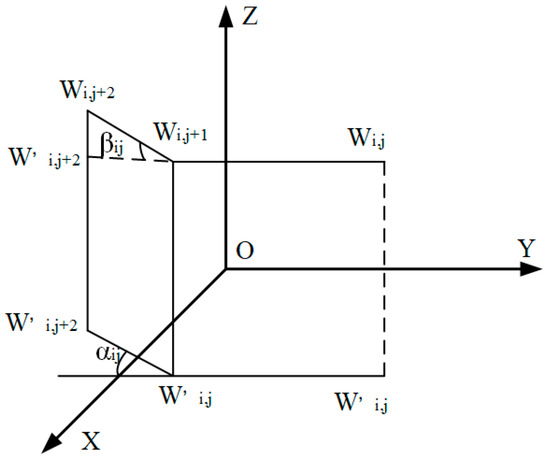

5.2.2. Turning Radius Constraint

In logistics unmanned aerial vehicle UAV delivery transportation, the turning angle constraints during flight include both vertical and horizontal limitations, as illustrated in Figure 7. Let trajectory point represent the point along the flight path, where and denote two consecutive trajectory segments during flight, and and are the projections of the corresponding flight trajectory onto the horizontal coordinate axis .

Figure 7.

Flight turn angle constraint.

Reasonable corner constraints significantly contribute to the generation of optimal flight path trajectories. When integrated with the aforementioned flight trajectory construction model:

In this formula, denotes the horizontal turning angle, denotes the vertical turning angle, and represents the unit vector corresponding to the positive direction of each coordinate axis.
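One way to evaluate horizontal and vertical angle limits on a waypoint sequence is sketched below. The decomposition is an assumption made for illustration: the horizontal turning angle is taken as the change in heading of the segment projections onto the horizontal plane, and the vertical angle as the climb angle of each segment relative to that plane. The function names and the limit parameters `max_h` and `max_v` are hypothetical.

```python
import numpy as np

def horizontal_turn_angles(waypoints):
    """Heading change between consecutive segments projected onto the XY plane."""
    seg = np.diff(waypoints, axis=0)[:, :2]
    heading = np.arctan2(seg[:, 1], seg[:, 0])
    turn = np.diff(heading)
    # Wrap to [-pi, pi) and take the magnitude so the result lies in [0, pi].
    return np.abs((turn + np.pi) % (2.0 * np.pi) - np.pi)

def climb_angles(waypoints):
    """Angle of each segment relative to the horizontal plane."""
    seg = np.diff(waypoints, axis=0)
    horizontal = np.linalg.norm(seg[:, :2], axis=1)
    return np.arctan2(seg[:, 2], horizontal)

def satisfies_turn_limits(waypoints, max_h, max_v):
    """True if every horizontal turn and climb angle is within its limit."""
    return bool(np.all(horizontal_turn_angles(waypoints) <= max_h)
                and np.all(np.abs(climb_angles(waypoints)) <= max_v))

# Hypothetical path: a 90-degree horizontal turn followed by a 45-degree climb.
path = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 1.0, 1.0]])
h_turns = horizontal_turn_angles(path)
climbs = climb_angles(path)
feasible = satisfies_turn_limits(path, max_h=np.radians(60), max_v=np.radians(50))
```

In this example the 90-degree horizontal turn exceeds the 60-degree limit, so the path is reported as infeasible even though the climb angle is acceptable.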

5.2.3. Flight Posture Constraint

If the rotation matrix of the logistics unmanned aerial vehicle is set as , then the corresponding constraints of each attitude angle are as follows:

In this formula, denotes the pitch angle, denotes the roll angle, and denotes the yaw angle. The corresponding quaternion is represented by , where is the real part. Based on this representation, , , and are calculated.
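The conversion from a unit quaternion to the three attitude angles can be sketched with the widely used ZYX (yaw-pitch-roll) convention. Whether the paper uses this convention is not stated, so it is an assumption here, as are the function names and the example attitude limits.

```python
import math

def quaternion_to_euler(w, x, y, z):
    """Return (roll, pitch, yaw) in radians for a unit quaternion, ZYX convention."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp to [-1, 1] to guard against rounding error near +/-90 degrees of pitch.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw

def within_attitude_limits(angles, limits):
    """Check that each attitude angle stays within its symmetric limit."""
    return all(abs(angle) <= limit for angle, limit in zip(angles, limits))

# A pure 90-degree yaw rotation: w = cos(45 deg), z = sin(45 deg).
half = math.radians(45.0)
roll, pitch, yaw = quaternion_to_euler(math.cos(half), 0.0, 0.0, math.sin(half))

# Hypothetical limits: 30 degrees of roll and pitch, unrestricted yaw.
ok = within_attitude_limits(
    (roll, pitch, yaw), (math.radians(30), math.radians(30), math.pi))
```

The first argument `w` is the real part of the quaternion, matching the representation described in the text.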

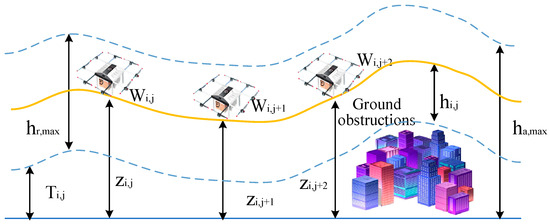

5.2.4. Flight Altitude Constraint

The flight height constraints for the unmanned aerial vehicle UAV are illustrated in Figure 8. The flight altitude of the logistics UAV must comply with the maximum allowable height limit. Specifically, denotes the terrain elevation at the waypoint along the route, represents the UAV’s flight altitude relative to sea level at that waypoint, is the maximum relative flight height, and is the maximum absolute flight height.

Figure 8.

Flight altitude constraint diagram.

The flight altitude of the unmanned aerial vehicle must satisfy the following constraint model:

In this formula, , , and denote the weights corresponding to the , , , , and track points along the flight route, respectively. represents the altitude coefficient, which has a value range of (0, 1), while denotes the optimized actual flight altitude when reaching the track point on the flight route.

5.2.5. Flight Speed Constraint

During the unmanned aerial vehicle (UAV) delivery process, the speed constraint is expressed as:

In this formula, denotes the horizontal flight speed, represents the total load during flight, is the aerodynamic drag coefficient during flight, and denotes the maximum total thrust limit of the unmanned aerial vehicle.

5.3. Simulation Example and Results Analysis

5.3.1. Comparing Single UAV Path Planning Experiments

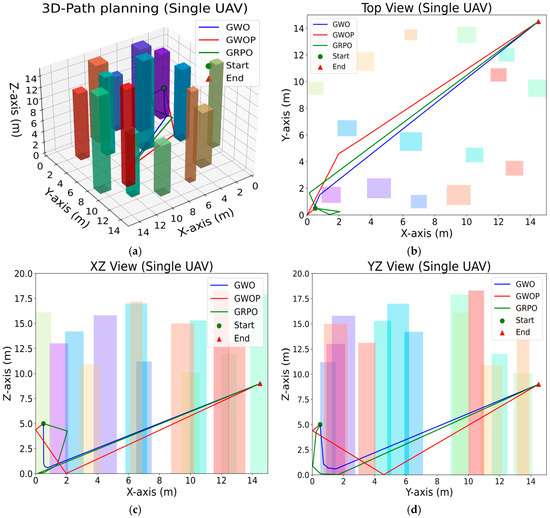

Experiment 1: Due to the limitations of visualization in the actual flight environment, supplementary experiments were carried out in a visualized three-dimensional environment, as illustrated in Figure 9. In this setup, the green circle denotes the starting point of the flight trajectory, the red triangle indicates the target endpoint of the delivery, and the colored columnar structures represent urban buildings acting as obstacles. In each trial, a single unmanned aerial vehicle (UAV) initiated its flight from the starting point and employed the improved GWOP algorithm, the classic GWO algorithm, and the GRPO algorithm, respectively, to navigate around obstacles. The optimal algorithm is defined as the one that reaches the target endpoint first, with a collision-free trajectory and the shortest path length. To ensure experimental reliability, simulations were conducted over 100, 300, and 500 iterations.

Figure 9.

Comparative Experiment of 3 Algorithms for Single UAV Path Planning: (a) 3D-Path planning Single UAV (100); (b) Top View Single UAV (100); (c) XZ View Single UAV (100); (d) YZ View Single UAV (100); (e) 3D-Path planning Single UAV (300); (f) Top View Single UAV (300); (g) XZ View Single UAV (300); (h) YZ View Single UAV (300); (i) 3D-Path planning Single UAV (500); (j) Top View Single UAV (500); (k) XZ View Single UAV (500); (l) YZ View Single UAV (500).

In the three-dimensional environment described above, the visualization results for a single unmanned aerial vehicle (UAV) under the three trajectory planning algorithms show that, at 100 iterations (Figure 9a), all three algorithms reach the target endpoint and complete the path from the starting point to the destination. However, as shown in Figure 9b–d, the GWOP algorithm generates a smoother obstacle-avoidance path, whereas the GWO and GRPO algorithms collide with obstacles during trajectory planning. The 300-iteration results (Figure 9e–h) and the 500-iteration results (Figure 9i–l) consistently show that the improved GWOP algorithm outperforms the classical GWO and GRPO algorithms in obstacle-avoidance space utilization and path smoothness. The training results of trajectory planning using a single UAV with the three algorithms across 100, 300, and 500 iterations are presented in Figure 10.

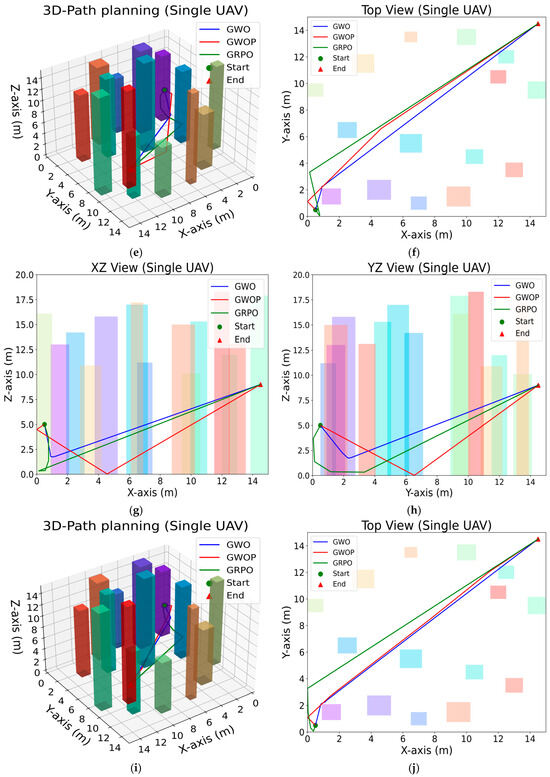

Figure 10.

Statistical Comparison of 3 Algorithms for Single UAV Path Planning: (a) Convergence Curves Single UAV (100); (b) Comparison of 3 Path Planning Algorithms (100); (c) Convergence Curves Single UAV (300); (d) Comparison of 3 Path Planning Algorithms (300); (e) Convergence Curves Single UAV (500); (f) Comparison of 3 Path Planning Algorithms (500).

The training results in Figure 10a demonstrate that GWOP achieves rapid convergence within approximately 20 iterations, whereas the GWO and GRPO algorithms exhibit slower initial convergence and only gradually stabilize after around 40 iterations. Consequently, in the 100-iteration experiment, GWOP displays a significantly faster convergence rate compared to GWO and GRPO, reaching the optimal solution with fewer iterations. As shown in Figure 10b, the fitness value of the GWOP algorithm is approximately 25.63, while those of the GWO and GRPO algorithms are 31.58 and 34.58, respectively. This indicates that GWOP incurs lower cost consumption than GWO and GRPO in path planning. Figure 10c,d from the 300-iteration results, as well as Figure 10e,f from the 500-iteration results, further confirm that GWOP converges faster and achieves lower planning costs in trajectory planning.

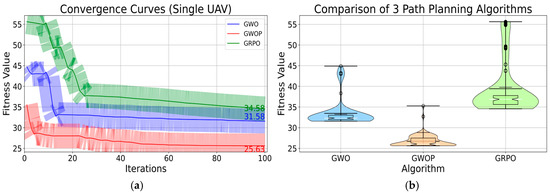

Experiment 2: Given the superior performance of GWOP over GWO observed in the previous experiment, six additional trajectory planning algorithms (PSO, SSA, DBO, NGO, TSO, and JSOA) are included for comparison. A total of eight algorithms are compared for trajectory planning from the starting point to the target endpoint, as illustrated in Figure 11. The starting point, target endpoint, and building obstacles are configured identically to those in Experiment 1.

Figure 11.

Comparative Experiment of 8 Algorithms for Single UAV Path Planning: (a) 3D-Path planning (Single UAV); (b) Top View (Single UAV); (c) XZ View (Single UAV); (d) YZ View (Single UAV).

Based on the visualization results comparing eight different trajectory planning algorithms for a single unmanned aerial vehicle (UAV) in the aforementioned three-dimensional environment, Figure 11a presents the trajectory curves generated by the eight algorithms from the starting point to the target endpoint. The trajectories reveal notable differences among the algorithms. Combined with Figure 11b–d, it is evident that the GWO algorithm frequently encounters obstacles during trajectory planning, whereas the PSO, SSA, and JSOA algorithms produce redundant obstacle avoidance paths. Therefore, it can be concluded that the GWOP algorithm generates a smoother obstacle avoidance trajectory. A further analysis of the data obtained from the eight algorithms is provided in Figure 12.

Figure 12.

Statistical Comparison of 8 Algorithms for Single UAV Path Planning: (a) Convergence Curves (Single UAV); (b) Comparison 8 Path Planning Algorithms (Single UAV).

The training results from the aforementioned Figure 12a indicate that the GWOP algorithm achieves convergence within approximately 20 iterations and subsequently stabilizes. In contrast, the GWO algorithm exhibits significant fluctuations during the first 53 iterations and converges to the highest cost. The DBO and NGO algorithms also converge within about 40 iterations, with relatively lower costs. Meanwhile, the PSO, TSO, SSA, and JSOA algorithms begin to converge after approximately 60 iterations, demonstrating the slowest convergence speed. As shown in Figure 12b, the fitness values of the GWOP algorithm are concentrated between 20 and 30, clearly outperforming the other seven algorithms in terms of fitness.

5.3.2. Comparing Multi-UAV Path Planning Experiments

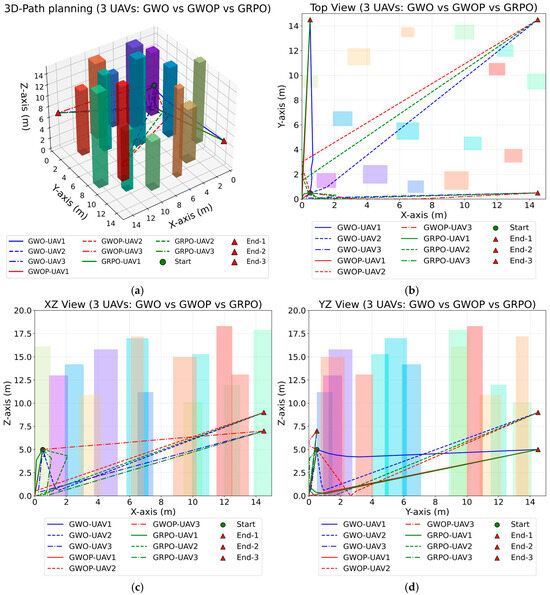

Experiment 3: Following the superior performance of the GWOP algorithm in the previous single UAV three-dimensional trajectory planning experiments, this experiment further introduces multiple UAVs to evaluate the robustness of the improved two-layer GWOP algorithm. The trajectory comparison of the improved GWOP algorithm, the original GWO algorithm, and the GRPO algorithm for multiple UAVs is presented in Figure 13. In this setup, three UAVs share the same departure point but have different target endpoints, with the differently colored columns representing urban building obstacles.

Figure 13.

Comparative Experiment of 3 Algorithms for Multi-UAV Path Planning: (a) 3D-Path planning (3 UAVs); (b) Top View (3 UAVs); (c) XZ View (3 UAVs); (d) YZ View (3 UAVs).

Based on the aforementioned three-dimensional environment, the comparison visualization results of multiple unmanned aerial vehicles (UAVs) operating under three different trajectory planning algorithms demonstrate that, as shown in Figure 13a, all three UAVs using these algorithms exhibit basic obstacle avoidance capabilities and successfully complete the task of planning paths from the starting point to the target. From the top view in Figure 13b, it is evident that the UAVs under the GWOP algorithm achieve smoother obstacle avoidance maneuvers. In contrast, UAVs using the GWO and GRPO algorithms experience collisions with obstacles in certain cases, particularly among UAVs with different serial numbers. Notably, no collision behavior occurs during the trajectory planning of the three UAVs using the GWOP algorithm, which effectively reduces energy consumption and control complexity during flight. By synthesizing the various perspective views of the flight environment, it can be concluded that the improved GWOP algorithm offers significant advantages over the classical GWO and GRPO algorithms in multi-UAV flight scenarios. To validate the reliability of the conclusions drawn from the visualization results, further analysis is conducted on the convergence and violin distribution statistics, as presented in Figure 14.

Figure 14.

Statistical Comparison of 3 Algorithms for Multi-UAV Path Planning: (a) Convergence Curves (3 UAVs); (b) Comparison 3 Path Planning Algorithms (3 UAVs).

The training results from Figure 14a demonstrate that the GWOP algorithm achieves rapid convergence within 50 iterations for the trajectory planning of three drones. In contrast, UAV2 using the GWO algorithm begins to converge after 5 iterations, while the GRPO algorithm starts to converge after 20 iterations. However, the converged fitness values of both the GWO and GRPO algorithms are higher than those of all drones under the GWOP algorithm. As shown in Figure 14b, the fitness values of the GWOP algorithm are concentrated in the range of 15 to 27, whereas those of the GWO and GRPO algorithms are concentrated in the ranges of 20 to 36 and 21 to 39, respectively. This indicates that the GWOP algorithm outperforms the GWO and GRPO algorithms in terms of the quality of trajectories generated for multiple drones.

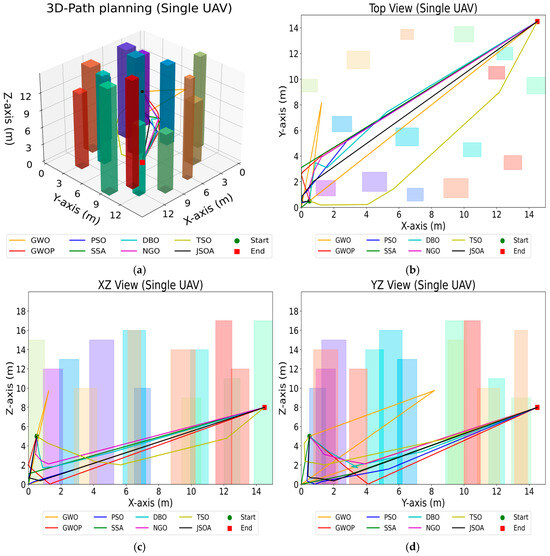

Experiment 4: Given the superior performance of the GWOP algorithm over the GWO algorithm in multi-drone trajectory planning, as demonstrated in the previous results, six additional trajectory planning algorithms (PSO, SSA, DBO, NGO, TSO, and JSOA) are introduced for further comparative analysis, as illustrated in Figure 15. A comparative experiment is conducted using eight different algorithms for trajectory planning from the starting point to the target endpoints. The starting point, building obstacles, and number of drones remain consistent with those in Experiment 3, with three target endpoints matching the three drones.

Figure 15.

Comparative Experiment of 8 Algorithms for Multi-UAV Path Planning: (a) 3D-Path planning (3 UAVs); (b) Top View (3 UAVs); (c) XZ View (3 UAVs); (d) YZ View (3 UAVs).

From the aforementioned three-dimensional environment involving multiple drones, the visualization results obtained using eight different trajectory planning algorithms demonstrate that, as shown in Figure 15a, all three drones successfully avoid the three-dimensional cylindrical obstacles and reach the target destination using each of the eight algorithms. From the top view in Figure 15b, it is evident that multi-drone operations based on the GWO, TSO, SSA, NGO, and JSOA algorithms exhibit varying degrees of collision. Meanwhile, trajectory planning using the PSO and DBO algorithms results in a local “clustering” phenomenon. In contrast, the GWOP algorithm generates more dispersed trajectories, effectively reducing the “potential conflict risk” in multi-drone collaborative planning. Figure 15c,d further confirm the superior performance of the GWOP algorithm in terms of spatial utilization. To provide a more in-depth evaluation, the convergence characteristics and violin distribution statistics presented in Figure 16 are further analyzed.

Figure 16.

Statistical Comparison of 8 Algorithms for Multi-UAV Path Planning: (a) Convergence Curves (3 UAVs); (b) Comparison of 8 Path Planning Algorithms (3 UAVs).

The training results from Figure 16a demonstrate that the GWOP algorithm achieves rapid convergence in the trajectory planning of three drones, stabilizing around the 18th iteration. In contrast, the GWO algorithm exhibits significant fluctuations during the first 60 iterations and only stabilizes after the 70th iteration. The DBO and SSA algorithms converge within the first 55 iterations, but their fitness values are notably higher than those of the GWOP algorithm. The TSO, PSO, NGO, and JSOA algorithms converge the slowest, with convergence beginning after the 70th iteration. As shown in Figure 16b, the fitness values of the GWOP algorithm are concentrated in the range of 15 to 22, whereas those of the GWO algorithm fall within the range of 27 to 41. The fitness values of the DBO, NGO, TSO, PSO, SSA, and JSOA algorithms all exceed the range observed for the GWOP algorithm. Therefore, it can be concluded that the GWOP algorithm demonstrates a significant advantage over the other seven algorithms in terms of both convergence speed and trajectory quality in multi-drone trajectory planning.

6. Discussion

6.1. Discussion on the Results of a Single UAV

This section provides a summary and analysis of the results from the aforementioned experimental content. Key metrics are defined as follows: Optimal Path Length (OPL), Average Path Length (APL), Number of Obstacle Collisions (NOC), Fitness Value (FV), Number of UAVs (NOU), Trajectory Planning (TP), Algorithms (Algs), and Comparison (Comp).

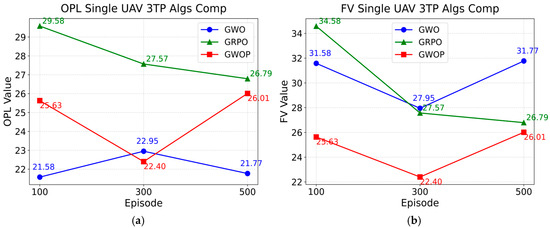

In Experiment 1, the GRPO algorithm was integrated with the classical GWO algorithm to improve it. The resulting two-layer GWOP algorithm was then evaluated through ablation experiments against the classical GWO and GRPO algorithms. The trajectory planning of a single unmanned aerial vehicle (UAV) from the starting point to the endpoint was carried out, and the results are summarized in Table 3. Specifically, the GWOP, GWO, and GRPO algorithms were each run for 100, 300, and 500 iterations and assessed on three evaluation indicators: Optimal Path Length (OPL), Number of Collisions (NOC), and Fitness Value (FV). A result was considered optimal when the OPL and FV values were minimized and no collisions (NOC = 0) were observed.

Table 3.

Comp Results Table of 3TP Algs for Single UAV.

Table 3 provides a detailed comparison of the GWOP, GWO, and GRPO algorithms for a single drone's flight, focusing on the OPL, NOC, and FV indicators. The results are summarized in Figure 17. As shown in Figure 17a, which illustrates the OPL values, the GWO algorithm achieved path lengths of 21.58 m and 21.77 m at 100 and 500 iterations, respectively, which are shorter than the GWOP algorithm's 25.63 m and 26.01 m at the same iteration counts. However, the GWO algorithm incurred two collisions in both cases. Regarding the FV indicator in Figure 17b, the GWOP algorithm obtained fitness values of 25.63 m, 22.40 m, and 26.01 m at 100, 300, and 500 iterations, respectively. These values are lower than those of the GWO algorithm (31.58 m, 27.95 m, and 31.77 m) and the GRPO algorithm (34.58 m, 27.57 m, and 26.79 m). Furthermore, as indicated by the NOC metric, the GWOP algorithm avoided all obstacles at all three iteration counts, whereas both the GWO and GRPO algorithms experienced collisions. These results demonstrate the superior performance of the improved GWOP algorithm in three-dimensional trajectory planning for logistics drones.

Figure 17.

Comp Results Figure of 3TP Algs for Single UAV: (a) OPL Single UAV 3TP Algs Comp; (b) FV Single UAV 3TP Algs Comp.

From Figure 17, it can be concluded that, compared with the classical GWO algorithm, the improved double-layer fusion GWOP algorithm reduced the FV by 18.84% at 100 iterations, 19.86% at 300 iterations, and 18.13% at 500 iterations, an average reduction of 18.94% across the three iteration levels. Compared with the GRPO algorithm, the reductions were 25.88%, 18.75%, and 2.91% at 100, 300, and 500 iterations, respectively, yielding an average reduction of 15.85%.
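The reported percentages follow directly from the FV values quoted for Table 3, as the short check below shows. The helper `percent_reduction` and the variable names are introduced here for illustration; the only data used are the FV figures stated in the text.

```python
def percent_reduction(baseline, value):
    """Relative reduction of value with respect to baseline, in percent."""
    return (baseline - value) / baseline * 100.0

# FV values quoted in the text for 100, 300, and 500 iterations.
fv = {
    "GWOP": [25.63, 22.40, 26.01],
    "GWO": [31.58, 27.95, 31.77],
    "GRPO": [34.58, 27.57, 26.79],
}

def reductions_vs(baseline_name):
    """Per-iteration-level FV reduction of GWOP against the named baseline."""
    return [round(percent_reduction(b, g), 2)
            for b, g in zip(fv[baseline_name], fv["GWOP"])]

vs_gwo = reductions_vs("GWO")      # 18.84, 19.86, 18.13
vs_grpo = reductions_vs("GRPO")    # 25.88, 18.75, 2.91
avg_vs_gwo = round(sum(vs_gwo) / len(vs_gwo), 2)     # 18.94
avg_vs_grpo = round(sum(vs_grpo) / len(vs_grpo), 2)  # 15.85
```

The averages are taken over the three rounded per-level reductions, which reproduces the 18.94% and 15.85% figures.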

In Experiment 2, the GWOP algorithm demonstrated significantly better performance than the GWO and GRPO algorithms in single UAV trajectory planning. To further evaluate the improved GWOP algorithm, six additional trajectory planning algorithms were introduced, and a comparative analysis was conducted using a total of eight different algorithms. Finally, experiments with 100 iterations were carried out for OPL, NOC, and FV, and the results are summarized in Table 4.

Table 4.

Comp Results Table of 8TP Algs for Single UAV.

Table 4 presents a detailed comparison and analysis of the GWOP algorithm against seven other algorithms—GWO, PSO, SSA, DBO, NGO, TSO, and JSOA—in the single-drone flight scenario, focusing on the OPL, NOC, and FV metrics. The results are illustrated in Figure 18. As shown in Figure 18a, the GWOP algorithm achieves a trajectory length of 22.24 m, whereas the other seven algorithms yield trajectory lengths of 30.92 m, 26.88 m, 29.34 m, 23.35 m, 23.66 m, 22.43 m, and 26.90 m, respectively. According to Figure 18b, the GWOP algorithm obtains a fitness value of 22.24 for the FV metric, while the corresponding values for the other algorithms are 40.92, 26.88, 29.34, 23.35, 23.66, 22.43, and 26.90. Regarding the NOC data, the trajectories generated by the GWOP, PSO, DBO, NGO, and TSO algorithms satisfy the collision-free requirement in the single-drone delivery task. Overall, the experimental results indicate that the GWOP algorithm outperforms all other tested algorithms.

Figure 18.

Comp Results Figure of 8TP Algs for Single UAV: (a) OPL Single UAV 8TP Algs Comp; (b) FV Single UAV 8TP Algs Comp.

From Figure 18a, it can be observed that the improved two-layer fused GWOP algorithm achieves an OPL value that is 15.15% lower than the average of the other seven algorithms. As shown in Figure 18b, the improved GWOP algorithm also demonstrates a 19.54% reduction in FV compared to the average of the other algorithms. In summary, these results indicate that the improved two-layer GWOP algorithm offers significant advantages in single UAV trajectory planning.
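These two figures are reductions relative to the mean of the seven comparison algorithms, which can be reproduced from the values quoted for Table 4. The function name `reduction_vs_mean` is an illustrative assumption; the numbers are those stated in the text, in the order GWO, PSO, SSA, DBO, NGO, TSO, and JSOA.

```python
def reduction_vs_mean(value, baseline_values):
    """Percent reduction of value relative to the mean of the baseline values."""
    mean = sum(baseline_values) / len(baseline_values)
    return (mean - value) / mean * 100.0

gwop_opl, gwop_fv = 22.24, 22.24
other_opl = [30.92, 26.88, 29.34, 23.35, 23.66, 22.43, 26.90]
other_fv = [40.92, 26.88, 29.34, 23.35, 23.66, 22.43, 26.90]

opl_reduction = round(reduction_vs_mean(gwop_opl, other_opl), 2)  # 15.15
fv_reduction = round(reduction_vs_mean(gwop_fv, other_fv), 2)     # 19.54
```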

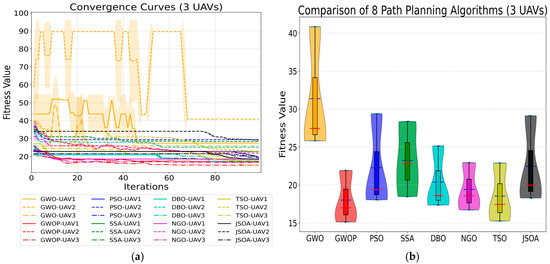

6.2. Discussion on the Results of Multiple UAVs

In Experiment 3, after verifying the superiority of the GWOP algorithm over multiple algorithms in the single UAV scenario, the number of UAVs was increased to evaluate its performance in a multi-UAV setting. The improved two-layer fused GWOP algorithm was compared with the classic GWO and GRPO algorithms in this multi-UAV scenario. The trajectory planning data from the starting point to the endpoint for multiple UAVs are presented in Table 5. Specifically, 100 iterations were conducted for each of the four evaluation indicators—APL, OPL, NOC, and FV—for the GWOP, GWO, and GRPO algorithms. The evaluation criteria consider APL, OPL, and FV optimal when their values are minimized, and NOC is zero, indicating no collisions.

Table 5.

Comp Results Table of 3TP Algs for Multi-UAVs.

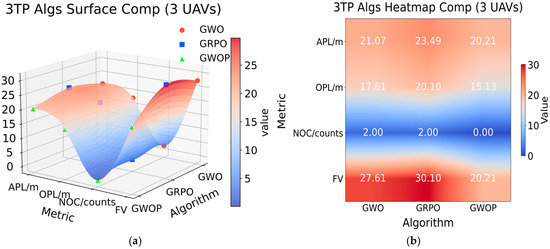

Table 5 presents the APL, OPL, NOC, and FV index data obtained from the flight trajectories of multiple drones using the GWOP, GWO, and GRPO algorithms. The data were visualized and analyzed, resulting in Figure 19. As shown in Figure 19a,b, the GWOP algorithm achieves APL, OPL, and FV values of 20.21 m, 15.13 m, and 20.21 m, respectively, which are significantly lower than the corresponding values for the GWO algorithm (21.07 m, 17.61 m, and 27.61 m) and the GRPO algorithm (23.49 m, 20.10 m, and 30.10 m). Moreover, the GWOP algorithm successfully completed conflict-free obstacle avoidance in the trajectory planning of three drones, whereas both the GWO and GRPO algorithms resulted in two collision incidents. Therefore, the experimental results demonstrate that the GWOP algorithm exhibits a clear advantage over the GWO and GRPO algorithms in multi-drone trajectory planning.

Figure 19.

Comp Results Figure of 3TP Algs for Multi-UAVs: (a) 3TP Algs Surface Comp (3 UAVs); (b) 3TP Algs Heatmap Comp (3 UAVs).

From Figure 19a,b, it can be observed that the improved double-layer fusion GWOP algorithm reduces the APL index by 4.08% compared to the GWO algorithm, the OPL index by 14.08%, and the FV index by 26.80%. Compared with the GRPO algorithm, the reductions are 13.96% for APL, 24.73% for OPL, and 32.86% for FV. These results further confirm the effectiveness of the improved GWOP algorithm in multi-UAV trajectory planning.

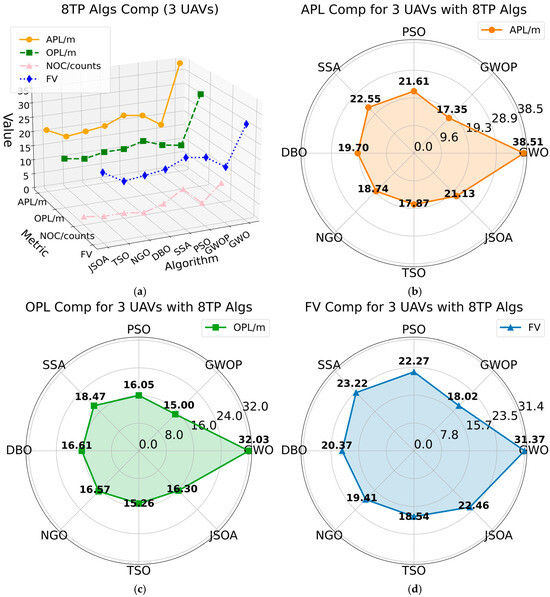

In Experiment 4, after demonstrating the superiority of the GWOP algorithm over two other algorithms in the multi-UAV scenario, six additional trajectory planning algorithms were introduced. A comparative analysis involving a total of eight algorithms was then conducted in the 3D multi-UAV trajectory planning environment to further validate the performance of the improved GWOP algorithm. The experimental results from 100 iterations for APL, OPL, NOC, and FV are summarized in Table 6. The evaluation criteria consider the lowest APL, OPL, and FV values as optimal, provided that no collisions occur (NOC = 0).

Table 6.

Comp Results Table of 8TP Algs for Multi-UAVs.

Table 6 presents a detailed comparison of the GWOP algorithm against seven other algorithms (GWO, PSO, SSA, DBO, NGO, TSO, and JSOA) in the context of multiple drone flights, focusing on the APL, OPL, NOC, and FV metrics. The results are illustrated in Figure 20. According to the APL data in Figure 20b, the GWOP algorithm achieves a trajectory length of 17.35 m, whereas the other seven algorithms yield lengths of 38.51 m, 21.61 m, 22.55 m, 19.70 m, 18.74 m, 17.87 m, and 21.13 m, respectively. As shown in Figure 20c, the OPL value for the GWOP algorithm is 15.00 m, compared to 32.03 m, 16.05 m, 18.47 m, 16.61 m, 16.57 m, 15.26 m, and 16.30 m for the other algorithms. Regarding the FV metric in Figure 20d, the GWOP algorithm obtains a value of 18.02 m, while the corresponding values for the other algorithms are 31.37 m, 22.27 m, 23.22 m, 20.37 m, 19.41 m, 18.54 m, and 22.46 m. Finally, as indicated in Figure 20a, the GWOP algorithm demonstrates a significant advantage over the other seven algorithms in terms of the APL, OPL, NOC, and FV metrics in multi-drone trajectory planning.

Figure 20.

Comp Results Figure of 8TP Algs for Multi-UAVs: (a) 8TP Algs Comp (3 UAVs); (b) APL Comp for 3 UAVs with 8TP Algs; (c) OPL Comp for 3 UAVs with 8TP Algs; (d) FV Comp for 3 UAVs with 8TP Algs.

To summarize, as indicated in Figure 20a, the improved two-layer fusion GWOP algorithm outperforms the other seven algorithms. Specifically, its APL is 24.14% lower than the mean of the other seven algorithms, its OPL is 20.04% lower, and its FV is 19.98% lower. Furthermore, the trajectory generated by the GWOP algorithm from the starting point to the target endpoint satisfies the requirements of an optimal, conflict-free trajectory.
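
The percentages above can be checked directly from the values quoted from Figure 20. The sketch below assumes that "the average" means the arithmetic mean over the seven comparison algorithms (excluding GWOP); the function and variable names are illustrative.

```python
from statistics import mean

# GWOP values and the seven comparison values, copied from the text above.
gwop = {"APL": 17.35, "OPL": 15.00, "FV": 18.02}
others = {
    "APL": [38.51, 21.61, 22.55, 19.70, 18.74, 17.87, 21.13],
    "OPL": [32.03, 16.05, 18.47, 16.61, 16.57, 15.26, 16.30],
    "FV": [31.37, 22.27, 23.22, 20.37, 19.41, 18.54, 22.46],
}


def percent_below_mean(value: float, baseline_values: list) -> float:
    """Percentage by which `value` falls below the mean of `baseline_values`."""
    baseline = mean(baseline_values)
    return (baseline - value) / baseline * 100.0


reductions = {m: percent_below_mean(gwop[m], others[m]) for m in gwop}
for metric, pct in reductions.items():
    print(f"{metric}: {pct:.2f}% below the mean of the other seven algorithms")
```

Under this assumption the computed reductions agree with the reported 24.14%, 20.04%, and 19.98% to within about 0.02 percentage points; the small differences are consistent with rounding of the tabulated values.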

7. Conclusions

This study addresses the challenges of dynamic trajectory planning for unmanned aerial vehicles (UAVs) in logistics environments. First, a three-dimensional multi-objective flight model is established. Based on this model, a two-layer fusion GWOP algorithm is proposed. A graph attention network (GAT) is then integrated to model the dynamic associations among the environment, UAVs, and tasks. The key experimental findings are summarized as follows:

- The CEC2017 benchmark functions are employed for evaluation. Compared with the GWO algorithm, the improved two-layer fusion GWOP algorithm demonstrates superior convergence curves and fitness values across the unimodal, multimodal, and complex functions F1–F10. Compared with six other algorithms (PSO, SSA, DBO, NGO, TSO, and JSOA), it achieves better fitness values on the hybrid functions F11–F20 and the composition functions F21–F25.

- In the single-UAV 3D trajectory planning experiment, compared with the GWO algorithm, the improved GWOP algorithm reduces the FV (fitness value) by 18.84%, 19.86%, and 18.13% at 100, 300, and 500 iterations, respectively, an average reduction of 18.94%. The NOC (number of obstacle collisions) is reduced from 2 to 0. Compared with the classic GRPO algorithm, the FV reductions are 25.88%, 18.75%, and 2.91% at the same iteration counts, an average reduction of 15.85%, and the NOC is reduced from 1 to 0. These results highlight the algorithm’s superior path optimization capability and robust obstacle avoidance performance.

- When extended to a comparison involving eight trajectory planning algorithms, the GWOP algorithm achieves OPL (optimal path length) and FV values that are 15.15% and 19.54% lower than the average, respectively, further confirming its effectiveness in complex 3D trajectory planning for single UAVs.

- In the multi-UAV 3D scenario, the advantages of the GWOP algorithm become even more pronounced. Compared with the GWO algorithm, it reduces APL (average path length), OPL, and FV by 4.08%, 14.08%, and 26.80%, respectively. Compared with the GRPO algorithm, the reductions are 13.96%, 24.73%, and 32.86%, respectively. The NOC is also reduced from 2 to 0, further validating the algorithm’s superior obstacle avoidance and trajectory optimization capabilities.

- When evaluated against seven other algorithms in a multi-UAV environment, the GWOP algorithm shows reductions of 24.14% in APL, 20.04% in OPL, and 19.98% in FV relative to the mean of those seven algorithms. Collectively, these experiments demonstrate that the improved GWOP algorithm consistently outperforms the other methods in terms of path length, obstacle avoidance, and trajectory smoothness, offering an efficient and reliable planning solution for UAV logistics operations.
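
The average FV reductions quoted for the single-UAV experiment appear to be unweighted means of the per-iteration reductions at 100, 300, and 500 iterations. A quick check, with variable names chosen here purely for illustration:

```python
from statistics import mean

# FV reductions (%) at 100, 300, and 500 iterations, as quoted in the
# single-UAV finding above.
first_comparison = [18.84, 19.86, 18.13]  # first comparison in that finding
grpo_comparison = [25.88, 18.75, 2.91]    # comparison against GRPO

avg_first = round(mean(first_comparison), 2)
avg_grpo = round(mean(grpo_comparison), 2)

print(f"first comparison: {avg_first}%")
print(f"GRPO comparison:  {avg_grpo}%")
```

Both results match the reported averages of 18.94% and 15.85%. Because the mean is unweighted, the 2.91% reduction at 500 iterations pulls the GRPO average down considerably, so the per-iteration figures are more informative than the average alone.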

Despite this strong performance, the algorithm can be further enhanced in three key directions:

First, adaptability to meteorological conditions can be improved by incorporating real-time weather data, such as wind intensity and rainfall, to better reflect real-world logistics uncertainties.

Second, the algorithm can be extended to large-scale UAV clusters to examine its scalability when coordinating dozens or even hundreds of UAVs, with communication and path coordination mechanisms optimized for complex swarm logistics tasks.

Third, the algorithm can be extended to cross-platform and multi-objective optimization so that it applies to heterogeneous logistics systems that include autonomous ground vehicles and unmanned ships. This would involve integrating constraints such as energy consumption and time windows, ultimately supporting a globally coordinated intelligent logistics path planning system and advancing the smart logistics ecosystem.

Author Contributions

J.D.: Conceptualization, Investigation, Validation, Writing – original draft, Writing – review and editing, Formal analysis, Data curation. Y.Z.: Formal analysis, Data curation, Investigation, Project administration, Writing – original draft. Y.S.: Data curation, Investigation, Project administration. H.Z.: Conceptualization, Funding acquisition, Supervision, Writing – review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Social Science Fund of China (No. 22&ZD169) and the Key Project of the Civil Aviation Joint Fund of the National Natural Science Foundation of China (No. U2133207).

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| OPL | Optimal Path Length |
| APL | Average Path Length |
| NOC | Number of Obstacle Collisions |
| FV | Fitness Value |
| NOU | Number of UAVs |
| TP | Trajectory Planning |
| Algs | Algorithms |
| Comp | Comparison |

References

- Niu, B.; Zhang, J.; Xie, F. Drone logistics’ resilient development: Impacts of consumer choice, competition, and regulation. Transp. Res. Part A Policy Pract. 2024, 185, 104126.

- Shang, Z.; Bradley, J.; Shen, Z. A co-optimal coverage path planning method for aerial scanning of complex structures. Expert Syst. Appl. 2020, 158, 113535.

- Liu, X.; Li, G.; Yang, H.; Zhang, N.; Wang, L.; Shao, P. Agricultural UAV trajectory planning by incorporating multi-mechanism improved grey wolf optimization algorithm. Expert Syst. Appl. 2023, 233, 120946.

- Zhang, C.; Zhou, W.; Qin, W.; Tang, W. A novel UAV path planning approach: Heuristic crossing search and rescue optimization algorithm. Expert Syst. Appl. 2023, 215, 119243.

- Solka, J.L.; Perry, J.C.; Poellinger, B.R.; Rogers, G.W. Fast computation of optimal paths using a parallel Dijkstra algorithm with embedded constraints. Neurocomputing 1995, 8, 195–212.

- Phong, T.Q.; Hoai An, L.T.; Tao, P.D. Decomposition branch and bound method for globally solving linearly constrained indefinite quadratic minimization problems. Oper. Res. Lett. 1995, 17, 215–220.

- Huang, J.; Chen, C.; Shen, J.; Liu, G.; Xu, F. A self-adaptive neighborhood search A-star algorithm for mobile robots global path planning. Comput. Electr. Eng. 2025, 123, 110018.

- Wang, J.; Bi, C.; Liu, F.; Shan, J. Dubins-RRT* motion planning algorithm considering curvature-constrained path optimization. Expert Syst. Appl. 2026, 296, 128390.

- Yu, X.; Jiang, N.; Wang, X.; Li, M. A hybrid algorithm based on grey wolf optimizer and differential evolution for UAV path planning. Expert Syst. Appl. 2023, 215, 119327.

- Lou, T.; Yue, Z.; Jiao, Y.; He, Z. A hybrid strategy-based GJO algorithm for robot path planning. Expert Syst. Appl. 2024, 238, 121975.

- Cui, Y.; Hu, W.; Rahmani, A. A reinforcement learning based artificial bee colony algorithm with application in robot path planning. Expert Syst. Appl. 2022, 203, 117389.

- Wang, Y.; Tong, K.; Fu, C.; Wang, Y.; Li, Q.; Wang, X.; He, Y.; Xu, L. Hybrid path planning algorithm for robots based on modified golden jackal optimization method and dynamic window method. Expert Syst. Appl. 2025, 282, 127808.

- Lyu, L.; Yang, F. MMPA: A modified marine predator algorithm for 3D UAV path planning in complex environments with multiple threats. Expert Syst. Appl. 2024, 257, 124955.

- Xiao, Z.; Li, P.; Liu, C.; Gao, H.; Wang, X. MACNS: A generic graph neural network integrated deep reinforcement learning based multi-agent collaborative navigation system for dynamic trajectory planning. Inf. Fusion 2024, 105, 102250.

- Yu, X.; Luo, W. Reinforcement learning-based multi-strategy cuckoo search algorithm for 3D UAV path planning. Expert Syst. Appl. 2023, 223, 119910.

- Popović, M.; Ott, J.; Rückin, J.; Kochenderfer, M.J. Learning-based methods for adaptive informative path planning. Robot. Auton. Syst. 2024, 179, 104727.

- Fan, Q.; Huang, H.; Li, Y.; Han, Z.; Hu, Y.; Huang, D. Beetle antenna strategy based grey wolf optimization. Expert Syst. Appl. 2021, 165, 113882.

- Tang, H.; Sun, W.; Lin, A.; Xue, M.; Zhang, X. A GWO-based multi-robot cooperation method for target searching in unknown environments. Expert Syst. Appl. 2021, 186, 115795.

- Li, K.; Ge, F.; Han, Y.; Wang, Y.A.; Xu, W. Path planning of multiple UAVs with online changing tasks by an ORPFOA algorithm. Eng. Appl. Artif. Intell. 2020, 94, 103807.

- Niu, Y.; Yan, X.; Wang, Y.; Niu, Y. 3D real-time dynamic path planning for UAV based on improved interfered fluid dynamical system and artificial neural network. Adv. Eng. Inform. 2024, 59, 102306.

- Lee, H. Research on multi-functional logistics intelligent Unmanned Aerial Vehicle. Eng. Appl. Artif. Intell. 2022, 116, 105341.

- Yu, S.; Chen, J.; Liu, G.; Tong, X.; Sun, Y. SOF-RRT*: An improved path planning algorithm using spatial offset sampling. Eng. Appl. Artif. Intell. 2023, 126, 106875.

- Fang, W.; Liao, Z.; Bai, Y. Improved ACO algorithm fused with improved Q-Learning algorithm for Bessel curve global path planning of search and rescue robots. Robot. Auton. Syst. 2024, 182, 104822.

- Liu, X.; Shao, P.; Li, G.; Ye, L.; Yang, H. Complex hilly terrain agricultural UAV trajectory planning driven by Grey Wolf Optimizer with interference model. Appl. Soft Comput. 2024, 160, 111710.

- Wang, Z.; Yuan, F.; Li, R.; Zhang, M.; Luo, X. Hidden AS link prediction based on random forest feature selection and GWO-XGBoost model. Comput. Netw. 2025, 262, 111164.

- Zhu, C.; Bouteraa, Y.; Khishe, M.; Martín, D.; Hernando-Gallego, F.; Vaiyapuri, T. Enhancing unmanned marine vehicle path planning: A fractal-enhanced chaotic grey wolf and differential evolution approach. Knowl.-Based Syst. 2025, 317, 113481.

- Zhang, L.; Yang, H.; Yang, C.; Zhang, J.; Wang, J. Optimal design of mixed dielectric coaxial-annular TSV using GWO algorithm based on artificial neural network. Integration 2024, 97, 102205.

- Lakzaei, B.; Haghir Chehreghani, M.; Bagheri, A. LOSS-GAT: Label propagation and one-class semi-supervised graph attention network for fake news detection. Appl. Soft Comput. 2025, 174, 112965.

- Stamatopoulos, M.; Banerjee, A.; Nikolakopoulos, G. Conflict-free optimal motion planning for parallel aerial 3D printing using multiple UAVs. Expert Syst. Appl. 2024, 246, 123201.

- Kim, M.; Jang, Y.; Sung, T. Graph-based technology recommendation system using GAT-NGCF. Expert Syst. Appl. 2025, 288, 128240.

- Zhang, H.; An, X.; He, Q.; Yao, Y.; Zhang, Y.; Fan, F.; Teng, Y. Quadratic graph attention network (Q-GAT) for robust construction of gene regulatory network. Neurocomputing 2025, 631, 129635.

- Zhao, J.; Yan, Z.; Zhou, Z.; Chen, X.; Wu, B.; Wang, S. A ship trajectory prediction method based on GAT and LSTM. Ocean Eng. 2023, 289, 116159.

- Zhou, L.; Wang, H. MST-GAT: A multi-perspective spatial-temporal graph attention network for multi-sensor equipment remaining useful life prediction. Inf. Fusion 2024, 110, 102462.

- Sheng, Z.; Song, T.; Song, J.; Liu, Y.; Ren, P. Bidirectional rapidly exploring random tree path planning algorithm based on adaptive strategies and artificial potential fields. Eng. Appl. Artif. Intell. 2025, 148, 110393.

- Niu, Y.; Yan, X.; Wang, Y.; Niu, Y. Three-dimensional UCAV path planning using a novel modified artificial ecosystem optimizer. Expert Syst. Appl. 2023, 217, 119499.

- Fu, B.; Chen, Y.; Quan, Y.; Zhou, X.; Li, C. Bidirectional artificial potential field-based ant colony optimization for robot path planning. Robot. Auton. Syst. 2025, 183, 104834.

- Stache, F.; Westheider, J.; Magistri, F.; Stachniss, C.; Popović, M. Adaptive path planning for UAVs for multi-resolution semantic segmentation. Robot. Auton. Syst. 2023, 159, 104288.

- Lin, H.; Shodiq, M.A.F.; Hsieh, M.F. Robot path planning based on three-dimensional artificial potential field. Eng. Appl. Artif. Intell. 2025, 144, 110127.

- Xia, Q.; Liu, S.; Guo, M.; Wang, H.; Zhou, Q.; Zhang, X. Multi-UAV trajectory planning using gradient-based sequence minimal optimization. Robot. Auton. Syst. 2021, 137, 103728.

- Hu, L.; Wei, C.; Yin, L. MAPPO-ITD3-IMLFQ algorithm for multi-mobile robot path planning. Adv. Eng. Inform. 2025, 65, 103398.

- Lin, S.; Liu, A.; Wang, J.; Kong, X. An improved fault-tolerant cultural-PSO with probability for multi-AGV path planning. Expert Syst. Appl. 2024, 237, 121510.

- Zhang, T.; Hu, H.; Liang, Y.; Liu, X.; Rong, Y.; Wu, C.; Zhang, G.; Huang, Y. A novel path planning approach to minimize machining time in laser machining of irregular micro-holes using adaptive discrete grey wolf optimizer. Comput. Ind. Eng. 2024, 193, 110320.