Angle Effects in UAV Quantitative Remote Sensing: Research Progress, Challenges and Trends

Abstract

Highlights

- This paper summarizes the research progress on the angle effect in UAV quantitative remote sensing, covering theories, data acquisition techniques, data processing methods, and practical applications.

- The article clearly outlines the current theoretical and technical challenges in studying the angle effect in UAV quantitative remote sensing and proposes future research directions.

- The article provides technical references and methodological support for practical engineering applications.

- The paper contributes to advancing theoretical innovation and technological breakthroughs in this field.


1. Introduction

2. Research Methods

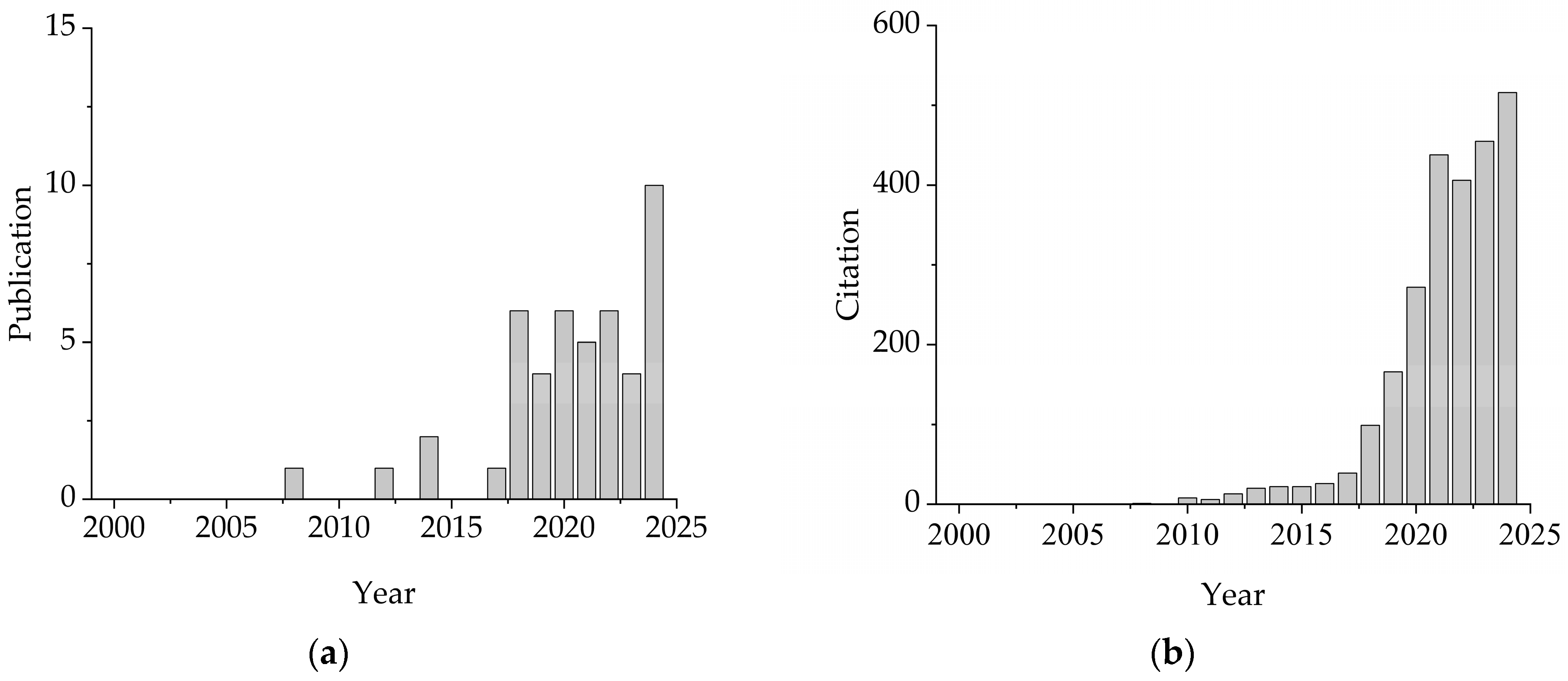

- Although UAV remote sensing has only recently gained popularity as a research field, the time span was set from 2000 to the present to ensure comprehensive literature coverage and to analyze the developmental trends of this field.

- Because technical terms may appear as abbreviations or as different word combinations, the retrieval keywords were chosen to be exhaustive and combined in various ways. The Boolean search query used in this study was:

  - (Drone OR UAV OR "Unmanned Aerial Vehicle")
  - AND (BRDF OR "Bidirectional Reflectance" OR "Angle Effect" OR "Angular Effect")
  - AND ("Remote Sensing" OR "Quantitative Remote Sensing")

- Because each paper must address the angle effect in UAV quantitative remote sensing, the retrieved records were screened for topical relevance.

- Only papers published in journals or conference proceedings were retained; surveys, reviews, and book chapters were excluded.
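The retrieval logic above (synonyms OR-ed within a concept group, groups AND-ed together) can be sketched in a few lines of Python. This is only an illustration, not the tool used in the study: `build_topic_query` and `matches_query` are hypothetical names, and the local matcher is a crude case-insensitive substring test that does not reproduce a bibliographic database's field tags, stemming, or phrase handling. It can, however, serve as a quick first-pass check before manual screening.

```python
from typing import Sequence

# Concept groups from the retrieval query: terms are OR-ed within a group,
# and the groups are AND-ed together.
CONCEPT_GROUPS: list[list[str]] = [
    ["Drone", "UAV", "Unmanned Aerial Vehicle"],
    ["BRDF", "Bidirectional Reflectance", "Angle Effect", "Angular Effect"],
    ["Remote Sensing", "Quantitative Remote Sensing"],
]


def _format_term(term: str) -> str:
    """Quote multi-word terms so they are searched as exact phrases."""
    return f'"{term}"' if " " in term else term


def build_topic_query(groups: Sequence[Sequence[str]]) -> str:
    """Assemble a Boolean query of the form (A OR B) AND (C OR D) ..."""
    clauses = ["(" + " OR ".join(_format_term(t) for t in group) + ")" for group in groups]
    return " AND ".join(clauses)


def matches_query(text: str, groups: Sequence[Sequence[str]]) -> bool:
    """Crude local screen: every group must contribute at least one term found in the text."""
    lowered = text.lower()
    return all(any(term.lower() in lowered for term in group) for group in groups)


if __name__ == "__main__":
    print(build_topic_query(CONCEPT_GROUPS))
    title = ("The Method of Multi-Angle Remote Sensing Observation Based on "
             "Unmanned Aerial Vehicles and the Validation of BRDF")
    print(matches_query(title, CONCEPT_GROUPS))  # True
```

Keeping the synonym groups as data rather than as a hand-written string makes it easy to add a new abbreviation to one group without re-typing the whole query.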

3. The Theoretical Basis of the Angle Effect

3.1. The Bidirectional Reflection Characteristics and Anisotropic Mechanism of Ground Objects

- The radiative transfer model is applicable to continuous vegetation canopies, such as crops during the growing season and extensive grasslands, but not to complex discontinuous canopies such as forests. Current research mainly couples PROSPECT-D with the SAIL model or the DART model to simulate the optical properties of complex vegetation. The bidirectional gap probability was introduced into the SAILH model to describe the hotspot effect [44,45], and stochastic (random-field) radiative transfer theory was introduced to extend radiative transfer modeling to forests and other discontinuous canopies [46].

- Geometric optical models are suited to inversion over discrete vegetation and rough surfaces, such as sparse forests, coniferous forests, and orchards. They can describe macroscopic phenomena at larger scales and provide a basis for comparing scattering by ground objects with scattering by the atmosphere. BRDF models for mountainous terrain, applicable to coarse-resolution observations over composite slopes, have been developed [47,48], but multi-angle modeling and validation over complex mountainous terrain still require further research [49].

- Hybrid models, such as the geometric-optical radiative transfer (GORT) model, are applicable to both sparse and discrete vegetation [50]. Combining several models for a given inversion task can effectively improve inversion accuracy. A unified vegetation BRDF model applicable to various vegetation types and atmospheric conditions across the solar shortwave range has also been developed [51]. This model creates more favorable conditions for the remote sensing inversion of vegetation parameters, and its modeling approach represents the long-term direction of vegetation BRDF research [43].

- Computer simulation models can, in principle, compute the radiative transfer process for any scene that can be described mathematically, and they can also serve as tools for verifying other models. Examples include the radiosity-based DIANA model [52], the RGM model [53], the RAPID model [54], the ray-tracing-based DART model [55], the FLIGHT model [56], and the Raytran model [57]. Computer models can accurately depict the radiation distribution of complex vegetation canopies and reproduce realistic illumination and reflection effects. Their main shortcomings, however, are the effort needed to construct detailed scene structure, their complexity, and the difficulty of using them for inversion. In recent years, to simulate large-scale surfaces and complex terrain, the LESS model has been developed [58,59]; it supports both forward and backward ray tracing and exploits recent computer graphics techniques to simulate remote sensing signals over large scenes more accurately and efficiently.
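The hotspot mechanism mentioned for the SAILH model can be illustrated numerically. The sketch below computes the bidirectional gap probability, i.e. the joint probability that a sun ray reaches the bottom of the canopy and that the same point is visible to the sensor. It follows the form used in SAIL-family codes (4SAIL) as an assumption, with further simplifications: a single homogeneous layer, a spherical leaf angle distribution (G = 0.5), and a hotspot size parameter `hotspot` (leaf size relative to canopy height). The function names are hypothetical.

```python
import math


def extinction_spherical(zenith_deg: float) -> float:
    """Extinction coefficient G/cos(theta) for a spherical leaf angle distribution (G = 0.5)."""
    return 0.5 / math.cos(math.radians(zenith_deg))


def gap_probability(zenith_deg: float, lai: float) -> float:
    """Single-direction gap probability through the canopy (Beer-Lambert law)."""
    return math.exp(-extinction_spherical(zenith_deg) * lai)


def bidirectional_gap_probability(sza_deg: float, vza_deg: float, raa_deg: float,
                                  lai: float, hotspot: float = 0.1) -> float:
    """Joint gap probability for the sun and view directions, including hotspot correlation.

    raa_deg is the sun-view relative azimuth; 0 deg puts the sensor on the sun's side
    (backscatter), which is where the hotspot occurs.
    """
    ks = extinction_spherical(sza_deg)
    ko = extinction_spherical(vza_deg)
    ts = math.tan(math.radians(sza_deg))
    to = math.tan(math.radians(vza_deg))
    # Horizontal separation of the sun and view rays per unit depth in the canopy
    dso = math.sqrt(max(ts * ts + to * to - 2.0 * ts * to * math.cos(math.radians(raa_deg)), 0.0))
    alf = (dso / hotspot) * 2.0 / (ks + ko)
    # Correlation factor: exactly 1 at the hotspot, tending to 0 far from it
    corr = 1.0 if alf == 0.0 else (1.0 - math.exp(-alf)) / alf
    return math.exp(-(ks + ko) * lai + math.sqrt(ks * ko) * lai * corr)
```

At the hotspot the two rays share the same gaps, so the joint probability collapses to the single-direction value; far from it (or with a vanishing `hotspot` parameter) it approaches the product of the two independent gap probabilities. Comparing `bidirectional_gap_probability(30, 30, 0, 3.0)` with `bidirectional_gap_probability(30, 30, 180, 3.0)` shows how much more sunlit background is seen in the backscatter direction.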

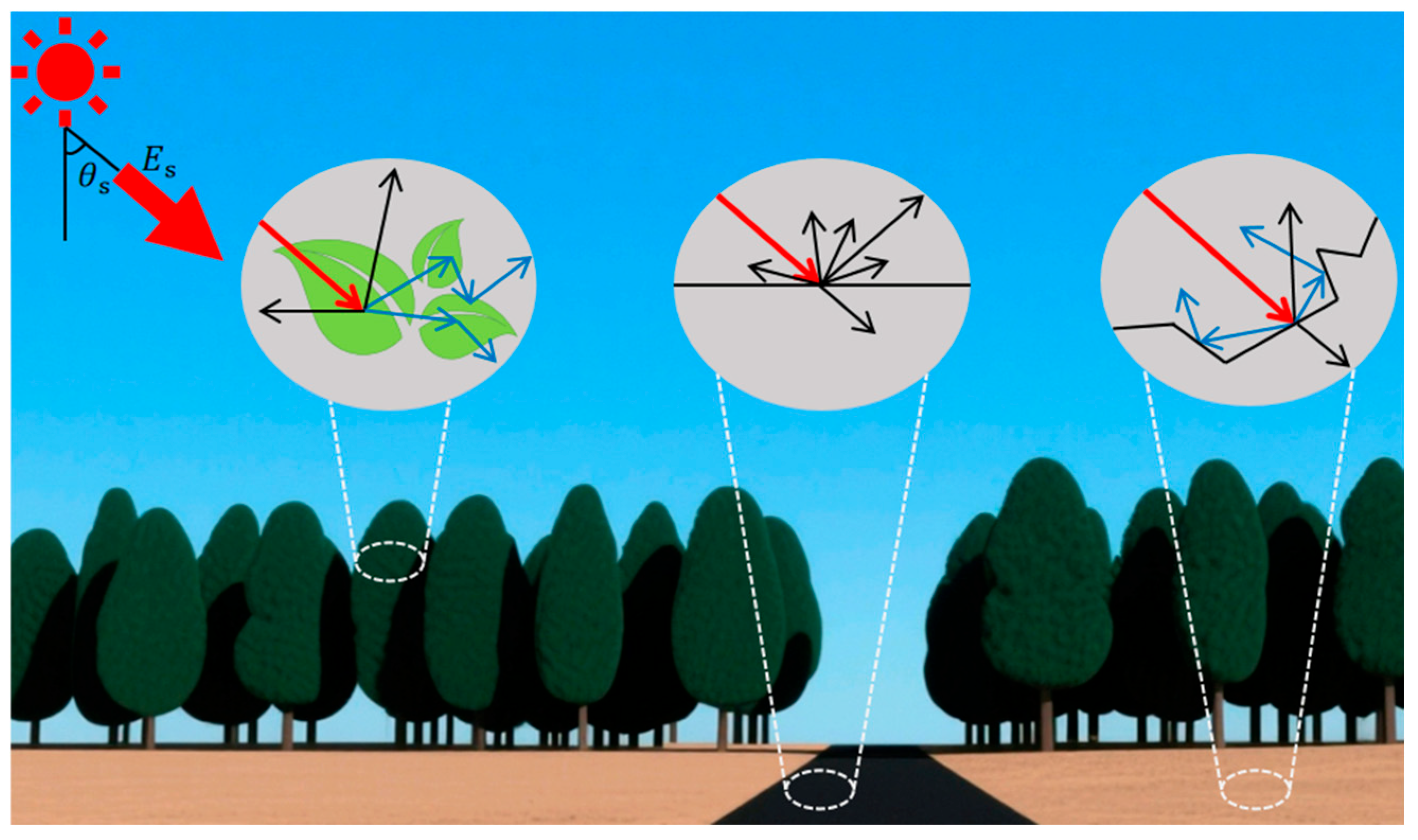

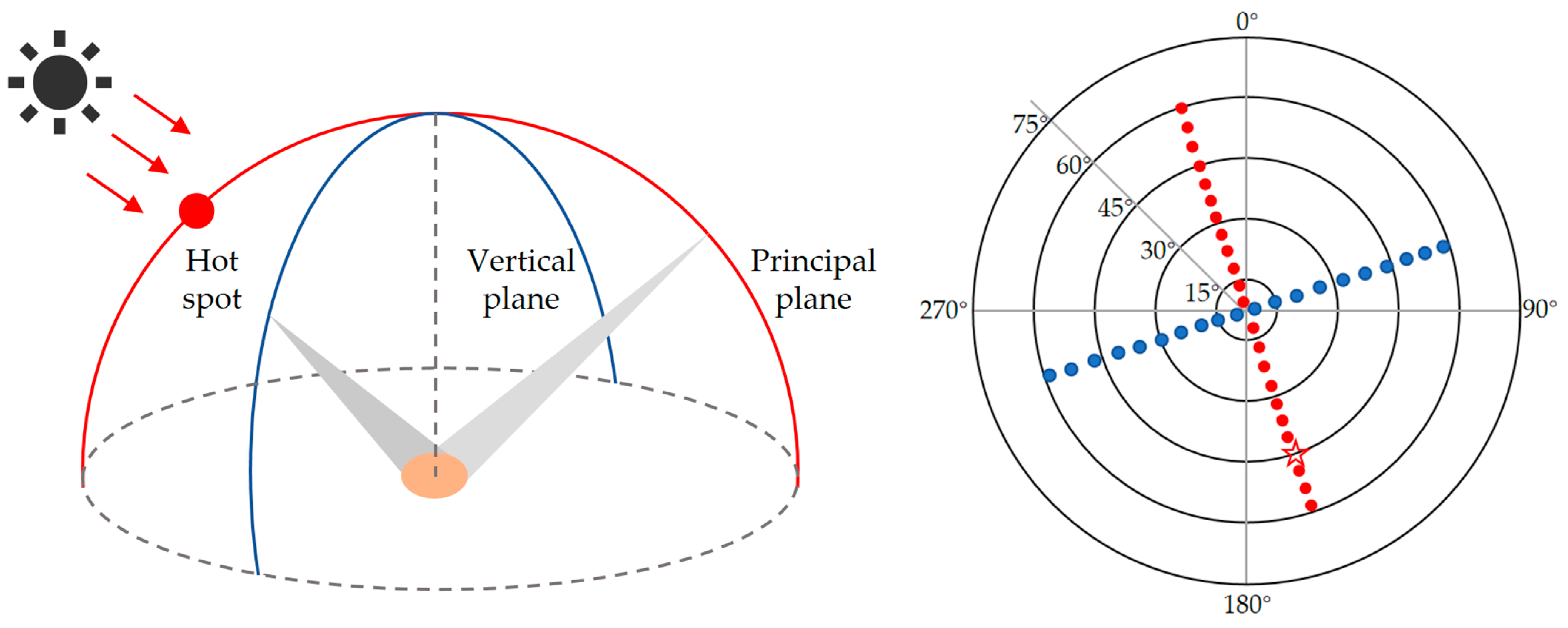
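For the geometric optical models discussed above, a central quantity is the proportion of background that is both sunlit and visible to the sensor. The sketch below uses the widely used Li-Strahler overlap formulation (the same one behind the LiSparse kernel), under the assumptions of Poisson-distributed spheroidal crowns over flat ground: `crown_density` is crowns per unit area, `crown_radius` the horizontal crown radius, and `h_over_b` and `b_over_r` the crown-shape ratios (defaults 2 and 1, the values commonly used in MODIS kernel-driven products). Treat the exact parameterization as an assumption to check against the geometric-optical literature; the function names are hypothetical.

```python
import math


def _equivalent_zenith(theta_rad: float, b_over_r: float) -> float:
    """Zenith angle transformed so that a spheroidal crown can be treated as a sphere."""
    return math.atan(b_over_r * math.tan(theta_rad))


def shadow_overlap(sza_deg: float, vza_deg: float, raa_deg: float,
                   h_over_b: float = 2.0, b_over_r: float = 1.0) -> float:
    """Overlap O of a crown's illumination and viewing shadows, normalized by pi * r^2.

    raa_deg = 0 puts the sensor on the sun's side (backscatter); with sza == vza this is
    the hotspot, where the two shadows coincide.
    """
    ts = _equivalent_zenith(math.radians(sza_deg), b_over_r)
    tv = _equivalent_zenith(math.radians(vza_deg), b_over_r)
    phi = math.radians(raa_deg)
    tan_s, tan_v = math.tan(ts), math.tan(tv)
    sec_sum = 1.0 / math.cos(ts) + 1.0 / math.cos(tv)
    d2 = max(tan_s * tan_s + tan_v * tan_v - 2.0 * tan_s * tan_v * math.cos(phi), 0.0)
    cos_t = h_over_b * math.sqrt(d2 + (tan_s * tan_v * math.sin(phi)) ** 2) / sec_sum
    t = math.acos(min(max(cos_t, -1.0), 1.0))
    return (t - math.sin(t) * math.cos(t)) * sec_sum / math.pi


def sunlit_background_fraction(sza_deg: float, vza_deg: float, raa_deg: float,
                               crown_density: float, crown_radius: float,
                               h_over_b: float = 2.0, b_over_r: float = 1.0) -> float:
    """Proportion of background that is both sunlit and visible (Poisson-distributed crowns)."""
    ts = _equivalent_zenith(math.radians(sza_deg), b_over_r)
    tv = _equivalent_zenith(math.radians(vza_deg), b_over_r)
    sec_sum = 1.0 / math.cos(ts) + 1.0 / math.cos(tv)
    cover = crown_density * math.pi * crown_radius ** 2  # lambda * pi * r^2
    overlap = shadow_overlap(sza_deg, vza_deg, raa_deg, h_over_b, b_over_r)
    return math.exp(-cover * (sec_sum - overlap))
```

At the hotspot the viewing shadow hides inside the illumination shadow, so the result equals the single-direction gap probability; in the forward-scattering direction the shadows do not overlap and the result falls to the product of two independent gap probabilities, which is why sparse canopies look darker when viewed away from the sun.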

3.2. The Radiation Transport Mechanism of UAV Multi-Angle Observation

- Nadir observation (view zenith angle < 10°) captures the near-vertical reflectance of the surface and is less affected by shadows, but it struggles to capture lateral structural features of the canopy.

- Tilted observation (view zenith angle > 30°) is more sensitive to vertical canopy structure (e.g., stem density) and fine-scale texture, but it is more susceptible to surface shadows and atmospheric scattering, as shown in Figure 3.

- Multi-angle observation combinations build multi-angle datasets (e.g., samples of the reflectance distribution over the viewing hemisphere) through multi-pass flights or synchronized multi-lens acquisition, enabling joint retrieval of three-dimensional surface structure and physicochemical parameters.
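Why combining view angles helps joint retrieval can be shown with a deliberately simple lookup-table (LUT) inversion. The forward model is a toy assumption, not one of the models reviewed here: directional reflectance is a gap-weighted mix of canopy and soil reflectance, ρ(θv) = ρc·(1 - P(θv)) + ρs·P(θv), with P(θv) = exp(-0.5·LAI/cos θv) and a known soil reflectance ρs. LAI and ρc are retrieved together by choosing the LUT entry with the lowest RMSE against the multi-angle observations. `toy_reflectance`, `build_lut`, `lut_invert`, and the grid values are hypothetical.

```python
import itertools
import math
from typing import Dict, List, Sequence, Tuple

SOIL_REFLECTANCE = 0.15  # hypothetical background reflectance, assumed known


def toy_reflectance(lai: float, rho_canopy: float, vza_deg: float) -> float:
    """Toy directional reflectance: canopy and soil mixed by the view-direction gap probability."""
    gap = math.exp(-0.5 * lai / math.cos(math.radians(vza_deg)))  # spherical leaves, G = 0.5
    return rho_canopy * (1.0 - gap) + SOIL_REFLECTANCE * gap


def build_lut(lai_values: Sequence[float], rho_values: Sequence[float],
              angles_deg: Sequence[float]) -> Dict[Tuple[float, float], List[float]]:
    """Precompute simulated multi-angle reflectance for every (LAI, rho_canopy) pair."""
    return {
        (lai, rho): [toy_reflectance(lai, rho, a) for a in angles_deg]
        for lai, rho in itertools.product(lai_values, rho_values)
    }


def lut_invert(observed: Sequence[float],
               lut: Dict[Tuple[float, float], List[float]]) -> Tuple[float, float]:
    """Return the (LAI, rho_canopy) entry whose simulated reflectances have the lowest RMSE."""
    def rmse(simulated: List[float]) -> float:
        return math.sqrt(sum((s - o) ** 2 for s, o in zip(simulated, observed)) / len(observed))
    return min(lut, key=lambda params: rmse(lut[params]))


if __name__ == "__main__":
    angles = [0.0, 20.0, 40.0, 60.0]              # view zenith angles of a multi-angle flight
    lai_grid = [0.5 * i for i in range(1, 13)]    # 0.5 ... 6.0
    rho_grid = [0.05 * i for i in range(4, 13)]   # 0.20 ... 0.60
    lut = build_lut(lai_grid, rho_grid, angles)
    truth = (lai_grid[4], rho_grid[5])            # LAI = 2.5, rho_canopy = 0.45
    observed = [toy_reflectance(*truth, a) for a in angles]
    print(lut_invert(observed, lut))
```

With a single view angle, any (LAI, ρc) pair that keeps (ρc - ρs)·(1 - P) constant fits the observation equally well; it is the angular dependence of P across several view zenith angles that separates LAI from ρc.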

4. Progress in Multi-Angle Data Acquisition Technology for UAV Remote Sensing

4.1. The Development of UAV Remote Sensing Platform Technology

4.2. Breakthroughs in Spectral Imaging Sensor Technology

4.3. Multi-Angle Data Collection Methods

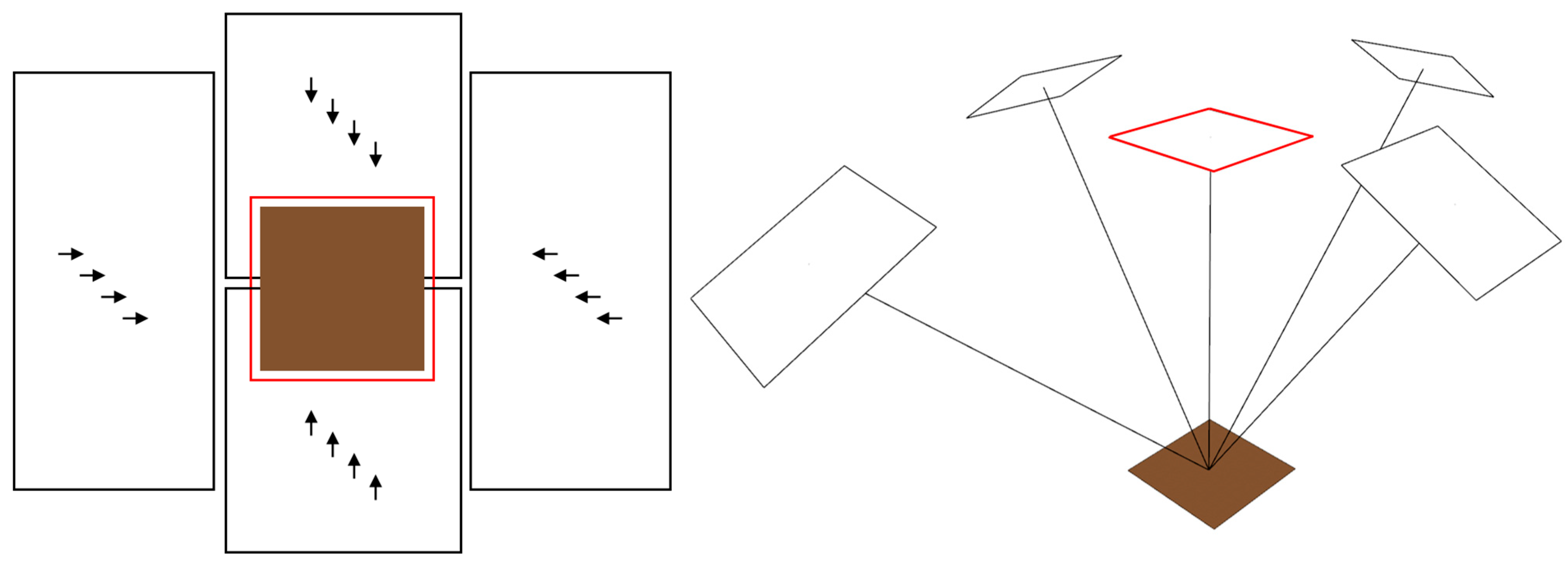

4.3.1. Nadir-Parallel Flight Path

4.3.2. Oblique-Parallel Flight Path

4.3.3. Crisscrossing Flight Route

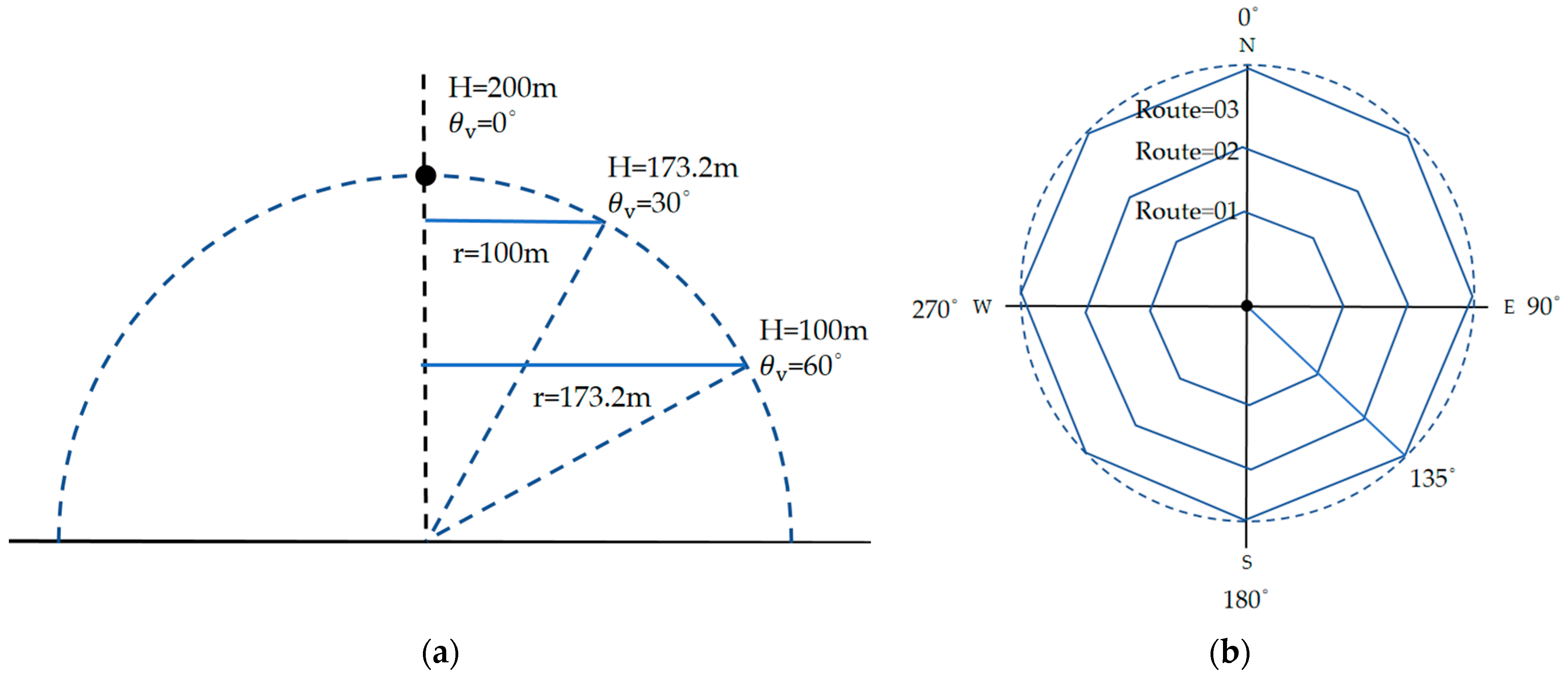

4.3.4. Spiral-Descending Flight Path

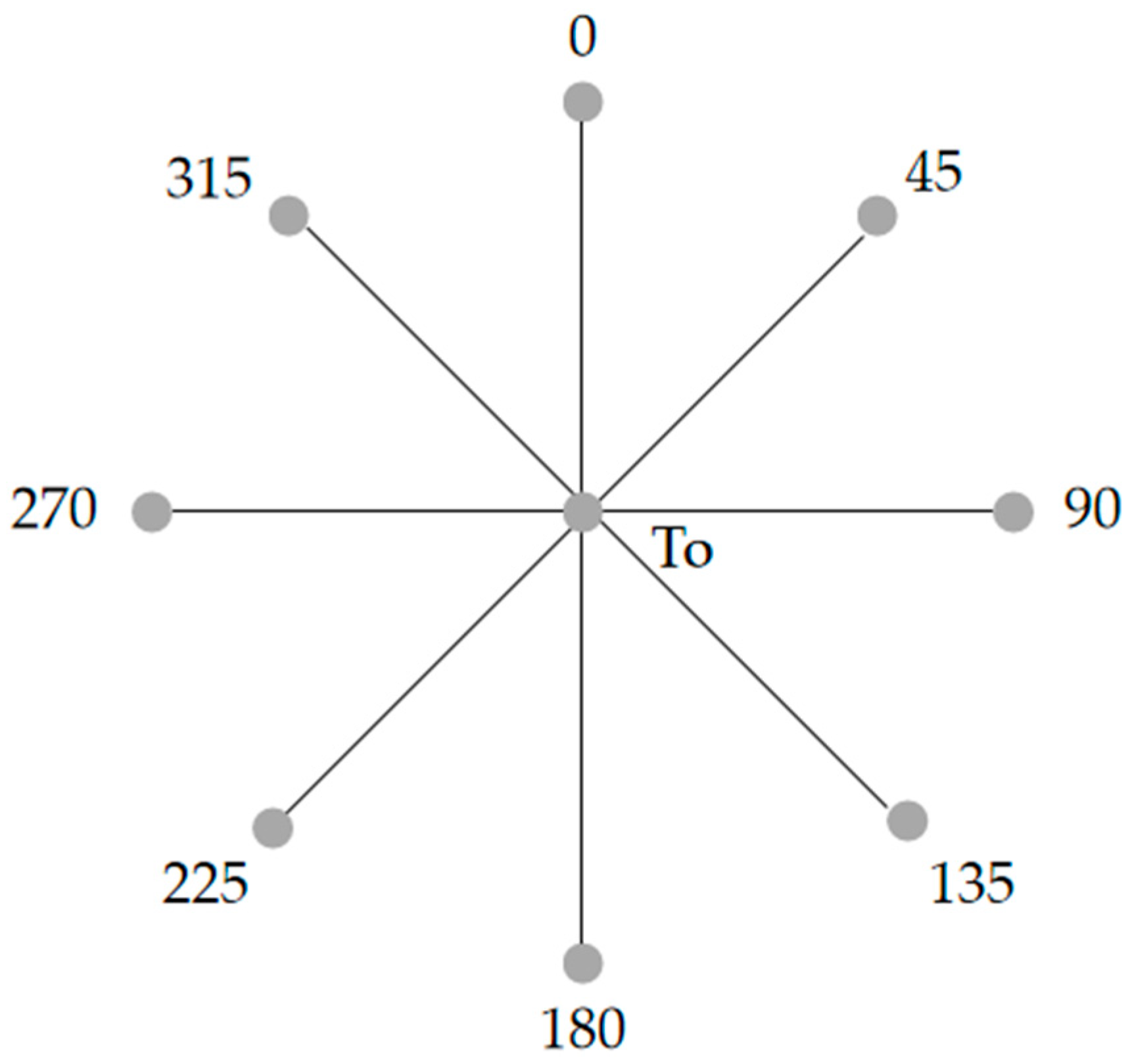

4.3.5. Radial-Descending Flight Path

4.3.6. Comparison of Multi-Angle Data Collection Methods

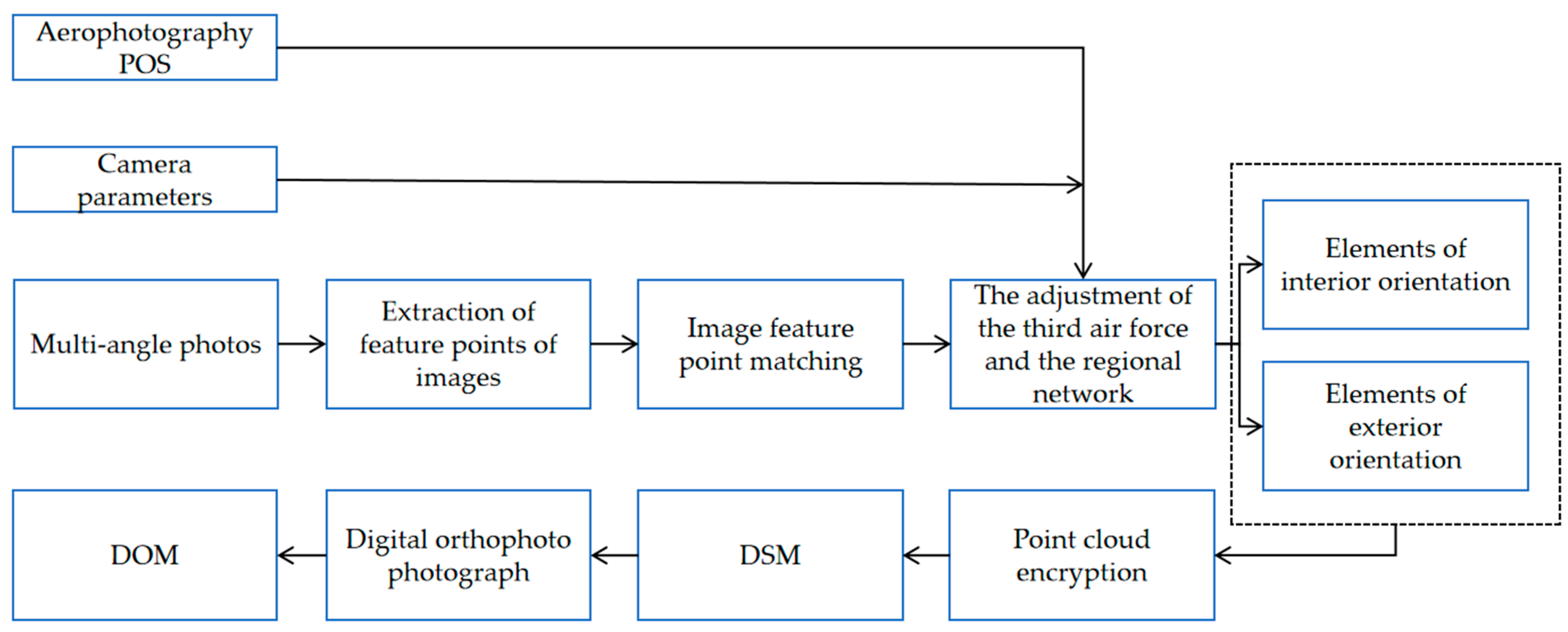

4.4. Data Processing and Correction Technologies

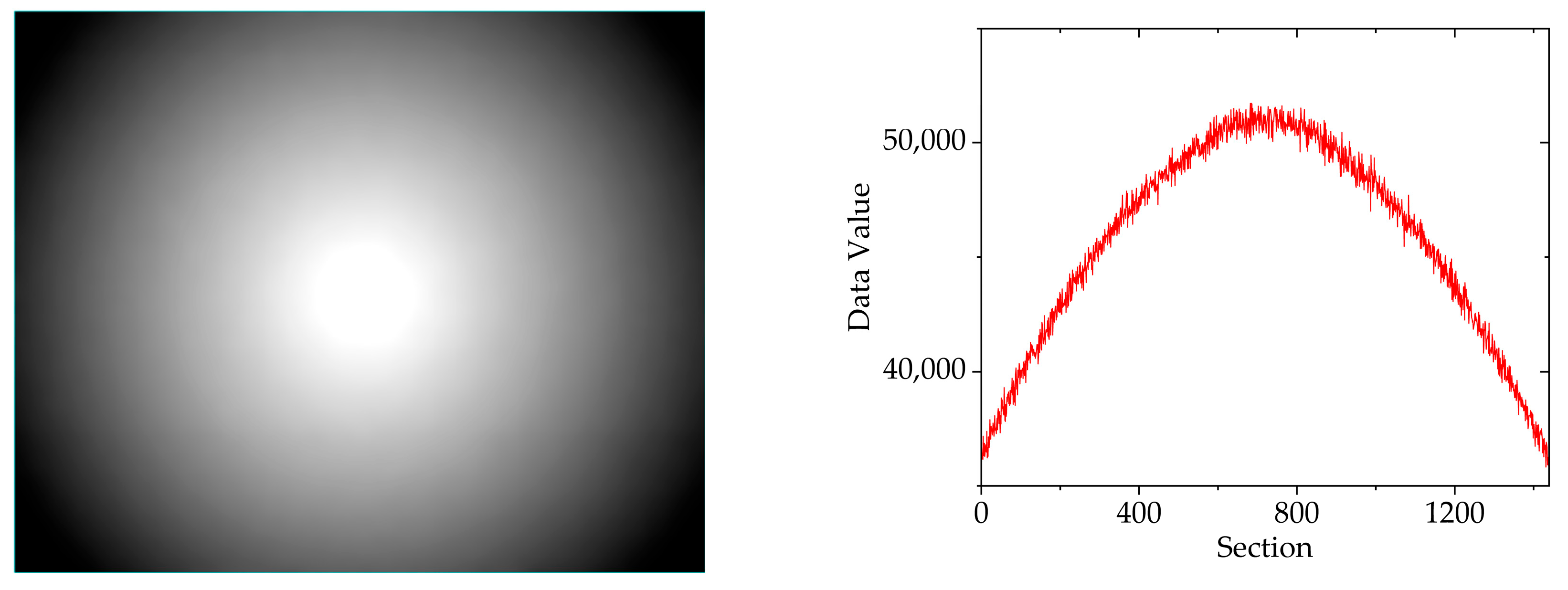

4.4.1. Spectral Data Radiation Correction

4.4.2. Geometric Correction of Spectral Data

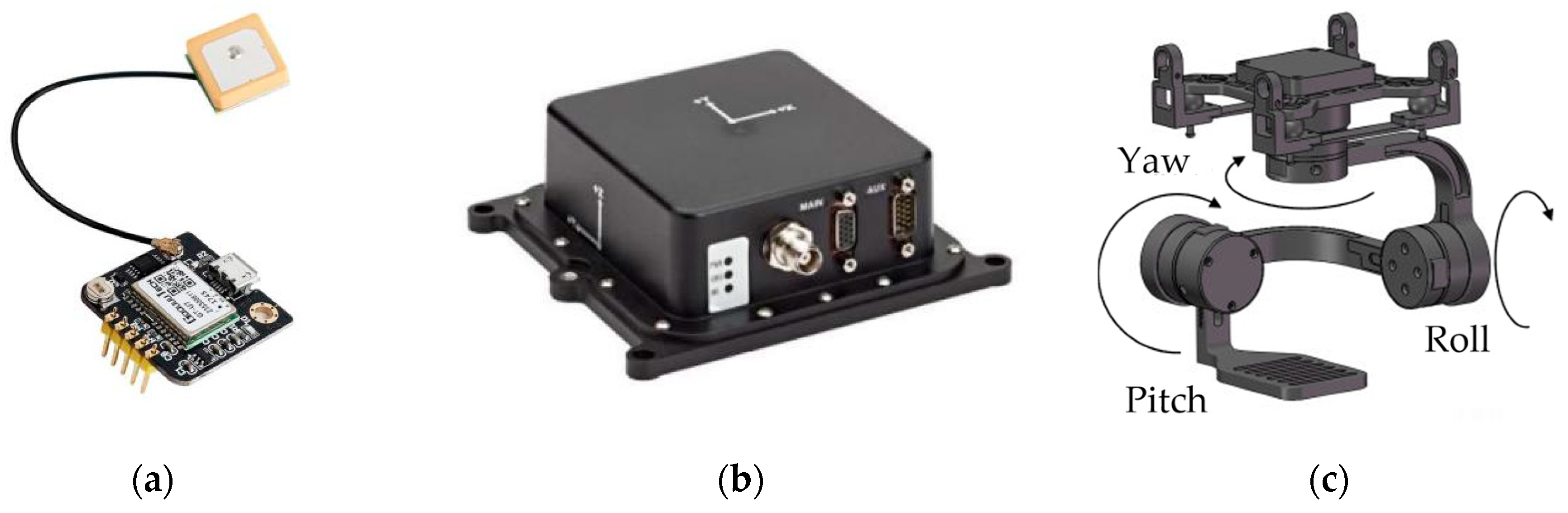

- Real-time measurement with a high-precision inertial navigation system and GPS: attitude angles can be measured to 0.001° and positions to the centimeter level or better. The accuracy is high, but the equipment is expensive.

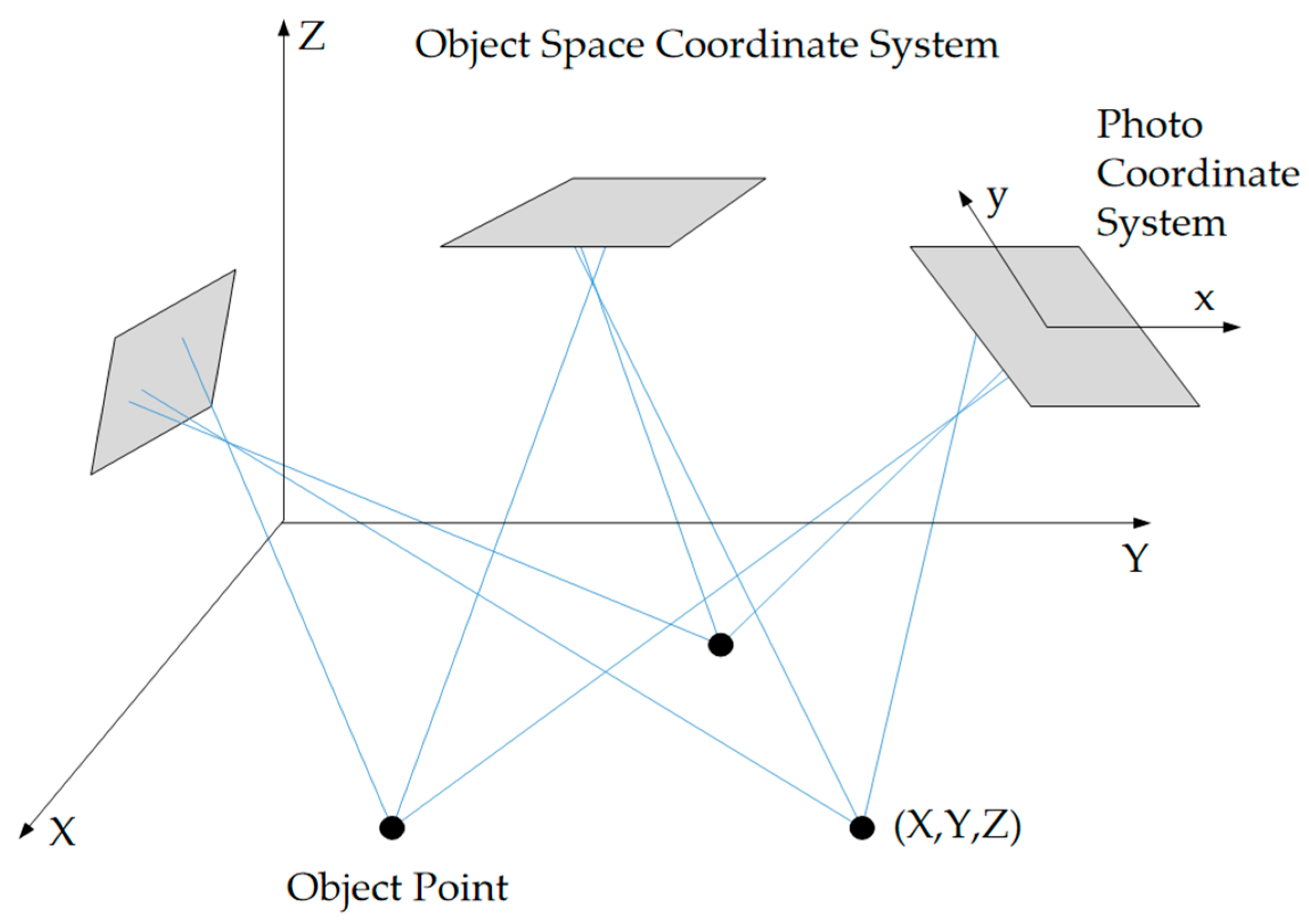

- Bundle block adjustment, based on the principles of photogrammetry, is used to refine the low-precision position and attitude of the spectral images, yielding perspective-center coordinates with centimeter-level accuracy and principal-axis attitude angles with an accuracy better than 0.01°.
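Once the attitude of the principal optical axis is known, whether from the POS measurements or from bundle block adjustment, the view zenith and view azimuth angles needed for BRDF analysis follow from a rotation. The sketch below rests on assumptions that must be checked against the actual system: the camera boresight is aligned with the body z-axis (pointing down), attitude is given as roll, pitch, and yaw in the aerospace Z-Y-X convention, and the navigation frame is north-east-down (NED). `boresight_view_angles` is a hypothetical name.

```python
import math


def boresight_view_angles(roll_deg: float, pitch_deg: float, yaw_deg: float) -> tuple[float, float]:
    """Return (view zenith, view azimuth) in degrees for the optical axis of a down-looking camera.

    Assumptions: boresight along the body z-axis, Z-Y-X (yaw-pitch-roll) Euler angles,
    NED navigation frame. The view azimuth is the direction from the ground target toward
    the sensor, clockwise from north (undefined at exact nadir).
    """
    r, p, y = (math.radians(a) for a in (roll_deg, pitch_deg, yaw_deg))
    # Third column of the body-to-NED rotation matrix = body z-axis expressed in NED
    d_north = math.cos(y) * math.sin(p) * math.cos(r) + math.sin(y) * math.sin(r)
    d_east = math.sin(y) * math.sin(p) * math.cos(r) - math.cos(y) * math.sin(r)
    d_down = math.cos(p) * math.cos(r)
    vza = math.degrees(math.acos(max(-1.0, min(1.0, d_down))))
    # Seen from the target, the sensor lies opposite to the look direction
    vaa = math.degrees(math.atan2(-d_east, -d_north)) % 360.0
    return vza, vaa
```

For example, a 30° pitch with zero roll and yaw gives a view zenith of 30° with the sensor due south of the target (view azimuth 180°), and combined roll and pitch of 20° each give a view zenith of about 28°, not 20°; this is why attitude must be propagated per image rather than approximated by the nominal gimbal tilt.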

4.4.3. Obtaining the Position of the Sun

5. The Application of Angle Effect in Quantitative Remote Sensing Inversion

5.1. Research on Typical Surface Bidirectional Reflection Characteristics

5.2. Radiation Correction of UAV Multi-Angle Spectral Data

5.3. Inversion and Structural Analysis of Ecological Element Parameters

5.4. Precise Monitoring and Classification of Multiple Parameters of Crops

5.5. High-Resolution Inversion of Surface Albedo in Typical Areas

6. Challenges and Future Development Trends

6.1. The Challenges

- The theoretical models are poorly adapted to real-world scenarios. Most existing BRDF models were designed for satellite or airborne platforms. In low-altitude dynamic observations by UAV, however, rapid changes in the three-dimensional surface structure, illumination conditions, and sensor attitude limit the applicability of these models and significantly increase the uncertainty of inversion results. For instance, traditional models such as PROSAIL and other radiative transfer (RT) models assume a uniform canopy structure, whereas the scenes observed by UAV (e.g., forest shadows and soil backgrounds) are strongly heterogeneous, which increases inversion errors. Meanwhile, heterogeneity in the canopy structure of complex crops (such as vegetation density and leaf inclination angle distribution) makes modeling the angle effect even more difficult. In addition, current BRDF models do not yet fully account for mutual reflection between surfaces, especially at the micro-terrain scale, where multiple scattering between adjacent surface elements can significantly alter the distribution of reflectance.

- Fusion and correction of multi-source data are difficult. UAV multispectral and hyperspectral payloads are easily affected by atmospheric disturbances, sensor geometric distortion, and non-uniform illumination during multi-angle (oblique and nadir) observations, so the accuracy of radiometric and geometric correction is difficult to guarantee. Meanwhile, spatiotemporal matching and fusion of data from multiple sensors (such as thermal imagers and LiDAR) is highly complex and requires more efficient algorithms. In addition, when UAV and satellite data are fused, mismatches in temporal resolution and differences in angular sampling prevent much of the data from being used jointly.

- Observation stability in a dynamic environment. UAVs flying at low altitude are vulnerable to wind disturbances, which cause deviations in observation angles and insufficient image overlap. In complex terrain or areas with dense vegetation in particular, this may lead to data loss or radiometric distortion. Furthermore, rapid changes in illumination (such as cloud occlusion) place higher demands on the comparability of multi-temporal angle-effect observations.

- Limited application scenarios and lack of standardization. At present, most studies focus on single crops or typical ground feature types, while research on angle effects in complex ecosystems such as forests and wetlands is still insufficient. Meanwhile, the lack of unified standards for the collection, processing, and validation of UAV multi-angle remote sensing data has restricted its large-scale application and promotion.

- Precise navigation and positioning. When UAVs make sharp turns or fly at high speed, GNSS signals may not be tracked continuously, resulting in positioning drift. In addition, in areas with complex terrain, such as cities and mountainous regions, satellite signals are easily blocked, which increases positioning errors.
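Several of the challenges above (illumination changes such as cloud occlusion, and radiometric correction under changing sensor attitude) come down to knowing the irradiance at the moment each image is taken. A common approach, sketched here under simplifying assumptions, divides the measured radiance by the simultaneous downwelling irradiance recorded by an upward-looking sensor on the UAV, after correcting the direct-beam part of that reading for sensor tilt. The assumptions are: the diffuse fraction of global horizontal irradiance is known (for example from meteorological data or a ground sensor), diffuse light is isotropic so its reading is approximately unchanged for small tilts, and the reflectance factor is computed as π·L/E. All function names are hypothetical.

```python
import math


def unit_vector(zenith_deg: float, azimuth_deg: float) -> tuple[float, float, float]:
    """East-north-up unit vector for a direction given by zenith and azimuth (clockwise from north)."""
    z, a = math.radians(zenith_deg), math.radians(azimuth_deg)
    return (math.sin(z) * math.sin(a), math.sin(z) * math.cos(a), math.cos(z))


def horizontal_irradiance(measured: float, sza_deg: float, saa_deg: float,
                          tilt_deg: float, tilt_azimuth_deg: float,
                          diffuse_fraction: float) -> float:
    """Convert a tilted irradiance-sensor reading to irradiance on a horizontal plane.

    Model: measured = f_d * E_h + (1 - f_d) * E_h * cos(i) / cos(sza), where i is the angle
    between the sun direction and the sensor normal and f_d is the diffuse fraction.
    """
    sun = unit_vector(sza_deg, saa_deg)
    normal = unit_vector(tilt_deg, tilt_azimuth_deg)
    # A negative dot product means the sensor faces away from the sun (no direct beam)
    cos_i = max(0.0, sum(s * n for s, n in zip(sun, normal)))
    factor = diffuse_fraction + (1.0 - diffuse_fraction) * cos_i / math.cos(math.radians(sza_deg))
    return measured / factor


def reflectance_factor(radiance: float, irradiance_horizontal: float) -> float:
    """Reflectance factor pi * L / E (radiance and irradiance in consistent units)."""
    return math.pi * radiance / irradiance_horizontal
```

A sensor tilted toward the sun over-reads the direct beam, so the corrected horizontal irradiance is lower than the raw reading; applying the correction per image keeps reflectance from different view angles and times comparable when illumination changes during a flight.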

6.2. Future Development Trends

- Innovation in multi-angle sensors and intelligent observation networks. Develop lightweight, high-spectral-resolution multi-angle imaging sensors and combine them with autonomous UAV path planning to achieve coordinated multi-mode observations (e.g., nadir, oblique, and circular), enhancing the capability to fully capture the bidirectional reflectance characteristics of the Earth's surface. Meanwhile, construct a three-dimensional satellite-UAV-ground monitoring network to reduce inversion uncertainty through the complementarity of data from multiple platforms. In addition, in master-slave UAV swarms, long-endurance fixed-wing UAVs can cover large areas at a 15° observation angle while multi-rotor UAVs perform local fine observations at 55°. This collection strategy not only improves efficiency but also enables high-precision relative positioning through collaborative (swarm) SLAM.

- Deep integration of physical mechanisms and data-driven models. Combining physical radiative transfer models with deep learning algorithms, and training end-to-end inversion models on high-resolution multi-angle data, can overcome the limitations of traditional empirical models. For example, generative adversarial networks (GANs) can simulate spectral responses at different angles to improve the inversion accuracy of vegetation physicochemical parameters, and Transformers can process long, continuous time series of multi-angle data to capture the changes in BRDF shape caused by crop growth, thereby improving BRDF prediction accuracy. In addition, quantum convolutional neural networks (QCNNs) are being studied for handling massive multi-angle datasets, with a theoretical increase in computing speed by a factor of 10⁶.

- Breakthroughs in adaptive correction for dynamic environments. Develop dynamic radiometric correction methods based on real-time meteorological data and sensor attitude information, combined with LiDAR point-cloud-assisted geometric correction, to improve data quality in complex scenarios. Furthermore, introduce reinforcement learning algorithms to optimize flight control and reduce observation errors caused by external disturbances.

- Construction of standardized application systems and sharing platforms. Formulate specifications, processing procedures, and validation standards for UAV multi-angle remote sensing data collection, and promote cross-regional and cross-disciplinary data sharing. Meanwhile, establish a database of the angle-effect characteristics of typical ground features to provide a reusable knowledge base for applications such as precision agricultural management and early warning of ecological disasters.

- Multi-source fusion navigation and positioning. A tightly coupled system combining RTK, visual odometry, and an IMU can achieve positioning accuracy of 1 cm horizontally and 2 cm vertically. Equipping it with interference-resistant GNSS modules (such as the Septentrio Mosaic series), which support multi-frequency, multi-constellation signal reception, effectively improves UAV positioning stability in complex urban environments. This integrated navigation scheme meets high-precision positioning requirements and makes the system more robust where signals are occluded, providing a reliable position reference for multi-angle data acquisition along complex trajectories.
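The principle behind integrated navigation (inertial prediction between satellite fixes, with uncertainty growing while GNSS is blocked and shrinking again once fixes return) can be shown with a one-dimensional Kalman filter. This is a deliberately simplified, loosely coupled sketch with a position-velocity state driven by accelerometer input; a tightly coupled RTK, visual odometry, and IMU system fuses raw observations in a much larger state. The noise values are placeholders, not figures for any real receiver or IMU, and `fuse_imu_gnss_1d` is a hypothetical name.

```python
import math
from typing import List, Optional, Sequence, Tuple


def fuse_imu_gnss_1d(accels: Sequence[float], fixes: Sequence[Optional[float]],
                     dt: float = 0.1, accel_sigma: float = 0.05,
                     gnss_sigma: float = 0.02) -> List[Tuple[float, float, float]]:
    """Fuse accelerometer readings with GNSS position fixes (None = no fix) along one axis.

    Returns (position, velocity, position standard deviation) after each epoch.
    """
    x = [fixes[0] if fixes[0] is not None else 0.0, 0.0]  # state: position, velocity
    P = [[1.0, 0.0], [0.0, 1.0]]                           # state covariance
    q, r = accel_sigma ** 2, gnss_sigma ** 2
    history = []
    for a, z in zip(accels, fixes):
        # Prediction: integrate the measured acceleration over one epoch
        x = [x[0] + dt * x[1] + 0.5 * dt * dt * a, x[1] + dt * a]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + q * dt ** 4 / 4
        p01 = P[0][1] + dt * P[1][1] + q * dt ** 3 / 2
        p10 = P[1][0] + dt * P[1][1] + q * dt ** 3 / 2
        p11 = P[1][1] + q * dt * dt
        P = [[p00, p01], [p10, p11]]
        # Update only when a GNSS fix is available (measurement matrix H = [1, 0])
        if z is not None:
            s = P[0][0] + r
            k0, k1 = P[0][0] / s, P[1][0] / s
            innovation = z - x[0]
            x = [x[0] + k0 * innovation, x[1] + k1 * innovation]
            P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                 [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
        history.append((x[0], x[1], math.sqrt(P[0][0])))
    return history
```

Running it for a stationary platform with 5 s of fixes, a 3 s outage, and 2 s of fixes (`fixes = [0.0] * 50 + [None] * 30 + [0.0] * 20`, all accelerations zero) shows the position standard deviation rising throughout the outage and dropping back as soon as fixes resume, which is the behavior the integrated scheme relies on in occluded areas.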

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BRDF | Bidirectional reflectance distribution function |
| UAV | Unmanned aerial vehicle |
| LAI | Leaf area index |
| CCC | Canopy chlorophyll content |
| GAN | Generative adversarial network |
| FOV | Field of view |
| MV-OBIA | Multi-view object-based image analysis |
| RMSE | Root mean square error |
| DOM | Digital orthophoto map |
| DSM | Digital surface model |
| LUT | Lookup table |
| CMOS | Complementary metal-oxide-semiconductor |
| GPS | Global Positioning System |
| IMU | Inertial measurement unit |
| NDVI | Normalized difference vegetation index |
| SCIE | Science Citation Index Expanded |
| SSCI | Social Sciences Citation Index |
| CPCI | Conference Proceedings Citation Index |
| GNSS | Global navigation satellite system |
| RTK | Real-time kinematic |
| VTOL | Vertical take-off and landing |
| SLAM | Simultaneous localization and mapping |
| QCNN | Quantum convolutional neural network |
| BTF | Bidirectional texture function |

References

- Farhan, S.M.; Yin, J.; Chen, Z.; Memon, M.S. A Comprehensive Review of LiDAR Applications in Crop Management for Precision Agriculture. Sensors 2024, 24, 5409. [Google Scholar] [CrossRef]

- Karunathilake, E.M.B.M.; Le, A.T.; Heo, S.; Chung, Y.S.; Mansoor, S. The Path to Smart Farming: Innovations and Opportunities in Precision Agriculture. Agriculture 2023, 13, 1593. [Google Scholar] [CrossRef]

- Wang, Y.; Qu, Z.; Yang, W.; Chen, X.; Qiao, T. Inversion of Soil Salinity in the Irrigated Region along the Southern Bank of the Yellow River Using UAV Multispectral Remote Sensing. Agronomy 2024, 14, 523. [Google Scholar] [CrossRef]

- Manninen, T.; Roujean, J.; Hautecoeur, O.; Riihelä, A.; Lahtinen, P.; Jääskeläinen, E.; Siljamo, N.; Anttila, K.; Sukuvaara, T.; Korhonen, L. Airborne Measurements of Surface Albedo and Leaf Area Index of Snow-Covered Boreal Forest. J. Geophys. Res. Atmos. 2022, 127, e2021JD035376. [Google Scholar] [CrossRef]

- Ackermann, K.; Angus, S.D. A Resource Efficient Big Data Analysis Method for the Social Sciences: The Case of Global IP Activity. Procedia Comput. Sci. 2014, 29, 2360–2369. [Google Scholar] [CrossRef]

- Tian, L.; Wu, X.; Tao, Y.; Li, M.; Qian, C.; Liao, L.; Fu, W. Review of Remote Sensing-Based Methods for Forest Aboveground Biomass Estimation: Progress, Challenges, and Prospects. Forests 2023, 14, 1086. [Google Scholar] [CrossRef]

- Hatfield, J.L.; Prueger, J.H. Value of Using Different Vegetative Indices to Quantify Agricultural Crop Characteristics at Different Growth Stages under Varying Management Practices. Remote Sens. 2010, 2, 562–578. [Google Scholar] [CrossRef]

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated Narrow-Band Vegetation Indices for Prediction of Crop Chlorophyll Content for Application to Precision Agriculture. Remote Sens. Environ. 2002, 81, 416–426. [Google Scholar] [CrossRef]

- Chakhvashvili, E.; Siegmann, B.; Muller, O.; Verrelst, J.; Bendig, J.; Kraska, T.; Rascher, U. Retrieval of Crop Variables from Proximal Multispectral UAV Image Data Using PROSAIL in Maize Canopy. Remote Sens. 2022, 14, 1247. [Google Scholar] [CrossRef] [PubMed]

- Cao, H.; You, D.; Ji, D.; Gu, X.; Wen, J.; Wu, J.; Li, Y.; Cao, Y.; Cui, T.; Zhang, H. The Method of Multi-Angle Remote Sensing Observation Based on Unmanned Aerial Vehicles and the Validation of BRDF. Remote Sens. 2023, 15, 5000. [Google Scholar] [CrossRef]

- Gatebe, C.K.; King, M.D. Airborne Spectral BRDF of Various Surface Types (Ocean, Vegetation, Snow, Desert, Wetlands, Cloud Decks, Smoke Layers) for Remote Sensing Applications. Remote Sens. Environ. 2016, 179, 131–148. [Google Scholar] [CrossRef]

- Lu, Z.; Deng, L.; Lu, H. An Improved LAI Estimation Method Incorporating with Growth Characteristics of Field-Grown Wheat. Remote Sens. 2022, 14, 4013. [Google Scholar] [CrossRef]

- Sridhar, V.N.; Mahtab, A.; Navalgund, R.R. Estimation and Validation of LAI Using Physical and Semi-empirical BRDF Models. Int. J. Remote Sens. 2008, 29, 1229–1236. [Google Scholar] [CrossRef]

- Roosjen, P.P.J.; Brede, B.; Suomalainen, J.M.; Bartholomeus, H.M.; Kooistra, L.; Clevers, J.G.P.W. Improved Estimation of Leaf Area Index and Leaf Chlorophyll Content of a Potato Crop Using Multi-Angle Spectral Data—Potential of Unmanned Aerial Vehicle Imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 66, 14–26. [Google Scholar] [CrossRef]

- Li, D.; Zheng, H.; Xu, X.; Lu, N.; Yao, X.; Jiang, J.; Wang, X.; Tian, Y.; Zhu, Y.; Cao, W.; et al. BRDF Effect on the Estimation of Canopy Chlorophyll Content in Paddy Rice from UAV-Based Hyperspectral Imagery. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 6464–6467. [Google Scholar] [CrossRef]

- Xu, Z.; Li, Y.; Qin, Y.; Bach, E. A global assessment of the effects of solar farms on albedo, vegetation, and land surface temperature using remote sensing. Sol. Energy 2024, 268, 112198. [Google Scholar] [CrossRef]

- Ren, S.; Miles, E.S.; Jia, L.; Menenti, M.; Kneib, M.; Buri, P.; McCarthy, M.J.; Shaw, T.E.; Yang, W.; Pellicciotti, F. Anisotropy Parameterization Development and Evaluation for Glacier Surface Albedo Retrieval from Satellite Observations. Remote Sens. 2021, 13, 1714. [Google Scholar] [CrossRef]

- Zhang, S.; Liu, L.; Liu, X.; Liu, Z. Development of a New BRDF-Resistant Vegetation Index for Improving the Estimation of Leaf Area Index. Remote Sens. 2016, 8, 947. [Google Scholar] [CrossRef]

- Pan, Z.; Zhang, H.; Min, X.; Xu, Z. Vicarious Calibration Correction of Large FOV Sensor Using BRDF Model Based on UAV Angular Spectrum Measurements. J. Appl. Remote Sens. 2020, 14, 1. [Google Scholar] [CrossRef]

- Farhad, M.M.; Kaewmanee, M.; Leigh, L.; Helder, D. Radiometric Cross Calibration and Validation Using 4 Angle BRDF Model between Landsat 8 and Sentinel 2A. Remote Sens. 2020, 12, 806. [Google Scholar] [CrossRef]

- Stark, B.; Zhao, T.; Chen, Y. An Analysis of the Effect of the Bidirectional Reflectance Distribution Function on Remote Sensing Imagery Accuracy from Small Unmanned Aircraft Systems. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; IEEE: Arlington, VA, USA, 2016; pp. 1342–1350. [Google Scholar] [CrossRef]

- Wierzbicki, D.; Kedzierski, M.; Fryskowska, A.; Jasinski, J. Quality Assessment of the Bidirectional Reflectance Distribution Function for NIR Imagery Sequences from UAV. Remote Sens. 2018, 10, 1348. [Google Scholar] [CrossRef]

- Wang, Z.; Zhou, H.; Ma, W.; Fan, W.; Wang, J. Land Surface Albedo Estimation and Cross Validation Based on GF-1 WFV Data. Atmosphere 2022, 13, 1651. [Google Scholar] [CrossRef]

- Li, L.; Mu, X.; Qi, J.; Pisek, J.; Roosjen, P.; Yan, G.; Huang, H.; Liu, S.; Baret, F. Characterizing Reflectance Anisotropy of Background Soil in Open-Canopy Plantations Using UAV-Based Multiangular Images. ISPRS J. Photogramm. Remote Sens. 2021, 177, 263–278. [Google Scholar] [CrossRef]

- Buchhorn, M.; Petereit, R.; Heim, B. A Manual Transportable Instrument Platform for Ground-Based Spectro-Directional Observations (ManTIS) and the Resultant Hyperspectral Field Goniometer System. Sensors 2013, 13, 16105–16128. [Google Scholar] [CrossRef]

- Scudiero, E.; Skaggs, T.H.; Corwin, D.L. Comparative Regional-Scale Soil Salinity Assessment with near-Ground Apparent Electrical Conductivity and Remote Sensing Canopy Reflectance. Ecol. Indic. 2016, 70, 276–284. [Google Scholar] [CrossRef]

- Huang, G.; Li, X.; Huang, C.; Liu, S.; Ma, Y.; Chen, H. Representativeness Errors of Point-Scale Ground-Based Solar Radiation Measurements in the Validation of Remote Sensing Products. Remote Sens. Environ. 2016, 181, 198–206. [Google Scholar] [CrossRef]

- Roccetti, G.; Emde, C.; Sterzik, M.F.; Manev, M.; Seidel, J.V.; Bagnulo, S. Planet Earth in Reflected and Polarized Light: I. Three-Dimensional Radiative Transfer Simulations of Realistic Surface-Atmosphere Systems. Astron. Astrophys. 2025, 697, A170. [Google Scholar] [CrossRef]

- Kizel, F.; Vidro, Y. Bidirectional reflectance distribution function (brdf) of mixed pixels. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 195–200. [Google Scholar] [CrossRef]

- Chen, Y.; Wang, J.; Liang, S.; Wang, D.; Ma, B.; Bo, Y. The Bidirectional Reflectance Signature of Typical Land Surfaces and Comparison of MISR and MODIS BRDF Products. In Proceedings of the IGARSS 2008—2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 7–11 July 2008; p. III-1099–III-1102. [Google Scholar] [CrossRef]

- Yang, R.; Friedl, M.A. Modeling the Effects of Three-dimensional Vegetation Structure on Surface Radiation and Energy Balance in Boreal Forests. J. Geophys. Res. Atmos. 2003, 108. [Google Scholar] [CrossRef]

- Coburn, C.A.; Gaalen, E.V.; Peddle, D.R.; Flanagan, L.B. Anisotropic Reflectance Effects on Spectral Indices for Estimating Ecophysiological Parameters Using a Portable Goniometer System. Can. J. Remote Sens. 2010, 36, S355–S364. [Google Scholar] [CrossRef]

- Zhen, Z.; Chen, S.; Qin, W.; Yan, G.; Gastellu-Etchegorry, J.-P.; Cao, L.; Murefu, M.; Li, J.; Han, B. Potentials and Limits of Vegetation Indices with BRDF Signatures for Soil-Noise Resistance and Estimation of Leaf Area Index. IEEE Trans. Geosci. Remote Sens. 2020, 58, 5092–5108. [Google Scholar] [CrossRef]

- Nayar, S.K.; Ikeuchi, K.; Kanade, T. Surface reflection: Physical and geometrical perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 611–634. [Google Scholar] [CrossRef]

- Dana, K.J.; van Ginneken, B.; Nayar, S.K.; Koenderink, J.J. Reflectance and texture of real-world surfaces. ACM Trans. Graphics. 1999, 18, 1–34. [Google Scholar] [CrossRef]

- Zhou, Q.; Tian, L.; Li, J.; Wu, H.; Zeng, Q. Radiometric Cross-Calibration of Large-View-Angle Satellite Sensors Using Global Searching to Reduce BRDF Influence. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5234–5245. [Google Scholar] [CrossRef]

- Jiao, Z.; Hill, M.J.; Schaaf, C.B.; Zhang, H.; Wang, Z.; Li, X. An Anisotropic Flat Index (AFX) to Derive BRDF Archetypes from MODIS. Remote Sens. Environ. 2014, 141, 168–187. [Google Scholar] [CrossRef]

- Wang, Z.; Coburn, C.A.; Ren, X.; Teillet, P.M. Effect of Surface Roughness, Wavelength, Illumination, and Viewing Zenith Angles on Soil Surface BRDF Using an Imaging BRDF Approach. Int. J. Remote Sens. 2014, 35, 6894–6913. [Google Scholar] [CrossRef]

- Walthall, C.L.; Norman, J.M.; Welles, J.M.; Campbell, G.; Blad, B.L. Simple Equation to Approximate the Bidirectional Reflectance from Vegetative Canopies and Bare Soil Surfaces. Appl. Opt. 1985, 24, 383. [Google Scholar] [CrossRef]

- Liang, S.; Strahler, A. Retrieval of Surface BRDF from Multiangle Remotely Sensed Data. Remote Sens. Environ. 1994, 50, 18–30. [Google Scholar] [CrossRef]

- Piaser, E.; Berton, A.; Bolpagni, R.; Caccia, M.; Castellani, M.B.; Coppi, A.; Vecchia, A.D.; Gallivanone, F.; Sona, G.; Villa, P. Impact of Radiometric Variability on Ultra-High Resolution Hyperspectral Imagery Over Aquatic Vegetation: Preliminary Results. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5935–5950. [Google Scholar] [CrossRef]

- Zhang, H.; Zhang, X.; Cui, L.; Dong, Y.; Liu, Y.; Xi, Q.; Cao, H.; Chen, L.; Lian, Y. Enhancing Leaf Area Index Estimation with MODIS BRDF Data by Optimizing Directional Observations and Integrating PROSAIL and Ross–Li Models. Remote Sens. 2023, 15, 5609. [Google Scholar] [CrossRef]

- Yan, G.J.; Jiang, H.L.; Yan, K.; Cheng, S.Y.; Song, W.J.; Tong, Y.Y.; Liu, Y.N.; Qi, J.B.; Mu, X.H.; Zhang, W.M.; et al. Review of optical multi-angle quantitative remote sensing. Natl. Remote Sens. Bull. 2021, 25, 83–108. [Google Scholar] [CrossRef]

- Kuusk, A. A Fast, Invertible Canopy Reflectance Model. Remote Sens. Environ. 1995, 51, 342–350. [Google Scholar] [CrossRef]

- Verhoef, W. Theory of Radiative Transfer Models Applied in Optical Remote Sensing of Vegetation Canopies. PhD. Thesis, Wageningen University, Wageningen, The Netherlands, 1998. [Google Scholar] [CrossRef]

- Shabanov, N.V.; Huang, D.; Knjazikhin, Y.; Dickinson, R.E.; Myneni, R.B. Stochastic Radiative Transfer Model for Mixture of Discontinuous Vegetation Canopies. J. Quant. Spectrosc. Radiat. Transf. 2007, 107, 236–262. [Google Scholar] [CrossRef][Green Version]

- Hao, D.; Wen, J.; Xiao, Q.; Wu, S.; Lin, X.; You, D.; Tang, Y. Modeling Anisotropic Reflectance Over Composite Sloping Terrain. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3903–3923. [Google Scholar] [CrossRef]

- Hao, D.; Wen, J.; Xiao, Q.; You, D.; Tang, Y. An improved topography-coupled kernel-driven model for land surface aniso-tropic reflectance. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2833–2847. [Google Scholar] [CrossRef]

- Wen, J.; Liu, Q.; Xiao, Q.; Liu, Q.; You, D.; Hao, D.; Wu, S.; Lin, X. Characterizing Land Surface Anisotropic Reflectance over Rugged Terrain: A Review of Concepts and Recent Developments. Remote Sens. 2018, 10, 370. [Google Scholar] [CrossRef]

- Li, X.; Strahler, A.H.; Woodcock, C.E. A Hybrid Geometric Optical-Radiative Transfer Approach for Modeling Albedo and Directional Reflectance of Discontinuous Canopies. IEEE Trans. Geosci. Remote Sens. 1995, 33, 466–480. [Google Scholar] [CrossRef]

- Xu, X.; Fan, W.; Li, J.; Zhao, P.; Chen, G. A Unified Model of Bidirectional Reflectance Distribution Function for the Vegetation Canopy. Sci. China Earth Sci. 2017, 60, 463–477. [Google Scholar] [CrossRef]

- Goel, N.S.; Rozehnal, I.; Thompson, R.L. A Computer Graphics Based Model for Seattering from Objects of Arbitrary Shapes in the Optical Region. Remote Sens. Environ. 1991, 36, 73–104. [Google Scholar] [CrossRef]

- Qin, W.; Gerstl, S.A.W. 3-D Scene Modeling of Semidesert Vegetation Cover and Its Radiation Regime. Remote Sens. Environ. 2000, 74, 145–162. [Google Scholar] [CrossRef]

- Huang, H.; Qin, W.; Liu, Q. RAPID: A Radiosity Applicable to Porous IndiviDual Objects for Directional Reflectance over Complex Vegetated Scenes. Remote Sens. Environ. 2013, 132, 221–237. [Google Scholar] [CrossRef]

- Gastellu-Etchegorry, J.P.; Demarez, V.; Pinel, V.; Zagolski, F. Modeling Radiative Transfer in Heterogeneous 3-D Vegetation Canopies. Remote Sens. Environ. 1996, 58, 131–156. [Google Scholar] [CrossRef]

- North, P.R.J. Three-Dimensional Forest Light Interaction Model Using a Monte Carlo. IEEE Trans. Geosci. Remote Sens. 1996, 34, 946–956. [Google Scholar] [CrossRef]

- Govaerts, Y.M.; Verstraete, M.M. Raytran: A Monte Carlo Ray-Tracing Model to Compute Light Scattering in Three-Dimensional Heterogeneous Media. IEEE Trans. Geosci. Remote Sens. 1998, 36, 493–505. [Google Scholar] [CrossRef]

- Qi, J.; Xie, D.; Guo, D.; Yan, G. A Large-Scale Emulation System for Realistic Three-Dimensional (3-D) Forest Simulation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4834–4843. [Google Scholar] [CrossRef]

- Qi, J.; Xie, D.; Yin, T.; Yan, G.; Gastellu-Etchegorry, J.-P.; Li, L.; Zhang, W.; Mu, X.; Norford, L.K. LESS: LargE-Scale Remote Sensing Data and Image Simulation Framework over Heterogeneous 3D Scenes. Remote Sens. Environ. 2019, 221, 695–706. [Google Scholar] [CrossRef]

- Biliouris, D.; Van der Zande, D.; Verstraeten, W.W.; Stuckens, J.; Muys, B.; Dutré, P.; Coppin, P. RPV Model Parameters Based on Hyperspectral Bidirectional Reflectance Measurementsof Fagus sylvatica L. Leaves. Remote Sens. 2009, 1, 92–106. [Google Scholar] [CrossRef]

- Roujean, J.; Leroy, M.; Deschamps, P. A Bidirectional Reflectance Model of the Earth’s Surface for the Correction of Remote Sensing Data. J. Geophys. Res. Atmos. 1992, 97, 20455–20468. [Google Scholar] [CrossRef]

- Jiao, Z.; Schaaf, C.B.; Dong, Y.; Román, M.; Hill, M.J.; Chen, J.M.; Wang, Z.; Zhang, H.; Saenz, E.; Poudyal, R.; et al. A Method for Improving Hotspot Directional Signatures in BRDF Models Used for MODIS. Remote Sens. Environ. 2016, 186, 135–151. [Google Scholar] [CrossRef]

- Jiao, Z.; Ding, A.; Kokhanovsky, A.; Schaaf, C.; Bréon, F.-M.; Dong, Y.; Wang, Z.; Liu, Y.; Zhang, X.; Yin, S.; et al. Development of a Snow Kernel to Better Model the Anisotropic Reflectance of Pure Snow in a Kernel-Driven BRDF Model Framework. Remote Sens. Environ. 2019, 221, 198–209. [Google Scholar] [CrossRef]

- Liu, S.; Liu, Q.; Liu, Q.; Wen, J.; Li, X. The Angular and Spectral Kernel Model for BRDF and Albedo Retrieval. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2010, 3, 241–256. [Google Scholar] [CrossRef]

- Wu, S.; Wen, J.; Xiao, Q.; Liu, Q.; Hao, D.; Lin, X.; You, D. Derivation of Kernel Functions for Kernel-Driven Reflectance Model Over Sloping Terrain. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 396–409. [Google Scholar] [CrossRef]

- Yan, K.; Li, H.L.; Song, W.J.; Tong, Y.Y.; Hao, D.L.; Zeng, Y.L.; Mu, X.H.; Yan, G.J.; Fang, Y.; Myneni, R.B.; et al. Extending a linear kernel-driven BRDF model to realistically simulate reflectance anisotropy over rugged terrain. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4401816. [Google Scholar] [CrossRef]

- Rahman, H.; Pinty, B.; Verstraete, M.M. Coupled Surface-atmosphere Reflectance (CSAR) Model: 2. Semiempirical Surface Model Usable with NOAA Advanced Very High Resolution Radiometer Data. J. Geophys. Res. Atmos. 1993, 98, 20791–20801. [Google Scholar] [CrossRef]

- Boucher, Y.; Cosnefroy, H.; Petit, A.D.; Serrot, G.; Briottet, X. Comparison of Measured and Modeled BRDF of Natural Targets; Watkins, W.R., Clement, D., Reynolds, W.R., Eds.; SPIE: Orlando, FL, USA, 14 July 1999; pp. 16–26. [Google Scholar] [CrossRef]

- Rahman, H.; Verstraete, M.M.; Pinty, B. Coupled Surface-atmosphere Reflectance (CSAR) Model: 1. Model Description and Inversion on Synthetic Data. J. Geophys. Res. Atmos. 1993, 98, 20779–20789. [Google Scholar] [CrossRef]

- Zhang, Z.; Kalluri, S.; JaJa, J.; Liang, S.; Townshend, J.R.G. High performance algorithms for global BRDF retrieval. IEEE Comput. Sci. Eng. 1998, 4, 16–29. [Google Scholar] [CrossRef]

- Gobron, N.; Lajas, D. A new inversion scheme for the RPV model. Can. J. Remote Sens. 2002, 28, 156–167. [Google Scholar] [CrossRef]

- Lavergne, T.; Kaminski, T.; Pinty, B.; Taberner, M.; Gobron, N.; Verstraete, M.M.; Vossbeck, V.; Widlowski, J.-L.; Giering, R. Application to MISR land products of an RPV model inversion package using adjoint and Hessian codes. Remote Sens. Environ. 2007, 107, 362–375. [Google Scholar] [CrossRef]

- Martonchik, J.V.; Diner, D.J.; Kahn, R.A.; Ackerman, T.P.; Verstraete, M.M.; Pinty, B.; Gordon, H.R. Techniques for the Retrieval of Aerosol Properties over Land and Ocean Using Multiangle Imaging. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1212–1227. [Google Scholar] [CrossRef]

- Hall, F.G.; Hilker, T.; Coops, N.C.; Lyapustin, A.; Huemmrich, K.F.; Middleton, E.; Margolis, H.; Drolet, G.; Black, T.A. Multi-angle remote sensing of forest light use efficiency by observing PRI variation with canopy shadow fraction. Remote Sens. Environ. 2008, 112, 3201–3211. [Google Scholar] [CrossRef]

- Peltoniemi, J.I.; Kaasalainen, S.; Näränen, J.; Rautiainen, M.; Stenberg, P.; Smolander, H.; Smolander, S.; Voipio, P. BRDF measurement of understory vegetation in pine forests: Dwarf shrubs, lichen, and moss. Remote Sens. Environ. 2005, 94, 343–354. [Google Scholar] [CrossRef]

- Sandmeier, S.; Müller, C.; Hosgood, B.; Andreoli, G. Sensitivity Analysis and Quality Assessment of Laboratory BRDF Data. Remote Sens. Environ. 1998, 64, 176–191. [Google Scholar] [CrossRef]

- Lucht, W.; Roujean, J.-L. Considerations in the Parametric Modeling of BRDF and Albedo from Multiangular Satellite Sensor Observations. Remote Sens. Rev. 2000, 18, 343–379. [Google Scholar] [CrossRef]

- Mohammed, G.H.; Colombo, R.; Middleton, E.M.; Rascher, U.; Van Der Tol, C.; Nedbal, L.; Goulas, Y.; Pérez-Priego, O.; Damm, A.; Meroni, M.; et al. Remote Sensing of Solar-Induced Chlorophyll Fluorescence (SIF) in Vegetation: 50 Years of Progress. Remote Sens. Environ. 2019, 231, 111177. [Google Scholar] [CrossRef]

- Aasen, H.; Honkavaara, E.; Lucieer, A.; Zarco-Tejada, P.J. Quantitative Remote Sensing at Ultra-High Resolution with UAV Spectroscopy: A Review of Sensor Technology, Measurement Procedures, and Data Correction Workflows. Remote Sens. 2018, 10, 1091. [Google Scholar] [CrossRef]

- Zhang, M.; Luan, J.; You, Y.; He, X.; Chen, S.; Hai, X. Industry Development Status and Trend of Aerial Photogrammetry and Remote Sensing Based on UAS. Geomat. Spat. Inf. Technol. 2023, 46, 38–41+46. [Google Scholar] [CrossRef]

- Purchase DJI Mavic 3 - DJI Mall. Available online: https://store.dji.com/cn/product/dji-mavic-3-cine-combo?vid=110034 (accessed on 23 July 2025).

- MS600 Pro Six-Channel Multispectral Camera. Available online: https://www.mputek.com/product/ms600-pro-multispectral-camera.html (accessed on 23 July 2025).

- 5-Channel Multispectral Camera for Agricultural Remote Sensing—RedEdge MX-Holtron Optoelectronics. Available online: https://www.auniontech.com/details-994.html (accessed on 23 July 2025).

- PARROT SEQUOIA Agricultural Multispectral Camera Hyperspectral Imager Hyperspectral Camera Hyperspectral Imaging System Solution—Jiangsu Shuangli Hepu Technology Co., Ltd. Available online: https://www.dualix.com.cn/Goods/desc/id/209/aid/1168.html (accessed on 23 July 2025).

- GaiaSky-mini3-VN Hyperspectral Imager, Hyperspectral Imager, Hyperspectral Camera, Hyperspectral Imaging System Solution—Jiangsu Shuangli Hepu Technology Co., Ltd. Available online: https://www.dualix.com.cn/Goods/desc/id/123/aid/1255.html (accessed on 23 July 2025).

- HySpex. Available online: https://www.hyspex.com/hyspex-products/hyspex-mjolnir/mjolnir-v-1240 (accessed on 23 July 2025).

- Co-Aligned HP Full-Band Airborne Hyperspectral Imaging System. Available online: https://www.nbl.com.cn/cn_Products/co_aligned_vnir_swir.html (accessed on 23 July 2025).

- Shuai, Y.; Yang, J.; Wu, H.; Shao, C.; Xu, X.; Liu, M.; Liu, T.; Liang, J. Variation of Multi-angle Reflectance Collected by UAV over Quadrats of Paddy-field Canopy. Remote Sens. Technol. Appl. 2021, 36, 342–352. [Google Scholar] [CrossRef]

- Liu, X. Application of UAV Tilt Photography in 3D City Modeling. Sci. Technol. Innov. Her. 2021, 18, 28–30. [Google Scholar] [CrossRef]

- Lee, S.; Kim, B.; Baik, H.; Cho, S.-J. A novel design and implementation of an autopilot terrain-following airship. IEEE Access 2022, 10, 38428–38436. [Google Scholar] [CrossRef]

- Ren, C.; Shang, H.; Zha, Z.; Zhang, F.; Pu, Y. Color balance method of dense point cloud in landslides area based on UAV images. IEEE Sens. J. 2022, 22, 3516–3528. [Google Scholar] [CrossRef]

- Deng, L.; Chen, Y.; Zhao, Y.; Zhu, L.; Gong, H.-L.; Guo, L.-J.; Zou, H.-Y. An Approach for Reflectance Anisotropy Retrieval from UAV-Based Oblique Photogrammetry Hyperspectral Imagery. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102442. [Google Scholar] [CrossRef]

- Smith, G.M.; Milton, E.J. The use of the empirical line method to calibrate remotely sensed data to reflectance. Int. J. Remote Sens. 1999, 20, 2653–2662. [Google Scholar] [CrossRef]

- Kelcey, J.; Lucieer, A. Sensor Correction of a 6-Band Multispectral Imaging Sensor for UAV Remote Sensing. Remote Sens. 2012, 4, 1462–1493. [Google Scholar] [CrossRef]

- Mansouri, A.; Marzani, F.; Gouton, P. Development of a protocol for CCD calibration: Application to a multispectral imaging system. Int. J. Robot. Autom. 2005, 20, 94–100. [Google Scholar] [CrossRef]

- Asada, N.; Amano, A.; Baba, M. Photometric Calibration of Zoom Lens Systems. In Proceedings of the 13th International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996; IEEE: Vienna, Austria, 1996; Volume 1, pp. 186–190. [Google Scholar] [CrossRef]

- Kang, S.B.; Weiss, R. Can We Calibrate a Camera Using an Image of a Flat, Textureless Lambertian Surface? In Computer Vision—ECCV 2000; Vernon, D., Ed.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2000; Volume 1843, pp. 640–653. ISBN 978-3-540-67686-7. [Google Scholar] [CrossRef]

- Yu, W. Practical Anti-Vignetting Methods for Digital Cameras. IEEE Trans. Consum. Electron. 2004, 50, 975–983. [Google Scholar] [CrossRef]

- Zheng, Y.; Lin, S.; Kang, S.B. Single-Image Vignetting Correction. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2006, 1, 461–468. [Google Scholar] [CrossRef]

- Lebourgeois, V.; Bégué, A.; Labbé, S.; Mallavan, B.; Prévot, L.; Roux, B. Can Commercial Digital Cameras Be Used as Multispectral Sensors? A Crop Monitoring Test. Sensors 2008, 8, 7300–7322. [Google Scholar] [CrossRef]

- Crimmins, M.A.; Crimmins, T.M. Monitoring Plant Phenology Using Digital Repeat Photography. Environ. Manag. 2008, 41, 949–958. [Google Scholar] [CrossRef]

- Hunt, E.R.; Cavigelli, M.; Daughtry, C.S.T.; McMurtrey, J.E.; Walthall, C.L. Evaluation of Digital Photography from Model Aircraft for Remote Sensing of Crop Biomass and Nitrogen Status. Precis. Agric. 2005, 6, 359–378. [Google Scholar] [CrossRef]

- Peltoniemi, J.I.; Piironen, J.; Näränen, J.; Suomalainen, J.; Kuittinen, R.; Markelin, L.; Honkavaara, E. Bidirectional Reflectance Spectrometry of Gravel at the Sjökulla Test Field. ISPRS J. Photogramm. Remote Sens. 2007, 62, 434–446. [Google Scholar] [CrossRef]

- Hakala, T.; Suomalainen, J.; Peltoniemi, J.I. Acquisition of Bidirectional Reflectance Factor Dataset Using a Micro Unmanned Aerial Vehicle and a Consumer Camera. Remote Sens. 2010, 2, 819–832. [Google Scholar] [CrossRef]

- Spencer, J.W. Fourier series representation of the position of the Sun. Search 1971, 2, 172. Available online: https://www.researchgate.net/publication/285877391_Fourier_series_representation_of_the_position_of_the_Sun (accessed on 23 July 2025).

- Walraven, R. Calculating the position of the Sun. Sol. Energy 1978, 20, 393–397. [Google Scholar] [CrossRef]

- Blanco-Muriel, M.; Alarcón-Padilla, D.C.; López-Moratalla, T.; Lara-Coira, M. Computing the Solar Vector. Sol. Energy 2001, 70, 431–441. [Google Scholar] [CrossRef]

- Grena, R. An Algorithm for the Computation of the Solar Position. Sol. Energy 2008, 82, 462–470. [Google Scholar] [CrossRef]

- Li, W.; Zhao, Y. The improvement in solar position calculations in the ellipsoid model of the earth. J. Univ. Chin. Acad. Sci. 2019, 36, 363–375. [Google Scholar] [CrossRef]

- Grenzdörffer, G.J.; Niemeyer, F. UAV based BRDF-measurements of agricultural surfaces with PFIFFikus. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 3822, 229–234. [Google Scholar] [CrossRef]

- Tao, B.; Hu, X.; Yang, L.; Zhang, L.; Chen, L.; Xu, N.; Wang, L.; Wu, R.; Zhang, D.; Zhang, P. BRDF feature observation method and modeling of desert site based on UAV platform. Natl. Remote Sens. Bull. 2021, 25, 1964–1977. [Google Scholar] [CrossRef]

- Wang, Z.; Li, H.; Wang, S.; Song, L.; Chen, J. Methodology and Modeling of UAV Push-Broom Hyperspectral BRDF Observation Considering Illumination Correction. Remote Sens. 2024, 16, 543. [Google Scholar] [CrossRef]

- Song, L.; Li, H. Multi-Level Spectral Attention Network for Hyperspectral BRDF Reconstruction from Multi-Angle Multi-Spectral Images. Remote Sens. 2025, 17, 863. [Google Scholar] [CrossRef]

- Li, H. High-precision Mapping Research on Geological Features under Multi-angle oblique photography by Unmanned Aerial Vehicles. China New Technol. New Prod. Mag. 2025, 17, 22–24. [Google Scholar] [CrossRef]

- Meinen, B.U.; Robinson, D.T. Mapping Erosion and Deposition in an Agricultural Landscape: Optimization of UAV Image Acquisition Schemes for SfM-MVS. Remote Sens. Environ. 2020, 239, 111666. [Google Scholar] [CrossRef]

- Zhao, M.; Chen, J.; Song, S.; Li, Y.; Wang, F.; Wang, S.; Liu, D. Proposition of UAV Multi-Angle Nap-of-the-Object Image Acquisition Framework Based on a Quality Evaluation System for a 3D Real Scene Model of a High-Steep Rock Slope. Int. J. Appl. Earth Obs. Geoinf. 2023, 125, 103558. [Google Scholar] [CrossRef]

- Wand, R.; Wei, N.; Zhang, C.; Bao, T.; Liu, J.; Yu, K.; Wang, F. UAV multi angle remote sensing quantification of understory vegetation coverage in the hilly region of south China. Ecol. Environ. Sci. 2021, 30, 2294–2302. [Google Scholar] [CrossRef]

- Liu, T.; Abd-Elrahman, A. Multi-view object-based classification of wetland land covers using unmanned aircraft system images. Remote Sens. Environ. 2018, 216, 122–138. [Google Scholar] [CrossRef]

- Liu, T.; Abd-Elrahman, A. Deep convolutional neural network training enrichment using multi-view object-based analysis of Unmanned Aerial systems imagery for wetlands classification. ISPRS J. Photogramm. Remote Sens. 2018, 139, 154–170. [Google Scholar] [CrossRef]

- Kanning, M.; Kühling, I.; Trautz, D.; Jarmer, T. High-Resolution UAV-Based Hyperspectral Imagery for LAI and Chlorophyll Estimations from Wheat for Yield Prediction. Remote Sens. 2018, 10, 2000. [Google Scholar] [CrossRef]

- Sun, B.; Wang, C.; Yang, C.; Xu, B.; Zhou, G.; Li, X.; Xie, J.; Xu, S.; Liu, B.; Xie, T.; et al. Retrieval of Rapeseed Leaf Area Index Using the PROSAIL Model with Canopy Coverage Derived from UAV Images as a Correction Parameter. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102373. [Google Scholar] [CrossRef]

- Verger, A.; Vigneau, N.; Chéron, C.; Gilliot, J.-M.; Comar, A.; Baret, F. Green Area Index from an Unmanned Aerial System over Wheat and Rapeseed Crops. Remote Sens. Environ. 2014, 152, 654–664. [Google Scholar] [CrossRef]

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of Winter-Wheat above-Ground Biomass Based on UAV Ultrahigh-Ground-Resolution Image Textures and Vegetation Indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244. [Google Scholar] [CrossRef]

- Duan, S.-B.; Li, Z.-L.; Wu, H.; Tang, B.-H.; Ma, L.; Zhao, E.; Li, C. Inversion of the PROSAIL Model to Estimate Leaf Area Index of Maize, Potato, and Sunflower Fields from Unmanned Aerial Vehicle Hyperspectral Data. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 12–20. [Google Scholar] [CrossRef]

- Lin, L.; Yu, K.; Yao, X.; Deng, Y.; Hao, Z.; Chen, Y.; Wu, N.; Liu, J. UAV Based Estimation of Forest Leaf Area Index (LAI) through Oblique Photogrammetry. Remote Sens. 2021, 13, 803. [Google Scholar] [CrossRef]

- Wang, C.Y. Study on Jujube Quality Detection by UAV Near-Ground Multi-Angle and Polarization Detection. Master’s Thesis, Tarim University, Xinjiang, China, 2023. [Google Scholar]

- Yan, Y.; Deng, L.; Liu, X.; Zhu, L. Application of UAV-Based Multi-Angle Hyperspectral Remote Sensing in Fine Vegetation Classification. Remote Sens. 2019, 11, 2753. [Google Scholar] [CrossRef]

- Qiu, F.; Huo, J.W.; Zhang, Q.; Chen, X.H.; Zhang, Y.G. Observation and analysis of bidirectional and hotspot reflectance of conifer forest canopies with a multiangle hyperspectral UAV imaging platform. Natl. Remote Sens. Bull. 2021, 25, 1013–1024. [Google Scholar] [CrossRef]

- Painter, T.H.; Dozier, J. The Effect of Anisotropic Reflectance on Imaging Spectroscopy of Snow Properties. Remote Sens. Environ. 2004, 89, 409–422. [Google Scholar] [CrossRef]

- Hudson, S.R.; Warren, S.G.; Brandt, R.E.; Grenfell, T.C.; Six, D. Spectral Bidirectional Reflectance of Antarctic Snow: Measurements and Parameterization. J. Geophys. Res. Atmos. 2006, 111, D18106. [Google Scholar] [CrossRef]

- Dumont, M.; Brissaud, O.; Picard, G.; Schmitt, B.; Gallet, J.-C.; Arnaud, Y. High-Accuracy Measurements of Snow Bidirectional Reflectance Distribution Function at Visible and NIR Wavelengths: Comparison with Modelling Results. Atmos. Chem. Phys. 2010, 10, 2507–2520. [Google Scholar] [CrossRef]

- Dumont, M.; Sirguey, P.; Arnaud, Y.; Six, D. Monitoring Spatial and Temporal Variations of Surface Albedo on Saint Sorlin Glacier (French Alps) Using Terrestrial Photography. Cryosphere 2011, 5, 759–771. [Google Scholar] [CrossRef]

- Ryan, J.C.; Hubbard, A.; Box, J.E.; Brough, S.; Cameron, K.; Cook, J.M.; Cooper, M.; Doyle, S.H.; Edwards, A.; Holt, T.; et al. Derivation of High Spatial Resolution Albedo from UAV Digital Imagery: Application over the Greenland Ice Sheet. Front. Earth Sci. 2017, 5, 40. [Google Scholar] [CrossRef]

| Research Area | Article Count |
|---|---|
| Remote Sensing | 35 |
| Imaging Science/Photographic Technology | 29 |
| Environmental Sciences/Ecology | 18 |
| Geology | 12 |
| Agriculture | 8 |
| Engineering | 6 |
| Computer Science | 4 |
| Geochemistry/Geophysics | 3 |
| Instruments/Instrumentation | 3 |
| Chemistry | 2 |
| Physical Geography | 2 |
| Forestry | 1 |
| Spectroscopy | 1 |
| Meteorology/Atmospheric Sciences | 1 |
| Plant Sciences | 1 |

| Sensor | Country | Spectral Bands | FOV | Spatial Resolution | Source |
|---|---|---|---|---|---|
| DJI Mavic 3 | China | Green, red, red-edge, NIR | HFOV: 61.2°; VFOV: 48.1° | 5.3 cm @ h = 100 m | Web [81] |
| MS600 Pro | China | Blue, green, red, two red-edge, NIR | HFOV: 49.6°; VFOV: 38° | 8.65 cm @ h = 120 m | Web [82] |
| MicaSense RedEdge-MX | USA | Blue, green, red, red-edge, NIR | HFOV: 47.2°; VFOV: 35.4° | 8 cm @ h = 120 m | Web [83] |
| Parrot Sequoia | Switzerland | Green, red, red-edge, NIR | HFOV: 70.6°; VFOV: 52.6° | 8.5 cm @ h = 120 m | Web [84] |
| GaiaSky mini3-VN | China | 400–1000 nm; 224 bands @ 2.7 nm, 112 bands @ 5.5 nm, or 56 bands @ 10.8 nm | HFOV: 35.36° @ 16 mm, 25° @ 23 mm; VFOV: 0° | 6.2 cm (16 mm lens, h = 100 m); 4.3 cm (23 mm lens, h = 100 m) | Web [85] |
| HySpex Mjolnir V-1240 | Norway | 400–1000 nm; 200 bands @ 3 nm | HFOV: 20°; VFOV: 0° | 0.27/0.27 mrad (pixel FOV, across/along track) | Web [86] |
| Headwall Co-aligned VNIR-SWIR | USA | 400–2500 nm; VNIR: 270 bands @ 6 nm; SWIR: 267 bands @ 8 nm | HFOV: 17°, 25°, or 34°; VFOV: 0° | VNIR: 3.1 cm @ h = 100 m; SWIR: 6.25 cm @ h = 100 m | Web [87] |
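The FOV and resolution columns also determine how much angular variation a single image contains, which matters for angle effects even in nadir flights. The following minimal sketch assumes an ideal, distortion-free, nadir-pointing pinhole camera over flat terrain and reads the HySpex value of 0.27 mrad as the per-pixel instantaneous field of view (IFOV); the helper names are hypothetical. It computes the across-track swath, 2h·tan(HFOV/2), the nadir ground sampling distance, h·IFOV, and the view zenith angle of the ray through a normalized image position.

```python
import math


def swath_width_m(h_m: float, hfov_deg: float) -> float:
    """Across-track ground swath (m) of a nadir-pointing sensor over flat terrain."""
    return 2.0 * h_m * math.tan(math.radians(hfov_deg) / 2.0)


def gsd_from_ifov_m(h_m: float, ifov_mrad: float) -> float:
    """Nadir ground sampling distance (m), small-angle approximation GSD = h * IFOV."""
    return h_m * ifov_mrad * 1e-3


def view_zenith_deg(u: float, v: float, hfov_deg: float, vfov_deg: float) -> float:
    """View zenith angle (deg) of the ray through normalized image position (u, v).

    u and v run from -1 to 1 across the full HFOV and VFOV of an ideal
    (distortion-free) nadir-pointing pinhole camera, so image-plane position
    is linear in tan(angle). vfov_deg = 0 models a push-broom line sensor,
    for which only u matters.
    """
    tx = u * math.tan(math.radians(hfov_deg) / 2.0)
    ty = v * math.tan(math.radians(vfov_deg) / 2.0)
    return math.degrees(math.atan(math.hypot(tx, ty)))


if __name__ == "__main__":
    # HFOV/VFOV values taken from the sensor table above.
    for name, hfov, vfov in [("DJI Mavic 3", 61.2, 48.1),
                             ("MicaSense RedEdge-MX", 47.2, 35.4),
                             ("HySpex Mjolnir V-1240", 20.0, 0.0)]:
        print(f"{name}: swath at 100 m = {swath_width_m(100, hfov):.1f} m, "
              f"edge VZA = {view_zenith_deg(1, 0, hfov, vfov):.1f} deg, "
              f"corner VZA = {view_zenith_deg(1, 1, hfov, vfov):.1f} deg")
    # Assumes 0.27 mrad is the per-pixel IFOV of the HySpex sensor.
    print(f"HySpex GSD at 100 m = {gsd_from_ifov_m(100, 0.27) * 100:.1f} cm")
```

Under these assumptions the across-track edge of a DJI Mavic 3 frame is already viewed 30.6° from nadir and the corners lie further off-nadir, so reflectance taken from different parts of a nominally nadir image is not angularly homogeneous; for the HySpex Mjolnir V-1240, h·IFOV gives 2.7 cm at h = 100 m. Real lenses add distortion and the platform adds attitude changes, so in practice per-pixel angles would come from the calibrated camera model and the recorded flight attitude.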

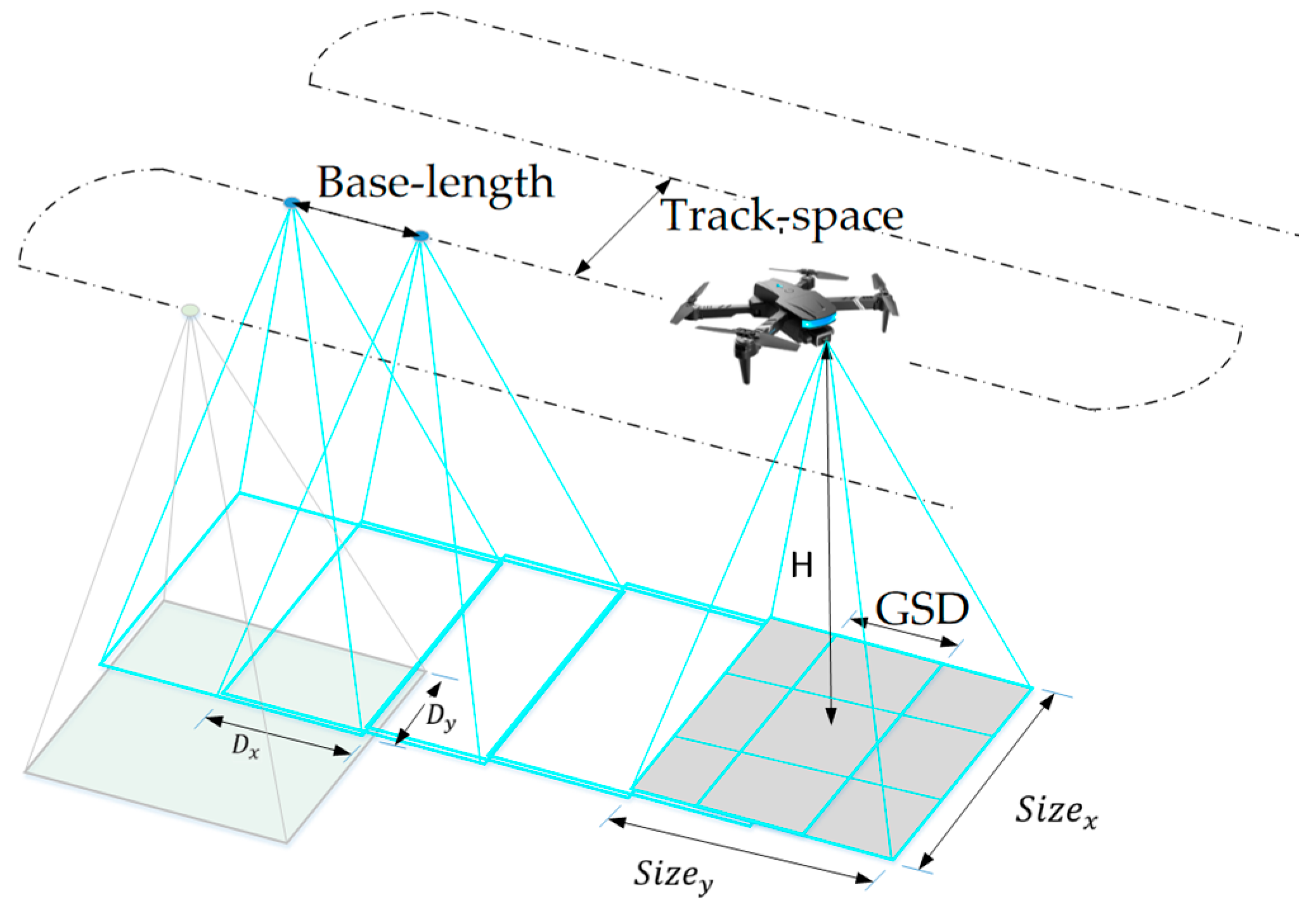

| Method | Technical Characteristics | Limitations |
|---|---|---|
| Nadir-Parallel Flight Path | Orthogonal (nadir) photography with high overlap. Large area coverage per flight. Uniform resolution over flat terrain. | Limited angular diversity. Inconsistent resolution over complex terrain (requires terrain-following algorithms). |
| Oblique-Parallel Flight Path | Observation at large view zenith angles. Rich facade information (side views of buildings and trees). | Shadow interference at low solar elevation angles. High data volume. |
| Crisscrossing Flight Route | Flight lines along the solar principal plane and the perpendicular plane, intersecting over the target. Dedicated flight path for capturing the hot spot effect. Clear sun–object–sensor geometry. | Low flight efficiency. Data inconsistency under changing illumination. |
| Spiral-Descending Flight Path | Constant distance to the target. Hemispherical angular sampling. Full azimuth coverage. Multiple zenith angles. | Low azimuth-angle precision. Stability issues in turbulent airflow. High energy consumption when hovering. |
| Radial-Descending Flight Path | Straight radial flight lines. Precise gimbal pointing. High-precision radiometric calibration. High azimuth-angle precision. | Redundant flight paths. Unsuitable for large-area surveys. |
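The flight-path designs in this table differ mainly in how they sample the sun–target–sensor geometry. As a minimal sketch, assuming a flat local east-north-up frame centred on the target, azimuths measured clockwise from north and pointing from the target toward the sensor (or the sun), and solar angles supplied externally (for example by one of the solar position algorithms in the reference list), the hypothetical helpers below compute the view zenith and azimuth of a UAV position, the relative azimuth, the angular distance from the hot spot direction, the headings of a crisscrossing route, and rings of constant-distance waypoints as a simplified stand-in for spiral- or radial-descending sampling. It is an illustration, not the flight planner of any cited study.

```python
import math
from typing import List, Tuple


def view_geometry(east_m: float, north_m: float, up_m: float) -> Tuple[float, float]:
    """View zenith and azimuth (deg) of a sensor at (east, north, up) relative to the target.

    Azimuth is clockwise from north and points from the target toward the sensor.
    """
    vza = math.degrees(math.atan2(math.hypot(east_m, north_m), up_m))
    vaa = math.degrees(math.atan2(east_m, north_m)) % 360.0
    return vza, vaa


def relative_azimuth(vaa_deg: float, saa_deg: float) -> float:
    """Sensor-minus-sun azimuth folded into [0, 180] deg (0 = sensor on the sun side)."""
    d = abs(vaa_deg - saa_deg) % 360.0
    return 360.0 - d if d > 180.0 else d


def angle_to_sun_deg(vza: float, vaa: float, sza: float, saa: float) -> float:
    """Angle (deg) between target->sensor and target->sun directions; 0 at the hot spot."""
    tv, ts = math.radians(vza), math.radians(sza)
    c = (math.cos(tv) * math.cos(ts)
         + math.sin(tv) * math.sin(ts) * math.cos(math.radians(vaa - saa)))
    return math.degrees(math.acos(max(-1.0, min(1.0, c))))  # clamp rounding error


def crisscross_headings(saa_deg: float) -> Tuple[Tuple[float, float], Tuple[float, float]]:
    """Azimuths of the solar principal plane and of the perpendicular (cross) plane."""
    principal = (saa_deg % 360.0, (saa_deg + 180.0) % 360.0)
    cross = ((saa_deg + 90.0) % 360.0, (saa_deg + 270.0) % 360.0)
    return principal, cross


def hemisphere_waypoints(radius_m: float, zeniths_deg: List[float],
                         azimuth_step_deg: float) -> List[Tuple[float, float, float]]:
    """Hypothetical constant-distance sampling: one ring of (east, north, up) points per zenith.

    A 0 deg ring collapses to repeated points directly above the target.
    """
    pts = []
    n_az = int(round(360.0 / azimuth_step_deg))
    for vza in zeniths_deg:
        t = math.radians(vza)
        for k in range(n_az):
            a = math.radians(k * azimuth_step_deg)
            pts.append((radius_m * math.sin(t) * math.sin(a),   # east
                        radius_m * math.sin(t) * math.cos(a),   # north
                        radius_m * math.cos(t)))                # up
    return pts


if __name__ == "__main__":
    sza, saa = 40.0, 150.0  # assumed solar zenith/azimuth (deg) for illustration
    principal, cross = crisscross_headings(saa)
    print("principal-plane azimuths:", principal, "cross-plane azimuths:", cross)
    for e, n, u in hemisphere_waypoints(30.0, [20.0, 40.0, 60.0], 45.0):
        vza, vaa = view_geometry(e, n, u)
        print(f"VZA={vza:4.1f} VAA={vaa:5.1f} RAA={relative_azimuth(vaa, saa):5.1f} "
              f"angle to sun={angle_to_sun_deg(vza, vaa, sza, saa):5.1f}")
```

With this azimuth convention the hot spot lies at a relative azimuth of 0° with the view zenith equal to the solar zenith; some BRDF studies define the relative azimuth so that the hot spot falls at 180°, so the convention should be checked before comparing data sets.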

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, W.; Cao, H.; Ji, D.; You, D.; Wu, J.; Zhang, H.; Guo, Y.; Zhang, M.; Wang, Y. Angle Effects in UAV Quantitative Remote Sensing: Research Progress, Challenges and Trends. Drones 2025, 9, 665. https://doi.org/10.3390/drones9100665

Zhang W, Cao H, Ji D, You D, Wu J, Zhang H, Guo Y, Zhang M, Wang Y. Angle Effects in UAV Quantitative Remote Sensing: Research Progress, Challenges and Trends. Drones. 2025; 9(10):665. https://doi.org/10.3390/drones9100665

Chicago/Turabian Style: Zhang, Weikang, Hongtao Cao, Dabin Ji, Dongqin You, Jianjun Wu, Hu Zhang, Yuquan Guo, Menghao Zhang, and Yanmei Wang. 2025. "Angle Effects in UAV Quantitative Remote Sensing: Research Progress, Challenges and Trends" Drones 9, no. 10: 665. https://doi.org/10.3390/drones9100665

APA Style: Zhang, W., Cao, H., Ji, D., You, D., Wu, J., Zhang, H., Guo, Y., Zhang, M., & Wang, Y. (2025). Angle Effects in UAV Quantitative Remote Sensing: Research Progress, Challenges and Trends. Drones, 9(10), 665. https://doi.org/10.3390/drones9100665