Convolutional Neural Network and Ensemble Learning-Based Unmanned Aerial Vehicles Radio Frequency Fingerprinting Identification

Abstract

1. Introduction

1.1. Background

1.2. Related Works

1.3. Motivations and Contributions

- (1) The paper analyzes the inherent information content of ADS-B signals and segments each signal according to the information carried by each part. The segments fall into three types: information that is fixed across all transmitters, information that is fixed within the same transmitter, and information that changes constantly.

- (2) A combination of end-to-end and non-end-to-end processing is proposed for the different ADS-B segments: the raw I/Q information is retained, and features from other domains are introduced. In addition, two different CNN models are introduced as primary classifiers.

- (3) An ensemble learning (EL) method is adopted to build new classifiers. The ensemble classifiers fuse the features that the primary classifiers extract from each signal segment, and the ensemble classifier makes the final identification decision. The proposed approach improves both the classification and the generalization abilities of the model and achieves better transmitter identification performance.
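The segmentation in contribution (1) can be sketched at the sample level. The sketch makes three assumptions. First, the I/Q capture is frame-aligned, so sample 0 is the start of the 8 µs 1090 ES preamble. Second, 112 data bits of 1 µs each follow the preamble. Third, the three segment types are the preamble plus DF, then CA plus AA, then ME plus PI. This grouping is one plausible reading rather than the authors' exact boundaries, and in particular CA can switch between ground and airborne codes. The names `SEGMENT_WINDOWS_US`, `bit_time_us` and `segment_iq`, as well as the 10 MHz sampling rate in the example, are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

PREAMBLE_US = 8.0  # 1090 ES preamble duration in microseconds
BIT_US = 1.0       # 1 Mbit/s data rate: one bit per microsecond
FRAME_BITS = 112   # DF17 extended squitter length in bits


def bit_time_us(bit: int) -> float:
    """Start time (µs from preamble start) of 1-based data bit `bit`."""
    return PREAMBLE_US + (bit - 1) * BIT_US


# Hypothetical mapping of the three segment types to time windows [start, end) in µs.
SEGMENT_WINDOWS_US: Dict[str, Tuple[float, float]] = {
    # preamble + DF (bits 1-5): identical for every DF17 transmitter
    "fixed across all transmitters": (0.0, bit_time_us(6)),
    # CA + AA (bits 6-32): assumed constant for one transmitter
    "fixed within one transmitter": (bit_time_us(6), bit_time_us(33)),
    # ME + PI (bits 33-112): message content and parity change from frame to frame
    "constantly changing": (bit_time_us(33), bit_time_us(FRAME_BITS + 1)),
}


def segment_iq(iq: Sequence[complex], fs_hz: float) -> Dict[str, List[complex]]:
    """Slice a frame-aligned I/Q capture into the three segment types."""
    segments: Dict[str, List[complex]] = {}
    for name, (start_us, end_us) in SEGMENT_WINDOWS_US.items():
        lo = round(start_us * fs_hz / 1e6)  # convert µs to a sample index
        hi = round(end_us * fs_hz / 1e6)
        segments[name] = list(iq[lo:hi])
    return segments


if __name__ == "__main__":
    fs = 10e6  # hypothetical 10 MHz sampling rate
    n = round(bit_time_us(FRAME_BITS + 1) * fs / 1e6)  # 120 µs -> 1200 samples
    iq = [complex(k, 0.0) for k in range(n)]  # placeholder samples
    for name, seg in segment_iq(iq, fs).items():
        print(name, len(seg))
```

At 10 MHz the three segments hold 130, 270 and 800 samples. The sketch does not locate the frame start (for example, by preamble detection), which a real capture would need first.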

2. System Model

2.1. Signal Analysis

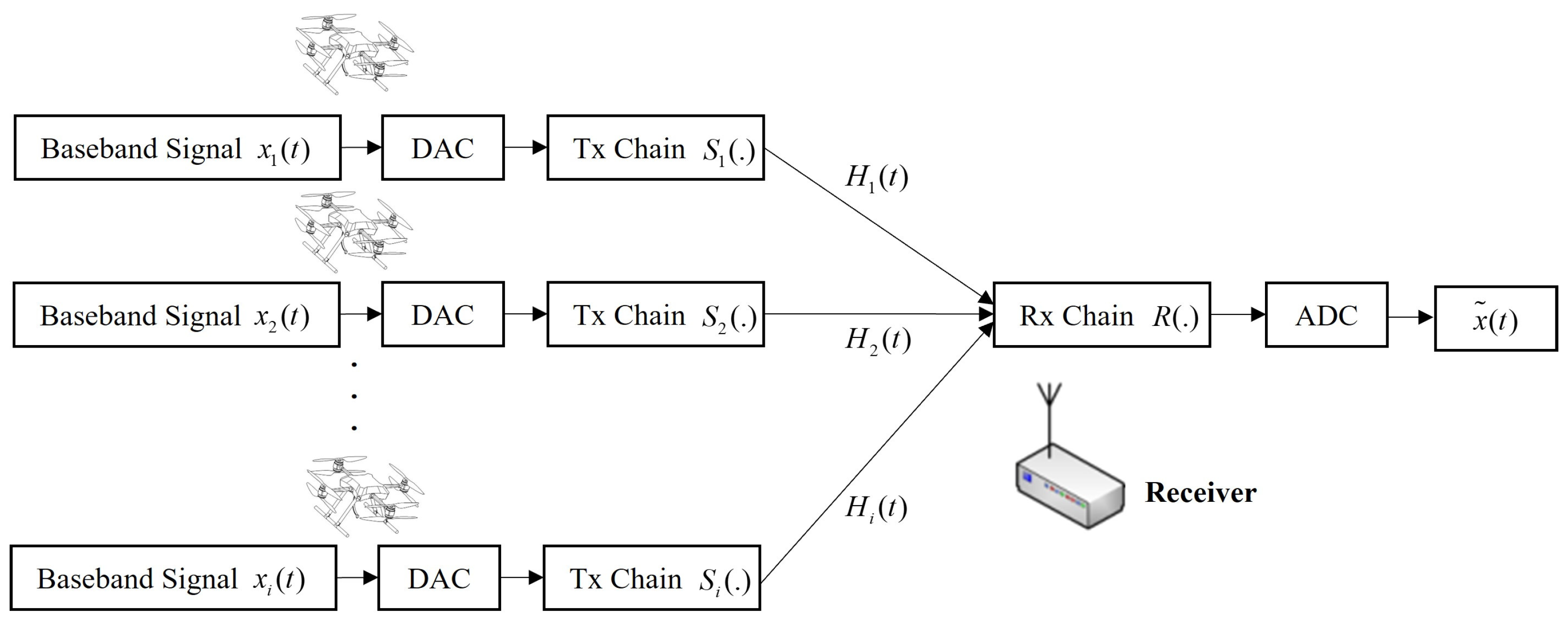

2.2. Signal Transfer Model

3. Proposed Solution

3.1. RFFI General Process

3.2. Signal Pre-Processing Step

3.3. RFFI with CNN

3.4. Ensemble Learning

- (1) Direct Averaging Method: The prediction result is the average of the classification confidences produced by the different classifiers.

- (2) Weighted Average Method: Weighting factors are introduced to adjust the contribution of each primary classifier within the ensemble classifier, which improves the recognition rate.

- (3) Voting Method: The output of each primary classifier is first converted into a predicted category. The categories are then put to a vote, and the category predicted most often is taken as the final prediction result.
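The three fusion rules can be sketched in plain Python. The sketch assumes that each primary classifier outputs a vector of class confidences, such as softmax outputs. The function names, the lowest-index tie-breaking rule in `majority_vote`, and the example weights are illustrative assumptions. The paper's own weight selection is not reproduced here.

```python
from collections import Counter
from typing import List, Sequence

Confidences = Sequence[Sequence[float]]  # one confidence vector per primary classifier


def direct_average(probs: Confidences) -> List[float]:
    """Direct averaging: mean of the classifiers' confidence vectors."""
    return [sum(col) / len(probs) for col in zip(*probs)]


def weighted_average(probs: Confidences, weights: Sequence[float]) -> List[float]:
    """Weighted averaging: weights are normalized so that they sum to 1."""
    total = sum(weights)
    return [sum(w * p for w, p in zip(weights, col)) / total for col in zip(*probs)]


def argmax(values: Sequence[float]) -> int:
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def majority_vote(probs: Confidences) -> int:
    """Voting: each classifier votes for its top class; the most-voted class wins.
    Ties are broken in favor of the lowest class index (an assumption)."""
    votes = Counter(argmax(p) for p in probs)
    best = max(votes.values())
    return min(c for c, v in votes.items() if v == best)


if __name__ == "__main__":
    # Hypothetical confidences from three primary classifiers over three transmitters.
    probs = [[0.6, 0.3, 0.1],
             [0.2, 0.7, 0.1],
             [0.5, 0.4, 0.1]]
    print(argmax(direct_average(probs)))                     # 1
    print(argmax(weighted_average(probs, [0.2, 0.6, 0.2])))  # 1
    print(majority_vote(probs))                              # 0
```

The example shows that the rules can disagree. Averaging picks transmitter 1 because the second classifier is very confident, while voting ignores confidence levels and picks transmitter 0, which two of the three classifiers rank first.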

4. Performance Evaluation

4.1. Experiment Setup

4.2. Performance Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicles |
| ADS-B | Automatic Dependent Surveillance-Broadcast |
| RFFI | Radio Frequency Fingerprinting Identification |
| CNN | Convolutional Neural Networks |
| IoT | Internet of Things |
| 1090 ES | 1090 MHz Mode S Extended Squitter |
| UAT | Universal Access Transceiver |
| DAC | Digital-to-Analog Converter |
| ADC | Analog-to-Digital Converter |
| DF | Downlink Format |
| CRC | Cyclic Redundancy Check |
| SNR | Signal-to-Noise Ratio |


| Bit | 1–5 | 6–8 | 9–32 | 33–88 | 89–112 |
|---|---|---|---|---|---|
| Information | DF = 10001 | CA (capability) | AA (ICAO address) | Message (ME) | PI (parity) |
| Number of bits | 5 | 3 | 24 | 56 | 24 |
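The bit layout in the table can be checked with a short parser. The sketch below slices a 112-bit DF17 frame at the listed bit positions. It also recomputes the 24-bit PI parity using the Mode S CRC generator polynomial, written as the 25-bit value 0x1FFF409 to include its leading 1. Because PI depends on every message bit, it changes along with the message content. The function names are hypothetical. The sample frame 8D4840D6202CC371C32CE0576098 is a widely used example from public ADS-B decoding material, not a frame from the paper's dataset.

```python
GENERATOR = 0x1FFF409  # Mode S CRC polynomial (25 bits, including the leading 1)


def hex_to_bits(msg_hex: str) -> str:
    """Hex frame -> string of '0'/'1' characters, keeping leading zeros."""
    return bin(int(msg_hex, 16))[2:].zfill(len(msg_hex) * 4)


def parse_df17(msg_hex: str) -> dict:
    """Split a 112-bit frame using the 1-based, inclusive bit ranges of the table."""
    bits = hex_to_bits(msg_hex)
    if len(bits) != 112:
        raise ValueError("expected a 112-bit (28 hex digit) frame")

    def field(first: int, last: int) -> int:
        return int(bits[first - 1:last], 2)

    return {
        "DF": field(1, 5),
        "CA": field(6, 8),
        "AA": format(field(9, 32), "06X"),
        "ME": format(field(33, 88), "014X"),
        "PI": format(field(89, 112), "06X"),
    }


def crc_remainder(bits: str) -> int:
    """Remainder of the bit string divided by GENERATOR over GF(2)."""
    value = int(bits, 2)
    for shift in range(len(bits) - 25, -1, -1):
        if (value >> (shift + 24)) & 1:  # leading bit of the current window is set
            value ^= GENERATOR << shift
    return value


def parity(first_88_bits: str) -> int:
    """24-bit PI value for a DF17 frame: CRC of bits 1-88 padded with 24 zeros."""
    return crc_remainder(first_88_bits + "0" * 24)


if __name__ == "__main__":
    frame = "8D4840D6202CC371C32CE0576098"
    print(parse_df17(frame))
    # {'DF': 17, 'CA': 5, 'AA': '4840D6', 'ME': '202CC371C32CE0', 'PI': '576098'}
```

For an intact DF17 frame, the CRC remainder over all 112 bits is zero. This gives a simple way to discard corrupted captures before fingerprint extraction.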

| Coding (binary) | Coding (decimal) | Meaning |
|---|---|---|
| 000 | 0 | Level 1 transponder |
| 001 | 1 | Reserved |
| 010 | 2 | Reserved |
| 011 | 3 | Reserved |
| 100 | 4 | Level 2 or above transponder, with the ability to set “CA” code 7, on the ground |
| 101 | 5 | Level 2 or above transponder, with the ability to set “CA” code 7, airborne |
| 110 | 6 | Level 2 or above transponder, with the ability to set “CA” code 7, either on the ground or airborne |
| 111 | 7 | The “DR” field is not equal to zero (0), or the “FS” field equals 2, 3, 4, or 5; either on the ground or airborne |

| Parameter Name | Parameter Value |
|---|---|
| MaxEpochs | 45 |
| InitialLearnRate | 0.01 |
| MiniBatchSize | 16 |
| LearnRateDropFactor | 0.2 |
| LearnRateDropPeriod | 9 |
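The parameter names in the training table match those of MATLAB's `trainingOptions`. In that API, the drop factor and drop period take effect only with a piecewise learning-rate schedule. The table does not state the schedule type, so a piecewise schedule is an assumption here. Under that assumption, the rate is multiplied by LearnRateDropFactor after every LearnRateDropPeriod epochs. The Python sketch below uses hypothetical names and tabulates the resulting learning rates over the 45 epochs.

```python
MAX_EPOCHS = 45
INITIAL_LEARN_RATE = 0.01
LEARN_RATE_DROP_FACTOR = 0.2
LEARN_RATE_DROP_PERIOD = 9
MINI_BATCH_SIZE = 16  # listed for completeness; it does not affect the schedule


def piecewise_learn_rate(epoch: int) -> float:
    """Learning rate in 1-based `epoch`: drops by the factor after each full period."""
    drops = (epoch - 1) // LEARN_RATE_DROP_PERIOD
    return INITIAL_LEARN_RATE * LEARN_RATE_DROP_FACTOR ** drops


if __name__ == "__main__":
    for start in range(1, MAX_EPOCHS + 1, LEARN_RATE_DROP_PERIOD):
        end = min(start + LEARN_RATE_DROP_PERIOD - 1, MAX_EPOCHS)
        print(f"epochs {start}-{end}: {piecewise_learn_rate(start):.1e}")
    # epochs 1-9: 1.0e-02
    # epochs 10-18: 2.0e-03
    # epochs 19-27: 4.0e-04
    # epochs 28-36: 8.0e-05
    # epochs 37-45: 1.6e-05
```

Under this assumption, the learning rate in the final block of epochs is 0.2^4 = 1/625 of the initial value.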

| Method | Maximum Accuracy (%) |
|---|---|
| ELWAM-CNN | 97.5 |
| ELVM-CNN | 95.79 |
| ELWAM-IQ | 90.71 |
| ELVM-IQ | 88.54 |
| ELWAM-RES | 93.01 |
| ELVM-RES | 91.54 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, Y.; Zhang, X.; Wang, S.; Zhang, W. Convolutional Neural Network and Ensemble Learning-Based Unmanned Aerial Vehicles Radio Frequency Fingerprinting Identification. Drones 2024, 8, 391. https://doi.org/10.3390/drones8080391
