Multi-UAVs Tracking Non-Cooperative Target Using Constrained Iterative Linear Quadratic Gaussian

Abstract

1. Introduction

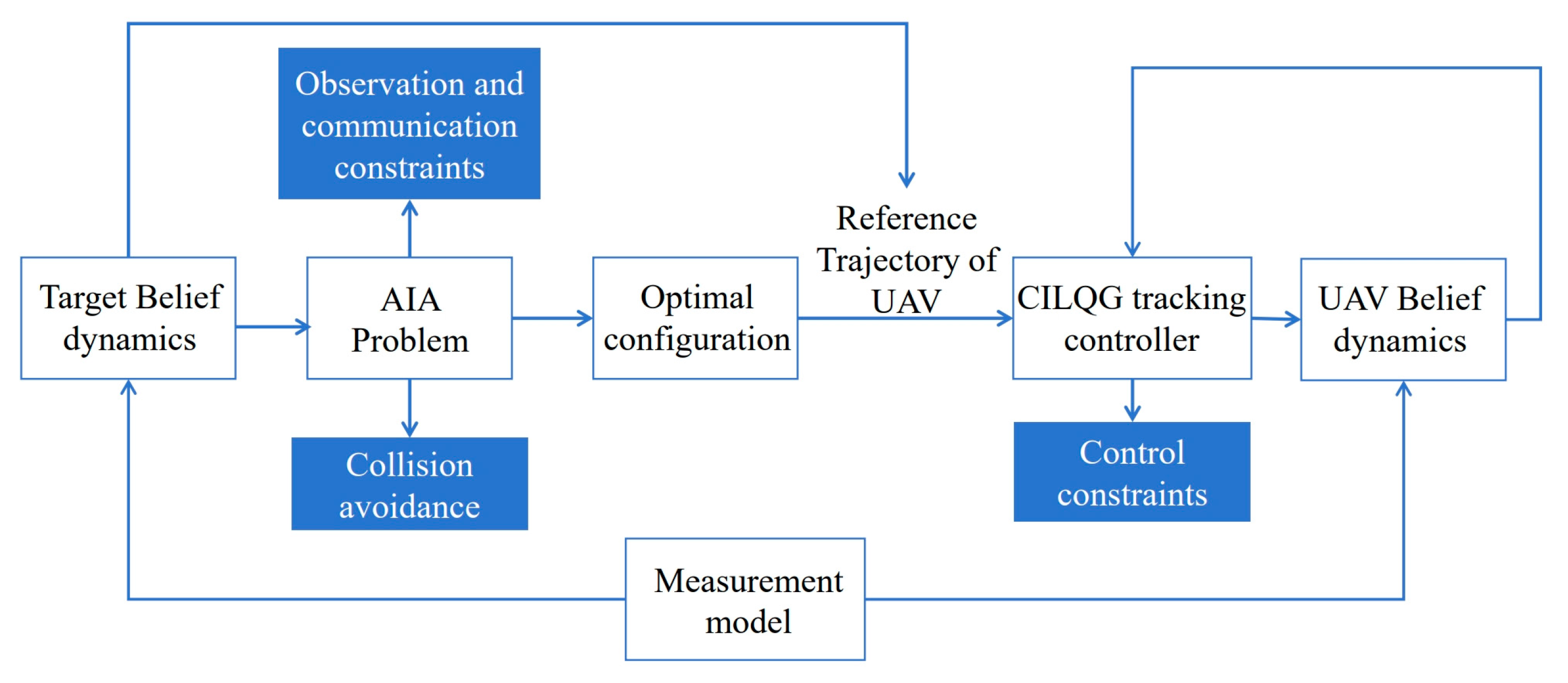

- Bilateral uncertainty, arising from the motion of both the target and the UAVs, poses significant challenges to control, particularly in dynamic target tracking scenarios. To address this challenge, we propose a two-stage optimization process for tracking. The first stage minimizes the target uncertainty using the Fisher Information Matrix (FIM) within the framework of an active information acquisition (AIA) problem. The second stage tracks the resulting reference trajectories using CILQG, which reduces the uncertainty of the UAVs themselves.

- The interdependence between target motion estimation and UAV trajectory tracking is also a crucial aspect of our approach. Because the two processes are intertwined, the bilateral uncertainty has an amplified effect on both the accuracy of the target estimate and the precision of the UAVs' trajectories. Furthermore, the uncertainty inherent in target motion calls for a control method that can adapt rapidly to the target's movements, a need made more pressing by limited computational resources and the requirement for real-time performance. The control method must therefore balance computational speed and accuracy. Although rarely applied to target tracking, the Constrained Iterative Linear Quadratic Gaussian (CILQG) method offers high computational efficiency. It is also robust to noise and handles system uncertainties effectively, because it plans over belief states and tracks trajectory profiles to limit uncertainty propagation. Conventional solvers, by contrast, typically treat the estimated target states as deterministic values within the optimization problem and neglect both target and robot uncertainties, which can lead to divergence and suboptimal control performance.
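To make the contrast between belief states and deterministic states concrete, the following minimal sketch propagates a Gaussian belief (mean and covariance) of the target over a short horizon. The constant-velocity model, the time step, the noise levels and the function name `propagate_belief` are assumptions chosen for illustration and are not taken from the paper; a deterministic solver would keep only the mean and discard the growing covariance.

```python
import numpy as np

def propagate_belief(mean, cov, F, Q, steps):
    """Propagate a Gaussian belief through x_{k+1} = F x_k + w, with w ~ N(0, Q)."""
    beliefs = [(mean, cov)]
    for _ in range(steps):
        mean = F @ mean               # the mean follows the nominal dynamics
        cov = F @ cov @ F.T + Q       # the covariance grows with process noise
        beliefs.append((mean, cov))
    return beliefs

dt = 1.0                              # assumed time step [s]
# Assumed planar constant-velocity target, state = [x, y, vx, vy]
F = np.array([[1.0, 0.0, dt, 0.0],
              [0.0, 1.0, 0.0, dt],
              [0.0, 0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0, 1.0]])
Q = 0.1 * np.eye(4)                   # assumed process noise covariance
mean0 = np.array([0.0, 0.0, 20.0, 0.0])   # assumed initial target state
cov0 = np.eye(4)                      # assumed initial covariance

beliefs = propagate_belief(mean0, cov0, F, Q, steps=4)
for k, (m, P) in enumerate(beliefs):
    print(k, m[:2], np.trace(P[:2, :2]))
```

The printed trace of the position covariance increases at every step, which is the information a belief-space planner can act on and a mean-only formulation cannot.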

2. Description of the Investigated Task

3. Model for Target Tracking Problem

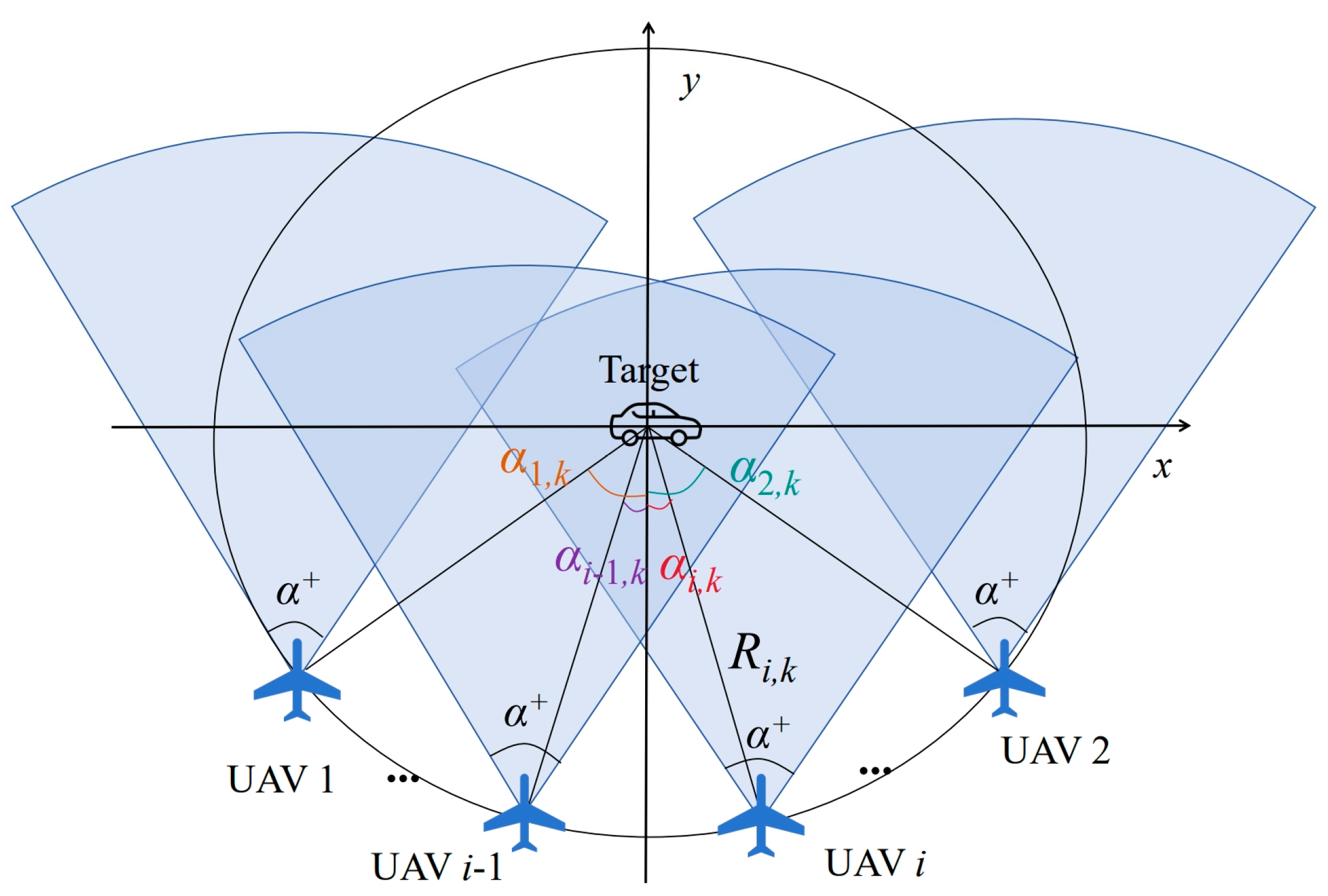

3.1. Measurement Acquisition Model

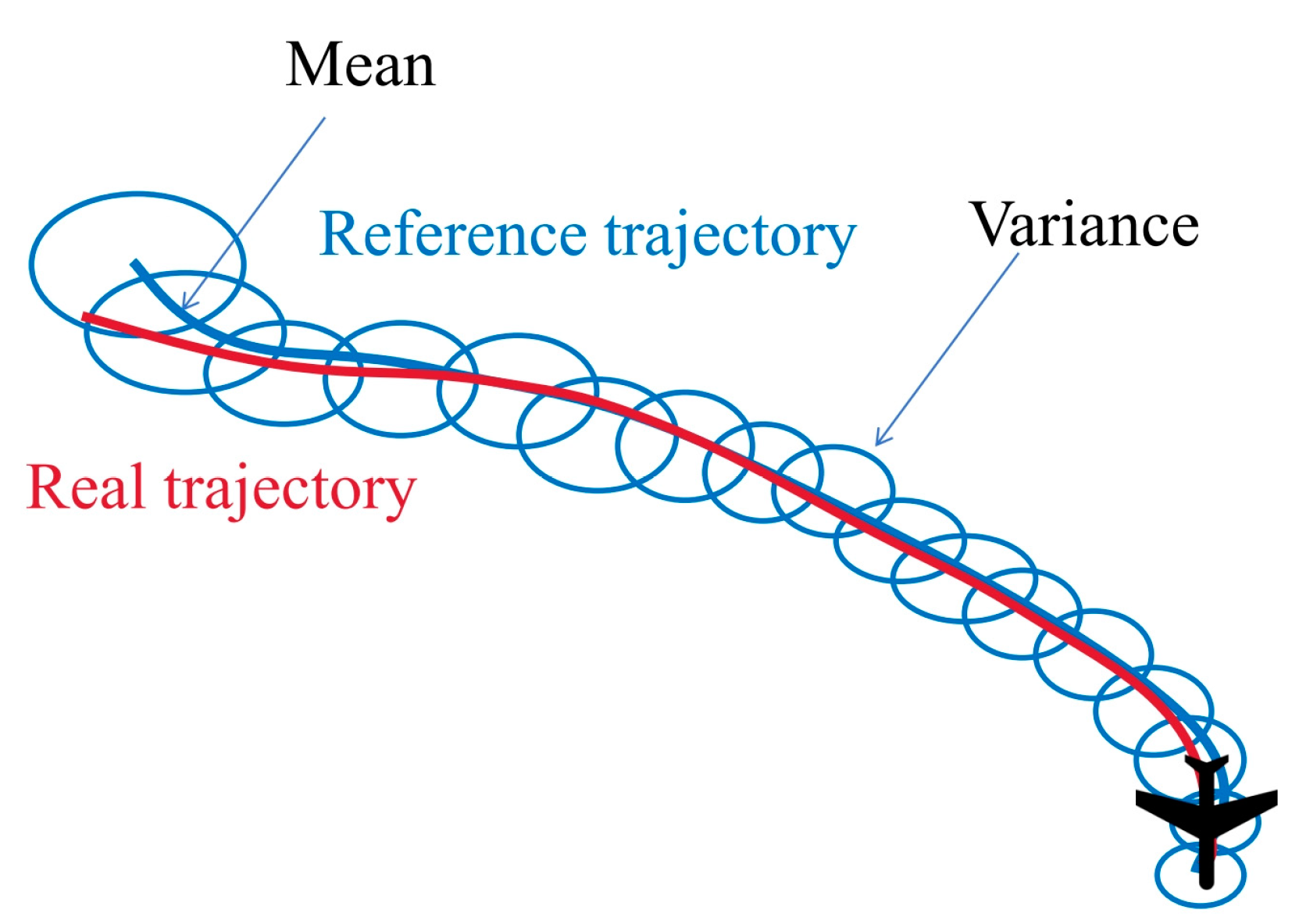

3.2. Belief Dynamics of UAV

3.3. Belief Dynamics of the Target

3.4. Obstacle Model

4. Optimization Problem behind the Active Information Acquisition

4.1. Optimization Problem about the Configuration of the Swarm

4.1.1. Pre-Designed Configuration

4.1.2. Optimization Problem for and

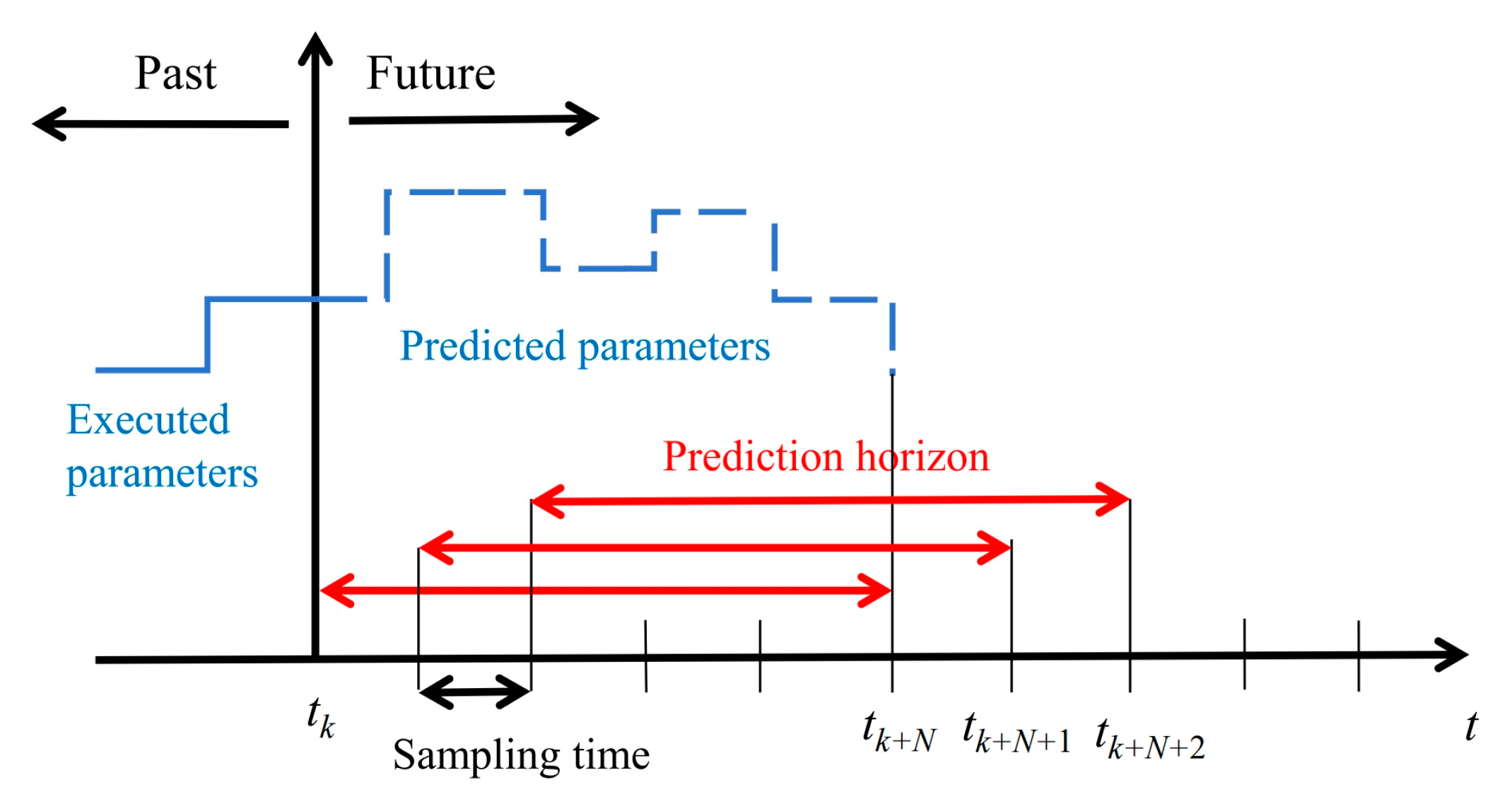

4.2. Optimization Problem for Trajectory Tracking

5. CILQG for Active Information Acquisition Problem

5.1. Backward Pass

5.2. Forward Pass

5.3. Constraints Handling by CILQG

| Algorithm 1 CILQG for trajectory tracking |
|---|
| Input: , , , |
| Output: |
| 1: , |
| 2: for k = 0 to Termination |
| 3: |
| 4: via Equations (5) and (6) |
| 5: for i = 1 to 3 (i is the index of the UAV) |
| 6: |
| 7: Calculate the reference trajectory according to Equations (19) to (21) |
| 8: according to Equations (40) and (41) |
| 9: using Equation (22) |
| 10: for ii = 1 to iteration (ii is the CILQG iteration index) |
| 11: using Equations (30) to (39) |
| 12: if cost_redu < expected_cost_redu (The gradient and Hessian of the cost) |
| 13: break |
| 14: while (flag = 0) |
| 15: |
| 16: cost |
| 17: flag = (cost-currentcost)/cost_redu > threshold |
| 18: if flag = 1 |
| 19: cost currentcost, input Uk, state |
| 20: else |
| 21: λ ← λ*learnspeed |
| 22: currentcost cost, Ui,k input |
| 23: Update |
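The acceptance test in steps 14 to 22 of Algorithm 1 resembles the backtracking line search commonly used in the forward pass of iterative LQR/LQG methods. The sketch below shows that generic pattern on a scalar toy problem. Reading λ as a step-size factor and `learnspeed` as its shrink rate is an assumption, and the names `backtracking_accept`, `cost_fn`, `expected_reduction_fn` and `shrink` are hypothetical.

```python
def backtracking_accept(cost_fn, u, du, expected_reduction_fn,
                        threshold=0.1, shrink=0.5, max_tries=20):
    """Shrink the step until the actual cost drop is a fair share of the predicted one."""
    current_cost = cost_fn(u)
    alpha = 1.0                                # plays the role of lambda (assumption)
    for _ in range(max_tries):
        candidate = u + alpha * du
        cost = cost_fn(candidate)
        expected = expected_reduction_fn(alpha)   # predicted decrease, positive if useful
        if expected > 0 and (current_cost - cost) / expected > threshold:
            return candidate, cost, alpha      # accept: cost dropped enough
        alpha *= shrink                        # reject: lambda <- lambda * learnspeed
    return u, current_cost, 0.0                # no acceptable step found

# Toy problem: minimize (u - 3)^2 from u = 0 with an overshooting step du = 12.
def cost_fn(u):
    return (u - 3.0) ** 2

def expected_reduction(alpha):
    # Decrease predicted by the exact quadratic model at u = 0 along du = 12.
    return 72.0 * alpha - 144.0 * alpha ** 2

result = backtracking_accept(cost_fn, 0.0, 12.0, expected_reduction)
print(result)   # (3.0, 0.0, 0.25)
```

The full step and the half step are both rejected because the model predicts no decrease for them; the quarter step lands on the minimizer and is accepted.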

6. Simulation

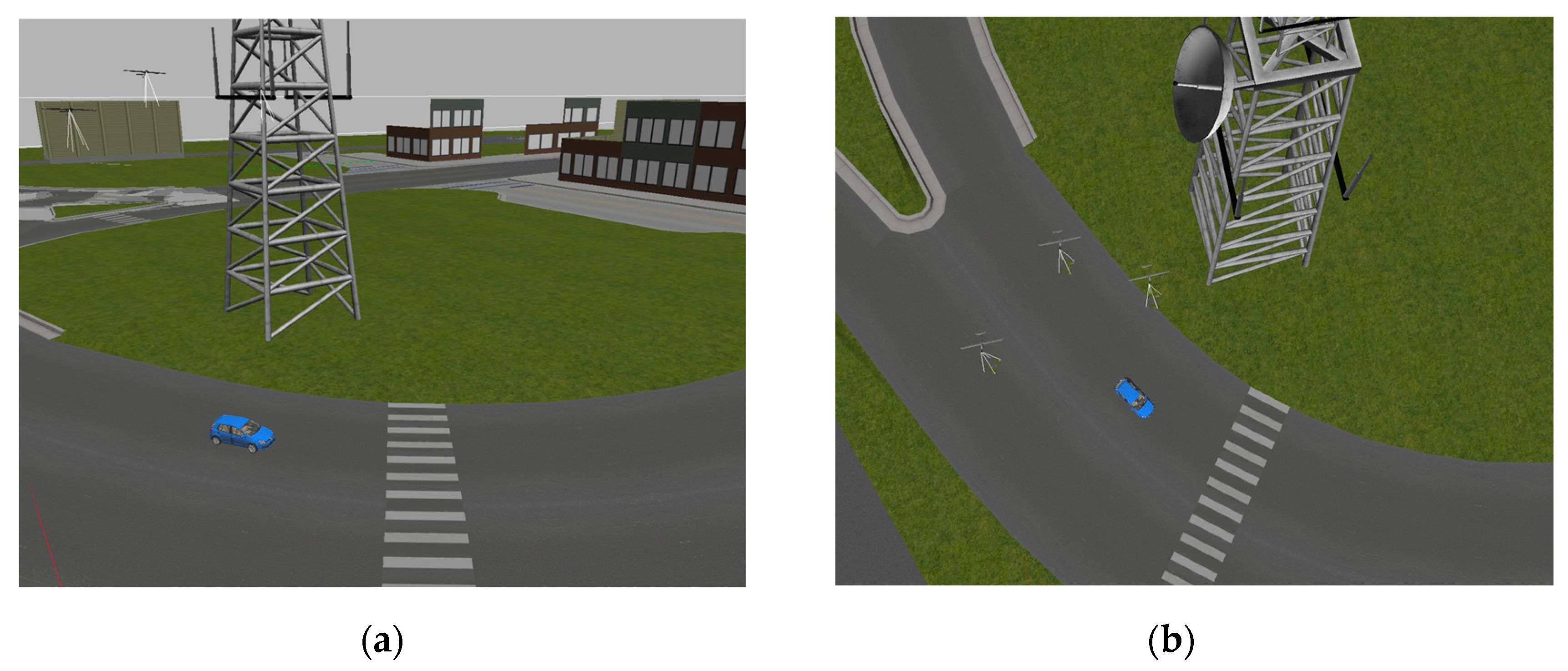

6.1. Simulation Setup and ROS Scenario

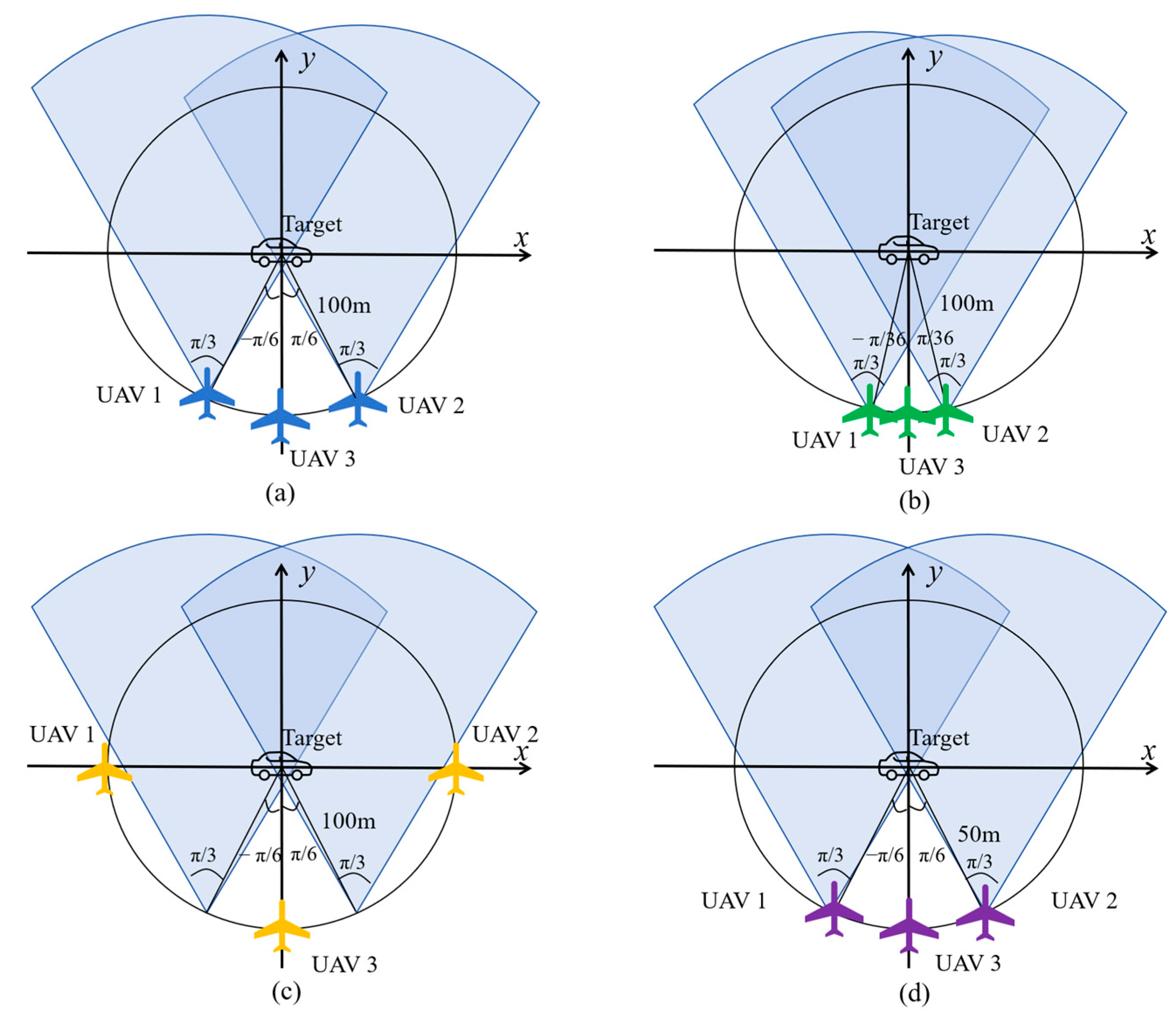

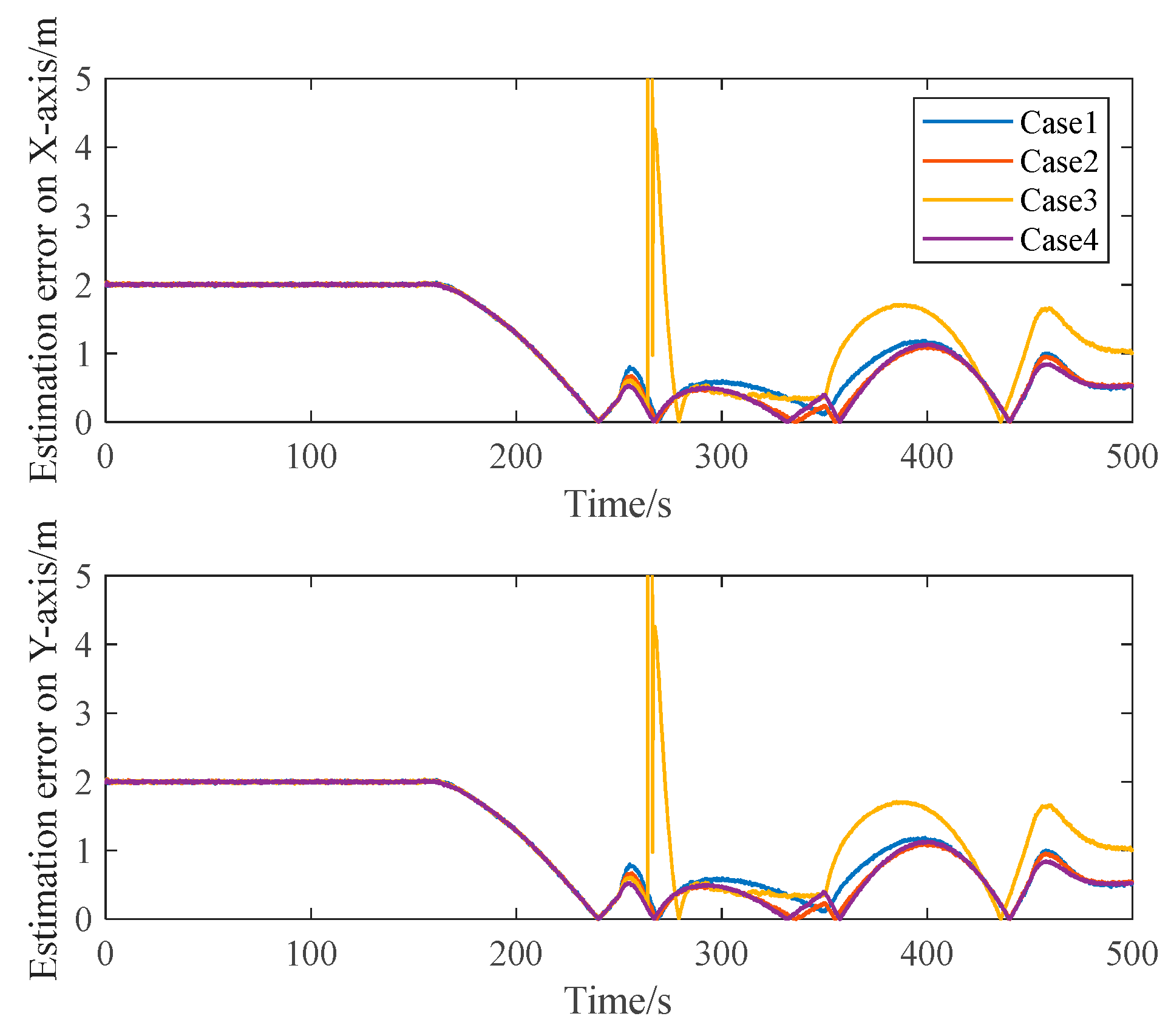

6.2. Comparison of Different Configurations

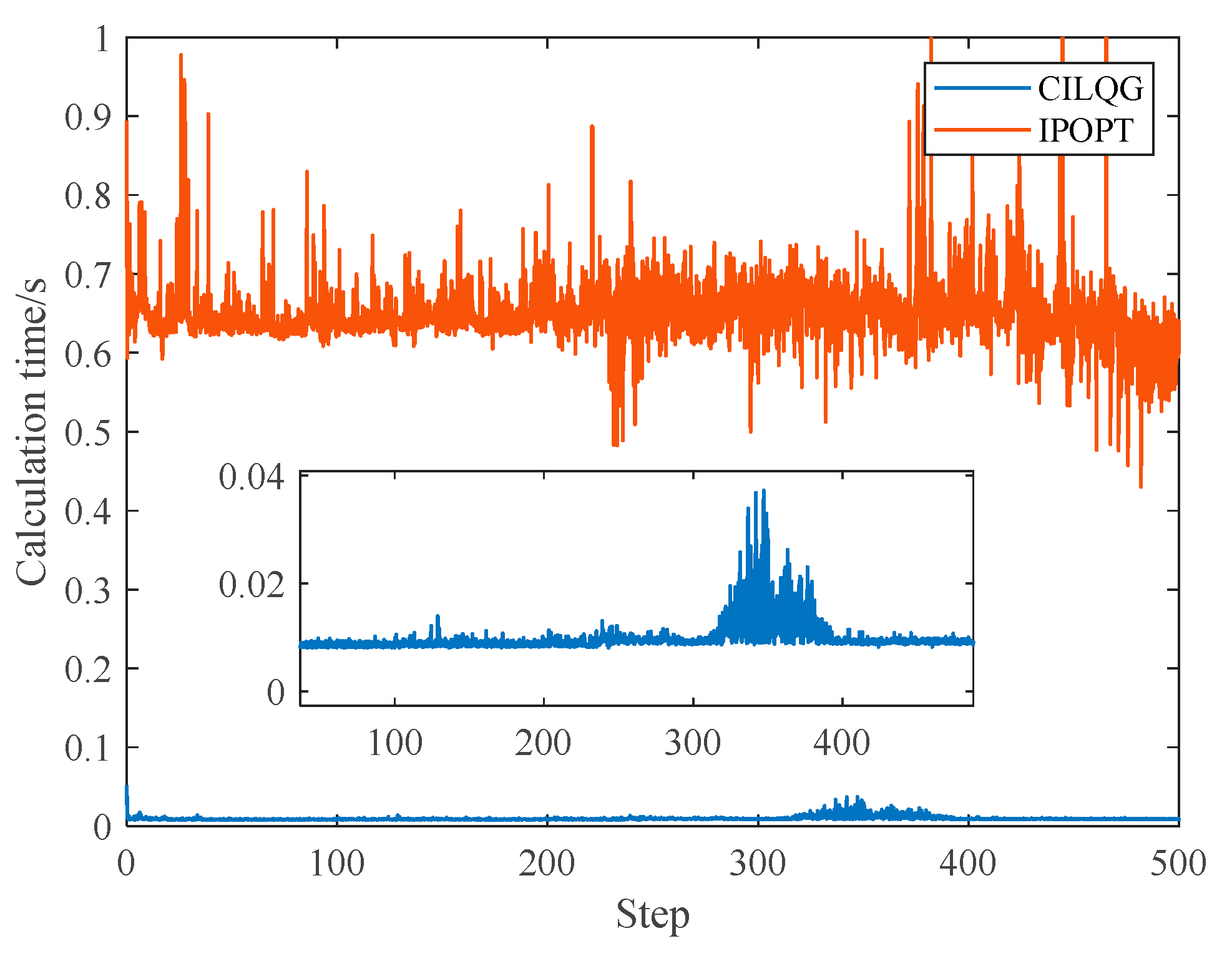

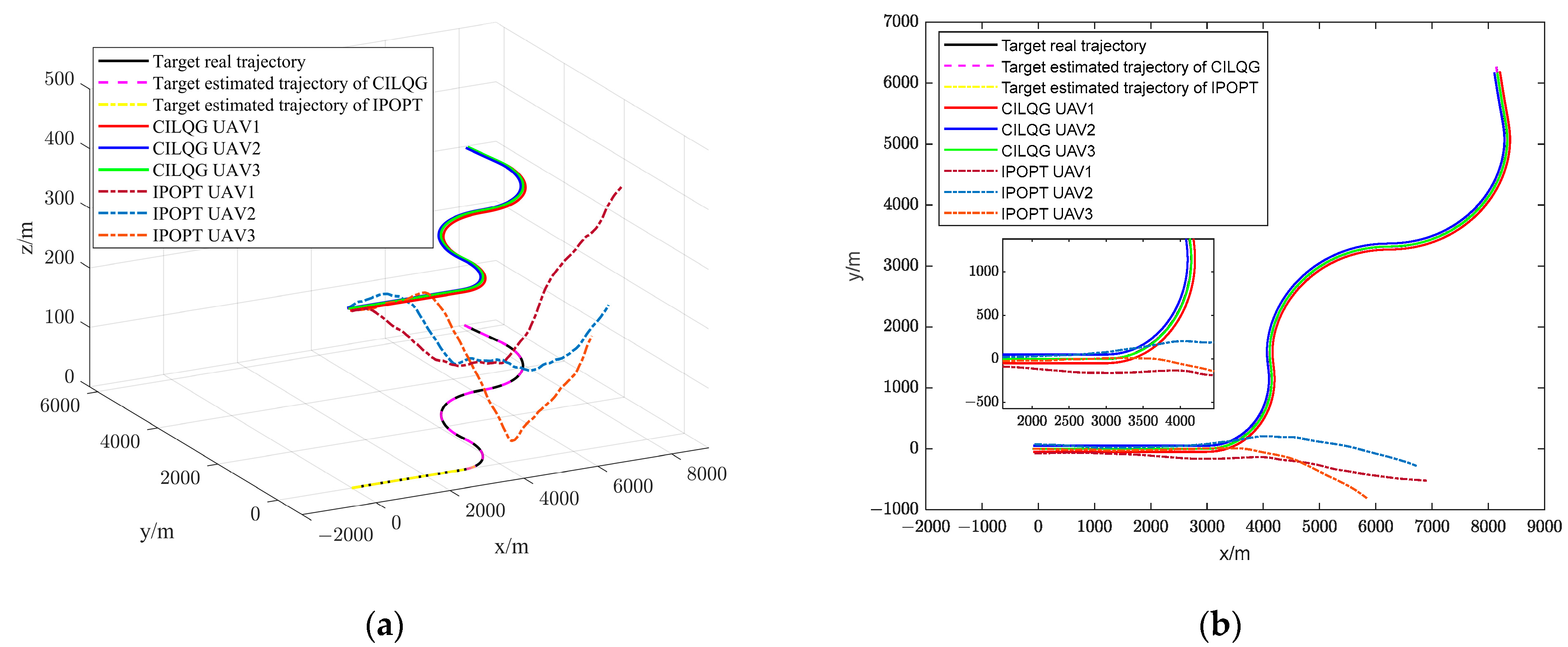

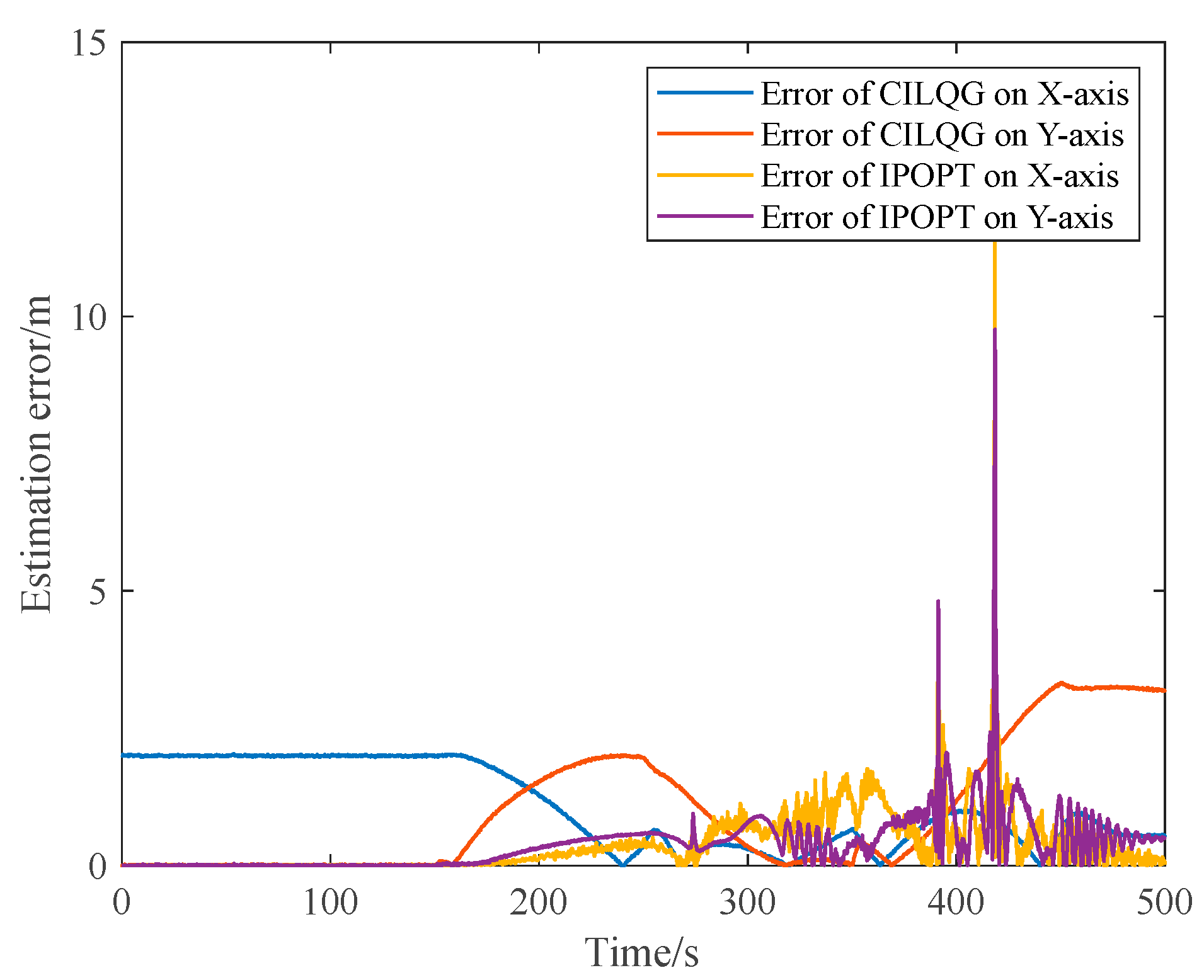

6.3. Comparison of IPOPT and CILQG

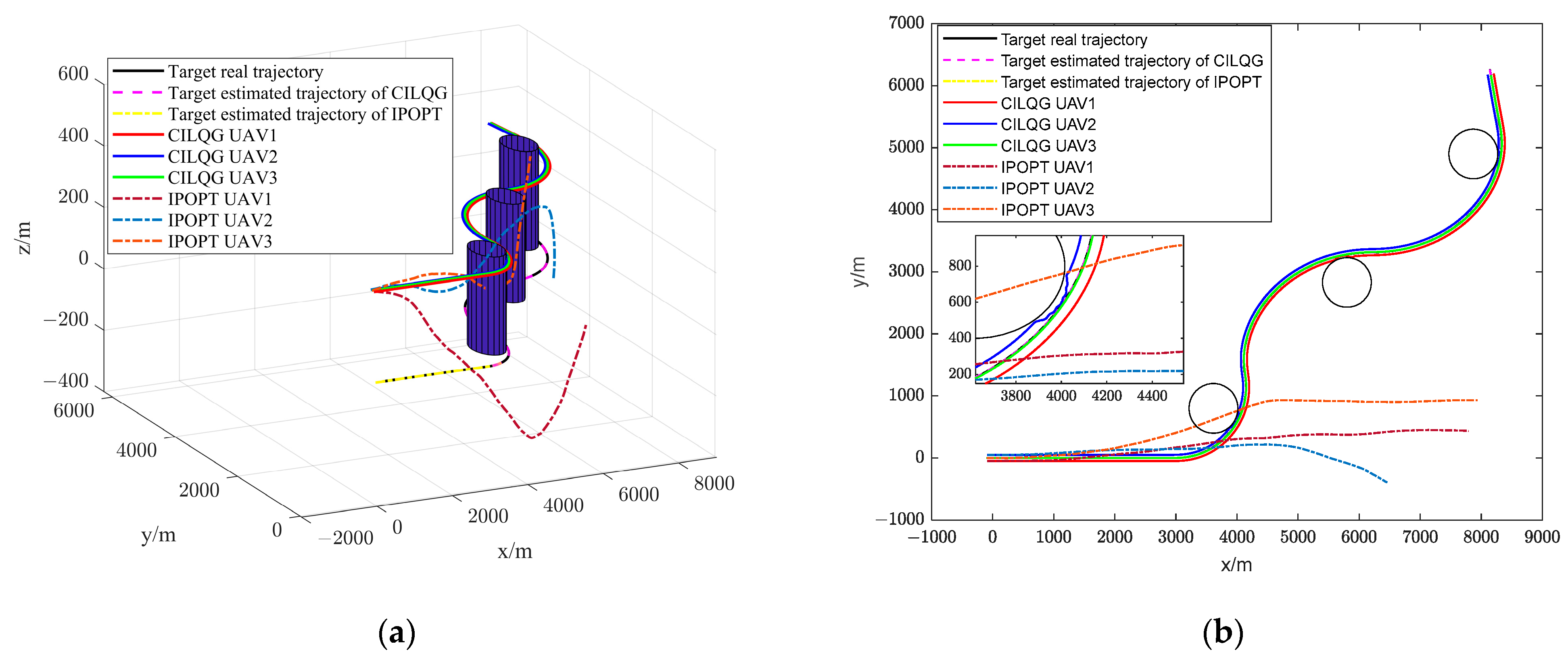

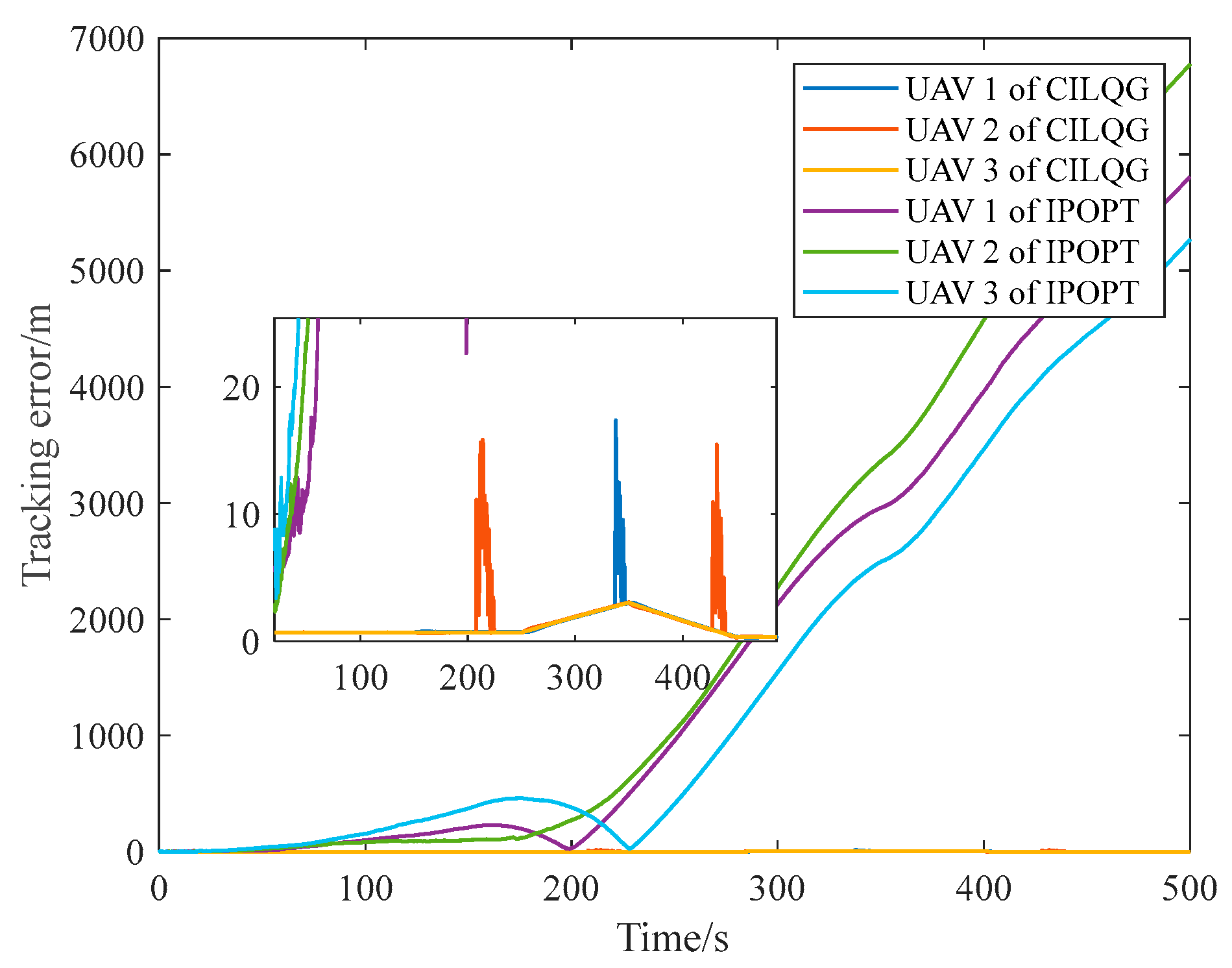

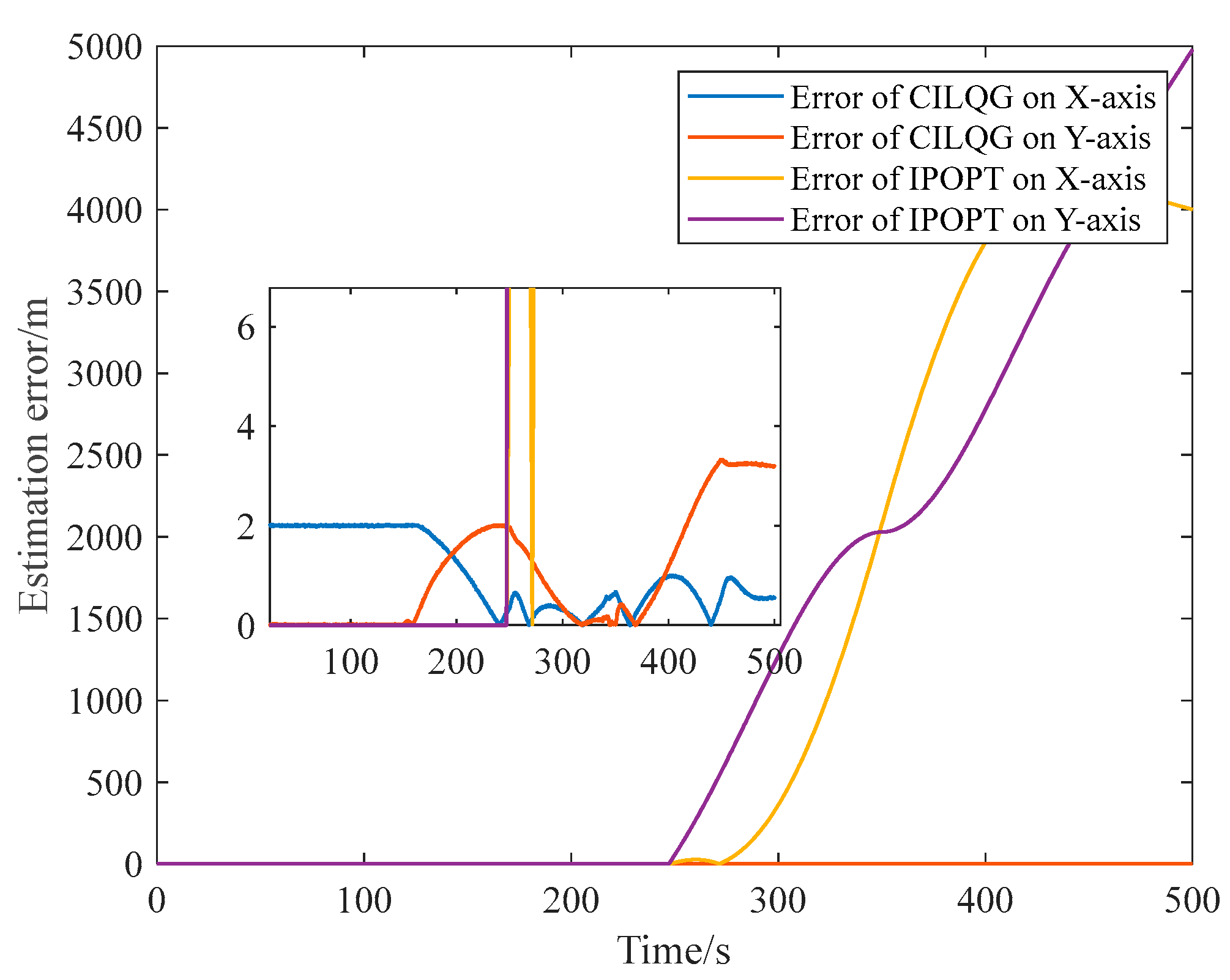

6.4. Tracking with Obstacle

7. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value |

|---|---|

| Number of the UAVs I | 3 |

| Number of obstacles P | 3 |

| Obstacle 1 | O1 [(3600 m, 800 m), 320 m, 400 m] |

| Obstacle 2 | O2 [(5800 m, 2810 m), 321 m, 400 m] |

| Obstacle 3 | O3 [(7850 m, 4900 m), 322 m, 400 m] |

| UAV initial position | [−50 m, −86.6 m, 300 m], [50 m, −86.6 m, 301 m], [0 m, −100 m, 302 m] |

| Target initial position | [0 m, 0 m, 0 m] |

| Relative angle αi,0 | [−π/6 rad, 0 rad, π/6 rad] |

| Relative distance Ri,0 | [100 m, 100 m, 100 m] |

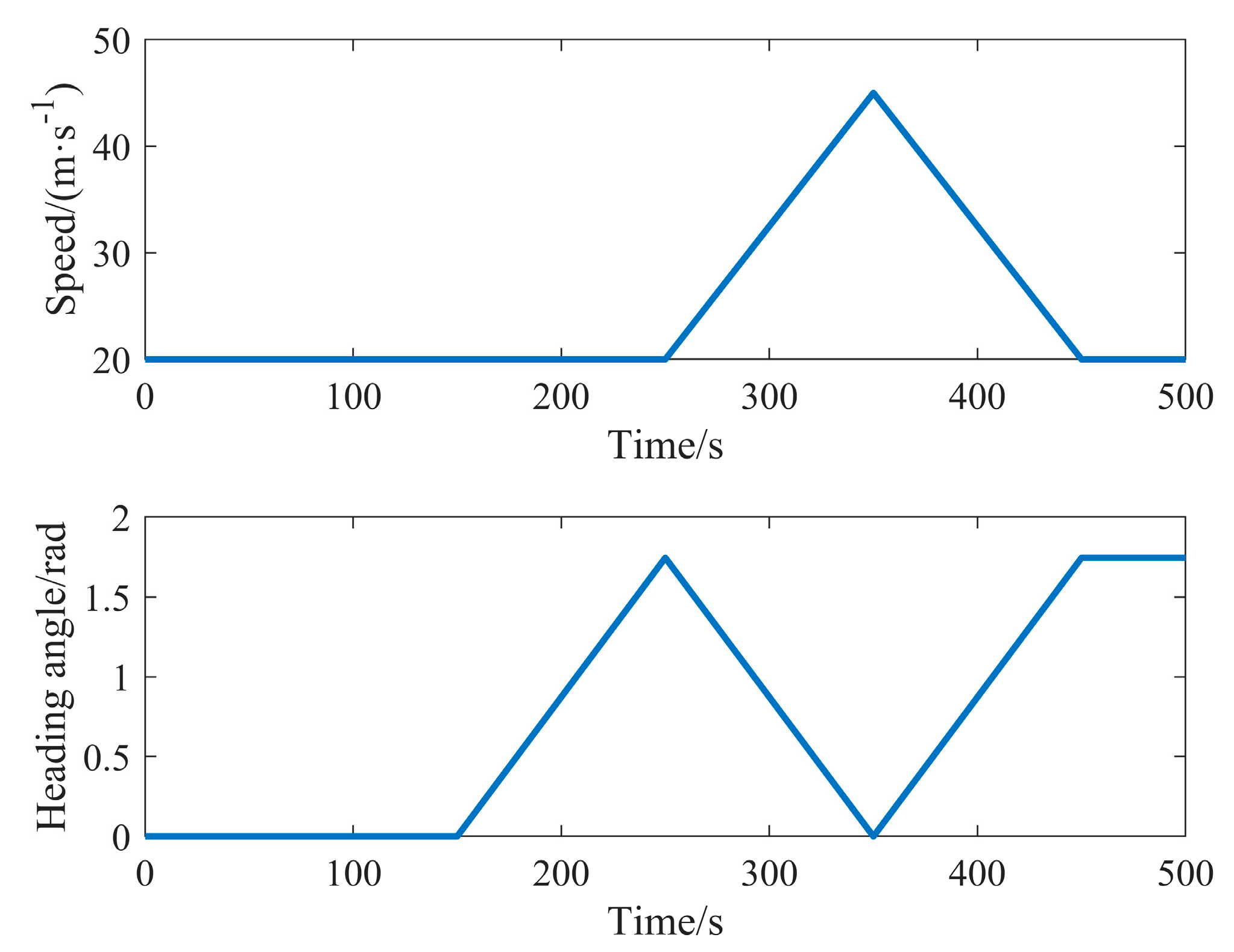

| Initial linear velocity and angular velocity | [20 m/s, 0 rad/s, 0 rad/s] |

| Initial heading and pitch angle | [0 rad, 0 rad] |

| UAV angle range | [−π/20 rad, π/20 rad] |

| Distance measurement noise | m |

| Angle measurement noise | m |

| Length of horizon | 4 |
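As an illustration of how the obstacle entries in the parameter table might be used, the sketch below computes the clearance between a UAV position and the three obstacles. Reading each entry as the horizontal center, radius and height of a vertical cylinder is an assumption, since the obstacle model itself is not reproduced here; the names `Cylinder`, `horizontal_clearance` and `min_clearance` are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Cylinder:
    cx: float       # center x [m]
    cy: float       # center y [m]
    radius: float   # assumed meaning of the second entry [m]
    height: float   # assumed meaning of the third entry [m]

# Values copied from the parameter table; their interpretation is an assumption.
OBSTACLES = [
    Cylinder(3600.0, 800.0, 320.0, 400.0),
    Cylinder(5800.0, 2810.0, 321.0, 400.0),
    Cylinder(7850.0, 4900.0, 322.0, 400.0),
]

def horizontal_clearance(x, y, z, obs):
    """Signed horizontal distance to the cylinder wall; infinite when flying above it."""
    if z > obs.height:
        return math.inf
    return math.hypot(x - obs.cx, y - obs.cy) - obs.radius

def min_clearance(x, y, z, obstacles=OBSTACLES):
    """Smallest clearance over all obstacles; negative means the point is inside one."""
    return min(horizontal_clearance(x, y, z, o) for o in obstacles)

print(min_clearance(4020.0, 800.0, 300.0))   # 100.0
```

A constraint of the form `min_clearance(...) >= margin` is one simple way such a quantity could enter a trajectory optimizer; the paper's own constraint formulation may differ.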

| Case | α1,k (rad) | α2,k (rad) | α3,k (rad) | R1,k, R2,k, R3,k (m) |
|---|---|---|---|---|
| Case 1 | −π/6 | π/6 | 0 | 100 |
| Case 2 | −π/36 | π/36 | 0 | 100 |
| Case 3 | −π/2 | π/2 | 0 | 100 |
| Case 4 | −π/6 | π/6 | 0 | 50 |
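The four configurations in the table above can be compared with a simple Fisher-information calculation. The sketch below assumes a planar range-and-bearing measurement model and places each UAV at `distance * (sin α, −cos α)` from the target, a convention chosen because it reproduces the UAV initial positions listed in the parameter table. The noise standard deviations `SIGMA_R` and `SIGMA_THETA` are assumed values, because the corresponding table entries are missing, so the absolute numbers carry no meaning for the paper's results.

```python
import numpy as np

SIGMA_R = 1.0        # assumed range noise standard deviation [m]
SIGMA_THETA = 0.02   # assumed bearing noise standard deviation [rad]

# Relative angles [rad] and common relative distance [m] from the table of cases.
CASES = {
    "Case 1": ([-np.pi / 6, np.pi / 6, 0.0], 100.0),
    "Case 2": ([-np.pi / 36, np.pi / 36, 0.0], 100.0),
    "Case 3": ([-np.pi / 2, np.pi / 2, 0.0], 100.0),
    "Case 4": ([-np.pi / 6, np.pi / 6, 0.0], 50.0),
}

def fim_range_bearing(alphas, distance):
    """Planar FIM of the target position for range-and-bearing sensors."""
    fim = np.zeros((2, 2))
    for alpha in alphas:
        # Assumed geometry: UAV sits at distance * (sin a, -cos a) from the target.
        los = np.array([-np.sin(alpha), np.cos(alpha)])   # unit vector UAV -> target
        perp = np.array([-los[1], los[0]])                # unit vector across the line of sight
        fim += np.outer(los, los) / SIGMA_R ** 2                      # range information
        fim += np.outer(perp, perp) / (distance * SIGMA_THETA) ** 2   # bearing information
    return fim

dets = {name: float(np.linalg.det(fim_range_bearing(a, r)))
        for name, (a, r) in CASES.items()}
for name, value in dets.items():
    print(f"{name}: det(FIM) = {value:.3f}")
```

With these assumed noise levels, a wider angular spread and a shorter range both raise the determinant in this sketch; a different noise ratio can change that ordering.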


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, C.; Wang, Y.; Zheng, W. Multi-UAVs Tracking Non-Cooperative Target Using Constrained Iterative Linear Quadratic Gaussian. Drones 2024, 8, 326. https://doi.org/10.3390/drones8070326
