Safety and Security-Specific Application of Multiple Drone Sensors at Movement Areas of an Aerodrome

Abstract

1. Introduction

2. Materials and Methods

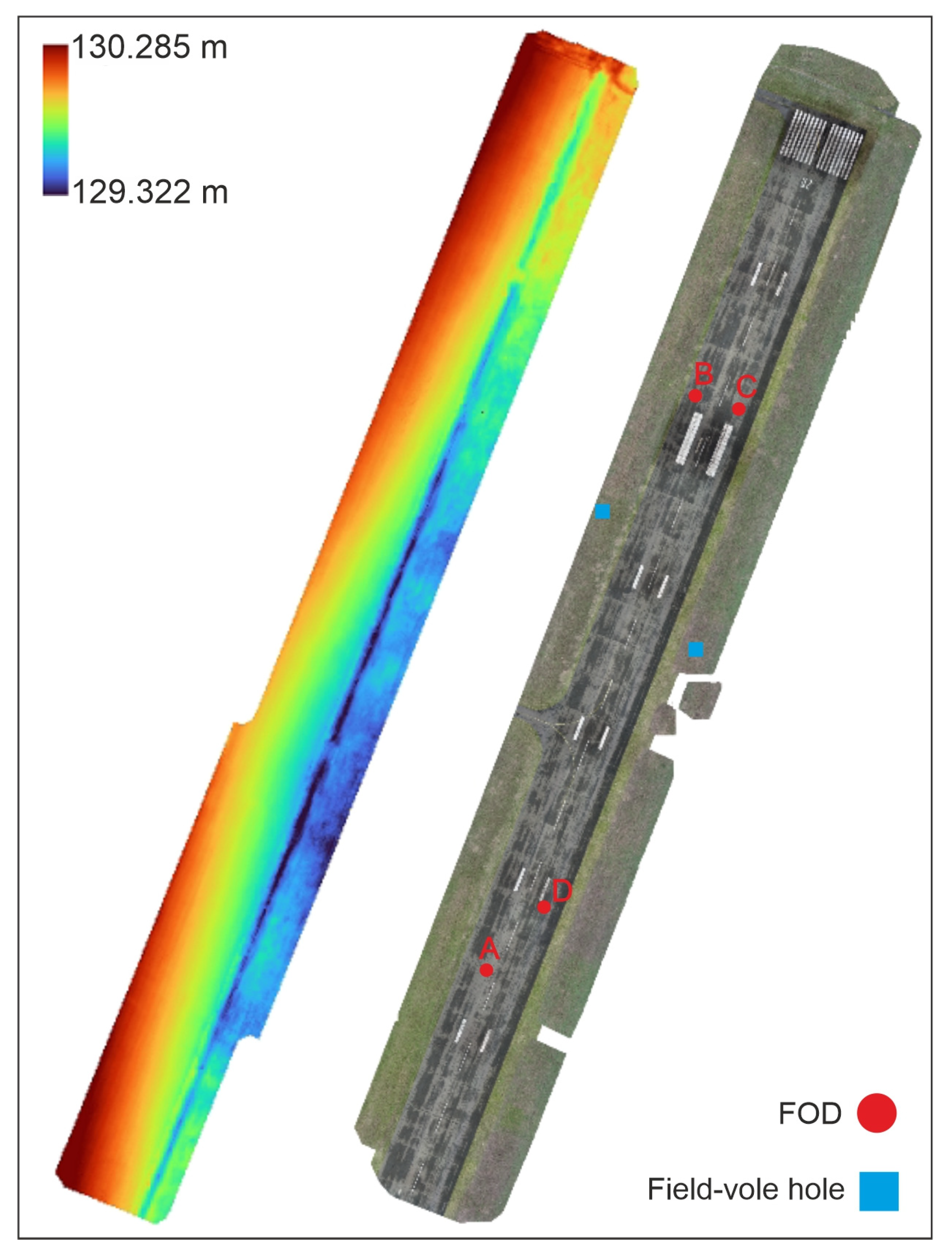

2.1. Study Area

2.2. Equipment Used

2.3. Legal Background

2.4. Data Processing Workflow

3. Results

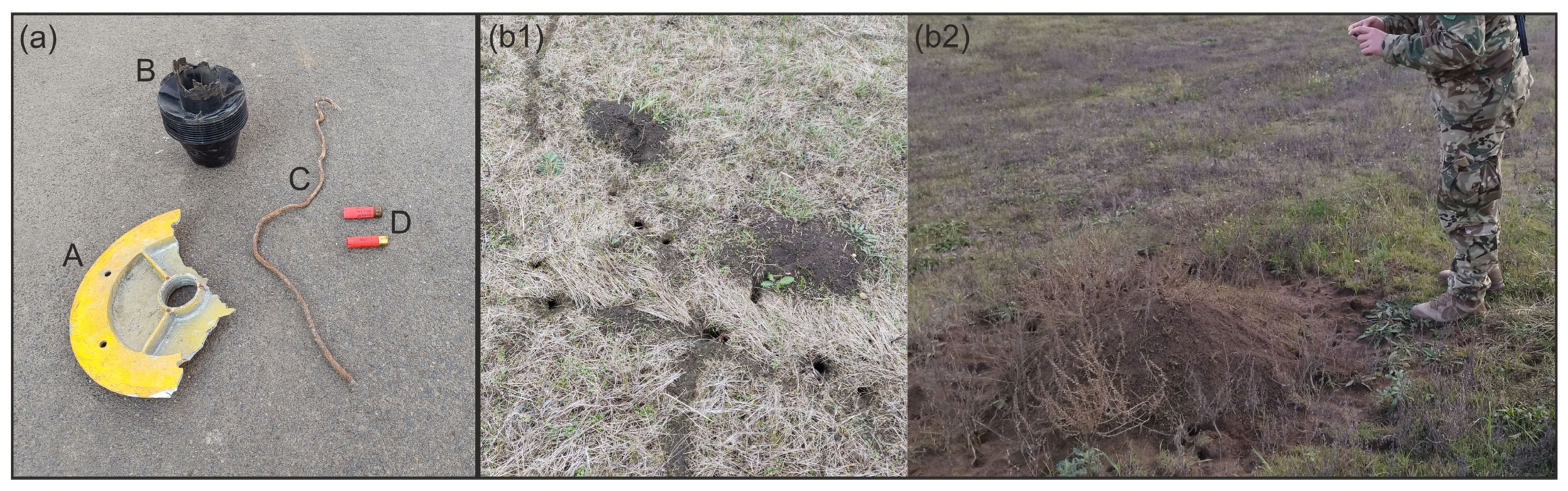

3.1. FOD Recognition Results

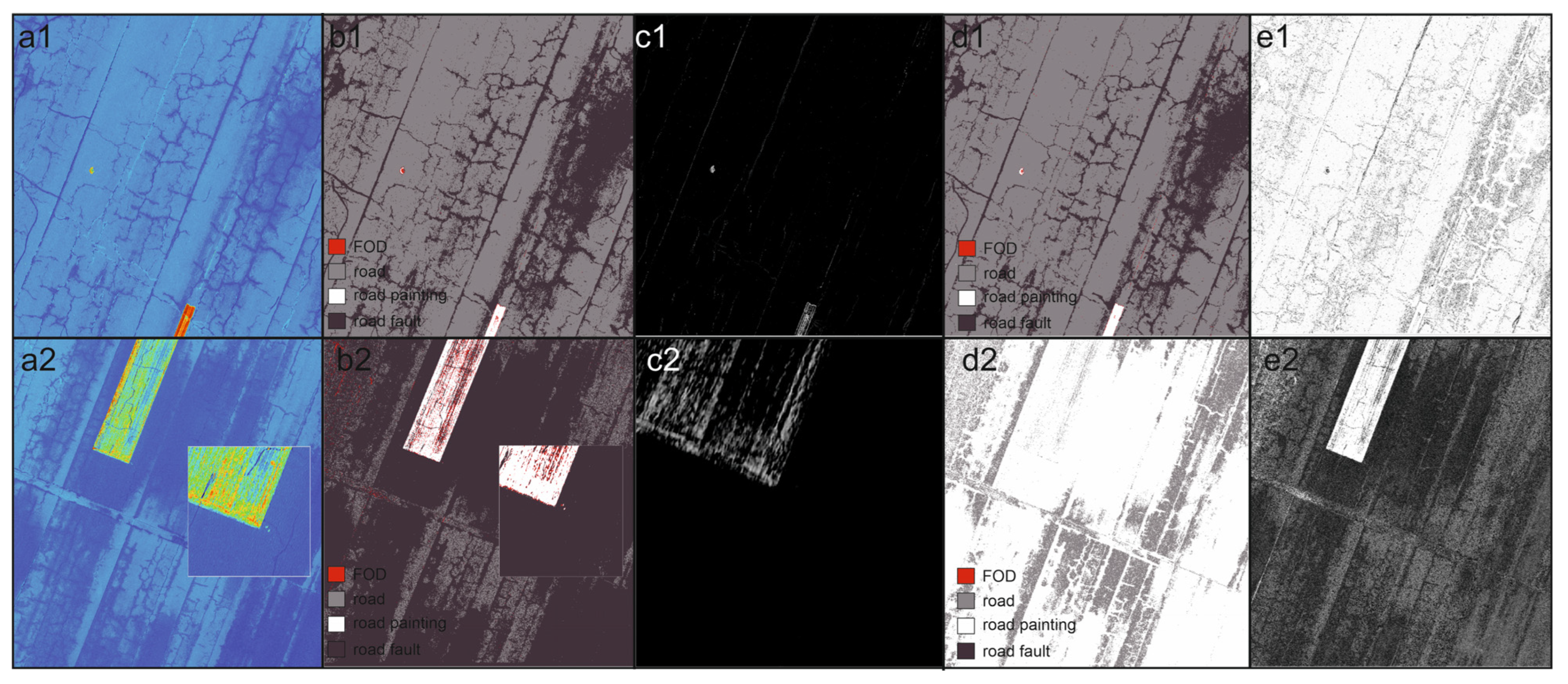

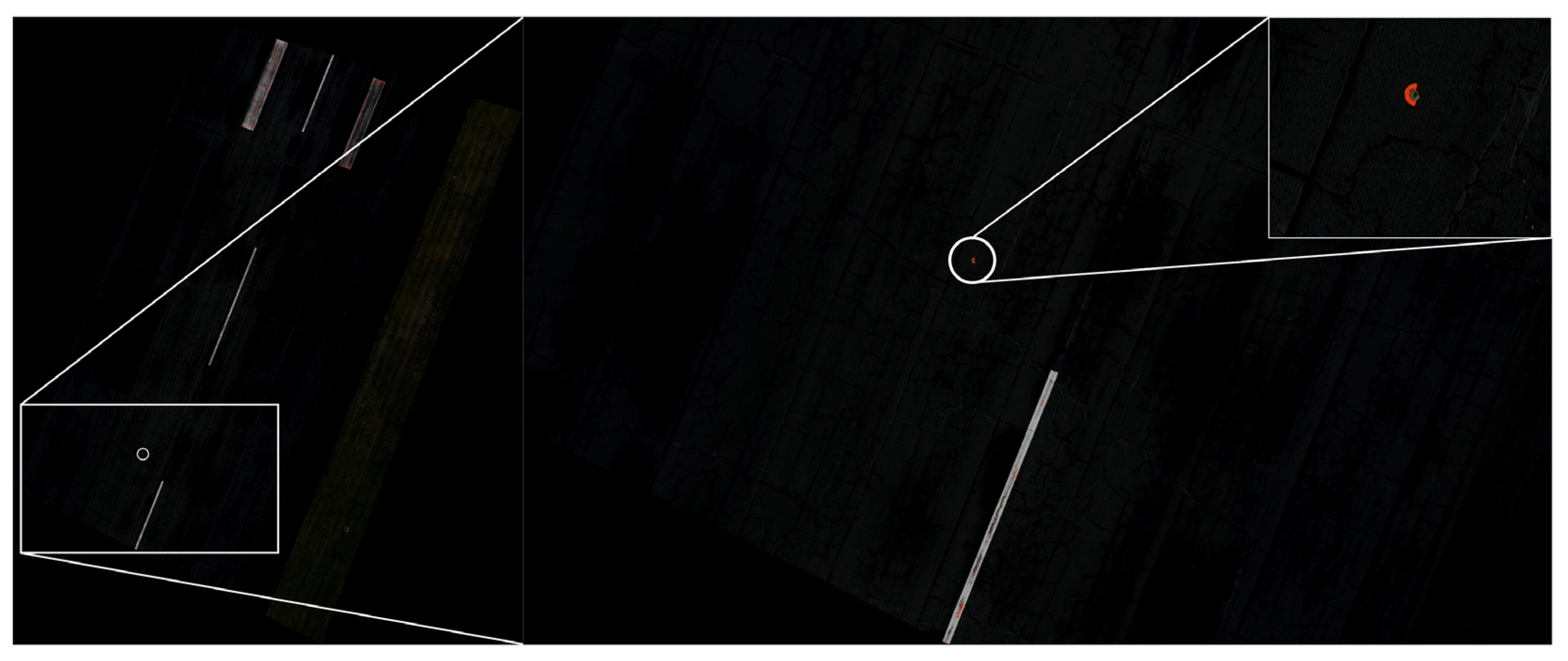

3.1.1. LiDAR Results

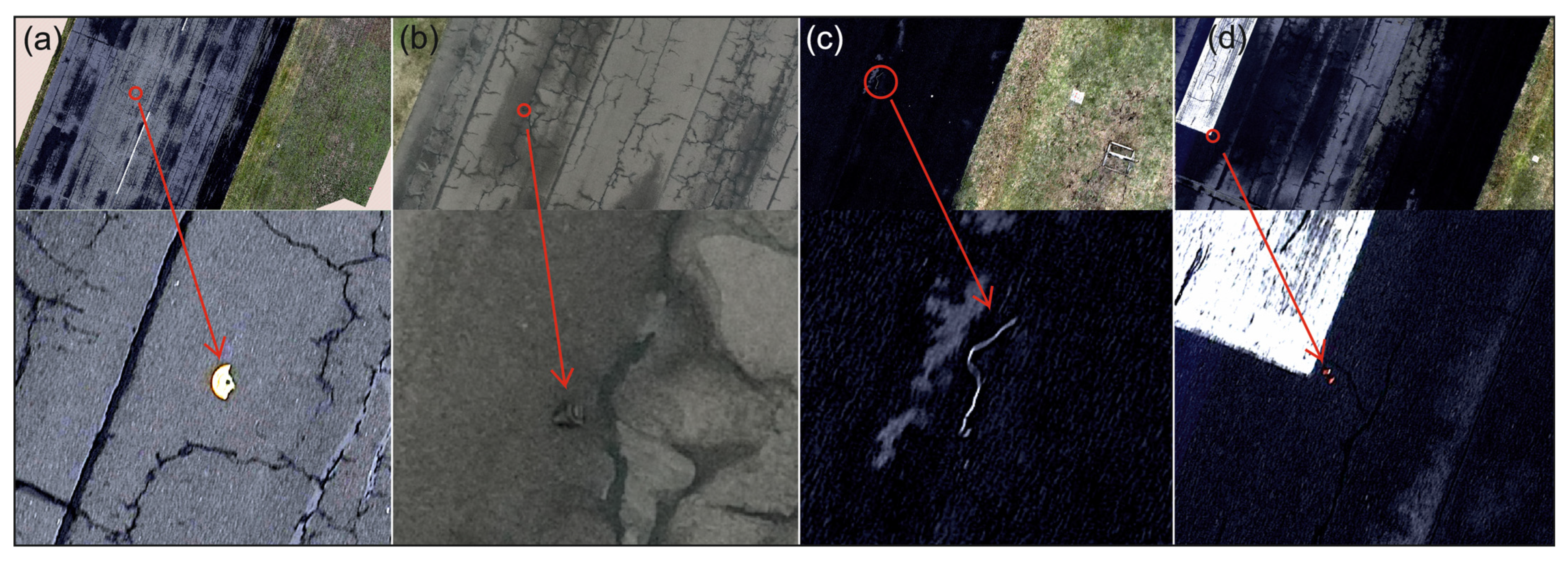

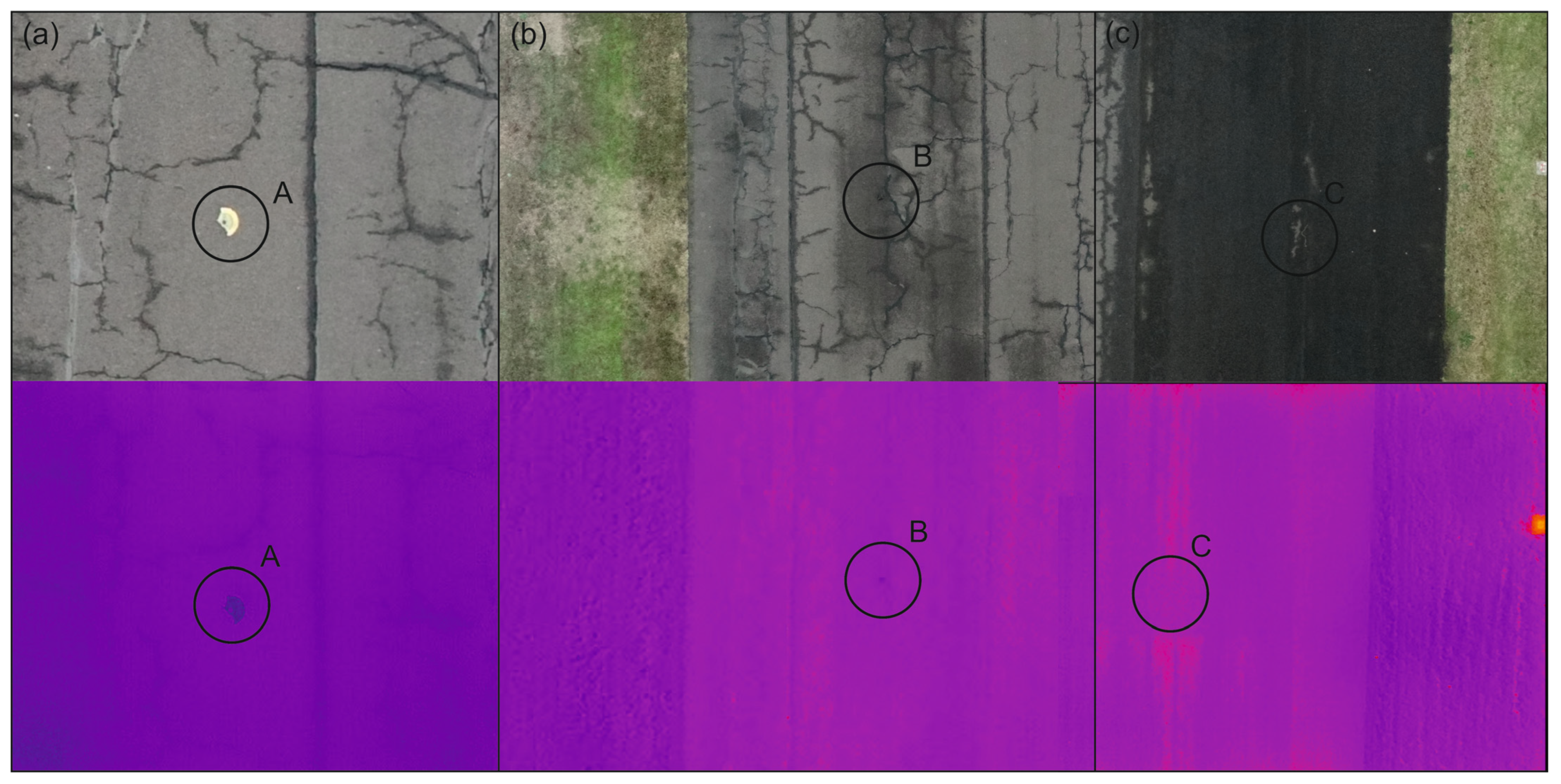

3.1.2. RGB Results

3.1.3. Thermal Results

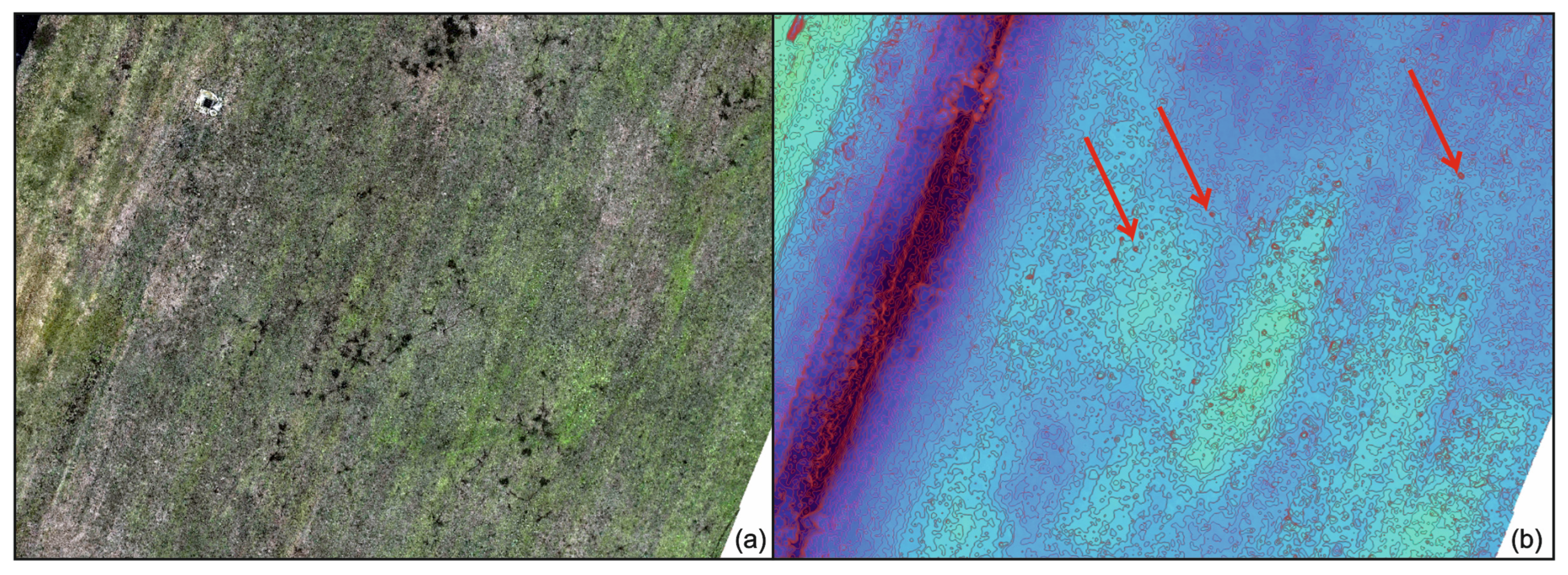

3.2. Field-Vole Hole Recognition Results

3.2.1. RGB Results

3.2.2. Thermal Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| FOD | Length x (mm) | Width y (mm) | Height z (mm) |
|---|---|---|---|
| A | 335 | 240 | 35 |
| B | 160 | 160 | 210 |
| C | 675 | 10 | 10 |
| D | 65 | 20 | 23 |

| Sensor | GSD (cm/pixel) | Altitude (m) | Speed (m/s) | Number of Pictures |
|---|---|---|---|---|
| L1 | 0.83 | 30 | 3.5 | 736 |
| H20T | 4.44 | 50 | 3 | 2227 |
| P1 | 0.63 | 50 | 5.1 | 968 |
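The GSD values in the table above can be checked against the standard nadir frame-camera relation GSD = pixel pitch × altitude / focal length, where pixel pitch = sensor width / image width. The short sketch below reproduces the P1 and H20T rows. The sensor widths, image widths and focal lengths are assumed values taken from typical manufacturer data sheets, not figures reported in this study, and the names `Camera` and `gsd_cm_per_px` are hypothetical.

```python
# Ground sampling distance (GSD) for a nadir-looking frame camera:
#   GSD = pixel_pitch * altitude / focal_length,  pixel_pitch = sensor_width / image_width
# ASSUMPTION: the sensor parameters below are typical data-sheet values,
# not values stated in the study.
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    name: str
    sensor_width_mm: float  # physical sensor width (assumed)
    image_width_px: int     # image width in pixels (assumed)
    focal_length_mm: float  # nominal focal length (assumed)


def gsd_cm_per_px(cam: Camera, altitude_m: float) -> float:
    """Return the ground footprint of one pixel in centimetres."""
    pixel_pitch_mm = cam.sensor_width_mm / cam.image_width_px
    # mm / mm is dimensionless, so this is in metres; x100 converts to cm
    return pixel_pitch_mm * altitude_m / cam.focal_length_mm * 100.0


P1 = Camera("P1 (35 mm lens)", 35.9, 8192, 35.0)
H20T_IR = Camera("H20T thermal", 7.68, 640, 13.5)

if __name__ == "__main__":
    for cam in (P1, H20T_IR):
        print(f"{cam.name}: {gsd_cm_per_px(cam, 50.0):.2f} cm/pixel at 50 m")
```

With these assumed parameters the function returns about 0.63 cm/pixel for the P1 and 4.44 cm/pixel for the H20T thermal camera at 50 m, in line with the table. Because GSD is linear in altitude, halving the flight height halves the pixel footprint.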

| FOD/Sensor | L1 (LiDAR) | P1 (RGB) | H20T (Thermal) |
|---|---|---|---|
| A (side light lamp subframe) | Y | Y | Y |
| B (tractor engine air filter) | N | Y | Y |
| C (wire) | N | Y | N |
| D (shotgun cartridge case) | N | Y | N/A |
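As a rough arithmetic check on the detection table, the sketch below divides the narrower horizontal dimension of each FOD item by each sensor's GSD. It treats every GSD as a nominal pixel size, which is an assumption for the L1, since LiDAR returns are not pixels. Dimensions and GSDs are copied from the tables above; the one-pixel flag and the function names are illustrative assumptions, not part of the study.

```python
# Rough resolvability check: how many pixels does the narrowest horizontal
# dimension of each FOD item span at each sensor's GSD?
# Values are copied from the FOD-size and sensor tables; the one-pixel
# threshold used for flagging is an ASSUMPTION for illustration.

FOD_DIMS_MM = {  # item: (length x, width y, height z) in mm
    "A": (335, 240, 35),
    "B": (160, 160, 210),
    "C": (675, 10, 10),
    "D": (65, 20, 23),
}
GSD_CM = {"L1": 0.83, "P1": 0.63, "H20T": 4.44}  # cm/pixel


def pixels_across(length_mm: float, gsd_cm: float) -> float:
    """Number of pixels spanned by a ground length of length_mm."""
    return length_mm / (gsd_cm * 10.0)  # convert cm/pixel to mm/pixel


def footprint_table() -> dict:
    """Map each FOD item to {sensor: pixels across its narrower side}."""
    table = {}
    for item, (x, y, _z) in FOD_DIMS_MM.items():
        narrowest = min(x, y)  # horizontal extent as seen from nadir
        table[item] = {s: pixels_across(narrowest, g) for s, g in GSD_CM.items()}
    return table


if __name__ == "__main__":
    for item, row in footprint_table().items():
        cells = ", ".join(f"{s}: {n:.2f} px" for s, n in row.items())
        flag = " (sub-pixel for at least one sensor)" if min(row.values()) < 1 else ""
        print(f"{item}: {cells}{flag}")
```

With these numbers the 10 mm wire (C) and the 20 mm cartridge case (D) span only about 0.23 and 0.45 thermal pixels, which is consistent with, though not proof of, the thermal column showing no detection for C and N/A for D. Sub-pixel size alone does not explain the LiDAR column, since B spans many pixels at the L1 GSD.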

| FOD/Method | Terrain Analysis | Color-Based Classification | PCA | Supervised Classification | Edge Detection |
|---|---|---|---|---|---|
| A (side light lamp subframe) | Y | Y | Y | Y | Y |
| B (tractor engine air filter) | Y | N | Y | N | N |
| C (wire) | N | N | Y | N | N |
| D (shotgun cartridge case) | N | N | Y | Y | N |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kovács, B.; Vörös, F.; Vas, T.; Károly, K.; Gajdos, M.; Varga, Z. Safety and Security-Specific Application of Multiple Drone Sensors at Movement Areas of an Aerodrome. Drones 2024, 8, 231. https://doi.org/10.3390/drones8060231
