Multi-Beam Beamforming-Based ML Algorithm to Optimize the Routing of Drone Swarms

Abstract

1. Introduction

1.1. Contribution in Our Previous Work [1] and Building Work from [1]

1.2. Introducing Artificial Neural Networks for Drone Swarm

1.3. Batch Normalization for Efficient ANN
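For reference, the standard batch-normalization transform normalizes each feature over a mini-batch $\mathcal{B}$ of size $m$ and then applies a learnable scale $\gamma$ and shift $\beta$: $\hat{x} = (x - \mu_{\mathcal{B}})/\sqrt{\sigma_{\mathcal{B}}^2 + \epsilon}$, $y = \gamma \hat{x} + \beta$. The Python sketch below implements only this training-mode forward pass, assuming plain lists of real-valued features. The function name `batch_norm_forward`, the default `eps`, and the data layout are illustrative assumptions rather than the configuration used in this paper, and the running statistics used at inference time are omitted.

```python
import math


def batch_norm_forward(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization (illustrative sketch).

    batch: list of m samples, each a list of d real-valued features.
    gamma, beta: per-feature learnable scale and shift, each of length d.
    Returns a new m x d list with every feature normalized over the batch,
    then scaled by gamma and shifted by beta.
    """
    m = len(batch)
    d = len(batch[0])
    out = [[0.0] * d for _ in range(m)]
    for j in range(d):
        column = [sample[j] for sample in batch]
        mean = sum(column) / m
        # Biased (divide-by-m) batch variance, as used during training.
        var = sum((v - mean) ** 2 for v in column) / m
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(m):
            out[i][j] = gamma[j] * (column[i] - mean) * inv_std + beta[j]
    return out
```

For example, `batch_norm_forward([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]], [1.0, 1.0], [0.0, 0.0])` maps both columns to approximately `[-1.22, 0.0, 1.22]`, since each column is an affine rescaling of `[1, 2, 3]`.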

1.4. Organization and Contribution of This Paper

- In Section 2, we present the following:


  - A sparse factorization of the frequency Vandermonde matrices associated with the individual drones, followed by an efficient classical algorithm for routing a collection of AUAS.


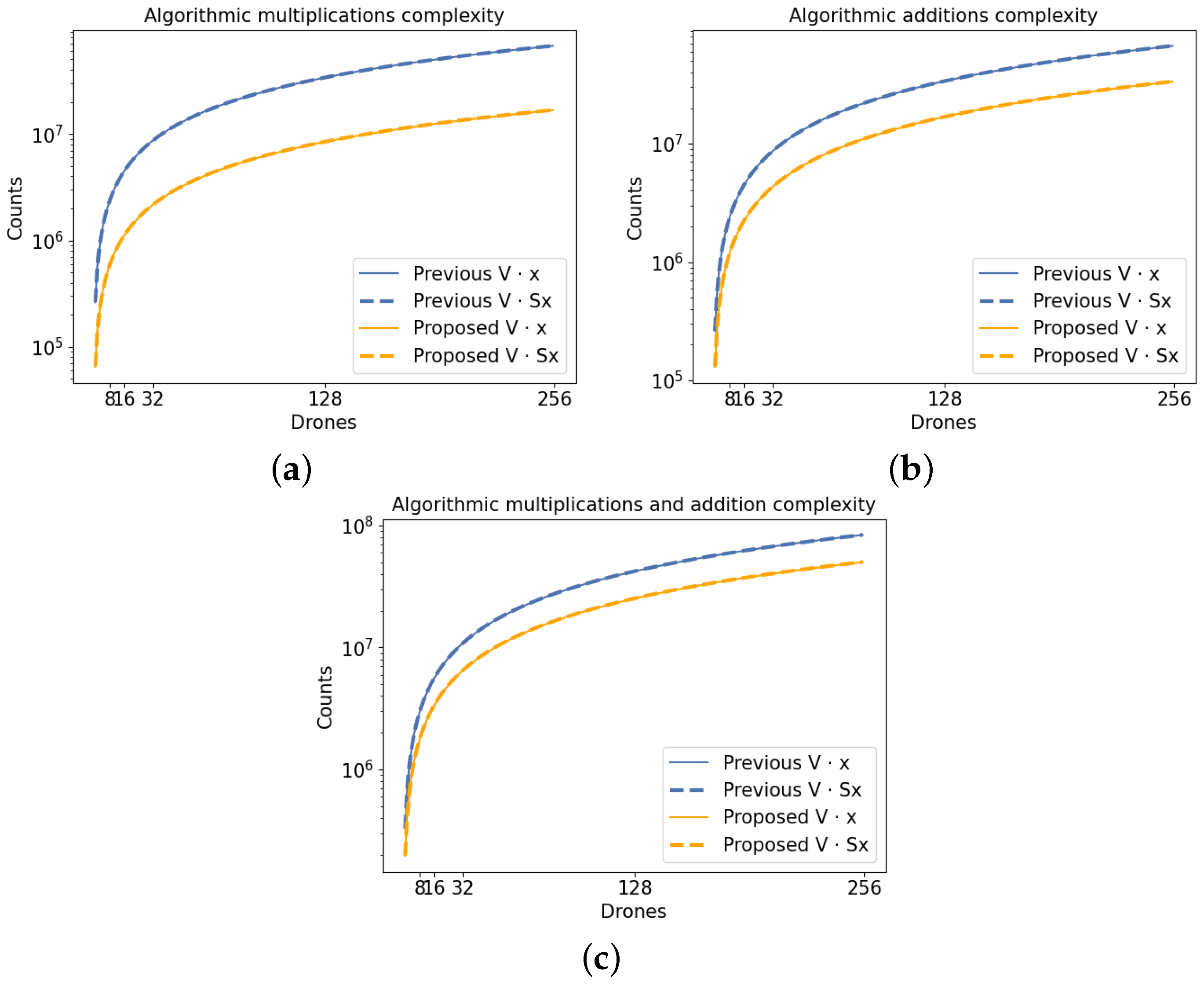

  - The analytical arithmetic complexity of the AUAS algorithm, together with numerical results for its arithmetic and time complexities. We show that the proposed algorithm is more efficient than brute-force computation and several other routing algorithms.

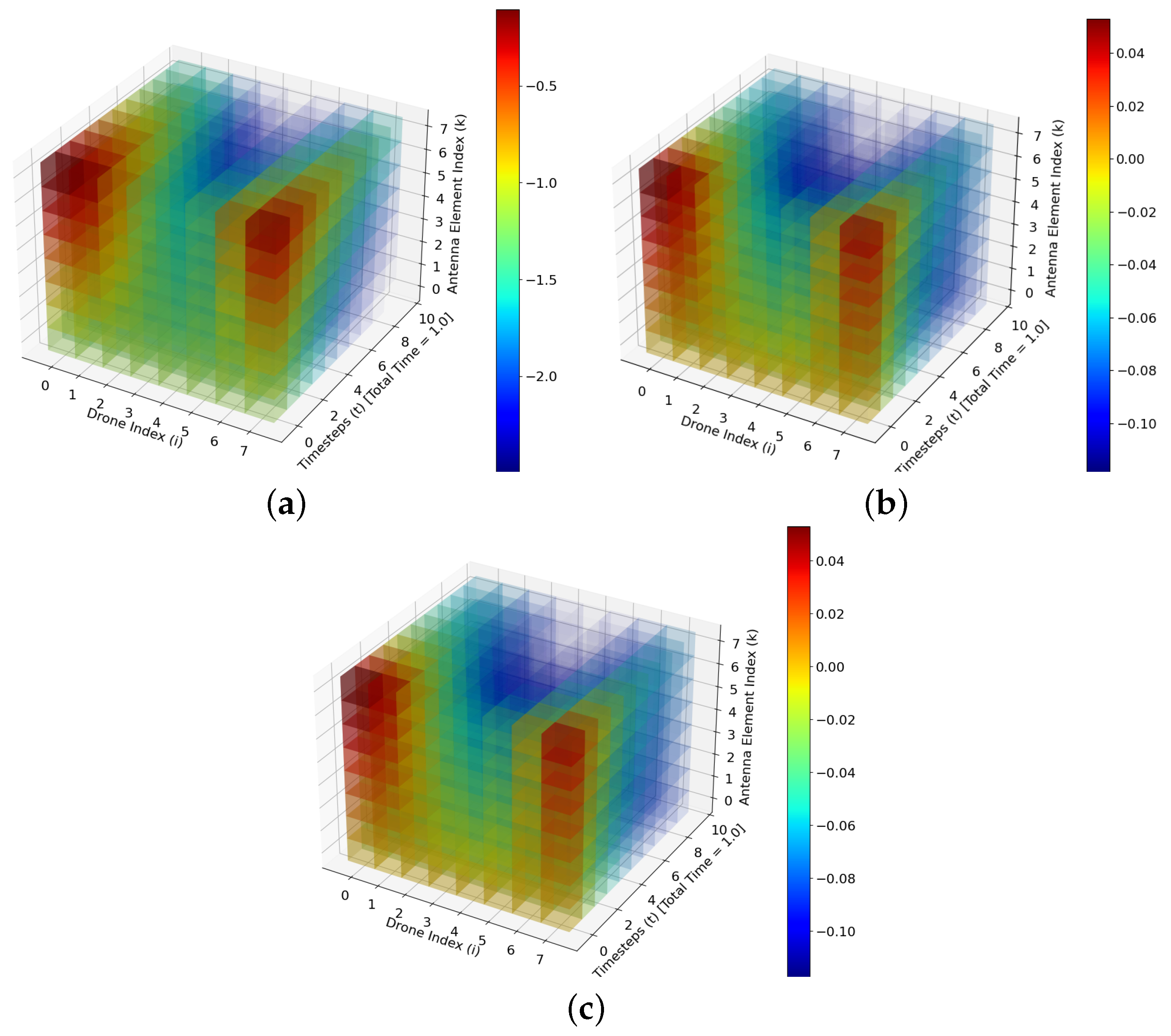

- In Section 3, we present time-stamp simulations based on the proposed algorithm, i.e., the beamformed signals received by the drones in the swarm at successive time stamps. We also compare these time-stamped beamformed signals with the ground-truth signals and with those of our previous work [1] to show the accuracy and compatibility of the algorithms.

- In Section 4, we present a feed-forward ANN (FFANN) built on the classical AUAS algorithm. The FFANN uses … inputs such that there are … units and … trainable parameters when …, to optimize the ML-based AUAS routing algorithm. We show that the ML-based AUAS routing algorithm is optimized in terms of both accuracy and efficiency.

- In Section 6, we conclude this paper.
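The Section 2 items above pair a sparse factorization of frequency Vandermonde matrices with an arithmetic-complexity comparison against brute force. The per-drone matrices are not reproduced in this section, so the sketch below assumes, purely for illustration, the best-known special case: the $N$-point DFT matrix $F_N = [\omega^{jk}]$ with $\omega = e^{-2\pi i/N}$, which is a Vandermonde matrix whose nodes are the $N$-th roots of unity. The classical radix-2 (Cooley–Tukey) factorization $F_N = B_N (I_2 \otimes F_{N/2}) P_N$, where $P_N$ is the even/odd permutation, $B_N = \begin{bmatrix} I & D \\ I & -D \end{bmatrix}$ and $D = \mathrm{diag}(1, \omega, \dots, \omega^{N/2-1})$, replaces the $N^2$ multiplications of a dense matrix-vector product with $(N/2)\log_2 N$. This is a generic textbook factorization, not the RSwarm factorization itself, and the function names are hypothetical.

```python
import cmath


def dft_brute_force(x):
    """Dense product y = F_N x; returns (y, number of complex multiplications)."""
    n = len(x)
    # Vandermonde matrix: row j holds powers of the node w**j, w = exp(-2*pi*i/n).
    f = [[cmath.exp(-2j * cmath.pi * j * k / n) for k in range(n)] for j in range(n)]
    y, mults = [], 0
    for j in range(n):
        acc = 0j
        for k in range(n):
            acc += f[j][k] * x[k]
            mults += 1
        y.append(acc)
    return y, mults


def dft_radix2(x):
    """y = F_N x via F_N = B_N (I_2 kron F_{N/2}) P_N, applied recursively.

    Returns (y, number of twiddle multiplications, counting trivial ones).
    The length of x must be a power of two.
    """
    n = len(x)
    if n == 1:
        return list(x), 0
    if n % 2:
        raise ValueError("length must be a power of two")
    # P_N: split into even- and odd-indexed entries; I_2 kron F_{N/2}: two half-size DFTs.
    even, m_even = dft_radix2(x[0::2])
    odd, m_odd = dft_radix2(x[1::2])
    half = n // 2
    y = [0j] * n
    mults = m_even + m_odd
    # B_N: sparse butterfly combining the halves with the diagonal twiddles D.
    for k in range(half):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        mults += 1
        y[k] = even[k] + t
        y[k + half] = even[k] - t
    return y, mults
```

With an input of length 8, the brute-force product uses 64 multiplications and the radix-2 version 12, and the two results agree to rounding error; for $N = 1024$ the counts are 1,048,576 versus 5,120.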

2. Introducing Sparse Factors for the AUAS Model and a Routing Algorithm

2.1. Mathematical Model for AUAS Routing in [1]

2.2. An Efficient AUAS Routing Algorithm, i.e., RSwarm Algorithm

| Algorithm 1 RSwarm |
|---|
| Input: M and t. Output: . |

2.3. Arithmetic Complexity of the Algorithm

2.4. Numerical Results for the Arithmetic and Time Complexities of the Algorithm

3. Time-Stamp Simulations of the Algorithm
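Without reproducing the paper's setup, the sketch below illustrates what a time-stamped multi-beam simulation computes. It assumes a narrowband plane wave from a drone arriving at a uniform linear array of $N$ elements with half-wavelength spacing, so element $n$ sees phase $\pi n \sin\theta$, and it forms $N$ beams with an $N$-point DFT across the elements; beam $k$ points where $\sin\theta_k = 2k/N$, folded into $[-1, 1)$. The drone bearings per time stamp are hypothetical inputs, all function names are illustrative, and this DFT beamformer stands in for, rather than reproduces, the authors' RSwarm-based beamformer.

```python
import cmath
import math


def array_snapshot(num_elements, theta):
    """Narrowband plane-wave response of a half-wavelength-spaced uniform linear array.

    Element n sees phase pi * n * sin(theta) relative to element 0.
    """
    return [cmath.exp(1j * math.pi * n * math.sin(theta)) for n in range(num_elements)]


def dft_beams(snapshot):
    """Form N beams by applying the N-point DFT across the array elements."""
    n = len(snapshot)
    return [
        sum(snapshot[m] * cmath.exp(-2j * math.pi * m * k / n) for m in range(n))
        for k in range(n)
    ]


def beam_direction(k, n):
    """Steering angle (radians) of DFT beam k: sin(theta_k) = 2k/N, folded into [-1, 1)."""
    s = 2 * k / n
    if s >= 1:
        s -= 2
    return math.asin(s)


def simulate(num_elements, angles_by_time):
    """For each (time stamp, bearing) pair, return (t, strongest beam index, its magnitude)."""
    results = []
    for t, theta in angles_by_time:
        beams = dft_beams(array_snapshot(num_elements, theta))
        k_best = max(range(num_elements), key=lambda k: abs(beams[k]))
        results.append((t, k_best, abs(beams[k_best])))
    return results
```

For instance, with 8 elements and bearings `0.0`, `math.asin(0.25)` and `math.asin(-0.25)` at time stamps 0, 1 and 2, the strongest beams are 0, 1 and 7, each with magnitude 8 (the full array gain), because each bearing lies exactly on a DFT beam direction.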

4. Optimize AUAS Routing Algorithm via ML
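The Section 4 item in the contribution list describes the network in terms of its inputs, units and trainable parameters. The sketch below shows how such counts arise in a fully connected feed-forward network: a layer mapping $n_{l-1}$ inputs to $n_l$ units has $n_{l-1} n_l$ weights and $n_l$ biases, so the total is $\sum_l (n_{l-1} + 1)\, n_l$. The layer sizes, the ReLU hidden activation, the He-style initialization and all function names are hypothetical illustrations, not the architecture used in this paper; training by backpropagation is omitted.

```python
import random


def count_trainable_parameters(layer_sizes):
    """Weights plus biases of a fully connected feed-forward network.

    layer_sizes = [inputs, hidden_1, ..., outputs]; the layer from size a to
    size b contributes a * b weights and b biases.
    """
    return sum(a * b + b for a, b in zip(layer_sizes[:-1], layer_sizes[1:]))


def init_network(layer_sizes, seed=0):
    """Create (weights, biases) per layer; weights ~ N(0, 2 / fan_in), biases = 0."""
    rng = random.Random(seed)
    layers = []
    for a, b in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.gauss(0.0, (2.0 / a) ** 0.5) for _ in range(a)] for _ in range(b)]
        biases = [0.0] * b
        layers.append((weights, biases))
    return layers


def forward(layers, x):
    """Forward pass: ReLU on hidden layers, identity on the output layer."""
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        x = z if i == len(layers) - 1 else [max(0.0, v) for v in z]
    return x
```

For hypothetical layer sizes `[4, 16, 16, 2]`, `count_trainable_parameters` gives 80 + 272 + 34 = 386, which matches the number of weights and biases created by `init_network`.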

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ANN | Artificial Neural Network |
| AODV | Ad Hoc On-Demand Distance Vector |
| AUAS | Autonomous Unmanned Aerial Systems |
| DNN | Deep Neural Network |
| DQN | Deep Q-Learning Network |
| FFANN | Feed-Forward Artificial Neural Network |
| MARL | Multi-Agent Reinforcement Learning |
| MIMO | Multiple-Input Multiple-Output |
| ML | Machine Learning |
| OLSR | Optimized Link State Routing |

References

1. Perera, S.M.; Myers, R.J.; Sullivan, K.; Byassee, K.; Song, H.; Madanayake, A. Integrating Communication and Sensor Arrays to Model and Navigate Autonomous Unmanned Aerial Systems. Electronics 2022, 11, 3023.

2. Agiwal, M.; Roy, A.; Saxena, N. Next Generation 5G Wireless Networks: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2016, 18, 1617–1655.

3. Lin, Z.; Lin, M.; de Cola, T.; Wang, J.B.; Zhu, W.P.; Cheng, J. Supporting IoT With Rate-Splitting Multiple Access in Satellite and Aerial-Integrated Networks. IEEE Internet Things J. 2021, 8, 11123–11134.

4. Lin, Z.; Niu, H.; An, K.; Wang, Y.; Zheng, G.; Chatzinotas, S.; Hu, Y. Refracting RIS-Aided Hybrid Satellite-Terrestrial Relay Networks: Joint Beamforming Design and Optimization. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 3717–3724.

5. Huang, Q.; Lin, M.; Wang, J.B.; Tsiftsis, T.A.; Wang, J. Energy Efficient Beamforming Schemes for Satellite-Aerial-Terrestrial Networks. IEEE Trans. Commun. 2020, 68, 3863–3875.

6. Lin, Z.; Lin, M.; Zhu, W.P.; Wang, J.B.; Cheng, J. Robust Secure Beamforming for Wireless Powered Cognitive Satellite-Terrestrial Networks. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 567–580.

7. An, K.; Liang, T. Hybrid Satellite-Terrestrial Relay Networks with Adaptive Transmission. IEEE Trans. Veh. Technol. 2019, 68, 12448–12452.

8. Jia, M.; Zhang, X.; Gu, X.; Guo, Q.; Li, Y.; Lin, P. Interbeam Interference Constrained Resource Allocation for Shared Spectrum Multibeam Satellite Communication Systems. IEEE Internet Things J. 2019, 6, 6052–6059.

9. Li, B.; Fei, Z.; Chu, Z.; Zhou, F.; Wong, K.K.; Xiao, P. Robust Chance-Constrained Secure Transmission for Cognitive Satellite–Terrestrial Networks. IEEE Trans. Veh. Technol. 2018, 67, 4208–4219.

10. Du, J.; Jiang, C.; Zhang, H.; Wang, X.; Ren, Y.; Debbah, M. Secure Satellite-Terrestrial Transmission Over Incumbent Terrestrial Networks via Cooperative Beamforming. IEEE J. Sel. Areas Commun. 2018, 36, 1367–1382.

11. Perera, S.; Ariyarathna, V.; Udayanga, N.; Madanayake, A.; Wu, G.; Belostotski, L.; Cintra, R.; Rappaport, T. Wideband N-beam Arrays with Low-Complexity Algorithms and Mixed-Signal Integrated Circuits. IEEE J. Sel. Top. Signal Process. 2018, 12, 368–382.

12. Perera, S.M.; Madanayake, A.; Cintra, R. Efficient and Self-Recursive Delay Vandermonde Algorithm for Multi-beam Antenna Arrays. IEEE Open J. Signal Process. 2020, 1, 64–76.

13. Perera, S.M.; Madanayake, A.; Cintra, R. Radix-2 Self-recursive Algorithms for Vandermonde-type Matrices and True-Time-Delay Multi-Beam Antenna Arrays. IEEE Access 2020, 8, 25498–25508.

14. Perera, S.M.; Lingsch, L.; Madanayake, A.; Mandal, S.; Mastronardi, N. Fast DVM Algorithm for Wideband Time-Delay Multi-Beam Beamformers. IEEE Trans. Signal Process. 2021, 70, 5913–5925.

15. Huang, Y.; Wu, Q.; Wang, T.; Zhou, G.; Zhang, R. 3D Beam Tracking for Cellular-Connected UAV. IEEE Wirel. Commun. Lett. 2020, 9, 736–740.

16. Di Caro, G.; Dorigo, M. AntNet: A Mobile Agents Approach to Adaptive Routing; Technical Report; IRIDIA: Carlsbad, CA, USA, 1997; pp. 97–112.

17. Di Caro, G.; Dorigo, M. AntNet: Distributed Stigmergetic Control for Communications Networks. J. Artif. Intell. Res. 1998, 9, 317–365.

18. Mukhutdinov, D.; Filchenkov, A.; Shalyto, A.; Vyatkin, V. Multi-agent deep learning for simultaneous optimization for time and energy in distributed routing system. Future Gener. Comput. Syst. 2019, 94, 587–600.

19. Di Caro, G.A.; Dorigo, M. Ant Colonies for Adaptive Routing in Packet-Switched Communications Networks. In Parallel Problem Solving from Nature; Springer: Berlin/Heidelberg, Germany, 1998.

20. Kassabalidis, I.; El-Sharkawi, M.; Marks, R.; Arabshahi, P.; Gray, A. Swarm Intelligence for Routing in Communication Networks. In Proceedings of the GLOBECOM’01 - IEEE Global Telecommunications Conference (Cat. No. 01CH37270), San Antonio, TX, USA, 25–29 November 2001; Volume 6, pp. 3613–3617.

21. Dhillon, S.S.; Van Mieghem, P. Performance Analysis of the AntNet Algorithm. Comput. Netw. 2007, 51, 2104–2125.

22. Yang, X.; Li, Z.; Ge, X. Deployment Optimization of Multiple UAVs in Multi-UAV Assisted Cellular Networks. In Proceedings of the 2019 11th International Conference on Wireless Communications and Signal Processing (WCSP), Xi’an, China, 23–25 October 2019; pp. 1–7.

23. Wang, J.; Liu, Y.; Amal, A.; Song, H.; Stansbury, R.S.; Yuan, J.; Yang, T. Fountain Code Enabled ADS-B for Aviation Security and Safety Enhancement. In Proceedings of the 2018 IEEE 37th International Performance Computing and Communications Conference (IPCCC), Orlando, FL, USA, 17–19 November 2018; pp. 1–7.

24. Leonov, A.V.; Litvinov, G.A. Applying AODV and OLSR routing protocols to air-to-air scenario in flying ad hoc networks formed by mini-UAVs. In Proceedings of the 2018 Systems of Signals Generating and Processing in the Field of on Board Communications, Moscow, Russia, 14–15 March 2018; pp. 1–10.

25. Messous, M.A.; Arfaoui, A.; Alioua, A.; Senouci, S.M. A Sequential Game Approach for Computation-Offloading in an UAV Network. In Proceedings of the GLOBECOM 2017 - 2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017; pp. 1–7.

26. Li, B.; Fei, Z.; Zhang, Y.; Guizani, M. Secure UAV Communication Networks over 5G. IEEE Wirel. Commun. 2019, 26, 114–120.

27. Zhou, F.; Hu, R.Q.; Li, Z.; Wang, Y. Mobile Edge Computing in Unmanned Aerial Vehicle Networks. IEEE Wirel. Commun. 2020, 27, 140–146.

28. Li, B.; Fei, Z.; Zhang, Y. UAV Communications for 5G and Beyond: Recent Advances and Future Trends. IEEE Internet Things J. 2019, 6, 2241–2263.

29. Secinti, G.; Darian, P.B.; Canberk, B.; Chowdhury, K.R. SDNs in the Sky: Robust End-to-End Connectivity for Aerial Vehicular Networks. IEEE Commun. Mag. 2018, 56, 16–21.

30. Sun, X.; Yang, W.; Cai, Y. Secure Communication in NOMA-Assisted Millimeter-Wave SWIPT UAV Networks. IEEE Internet Things J. 2020, 7, 1884–1897.

31. Cui, J.; Liu, Y.; Nallanathan, A. The Application of Multi-Agent Reinforcement Learning in UAV Networks. In Proceedings of the 2019 IEEE International Conference on Communications Workshops (ICC Workshops), Shanghai, China, 20–24 May 2019; pp. 1–6.

32. Zheng, K.; Sun, Y.; Lin, Z.; Tang, Y. UAV-assisted Online Video Downloading in Vehicular Networks: A Reinforcement Learning Approach. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25–28 May 2020; pp. 1–5.

33. Chen, M.; Saad, W.; Yin, C. Liquid State Machine Learning for Resource and Cache Management in LTE-U Unmanned Aerial Vehicle (UAV) Networks. IEEE Trans. Wirel. Commun. 2019, 18, 1504–1517.

34. Asif, N.A.; Sarker, Y.; Chakrabortty, R.K.; Ryan, M.J.; Ahamed, M.H.; Saha, D.K.; Badal, F.R.; Das, S.K.; Ali, M.F.; Moyeen, S.I.; et al. Graph Neural Network: A Comprehensive Review on Non-Euclidean Space. IEEE Access 2021, 9, 60588–60606.

35. Sazli, M.H. A Brief Review of Feed-Forward Neural Networks. In Communications Faculty of Sciences University of Ankara Series A2-A3; Ankara University, Faculty of Engineering, Department of Electronics Engineering: Ankara, Turkey, 6 February 2006; Volume 50, pp. 11–17.

36. Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366.

37. Wang, S.C. Artificial Neural Network. In Interdisciplinary Computing in Java Programming; Springer: Boston, MA, USA, 2003; pp. 81–100.

38. Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991, 4, 251–257.

39. Esmali Nojehdeh, M.; Aksoy, L.; Altun, M. Efficient Hardware Implementation of Artificial Neural Networks Using Approximate Multiply-Accumulate Blocks. In Proceedings of the 2020 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), Limassol, Cyprus, 6–8 July 2020; pp. 96–101.

40. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.J.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

41. Tan, C.; Zhu, Y.; Guo, C. Building Verified Neural Networks with Specifications for Systems. In Proceedings of the 12th ACM SIGOPS Asia-Pacific Workshop on Systems; Association for Computing Machinery: New York, NY, USA, 2021; pp. 42–47.

42. Pennington, J.; Worah, P. Nonlinear random matrix theory for deep learning. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

43. Baskerville, N.P.; Granziol, D.; Keating, J.P. Applicability of Random Matrix Theory in Deep Learning. arXiv 2021, arXiv:2102.06740.

44. Ghorbani, B.; Krishnan, S.; Xiao, Y. An Investigation into Neural Net Optimization via Hessian Eigenvalue Density. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 2232–2241.

45. Dauphin, Y.N.; Pascanu, R.; Gulcehre, C.; Cho, K.; Ganguli, S.; Bengio, Y. Identifying and attacking the saddle point problem in high-dimensional non-convex optimization. In Proceedings of the Advances in Neural Information Processing Systems; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K., Eds.; Curran Associates, Inc.: Montreal, QC, Canada, 2014; Volume 27.

46. Verleysen, M.; Francois, D.; Simon, G.; Wertz, V. On the effects of dimensionality on data analysis with neural networks. In Proceedings of the Artificial Neural Nets Problem Solving Methods; Mira, J., Álvarez, J.R., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 105–112.

47. Qin, L.; Gong, Y.; Tang, T.; Wang, Y.; Jin, J. Training Deep Nets with Progressive Batch Normalization on Multi-GPUs. Int. J. Parallel Program. 2018, 47, 373–387.

48. Joshi, V.; Le Gallo, M.; Haefeli, S.; Boybat, I.; Nandakumar, S.R.; Piveteau, C.; Dazzi, M.; Rajendran, B.; Sebastian, A.; Eleftheriou, E. Accurate deep neural network inference using computational phase-change memory. Nat. Commun. 2020, 11, 2473.

49. van de Ven, G.M.; Tuytelaars, T.; Tolias, A.S. Three types of incremental learning. Nat. Mach. Intell. 2022, 4, 1185–1197.

50. Liu, Y.; Wang, J.; Li, J.; Niu, S.; Song, H. Class-Incremental Learning for Wireless Device Identification in IoT. IEEE Internet Things J. 2021, 8, 17227–17235.

51. Liu, Y.; Wang, J.; Li, J.; Niu, S.; Wu, L.; Song, H. Zero-bias Deep Learning Enabled Quickest Abnormal Event Detection in IoT. IEEE Internet Things J. 2021, 9, 11385–11395.

52. Zhou, M.; Wang, Q.; Shu, J.; Zhao, Q.; Meng, D. Diagnosing Batch Normalization in Class Incremental Learning. arXiv 2022, arXiv:2202.08025.

53. Liu, Y.; Wang, J.; Li, J.; Song, H.; Yang, T.; Niu, S.; Ming, Z. Zero-Bias Deep Learning for Accurate Identification of Internet-of-Things (IoT) Devices. IEEE Internet Things J. 2021, 8, 2627–2634.

54. Garbin, C.; Zhu, X.; Marques, O. Dropout vs. batch normalization: An empirical study of their impact to deep learning. Multimed. Tools Appl. 2020, 79, 12777–12815.

55. Dong, Y.; Ni, R.; Li, J.; Chen, Y.; Su, H.; Zhu, J. Stochastic Quantization for Learning Accurate Low-Bit Deep Neural Networks. Int. J. Comput. Vis. 2019, 127, 1629–1642.

56. Ramachandran, P.; Zoph, B.; Le, Q.V. Searching for Activation Functions. arXiv 2017, arXiv:1710.05941.

57. Arafat, M.Y.; Moh, S. Routing Protocols for Unmanned Aerial Vehicle Networks: A Survey. IEEE Access 2019, 7, 99694–99720.

58. Tahir, A.; Böling, J.M.; Haghbayan, M.H.; Toivonen, H.T.; Plosila, J. Swarms of Unmanned Aerial Vehicles - A Survey. J. Ind. Inf. Integr. 2019, 16, 100106.

59. Wang, J.; Liu, Y.; Niu, S.; Song, H. 5G-enabled Optimal Bi-Throughput for UAS Swarm Networking. In Proceedings of the 2020 International Conference on Space-Air-Ground Computing (SAGC), Beijing, China, 4–6 December 2020; pp. 43–48.

60. Wang, J.; Liu, Y.; Niu, S.; Song, H. Extensive Throughput Enhancement For 5G Enabled UAV Swarm Networking. IEEE J. Miniaturization Air Space Syst. 2021, 2, 199–208.

61. Yang, S.L. On the LU factorization of the Vandermonde matrix. Discret. Appl. Math. 2005, 146, 102–105.

62. Sohail, M.S.; Saeed, M.O.B.; Rizvi, S.Z.; Shoaib, M.; Sheikh, A.U.H. Low-Complexity Particle Swarm Optimization for Time-Critical Applications. arXiv 2014, arXiv:1401.0546.

63. Wisittipanich, W.; Phoungthong, K.; Srisuwannapa, C.; Baisukhan, A.; Wisittipanit, N. Performance Comparison between Particle Swarm Optimization and Differential Evolution Algorithms for Postman Delivery Routing Problem. Appl. Sci. 2021, 11, 2703.

64. Chen, W.; Zhu, J.; Liu, J.; Guo, H. A fast coordination approach for large-scale drone swarm. J. Netw. Comput. Appl. 2024, 221, 103769.

65. Javed, F.; Khan, H.Z.; Anjum, R. Communication capacity maximization in drone swarms. Drone Syst. Appl. 2023, 11, 1–12.

66. Chen, Y.; Yang, D.; Yu, J. Multi-UAV Task Assignment With Parameter and Time-Sensitive Uncertainties Using Modified Two-Part Wolf Pack Search Algorithm. IEEE Trans. Aerosp. Electron. Syst. 2018, 54, 2853–2872.

67. Huang, C. ReLU Networks Are Universal Approximators via Piecewise Linear or Constant Functions. Neural Comput. 2020, 32, 2249–2278.

68. Szandała, T. Review and Comparison of Commonly Used Activation Functions for Deep Neural Networks. In Bio-Inspired Neurocomputing; Bhoi, A.K., Mallick, P.K., Liu, C.M., Balas, V.E., Eds.; Springer: Singapore, 2021; pp. 203–224.

69. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Myers, R.J.; Perera, S.M.; McLewee, G.; Huang, D.; Song, H. Multi-Beam Beamforming-Based ML Algorithm to Optimize the Routing of Drone Swarms. Drones 2024, 8, 57. https://doi.org/10.3390/drones8020057
