Mobility-Aware Task Offloading and Resource Allocation in UAV-Assisted Vehicular Edge Computing Networks

Abstract

1. Introduction

- Task Offloading Framework: The article proposes a novel task offloading and resource allocation framework for UAV-assisted VEC systems. This framework considers the mobility of vehicles, heterogeneous task requirements, and the limited coverage of UAVs. It predicts vehicle positions from their motion information in order to optimize task offloading and resource allocation.

- MAVTO Algorithm: The article introduces the Mobility Aware Vehicular Task Offloading (MAVTO) algorithm, which dynamically adjusts task offloading decisions based on vehicle speed, task deadlines, and computational resources available in the UAV servers. MAVTO uses container-based virtualization to efficiently manage resources and improve task execution performance.

- Offloading Modes: The framework includes multiple offloading modes (direct, predictive, and hybrid), which provide flexibility in offloading tasks based on vehicle movement. The hybrid mode maximizes task success rates by selecting the optimal UAV nodes that vehicles will pass through within task deadlines.

2. Related Work

3. System Model and Problem Formulation

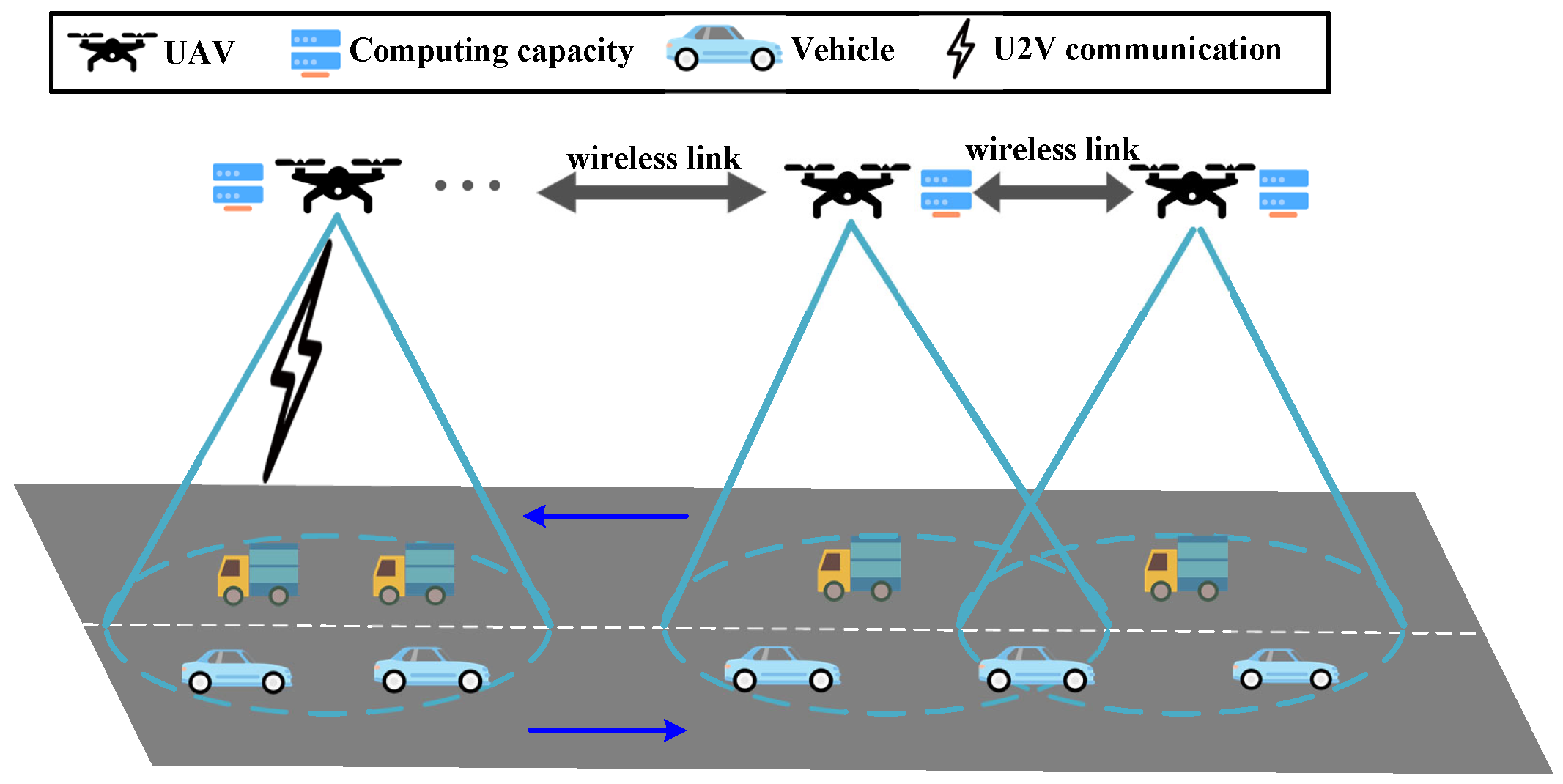

3.1. System Model

3.2. Computing Model

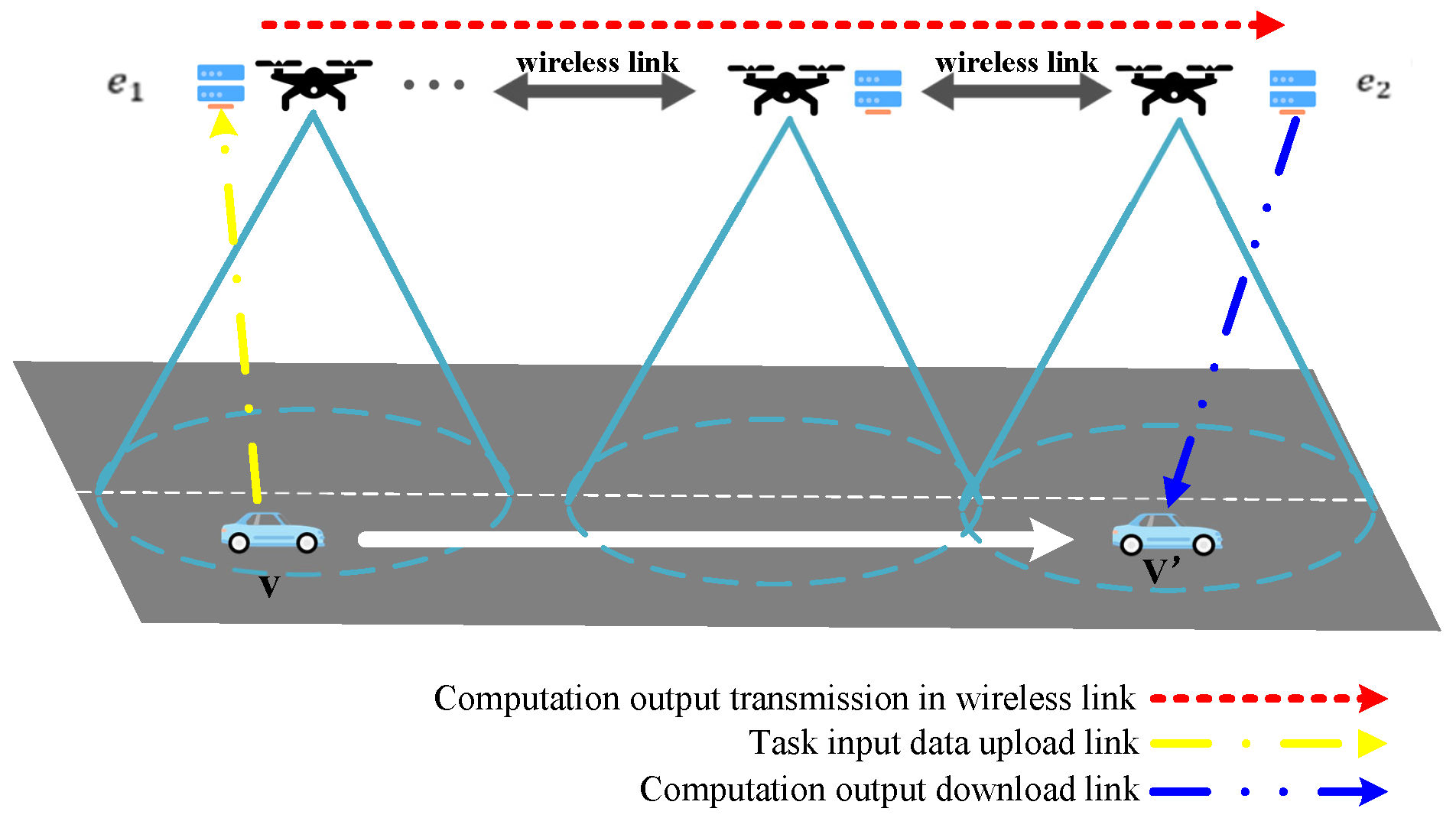

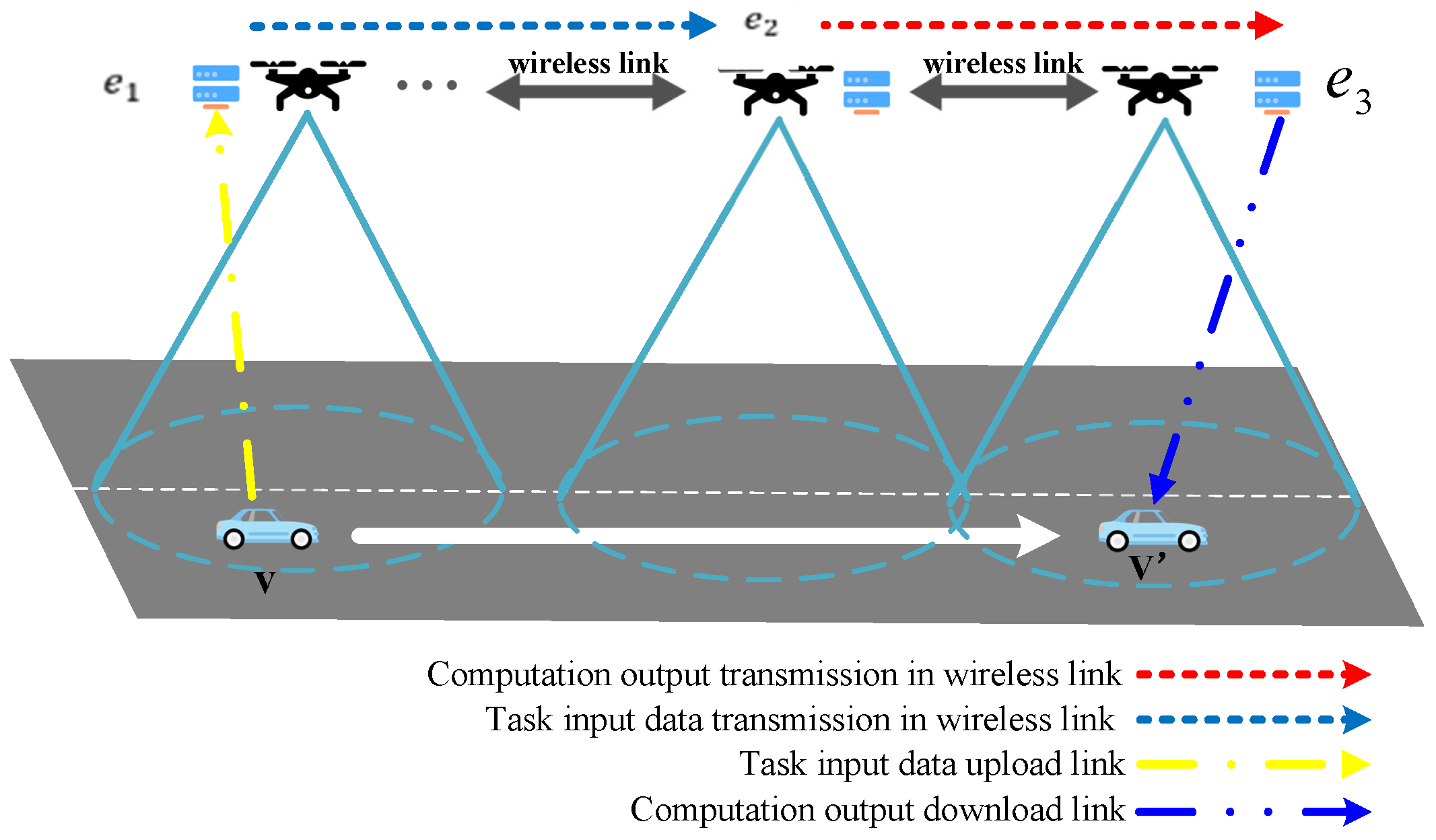

- Task uploading: Vehicles upload tasks to the currently accessed UAV server via U2V upload links.

- Task transmission: When the UAV node in use has insufficient computing power, or many tasks are queued and its load is heavy, the task can instead be offloaded to a UAV node that is not currently busy. To shorten execution or waiting time and relieve the load on the current UAV node, the task is transmitted over the wireless links between UAVs to the target UAV node for processing.

- Task execution: Once the task is uploaded to the destination UAV node, it is executed on the UAV server to retrieve the computational result. The waiting time for tasks should be taken into account.

- Result downloading: When the vehicle is within the UAV coverage range and the task has been calculated, the UAV server transmits the calculation result back to the vehicle through the download links.
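The four stages above can be sketched as a simple additive delay model. This is a minimal illustration rather than the paper's formulation: the field names, the per-hop relay cost, and the assumption that the result is downloaded over a single U2V link are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Task:
    input_bits: float    # input data volume
    output_bits: float   # output (result) data volume
    cycles: float        # computational workload, in CPU cycles
    deadline: float      # seconds allowed for the whole round trip


def completion_time(task, u2v_rate, u2u_rate, cpu_speed, hops=0, wait=0.0):
    """Sum the four stages. Rates are in bits/s, cpu_speed in cycles/s.

    hops: number of U2U relays to the target UAV (0 = execute on the access UAV).
    wait: queueing time on the target server before execution starts.
    """
    upload = task.input_bits / u2v_rate
    # Assumed: each U2U hop forwards the full input once.
    transfer = hops * task.input_bits / u2u_rate
    execute = wait + task.cycles / cpu_speed
    download = task.output_bits / u2v_rate
    return upload + transfer + execute + download


def meets_deadline(task, **link_and_server):
    return completion_time(task, **link_and_server) <= task.deadline
```

A task counts as successful in this sketch only when the summed delay stays within its deadline, which is the quantity the offloading decisions later try to protect.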

3.3. Offloading Model

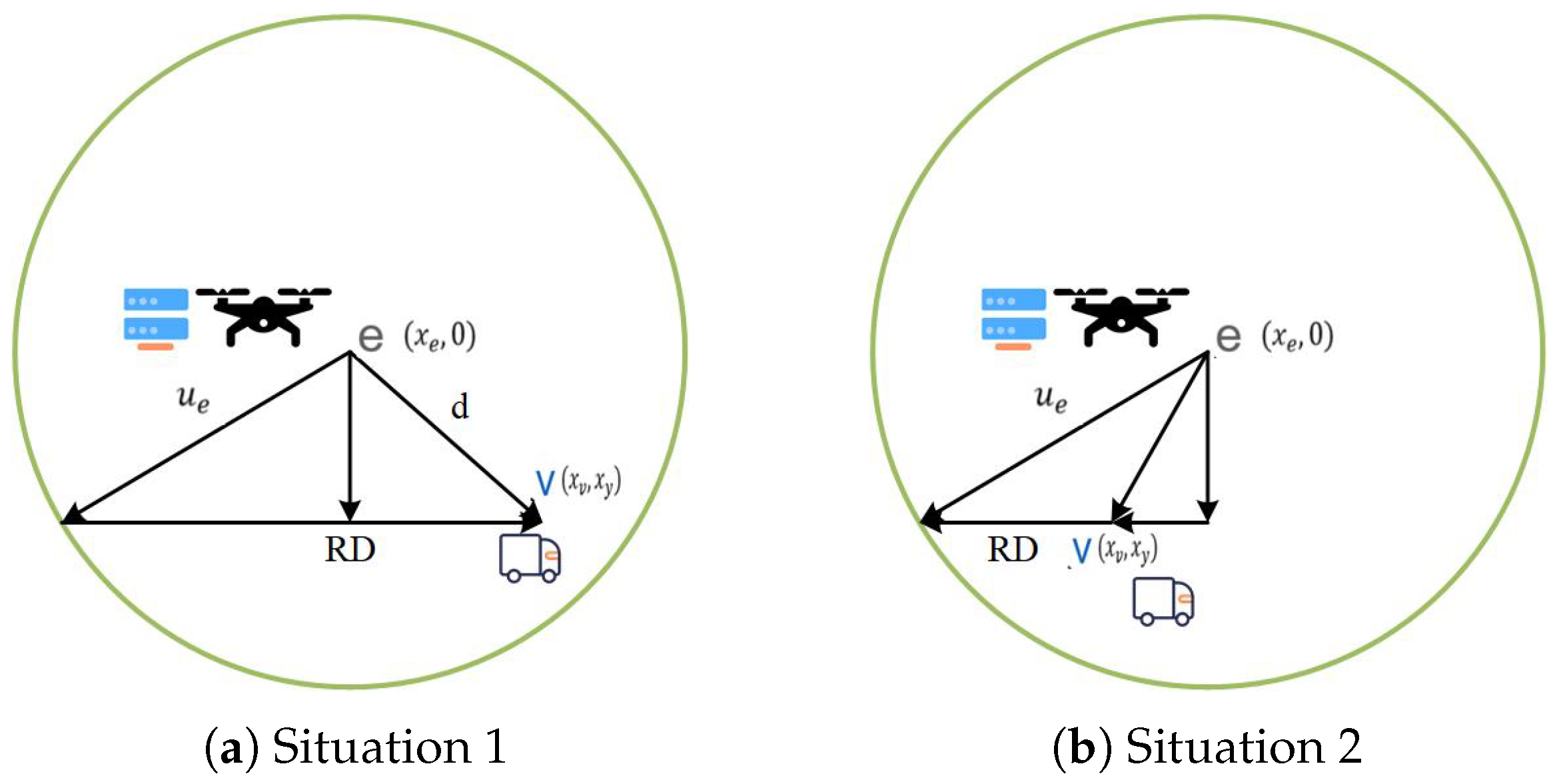

3.3.1. Direct Offloading Model

3.3.2. Prediction Offloading Model

3.3.3. Mixed Offloading Model

3.4. Problem Formulation

4. Mobility Aware Vehicular Task Offloading Algorithm

4.1. Algorithm Framework

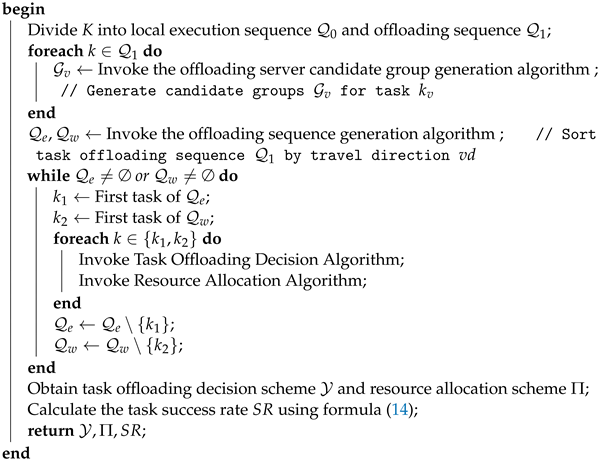

| Algorithm 1: MAVTO (Mobility Aware Vehicular Task Offloading) Algorithm Framework |
|---|
| Input: the set of UAV servers; the set of vehicles; the set of tasks K |
| Output: task offloading decision; resource allocation; success rate of task completion |

4.2. Generate the Candidate Group of Offloading Servers

4.3. Task Offloading Sequence Generation

- OTSS1: MNCF (Minimum Number of Candidates First). The MNCF rule considers the size of each task's candidate group of offloading servers, as described in Section 4.2. Tasks with a narrow selection range should be assigned offloading nodes first. Tasks with a wide selection range should be assigned later, so that their assignment can be adjusted to the decisions already made. Otherwise, tasks with a smaller selection range would be left with little flexibility.

- OTSS2: MATF (Minimum Available Time First). The MATF rule considers not only the deadline constraint of tasks but also the speed and position of the vehicle, taken from its mobility information. The less time a vehicle has left within the current coverage range, the sooner it leaves the range of the current UAV. The available time for a vehicle v to handle task k is defined in terms of the remaining travel time and the remaining travel distance of the vehicle within the coverage area of the current UAV node. The calculation is shown in the example in Figure 5.

- OTSS3: Maximum Local Execution Time First. Tasks that take a long time to execute locally are more likely to miss their deadlines, resulting in a poor experience. Therefore, priority is given to offloading tasks that take a long time to execute locally.

- OTSS4: MWSDC (Minimum Weighted Sum of Data and Computation First). The MWSDC rule takes into account the input data volume and computational workload of a task. For a task k, the weighted sum SUM combines its computational workload and its data volume, with two weighting coefficients balancing the two terms. A smaller value indicates a lower resource demand. Therefore, tasks with smaller SUM values have higher priority and are preferred for offloading decisions.
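The four ordering rules can be read as sort keys over the task set. The sketch below is illustrative only: the available time is assumed to be the smaller of the deadline and the time left in coverage, the weighted sum is assumed to be linear, and the field names, default weights, and the `local_speed` attribute are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    input_bits: float    # input data volume
    cycles: float        # computational workload
    deadline: float      # seconds
    candidates: int      # size of the candidate server group (Section 4.2)
    local_speed: float   # CPU speed of the generating vehicle, cycles/s


def remaining_distance(px, py, heading, cx, cy, radius):
    """Distance from (px, py) along `heading` (radians) to the edge of the
    circular coverage zone centred at (cx, cy). Assumes the vehicle is inside."""
    dx, dy = px - cx, py - cy
    ux, uy = math.cos(heading), math.sin(heading)
    b = dx * ux + dy * uy
    disc = b * b - (dx * dx + dy * dy - radius * radius)
    return -b + math.sqrt(disc)


def available_time(task, dist_remaining, speed):
    # Assumed form: the tighter of the deadline and the time left in coverage.
    return min(task.deadline, dist_remaining / speed)


def weighted_sum(task, w_comp=0.5, w_data=0.5):
    # Assumed linear form of the MWSDC score; the weights are illustrative.
    return w_comp * task.cycles + w_data * task.input_bits


def order_tasks(tasks, rule, avail=None):
    """Return tasks in offloading order; `avail` maps task name to available time."""
    keys = {
        "OTSS1": lambda t: t.candidates,                # fewest candidates first
        "OTSS2": lambda t: avail[t.name],               # least available time first
        "OTSS3": lambda t: -t.cycles / t.local_speed,   # longest local run first
        "OTSS4": weighted_sum,                          # smallest weighted sum first
    }
    return sorted(tasks, key=keys[rule])
```

Keeping each rule as a key function makes it easy to swap rules during calibration without touching the rest of the pipeline.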

4.4. Offloading Decision

- OD1: MECTF (Minimum Estimated Completion Time First). When selecting offloading nodes for tasks, the uploading time, transmission time, waiting time, computation time, and result return time are all taken into account. The estimated completion time of the task at each UAV node is assessed, and the node that can complete the task earliest is chosen as the destination. The estimated completion time is calculated using Equation (32). It combines the transmission time from the various nodes, the estimated earliest availability time of the server, the estimated computation time, and the estimated result return time. Transferring the task to a UAV node that the vehicle is not connected to incurs an additional transmission cost compared with uploading it to the directly connected UAV node. The estimated computation time is calculated using the average processing speed of the UAV server. The estimated earliest availability time of the server is calculated using the average startup time of containers and the average wait time of tasks on the server. The task result return time is determined by the size of the computation result, the downstream transmission rate, and the location at which the vehicle arrives. Based on the perceived vehicle movement, the predicted arrival location of the vehicle is used to estimate the number of hops that the computation result must traverse between UAVs.

- OD2: MCCF (Maximum Computing Capacity First). This rule considers the computational capabilities of UAV nodes: tasks executed on UAV servers with higher computational capabilities have shorter execution times, which maximizes the utilization of node processing capabilities. However, this approach ignores the task transmission cost and the node's workload, which may result in an uneven distribution of tasks among UAV nodes. As a consequence, servers with higher processing capabilities may become overloaded while servers with lower processing capabilities remain idle, leading to under-utilization of resources.

- OD3: LBF (Load Balance First). This rule matches the workload of each UAV node to its processing capacity, balancing processing capability against the number of pending tasks to reach equilibrium among the nodes. During node selection, the UAV node with the minimum current load pressure is chosen as the offloading node. The load factor (LF) is defined as the ratio of the current workload of a UAV node, taken over its set of pending tasks, to its maximum processing capacity. LF serves as a comprehensive measure of the processing capability of a UAV node and the number of tasks it is processing. The UAV node with the smallest LF value is selected as the offloading node for task computation.
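The three decision rules differ only in the score used to pick a node, which the following sketch makes explicit. It is a hedged illustration: Equation (32) is not reproduced in this section, so the completion-time estimate below (start when both the task and the server are ready, then compute, then return the result hop by hop) is an assumption, as are all field and parameter names.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    input_bits: float
    output_bits: float
    cycles: float


@dataclass
class UAV:
    name: str
    cpu_speed: float                 # average processing speed, cycles/s
    ready_at: float = 0.0            # estimated earliest availability time, s
    pending_cycles: list = field(default_factory=list)  # workloads of queued tasks


def estimated_completion(task, uav, hops_in, hops_out, u2v_rate, u2u_rate):
    """OD1 estimate. hops_in: U2U hops from the access UAV to `uav` (0 if it is
    the access node). hops_out: U2U hops from `uav` to the UAV predicted to
    cover the vehicle when the result is ready."""
    arrive = task.input_bits / u2v_rate + hops_in * task.input_bits / u2u_rate
    start = max(arrive, uav.ready_at)   # assumed: wait until the server is free
    finish = start + task.cycles / uav.cpu_speed
    back = hops_out * task.output_bits / u2u_rate + task.output_bits / u2v_rate
    return finish + back


def load_factor(uav):
    # Pending workload relative to processing capacity (OD3).
    return sum(uav.pending_cycles) / uav.cpu_speed


def choose(uavs, rule, estimate=None):
    """Pick the destination node; `estimate` maps a UAV to its OD1 score."""
    if rule == "OD1":
        return min(uavs, key=estimate)
    if rule == "OD2":
        return max(uavs, key=lambda u: u.cpu_speed)
    if rule == "OD3":
        return min(uavs, key=load_factor)
    raise ValueError(f"unknown rule: {rule}")
```

Note that OD2 and OD3 can disagree sharply: the fastest server is often the one with the longest queue, which is the imbalance the LBF rule is meant to avoid.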

4.5. Resource Allocation

- RA1: EFTF (Earliest Finish Time First). The EFTF strategy takes into account both the processing capabilities of the containers and the queuing status of pending task sequences. It estimates the completion time of tasks on each container and assigns each task to the container that can complete it in the shortest amount of time.

- RA2: Max–Min. The core idea of this strategy is to sort the task sequence in non-increasing order of processing time and assign tasks to containers with shorter processing times. This maximizes resource utilization and minimizes task completion time, increasing the likelihood that tasks complete within their respective deadlines.

- RA3: Min-Variance. To improve the efficiency of task scheduling in a containerized environment, tasks are sorted in non-decreasing order of their computational workload, and each task is assigned to the container with the minimum completion time. This yields an initial scheduling plan and a task-resource mapping sequence. The tasks that exceed their deadlines under this plan are recorded as a failure sequence. A rescheduling algorithm then moves the failed tasks onto other containers for execution. The pseudocode for the Min-Variance algorithm and the rescheduling algorithm is presented as Algorithm 2 and Algorithm 3, respectively.
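The sort-then-assign step shared by these strategies can be sketched as a greedy list scheduler. This is an assumption-laden illustration, not the paper's pseudocode: tasks are plain `(task_id, cycles, deadline)` tuples, containers run their queue back to back, and `largest_first` is a hypothetical switch between a Max–Min style ordering and the smallest-first ordering used by Min-Variance.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    speed: float     # CPU processing speed, cycles/s
    free_at: float   # time at which the container can start work (e.g., startup time)


def schedule(tasks, containers, largest_first=False):
    """Greedy mapping of tasks to containers.

    tasks: list of (task_id, cycles, deadline).
    Returns (mapping, failed), where mapping holds (task_id, container, finish)
    and failed lists the ids that finish after their deadline.
    """
    free = {c.name: c.free_at for c in containers}
    speed = {c.name: c.speed for c in containers}
    mapping, failed = [], []
    ordered = sorted(tasks, key=lambda t: t[1], reverse=largest_first)
    for tid, cycles, deadline in ordered:
        # Earliest-finish choice: the container that would complete this task first.
        best = min(free, key=lambda n: free[n] + cycles / speed[n])
        finish = free[best] + cycles / speed[best]
        free[best] = finish
        mapping.append((tid, best, finish))
        if finish > deadline:
            failed.append(tid)
    return mapping, failed
```

In this sketch the `failed` list plays the role of the failure sequence that a later rescheduling pass would consume.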

| Algorithm 2: The Framework of Min Variance |
|---|
| Input: the set of UAV servers |
| Output: the task-container matching sequence; success rate of task execution |

| Algorithm 3: TRS (Task Re-Scheduling) |
|---|
| Input: set of failed tasks; sequence of task-container matches; number of successfully executed tasks |
| Output: updated sequence of task-container matches; updated number of successfully executed tasks |
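One plausible reading of the rescheduling pass is sketched below. The steps of Algorithm 3 are not listed in this section, so the repair rule used here is an assumption: a failed task is moved to the first position on another container where it meets its deadline and no task already on that container becomes newly late. All names and the tuple layout `(task_id, cycles, deadline)` are hypothetical.

```python
def late_tasks(queue, speed, start):
    """Ids of tasks that miss their deadline when `queue` runs back to back."""
    t, late = start, set()
    for tid, cycles, deadline in queue:
        t += cycles / speed
        if t > deadline:
            late.add(tid)
    return late


def find_slot(task, queue, speed, start):
    """First insertion index that adds no new late task, or None."""
    before = late_tasks(queue, speed, start)
    for i in range(len(queue) + 1):
        trial = queue[:i] + [task] + queue[i:]
        if late_tasks(trial, speed, start) <= before:
            return i
    return None


def reschedule(queues, speeds, starts, failed):
    """Try to rescue each failed task by moving it to another container.

    queues: {container: [(task_id, cycles, deadline), ...]} in execution order,
            modified in place.
    Returns the ids of the tasks that were moved.
    """
    rescued = []
    for tid in failed:
        src = next(c for c, q in queues.items() if any(t[0] == tid for t in q))
        task = next(t for t in queues[src] if t[0] == tid)
        for dst, q in queues.items():
            if dst == src:
                continue
            slot = find_slot(task, q, speeds[dst], starts[dst])
            if slot is not None:
                queues[src].remove(task)
                q.insert(slot, task)
                rescued.append(tid)
                break
    return rescued
```

The number of successfully executed tasks can then be updated by adding `len(rescued)`, provided removing a task from its source queue is not counted twice elsewhere.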

5. Experimental Results

5.1. Experimental Environment and Datasets

5.2. Parameter Calibration

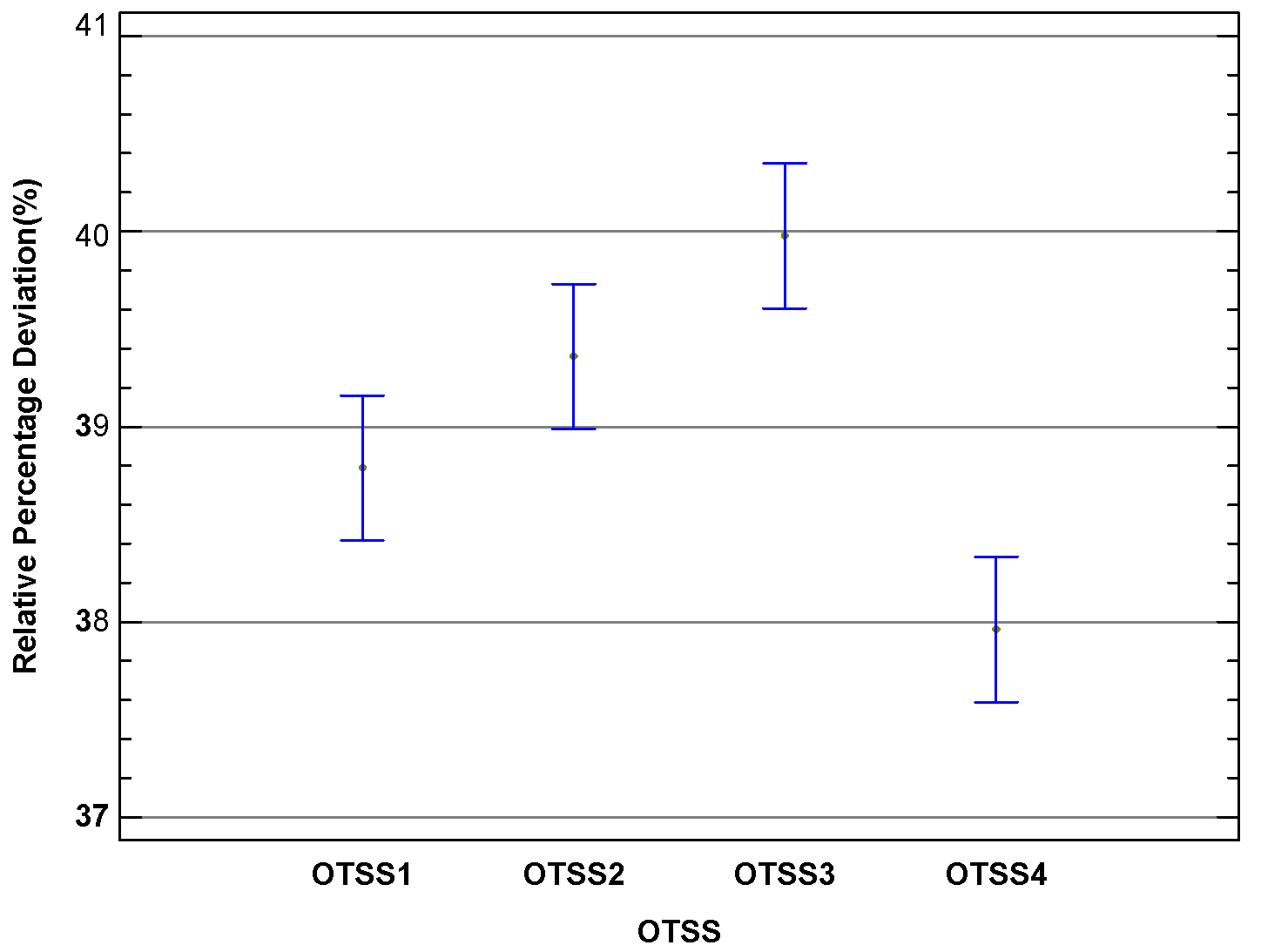

- Task Offloading Sequence Generation. Figure 6 presents a comparison of four task offloading sequence ordering rules: OTSS1, OTSS2, OTSS3, and OTSS4. Among these, OTSS4, which gives precedence to tasks with the smallest weighted sum of data volume and computational workload, demonstrates superior performance. This rule takes into account both transmission and computation resource demands, thus enhancing the likelihood of tasks meeting their deadlines. Conversely, OTSS1 and OTSS2 focus solely on the number of candidate nodes and the available time, respectively, so they evaluate only partial task characteristics and deliver inferior performance. OTSS3 acknowledges computation resource needs but overlooks bandwidth requirements and variations in processing capabilities. Therefore, OTSS4 is selected as the task offloading sequence ordering rule in this study.

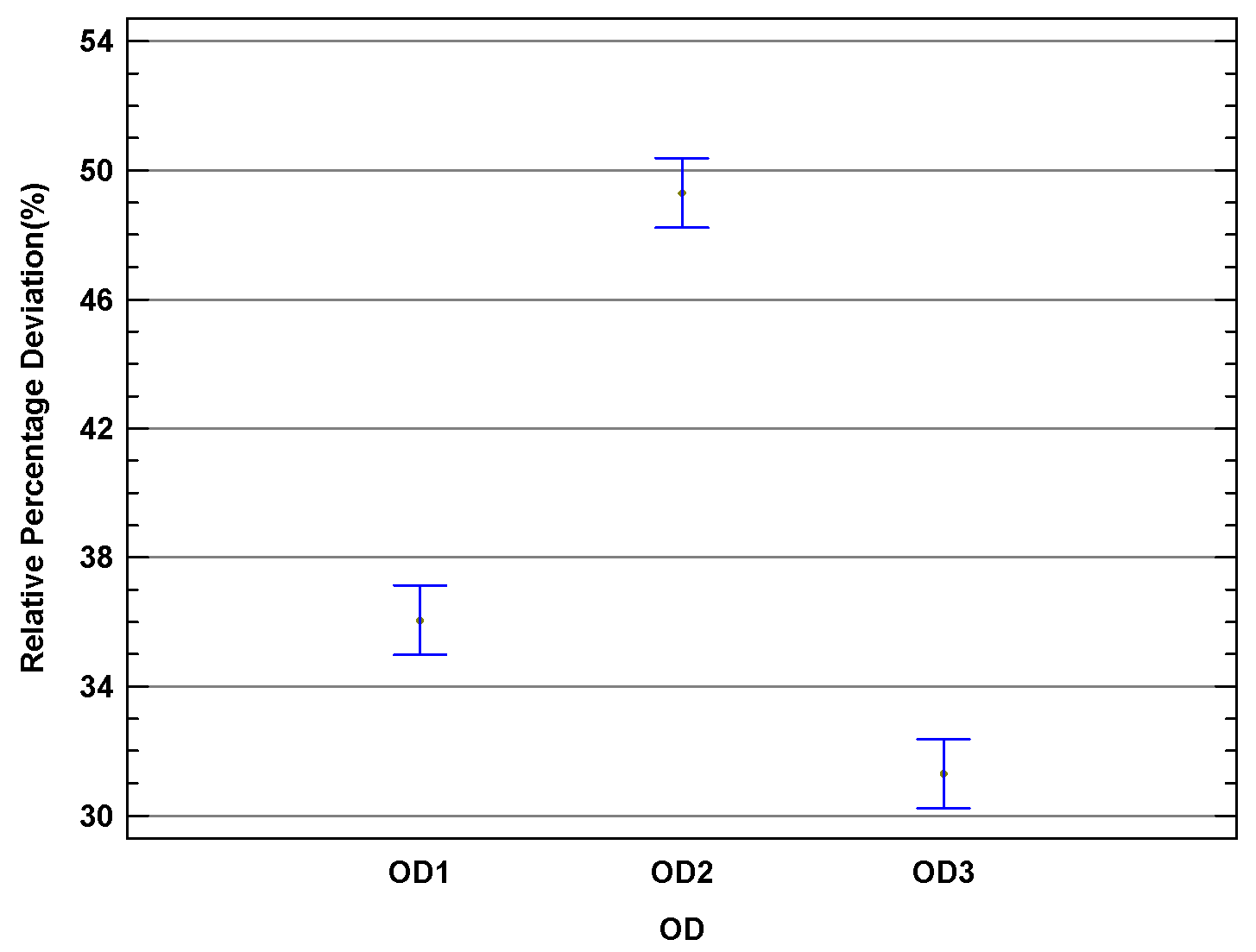

- Offloading Decision Strategy. Figure 7 compares the performance of three task offloading strategies: OD1, OD2, and OD3. Of these, OD3, which implements a load balancing priority tactic, outperforms OD1 and OD2. OD3 takes into account both the processing capabilities of the UAV nodes and their pending task load when selecting nodes. OD1, by contrast, is based on a minimum estimated completion time priority strategy and considers the server's processing capabilities and current load. However, inaccurate estimates of task completion times may lead it to suboptimal task allocation and inferior performance relative to OD3. OD2 employs a maximum processing capability priority strategy that focuses solely on the resource status of UAV nodes, causing uneven server loads and reduced task processing effectiveness. Consequently, OD2 delivers the least favorable performance. Based on this comparison, OD3, with its load balancing priority approach, is selected as the task offloading decision rule.

- Resource Allocation. Figure 8 presents a comparative analysis of the resource allocation strategies with 95% Tukey HSD confidence intervals. The figure shows that, among the three strategies evaluated, RA3 demonstrates superior performance. RA1, which prioritizes the earliest completion time, allocates tasks to containers with the earliest finishing capability but fails to account for resource contention among tasks, resulting in extended waits for other tasks. RA2, based on the Max–Min strategy, schedules large tasks first by assigning them to the earliest available containers, which can make smaller tasks wait beyond their deadlines. Conversely, RA3 adopts the Min-Variance strategy, scheduling smaller tasks first and thereby minimizing their wait times. After the initial allocation, a secondary assignment relocates tasks at risk of deadline breaches to containers that can further reduce their completion times, thereby enhancing the overall task success rate. This study therefore selects RA3 as the resource allocation strategy.

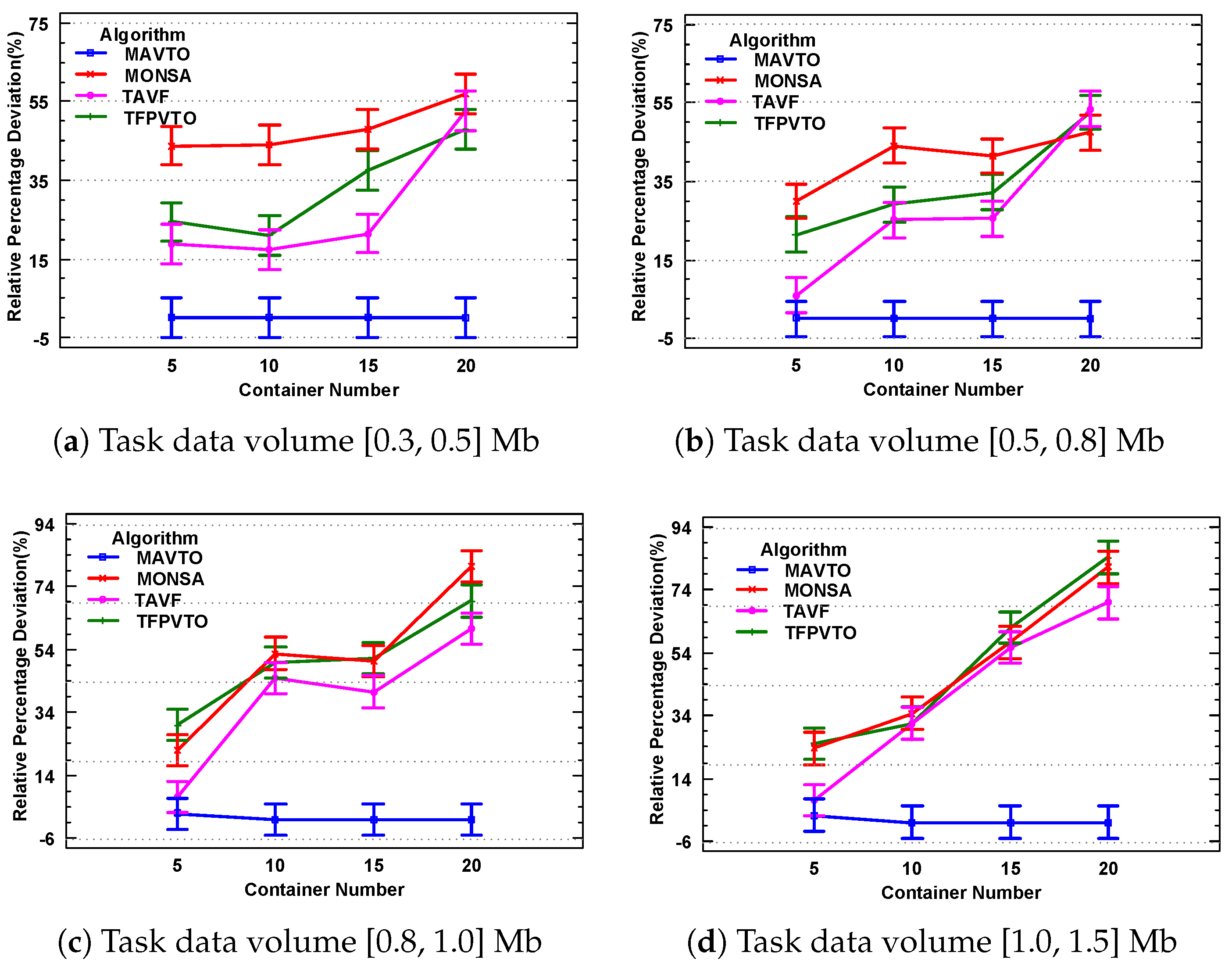

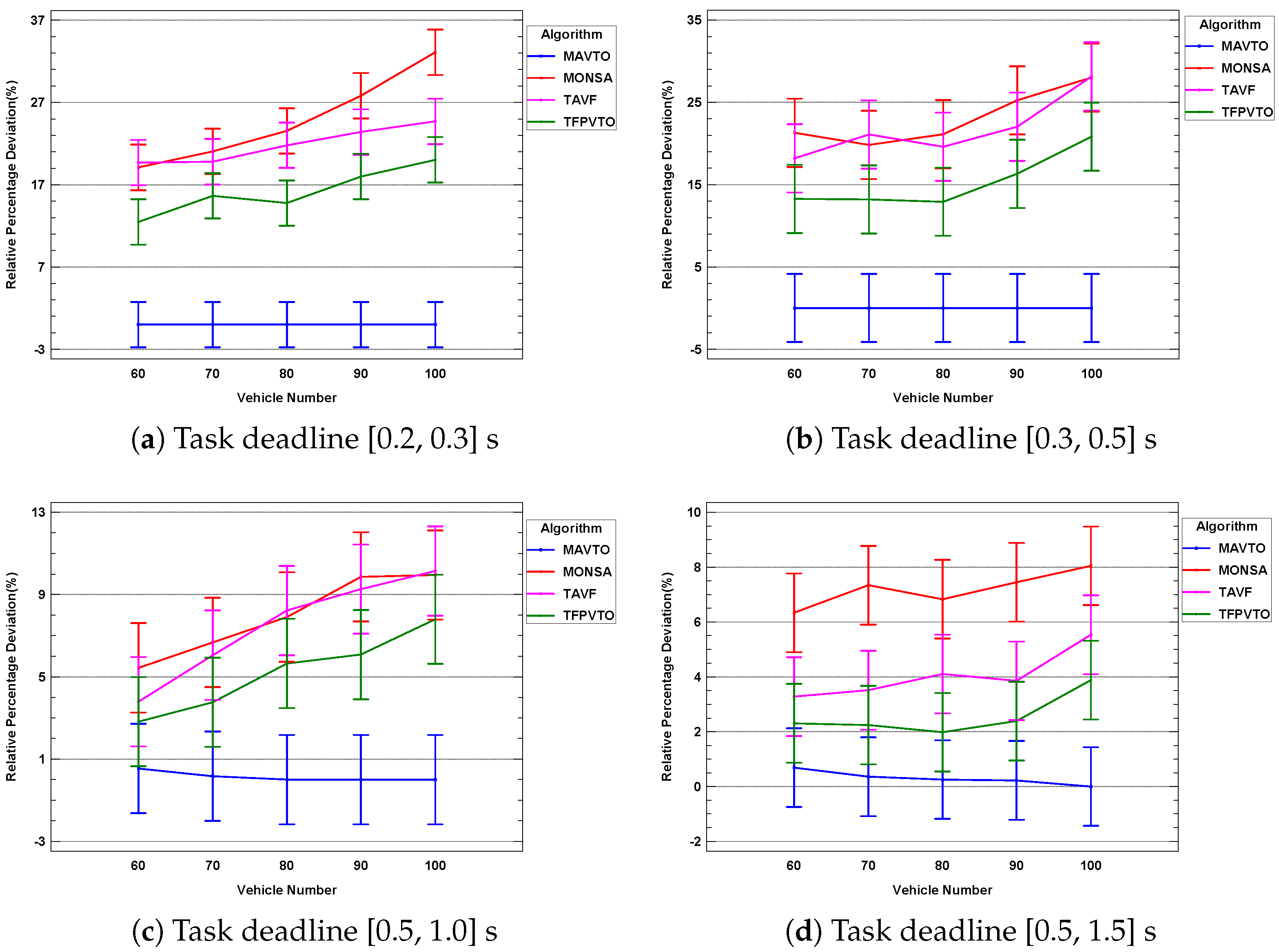

5.3. Performance Comparison

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Notation | Description |
|---|---|
|  | the set of UAV servers |
|  | the U2V wireless link transmission rate of UAV node e |
|  | the CPU processing speed of UAV server e |
| r | radius of coverage zones of UAV server e |
|  | coordinates of UAV server e |
| W | the U2U wireless link transmission rate between UAV nodes |
|  | the set of vehicles |
|  | speed of vehicle v |
|  | direction of vehicle v |
|  | coordinates of vehicle v |
| K | the set of tasks generated, each generated by a vehicle v |
|  | the input data volume of task k |
|  | the output data volume of task k |
|  | the computational workload of task k |
|  | the deadline of task k |
|  | the set of containers on UAV server e |
|  | the instance startup time required for container s |
|  | the CPU processing speed of container s |
|  | the completion time of task k on UAV node e |
|  | the start time of task k on container s |
|  | the time for uploading task k to UAV node e |
|  | the time for transmitting task k between two UAV nodes |
|  | the computation time of task k on UAV node e |
|  | the time for downloading results of task k to vehicles |

| Parameter | Parameter Value |
|---|---|
| Number of UAV servers | 5 |
| U2V link transmission rate |  |
| CPU processing speed of UAV servers |  |
| Radius of coverage zones of UAVs r |  |
| Number of vehicles |  |
| Input task data volume |  |
| Output task result data volume |  |
| Task computational workload |  |
| Task deadline intervals |  |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, L.; Du, J.; Zhu, X. Mobility-Aware Task Offloading and Resource Allocation in UAV-Assisted Vehicular Edge Computing Networks. Drones 2024, 8, 696. https://doi.org/10.3390/drones8110696