Abstract

Knowledge-driven building extraction methods have limited adaptability and are vulnerable to external factors that degrade their accuracy. Data-driven methods, on the other hand, lack interpretability, rely heavily on extensive training data, and may produce results with blurred building boundaries. Integrating prior knowledge with data-driven learning is therefore essential for the intelligent identification and extraction of buildings from high-resolution aerial images. To overcome the inability of current deep learning building extraction networks to effectively leverage the prior knowledge contained in aerial images, a geometric significance-aware deep mutual learning network (GSDMLNet) is proposed. First, the GeoSay algorithm is used to derive building geometric significance feature maps as prior knowledge, which are integrated into the deep learning network to enhance the targeted extraction of building features. Second, a bi-directional guidance attention module (BGAM) is developed to enable deep mutual learning between the building feature map and the building geometric significance feature map within the dual-branch network. Third, an enhanced flow alignment module (FAM++) is employed to produce high-resolution, robust semantic feature maps with strong interpretability. Finally, a multi-objective loss function is designed to optimize the network. Experimental results demonstrate that GSDMLNet excels at building extraction in densely built and diverse urban areas, reducing the misidentification of shadow-obscured regions and of color-similar terrain lacking building structural features. The approach thus enables precise acquisition of urban building information from aerial images.

1. Introduction

As key carriers of urban information, the categories and spatial arrangement of buildings mirror the patterns of urbanization and growth. Timely acquisition of building information plays a vital role in urban management and serves as a catalyst for the development of smart cities [1,2]. High-resolution images provide a wealth of spectral, textural, and spatial information, enabling target objects to be extracted with high accuracy [3]. Compared with other land covers, buildings, as man-made structures, exhibit regularities in their geometry, spatial layout, attributes, and topology, which make them well suited for intelligent extraction from high-resolution imagery. Knowledge-driven methods extract prior knowledge of buildings from remote sensing data and represent it through predefined rules, simulating the decision-making of domain experts to construct targeted building extraction models. However, these approaches have limited adaptability and are susceptible to external factors, which reduces their extraction accuracy. Data-driven methods, on the other hand, use machine learning to learn building features from images autonomously and construct intelligent extraction models based on network structures and data characteristics. Despite their automation, these methods often suffer from poor interpretability, reliance on extensive training samples, and blurred building boundaries. The crux of intelligent building extraction from high-resolution aerial images therefore lies in autonomously assimilating and applying prior knowledge of buildings.

Over the past few years, deep learning semantic segmentation networks have become the prevailing approach for building extraction from high-resolution images [4,5,6]. Since these networks were originally developed for natural images, current deep learning methods for building extraction concentrate predominantly on learning RGB (Red, Green, Blue) semantic features from high-resolution images. However, RGB semantic features struggle to capture the geometric shapes and structural information of buildings accurately, especially intricate building outlines, corners, and other geometric features. Aerial images differ from natural images in that they encode extensive prior knowledge of buildings, including building boundaries, corners, and spatial relationships. Existing deep learning building extraction networks often fail to harness this prior knowledge effectively, which leads to less precise building extraction from aerial images.

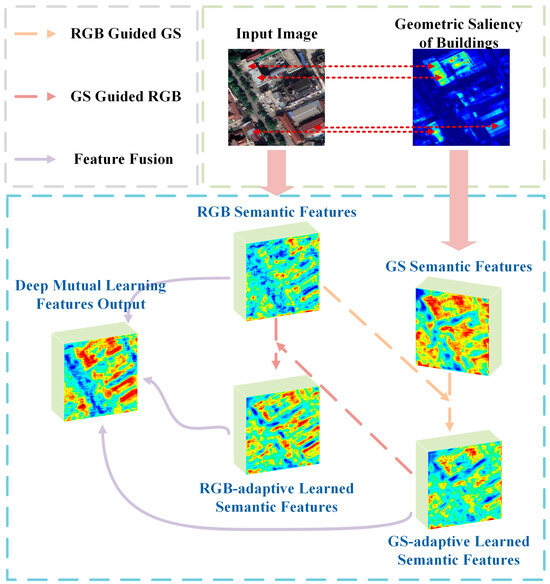

To address these limitations, this article proposes a geometric significance-aware deep mutual learning network, named GSDMLNet. Its motivation is shown in Figure 1: geometric saliency information about buildings is incorporated into the network as crucial prior knowledge. By exploiting RGB semantic features and Geometric Significance (GS) semantic features in a bi-directional manner, the network better captures the relationship between the visual and geometric properties of buildings in remote sensing imagery. GSDMLNet establishes a guidance mechanism between the RGB and GS semantic features, exploiting the complementary characteristics of the two feature sets to learn building features adaptively and then refining them through feature fusion, thereby achieving deep mutual learning for building feature extraction. Through the fusion of geometric saliency information and the deep mutual learning mechanism, GSDMLNet effectively harnesses the rich prior knowledge inherent in aerial images and delineates target areas more sharply. The contributions of this article are summarized as follows:

Figure 1.

Motivation of GSDMLNet.

- This study incorporates the geometric saliency of buildings as prior knowledge within the deep learning network, directing the network's focus toward the geometrically salient regions of buildings and thereby improving the specificity with which it learns building features.

- A novel bi-directional guidance attention module (BGAM) is proposed to capture the interdependency between same-scale feature maps in the RGB and GS semantic branches. The deep mutual learning enabled by the BGAM enhances feature extraction within the target area.

- An enhanced flow alignment module (FAM++) is introduced to reduce holes in building feature maps and to obtain strongly semantic information at high resolution. By adding a gating mechanism to the original FAM design, FAM++ effectively learns the spatial and semantic relationships between adjacent feature layers.

- To improve the precision of building extraction, a multi-objective loss function is devised to fine-tune the network. It supervises both the final prediction map and the feature maps derived from the encoder, with the goal of jointly improving the network's performance.

The remainder of this paper is organized as follows. Section 2 reviews related work on building extraction from aerial images. Section 3 describes the proposed building extraction method. Section 4 presents the datasets, experimental settings, experimental results, and analysis. Finally, Section 5 concludes the article.

2. Related Works

Current methods for large-scale, high-frequency building extraction fall into two main categories: knowledge-driven methods and data-driven methods.

2.1. Knowledge-Driven Building Extraction

Knowledge-driven building extraction leverages the spectral and spatial attributes of buildings in remote sensing imagery to build models capable of interpreting and extracting building features. These methods mainly comprise region segmentation-based methods, feature-based methods, and prior model-based methods.

Region-based methods first partition the image into regions and then extract buildings by integrating texture, geometric, and spectral features. This approach helps mitigate the impulse (salt-and-pepper) noise associated with pixel-level extraction techniques. Cui et al. [7] combined the Hough transform with graph search algorithms to extract buildings from high-resolution images after region segmentation; however, this method may struggle in complex scenes. Merabet et al. [8] used the watershed segmentation algorithm for initial building extraction and then applied the watershed algorithm together with a region-merging strategy to optimize the extraction results.

Feature-based methods for building extraction in images involve the extraction of diverse features, including spectral, shape, texture, edge contour, and spatial features, to effectively identify buildings. The integration of multiple features is essential due to the intricate nature of building structures and colors in images, compensating for the limitations of single-feature-based extraction methods. Notably, research has demonstrated the efficacy of shadow features in assisting building extraction [9,10], with specific emphasis on their role in reducing the error rate of high-rise building extraction [11,12,13]. Hu et al. [14] leveraged the spatial correlation between shadows and buildings to ascertain the buildings’ locations by computing the angle between the shade line and shadow line (SLSLA). Furthermore, the Morphological Building Index (MBI) [15], which encompasses brightness, contrast, shape, and orientation features, has found widespread application in building extraction. Ding et al. [16] introduced an innovative approach for building extraction by fully utilizing MBI, spectral constraints, shadow constraints, and shape constraints. Additionally, some researchers propose building extraction methods based on geometric boundary features, such as prominent straight boundaries or corners of buildings. Zhang et al. [17] proposed a semi-automatic building extraction method based on building corners and orientations, which performs well in extracting regular-shaped buildings. Ning and Lin [18] used building corners and boundary line segments for spatial voting, enhancing the salience of building areas.

Prior model-based methods consolidate prior knowledge of buildings, including spectral information, geometric features, and spatial characteristics, into a structured prior model used for building extraction. In existing research, the prior shape of buildings is typically integrated into active contour (Snake) models and their variants to facilitate building extraction [19,20,21].

Knowledge-driven approaches rely on hand-designed features derived from prior knowledge, which makes them susceptible to factors such as the imaging angle of remote sensing data, lighting conditions, building morphology, and shadows. These factors constrain the accuracy of building extraction and limit the methods' generalizability in large-scale urban settings.

2.2. Data-Driven Building Extraction

Most data-driven building extraction methods improve accuracy by refining the multi-scale feature extraction modules, attention modules, and loss functions of established network architectures. FCN (Fully Convolutional Networks) [22], PSPNet (Pyramid Scene Parsing Network) [23], DeepLab [24], SegNet [25], UNet [26], and related networks are commonly used for building extraction, usually with some modifications to the base networks.

UNet and its variants are widely used for building extraction from satellite imagery. Liu et al. [27] used a UNet model enhanced with depth channels to extract building roofs from drone images, and combined it with image analysis methods to identify changes across entire roofs and detect potential illegal constructions. Zheng et al. [28] proposed CLNet, which uses UNet as its backbone and introduces a Cross-layer Block (CLB) to harmonize features across scales and integrate contextual information from multiple levels.

DenseNet [29] introduced densely connected networks, which extract rich feature information and help capture buildings in complex scenes. Zhou et al. [30] incorporated dense connections and deep supervision into UNet, resulting in the UNet++ architecture. Tong et al. [31] replaced the convolutional layers in UNet++ with DenseBlocks, reducing the number of network parameters while maintaining building extraction accuracy. Zhao et al. [32] incorporated attention modules and multi-task learning into UNet++ to preserve the details of building boundaries.

Some researchers have added building boundary extraction branches and boundary-constrained optimization functions to improve the accuracy of building edge extraction. Guo et al. [33] obtained semantic and edge features of buildings by constructing a feature decoupling network (FD-Net), in which learning edge features preserves the integrity of building edge information. Hu et al. [6] introduced a shape preservation model (SPM) and a boundary loss function into Mask R-CNN, enhancing the network's ability to extract building boundaries. Zhu et al. [5] proposed a semantic collaboration module (SCM) to fully explore the correlation between the semantic and edge features of buildings, further improving network performance. Yang et al. [34] combined a centroid map prediction component, a segmentation prediction component, and a boundary rectification component into an integrated network, improving the precision of building boundary detection. Sun et al. [35] proposed a Geographic Spatial Layered Optimization Sampling (GSOS) method that uses geographic prior information and optimizes the spatial distribution of samples to obtain representative samples; multiple qualitative and quantitative experiments demonstrated the superiority of their method.

Although the building extraction methods above introduce novel deep learning models, they depend excessively on training samples and incorporate no prior knowledge to guide model training, so the networks lack specificity toward building targets.

In summary, in knowledge-driven approaches, manually crafted prior knowledge of buildings is limited and susceptible to interference from external factors such as lighting conditions, which hinders large-scale application. Data-driven methods, conversely, rely excessively on training samples and lack the guidance of prior knowledge, resulting in inadequate network targeting. To address these limitations, to integrate prior knowledge effectively into deep learning networks for building extraction from high-resolution remote sensing images, and to meet the demand for accurate building extraction, this article introduces a deep mutual learning approach to building extraction that takes geometric saliency into account.

3. Methodology

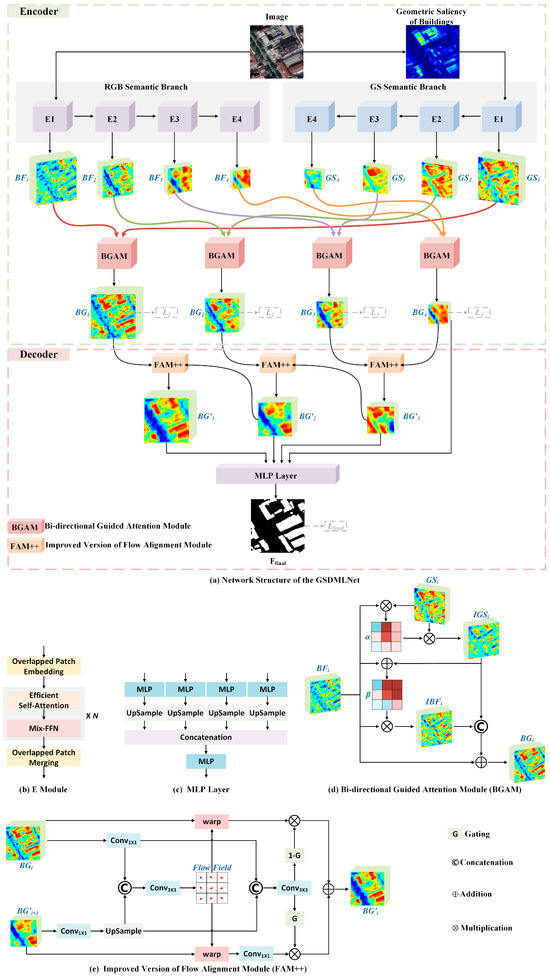

To address the lack of effective use of the prior knowledge of aerial images in existing deep learning-based building extraction networks, this article proposes a geometric significance-aware deep mutual learning network (GSDMLNet) for building extraction from aerial images. As depicted in Figure 2, GSDMLNet adopts an encoder-decoder framework. The encoder comprises a dual-branch network and a bi-directional guided attention module (BGAM), while the decoder comprises an improved flow alignment module (FAM++) and a multi-layer perceptron (MLP) layer. Through the dual-branch network, GSDMLNet incorporates the geometric saliency of buildings into the deep learning framework as prior knowledge. Deep mutual learning with the BGAM enhances feature extraction within the target region, and FAM++ further strengthens the network's capacity to learn building features. Finally, a multi-objective loss function is jointly optimized to improve the overall performance of the network.

Figure 2.

Structure of the proposed GSDMLNet: (a) overall structure of GSDMLNet; (b) module E in the dual-branch network; (c) MLP layer; (d) bi-directional guided attention module (BGAM); (e) improved flow alignment module (FAM++).

3.1. Acquiring Geometric Significance of Buildings

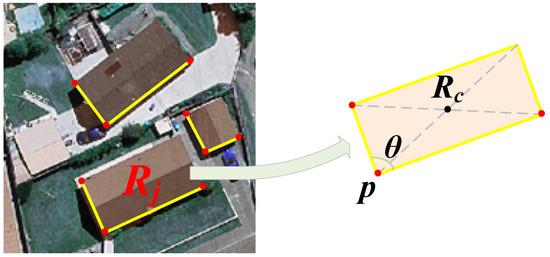

The geometric attributes of buildings in remote sensing imagery are notably regular, frequently taking the form of rectangles. As illustrated in Figure 3, buildings have distinct boundaries with clear, predominantly right-angled corners. This inherent geometric saliency makes buildings easy to distinguish from other land features in remote sensing imagery. To guide the network toward crucial regional features, the proposed GSDMLNet derives a building-specific geometric saliency feature map from the remote sensing imagery and integrates it into the deep learning network as prior knowledge.

Figure 3.

Geometric attributes of typical buildings in aerial images. The annotations mark the quadrilateral region formed by a single building, an intersection point, the centroid of the region, and the angle between branches.

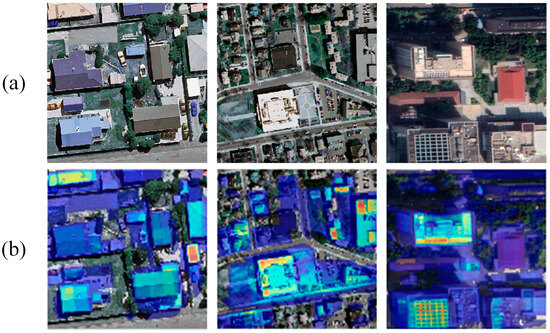

Intersections (junctions) in building structures are key indicators of the geometric characteristics of buildings, and corners are prominent locations for intersection detection. Drawing on the distinctive features of building intersections in remote sensing imagery, the GeoSay (Geometric Saliency) algorithm [36] is employed to derive a building-specific geometric saliency feature map, as depicted in Figure 4. The confidence and angle information of the intersections serve as the first-order saliency, while the adjacency relationships among intersections serve as the second-order saliency. By integrating the first-order and second-order saliency of intersections on the remote sensing image, a geometric saliency feature map of buildings is obtained, calculated as follows:

where the map is evaluated at each pixel of the remote sensing image; the set of intersections comprises all intersections obtained by the ASJ algorithm [37] on the image; each single intersection point, together with the angle between its branches, forms a quadrilateral region; and an indicator function takes the value 1 when the pixel lies within that region and 0 otherwise.

where the first term is the confidence of the intersection point and the second is the probability that an intersection point with a given angle belongs to building intersections. Since most buildings in aerial images are rectangular, this angle is set to a right angle (π/2) in this article.

where τ is the size of the neighborhood; the neighboring intersection points of each intersection are obtained with the τ-nearest-neighbor (τ-NN) algorithm; the center points of the quadrilateral regions of an intersection and of each neighbor within this range are compared; and the distance between these center points is normalized.
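The two per-intersection saliency terms can be sketched in Python as below. This is an illustrative sketch under stated assumptions, not the GeoSay implementation of [36]: the Gaussian-shaped angle probability (with a hypothetical width `sigma`), the brute-force τ-NN search, and the normalization of region-center distances by the image diagonal `diag` are all assumptions. Only the ingredients (confidence, a right-angle reference, τ-NN adjacency, and normalized center distances) come from the text.

```python
import math

def first_order_saliency(confidence, angle, ref_angle=math.pi / 2, sigma=math.pi / 12):
    # Hypothetical angle model: a Gaussian bump centred on the reference angle.
    # The text only states that the reference angle is a right angle.
    angle_prob = math.exp(-((angle - ref_angle) ** 2) / (2.0 * sigma ** 2))
    return confidence * angle_prob

def tau_nn(positions, i, tau):
    # Brute-force tau-nearest-neighbour search over intersection positions.
    xi, yi = positions[i]
    others = [j for j in range(len(positions)) if j != i]
    others.sort(key=lambda j: math.hypot(positions[j][0] - xi, positions[j][1] - yi))
    return others[:tau]

def second_order_saliency(positions, centers, tau, diag):
    # For each intersection, compare the centre of its quadrilateral region with
    # the region centres of its tau nearest intersections. Distances are
    # normalised by the image diagonal (assumed), so closer regions score higher.
    scores = []
    for i in range(len(positions)):
        nbrs = tau_nn(positions, i, tau)
        if not nbrs:
            scores.append(0.0)
            continue
        cx, cy = centers[i]
        norm_d = [math.hypot(centers[j][0] - cx, centers[j][1] - cy) / diag for j in nbrs]
        scores.append(sum(1.0 - d for d in norm_d) / len(norm_d))
    return scores
```

For example, two intersections on the corners of the same building (sharing a region center) reach the maximal second-order score of 1.0, while an isolated intersection far from its nearest neighbor's region scores much lower.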

Figure 4.

Geometric saliency feature map of buildings in aerial images: (a) Original image and (b) geometric saliency feature map obtained using the GeoSay algorithm.
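The indicator-based accumulation over intersection regions described in this section can be illustrated with a minimal NumPy sketch. It assumes that each intersection's quadrilateral region is the parallelogram spanned at the intersection by its two branch vectors, that the first- and second-order saliency have already been combined into one score per intersection, and that the final map is rescaled to [0, 1]. All three choices, and the helper names `parallelogram_mask` and `geometric_saliency_map`, are hypothetical.

```python
import numpy as np

def parallelogram_mask(h, w, origin, u, v):
    # Indicator of the region spanned at `origin` by branch vectors u and v:
    # pixel p is inside if p - origin = a*u + b*v with 0 <= a, b <= 1.
    ys, xs = np.mgrid[0:h, 0:w]
    d = np.stack([xs - origin[0], ys - origin[1]], axis=-1).astype(float)  # (h, w, 2)
    basis = np.array([u, v], dtype=float).T  # columns are u and v
    coeffs = d @ np.linalg.inv(basis).T      # per-pixel (a, b)
    return np.all((coeffs >= 0) & (coeffs <= 1), axis=-1)

def geometric_saliency_map(h, w, junctions):
    # junctions: iterable of (origin, u, v, saliency) tuples, origin as (x, y).
    smap = np.zeros((h, w))
    for origin, u, v, s in junctions:
        smap += s * parallelogram_mask(h, w, origin, u, v)
    if smap.max() > 0:
        smap /= smap.max()  # rescale to [0, 1] (assumption)
    return smap
```

Pixels covered by several salient intersection regions, such as the interior of a rectangular roof whose corners are all detected, accumulate the largest values.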

3.2. Dual-Branch Network

The encoder of the proposed GSDMLNet is a dual-branch network comprising an RGB semantic branch and a GS semantic branch. The two branches share the same structure, consisting of four E modules (see Figure 2b) whose design is mainly inspired by Transformer backbone networks. Consider a remote sensing image characterized by its height, width, and number of spectral channels. The image is input into the RGB semantic branch to obtain four building feature maps at different scales. Applying the GeoSay algorithm to the image yields a single-band building geometric saliency feature map, which is input into the GS semantic branch to obtain four building feature maps at different scales that contain geometric saliency.

3.3. Bi-Directional Guided Attention Module

To capture the interdependencies between same-scale building feature maps in the two branches, a bi-directional guided attention module (BGAM) is devised, as shown in Figure 2d. Deep mutual learning between the two branches enhances feature extraction in the target area. First, guided by the RGB building feature map, the building geometric saliency feature map adaptively learns the features of the corresponding regions, preserving its important region features that are strongly correlated with the RGB building feature map. Mathematically:

where an activation function is applied to the correlation between the i-th building feature map and the building geometric saliency feature map.

Next, guided by these important region features, the building feature map adaptively learns the features of the corresponding regions, yielding the features in the building feature map that are strongly correlated with the important region features. This procedure can be formulated as:

where the correlation is likewise computed between the i-th building feature map and the building geometric saliency feature map.

Finally, the two adaptively learned features are fused. To complement the semantic information of buildings, a residual connection adds the fused result to the input building feature map, yielding the deep mutually learned building feature map, computed as follows:

where the fusion operation is performed along the channel dimension and is followed by a convolution operation.
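Because the BGAM equations are not reproduced here, the following NumPy sketch only illustrates the three steps of the module under assumed forms: the correlation is taken as the channel-averaged element-wise product, the activation is a sigmoid, and the fusion convolution is a 1×1 projection with a hypothetical weight matrix `w_fuse`. Both branches are assumed to produce feature maps with the same channel count at a given scale. It is a sketch, not the authors' implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv1x1(x, weight):
    # 1x1 convolution as channel mixing: (C_out, C_in) applied to (C_in, H, W).
    return np.einsum("oc,chw->ohw", weight, x)

def bgam(f_rgb, f_gs, w_fuse):
    """Illustrative bi-directional guided attention (assumed forms throughout).

    f_rgb, f_gs: same-scale feature maps of shape (C, H, W).
    w_fuse: hypothetical (C, 2C) weights of the fusion convolution.
    """
    # Step 1: RGB-guided attention over the GS map keeps the GS regions that
    # correlate with the RGB features.
    att_gs = sigmoid((f_rgb * f_gs).mean(axis=0, keepdims=True))
    gs_important = att_gs * f_gs
    # Step 2: the preserved GS regions in turn guide attention over the RGB map.
    att_rgb = sigmoid((gs_important * f_rgb).mean(axis=0, keepdims=True))
    rgb_selected = att_rgb * f_rgb
    # Step 3: channel-wise concatenation, fusion convolution, residual addition.
    fused = conv1x1(np.concatenate([gs_important, rgb_selected], axis=0), w_fuse)
    return f_rgb + fused
```

The residual addition means that, with zero fusion weights, the module returns the RGB feature map unchanged, so the guided features act as a learned correction on top of the RGB branch.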

3.4. Improved Flow Alignment Module

To obtain building feature maps with high spatial resolution and strong semantic information, and to reduce holes within buildings, an enhanced flow alignment module (FAM++) is constructed, as illustrated in Figure 2e. Compared with the original flow alignment module (FAM) [38], FAM++ incorporates a gating mechanism to better learn semantic and spatial information between adjacent feature layers. First, the semantic flow field is computed for adjacent feature layers as follows:

where the inputs are the high-resolution building feature map, which carries weak semantic information, and the low-resolution building feature map, which carries strong semantic information; the low-resolution map is up-sampled, and a convolution is applied to produce the flow field.

Subsequently, to emphasize the features shared by adjacent layers, the two adjacent feature layers are merged, and the gated feature maps are obtained through a gating operation, calculated as follows:

where the gating operation consists of one convolutional layer and one sigmoid activation function.

Finally, the same semantic flow field is used to warp each of the two adjacent feature layers, producing a pair of semantically aligned high-resolution feature maps. The two gated feature maps are then used to perform a weighted fusion of the two aligned feature maps, resulting in a high-resolution building feature map with strong semantic information, calculated as follows:

where the warp operation resamples each feature map according to the semantic flow field.
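A minimal NumPy sketch of the FAM++ data flow follows. It assumes that both inputs have the same channel count, that the low-resolution map is up-sampled by nearest-neighbor repetition by a factor of 2, and that the learned convolutions for the flow field and the gates are stand-in 1×1 projections with hypothetical weights `w_flow` and `w_gate`. The warp is standard bilinear resampling by a per-pixel offset; a PyTorch implementation would typically use `torch.nn.functional.grid_sample` for this step.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv1x1(x, weight):
    # Stand-in for a learned convolution: channel mixing with a (C_out, C_in) matrix.
    return np.einsum("oc,chw->ohw", weight, x)

def upsample2x(x):
    # Nearest-neighbour up-sampling of a (C, H, W) map by a factor of 2.
    return np.repeat(np.repeat(x, 2, axis=1), 2, axis=2)

def warp(feat, flow):
    # Bilinearly resample feat (C, H, W) at positions shifted by flow (2, H, W);
    # flow[0] is the x-offset and flow[1] the y-offset, in pixels.
    _, h, w = feat.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    sx = np.clip(xs + flow[0], 0, w - 1)
    sy = np.clip(ys + flow[1], 0, h - 1)
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx, wy = sx - x0, sy - y0
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def fam_pp(f_high, f_low, w_flow, w_gate):
    """f_high: (C, 2H, 2W) weak-semantic map; f_low: (C, H, W) strong-semantic map.
    w_flow, w_gate: hypothetical (2, 2C) weights of the flow and gate convolutions."""
    up = upsample2x(f_low)
    merged = np.concatenate([f_high, up], axis=0)
    flow = conv1x1(merged, w_flow)            # semantic flow field, (2, 2H, 2W)
    gates = sigmoid(conv1x1(merged, w_gate))  # one gate per input map
    aligned_high = warp(f_high, flow)         # the same flow warps both maps
    aligned_low = warp(up, flow)
    return gates[0:1] * aligned_high + gates[1:2] * aligned_low
```

With zero flow and zero gate weights, both warps are identities and both gates equal 0.5, so the output reduces to the average of the high-resolution map and the up-sampled low-resolution map; learning the flow and gates moves the module away from this plain fusion.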

3.5. Simple Multi-Layer Perceptron Module

To reduce the number of parameters in the network, a lightweight MLP layer is used in the decoder, as shown in Figure 2c. The four high-resolution building feature maps with strong semantic information, all of the same size, are input into the MLP layer for feature fusion to obtain the final prediction map, calculated as follows:

where the operator denotes the MLP layer.
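The MLP fusion step can be sketched as follows, assuming that the four maps share the same spatial size, are concatenated along the channel dimension, and pass pixel-wise through a hypothetical two-layer MLP with a ReLU hidden layer and a single sigmoid output giving the building probability. The layer widths and weight names are illustrative assumptions.

```python
import numpy as np

def mlp_fuse(feature_maps, w1, b1, w2, b2):
    """Pixel-wise MLP fusion of same-size feature maps into a building map.

    feature_maps: list of (C_i, H, W) arrays; w1: (sum C_i, D); b1: (D,);
    w2: (D, 1); b2: (1,). All layer shapes are hypothetical.
    """
    x = np.concatenate(feature_maps, axis=0)    # (sum C_i, H, W)
    c, h, w = x.shape
    pixels = x.reshape(c, h * w).T              # one row per pixel
    hidden = np.maximum(pixels @ w1 + b1, 0.0)  # hidden layer with ReLU
    logits = hidden @ w2 + b2                   # (H*W, 1)
    prob = 1.0 / (1.0 + np.exp(-logits))        # building probability
    return prob.T.reshape(1, h, w)
```

Because every pixel is processed independently by the same small weight matrices, the parameter count depends only on the channel widths, not on the image size, which is what makes this decoder lightweight.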

3.6. Multi-Objective Loss Function

We designed a multi-objective loss function to optimize the network and improve building extraction accuracy. The loss of the final prediction map and the losses of the four intermediate feature maps produced by the encoder together constitute the multi-objective loss $\mathcal{L}$, calculated as follows:

$$\mathcal{L} = \mathcal{L}_{P} + \lambda \sum_{i=1}^{4} \mathcal{L}_{i}$$

where $\mathcal{L}_{P}$ is the loss of the final prediction map $P$, $\mathcal{L}_{i}$ is the loss of the $i$-th intermediate feature map, and $\lambda$ is the weight coefficient. In this article, to alleviate the effects of imbalanced samples, dice loss [39] and focal loss [40] are used for $\mathcal{L}_{P}$, focal loss is used for $\mathcal{L}_{i}$, and $\lambda$ is set to 1.
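The multi-objective loss can be sketched in NumPy as follows. The focal-loss parameters `gamma = 2` and `alpha = 0.25` are the values reported to work best in the original focal loss work, not values stated in this article; the smoothing constant `EPS` is also an assumption, and the intermediate maps are assumed to be building probabilities already resized to the label's resolution.

```python
import numpy as np

EPS = 1e-7  # numerical-stability constant (assumed)

def dice_loss(prob, target):
    # Soft Dice loss on building probabilities versus a binary label.
    inter = np.sum(prob * target)
    return 1.0 - (2.0 * inter + EPS) / (np.sum(prob) + np.sum(target) + EPS)

def focal_loss(prob, target, gamma=2.0, alpha=0.25):
    # Binary focal loss; gamma and alpha are assumed defaults, not article values.
    prob = np.clip(prob, EPS, 1.0 - EPS)
    p_t = np.where(target == 1, prob, 1.0 - prob)
    alpha_t = np.where(target == 1, alpha, 1.0 - alpha)
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))

def multi_objective_loss(pred, intermediates, target, lam=1.0):
    # Dice + focal loss on the final prediction, focal loss on each of the
    # intermediate maps, weighted by lam (lambda = 1 in the article).
    loss_pred = dice_loss(pred, target) + focal_loss(pred, target)
    loss_aux = sum(focal_loss(m, target) for m in intermediates)
    return float(loss_pred + lam * loss_aux)
```

Setting `lam=0.0` recovers the conventional setup that supervises only the final prediction map, which is the baseline used in the sensitivity analysis of Section 4.4.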

4. Experiments

4.1. Datasets

This research evaluated GSDMLNet on two well-known, publicly available aerial image datasets: the WHU Building Dataset [41] and the Massachusetts Building Dataset [42]. Buildings in the WHU Building Dataset show little variation in type and have relatively regular shapes, so they are less challenging to extract. In contrast, the Massachusetts Building Dataset contains urban and suburban buildings with dense arrangements, diverse types, and varied shapes, which markedly increases the complexity of building extraction. Details of the two datasets are given in Table 1.

Table 1.

Detailed information of experimental data.

4.2. Implementation Details and Evaluation Metrics

The experiments were run on an NVIDIA GeForce RTX 3090 GPU using PyTorch 2.1.1. GSDMLNet was trained with a batch size of 30 for a maximum of 100 epochs. To accelerate training, the first half of the training epochs used frozen training with a learning rate of 0.0001, and the second half used unfrozen training with a learning rate of 0.00001; the Adam optimizer was used to update the network parameters. Seven metrics are used to assess GSDMLNet and the compared methods quantitatively: Precision, Recall, F1-Score (F1), Intersection over Union (IoU), Overall Accuracy (OA), the number of parameters (Params), and Frames Per Second (FPS).
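Under the standard pixel-wise definitions (an assumption, since the article does not spell them out), the five accuracy metrics follow from the confusion counts of a binary building mask, as in this sketch. The helper names `safe_div`, `f1_score`, and `building_metrics` are illustrative.

```python
import numpy as np

def safe_div(num, den):
    # Return 0.0 instead of dividing by zero (e.g. a tile with no buildings).
    return float(num) / float(den) if den else 0.0

def f1_score(precision, recall):
    # Harmonic mean of precision and recall (works for fractions or percentages).
    return safe_div(2 * precision * recall, precision + recall)

def building_metrics(pred, target):
    # pred, target: binary masks of the same shape, 1 = building, 0 = background.
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    tp = np.sum(pred & target)
    fp = np.sum(pred & ~target)
    fn = np.sum(~pred & target)
    tn = np.sum(~pred & ~target)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1_score(precision, recall),
        "iou": safe_div(tp, tp + fp + fn),
        "oa": safe_div(tp + tn, tp + tn + fp + fn),
    }
```

Because `f1_score` also accepts percentages, it offers a quick consistency check: `f1_score(97.89, 97.57)` is about 97.73 and `f1_score(85.89, 86.58)` is about 86.23, matching the F1 values reported for GSDMLNet in Section 4.3.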

4.3. Comparison and Analysis

To confirm the efficacy of the proposed GSDMLNet for extracting buildings from aerial images, we compared it with five other methods: PSPNet [23], Deeplabv3+ [43], HRNet [44], SegFormer [45], and BEARNet [46]. PSPNet employs a pyramid pooling module to aggregate contextual information from different regions, enhancing its capacity to capture global information. Deeplabv3+ uses spatial pyramid pooling with dilated (atrous) convolutions for multi-scale feature extraction, improving the precision of semantic segmentation boundaries. HRNet connects convolutions at different resolutions in parallel so that they exchange information, preserving reliable high-resolution representations throughout the network. SegFormer combines a hierarchical Transformer encoder with a lightweight multi-layer perceptron (MLP) decoder, yielding a simple and efficient design. Finally, BEARNet disentangles building bodies and boundaries, guiding the network to learn building boundary features independently and to optimize their targeted acquisition.

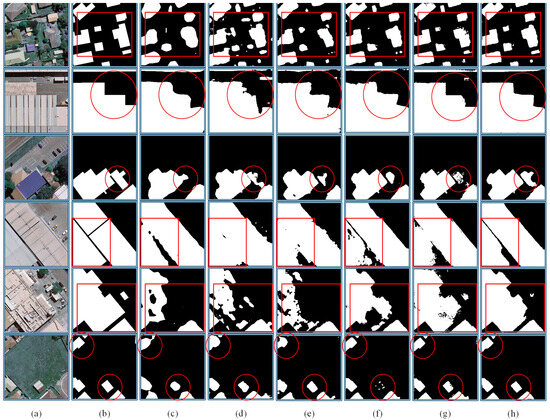

(1) Results on the WHU Building Dataset: Figure 5 shows the building extraction results of the different methods on the WHU Building Dataset. The dataset has a relatively low building density, and most buildings are regular rectangles, which simplifies building extraction. As Figure 5 shows, SegFormer, BEARNet, and GSDMLNet extract buildings with more complete boundaries, while PSPNet, Deeplabv3+, and HRNet extract building boundaries less accurately. Furthermore, when buildings in aerial images have colors similar to the ground, PSPNet, Deeplabv3+, HRNet, SegFormer, and BEARNet perform worse. Because GSDMLNet incorporates the geometric saliency of buildings as prior knowledge, it can still extract such buildings accurately by relying on their structural features. For large buildings, PSPNet, Deeplabv3+, HRNet, and SegFormer tend to leave holes, which BEARNet reduces by using the FAM. The FAM++ in GSDMLNet preserves building integrity and reduces holes in large buildings.

Figure 5.

The building extraction results of each method on WHU Building Dataset: (a) Original image; (b) Ground truth label; (c) PSPNet; (d) Deeplabv3+; (e) HRNet; (f) SegFormer; (g) BEARNet; and (h) GSDMLNet.

Table 2 presents the quantitative results of the different methods on the WHU Building Dataset. Because building extraction on this dataset is relatively easy, all methods perform well. GSDMLNet achieves the highest scores on all accuracy metrics, followed closely by BEARNet. The Precision, Recall, F1, IoU, and OA of GSDMLNet are 97.89%, 97.57%, 97.73%, 95.61%, and 98.83%, respectively, exceeding those of BEARNet, which also performs strongly overall, by 0.19%, 0.42%, 0.31%, 0.58%, and 0.16%, respectively. Furthermore, GSDMLNet achieves this performance with 13.33M fewer parameters than BEARNet.

Table 2.

Quantitative evaluation results of each method on the WHU Building Dataset and Massachusetts Building Dataset.

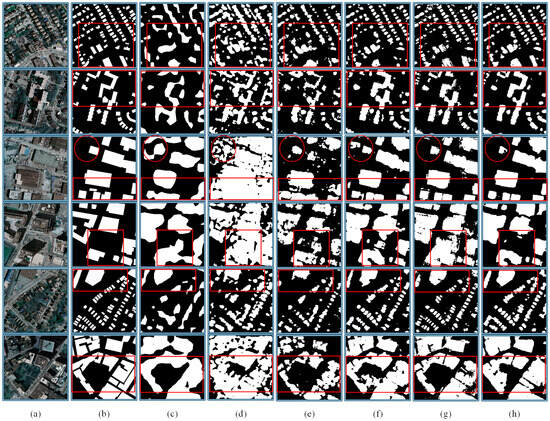

(2) Results on the Massachusetts Building Dataset: Figure 6 shows the building extraction results of the different methods on the Massachusetts Building Dataset. The dataset features densely distributed buildings with complex, diverse shapes and inconsistent scales, which together make building extraction substantially more challenging. The results of PSPNet, Deeplabv3+, and HRNet are unsatisfactory, while SegFormer, BEARNet, and GSDMLNet produce results close to the ground truth. Buildings extracted by PSPNet and Deeplabv3+ are often merged together, while HRNet produces fewer merged buildings but incomplete boundaries. For dense small buildings, BEARNet and GSDMLNet delineate boundaries more accurately than SegFormer. Shadows in aerial images also interfere with the extraction of building features to some extent; the BGAM of GSDMLNet lessens the effect of shadow occlusion, enabling the network to extract building features adaptively and thereby improving extraction precision.

Figure 6.

The building extraction results of each method on Massachusetts Building Dataset: (a) Original image; (b) Ground truth label; (c) PSPNet; (d) Deeplabv3+; (e) HRNet; (f) SegFormer; (g) BEARNet; and (h) GSDMLNet.

Table 2 also presents the quantitative results of the compared methods on the Massachusetts Building Dataset. Because this dataset is challenging, every method scores lower on it than on the other two datasets. GSDMLNet outperforms the other methods on all metrics, with Precision, Recall, F1, IoU, and OA of 85.89%, 86.58%, 86.23%, 77.34%, and 94.42%, respectively. Compared with the second-ranked BEARNet, GSDMLNet improves Precision, Recall, F1, IoU, and OA by 0.97%, 1.31%, 1.14%, 1.52%, and 0.43%, respectively. Compared with SegFormer, which also uses a Transformer backbone, GSDMLNet improves Precision, Recall, F1, IoU, and OA by 1.35%, 2.77%, 2.55%, 2.71%, and 0.71%, respectively, while adding only 2.37M parameters.
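F1 is the harmonic mean of Precision and Recall, so the reported F1 scores can be checked against the reported Precision and Recall. The short check below uses only the GSDMLNet values stated in this section. It reproduces both F1 scores to two decimal places.

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall (inputs and output in percent)."""
    return 2 * precision * recall / (precision + recall)


# (Precision, Recall, reported F1) of GSDMLNet, in percent, as given in the text.
reported = {
    "WHU": (97.89, 97.57, 97.73),
    "Massachusetts": (85.89, 86.58, 86.23),
}

for name, (p, r, f1_reported) in reported.items():
    f1 = f1_from_pr(p, r)
    print(f"{name}: F1 from P and R = {f1:.2f}% (reported {f1_reported:.2f}%)")
```

Both lines print matching values (97.73% and 86.23%). This is consistent with Precision, Recall, and F1 being computed at the same aggregation level on both datasets.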

4.4. Sensitivity Analysis of Key Parameters

This article improves the performance of GSDMLNet by supervising training with losses on both the final prediction map and the intermediate feature map. To examine how sensitive the multi-objective loss function is to the hyperparameter λ, experiments were conducted on the WHU Building Dataset and the Massachusetts Building Dataset, with λ varied from 0 to 2.0 in steps of 0.5. Table 3 compares these settings with a conventional network whose loss constrains only the final prediction map. Adding a loss constraint on the intermediate feature map improves building extraction accuracy on the tested datasets. With λ = 1.0, GSDMLNet achieves the highest F1, IoU, and OA on all tested datasets, which indicates that a loss constraint on the intermediate feature map effectively enhances the network’s performance.
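The λ sweep above can be pictured with a minimal sketch of a two-term objective, L = L_final + λ · L_inter. The sketch makes three assumptions. First, the total loss is assumed to take this weighted-sum form. Second, each term is assumed to be binary cross-entropy plus soft Dice. The paper defines its per-term losses elsewhere, and they may differ. Third, the intermediate feature map is assumed to have already been projected to building probabilities at label resolution. All function names are hypothetical. In this sketch, λ = 0 reduces to supervising the final prediction only.

```python
import math

EPS = 1e-7


def bce(prob, target):
    """Mean binary cross-entropy over flattened pixel lists."""
    total = 0.0
    for p, t in zip(prob, target):
        p = min(max(p, EPS), 1.0 - EPS)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(prob)


def soft_dice_loss(prob, target):
    """1 - soft Dice coefficient between predicted probabilities and labels."""
    inter = sum(p * t for p, t in zip(prob, target))
    denom = sum(prob) + sum(target)
    return 1.0 - (2.0 * inter + EPS) / (denom + EPS)


def seg_loss(prob, target):
    # Assumed per-map loss (BCE + soft Dice); not necessarily the paper's choice.
    return bce(prob, target) + soft_dice_loss(prob, target)


def multi_objective_loss(final_prob, inter_prob, target, lam=1.0):
    """Hypothetical total loss: L(final prediction) + lam * L(intermediate map)."""
    return seg_loss(final_prob, target) + lam * seg_loss(inter_prob, target)


# Sweep lam over the grid used in the sensitivity analysis (0 to 2.0, step 0.5).
target = [1, 1, 0, 0]
final_prob = [0.9, 0.8, 0.2, 0.1]
inter_prob = [0.7, 0.6, 0.4, 0.3]
for lam in (0.0, 0.5, 1.0, 1.5, 2.0):
    print(lam, round(multi_objective_loss(final_prob, inter_prob, target, lam), 4))
```

Here the loss value grows linearly with λ. The sensitivity analysis, however, measures accuracy after training with each λ, and that accuracy peaks at λ = 1.0 rather than increasing monotonically.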

Table 3.

Sensitivity analysis of λ on the WHU Building Dataset and Massachusetts Building Dataset.

4.5. Comparing with Microsoft’s Building Extraction Method

Between 2014 and 2024, Microsoft’s Bing Maps detected 1.4 billion buildings worldwide. Its building extraction process had two stages: first, a deep neural network (DNN) identified building pixels in aerial images; then, the building pixels were converted into polygons. This approach ensured regular and complete building outlines and has broad application potential. The method achieved a precision of 94.85%, a recall of 79.02%, and an IoU of 65.24% globally. However, because the contours are produced in two separate steps, errors made in the semantic segmentation stage are difficult to control and carry over into the polygons. In some regions, the extraction results contain overlapping buildings, incorrect shapes, and omissions. These problems most likely stem from significant segmentation errors, such as disconnections, missing segments, and misclassifications, which lead to chaotic geometric results. Our approach produces less regular building edges than Microsoft’s method, but its semantic segmentation alone is more stable, with higher recall and IoU.

5. Conclusions

Existing deep learning networks for building extraction from remote sensing imagery make little effective use of the prior knowledge contained in aerial images. To address this, this article proposes GSDMLNet for building extraction from aerial images. The network incorporates building geometric significance feature maps as prior knowledge. It further strengthens the learning of building features with the BGAM and FAM++ and is optimized with a multi-objective loss function, which effectively improves the accuracy and precision of building extraction. Introducing building geometric significance markedly improves the network’s targeted learning of building features, the BGAM improves extraction performance in target regions, and the FAM++ reduces the occurrence of holes inside buildings. GSDMLNet achieves the best scores on the evaluation metrics on all three datasets, which indicates that the method is versatile and generalizes well across different datasets. However, although the multi-objective loss function is beneficial, it may complicate training and make it difficult to balance the individual loss components.

In future research, we will focus on simplifying the multi-objective loss function to improve the robustness and flexibility of the method in various scenarios. In addition, building extraction may be incomplete or erroneous where buildings are occluded by shadows or vegetation. To address this, we plan to incorporate more prior knowledge about buildings, such as shadow features, to optimize the extraction process. Finally, the extracted building edges may be irregular, which makes them difficult to use directly. Microsoft’s building extraction method performs semantic segmentation followed by geometric transformation. Following this approach, we will explore using geometric transformation to further improve the regularity of the extraction results and make them easier to apply in practice.

Author Contributions

Conceptualization, M.H.; methodology, M.H. and H.L.; validation, W.L. and S.C.; visualization, H.L. and W.L.; project administration, H.Z. and N.Z.; writing—original draft preparation, M.H. and H.L.; writing—review and editing, M.H. and H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under grant number 42271368 and grant number U22A20569, and in part by the Fundamental Research Funds for the Central Universities under grant number 2021YCPY0113.

Data Availability Statement

Some or all data, models or code that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

Author Huijing Lin was employed by the company Nanjing Research Institute of Surveying, Mapping & Geotechnical Investigation, Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Jain, A.; Gue, I.H.; Jain, P. Research trends, themes, and insights on artificial neural networks for smart cities towards SDG-11. J. Clean. Prod. 2023, 412, 137300.

- Allam, Z.; Jones, D.S. Future (post-COVID) digital, smart and sustainable cities in the wake of 6G: Digital twins, immersive realities and new urban economies. Land Use Policy 2021, 101, 105201.

- Zhang, S.; Wang, C.; Li, J.; Sui, Y. MF-Dfnet: A deep learning method for pixel-wise classification of very high-resolution remote sensing images. Int. J. Remote Sens. 2022, 43, 330–348.

- Wei, S.; Zhang, T.; Ji, S.; Luo, M.; Gong, J. BuildMapper: A fully learnable framework for vectorized building contour extraction. ISPRS J. Photogramm. Remote Sens. 2023, 197, 87–104.

- Zhu, X.; Zhang, X.; Zhang, T.; Tang, X.; Chen, P.; Zhou, H.; Jiao, L. Semantics and contour based interactive learning network for building footprint extraction. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5623513.

- Hu, A.; Wu, L.; Chen, S.; Xu, Y.; Wang, H.; Xie, Z. Boundary shape-preserving model for building mapping from high-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5610217.

- Cui, S.; Yan, Q.; Reinartz, P. Complex building description and extraction based on Hough transformation and cycle detection. Remote Sens. Lett. 2012, 3, 151–159.

- El Merabet, Y.; Meurie, C.; Ruichek, Y.; Sbihi, A.; Touahni, R. Building Roof Segmentation from Aerial Images Using a Line- and Region-Based Watershed Segmentation Technique. Sensors 2015, 15, 3172–3203.

- Ok, A.O. Automated detection of buildings from single VHR multispectral images using shadow information and graph cuts. ISPRS J. Photogramm. Remote Sens. 2013, 86, 21–40.

- Izadi, M.; Saeedi, P. Three-dimensional polygonal building model estimation from single satellite images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 2254–2272.

- Liasis, G.; Stavrou, S. Satellite images analysis for shadow detection and building height estimation. ISPRS J. Photogramm. Remote Sens. 2016, 119, 437–450.

- Gao, X.; Wang, M.; Yang, Y.; Li, G. Building extraction from RGB VHR Images using shifted shadow algorithm. IEEE Access 2018, 6, 22034–22045.

- Zhou, G.; Sha, H. Building shadow detection on ghost images. Remote Sens. 2020, 12, 679.

- Lei, H.; Jin, Z.; Feng, G. A building extraction method using shadow in high resolution multispectral images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Vancouver, BC, Canada, 24–29 July 2011; pp. 1862–1865.

- Huang, X.; Zhang, L. A multidirectional and multiscale morphological index for automatic building extraction from multispectral GeoEye-1 imagery. Photogramm. Eng. Remote Sens. 2011, 77, 721–732.

- Ding, Z.; Wang, X.Q.; Li, Y.L.; Zhang, S.S. Study on building extraction from high-resolution images using MBI. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-3, 283–287.

- Zhang, C.; Hu, Y.; Cui, W. Semiautomatic right-angle building extraction from very high-resolution aerial images using graph cuts with star shape constraint and regularization. J. Appl. Remote Sens. 2018, 12, 26005.

- Ning, X.; Lin, X. An index based on joint density of corners and line segments for built-up area detection from high resolution satellite imagery. ISPRS Int. J. Geo-Inf. 2017, 6, 338.

- Karantzalos, K.; Paragios, N. Recognition-driven two-dimensional competing priors toward automatic and accurate building detection. IEEE Trans. Geosci. Remote Sens. 2009, 47, 133–144.

- Ahmadi, S.; Zoej, M.J.V.; Ebadi, H.; Moghaddam, H.A.; Mohammadzadeh, A. Automatic urban building boundary extraction from high resolution aerial images using an innovative model of active contours. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 150–157.

- Ywata, M.S.Y.; Dal Poz, A.P.; Shimabukuro, M.H.; de Oliveira, H.C. Snake-based model for automatic roof boundary extraction in the object space integrating a high-resolution aerial images stereo pair and 3D roof models. Remote Sens. 2021, 13, 1429.

- Shelhamer, E.; Long, J.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Volume 9351, pp. 234–241.

- Liu, Y.; Sun, Y.; Tao, S.; Wang, M.; Shen, Q.; Huang, J. Discovering potential illegal construction within building roofs from UAV images using semantic segmentation and object-based change detection. Photogramm. Eng. Remote Sens. 2021, 87, 263–271.

- Zheng, Z.; Wan, Y.; Zhang, Y.; Xiang, S.; Peng, D.; Zhang, B. CLNet: Cross-layer convolutional neural network for change detection in optical remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 175, 247–267.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A nested U-Net architecture for medical image segmentation. In International Workshop on Deep Learning in Medical Image Analysis (DLMIA); Springer: Cham, Switzerland, 2018; Volume 11045, pp. 3–11.

- Tong, Z.; Li, Y.; Li, Y.; Fan, K.; Si, Y.; He, L. New network based on Unet plus plus and Densenet for building extraction from high resolution satellite imagery. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), New York, NY, USA, 26 September–2 October 2020; pp. 2268–2271.

- Zhao, H.; Zhang, H.; Zheng, X. A multiscale attention-guided UNet plus plus with edge constraint for building extraction from high spatial resolution imagery. Appl. Sci. 2022, 12, 5960.

- Guo, H.; Su, X.; Wu, C.; Du, B.; Zhang, L. Decoupling semantic and edge representations for building footprint extraction from remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5613116.

- Yang, D.; Wang, B.; Li, W.; He, C. Exploring the user guidance for more accurate building segmentation from high-resolution remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2024, 126, 103609.

- Sun, Z.; Zhang, Z.; Chen, M.; Qian, Z.; Cao, M.; Wen, Y. Improving the Performance of Automated Rooftop Extraction through Geospatial Stratified and Optimized Sampling. Remote Sens. 2022, 14, 4961.

- Xue, N.; Xia, G.; Bai, X.; Zhang, L.; Shen, W. Anisotropic-scale junction detection and matching for indoor images. IEEE Trans. Image Process. 2018, 27, 78–91.

- Xia, G.; Huang, J.; Xue, N.; Lu, Q.; Zhu, X. GeoSay: A geometric saliency for extracting buildings in remote sensing images. Comput. Vis. Image Underst. 2019, 186, 37–47.

- Li, X.; You, A.; Zhu, Z.; Zhao, H.; Yang, M.; Yang, K.; Tan, S.; Tong, Y. Semantic flow for fast and accurate scene parsing. In Computer Vision—ECCV 2020; Springer: Cham, Switzerland, 2020; pp. 775–793.

- Milletari, F.; Navab, N.; Ahmadi, S. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the IEEE International Conference on 3D Vision (3DV), New York, NY, USA, 25–28 October 2016; pp. 565–571.

- Lin, T.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Ji, S.; Wei, S.; Lu, M. Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set. IEEE Trans. Geosci. Remote Sens. 2019, 57, 574–586.

- Mnih, V. Machine Learning for Aerial Image Labeling; University of Toronto: Toronto, ON, Canada, 2013.

- Du, S.; Du, S.; Liu, B.; Zhang, X. Incorporating DeepLabv3+ and object-based image analysis for semantic segmentation of very high resolution remote sensing images. Int. J. Digit. Earth 2021, 14, 357–378.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), 35th Conference on Neural Information Processing Systems (NeurIPS), Online, 6–14 December 2021; Volume 34.

- Lin, H.; Hao, M.; Luo, W.; Yu, H.; Zheng, N. BEARNet: A novel buildings edge-aware refined network for building extraction from high-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6005305.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).