Abstract

Using drones to obtain vital signs during mass-casualty incidents can be extremely helpful for first responders. Thanks to technological advancements, vital parameters can now be assessed remotely, rapidly, and robustly. This motivates the development of an automated unmanned aerial system (UAS) for patient triage that combines methods for the automated detection of respiration-related movements and the automatic classification of body movements and body poses with an already published algorithm for drone-based heart rate estimation. A novel UAS-based triage algorithm using UAS-assessed vital parameters is proposed, alongside robust UAS-based algorithms for respiratory rate assessment and pose classification. A pilot concept study involving 15 subjects and 30 vital sign measurements under outdoor conditions shows that our approach achieves an overall triage classification accuracy of 89% and an F1 score of 0.94, demonstrating its basic feasibility.

1. Introduction

Mass casualty incidents (MCIs), such as train crashes or major floods, require rapid response and triage to save as many lives as possible. Traditional triage methods rely on first responders to assess patients and categorize them according to the severity of their injuries. Because such incidents are rare, first responders lack routine; combined with higher-than-usual psychological and emotional stress, this often leads to incorrect triage category assignments, delaying treatment and potentially increasing mortality [1].

In recent years, unmanned aerial systems (UASs) have emerged as promising tools for emergency response and disaster management. UASs can cover long distances in a relatively short time and reach places that are hardly accessible to rescuers [2,3] while bypassing environmental hazards [4,5]. Furthermore, because they can be equipped with a variety of sensors, they can perform tasks such as search and rescue, surveillance, and the delivery of medical supplies.

To address the challenges of traditional triage methods in MCIs and to leverage the benefits of UASs without tying up existing personnel, we propose a novel automatic UAS triage system. In contrast to the UAS-based triage system proposed by Lu et al. [6], this UAS employs contactless vital sign assessment to provide triage category suggestions to emergency personnel, establishing a semi-automatic triage system based on aerial footage.

Current triage algorithms used by first responders in the field, such as Simple Triage and Rapid Treatment (STaRT) [7], usually rely on assessing essential signs, including ambulation, responsiveness, respiratory rate (RR) and breathing pattern, pulse, open injuries, and bleeding. In previous research examining the use of UASs for triage, a trained operator communicated with injured individuals via radio devices attached to a drone while observing them with a thermal camera [8]. Although this approach achieved a categorization accuracy similar to that of standard procedures, it permanently occupied both the pilot and the operator and was significantly slower than current approaches. In addition, vital parameters were not assessed, so they had to be ignored or roughly estimated. The Aerial Remote Triage System (ARTS) [9] is an alternative approach that aims to minimize the criteria necessary for triage assessment by using an integrated drone camera to capture visual data for analysis by human operators. However, this approach did not consider acquiring vital parameters remotely from the camera footage, even though several methods are currently available to assess vital signs remotely from a great distance [10].

Imaging photoplethysmography (iPPG), also called remote photoplethysmography (rPPG), has been proposed for the non-contact assessment of heart rate (HR) [11,12]. Similar to contact PPG, this technique uses a camera to measure changes in the light intensity reflected by the skin [13]. Our previous research demonstrated that this technology can be used on a UAS to assess HR for triage purposes [14].

Multiple approaches have been proposed for non-contact RR assessment [15]. They differ in the type of sensor used and can be categorized into approaches using thermal cameras, conventional cameras, depth cameras, laser vibrometers, or radars. Approaches based on visible-light or depth imaging detect a person's respiration-related movement by analyzing chest and shoulder movements over consecutive video frames using changes in pixel intensity [16], optical flow tracking [17], or Eulerian video magnification [18]. Another approach for visible-light cameras uses convolutional neural networks (CNNs) to generate data-derived filter kernels that detect respiratory motion [19], or other kinds of neural networks that estimate surrogates for the respiratory rate [20]. In contrast, thermal imaging measures respiration-correlated temperature changes, typically around the nostrils [21]. Laser vibrometers and radars can also operate independently of ambient lighting. Jing et al. presented a proof of concept for RR assessment via a drone-mounted radar [22]. However, this approach did not compensate for the strong system motion caused by wind and required a relatively short distance directly above a lying person, making it unsuitable for use in an MCI.

Camera-based methods face a similar challenge, as drone movement can introduce artifacts that degrade the accuracy required for triage. Consequently, previous approaches to RR assessment from drone footage either required a relatively short distance to the subjects [23], or even landing on them [24], or lacked wind compensation because they were tested only under optimal conditions [25]. It is therefore important to compensate for drone movement artifacts so that the results are accurate enough for triage.

During triage, it is crucial to evaluate a person's overall physical condition and mental state. When direct evaluation techniques are not available, however, the assessment must rely on auxiliary indicators. Moreover, for the proposed UAS-based triage system, this evaluation must be conducted with the available sensors, such as a brief video sequence captured by the drone's camera system. Assessing the ability to walk or move provides a good overall estimate, since a moving individual is unlikely to be unconscious or significantly impaired. Body position is an additional indicator, as lying individuals are more likely to be in distress than those sitting or standing. Our research group made an initial attempt to assess the mental and general state of individuals from an elevated position using available movement recognition techniques [26]. However, this approach needs to be extended to drone camera footage and to the automatic assessment of body poses.

Therefore, the main challenges in creating a semi-automated UAS triage system include developing a novel triage algorithm that uses contactless vital parameter detection and an MCI concept that incorporates the system. Moreover, existing algorithms for drone-based RR detection, which have been tested primarily under quasi-stationary conditions [23,24,25], must be modified to compensate for heavy UAS movements and ensure reliable detection. Additionally, entirely new procedures for assessing the physical and mental state of individuals must be developed.

Accordingly, this paper introduces an MCI concept that leverages UAS capabilities for semi-automatic triage. It presents a newly developed triage algorithm based on the UAS camera-based detection of vital parameters, including heart rate, respiratory rate, body position, and movement. It further presents an approach for the robust remote assessment of RR using digital image stabilization and signal denoising based on UAS sensor data, designed to cope with system movement in gusty weather and thus ensure robust data for triage. The paper also proposes a novel approach for automatic body pose classification and movement detection using machine-learning-based key point detection and a subsequent classification network. Finally, it demonstrates the feasibility of the proposed system in a pilot concept study involving 15 subjects and 30 vital sign measurements under outdoor conditions.

2. Materials and Methods

2.1. UAS Triage System

The UAS used for the proposed MCI concept and the experimental evaluation is a tilt-wing UAS that has already been presented in previous publications [14]. It was developed by the Institute of Flight System Dynamics, RWTH Aachen University (Aachen, Germany), and the company flyXdrive GmbH (Aachen, Germany). The UAS is based on the “Neo” tilt-wing UAS manufactured by flyXdrive GmbH, provided during the “FALKE” research project funded by the German Federal Ministry of Education and Research. This system operates efficiently in both forward and hover flight modes, enabling vertical takeoff and landing in nearly any location. It reaches a top speed of up to 130 km/h in fixed-wing flight and can hover for several minutes. The UAS was further adapted to carry gimbal-mounted sensors for the remote assessment of vital signs. Because wind gusts and the UAS's inherent movement, vibration, and shake cause dynamic changes in the camera's position relative to the target during recordings, an electromechanical gimbal is needed to compensate for these effects. The gimbal uses a motor-driven cardanic suspension to maintain a constant line of sight to the target. The UAS was additionally equipped with an NVIDIA Jetson Nano for on-board data processing.

2.1.1. MCI Concept

In our MCI concept, the UAS is launched directly from its station (Figure 1a). It then navigates autonomously to the specified incident location and searches for injured people. For this purpose, it uses an integrated trajectory planner developed within the “FALKE” project [27], which enables it to autonomously approach any previously known location within its range. The UAS does not depend on ground infrastructure and can even arrive at the operation area before the emergency personnel, saving crucial time thanks to its high speed in fixed-wing flight and its ability to bypass obstacles. Once at the incident location, the UAS searches for individuals who are immobile and therefore likely injured. After their positions have been determined, each injured person is approached in turn for the non-contact assessment of vital signs (Figure 1b). For this purpose, the UAS approaches the patient and hovers within recording distance for the duration of the assessment. It then approaches the next injured person (Figure 1c). Upon arrival of the emergency personnel, the collected data are processed and made available to the emergency services together with a preliminary triage category and the recorded video footage (Figure 1d). Based on this information, the emergency physician in charge can decide whether to follow the proposed triage or take different measures.

Figure 1.

Process of drone-based triage. The UAS is deployed together with the emergency personnel (a). The UAS arrives at the scene and starts assessing the first immobile person (b). After the first assessment, the UAS approaches the next immobile person while processing the previous data (c). Upon arrival of the emergency personnel, the already processed data are transmitted wirelessly (d).

2.1.2. UAS Triage Algorithm

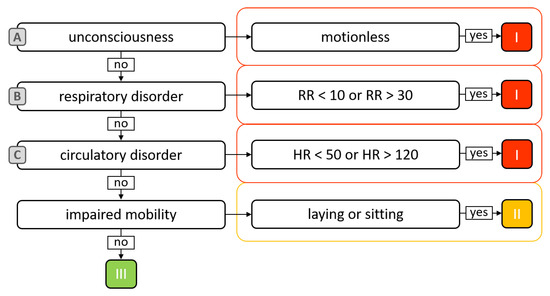

The algorithm for automated triage proposed in this paper, detailed in Figure 2, is based primarily on the two major MCI triage algorithms used in Germany: mSTaRT [28] and PRIOR [29]. These algorithms are designed to help emergency responders assess the urgency of the required treatment by classifying it into five distinct categories. The first three categories are of particular importance: category I signifies the need for immediate treatment with the highest priority, category II represents urgent treatment that can be deferred and is of medium priority, and category III denotes that no acute treatment is required and is of low priority. To achieve this categorization, both algorithms rely primarily on the ABCDE scheme, which covers airway (A), breathing (B), circulation (C), disability (D), and environment (E), and operationalize it by examining unconsciousness (A), respiratory disorder (B), circulatory disorder and bleeding (C), mental disorder (D), and impaired mobility.

Figure 2.

Schema for UAS-based triage algorithm. Illustrating the parameters and their ABCDE categories, along with the threshold values leading to the categorization into categories I, II, and III.

The goal of this work was to determine how the developed UAS could assess these characteristics using camera-based methods. Unconsciousness is covered by recognizing body movement, since a conscious person, especially one filmed by a UAS during an MCI, is likely to try to draw attention to themselves and is therefore not motionless. Respiratory disorders are assessed by remotely detecting the RR, and circulatory disorders by remotely assessing the HR. To assess impaired mobility, the person's pose is automatically evaluated and classified into one of three categories (lying, sitting, or standing), on the assumption that a person sitting or lying down during an MCI is likely to be immobile.
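The mapping from assessed parameters to a preliminary category can be sketched in Python as below. This is a hypothetical simplification for illustration, not the decision tree of Figure 2: the order of checks (A/B/C findings first, then mobility), the `TriageThresholds` fields, and the function name are our assumptions, and the numeric values in the usage example are placeholders rather than the published cut-offs.

```python
from dataclasses import dataclass


# Hypothetical threshold container: the actual cut-off values are those of
# Figure 2 and are NOT reproduced here; callers must supply their own.
@dataclass(frozen=True)
class TriageThresholds:
    rr_min: float  # breaths/min, lower bound of an acceptable RR
    rr_max: float  # breaths/min, upper bound of an acceptable RR
    hr_min: float  # beats/min, lower bound of an acceptable HR
    hr_max: float  # beats/min, upper bound of an acceptable HR


def suggest_category(moving: bool, pose: str, rr: float, hr: float,
                     th: TriageThresholds) -> str:
    """Return a preliminary triage category ("I", "II" or "III").

    Simplified ordering (assumption): A/B/C findings -> I,
    impaired mobility -> II, otherwise III.
    """
    if pose not in {"lying", "sitting", "standing"}:
        raise ValueError(f"unknown pose: {pose!r}")
    if not moving:                          # (A) suspected unconsciousness
        return "I"
    if not th.rr_min <= rr <= th.rr_max:    # (B) respiratory disorder
        return "I"
    if not th.hr_min <= hr <= th.hr_max:    # (C) circulatory disorder
        return "I"
    if pose in {"lying", "sitting"}:        # impaired mobility
        return "II"
    return "III"
```

For example, with placeholder thresholds `TriageThresholds(10, 30, 50, 120)`, a moving, standing person with RR 15 and HR 80 would be suggested category III, whereas the same person sitting would be suggested category II.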

2.2. Assessment of Body Pose and Movement

The assessment of body pose and movement relies on the machine-learning-based recognition of body key points (distinctive body landmarks such as the sternum, abdomen, and pelvic center, as well as significant body joints), followed by the classification of the body pose and the detection of limb movements. Enabling this assessment with image data from a UAS requires a multi-step approach comprising three major steps:

- (i) Data acquisition and image pre-processing: adjustments to the system's parameters prior to taking measurements and preparation of the captured video frames;
- (ii) Detection and tracking of body key points: temporal tracking of relevant body key points and joints;
- (iii) Classification of body pose and movement detection: final step of pose classification and movement detection to be used for triage.

2.2.1. Data Acquisition and Image Pre-Processing

Before the triage evaluation, the UAS camera must capture the video footage, which must then be processed. For this purpose, the individual video frames are captured one by one in raw RGB format together with their timestamps. Because the two-axis gimbal compensates only for the pitch and roll axes of the UAS, the captured images are not rotation-free relative to the target and require correction. This correction can be performed using the gimbal's sensor data, in particular the gimbal base vectors and their respective timestamps, as presented in [14]. It yields a new set of rotation-free frames for further processing.
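How the residual rotation angle is derived from the gimbal base vectors follows [14] and is not reproduced here. As a rough illustration of the final resampling step only, the NumPy sketch below undoes an in-plane rotation by inverse mapping with nearest-neighbour interpolation. It assumes the rotation angle is already known; the function name `derotate` and its sign convention are hypothetical and must be matched to how the angle is actually estimated.

```python
import numpy as np


def derotate(frame: np.ndarray, angle_rad: float) -> np.ndarray:
    """Resample `frame` so that an in-plane rotation is undone (sketch).

    Each output pixel (x, y) takes the value of the source pixel obtained by
    rotating (x, y) about the image center by `angle_rad` (image coordinates,
    y pointing down). Source positions outside the image yield zeros.
    Works for grayscale (H, W) and color (H, W, C) frames.
    """
    h, w = frame.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    # Inverse mapping: where does each output pixel come from in the input?
    src_x = c * (xs - cx) - s * (ys - cy) + cx
    src_y = s * (xs - cx) + c * (ys - cy) + cy
    src_xi = np.rint(src_x).astype(int)
    src_yi = np.rint(src_y).astype(int)
    valid = (src_xi >= 0) & (src_xi < w) & (src_yi >= 0) & (src_yi < h)
    out = np.zeros_like(frame)
    out[valid] = frame[src_yi[valid], src_xi[valid]]
    return out
```

Nearest-neighbour sampling keeps the sketch dependency-free; a practical pipeline would more likely use bilinear interpolation from an image-processing library.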

2.2.2. Detection and Tracking of Body Key Points

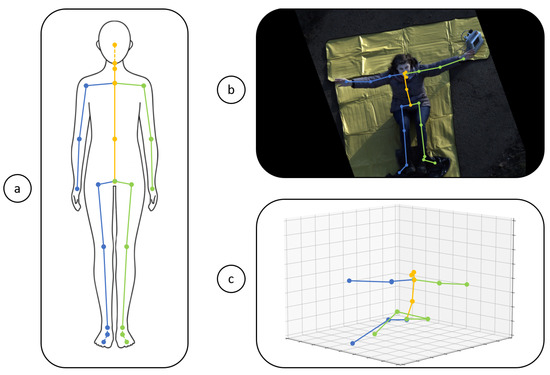

After capturing and pre-processing the video footage, the next step is to locate the body key points within each rotation-corrected frame. For this purpose, the Efficient Video Pose Estimation via Neural Architecture Search (ViPNAS) approach proposed by Xu et al. [30] was employed. This multi-stage body key point detection, based on the MMPose toolbox of the OpenMMLab project, extracts 133 two-dimensional body key points from a given image or image sequence using multiple chained neural networks. Of these 133 points, 22 key points at relevant body joints were selected for pose classification, as illustrated in Figure 3a.

Figure 3.

Three-dimensional body keypoint detection. The relevant body key points on the human body for the left side (green), right side (blue), and center (yellow) (a), the detected points in the captured 2D video frames (b), and the reconstructed 3D points in a normalized 3D space (c) are depicted.

Applying this approach to each frame individually, as exemplified in Figure 3b, generates a set of 2D body key points. In specific scenarios, mainly with individuals in upright or supine positions, these 2D key points can be ambiguous because their spatial arrangements and interrelations are nearly identical. However, owing to the inherent physiology of the human body, the arrangement of these key points is not arbitrary, and these anatomical constraints can be exploited to infer the corresponding 3D positions from the available 2D data. To transform the 2D key points into 3D key points, the image-based 3D lifter network provided by the MMPose toolbox was used, which is based on the work of Martinez et al. [31]. With this approach, a subset of the original 133 key points was lifted to 3D, yielding the corresponding 22 3D body key points, as exemplified in Figure 3c.

2.2.3. Classification of Body Pose and Movement Detection

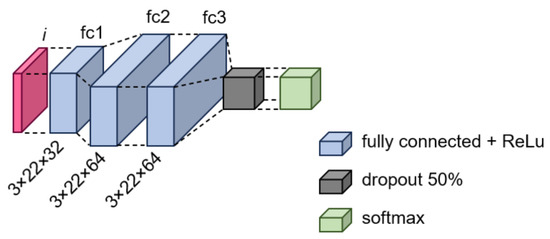

To classify the generated 3D body key points, a simple 2D fully connected neural network with rectified linear units (ReLUs) was trained on the classes lying (0), standing (1), and sitting (2). The specific architecture of this network is detailed in Figure 4.
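To show how 22 3D key points enter such a classifier, the NumPy sketch below implements the forward pass of a small fully connected ReLU network. The layer sizes in `LAYER_SIZES`, the random (untrained) weights, and the softmax output are our assumptions for illustration; the actual architecture is the one given in Figure 4.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer sizes (the real ones are shown in Figure 4):
# 22 key points x 3 coordinates in, 3 pose classes out.
LAYER_SIZES = [22 * 3, 64, 32, 3]
CLASSES = ("lying", "standing", "sitting")  # indices 0, 1, 2 as in the text


def init_params(sizes, rng):
    """Random, untrained weights with He-style scaling suited to ReLU."""
    return [(rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(keypoints_3d, params):
    """Map a (22, 3) key point array to class probabilities of shape (3,)."""
    h = np.asarray(keypoints_3d, dtype=float).reshape(-1)  # flatten to 66 features
    for i, (w, b) in enumerate(params):
        h = h @ w + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)   # ReLU on hidden layers only
    z = h - h.max()                  # numerically stable softmax
    p = np.exp(z)
    return p / p.sum()
```

`CLASSES[int(np.argmax(forward(points, params)))]` then gives the per-frame label; meaningful predictions of course require trained weights.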

Figure 4.

Neural network structure of the pose classification network.

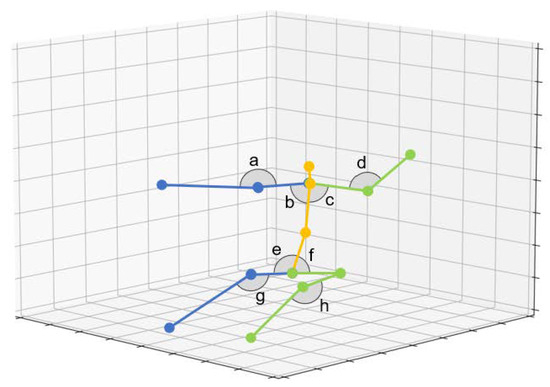

This network was then applied to the set of 3D body key points of each frame, yielding one classification per frame. The final classification for the whole recording was obtained as the median of these per-frame classifications. The generated 3D key points were also used for motion detection. To this end, eight 3D body joint angles were defined, as illustrated in Figure 5.
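The median aggregation over per-frame labels can be written in a few lines of Python. One detail the text leaves open is an even number of frames, where a plain median may fall between two class indices (e.g., 0.5); the sketch assumes `statistics.median_low` so that the result is always an observed label. The function name is hypothetical.

```python
import statistics


def aggregate_pose(per_frame_labels):
    """Combine per-frame class indices (0 lying, 1 standing, 2 sitting).

    Uses the low median so that the result is always one of the observed
    labels, even for an even number of frames.
    """
    if not per_frame_labels:
        raise ValueError("no classified frames")
    return statistics.median_low(per_frame_labels)
```

For example, `aggregate_pose([2, 2, 1, 2, 0])` returns 2 (sitting). Note that a median over class indices depends on their numeric ordering; a majority vote would be an order-independent alternative.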

Figure 5.

Body joint angles evaluated for motion detection. Angles are evaluated symmetrically for the left side (green) and right side (blue), as well as for the center (yellow).
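Each joint angle can be computed from three 3D key points: the joint itself and one neighbouring point on each adjacent segment (e.g., shoulder, elbow, and wrist for an elbow angle; the exact point triplets of Figure 5 are not listed here and are left to the caller). The NumPy sketch below is a minimal version under that assumption.

```python
import numpy as np


def joint_angle(a, b, c):
    """Angle (rad) at joint b between the segments b->a and b->c (3D points)."""
    u = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    v = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))  # clip guards rounding errors
```

Because the angle depends only on the relative geometry of the three points, it is unchanged by any rotation, translation, or uniform scaling of the lifted skeleton, which is the property exploited for robust movement detection.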

The angles between the joints offer a more robust approach than monitoring individual key points, because joint angles are invariant to perspective changes and more resilient to key point jitter. Movement is detected by analyzing the temporal changes of these angle values over all frames of the recording. Let $\theta_j(k)$, $j = 1, \dots, 8$, denote the $j$-th joint angle in frame $k$, and let $\sigma_j$ be the standard deviation of the time series $\theta_j(k)$.

If the standard deviation of any of these time series exceeds the threshold $t$, the person is classified as moving and conscious:

$$\text{moving} \iff \max_{j \in \{1, \dots, 8\}} \sigma_j > t.$$

A threshold of $t = 0.1$ rad, which corresponds to approximately 5.7°, was chosen for the final classification.
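The threshold rule can be sketched with NumPy as follows, assuming the joint angles are stored as an array with one row per frame and one column per angle. The text does not state whether the sample or population standard deviation is used; the sketch assumes NumPy's default population version (`ddof=0`). The function name is hypothetical.

```python
import numpy as np


def is_moving(angle_series, t=0.1):
    """Classify a recording as moving (and thus presumably conscious).

    angle_series: array of shape (n_frames, n_angles) with joint angles in rad.
    Returns True if the standard deviation over time of any angle exceeds t (rad).
    """
    a = np.asarray(angle_series, dtype=float)
    return bool(np.any(a.std(axis=0) > t))


# The threshold in degrees: 0.1 rad is about 5.73 degrees.
threshold_deg = np.degrees(0.1)
```

A single limb moving sinusoidally with an amplitude of 0.3 rad already yields a standard deviation of about 0.21 rad in that angle, well above the threshold.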

2.3. Assessment of RR

The RR was assessed by optically tracking specific key points in a person's chest area to capture respiration-related movements, allowing the respiratory curve to be reconstructed. A multi-step approach was necessary to obtain curves suitable for RR analysis. It was designed to mitigate the motion artifacts introduced by the flight system and by the individual's own movement, and it consists of five major steps:

- (i) Data acquisition and image pre-processing: system parameter adjustments made prior to conducting measurements and preparation of the captured video frames;
- (ii) Detection and tracking of chest region: temporal tracking of the relevant chest region;
- (iii) Detection and tracking of chest region key points: temporal tracking of relevant key points within the tracked chest region;
- (iv) Extraction of respiratory curves: extraction and refinement of the respiratory curves obtained through temporal key point tracking;
- (v) RR assessment: final step of assessing the RR for automated triage through spectral analysis.

2.3.1. Data Acquisition and Image Pre-Processing

To capture chest movements effectively, it is advantageous for the whole chest area to fill a larger part of the image frame. Therefore, a different camera optic with a higher zoom factor was used for assessing the RR. The initial image processing steps, however, are identical to those described in Section 2.2.1, resulting in a second set of rotation-free frames.

2.3.2. Detection and Tracking of Chest Region

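Within the rotation-corrected frames, the region containing only the chest area is then isolated and tracked across consecutive frames. First, analogous to the approach described in Section 2.2.2, the upper body's 2D key points are computed via ViPNAS for each frame. The chest region is then defined as the box spanned by the shoulder and hip points, resulting in one bounding box per frame $k$:

$$B_k = \left[\min_{i \in \mathcal{P}} x_{i,k},\ \min_{i \in \mathcal{P}} y_{i,k},\ \max_{i \in \mathcal{P}} x_{i,k},\ \max_{i \in \mathcal{P}} y_{i,k}\right],$$

where $(x_{i,k}, y_{i,k})$ are the image coordinates of key point $i$ in frame $k$ and $\mathcal{P}$ is the set of the left and right shoulder and hip key points.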

However, these bounding boxes are not yet suitable for examining respiration, as consecutive boxes may still contain perspective distortions relative to each other caused by uncompensated movements of the flight system. To mitigate these distortions without compromising the detection of respiration-related movements, an affine image registration was performed. Feature matching based on the scale-invariant feature transform (SIFT) [32] was carried out within the respective chest bounding boxes of consecutive frames to estimate the parameters of an affine transformation between them. This transformation was then applied to the frame and its bounding box. Applying this procedure iteratively to all frames and bounding boxes yields a new set of registered images and boxes. This approach is detailed in Algorithm 1.

Algorithm 1. Consecutive Affine Image Warping.
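The core of the registration, estimating an affine transform from matched feature positions and chaining consecutive transforms, can be sketched with NumPy as below. This is not a reconstruction of Algorithm 1: it assumes the SIFT matches between the chest boxes of frames k and k+1 are already available as point arrays (e.g., from OpenCV's SIFT detector and a matcher, not shown), fits the affine model by plain least squares instead of a robust estimator such as RANSAC, and assumes that all frames are mapped into the first frame's coordinates. All function names are hypothetical.

```python
import numpy as np


def estimate_affine(src_pts, dst_pts):
    """Least-squares 2x3 affine matrix A with dst ~ A @ [x, y, 1]^T.

    src_pts: (n, 2) feature positions in frame k+1; dst_pts: (n, 2) matched
    positions in frame k (n >= 3). A then maps frame k+1 onto frame k.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 3:
        raise ValueError("need at least 3 matched point pairs")
    X = np.hstack([src, np.ones((src.shape[0], 1))])   # (n, 3) homogeneous coordinates
    A_T, *_ = np.linalg.lstsq(X, dst, rcond=None)       # solves X @ A_T ~ dst
    return A_T.T                                         # (2, 3)


def to_3x3(A):
    """Promote a 2x3 affine matrix to a 3x3 homogeneous matrix."""
    return np.vstack([A, [0.0, 0.0, 1.0]])


def chain_to_first(pairwise):
    """Compose pairwise transforms (frame k+1 -> frame k) into transforms
    that map every frame into the coordinate system of the first frame."""
    T = np.eye(3)
    out = [T.copy()]
    for A in pairwise:
        T = T @ to_3x3(A)
        out.append(T.copy())
    return out
```

Chaining accumulates small registration errors over time; registering each frame directly to a reference frame is an alternative when enough features remain matchable.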

A potential challenge when applying this approach to real-world data is that, for some frames, no bounding box can be detected or no SIFT feature matches can be found. This is especially likely when strong system movements push the individual partially or entirely out of the camera's field of view. In this case, a continuous image series cannot be generated, and the registered images and boxes instead consist of separate connected segments. Therefore, only the longest continuous image sequence was selected for further analysis.
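Selecting the longest continuous sequence reduces to finding the longest run of valid frames. The sketch below assumes a per-frame boolean flag (True if a bounding box and sufficient feature matches were found); the function name is hypothetical.

```python
def longest_valid_run(valid):
    """Return (start, stop) of the longest run of True values (stop exclusive).

    valid[k] is True if frame k had a bounding box and enough feature matches.
    Returns (0, 0) if no frame is valid; ties keep the earliest run.
    """
    best = (0, 0)
    start = None
    for k, ok in enumerate(list(valid) + [False]):  # sentinel closes a trailing run
        if ok and start is None:
            start = k
        elif not ok and start is not None:
            if k - start > best[1] - best[0]:
                best = (start, k)
            start = None
    return best
```

The returned indices can be used directly as a slice, e.g., `frames[start:stop]`.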

2.3.3. Extraction of Respiratory Curves

To extract the respiratory curves, the respiration-related movements within the registered chest region must be captured. For this purpose, optical key point tracking based on the principles of optical flow was used. Initially, a regular grid of key points was generated across the area of the first bounding box, with a horizontal and vertical spacing of 40 pixels.

The x and y coordinates of these key points were then tracked across all frames using optical flow tracking, yielding a time series of 2D positions $\mathbf{p}_n(k)$ for each key point $n$ and frame $k$. To obtain a respiration signal from each key point's time series, the key points were orthogonally projected onto the body's longitudinal axis, which was determined for each frame as the best-fit axis through the body-center key points. With $\mathbf{c}(k)$ a point on this axis and $\mathbf{u}(k)$ its unit direction vector, the projected position is

$$s_n(k) = \left(\mathbf{p}_n(k) - \mathbf{c}(k)\right)^{\top} \mathbf{u}(k).$$

After projection onto this axis, the respiratory curves were measured as the deviation from the temporal mean position:

$$r_n(k) = s_n(k) - \frac{1}{K} \sum_{k'=1}^{K} s_n(k'),$$

where $K$ is the number of frames.
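The grid generation, axis fit, projection, and mean removal can be sketched with NumPy. The optical flow tracking itself is assumed to have been done elsewhere (for instance with a pyramidal Lucas–Kanade tracker), so the sketch takes the tracked positions as input. The best-fit axis is computed here by a principal-component (SVD) line fit, and its sign is fixed so that it points from the first to the last body-center point; both choices and all function names are our assumptions.

```python
import numpy as np


def make_grid(box, spacing=40):
    """Key points on a regular grid inside box = (x0, y0, x1, y1), in pixels."""
    x0, y0, x1, y1 = box
    xs = np.arange(x0, x1 + 1e-9, spacing)
    ys = np.arange(y0, y1 + 1e-9, spacing)
    gx, gy = np.meshgrid(xs, ys)
    return np.column_stack([gx.ravel(), gy.ravel()])   # (n_points, 2)


def body_axis(center_pts):
    """Best-fit line through body-center key points: (point on axis, unit direction)."""
    p = np.asarray(center_pts, dtype=float)
    c = p.mean(axis=0)
    _, _, vt = np.linalg.svd(p - c)     # first right singular vector = main direction
    d = vt[0]
    if np.dot(d, p[-1] - p[0]) < 0:     # fix SVD sign ambiguity between frames
        d = -d
    return c, d


def respiratory_curves(tracks, centers, directions):
    """tracks: (K, n, 2) tracked positions; centers, directions: (K, 2) per frame.

    Projects every key point onto its frame's body axis and removes the temporal mean.
    """
    tracks = np.asarray(tracks, dtype=float)
    rel = tracks - np.asarray(centers, dtype=float)[:, None, :]
    s = np.einsum("knd,kd->kn", rel, np.asarray(directions, dtype=float))
    return s - s.mean(axis=0, keepdims=True)   # (K, n)
```

Fixing the direction's sign matters in this sketch: an SVD may return either orientation of the axis, and a sign flip between frames would invert the projected signal.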

2.3.4. Respiratory Rate Assessment

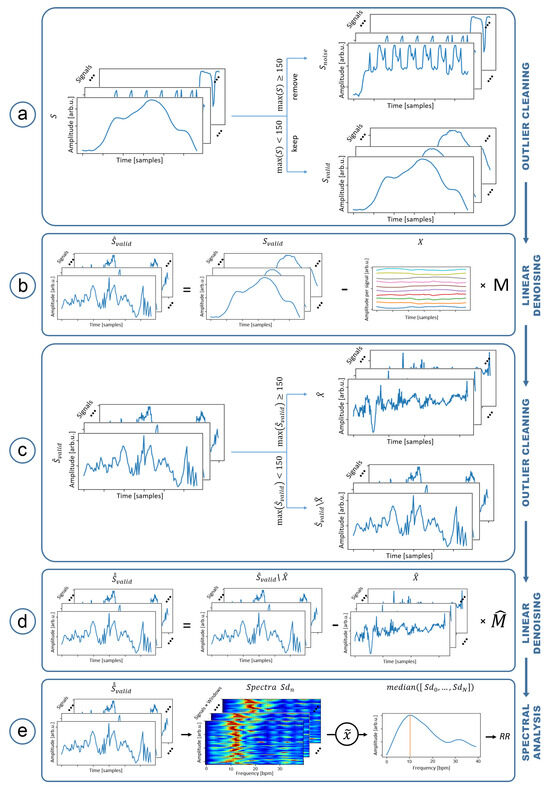

The complete process of RR assessment from the chest signals is shown in Figure 6. To determine a valid RR, the first step removes signals that are so heavily corrupted that they are unsuitable for RR calculation (Figure 6a).

Figure 6.

Overview of the RR estimation process: first signal separation (a); first denoising with GPS and accelerometer data (colored) (b); second signal separation (c); second denoising with the separated signals (d); and RR estimation by frequency analysis using power density spectra (red high, blue low) over sliding windows (e).

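For this purpose, the signals were separated based on their maximum amplitude into two categories: valid signals, whose maximum amplitude lies below a threshold, and signals exceeding this threshold, which are indicative of artifacts. In the second step (Figure 6b), linear denoising based on generalized linear modeling (GLM) was applied to the valid signals. To this end, the matrix of valid signals $\mathbf{Y}$ (one signal per column) was modeled as a composition of the signals from known noise sources $\mathbf{N}$, the noise mixing matrix $\mathbf{B}$, the actual respiration-related signals $\mathbf{S}$, and the residual noise $\mathbf{E}$:

$$\mathbf{Y} = \mathbf{N}\mathbf{B} + \mathbf{S} + \mathbf{E}.$$

The objective was to subtract the known noise signals from $\mathbf{Y}$ according to their contributions $\mathbf{B}$. To achieve this, the contributions were determined through a least-squares fit of the over-determined system, and the denoised signals were obtained by subtracting the fitted noise:

$$\hat{\mathbf{B}} = \arg\min_{\mathbf{B}} \left\lVert \mathbf{Y} - \mathbf{N}\mathbf{B} \right\rVert_F^2 = \left(\mathbf{N}^{\top}\mathbf{N}\right)^{-1}\mathbf{N}^{\top}\mathbf{Y}, \qquad \hat{\mathbf{S}} = \mathbf{Y} - \mathbf{N}\hat{\mathbf{B}}.$$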

With this type of denoising, it is crucial to use only known noise signals that are uncorrelated with respiration, so that respiration-related signals are not inadvertently removed.
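The amplitude-based separation and the least-squares denoising step can be sketched in NumPy as follows. It is a minimal sketch under our own assumptions: signals are stored column-wise in a matrix `Y` (T × n), the noise regressors (e.g., the gimbal-derived signals) column-wise in `N` (T × m), a column counts as valid when its peak absolute amplitude does not exceed a caller-chosen threshold `a_max` (the study's threshold value is not given here), and no intercept column is added because the chest signals are already zero-mean deviations.

```python
import numpy as np


def split_by_amplitude(Y, a_max):
    """Split the columns of Y (T x n) into (valid, artifact) by peak |amplitude|."""
    peak = np.max(np.abs(Y), axis=0)
    ok = peak <= a_max
    return Y[:, ok], Y[:, ~ok]


def glm_denoise(Y, N):
    """Remove the least-squares fit of the noise regressors N (T x m) from Y (T x n).

    Returns (S_hat, B_hat) with S_hat = Y - N @ B_hat.
    """
    B_hat, *_ = np.linalg.lstsq(N, Y, rcond=None)
    return Y - N @ B_hat, B_hat
```

By construction, the residual is orthogonal to every regressor column, which is exactly why a regressor that correlates with respiration would also remove part of the respiratory signal.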

In our approach, the primary sources of noise are the artifacts caused by movements of the flight system. Therefore, signals that correlate with these movements but are unrelated to respiration were required. For these signals, the data recorded by the camera gimbal during flight were used: specifically, the temporal deviations of the gimbal's three spatial base vectors, in addition to the calculated camera rotations.

After denoising, some signals that previously fell below the amplitude threshold may exceed it (Figure 6c). These signals were subsequently used in a second denoising pass (Figure 6d) together with the remaining signals, resulting in the final set of denoised respiratory signals.

Most existing RR estimation approaches focus on continuous patient monitoring and emphasize the detection of RR variations. In disaster medicine, however, the temporal variation of this parameter may be less important than an immediate RR assessment for patient triage. Our approach therefore focuses on obtaining a single reliable RR value. For the RR assessment, it was assumed that the RR changes only slightly over short time intervals, so it was considered constant within any chest region during the measurement. This assumption was used to compensate for short-term signal disruptions that the denoising process could not remove. All denoised signals were divided into overlapping segments of 100 samples using a sliding window with a stride of one sample. For each signal window, the power density spectrum was calculated and bandpass-limited to the range of 5 to 40 breaths/min (Figure 6e). The final RR was then determined as the frequency at which the median across all spectra reaches its maximum:

$$f_{\mathrm{RR}} = \arg\max_{f \in [5,\, 40]\ \mathrm{min}^{-1}} \ \operatorname*{median}_{n,w} P_{n,w}(f),$$

where $P_{n,w}(f)$ is the power density spectrum of window $w$ of signal $n$.
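The sliding-window spectral estimate can be sketched with NumPy's FFT. Several details are our assumptions rather than the study's: the sampling rate `fs` is not stated in this section and must be supplied; each window is mean-removed and multiplied by a Hann taper; windows are zero-padded to `nfft` points to obtain a finer frequency grid; and the 5 to 40 breaths/min band limit is applied by selecting spectral bins rather than by a separate filter. The function name is hypothetical.

```python
import numpy as np


def estimate_rr(signals, fs, win_len=100, stride=1, band_bpm=(5.0, 40.0), nfft=1024):
    """Single RR estimate (breaths/min) from denoised chest signals (sketch).

    signals: array (n_signals, n_samples); fs: sampling rate in Hz.
    Each signal is cut into windows of `win_len` samples with the given stride,
    the power spectrum of every window is restricted to the breathing band,
    the median is taken across all windows of all signals, and the frequency
    of its maximum is returned.
    """
    x = np.atleast_2d(np.asarray(signals, dtype=float))
    if x.shape[1] < win_len:
        raise ValueError("signals shorter than one window")
    freqs = np.fft.rfftfreq(nfft, d=1.0 / fs)                    # Hz
    band = (freqs >= band_bpm[0] / 60.0) & (freqs <= band_bpm[1] / 60.0)
    taper = np.hanning(win_len)                                    # leakage reduction
    spectra = []
    for sig in x:
        for start in range(0, sig.size - win_len + 1, stride):
            seg = sig[start:start + win_len]
            seg = (seg - seg.mean()) * taper
            power = np.abs(np.fft.rfft(seg, n=nfft)) ** 2          # zero-padded periodogram
            spectra.append(power[band])
    median_spectrum = np.median(np.vstack(spectra), axis=0)
    return 60.0 * freqs[band][np.argmax(median_spectrum)]
```

The window length interacts strongly with the sampling rate: 100 samples at 30 fps span only about 3.3 s, i.e., less than one breath at 15 breaths/min, and give a native resolution of 0.3 Hz (18 breaths/min), so the usable configuration depends on the actual frame rate of the RR recordings.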

2.4. Experimental Setup

The dataset from a previous study [14] was reused to evaluate the newly developed automated triage system. Whereas the earlier publication used it for HR assessment, it is used here to evaluate the RR assessment as well. Furthermore, the HR assessment results are re-evaluated with respect to our proposed approach to automated triage. Additionally, the first study was extended with whole-body recordings to evaluate pose detection, involving 15 of the 18 participants from the initial study and the same hardware in a different configuration.

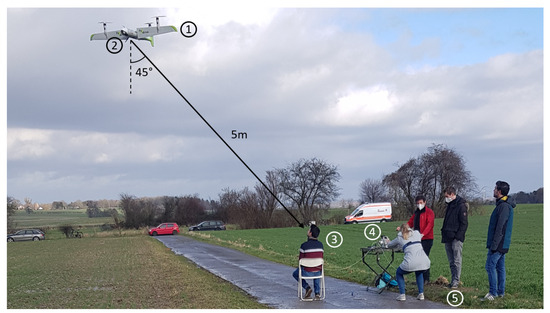

The overall setup of the previous study [14] is shown in Figure 7. The goal was to record volunteers from a distance of 5 m at a 45° horizontal angle. Because no live view was available, a fixed position for the volunteers was measured in advance, which the UAS targeted throughout the flight. Each volunteer sat at this position for two consecutive 30 s recordings. The volunteers were divided into two groups: Group One was asked to simulate resting conditions, and Group Two to simulate abnormal respiratory rates by breathing very slowly or very quickly.

Figure 7.

Previous experimental setup: UAS (1) equipped with gimbal and camera (2) hovering at a viewing distance of 5 m and a target engagement angle of 45° to a volunteer at a fixed position (3). The reference respiratory signal is recorded by a chest belt and stored on a computer (4). The rest of the respective subject group prepares for their recording (5).

2.4.1. Flight System Sensors

The Neo tilt-wing UAS was fitted with a two-axis gimbal to compensate for system motion during data recording. It could be controlled on the roll axis ( to ) and pitch axis ( to ) at an actuation speed of 400°/s. It was equipped with an Allied Vision Mako G-234C RGB camera made by Allied Vision Technologies GmbH (Stadtroda, Germany), which uses a Sony IMX249 CMOS sensor from Sony Group Corporation (Tokyo, Japan). This camera was chosen for its compact size of 28 cm, its light weight of 80 g, and its ability to capture raw 10-bit Bayer frames at up to 41.2 frames per second (fps) with a spatial resolution of up to 1936 × 1216 pixels. Furthermore, it uses a standard C-mount, allowing various optics to be attached to cover multiple distances.

2.4.2. Reference Telemetry

To obtain comparable reference values for the RR measurement, the volunteers were equipped with a respiratory chest belt (SleepSense PVDF Effort Sensor Kit (Adult), S.L.P. Scientific Laboratory Products Inc., Elgin, IL, USA), in addition to the Philips MP2 used for the HR reference. The respiratory curves were captured with an NI USB-6000 ADC (National Instruments, Austin, TX, USA) at a sampling rate of 30 kHz. The respiratory belt signals and HR reference data were stored on a mobile computer and time-synchronized with the UAS's onboard recording hardware via WiFi and NTP to obtain comparable references.

2.4.3. Study Protocol for Pose Detection

To experimentally assess the efficacy of the newly developed triage algorithm, a cohort of 18 subjects underwent UAS recordings, 15 of whom also took part in the whole-body recordings for pose detection. The subjects were recruited from among the FALKE project members. The experiments were carried out with healthy volunteers who provided written informed consent. Ethical approval for the study was granted by the ethics committee of the medical faculty at RWTH Aachen University in Germany (EK 022/22, protocol code CTC-A, #22-007, approved 2 June 2022). The volunteers included five females and ten males aged between 20 and 36 years, predominantly with light skin color.

The overall setup for evaluating pose and movement classification is illustrated in Figure 8. The objective was to capture whole-body footage of the volunteers from a horizontal viewing angle of 45°. Because a Kowa 50 mm 1” 5MP C-mount lens was used on the camera, the targeted distance to the subject was 12 m. Two 30 s recording sessions, denoted as phases I and II, were conducted for each subject. Subjects were instructed to adopt a predetermined pose and either perform body movements or remain still in each recording. A comprehensive overview of the participants and their corresponding modalities is provided in Table 1.
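Why a 50 mm lens pairs with a 12 m distance can be checked with the pinhole camera model. The sketch below assumes a pixel pitch of 5.86 µm for the IMX249 sensor (taken from Sony's sensor specification, not stated in this text) and measures the distance along the line of sight, so it holds near the image center and ignores the 45° tilt.

```python
def pinhole_footprint(distance_m, focal_mm, pixel_um, n_px):
    """Ground sampling distance (m/pixel) and extent (m) covered by n_px pixels."""
    gsd_m = distance_m * (pixel_um * 1e-6) / (focal_mm * 1e-3)   # metres per pixel
    return gsd_m, gsd_m * n_px


# 12 m distance, 50 mm lens, assumed 5.86 um pixels, 1936 x 1216 pixel sensor
gsd, width_m = pinhole_footprint(12.0, 50.0, 5.86, 1936)
_, height_m = pinhole_footprint(12.0, 50.0, 5.86, 1216)
```

Under these assumptions, one pixel covers about 1.4 mm at the subject, and the full frame spans roughly 2.72 m × 1.71 m, enough to keep a whole body in view despite several tens of centimeters of UAS displacement.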

Figure 8.

Experimental setup: A UAS (1) fitted with a gimbal-mounted camera (2) hovers at a viewing distance of 12 m and an engagement angle of 45° to the subject (3), who adopts poses and/or performs movements.

Table 1.

Subject modalities. Overview of the movement and body pose modalities for each subject and recording phase.

To prevent participants from getting cold and damp, particularly in lying or sitting positions, a thermally insulating underlay was used during the recordings.

3. Results

3.1. Recording Conditions

The recordings were made between 11 a.m. and 4 p.m. The outdoor conditions were demanding, with an average wind force of 6–7 on the Beaufort scale, equivalent to a wind speed of approximately 14 m/s, and strong wind gusts. Moreover, the weather alternated between sun, clouds, and light rain, resulting in continually changing lighting conditions. The temperature was approximately 7 °C.
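The stated wind speed can be cross-checked with the WMO empirical relation between Beaufort number B and mean wind speed, v ≈ 0.836 · B^(3/2) m/s (this relation is our addition and was not used in the study).

```python
def beaufort_to_ms(b):
    """Approximate mean wind speed (m/s) for Beaufort number b (WMO relation)."""
    return 0.836 * b ** 1.5


v6 = beaufort_to_ms(6)   # about 12.3 m/s
v7 = beaufort_to_ms(7)   # about 15.5 m/s
```

The mean of the two values, about 13.9 m/s, agrees with the approximately 14 m/s given above.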

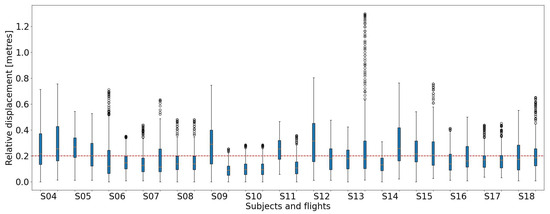

Owing to the demanding weather conditions, the UAS could barely maintain a fixed position. This caused significant system movement, shown as relative displacement in Figure 9.

Figure 9.

UAS relative displacement: Boxplot of the UAS displacement in m for every subject and flight.

In almost all flights, a typical displacement of about 10 to 30 cm was observed. In extreme cases, the UAS was pushed more than 1.2 m from its designated position. The average displacement across all flights was approximately 20 cm.
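The text does not state how displacement was derived from the flight logs. One plausible approach is sketched below. It assumes that displacement is the Euclidean distance between each logged position and the designated hover position in a local metric frame, summarized with the quartiles that a box plot shows. The function name and the position log are hypothetical.

```python
import math
import statistics


def displacement_summary(positions, setpoint):
    """Summarize the Euclidean distance (m) of logged UAS positions from their hover setpoint.

    positions: sequence of (x, y, z) tuples in a local metric frame (e.g. east, north, up).
    setpoint: the designated hover position in the same frame.
    """
    distances = [math.dist(p, setpoint) for p in positions]
    q1, median, q3 = statistics.quantiles(distances, n=4)  # box-plot quartiles
    return {
        "mean": statistics.fmean(distances),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(distances),
    }


# Hypothetical log of five position samples (metres), not data from the study.
log = [(0.1, 0.0, 0.0), (0.0, 0.2, 0.0), (0.3, 0.0, 0.0), (0.0, 0.0, 0.15), (1.25, 0.0, 0.0)]
summary = displacement_summary(log, setpoint=(0.0, 0.0, 0.0))
print(summary)
```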

3.2. Assessment of Body Pose

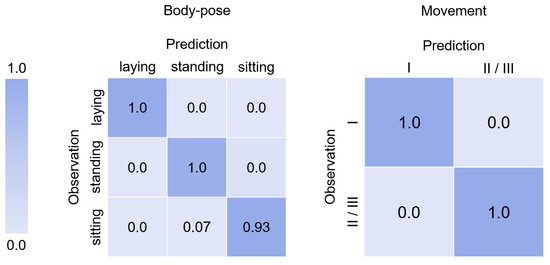

To evaluate the body-pose assessment, a body-pose dataset was first generated from valid frames of the aerial video footage, i.e., frames in which the entire body is visible. This dataset assigns one of the classes lying, standing, or sitting to each recognized set of body key points in individual frames of the video recordings, resulting in 11,255 keypoint–class pairs. The proposed neural network was then trained on this dataset, which was split into training and test sets following the leave-one-subject-out approach. The network was thus trained 15 times, each time on the data of 14 subjects, and evaluated on the remaining subject. The overall performance was determined as the average of the best training performances for each held-out subject, resulting in the cross-classification matrix shown in Figure 10 (left).
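The leave-one-subject-out split described above can be sketched as a generator that holds out all samples of one subject per fold. This guarantees that no subject contributes to both the training and the test set. The sample layout `(subject_id, keypoints, label)` and the toy data are assumptions for illustration, and the training and evaluation of the network are omitted.

```python
from collections import defaultdict


def leave_one_subject_out(samples):
    """Yield (held_out_subject, train, test) splits.

    samples: iterable of (subject_id, keypoints, label) tuples. Every sample of the
    held-out subject goes to the test set, so no subject appears in both sets.
    """
    by_subject = defaultdict(list)
    for sample in samples:
        by_subject[sample[0]].append(sample)
    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        train = [s for subject, group in by_subject.items() if subject != held_out for s in group]
        yield held_out, train, test


# Hypothetical toy data: 15 subjects x 2 samples with placeholder keypoint vectors.
toy = [(subj, [0.0] * 34, "standing") for subj in range(1, 16) for _ in range(2)]
folds = list(leave_one_subject_out(toy))
print(len(folds), "folds")
```

Each fold trains on 14 subjects and tests on one. Per-fold scores would then be averaged, as described in the text.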

Figure 10.

Cross-classification matrix for body-pose (left) and movement (right) assessment comparing the designated (observation) and assessed classification (prediction).

An accuracy of 100% was achieved in classifying individuals as lying or standing. A sitting subject was misclassified as standing only once, resulting in an accuracy of 93% for seated individuals. The normalized total classification accuracy was 97.6%, with an F1 score of 0.98.
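The text does not define "normalized accuracy" or specify how the F1 score is averaged. A common reading is the mean of per-class recalls (balanced accuracy) together with a macro-averaged F1, and the sketch below computes both from a count-based confusion matrix under that assumption. The counts are made up for illustration and are not the study's data.

```python
def normalized_accuracy_and_macro_f1(cm):
    """cm[i][j]: number of samples of true class i predicted as class j.

    Returns (mean per-class recall, macro-averaged F1).
    """
    k = len(cm)
    recalls, f1_scores = [], []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(k)) - tp
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        recalls.append(recall)
        f1_scores.append(f1)
    return sum(recalls) / k, sum(f1_scores) / k


# Hypothetical counts (rows/columns: lying, standing, sitting), not the study's data.
cm = [[10, 0, 0],
      [0, 6, 0],
      [0, 1, 13]]
acc, macro_f1 = normalized_accuracy_and_macro_f1(cm)
print(f"normalized accuracy {acc:.3f}, macro F1 {macro_f1:.3f}")
```

Averaging recalls per class keeps a frequent class from dominating the score. This matters when pose classes are unevenly represented.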

3.3. Assessment of Body Movement

The dataset used to assess body movement is derived from the dataset used for evaluating body-pose assessment. From the body-pose dataset, the longest contiguous sequence, wherein all body key points were observable, was selected for each subject and phase for evaluation. As shown in Figure 10 (right), our approach achieved perfect classification on this dataset, enabling a consistent differentiation between subjects in motion and those at rest.

3.4. Assessment of Respiratory Rate

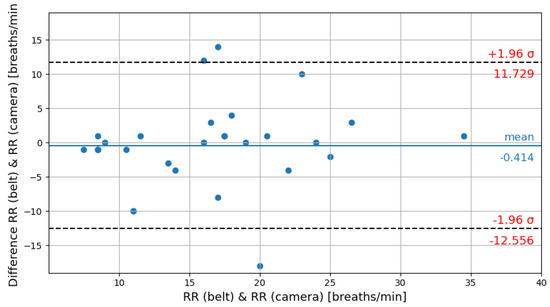

As described in [14], the subjects were instructed to simulate various physiological conditions that altered their RR. Accordingly, a dataset of upper-body videos and corresponding respiratory curves of 15 subjects for two phases was generated to evaluate the RR assessment. Figure 11 shows the overall performance of the proposed approach for all valid datasets as a Bland–Altman plot, which compares the RR determined by the UAS approach with the reference RR. The reference RR was derived from the acquired respiratory curves as the frequency of the spectral peak of the overall spectrum of each measurement. Most values fall within ±5 breaths/min and lie within the 95% limits of agreement of −12.556 to 11.729 breaths/min. The width of these limits is driven primarily by a few high outliers. The overall RMSE of the proposed approach was 6.10 breaths/min.
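The reference RR, "the frequency corresponding to the spectral peak of the overall spectrum," can be illustrated with a minimal sketch. It evaluates the DFT magnitude of the mean-removed respiratory curve on a fine frequency grid and returns the strongest frequency in cycles per minute. The search band of 0.1–1.0 Hz (6–60 breaths/min), the grid step, and the synthetic test signal are all assumptions. The authors' windowing and band limits are not stated.

```python
import math


def spectral_peak_rate(signal, fs, f_lo=0.1, f_hi=1.0, step=0.005):
    """Dominant rate (cycles/min) of `signal` sampled at `fs` Hz, searched within [f_lo, f_hi] Hz.

    The DFT magnitude is evaluated on a fine frequency grid (equivalent to a zero-padded
    periodogram) after removing the mean, so the DC component cannot dominate.
    """
    mean = sum(signal) / len(signal)
    x = [v - mean for v in signal]
    best_f, best_power = f_lo, -1.0
    for k in range(int(round((f_hi - f_lo) / step)) + 1):
        f = f_lo + k * step
        w = 2.0 * math.pi * f / fs
        re = sum(v * math.cos(w * i) for i, v in enumerate(x))
        im = sum(v * math.sin(w * i) for i, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return 60.0 * best_f


# Synthetic 30 s respiratory curve at 25 Hz: 0.25 Hz breathing plus a weaker harmonic.
fs = 25.0
t = [i / fs for i in range(int(30 * fs))]
curve = [math.sin(2 * math.pi * 0.25 * ti) + 0.3 * math.sin(2 * math.pi * 0.5 * ti) for ti in t]
print(f"reference RR ~ {spectral_peak_rate(curve, fs):.1f} breaths/min")
```

A 30 s window limits the native frequency resolution to about 1/30 Hz (2 breaths/min). The finer grid only interpolates between those bins and does not add information.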

Figure 11.

Bland–Altman plot of reference RR and camera-based RR assessment.
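The quantities in a Bland–Altman analysis and the RMSE are closely related. The bias is the mean of the differences, the 95% limits of agreement are bias ± 1.96 SD of the differences, and RMSE² = bias² + ((n − 1)/n)·SD², where SD is the sample standard deviation. The sketch below computes all three on hypothetical paired values, not the study's data.

```python
import math
import statistics


def bland_altman(estimates, references):
    """Bias, 95% limits of agreement (bias +/- 1.96 SD) and RMSE of paired rate measurements."""
    diffs = [e - r for e, r in zip(estimates, references)]
    bias = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    rmse = math.sqrt(statistics.fmean(d * d for d in diffs))
    return bias, bias - 1.96 * sd, bias + 1.96 * sd, rmse


# Hypothetical UAS estimates vs. reference RR (breaths/min), not the study's data.
uas = [14.0, 18.0, 22.0, 12.0, 30.0]
ref = [15.0, 16.0, 20.0, 13.0, 18.0]
bias, lower, upper, rmse = bland_altman(uas, ref)
print(f"bias {bias:.2f}, LoA [{lower:.2f}, {upper:.2f}], RMSE {rmse:.2f}")
```

In this toy example, the single outlier (30 vs. 18 breaths/min) both widens the limits of agreement and inflates the RMSE. This mirrors the effect of a few high outliers on both metrics.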

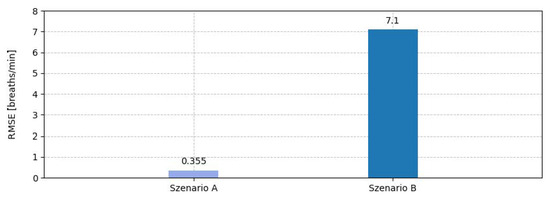

Al-Naji et al. [23] obtained their results at a considerably closer distance to the subjects and under quasi-stationary conditions. Compared with their work, the proposed system exhibits a notably higher RMSE: 7.1 breaths/min versus an average of 0.355 breaths/min (Figure 12).

Figure 12.

Comparison of RMSE performance: Comparison of a drone-based approach at a relatively close distance to the subject with minimal weather interference, as demonstrated by Al-Naji et al. [23] (Scenario A) versus the proposed system at greater distance with significant wind gusts (Scenario B).

3.5. Assessment of Heart Rate

The HR results have been published in [14] and can be reviewed there.

3.6. Triage Categorization

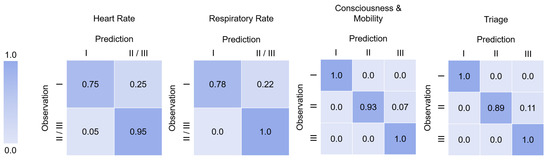

To evaluate the performance of the individual vital parameter assessments regarding their application for automated triage, each parameter was examined based on its direct impact on correctly altering the triage category according to the proposed schema. For HR and RR assessments, we specifically verified whether the evaluated values accurately led to the assignment of category I for our subjects. Regarding consciousness and mobility detection, it was verified whether participants were accurately classified into category II through body-pose detection and into category I through detection of immobility. The cross-classification matrices for the individual parameters are presented in Figure 13.
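How the individual parameters alter the triage category can be sketched as a small rule function. The precedence follows the text: immobility or a critical HR or RR leads to category I, and impaired mobility detected through body pose leads to category II. Several elements are hypothetical placeholders rather than the schema's actual values: the HR and RR cut-offs, the interpretation of "impaired mobility" as "not standing," and the default category III for all other cases.

```python
from dataclasses import dataclass

# HYPOTHETICAL placeholder cut-offs for illustration only; the thresholds of the
# proposed triage schema are not restated in this section.
HR_NORMAL = (40.0, 120.0)  # beats/min treated as non-critical
RR_NORMAL = (10.0, 30.0)   # breaths/min treated as non-critical


@dataclass
class UasObservation:
    hr: float      # UAS-assessed heart rate, beats/min
    rr: float      # UAS-assessed respiratory rate, breaths/min
    moving: bool   # output of the movement classifier
    pose: str      # output of the pose classifier: "lying", "sitting" or "standing"


def triage_category(obs: UasObservation) -> str:
    """Return 'I', 'II' or 'III' (assumed default) from the UAS-assessed parameters."""
    if not obs.moving:
        return "I"  # immobility -> category I
    hr_ok = HR_NORMAL[0] <= obs.hr <= HR_NORMAL[1]
    rr_ok = RR_NORMAL[0] <= obs.rr <= RR_NORMAL[1]
    if not (hr_ok and rr_ok):
        return "I"  # critical HR or RR -> category I
    if obs.pose != "standing":
        return "II"  # impaired mobility (assumed: not standing) -> category II
    return "III"  # ASSUMPTION: otherwise the default category is kept


print(triage_category(UasObservation(hr=80, rr=16, moving=True, pose="sitting")))
```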

Figure 13.

Overview of triage cross-classification matrices. The matrices depicting the individual impact of the vital parameters HR, RR, and consciousness and mobility on the proposed triage classification as well as the overall triage classification results.

For HR, a critical HR was correctly detected in 75% of individuals who had one, leading to a correct assignment to category I. For the remaining 25%, the critical HR was not detected, so their category remained unchanged. Conversely, a non-critical HR was correctly identified in 95% of participants who had one, and their category remained unchanged. For the remaining 5%, a critical HR was mistakenly identified, leading to an incorrect assignment to category I.

The results for RR were similar. In 78% of subjects with a critical RR, the critical RR was correctly detected, leading to their assignment to category I. In the remaining 22%, it was not detected, so these subjects were not assigned to category I. In contrast, all subjects with a normal RR were correctly identified, with no misclassifications. The approach thus achieves a normalized overall accuracy of 89% and an F1 score of 0.94.

Triage categorization based on consciousness and movement detection identified all motionless subjects and correctly assigned them to category I. Among subjects with impaired mobility, 93% were correctly assessed and assigned to category II. The remaining 7% were mistakenly identified as mobile and were not assigned to category II. All moving subjects without impairment were correctly identified, and their triage category remained unchanged. Combining the results of the individual parameters for all study subjects yields the overall cross-classification matrix depicted in Figure 13.
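The per-parameter figures above can be condensed into sensitivity, specificity, and normalized accuracy. The sketch below assumes that normalized accuracy is the mean of the diagonal of the row-normalized 2 × 2 matrix. Under that assumption, the reported HR rates (75%/95%) give 85%, the value cited in the Discussion, and the RR rates (78%/100%) give 89%.

```python
def rates_from_matrix(m):
    """m is a row-normalized 2x2 cross-classification matrix:
    m[0] = true critical -> [assessed critical, assessed non-critical]
    m[1] = true non-critical -> [assessed critical, assessed non-critical]
    Returns (sensitivity, specificity, normalized accuracy).
    """
    sensitivity = m[0][0] / (m[0][0] + m[0][1])
    specificity = m[1][1] / (m[1][0] + m[1][1])
    return sensitivity, specificity, (sensitivity + specificity) / 2


hr = rates_from_matrix([[0.75, 0.25], [0.05, 0.95]])
rr = rates_from_matrix([[0.78, 0.22], [0.00, 1.00]])
print(f"HR normalized accuracy {hr[2]:.2f}, RR normalized accuracy {rr[2]:.2f}")
```

In both cases specificity is at or near 100%, and the missed critical cases (lower sensitivity) account for most of the error.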

4. Discussion

Our study presents a preliminary investigation into the feasibility of semi-automatic triage using an automated UAS under outdoor conditions. We also evaluated the feasibility of automatically assessing vital signs in MCI scenarios and implementing an adapted triage scheme. The data were gathered from a small group of relatively easily observable subjects at fixed positions, so the findings are preliminary. They serve only as a foundation for future research and should not be considered conclusive. Further studies with a larger, more representative subject sample and a wider range of simulated conditions are necessary to validate the findings and draw conclusive inferences.

With its tilt-wing design and autonomous capabilities, our specialized flight system can be deployed simultaneously with the emergency services. Unlike conventional drones, it can cover longer distances and reach the scene ahead of emergency personnel. With its integrated recording equipment and its ability to evaluate various triage-related factors, it can provide a preliminary automated triage before emergency personnel arrive.

Despite challenging weather conditions, the developed tilt-wing UAS produced recordings suitable for automated triage vital parameter assessment. This holds particularly true for the first-of-its-kind classification of body pose and movement, which allowed for an almost perfect classification despite gusty winds during the flights. However, it should be noted that the provided thermally insulating underlay contributed to a higher contrast between individuals and the ground. In real-life scenarios, the achieved accuracy may not be easily reproducible.

The assessment of RR from a UAS has been shown to be generally feasible. However, the achieved RMSE of 7.1 breaths/min is much larger than in previous studies conducted under highly controlled conditions, e.g., the study by Janssen et al. [17], and the approach is not yet suitable for clinical monitoring. The RMSE is also much larger than the average of 0.355 breaths/min achieved by Al-Naji et al. [23]. The error thus remains substantial despite rigorous motion compensation, which highlights the considerable challenges of measuring RR under adverse weather conditions, particularly in gusty winds. However, the approach of Al-Naji et al. [23] lacks motion compensation and was tested only under quasi-stationary conditions, so it would likely yield considerably worse results under the conditions of our study. Nonetheless, our method proved suitable for RR assessment in the context of triage, even in gusty weather. With respect to the influence of RR on the triage algorithm, it achieved a correct classification rate of 89%. The cross-classification rates, however, reveal high specificity but insufficient sensitivity, which must be improved before operational use.

The same applies to the performance of our approach for HR assessment. Within the triage algorithm, it reaches a normalized accuracy of 85%, again with high specificity but room for improvement in sensitivity. In both HR and RR assessments, misclassifications are primarily caused by high outliers in the measurements. These outliers result from harmonic noise, most likely caused by system motion, and they inevitably impair the accuracy of triage classification. Therefore, in addition to using a more precise three-axis gimbal to reduce the impact of system motion, it is essential to develop a “goodness” metric that can identify such outliers and exclude them from the triage process.
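One candidate for such a "goodness" metric is a spectral signal-to-noise ratio: the power near the strongest in-band peak divided by the remaining in-band power. An estimate would be rejected when a competing component, such as a motion-induced harmonic, carries comparable power. The sketch below is a hypothetical illustration of that idea and is not the authors' method. The band, peak half-width, 3 dB threshold, and toy spectra are all assumptions.

```python
import math


def peak_snr_db(freqs, power, f_lo, f_hi, half_width):
    """Power near the strongest in-band peak relative to the remaining in-band power, in dB."""
    band = [(f, p) for f, p in zip(freqs, power) if f_lo <= f <= f_hi]
    if not band:
        raise ValueError("no spectral bins inside the band")
    f_peak = max(band, key=lambda fp: fp[1])[0]
    signal = sum(p for f, p in band if abs(f - f_peak) <= half_width)
    noise = sum(p for f, p in band if abs(f - f_peak) > half_width)
    return math.inf if noise == 0 else 10.0 * math.log10(signal / noise)


def is_trustworthy(freqs, power, f_lo, f_hi, half_width=0.02, min_snr_db=3.0):
    """HYPOTHETICAL gate: keep an estimate only if its spectral peak clearly dominates."""
    return peak_snr_db(freqs, power, f_lo, f_hi, half_width) >= min_snr_db


# Toy spectra on a 0.01 Hz grid from 0.1 to 1.0 Hz (not measured data).
freqs = [0.1 + 0.01 * i for i in range(91)]
clean = [1.0 if i == 15 else 0.001 for i in range(91)]  # single peak at 0.25 Hz
harmonic = [1.0 if i == 15 else 0.9 if i == 40 else 0.001 for i in range(91)]  # strong 0.5 Hz component
print(is_trustworthy(freqs, clean, 0.1, 1.0), is_trustworthy(freqs, harmonic, 0.1, 1.0))
```

Measurements that fail the gate could be flagged for repeated recording instead of being passed to the triage algorithm.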

The 93% overall accuracy achieved in the triage classification demonstrates the promising performance of our approach. It is considerably higher than the accuracies reported in studies that evaluated human first responders. For instance, Lee et al. [33] briefly trained first responders to apply the SALT algorithm, and their accuracy ranged from 57.8% to 87%, depending on the responders’ background. During an explicit MCI simulation in the same study [33], the highest accuracy achieved by paramedic first responders was 79.9%. Our results also exceed those of experienced first responders equipped with specialized medical equipment during a simulated MCI in a study by Follmann et al. [1], who achieved an accuracy of 58.0%.

Because it captures vital parameters, our approach is potentially more accurate in determining triage categories than the approach of Lu et al. [6], which relies solely on the classification of body positions. Furthermore, our method aligns more closely with established triage procedures, which could lead to higher acceptance by authorities and users.

However, the high overall accuracy of our approach depends strongly on the study subjects’ combination of HR, RR, and designated poses and movements. Based on the individual parameter performances, a worst-case accuracy of only 75% is theoretically possible when HR is the only critical parameter, which would still be comparable to human accuracy. Unfortunately, little to no data exist on the distribution of conditions and vital parameters in real MCIs, as documenting scientific data in such situations could cost lives. Our current UAS-based triage system is designed for 30 s recordings per person, twice as long as an experienced paramedic, who typically spends around 15 s [34]. However, a comparable or even shorter average triage time may be achievable depending on the distance and obstacles between two individuals, as a UAS can typically travel much faster.
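The trade-off between the longer recording time and the faster travel can be made explicit with a simple model. The time per casualty is taken as assessment time plus d / v, where d is the distance to the next casualty. Equating the UAS and responder times gives a break-even distance d* = (t_UAS − t_human) / (1/v_human − 1/v_UAS). The 30 s and 15 s values come from the text. The travel speeds of 15 m/s for the UAS and 1.5 m/s for the responder are hypothetical placeholders. The model also ignores obstacles, repositioning, and hover stabilization.

```python
def break_even_distance(t_uas_s, t_human_s, v_uas_ms, v_human_ms):
    """Distance between casualties (m) above which the UAS has the shorter per-casualty time.

    Per casualty: t = assessment time + d / travel speed. Setting both equal gives
    d* = (t_uas - t_human) / (1 / v_human - 1 / v_uas).
    """
    if v_uas_ms <= v_human_ms:
        raise ValueError("the UAS must travel faster than the responder for a break-even point")
    return (t_uas_s - t_human_s) / (1.0 / v_human_ms - 1.0 / v_uas_ms)


# 30 s and 15 s come from the text; both speeds are HYPOTHETICAL placeholders.
d_star = break_even_distance(30.0, 15.0, v_uas_ms=15.0, v_human_ms=1.5)
print(f"break-even distance ~ {d_star:.1f} m")
```

Under these placeholder speeds, the UAS would have the shorter per-casualty time whenever casualties are more than about 25 m apart.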

The proposed approach focused mainly on the technical capabilities of combining technologies and methods in conjunction with a UAS under outdoor conditions. To implement the approach in real-world scenarios, it is crucial to consider various factors that were intentionally omitted in our study but are anticipated to affect reliability in genuine MCIs. These include partially obscured individuals, diverse ground surfaces, and soiled clothing and faces. Nighttime triage scenarios were also not addressed. At night, capturing HR with cameras is likely infeasible, and detecting body pose and RR requires specialized night-vision or thermal imaging cameras. Additionally, the ability to differentiate between emergency responders and injured individuals at the scene is a crucial factor that demands further exploration in real applications. Furthermore, racial bias in the use of remote photoplethysmography for HR assessment remains an issue that must be considered in the ongoing development of a usable system. In future work, the number of triage-relevant parameters could also be increased by using semantic segmentation techniques to automatically detect bone fractures or bleeding. Moreover, reliance on wireless communication channels in real-world scenarios exposes the system to various vulnerabilities, including eavesdropping, jamming, and unauthorized access [35]. Given the sensitive nature of medical data, protecting it from unauthorized access is particularly crucial. Accordingly, implementing secure communication [36], similar to military applications, is essential to ensure data confidentiality and integrity.

5. Conclusions

Our study provides a preliminary assessment of the feasibility of using a UAS for semi-automatic triage under challenging outdoor conditions. It focuses specifically on the potential for automated vital sign assessment and triage in MCIs using an adapted triage scheme. While our findings offer promising insights, they are based on a small group of relatively easily observable subjects in controlled settings. These results therefore serve as a foundation for future research rather than conclusive evidence. Our tilt-wing UAS, with its autonomous capabilities and specialized design, captured triage-relevant data even under challenging weather conditions. Despite wind-induced system movement, it achieved accurate body-pose classification and an overall triage classification accuracy of 93%, exceeding the accuracy reported for human first responders in several comparative studies. However, real-world applicability remains to be validated, particularly regarding the motion compensation needed to improve HR and RR assessments. The study demonstrated the feasibility of robust RR assessment using the UAS in gusty wind, but the relatively high RMSE of 7.1 breaths/min shows that significant improvement is required for clinical-grade monitoring. The results indicate that UAS-based triage could be faster and potentially more accurate than traditional methods, but the high performance was influenced by controlled conditions. Future research should address more diverse and complex environments, including nighttime scenarios, partially obscured individuals, and varying ground surfaces. Additionally, ethical considerations such as racial bias in rPPG require further investigation to ensure equitable and reliable triage outcomes. In conclusion, this study highlights the technical viability of UAS-based automated triage but underscores the need for further testing under more realistic and diverse conditions.
By integrating additional capabilities, such as detecting fractures or bleeding through semantic segmentation, the UAS could become a more comprehensive tool for emergency response in the future.

Author Contributions

Conceptualization, L.M., D.Q.P., A.F. and M.C.; methodology, L.M.; software, L.M.; validation, L.M. and C.B.P.; formal analysis, L.M.; investigation, L.M., D.Q.P., I.B. and A.M.; resources, I.B., D.M. and M.C.; data curation, L.M.; writing—original draft preparation, L.M.; writing—review and editing, all authors; visualization, L.M.; supervision, A.F. and C.B.P.; project administration, I.B., A.M. and A.F.; funding acquisition, D.M., A.F. and M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Federal Ministry of Education and Research (BMBF), grant numbers 13N14772 and 13N14773, under the FALKE (Flugsystem-Assistierte Leitung Komplexer Einsatzlage) project.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the local ethics committee Klinisches Ethik Komitee (KEK) and the Center for Translational and Clinical Research (CTC-A), with ethical approval number EK 022/22 (protocol code CTC-A, #22-007, approved 2 June 2022).

Informed Consent Statement

Written informed consent was obtained from all subjects before they participated in the study.

Data Availability Statement

The dataset presented in this article is not fully available due to privacy concerns. The data cannot be anonymized because the participants’ faces must be visible, and not all subjects consented to their data being shared publicly. However, a minimal dataset from participants who consented to data sharing is available at: https://doi.org/10.5281/zenodo.12771420 (accessed on 16 October 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| UAS | unmanned aerial system |
| MCI | mass-casualty incident |
| HR | heart rate |
| RR | respiratory rate |
| rPPG | remote photoplethysmography |
| ROI | region of interest |
| GLM | generalized linear modelling |
| SNR | signal-to-noise ratio |
| RMSE | root-mean-square error |
| bpm | beats per minute/breaths per minute |

References

- Follmann, A.; Ohligs, M.; Hochhausen, N.; Beckers, S.K.; Rossaint, R.; Czaplik, M. Technical support by smart glasses during a mass casualty incident: A randomized controlled simulation trial on technically assisted triage and telemedical app use in disaster medicine. J. Med. Internet Res. 2019, 21, e11939.

- Roig, F.J.E. Drones at the service of emergency responders: Rather more than more toys. Emer. Rev. Soc. Esp. Med. Emerg. 2016, 28, 73–74.

- Konert, A.; Smereka, J.; Szarpak, L. The use of drones in emergency medicine: Practical and legal aspects. Emerg. Med. Int. 2019, 2019, 3589792.

- Abrahamsen, H.B. A remotely piloted aircraft system in major incident management: Concept and pilot, feasibility study. BMC Emerg. Med. 2015, 15, 12.

- Rosser, J.C., Jr.; Vignesh, V.; Terwilliger, B.A.; Parker, B.C. Surgical and medical applications of drones: A comprehensive review. J. Soc. Laparoendosc. Surg. 2018, 22, e2018.

- Lu, J.; Wang, X.; Chen, L.; Sun, X.; Li, R.; Zhong, W.; Fu, Y.; Yang, L.; Liu, W.; Han, W. Unmanned aerial vehicle based intelligent triage system in mass-casualty incidents using 5G and artificial intelligence. World J. Emerg. Med. 2023, 14, 273.

- Garner, A.; Lee, A.; Harrison, K.; Schultz, C.H. Comparative analysis of multiple-casualty incident triage algorithms. Ann. Emerg. Med. 2001, 38, 541–548.

- Jain, T.; Sibley, A.; Stryhn, H.; Hubloue, I. Comparison of unmanned aerial vehicle technology-assisted triage versus standard practice in triaging casualties by paramedic students in a mass-casualty incident scenario. Prehospital Disaster Med. 2018, 33, 375–380.

- Álvarez-García, C.; Cámara-Anguita, S.; López-Hens, J.M.; Granero-Moya, N.; López-Franco, M.D.; María-Comino-Sanz, I.; Sanz-Martos, S.; Pancorbo-Hidalgo, P.L. Development of the aerial remote triage system using drones in mass casualty scenarios: A survey of international experts. PLoS ONE 2021, 16, e0242947.

- Molinaro, N.; Schena, E.; Silvestri, S.; Bonotti, F.; Aguzzi, D.; Viola, E.; Buccolini, F.; Massaroni, C. Contactless vital signs monitoring from videos recorded with digital cameras: An overview. Front. Physiol. 2022, 13, 160.

- Takano, C.; Ohta, Y. Heart rate measurement based on a time-lapse image. Med. Eng. Phys. 2007, 29, 853–857.

- Verkruysse, W.; Svaasand, L.O.; Nelson, J.S. Remote plethysmographic imaging using ambient light. Opt. Express 2008, 16, 21434–21445.

- Allen, J. Photoplethysmography and its application in clinical physiological measurement. Physiol. Meas. 2007, 28, R1.

- Mösch, L.; Barz, I.; Müller, A.; Pereira, C.B.; Moormann, D.; Czaplik, M.; Follmann, A. For Heart Rate Assessments from Drone Footage in Disaster Scenarios. Bioengineering 2023, 10, 336.

- Massaroni, C.; Nicolo, A.; Sacchetti, M.; Schena, E. Contactless methods for measuring respiratory rate: A review. IEEE Sens. J. 2020, 21, 12821–12839.

- Massaroni, C.; Schena, E.; Silvestri, S.; Taffoni, F.; Merone, M. Measurement system based on RBG camera signal for contactless breathing pattern and respiratory rate monitoring. In Proceedings of the 2018 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rome, Italy, 11–13 June 2018; pp. 1–6.

- Janssen, R.; Wang, W.; Moço, A.; De Haan, G. Video-based respiration monitoring with automatic region of interest detection. Physiol. Meas. 2015, 37, 100.

- Wu, H.Y.; Rubinstein, M.; Shih, E.; Guttag, J.; Durand, F.; Freeman, W. Eulerian video magnification for revealing subtle changes in the world. ACM Trans. Graph. 2012, 31, 65.

- Pediaditis, M.; Farmaki, C.; Schiza, S.; Tzanakis, N.; Galanakis, E.; Sakkalis, V. Contactless respiratory rate estimation from video in a real-life clinical environment using eulerian magnification and 3D CNNs. In Proceedings of the 2022 IEEE International Conference on Imaging Systems and Techniques (IST), Virtual, 21–23 June 2022; pp. 1–6.

- Brieva, J.; Ponce, H.; Moya-Albor, E. Non-contact breathing rate estimation using machine learning with an optimized architecture. Mathematics 2023, 11, 645.

- Kwon, H.M.; Ikeda, K.; Kim, S.H.; Thiele, R.H. Non-contact thermography-based respiratory rate monitoring in a post-anesthetic care unit. J. Clin. Monit. Comput. 2021, 35, 1291–1297.

- Jing, Y.; Qi, F.; Yang, F.; Cao, Y.; Zhu, M.; Li, Z.; Lei, T.; Xia, J.; Wang, J.; Lu, G. Respiration detection of ground injured human target using UWB radar mounted on a hovering UAV. Drones 2022, 6, 235.

- Al-Naji, A.; Perera, A.G.; Chahl, J. Remote monitoring of cardiorespiratory signals from a hovering unmanned aerial vehicle. Biomed. Eng. Online 2017, 16, 101.

- Saitoh, T.; Takahashi, Y.; Minami, H.; Nakashima, Y.; Aramaki, S.; Mihara, Y.; Iwakura, T.; Odagiri, K.; Maekawa, Y.; Yoshino, A. Real-time breath recognition by movies from a small drone landing on victim’s bodies. Sci. Rep. 2021, 11, 5042.

- Al-Naji, A.; Perera, A.G.; Mohammed, S.L.; Chahl, J. Life signs detector using a drone in disaster zones. Remote Sens. 2019, 11, 2441.

- Queirós Pokee, D.; Barbosa Pereira, C.; Mösch, L.; Follmann, A.; Czaplik, M. Consciousness Detection on Injured Simulated Patients Using Manual and Automatic Classification via Visible and Infrared Imaging. Sensors 2021, 21, 8455.

- Barz, I.; Danielmeier, L.; Seitz, S.D.; Moormann, D. Kollisionsfreie Flugbahnen für Kippflügelflugzeuge auf Basis des Wavefront-Algorithmus unter Berücksichtigung Flugmechanischer Beschränkungen; Universitätsbibliothek der RWTH Aachen: Aachen, Germany, 2022.

- Kanz, K.G.; Hornburger, P.; Kay, M.; Mutschler, W.; Schäuble, W. mSTaRT-Algorithmus für Sichtung, Behandlung und Transport bei einem Massenanfall von Verletzten. Notf. Rettungsmed. 2006, 3, 264–270.

- Bubser, F.; Callies, A.; Schreiber, J.; Grüneisen, U. PRIOR: Vorsichtungssystem für Rettungsassistenten und Notfallsanitäter. Rettungsdienst 2014, 37, 730–734.

- Xu, L.; Guan, Y.; Jin, S.; Liu, W.; Qian, C.; Luo, P.; Ouyang, W.; Wang, X. Vipnas: Efficient video pose estimation via neural architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16072–16081.

- Martinez, J.; Hossain, R.; Romero, J.; Little, J.J. A simple yet effective baseline for 3d human pose estimation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2640–2649.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Lee, C.W.; McLeod, S.L.; Peddle, M.B. First responder accuracy using SALT after brief initial training. Prehospital Disaster Med. 2015, 30, 447–451.

- Follmann, A.; Ruhl, A.; Gösch, M.; Felzen, M.; Rossaint, R.; Czaplik, M. Augmented reality for guideline presentation in medicine: Randomized crossover simulation trial for technically assisted decision-making. JMIR Mhealth Uhealth 2021, 9, e17472.

- Krichen, M.; Adoni, W.Y.H.; Mihoub, A.; Alzahrani, M.Y.; Nahhal, T. Security challenges for drone communications: Possible threats, attacks and countermeasures. In Proceedings of the 2022 2nd International Conference of Smart Systems and Emerging Technologies (SMARTTECH), Riyadh, Saudi Arabia, 9–11 May 2022; pp. 184–189.

- Ko, Y.; Kim, J.; Duguma, D.G.; Astillo, P.V.; You, I.; Pau, G. Drone secure communication protocol for future sensitive applications in military zone. Sensors 2021, 21, 2057.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).