Abstract

This paper presents a drone system that uses an improved network topology and MultiAgent Reinforcement Learning (MARL) to enhance mission performance in Unmanned Aerial Vehicle (UAV) swarms across various scenarios. We propose a UAV swarm system that allows drones to efficiently perform tasks with limited information sharing and optimal action selection through our Efficient Self UAV Swarm Network (ESUSN) and reinforcement learning (RL). The system reduces communication delay by 53% and energy consumption by 63% compared with traditional MESH networks with five drones and achieves a 64% shorter delay and 78% lower energy consumption with ten drones. Compared with nonreinforcement learning-based systems, mission performance and collision prevention improved significantly, with the proposed system achieving zero collisions in scenarios involving up to ten drones. These results demonstrate that training drone swarms through MARL and optimized information sharing significantly increases mission efficiency and reliability, allowing for the simultaneous operation of multiple drones.

1. Introduction

In recent years, drone technology, initially developed for military purposes, has significantly expanded to various industrial sectors [1,2]. In agriculture, drones equipped with advanced imaging sensors and AI algorithms monitor crop health with precision, detecting early signs of nutrient deficiencies or water stress to optimize yields [3,4]. By integrating various sensors and working alongside other rescue equipment, drones enhance the effectiveness of disaster response efforts. Drones play a crucial role in search and rescue missions with their real-time mapping capabilities, quickly surveying large areas and creating topographic maps for efficient rescue operations. Surveillance drones provide commanders with real-time information and situational awareness, which is essential for strategic decision making. In military contexts, drones are indispensable for surveillance, reconnaissance, and combat [5]. Armed drones execute precision strikes with minimal collateral damage, enhancing operational efficiency and reducing personnel risk. Additionally, drones equipped with advanced sensors can detect and track hostile forces, enhancing military preparedness and response capabilities [6].

However, as drone operations continue to expand in scale, traditional control methods utilizing Ground Control Stations (GCSs) and remote control reveal significant limitations, particularly in scenarios involving large swarms of drones. These conventional methods are increasingly inadequate in terms of response time and operational efficiency [7]. The reliance on direct communication between an ever-growing number of drones amplifies the risks associated with delays, packet loss, and mission disruptions [8]. Furthermore, the task of programming multiple drones individually proves to be not only highly time-consuming but also inefficient. This inefficiency is starkly illustrated by incidents at drone shows, where numerous drones have collided due to errors in direct programming. Such events highlight the critical need for more advanced and reliable methods of managing and controlling large-scale drone operations to mitigate these risks and enhance overall performance.

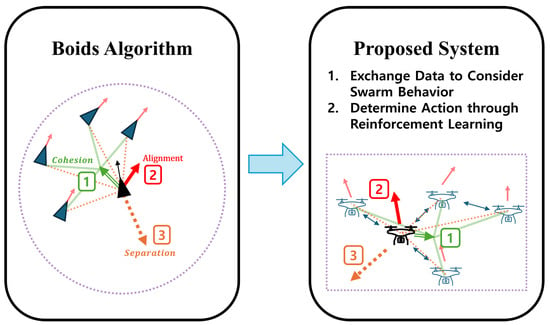

To address these challenges, we propose a swarming drone operation system inspired by the Boids algorithm, which simulates biological systems such as schools of fish and flocks of birds. The Boids algorithm reproduces the flocking behavior of birds from simple rules applied by each individual. Such natural systems exhibit swarm intelligence, in which simple local interactions among individuals result in sophisticated collective behaviors and efficient task execution. As shown in Figure 1, similar to the Boids algorithm, our system uses surrounding information to determine appropriate swarm behavior, and it trains each Unmanned Aerial Vehicle (UAV) to perform optimized behavior through the RL scheme we designed. Through this process, each UAV recognizes the situation required to achieve the goal of the entire UAV swarm and, at the same time, takes the optimal action for that situation. The proposed system leverages sensor data from each drone, along with information from nearby drones, to facilitate intelligent cooperation. Operating autonomously, each drone communicates and collaborates with others to achieve collective goals, functioning like a unified organism. This biomimetic approach allows the entire drone fleet to adapt dynamically to changing conditions, optimize task performance, and mitigate the risks associated with traditional control methods.

Figure 1.

The concept of the proposed system.
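For readers unfamiliar with Boids, the sketch below shows Reynolds' three classic steering rules (separation, alignment, cohesion) in Python. It only illustrates how purely local interactions produce flocking; it is not the learned policy of the proposed system, and the function name, neighborhood radius, weights, speed limit, and time step are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def boids_step(boids, radius=5.0, sep_dist=1.0,
               w_sep=1.5, w_ali=1.0, w_coh=1.0, dt=0.1, max_speed=2.0):
    """One synchronous update of Reynolds' three Boids rules (illustrative weights)."""
    new_boids = []
    for b in boids:
        # Only boids within the neighborhood radius influence this boid.
        neighbors = [o for o in boids if o is not b
                     and math.hypot(o.x - b.x, o.y - b.y) < radius]
        ax = ay = 0.0
        if neighbors:
            n = len(neighbors)
            # Cohesion: steer toward the neighbors' center of mass.
            cx = sum(o.x for o in neighbors) / n - b.x
            cy = sum(o.y for o in neighbors) / n - b.y
            # Alignment: steer toward the neighbors' average velocity.
            avx = sum(o.vx for o in neighbors) / n - b.vx
            avy = sum(o.vy for o in neighbors) / n - b.vy
            # Separation: steer away from neighbors that are too close.
            sx = sy = 0.0
            for o in neighbors:
                d = math.hypot(o.x - b.x, o.y - b.y)
                if 0 < d < sep_dist:
                    sx += (b.x - o.x) / d
                    sy += (b.y - o.y) / d
            ax = w_coh * cx + w_ali * avx + w_sep * sx
            ay = w_coh * cy + w_ali * avy + w_sep * sy
        vx, vy = b.vx + ax * dt, b.vy + ay * dt
        speed = math.hypot(vx, vy)
        if speed > max_speed:  # clamp speed so the flock stays stable
            vx, vy = vx * max_speed / speed, vy * max_speed / speed
        new_boids.append(Boid(b.x + vx * dt, b.y + vy * dt, vx, vy))
    return new_boids
```

A lone boid simply keeps its velocity, while two nearby boids at rest are pulled toward each other by the cohesion term; no boid ever consults a global plan.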

The core of our approach is to build a dynamically efficient network between drones, called the Efficient Self UAV Swarm Network (ESUSN), and to design a MultiAgent Reinforcement Learning (MARL) system that improves performance by providing agents with the information they need to select an action. ESUSN limits the number of connections each drone maintains while optimizing overall cooperation. Using the designed MARL system, each drone learns to select the optimal action based on information from surrounding drones and sensor values collected by its Single-Board Computer (SBC). This adaptive framework not only enhances network stability but also significantly improves the efficiency of critical tasks such as collision avoidance. By continually adapting to the environment and the state of neighboring drones, our system supports robust and efficient drone operations, thereby overcoming the limitations of traditional control methods and paving the way for more advanced and reliable drone management strategies.

Compared with existing technologies, our proposed system has several advantages, as listed below:

- Efficient and reliable network algorithms for UAV swarms. We propose a swarm drone operation system that leverages advanced network algorithms to build a dynamically efficient network called the ESUSN. This system limits the number of connections each drone maintains while optimizing overall cooperation to ensure robust and reliable drone operations.

- Optimal action selection through RL. We design a flexible MARL system that operates across various scenarios based on specific parameters. In this system, each drone within the swarm learns to select the optimal behavior using a limited set of inputs from surrounding drones and sensor values collected by the SBC. This adaptive framework reduces the need for complex networks and significantly enhances the efficiency of critical tasks such as reconnaissance and collision avoidance.

- Appropriate method of communication. In existing UAV swarms, various communication problems arise when drones exchange data, because the state of each drone is not taken into consideration and an inappropriate routing method is used. Our system improves network performance by considering each drone’s environment and configuring an appropriate communication system so that data are accurately delivered to the destination.

- Real-time adaptation. Each drone adapts in real time based on environmental changes and the status of neighboring drones. This dynamic adaptive ability maximizes mission performance efficiency and overcomes the limitations of traditional control methods.

The rest of this paper is organized as follows. Section 2 details the related research for autonomous drones, including existing drone-to-drone communication approaches and drone research related to RL. Section 3 describes the proposed UAV swarm, internal communication system, and the system architecture designed for swarm drone operations using MARL. Section 4 presents experimental evaluations verifying the effectiveness of the swarm drone system, while Section 5 provides concluding insights and outlines future research directions.

2. Related Work

This section discusses existing approaches related to our proposed system, providing a comprehensive overview of advancements in UAV swarm network planning and decision making using RL. We explore foundational research and recent developments that inform and contrast with our innovative methodologies.

2.1. Communication Networks and Protocols for UAV Swarm

A UAV swarm is typically defined as a collection of autonomous drones that interact based on local sensing and reactive behaviors, resulting in a global behavior emerging from these interactions [9,10]. This concept relies on decentralized control where each drone follows simple local rules without the need for a central command [11]. The emergent behavior of the swarm allows for the efficient execution of complex tasks such as area search, surveillance, and environmental monitoring. In the context of UAV swarm network planning, significant progress has been made in developing efficient communication networks for UAVs. Traditional approaches, such as Flying Ad Hoc Networks (FANETs) and Mobile Ad Hoc Networks (MANETs), have laid the groundwork for drone-to-drone communication [12,13].

MANETs provide a broader framework applicable to various mobile nodes, including UAVs [14]. While MANET protocols can be adapted for UAV swarms, significant modifications are often required to address the high-speed mobility and three-dimensional movement characteristics of drones [15]. Enhancements to traditional MANET routing protocols have been proposed to better suit UAV-specific needs, focusing on reducing latency and improving data packet delivery rates [16]. Additionally, the integration of MANETs with cognitive radio technologies has been explored to improve spectrum efficiency and communication reliability in UAV networks [17].

FANETs, in particular, are specialized for UAV communication, leveraging high mobility and dynamic topology to enable robust network connections [18]. The FANET structure allows nodes to dynamically join and leave the network, making drone swarms highly scalable [19]. Even if some nodes fail, the remaining nodes can reconfigure the network through a dynamic routing protocol, improving the robustness of the drone swarm [20]. Relaying between nodes reduces each node’s radiated power and lowers the likelihood of the swarm being detected, increasing survivability in battlefield environments.

Among the many routing protocols available for FANETs, AODV (Ad hoc On-demand Distance Vector) is commonly used due to its ability to dynamically establish routes only when needed. This reactive protocol is well suited for environments with high mobility, such as drone swarms, where network topology frequently changes. AODV minimizes routing overhead by discovering routes on-demand and maintaining only active routes, which is crucial for maintaining communication efficiency in highly dynamic UAV networks [21]. However, while AODV is effective in managing dynamic topology changes, it also has limitations, such as increased latency during route discovery and higher susceptibility to link failures during periods of rapid mobility.
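The on-demand route discovery that makes AODV attractive for FANETs can be sketched on a static snapshot of the topology: a route request (RREQ) is flooded hop by hop, each node remembers the neighbor it first heard it from, and a route reply (RREP) follows those reverse pointers back to the source. This is a simplified illustration, not an implementation of the AODV specification; the function name is hypothetical, and sequence numbers, intermediate-node replies, timeouts, and route-error handling are omitted.

```python
from collections import deque


def aodv_route_discovery(adjacency, source, destination):
    """Simplified AODV-style route discovery on one topology snapshot.

    adjacency: dict mapping node -> iterable of neighbor nodes.
    Returns (route or None, number of RREQ rebroadcasts).
    """
    reverse = {source: None}  # node -> neighbor the RREQ first arrived from
    queue = deque([source])
    rreq_count = 0  # RREQ transmissions, a rough proxy for routing overhead
    while queue:
        node = queue.popleft()
        if node == destination:
            break
        rreq_count += 1  # this node rebroadcasts the RREQ once
        for nbr in adjacency.get(node, ()):
            if nbr not in reverse:  # duplicate RREQs are discarded
                reverse[nbr] = node
                queue.append(nbr)
    if destination not in reverse:
        return None, rreq_count
    # The RREP travels back along the reverse pointers; reversing gives the forward route.
    route, node = [], destination
    while node is not None:
        route.append(node)
        node = reverse[node]
    return route[::-1], rreq_count
```

Because the flood must be repeated whenever a link on the active route breaks, the RREQ count hints at why discovery latency and overhead grow under rapid mobility.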

However, FANETs still face many challenges, and several prior studies have sought to solve them. Bekmezci et al. highlighted the unique challenges and solutions in FANETs, emphasizing the importance of adaptive routing protocols for maintaining network stability and performance in dynamic environments [22]. Subsequent research has approached these shortcomings from several directions. One line of work introduces Software-Defined Networking (SDN) and network virtualization into FANETs to address the major problems that arise when building a high-performance, three-dimensional, distributed heterogeneous network [23]. Another, AI-Hello, reduces network bandwidth waste and energy consumption by adjusting the transmission interval of the periodic “Hello” messages used to maintain routes [24].

Despite these advancements, both FANETs and MANETs face limitations in scalability and response times, particularly in large-scale UAV operations. Traditional networks often struggle with maintaining efficient communication as the number of drones increases, leading to issues such as network congestion and increased latency. Our proposed algorithm aims to address these issues by limiting the number of connections each drone maintains, optimizing overall cooperation, and ensuring reliable communication through an adaptive network structure. This approach not only enhances the scalability of the network but also improves its robustness against node failures and dynamic environmental changes.

2.2. Reinforcement Learning Algorithms for UAV Decision Making

RL is a methodology in which an agent interacts with the environment and learns optimal behavior to achieve a given goal. The agent tries different actions in the environment and improves its action strategy according to the rewards it receives from the results. This allows the agent to learn a policy that maximizes long-term rewards.
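Concretely, "long-term reward" is usually formalized as the discounted return $G_t = \sum_{k=0}^{T-t-1} \gamma^{k} r_{t+k}$, which the agent tries to maximize in expectation. The following Python sketch computes it backward over a finite episode; the function name is illustrative, and the example uses the discount factor of 0.8 reported for the PPO configuration in Section 3.3.

```python
def discounted_returns(rewards, gamma):
    """Return G_t = r_t + gamma * G_{t+1} for every step t of a finite episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backward so each G_t reuses the already-computed G_{t+1}.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns
```

With rewards [0, 0, 1] and γ = 0.8, the returns are approximately [0.64, 0.8, 1.0]: a reward two steps away is worth 0.64 of its face value now, so a smaller γ makes the agent more short-sighted.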

RL has emerged as a powerful tool for autonomous decision making in UAVs. Various RL algorithms have been explored to enhance the decision-making capabilities of drones, particularly in complex and dynamic environments. Deep Deterministic Policy Gradient (DDPG), Deep Q-Networks (DQN), Double Deep Q-Networks (DDQN), and Proximal Policy Optimization (PPO) are among the prominent RL algorithms applied to UAV operations [25,26].

DDPG extends the capabilities of RL algorithms by enabling continuous action spaces, which is crucial for UAV control tasks requiring fine-grained adjustments [27]. In precision agriculture, DDPG was applied to control drones performing delicate tasks like targeted pesticide spraying and precise watering, allowing for smooth and accurate maneuvers [28]. The application of DDPG in UAVs has shown promising results in tasks requiring continuous control, such as maintaining stable flight in turbulent conditions or performing intricate aerial maneuvers [29]. LSTM-DDPG-based flight control research is scarce, but ongoing studies are exploring its potential for drone control applications [30,31].

DQN has been widely adopted for its ability to handle high-dimensional input spaces through deep learning [32]. For example, in a study where UAVs were tasked with navigating through a complex urban environment, DQN was utilized to enable drones to learn optimal paths and avoid obstacles by processing visual input from onboard cameras [33]. However, DQN’s limitations, such as overestimation bias and instability during training, have led researchers to explore more advanced algorithms. An implementation for UAV path planning in urban environments demonstrated DQN’s potential but also highlighted the need for further optimization in real-time applications.

DDQN, an extension of DQN, mitigates overestimation issues by decoupling the action selection and action evaluation processes [34]. This improvement results in more accurate value estimates, enhancing the decision-making process in UAVs. In a UAV collision avoidance system, DDQN was implemented to help drones make split-second decisions to avoid obstacles and other drones in flight, significantly improving safety and operational efficiency [35]. Research has illustrated the benefits of DDQN in achieving more reliable performance in dynamic environments.
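The difference between the DQN and DDQN bootstrap targets fits in a few lines of Python. In DQN, the target network both selects and evaluates the next action through a max, which tends to overestimate values when the estimates are noisy; DDQN selects the action with the online network and evaluates it with the target network. The function names are illustrative, and Q-values are plain lists rather than network outputs.

```python
def dqn_target(reward, next_q_target, gamma, done):
    """DQN: the target network both selects and evaluates the next action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def ddqn_target(reward, next_q_online, next_q_target, gamma, done):
    """Double DQN: the online network selects the action, the target network evaluates it."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]
```

With online Q-values [1.0, 2.0], target Q-values [3.0, 0.5], γ = 0.9, and zero reward, the DQN target is 2.7 while the DDQN target is 0.45, because the action preferred by the online network is scored by the target network instead of taking the target network's own maximum.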

PPO addresses some of these limitations by providing a more stable and efficient training process [36]. In a scenario where UAVs needed to perform coordinated search and rescue missions, PPO was used to ensure smoother policy updates, enabling drones to dynamically adjust their search patterns based on real-time environmental feedback [37]. PPO enables stable and efficient learning while preventing excessive changes when updating policies. This is especially useful in complex environments where multiple drones work together and perform their respective roles simultaneously. Additionally, PPO is widely used in multiagent systems because it is relatively simple to implement and has excellent learning stability while maintaining high performance. PPO can also be used in multiagent systems to implement triangulation through RL [38].

Our proposed MARL system builds upon these foundational algorithms, incorporating adaptive learning mechanisms to optimize UAV behavior in diverse scenarios. By leveraging sensor data and interdrone communication, our system ensures real-time adaptation and robust decision making, addressing the limitations of traditional RL approaches and enhancing the overall efficiency of swarm drone operations. This integration of MARL with our ESUSN network architecture enables each drone to learn from both its environment and its peers, fostering a highly cooperative and efficient swarm system.

3. System Design and Algorithm

In this section, we first describe the overall structure of our system, then introduce the data exchange network algorithm and the MARL system, which improves performance by providing agents with the information they need to select an action.

3.1. System Overview

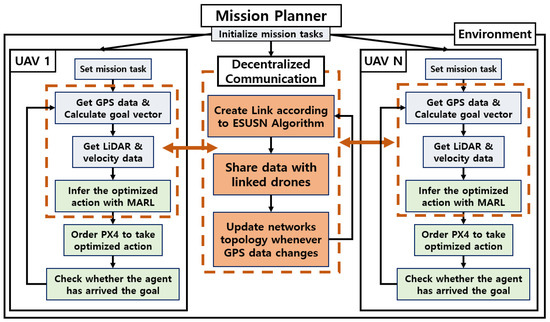

The proposed system comprises a swarm of UAVs, each equipped with an SBC to manage autonomous flight and interdrone communication. Each drone follows a systematic process to achieve its objectives and collaborate effectively within the swarm. The system components and their interactions are described below, and Figure 2 gives an overview of the proposed system.

Figure 2.

Overview of the proposed system.

The Mission Planner initializes and distributes tasks to each UAV within the swarm. Each UAV receives a specific mission from the Mission Planner and collects GPS data to calculate the goal vector. Additionally, each UAV gathers Light Detection And Ranging (LiDAR) data for obstacle detection and velocity data for movement adjustments. To enhance coordination, UAVs may also share other relevant data as needed. UAVs share the collected data (GPS, LiDAR, and velocity information) with linked drones to ensure synchronized operations and collective decision making. Using MARL policies, each UAV processes the shared data and infers optimized actions based on RL strategies. These policies allow the UAVs to learn from their environment and improve task execution over time. The inferred optimized actions are transmitted to the PX4 flight controller, which executes these commands to control the UAV’s motors and sensors, ensuring stable flight. Each UAV continuously checks if it has reached its assigned goal and adjusts its actions as necessary.

The method for configuring the network is decentralized rather than centralized. This ensures that communication does not overload a particular UAV, forming links that allow each drone to exchange the minimal necessary information for action selection. The proposed network topology algorithm, ESUSN, determines the links for sending and receiving data and updates the network whenever the location changes. The system architecture enables each UAV to autonomously navigate and share critical data with peers, enhancing situational awareness and collective decision making. The inclusion of MARL in each UAV facilitates continuous learning and optimization, allowing the swarm to adapt to dynamic environments and execute complex missions efficiently. The PX4 flight controller ensures high reliability and stability, enabling safe operation across various environments. The modular design supports scalability, allowing more UAVs to be added to the swarm without significant adjustments to the system.

3.2. Efficient Self UAV Swarm Network

Traditional methods for drone networks often encounter several issues, such as high latency, increased packet loss, and inefficient energy consumption. Communication between UAVs is challenging due to their frequent position changes and unstable wireless link status. Therefore, a network system tailored to the UAVs’ characteristics and specific mission requirements is necessary. Existing FANET approaches offer high flexibility but suffer from severe network congestion and increased energy consumption as the network scales, leading to performance degradation. To address these challenges, this paper proposes the ESUSN that considers the dynamic positions of UAVs and mission requirements to provide optimal network performance by minimizing packet loss and maximizing energy efficiency.

The ESUSN system is designed to provide an efficient and reliable network for UAVs. The system begins by randomly initializing the positions of drones within a defined two-dimensional coordinate system. Subsequently, the Euclidean distances between every pair of drones are calculated using Equation (1), forming the basis for constructing the Minimum Spanning Tree (MST). The MST ensures that all drones are connected with minimal total distance, optimizing the overall efficiency of the network. This foundational structure minimizes initial setup costs and forms a backbone for further optimization.

Beyond constructing the MST, the system ensures that each drone maintains at least two connections to its nearest neighbors. This redundancy enhances network robustness, allowing the system to tolerate node failures and dynamic changes in drone positions. The optimization process further refines the network to maintain these connections while ensuring that no drone exceeds its maximum link limit. This balanced approach helps achieve minimal packet loss and high energy efficiency, making the ESUSN an optimal solution for UAV networks.

Furthermore, the ESUSN system can dynamically adjust the network in real time to accommodate changes in drone positions and mission requirements. This flexibility is crucial for maintaining network stability and efficiency, enabling high performance across various mission environments. By adapting to the real-time conditions and demands, the ESUSN system ensures sustained optimal performance.

Energy efficiency is further enhanced through power-aware routing protocols that consider the remaining battery life of each drone when establishing and maintaining connections. By prioritizing routes that involve drones with higher energy reserves, the ESUSN extends the overall operational time of the network, making it suitable for long-duration missions. Moreover, the system can implement sleep modes for drones that are temporarily not needed for communication or sensing tasks, conserving energy and prolonging the network’s operational lifespan.
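The text does not specify the power-aware routing rule, so the sketch below shows only one possible interpretation: a widest-path search that picks the route whose weakest relay drone has the most remaining battery. It is a Dijkstra variant that pops the largest bottleneck value first; the function name, the battery map, and the choice to ignore the source drone's own battery are assumptions made for illustration.

```python
import heapq
import math


def max_battery_route(adjacency, battery, source, destination):
    """Widest-path routing: maximize the lowest battery level among relay drones.

    Edge (u, v) has width battery[u] (the drone that forwards the packet),
    except edges leaving the source, which are unconstrained.
    """
    best = {source: math.inf}  # best bottleneck width found so far per node
    prev = {source: None}
    heap = [(-math.inf, source)]  # negate widths so heapq pops the widest first
    done = set()
    while heap:
        neg_width, u = heapq.heappop(heap)
        if u in done:
            continue
        done.add(u)
        if u == destination:
            break
        width_u = -neg_width
        relay_width = math.inf if u == source else battery[u]
        for v in adjacency.get(u, ()):
            w = min(width_u, relay_width)
            if v not in done and w > best.get(v, -math.inf):
                best[v] = w
                prev[v] = u
                heapq.heappush(heap, (-w, v))
    if destination not in done:
        return None  # destination unreachable
    route, node = [], destination
    while node is not None:
        route.append(node)
        node = prev[node]
    return route[::-1]
```

If source S can reach destination D through relay A (battery 0.2) or relay B (battery 0.9), the search returns the route S, B, D, sparing the drone with the lowest energy reserve.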

The key processes used in ESUSN are as follows:

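- Euclidean Distance Calculation:

  $$d_{ij} = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2} \qquad (1)$$

  where $d_{ij}$ is the distance between drone $i$ and drone $j$, and $(x_i, y_i)$ and $(x_j, y_j)$ are the coordinates of drones $i$ and $j$, respectively.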

- Minimum Spanning Tree (MST) Calculation using GHS Algorithm: The MST is computed so that every drone in the swarm is connected. In our system, the MST is constructed using the GHS algorithm [39]:

  $$T = \mathrm{MST}(G)$$

  where $T$ is the MST and $G$ is the graph of drone distances. The algorithm involves the following steps:

  - (a) Each drone starts as an individual fragment.
  - (b) Each fragment identifies the smallest edge to another fragment and attempts to merge.
  - (c) Repeat the merging process until all drones are connected into a single MST.

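- Network Optimization: To ensure that all drones are connected while respecting the maximum number of links per drone:

  - (a) For each drone $i$, identify the two closest drones and add links so that $i$ has at most two links:

    $$L \leftarrow L \cup \{(i, j) : j \in \mathcal{N}_2(i)\}$$

    where $\mathcal{N}_2(i)$ is the set of the two drones $j \neq i$ with the smallest $d_{ij}$.
  - (b) Remove excess links if a drone has more than two connections, keeping only the two shortest links:

    $$\text{if } \deg_L(i) > 2:\ \text{keep the two links } (i, j) \in L \text{ with the smallest } d_{ij} \text{ and remove the rest}$$
  - (c) Include the MST to ensure all drones are connected:

    $$E = T \cup L$$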
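GHS is the distributed form of Borůvka's fragment-merging idea described in steps (a) to (c) of the MST calculation: every fragment picks its minimum-weight outgoing edge and fragments merge along those edges. The centralized Python sketch below reproduces that logic on known drone positions; it omits the message passing (fragment levels and test/accept/reject messages) that lets GHS run without a central node, and the function name is hypothetical.

```python
import math


def boruvka_mst(points):
    """Centralized Boruvka MST over drone positions (the fragment merging behind GHS).

    points: list of (x, y) coordinates. Returns a list of edges (i, j, distance).
    """
    n = len(points)
    parent = list(range(n))  # (a) union-find: each drone starts as its own fragment

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    mst = []
    num_fragments = n
    while num_fragments > 1:
        # (b) each fragment finds its minimum-weight outgoing edge
        cheapest = {}
        for i in range(n):
            for j in range(i + 1, n):
                fi, fj = find(i), find(j)
                if fi == fj:
                    continue
                d = math.dist(points[i], points[j])  # Equation (1)
                for f in (fi, fj):
                    # (d, i, j) gives a strict order, so equal lengths cannot form a cycle
                    if f not in cheapest or (d, i, j) < cheapest[f]:
                        cheapest[f] = (d, i, j)
        # (c) merge fragments along those edges; repeat until one fragment remains
        for d, i, j in cheapest.values():
            fi, fj = find(i), find(j)
            if fi != fj:
                parent[fi] = fj
                mst.append((i, j, d))
                num_fragments -= 1
    return mst
```

For four drones at x = 0, 1, 5, 6 on a line, the first round merges {0, 1} and {2, 3}, and the second round joins them with the edge of length 4, for a total MST length of 6.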

The process of the ESUSN algorithm is expressed in Algorithm 1. Firstly, the algorithm operates by continuously updating the positions of each drone and recalculating the Euclidean distances between every pair of drones to form a distance matrix. This matrix is used to construct a graph G where drones are vertices and the distances are edge weights. Each drone is initially treated as an individual fragment. The GHS algorithm is employed to construct the MST by identifying and merging the smallest edges between fragments until all fragments are connected. Subsequently, each drone identifies its two closest neighbors and establishes links with them. If any drone ends up with more than two connections, the longest link is removed to ensure optimal network topology. The final network combines the MST with these additional optimized links, and this process is continuously repeated to dynamically adjust the network in real time, maintaining robustness and efficiency.

Algorithm 1. Efficient Self UAV Swarm Network (ESUSN) using GHS Algorithm
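The update loop described above can be sketched centrally in Python: compute pairwise distances with Equation (1), build an MST backbone, link each drone to its two nearest neighbors, prune drones with more than two links by repeatedly dropping their longest link, and take the union with the MST. For brevity this sketch substitutes Prim's algorithm for the distributed GHS algorithm; the function name, the pruning order, and the `max_links` parameter are assumptions. Because the MST is added back last, a drone can still keep more than two links when connectivity requires it.

```python
import math


def esusn_topology(points, max_links=2):
    """Sketch of one ESUSN update: MST backbone plus nearest-neighbor links.

    points: list of (x, y) drone positions. Returns a set of undirected edges (i, j), i < j.
    """
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]  # Equation (1)

    # MST backbone (Prim's algorithm here, standing in for the distributed GHS).
    mst = set()
    if n:
        in_tree = [False] * n
        best = [math.inf] * n
        link = [-1] * n
        best[0] = 0.0
        for _ in range(n):
            u = min((k for k in range(n) if not in_tree[k]), key=lambda k: best[k])
            in_tree[u] = True
            if link[u] >= 0:
                mst.add((min(u, link[u]), max(u, link[u])))
            for v in range(n):
                if not in_tree[v] and dist[u][v] < best[v]:
                    best[v], link[v] = dist[u][v], u

    # (a) each drone links to its max_links nearest neighbors
    links = set()
    for i in range(n):
        nearest = sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])
        for j in nearest[:max_links]:
            links.add((min(i, j), max(i, j)))

    # (b) while some drone has too many links, drop its longest one
    changed = True
    while changed:
        changed = False
        for i in range(n):
            incident = [e for e in links if i in e]
            if len(incident) > max_links:
                links.remove(max(incident, key=lambda e: dist[e[0]][e[1]]))
                changed = True

    # (c) union with the MST so the swarm stays connected
    return mst | links
```

For five collinear drones at x = 0, 1, 2, 3, 10, pruning removes the redundant links and the result reduces to the chain MST; in operation, the function would be re-run whenever drone positions change.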

3.3. Collision Avoidance Using PPO

In our model design, rather than relying solely on environmental sensors for interaction, we focus on increasing the efficiency of collaboration by exchanging information between agents connected via ESUSN. This approach integrates the shared data directly into the model architecture, where it influences each agent’s choice of action and supports robust decision making. Our approach relies on several variables that are critical for effective decision making in multiagent systems.

These variables include the GPS coordinates of each agent, which provide the location data essential for navigation and coordination. Each agent’s velocity vector allows the model to account for dynamic changes in velocity, which is crucial for predicting agent motion and optimizing path planning. Direction vectors toward destinations guide agents toward their goals: by incorporating these directional signals into the decision-making process, our model ensures that agents are not only aware of their current location but also move efficiently toward their goals. Finally, the model uses 360-degree LiDAR measurements of the distances to nearby agents and obstacles. These measurements describe the spatial relationships between agents in the environment, allowing them to coordinate better and avoid collisions.

Our model is implemented with the PPO algorithm, which is known to handle complex environments with numerous interacting agents. PPO’s on-policy learning approach allows agents to continuously improve their decision-making strategies based on real-time data, optimizing their behavior as environmental conditions change. The clip epsilon parameter of PPO is set to 0.2 to prevent abrupt policy changes. The discount factor (gamma), which balances immediate rewards against future gains, is fixed at 0.8, and the parameter that adjusts action variability is set to 0.9. The PPO loss is calculated as in Equation (2), which allows the model’s performance to be evaluated and refined under varying conditions. This structured approach keeps our model adaptable and effective across diverse operational environments, promoting reliable decision making and collaboration among agents.
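Assuming Equation (2) follows the standard clipped surrogate objective of PPO (Schulman et al.), the quantity being optimized is

$$L^{\text{CLIP}}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta), 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right)\right], \qquad r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}$$

with $\epsilon = 0.2$. The Python sketch below computes the negative of this objective for a batch, given log-probabilities and precomputed advantages $\hat{A}_t$. Value-function and entropy terms that a full implementation may add are omitted, and the function name is illustrative.

```python
import math


def ppo_clip_loss(new_log_probs, old_log_probs, advantages, clip_epsilon=0.2):
    """Negative clipped surrogate objective of PPO, averaged over a batch."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio r_t = pi_theta(a|s) / pi_theta_old(a|s), from log-probabilities.
        ratio = math.exp(new_lp - old_lp)
        clipped = min(max(ratio, 1.0 - clip_epsilon), 1.0 + clip_epsilon)
        # Pessimistic bound: take the smaller of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return -total / len(advantages)  # minimizing this maximizes the surrogate
```

With a probability ratio of 1.5 and an advantage of 1, the clip caps the contribution at 1.2 (loss −1.2), so the policy gains nothing from moving further; with an advantage of −1, the unclipped term −1.5 is kept, so raising the probability of a bad action is penalized in full.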

In MARL, various reward functions are designed to evaluate the performance of each agent. These reward functions guide agents to achieve their assigned goals, prevent collisions, and maintain appropriate spacing between agents. Such formulations play a crucial role in optimizing agents to form a cohesive group, understand one another, and cooperate effectively. In contrast, traditional RL systems focus on a single agent learning optimal behavior in a given environment, but this approach has limitations in complex multiagent environments. Single-agent systems often struggle to respond appropriately to dynamic changes in the environment and the behavior of other agents, leading to potential collisions or inefficient actions. Furthermore, these systems typically lack interagent cooperation, which limits the overall performance of the system.

On the other hand, MARL is designed so that each agent acts independently while cooperating to achieve common goals. This design enables agents to interact and share information within the environment more effectively, thereby enhancing the overall system performance. The reward functions in MARL facilitate this cooperation, allowing agents to learn optimal actions individually while maintaining harmony and efficiency within the entire swarm. Particularly, in MARL, cooperation among agents is essential, as it allows each agent to adjust its behavior and derive better outcomes through interaction with other agents in the environment. This highlights the importance of coordination and collaboration among agents, which is often overlooked in traditional reinforcement learning systems, and plays a crucial role in maximizing performance in multiagent environments.

In our proposed model, we define the components of the state as follows, and concatenate all these components to utilize them as the state:

- Current UAV Information: The position and velocity of the current UAV, and the distance to the target.

- Sensor Value: Values output by the LiDAR sensor attached to the UAV.

- Colleague Information: Information of the UAVs connected through ESUSN, including their positions, velocities, and distances to their targets.
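The state construction can be sketched as a flat concatenation in Python. The dictionary layout, the five features per agent (position, velocity, distance to target), the fixed number of colleague slots, and zero-padding for missing colleagues are assumptions; a fixed-length vector is convenient because a standard policy network expects a constant input size, while the number of ESUSN neighbors can vary.

```python
import math


def build_state(own, lidar, colleagues, max_colleagues=2):
    """Concatenate own-UAV info, LiDAR ranges, and ESUSN-neighbor info into one flat state.

    own and each colleague: dict with 'pos' (x, y), 'vel' (vx, vy), 'target' (x, y).
    Missing colleague slots are zero-padded; extra colleagues are ignored.
    """
    def agent_features(a):
        dist_to_target = math.dist(a["pos"], a["target"])
        return [*a["pos"], *a["vel"], dist_to_target]

    state = agent_features(own) + list(lidar)
    for k in range(max_colleagues):
        state += agent_features(colleagues[k]) if k < len(colleagues) else [0.0] * 5
    return state
```

With 8 LiDAR rays and one connected colleague, the state has 5 + 8 + 5 + 5 = 23 entries, the last five being padding.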

In addition, the reward is computed as a weighted sum of the following components:

- Distance Reward: Each agent measures the distance reduction to the target point through the GPS value of the current point. Agents receive higher rewards as they move closer to their goal. This encourages agents to efficiently navigate toward their target points. This metric is shared among all agents, allowing for updates that might slightly increase the distance for a specific agent but enhance the overall reward for the group.

- Goal Reward: Check whether an agent has reached its destination. Agents receive a substantial reward upon reaching their target point, encouraging them to successfully complete their missions.

- Collision Reward: Determine if an agent has collided with another agent or an obstacle. If a collision occurs, the agent incurs a significant penalty. This discourages collisions and promotes safe navigation.

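- Space Reward: Evaluate whether an agent maintains an appropriate distance from nearby agents. During environment initialization, the minimum and maximum recommended distances between agents are defined. Agents receive rewards for maintaining these defined distances and penalties for being too close or too far. By defining varied maximum and minimum distances, diverse scenarios and actions are encouraged, preventing agents from straying too far from the group.

The total reward of agent $i$ is

$$R_i = w_1 R_i^{\text{dist}} + w_2 R_i^{\text{goal}} + w_3 R_i^{\text{col}} + w_4 R_i^{\text{space}}$$

where the following holds:

- $w_1$, $w_2$, $w_3$, $w_4$ are the weights for each reward score,

- $R_i^{\text{goal}}$ is the positive reward for agent $i$ being within a certain distance of its target, defined as

  $$R_i^{\text{goal}} = \begin{cases} 1, & \lVert p_i - g_i \rVert < \epsilon_{\text{goal}} \\ 0, & \text{otherwise} \end{cases}$$

  where $p_i$ and $g_i$ are the positions of agent $i$ and its target, and $\epsilon_{\text{goal}}$ is a very short distance within which the agent is assumed to have arrived at its destination,

- $R_i^{\text{col}}$ is the negative reward for agent $i$ being within a certain distance of other agents or obstacles, defined as

  $$R_i^{\text{col}} = -\sum_{j \neq i} \mathbb{1}\left[d_{ij} < \epsilon_{\text{col}}\right]$$

  where $\epsilon_{\text{col}}$ is a very short distance within which the agent is assumed to have collided, and $j$ is another agent or an obstacle,

- $R_i^{\text{space}}$ is the negative reward that agent $i$ receives when it is outside a certain range of the agents connected to it, defined as

  $$R_i^{\text{space}} = -\sum_{j \in \mathcal{N}_i} \mathbb{1}\left[d_{ij} < a \ \lor\ d_{ij} > b\right]$$

  where $a$ and $b$ are the minimum and maximum distance parameters between agents declared for navigation and isolation prevention, and $\mathcal{N}_i$ is the set of agents linked to agent $i$ through ESUSN.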

All these reward components are integrated into a weighted sum to determine the total reward value using Equation (4) for each agent. The weights of each reward component are set to reinforce specific behaviors of the agents. This comprehensive reward structure ensures that agents are motivated to achieve their goals efficiently, avoid collisions, and maintain proper spacing, thus facilitating effective collaboration and optimized performance in a multiagent system.
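The weighted-sum reward can be sketched per agent in Python. The weights and thresholds (`goal_eps`, `col_eps`, `a`, `b`) are placeholder values, not the paper's settings, and two interpretations are assumptions: the distance term is the decrease in distance to the target since the previous step, and "shared among all agents" is implemented as the swarm-wide mean of that decrease.

```python
import math


def agent_rewards(pos, prev_pos, targets, obstacles, links,
                  weights=(1.0, 10.0, 10.0, 1.0),
                  goal_eps=0.1, col_eps=0.2, a=0.5, b=3.0):
    """Per-agent total reward: weighted sum of distance, goal, collision, and space terms.

    links: ESUSN edges as (i, j) index pairs. All weights and thresholds are placeholders.
    """
    n = len(pos)
    w_dist, w_goal, w_col, w_space = weights
    # Distance term: progress toward each agent's target, averaged over the swarm (shared).
    progress = [math.dist(prev_pos[i], targets[i]) - math.dist(pos[i], targets[i])
                for i in range(n)]
    shared_progress = sum(progress) / n
    rewards = []
    for i in range(n):
        # Goal term: 1 if the agent is within goal_eps of its target.
        r_goal = 1.0 if math.dist(pos[i], targets[i]) < goal_eps else 0.0
        # Collision term: -1 for every other agent or obstacle closer than col_eps.
        others = [pos[j] for j in range(n) if j != i] + list(obstacles)
        r_col = -sum(1.0 for p in others if math.dist(pos[i], p) < col_eps)
        # Space term: -1 for every ESUSN neighbor outside the [a, b] distance band.
        neighbors = [j for (u, v) in links for j in (u, v) if i in (u, v) and j != i]
        r_space = -sum(1.0 for j in neighbors if not a <= math.dist(pos[i], pos[j]) <= b)
        rewards.append(w_dist * shared_progress + w_goal * r_goal
                       + w_col * r_col + w_space * r_space)
    return rewards
```

For two linked agents where agent 0 moves 1 unit and reaches its target while agent 1 stays put, agent 0 receives 0.5 × 1 (shared progress) + 10 × 1 (goal) = 10.5, and agent 1 still receives the shared 0.5, which is how the shared term rewards the group rather than only the individual.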

In addition, at each time step, the agent’s action is defined as the acceleration values along the x and y axes, which are used to compute the UAV’s movement. The policy network outputs these acceleration values to adjust the UAV’s trajectory. The action is computed as follows:

$$\mathbf{a}_t = \left(a_x, a_y\right) = \pi_{\theta}(s_t)$$

where $\pi_{\theta}$ is the policy function parameterized by $\theta$, mapping the state $s_t$ to the corresponding acceleration values $a_x$ and $a_y$ along the x and y axes. At each step, the UAV updates its position using the computed acceleration, allowing it to move dynamically toward its target while avoiding obstacles in real time. This continuous adjustment ensures smooth navigation and efficient task execution in a multiagent environment.
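The position update can be illustrated with a point-mass model. The sketch below assumes semi-implicit Euler integration with a fixed time step `dt` and an optional speed limit; `dt`, `max_speed`, and the function names are hypothetical, and the toy policy merely stands in for $\pi_{\theta}$. It is not the simulator's own dynamics code.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass(frozen=True)
class UAVState:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def step(state: UAVState, ax: float, ay: float, dt: float = 0.1,
         max_speed: Optional[float] = None) -> UAVState:
    """Advance one time step with semi-implicit Euler integration."""
    # Update the velocity from the commanded acceleration first...
    vx = state.vx + ax * dt
    vy = state.vy + ay * dt
    # ...optionally clamp the speed to a hypothetical platform limit...
    if max_speed is not None:
        speed = math.hypot(vx, vy)
        if speed > max_speed:
            scale = max_speed / speed
            vx, vy = vx * scale, vy * scale
    # ...then move using the updated velocity.
    return UAVState(state.x + vx * dt, state.y + vy * dt, vx, vy)


def rollout(state: UAVState, policy: Callable[[UAVState], Tuple[float, float]],
            steps: int, dt: float = 0.1) -> UAVState:
    """Repeatedly query the policy (state -> (a_x, a_y)) and integrate."""
    for _ in range(steps):
        ax, ay = policy(state)
        state = step(state, ax, ay, dt)
    return state


if __name__ == "__main__":
    # Toy stand-in policy: constant acceleration along +x.
    final = rollout(UAVState(0.0, 0.0), lambda s: (1.0, 0.0), steps=10)
    print(final)
```

Updating velocity before position is a common choice for this kind of controller because it keeps the integration stable at modest time steps.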

In summary, integrating PPO into the multiagent environment not only improves optimization through on-policy learning but also fosters the adaptive decision-making capabilities needed to navigate complex and dynamic operational settings. The PPO algorithm in MARL, with surrounding agents’ information shared through ESUSN, is summarized in Algorithm 2. This approach promotes robust performance across diverse scenarios and highlights the benefit of cooperation among autonomous agents in achieving shared objectives.

Algorithm 2.

MultiAgent PPO algorithm with sharing of surrounding agents’ information through ESUSN.
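For reference, the core of PPO is its clipped surrogate objective, $L = \mathbb{E}\left[\min\left(r_t A_t,\ \operatorname{clip}(r_t, 1-\epsilon, 1+\epsilon) A_t\right)\right]$ with $r_t = \pi_{\theta}(a_t \mid s_t) / \pi_{\theta_{\text{old}}}(a_t \mid s_t)$. The sketch below evaluates this objective on a batch of log-probabilities and advantages. It is a generic illustration of PPO, not the authors' Algorithm 2; the function name and the default `clip_eps = 0.2` are assumptions. In a multiagent setting, each agent's samples (whose observations would include information from its ESUSN-linked neighbours) could be passed to the same objective, but how Algorithm 2 batches agents is not reproduced here.

```python
import math
from typing import Sequence


def ppo_clip_objective(logp_new: Sequence[float], logp_old: Sequence[float],
                       advantages: Sequence[float], clip_eps: float = 0.2) -> float:
    """Mean clipped surrogate objective of PPO (to be maximized)."""
    if not (len(logp_new) == len(logp_old) == len(advantages)) or not advantages:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_theta / pi_theta_old
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        total += min(ratio * adv, clipped * adv)  # pessimistic (lower) bound
    return total / len(advantages)


if __name__ == "__main__":
    # A policy update that doubled an action's probability gains at most 1 + clip_eps.
    print(ppo_clip_objective([math.log(2.0)], [0.0], [1.0]))
```

Taking the minimum of the clipped and unclipped terms removes any incentive to move the new policy far from the old one in a single update, which is the source of PPO's stability.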

4. Performance Evaluation

We evaluated decision making in UAV swarms by implementing the proposed system. We compared the network performance of existing network configurations (MESH and AODV-based FANET) with that of ESUSN. We also compared mission performance with and without reinforcement learning, and the proposed system performed better when learning was applied.

4.1. Implementation

For the drone hardware, we used the PX4 flight controller to ensure stable and precise flight operations, which are critical for the success of our multi-UAV missions. Onboard computation was handled by a Raspberry Pi, which served as the single-board computer (SBC). For the MARL component, we built our system around the PPO algorithm because of its efficiency and reliability in training complex policies for agents in dynamic environments. To train and test our MARL algorithms, we used the Vectorized MultiAgent Simulator (VMAS) environment.

Communication between the SBC and the PX4 flight controller was achieved using MAVSDK, a software development kit for the MAVLink protocol. MAVSDK handles communication and command execution between the Raspberry Pi and PX4, allowing us to send flight commands, receive telemetry data, and synchronize operations across multiple UAVs. This setup provides the real-time data exchange and control that coordinated multi-UAV missions require.

Our experimental environment includes both static and dynamic obstacles to reflect real-world UAV operational conditions. Static obstacles, such as buildings and other immovable structures, represent the fixed elements that drones must navigate around in structured environments. Additionally, the dynamic aspect is introduced through the movement of other drones within the swarm, which act as moving obstacles for each other. This constantly changing environment requires each drone to adapt in real time, adjusting its behavior to avoid collisions and optimize navigation.

The key distinction between the proposed ESUSN algorithm combined with MARL and traditional heuristic optimization algorithms lies in their adaptability and cooperative efficiency. Heuristic methods, which often rely on static, predefined rules, are limited in their ability to respond effectively to dynamic and unpredictable changes in the environment. In contrast, the ESUSN algorithm dynamically adjusts network topology in real time, enabling UAVs to swiftly adapt to unexpected environmental shifts. Moreover, the integration of MARL fosters cooperative learning among UAVs, allowing them to optimize their actions collectively. This results in enhanced mission success rates and improved collision avoidance. These adaptive capabilities are particularly advantageous in dynamic scenarios where UAVs must operate autonomously under uncertain conditions. Unlike heuristic methods, which struggle to provide real-time adaptability and collaboration, our approach significantly enhances flexibility and efficiency through continuous learning and cooperation.

4.2. Network Efficiency Experiments

In our system, after the UAV swarm receives a mission, the drones construct a network topology using the ESUSN algorithm. The number of drones in the swarm is set to 5 or 10, depending on the experiment, and each drone’s coordinates are drawn as random natural numbers in the range (0, 100), so that the communication system is not limited to a specific formation. Once the positions of the drones in the swarm are determined, each drone uses its LiDAR sensor to measure the distance to every other drone in the swarm. From these distances, the ESUSN algorithm configures the optimal network links. The topology is updated whenever a drone’s position changes.
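The setup steps above (random natural-number coordinates, pairwise distances, then link selection) can be sketched in Python as follows. The ESUSN link-selection rule itself is not reproduced here; purely as a clearly labeled stand-in, the sketch connects the drones with a minimum spanning tree built by Prim's algorithm over Euclidean distances. Exact Euclidean distances replace the LiDAR range readings, and all function names are hypothetical.

```python
import math
import random
from typing import List, Optional, Tuple

Point = Tuple[int, int]


def random_positions(n: int, seed: Optional[int] = None) -> List[Point]:
    """Random natural-number coordinates in the open interval (0, 100)."""
    rng = random.Random(seed)
    return [(rng.randint(1, 99), rng.randint(1, 99)) for _ in range(n)]


def distance_matrix(pos: List[Point]) -> List[List[float]]:
    """Pairwise Euclidean distances (stand-in for LiDAR range readings)."""
    return [[math.dist(p, q) for q in pos] for p in pos]


def mst_links(dist: List[List[float]]) -> List[Tuple[int, int]]:
    """Prim's minimum spanning tree, used here only as a stand-in link rule."""
    n = len(dist)
    in_tree = [False] * n
    best = [math.inf] * n  # cheapest known link from each drone into the tree
    parent = [-1] * n
    if n:
        best[0] = 0.0
    links = []
    for _ in range(n):
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        if parent[u] >= 0:
            links.append((parent[u], u))
        for v in range(n):
            if not in_tree[v] and dist[u][v] < best[v]:
                best[v] = dist[u][v]
                parent[v] = u
    return links


if __name__ == "__main__":
    positions = random_positions(5, seed=42)
    print(positions)
    # Recompute the links whenever any drone's position changes.
    print(mst_links(distance_matrix(positions)))
```

A spanning tree is used only because it is the sparsest topology that keeps every drone connected, which makes it a convenient reference point; ESUSN's actual criteria for choosing links may differ.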

Figure 3 shows how the ESUSN algorithm constructs the network topology as the drones learn and move in our system. By sharing only the information necessary for learning, the algorithm improves network performance compared with other algorithms.

Figure 3.

Structure of ESUSN topologies during step.

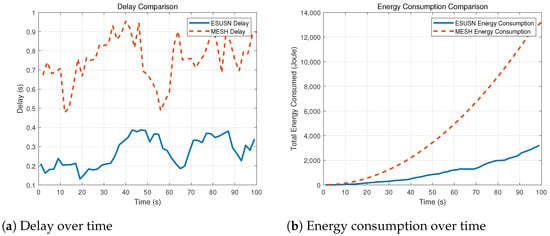

The network performance experiments were conducted under two different scenarios, each assuming 5 and 10 drones within the swarm, respectively. In a dynamically updating routing environment, we compared the delay and energy consumption at each second. In the Mesh configuration, every drone in the swarm communicated with all other drones, whereas, in the ESUSN configuration, data were exchanged according to the links determined by the algorithm. Through these experiments, we aimed to analyze the most suitable network topology for drone environments and compare the performance of the two network configurations as the network size and complexity increased.
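The scale difference between the two configurations follows directly from their structure: a full mesh of n drones has n(n-1)/2 undirected links, and each drone maintains n-1 of them, whereas the sparsest topology that still connects every drone, a spanning tree, needs only n-1 links in total. The short Python check below computes these counts for the two swarm sizes used here; the spanning-tree figure is a lower bound for reference, not ESUSN's actual link count, and the function names are illustrative.

```python
def mesh_links(n: int) -> int:
    """Undirected links in a full mesh: every pair of drones is connected."""
    return n * (n - 1) // 2


def mesh_degree(n: int) -> int:
    """Links per drone in a full mesh (one to every other drone)."""
    return max(n - 1, 0)


def spanning_tree_links(n: int) -> int:
    """Total links in any spanning tree that keeps n drones connected."""
    return max(n - 1, 0)


if __name__ == "__main__":
    for n in (5, 10):
        print(f"n={n}: mesh links={mesh_links(n)}, per drone={mesh_degree(n)}, "
              f"spanning tree links={spanning_tree_links(n)}")
```

Doubling the swarm from 5 to 10 drones multiplies the mesh link count by 4.5 (10 to 45), while a connected sparse topology grows only linearly.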

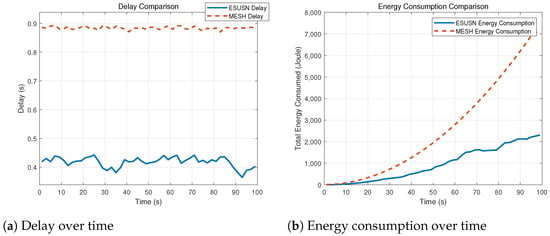

As Figure 4 shows, comparing the MESH and ESUSN simulation datasets in an environment with five drones in the swarm, the average delay of the MESH network is 0.884 s, whereas that of ESUSN is 0.418 s, approximately 53% shorter. This indicates that the ESUSN network can provide faster and more stable communication. In addition, the average energy consumption of the MESH network was 2569.141 Joules, whereas that of the ESUSN network was 950.605 Joules, about 63% less. Over a communication period of 100 s, the total energy consumption of the ESUSN network was 2397 Joules, compared with 7490 Joules for the MESH network.

Figure 4.

Comparison of delay and energy consumption over time with 5 UAVs in the swarm.

As shown in Figure 5, comparing MESH and ESUSN simulation datasets in an environment with 10 drones within a swarm revealed significant differences in performance. The MESH network exhibited an average delay of 0.756 s, whereas the ESUSN network demonstrated a considerably lower average delay of 0.274 s, approximately 64% shorter. The MESH network’s average energy consumption was 4709.905 units, while the ESUSN network’s average energy consumption was only 1047.860 units, representing a reduction of about 78%. Over a communication period of 100 s, the total energy consumption for the ESUSN network was 3433 units, in stark contrast to the MESH network’s maximum energy consumption of 13,731.8 units. These results clearly demonstrate that the ESUSN network operates more efficiently, offering significant advantages in both delay and energy consumption for scenarios involving a larger number of drones.
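The reductions quoted for both swarm sizes are relative to MESH, that is, $100 \times (\text{MESH} - \text{ESUSN}) / \text{MESH}$. The short Python check below recomputes them from the averages reported for the five- and ten-drone experiments; the helper name is illustrative.

```python
def reduction_pct(mesh: float, esusn: float) -> float:
    """Relative reduction of ESUSN with respect to MESH, in percent."""
    return 100.0 * (mesh - esusn) / mesh


# (MESH, ESUSN) averages as reported in the text.
reported = {
    "5 UAVs, delay (s)": (0.884, 0.418),
    "5 UAVs, energy (J)": (2569.141, 950.605),
    "10 UAVs, delay (s)": (0.756, 0.274),
    "10 UAVs, energy (units)": (4709.905, 1047.860),
}

if __name__ == "__main__":
    for label, (mesh, esusn) in reported.items():
        print(f"{label}: {reduction_pct(mesh, esusn):.1f}% lower with ESUSN")
```

Rounded to whole percentages, these give about 53%, 63%, 64%, and 78%, matching the figures quoted above.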

Figure 5.

Comparison of delay and energy consumption over time with 10 UAVs in the swarm.

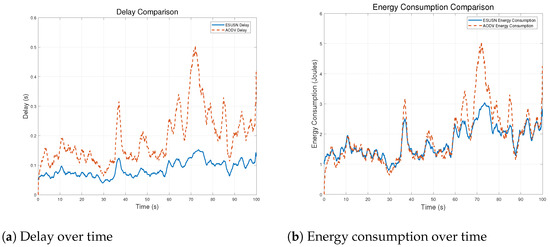

The comparison between ESUSN and AODV-based FANET was conducted to evaluate ESUSN’s performance in dynamic environments such as drone swarms. The results, as shown in Figure 6, indicate that ESUSN outperforms AODV-based FANET in key metrics. In terms of communication delay, ESUSN consistently maintained lower latency compared with AODV, demonstrating its ability to provide more stable and efficient communication in dynamic conditions. Additionally, ESUSN exhibited more optimized energy consumption, maintaining a lower and more stable energy usage pattern compared with AODV-based FANET. These findings confirm that ESUSN is more suited for drone swarm environments, offering better resource efficiency and stability in rapidly changing network conditions.

Figure 6.

Comparison of network stability between FANET and ESUSN with 10 UAVs in the swarm.

4.3. Experiments on Reinforcement Learning Performance

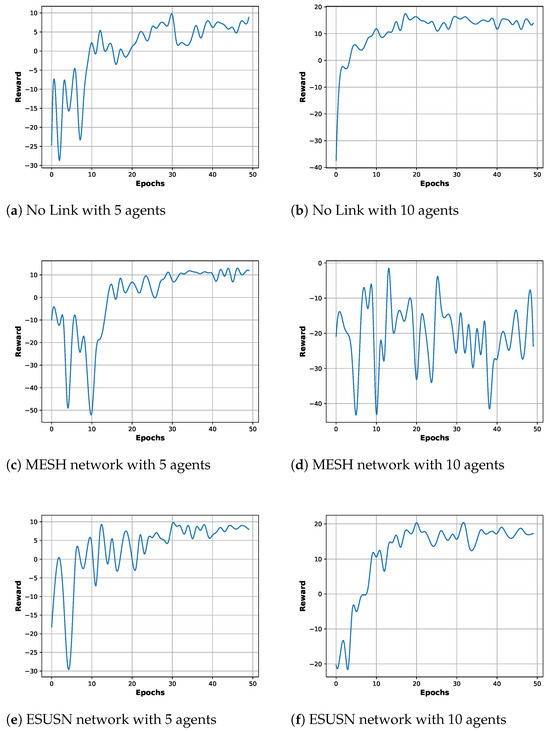

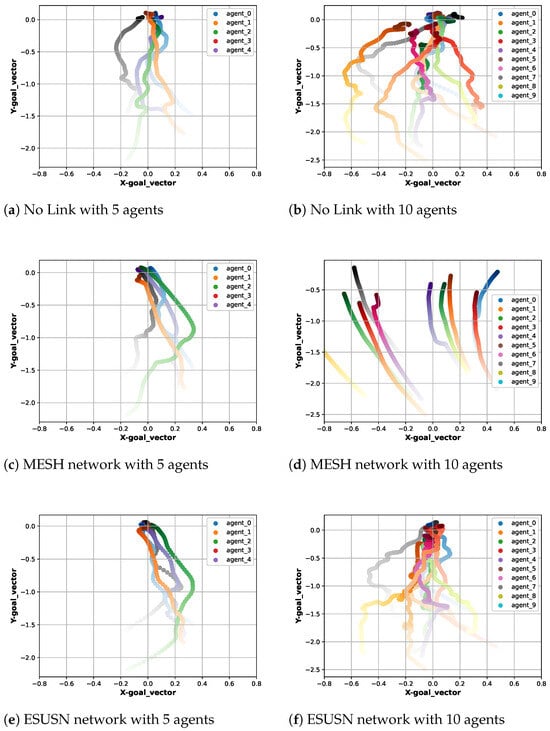

In this section, we verify that the proposed system effectively completes missions through mutual information sharing and evaluate the performance of the proposed UAV clustering system in the ESUSN network, MESH network, and the No Link scenario. The experiments include assessing the reward graph and the trajectories of agents. Each policy is tested over episodes with fixed coordinates for agents, targets, and obstacles to compare mission completion time and collision count. Additionally, the time consumed during the training of each policy is evaluated.

In traditional navigation scenarios, where no connections are formed with surrounding agents, each agent operates independently using only its drone’s onboard sensor information, without any information sharing. In contrast, scenarios using information sharing through the MESH network allow agents to input and act upon the collective information from all agents. The proposed scenario uses the ESUSN network for efficient data sharing among drones. This system is designed to exchange only the necessary information through a relatively small number of links to maximize the overall reward of the cluster. The scenario aims to evaluate how the integration of ESUSN and MARL enhances the cooperation and performance of UAV groups. Comparative experiments for the three scenarios are conducted with five and ten agents.

Figure 7 shows the reward values during training for each scenario as the number of agents varies. With five agents, the reward value for the MESH scenario is marginally higher than those of the other scenarios. This indicates that with fewer agents, the number of links connected to each agent is not large, so the performance differences are minimal. With ten agents, however, training in the MESH network fails to progress normally, unlike training in the No Link and ESUSN scenarios. This is because the number of links connected to each agent increases from four to nine, which feeds more unnecessary data into the policy for action selection. At first glance, the reward graph may suggest that No Link performs similarly to MARL using ESUSN. With five UAVs, the small number of UAVs makes the episode less challenging, so No Link can perform adequately. As the number of UAVs increases, however, the episode becomes more difficult, and the reward value of No Link gradually decreases relative to that of ESUSN. The following results compare performance metrics as each model progresses through the episodes, highlighting the differences between the models.

Figure 7.

Comparison of reward graph.

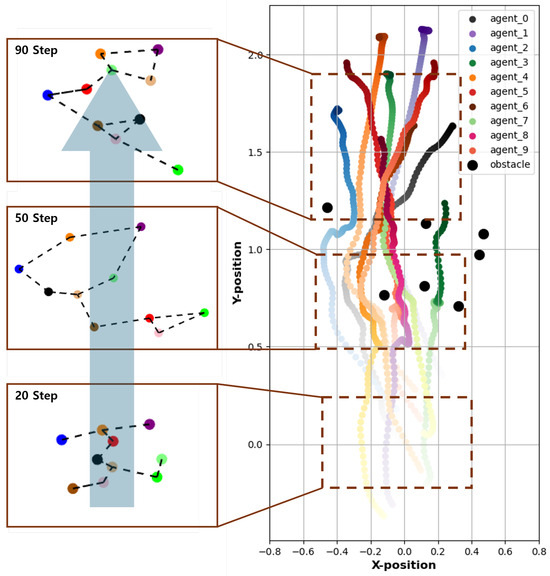

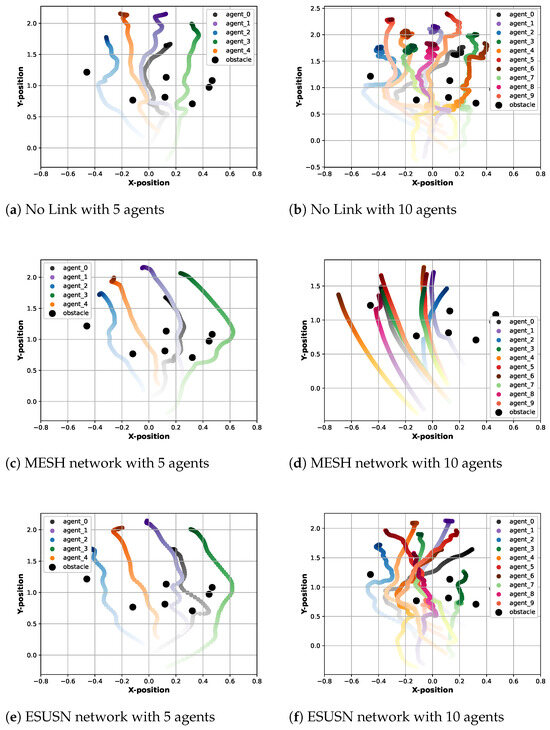

Figure 8 compares the trajectories of agents for identical coordinates of agents, obstacles, and targets. As noted above, with 10 agents the MESH network scenario does not allow the policy to learn properly, and the agents fail to reach their targets. While the No Link and ESUSN scenarios may appear to perform similarly, Figure 9 reveals a notable difference when the number of agents is ten. In the ESUSN scenario, the distance vector of every agent to its target quickly converges to (0, 0) as steps progress. In contrast, in the No Link scenario, the distance vector does not converge properly to (0, 0). This indicates that without information about surrounding agents, each agent moves closer to its target but ends up choosing unnecessary actions to avoid collisions.

Figure 8.

Comparison of agent trajectories.

Figure 9.

Comparison of distance between agent and target.

Table 1 compares the performance metrics for each scenario in test situations. According to Table 1, the number of steps required for agents to reach the target is relatively lower in the ESUSN scenario. This indicates that agents in our proposed system can quickly reach the target by selecting optimal actions. Additionally, unlike the other scenarios, the ESUSN scenario maintains a collision count of zero, demonstrating that agents can find safe and efficient actions. Epoch time refers to the time consumed per epoch during policy training and is measured in minutes. With five agents, the No Link scenario recorded 2 min, the MESH scenario 5 min, and the ESUSN scenario 2 min. With ten agents, the No Link scenario recorded 8 min, the MESH scenario 15 min, and the ESUSN scenario 6 min. As the number of links in the network increases, the model’s size also grows, naturally increasing the time required for training. Additionally, epoch time is related to the number of steps required to complete an episode; the faster an episode is completed, the quicker the transition to the next epoch.

Table 1.

Performance evaluation for each scenario.

The ESUSN scenario shows that efficient link configuration does not significantly increase training time compared with the No Link scenario. Because each agent also processes information from its linked neighbors, the computational load per step is higher than in the No Link scenario. Nevertheless, by using information from surrounding agents, agents reach the target faster and complete episodes more quickly, which reduces the overall epoch time; with ten agents, the ESUSN scenario required 6 min per epoch, compared with 8 min for No Link. These experimental results emphasize the efficiency and safety of our proposed system in terms of energy and time consumption compared with the other scenarios. By prioritizing the overall reward of the drone swarm over individual rewards and optimizing actions through shared information and reinforcement learning, the system achieves higher mission performance and better collision avoidance. This study demonstrates that the selective information sharing of the ESUSN network, combined with the MARL system, achieves overall mission objectives effectively and safely, even when multiple drones operate simultaneously.

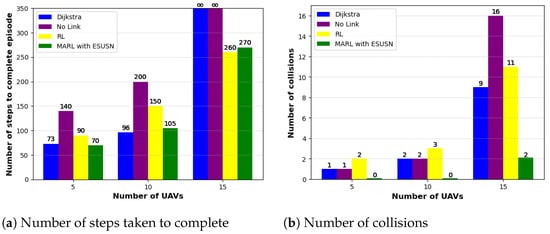

To further evaluate the proposed system, we conducted comparative experiments with Dijkstra’s algorithm and General RL. Table 2 presents the comparative performance results. Dijkstra’s algorithm is commonly used to find the shortest paths from a single source node to all other nodes in a graph and is widely applied to path optimization problems. Because Dijkstra’s algorithm is not a learning-based model, it requires no training time, which gives it an initial advantage over our model. General RL refers to reinforcement learning without a multiagent formulation; we applied the PPO method for these comparative experiments. The experimental environment was set up similarly to that of the experiments in Table 1, with 5 and 10 agents.
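For reference, a textbook version of Dijkstra's algorithm for a weighted graph with non-negative edge weights is sketched below in Python, using a binary heap. How the baseline's graph was built for the UAV environment (for example, grid cells or waypoints, and how obstacles and other drones were encoded) is not specified here, so the example graph and the helper names are hypothetical.

```python
import heapq
import itertools
import math
from typing import Dict, Hashable, List, Tuple

Graph = Dict[Hashable, List[Tuple[Hashable, float]]]


def dijkstra(graph: Graph, source: Hashable) -> Tuple[Dict[Hashable, float], Dict[Hashable, Hashable]]:
    """Single-source shortest paths for non-negative edge weights."""
    dist: Dict[Hashable, float] = {source: 0.0}
    prev: Dict[Hashable, Hashable] = {}
    counter = itertools.count()  # tie-breaker so nodes never need to be compared
    heap = [(0.0, next(counter), source)]
    while heap:
        d, _, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale entry: a shorter path to u was already found
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, next(counter), v))
    return dist, prev


def path_to(prev: Dict[Hashable, Hashable], source: Hashable, target: Hashable) -> List[Hashable]:
    """Walk predecessor links back from target; raises KeyError if unreachable."""
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    # Hypothetical waypoint graph with travel costs as edge weights.
    g = {"A": [("B", 1.0), ("C", 4.0)], "B": [("C", 2.0), ("D", 5.0)], "C": [("D", 1.0)]}
    dist, prev = dijkstra(g, "A")
    print(dist, path_to(prev, "A", "D"))
```

Because the graph is fixed when the search runs, the computed path does not react to other agents that move after planning, which is consistent with the collision counts reported for this baseline.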

Table 2.

Performance evaluation for each algorithm.

Even with 5 agents, Dijkstra’s algorithm and General RL performed slightly worse than the system proposed in this paper. Dijkstra’s algorithm reached the destination in 73 steps with 1 collision, and General RL reached it in 90 steps with 2 collisions, whereas our proposed model reached the destination in 70 steps with 0 collisions. As the number of agents increased, this difference became more pronounced. With 10 agents, Dijkstra’s algorithm reached the destination in 96 steps with 2 collisions, and General RL reached it in 150 steps with 3 collisions, whereas our proposed system completed the scenario in 105 steps with 0 collisions.

These results indicate that Dijkstra’s algorithm and General RL do not account for the environmental changes that occur as multiple agents select their actions. In contrast, our proposed system maintains high performance because it is trained to recognize the environment and dynamically select actions by exchanging only the necessary information through interactions among agents.

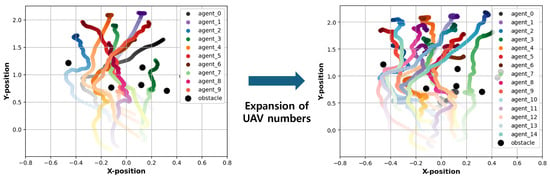

Additionally, the proposed model is theoretically scalable to an arbitrary number of UAVs because it uses a network that streamlines how agents infer their actions. In a MESH network, as the number of UAVs increases, the amount of data collected as state values also increases, and the growing state size raises the computational load, which prevents smooth learning. The ESUSN-based model, however, limits the links to only those necessary for reinforcement learning, so that even as UAVs are added, the number of links connected to each UAV remains optimal. Consequently, the number of state values held by each agent stays constant regardless of the number of UAVs, and the model can be applied even when the number of agents during inference differs from that during training, as shown in Figure 10. In practice, when the policy derived through training is deployed to each UAV’s SBC, the UAV collects state values via its sensors, inputs them into the policy, and operates as expected. In principle, this approach allows the number of UAVs to be expanded without limit.
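A minimal sketch of why a bounded number of links per agent keeps the policy input size fixed is shown below: each agent's observation holds its own position and velocity, its offset to the target, and the relative positions of at most `k_max` linked neighbours, zero-padded when fewer are available. The feature layout, the cap `k_max`, and the nearest-first ordering are assumptions for illustration, not the paper's state definition; which neighbours are linked would be decided by ESUSN, not by this function.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def build_observation(own_pos: Point, own_vel: Point, target: Point,
                      linked_pos: Sequence[Point], k_max: int) -> List[float]:
    """Fixed-length observation: 6 own features + 2 per neighbour slot."""
    obs = [own_pos[0], own_pos[1], own_vel[0], own_vel[1],
           target[0] - own_pos[0], target[1] - own_pos[1]]
    # Keep at most k_max linked neighbours, nearest first (assumed ordering).
    nearest = sorted(linked_pos, key=lambda q: math.dist(own_pos, q))[:k_max]
    for q in nearest:
        obs.extend([q[0] - own_pos[0], q[1] - own_pos[1]])
    # Zero-pad unused neighbour slots so the length never changes.
    obs.extend([0.0, 0.0] * (k_max - len(nearest)))
    return obs


if __name__ == "__main__":
    for n_linked in (1, 3, 9):
        neighbours = [(float(i + 1), 0.0) for i in range(n_linked)]
        obs = build_observation((0.0, 0.0), (0.0, 0.0), (10.0, 10.0), neighbours, k_max=3)
        print(n_linked, len(obs))
```

Because the observation length depends only on `k_max`, the same trained network can be evaluated on swarms of 10 or 15 UAVs, whereas a full-mesh input would grow with n-1 neighbours per agent and force retraining.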

Figure 10.

Expansion of the number of UAVs from 10 to 15.

Figure 11 compares the performance of each algorithm as the number of UAVs increases to 15. The MESH network was excluded from the comparison due to its structure requiring retraining based on the number of UAVs. As seen in Figure 11a, when the number of UAVs expands to 15, both Dijkstra and No Link fail to complete the episode. This is because the lack of information about neighboring UAVs prevents each UAV from optimizing its trajectory, making it unable to avoid obstacles and complete the episode. Additionally, while the general RL model reaches the destination faster than the MARL model using the ESUSN network, Figure 11b shows that it results in more than five times the number of collisions compared with the MARL model using ESUSN. Compared with these three algorithms, the proposed model demonstrates stable performance even as the number of UAVs increases, effectively avoiding collisions with obstacles and other UAVs, and successfully completing the episode.

Figure 11.

Performance comparison between algorithms as the number of UAVs increases.

5. Future Research Directions

While this study demonstrates the effectiveness of the proposed system in a two-dimensional environment, several avenues for future research remain. A key extension is adapting the current methodology to three-dimensional environments. By incorporating altitude data and adjusting the relevant system parameters, we anticipate that the principles of reinforcement learning and information sharing can be applied to 3D operations. This would enable UAVs to navigate more complex spaces in which height adjustments are critical for performance and collision avoidance.

Furthermore, the system’s scalability and practicality should be tested in a variety of real-world scenarios. Although our experiments include static obstacles such as buildings and dynamic elements such as other drones in the swarm, future work could test the system in larger and more intricate environments featuring additional dynamic variables, such as unpredictable external factors or more sophisticated obstacle configurations. Expanding the scale and complexity of the test environment would help validate the system’s robustness and adaptability in real-world scenarios.

Finally, improvements in communication protocols and decision-making algorithms could further optimize UAV swarm performance in environments that require rapid adaptation to changing conditions. Future research could investigate more advanced learning algorithms and enhanced communication systems that remain efficient in highly dynamic and large-scale scenarios alike.

6. Conclusions

This study introduced a UAV swarm system that uses the Efficient Self UAV Swarm Network (ESUSN) and MultiAgent Reinforcement Learning (MARL) to improve mission performance, communication efficiency, and collision avoidance. The results demonstrated notable advantages, including lower communication delay and energy consumption than traditional network configurations and higher mission success rates, particularly in scenarios involving multiple UAVs. Compared with MESH networks, the proposed system reduced communication delay by up to 64% and energy consumption by up to 78%, while achieving zero collisions in the test scenarios. These findings emphasize the system’s capability to increase mission efficiency and reliability in real-time applications involving multiple drones. The combination of optimized network design and reinforcement learning allows UAVs to perform complex tasks while sharing minimal data, supporting both stability and efficiency. Overall, this research highlights the potential of combining reinforcement learning and optimized network algorithms to enhance UAV swarm operations. The presented methodology offers a promising approach for improving the scalability, efficiency, and robustness of UAV swarms across various mission-critical applications, from surveillance to disaster management.

Author Contributions

Conceptualization, W.J., C.P. and H.K.; methodology, W.J. and C.P.; software, W.J., C.P. and S.L.; validation, W.J., C.P. and H.K.; investigation, W.J., C.P. and S.L.; formal analysis, W.J., C.P. and H.K.; resources, H.K.; data curation, W.J. and C.P.; writing—original draft preparation, W.J., C.P., S.L. and H.K.; writing—review and editing, W.J., C.P., S.L. and H.K.; visualization, W.J., C.P. and S.L.; supervision, H.K.; project administration, H.K.; funding acquisition, H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Korea Research Institute for defense Technology Planning and advancement (KRIT) grant funded by the Korea government (DAPA (Defense Acquisition Program Administration)) (KRIT-CT-23-041, LiDAR/RADAR Supported Edge AI-based Highly Reliable IR/UV FSO/OCC Specialized Laboratory, 2024).

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly provided under the terms of the contract with the funding agency.

Conflicts of Interest

The authors declare no conflicts of interest. Furthermore, the funders had no role in the design of the study; in the collection, analysis, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| RL | Reinforcement Learning |
| ESUSN | Efficient Self UAV Swarm Network |
| MARL | MultiAgent Reinforcement Learning |
| GCS | Ground Control Stations |
| SBC | Single-Board Computer |
| FANETs | Flying Ad Hoc Networks |
| MANETs | Mobile Ad Hoc Networks |
| SDN | Software-Defined Networking |
| DDPG | Deep Deterministic Policy Gradient |
| DQN | Deep Q-Networks |
| DDQN | Double Deep Q-Networks |
| PPO | Proximal Policy Optimization |
| LSTM | Long Short-Term Memory |
| MST | Minimum Spanning Tree |
| VMAS | Vectorized MultiAgent Simulator |
| LiDAR | Light Detection And Ranging |

References

- Hayat, S.; Yanmaz, E.; Muzaffar, R. Survey on Unmanned Aerial Vehicle Networks for Civil Applications: A Communications Viewpoint. IEEE Commun. Surv. Tutor. 2016, 18, 2624–2661.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Dutta, G.; Goswami, P. Application of drone in agriculture: A review. Int. J. Chem. Stud. 2020, 8, 181–187.

- Veroustraete, F. The rise of the drones in agriculture. EC Agric. 2015, 2, 325–327.

- Yoo, T.; Lee, S.; Yoo, K.; Kim, H. Reinforcement learning based topology control for UAV networks. Sensors 2023, 23, 921.

- Park, S.; Kim, H.T.; Kim, H. Vmcs: Elaborating apf-based swarm intelligence for mission-oriented multi-uv control. IEEE Access 2020, 8, 223101–223113.

- Lee, W.; Lee, J.Y.; Lee, J.; Kim, K.; Yoo, S.; Park, S.; Kim, H. Ground control system based routing for reliable and efficient multi-drone control system. Appl. Sci. 2018, 8, 2027.

- Fotouhi, A.; Ding, M.; Hassan, M. Service on Demand: Drone Base Stations Cruising in the Cellular Network. In Proceedings of the 2017 IEEE Globecom Workshops (GC Wkshps), Singapore, 4–8 December 2017; pp. 1–6.

- Shahzad, M.M.; Saeed, Z.; Akhtar, A.; Munawar, H.; Yousaf, M.H.; Baloach, N.K.; Hussain, F. A review of swarm robotics in a nutshell. Drones 2023, 7, 269.

- Yoon, N.; Lee, D.; Kim, K.; Yoo, T.; Joo, H.; Kim, H. STEAM: Spatial Trajectory Enhanced Attention Mechanism for Abnormal UAV Trajectory Detection. Appl. Sci. 2023, 14, 248.

- Park, S.; Kim, H. Dagmap: Multi-drone slam via a dag-based distributed ledger. Drones 2022, 6, 34.

- Bekmezci, I.; Sen, I.; Erkalkan, E. Flying ad hoc networks (FANET) test bed implementation. In Proceedings of the 2015 7th International Conference on Recent Advances in Space Technologies (RAST), Istanbul, Turkey, 16–19 June 2015; pp. 665–668.

- Corson, S.; Macker, J. Mobile ad Hoc Networking (MANET): Routing Protocol Performance Issues and Evaluation Considerations. RFC 2501 1999. Available online: https://www.rfc-editor.org/rfc/rfc2501 (accessed on 27 July 2024).

- Park, C.; Lee, S.; Joo, H.; Kim, H. Empowering adaptive geolocation-based routing for UAV networks with reinforcement learning. Drones 2023, 7, 387.

- Park, S.; La, W.G.; Lee, W.; Kim, H. Devising a distributed co-simulator for a multi-UAV network. Sensors 2020, 20, 6196.

- Nazib, R.A.; Moh, S. Routing protocols for unmanned aerial vehicle-aided vehicular ad hoc networks: A survey. IEEE Access 2020, 8, 77535–77560.

- Saleem, Y.; Rehmani, M.H.; Zeadally, S. Integration of cognitive radio technology with unmanned aerial vehicles: Issues, opportunities, and future research challenges. J. Netw. Comput. Appl. 2015, 50, 15–31.

- Gupta, L.; Jain, R.; Vaszkun, G. Survey of important issues in UAV communication networks. IEEE Commun. Surv. Tutor. 2015, 18, 1123–1152.

- Khan, M.F.; Yau, K.L.A.; Noor, R.M.; Imran, M.A. Routing schemes in FANETs: A survey. Sensors 2019, 20, 38.

- Srivastava, A.; Prakash, J. Future FANET with application and enabling techniques: Anatomization and sustainability issues. Comput. Sci. Rev. 2021, 39, 100359.

- Nayyar, A. Flying adhoc network (FANETs): Simulation based performance comparison of routing protocols: AODV, DSDV, DSR, OLSR, AOMDV and HWMP. In Proceedings of the 2018 International Conference on Advances in Big Data, Computing and Data Communication Systems (icABCD), Durban, South Africa, 6–7 August 2018; pp. 1–9.

- Bekmezci, I.; Sahingoz, O.K.; Temel, Ş. Flying ad-hoc networks (FANETs): A survey. Ad Hoc Netw. 2013, 11, 1254–1270.

- Zhu, L.; Karim, M.M.; Sharif, K.; Xu, C.; Li, F. Traffic flow optimization for UAVs in multi-layer information-centric software-defined FANET. IEEE Trans. Veh. Technol. 2022, 72, 2453–2467.

- Ayub, M.S.; Adasme, P.; Melgarejo, D.C.; Rosa, R.L.; Rodríguez, D.Z. Intelligent hello dissemination model for FANET routing protocols. IEEE Access 2022, 10, 46513–46525.

- Koch, W.; Mancuso, R.; West, R.; Bestavros, A. Reinforcement learning for UAV attitude control. ACM Trans. Cyber-Phys. Syst. 2019, 3, 1–21.

- Azar, A.T.; Koubaa, A.; Ali Mohamed, N.; Ibrahim, H.A.; Ibrahim, Z.F.; Kazim, M.; Ammar, A.; Benjdira, B.; Khamis, A.M.; Hameed, I.A.; et al. Drone deep reinforcement learning: A review. Electronics 2021, 10, 999.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349.

- Yang, Q.; Zhu, Y.; Zhang, J.; Qiao, S.; Liu, J. UAV air combat autonomous maneuver decision based on DDPG algorithm. In Proceedings of the 2019 IEEE 15th International Conference on Control and Automation (ICCA), Edinburgh, UK, 16–19 July 2019; pp. 37–42.

- Cetin, E.; Barrado, C.; Muñoz, G.; Macias, M.; Pastor, E. Drone navigation and avoidance of obstacles through deep reinforcement learning. In Proceedings of the 2019 IEEE/AIAA 38th Digital Avionics Systems Conference (DASC), San Diego, CA, USA, 8–12 September 2019; pp. 1–7.

- Li, K.; Ni, W.; Emami, Y.; Dressler, F. Data-driven flight control of internet-of-drones for sensor data aggregation using multi-agent deep reinforcement learning. IEEE Wirel. Commun. 2022, 29, 18–23.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

- Hodge, V.J.; Hawkins, R.; Alexander, R. Deep reinforcement learning for drone navigation using sensor data. Neural Comput. Appl. 2021, 33, 2015–2033.

- Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Tong, G.; Jiang, N.; Biyue, L.; Xi, Z.; Ya, W.; Wenbo, D. UAV navigation in high dynamic environments: A deep reinforcement learning approach. Chin. J. Aeronaut. 2021, 34, 479–489.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Drew, D.S. Multi-agent systems for search and rescue applications. Curr. Robot. Rep. 2021, 2, 189–200.

- Gavin, T.; Lacroix, S.; Bronz, M. Multi-Agent Reinforcement Learning based Drone Guidance for N-View Triangulation. In Proceedings of the 2024 International Conference on Unmanned Aircraft Systems (ICUAS), Chania-Crete, Greece, 4–7 June 2024; pp. 578–585.

- Gallager, R.G.; Humblet, P.A.; Spira, P.M. A Distributed Algorithm for Minimum-Weight Spanning Trees. ACM Trans. Program. Lang. Syst. (TOPLAS) 1983, 5, 66–77.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).