Chestnut Burr Segmentation for Yield Estimation Using UAV-Based Imagery and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

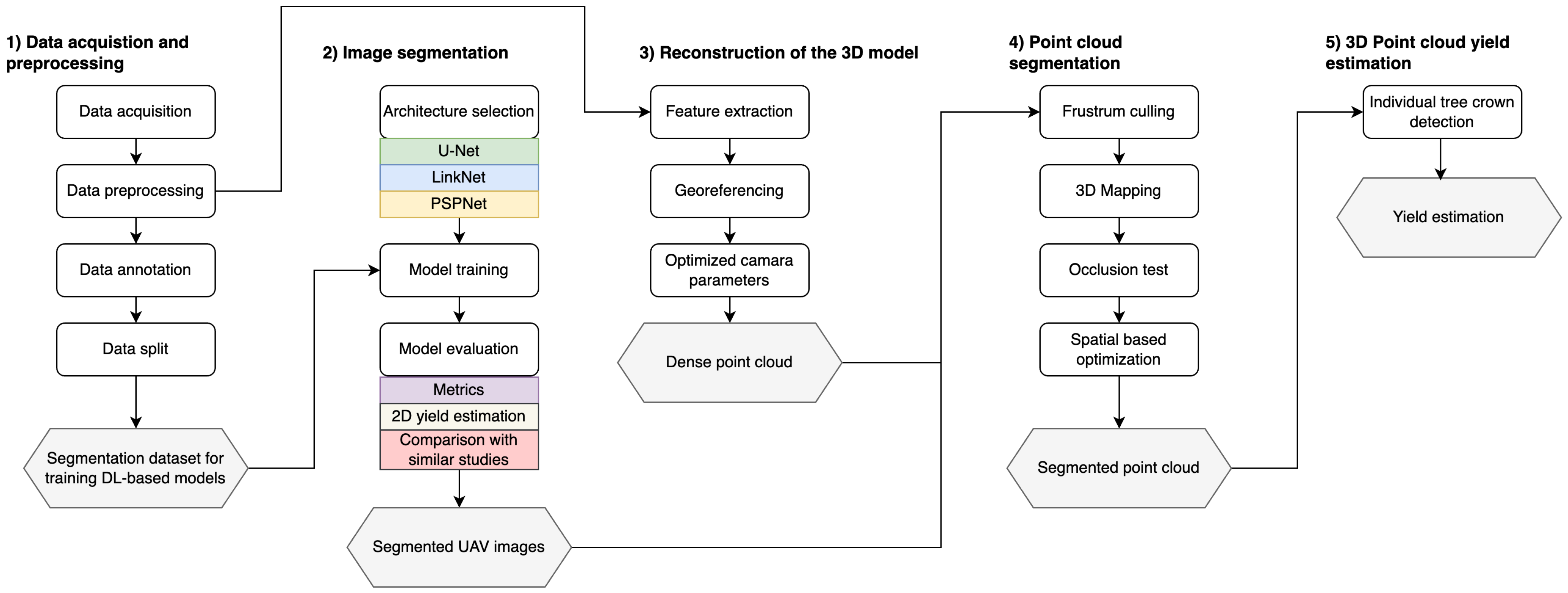

2.1. Proposed Methodology

2.2. Proposed Dataset

2.2.1. UAV Data Acquisition

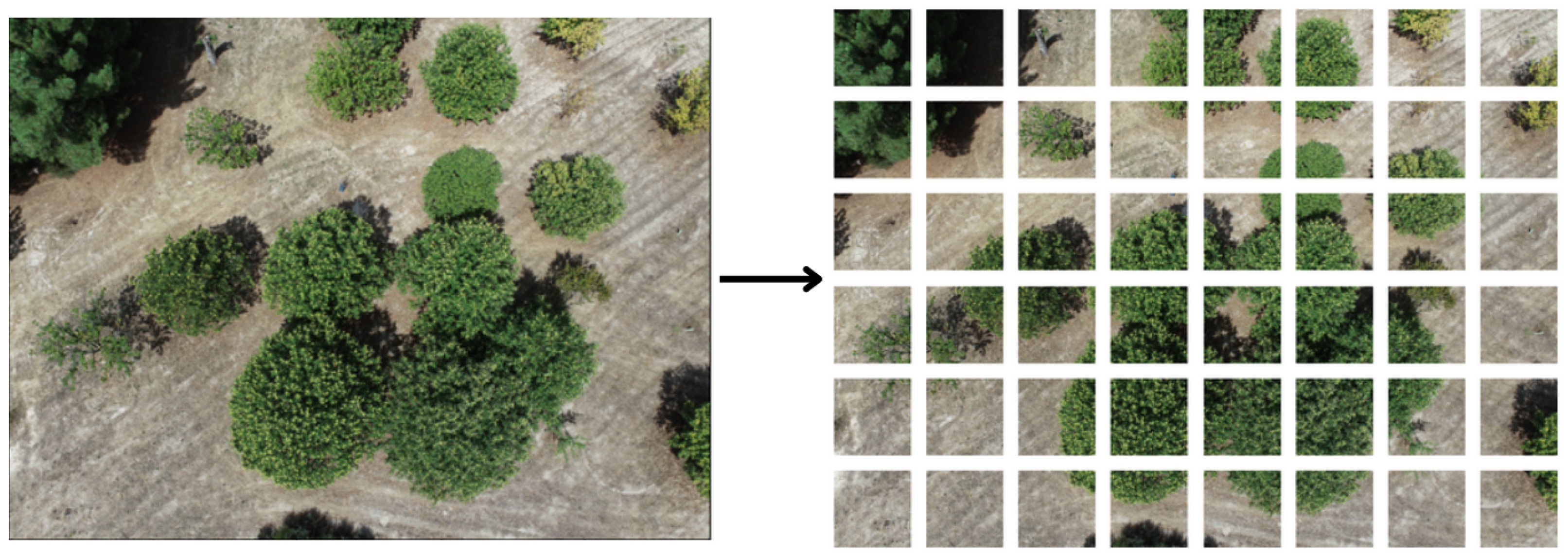

2.2.2. Dataset Annotation

2.2.3. Dataset 2

2.3. Models and Training

2.4. Burr Counting in UAV Images

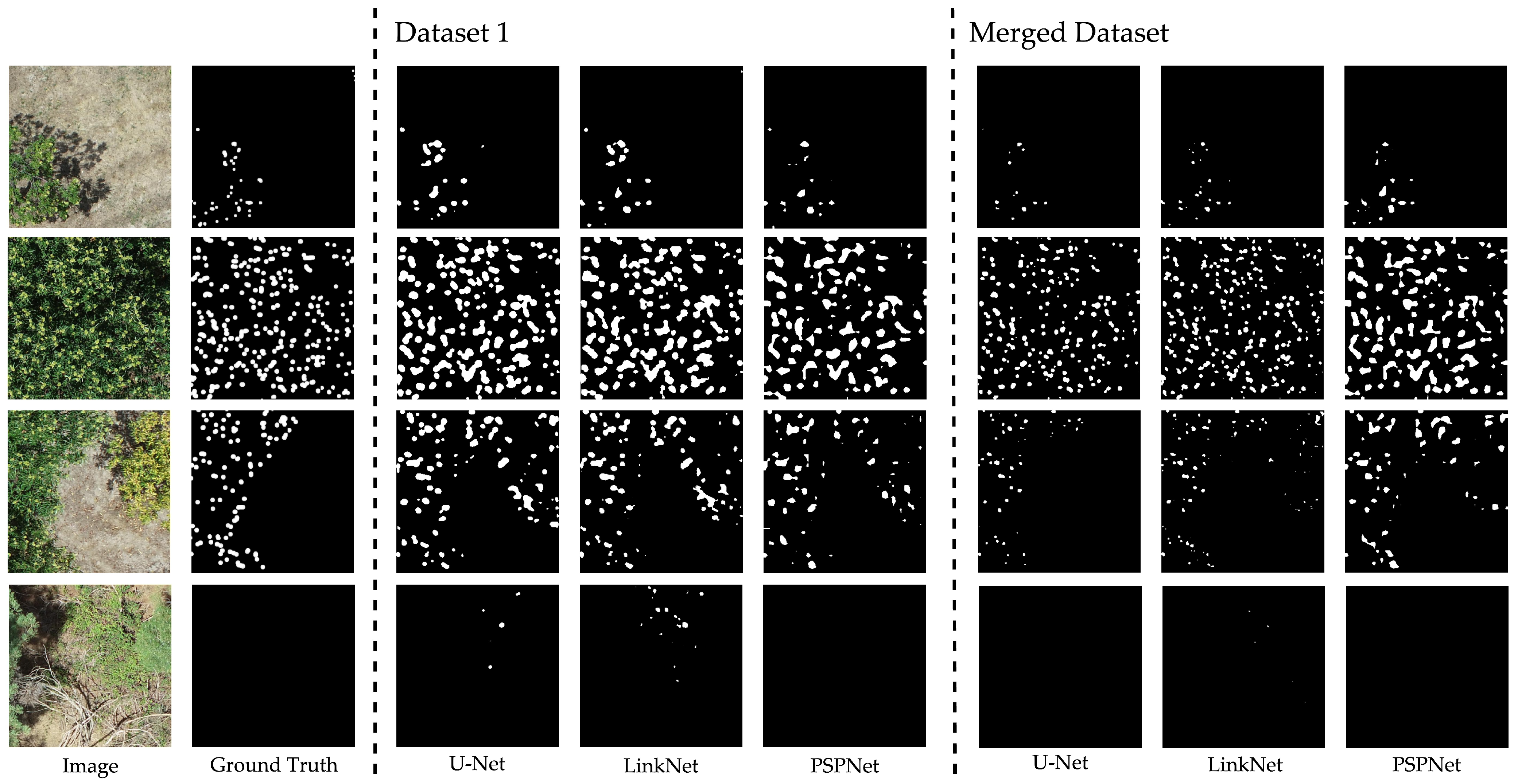

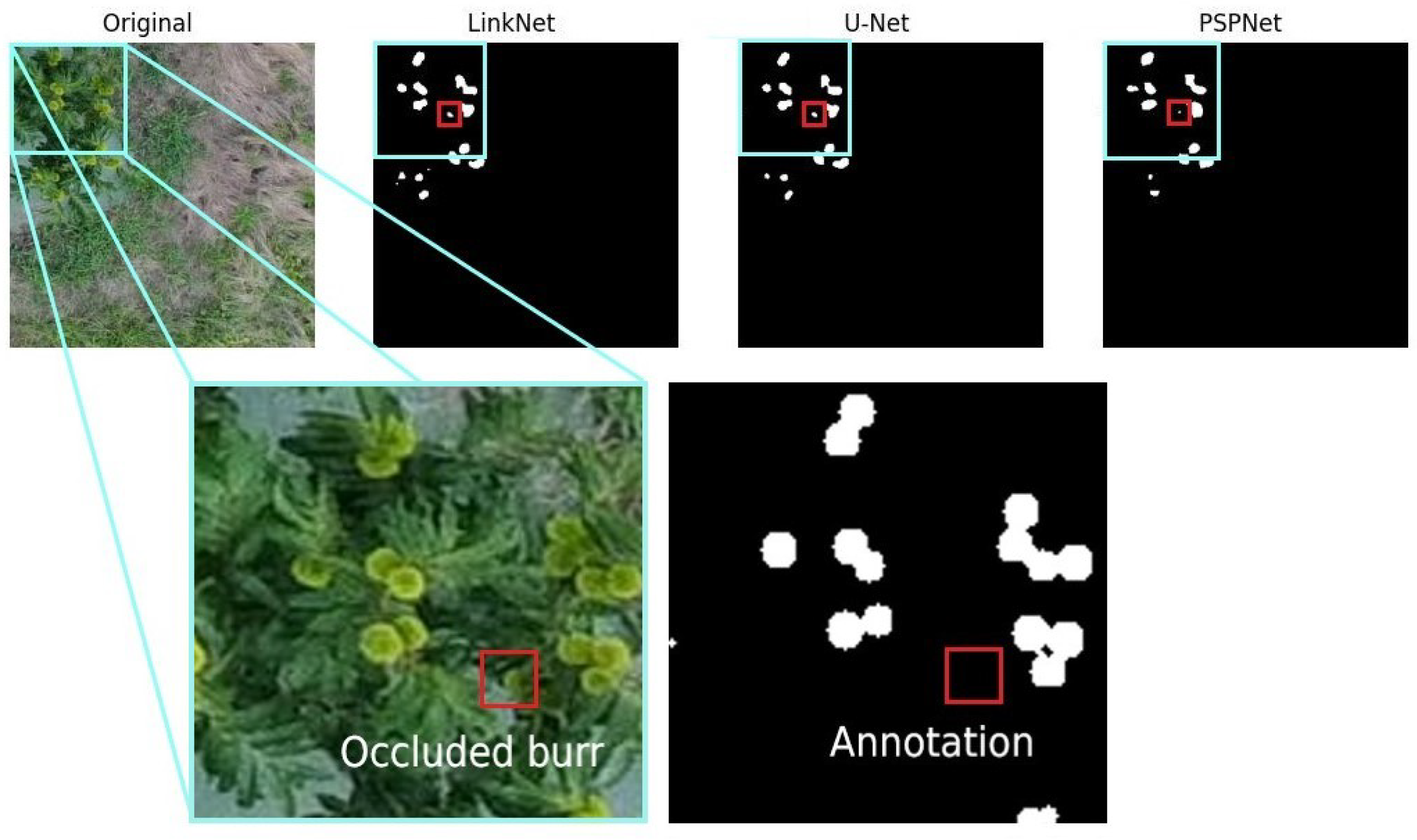

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zaffaroni, M.; Bevacqua, D. Maximize crop production and environmental sustainability: Insights from an ecophysiological model of plant-pest interactions and multi-criteria decision analysis. Eur. J. Agron. 2022, 139, 126571.

- Zhu, Q. Integrated Environment Monitoring and Data Management in Agriculture. In Encyclopedia of Smart Agriculture Technologies; Zhang, Q., Ed.; Springer International Publishing: Cham, Switzerland, 2022; pp. 1–12.

- Zhang, J.; Nie, J.; Cao, W.; Gao, Y.; Lu, Y.; Liao, Y. Long-term green manuring to substitute partial chemical fertilizer simultaneously improving crop productivity and soil quality in a double-rice cropping system. Eur. J. Agron. 2023, 142, 126641.

- Nowak, B. Precision agriculture: Where do we stand? A review of the adoption of precision agriculture technologies on field crops farms in developed countries. Agric. Res. 2021, 10, 515–522.

- Yin, H.; Cao, Y.; Marelli, B.; Zeng, X.; Mason, A.J.; Cao, C. Soil sensors and plant wearables for smart and precision agriculture. Adv. Mater. 2021, 33, 2007764.

- Cimtay, Y.; Özbay, B.; Yilmaz, G.; Bozdemir, E. A new vegetation index in short-wave infrared region of electromagnetic spectrum. IEEE Access 2021, 9, 148535–148545.

- Khanna, A.; Kaur, S. Evolution of Internet of Things (IoT) and its significant impact in the field of Precision Agriculture. Comput. Electron. Agric. 2019, 157, 218–231.

- Kahvecı, M. Contribution of GNSS in precision agriculture. In Proceedings of the 2017 8th International Conference on Recent Advances in Space Technologies (RAST), Istanbul, Turkey, 19–22 June 2017; pp. 513–516.

- Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of remote sensing in precision agriculture: A review. Remote Sens. 2020, 12, 3136.

- Zhang, P.; Guo, Z.; Ullah, S.; Melagraki, G.; Afantitis, A.; Lynch, I. Nanotechnology and artificial intelligence to enable sustainable and precision agriculture. Nat. Plants 2021, 7, 864–876.

- Pádua, L.; Adão, T.; Hruška, J.; Sousa, J.J.; Peres, E.; Morais, R.; Sousa, A. Very high resolution aerial data to support multi-temporal precision agriculture information management. Procedia Comput. Sci. 2017, 121, 407–414.

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Bendig, J.; Bolten, A.; Bareth, G. UAV-based imaging for multi-temporal, very high resolution crop surface models to monitor crop growth variability. In Unmanned Aerial Vehicles (UAVs) for Multi-Temporal Crop Surface Modelling; Schweizerbart Science Publishers: Stuttgart, Germany, 2013; p. 44.

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148.

- Martins, L.M.; Castro, J.P.; Bento, R.; Sousa, J.J. Monitorização da condição fitossanitária do castanheiro por fotografia aérea obtida com aeronave não tripulada. Rev. Ciências Agrárias 2015, 38, 184–190.

- Vannini, A.; Vettraino, A.; Fabi, A.; Montaghi, A.; Valentini, R.; Belli, C. Monitoring ink disease of chestnut with the airborne multispectral system ASPIS. In Proceedings of the III International Chestnut Congress 693, Chaves, Portugal, 20–23 October 2004; pp. 529–534.

- Martins, L.; Castro, J.; Macedo, W.; Marques, C.; Abreu, C. Assessment of the spread of chestnut ink disease using remote sensing and geostatistical methods. Eur. J. Plant Pathol. 2007, 119, 159–164.

- Castro, J.; Azevedo, J.; Martins, L. Temporal analysis of sweet chestnut decline in northeastern Portugal using geostatistical tools. In Proceedings of the I European Congress on Chestnut-Castanea 2009 866, Cuneo-Torino, Italy, 13–16 October 2009; pp. 405–410.

- Martins, L.; Castro, J.P.; Macedo, F.; Marques, C.; Abreu, C.G. Índices espectrais em fotografia aérea de infravermelho próximo na monitorização da doença tinta do castanheiro. In Proceedings of the 5º Congresso Florestal Nacional, SPCF-Sociedade Portuguesa de Ciências Florestais, Instituto Politécnico de Viseu, Viseu, Portugal, 16 May 2005.

- Montagnoli, A.; Fusco, S.; Terzaghi, M.; Kirschbaum, A.; Pflugmacher, D.; Cohen, W.B.; Scippa, G.S.; Chiatante, D. Estimating forest aboveground biomass by low density lidar data in mixed broad-leaved forests in the Italian Pre-Alps. For. Ecosyst. 2015, 2, 1–9.

- Prada, M.; Cabo, C.; Hernández-Clemente, R.; Hornero, A.; Majada, J.; Martínez-Alonso, C. Assessing canopy responses to thinnings for sweet chestnut coppice with time-series vegetation indices derived from landsat-8 and sentinel-2 imagery. Remote Sens. 2020, 12, 3068.

- Marchetti, F.; Waske, B.; Arbelo, M.; Moreno-Ruíz, J.A.; Alonso-Benito, A. Mapping Chestnut stands using bi-temporal VHR data. Remote Sens. 2019, 11, 2560.

- Martins, L.; Castro, J.; Bento, R.; Sousa, J. Chestnut health monitoring by aerial photographs obtained by unnamed aerial vehicle. Rev. Ciências Agrárias 2015, 38, 184–190.

- Pádua, L.; Hruška, J.; Bessa, J.; Adão, T.; Martins, L.M.; Gonçalves, J.A.; Peres, E.; Sousa, A.M.; Castro, J.P.; Sousa, J.J. Multi-temporal analysis of forestry and coastal environments using UASs. Remote Sens. 2017, 10, 24.

- Marques, P.; Pádua, L.; Adão, T.; Hruška, J.; Peres, E.; Sousa, A.; Sousa, J.J. UAV-based automatic detection and monitoring of chestnut trees. Remote Sens. 2019, 11, 855.

- Di Gennaro, S.F.; Nati, C.; Dainelli, R.; Pastonchi, L.; Berton, A.; Toscano, P.; Matese, A. An automatic UAV based segmentation approach for pruning biomass estimation in irregularly spaced chestnut orchards. Forests 2020, 11, 308.

- Pádua, L.; Marques, P.; Martins, L.; Sousa, A.; Peres, E.; Sousa, J.J. Monitoring of chestnut trees using machine learning techniques applied to UAV-based multispectral data. Remote Sens. 2020, 12, 3032.

- Albahar, M. A survey on deep learning and its impact on agriculture: Challenges and opportunities. Agriculture 2023, 13, 540.

- Rahnemoonfar, M.; Sheppard, C. Deep Count: Fruit Counting Based on Deep Simulated Learning. Sensors 2017, 17, 905.

- Afonso, M.; Fonteijn, H.; Fiorentin, F.S.; Lensink, D.; Mooij, M.; Faber, N.; Polder, G.; Wehrens, R. Tomato Fruit Detection and Counting in Greenhouses Using Deep Learning. Front. Plant Sci. 2020, 11, 571299.

- Gao, F.; Fang, W.; Sun, X.; Wu, Z.; Zhao, G.; Li, G.; Li, R.; Fu, L.; Zhang, Q. A novel apple fruit detection and counting methodology based on deep learning and trunk tracking in modern orchard. Comput. Electron. Agric. 2022, 197, 107000.

- Häni, N.; Roy, P.; Isler, V. A Comparative Study of Fruit Detection and Counting Methods for Yield Mapping in Apple Orchards. J. Field Robot. 2020, 37, 263–282.

- Apolo-Apolo, O.E.; Pérez-Ruiz, M.; Martínez-Guanter, J.; Valente, J. A Cloud-Based Environment for Generating Yield Estimation Maps From Apple Orchards Using UAV Imagery and a Deep Learning Technique. Front. Plant Sci. 2020, 11, 1086.

- Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning for real-time fruit detection and orchard fruit load estimation: Benchmarking of ‘MangoYOLO’. Precis. Agric. 2019, 20, 1107–1135.

- Xiong, J.; Liu, Z.; Chen, S.; Liu, B.; Zheng, Z.; Zhong, Z.; Yang, Z.; Peng, H. Visual detection of green mangoes by an unmanned aerial vehicle in orchards based on a deep learning method. Biosyst. Eng. 2020, 194, 261–272.

- Apolo-Apolo, O.E.; Martínez-Guanter, J.; Egea, G.; Raja, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.

- Xiong, Z.; Wang, L.; Zhao, Y.; Lan, Y. Precision Detection of Dense Litchi Fruit in UAV Images Based on Improved YOLOv5 Model. Remote Sens. 2023, 15, 4017.

- Neupane, B.; Horanont, T.; Hung, N.D. Deep learning based banana plant detection and counting using high-resolution red-green-blue (RGB) images collected from unmanned aerial vehicle (UAV). PLoS ONE 2019, 14, e0223906.

- Kalantar, A.; Edan, Y.; Gur, A.; Klapp, I. A deep learning system for single and overall weight estimation of melons using unmanned aerial vehicle images. Comput. Electron. Agric. 2020, 178, 105748.

- Mengoli, D.; Bortolotti, G.; Piani, M.; Manfrini, L. On-line real-time fruit size estimation using a depth-camera sensor. In Proceedings of the 2022 IEEE Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Perugia, Italy, 3–5 November 2022; pp. 86–90.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 386–397.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. arXiv 2017, arXiv:1611.05431.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 770–778, ISBN 9781467388504.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2018, arXiv:1708.02002.

- Sun, Y.; Hao, Z.; Guo, Z.; Liu, Z.; Huang, J. Detection and Mapping of Chestnut Using Deep Learning from High-Resolution UAV-Based RGB Imagery. Remote Sens. 2023, 15, 4923.

- Zhong, Q.; Zhang, H.; Tang, S.; Li, P.; Lin, C.; Zhang, L.; Zhong, N. Feasibility Study of Combining Hyperspectral Imaging with Deep Learning for Chestnut-Quality Detection. Foods 2023, 12, 2089.

- Li, X.; Jiang, H.; Jiang, X.; Shi, M. Identification of Geographical Origin of Chinese Chestnuts Using Hyperspectral Imaging with 1D-CNN Algorithm. Agriculture 2021, 11, 1274.

- Adão, T.; Pádua, L.; Pinho, T.M.; Hruška, J.; Sousa, A.; Sousa, J.J.; Morais, R.; Peres, E. Multi-Purpose Chestnut Clusters Detection Using Deep Learning: A Preliminary Approach. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4238, 1–7.

- Arakawa, T.; Tanaka, T.S.T.; Kamio, S. Detection of on-tree chestnut fruits using deep learning and RGB unmanned aerial vehicle imagery for estimation of yield and fruit load. Agron. J. 2024, 116, 973–981.

- Comba, L.; Biglia, A.; Sopegno, A.; Grella, M.; Dicembrini, E.; Ricauda Aimonino, D.; Gay, P. Convolutional Neural Network Based Detection of Chestnut Burrs in UAV Aerial Imagery. In AIIA 2022: Biosystems Engineering Towards the Green Deal; Ferro, V., Giordano, G., Orlando, S., Vallone, M., Cascone, G., Porto, S.M.C., Eds.; Lecture Notes in Civil Engineering; Springer: Cham, Switzerland, 2023; pp. 501–508.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Torres-Sánchez, J.; Mesas-Carrascosa, F.J.; Santesteban, L.G.; Jiménez-Brenes, F.M.; Oneka, O.; Villa-Llop, A.; Loidi, M.; López-Granados, F. Grape Cluster Detection Using UAV Photogrammetric Point Clouds as a Low-Cost Tool for Yield Forecasting in Vineyards. Sensors 2021, 21, 3083.

- Wu, G.; Li, B.; Zhu, Q.; Huang, M.; Guo, Y. Using color and 3D geometry features to segment fruit point cloud and improve fruit recognition accuracy. Comput. Electron. Agric. 2020, 174, 105475.

- Jurado-Rodríguez, D.; Jurado, J.M.; Pádua, L.; Neto, A.; Muñoz-Salinas, R.; Sousa, J.J. Semantic segmentation of 3D car parts using UAV-based images. Comput. Graph. 2022, 107, 93–103.

- Weatherall, I.L.; Coombs, B.D. Skin Color Measurements in Terms of CIELAB Color Space Values. J. Investig. Dermatol. 1992, 99, 468–473.

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. arXiv 2017, arXiv:1612.01105.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 8 January 2024).

- Bardis, M.; Houshyar, R.; Chantaduly, C.; Ushinsky, A.; Glavis-Bloom, J.; Shaver, M.; Chow, D.; Uchio, E.; Chang, P. Deep Learning with Limited Data: Organ Segmentation Performance by U-Net. Electronics 2020, 9, 1199.

- Iakubovskii, P. Segmentation Models. 2024. Available online: https://github.com/qubvel/segmentation_models (accessed on 5 January 2024).

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

- Chollet, F. Deep Learning with Python; Manning Publications Company: Shelter Island, NY, USA, 2017.

- Buslaev, A.; Parinov, A.; Khvedchenya, E.; Iglovikov, V.I.; Kalinin, A.A. Albumentations: Fast and flexible image augmentations. Information 2020, 11, 125.

- Lin, T.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

- Yu, T.; Hu, C.; Xie, Y.; Liu, J.; Li, P. Mature pomegranate fruit detection and location combining improved F-PointNet with 3D point cloud clustering in orchard. Comput. Electron. Agric. 2022, 200, 107233.

- Gené-Mola, J.; Sanz-Cortiella, R.; Rosell-Polo, J.R.; Morros, J.R.; Ruiz-Hidalgo, J.; Vilaplana, V.; Gregorio, E. Fruit detection and 3D location using instance segmentation neural networks and structure-from-motion photogrammetry. Comput. Electron. Agric. 2020, 169, 105165.

- Miranda, J.C.; Gené-Mola, J.; Zude-Sasse, M.; Tsoulias, N.; Escolà, A.; Arnó, J.; Rosell-Polo, J.R.; Sanz-Cortiella, R.; Martínez-Casasnovas, J.A.; Gregorio, E. Fruit sizing using AI: A review of methods and challenges. Postharvest Biol. Technol. 2023, 206, 112587.

- Chen, S.W.; Shivakumar, S.S.; Dcunha, S.; Das, J.; Okon, E.; Qu, C.; Taylor, C.J.; Kumar, V. Counting Apples and Oranges With Deep Learning: A Data-Driven Approach. IEEE Robot. Autom. Lett. 2017, 2, 781–788.

- Kestur, R.; Meduri, A.; Narasipura, O. MangoNet: A deep semantic segmentation architecture for a method to detect and count mangoes in an open orchard. Eng. Appl. Artif. Intell. 2019, 77, 59–69.

- Bargoti, S.; Underwood, J.P. Image Segmentation for Fruit Detection and Yield Estimation in Apple Orchards. J. Field Robot. 2017, 34, 1039–1060.

- Olson, D.; Anderson, J. Review on unmanned aerial vehicles, remote sensors, imagery processing, and their applications in agriculture. Agron. J. 2021, 113, 971–992.

| Dataset | U-Net F1 | U-Net IoU | U-Net Precision | U-Net Recall | LinkNet F1 | LinkNet IoU | LinkNet Precision | LinkNet Recall | PSPNet F1 | PSPNet IoU | PSPNet Precision | PSPNet Recall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dataset 1 | 0.56 | 0.39 | 0.51 | 0.72 | 0.53 | 0.36 | 0.50 | 0.69 | 0.51 | 0.34 | 0.50 | 0.54 |
| Dataset 2 | 0.76 | 0.62 | 0.78 | 0.79 | 0.76 | 0.62 | 0.76 | 0.81 | 0.71 | 0.55 | 0.71 | 0.76 |
| Merged | 0.67 | 0.52 | 0.76 | 0.49 | 0.67 | 0.52 | 0.73 | 0.54 | 0.65 | 0.48 | 0.57 | 0.66 |
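The four metrics reported in the table above have standard pixel-wise definitions, which the following minimal sketch illustrates on a toy pair of binary masks. How the reported values were aggregated (per image or over the whole test set) is not stated in this section, so the function name `segmentation_metrics` and the toy masks are illustrative assumptions only, not the authors' evaluation code.

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise precision, recall, F1 and IoU for two binary masks.

    Both masks are nested lists of 0/1 with identical shape
    (a hypothetical stand-in for real model output and annotation).
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1          # burr pixel correctly predicted
            elif p and not t:
                fp += 1          # background predicted as burr
            elif t and not p:
                fn += 1          # burr pixel missed
    errors = fp + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * tp / (2 * tp + errors) if tp + errors else 0.0
    iou = tp / (tp + errors) if tp + errors else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Toy example: 3 true positives, 1 false positive, 1 false negative.
truth = [[1, 1, 0, 0],
         [1, 1, 0, 0],
         [0, 0, 0, 0]]
pred = [[1, 1, 1, 0],
        [1, 0, 0, 0],
        [0, 0, 0, 0]]

metrics = segmentation_metrics(pred, truth)
print(metrics)  # precision 0.75, recall 0.75, f1 0.75, iou 0.6
```

When computed on the same set of pixels, the two overlap scores are tied by IoU = F1 / (2 − F1); for example, F1 = 0.76 corresponds to IoU ≈ 0.61. Values averaged image by image need not satisfy this identity exactly.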

| Training | Test | U-Net F1 | U-Net IoU | U-Net Precision | U-Net Recall | LinkNet F1 | LinkNet IoU | LinkNet Precision | LinkNet Recall | PSPNet F1 | PSPNet IoU | PSPNet Precision | PSPNet Recall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dataset 1 | Dataset 2 | 0.42 | 0.27 | 0.29 | 0.94 | 0.40 | 0.25 | 0.27 | 0.95 | 0.35 | 0.22 | 0.23 | 0.95 |
| Dataset 2 | Dataset 1 | 0.07 | 0.04 | 0.94 | 0.04 | 0.08 | 0.04 | 0.88 | 0.04 | 0.02 | 0.01 | 0.85 | 0.01 |
| Merged | Dataset 1 | 0.43 | 0.28 | 0.84 | 0.30 | 0.49 | 0.33 | 0.79 | 0.38 | 0.54 | 0.38 | 0.53 | 0.59 |
| Merged | Dataset 2 | 0.75 | 0.60 | 0.72 | 0.83 | 0.73 | 0.57 | 0.68 | 0.81 | 0.67 | 0.51 | 0.63 | 0.80 |

| Dataset | Model | Mean Value | Mean Count | Mode Value | Mode Count | Median Value | Median Count | Regions | Regions Size Std |
|---|---|---|---|---|---|---|---|---|---|
| Dataset 1 | U-Net | 174 | 426 | 5 | 14,824 | 122 | 608 | 6142 | 179 |
| Dataset 1 | LinkNet | 171 | 369 | 5 | 12,695 | 112 | 563 | 5915 | 200 |
| Dataset 1 | PSPNet | 159 | 312 | 5 | 9904 | 109 | 455 | 4454 | 192 |
| Dataset 2 | U-Net | 120 | 930 | 84 | 1328 | 103 | 1083 | 4331 | 84 |
| Dataset 2 | LinkNet | 122 | 965 | 80 | 1471 | 101 | 1165 | 4399 | 92 |
| Dataset 2 | PSPNet | 121 | 1021 | 59 | 2094 | 102 | 1210 | 3945 | 92 |
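In the table above, each Value multiplied by its Count gives nearly the same product within a row. For U-Net on Dataset 1: 174 × 426 = 74,124, 5 × 14,824 = 74,120, and 122 × 608 = 74,176. This is consistent with a count obtained by dividing the total segmented area by a reference region size (mean, mode, or median). The sketch below illustrates that reading; it is an interpretation of the table, not the authors' confirmed procedure. The pixel unit, the 4-connectivity, and the helper names `region_sizes` and `count_estimates` are all assumptions.

```python
from collections import Counter, deque
from statistics import mean, median


def region_sizes(mask):
    """Sizes of 4-connected foreground regions in a binary mask (nested lists of 0/1)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    sizes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill from an unvisited foreground pixel.
            seen[r][c] = True
            queue = deque([(r, c)])
            size = 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            sizes.append(size)
    return sizes


def count_estimates(sizes):
    """Map each statistic name to (reference size, total area / reference size)."""
    total_area = sum(sizes)
    reference = {
        "mean": mean(sizes),
        "mode": Counter(sizes).most_common(1)[0][0],
        "median": median(sizes),
    }
    return {name: (value, total_area / value) for name, value in reference.items()}


# Toy mask with three regions of sizes 1, 4 and 1 (6 foreground pixels in total).
mask = [[1, 0, 0, 1, 1],
        [0, 0, 0, 1, 1],
        [1, 0, 0, 0, 0]]

sizes = region_sizes(mask)
estimates = count_estimates(sizes)
print(sizes)      # [1, 4, 1]
print(estimates)  # mean -> (2, 3.0), mode -> (1, 6.0), median -> (1, 6.0)
```

Under this reading, a small mode (such as the value 5 in several rows) inflates the estimated count, because many tiny regions pull the reference size far below the size of a typical burr.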

| Dataset | Model | Mean Value | Mean Count | Mode Value | Mode Count | Median Value | Median Count | Regions | Regions Size Std |
|---|---|---|---|---|---|---|---|---|---|
| Dataset 1 | U-Net | 52 | 218 | 12 | 945 | 46 | 245 | 5847 | 38 |
| Dataset 1 | LinkNet | 56 | 314 | 5 | 3516 | 44 | 400 | 7839 | 52 |
| Dataset 1 | PSPNet | 146 | 326 | 5 | 9497 | 98 | 485 | 4862 | 197 |
| Dataset 2 | U-Net | 132 | 930 | 82 | 1496 | 111 | 1106 | 4343 | 97 |
| Dataset 2 | LinkNet | 129 | 1113 | 8 | 17,939 | 108 | 1328 | 4661 | 101 |
| Dataset 2 | PSPNet | 139 | 1095 | 8 | 19,022 | 115 | 1324 | 4007 | 114 |

| Training | Testing | F1 | Precision | Recall | mAP at 50% of IoU | Counting () | Counting () |
|---|---|---|---|---|---|---|---|
| Dataset 1 | Dataset 1 | 0.52 | 0.53 | 0.52 | 0.47 | 379 | 729 |
| Dataset 1 | Dataset 2 | 0.34 | 0.49 | 0.26 | 0.24 | 729 | 943 |
| Dataset 2 | Dataset 1 | 0.15 | 0.12 | 0.20 | 0.10 | 67 | 244 |
| Dataset 2 | Dataset 2 | 0.78 | 0.81 | 0.76 | 0.81 | 2403 | 3802 |
| Merged | Dataset 1 | 0.51 | 0.50 | 0.52 | 0.45 | 659 | 1080 |
| Merged | Dataset 2 | 0.77 | 0.80 | 0.75 | 0.80 | 2456 | 4038 |

| Characteristics | Dataset 1 | Arakawa et al. [53] | Comba et al. [54] |
|---|---|---|---|
| Samples | 144 (training), 21 (counting) | 500 (training), 53 (counting) | 44 |
| Image Size | 320/336 | 418 (training), 608 (counting) | 16 |
| Phytosanitary problems | Yes | No | No |
| Flight height | 30 m | 12–15 m | 20 m |
| Weather Conditions | Sunny | Cloudy | Sunny |
| Image Type | RGB | RGB | Multispectral |
| Cultivar | Castanea sativa | Castanea crenata, Castanea mollissima | Bouche de Bétizac (hybrid between Castanea sativa and Castanea crenata) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Carneiro, G.A.; Santos, J.; Sousa, J.J.; Cunha, A.; Pádua, L. Chestnut Burr Segmentation for Yield Estimation Using UAV-Based Imagery and Deep Learning. Drones 2024, 8, 541. https://doi.org/10.3390/drones8100541