Using YOLO Object Detection to Identify Hare and Roe Deer in Thermal Aerial Video Footage—Possible Future Applications in Real-Time Automatic Drone Surveillance and Wildlife Monitoring

Abstract

1. Introduction

1.1. Automatic Detection and Computer Vision

1.2. You-Only-Look-Once-Based UAV Technology

1.3. Mean Average Precision

2. Materials and Methods

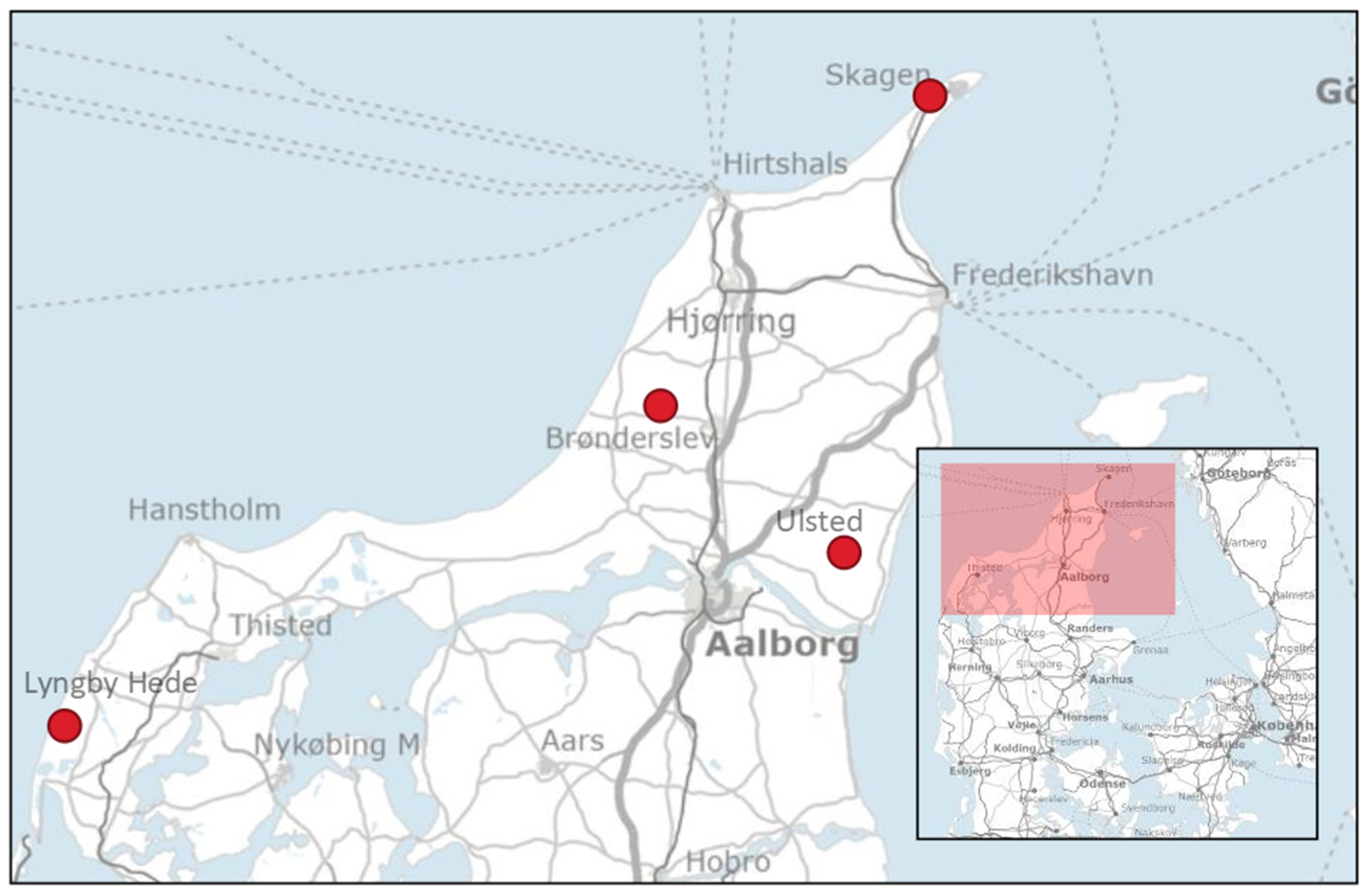

2.1. Collecting Thermal Footage of the Species

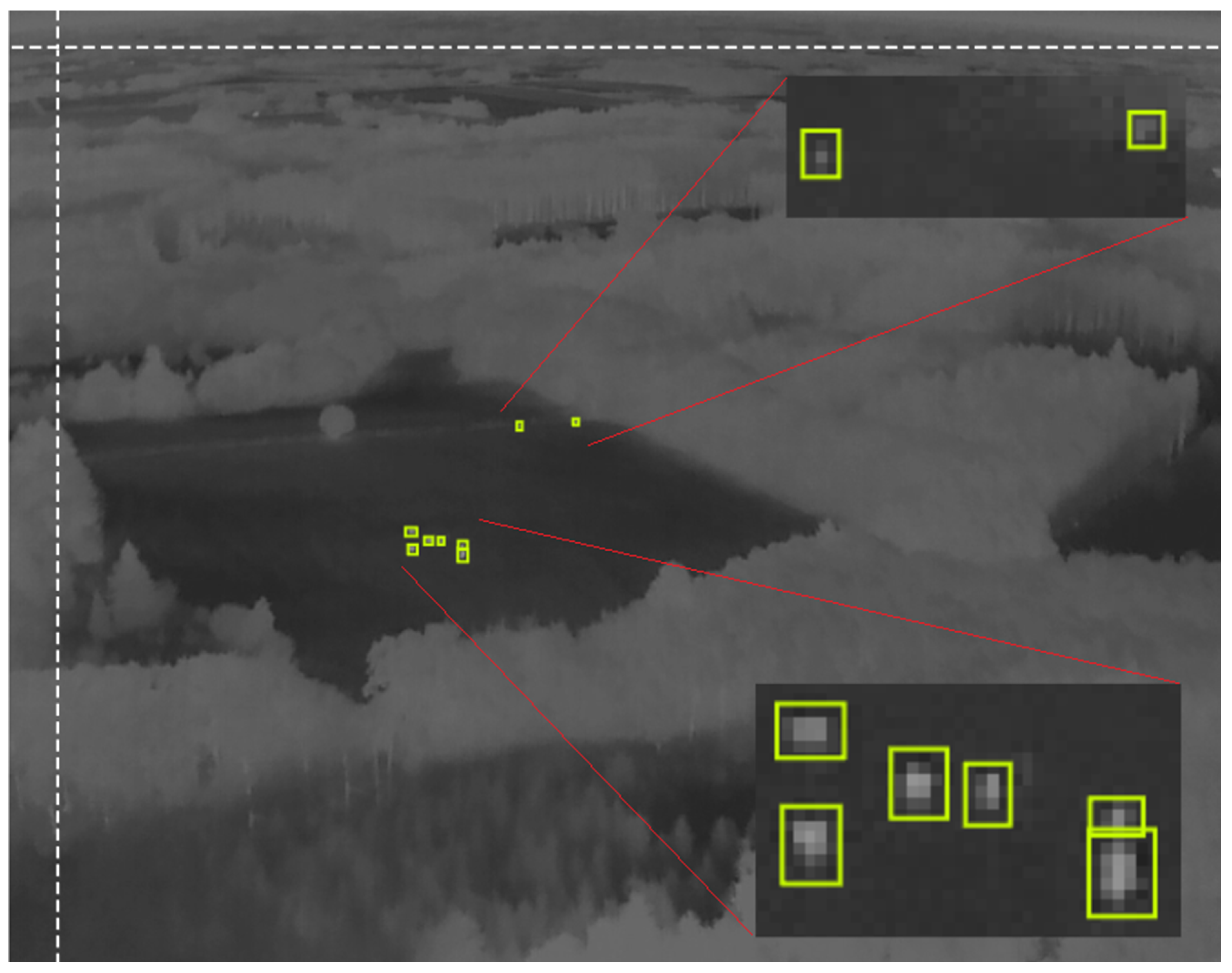

2.2. Image Annotation

2.3. Custom Training of YOLOv5 for Object Detection

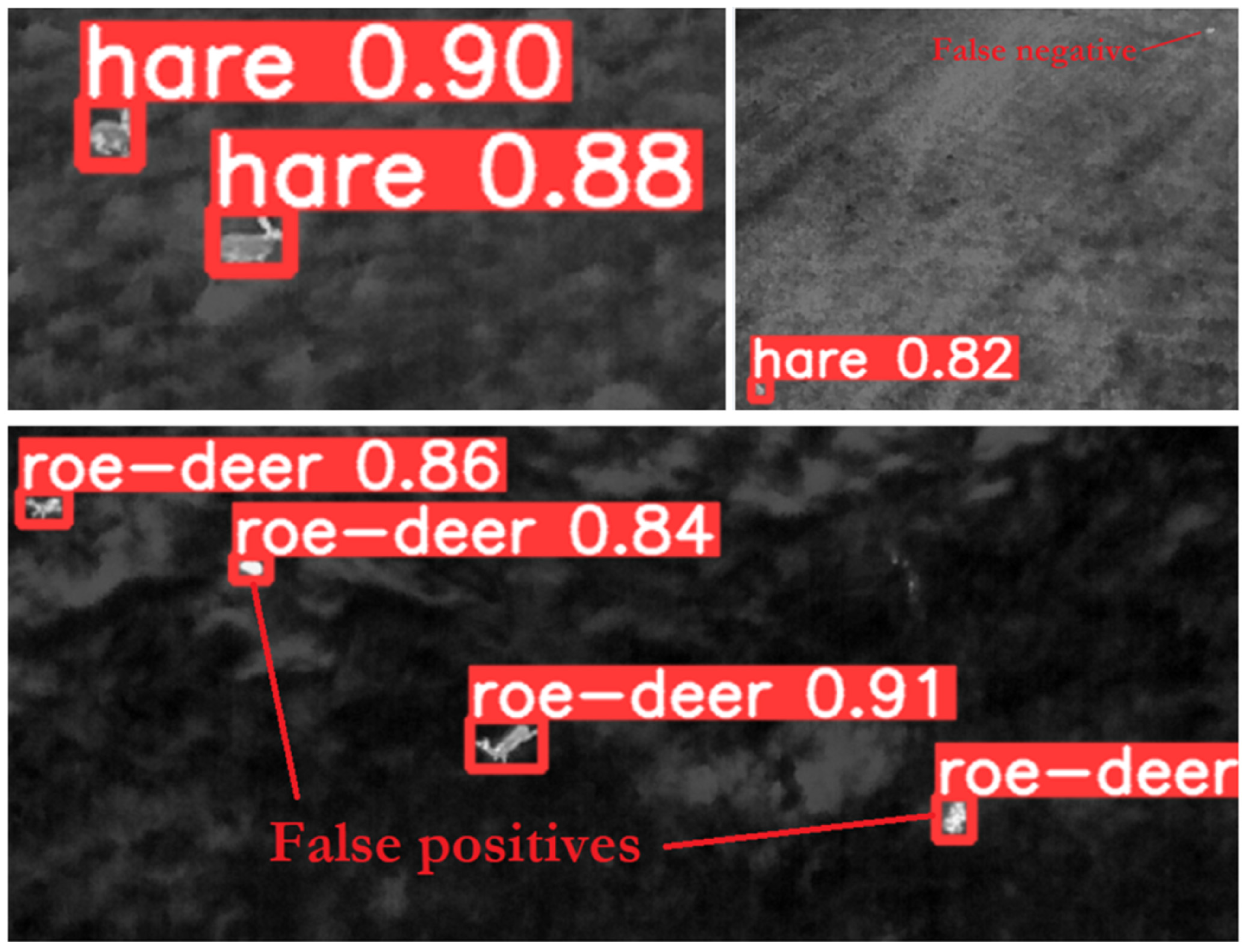

2.4. Evaluating Model Accuracy, Detection, and False Positives and False Negatives

3. Results

4. Discussion

4.1. Conceptual Algorithm for Automated Wildlife Monitoring Using YBUT

4.2. Limitations of Study

4.3. Similar Studies and Perspectives

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | Number of Annotated Images | Number of Objects per Image | Number of Training Set Images | Number of Validation Set Images | Number of Test Set Images |
|---|---|---|---|---|---|
| Hare | 627 | ~1.1 | 1310 | 123 | 40 |
| Roe deer | 158 | ~1.3 | 313 | 31 | 17 |
| POI | 260 | ~5.4 | 549 | 46 | 21 |
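
As a quick reading aid for the table above, the training, validation, and test counts can be turned into shares of each model's total. The sketch below only restates the table's numbers; the function name `split_shares` and the dictionary `SPLITS` are hypothetical helpers introduced for illustration and are not part of the study's pipeline.

```python
# Image counts per model, copied from the table above: (training, validation, test).
SPLITS = {
    "Hare": (1310, 123, 40),
    "Roe deer": (313, 31, 17),
    "POI": (549, 46, 21),
}


def split_shares(train, val, test):
    """Return each subset's share of the total image count, in percent."""
    total = train + val + test
    return tuple(round(100 * n / total, 1) for n in (train, val, test))


for model, counts in SPLITS.items():
    train_pct, val_pct, test_pct = split_shares(*counts)
    print(f"{model}: train {train_pct}%, validation {val_pct}%, test {test_pct}%")
```

Under this reading, all three models use roughly 87 to 89% of their images for training, with the remainder divided between validation and test.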

| Model/Confidence Limit | Trained Model mAP | Number of Objects | Correctly Annotated % | False Negative % | False Positive % |
|---|---|---|---|---|---|
| Hare 0.50 | 0.99 | 169 | 100 | 0 | 21 |
| Hare 0.80 | 0.99 | 169 | 72 | 28 | 0 |
| Roe deer 0.50 | 0.96 | 133 | 100 | 0 | 58 |
| Roe deer 0.80 | 0.96 | 133 | 97 | 3 | 24 |
| POI 0.20 | 0.43 | 624 | 60 | 40 | 10 |
| POI 0.50 | 0.43 | 624 | 29 | 71 | 2 |
| POI 0.80 | 0.43 | 624 | 0 | 100 | 0 |
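
In every row of the table above, the correctly annotated and false negative percentages sum to 100, and raising the confidence limit lowers the false positive percentage while raising the false negative percentage. A minimal sketch of how such figures could be computed from a list of detections is given below. It is an illustration only: the `Detection` class, the `rates_at_limit` function, and the toy data are hypothetical, and expressing false positives relative to the number of objects is an assumption, since the exact definition used in the study is not shown in this section.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    confidence: float         # model confidence score in [0, 1]
    object_id: Optional[int]  # matched ground-truth object, or None if nothing matches


def rates_at_limit(detections, n_objects, limit):
    """Percentages of found objects, missed objects, and false alarms at a confidence limit."""
    kept = [d for d in detections if d.confidence >= limit]
    # An object counts as found if at least one kept detection matches it.
    found = {d.object_id for d in kept if d.object_id is not None}
    false_positives = sum(1 for d in kept if d.object_id is None)
    correct_pct = 100 * len(found) / n_objects
    return {
        "correct_pct": correct_pct,
        "false_negative_pct": 100 - correct_pct,
        # Assumption: false positives are expressed relative to the number of objects.
        "false_positive_pct": 100 * false_positives / n_objects,
    }


# Hypothetical toy data: four real objects and two spurious detections.
toy = [
    Detection(0.95, 0),
    Detection(0.85, 1),
    Detection(0.65, 2),
    Detection(0.55, 3),
    Detection(0.60, None),
    Detection(0.52, None),
]

for limit in (0.50, 0.80):
    print(limit, rates_at_limit(toy, n_objects=4, limit=limit))
```

With the toy data, the lower limit finds every object but keeps both spurious detections, while the higher limit removes the spurious detections at the cost of missing two objects, which mirrors the trade-off visible in the hare rows.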

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Povlsen, P.; Bruhn, D.; Durdevic, P.; Arroyo, D.O.; Pertoldi, C. Using YOLO Object Detection to Identify Hare and Roe Deer in Thermal Aerial Video Footage—Possible Future Applications in Real-Time Automatic Drone Surveillance and Wildlife Monitoring. Drones 2024, 8, 2. https://doi.org/10.3390/drones8010002
