UAV Localization in Low-Altitude GNSS-Denied Environments Based on POI and Store Signage Text Matching in UAV Images

Abstract

1. Introduction

2. Related Work

- Relative vision localization

- Absolute vision localization

3. Methods

3.1. The LPS Framework

3.2. Introduction to POI

3.3. Text Recognition in the UAV Images

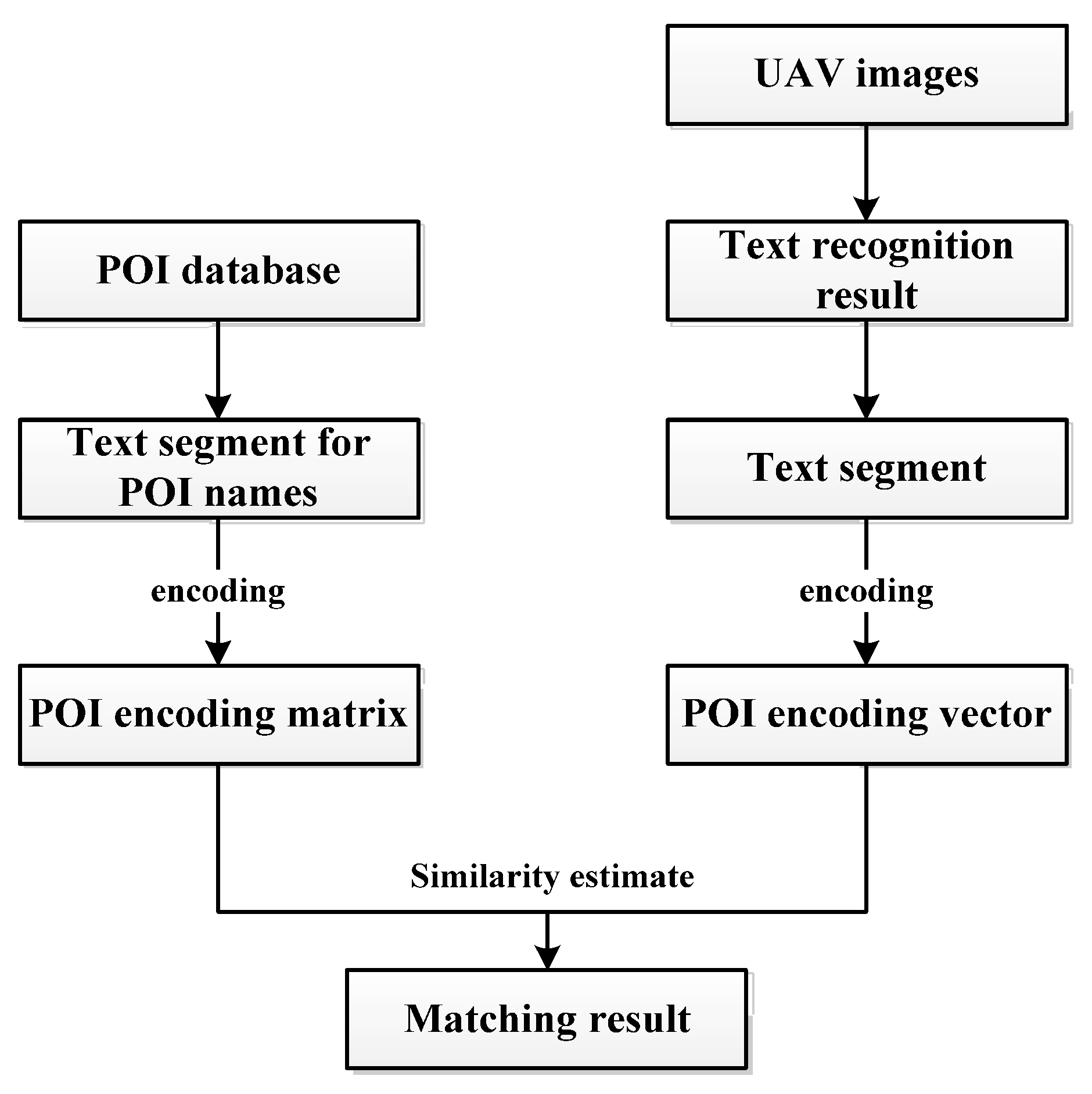

3.4. Fuzzy Matching of Store Signage Text and POI Names

- (1) Count the distinct phrases in the name attribute of the POI database, and calculate the IDF value of each phrase as follows:

$$\mathrm{IDF} = \log\frac{n}{\mathrm{count}}$$

where n is the total number of entries in the POI database and count is the number of POI entries containing the phrase.

- (2) Encode each POI name string as a vector of length m, where m is the number of distinct phrases in the POI database. Each position of the vector corresponds to one phrase and holds that phrase's IDF value if the phrase occurs in the name, and 0 otherwise.

- (3) For the store signage text recognized in the UAV images, first perform word segmentation, and then encode the result in the same way as in step (2).

- (4) Match the store signage text recognized in the UAV image against the names in the POI database. The POI database is traversed, and the similarity between each POI name and the store signage text is measured with the cosine function:

$$\mathrm{sim}(\mathbf{A}, \mathbf{B}) = \frac{\mathbf{A} \cdot \mathbf{B}}{\lVert \mathbf{A} \rVert \, \lVert \mathbf{B} \rVert}$$

where A and B are the encoding vectors of the POI name to be matched and of the store signage text, respectively. In this paper, a text is considered successfully matched if the similarity exceeds 0.75.
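The four matching steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `build_idf`, `encode`, `cosine`, and `match_signage` are hypothetical, the text is assumed to be already word-segmented (the paper does not name a segmenter), IDF is taken as log(n / count) without smoothing, and signage phrases that never occur in the POI database are dropped because they have no position in the length-m encoding.

```python
import math
from collections import Counter


def build_idf(poi_phrases: list[list[str]]) -> dict[str, float]:
    """Step (1): IDF = log(n / count) for every phrase in the POI names."""
    n = len(poi_phrases)
    # count = number of POI entries that contain the phrase (each entry counted once)
    counts = Counter(p for phrases in poi_phrases for p in set(phrases))
    return {p: math.log(n / c) for p, c in counts.items()}


def encode(phrases: list[str], vocab: list[str], idf: dict[str, float]) -> list[float]:
    """Steps (2)/(3): length-m vector with the IDF of each phrase present, else 0."""
    present = set(phrases)
    return [idf[p] if p in present else 0.0 for p in vocab]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_signage(sign_phrases, poi_names, poi_phrases, threshold=0.75):
    """Step (4): (POI name, similarity) pairs whose similarity exceeds the threshold."""
    idf = build_idf(poi_phrases)
    vocab = sorted(idf)  # a fixed phrase order defines the m vector positions
    query = encode(sign_phrases, vocab, idf)
    hits = []
    for name, phrases in zip(poi_names, poi_phrases):
        sim = cosine(encode(phrases, vocab, idf), query)
        if sim > threshold:
            hits.append((name, sim))
    return sorted(hits, key=lambda h: h[1], reverse=True)


# Toy, pre-segmented POI names (illustrative data only)
poi_names = ["Letu Tobacco Liquor Tea", "Letu Supermarket", "Qingnian Hotpot"]
poi_phrases = [["Letu", "Tobacco", "Liquor", "Tea"], ["Letu", "Supermarket"], ["Qingnian", "Hotpot"]]
print(match_signage(["Letu", "Tobacco", "Liquor"], poi_names, poi_phrases))
```

Because a phrase shared by many POIs (here "Letu") gets a low IDF, a truncated signage that keeps the distinctive phrases still scores well above 0.75 against the correct POI, while POIs that share only the common phrase score far below it.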

3.5. Scene Localization for UAV Images

- (1) Scene initialization localization

- (a) Because the UAV's position is highly uncertain at take-off, the POI database is spatially queried within a radius R (the position uncertainty) centered on the take-off position, and the retrieved entries serve as the candidate POIs for subsequent matching.

- (b) Text is recognized in the sequential UAV images and pre-processed. Because some store signages are truncated at the image border, leaving only a single word, any recognized text shorter than two words is discarded so that the matching is as reliable as possible. In addition, because consecutive UAV frames overlap heavily, the same text is detected repeatedly; to avoid duplicate matching, texts that are highly similar to one another under the IDF model are treated as duplicates and removed.

- (c) The recognized text is fuzzy matched against the names of the spatially retrieved POIs using the IDF model, and a match is considered successful when the similarity between the text and a POI name exceeds 0.75.

- (d) Because fuzzy matching of a single signage text may return several POIs, a strict distance constraint is imposed during scene initialization to ensure that the finally matched POIs are correct: the POIs matched to different signages must lie within 50 m of one another. While fewer than three texts have been successfully matched, text recognition continues on subsequent images and the results are matched with the POIs. Once three texts have been matched, the locations of the matched POIs are clustered with the DBSCAN algorithm, with the parameter eps set to 50 m. If a cluster contains at least two POIs, the POIs in that cluster are taken to correspond to the signages in the UAV images. If no cluster is formed, text recognition and POI matching continue on subsequent images.

- (2) Scene update

- (a) POI data are spatially retrieved within a 50 m radius centered on the initialized scene location, and the retrieved entries become the candidate POIs for subsequent matching.

- (b) Text is recognized in the j-th image frame, and any text shorter than two words is removed.

- (c) The recognized text is fuzzy matched against the names of the spatially retrieved POIs using the IDF model; the match is considered successful when the similarity between the text and a POI name exceeds 0.75.

- (d) If the match is successful, the location of the UAV image scene at that moment is taken to be the latitude and longitude of the POI corresponding to the signage. If the match fails, the POI spatial retrieval radius is reset to r and the next image frame is processed, where

$$r = V \times (j - i) + 50\ \mathrm{m}$$

V is the maximum flight speed of the UAV, and i is the index of the frame in which the scene was last successfully localized.
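The spatial retrieval, the DBSCAN check of step (1)(d), and the radius update of step (2)(d) can be sketched in Python as below. This is an illustrative sketch under assumptions the paper does not state: distances are haversine distances on a spherical Earth, DBSCAN's `min_samples` is taken as 2 (mirroring the "at least two POIs" rule), the largest cluster is kept if several form, the scene center is taken as the mean POI coordinate, and a hypothetical `frame_interval_s` factor converts the frame gap j - i into seconds (the default 1.0 reproduces the formula exactly). All function names are hypothetical, and the short-text and duplicate filters of step (1)(b) are not shown.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def haversine_m(p, q):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*p, *q))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def retrieve(pois, center, radius_m):
    """Spatial retrieval: keep (name, (lat, lon)) entries within radius_m of center."""
    return [poi for poi in pois if haversine_m(poi[1], center) <= radius_m]


def dbscan(points, eps_m, min_samples):
    """Plain DBSCAN over (lat, lon) points; label -1 marks noise."""
    labels = [None] * len(points)  # None = not yet visited

    def region(i):
        return [j for j in range(len(points)) if haversine_m(points[i], points[j]) <= eps_m]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region(i)
        if len(neighbors) < min_samples:
            labels[i] = -1
            continue
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region(j)
            if len(j_neighbors) >= min_samples:  # core point: keep expanding
                queue.extend(j_neighbors)
        cluster += 1
    return labels


def initialize_scene(matches, eps_m=50.0, min_samples=2, min_texts=3):
    """matches: (signage_text, (lat, lon)) for every POI matched so far.
    Returns (cluster, scene_center), or None if more frames are needed."""
    if len({text for text, _ in matches}) < min_texts:
        return None  # fewer than three matched texts: keep reading frames
    labels = dbscan([loc for _, loc in matches], eps_m, min_samples)
    clusters = {}
    for match, label in zip(matches, labels):
        if label != -1:
            clusters.setdefault(label, []).append(match)
    if not clusters:
        return None  # no cluster formed: keep reading frames
    best = max(clusters.values(), key=len)  # assumption: the largest cluster wins
    center = (sum(loc[0] for _, loc in best) / len(best),
              sum(loc[1] for _, loc in best) / len(best))
    return best, center


def update_radius(v_max_mps, i, j, base_m=50.0, frame_interval_s=1.0):
    """r = V x (j - i) + 50 m; frame_interval_s converts the frame gap to seconds."""
    return v_max_mps * (j - i) * frame_interval_s + base_m
```

For example, if one signage text matches two candidate POIs about 1 km apart, only the candidate lying within 50 m of the other texts' POIs ends up in the cluster, which is how the 50 m constraint resolves ambiguous matches.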

3.6. UAV Position Solving

- (1) The image coordinates Oi (Xi, Yi) of the center pixel of each store signage are calculated as the mean of the column and row coordinates of its four extracted corner points:

$$X_i = \frac{x_{i1} + x_{i2} + x_{i3} + x_{i4}}{4}, \qquad Y_i = \frac{y_{i1} + y_{i2} + y_{i3} + y_{i4}}{4}$$

- (2) Store signages at the same height in the image are identified. Because of the roll angle of the UAV when the images are taken, store signages at the same height may not lie in the same image row. To determine whether the store signages of ID.1 and ID.2 in Table 1 are at the same height, a straight line is drawn through the midpoint of A1D1 and the midpoint of B1C1. If this line passes through the rectangle formed by the corner points of the store signage of ID.2, the two signages are considered to be at the same height; otherwise, they are not. Checking every pair of signages in this way identifies all store signages at the same height.

- (3) If every height level contains only one store signage, the image is skipped. If step (2) finds more than one store signage at the same height, the signages at the lowest level are used to calculate the latitude and longitude of the corner points; if there are more than two signages at the lowest level, the two signages farthest apart are chosen. Therefore, in Figure 5, the signages of ID.2 and ID.4 are chosen to calculate the latitude and longitude of the corner points. Two corner points on the same vertical side of a store signage share the same latitude and longitude and differ only in height (e.g., points A2 and D2 in Figure 5). Point E in Figure 5 has the same latitude and longitude as A2 and D2; therefore,
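Steps (1) to (3) of the signage selection can be sketched in Python as below; the conversion of the chosen corner points to latitude and longitude is not shown. The corner layout is an assumption inferred from the text (A top-left, B top-right, C bottom-right, D bottom-left, so A-D and B-C are the left and right sides). The same-height test treats the line through the two side midpoints as infinite and checks whether the other signage's corners fall on both sides of it (or on it). Applying the test in both directions, grouping same-height signages into connected components, and taking "lowest level" as the group with the largest mean image row (y grows downward) are further assumptions; all names are hypothetical.

```python
import math
from itertools import combinations


def center(c):
    """Step (1): mean of the four corner coordinates, i.e. (X_i, Y_i)."""
    return sum(c[k][0] for k in "ABCD") / 4, sum(c[k][1] for k in "ABCD") / 4


def midpoint(p, q):
    return (p[0] + q[0]) / 2, (p[1] + q[1]) / 2


def line_hits(c1, c2):
    """Step (2): does the line through the midpoints of A1D1 and B1C1 cross signage 2?"""
    (px, py), (qx, qy) = midpoint(c1["A"], c1["D"]), midpoint(c1["B"], c1["C"])
    # The sign of the 2-D cross product tells on which side of the line each corner lies
    sides = [(qx - px) * (y - py) - (qy - py) * (x - px) for x, y in c2.values()]
    return not (all(s > 0 for s in sides) or all(s < 0 for s in sides))


def same_height_groups(signs):
    """Connected components of the same-height relation that hold at least two signages."""
    ids = sorted(signs)
    adj = {i: [j for j in ids if j != i and
               (line_hits(signs[i], signs[j]) or line_hits(signs[j], signs[i]))] for i in ids}
    seen, groups = set(), []
    for i in ids:
        if i in seen:
            continue
        stack, comp = [i], []
        seen.add(i)
        while stack:
            k = stack.pop()
            comp.append(k)
            for nb in adj[k]:
                if nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        if len(comp) >= 2:
            groups.append(sorted(comp))
    return groups


def choose_pair(signs):
    """Step (3): farthest-apart pair in the lowest same-height group, or None to skip."""
    groups = same_height_groups(signs)
    if not groups:
        return None  # every height level holds a single signage: skip this image
    lowest = max(groups, key=lambda g: sum(center(signs[k])[1] for k in g) / len(g))
    return max(combinations(lowest, 2),
               key=lambda p: math.dist(center(signs[p[0]]), center(signs[p[1]])))


def box(x0, y0, x1, y1):
    """Axis-aligned toy signage with corners A (top-left) to D (bottom-left)."""
    return {"A": (x0, y0), "B": (x1, y0), "C": (x1, y1), "D": (x0, y1)}


signs = {1: box(0, 0, 100, 20), 2: box(150, 5, 250, 25),
         3: box(0, 100, 80, 120), 4: box(300, 102, 400, 122), 5: box(150, 98, 220, 118)}
print(choose_pair(signs))
```

In this toy image, signages 1 and 2 form an upper row and signages 3, 4, and 5 a lower row; the lower row is selected and, within it, the two signages whose centers are farthest apart.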

4. Experiments and Results

4.1. Experiment Data

4.1.1. Hardware Configuration

4.1.2. Data Acquisition

4.1.3. POI Data

4.2. Results

4.2.1. Text Recognition Results

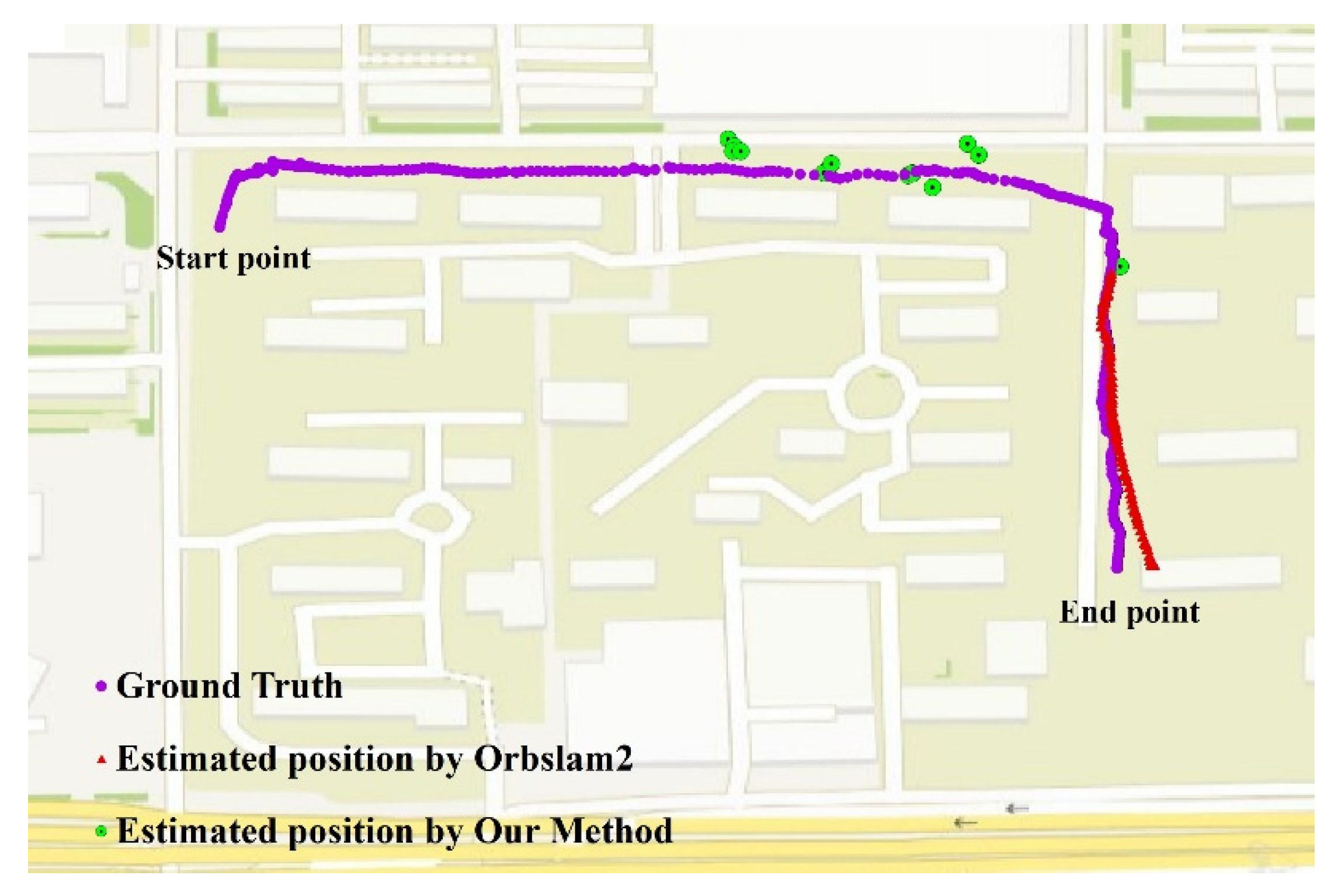

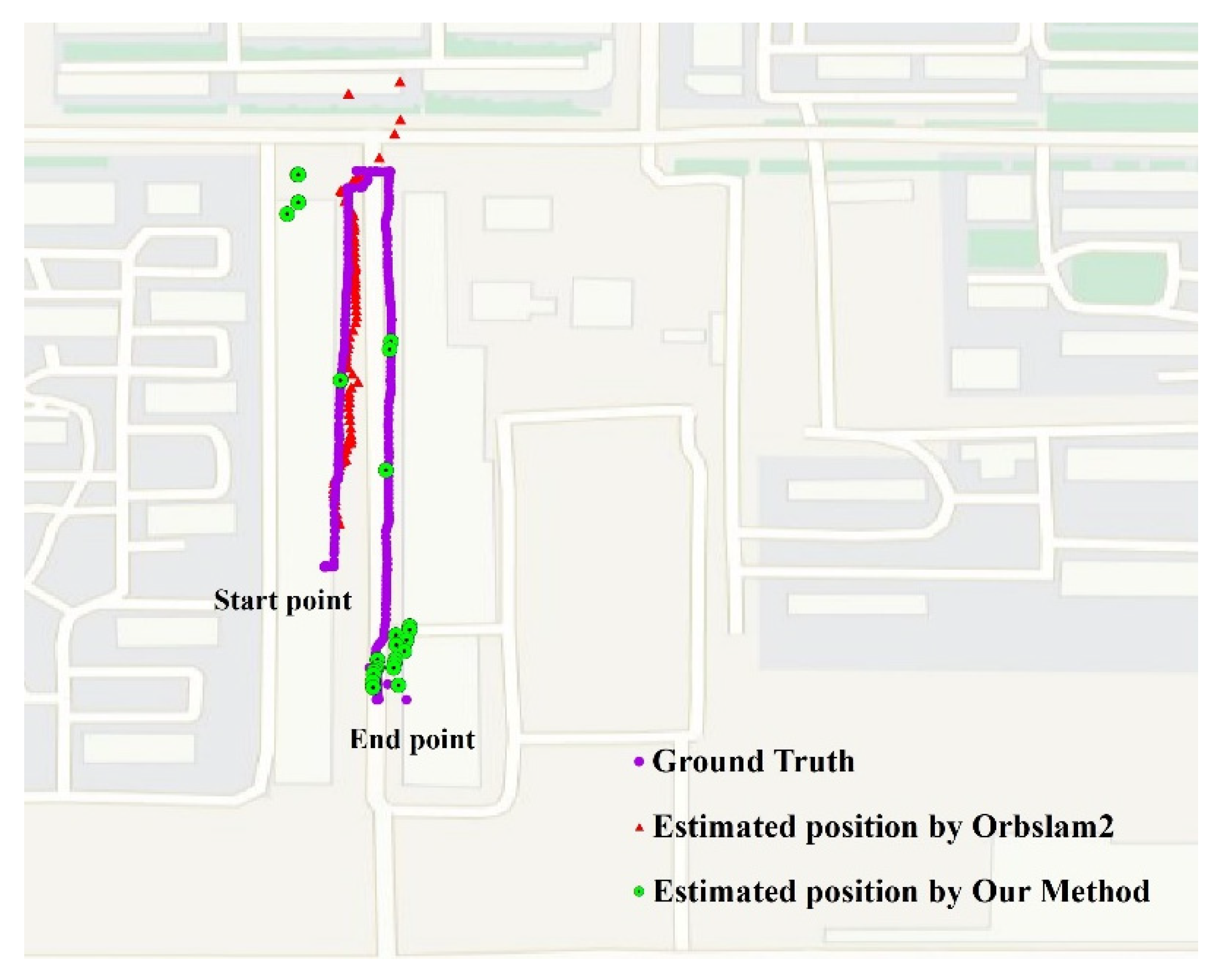

4.2.2. UAV Positioning Results

5. Discussion

5.1. Analysis of Localization Error

5.2. Analysis of the Number of Localization Points

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | Recognized Text | Pixel Coordinates of the Four Corner Points | POI Coordinates (lon, lat) |
|---|---|---|---|
| 1 | 晁文图文快印广告 * (Chaowen Graphic Quick Print & Advertising) | A1 (x11, y11) B1 (x12, y12) D1 (x14, y14) C1 (x13, y13) | (lon1, lat1) |
| 2 | 乐途烟酒茶 ** (Letu Tobacco, Liquor & Tea) | A2 (x21, y21) B2 (x22, y22) D2 (x24, y24) C2 (x23, y23) | (lon2, lat2) |
| 3 | 艺剪美美容美发头皮养护 *** (Yijianmei Beauty & Hair Salon, Scalp Care) | A3 (x31, y31) B3 (x32, y32) D3 (x34, y34) C3 (x33, y33) | (lon3, lat3) |
| 4 | 青年红 **** (Qingnianhong) | A4 (x41, y41) B4 (x42, y42) D4 (x44, y44) C4 (x43, y43) | (lon4, lat4) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.; Bai, J.; Wang, G.; Wu, X.; Sun, F.; Guo, Z.; Geng, H. UAV Localization in Low-Altitude GNSS-Denied Environments Based on POI and Store Signage Text Matching in UAV Images. Drones 2023, 7, 451. https://doi.org/10.3390/drones7070451

Liu, Yu, Jing Bai, Gang Wang, Xiaobo Wu, Fangde Sun, Zhengqiang Guo, and Hujun Geng. 2023. "UAV Localization in Low-Altitude GNSS-Denied Environments Based on POI and Store Signage Text Matching in UAV Images" Drones 7, no. 7: 451. https://doi.org/10.3390/drones7070451