Geometric and Radiometric Quality Assessments of UAV-Borne Multi-Sensor Systems: Can UAVs Replace Terrestrial Surveys?

Abstract

1. Introduction

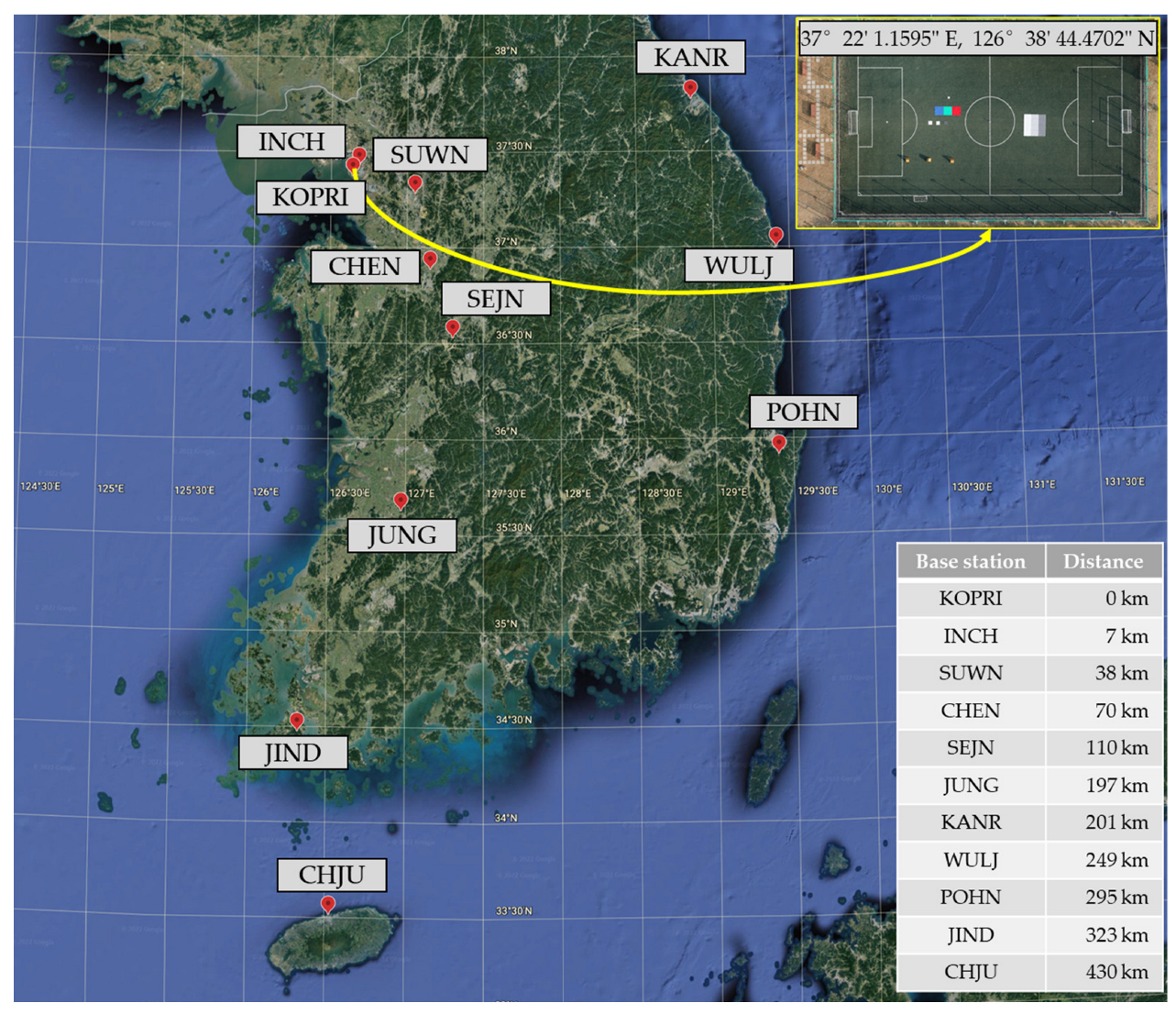

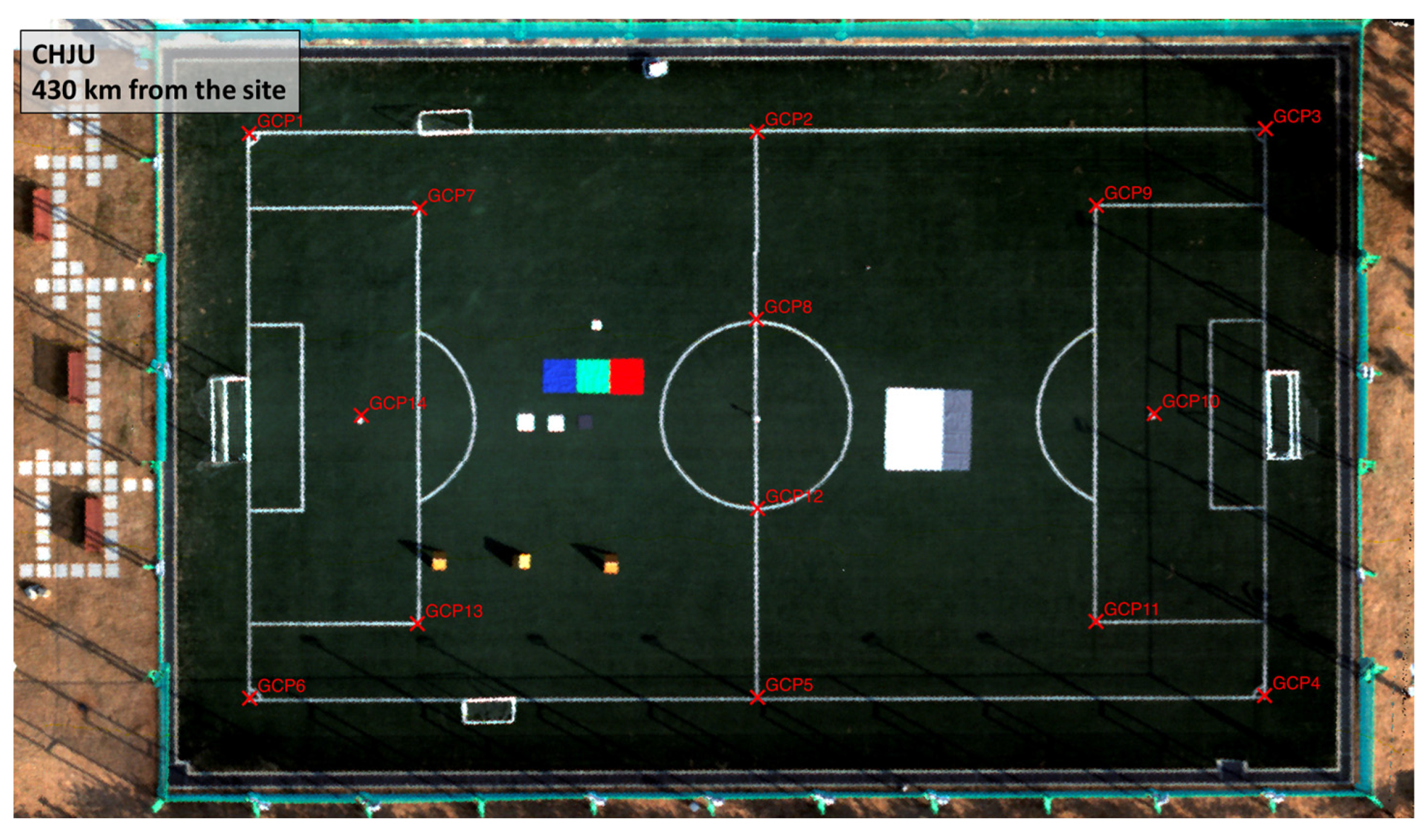

2. Data Acquisitions

3. Methods

3.1. Position Accuracy of Orthorectified Images

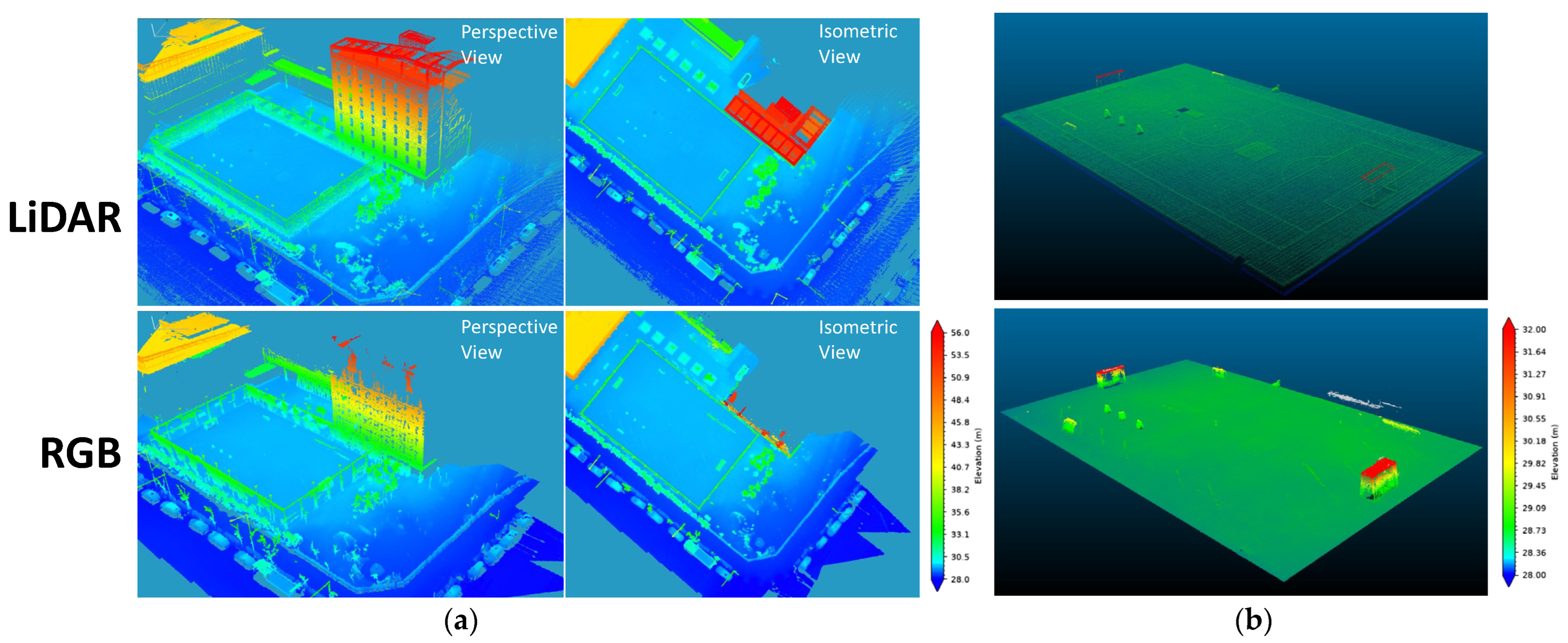

3.2. Quality and Vertical Accuracy of RGB and LiDAR Point Clouds

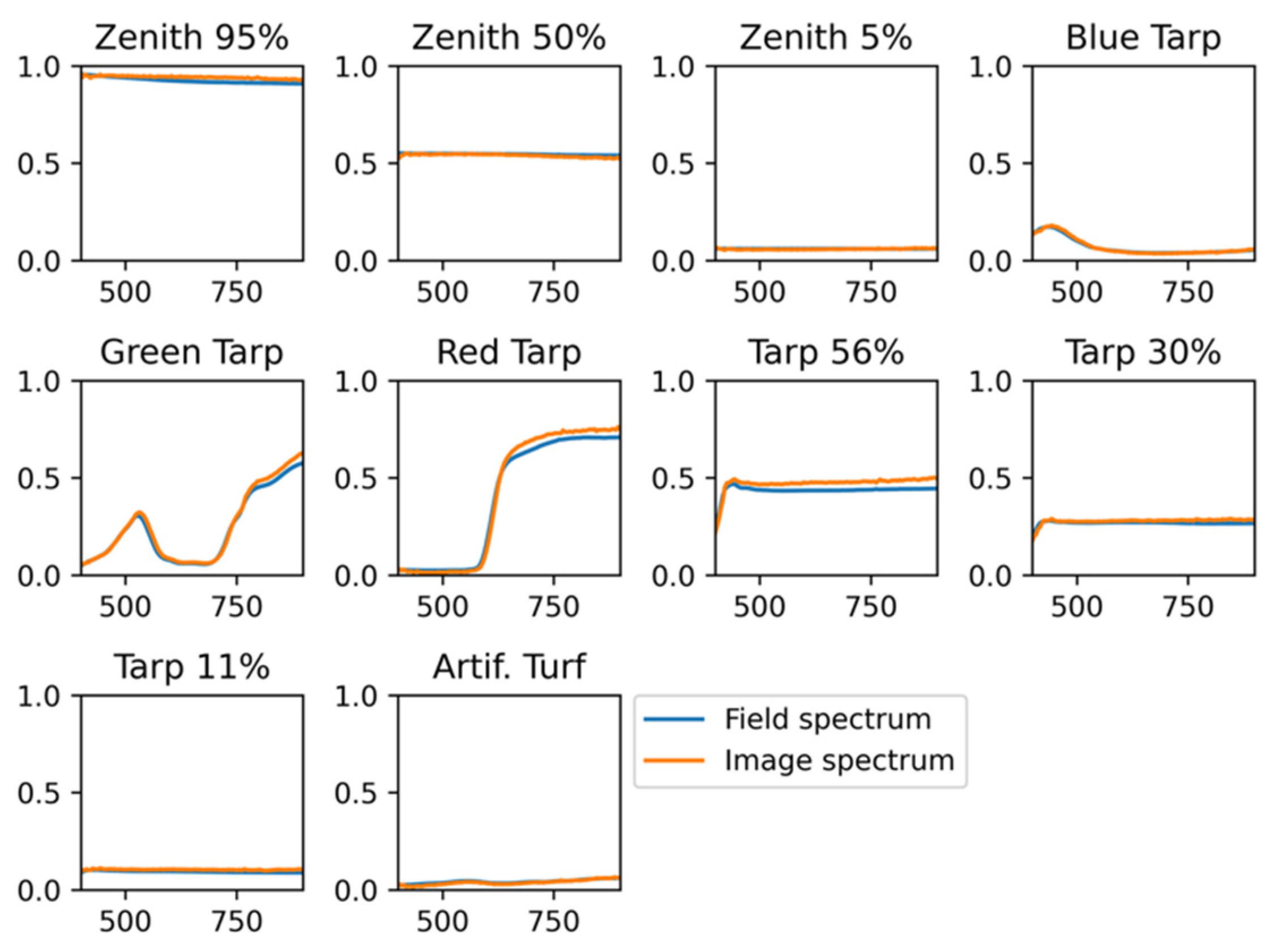

3.3. Radiometric Quality of Hyperspectral Images

4. Results

4.1. Position Accuracy of Orthorectified Images

4.2. Quality and Vertical Accuracy of RGB and LiDAR Point Clouds

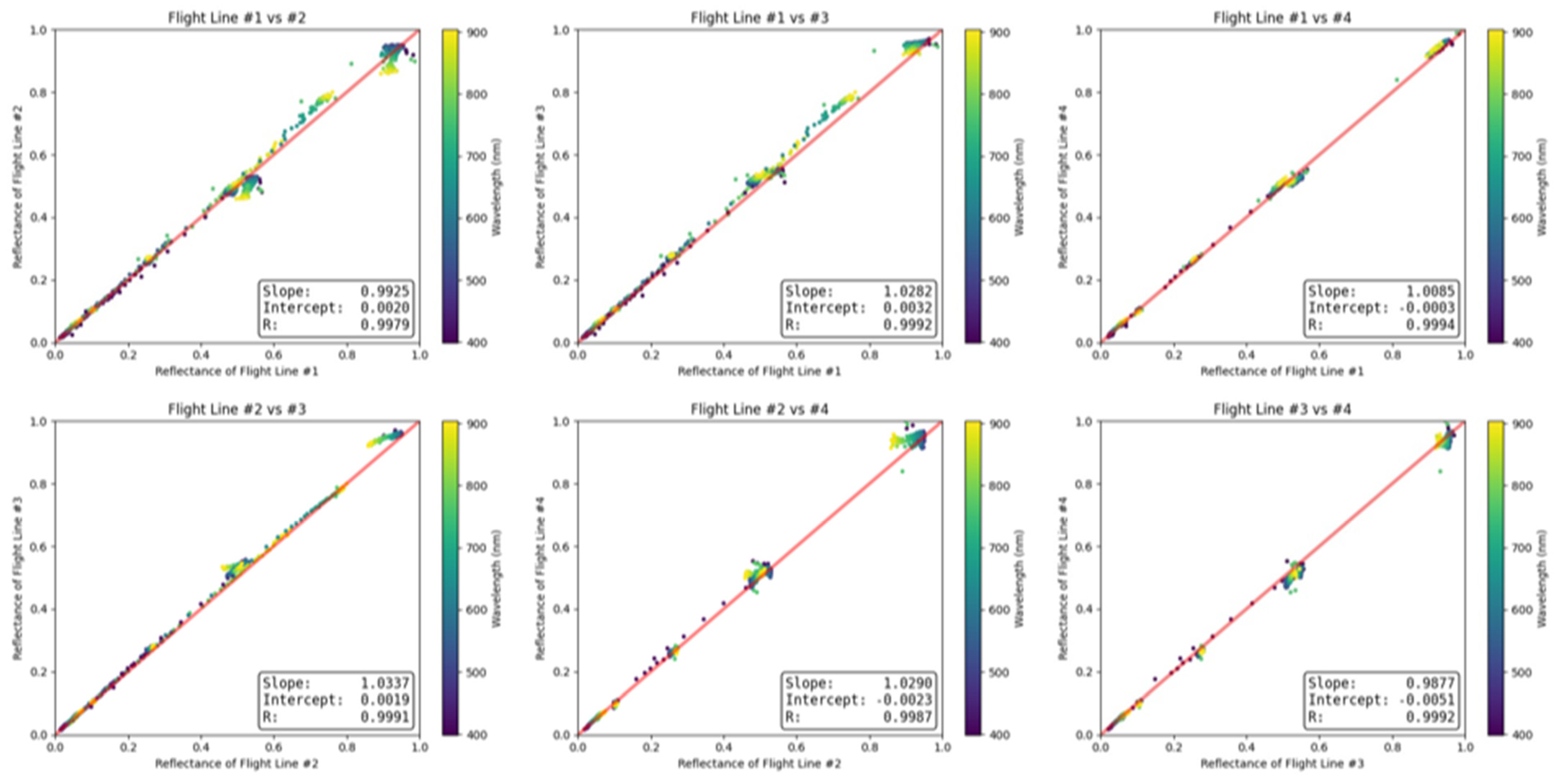

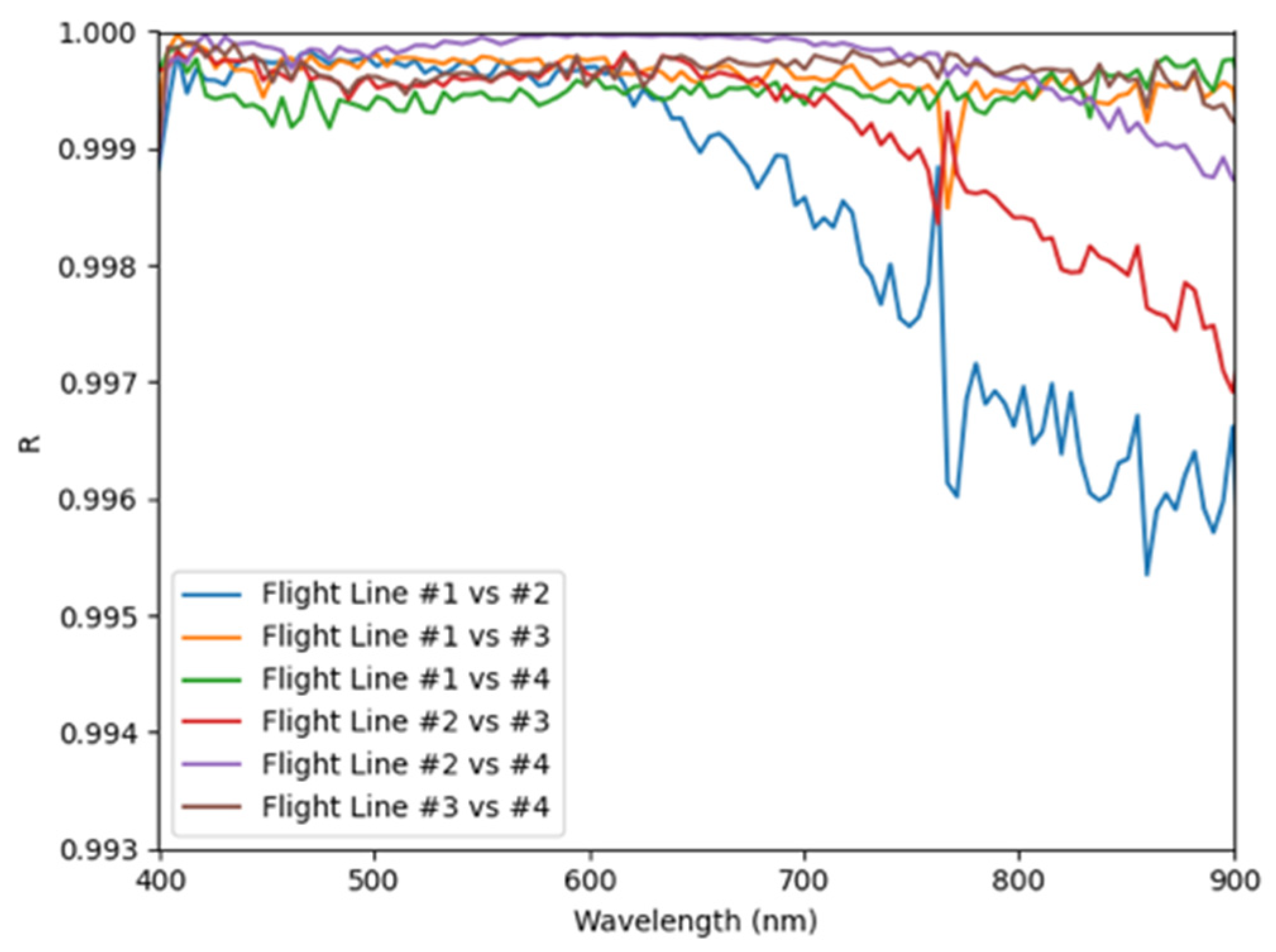

4.3. Radiometric Quality of Hyperspectral Images

5. Discussions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


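| Sensor | Specification | Value |
|---|---|---|
| RGB (SONY A7R III) | Spatial pixels (image size) | 7952 × 5304 |
| RGB (SONY A7R III) | Sensor size | 35.943 mm × 23.974 mm |
| RGB (SONY A7R III) | Detector pixel size | 4.52 µm |
| RGB (SONY A7R III) | Focal length | 35 mm |
| Hyperspectral (Headwall Nano-Hyperspec) | Spectral range | 400–1000 nm |
| Hyperspectral (Headwall Nano-Hyperspec) | Spectral pixels (or bands) | 270 |
| Hyperspectral (Headwall Nano-Hyperspec) | Detector pixel size | 7.4 µm |
| Hyperspectral (Headwall Nano-Hyperspec) | Spatial pixels | 640 |
| Hyperspectral (Headwall Nano-Hyperspec) | Detector technology | Complementary metal oxide semiconductor (CMOS) |
| Hyperspectral (Headwall Nano-Hyperspec) | Maximum frame rate | 350 Hz |
| Hyperspectral (Headwall Nano-Hyperspec) | Pixel depth (dynamic range) | 12-bit |
| Hyperspectral (Headwall Nano-Hyperspec) | Focal length of lens | 8 mm |
| LiDAR (Velodyne Puck Hi-Res) | Number of channels | 16 |
| LiDAR (Velodyne Puck Hi-Res) | Maximum measurement range | 100 m |
| LiDAR (Velodyne Puck Hi-Res) | Range accuracy (typical) | Up to ±3 cm |
| LiDAR (Velodyne Puck Hi-Res) | Field of view | −10° to +10° (vertical); 360° (horizontal) |
| LiDAR (Velodyne Puck Hi-Res) | Angular resolution | 1.33° (vertical); 0.1°–0.4° (horizontal) |
| LiDAR (Velodyne Puck Hi-Res) | Rotation rate | 5–20 Hz |
| LiDAR (Velodyne Puck Hi-Res) | Laser wavelength | 903 nm |
| LiDAR (Velodyne Puck Hi-Res) | Laser pulses per second | 300,000 (single return mode); 600,000 (dual return mode) |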
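The sensor specifications above are mutually consistent, and a short calculation makes that visible. The sketch below recomputes the RGB pixel pitch from the sensor width and pixel count, estimates a nadir ground sampling distance (GSD) with the standard relation GSD = H · p / f, and derives the LiDAR vertical beam spacing assuming evenly spaced channels. The flying height `ASSUMED_HEIGHT_M` is a hypothetical value chosen only for illustration; it is not taken from this article. For the hyperspectral scanner, the result should be read as a cross-track estimate only.

```python
def pixel_pitch_um(sensor_width_mm: float, pixels_across: int) -> float:
    """Detector pixel pitch in micrometres from sensor width and pixel count."""
    return sensor_width_mm / pixels_across * 1000.0


def gsd_cm(pixel_um: float, focal_mm: float, height_m: float) -> float:
    """Nadir ground sampling distance in cm: height * pixel pitch / focal length."""
    return height_m * (pixel_um * 1e-6) / (focal_mm * 1e-3) * 100.0


def vertical_spacing_deg(fov_min_deg: float, fov_max_deg: float, channels: int) -> float:
    """Beam spacing in degrees, assuming channels are evenly spread over the FOV."""
    return (fov_max_deg - fov_min_deg) / (channels - 1)


# Hypothetical flying height, NOT from the article.
ASSUMED_HEIGHT_M = 50.0

rgb_pitch = pixel_pitch_um(35.943, 7952)           # sensor width / image width
rgb_gsd = gsd_cm(4.52, 35.0, ASSUMED_HEIGHT_M)     # SONY A7R III row values
hsi_gsd = gsd_cm(7.4, 8.0, ASSUMED_HEIGHT_M)       # Nano-Hyperspec row values
lidar_spacing = vertical_spacing_deg(-10.0, 10.0, 16)

print(f"RGB pixel pitch: {rgb_pitch:.2f} um")
print(f"RGB GSD at {ASSUMED_HEIGHT_M:.0f} m: {rgb_gsd:.2f} cm")
print(f"Hyperspectral cross-track GSD at {ASSUMED_HEIGHT_M:.0f} m: {hsi_gsd:.2f} cm")
print(f"LiDAR vertical beam spacing: {lidar_spacing:.2f} deg")
```

The recomputed pixel pitch and beam spacing round to the 4.52 µm and 1.33° listed in the table. The GSD values scale linearly with whatever flying height is substituted.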

| GCP | X | Y | GCP | X | Y |
|---|---|---|---|---|---|
| #1 | 291,510.212 | 4,138,204.498 | #8 | 291,520.020 | 4,138,188.103 |
| #2 | 291,524.214 | 4,138,193.245 | #9 | 291,531.900 | 4,138,183.662 |
| #3 | 291,538.254 | 4,138,182.009 | #10 | 291,528.850 | 4,138,176.649 |
| #4 | 291,525.611 | 4,138,166.438 | #11 | 291,522.622 | 4,138,172.227 |
| #5 | 291,511.632 | 4,138,177.683 | #12 | 291,515.831 | 4,138,182.864 |
| #6 | 291,497.650 | 4,138,188.961 | #13 | 291,503.926 | 4,138,187.281 |
| #7 | 291,513.239 | 4,138,198.653 | #14 | 291,507.010 | 4,138,194.246 |

| System | PPK | Easting | Northing | Planar |
|---|---|---|---|---|
| DJI Phantom 4 (21 February 2022) | N/A | 1.412 | 3.344 | 3.630 |
| DJI Phantom 4 (22 February 2022) | N/A | 0.364 | 4.609 | 4.623 |
| GNSS/IMU-assisted RGB | Yes | 0.018 | 0.019 | 0.026 |
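The Planar column in the table above is numerically consistent with the root sum of squares of the Easting and Northing components, which is the usual definition of a horizontal error. The following minimal sketch checks that relation against the tabulated values. The function name `planar_error` is illustrative, and the formula is inferred from the numbers rather than stated in this text.

```python
import math


def planar_error(easting: float, northing: float) -> float:
    """Horizontal error as the root sum of squares of the two components."""
    return math.hypot(easting, northing)


# (easting, northing, reported planar), copied from the table above.
rows = {
    "DJI Phantom 4 (21 February 2022)": (1.412, 3.344, 3.630),
    "DJI Phantom 4 (22 February 2022)": (0.364, 4.609, 4.623),
    "GNSS/IMU-assisted RGB": (0.018, 0.019, 0.026),
}

for name, (easting, northing, reported) in rows.items():
    computed = planar_error(easting, northing)
    print(f"{name}: computed {computed:.3f}, reported {reported:.3f}")
```

All three rows agree with the reported values to within rounding at the third decimal place.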

| CORS | Distance (km) | Easting (cm) | Northing (cm) | Planar (cm) |
|---|---|---|---|---|
| KOPRI (On-site) | 0 | 4.25 | 4.38 | 6.11 |
| INCH | 7 | 2.68 | 4.59 | 5.32 |
| SUWN | 38 | 2.25 | 4.34 | 4.89 |
| CHEN | 70 | 2.06 | 4.03 | 4.52 |
| SEJN | 110 | 2.28 | 4.27 | 4.85 |
| JUNG | 197 | 8.07 | 5.53 | 9.78 |
| KANR | 201 | 2.38 | 4.23 | 4.85 |
| WULJ | 249 | 3.09 | 5.23 | 6.07 |
| POHN | 295 | 2.76 | 6.37 | 6.94 |
| JIND | 323 | 6.55 | 5.68 | 8.67 |
| CHJU | 430 | 6.81 | 4.69 | 8.27 |
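One simple way to summarize how the planar error in the table above varies with distance to the CORS station is a Pearson correlation coefficient. The sketch below computes it from the tabulated Distance (km) and Planar (cm) columns using only the standard library. With just 11 stations, the coefficient is purely descriptive, and the helper name `pearson` is an illustrative choice rather than anything taken from the article.

```python
import math

# (station, distance in km, planar error in cm), copied from the table above.
cors = [
    ("KOPRI", 0, 6.11), ("INCH", 7, 5.32), ("SUWN", 38, 4.89),
    ("CHEN", 70, 4.52), ("SEJN", 110, 4.85), ("JUNG", 197, 9.78),
    ("KANR", 201, 4.85), ("WULJ", 249, 6.07), ("POHN", 295, 6.94),
    ("JIND", 323, 8.67), ("CHJU", 430, 8.27),
]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


distances = [d for _, d, _ in cors]
planar = [p for _, _, p in cors]
r = pearson(distances, planar)
print(f"Pearson r between CORS distance and planar error: {r:.2f}")
```

A single coefficient hides station-specific behavior, such as the JUNG and KANR rows, which sit at nearly the same distance but differ by roughly a factor of two in planar error.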

| | Soccer Goals | Futsal Goals | A-Frame Signs |
|---|---|---|---|
| Ground Truth | 210 | 120 | 85 |
| LiDAR Height | 211 | 122 | 86 |
| RGB Height | 205 | 118 | 87 |
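The height comparison in the table above can be condensed into per-object errors and a root-mean-square error (RMSE) for each point cloud. The sketch below does this directly from the tabulated values. The results are in the same unit as the table, which this text does not state, and with only three objects the RMSE is an illustration of the calculation rather than a robust accuracy figure.

```python
import math

# Heights copied from the table above (unit as in the table, not stated here).
ground_truth = {"Soccer Goals": 210, "Futsal Goals": 120, "A-Frame Signs": 85}
lidar_height = {"Soccer Goals": 211, "Futsal Goals": 122, "A-Frame Signs": 86}
rgb_height = {"Soccer Goals": 205, "Futsal Goals": 118, "A-Frame Signs": 87}


def height_errors(measured, reference):
    """Signed error (measured minus reference) for each object."""
    return {name: measured[name] - reference[name] for name in reference}


def rmse(errors):
    """Root-mean-square of a collection of signed errors."""
    values = list(errors)
    return math.sqrt(sum(e * e for e in values) / len(values))


lidar_err = height_errors(lidar_height, ground_truth)
rgb_err = height_errors(rgb_height, ground_truth)
lidar_rmse = rmse(lidar_err.values())
rgb_rmse = rmse(rgb_err.values())

print("LiDAR errors:", lidar_err, f"RMSE = {lidar_rmse:.2f}")
print("RGB errors:  ", rgb_err, f"RMSE = {rgb_rmse:.2f}")
```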

| | Spectral Angle (Unit: Radian) | RMSE |
|---|---|---|
| Zenith 95% | 0.0108 | 0.0194 |
| Zenith 50% | 0.0093 | 0.0080 |
| Zenith 5% | 0.0461 | 0.0031 |
| Blue Tarp | 0.0453 | 0.0041 |
| Green Tarp | 0.0333 | 0.0206 |
| Red Tarp | 0.0305 | 0.0312 |
| 56% Tarp | 0.0281 | 0.0389 |
| 30% Tarp | 0.0305 | 0.0129 |
| 11% Tarp | 0.0360 | 0.0107 |
| Artificial Turf | 0.1031 | 0.0051 |
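The two metrics in the table above capture different kinds of disagreement between a measured and a reference spectrum. The spectral angle depends only on spectral shape, whereas RMSE also responds to overall brightness differences. The sketch below implements both in their standard forms and applies them to a made-up four-band spectrum. The spectra and function names are hypothetical and are not data or code from the article.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra treated as vectors."""
    if len(a) != len(b) or not a:
        raise ValueError("spectra must be non-empty and of equal length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("spectra must not be all zeros")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cosine)


def rmse(a, b):
    """Root-mean-square difference between two equal-length spectra."""
    if len(a) != len(b) or not a:
        raise ValueError("spectra must be non-empty and of equal length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Made-up reflectance values for illustration only.
reference = [0.10, 0.20, 0.30, 0.40]
darker = [0.5 * r for r in reference]   # same shape, half the brightness

angle = spectral_angle(reference, darker)
error = rmse(reference, darker)
print(f"spectral angle = {angle:.6f} rad, RMSE = {error:.4f}")
```

A uniformly darker copy of a spectrum yields an angle of essentially zero but a clearly nonzero RMSE. This is consistent with rows in the table where one metric is small while the other is comparatively large.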

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chi, J.; Kim, J.-I.; Lee, S.; Jeong, Y.; Kim, H.-C.; Lee, J.; Chung, C. Geometric and Radiometric Quality Assessments of UAV-Borne Multi-Sensor Systems: Can UAVs Replace Terrestrial Surveys? Drones 2023, 7, 411. https://doi.org/10.3390/drones7070411
