Enhancing Online UAV Multi-Object Tracking with Temporal Context and Spatial Topological Relationships

Abstract

1. Introduction

- We design a novel temporal feature aggregation module that enhances the temporal consistency of the network's perception. By adaptively fusing features from multiple frames, it improves robustness to the occlusion, small objects, and motion blur that are common in UAV scenarios.

- We propose a topology-integrated embedding module that models the long-range dependencies of the entire image in a global and sparse manner. Incorporating global contextual information through a deformable attention mechanism enhances the discriminative power of the object embeddings, which in turn improves the accuracy of data association.
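To make the idea of adaptively fusing multi-frame features concrete, the following is a minimal sketch for a single spatial location. It rests on assumptions that are not taken from the paper: the fusion weights are a softmax over the cosine similarity between each frame's feature vector and the current one, and the names `cosine` and `adaptive_aggregate` are hypothetical. The actual module may compute its weights differently.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-8)


def adaptive_aggregate(current, previous):
    """Fuse the current feature vector with vectors from previous frames.

    Assumption (illustrative only): each frame is weighted by a softmax over
    its cosine similarity to the current frame, so frames that agree with the
    current observation contribute more to the fused feature.
    """
    frames = [current] + list(previous)
    sims = [cosine(f, current) for f in frames]
    # Numerically stable softmax over the similarity scores.
    peak = max(sims)
    exps = [math.exp(s - peak) for s in sims]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the frame features, channel by channel.
    fused = [
        sum(w * f[i] for w, f in zip(weights, frames))
        for i in range(len(current))
    ]
    return fused, weights


# Toy example: one similar and one dissimilar previous frame.
current = [1.0, 0.0]
previous = [[0.9, 0.1], [0.0, 1.0]]
fused, weights = adaptive_aggregate(current, previous)
print(weights)
print(fused)
```

In this toy case the dissimilar frame receives the smallest weight, which is the behavior one would want when a previous frame is corrupted by blur or occlusion.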

2. Related Work

2.1. Multi-Object Tracking (MOT)

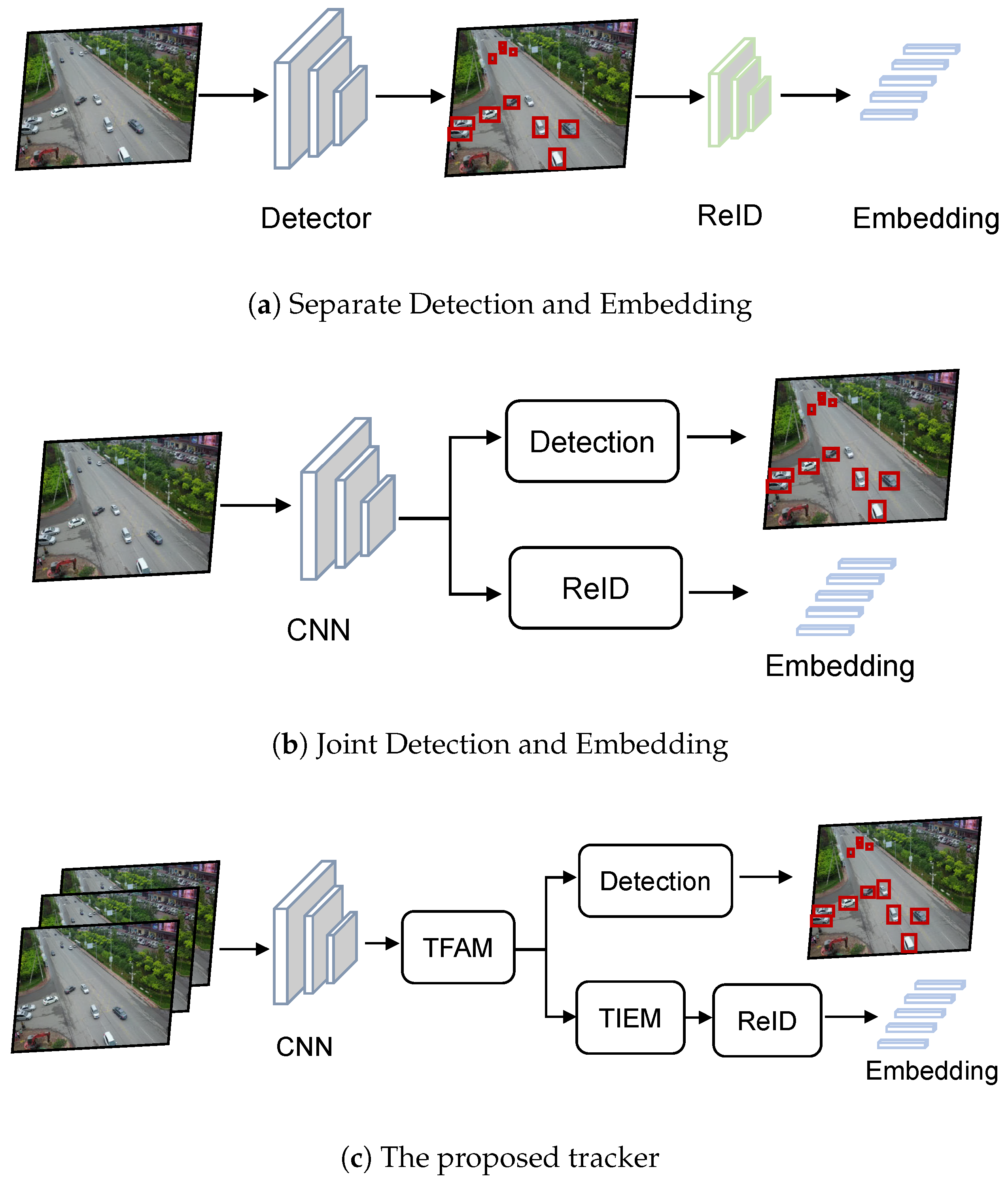

2.2. Joint Detection and Tracking

2.3. Regression-Based Tracking Method

2.4. Attention-Based Methods

2.5. Graph-Based Methods

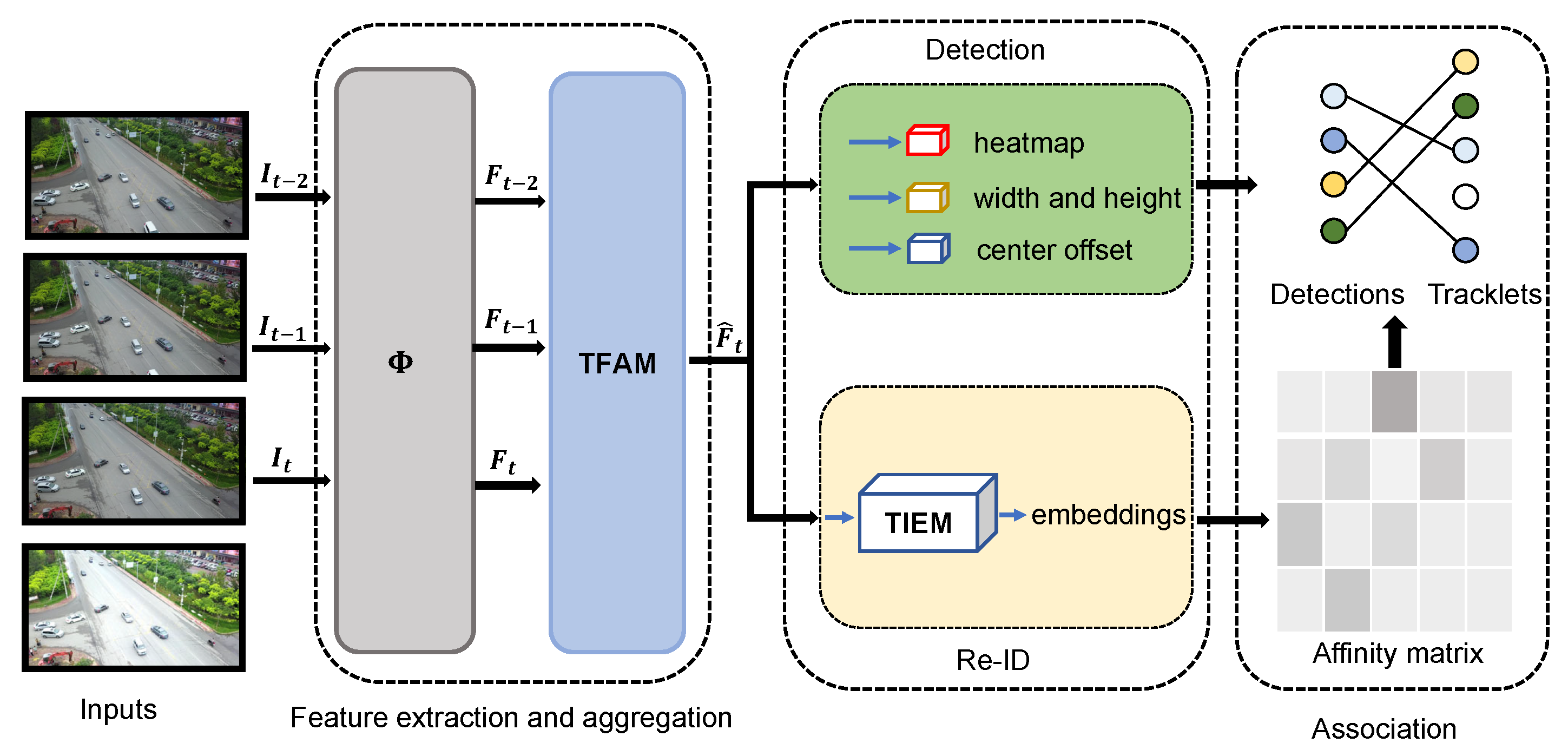

3. Method

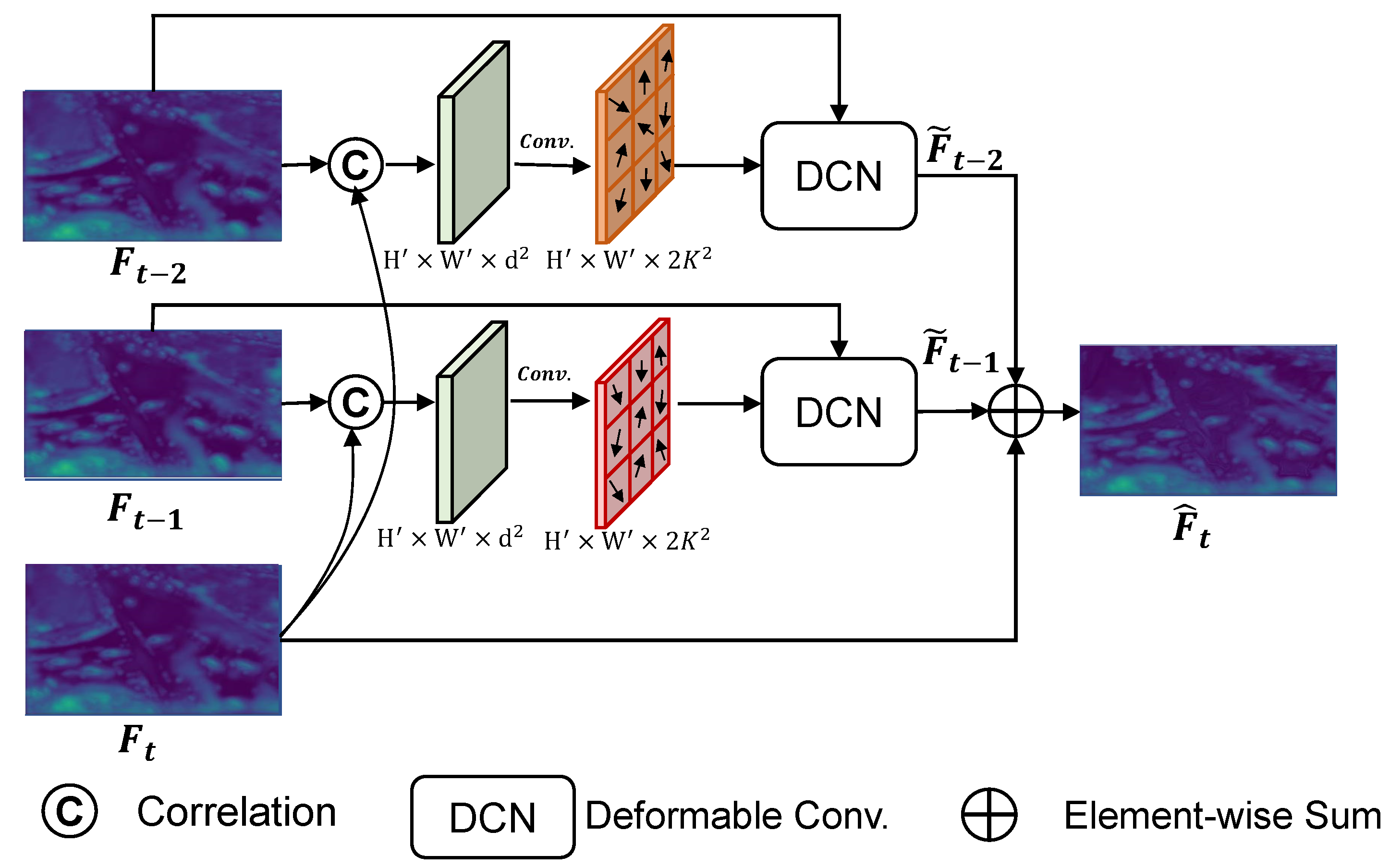

3.1. Temporal Feature Aggregation

3.1.1. Feature Propagation

3.1.2. Adaptive Feature Aggregation

3.2. Topology-Integrated Embedding Module

3.3. Optimization Objectives

3.4. Online Tracking

4. Experiments

4.1. Datasets and Metrics

4.1.1. Dataset

4.1.2. Metrics
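As a reference for reading the MOTA column in the result tables, here is a small sketch of the standard CLEAR MOT accuracy, MOTA = 1 − (FN + FP + IDs) / GT, expressed as a percentage. The function name `mota` and the ground-truth counts in the example are hypothetical; the tables report FP, FN, and IDs but not the number of ground-truth boxes.

```python
def mota(fp, fn, id_switches, num_gt):
    """CLEAR MOT accuracy as a percentage.

    fp, fn, id_switches: error counts summed over all frames.
    num_gt: total number of ground-truth boxes over all frames
            (a hypothetical input here; the tables do not list it).
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 100.0 * (1.0 - (fp + fn + id_switches) / num_gt)


# Illustrative counts only, not taken from the paper.
print(round(mota(fp=10, fn=20, id_switches=5, num_gt=100), 1))
# When the summed errors exceed the ground-truth count, MOTA is negative.
print(round(mota(fp=80, fn=40, id_switches=0, num_gt=100), 1))
```

The second call shows why a tracker can score below zero, as some baselines in the comparison tables do: the metric has no lower bound once false positives and misses together outnumber the ground-truth boxes.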

4.2. Implementation Details

4.3. State-of-the-Art Comparison

4.4. Ablation Analysis

4.4.1. Component-Wise Analysis

4.4.2. Effect of Different Feature Fusion Strategies

4.4.3. Effect of Different Sizes of Local Regions

4.4.4. Effect of Number of Previous Features

4.4.5. Effect of Different Sizes of the Coefficient s

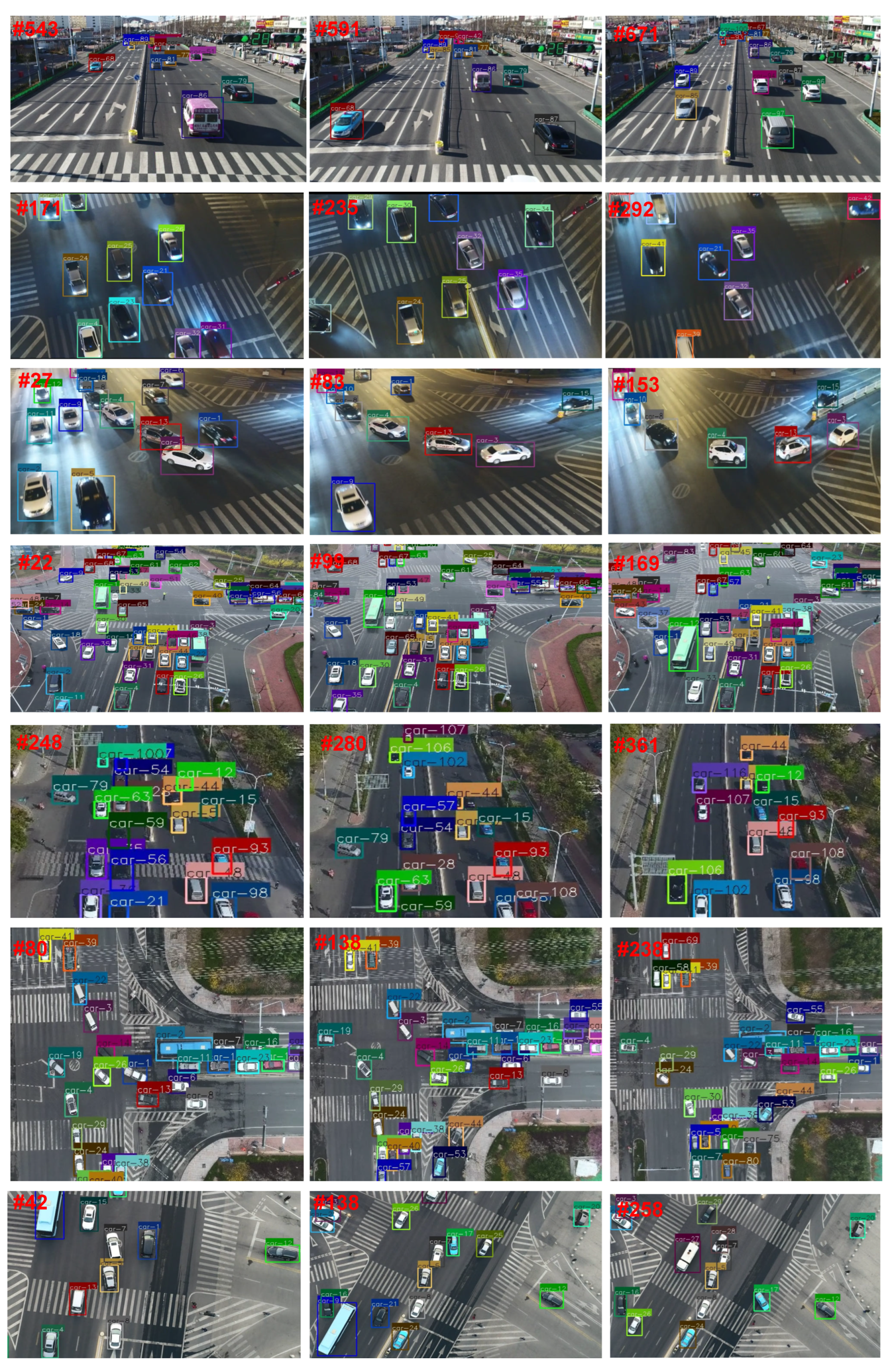

4.5. Qualitative Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Luo, W.; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Kim, T.K. Multiple object tracking: A literature review. Artif. Intell. 2021, 293, 103448.

2. Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A benchmark for multi-object tracking. arXiv 2016, arXiv:1603.00831.

3. Dendorfer, P.; Rezatofighi, H.; Milan, A.; Shi, J.; Cremers, D.; Reid, I.; Roth, S.; Schindler, K.; Leal-Taixé, L. Mot20: A benchmark for multi object tracking in crowded scenes. arXiv 2020, arXiv:2003.09003.

4. Dendorfer, P.; Osep, A.; Milan, A.; Schindler, K.; Cremers, D.; Reid, I.; Roth, S.; Leal-Taixé, L. Motchallenge: A benchmark for single-camera multiple target tracking. Int. J. Comput. Vis. 2021, 129, 845–881.

5. Wang, F.; Luo, L.; Zhu, E. Two-stage real-time multi-object tracking with candidate selection. In MMM 2021: MultiMedia Modeling, Proceedings of the International Conference on Multimedia Modeling, Prague, Czech Republic, 22–24 June 2021; Springer: Cham, Switzerland, 2021; pp. 49–61.

6. Filkin, T.; Sliusar, N.; Ritzkowski, M.; Huber-Humer, M. Unmanned aerial vehicles for operational monitoring of landfills. Drones 2021, 5, 125.

7. Fan, J.; Yang, X.; Lu, R.; Xie, X.; Li, W. Design and implementation of intelligent inspection and alarm flight system for epidemic prevention. Drones 2021, 5, 68.

8. Svanström, F.; Alonso-Fernandez, F.; Englund, C. Drone Detection and Tracking in Real-Time by Fusion of Different Sensing Modalities. Drones 2022, 6, 317.

9. Dewangan, V.; Saxena, A.; Thakur, R.; Tripathi, S. Application of Image Processing Techniques for UAV Detection Using Deep Learning and Distance-Wise Analysis. Drones 2023, 7, 174.

10. Sun, L.; Zhang, J.; Yang, Z.; Fan, B. A Motion-Aware Siamese Framework for Unmanned Aerial Vehicle Tracking. Drones 2023, 7, 153.

11. Zhu, P.; Wen, L.; Du, D.; Bian, X.; Hu, Q.; Ling, H. Vision meets drones: Past, present and future. arXiv 2020, arXiv:2001.06303.

12. Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The unmanned aerial vehicle benchmark: Object detection and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386.

13. Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

14. Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

15. Long, C.; Haizhou, A.; Zijie, Z.; Chong, S. Real-time Multiple People Tracking with Deeply Learned Candidate Selection and Person Re-identification. In Proceedings of the ICME, San Diego, CA, USA, 23–27 July 2018.

16. Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

17. Yang, K.; Li, D.; Dou, Y. Towards precise end-to-end weakly supervised object detection network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8372–8381.

18. Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.

19. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430.

20. Luo, H.; Jiang, W.; Gu, Y.; Liu, F.; Liao, X.; Lai, S.; Gu, J. A strong baseline and batch normalization neck for deep person re-identification. IEEE Trans. Multimed. 2019, 22, 2597–2609.

21. Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without bells and whistles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 941–951.

22. Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. Fairmot: On the fairness of detection and re-identification in multiple object tracking. Int. J. Comput. Vis. 2021, 129, 3069–3087.

23. Brasó, G.; Leal-Taixé, L. Learning a neural solver for multiple object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6247–6257.

24. Weng, X.; Wang, Y.; Man, Y.; Kitani, K.M. Gnn3dmot: Graph neural network for 3d multi-object tracking with 2d-3d multi-feature learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6499–6508.

25. Wang, Y.; Kitani, K.; Weng, X. Joint object detection and multi-object tracking with graph neural networks. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13708–13715.

26. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

27. Zhang, L.; Li, Y.; Nevatia, R. Global data association for multi-object tracking using network flows. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

28. Lan, L.; Tao, D.; Gong, C.; Guan, N.; Luo, Z. Online Multi-Object Tracking by Quadratic Pseudo-Boolean Optimization. In Proceedings of the IJCAI, New York, NY, USA, 9–15 July 2016; pp. 3396–3402.

29. Bochinski, E.; Eiselein, V.; Sikora, T. High-speed tracking-by-detection without using image information. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

30. Kalman, R.E. Contributions to the theory of optimal control. Bol. Soc. Mat. Mex. 1960, 5, 102–119.

31. Tang, Z.; Hwang, J.N. Moana: An online learned adaptive appearance model for robust multiple object tracking in 3d. IEEE Access 2019, 7, 31934–31945.

32. Wang, G.; Wang, Y.; Gu, R.; Hu, W.; Hwang, J.N. Split and connect: A universal tracklet booster for multi-object tracking. IEEE Trans. Multimed. 2023, 25, 1256–1268.

33. Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 107–122.

34. Wang, Q.; Zheng, Y.; Pan, P.; Xu, Y. Multiple object tracking with correlation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3876–3886.

35. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

36. Zhou, X.; Koltun, V.; Krähenbühl, P. Tracking objects as points. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 474–490.

37. Peng, J.; Wang, C.; Wan, F.; Wu, Y.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Fu, Y. Chained-tracker: Chaining paired attentive regression results for end-to-end joint multiple-object detection and tracking. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 145–161.

38. Feichtenhofer, C.; Pinz, A.; Zisserman, A. Detect to track and track to detect. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3038–3046.

39. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

40. Guo, S.; Wang, J.; Wang, X.; Tao, D. Online Multiple Object Tracking with Cross-Task Synergy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

41. Sun, P.; Cao, J.; Jiang, Y.; Zhang, R.; Xie, E.; Yuan, Z.; Wang, C.; Luo, P. Transtrack: Multiple object tracking with transformer. arXiv 2020, arXiv:2012.15460.

42. Meinhardt, T.; Kirillov, A.; Leal-Taixe, L.; Feichtenhofer, C. TrackFormer: Multi-Object Tracking with Transformers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

43. Zeng, F.; Dong, B.; Zhang, Y.; Wang, T.; Zhang, X.; Wei, Y. MOTR: End-to-End Multiple-Object Tracking with TRansformer. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2022.

44. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

45. Cai, J.; Xu, M.; Li, W.; Xiong, Y.; Xia, W.; Tu, Z.; Soatto, S. MeMOT: Multi-object tracking with memory. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8090–8100.

46. Hornakova, A.; Henschel, R.; Rosenhahn, B.; Swoboda, P. Lifted disjoint paths with application in multiple object tracking. In Proceedings of the International Conference on Machine Learning. PMLR, Virtual, 13–18 July 2020; pp. 4364–4375.

47. Xu, J.; Cao, Y.; Zhang, Z.; Hu, H. Spatial-temporal relation networks for multi-object tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3988–3998.

48. He, J.; Huang, Z.; Wang, N.; Zhang, Z. Learnable graph matching: Incorporating graph partitioning with deep feature learning for multiple object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 5299–5309.

49. Yu, F.; Wang, D.; Shelhamer, E.; Darrell, T. Deep layer aggregation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2403–2412.

50. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

51. Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazirbas, C.; Golkov, V.; Van Der Smagt, P.; Cremers, D.; Brox, T. Flownet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2758–2766.

52. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

53. Xia, Z.; Pan, X.; Song, S.; Li, L.E.; Huang, G. Vision transformer with deformable attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4794–4803.

54. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

55. Kendall, A.; Gal, Y.; Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7482–7491.

56. Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The clear mot metrics. EURASIP J. Image Video Process. 2008, 2008, 246309.

57. Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 17–35.

58. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

59. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

60. Pirsiavash, H.; Ramanan, D.; Fowlkes, C.C. Globally-optimal greedy algorithms for tracking a variable number of objects. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1201–1208.

61. Milan, A.; Roth, S.; Schindler, K. Continuous energy minimization for multitarget tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 58–72.

62. Dicle, C.; Camps, O.I.; Sznaier, M. The way they move: Tracking multiple targets with similar appearance. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2304–2311.

63. Bae, S.H.; Yoon, K.J. Robust online multi-object tracking based on tracklet confidence and online discriminative appearance learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1218–1225.

| Method | MOTA↑ | IDF1↑ | MOTP↑ | MT↑ | ML↓ | FP↓ | FN↓ | IDs↓ | FM↓ |
|---|---|---|---|---|---|---|---|---|---|
| MOTDT [15] | −0.8 | 21.6 | 68.5 | 87 | 1196 | 44,548 | 185,453 | 1437 | 3609 |
| SORT [13] | 14.0 | 38.0 | 73.2 | 506 | 545 | 80,845 | 112,954 | 3629 | 4838 |
| IOUT [29] | 28.1 | 38.9 | 74.7 | 467 | 670 | 36,158 | 126,549 | 2393 | 3829 |
| GOG [60] | 28.7 | 36.4 | 76.1 | 346 | 836 | 17,706 | 144,657 | 1387 | 2237 |
| MOTR [43] | 22.8 | 41.4 | 72.8 | 272 | 825 | 28,407 | 147,937 | 959 | 3980 |
| TrackFormer [42] | 25.0 | 30.5 | 73.9 | 385 | 770 | 25,856 | 141,526 | 4840 | 4855 |
| FairMOT [22] | 28.7 | 39.8 | 75.1 | 449 | 758 | 22,771 | 137,215 | 3611 | 6162 |
| Ours | 30.9 | 42.7 | 74.4 | 491 | 668 | 27,732 | 126,811 | 3998 | 7061 |

| Method | MOTA↑ | IDF1↑ | MOTP↑ | MT↑ | ML↓ | FP↓ | FN↓ | IDs↓ | FM↓ |
|---|---|---|---|---|---|---|---|---|---|
| CEM [61] | −6.8 | 10.1 | 70.4 | 94 | 1062 | 64,373 | 298,090 | 1530 | 2835 |
| SMOT [62] | 33.9 | 45.0 | 72.2 | 524 | 367 | 57,112 | 166,528 | 1752 | 9577 |
| GOG [60] | 35.7 | 0.3 | 72 | 627 | 374 | 62,929 | 153,336 | 3104 | 5130 |
| IOUT [29] | 36.6 | 23.7 | 72.1 | 534 | 357 | 42,245 | 163,881 | 9938 | 10,463 |
| CMOT [63] | 36.9 | 57.5 | 74.7 | 664 | 351 | 69,109 | 144,760 | 1111 | 3656 |
| SORT [13] | 39.0 | 43.7 | 74.3 | 484 | 400 | 33,037 | 172,628 | 2350 | 5787 |
| DeepSORT [14] | 40.7 | 58.2 | 73.2 | 595 | 338 | 44,868 | 155,290 | 2061 | 6432 |
| MDP [63] | 43.0 | 61.5 | 73.5 | 647 | 324 | 46,151 | 147,735 | 541 | 4299 |
| FairMOT [22] | 44.5 | 66.3 | 72.2 | 640 | 193 | 71,922 | 116,510 | 664 | 6326 |
| Ours | 47.0 | 67.8 | 72.9 | 652 | 193 | 68,282 | 111,959 | 506 | 5884 |

| TFAM | TIEM | MOTA↑ | IDF1↑ | FP↓ | FN↓ | IDs↓ |
|---|---|---|---|---|---|---|
|  |  | 26.0 | 43.2 | 10,648 | 41,605 | 1019 |
| 🗸 |  | 28.4 | 44.0 | 9074 | 41,398 | 948 |
|  | 🗸 | 27.3 | 45.7 | 11,645 | 39,601 | 971 |
| 🗸 | 🗸 | 29.1 | 46.1 | 9589 | 40,380 | 938 |

| Fusion Strategies | MOTA↑ | IDF1↑ | FP↓ | FN↓ | IDs↓ |
|---|---|---|---|---|---|
| Addition | 26.6 | 45.5 | 11,109 | 40,635 | 1001 |
| Concatenation | 27.1 | 45.8 | 10,815 | 40,708 | 851 |
| Adaptive feature aggregation | 28.4 | 44.0 | 9074 | 41,398 | 948 |

| d | MOTA↑ | IDF1↑ | FP↓ | FN↓ | IDs↓ |
|---|---|---|---|---|---|
| 4 | 26.6 | 44.4 | 11,532 | 41,187 | 905 |
| 8 | 27.4 | 46.4 | 11,112 | 40,122 | 912 |
| 16 | 28.4 | 44.0 | 9074 | 41,398 | 948 |
| 20 | 26.3 | 45.6 | 11,279 | 40,710 | 937 |
| 24 | 25.1 | 44.7 | 12,369 | 40,549 | 906 |

| L | MOTA↑ | IDF1↑ | FP↓ | FN↓ | IDs↓ |
|---|---|---|---|---|---|
| 1 | 28.1 | 44.4 | 9679 | 41,077 | 900 |
| 2 | 28.4 | 44.0 | 9074 | 41,398 | 948 |
| 3 | 27.5 | 45.4 | 9958 | 41,253 | 850 |
| 4 | 27.4 | 43.2 | 9034 | 42,429 | 735 |
| 5 | 27.6 | 44.2 | 8759 | 42,501 | 791 |

| s | IDF1↑ | MOTA↑ | FP↓ | FN↓ | IDs↓ |
|---|---|---|---|---|---|
| 2 | 44.3 | 25.4 | 11,434 | 41,155 | 976 |
| 3 | 44.7 | 27.7 | 8880 | 42,300 | 796 |
| 4 | 45.7 | 27.3 | 11,645 | 39,601 | 971 |
| 5 | 43.4 | 25.4 | 10,175 | 42,422 | 968 |
| 6 | 44.5 | 25.7 | 12,027 | 40,307 | 1034 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiao, C.; Cao, Q.; Zhong, Y.; Lan, L.; Zhang, X.; Cai, H.; Luo, Z. Enhancing Online UAV Multi-Object Tracking with Temporal Context and Spatial Topological Relationships. Drones 2023, 7, 389. https://doi.org/10.3390/drones7060389
