Crown Width Extraction of Metasequoia glyptostroboides Using Improved YOLOv7 Based on UAV Images

Abstract

1. Introduction

2. Materials

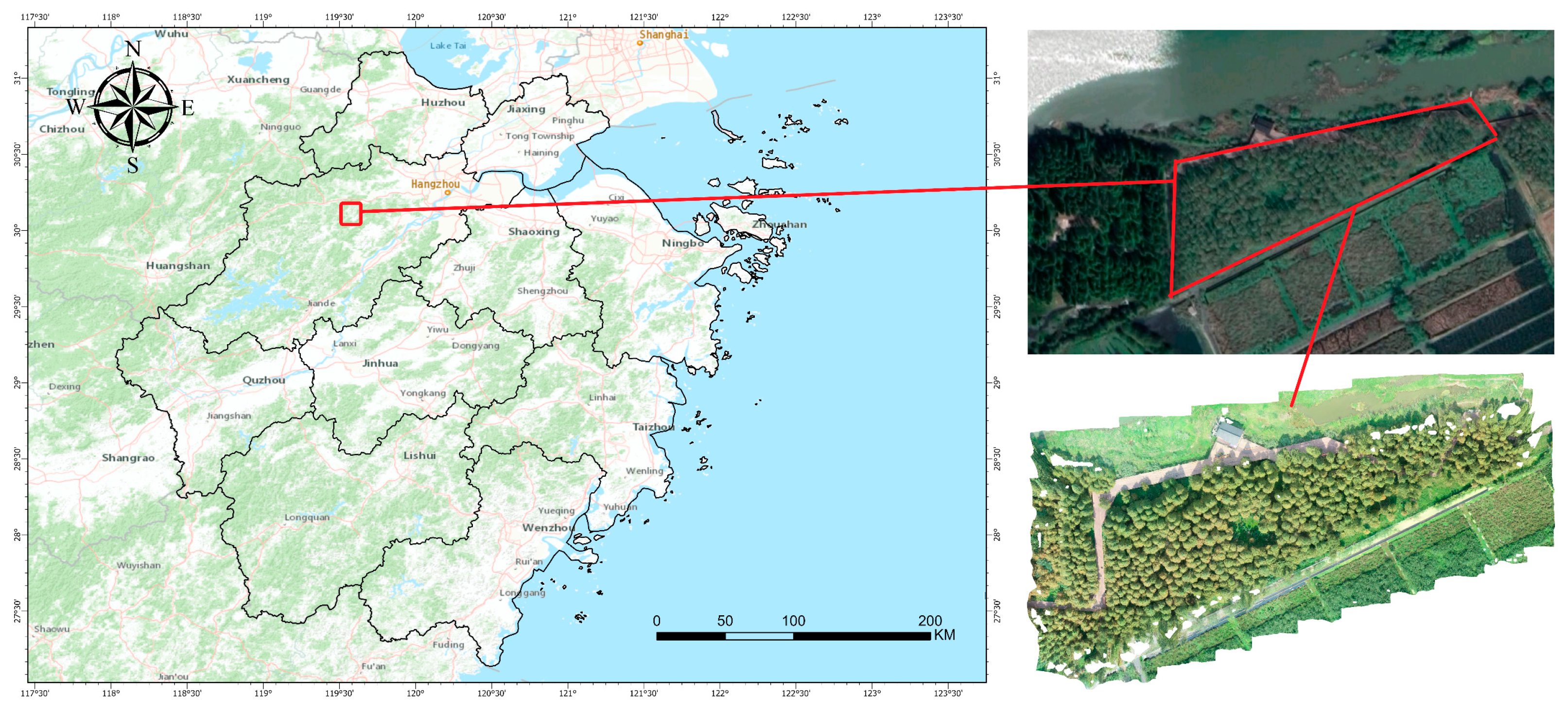

2.1. Overview of the Study Area

2.2. Data Acquisition

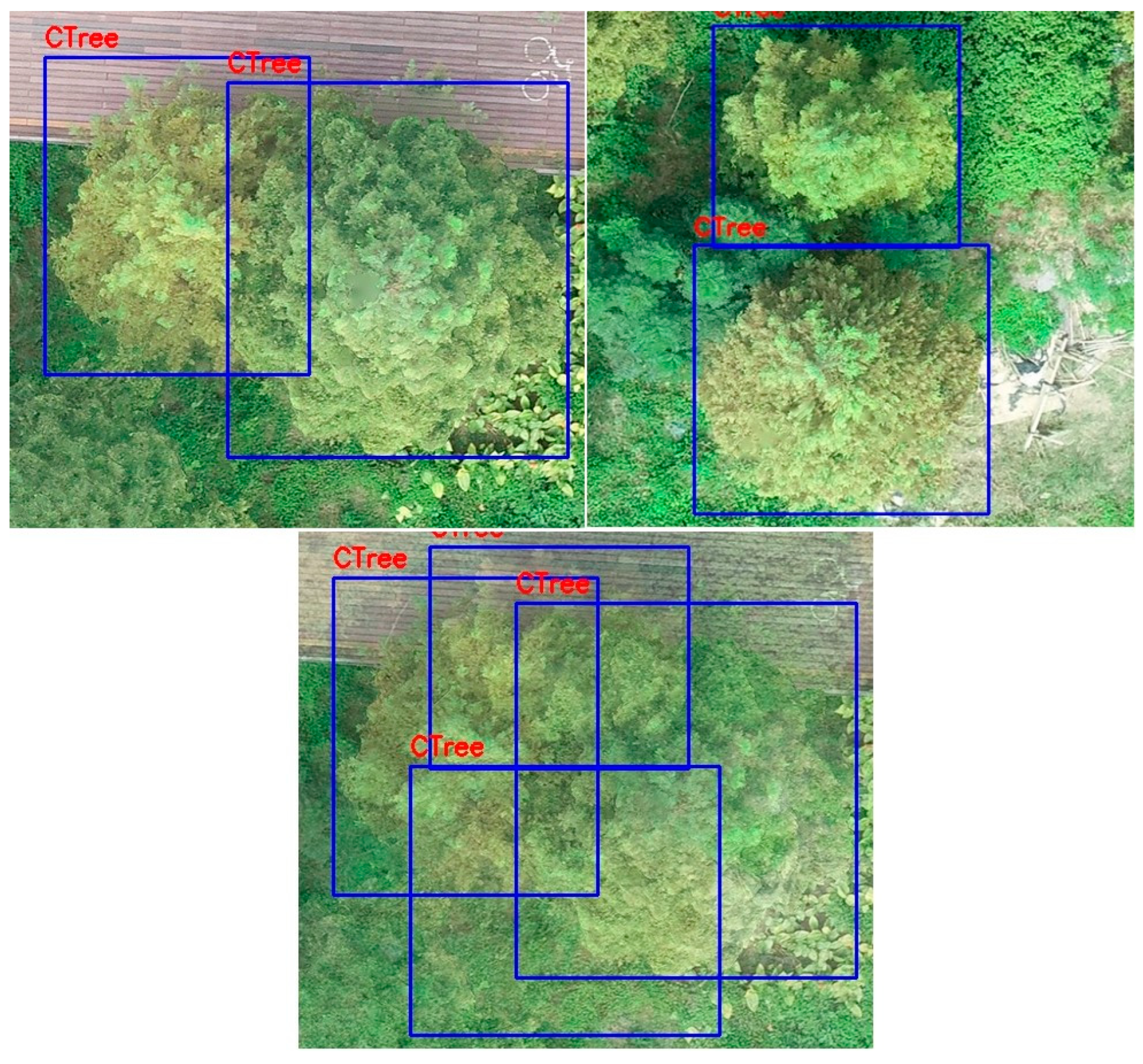
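Converting a detected crown box into a crown width in meters requires the ground sampling distance (GSD) of the UAV images. The sketch below uses the standard nadir-camera relation GSD = sensor width × flight height / (focal length × image width). As an assumption not confirmed by this text, it takes crown width as the mean of the box's pixel width and height multiplied by the GSD. The helper names are hypothetical, and the camera values in the usage lines are illustrative only, not the study's flight settings.

```python
# Hypothetical helpers: the "mean of box width and height" rule is an assumption
# for illustration, not the paper's stated crown width procedure.


def ground_sampling_distance(sensor_width_mm: float, focal_length_mm: float,
                             flight_height_m: float, image_width_px: int) -> float:
    """Ground distance (m) covered by one pixel for a nadir-looking camera."""
    return (sensor_width_mm * flight_height_m) / (focal_length_mm * image_width_px)


def crown_width_from_box(x_min: float, y_min: float, x_max: float, y_max: float,
                         gsd_m: float) -> float:
    """Crown width (m) as the mean of the box's pixel width and height times the GSD."""
    if x_max <= x_min or y_max <= y_min:
        raise ValueError("box must have positive width and height")
    width_px = x_max - x_min
    height_px = y_max - y_min
    return 0.5 * (width_px + height_px) * gsd_m


if __name__ == "__main__":
    # Illustrative camera and flight values, not the study's actual settings.
    gsd = ground_sampling_distance(13.2, 8.8, 50.0, 5472)
    print(f"GSD = {gsd * 100:.2f} cm/pixel")
    print(f"crown width = {crown_width_from_box(100, 120, 460, 440, gsd):.2f} m")
```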

2.3. Dataset Establishment
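YOLOv7 training code reads one text label file per image. Each object gets one `class cx cy w h` line, normalized by image size. Assume the crowns were first annotated as Pascal VOC-style pixel corner boxes; this text does not describe the annotation format. Under that assumption, the conversion could look like the sketch below. The helper names are hypothetical, and class 0 stands for "crown" in a single-class dataset.

```python
from typing import Tuple

VocBox = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def voc_to_yolo(box: VocBox, image_width: int,
                image_height: int) -> Tuple[float, float, float, float]:
    """Convert a pixel corner box to YOLO's normalized (cx, cy, w, h)."""
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2.0 / image_width
    cy = (y_min + y_max) / 2.0 / image_height
    w = (x_max - x_min) / image_width
    h = (y_max - y_min) / image_height
    return cx, cy, w, h


def yolo_label_line(class_id: int, box: VocBox, image_width: int, image_height: int) -> str:
    """Format one line of a YOLO-style .txt label file."""
    cx, cy, w, h = voc_to_yolo(box, image_width, image_height)
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# Usage: class 0 = crown (assumed single-class dataset), box (100, 200)-(300, 400)
# in a 1000 x 800 image tile; prints "0 0.200000 0.375000 0.200000 0.250000".
print(yolo_label_line(0, (100, 200, 300, 400), 1000, 800))
```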

2.4. Mixup Data Enhancement

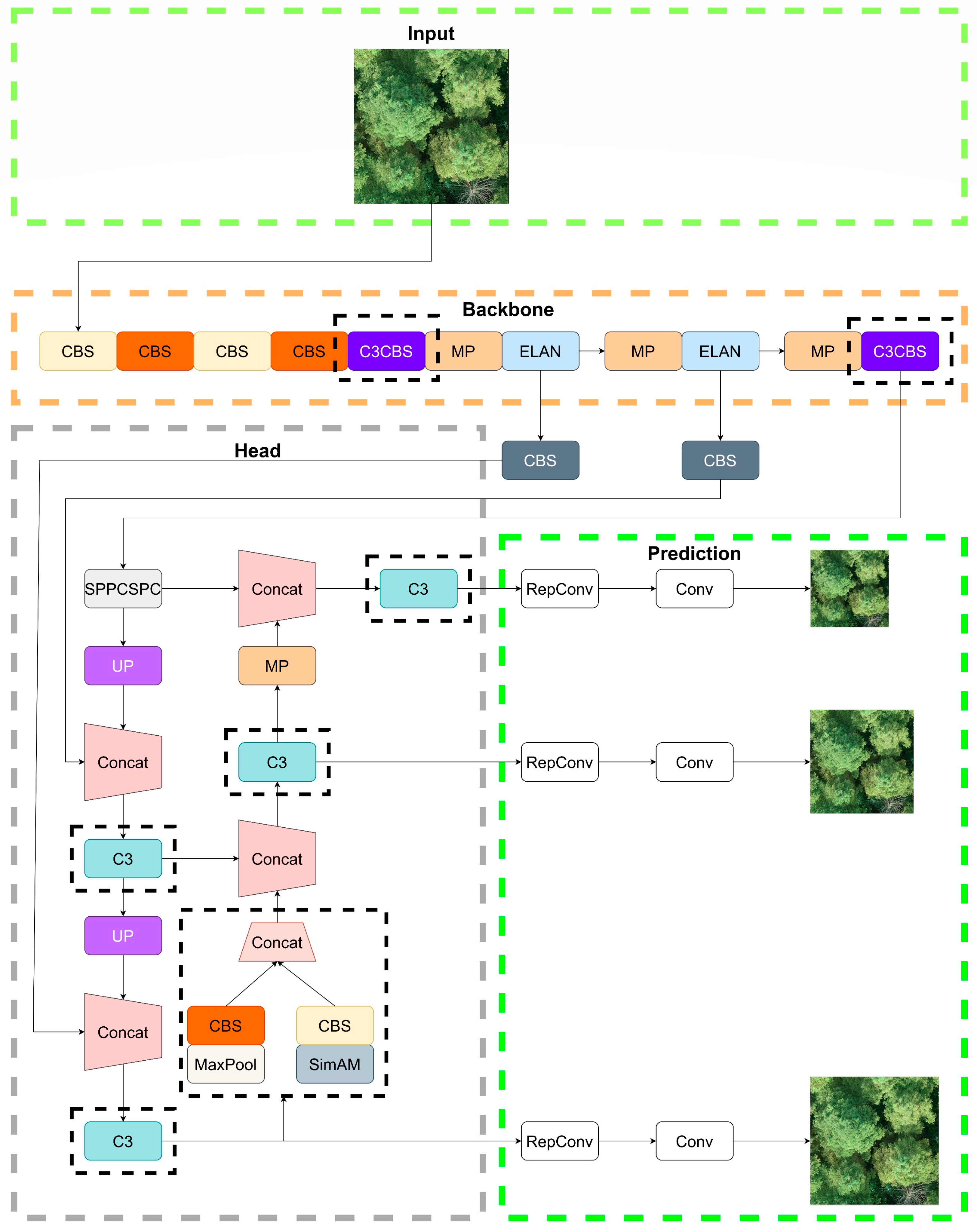
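Mixup (Zhang et al.) forms a convex combination of two training samples: x̃ = λx_a + (1 − λ)x_b, with λ ~ Beta(α, α). In object detection, YOLO-family training code commonly blends the two images this way. Rather than weighting the labels, it keeps the boxes of both images as targets. The toy sketch below works on nested lists; the function name and α = 8 are illustrative assumptions, not the settings used in this study.

```python
import random
from typing import List, Optional, Tuple

Image = List[List[float]]                      # H x W grayscale pixels (toy format)
Box = Tuple[int, float, float, float, float]   # (class, cx, cy, w, h), normalized


def mixup_detection(img_a: Image, boxes_a: List[Box],
                    img_b: Image, boxes_b: List[Box],
                    alpha: float = 8.0,
                    rng: Optional[random.Random] = None) -> Tuple[Image, List[Box], float]:
    """Blend two same-sized images with lambda ~ Beta(alpha, alpha); keep both box sets."""
    if len(img_a) != len(img_b) or any(len(ra) != len(rb) for ra, rb in zip(img_a, img_b)):
        raise ValueError("images must have identical shapes")
    if rng is None:
        rng = random.Random()
    lam = rng.betavariate(alpha, alpha)
    mixed = [[lam * pa + (1.0 - lam) * pb for pa, pb in zip(ra, rb)]
             for ra, rb in zip(img_a, img_b)]
    # Boxes are not weighted: every crown from either image stays a training target.
    return mixed, boxes_a + boxes_b, lam


# Usage: blend an all-black and an all-white 4 x 4 tile.
rng = random.Random(0)
black = [[0.0] * 4 for _ in range(4)]
white = [[1.0] * 4 for _ in range(4)]
img, boxes, lam = mixup_detection(black, [(0, 0.3, 0.3, 0.2, 0.2)],
                                  white, [(0, 0.7, 0.6, 0.25, 0.3)], rng=rng)
print(f"lambda = {lam:.3f}, boxes kept = {len(boxes)}")
```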

3. Method

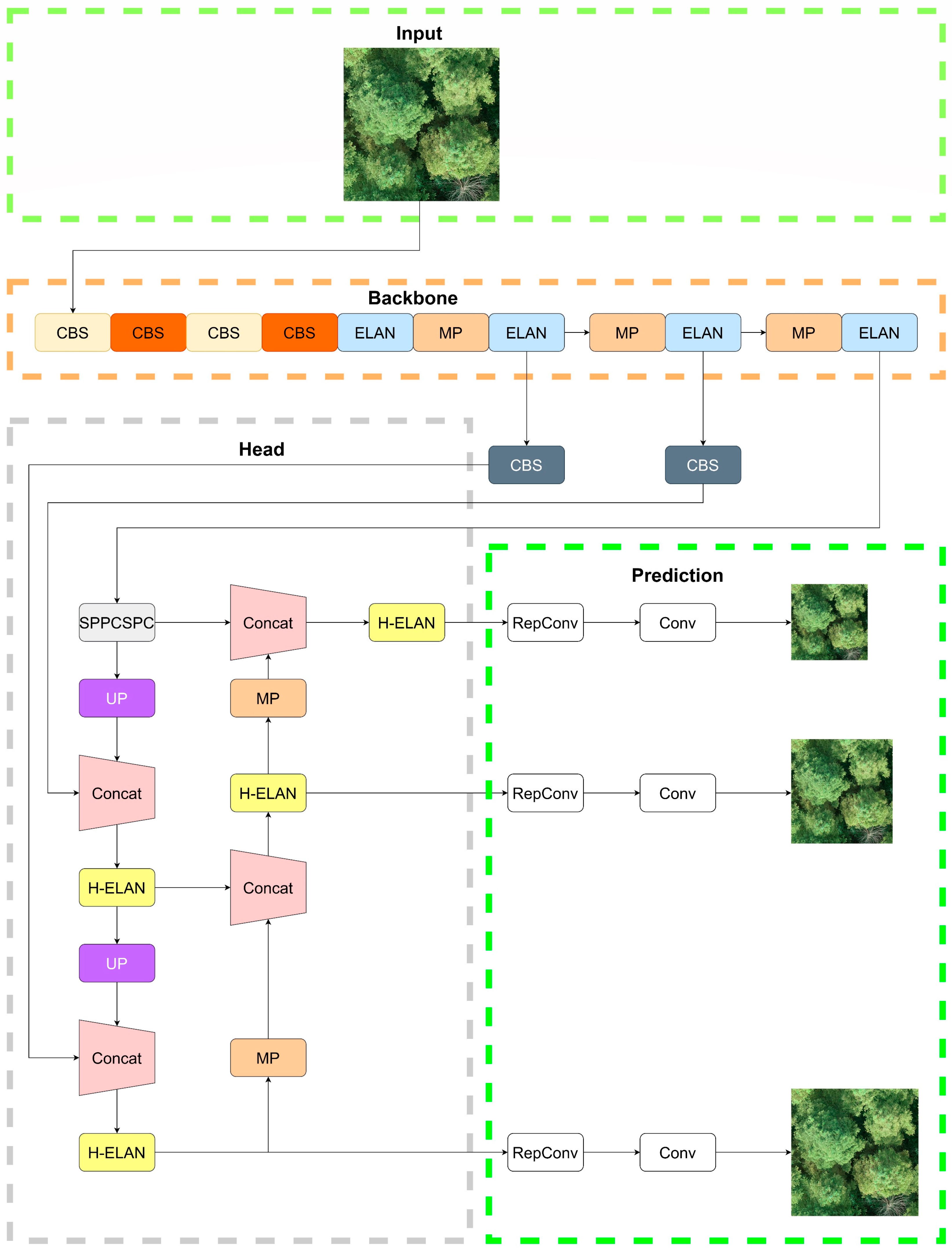

3.1. Description of YOLOv7 Algorithm

3.2. SimAM Attention Mechanism

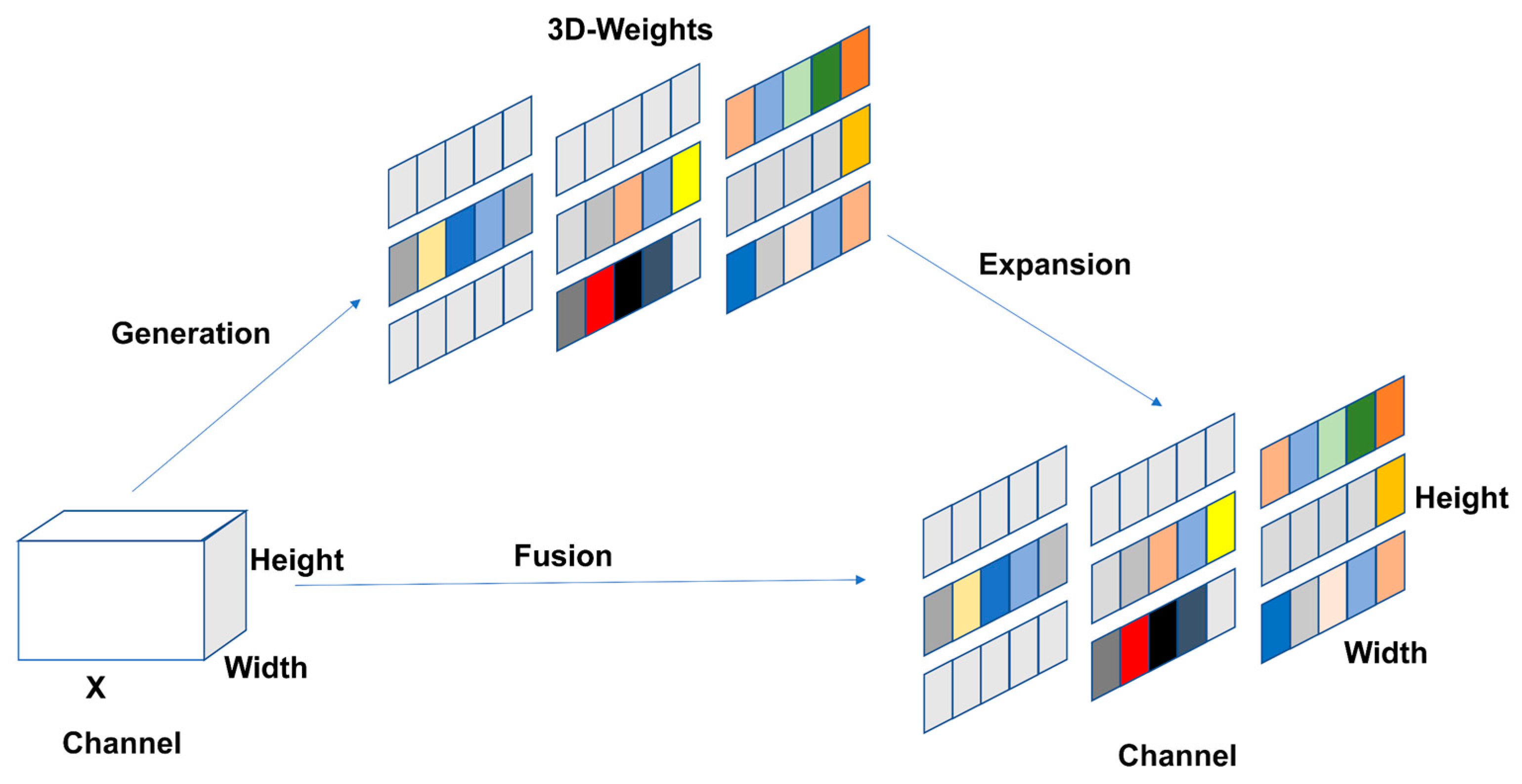

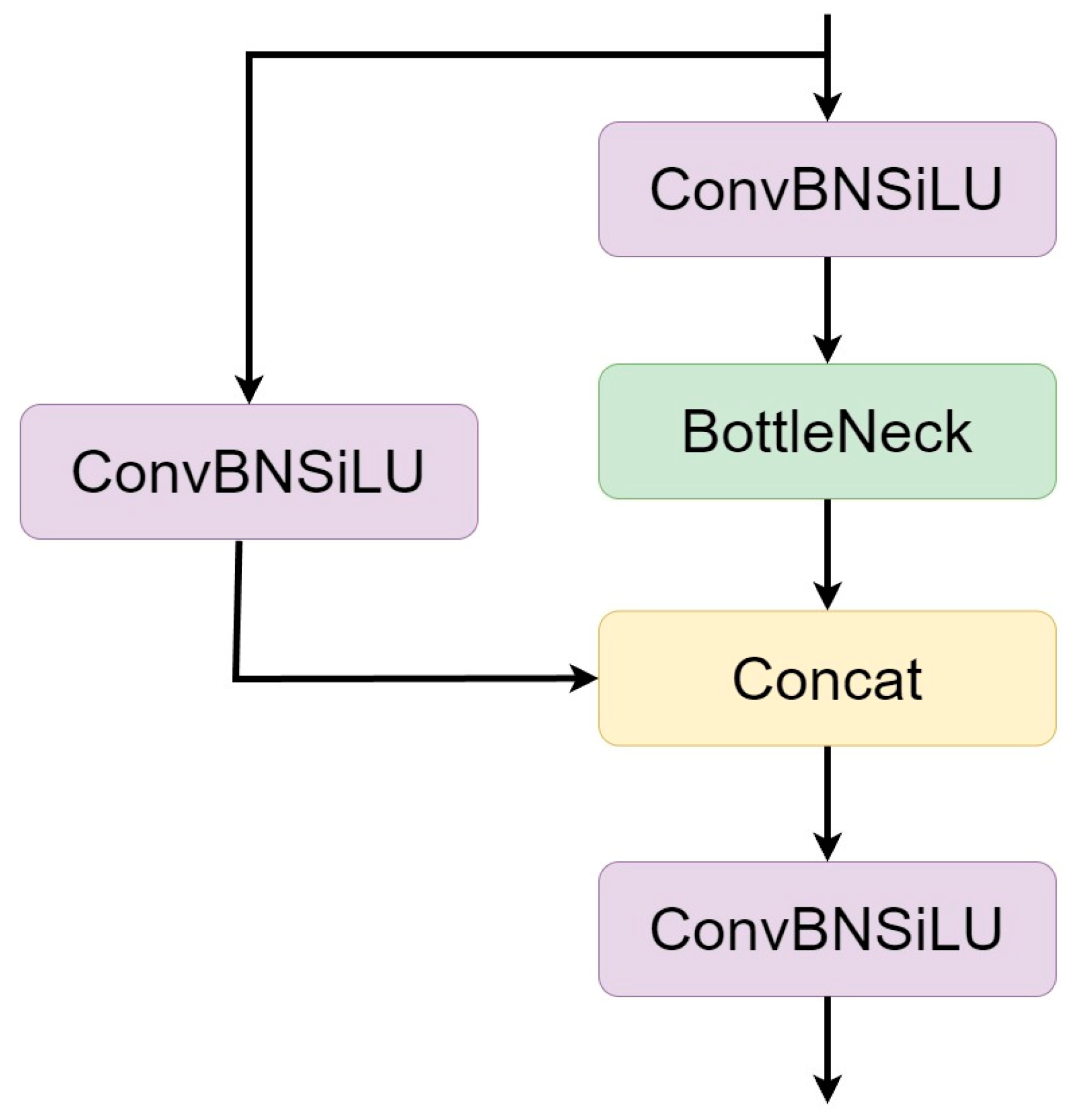
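SimAM (Yang et al.) is parameter-free. For each activation t in a channel, it computes a minimal energy e_t* = 4(σ̂² + λ)/((t − μ̂)² + 2σ̂² + 2λ) from the channel's spatial mean μ̂ and variance σ̂². It then rescales the feature by sigmoid(1/e_t*), so more distinctive activations (lower energy) receive larger weights. The sketch below restates that rule in plain Python, one channel at a time. The function names are hypothetical, and λ = 1e-4 is assumed to match the default in the SimAM authors' public code. Real networks apply the same arithmetic to batched tensors.

```python
import math
from typing import List

Channel = List[List[float]]  # one H x W feature map


def simam_channel(x: Channel, e_lambda: float = 1e-4) -> Channel:
    """Rescale one channel by sigmoid(1 / e_t*), SimAM's parameter-free attention."""
    values = [v for row in x for v in row]
    n = len(values) - 1
    if n < 1:
        raise ValueError("a channel needs at least two activations")
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / n  # spatial variance, divisor H*W - 1

    def weight(t: float) -> float:
        # 1 / e_t* = (t - mu)^2 / (4 (var + lambda)) + 0.5; lower energy -> larger weight
        inv_energy = (t - mu) ** 2 / (4.0 * (var + e_lambda)) + 0.5
        return 1.0 / (1.0 + math.exp(-inv_energy))

    return [[t * weight(t) for t in row] for row in x]


def simam(feature_map: List[Channel], e_lambda: float = 1e-4) -> List[Channel]:
    """Apply SimAM independently to every channel of a C x H x W feature map."""
    return [simam_channel(channel, e_lambda) for channel in feature_map]


# Usage: the outlying activation (5.0) receives a larger weight than its neighbors.
print(simam([[[1.0, 1.0], [1.0, 5.0]]]))
```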

3.3. C3 Lightweight Module

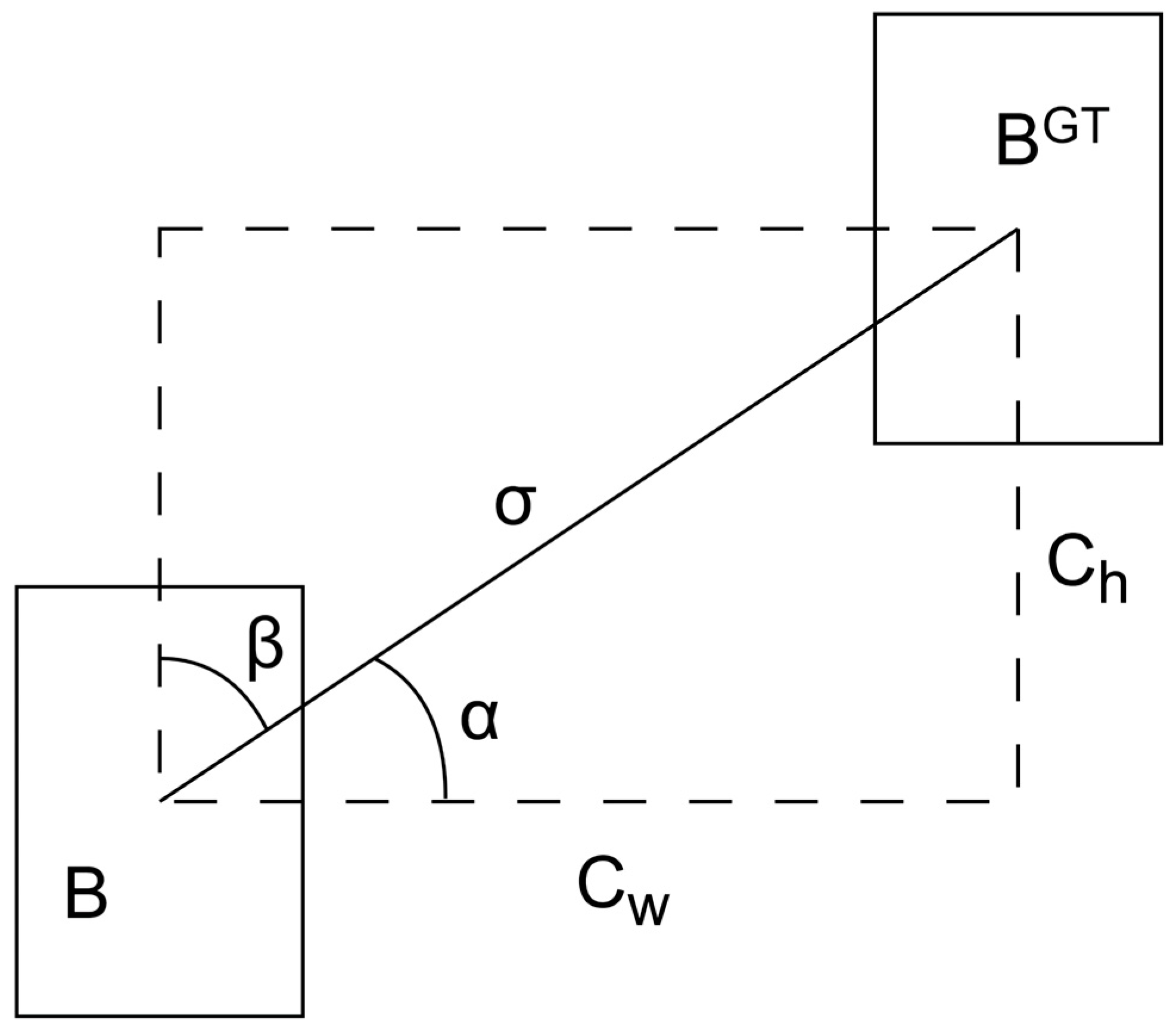

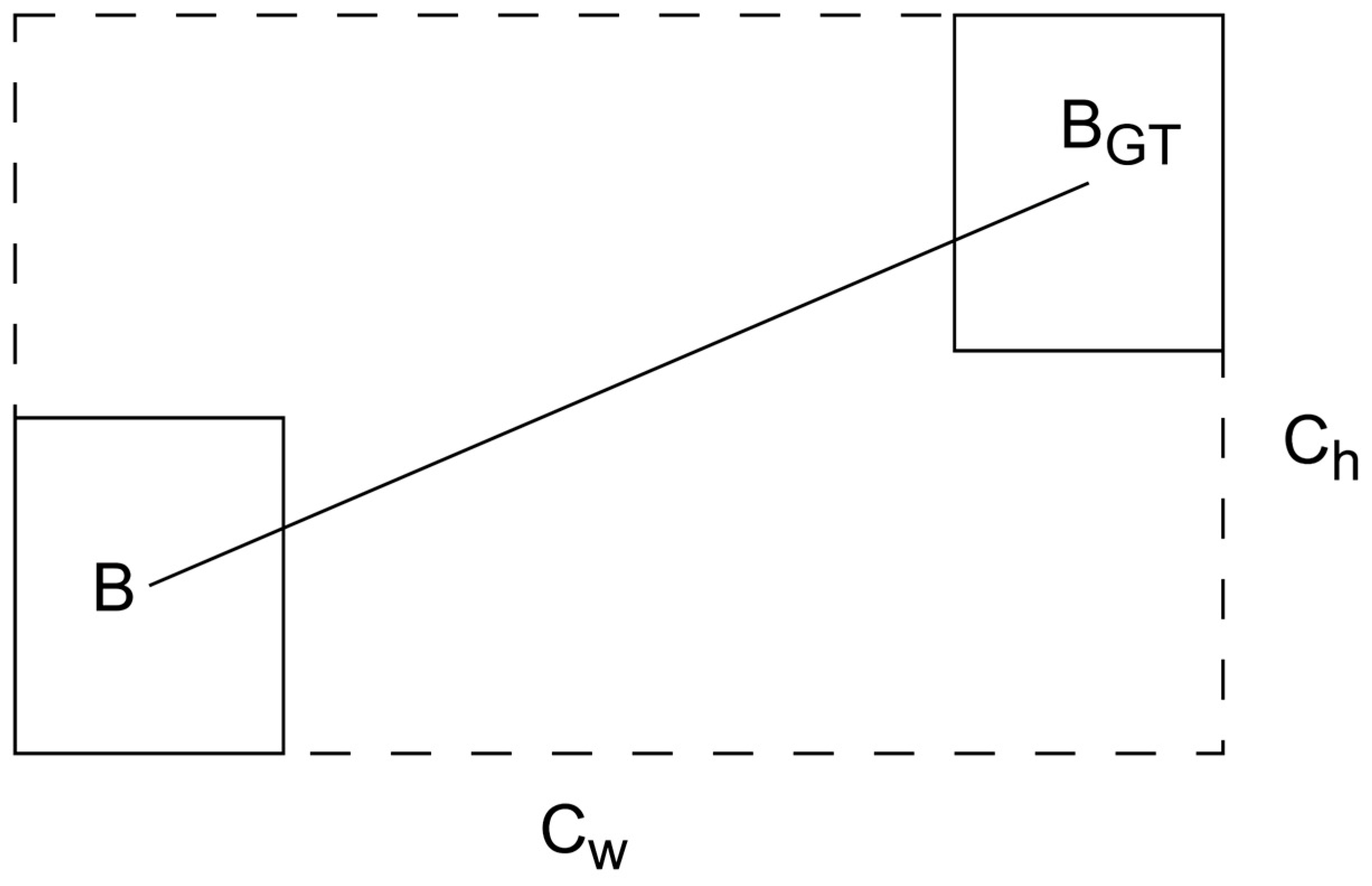

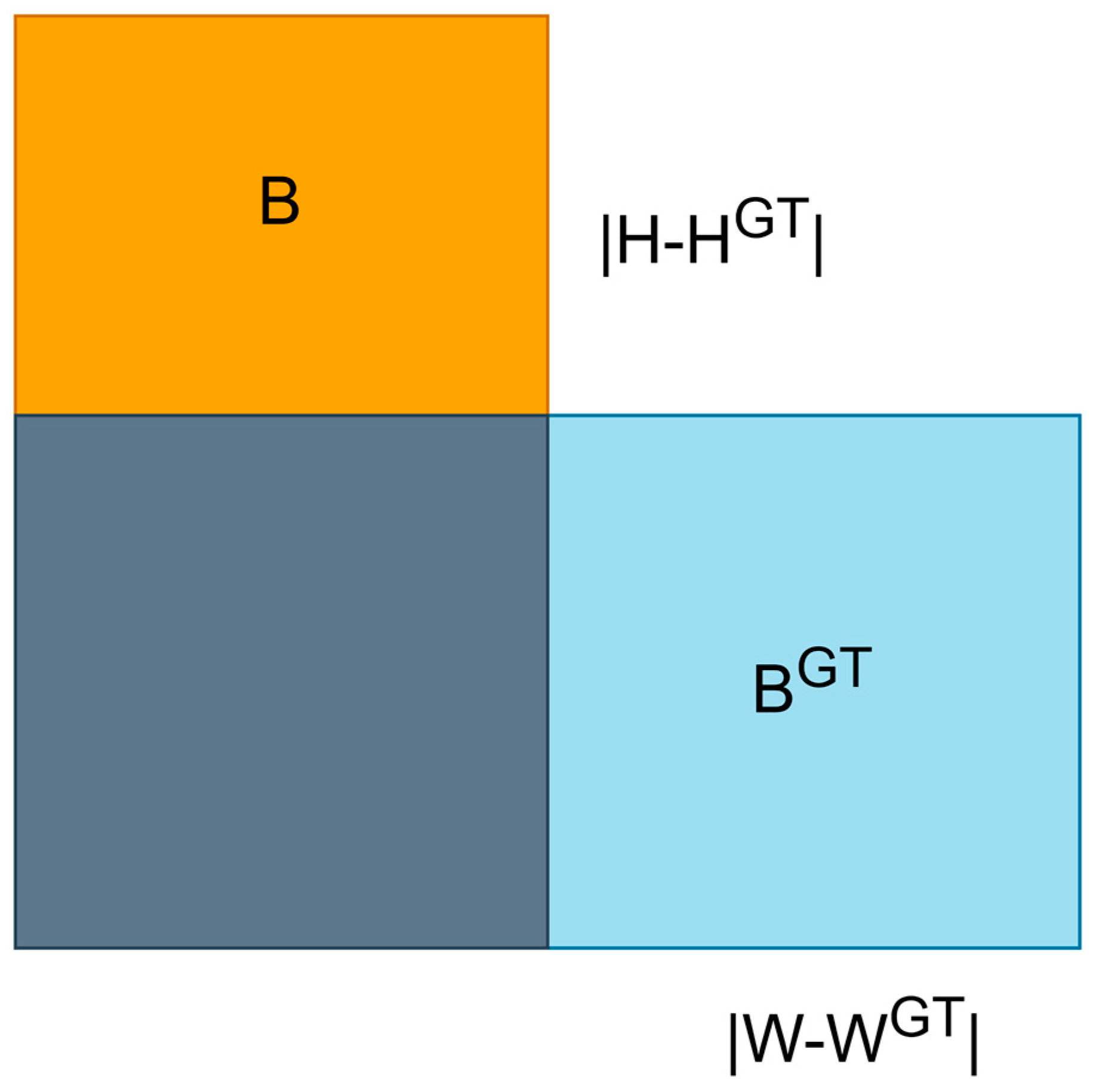
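This text does not describe the C3 module's structure. Assume it is the YOLOv5-style CSP bottleneck with three convolutions: two parallel 1×1 convolutions, a stack of n residual bottlenecks on one branch, concatenation, and a final 1×1 convolution. Under that assumption, its parameter count can be tallied as in the sketch below. The helper names are hypothetical, grouped convolutions are ignored, and only the learnable BatchNorm scale and shift are counted.

```python
def conv_bn_params(c_in: int, c_out: int, k: int) -> int:
    """Learnable parameters of a bias-free k x k convolution followed by BatchNorm."""
    return k * k * c_in * c_out + 2 * c_out  # conv weights + BN scale/shift


def bottleneck_params(c: int) -> int:
    """Bottleneck inside C3 (hidden width = c): 1x1 conv then 3x3 conv."""
    return conv_bn_params(c, c, 1) + conv_bn_params(c, c, 3)


def c3_params(c_in: int, c_out: int, n: int = 1, e: float = 0.5) -> int:
    """Assumed C3 layout: cv1 and cv2 in parallel, n bottlenecks after cv1, concat, cv3."""
    c_hidden = int(c_out * e)
    return (conv_bn_params(c_in, c_hidden, 1)         # cv1 -> bottleneck branch
            + conv_bn_params(c_in, c_hidden, 1)       # cv2 -> bypass branch
            + conv_bn_params(2 * c_hidden, c_out, 1)  # cv3 after concatenation
            + n * bottleneck_params(c_hidden))


print(c3_params(64, 64), conv_bn_params(64, 64, 3))
```

With 64 input and output channels and one bottleneck, this tally gives 18,816 parameters. A single 3×3 convolution with the same channels has 36,992, so the C3 block uses roughly half. This is one way to see why such a block is regarded as lightweight.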

3.4. SIoU
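SIoU (Gevorgyan, 2022) augments the IoU term with angle, distance and shape costs. The block below restates that paper's formulation for reference. The notation is ours and may differ from the symbols in this article's own equations. In particular, C_w and C_h denote the width and height of the smallest box enclosing both boxes, so the vertical center offset c_h is not reused for them.

```latex
\begin{aligned}
\Lambda &= 1 - 2\sin^{2}\!\left(\arcsin(x) - \frac{\pi}{4}\right), \qquad x = \frac{c_h}{\sigma} = \sin\alpha, \\
\sigma &= \sqrt{\left(b_{c_x}^{gt} - b_{c_x}\right)^{2} + \left(b_{c_y}^{gt} - b_{c_y}\right)^{2}}, \qquad
c_h = \max\left(b_{c_y}^{gt}, b_{c_y}\right) - \min\left(b_{c_y}^{gt}, b_{c_y}\right), \\
\Delta &= \sum_{t = x, y}\left(1 - e^{-\gamma \rho_t}\right), \qquad
\rho_x = \left(\frac{b_{c_x}^{gt} - b_{c_x}}{C_w}\right)^{2}, \quad
\rho_y = \left(\frac{b_{c_y}^{gt} - b_{c_y}}{C_h}\right)^{2}, \quad
\gamma = 2 - \Lambda, \\
\Omega &= \sum_{t = w, h}\left(1 - e^{-\omega_t}\right)^{\theta}, \qquad
\omega_w = \frac{\lvert w - w^{gt}\rvert}{\max\left(w, w^{gt}\right)}, \quad
\omega_h = \frac{\lvert h - h^{gt}\rvert}{\max\left(h, h^{gt}\right)}, \\
IoU &= \frac{\lvert B \cap B^{GT}\rvert}{\lvert B \cup B^{GT}\rvert}, \\
L_{box} &= 1 - IoU + \frac{\Delta + \Omega}{2}.
\end{aligned}
```

The symbols are defined as follows:

- b_c denotes a box center (superscript gt for the ground truth).
- σ is the distance between the two centers.
- α is the angle between the line joining the centers and the horizontal axis.
- θ is a hyperparameter controlling the emphasis on shape.

Since 1 − 2 sin²(arcsin x − π/4) simplifies to sin 2α, the angle cost is 0 when the centers are horizontally or vertically aligned and reaches 1 at 45°.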

- (1) The angle loss is defined as follows:

- (2) The distance loss is defined as follows:

- (3) The shape loss is defined as follows:

- (4) The IoU loss is defined as follows:
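The four SIoU terms listed above can be checked numerically. The plain-Python sketch below evaluates one predicted/ground-truth box pair. It is written from Gevorgyan's SIoU formulas, not taken from this paper's code. θ = 4 and the small `eps` guard are assumptions, and real training code evaluates the loss on batched tensors.

```python
import math
from typing import Tuple

BoxXYXY = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def siou_loss(pred: BoxXYXY, gt: BoxXYXY, theta: float = 4.0, eps: float = 1e-9) -> float:
    """SIoU box loss for one pair: 1 - IoU + (distance cost + shape cost) / 2."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # IoU term
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Angle cost: 1 - 2 sin^2(arcsin(x) - pi/4), with x = sin(alpha) = |dy| / sigma
    dx = (gx1 + gx2) / 2 - (px1 + px2) / 2
    dy = (gy1 + gy2) / 2 - (py1 + py2) / 2
    sigma = math.hypot(dx, dy)
    x = abs(dy) / (sigma + eps)
    angle_cost = 1 - 2 * math.sin(math.asin(x) - math.pi / 4) ** 2

    # Distance cost, normalized by the smallest enclosing box (C_w, C_h)
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    gamma = 2 - angle_cost
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost
    omega_w = abs(pw - gw) / max(pw, gw, eps)
    omega_h = abs(ph - gh) / max(ph, gh, eps)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (distance_cost + shape_cost) / 2


# Usage: a small horizontal offset is penalized less than a large one.
print(siou_loss((0, 0, 10, 10), (1, 0, 11, 10)), siou_loss((0, 0, 10, 10), (5, 0, 15, 10)))
```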

3.5. Improved YOLOv7 Network Model

3.6. Evaluation Indexes
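The tables report Precision, Recall, F1-Score and mAP@0.5, but the evaluation equations are not reproduced in this text. The sketch below therefore uses the usual definitions as an assumption: P = TP/(TP + FP), R = TP/(TP + FN) and F1 = 2PR/(P + R). AP is computed as the area under the precision envelope with all-point interpolation, as in the Pascal VOC protocol. The sketch does not show how detections are matched to ground truth at IoU ≥ 0.5, which is the step that yields TP and FP. With a single crown class, mAP@0.5 equals this AP. The function names are hypothetical.

```python
from typing import Sequence, Tuple


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision = TP/(TP+FP), Recall = TP/(TP+FN), and their F1."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """All-point interpolated AP: area under the monotone precision envelope.

    Inputs are cumulative values for detections ranked by descending confidence,
    so `recalls` is non-decreasing.
    """
    if len(recalls) != len(precisions):
        raise ValueError("recalls and precisions must have the same length")
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [1.0] + list(precisions) + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # envelope: best precision at recall >= r
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


# Usage: F1 from the M1 row (precision 96.87%, recall 82.14%).
print(f"{f1_score(0.9687, 0.8214):.4f}")
```

For the M1 row, this prints 0.8890, matching the reported F1-Score of 88.90%.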

4. Results and Discussion

4.1. Experimental Configuration and Model Training

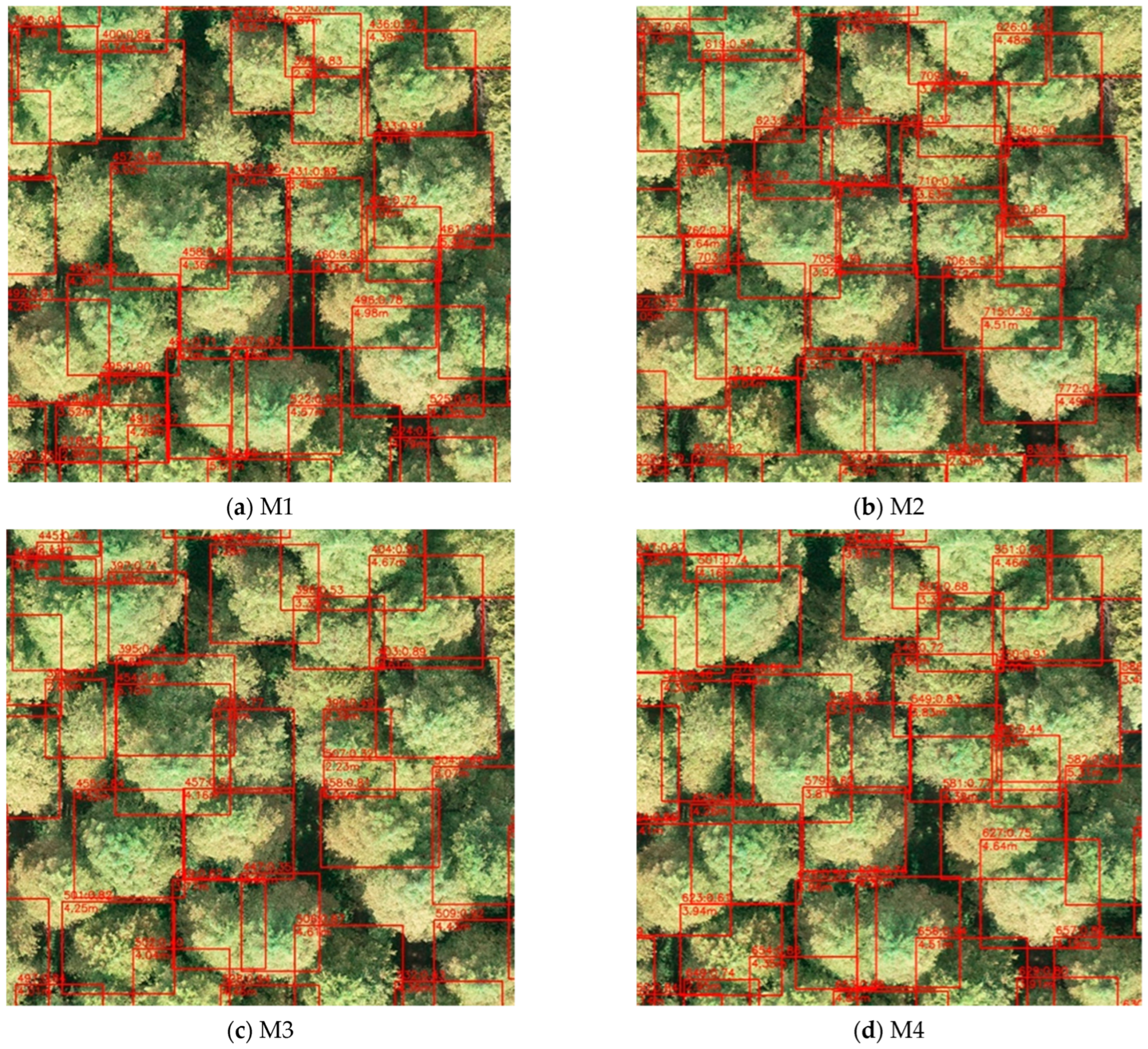

4.2. Comparison of Crown Detection Performance

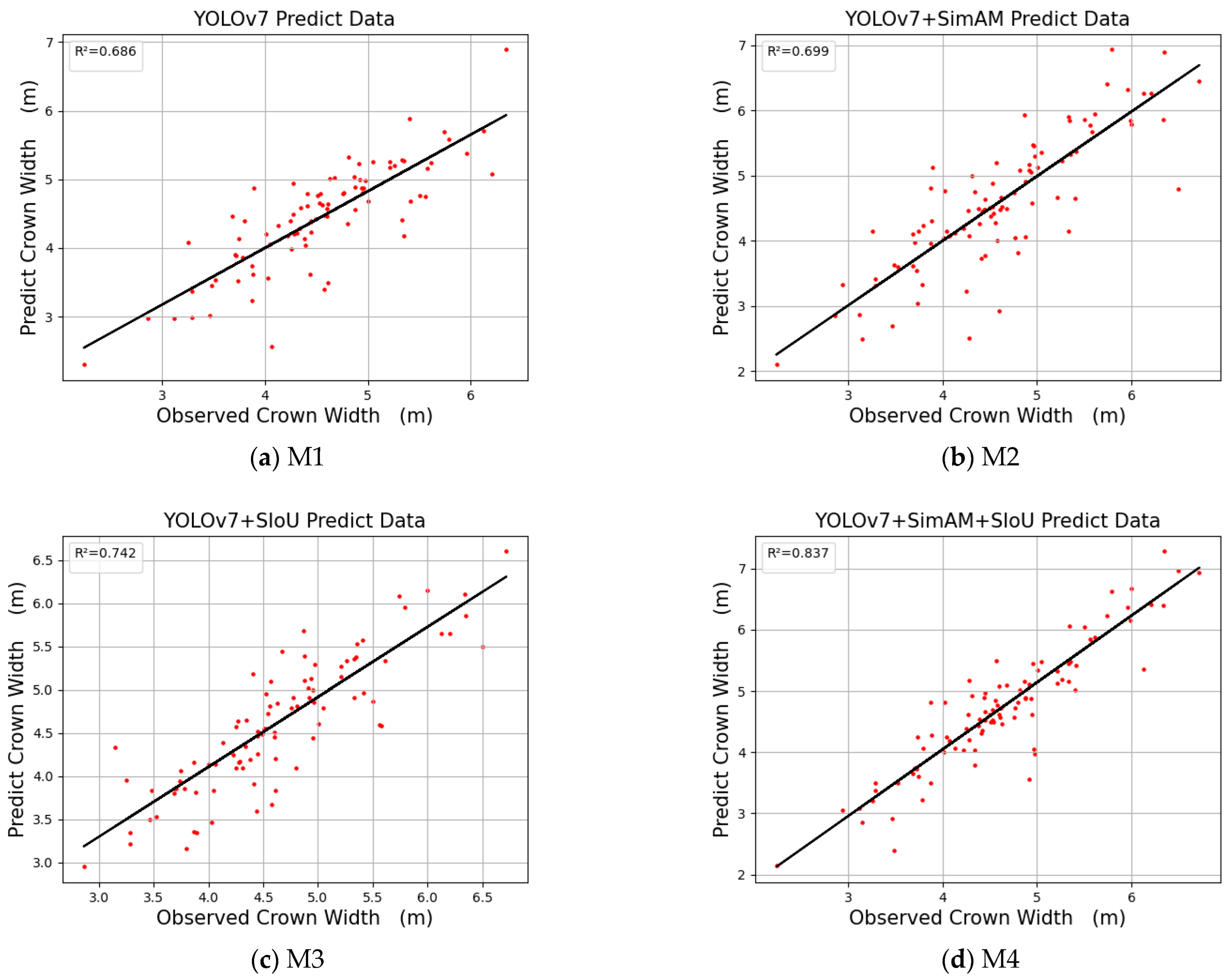

4.3. Comparison of Crown Width Measurement Accuracy

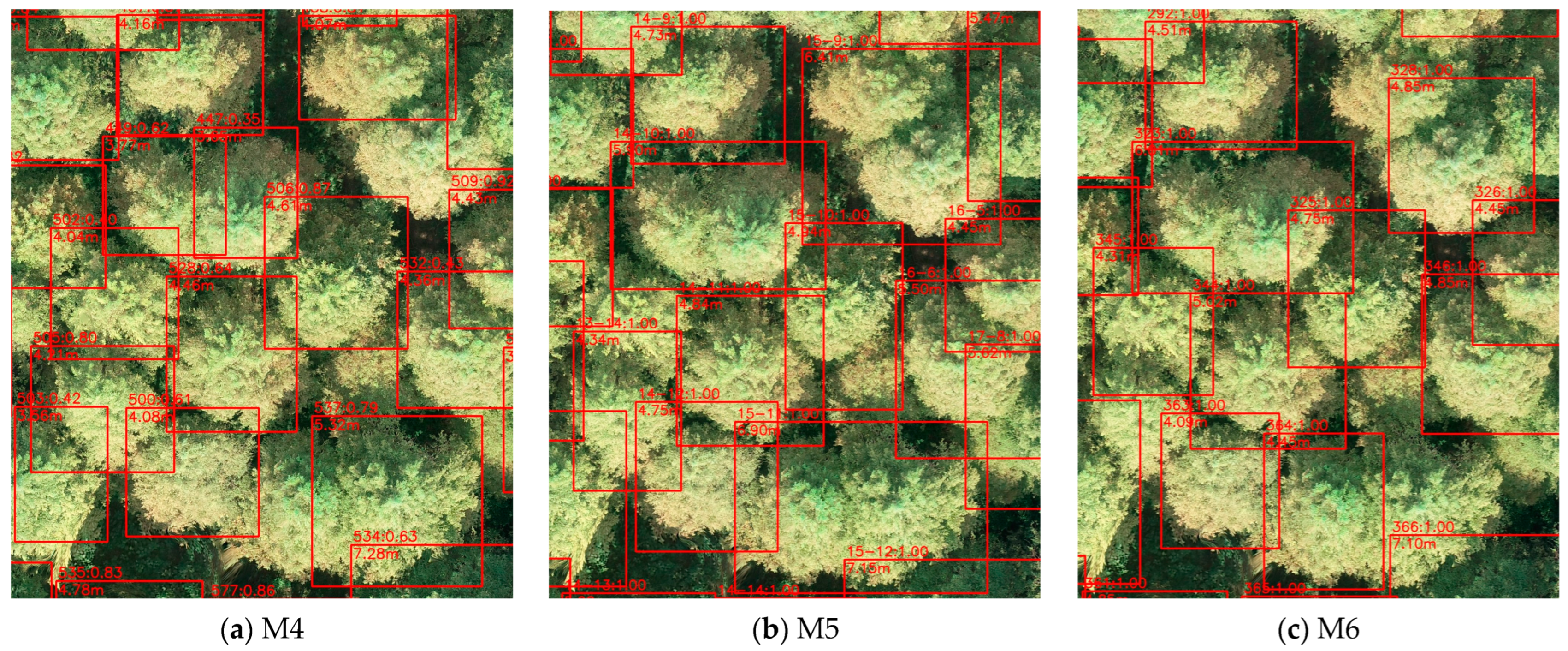

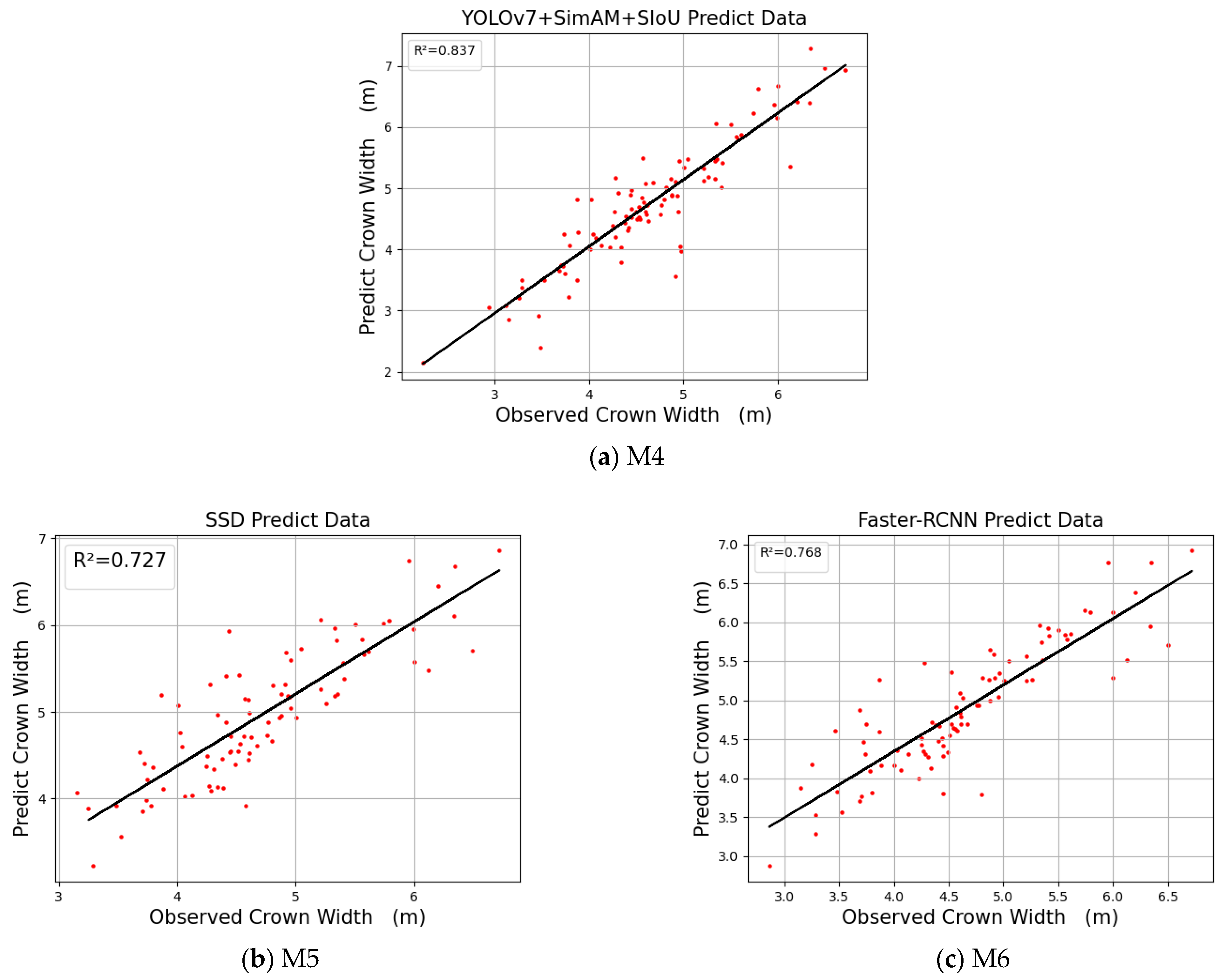
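Crown width accuracy is reported as Bias, RMSE and R², but their exact definitions are not reproduced in this text. The sketch below assumes the following:

- Bias is the mean signed difference between extracted and field-measured widths. Some forestry studies report the mean absolute difference instead.
- RMSE is the root of the mean squared difference.
- R² is the coefficient of determination, 1 − SS_res/SS_tot. This can differ from the squared correlation of a fitted regression line.

The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def crown_width_accuracy(predicted: Sequence[float],
                         measured: Sequence[float]) -> Tuple[float, float, float]:
    """Return (Bias, RMSE, R^2) of extracted vs field-measured crown widths."""
    if len(predicted) != len(measured) or len(measured) < 2:
        raise ValueError("need two equal-length samples covering at least two trees")
    n = len(measured)
    residuals = [p - m for p, m in zip(predicted, measured)]
    bias = sum(residuals) / n  # assumed: mean signed error (positive = overestimate)
    ss_res = sum(r * r for r in residuals)
    rmse = math.sqrt(ss_res / n)
    mean_measured = sum(measured) / n
    ss_tot = sum((m - mean_measured) ** 2 for m in measured)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all measured widths are equal")
    r2 = 1.0 - ss_res / ss_tot  # assumed: coefficient of determination
    return bias, rmse, r2


# Usage with made-up widths (m), not data from this study.
print(crown_width_accuracy([2.0, 3.0, 4.0], [1.0, 3.0, 5.0]))
```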

4.4. Comparison of Crown Identification and Crown Width Measurement Accuracy between Different Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Riutta, T.; Malhi, Y.; Kho, L.K.; Marthews, T.R.; Huaraca Huasco, W.; Khoo, M.; Tan, S.; Turner, E.; Reynolds, G.; Both, S.; et al. Logging Disturbance Shifts Net Primary Productivity and Its Allocation in Bornean Tropical Forests. Glob. Chang. Biol. 2018, 24, 2913–2928.

- Goldstein, J.H.; Caldarone, G.; Duarte, T.K.; Ennaanay, D.; Hannahs, N.; Mendoza, G.; Polasky, S.; Wolny, S.; Daily, G.C. Integrating Ecosystem-Service Tradeoffs into Land-Use Decisions. Proc. Natl. Acad. Sci. USA 2012, 109, 7565–7570.

- Parrotta, J.A. Forest Biodiversity and the Delivery of Ecosystem Goods and Services: Translating Science into Policy. BioScience 2011, 61, 972–981.

- McKinley, D.C.; Ryan, M.G.; Birdsey, R.A.; Giardina, C.P.; Harmon, M.E.; Heath, L.S.; Houghton, R.A.; Jackson, R.B.; Morrison, J.F.; Murray, B.C.; et al. A Synthesis of Current Knowledge on Forests and Carbon Storage in the United States. Ecol. Appl. 2011, 21, 1902–1924.

- Luyssaert, S.; Schulze, E.-D.; Börner, A.; Knohl, A.; Hessenmöller, D.; Law, B.E.; Ciais, P.; Grace, J. Old-Growth Forests as Global Carbon Sinks. Nature 2008, 455, 213–215.

- Fahey, T.J.; Woodbury, P.B.; Battles, J.J.; Goodale, C.L.; Hamburg, S.P.; Ollinger, S.V.; Woodall, C.W. Forest Carbon Storage: Ecology, Management, and Policy. Front. Ecol. Environ. 2010, 8, 245–252.

- Luo, Y.; Le, J.; Zhang, Y.; Wang, R.; Li, Q.; Lu, X.; Liu, J.; Deng, Z. Identification and Functional Analysis of LncRNAs in Response to Seed Aging in Metasequoia glyptostroboides by Third Generation Sequencing Technology. Forests 2022, 13, 1579.

- Sterck, F.; Martínez-Ramos, M.; Dyer-Leal, G.; Rodríguez-Velazquez, J.; Poorter, L. The Consequences of Crown Traits for the Growth and Survival of Tree Saplings in a Mexican Lowland Rainforest. Funct. Ecol. 2003, 17, 194–200.

- Pretzsch, H.; Biber, P.; Uhl, E.; Dahlhausen, J.; Rötzer, T.; Caldentey, J.; Koike, T.; van Con, T.; Chavanne, A.; Seifert, T.; et al. Crown Size and Growing Space Requirement of Common Tree Species in Urban Centres, Parks, and Forests. Urban For. Urban Green. 2015, 14, 466–479.

- Sinoquet, H.; Le Roux, X.; Adam, B.; Ameglio, T.; Daudet, F.A. RATP: A Model for Simulating the Spatial Distribution of Radiation Absorption, Transpiration and Photosynthesis within Canopies: Application to an Isolated Tree Crown. Plant Cell Environ. 2001, 24, 395–406.

- Zarnoch, S.J.; Bechtold, W.A.; Stolte, K.W. Using Crown Condition Variables as Indicators of Forest Health. Can. J. For. Res. 2004, 34, 1057–1070.

- Fu, L.; Sharma, R.P.; Wang, G.; Tang, S. Modelling a System of Nonlinear Additive Crown Width Models Applying Seemingly Unrelated Regression for Prince Rupprecht Larch in Northern China. For. Ecol. Manag. 2017, 386, 71–80.

- Westfall, J.A.; Nowak, D.J.; Henning, J.G.; Lister, T.W.; Edgar, C.B.; Majewsky, M.A.; Sonti, N.F. Crown Width Models for Woody Plant Species Growing in Urban Areas of the U.S. Urban Ecosyst. 2020, 23, 905–917.

- Goodman, R.C.; Phillips, O.L.; Baker, T.R. The Importance of Crown Dimensions to Improve Tropical Tree Biomass Estimates. Ecol. Appl. 2014, 24, 680–698.

- Hemery, G.E.; Savill, P.S.; Pryor, S.N. Applications of the Crown Diameter–Stem Diameter Relationship for Different Species of Broadleaved Trees. For. Ecol. Manag. 2005, 215, 285–294.

- Song, C. Estimating Tree Crown Size with Spatial Information of High Resolution Optical Remotely Sensed Imagery. Int. J. Remote Sens. 2007, 28, 3305–3322.

- Gao, H.; Chen, D.; Sun, X.; Zhang, S. Modelling the Responses of Crown Profiles to Competition in Terms of Different Tree Classes and Directions in Two Coniferous Tree Species in Northeastern China. Eur. J. For. Res. 2021, 140, 159–174.

- Metzger, J.M.; Oren, R. The Effect of Crown Dimensions on Transparency and the Assessment of Tree Health. Ecol. Appl. 2001, 11, 1634–1640.

- Fleck, S.; Mölder, I.; Jacob, M.; Gebauer, T.; Jungkunst, H.F.; Leuschner, C. Comparison of Conventional Eight-Point Crown Projections with LIDAR-Based Virtual Crown Projections in a Temperate Old-Growth Forest. Ann. For. Sci. 2011, 68, 1173–1185.

- Bargoti, S.; Underwood, J.P. Image Segmentation for Fruit Detection and Yield Estimation in Apple Orchards. J. Field Robot. 2017, 34, 1039–1060.

- Bragg, D.C. A Local Basal Area Adjustment for Crown Width Prediction. North. J. Appl. For. 2001, 18, 22–28.

- Fu, L.; Sun, H.; Sharma, R.P.; Lei, Y.; Zhang, H.; Tang, S. Nonlinear Mixed-Effects Crown Width Models for Individual Trees of Chinese Fir (Cunninghamia lanceolata) in South-Central China. For. Ecol. Manag. 2013, 302, 210–220.

- Sharma, R.P.; Vacek, Z.; Vacek, S. Individual Tree Crown Width Models for Norway Spruce and European Beech in Czech Republic. For. Ecol. Manag. 2016, 366, 208–220.

- Song, C.; Dickinson, M.B.; Su, L.; Zhang, S.; Yaussey, D. Estimating Average Tree Crown Size Using Spatial Information from Ikonos and QuickBird Images: Across-Sensor and across-Site Comparisons. Remote Sens. Environ. 2010, 114, 1099–1107.

- Weinstein, B.G.; Marconi, S.; Bohlman, S.; Zare, A.; White, E. Individual Tree-Crown Detection in RGB Imagery Using Semi-Supervised Deep Learning Neural Networks. Remote Sens. 2019, 11, 1309.

- Emin, M.; Anwar, E.; Liu, S.; Emin, B.; Mamut, M.; Abdukeram, A.; Liu, T. Target Detection-Based Tree Recognition in a Spruce Forest Area with a High Tree Density—Implications for Estimating Tree Numbers. Sustainability 2021, 13, 3279.

- Chen, X.; Jiang, K.; Zhu, Y.; Wang, X.; Yun, T. Individual Tree Crown Segmentation Directly from UAV-Borne LiDAR Data Using the PointNet of Deep Learning. Forests 2021, 12, 131.

- Panagiotidis, D.; Abdollahnejad, A.; Surový, P.; Chiteculo, V. Determining Tree Height and Crown Diameter from High-Resolution UAV Imagery. Int. J. Remote Sens. 2017, 38, 2392–2410.

- Ye, Z.; Wei, J.; Lin, Y.; Guo, Q.; Zhang, J.; Zhang, H.; Deng, H.; Yang, K. Extraction of Olive Crown Based on UAV Visible Images and the U2-Net Deep Learning Model. Remote Sens. 2022, 14, 1523.

- Wu, J.; Yang, G.; Yang, H.; Zhu, Y.; Li, Z.; Lei, L.; Zhao, C. Extracting Apple Tree Crown Information from Remote Imagery Using Deep Learning. Comput. Electron. Agric. 2020, 174, 105504.

- Lou, X.; Huang, Y.; Fang, L.; Huang, S.; Gao, H.; Yang, L.; Weng, Y.; Hung, I.-K. Measuring Loblolly Pine Crowns with Drone Imagery through Deep Learning. J. For. Res. 2022, 33, 227–238.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. Mixup: Beyond Empirical Risk Minimization. arXiv 2018, arXiv:1710.09412.

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

- Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740.

| Method | Improvement Method | Precision | Recall | F1-Score | mAP@0.5 | Parameter Size | Training Time |
|---|---|---|---|---|---|---|---|
| M1 | YOLOv7 | 96.87% | 82.14% | 88.90% | 89.34% | 7.30 MB | 1.92 h |
| M2 | YOLOv7+SimAM | 98.03% | 89.29% | 93.46% | 92.99% | 6.63 MB | 1.31 h |
| M3 | YOLOv7+SIoU | 86.78% | 82.14% | 84.40% | 85.30% | 7.31 MB | 1.96 h |
| M4 | YOLOv7+SimAM+SIoU | 96.23% | 91.07% | 94.58% | 94.34% | 6.63 MB | 1.42 h |

| Method | Improvement Method | Bias | RMSE | R² |
|---|---|---|---|---|
| M1 | YOLOv7 | 0.322 | 0.457 | 0.686 |
| M2 | YOLOv7+SimAM | 0.394 | 0.554 | 0.699 |
| M3 | YOLOv7+SIoU | 0.305 | 0.412 | 0.742 |
| M4 | YOLOv7+SimAM+SIoU | 0.304 | 0.424 | 0.837 |

| Method | Model | Precision | Recall | F1-Score | mAP@0.5 | Parameter Size | Inference Time |
|---|---|---|---|---|---|---|---|
| M4 | YOLOv7+SimAM+SIoU | 96.23% | 91.07% | 94.58% | 94.34% | 6.63 MB | 18.2 ms |
| M5 | SSD | 94.90% | 72.50% | 82.20% | 86.40% | 9.28 MB | 62.4 ms |
| M6 | Faster-RCNN | 90.19% | 92.00% | 91.09% | 96.21% | 53.40 MB | 115.2 ms |

| Method | Model | Bias | RMSE | R² |
|---|---|---|---|---|
| M4 | YOLOv7+SimAM+SIoU | 0.304 | 0.424 | 0.837 |
| M5 | SSD | 0.362 | 0.487 | 0.727 |
| M6 | Faster-RCNN | 0.356 | 0.476 | 0.768 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, C.; Cai, C.; Chen, S.; Xu, H.; Yang, L.; Ji, J.; Huang, S.; Hung, I.-K.; Weng, Y.; Lou, X. Crown Width Extraction of Metasequoia glyptostroboides Using Improved YOLOv7 Based on UAV Images. Drones 2023, 7, 336. https://doi.org/10.3390/drones7060336