An ETA-Based Tactical Conflict Resolution Method for Air Logistics Transportation

Abstract

1. Introduction

1.1. Research Contributions

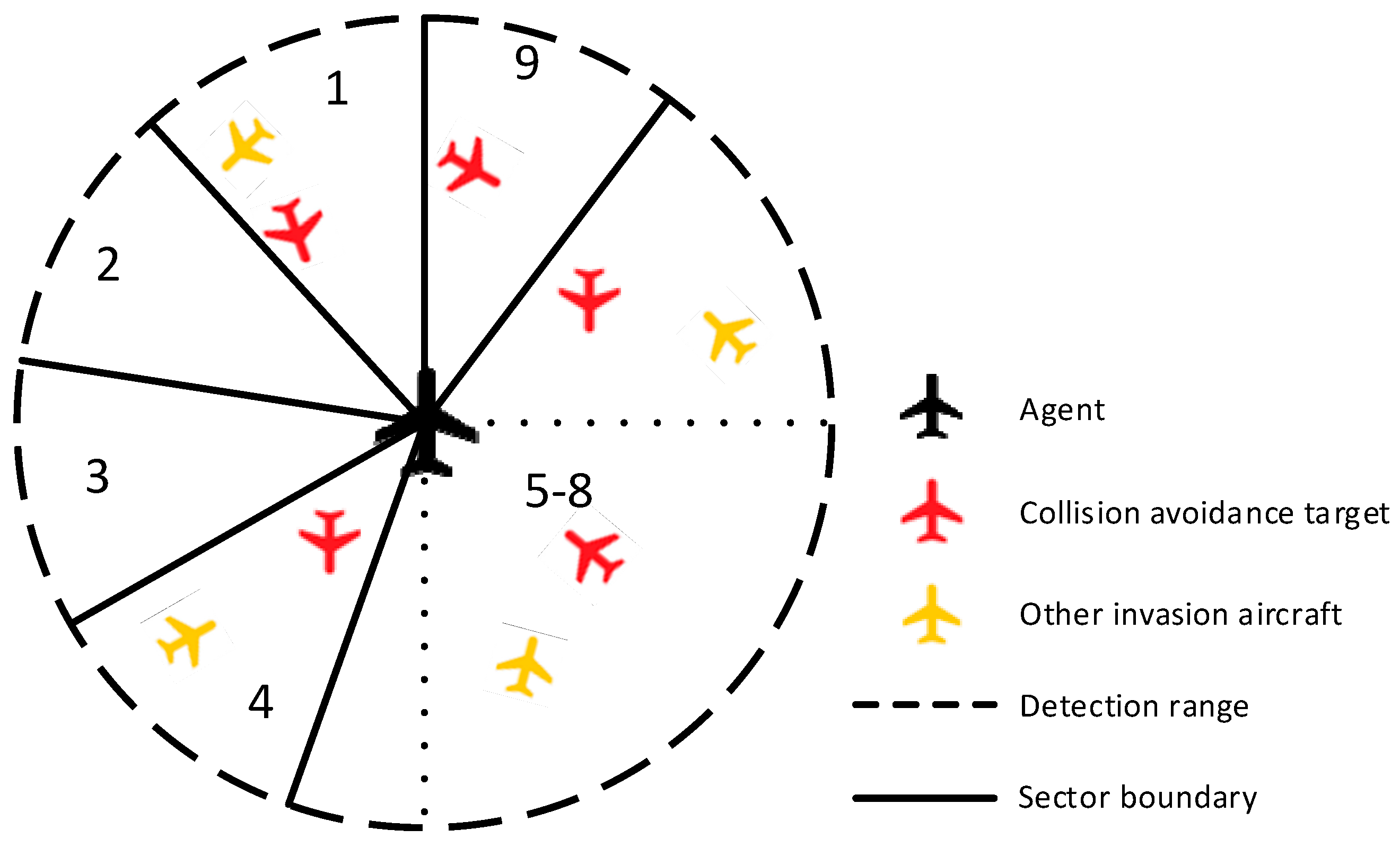

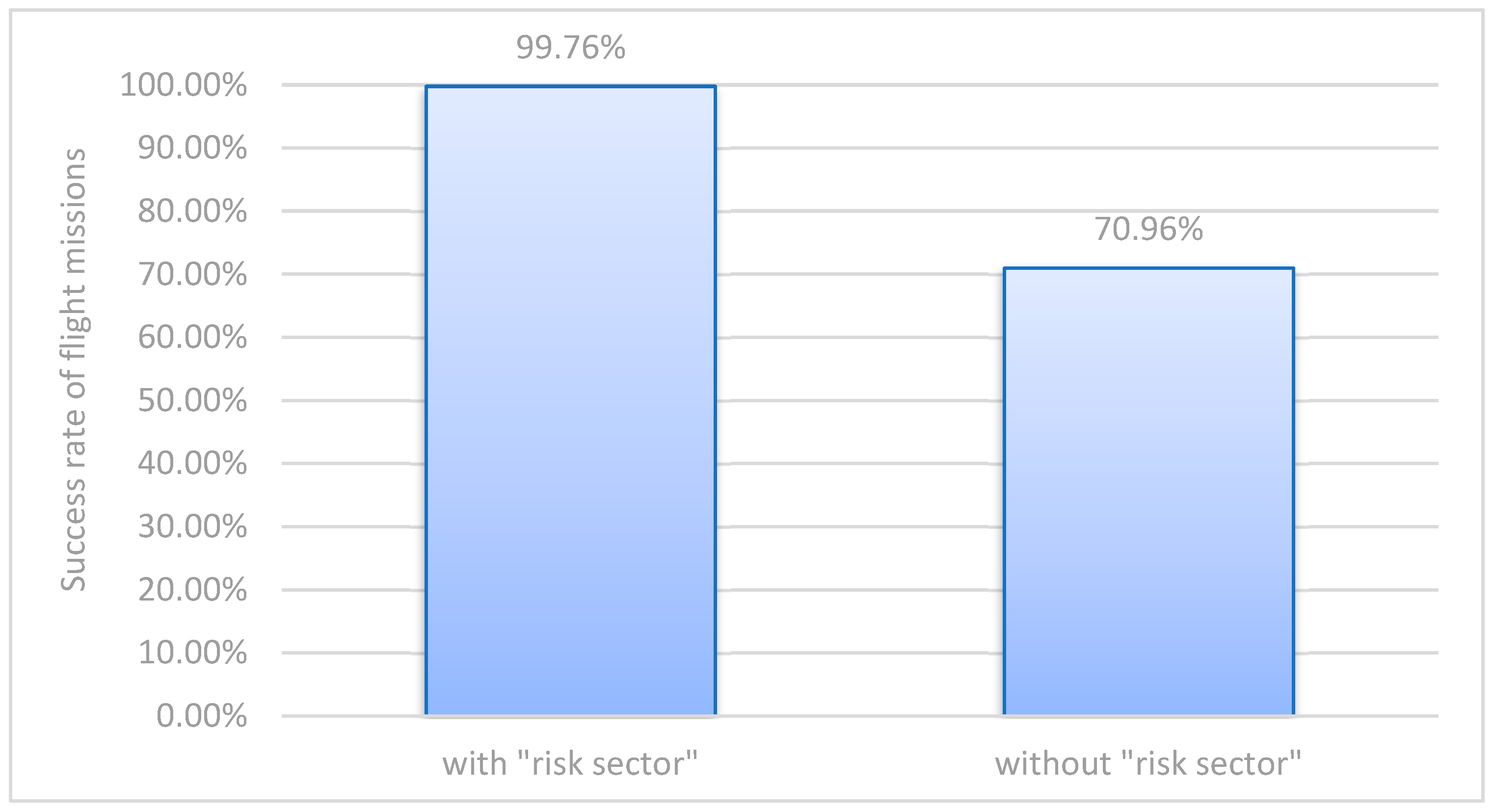

- This study introduces the novel concept of risk sectors to describe the state space. Because the same state information expresses both the relative direction and the distance to the collision avoidance target, this representation improves the success rate of tactical conflict resolution for unmanned aerial vehicles.

- This study addresses tactical conflict resolution under the temporal constraints of the strategic 4D trajectory by modeling it as a multi-objective optimization problem. To the best of our knowledge, this is the first time this problem has been considered. Specifically, this study proposes a novel deep reinforcement learning method for tactical conflict resolution that introduces a criterion reward based on the estimated time of arrival (ETA) at the next pre-defined waypoint. The reward couples collision avoidance with timely arrival at the next 4D waypoint, thus reducing the risk of secondary conflicts.

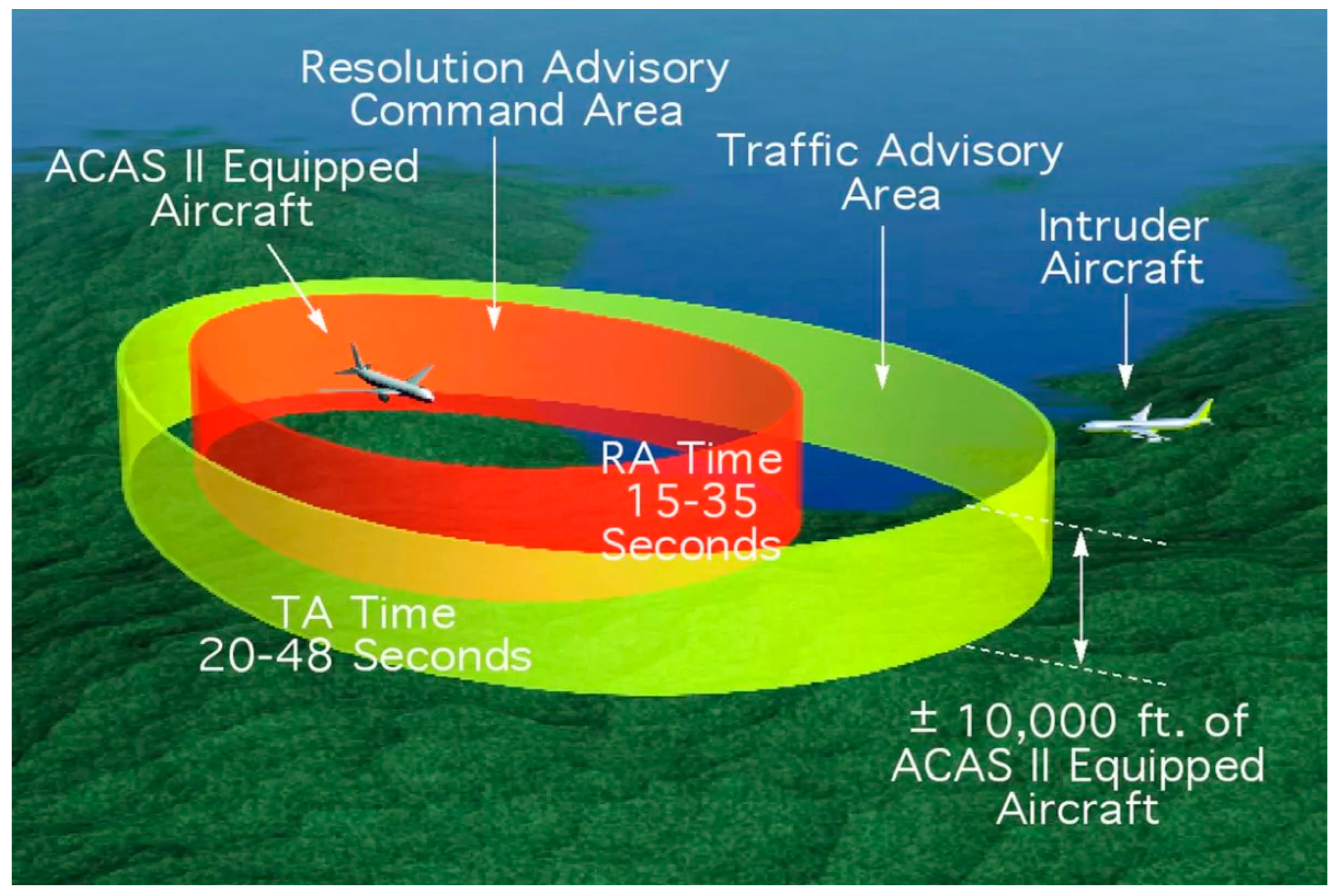

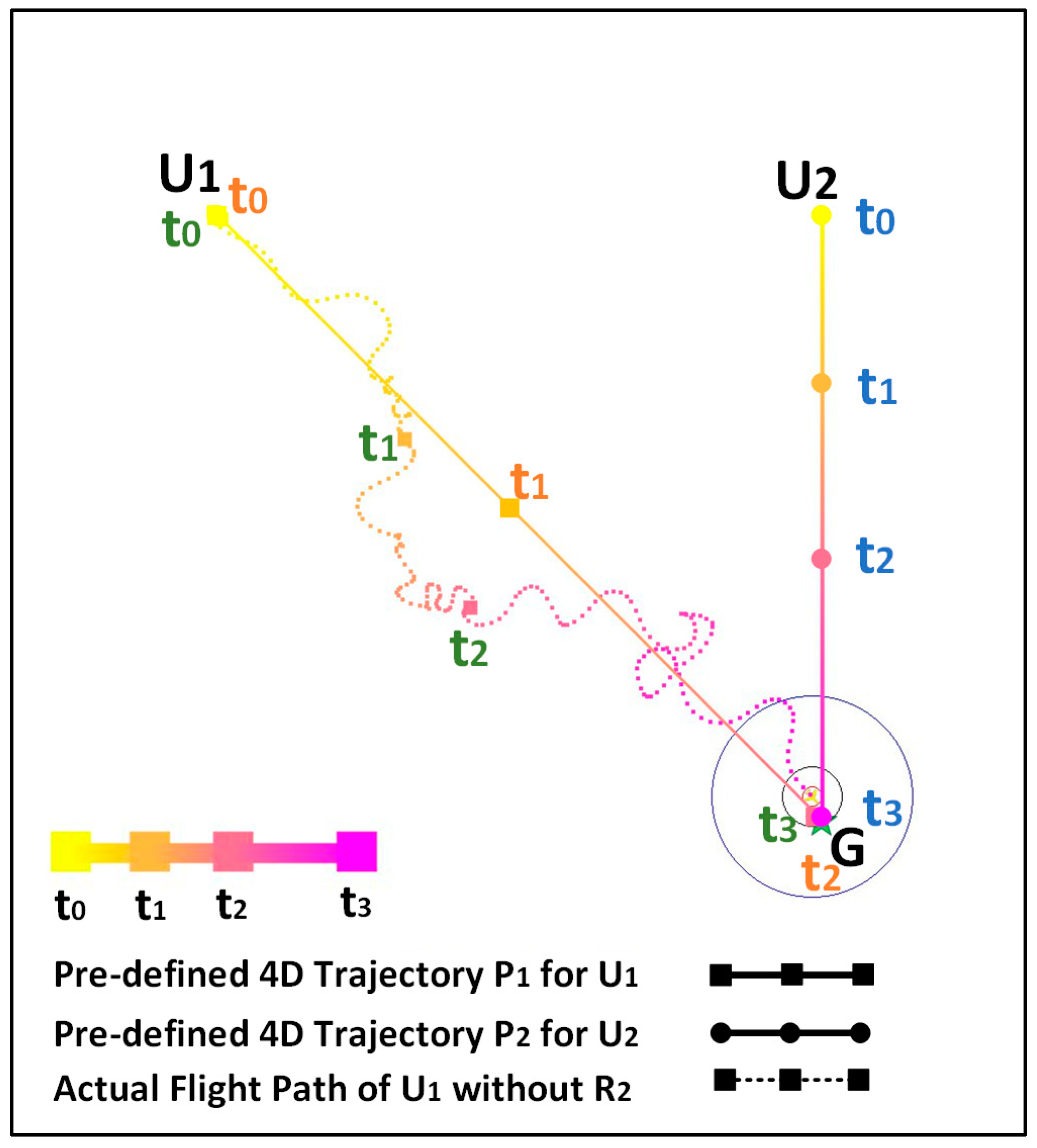

- The simulation results show that our method outperforms the traditional tactical conflict resolution method, improving the success rate of flight missions by 40.59%. Compared with existing standards, our method can operate safely in scenarios with a non-cooperative target density of 0.26 aircraft per square nautical mile, a 3.3-fold improvement over TCAS II. We also apply our method to a specific local scenario with two drones; the results indicate that the drones successfully avoid secondary conflicts through our novel ETA-based temporal reward setting. Moreover, the ablation experiment analyzes the effectiveness of each part of the ETA-based temporal reward in detail.
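As a rough illustration of the ETA-based criterion named in the contributions above, the sketch below estimates the arrival time at the next waypoint from the remaining distance and the current speed, then penalizes early or late arrival only outside a time window. The constant-speed ETA, the linear penalty shape, and all names (`eta_seconds`, `temporal_penalty`, `window_s`, `k_early`, `k_late`) are assumptions made for illustration; they are not the reward definition used in this paper.

```python
def eta_seconds(remaining_distance_m: float, speed_mps: float) -> float:
    """Constant-speed estimate of the time needed to reach the next waypoint."""
    if speed_mps <= 0.0:
        return float("inf")  # a stationary drone never arrives
    return remaining_distance_m / speed_mps


def temporal_penalty(remaining_distance_m, speed_mps, time_to_deadline_s,
                     window_s=10.0, k_early=1.0, k_late=1.0):
    """Hypothetical penalty: zero inside the time window, linear outside it."""
    # Signed arrival time difference: negative means early, positive means late.
    diff = eta_seconds(remaining_distance_m, speed_mps) - time_to_deadline_s
    if abs(diff) <= window_s:
        return 0.0
    if diff < 0:
        return -k_early * (-diff - window_s)  # early arrival penalty
    return -k_late * (diff - window_s)  # late arrival penalty
```

For example, a drone 1000 m from the waypoint at 10 m/s has an ETA of 100 s; with 130 s left before the scheduled arrival and a 10 s window, this sketch would penalize the 20 s of excess earliness.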

1.2. Organization

2. Related Work

2.1. Strategic Trajectory Planning Methods

2.2. Strategies of Tactical Conflict Resolution

3. Preliminaries

3.1. Problem Description

3.2. Model Construction

4. Review of Typical Methods

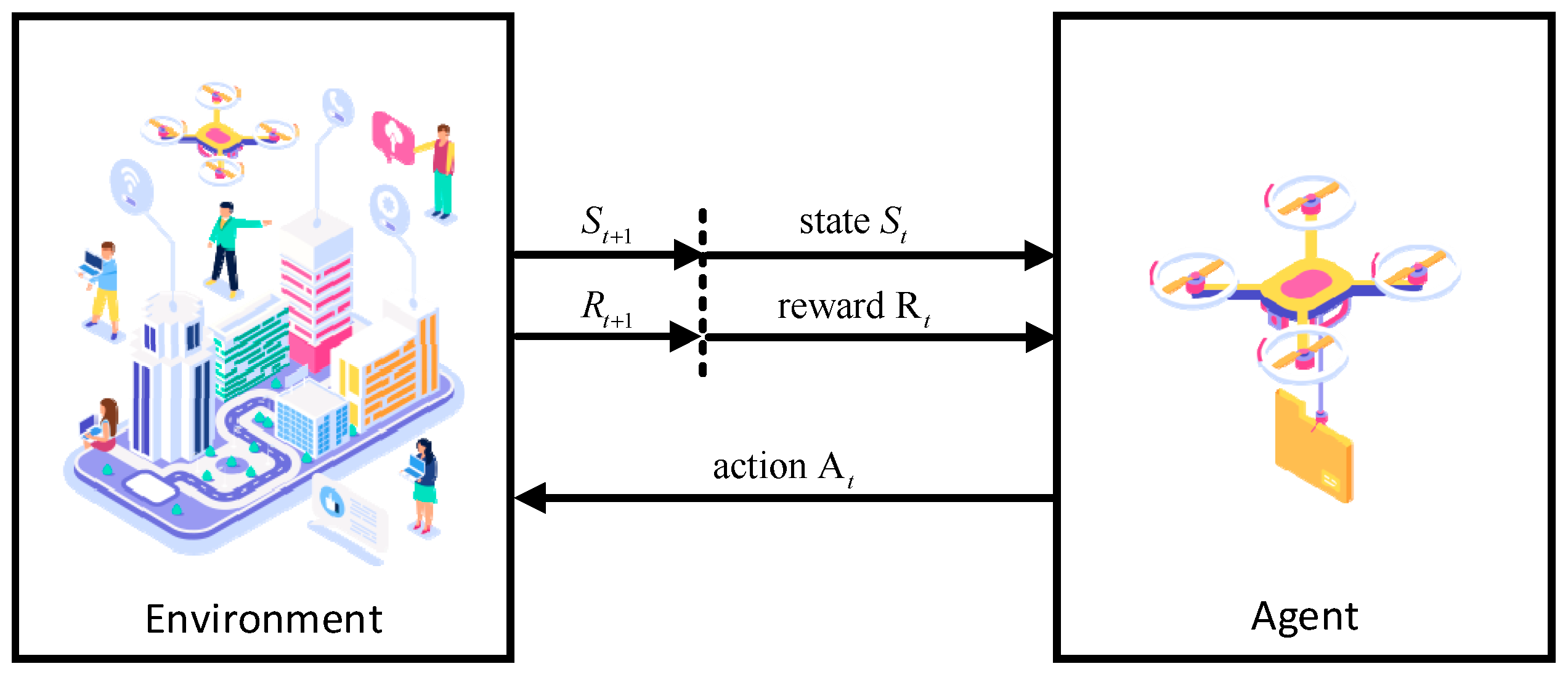

4.1. Markov Decision Process and Reinforcement Learning

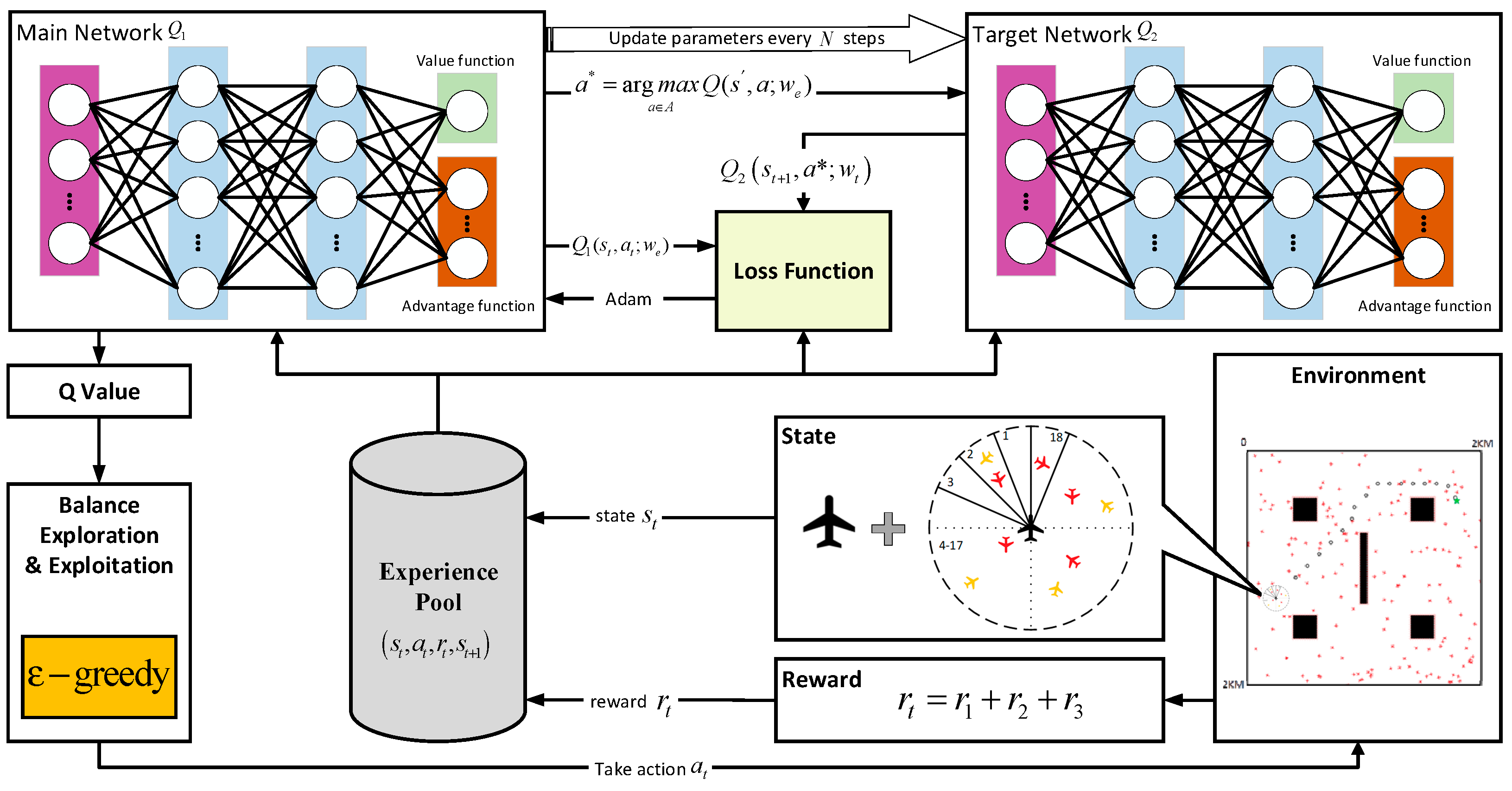

4.2. Introduction of the D3QN Algorithm

4.2.1. Deep Q-Networks

4.2.2. Double DQN

4.2.3. Dueling DQN

4.2.4. Dueling Double DQN

5. Method

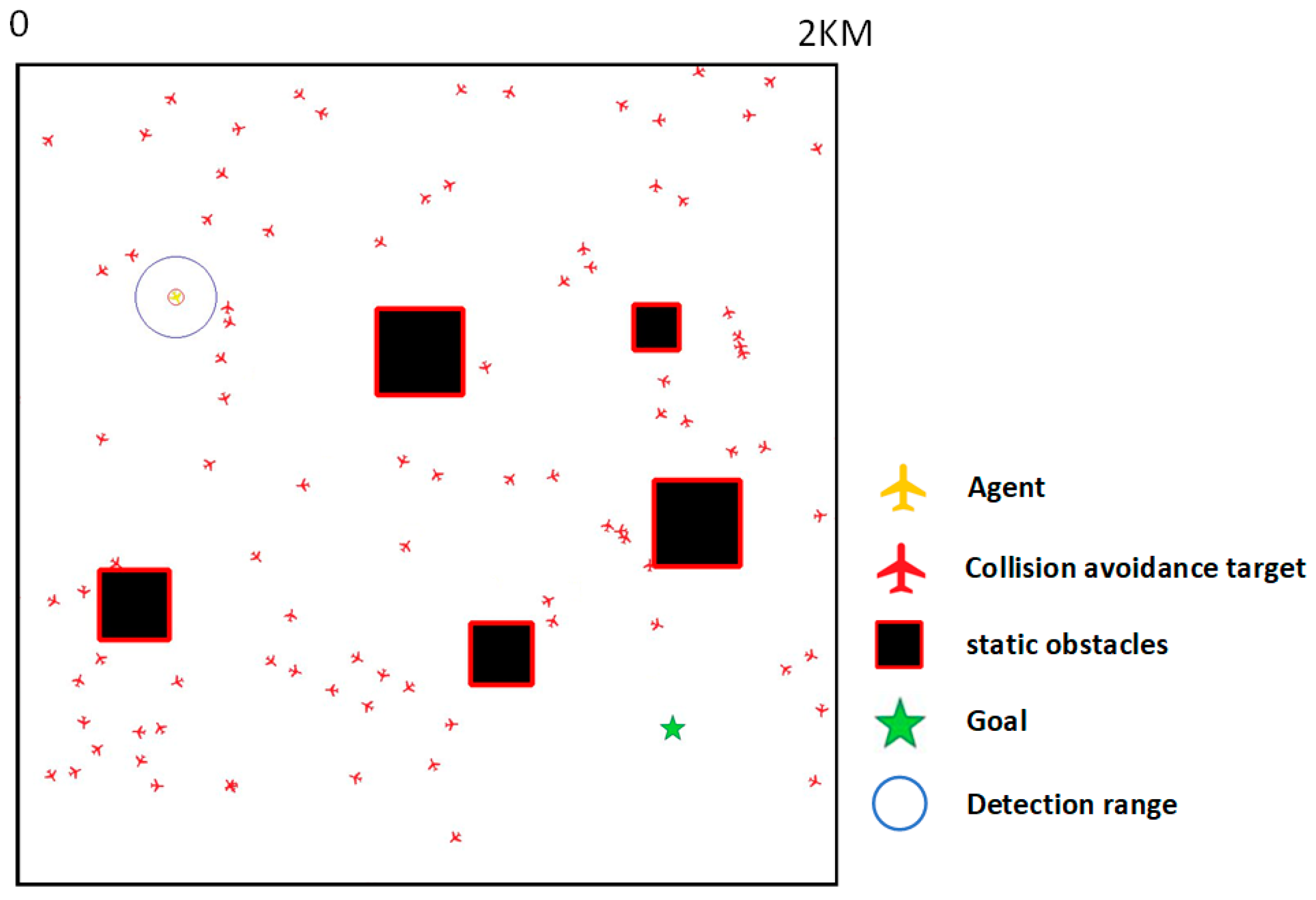

5.1. Environment Construction for the Problem

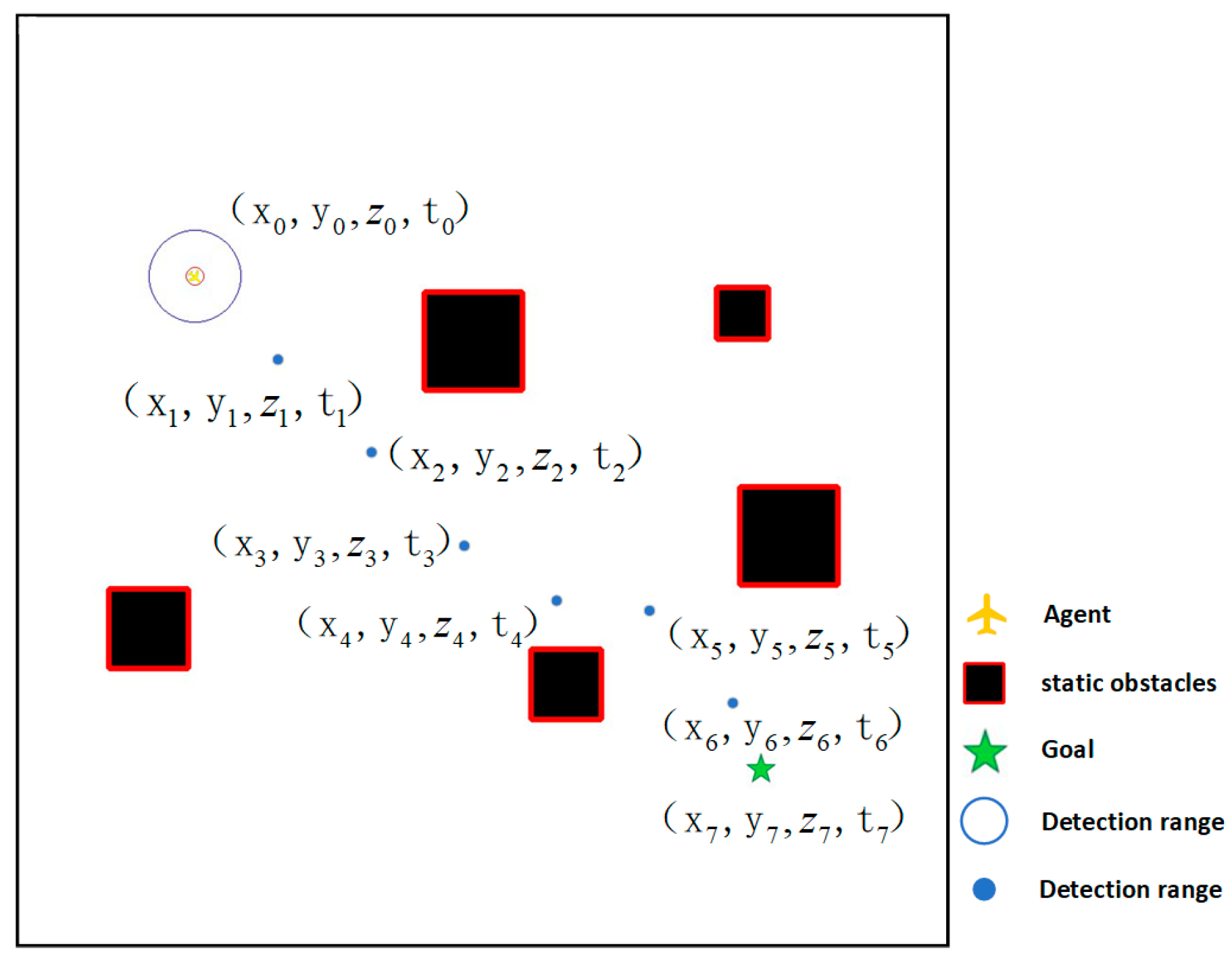

5.1.1. State Space
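One way to picture the risk-sector state described in the contributions: the airspace around the drone is split into equal angular sectors, and each sector stores the distance to its closest non-cooperative target, so a single vector carries both direction (the sector index) and range. The sketch below is an illustration under stated assumptions; the sector count, the sensing range, and the function name `sector_distances` are hypothetical and not taken from the paper.

```python
import math


def sector_distances(own_xy, heading_rad, targets_xy, n_sectors=8, max_range=1000.0):
    """Return, per angular sector, the distance to the closest target.

    Sector 0 is centered on the drone's heading; indices increase
    counter-clockwise. Sectors with no target in range hold max_range.
    """
    width = 2.0 * math.pi / n_sectors
    state = [max_range] * n_sectors
    for tx, ty in targets_xy:
        dx, dy = tx - own_xy[0], ty - own_xy[1]
        dist = math.hypot(dx, dy)
        if dist >= max_range:
            continue  # outside the assumed sensing range
        # Bearing relative to the heading, shifted by half a sector so that
        # sector 0 straddles the nose, then wrapped into [0, 2*pi).
        rel = (math.atan2(dy, dx) - heading_rad + width / 2.0) % (2.0 * math.pi)
        idx = int(rel // width) % n_sectors
        state[idx] = min(state[idx], dist)
    return state
```

Keeping only the closest target per sector gives a fixed-length state regardless of how many targets are nearby, which is convenient as input to a Q-network.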

5.1.2. Action Space

5.1.3. Reward Function

5.2. Algorithm

| Algorithm 1 Pseudocode of D3QN in this paper |
|---|
| 1 Create a training environment |
| 2 Initialize the network parameters and experience pool |
| 3 for episode = 1 to M do |
| 4 Initializing the Environment S |
| 5 for t = 1 to T do |
| 6 if random > ε then |
| 7 pick an action at random |
| 8 else |
| 9 action |
| 10 end |
| 11 execute the action |
| 12 get |
| 13 get |
| 14 get |
| 15 get |
| 16 |
| 17 |
| 18 store fragments in the experience pool |
| 19 if the current round is a training round then |
| 20 randomly extract fragments from the experience pool |
| 21 update the Q-value |
| 22 end |
| 23 if the current round is the updated target network round then |
| 24 copy the parameters of the current network to the target network |
| 25 end |
| 26 if is ended then |
| 27 break |
| 28 end |
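The three ingredients that Algorithm 1 relies on (dueling aggregation, the double DQN target, and exploratory action selection) can be sketched in plain Python using their standard textbook forms. The function names are hypothetical, and the sketch treats ε as the probability of exploring, which is the common convention and may differ from the comparison written in step 6 of the listing; it is not the authors' implementation.

```python
import random


def dueling_q(value, advantages):
    """Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean_a A(s, a)."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def double_dqn_target(reward, done, gamma, q_online_next, q_target_next):
    """Double DQN target: the online net picks the action, the target net scores it."""
    if done:
        return reward  # no bootstrapping from a terminal state
    best = max(range(len(q_online_next)), key=q_online_next.__getitem__)
    return reward + gamma * q_target_next[best]


def epsilon_greedy(q_values, epsilon, rng=random):
    """Explore with probability epsilon, otherwise take the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)
```

Decoupling action selection (online network) from action evaluation (target network) is what reduces the overestimation bias of plain DQN, while subtracting the mean advantage keeps the value and advantage streams identifiable.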

6. Simulation

6.1. Platform

6.1.1. Simulation Scene Setting

6.1.2. Reinforcement Learning Setting

6.2. Test 1: Comparison Analysis of Sector Improvement

6.2.1. Task Setting

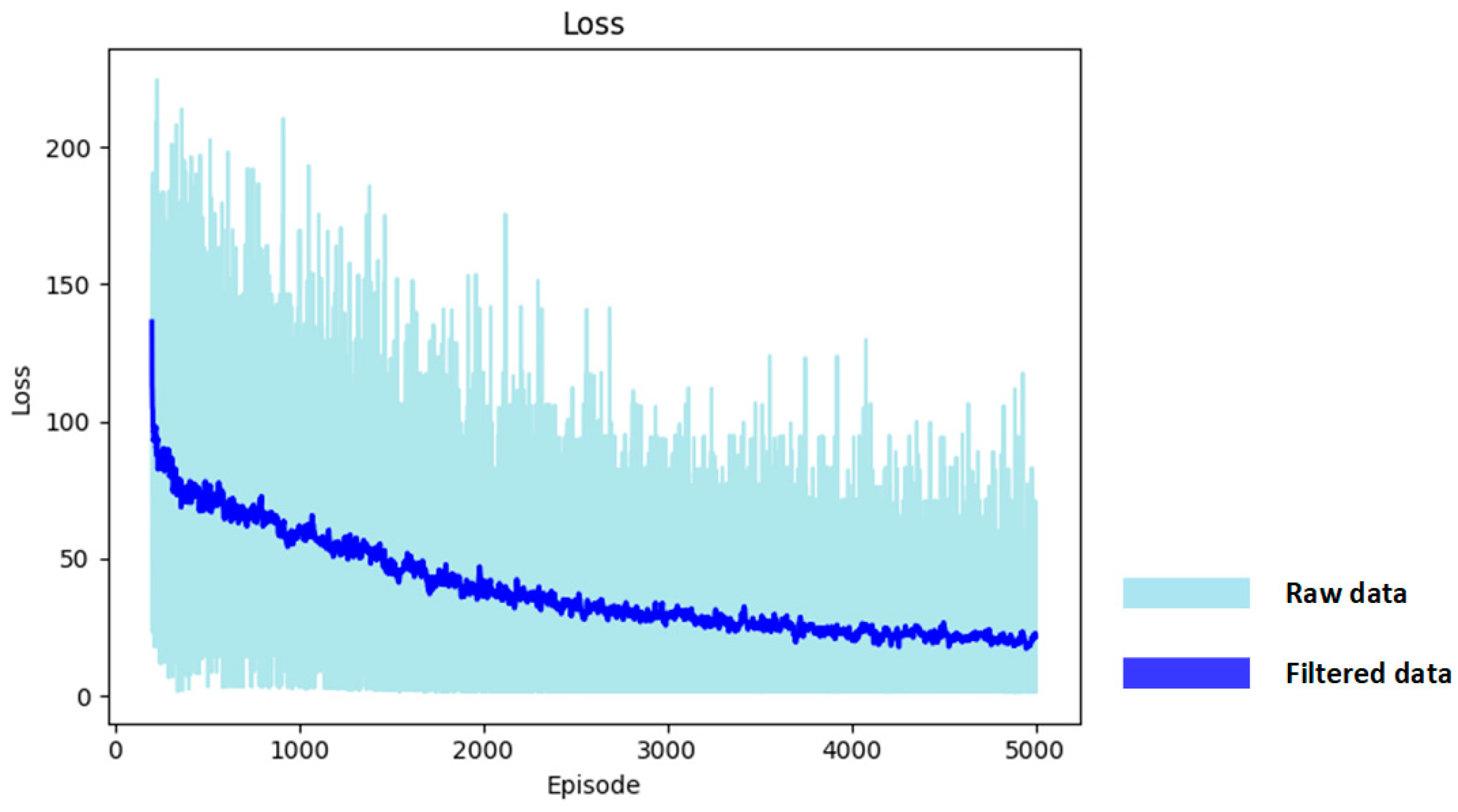

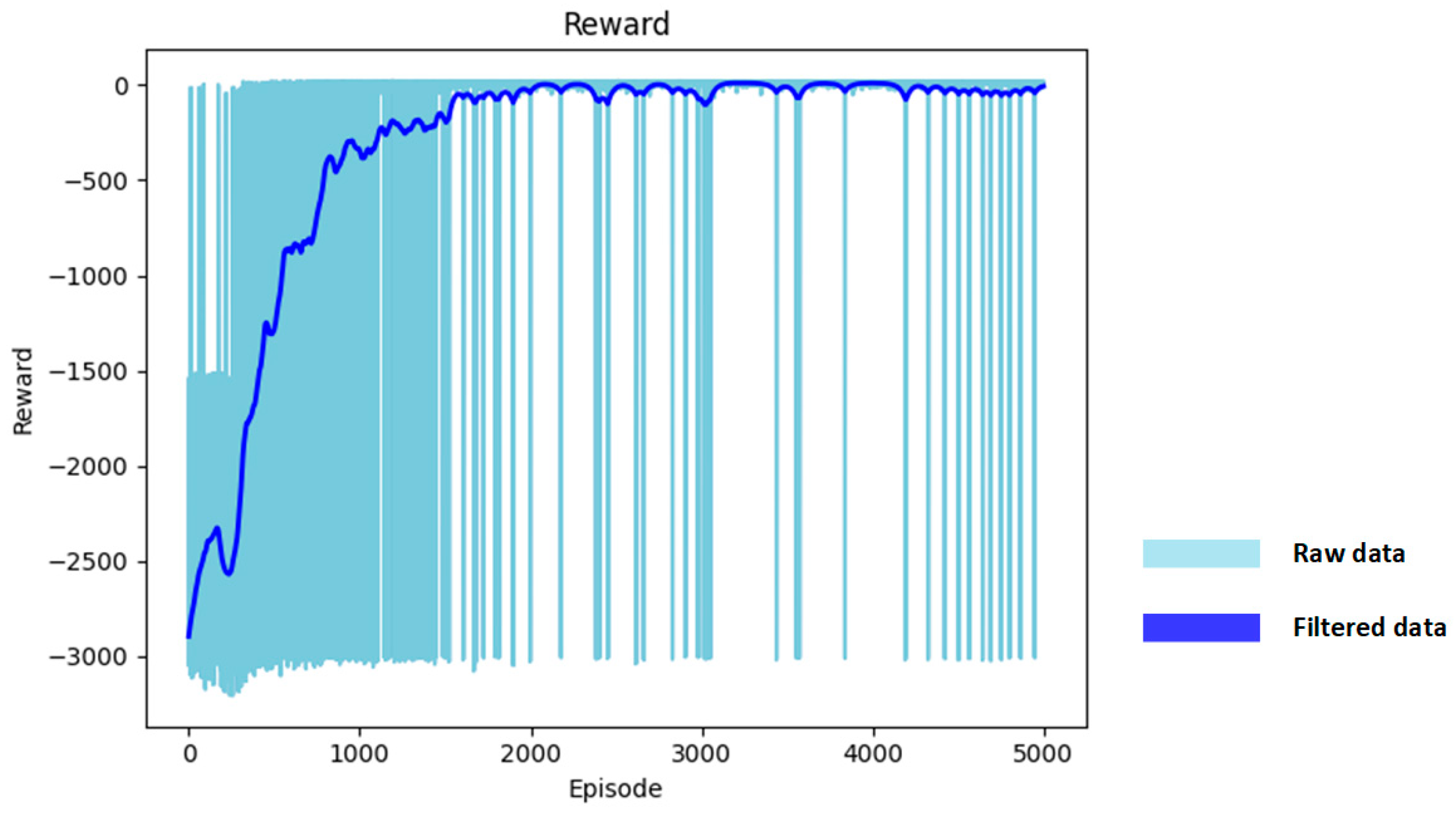

6.2.2. Simulation Results

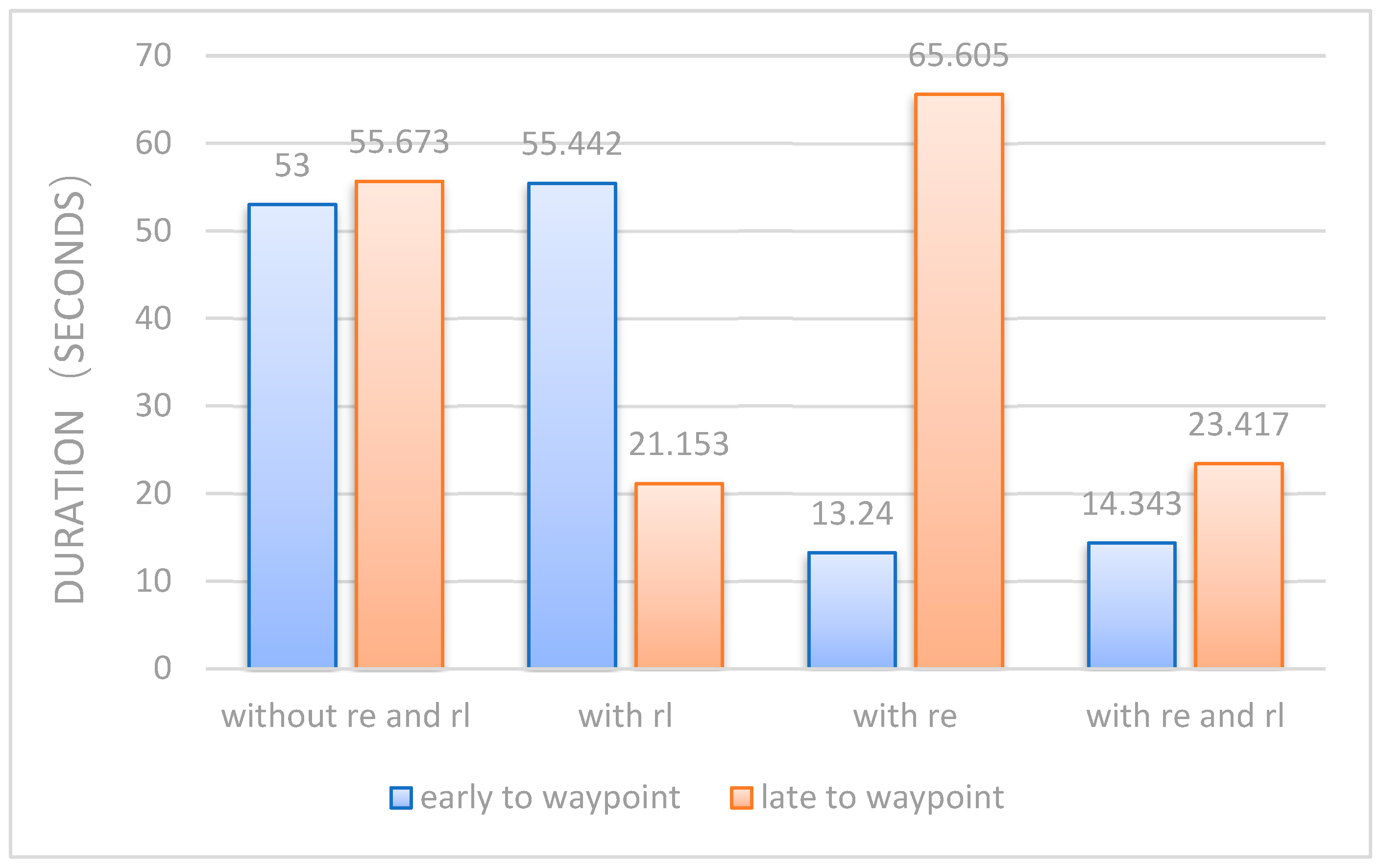

6.3. Test 2: Ablation Study of the ETA-Based Temporal Rewards

6.3.1. Task Setting

6.3.2. Simulation Results

6.4. Test 3: Exploring the Maximum Density in the Scenario

6.4.1. Task Setting

6.4.2. Simulation Results

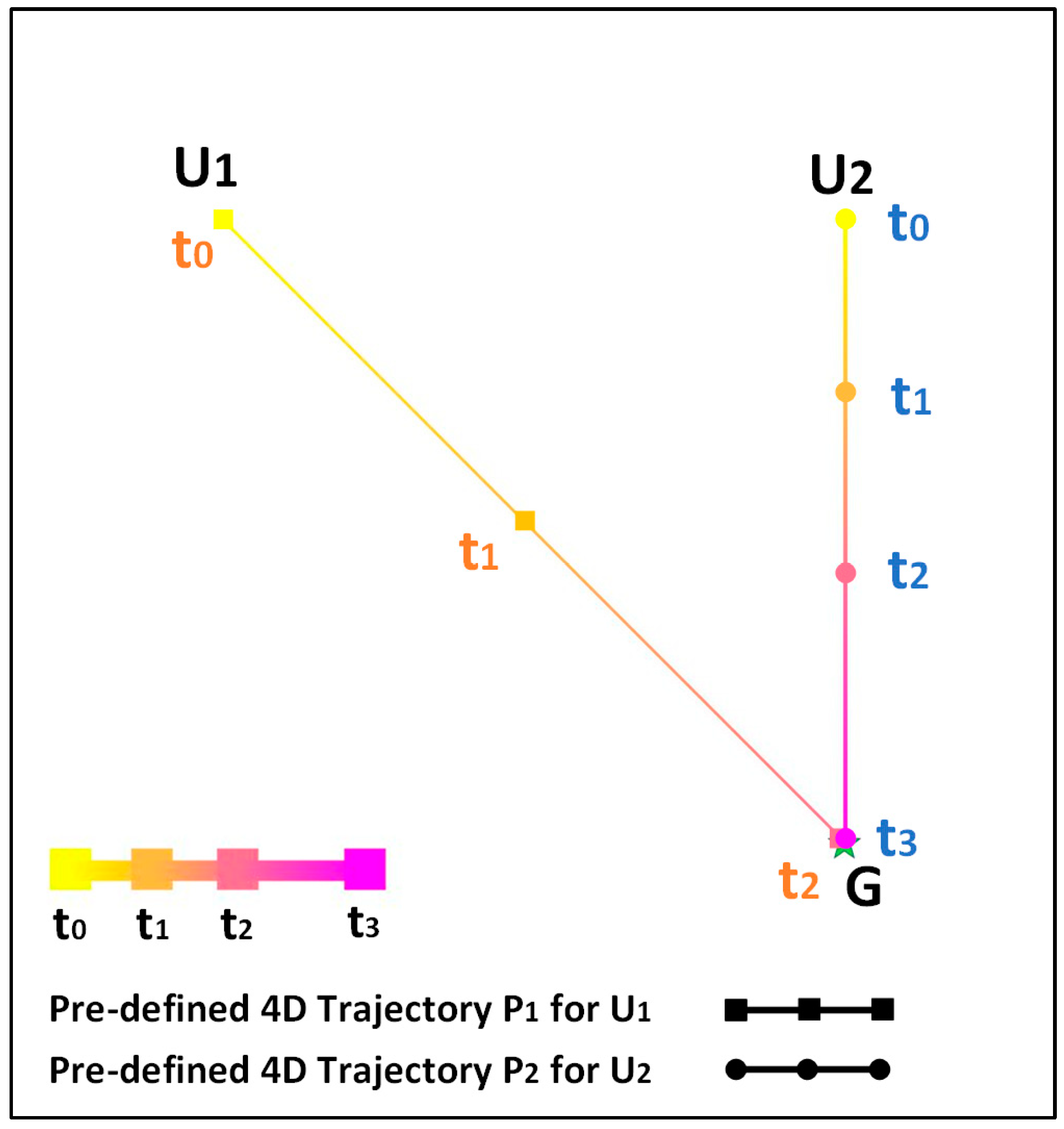

6.5. Test 4: Case Study

6.5.1. Task Setting

6.5.2. Simulation Results

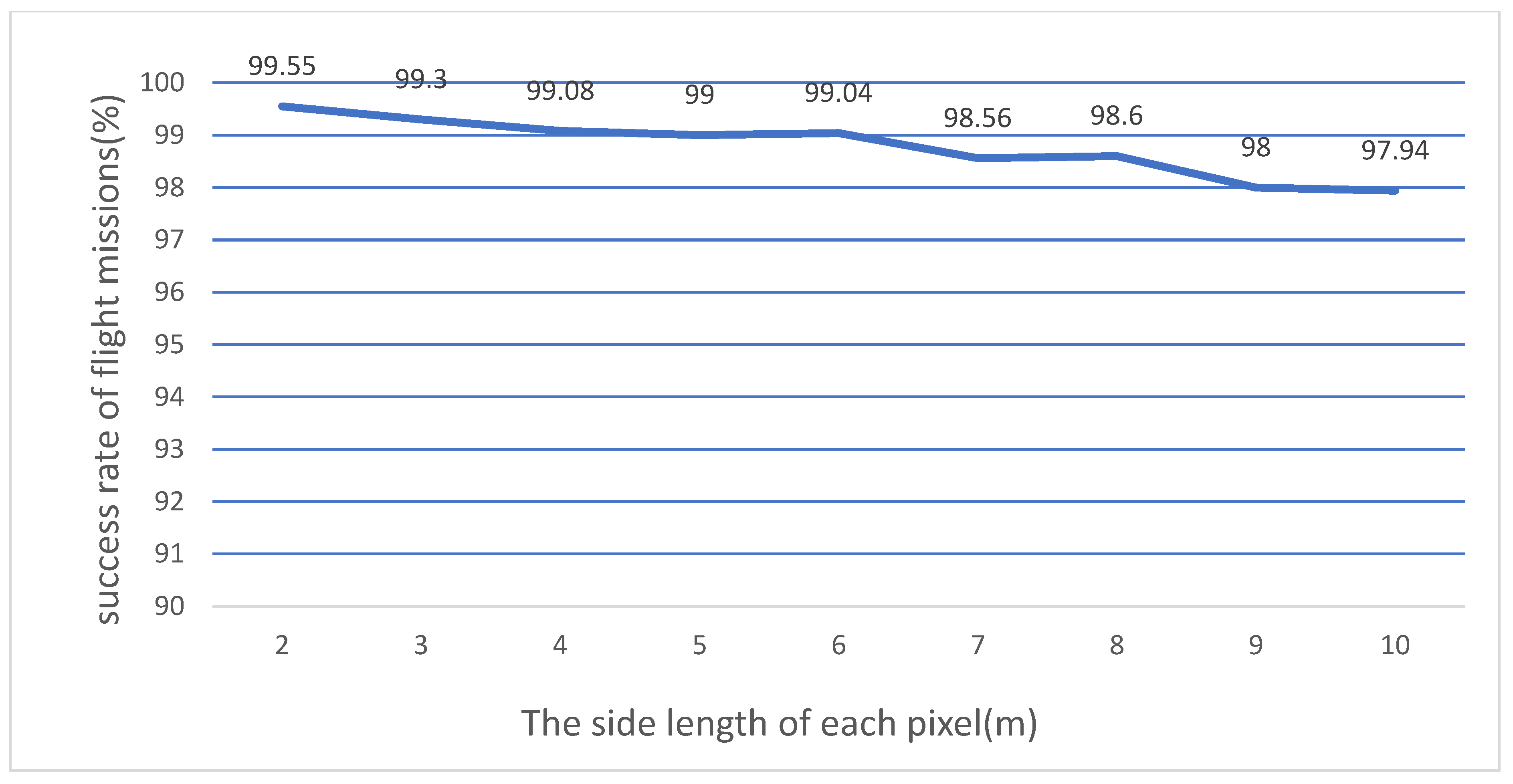

6.6. Test 5: Robustness to Uncertainty

6.6.1. Task Setting

6.6.2. Simulation Results

6.7. Test 6: Ablation Study

6.7.1. Task Setting

6.7.2. Simulation Results

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Symbols | Definition |
|---|---|
| ETA | Estimated Time of Arrival |
| ICAO | International Civil Aviation Organization |
| 4DT | 4D Trajectory |
| 4-PNV | 4D Trajectory Planning, Negotiation, and Verification |
| MCTS | Monte Carlo Tree Search |
| DQN | Deep Q Network |
| DDPG | Deep Deterministic Policy Gradient |
| USS | UAS Service Supplier |
| | the initial state of the drone |
| | the final state of the drone |
| | the hazard cost function |
| | the temporal difference cost function |
| | the estimated time of arrival of the drone at the next waypoint under the present situation |
| | the specific time of arrival |
| | the risk of the drone colliding with the static obstacle |
| | the static obstacle |
| | the risk of the drone colliding with the non-cooperative target |
| | the non-cooperative target |
| | the flight speed of the drone at the moment |
| | the acceleration of the drone at the moment |
| | mission reward |
| | the yaw angular velocity |
| | horizontal coordinates |
| | vertical coordinates |
| | the minimum distance between the drone and the nearest non-cooperative target |
| | the agent’s state space at time |
| | the status information of the drone itself |
| | 4D trajectory temporal information |
| | the threatening status information |
| | collision avoidance reward |
| | subitem of |
| | subitem of |
| | constant reward |
| | subitem of |
| | penalty on collision, subitem of |
| | constant reward |
| | collision threshold |
| | the slowest speed |
| | an ETA-based temporal reward |
| | the early arrival penalty |
| | the late arrival penalty |
| | the arrival time difference |
| | the weighted velocity that changes as the current state changes |
| | the normalized remaining time |
| | the normalized remaining distance |
| | the time window threshold |
| | the distance between the drone and any static obstacles |
| | the fastest speed |
| | the position of the closest non-cooperative target in -th sector |
| | subitem of |
| | subitem of |
| | the line-of-sight reward |
| | the destination distance reward |
| MDP | Markov Decision Process |
| | the distance between the drone and the next waypoint at time |
| | the reward coefficients |
| | the reward coefficients |
| | the yaw angle |


| −3 | 0 | 3 | ||

|---|---|---|---|---|

| 0 | ||||

| Parameter | Value |
|---|---|
| Learning rate | 0.00005 |
| Discount factor | 0.99 |
| buffer_size | 1,000,000 |
| batch_size | 256 |
| Multi-step update | 5 |
| Update delay of current network | 10 steps |
| Update delay of target network | Upon completion of each round |
| Total number of training rounds | 5000 |
| Loss function | MSE |
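The table lists a multi-step update of 5 together with a discount factor of 0.99. As a hedged illustration of what a multi-step update conventionally means, the sketch below forms an n-step bootstrapped return from a short reward sequence; the function name `n_step_return` and the assumption that the bootstrap value comes from the target network are illustrative, and the paper may implement the update differently.

```python
def n_step_return(rewards, bootstrap_value, gamma=0.99):
    """G = sum_k gamma^k * r_k + gamma^n * bootstrap_value, with n = len(rewards)."""
    g = bootstrap_value
    # Fold the rewards in from the last step back to the first.
    for r in reversed(rewards):
        g = r + gamma * g
    return g
```

With five rewards, the bootstrap value is discounted by 0.99 to the fifth power, so the learning signal leans more on observed rewards than a one-step target does.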

| | | | | |
|---|---|---|---|---|
| Success rate of flight missions (%) | 99.58 | 99.16 | 99.64 | 99.11 |
| Early to waypoint (s) | 53 | 55.442 | 13.24 | 14.343 |
| Late to waypoint (s) | 55.673 | 21.153 | 65.605 | 23.417 |
| On-time rate (%) with time window {−10 s, 10 s} | 3.15 | 1.20 | 6.38 | 16.34 |
| On-time rate (%) with time window {−15 s, 15 s} | 4.45 | 1.50 | 10.20 | 24.82 |
| On-time rate (%) with time window {−20 s, 20 s} | 5.35 | 1.86 | 14.54 | 38.16 |
| On-time rate (%) with time window {−25 s, 25 s} | 6.29 | 2.15 | 19.50 | 64.56 |
| On-time rate (%) with time window {−30 s, 30 s} | 7.35 | 2.33 | 25.09 | 84.55 |
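The on-time rates in the rows above depend on the chosen time window. A minimal sketch of how such a rate can be computed from signed arrival time differences follows; the function name `on_time_rate` and the sample data in the usage note are hypothetical and do not reproduce the reported results.

```python
def on_time_rate(arrival_diffs_s, window_s):
    """Percentage of flights whose signed arrival difference lies within +/- window_s."""
    if not arrival_diffs_s:
        return 0.0
    hits = sum(1 for d in arrival_diffs_s if abs(d) <= window_s)
    return 100.0 * hits / len(arrival_diffs_s)
```

For instance, with made-up differences of −5 s, 12 s, 28 s, and −31 s, a {−10 s, 10 s} window counts one flight in four as on time, while a {−30 s, 30 s} window counts three in four.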

| Average Magnitude of Error | Variance | Success Rate of Flight Missions (%) | Average Calculation Time (s) |
|---|---|---|---|
| 1 | 0.25 | 99.5 | 0.001003 |
| 5 | 0.25 | 99.34 | 0.001074 |
| 10 | 0.25 | 98.92 | 0.000998 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, C.; Gu, W.; Zheng, Y.; Huang, L.; Zhang, X. An ETA-Based Tactical Conflict Resolution Method for Air Logistics Transportation. Drones 2023, 7, 334. https://doi.org/10.3390/drones7050334
