Abstract

Recently, unmanned aerial vehicle (UAV) synthetic aperture radar (SAR) has become a highly sought-after topic owing to its wide applications in target recognition, detection, and tracking. However, SAR automatic target recognition (ATR) models based on deep neural networks (DNN) are vulnerable to adversarial examples. In practice, non-cooperators rarely disclose any SAR-ATR model information, which makes adversarial attacks challenging. To tackle this issue, we propose a novel attack method called Transferable Adversarial Network (TAN). It crafts highly transferable adversarial examples in real time and attacks SAR-ATR models without any prior knowledge, which is of great significance for real-world black-box attacks. The proposed method improves transferability via a two-player game in which we simultaneously train two encoder–decoder models: a generator that crafts malicious samples through a one-step forward mapping from original data, and an attenuator that weakens the effectiveness of malicious samples by capturing the most harmful deformations. In particular, unlike traditional iterative methods, the encoder–decoder model maps original samples to adversarial examples in a single forward pass, thus enabling real-time attacks. Experimental results indicate that our approach achieves state-of-the-art transferability with acceptable adversarial perturbations and the lowest time cost among the compared attack methods, making real-time black-box attacks without any prior knowledge a reality.

1. Introduction

The ongoing advances in unmanned aerial vehicle (UAV) and synthetic aperture radar (SAR) technologies have enabled the acquisition of high-resolution SAR images through UAVs. However, unlike visible light imaging, SAR images record the intensity with which imaging targets reflect radar signals, making it difficult for humans to extract effective semantic information from them without the aid of interpretation tools. Deep learning has achieved excellent performance in various scenarios [1,2,3], and SAR automatic target recognition (SAR-ATR) models based on deep neural networks (DNN) [4,5,6,7,8] have become one of the most popular interpretation methods. With their powerful representation capabilities, DNNs outperform traditional approaches in image classification tasks. However, recent studies have shown that DNN-based SAR-ATR models are susceptible to adversarial examples [9].

The concept of adversarial examples was first proposed by Szegedy et al. [10], who showed that a carefully designed tiny perturbation can cause a well-trained DNN model to misclassify. This finding has made adversarial attacks one of the most serious threats to artificial intelligence (AI) security. To date, researchers have proposed a variety of adversarial attack methods, which can be divided into two main categories according to the prior knowledge available: white-box and black-box attacks. In the first case, attackers can utilize a large amount of prior knowledge, such as the model structure and gradient information, to craft adversarial examples for victim models. Examples of white-box methods include gradient-based attacks [11,12], boundary-based attacks [13], and saliency map-based attacks [14]. In the second case, attackers can only access the output information or even less, making adversarial attacks much more difficult. Examples of black-box methods include probability label-based attacks [15,16] and decision-based attacks [17]. We now consider an extreme situation, where attackers have no access to any feedback from victim models. Existing white-box and black-box methods cannot craft adversarial examples in this setting; attacks only became possible once researchers discovered that adversarial examples can transfer among DNN models performing the same task [18]. Recent studies have focused on improving the basic FGSM [11] method to enhance the transferability of adversarial examples, including gradient-based methods [19,20], transformation-based methods [20,21], and variance-based methods [22]. However, the transferability and real-time performance of these approaches are still insufficient to meet realistic attack requirements, so adversarial attacks need further improvement.

With the wide application of DNNs in the field of remote sensing, researchers have begun to investigate adversarial examples for remote sensing images. Xu et al. [23] first investigated adversarial attack and defense in safety-critical remote sensing tasks, and proposed the mixup attack [24] to generate universal adversarial examples for remote sensing images. However, research on adversarial examples for SAR images is still in its infancy. Li et al. [25] generated abundant adversarial examples for CNN-based SAR image classifiers through the basic FGSM method and systematically evaluated critical factors affecting the attack performance. Du et al. [26] designed a Fast C&W algorithm that improves the efficiency of generating adversarial examples by introducing an encoder–decoder model. To enhance the universality and feasibility of adversarial perturbations, the work in [27] presented a universal local adversarial network to generate universal adversarial perturbations for the target region of SAR images. Furthermore, the latest research [28] has gone beyond the digital domain and implemented adversarial examples of SAR images in the signal domain by transmitting a two-dimensional jamming signal. Despite the high attack success rates achieved by the above methods, the problem of transferable adversarial examples in the field of SAR-ATR has yet to be addressed.

In this paper, a transferable adversarial network (TAN) is proposed to improve the transferability and real-time performance of adversarial examples in SAR images. Specifically, during the training phase of TAN, we simultaneously train two encoder–decoder models: a generator that crafts malicious samples through a one-step forward mapping from original data, and an attenuator that weakens the effectiveness of malicious samples by capturing the most harmful deformations. We argue that if the adversarial examples crafted by the generator are robust to the deformations produced by the attenuator, i.e., the attenuated adversarial examples remain effective against DNN models, then they are capable of transferring to other victim models. Moreover, unlike traditional iterative methods, our approach maps original samples to adversarial examples in a single step, thus enabling real-time attacks. In other words, we realize real-time transferable adversarial attacks through a two-player game between the generator and attenuator.

The main contributions of this paper are summarized as follows.

- (1) For the first time, this paper systematically evaluates the transferability of adversarial examples among DNN-based SAR-ATR models. Meanwhile, our research reveals that there may be potential common vulnerabilities among DNN models performing the same task.

- (2) We propose a novel network to enable real-time transferable adversarial attacks. Once the proposed network is well-trained, it can craft adversarial examples with high transferability in real time, thus attacking black-box victim models without resorting to any prior knowledge. As such, our approach possesses promising applications in AI security.

- (3) The proposed method is evaluated on the most authoritative SAR-ATR dataset. Experimental results indicate that our approach achieves state-of-the-art transferability with acceptable adversarial perturbations and minimum time costs compared to existing attack methods, making real-time black-box attacks without any prior knowledge a reality.

2. Preliminaries

2.1. Adversarial Attacks for DNN-Based SAR-ATR Models

Suppose x is a single-channel SAR image from the dataset and consider a DNN-based K-class SAR-ATR model. Given x as input, the model outputs a K-dimensional vector whose i-th entry denotes the score of x belonging to class i, and the predicted class of x is the class with the highest score. An adversarial attack aims to fool the model with an adversarial example that differs from x by only a minor perturbation. The detailed process can be expressed as follows:
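As a hedged reconstruction of this constraint in its standard form, with $f$ for the model's predicted class, $x^{adv}$ for the adversarial example, and $\xi$ for the perturbation bound (all three symbol names are assumptions), one may write:

```latex
f(x^{adv}) \neq f(x), \qquad \text{s.t.}\ \ \| x^{adv} - x \|_p \le \xi \tag{1}
```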

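where the $L_p$-norm of a vector $v$ is defined as $\|v\|_p = (\sum_i |v_i|^p)^{1/p}$, and a preset bound controls the magnitude of adversarial perturbations. Common choices include the $L_0$-norm, $L_2$-norm, and $L_\infty$-norm, and attackers can select the norm type according to practical requirements. For example, the $L_0$-norm counts the number of modified pixels, the $L_2$-norm measures the Euclidean distance between the original and adversarial images (the square root of their summed squared error, so it grows with the mean square error, MSE), and the $L_\infty$-norm denotes the maximum variation of any individual pixel.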
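To make the three norms concrete, the following minimal sketch computes them for a flattened perturbation in plain Python. The function name `lp_norm` and the toy pixel values are illustrative assumptions and do not come from the original experiments.

```python
import math


def lp_norm(delta, p):
    """Return the L_p norm of a flattened perturbation (a list of floats)."""
    if p == 0:
        # L_0: number of modified pixels.
        return float(sum(1 for d in delta if d != 0))
    if p == math.inf:
        # L_inf: largest change applied to any single pixel.
        return max((abs(d) for d in delta), default=0.0)
    # General case, e.g. p = 2 gives the Euclidean distance.
    return sum(abs(d) ** p for d in delta) ** (1.0 / p)


# Toy example: a four-pixel image and a perturbed copy (hypothetical values).
x = [0.2, 0.5, 0.7, 0.1]
x_adv = [0.2, 0.6, 0.4, 0.1]
delta = [a - b for a, b in zip(x_adv, x)]

for p in (0, 2, math.inf):
    print(p, lp_norm(delta, p))
```

Here two pixels change, so the $L_0$ value counts them, while the $L_\infty$ value is driven by the single largest change.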

Meanwhile, adversarial attacks can be mainly divided into two modes. The first basic mode is called the non-targeted attack, making DNN models misclassify. The second one is more stringent, called the targeted attack, which induces models to output specified results. There is no doubt that the latter poses a higher level of threat to AI security. In other words, the non-targeted attack is to minimize the probability of models correctly recognizing samples; conversely, the targeted attack maximizes the probability of models identifying samples as target classes. Thus, (1) can be transformed into the following optimization problems:

- For the non-targeted attack:

- For the targeted attack:

where the discriminant function equals one if its argument holds and zero otherwise, the two class labels are the true and target classes of the input, and N is the number of samples in the dataset. These optimization problems are exactly the opposite of a DNN's training objective, and the corresponding loss functions are given in Section 3.
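Written out under assumed notation, the two problems can take the following form. Here $D(\cdot)$ is the discriminant function, $f$ the model's predicted class, $y_n^{tr}$ and $y_n^{ta}$ the true and target classes of the $n$-th sample, and $\xi$ the perturbation bound; these symbol names are assumptions chosen to match the surrounding description rather than a verbatim copy of the original equations.

```latex
\min_{x^{adv}} \ \frac{1}{N} \sum_{n=1}^{N} D\big(f(x_n^{adv}) = y_n^{tr}\big),
\quad \text{s.t.}\ \ \|x_n^{adv} - x_n\|_p \le \xi \tag{2}

\max_{x^{adv}} \ \frac{1}{N} \sum_{n=1}^{N} D\big(f(x_n^{adv}) = y_n^{ta}\big),
\quad \text{s.t.}\ \ \|x_n^{adv} - x_n\|_p \le \xi \tag{3}
```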

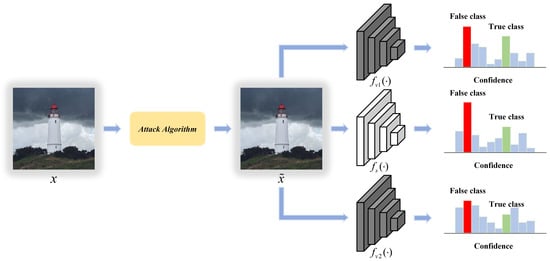

2.2. Transferability of Adversarial Examples

We consider an extreme situation where attackers have no access to any feedback from victim models, in which existing white-box and black-box attacks are unable to craft adversarial examples. In this case, attackers can utilize the transferability of adversarial examples to attack models. Specifically, the extensive experiments in [18] have demonstrated that adversarial examples can transfer among models, even if they have different architectures or are trained on different training sets, so long as they are trained to perform the same task. Details about the transferability are shown in Figure 1.

Figure 1. Transferability of adversarial examples.

As shown in Figure 1, suppose we have trained three recognition models for an image classification task, of which only the surrogate model is a white-box model while the two victim models are black-box models. Given a sample x, attackers can craft an adversarial example that fools the surrogate model through existing attack algorithms. Owing to the transferability of adversarial examples, the same adversarial example can also fool the two victim models. However, the transferability achieved by existing algorithms is weak, so this paper is dedicated to crafting highly transferable adversarial examples.

3. The Proposed Transferable Adversarial Network (TAN)

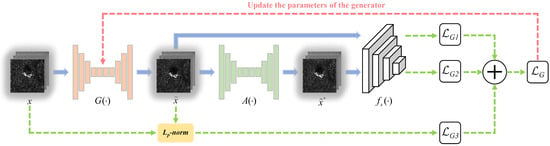

The proposed Transferable Adversarial Network (TAN) utilizes the encoder–decoder model and data augmentation technology to improve the transferability and real-time performance of adversarial examples. The framework of our network is shown in Figure 2. Unlike traditional iterative methods, TAN introduces a generator that learns the one-step forward mapping from the clean sample x to the adversarial example, thus enabling real-time attacks. Meanwhile, to improve the transferability of the adversarial example, we simultaneously train an attenuator to capture the most harmful deformations, which are supposed to weaken the effectiveness of the adversarial example while still preserving the semantic meaning of x. We argue that if the adversarial example is robust to the deformations produced by the attenuator, i.e., the attenuated adversarial example remains effective against DNN models, then it is capable of transferring to the black-box victim model. In other words, we achieve real-time transferable adversarial attacks through a two-player game between the generator and the attenuator. This section introduces our method in detail.

Figure 2. Framework of TAN.

3.1. Training Process of the Generator

For easy understanding, Figure 3 shows the detailed training process of the generator. Note that a white-box model is selected as the surrogate model during the training phase.

Figure 3. Training process of the generator.

As we can see, given a clean sample x, the generator crafts the adversarial example through a one-step forward mapping, as follows:

Meanwhile, the attenuator takes the adversarial example as input and outputs the attenuated adversarial example:

Since the adversarial example has to fool the surrogate model with a minor perturbation, and the attenuated adversarial example needs to remain effective against the surrogate model, the generator loss consists of three parts. Next, we give the generator loss for non-targeted and targeted attacks, respectively.

For the non-targeted attack: First, according to (2), the generator is to minimize the classification accuracy of the surrogate model, which means that it has to decrease the confidence of the adversarial example being recognized as the true class, i.e., to increase the confidence of it being identified as other classes. Thus, the first part of the generator loss can be expressed as:

Second, to improve the transferability of the adversarial example, we expect that the attenuated adversarial example remains effective against the surrogate model, so the second part of the generator loss can be derived as:

Finally, the last part of the generator loss is used to limit the perturbation magnitude. We introduce the traditional $L_p$-norm to measure the degree of image distortion as follows:

In summary, we apply the linear weighted sum method to balance the three parts. As such, the complete generator loss for the non-targeted attack can be represented as:

where the three weight coefficients correspond to the three loss parts, respectively, and represent their relative importance during training. A larger weight makes the optimizer prioritize the corresponding loss, so that it decreases more rapidly and significantly, which allows attackers to adjust the weights flexibly according to their actual needs.
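The weighted three-part structure described above can be sketched in plain Python. The concrete form of each term below (negative log-probabilities on softmax scores and an $L_2$ distance) is an assumption made for illustration, as are the names `generator_loss`, `softmax`, and the `targeted` flag; only the three-part weighted sum itself is taken from the text.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def generator_loss(scores_adv, scores_att_adv, x, x_adv, label, weights,
                   targeted=False):
    """Weighted sum of three generator loss terms (illustrative forms).

    scores_adv      surrogate scores on the adversarial example
    scores_att_adv  surrogate scores on the attenuated adversarial example
    label           true class (non-targeted) or target class (targeted)
    weights         three weight coefficients, one per loss term
    """
    eps = 1e-12  # guards against log(0)
    p_adv = softmax(scores_adv)[label]
    p_att = softmax(scores_att_adv)[label]
    if targeted:
        # Raise the confidence of the target class.
        term_adv = -math.log(p_adv + eps)
        term_att = -math.log(p_att + eps)
    else:
        # Lower the confidence of the true class.
        term_adv = -math.log(1.0 - p_adv + eps)
        term_att = -math.log(1.0 - p_att + eps)
    # Limit the perturbation magnitude (L2 distance, assumed here).
    term_norm = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_adv, x)))
    w1, w2, w3 = weights
    return w1 * term_adv + w2 * term_att + w3 * term_norm
```

Setting `targeted=True` switches the first two terms from suppressing the true class to promoting a chosen class, while the norm term is shared by both modes.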

For the targeted attack: According to (3), the generator is to maximize the probability of the adversarial example being recognized as the target class, i.e., to increase the confidence of the target class. Thus, the first part of the generator loss can be expressed as:

To maintain the effectiveness of the attenuated adversarial example against the surrogate model, the second part of the generator loss is derived as:

The perturbation magnitude is still limited by the norm term shown in (8). Therefore, the complete generator loss for the targeted attack can be represented as:

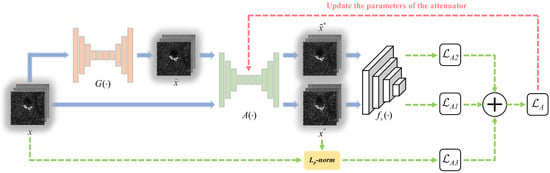

3.2. Training Process of the Attenuator

According to Figure 2, during the training phase of TAN, an attenuator is introduced to weaken the effectiveness of the adversarial example while still preserving the semantic meaning of x. The detailed training process of the attenuator is shown in Figure 4.

Figure 4. Training process of the attenuator.

As we can see, the attenuator loss also consists of three parts. First, to preserve the semantic meaning of x, the surrogate model has to keep a basic classification accuracy on the following attenuated sample:

This means that the first part of the attenuator loss should increase the confidence of the attenuated sample being recognized as the true class, as follows:

Meanwhile, to weaken the effectiveness of the adversarial example, the attenuator also needs to improve the confidence of the attenuated adversarial example being identified as the true class, so the second part of the attenuator loss can be expressed as:

Finally, to avoid excessive image distortion caused by the attenuator, the third part of the attenuator loss is used to limit the deformation magnitude, which can be expressed by the traditional $L_p$-norm, as follows:

As with the generator loss, we utilize the linear weighted sum method to derive the complete attenuator loss as follows:

where the three weight coefficients correspond to the three parts of the attenuator loss, respectively.
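A matching sketch for the attenuator is given below. As before, the log-softmax form of the first two terms, the $L_2$ distance in the third, and the names `attenuator_loss` and `log_confidence` are assumptions for illustration; the text only fixes that there are three parts combined by a weighted sum.

```python
import math


def log_confidence(scores, label):
    """Log-softmax confidence of `label`, computed stably."""
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return scores[label] - log_sum


def attenuator_loss(scores_att, scores_att_adv, x, x_att, true_label, weights):
    """Weighted sum of three attenuator loss terms (illustrative forms).

    scores_att      surrogate scores on the attenuated clean sample
    scores_att_adv  surrogate scores on the attenuated adversarial example
    x, x_att        clean sample and its attenuated version (flattened)
    """
    # Part 1: keep the attenuated clean sample recognizable as the true class.
    term_clean = -log_confidence(scores_att, true_label)
    # Part 2: pull the attenuated adversarial example back to the true class.
    term_adv = -log_confidence(scores_att_adv, true_label)
    # Part 3: limit the deformation introduced by the attenuator.
    term_norm = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_att, x)))
    w1, w2, w3 = weights
    return w1 * term_clean + w2 * term_adv + w3 * term_norm
```

Note that the second term pulls in the opposite direction to the generator's objective, which is what makes the training a two-player game.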

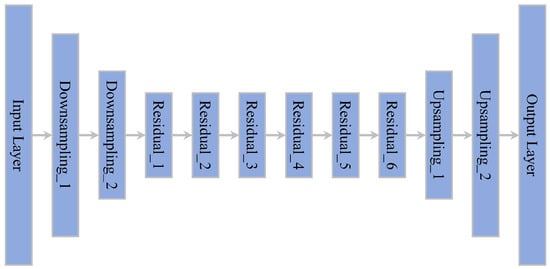

3.3. Network Structure of the Generator and Attenuator

According to Section 3.1 and Section 3.2, the generator and attenuator are essentially two encoder–decoder models, so the choice of a suitable model structure is necessary. We mainly consider two factors. First, as the size of original samples and adversarial examples should be the same, the model has to keep the input and output sizes identical. Second, to prevent our network from overfitting while saving computational resources, a lightweight model will be a better choice. In summary, we applied ResNet Generator proposed in [29] as the encoder–decoder model of TAN. The structure of ResNet Generator is shown in Figure 5.

Figure 5. Structure of ResNet Generator.

As we can see, ResNet Generator mainly consists of downsampling, residual, and upsampling modules. For a visual understanding, the input and output sizes of each module for a given input are listed in Table 1.

Table 1. Input–output relationships for each module of ResNet Generator.

As Table 1 shows, the input and output sizes of ResNet Generator are the same. Meanwhile, to ensure the validity of the generated data, we added a bounding function after the output module, which restricts the generated data to a valid interval. The total number of parameters in ResNet Generator is small, which makes it a fairly lightweight network. For more details, please refer to the literature [29].
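The size bookkeeping behind the downsampling and upsampling modules can be checked with a few lines of arithmetic. The sketch below uses the standard output-size formulas for convolution and transposed convolution; the specific kernel sizes, strides, and paddings, as well as the input size of 128, are assumptions typical of ResNet-style generators and are not taken from Table 1.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel, stride=1, padding=0, output_padding=0):
    """Spatial output size of a transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def resnet_generator_sizes(size):
    """Trace the feature-map size through an assumed layer layout."""
    trace = [("input", size)]
    size = conv_out(size, kernel=7, stride=1, padding=3)
    trace.append(("input conv 7x7", size))
    for i in range(2):  # two stride-2 downsampling convolutions
        size = conv_out(size, kernel=3, stride=2, padding=1)
        trace.append((f"downsample {i + 1}", size))
    size = conv_out(size, kernel=3, stride=1, padding=1)
    trace.append(("residual blocks", size))  # size-preserving
    for i in range(2):  # two stride-2 transposed convolutions
        size = deconv_out(size, kernel=3, stride=2, padding=1, output_padding=1)
        trace.append((f"upsample {i + 1}", size))
    size = conv_out(size, kernel=7, stride=1, padding=3)
    trace.append(("output conv 7x7", size))
    return trace


for name, s in resnet_generator_sizes(128):
    print(f"{name:>16}: {s} x {s}")
```

Under these assumed settings each downsampling step halves the spatial size and each upsampling step doubles it, so the output matches the input.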

3.4. Complete Training Process of TAN

As described earlier, TAN improves the transferability of adversarial examples through a two-player game between the generator and attenuator, which is quite similar to the working principle of generative adversarial networks (GAN) [30]. Therefore, we also adopt an alternating training scheme to train our network. Specifically, given the dataset and batch size S, we first randomly divide the dataset into M batches at the beginning of each training iteration. Second, we set a training ratio R, which means that TAN trains the generator R times and then trains the attenuator once, i.e., the generator is trained on every batch and the attenuator only once every R batches. In this way, we prevent the attenuator from becoming so strong that the generator cannot be optimized. Meanwhile, to shorten training time, we set an early stop condition so that training can end early when certain indicators meet the condition. Note that the generator and attenuator are trained alternately, i.e., the attenuator's parameters are fixed when the generator is trained, and vice versa. More details of the complete training process for TAN are shown in Algorithm 1.

Algorithm 1: Transferable Adversarial Network Training
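The alternating schedule described in this section can be sketched as follows. This is a minimal illustration of the batch-level control flow only, not a reproduction of Algorithm 1: the callbacks `train_generator`, `train_attenuator`, and `should_stop` are hypothetical placeholders for the actual optimization steps and the early stop check.

```python
import random


def alternating_schedule(num_samples, batch_size, ratio, epochs,
                         train_generator, train_attenuator,
                         should_stop=None, seed=0):
    """Run the generator on every batch and the attenuator every `ratio` batches.

    Returns the number of generator and attenuator updates performed.
    """
    rng = random.Random(seed)
    indices = list(range(num_samples))
    g_steps = a_steps = 0
    for epoch in range(epochs):
        # Randomly re-divide the dataset into batches each iteration.
        rng.shuffle(indices)
        batches = [indices[i:i + batch_size]
                   for i in range(0, num_samples, batch_size)]
        for step, batch in enumerate(batches, start=1):
            train_generator(batch)       # attenuator parameters stay fixed
            g_steps += 1
            if step % ratio == 0:
                train_attenuator(batch)  # generator parameters stay fixed
                a_steps += 1
        # Optional early stop, evaluated once per epoch (an assumption).
        if should_stop is not None and should_stop(epoch):
            break
    return g_steps, a_steps
```

With a ratio of 3, the generator receives three updates for every attenuator update, which keeps the attenuator from overpowering the generator.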

4. Experiments

4.1. Data Descriptions

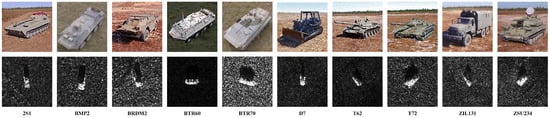

To date, there is no publicly available dataset for UAV SAR-ATR, so this paper experiments on the most authoritative SAR-ATR dataset, i.e., the moving and stationary target acquisition and recognition (MSTAR) dataset [31]. MSTAR was collected by a high-resolution spotlight SAR and published by the U.S. Defense Advanced Research Projects Agency (DARPA) in 1996; it contains SAR images of Soviet military vehicle targets at different azimuth and depression angles. In standard operating conditions (SOC), MSTAR includes ten classes of targets: self-propelled howitzers (2S1); infantry fighting vehicles (BMP2); armored reconnaissance vehicles (BRDM2); wheeled armored transport vehicles (BTR60, BTR70); bulldozers (D7); main battle tanks (T62, T72); cargo trucks (ZIL131); and self-propelled artillery (ZSU234). The training dataset contains 2747 images collected at a depression angle of 17°, and the testing dataset contains 2426 images captured at a depression angle of 15°. More details about the dataset are given in Table 2, and Figure 6 shows the optical images and corresponding SAR images of each class.

Table 2. Details of the MSTAR dataset under SOC, including target class, serial, depression angle, and sample numbers.

Figure 6. Optical images (top) and SAR images (bottom) of the MSTAR dataset.

4.2. Implementation Details

The proposed method is evaluated on the following six common DNN models: DenseNet121 [32], GoogLeNet [33], InceptionV3 [34], Mobilenet [35], ResNet50 [36], and Shufflenet [37]. In terms of data preprocessing, we resized all the images in MSTAR to a common size and uniformly sampled a portion of the training data to form the validation dataset. During the training phase of recognition models, the training epoch and batch size were set to 100 and 32, respectively. During the training phase of TAN, to minimize the MSE between adversarial examples and original samples, we adopted the $L_2$-norm to evaluate the image distortion caused by adversarial perturbations. Meanwhile, for better attack performance, the hyperparameters of TAN were tuned through numerous experiments, and the set of parameters that best met our requirements was retained. Specifically, in addition to fixed generator and attenuator loss weights, we set the training ratio to 3, the training epoch to 50, and the batch size to 8. Due to the adversarial process involved in TAN, training can be difficult to converge. As such, we employed Adam [38], a computationally efficient optimizer that also performs well on non-stationary objectives and sparse gradients, with a fixed learning rate to accelerate model convergence. When evaluating the transferability, we first crafted adversarial examples for each surrogate model and then assessed the transferability by testing the recognition results of victim models on the corresponding adversarial examples. Detailed experiments are given later.

Furthermore, the following six attack algorithms from the Torchattacks [39] toolbox were introduced as baseline methods for comparison with TAN: MIFGSM [19], DIFGSM [21], NIFGSM [20], SINIFGSM [20], VMIFGSM [22], and VNIFGSM [22]. All code was written in PyTorch, and the experimental environment consisted of Windows 10 with an NVIDIA GeForce RTX 2080 Ti GPU and an Intel Core i9-9900K CPU.

4.3. Evaluation Metrics

We mainly consider two factors to comprehensively evaluate the performance of adversarial attacks: effectiveness and stealthiness, which are directly related to the classification accuracy of victim models on adversarial examples and the norm value of adversarial perturbations, respectively. For the accuracy metric, the formula is as follows:

where the two class labels are the true and target classes of the input data, K is the number of target classes, and the remaining symbol is a discriminant function. In the non-targeted attack, the accuracy metric reflects the probability that the victim model identifies the adversarial example as the true class, while in the targeted attack it indicates the probability that the victim model recognizes the adversarial example as the target class. Thus, in the non-targeted attack, the lower the metric, the better the attack; in the targeted attack, a higher metric means that the victim model is more likely to recognize the adversarial example as the target class, so the attack is more effective. In short, the effectiveness of non-targeted attacks decreases as the metric increases, whereas the effectiveness of targeted attacks increases with it. Additionally, three similar indicators represent the classification accuracy of the model on the original sample, the attenuated sample, and the attenuated adversarial example, respectively. Note that, for both non-targeted and targeted attacks, the accuracy on the attenuated sample always represents the accuracy with which the model identifies it as the true class, while the other three accuracy indicators are calculated via (18) according to the attack mode. In particular, the accuracy on the attenuated adversarial example indirectly reflects the strength of the transferability possessed by the adversarial example.
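The two readings of the accuracy metric can be illustrated with a short sketch. It assumes that, in the targeted case, the metric is averaged over every target class, which is one plausible reading of "K is the number of target classes"; the function names and the dictionary layout are hypothetical.

```python
def accuracy(predictions, references):
    """Fraction of predictions that match their reference labels."""
    if not predictions:
        raise ValueError("predictions must not be empty")
    hits = sum(1 for p, r in zip(predictions, references) if p == r)
    return hits / len(predictions)


def non_targeted_accuracy(predictions, true_labels):
    """Probability that adversarial examples are still recognized correctly.

    Lower values indicate a more effective non-targeted attack.
    """
    return accuracy(predictions, true_labels)


def targeted_accuracy(predictions_by_target):
    """Probability that adversarial examples are recognized as their target.

    `predictions_by_target` maps each target class to the victim model's
    predictions on adversarial examples crafted toward that class.
    Higher values indicate a more effective targeted attack.
    """
    rates = [accuracy(preds, [target] * len(preds))
             for target, preds in predictions_by_target.items()]
    return sum(rates) / len(rates)
```

The same counting routine serves both modes; only the reference label (true class or target class) and the desired direction of the metric change.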

Meanwhile, we applied the following norm values to measure the attack stealthiness:

where the two values represent the image distortion caused by the generator and attenuator, respectively. In our experiments, the norm defaults to the $L_2$-norm. In summary, we can set the early stop condition mentioned in Section 3.4 with the above indicators, as follows:
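As a rough sketch of how such indicators could drive an early stop, the snippet below averages the $L_2$ distortion over a set of samples and combines it with an accuracy value. The thresholds `acc_threshold` and `norm_threshold`, and the exact shape of the stopping rule, are assumptions for illustration rather than the condition used in the experiments.

```python
import math


def mean_l2_distortion(originals, perturbed):
    """Average L2 distance between paired flattened images."""
    total = 0.0
    for x, x_p in zip(originals, perturbed):
        total += math.sqrt(sum((a - b) ** 2 for a, b in zip(x_p, x)))
    return total / len(originals)


def should_stop_early(acc, distortion, acc_threshold, norm_threshold,
                      targeted=False):
    """Stop once the attack is effective enough and stealthy enough.

    Non-targeted: accuracy must fall to or below the threshold.
    Targeted:     accuracy must rise to or above the threshold.
    """
    effective = acc >= acc_threshold if targeted else acc <= acc_threshold
    return effective and distortion <= norm_threshold
```

A check of this kind would be evaluated on validation data after each training epoch.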

Furthermore, to evaluate the real-time performance of adversarial attacks, we introduced a time-cost metric that denotes the time needed to generate a single adversarial example, as follows:

where the total time consumed to generate N adversarial examples is divided by N.
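A minimal way to measure this per-example time cost is sketched below. The helper name `time_per_example` and the injectable `clock` argument are assumptions added for illustration; `attack` stands for any callable that maps one sample to one adversarial example.

```python
import time


def time_per_example(attack, samples, clock=time.perf_counter):
    """Average wall-clock time, in seconds, to craft one adversarial example."""
    start = clock()
    for x in samples:
        attack(x)
    total = clock() - start
    return total / len(samples)


# Usage with a placeholder "attack" that just copies its input.
cost = time_per_example(lambda x: list(x), [[0.0] * 16 for _ in range(100)])
print(f"{cost * 1e3:.4f} ms per example")
```

When the attack runs on a GPU, the device should be synchronized before reading the clock, otherwise asynchronous kernels make the measurement too optimistic.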

4.4. DNN-Based SAR-ATR Models

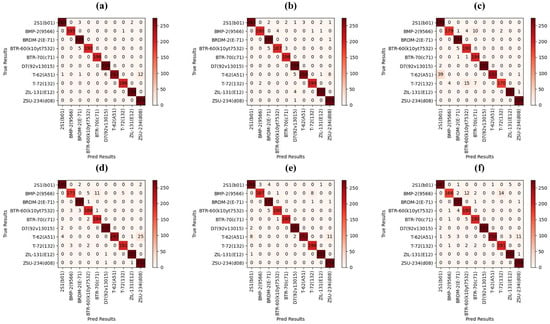

A well-trained recognition model is a prerequisite for effective adversarial attacks, so we have trained six SAR-ATR models on the MSTAR dataset: DenseNet121, GoogLeNet, InceptionV3, Mobilenet, ResNet50, and Shufflenet. All of them achieve outstanding recognition performance on the testing dataset. In addition, we show the confusion matrix of each model in Figure 7.

Figure 7. Confusion matrices of DNN-based SAR-ATR models on the MSTAR dataset. (a) DenseNet121. (b) GoogLeNet. (c) InceptionV3. (d) Mobilenet. (e) ResNet50. (f) Shufflenet.

4.5. Comparison of Attack Performance

In this section, we first evaluated the attack performance of the proposed method against DNN-based SAR-ATR models on the MSTAR dataset. Specifically, during the training phase of TAN, we took each network as the surrogate model in turn and assessed the recognition results of corresponding models on the outputs of TAN at each stage. The results of non-targeted and targeted attacks are detailed in Table 3 and Table 4, respectively.

Table 3. Non-targeted attack results of our method against DNN-based SAR-ATR models on the MSTAR dataset.

Table 4. Targeted attack results of our method against DNN-based SAR-ATR models on the MSTAR dataset.

In non-targeted attacks, every model achieves high classification accuracy on the original MSTAR samples. After the non-targeted attack, however, the classification accuracy of all models on the generated adversarial examples drops sharply, while the norm of the adversarial perturbations remains small (Table 3). This means that adversarial examples rapidly deteriorate the recognition performance of models through minor adversarial perturbations. Meanwhile, during the training phase of TAN, we evaluate the performance of the attenuator. According to the accuracy on attenuated adversarial examples, the attenuator raises the average classification accuracy of models on adversarial examples, that is, it indeed weakens the effectiveness of adversarial examples. We should also pay attention to the recognition accuracy of models on the attenuated samples and to the deformation distortion caused by the attenuator. The accuracy on attenuated samples remains high for every model, and the average deformation distortion is about 4. This means that the attenuator retains most of the semantic information of original samples without causing excessive deformation distortion, which is in line with our requirements.

In targeted attacks, the accuracy metric on original samples represents the probability that models identify original samples as target classes, so it reflects the class distribution of the dataset. After the targeted attack, each model recognizes adversarial examples as target classes with high probability, and the norm indicator shows that the image distortion caused by adversarial perturbations remains small (Table 4). This means that the adversarial examples crafted by the generator can induce models to output specified results with high probability through minor perturbations. As with the non-targeted attack, we evaluate the performance of the attenuator. The accuracy on attenuated adversarial examples shows that the attenuator lowers the average probability of adversarial examples being identified as target classes. Meanwhile, the accuracy on attenuated samples remains high for every model, and the deformation distortion stays small. That is, the attenuator weakens the effectiveness of adversarial examples through slight deformations, while preserving the semantic meaning of original samples well.

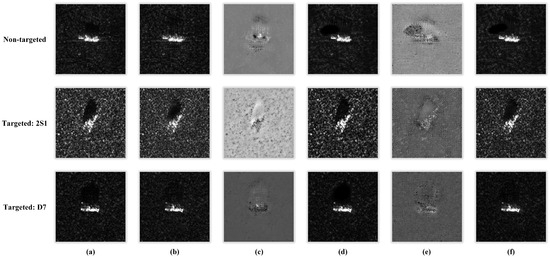

In summary, for both non-targeted and targeted attacks, the adversarial examples crafted by the generator fool models with high success rates, and the attenuator weakens the effectiveness of adversarial examples with slight deformations while retaining the semantic meaning of original samples. Moreover, we ensure that the generator always outperforms the attenuator by adjusting the training ratio between the two models. To visualize the attack results of TAN, we take ResNet50 as the surrogate model and display the outputs of TAN at each stage in Figure 8.

Figure 8. Visualization of attack results against ResNet50. (a) Original samples. (b) Adversarial examples. (c) Adversarial perturbations. (d) Attenuated samples. (e) Deformation distortion. (f) Attenuated adversarial examples. From top to bottom, the corresponding target classes are None, 2S1, and D7, respectively.

Finally, we compared the non-targeted and targeted attack performance of different methods against DNN-based SAR-ATR models on the MSTAR dataset, as detailed in Table 5. Obviously, for the same image distortion, the attack effectiveness of the proposed method against a single model may not be the best. Nevertheless, we focused more on the transferability of adversarial examples, which will be the main topic of the following section.

Table 5. Attack performance of different methods against DNN-based SAR-ATR models on the MSTAR dataset.

4.6. Comparison of Transferability

In this section, we evaluate the transferability of adversarial examples among DNN-based SAR-ATR models on the MSTAR dataset. Specifically, we first take each network as the surrogate model in turn and craft adversarial examples for it. Then, we assess the transferability by testing the recognition results of victim models on the corresponding adversarial examples. The transferability results for non-targeted and targeted attacks are shown in Table 6 and Table 7, respectively.
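This evaluation protocol amounts to filling a surrogate-by-victim matrix, which can be sketched as follows. The names `transfer_matrix` and `hardest_victim`, and the `craft(surrogate, sample, label)` callback signature, are hypothetical; models are represented as plain callables that return a class index.

```python
def accuracy(model, samples, labels):
    """Fraction of samples that `model` assigns to the given labels."""
    return sum(model(x) == y for x, y in zip(samples, labels)) / len(samples)


def transfer_matrix(models, craft, samples, labels):
    """Evaluate every victim on adversarial examples from every surrogate.

    models  dict mapping a model name to a callable sample -> class index
    craft   callable (surrogate, sample, label) -> adversarial sample
    labels  true classes (non-targeted) or target classes (targeted)
    """
    matrix = {}
    for s_name, surrogate in models.items():
        adversarial = [craft(surrogate, x, y) for x, y in zip(samples, labels)]
        matrix[s_name] = {v_name: accuracy(victim, adversarial, labels)
                          for v_name, victim in models.items()}
    return matrix


def hardest_victim(matrix, surrogate, targeted=False):
    """Worst-case transfer result over all victims other than the surrogate.

    Non-targeted: the highest remaining accuracy among victims.
    Targeted:     the lowest probability of hitting the target class.
    """
    rates = [r for v, r in matrix[surrogate].items() if v != surrogate]
    return min(rates) if targeted else max(rates)
```

Reporting the worst case per surrogate, as `hardest_victim` does, gives a conservative summary of how well adversarial examples transfer.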

Table 6. Transferability of adversarial examples generated by different attack algorithms in non-targeted attacks.

Table 7. Transferability of adversarial examples generated by different attack algorithms in targeted attacks.

In non-targeted attacks, the proposed method sequentially takes DenseNet121, GoogLeNet, InceptionV3, Mobilenet, ResNet50, and Shufflenet as the surrogate model. The recognition accuracy of victim models on the generated adversarial examples, together with that on the adversarial examples produced by baseline methods, is detailed in Table 6. For each surrogate model, victim models always have the lowest recognition accuracy on the adversarial examples crafted by our approach. Thus, compared with baseline methods, the proposed method slightly sacrifices performance in attacking surrogate models but achieves state-of-the-art transferability among victim models in non-targeted attacks.

In targeted attacks, the proposed method again takes DenseNet121, GoogLeNet, InceptionV3, Mobilenet, ResNet50, and Shufflenet as the surrogate model in turn. The probability that victim models identify the generated adversarial examples as target classes, together with the corresponding probability for the adversarial examples produced by baseline methods, is detailed in Table 7. For each surrogate model, victim models always identify the adversarial examples crafted by our approach as target classes with the maximum probability. Thus, the proposed method also achieves state-of-the-art transferability among victim models in targeted attacks.

In conclusion, for both non-targeted and targeted attacks, our approach generates adversarial examples with the strongest transferability. In other words, it is better at exploiting the common vulnerability of DNN models. We attribute this to the adversarial training between the generator and attenuator. Figuratively speaking, it is because the attenuator constantly creates obstacles for the generator that the attack capability of the generator is continuously enhanced and refined.

4.7. Comparison of Real-Time Performance

Unlike traditional iterative methods, the generator in our approach maps original samples to adversarial examples in a single forward step, as described by (4). It acts like a function that takes inputs and returns outputs according to the learned mapping. To evaluate the real-time performance of adversarial attacks, we compared the time cost of generating a single adversarial example with different attack algorithms. The time consumption of non-targeted and targeted attacks is shown in Table 8 and Table 9, respectively.
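The structural difference between the two attack families can be illustrated with a toy sketch. Everything here is an assumption made for illustration: `CountingSurrogate`, `toy_generator`, and the sign-gradient update are stand-ins rather than the networks or baselines used in the experiments. The point is only that an iterative attack queries the surrogate once per step, whereas a trained generator needs no surrogate query at attack time.

```python
import time
from typing import Callable, List, Sequence


class CountingSurrogate:
    """Toy stand-in for a surrogate network that counts gradient queries."""

    def __init__(self) -> None:
        self.queries = 0

    def gradient_sign(self, x: Sequence[float]) -> List[float]:
        self.queries += 1
        # Hypothetical gradient sign: push every pixel toward 0.5.
        return [1.0 if v < 0.5 else -1.0 for v in x]


def iterative_attack(model: CountingSurrogate, x: Sequence[float],
                     eps: float = 0.1, steps: int = 10) -> List[float]:
    """Generic iterative sign-gradient attack: one surrogate query per step."""
    step_size = eps / steps
    adv = list(x)
    for _ in range(steps):
        signs = model.gradient_sign(adv)
        # Take a small step, then clip back into the eps-ball around x.
        adv = [min(max(a + step_size * s, v - eps), v + eps)
               for a, s, v in zip(adv, signs, x)]
    return adv


def toy_generator(x: Sequence[float]) -> List[float]:
    """Stand-in for a trained generator: a fixed one-step mapping."""
    return [min(1.0, v + 0.1) for v in x]


def one_step_attack(generator: Callable[[Sequence[float]], List[float]],
                    x: Sequence[float]) -> List[float]:
    """One forward pass through the generator, no surrogate query."""
    return generator(x)


def ms_per_sample(attack: Callable[[Sequence[float]], List[float]],
                  samples: Sequence[Sequence[float]], repeats: int = 3) -> float:
    """Best-of-`repeats` wall-clock time per sample, in milliseconds."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        for x in samples:
            attack(x)
        best = min(best, time.perf_counter() - start)
    return 1000.0 * best / len(samples)
```

With real networks, `ms_per_sample` would be called once per attack on the same batch of samples and hardware, so that only the attack algorithm differs between measurements.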

Table 8. Time cost of generating a single adversarial example through different attack algorithms in non-targeted attacks.

Table 9. Time cost of generating a single adversarial example through different attack algorithms in targeted attacks.

As we can see, there is almost no difference between non-targeted and targeted attacks in the time cost of crafting a single adversarial example. For all the victim models, the time cost of generating a single adversarial example with our method is stable at around 2 ms. For the baseline methods, the time cost depends on the complexity of the victim model: the more complex the model, the longer the time cost. Even for the simplest victim model, the minimum time cost of the baseline methods is about 4.5 ms, more than twice that of our approach. Thus, the proposed method achieves the best and most stable real-time performance among the compared methods.
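Taking the approximate figures quoted above at face value (about 2 ms for the proposed method and about 4.5 ms for the fastest baseline case), the gap can be expressed as a time ratio and as single-stream throughput. These are rounded illustrations, not entries from Tables 8 and 9, and the symbols $t_{\text{TAN}}$ and $t_{\text{base}}$ are introduced here only for this calculation.

$$
\frac{t_{\text{base}}}{t_{\text{TAN}}} \approx \frac{4.5\ \text{ms}}{2\ \text{ms}} = 2.25,
\qquad
\frac{1}{t_{\text{TAN}}} \approx 500\ \text{examples/s},
\qquad
\frac{1}{t_{\text{base}}} \approx 222\ \text{examples/s}.
$$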

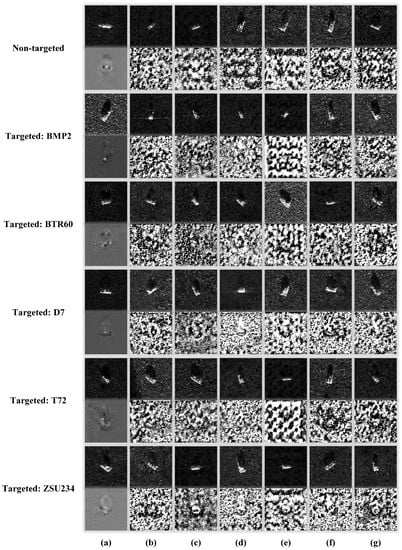

4.8. Visualization of Adversarial Examples

In this section, we took ResNet50 as the surrogate model and visualized the adversarial examples crafted by different methods in Figure 9. The adversarial perturbations generated by our method are continuous and mainly concentrated in the target region of SAR images. In contrast, the perturbations produced by the baseline methods are quite discrete and cover almost the entire SAR image. First, from the perspective of feature extraction, the features with the greatest impact on recognition results are mainly concentrated in the target region rather than in the background clutter, so a focused disruption of key features is a more efficient attack strategy. Second, from the perspective of physical feasibility, the fewer pixels modified in an adversarial example, the smaller the region that must be perturbed in reality, so localized perturbations are more feasible than global ones. In summary, the proposed method improves the efficiency and feasibility of adversarial attacks by focusing perturbations on the target region of SAR images.
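The qualitative observation above could be quantified with two simple statistics: the fraction of pixels that are modified, and the share of perturbation energy that falls inside the target region. The sketch below is an illustrative assumption rather than an analysis performed in this paper; in particular, `target_mask` is a hypothetical binary mask of the target region that would have to come from a separate segmentation step.

```python
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[float]]
Mask = Sequence[Sequence[int]]


def perturbation_stats(original: Image, adversarial: Image,
                       target_mask: Mask, tol: float = 1e-6) -> Tuple[float, float]:
    """Return (fraction of pixels modified, fraction of squared perturbation inside the mask)."""
    total = 0
    modified = 0
    energy_all = 0.0
    energy_in = 0.0
    for row_o, row_a, row_m in zip(original, adversarial, target_mask):
        for o, a, m in zip(row_o, row_a, row_m):
            delta = a - o
            total += 1
            if abs(delta) > tol:
                modified += 1
            energy_all += delta * delta
            if m:
                energy_in += delta * delta
    frac_modified = modified / total
    # An unperturbed image has no energy to attribute to any region.
    frac_inside = energy_in / energy_all if energy_all > 0 else 0.0
    return frac_modified, frac_inside


def example() -> Tuple[float, float]:
    """Tiny 3x3 case: one perturbed pixel on the target, one in the clutter."""
    original: List[List[float]] = [[0.0] * 3 for _ in range(3)]
    adversarial = [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0], [0.0, 0.0, 0.0]]
    mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
    return perturbation_stats(original, adversarial, mask)
```

A localized attack would show a small modified fraction and an inside-mask share close to 1, while a global perturbation would show the opposite pattern.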

Figure 9. Visualization of adversarial examples against ResNet50. (a) TAN. (b) MIFGSM. (c) DIFGSM. (d) NIFGSM. (e) SINIFGSM. (f) VMIFGSM. (g) VNIFGSM. From top to bottom, the corresponding target classes are None, BMP2, BTR60, D7, T72, and ZSU234, respectively. For each attack, the first row shows adversarial examples, and the second row shows corresponding adversarial perturbations.

5. Discussions

So far, the proposed method has been shown to be effective for SAR images. Further studies should verify its effectiveness in other fields, such as optical [40,41], infrared [42,43], and synthetic aperture sonar (SAS) [44,45,46,47] images. Although different imaging principles lead to large differences in the resolution, dimension, and target type of images, we argue that TAN is well suited to these fields, because adversarial examples essentially attack the inherent vulnerability of DNN models, independent of the input data. The non-negligible challenge, however, is how to realize these adversarial examples in the real world. Specifically, the physical implementation depends on the imaging principle, e.g., crafting adversarial patches against optical cameras, changing temperature against infrared devices, and emitting acoustic signals against SAS. This is a worthwhile topic for future work.

6. Conclusions

This paper proposed a transferable adversarial network (TAN) to attack DNN-based SAR-ATR models. It significantly improves both the transferability and the real-time performance of adversarial examples, which is of great significance for real-world black-box attacks. In the proposed method, we simultaneously trained two encoder–decoder models: a generator that learns the one-step forward mapping from original data to adversarial examples, and an attenuator that captures the most harmful deformations to malicious samples. The design is motivated by two goals: enabling real-time attacks by mapping original data to adversarial examples in one step, and enhancing the transferability through a two-player game between the generator and the attenuator. Experimental results demonstrated that our approach achieves state-of-the-art transferability with acceptable adversarial perturbations and minimum time costs compared to existing attack methods, making real-time black-box attacks without any prior knowledge a reality. Potential future work could consider attacking DNN-based SAR-ATR models under small-sample conditions. In addition to improving the performance of attack algorithms, it would be worthwhile to implement adversarial examples in the real world.

Author Contributions

Conceptualization, M.D. (Meng Du) and D.B.; methodology, M.D. (Meng Du); software, M.D. (Meng Du); validation, D.B., Y.S., B.S. and Z.W.; formal analysis, D.B. and M.D. (Mingyang Du); investigation, M.D. (Mingyang Du); resources, D.B.; data curation, M.D. (Meng Du); writing—original draft preparation, M.D. (Meng Du); writing—review and editing, M.D. (Meng Du), L.L. and D.B.; visualization, M.D. (Meng Du); supervision, D.B.; project administration, D.B.; funding acquisition, D.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grant 62071476.

Institutional Review Board Statement

The study does not involve humans or animals.

Informed Consent Statement

The study does not involve humans.

Data Availability Statement

The experiments in this paper use public datasets, so no data are reported in this work.

Conflicts of Interest

The authors declare that they have no conflict of interest to report regarding the present study.

References

1. Li, D.; Kuai, Y.; Wen, G.; Liu, L. Robust Visual Tracking via Collaborative and Reinforced Convolutional Feature Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–19 June 2019.

2. Kuai, Y.; Wen, G.; Li, D. Masked and dynamic Siamese network for robust visual tracking. Inf. Sci. 2019, 503, 169–182.

3. Cong, R.; Yang, N.; Li, C.; Fu, H.; Zhao, Y.; Huang, Q.; Kwong, S. Global-and-local collaborative learning for co-salient object detection. IEEE Trans. Cybern. 2022, 53, 1920–1931.

4. Tang, J.; Xiang, D.; Zhang, F.; Ma, F.; Zhou, Y.; Li, H. Incremental SAR Automatic Target Recognition With Error Correction and High Plasticity. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1327–1339.

5. Wang, L.; Yang, X.; Tan, H.; Bai, X.; Zhou, F. Few-Shot Class-Incremental SAR Target Recognition Based on Hierarchical Embedding and Incremental Evolutionary Network. IEEE Trans. Geosci. Remote Sens. 2023, 2023, 3248040.

6. Kwak, Y.; Song, W.J.; Kim, S.E. Speckle-Noise-Invariant Convolutional Neural Network for SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2019, 16, 549–553.

7. Du, C.; Chen, B.; Xu, B.; Guo, D.; Liu, H. Factorized discriminative conditional variational auto-encoder for radar HRRP target recognition. Signal Process. 2019, 158, 176–189.

8. Vint, D.; Anderson, M.; Yang, Y.; Ilioudis, C.; Di Caterina, G.; Clemente, C. Automatic Target Recognition for Low Resolution Foliage Penetrating SAR Images Using CNNs and GANs. Remote Sens. 2021, 13, 596.

9. Huang, T.; Zhang, Q.; Liu, J.; Hou, R.; Wang, X.; Li, Y. Adversarial attacks on deep-learning-based SAR image target recognition. J. Netw. Comput. Appl. 2020, 162, 102632.

10. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

11. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

12. Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. In Artificial Intelligence Safety and Security; Chapman and Hall/CRC: London, UK, 2018; pp. 99–112.

13. Moosavi-Dezfooli, S.M.; Fawzi, A.; Frossard, P. Deepfool: A simple and accurate method to fool deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2574–2582.

14. Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The limitations of deep learning in adversarial settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy (EuroS&P), Saarbrücken, Germany, 21–24 March 2016; pp. 372–387.

15. Su, J.; Vargas, D.V.; Sakurai, K. One pixel attack for fooling deep neural networks. IEEE Trans. Evol. Comput. 2019, 23, 828–841.

16. Chen, P.Y.; Zhang, H.; Sharma, Y.; Yi, J.; Hsieh, C.J. Zoo: Zeroth order optimization based black-box attacks to deep neural networks without training substitute models. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, Dallas, TX, USA, 3 November 2017; pp. 15–26.

17. Chen, J.; Jordan, M.I.; Wainwright, M.J. Hopskipjumpattack: A query-efficient decision-based attack. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 18–21 May 2020; pp. 1277–1294.

18. Papernot, N.; McDaniel, P.; Goodfellow, I. Transferability in machine learning: From phenomena to black-box attacks using adversarial samples. arXiv 2016, arXiv:1605.07277.

19. Dong, Y.; Liao, F.; Pang, T.; Su, H.; Zhu, J.; Hu, X.; Li, J. Boosting adversarial attacks with momentum. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9185–9193.

20. Lin, J.; Song, C.; He, K.; Wang, L.; Hopcroft, J.E. Nesterov accelerated gradient and scale invariance for adversarial attacks. arXiv 2019, arXiv:1908.06281.

21. Xie, C.; Zhang, Z.; Zhou, Y.; Bai, S.; Wang, J.; Ren, Z.; Yuille, A.L. Improving transferability of adversarial examples with input diversity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2730–2739.

22. Wang, X.; He, K. Enhancing the transferability of adversarial attacks through variance tuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1924–1933.

23. Xu, Y.; Du, B.; Zhang, L. Assessing the threat of adversarial examples on deep neural networks for remote sensing scene classification: Attacks and defenses. IEEE Trans. Geosci. Remote Sens. 2020, 59, 1604–1617.

24. Xu, Y.; Ghamisi, P. Universal Adversarial Examples in Remote Sensing: Methodology and Benchmark. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

25. Li, H.; Huang, H.; Chen, L.; Peng, J.; Huang, H.; Cui, Z.; Mei, X.; Wu, G. Adversarial examples for CNN-based SAR image classification: An experience study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1333–1347.

26. Du, C.; Huo, C.; Zhang, L.; Chen, B.; Yuan, Y. Fast C&W: A Fast Adversarial Attack Algorithm to Fool SAR Target Recognition with Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

27. Du, M.; Bi, D.; Du, M.; Xu, X.; Wu, Z. ULAN: A Universal Local Adversarial Network for SAR Target Recognition Based on Layer-Wise Relevance Propagation. Remote Sens. 2022, 15, 21.

28. Xia, W.; Liu, Z.; Li, Y. SAR-PeGA: A Generation Method of Adversarial Examples for SAR Image Target Recognition Network. IEEE Trans. Aerosp. Electron. Syst. 2022, 2022, 3206261.

29. Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II 14; Springer: Cham, Switzerland, 2016; pp. 694–711.

30. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

31. Keydel, E.R.; Lee, S.W.; Moore, J.T. MSTAR extended operating conditions: A tutorial. Algorithms Synth. Aperture Radar Imag. III 1996, 2757, 228–242.

32. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

33. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

34. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

35. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

36. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

37. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

38. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

39. Kim, H. Torchattacks: A pytorch repository for adversarial attacks. arXiv 2020, arXiv:2010.01950.

40. Kang, J.; Wang, Z.; Zhu, R.; Xia, J.; Sun, X.; Fernandez-Beltran, R.; Plaza, A. DisOptNet: Distilling Semantic Knowledge From Optical Images for Weather-Independent Building Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

41. Liu, K.; Liang, Y. Underwater optical image enhancement based on super-resolution convolutional neural network and perceptual fusion. Opt. Express 2023, 31, 9688–9712.

42. Tang, L.; Yuan, J.; Ma, J. Image fusion in the loop of high-level vision tasks: A semantic-aware real-time infrared and visible image fusion network. Inf. Fusion 2022, 82, 28–42.

43. Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware dual adversarial learning and a multi-scenario multi-modality benchmark to fuse infrared and visible for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5802–5811.

44. Kiang, C.W.; Kiang, J.F. Imaging on Underwater Moving Targets With Multistatic Synthetic Aperture Sonar. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–18.

45. Zhang, X.; Wu, H.; Sun, H.; Ying, W. Multireceiver SAS imagery based on monostatic conversion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10835–10853.

46. Choi, H.m.; Yang, H.s.; Seong, W.j. Compressive underwater sonar imaging with synthetic aperture processing. Remote Sens. 2021, 13, 1924.

47. Pate, D.J.; Cook, D.A.; O’Donnell, B.N. Estimation of Synthetic Aperture Resolution by Measuring Point Scatterer Responses. IEEE J. Ocean. Eng. 2021, 47, 457–471.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).