A Comparison of Different Data Fusion Strategies’ Effects on Maize Leaf Area Index Prediction Using Multisource Data from Unmanned Aerial Vehicles (UAVs)

Abstract

1. Introduction

2. Materials and Methods

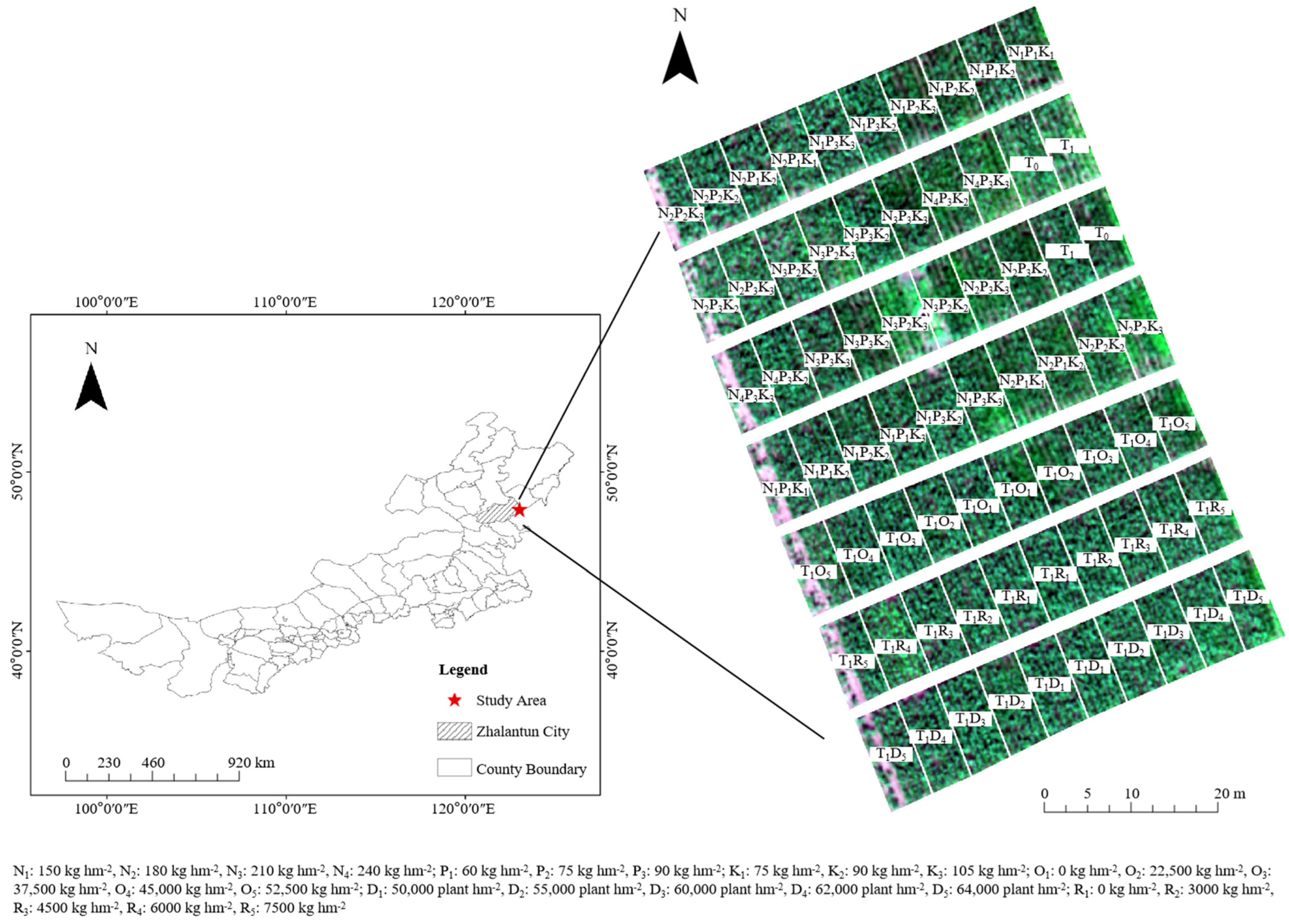

2.1. Field Experiment

2.2. Data Acquisition and Processing

2.2.1. UAV Data Acquisition

2.2.2. Field-Measured Data

2.3. Data Analysis

2.3.1. Different Prediction Strategy Scenarios

2.3.2. Image Feature Extraction

2.3.3. Model Design for Each Scenario

3. Results and Analysis

3.1. LAI in the Field

3.2. Comparison of the Original Image and Pixel-Level Fused Image

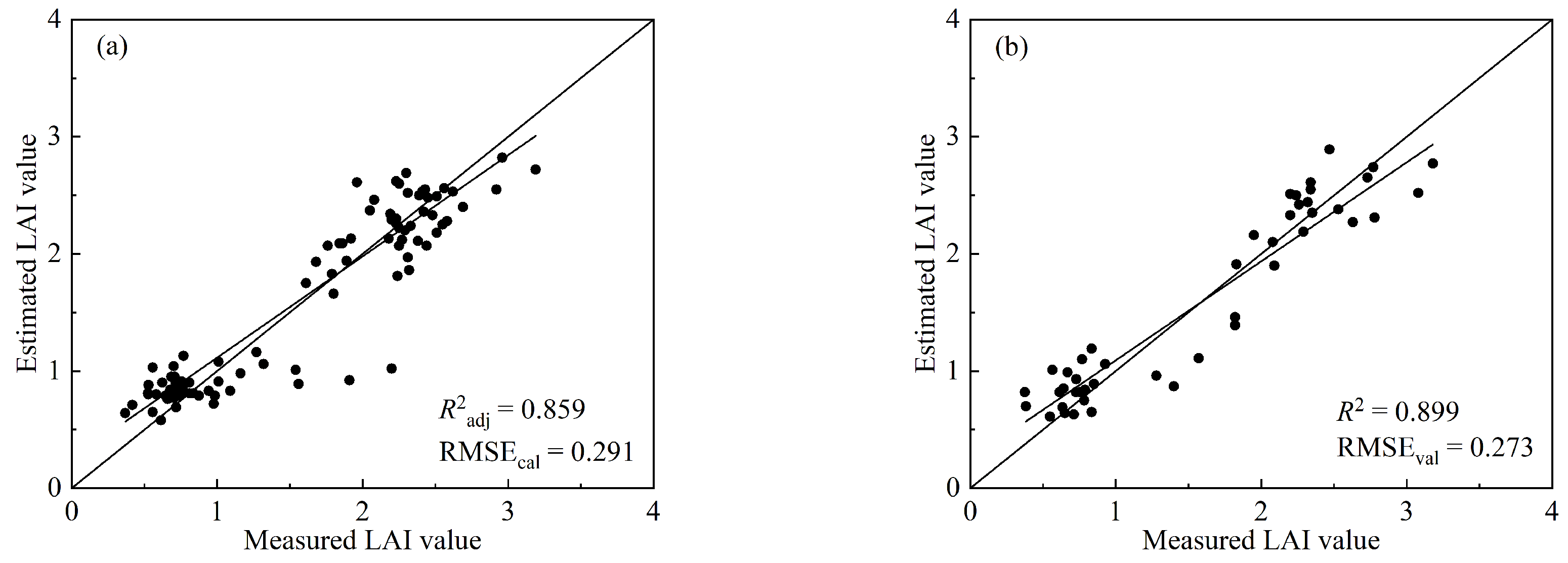

3.3. Results of LAI Inversion for the Single-Source Image Strategy Scenarios

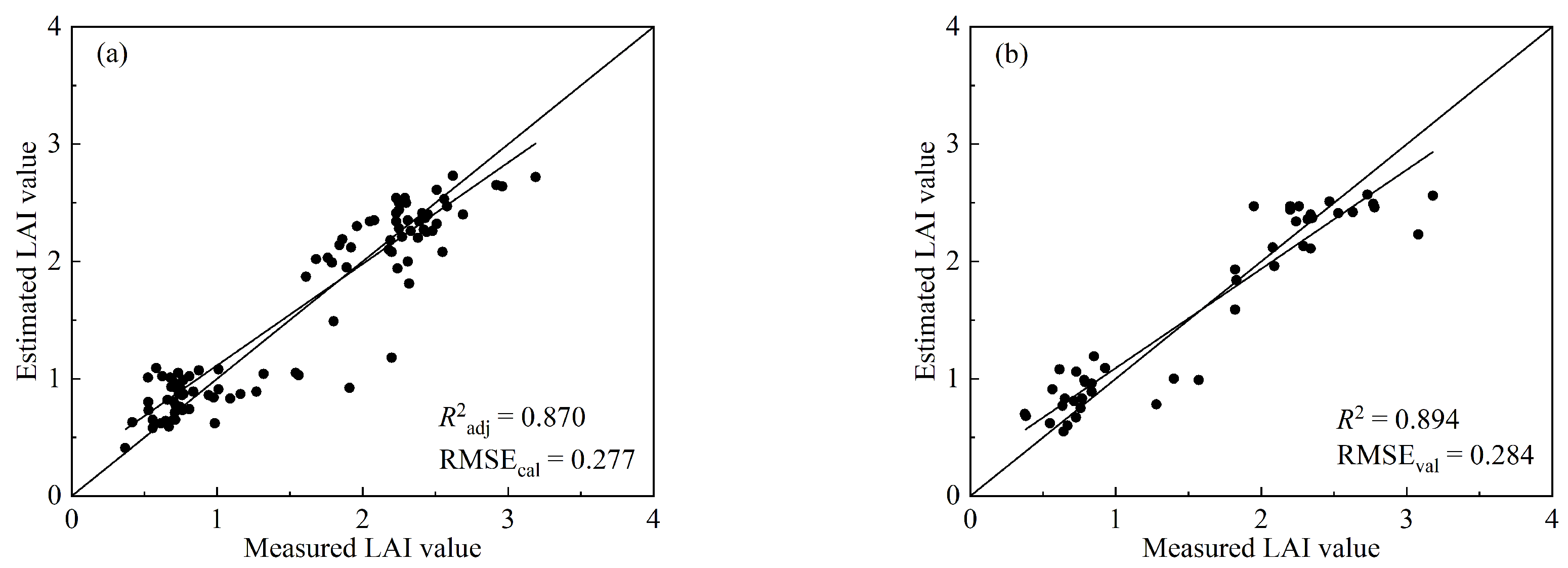

3.4. Results of LAI Inversion for Pixel-Level Data Fusion Strategy Scenarios

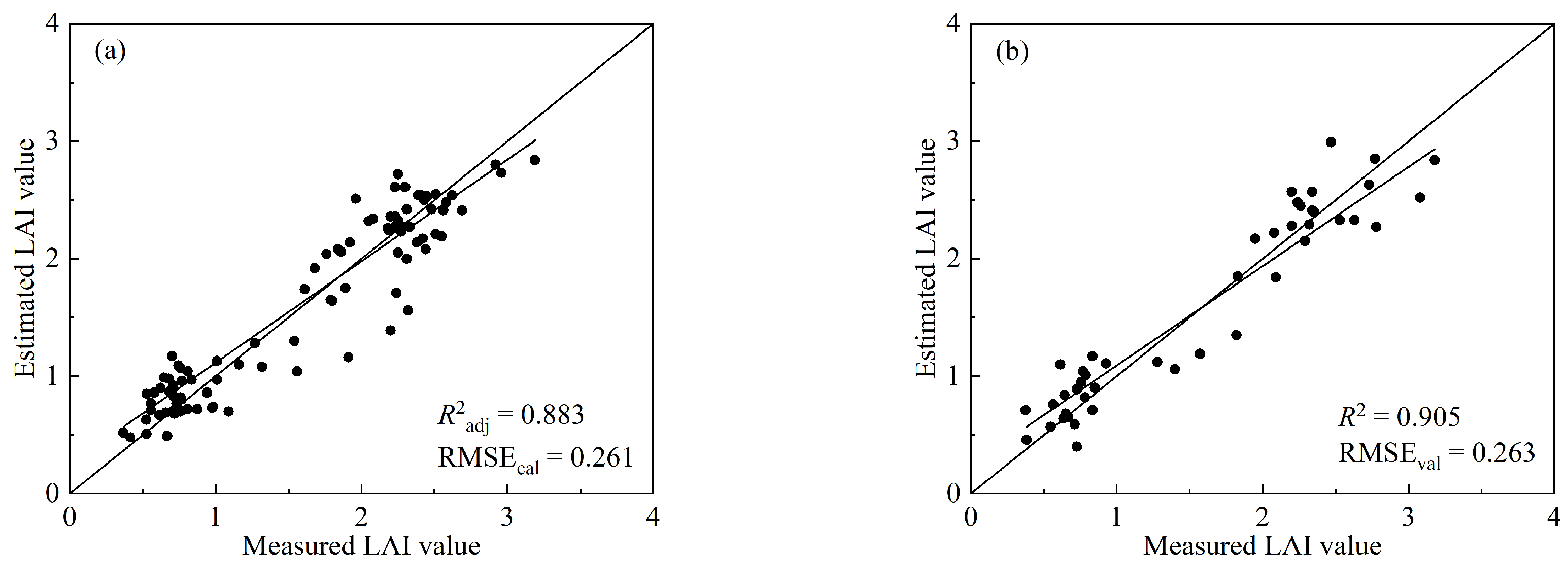

3.5. Results of LAI Inversion for Feature-Level Data Fusion Strategy Scenarios

4. Discussion

4.1. Comparison with Previous Studies

4.2. Optimal LAI Prediction Strategy

4.3. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Luo, L.L.; Chang, Q.R.; Gao, Y.F.; Jiang, D.Y.; Li, F.L. Combining Different Transformations of Ground Hyperspectral Data with Unmanned Aerial Vehicle (UAV) Images for Anthocyanin Estimation in Tree Peony Leaves. Remote Sens. 2022, 14, 2271.
2. Collins, W. Remote sensing of crop type and maturity. Photogramm. Eng. Remote Sens. 1978, 44, 42–55.
3. Palacios-Rojas, N.; McCulley, L.; Kaeppler, M.; Titcomb, T.J.; Gunaratna, N.S.; Lopez-Ridaura, S.; Tanumihardjo, S.A. Mining maize diversity and improving its nutritional aspects within agro-food systems. Compr. Rev. Food Sci. Food Saf. 2020, 19, 1809–1834.
4. Yan, G.J.; Hu, R.H.; Luo, J.H.; Weiss, M.; Jiang, H.L.; Mu, X.H.; Xie, D.H.; Zhang, W.M. Review of indirect optical measurements of leaf area index: Recent advances, challenges, and perspectives. Agric. For. Meteorol. 2019, 265, 390–411.
5. Yue, J.B.; Feng, H.K.; Yang, G.J.; Li, Z.H. A Comparison of Regression Techniques for Estimation of Above-Ground Winter Wheat Biomass Using Near-Surface Spectroscopy. Remote Sens. 2018, 10, 66.
6. Fu, Y.Y.; Yang, G.J.; Li, Z.H.; Song, X.Y.; Li, Z.H.; Xu, X.G.; Wang, P.; Zhao, C.J. Winter Wheat Nitrogen Status Estimation Using UAV-Based RGB Imagery and Gaussian Processes Regression. Remote Sens. 2020, 12, 3778.
7. Sumesh, K.C.; Ninsawat, S.; Som-ard, J. Integration of RGB-based vegetation index, crop surface model and object-based image analysis approach for sugarcane yield estimation using unmanned aerial vehicle. Comput. Electron. Agric. 2021, 180, 105903.
8. Han, L.; Yang, G.J.; Dai, H.Y.; Xu, B.; Yang, H.; Feng, H.K.; Li, Z.H.; Yang, X.D. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 10.
9. Ma, X.; Chen, P.F.; Jin, X.L. Predicting Wheat Leaf Nitrogen Content by Combining Deep Multitask Learning and a Mechanistic Model Using UAV Hyperspectral Images. Remote Sens. 2022, 14, 6334.
10. Li, Z.H.; Jin, X.L.; Wang, J.H.; Yang, G.J.; Nie, C.W.; Xu, X.G.; Feng, H.K. Estimating winter wheat (Triticum aestivum) LAI and leaf chlorophyll content from canopy reflectance data by integrating agronomic prior knowledge with the PROSAIL model. Int. J. Remote Sens. 2015, 36, 2634–2653.
11. Darvishzadeh, R.; Atzberger, C.; Skidmore, A.; Schlerf, M. Mapping grassland leaf area index with airborne hyperspectral imagery: A comparison study of statistical approaches and inversion of radiative transfer models. ISPRS J. Photogramm. Remote Sens. 2011, 66, 894–906.
12. Durbha, S.S.; King, R.L.; Younan, N.H. Support vector machines regression for retrieval of leaf area index from multiangle imaging spectroradiometer. Remote Sens. Environ. 2007, 107, 348–361.
13. Hasan, U.; Sawut, M.; Chen, S.S. Estimating the Leaf Area Index of Winter Wheat Based on Unmanned Aerial Vehicle RGB-Image Parameters. Sustainability 2019, 11, 6829.
14. Yuan, H.H.; Yang, G.J.; Li, C.C.; Wang, Y.J.; Liu, J.G.; Yu, H.Y.; Feng, H.K.; Xu, B.; Zhao, X.Q.; Yang, X.D. Retrieving soybean leaf area index from unmanned aerial vehicle hyperspectral remote sensing: Analysis of RF, ANN, and SVM regression models. Remote Sens. 2017, 9, 309.
15. Shi, Y.J.; Gao, Y.; Wang, Y.; Luo, D.N.; Chen, S.Z.; Ding, Z.T.; Fan, K. Using unmanned aerial vehicle-based multispectral image data to monitor the growth of intercropping crops in tea plantation. Front. Plant Sci. 2022, 13, 820585.
16. Yang, K.L.; Gong, Y.; Fang, S.H.; Duan, B.; Yuan, N.G.; Peng, Y.; Wu, X.T.; Zhu, R.S. Combining Spectral and Texture Features of UAV Images for the Remote Estimation of Rice LAI throughout the Entire Growing Season. Remote Sens. 2021, 13, 3001.
17. Li, S.Y.; Yuan, F.; Ata-Ul-Karim, S.T.; Zheng, H.B.; Cheng, T.; Liu, X.J.; Tian, Y.C.; Zhu, Y.; Cao, W.X.; Cao, Q. Combining Color Indices and Textures of UAV-Based Digital Imagery for Rice LAI Estimation. Remote Sens. 2019, 11, 1763.
18. Zhang, X.W.; Zhang, K.F.; Sun, Y.Q.; Zhao, Y.D.; Zhuang, H.F.; Ban, W.; Chen, Y.; Fu, E.R.; Chen, S.; Liu, J.X.; et al. Combining Spectral and Texture Features of UAS-Based Multispectral Images for Maize Leaf Area Index Estimation. Remote Sens. 2022, 14, 331.
19. Feng, A.J.; Zhou, J.F.; Vories, E.D.; Sudduth, K.A.; Zhang, M.N. Yield estimation in cotton using UAV-based multi-sensor imagery. Biosyst. Eng. 2020, 193, 101–114.
20. Yan, P.C.; Han, Q.S.; Feng, Y.M.; Kang, S.Z. Estimating LAI for Cotton Using Multisource UAV Data and a Modified Universal Model. Remote Sens. 2022, 14, 4272.
21. Dian, R.W.; Li, S.T.; Sun, B.; Guo, A.J. Recent advances and new guidelines on hyperspectral and multispectral image fusion. Inf. Fusion 2021, 69, 40–51.
22. Pohl, C.; Van Genderen, J.L. Review article multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854.
23. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357.
24. Zhu, W.X.; Sun, Z.G.; Huang, Y.H.; Yang, T.; Li, J.; Zhu, K.Y.; Zhang, J.Q.; Yang, B.; Shao, C.X.; Peng, J.B.; et al. Optimization of multi-source UAV RS agro-monitoring schemes designed for field-scale crop phenotyping. Precis. Agric. 2021, 22, 1768–1802.
25. Liu, S.B.; Jin, X.L.; Nie, C.W.; Wang, S.Y.; Yu, X.; Cheng, M.H.; Shao, M.C.; Wang, Z.X.; Tuohuyi, N.; Bai, Y.; et al. Estimating leaf area index using unmanned aerial vehicle data: Shallow vs. deep machine learning algorithms. Plant Physiol. 2021, 183, 1551–1576.
26. Sadeh, Y.; Zhu, X.; Dunkerley, D.; Walker, J.P.; Zhang, Y.X.; Rozenstein, O.; Manivasagam, V.S.; Chenu, K. Fusion of Sentinel-2 and PlanetScope time-series data into daily 3m surface reflectance and wheat LAI monitoring. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102260.
27. Weiss, M.; Baret, F.; Smith, G.J.; Jonckheere, J.; Coppin, P. Review of methods for in situ leaf area index (LAI) determination Part II. Estimation of LAI, errors and sampling. Agric. For. Meteorol. 2004, 121, 37–53.
28. Selva, M.; Aiazzi, B.; Butera, F.; Chiarantini, L.; Baronti, S. Hyper-Sharpening: A First Approach on SIM-GA Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3008–3024.
29. Xie, Q.Y.; Huang, W.J.; Zhang, B.; Chen, P.F.; Song, X.Y.; Pascucci, S.; Pignatti, S.; Laneve, G.; Dong, Y.Y. Estimating winter wheat leaf area index from ground and hyperspectral observations using vegetation indices. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 771–780.
30. Duan, B.; Liu, Y.T.; Gong, Y.; Peng, Y.; Wu, X.T.; Zhu, R.S.; Fang, S.H. Remote estimation of rice LAI based on Fourier spectrum texture from UAV image. Plant Methods 2019, 15, 124.
31. Richardson, M.D.; Karcher, D.E.; Purcell, L.C. Quantifying turfgrass cover using digital image analysis. Crop Sci. 2001, 41, 1884–1888.
32. Schuerger, A.C.; Capelle, G.A.; DiBenedetto, J.A.; Mao, C.Y.; Thai, C.N.; Evans, M.D.; Richards, J.T.; Blank, T.A.; Stryjewski, E.C. Comparison of two hyperspectral imaging and two laser-induced fluorescence instruments for the detection of zinc stress and chlorophyll concentration in bahia grass (Paspalum notatum Flugge.). Remote Sens. Environ. 2003, 84, 572–588.
33. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.
34. Richardson, A.J.; Wiegand, C.L. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.
35. Erdle, K.; Mistele, B.; Schmidhalter, U. Comparison of active and passive spectral sensors in discriminating biomass parameters and nitrogen status in wheat cultivars. Field Crops Res. 2011, 124, 74–84.
36. Miller, J.R.; Hare, E.W.; Wu, J. Quantitative characterization of the vegetation red edge reflectance 1. An inverted-Gaussian reflectance model. Int. J. Remote Sens. 1990, 11, 1755–1773.
37. Thompson, C.N.; Mills, C.; Pabuayon, I.L.B.; Ritchie, G.L. Time-based remote sensing yield estimates of cotton in water-limiting environments. Agron. J. 2020, 112, 975–984.
38. Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.
39. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.
40. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.
41. Huete, A.; Justice, C.; Liu, H. Development of vegetation and soil indices for MODIS-EOS. Remote Sens. Environ. 1994, 49, 224–234.
42. Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172.
43. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.
44. Van Beek, J.; Tits, L.; Somers, B.; Coppin, P. Stem water potential monitoring in pear orchards through WorldView-2 multispectral imagery. Remote Sens. 2013, 5, 6647–6666.
45. Daughtry, C.S.T.; Walthall, C.L.; Kim, M.S.; de Colstoun, E.B.; McMurtrey, J.E. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239.
46. Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.
47. Haboudane, D.; Tremblay, N.; Miller, J.R.; Vigneault, P. Remote estimation of crop chlorophyll content using spectral indices derived from hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2008, 46, 423–437.
48. Megat Mohamed Nazir, M.N.; Terhem, R.; Norhisham, A.R.; Mohd Razali, S.; Meder, R. Early Monitoring of Health Status of Plantation-Grown Eucalyptus pellita at Large Spatial Scale via Visible Spectrum Imaging of Canopy Foliage Using Unmanned Aerial Vehicles. Forests 2021, 12, 1393.
49. Roujean, J.L.; Breon, F.M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.
50. Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.
51. Sripada, R.P.; Heiniger, R.W.; White, J.G.; Meijer, A.D. Aerial color infrared photography for determining early in-season nitrogen requirements in corn. Agron. J. 2006, 98, 968–977.
52. Verrelst, J.; Schaepman, M.E.; Koetz, B.; Kneubühler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353.
53. Sellaro, R.; Crepy, M.; Trupkin, S.A.; Karayekov, E.; Buchovsky, A.S.; Rossi, C.; Casal, J.J. Cryptochrome as a sensor of the blue/green ratio of natural radiation in Arabidopsis. Plant Physiol. 2010, 154, 401–409.
54. Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.
55. Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.
56. Woebbecke, D.M.; Meyer, G.E.; Vonbargen, K.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.
57. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.
58. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.
59. Guerrero, J.M.; Pajares, G.; Montalvo, M.; Romeo, J.; Guijarro, M. Support Vector Machines for crop/weeds identification in maize fields. Expert Syst. Appl. 2012, 39, 11149–11155.
60. Sun, G.X.; Li, Y.B.; Wang, X.C.; Hu, G.Y.; Wang, X.; Zhang, Y. Image segmentation algorithm for greenhouse cucumber canopy under various natural lighting conditions. Int. J. Agric. Biol. Eng. 2016, 9, 130–138.
61. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2001, 16, 65–70.
62. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.
63. Song, X.X.; Wu, F.; Lu, X.T.; Yang, T.L.; Ju, C.X.; Sun, C.M.; Liu, T. The Classification of Farming Progress in Rice–Wheat Rotation Fields Based on UAV RGB Images and the Regional Mean Model. Agriculture 2022, 12, 124.
64. Fu, Z.P.; Jiang, J.; Gao, Y.; Krienke, B.; Wang, M.; Zhong, K.T.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.X.; et al. Wheat Growth Monitoring and Yield Estimation based on Multi-Rotor Unmanned Aerial Vehicle. Remote Sens. 2020, 12, 508.
65. Sun, X.K.; Yang, Z.Y.; Su, P.Y.; Wei, K.X.; Wang, Z.G.; Yang, C.B.; Wang, C.; Qin, M.X.; Xiao, L.J.; Yang, W.D.; et al. Non-destructive monitoring of maize LAI by fusing UAV spectral and textural features. Front. Plant Sci. 2023, 14, 1158837.
66. Zheng, H.B.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Cao, W.X.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2018, 20, 611–629.
67. Zhang, D.Y.; Han, X.X.; Lin, F.F.; Du, S.Z.; Zhang, G.; Hong, Q. Estimation of winter wheat leaf area index using multi-source UAV image feature fusion. Trans. Chin. Soc. Agric. Eng. 2022, 38, 171–179. (In Chinese)

| Band Name | Central Wavelength (nm) | Bandwidth (nm) |
|---|---|---|
| Blue | 475 | 20 |
| Green | 560 | 20 |
| Red | 668 | 10 |
| Red edge | 717 | 10 |
| Near-infrared | 840 | 40 |

| Spectral Index (SI) | Full Name | Formula | Source |
|---|---|---|---|
| RVI | Ratio Vegetation Index | | [32] |
| GRVI | Green Ratio Vegetation Index | | [33] |
| DVI | Difference Environmental Vegetation Index | | [34] |
| RESR | Red-Edge Simple Ratio | | [35] |
| NDVI | Normalized Difference Vegetation Index | | [36] |
| NDRE | Normalized Difference Red Edge Index | | [37] |
| EVI | Enhanced Vegetation Index | | [38] |
| MSAVI | Modified Soil-Adjusted Vegetation Index | | [39] |
| OSAVI | Optimized Soil-Adjusted Vegetation Index | | [40] |
| GNDVI | Green Normalized Difference Vegetation Index | | [41] |
| TVI | Triangular Vegetation Index | | [42] |
| SAVI | Soil-Adjusted Vegetation Index | | [43] |
| RENDVI | Red Edge Normalized Difference Vegetation Index | | [44] |
| MCARI | Modified Chlorophyll Absorption Ratio Index | | [45] |
| TCARI | Transformed Chlorophyll Absorption in Reflectance Index | | [46] |
| TCARI/OSAVI | Combined Spectral Index | | [47] |
| VARI | Visible Atmospherically Resistant Index | | [48] |
| RDVI | Re-normalized Difference Vegetation Index | | [49] |
| MSR | Modified Simple Ratio | | [50] |
| NGI | Normalized Green Index | | [51] |
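The formulas for these indices did not survive in the table above. As a rough illustration only, the sketch below computes a handful of them from band reflectances using their widely used textbook definitions; the exact forms and coefficients applied in this study may differ. The function names, the sample reflectance values, and the soil factor `L = 0.5` for SAVI are assumptions made for this example.

```python
def safe_ratio(num, den):
    """Return num / den, or NaN when the denominator is zero."""
    return num / den if den != 0 else float("nan")


def ms_indices(green, red, red_edge, nir, L=0.5):
    """Compute a few multispectral indices from band reflectances (0-1).

    Definitions are the commonly cited ones (assumed here, not taken
    from the paper): L is the SAVI soil-adjustment factor.
    """
    return {
        "RVI": safe_ratio(nir, red),
        "DVI": nir - red,
        "NDVI": safe_ratio(nir - red, nir + red),
        "GNDVI": safe_ratio(nir - green, nir + green),
        "NDRE": safe_ratio(nir - red_edge, nir + red_edge),
        "SAVI": (1 + L) * safe_ratio(nir - red, nir + red + L),
    }


# Hypothetical plot-mean reflectances for a green maize canopy.
sample = ms_indices(green=0.08, red=0.05, red_edge=0.20, nir=0.45)
for name, value in sample.items():
    print(f"{name}: {value:.3f}")
```

In practice the same expressions would be applied per pixel or to plot-averaged reflectance before being passed to a regression model.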

| Spectral Index (SI) | Full Name | Formula | Source |
|---|---|---|---|
| GBRI | Green–Blue Ratio Index | | [13] |
| GRRI | Green–Red Ratio Index | | [52] |
| BRRI | Blue–Red Ratio Index | | [53] |
| ExG | Excess Green | | [54] |
| ExR | Excess Red | | [55] |
| ExGR | Excess Green Minus Excess Red | | [56] |
| NGRDI | Normalized Green–Red Difference Index | | [57] |
| RGBVI | Red–Green–Blue Vegetation Index | | [58] |
| CIVE | Color Index of Vegetation | | [59] |
| MExG | Modified Excess Green | | [60] |
| GLA | Green Leaf Algorithm | | [61] |
| VARI | Visible Atmospherically Resistant Index | | [62] |
| NGBDI | Normalized Green–Blue Difference Index | | [63] |
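The RGB index formulas are likewise missing from the table above. The following sketch shows how a few of them are commonly computed from digital numbers, assuming the usual definitions from the literature (ExG on normalized chromatic coordinates, the others on raw channel values); the paper's own variants may differ. The function name and the sample pixel values are hypothetical.

```python
def rgb_indices(R, G, B):
    """Compute a few visible-band indices from R, G, B digital numbers.

    The definitions are the widely used ones and are assumed here;
    they are not copied from the paper.
    """
    def ratio(num, den):
        # Guard against division by zero on dark or saturated pixels.
        return num / den if den != 0 else float("nan")

    total = R + G + B
    # Normalized chromatic coordinates, used for ExG.
    r, g, b = ratio(R, total), ratio(G, total), ratio(B, total)
    return {
        "ExG": 2 * g - r - b,
        "NGRDI": ratio(G - R, G + R),
        "NGBDI": ratio(G - B, G + B),
        "GLA": ratio(2 * G - R - B, 2 * G + R + B),
        "VARI": ratio(G - R, G + R - B),
        "RGBVI": ratio(G * G - B * R, G * G + B * R),
    }


# Hypothetical mean digital numbers for a vegetated plot.
for name, value in rgb_indices(R=90, G=140, B=60).items():
    print(f"{name}: {value:.3f}")
```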

| Growth Stage | Number of Samples | Min. Value | Max. Value | Average Value | Standard Deviation | Variance | Coefficient of Variation (%) |
|---|---|---|---|---|---|---|---|
| V4 stage | 70 | 0.37 | 2.20 | 0.83 | 0.34 | 0.11 | 40.96 |
| V9 stage | 70 | 1.61 | 3.19 | 2.31 | 0.34 | 0.12 | 14.72 |
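The coefficient of variation in the last column is the standard deviation expressed as a percentage of the mean. A minimal check against the rounded values in the table (the function name is chosen for this example):

```python
def coefficient_of_variation(std_dev, mean):
    """Return the coefficient of variation in percent."""
    return std_dev / mean * 100.0


# Rounded standard deviation and mean LAI from the table above.
print(round(coefficient_of_variation(0.34, 0.83), 2))  # V4 stage
print(round(coefficient_of_variation(0.34, 2.31), 2))  # V9 stage
```

Both stages have the same standard deviation, so the much higher relative variability at V4 comes entirely from its lower mean LAI.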

| Strategy | Model | Calibration R²adj | Calibration RMSEcal | Calibration AIC | Validation R² | Validation RMSEval |
|---|---|---|---|---|---|---|
| SI of MS | | 0.838 | 0.315 | −211.26 | 0.897 | 0.283 |
| Textural features of MS | | 0.817 | 0.332 | −198.62 | 0.881 | 0.303 |
| SI and textural features of MS | | 0.859 | 0.291 | −221.89 | 0.899 | 0.273 |
| SI of RGB | | 0.819 | 0.332 | −199.42 | 0.875 | 0.316 |
| Textural features of RGB | | 0.826 | 0.325 | −203.29 | 0.902 | 0.289 |
| SI and textural features of RGB | | 0.833 | 0.319 | −207.01 | 0.903 | 0.283 |

| Strategy | Model | Calibration R²adj | Calibration RMSEcal | Calibration AIC | Validation R² | Validation RMSEval |
|---|---|---|---|---|---|---|
| SI of fused image | | 0.837 | 0.316 | −210.65 | 0.896 | 0.285 |
| Textural features of fused image | | 0.861 | 0.288 | −223.82 | 0.898 | 0.280 |
| SI + textural features of fused image | | 0.870 | 0.277 | −229.20 | 0.894 | 0.284 |

| Strategy | Fitting Model | Calibration R²adj | Calibration RMSEcal | Calibration AIC | Validation R² | Validation RMSEval |
|---|---|---|---|---|---|---|
| SI of MS + RGB | | 0.844 | 0.308 | −213.44 | 0.902 | 0.277 |
| SI of MS + textural features of RGB | | 0.849 | 0.303 | −216.46 | 0.890 | 0.292 |
| SI and textural features of MS + RGB | | 0.883 | 0.261 | −236.61 | 0.905 | 0.263 |
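The result tables report adjusted R², RMSE, and AIC for calibration and R² and RMSE for validation. The sketch below shows one common way to compute these statistics for a regression model. The least-squares form of AIC used here, `n * ln(RSS / n) + 2k`, is an assumption, since the exact expression used in the study is not given in this text, and the observed and predicted LAI values are made up for illustration.

```python
import math


def rmse(obs, pred):
    """Root mean square error between observed and predicted values."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


def r_squared(obs, pred):
    """Coefficient of determination, 1 - RSS / TSS."""
    mean_obs = sum(obs) / len(obs)
    rss = sum((o - p) ** 2 for o, p in zip(obs, pred))
    tss = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - rss / tss


def adjusted_r_squared(r2, n, p):
    """Adjust R² for n samples and p predictors (intercept excluded)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def aic_least_squares(obs, pred, k):
    """AIC for a least-squares fit with k estimated parameters (assumed form)."""
    n = len(obs)
    rss = sum((o - p) ** 2 for o, p in zip(obs, pred))
    return n * math.log(rss / n) + 2 * k


# Hypothetical observed and predicted LAI values.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
r2 = r_squared(observed, predicted)
print(f"RMSE   = {rmse(observed, predicted):.3f}")
print(f"R2     = {r2:.3f}")
print(f"R2adj  = {adjusted_r_squared(r2, n=len(observed), p=1):.3f}")
print(f"AIC    = {aic_least_squares(observed, predicted, k=2):.2f}")
```

Lower AIC and RMSE together with higher adjusted R² indicate a better calibration fit; adjusted R² and AIC both penalize added predictors, which matters when comparing strategies that use different numbers of features.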


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, J.; Chen, P.; Wang, L. A Comparison of Different Data Fusion Strategies’ Effects on Maize Leaf Area Index Prediction Using Multisource Data from Unmanned Aerial Vehicles (UAVs). Drones 2023, 7, 605. https://doi.org/10.3390/drones7100605
