Abstract

Asbestos is a Group 1 carcinogen, and its harm to the human body is well established. Its use has been banned in many countries, and the investigation and removal of installed asbestos has become an important social issue. Because substantial social costs are expected, an efficient asbestos investigation method is required. So far, asbestos slates have been examined through visual inspection. With recent advances in deep learning, it has become possible to distinguish objects by discovering patterns in large amounts of training data. In this study, we propose the use of drone images and a faster region-based convolutional neural network (Faster R-CNN) to identify asbestos slates in target sites. Furthermore, the locations of detected asbestos slates were estimated using orthoimages and compiled cadastral maps. A total of 91 asbestos slates were detected in the target sites, and the 91 detected locations were mapped to a total of 45 addresses. To verify the estimated locations, an on-site survey was conducted, and the location estimation method obtained an accuracy of 98.9%. The study findings indicate that the proposed method could be a useful survey method for identifying asbestos slate roofs.

1. Introduction

Asbestos refers to a group of silicate minerals that occur in asbestiform (fibrous) form. It has excellent mechanical strength, incombustibility, and insulating capability, as well as soundproofing and sound absorption capability. Hence, it has long been used as a raw material for building construction [1].

The risk of asbestos-related diseases has been known since the 1960s. Furthermore, it has been established that asbestos causes incurable diseases, such as asbestosis (i.e., a type of respiratory disease), malignant mesothelioma, and lung cancer [2]. Therefore, the International Agency for Research on Cancer (IARC) has classified asbestos as a Group 1 carcinogen [3].

As the dangers of asbestos have been revealed, many countries, including the United States and Japan, have banned its use since the 1990s, and its consumption is rapidly declining worldwide [4]. In Korea, the asbestos regulation was enacted, and since 2009, the manufacturing, importing, and use of all asbestos, as well as asbestos-containing products, have been banned [5]. Despite the asbestos ban, dismantling and removal of existing asbestos remain a social issue.

In the case of Korea, asbestos was mainly used for exterior building materials like slates, interior materials such as textiles, and facility materials like gaskets. In particular, about 96% of imported asbestos in the 1970s was used for slates. Currently, the number of buildings in which asbestos slates are used in Korea amounts to 1.2 million, which accounts for about 18% of all buildings in Korea [6]. According to a study by Kim et al. [3], it is predicted that up to 555 people will die from asbestos-related diseases by 2031 due to the impact of asbestos-containing slate buildings installed in Korea. Therefore, the safe removal of asbestos slates is a very urgent matter.

The removal of asbestos slates is performed in the following steps: (1) status survey, (2) quantity calculation, (3) dismantling of asbestos slates, and (4) burying asbestos slates in a landfill. The Korean government recommends the removal of all asbestos slates installed as exterior materials, regardless of their condition (e.g., damage or defects). Asbestos slate inspection is conducted visually by a professional investigator. Because the slates are installed on building roofs, visual inspection is very difficult and requires considerable manpower and cost. These problems can be addressed using drones.

A drone generally refers to an aircraft without a pilot on board that is designed to perform tasks that are difficult for humans, either by flying autonomously according to a pre-programmed function or by being controlled remotely [7]. A study was recently conducted on surveying asbestos slates installed on roofs by utilizing the spatial characteristics of drone flight. Lee et al. [8] used drones to identify the status of asbestos slate roofs of buildings in a densely populated, old residential area. They compared the results with existing building information to uncover the differences and confirmed the usefulness of their method through on-site verification. However, drone images were analyzed with the naked eye in their study. Therefore, the method was limited in its ability to quickly and accurately identify the status of asbestos slate roofs over a large-scale area such as a city.

The technology for automatically analyzing images has developed rapidly with recent advances in deep learning. Deep learning is a method that extracts high-level abstractions from training data through a combination of various non-linear transformation techniques [9]. In deep learning, the machine itself performs the feature extraction step, which previously had to be designed by humans, and it can recognize features of objects that humans previously could not detect. Deep learning trains artificial neural networks (ANNs) to distinguish objects, as humans do, by discovering patterns in large amounts of training data.

Previously, it was very difficult to implement an ANN of practical complexity, because doing so requires a considerably larger number of hidden layers. Since 2010, computational speed has improved significantly owing to advances in graphics hardware, and it has become easier to obtain training data from the large volumes of data available on the Internet. As a result, deep learning with ANNs has become feasible [10]. After the error rate of deep learning improved by over 20%, its use began to spread into various fields. In particular, deep learning achieved the best classification performance in the ImageNet Challenge in 2012, and since then its application in the image classification field has increased rapidly. The convolutional neural network (CNN) is the most widely used architecture in image recognition and shows good results, especially in image classification [11,12,13,14,15]. Recently, the faster region-based convolutional neural network (Faster R-CNN), a technique developed further from the CNN, has been used extensively. Faster R-CNN was developed to detect even the location of an object in an image with high accuracy. It consists of a region proposal network (RPN) and a Fast R-CNN structure. After the input image passes through the CNN, the output feature map is fed into the RPN and Fast R-CNN. The RPN proposes candidate regions on the output feature map, and Fast R-CNN performs detection on the proposed regions [16].

Studies applying deep learning to drone images are actively underway. Lou et al. [17] applied deep learning models, such as Faster R-CNN and YOLOv3, to drone images to detect the crowns of pine trees and estimate their widths with high accuracy. Alshaibani et al. [18] conducted a study to identify types of airplanes based on Mask R-CNN and drone images to handle traffic bottlenecks in airports. Asbestos slates produced in Korea are standardized and produced in two types, so the color, width, and depth of the trough are constant and there are no similar materials. We constructed asbestos slate training data for the two types. Objects with such regular appearance characteristics are well suited to analysis by deep learning. Consequently, roofs made with asbestos slates can be clearly distinguished from roofs made with other materials, such as roof tiles or reinforced concrete, and Faster R-CNN can be applied to detect them. However, there are few related prior studies. Therefore, this paper describes a study on the automatic detection of asbestos slates and GIS-based location estimation using drone images and Faster R-CNN.

2. Methodology

2.1. Overview

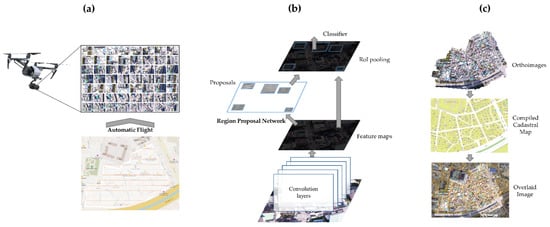

Figure 1 shows the schematic diagram of the method for surveying asbestos slate roofs using drones and Faster R-CNN. As shown, the drone image-based automatic identification of asbestos slate roofs consists of three steps: (a) aerial photography, (b) aerial image analysis based on Faster R-CNN, and (c) estimation of the location of asbestos slates. The objective of this study is to automatically detect asbestos slate roofs and estimate their locations. To achieve this, the compiled cadastral map, which is public data provided by the government, and orthoimages were used.

Figure 1.

Schematic diagram of the method for surveying the asbestos slate roof.

2.2. Drone-Based Data Acquisition

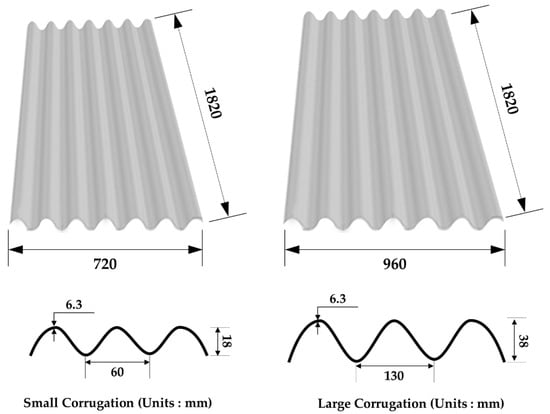

Aerial images are used for two purposes in this study: (1) detection of asbestos slates based on Faster R-CNN, and (2) estimation of the location of asbestos slates by constructing orthoimages. When taking aerial images from a drone for these purposes, it is necessary to consider the flight altitude, camera angle, time of day, photographic method, and photograph overlap. The altitude must be set such that asbestos slate roofs can be distinguished in the aerial images. At that altitude, the ground sample distance (GSD) must be fine enough to resolve the external characteristics of asbestos slates. As mentioned in Section 1, asbestos slates have distinct external characteristics. As shown in Figure 2, the asbestos slate has a 6 cm gap between the grooves. Therefore, a GSD of 3 cm/pixel or finer is required, so that each gap spans at least two pixels and can be identified. In addition, if a high-rise structure exists around the target site, the height of that structure must be considered when setting the altitude.

Figure 2.

External characteristics of asbestos slates.
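The GSD requirement for the 6 cm groove gap can be read as a simple sampling condition: each gap should cover at least two pixels. The following minimal Python sketch expresses that reading. The function names and the two-pixel criterion are illustrative assumptions, not part of the authors' workflow.

```python
def max_gsd_cm(feature_size_cm: float, pixels_per_feature: int = 2) -> float:
    """Coarsest GSD (cm/pixel) that still puts `pixels_per_feature` pixels across a feature.

    Assumption: a feature is identifiable when it spans at least two pixels.
    """
    if feature_size_cm <= 0 or pixels_per_feature <= 0:
        raise ValueError("feature size and pixel count must be positive")
    return feature_size_cm / pixels_per_feature


def gsd_is_sufficient(gsd_cm: float, feature_size_cm: float,
                      pixels_per_feature: int = 2) -> bool:
    """True when the achieved GSD is at least as fine as the required one."""
    return gsd_cm <= max_gsd_cm(feature_size_cm, pixels_per_feature)


GROOVE_GAP_CM = 6.0  # gap between grooves reported for asbestos slates
required_gsd = max_gsd_cm(GROOVE_GAP_CM)  # 3.0 cm/pixel
```

Under this criterion, the 1.32 cm/pixel GSD reported in Section 3.1 satisfies the requirement with margin, since `gsd_is_sufficient(1.32, GROOVE_GAP_CM)` evaluates to `True`.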

When setting the camera angle, a vertical shooting angle of 90 degrees is appropriate for detecting asbestos slates because they are used as roofing material. In addition, taking the aerial images used to generate drone-based orthoimages close to noon reduces the distortion and noise caused by shadows. Moreover, images of a certain quality level or higher must be acquired to utilize Faster R-CNN. In a manual flight, it is not possible to keep the flight altitude, speed, and overlap constant because the pilot's subjective judgment affects the flight. In contrast, an automatic route flight can secure aerial image data of consistent quality.

To estimate the location of asbestos slates, it is essential to construct orthoimages, and the overlap setting is a very important element in constructing orthoimages of good quality. According to Chapter 3, Article 13 of the “Guidelines for Public Surveying Using Unmanned Aerial Vehicles” by the National Geographic Information Institute of Korea, the overlap should be 65% or more in the direction of camera shooting and 60% or more between adjacent courses when taking images from an unmanned aerial vehicle. The guidelines further state that images should be taken with an overlap of 85% or more in the direction of camera shooting and 80% or more between adjacent courses if the terrain is highly undulating or there are high-rise buildings. Cho et al. [19] conducted their experiment 45 times at various altitudes, overlaps, and camera angles at altitudes within 150 m, and compared the resulting orthoimages with 1:1000 digital maps. They found that the plane positioning accuracy increases as the overlap increases. Moreover, the study in [20] showed that orthoimages and digital surface models (DSMs) created with a forward overlap of 70% and a lateral overlap of 80% can be used as data for aerial photogrammetry maps at a scale of 1:1000 or more.
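The overlap thresholds quoted from the guidelines can be encoded as a small pre-flight check. The sketch below is only an illustration of those four numbers. The class and function names are hypothetical, and the guideline text itself remains the authoritative source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OverlapRule:
    forward_min: float  # percent, in the direction of camera shooting
    lateral_min: float  # percent, between adjacent courses


# Thresholds as quoted in the text above.
STANDARD = OverlapRule(forward_min=65.0, lateral_min=60.0)
COMPLEX_TERRAIN = OverlapRule(forward_min=85.0, lateral_min=80.0)  # undulating terrain or high-rises


def meets_guideline(forward_pct: float, lateral_pct: float,
                    complex_terrain: bool = False) -> bool:
    """Check a planned forward/lateral overlap against the quoted minimums."""
    rule = COMPLEX_TERRAIN if complex_terrain else STANDARD
    return forward_pct >= rule.forward_min and lateral_pct >= rule.lateral_min
```

For example, `meets_guideline(70, 80)` returns `True` for the standard thresholds, which is the overlap combination examined in [20].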

2.3. Faster R-CNN and Training Data

Within the R-CNN series, Faster R-CNN is the algorithm that incorporates the RPN. When an image is input, it first passes through a CNN to create a feature map. The RPN then takes this feature map and generates region proposals, performing classification and bounding-box regression to produce them. The algorithm then projects each region proposal onto the feature map to set the region of interest (ROI) and calculates the final results by performing classification and bounding-box regression on it [21,22,23].
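To make the bounding-box regression step concrete, the sketch below decodes predicted offsets relative to a reference box (an anchor or a proposal) using the center/size parameterization commonly used in the R-CNN family, and measures overlap with intersection over union (IoU). It is an illustrative, dependency-free example with hypothetical function names, not the authors' implementation.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def decode_box(reference: Box, deltas: Tuple[float, float, float, float]) -> Box:
    """Apply regression offsets (dx, dy, dw, dh) to a reference box."""
    x1, y1, x2, y2 = reference
    w, h = x2 - x1, y2 - y1
    cx, cy = x1 + 0.5 * w, y1 + 0.5 * h
    dx, dy, dw, dh = deltas
    # Center shifts are scaled by the reference size; sizes change by exp(delta).
    new_cx, new_cy = cx + dx * w, cy + dy * h
    new_w, new_h = w * math.exp(dw), h * math.exp(dh)
    return (new_cx - 0.5 * new_w, new_cy - 0.5 * new_h,
            new_cx + 0.5 * new_w, new_cy + 0.5 * new_h)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


anchor = (0.0, 0.0, 10.0, 10.0)
shifted = decode_box(anchor, (0.1, 0.0, 0.0, 0.0))  # moves the box right by 10% of its width
```

Zero offsets return the reference box unchanged, which is a convenient sanity check when wiring such a decoder into a detection pipeline.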

A large amount of training data is required to survey asbestos slate roofs automatically using Faster R-CNN. The machine learning process of object detection largely consists of data preparation, model preparation and training, and model evaluation and analysis [24]. The amount of training data assembled in the data preparation step significantly affects the accuracy in the later training and model evaluation steps. In other words, a large amount of labeled, high-quality training data is required to train a highly accurate model [25]. In particular, accuracy has been reported to be higher when the model is trained with drone image-based training data [26].

2.4. Construction of Orthoimages and Estimation of Location

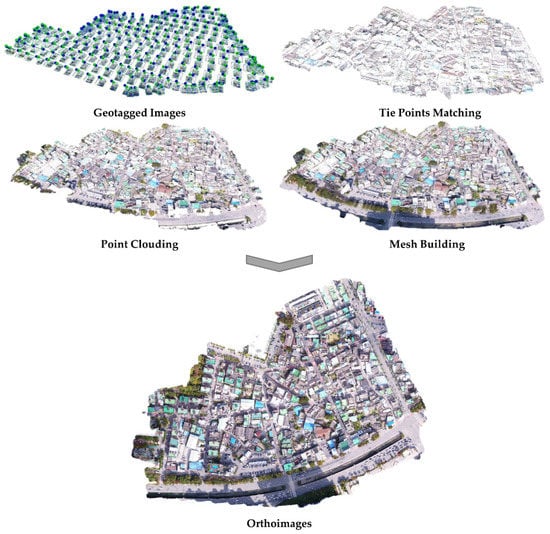

To estimate the location of asbestos slates in images taken with a drone, orthoimages must first be created. They require aerial image data, exterior orientation parameters, and a precise digital elevation model (DEM) for the target site. The accuracy of orthoimages is determined by the quality of the aerial images, the accuracy of the exterior orientation parameters, and the accuracy of the DEM [27]. The point cloud-based 3D modeling process consists of three steps: (1) tie point matching, (2) point cloud generation, and (3) mesh building. To construct a 3D model, feature points are first extracted from the aerial images acquired by the unmanned aircraft in the initial processing step using the Scale Invariant Feature Transform (SIFT) algorithm. Then, the extracted feature points go through the following steps: (1) internal orientation, (2) calibration of image subset, and (3) relative orientation. If the bundle adjustment result is invalid after the geo-referencing step, the geo-referencing process is repeated. Next, the point cloud data are generated through the dense reconstruction step, and the mesh formation process is carried out to construct a 3D model [28,29]. Lastly, textures are created from the original images and mapped onto the polygon mesh model. Orthoimages are obtained by performing this texture building step. The detailed process of constructing orthoimages is shown in Figure 3.

Figure 3.

The process of constructing orthoimages.

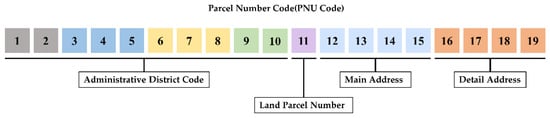

ArcGIS Desktop (by ESRI) was used to estimate the location of asbestos slates detected through Faster R-CNN. ArcGIS Desktop is a basic solution for GIS professionals to create, analyze, manage, and share spatial information; it enables them to create maps, perform spatial analysis, and manage data. In the program, the detected objects are converted into points by overlaying the orthoimages and the compiled cadastral map, which has parcel number (PNU) code attributes. Since each converted point carries a PNU code, which is a unique parcel number, it can be converted into an address using this code. A detailed description of the PNU code is shown in Figure 4.

Figure 4.

Details of parcel number code.
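The point-to-parcel lookup described above can be sketched in plain Python as a point-in-polygon test followed by splitting the PNU code into its fields. This is a stand-in for the spatial overlay performed in ArcGIS Desktop, not the authors' procedure. The parcel geometries and PNU values are hypothetical, and the 19-digit field layout (10-digit administrative code, 1-digit land type, 4-digit main lot number, 4-digit sub lot number) is an assumption based on the commonly used PNU structure; Figure 4 remains the authoritative description.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def point_in_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray-casting test: count polygon edges crossed by a ray cast to the right."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def find_pnu(point: Point, parcels: Dict[str, List[Point]]) -> Optional[str]:
    """Return the PNU of the first parcel containing the point, or None."""
    for pnu, polygon in parcels.items():
        if point_in_polygon(point, polygon):
            return pnu
    return None


def parse_pnu(pnu: str) -> Dict[str, object]:
    """Split a PNU into fields (assumed 19-digit layout, see lead-in)."""
    if len(pnu) != 19 or not pnu.isdigit():
        raise ValueError("expected a 19-digit PNU code")
    return {
        "sido": pnu[0:2],
        "sigungu": pnu[2:5],
        "eupmyeondong": pnu[5:8],
        "ri": pnu[8:10],
        "land_type": pnu[10],
        "main_lot": int(pnu[11:15]),
        "sub_lot": int(pnu[15:19]),
    }


# Hypothetical parcels in projected map coordinates (metres); not real cadastral data.
parcels = {
    "1234567890100120003": [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)],
    "1234567890100130000": [(10.0, 0.0), (20.0, 0.0), (20.0, 10.0), (10.0, 10.0)],
}
detection_centre = (14.0, 5.0)  # hypothetical centre of a detected slate roof
pnu = find_pnu(detection_centre, parcels)
fields = parse_pnu(pnu) if pnu is not None else None
```

In practice, a GIS spatial join handles projections, multipart polygons, and points on parcel boundaries, which this sketch deliberately ignores.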

3. Experimental Results

3.1. Aerial Photography

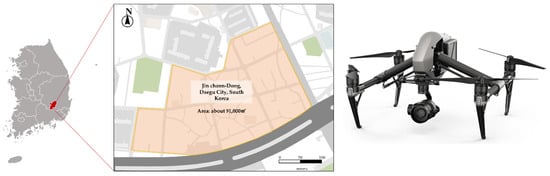

A priority area for asbestos slate removal, where a redevelopment project has been planned, was selected as the target site for acquiring asbestos slate data. The status of the target site is shown in Figure 5. Aerial photographs were taken at a vertical angle of 90 degrees around noon on 22 October 2020. The flight altitude was set at 70 m to avoid collisions with high-rise apartments and to secure a GSD at which the unique external characteristics of asbestos slates can be identified. Both the rotary-wing drone (Inspire 2 model) and the vision sensor (Zenmuse X5s) used for aerial photography were manufactured by DJI Co. Ltd. in China. The two systems were connected by a gimbal, as shown in Figure 5. The resolution of the camera was 5280 × 2970 pixels, and the field of view (FOV) was 72° (DJI MFT 15 mm/1.7 ASPH). The GSD of the images acquired through the route flight was 1.32 cm/pixel, which is fine enough to identify the gap between the grooves of the asbestos slate. Aerial photographs were taken during approximately 15 min of automatic flight at an average flight speed of 10 m/s. Considering the overlap and image registration required for constructing orthoimages, both the forward and lateral overlaps were set at 70%. As a result, a total of 254 aerial images were obtained.

Figure 5.

The status of the target site and drone.
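The reported image size, GSD, and overlap imply a ground footprint per image and a spacing between exposures and flight lines. The sketch below works through that arithmetic with the values from this section. It assumes, for illustration only, that the long image side (5280 pixels) is oriented across the flight direction; the function names are hypothetical.

```python
from typing import Tuple


def footprint_m(width_px: int, height_px: int, gsd_cm: float) -> Tuple[float, float]:
    """Ground footprint (width, height) in metres covered by one image."""
    return width_px * gsd_cm / 100.0, height_px * gsd_cm / 100.0


def spacing_m(footprint_len_m: float, overlap_pct: float) -> float:
    """Distance between neighbouring images for a given overlap percentage."""
    if not 0.0 <= overlap_pct < 100.0:
        raise ValueError("overlap must be in [0, 100)")
    return footprint_len_m * (1.0 - overlap_pct / 100.0)


# Values reported in this section: 5280 x 2970 pixels, 1.32 cm/pixel, 70% overlaps, 10 m/s.
across_m, along_m = footprint_m(5280, 2970, 1.32)  # about 69.7 m x 39.2 m
line_spacing_m = spacing_m(across_m, 70.0)         # about 20.9 m between flight lines
photo_spacing_m = spacing_m(along_m, 70.0)         # about 11.8 m between exposures
photo_interval_s = photo_spacing_m / 10.0          # about 1.2 s between exposures
```

If the camera were mounted with the long side along the flight direction instead, the two spacings would swap roles, so the orientation assumption should be checked against the actual flight plan.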

3.2. Analysis of Aerial Images Based on Faster R-CNN

To obtain training data for Faster R-CNN, drones were used in this study in areas where asbestos slates are widely distributed. As shown in Figure 6 below, data for 475 asbestos slates were collected from five sites using the same drone used in validation.

Figure 6.

Sites for obtaining asbestos slate data.

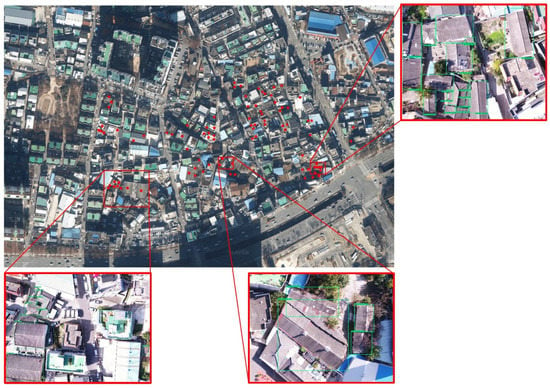

Asbestos slates were detected by applying transfer learning with the 475 collected training samples, starting from a model pre-trained on ImageNet (50,000 data, 20 classes). Twenty validation datasets were utilized, and an NVIDIA GeForce RTX 2080 Ti was used as the graphics processing unit (GPU). Moreover, ResNet101 was used as the backbone model. As a result, a total of 91 asbestos slate roofs were detected in the target sites. Figure 7 shows the distribution and detection of asbestos slates in these sites.

Figure 7.

The distribution and detection of asbestos slates in target sites.

3.3. Estimation of Location of Asbestos Slates and On-Site Verification

Using Agisoft’s PhotoScan Professional program, an orthoimage with RMSE X = 0.100 m, RMSE Y = 0.074 m, and spatial resolution of GSD 1.59 cm/pixel was constructed through point cloud-based 3D modeling. To increase the location accuracy when constructing orthoimages, six ground control points (GCPs) were set using the VRS-RTK equipment. ArcGIS Desktop was used to estimate the locations of detected asbestos slates based on the orthoimages and compiled cadastral map. The results showed that asbestos slates were detected in 91 locations from a total of 45 addresses. The details are shown in the following Table 1.

Table 1.

Location and quantity of asbestos slates detected.
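The reported orthoimage errors in X and Y can be combined into a single horizontal error, which is a convenient way to compare positional accuracy with the pixel size. The sketch below applies the usual root-sum-of-squares combination to the values reported in this section; the function name is hypothetical, and the combined figure is derived here rather than reported by the authors.

```python
import math


def horizontal_rmse(rmse_x_m: float, rmse_y_m: float) -> float:
    """Combine per-axis RMSE values into a horizontal (planimetric) RMSE."""
    return math.hypot(rmse_x_m, rmse_y_m)


RMSE_X_M = 0.100       # reported RMSE in X (m)
RMSE_Y_M = 0.074       # reported RMSE in Y (m)
ORTHO_GSD_CM = 1.59    # reported orthoimage GSD (cm/pixel)

rmse_r_m = horizontal_rmse(RMSE_X_M, RMSE_Y_M)   # about 0.124 m
rmse_r_px = rmse_r_m / (ORTHO_GSD_CM / 100.0)    # about 7.8 orthoimage pixels
```

A horizontal error of roughly 12 cm is small relative to typical parcel dimensions, which is consistent with assigning detections to parcels by overlay.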

An on-site validation was performed from 1 April to 3 April 2021 to analyze the reliability of the asbestos slate detection and location estimation results. Of the 91 detected slates, 90 were confirmed as asbestos slates in the on-site validation, corresponding to an accuracy of 98.9% (90/91). Thus, the possibility of detecting asbestos slates and estimating their locations with high accuracy using drones and Faster R-CNN was verified. It is anticipated that false detections of asbestos slates can be reduced by supplementing the training data and enhancing the algorithms.
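The reported accuracy follows directly from the detection and validation counts. The short sketch below reproduces the arithmetic; the function name is hypothetical. Note that this figure is the fraction of detections confirmed on site, so it does not by itself say how many asbestos slates in the area went undetected.

```python
def confirmed_fraction(confirmed: int, detected: int) -> float:
    """Fraction of detections that were confirmed by on-site validation."""
    if detected <= 0 or not 0 <= confirmed <= detected:
        raise ValueError("require 0 <= confirmed <= detected and detected > 0")
    return confirmed / detected


DETECTED = 91    # asbestos slates detected by Faster R-CNN
CONFIRMED = 90   # detections confirmed in the on-site validation

accuracy_pct = round(100.0 * confirmed_fraction(CONFIRMED, DETECTED), 1)  # 98.9
false_detections = DETECTED - CONFIRMED                                   # 1
```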

4. Discussion

Asbestos is a Group 1 carcinogen, and its harm to the human body is well established. As a result, its use is banned in many countries, and the investigation and removal of installed asbestos is now a very important issue [30]. Because substantial social costs are expected, an efficient investigation method is required [31]. In this study, asbestos slates were detected using drones and deep learning. In addition, an efficient methodology for finding asbestos slates was presented by analyzing their locations with ArcGIS Desktop.

Previously, Lee et al. suggested a methodology to find asbestos slates using a drone, but they analyzed each image manually [8]. Raczko et al. applied deep learning to find asbestos slates [32]. However, their study was limited in finding the exact location of the asbestos slates because it provided only the GPS information of the drone, which has an error of several meters.

This study differs from that of Lee et al. [8] in that the asbestos slates were extracted automatically through deep learning, and from that of Raczko et al. [32] in that the point cloud technique was applied to the drone images to correct the location information and find the exact address of each asbestos slate.

In many countries, rapid urbanization occurred in the 1970s and 1980s, and many asbestos slates were installed. Now, in many places, district-scale redevelopment is in progress. In this process, it is expected that huge costs will be incurred for the investigation and removal of asbestos slates. Accordingly, there is an urgent need for a commercialization-level asbestos slate investigation methodology that can be directly applied in the industrial field. Therefore, this study is expected to contribute to the industry by automatically extracting asbestos and providing accurate address information.

However, in this study, the Faster R-CNN architecture detected asbestos slates in individual aerial still images, whereas the locations of the detected slates were estimated using orthoimages. Therefore, the fusion of these two types of data is necessary. In addition, recent high-accuracy deep learning algorithms such as YOLOv5 have been released. Although our model achieved an accuracy of 98.9%, additional research applying such high-accuracy algorithms is considered necessary. Kim et al. conducted a study on the cost of asbestos slate removal [33]. Combining this study with the research of Kim et al. is expected to contribute to the field of asbestos slate investigation and demolition at large-scale redevelopment sites.

5. Conclusions

Since the use of asbestos has been banned in many countries, asbestos slates must be dismantled and removed due to their hazards. Accordingly, there is a need for an efficient survey method for asbestos slates to replace visual inspection. In this study, we selected areas where the dismantling and removal of asbestos slates were anticipated as the target sites. Then, a drone and Faster R-CNN were used to detect asbestos slates. Furthermore, the locations of detected asbestos slates were estimated using the ArcGIS Desktop program based on orthoimages and compiled cadastral maps.

Drone images were used to construct orthoimages with a location accuracy of root mean square error (RMSE) X = 0.100 m and RMSE Y = 0.074 m, and a spatial resolution of GSD 1.59 cm/pixel. In addition, the asbestos slate training data obtained through aerial photography were used to train Faster R-CNN. As a result, a total of 91 asbestos slates were detected in the target sites, and the 91 detected locations were mapped to a total of 45 addresses. To verify the estimated locations, an on-site survey was conducted. Based on the analysis of the result, the location estimation had an accuracy of 98.9%. Therefore, the process of identifying asbestos slate roofs using drone images and Faster R-CNN presented in this study is considered a useful survey method.

This study presented a methodology that can automatically detect asbestos slates and accurately estimate their locations. It is considered an efficient investigation approach that can change the paradigm of asbestos slate investigation, which currently relies on visual inspection. It is also expected to make a great contribution to the field of asbestos slate investigation and demolition in the process of large-scale urban remodeling.

Author Contributions

Conceptualization, D.-M.S. and S.-C.B.; methodology, D.-M.S. and S.-C.B.; software, I.-H.K., H.-J.W. and M.-S.K.; validation, D.-M.S., H.-J.W. and M.-S.K.; formal analysis, W.-H.H.; investigation, D.-M.S.; resources, S.-C.B.; data curation, H.-J.W.; writing—original draft preparation, D.-M.S.; writing—review and editing, S.-C.B.; visualization, M.-S.K.; supervision, W.-H.H. and S.-C.B.; project administration, S.-C.B.; funding acquisition, S.-C.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2020R1F1A1073244).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jang, H.S.; Lee, T.H.; Kim, J.H. Asbestos Management Plan According to the Investigation on the Actual Conditions of Asbestos in Public Buildings. Korean Soc Env. Admin. 2014, 20, 27–34.

- Baek, S.C.; Kim, Y.C.; Choi, J.H.; Hong, W.H. Determination of the essential activity elements of an asbestos management system in the event of a disaster and their prioritization. J. Clean. Prod. 2016, 137, 414–426.

- Kim, S.Y.; Kim, Y.C.; Kim, Y.K.; Hong, W.H. Predicting the Mortality from Asbestos-Related Diseases Based on the Amount of Asbestos used and the Effects of Slate Buildings in Korea. Sci. Total Environ. 2016, 542, 1–11.

- Castleman, B.I. Controversies at international organizations over asbestos industry influence. Int. J. Health Serv. 2001, 31, 193–202.

- Kim, Y.C.; Hong, W.H. Optimal Management Program for Asbestos Containing Building Materials to be Available in the Event of a Disaster. Waste Manag. 2017, 64, 272–285.

- Choi, J.K.; Paek, D.M.; Paik, N.W. The Production, the use, the Number of Workers and Exposure Level of Asbestos in Korea. J. Korean Soc. Occup. Environ. Hyg. 1998, 8, 242–253.

- Lee, S.Y.; Kang, W. A Study on the Reestablishment of the Drone’s Concept. Korean Secur. Sci. Rev. 2019, 58, 35–58.

- Lee, S.W.; Hong, W.H.; Lee, S.Y. A Study on Slate Roof Research of Decrepit Residential Area by using UAV. J. Archit. Inst. Korea Plan. Des. 2016, 32, 59–66.

- Pouyanfar, S.; Sadiq, S.; Yan, Y.; Tian, H.; Tao, Y.; Reyes, M.P.; Shyu, M.; Chen, S.; Iyengar, S.S. A Survey on Deep Learning: Algorithms, Techniques, and Applications. ACM Comput. Surv. (CSUR) 2018, 51, 1–36.

- Yang, G.R.; Wang, X. Artificial Neural Networks for Neuroscientists: A Primer. Neuron 2020, 107, 1048–1070.

- Nilsback, M.E.; Zisserman, A. A Visual Vocabulary for Flower Classification. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 1447–1454.

- Csillik, O.; Cherbini, J.; Johnson, R.; Lyons, A.; Kelly, M. Identification of Citrus Trees from Unmanned Aerial Vehicle Imagery using Convolutional Neural Networks. Drones 2018, 2, 39.

- Flores, D.; González-Hernández, I.; Lozano, R.; Vazquez-Nicolas, J.M.; Hernandez Toral, J.L. Automated Agave Detection and Counting using a Convolutional Neural Network and Unmanned Aerial Systems. Drones 2021, 5, 4.

- Papakonstantinou, A.; Batsaris, M.; Spondylidis, S.; Topouzelis, K. A Citizen Science Unmanned Aerial System Data Acquisition Protocol and Deep Learning Techniques for the Automatic Detection and Mapping of Marine Litter Concentrations in the Coastal Zone. Drones 2021, 5, 6.

- Ouattara, T.A.; Sokeng, V.-C.J.; Zo-Bi, I.C.; Kouamé, K.F.; Grinand, C.; Vaudry, R. Detection of Forest Tree Losses in Côte d’Ivoire Using Drone Aerial Images. Drones 2022, 6, 83.

- Sharma, V.; Mir, R.N. Saliency Guided Faster-RCNN (SGFr-RCNN) Model for Object Detection and Recognition. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 1687–1699.

- Lou, X.; Huang, Y.; Fang, L.; Huang, S.; Gao, H.; Yang, L.; Weng, Y.; Hung, I. Measuring Loblolly Pine Crowns with Drone Imagery through Deep Learning. J. For. Res. 2022, 33, 227–238.

- Alshaibani, W.T.; Helvaci, M.; Shayea, I.; Saad, S.A.; Azizan, A.; Yakub, F. Airplane Type Identification Based on Mask RCNN and Drone Images. arXiv preprint 2021, arXiv:2108.12811.

- Cho, J.M.; Lee, J.S.; Lee, B.K. A Study on the Optimal Shooting Conditions of UAV for 3D Production and Orthophoto Generation. J. Korean Soc. Surv. Geod. Photogramm. Cartogr. 2020, 38, 645–653.

- Yoo, Y.H.; Choi, J.W.; Choi, S.K.; Jung, S.H. Quality Evaluation of Orthoimage and DSM Based on Fixed-Wing UAV Corresponding to Overlap and GCPs. J. Korean Soc. Geospat. Inf. Sci. 2016, 24, 3–9.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Kim, J.M.; Hyeon, S.G.; Chae, J.H.; Do, M.S. Road Crack Detection Based on Object Detection Algorithm using Unmanned Aerial Vehicle Image. J. Korea Inst. Intell. Transp. Syst. 2019, 18, 155–163.

- Huyan, J.; Li, W.; Tighe, S.; Zhai, J.; Xu, Z.; Chen, Y. Detection of Sealed and Unsealed Cracks with Complex Backgrounds using Deep Convolutional Neural Network. Autom. Constr. 2019, 107, 102946.

- Lee, Y.J. Multispectral Orthoimage Production and Accuracy Evaluation using Drone. Master’s Thesis, Chungnam National University, Daejeon, Korea, 2020. Available online: http://www.riss.kr/link?id=T15513259 (accessed on 22 May 2022).

- Han, B.Y. Deep Learning: Its Challenges and Future Directions. Commun. Korean Inst. Inf. Sci. Eng. 2019, 37, 37–45.

- Hung, G.L.; Sahimi, M.S.B.; Samma, H.; Almohamad, T.A.; Lahasan, B. Faster R-CNN Deep Learning Model for Pedestrian Detection from Drone Images. SN Comput. Sci. 2020, 1, 116.

- Lee, K.H.; Hwang, M.G.; Sung, W.K. Research Trends in Data Management Technology for Deep Learning. Korea Inf. Sci. Soc. Rev. 2019, 37, 13–20.

- Woo, H.J.; Baek, S.C.; Hong, W.H.; Chung, M.S.; Kim, H.D.; Hwang, J.H. Evaluating Ortho-Photo Production Potentials Based on UAV Real-Time Geo-Referencing Points. Spat. Inf. Res. 2018, 26, 639–646.

- Liao, Y.; Mohammadi, M.E.; Wood, R.L. Deep Learning Classification of 2D Orthomosaic Images and 3D Point Clouds for Post-Event Structural Damage Assessment. Drones 2020, 4, 24.

- Zhang, Y.L.; Byeon, H.S.; Hong, W.H.; Cha, G.W.; Lee, Y.H.; Kim, Y.C. Risk assessment of asbestos containing materials in a deteriorated dwelling area using four different methods. J. Hazard. Mater. 2021, 410, 124645.

- Hong, Y.K.; Kim, Y.C.; Hong, W.H. Development of Estimation Equations for Disposal Costs of Asbestos Containing Materials by Listing the Whole Process to Dismantle and Demolish them. J. Archit. Inst. Korea Plan. Des. 2014, 30, 235–242.

- Raczko, E.; Krówczyńska, M.; Wilk, E. Asbestos roofing recognition by use of convolutional neural networks and high-resolution aerial imagery. Build. Environ. 2022, 217, 109092.

- Kim, Y.C.; Son, B.H.; Park, W.M.; Hong, W.H. A study on the distribution of the asbestos cement slates and calculation of disposal cost in the rural area. Archit. Res. 2011, 13, 31–40.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).