Mission Chain Driven Unmanned Aerial Vehicle Swarms Cooperation for the Search and Rescue of Outdoor Injured Human Targets

Abstract

1. Introduction

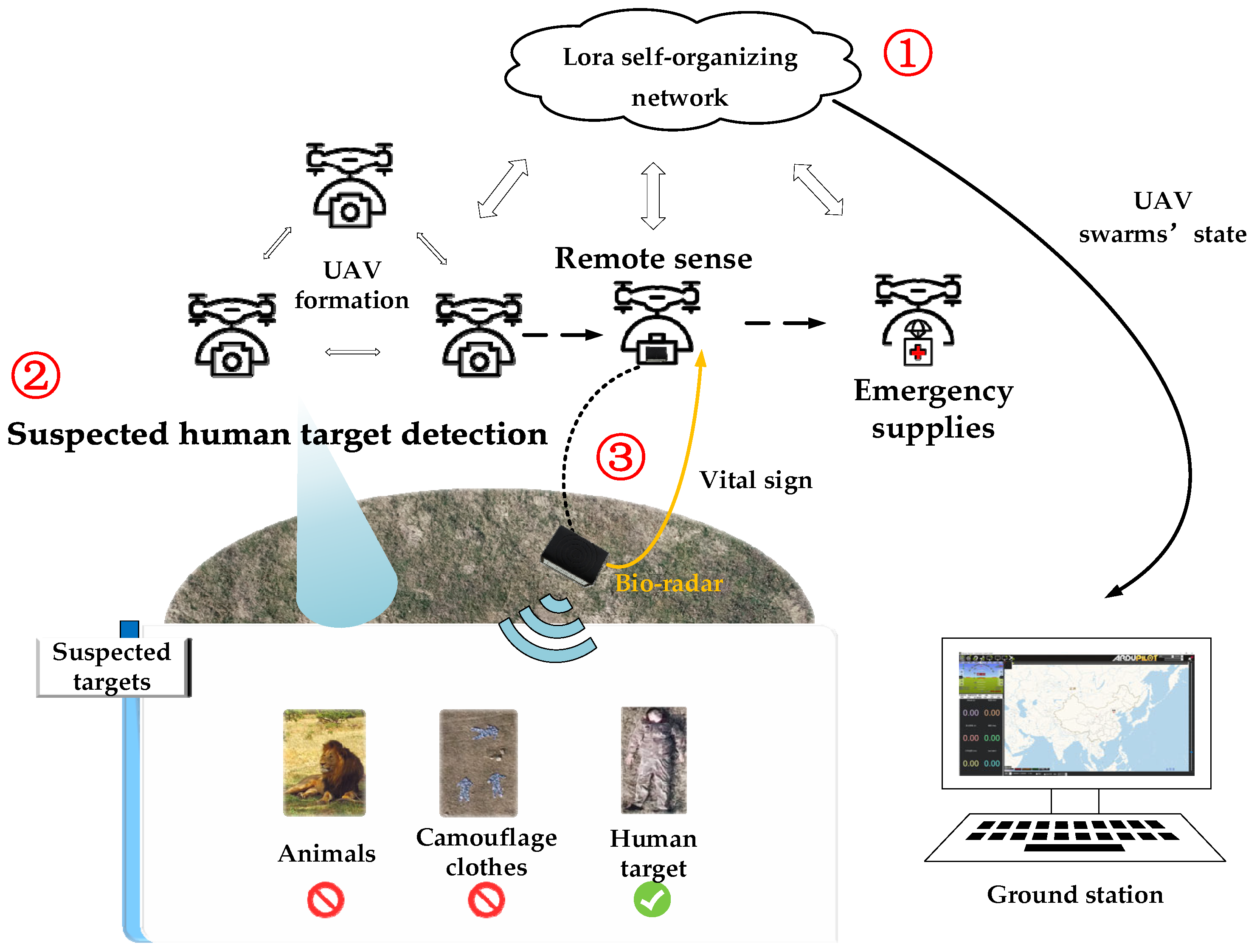

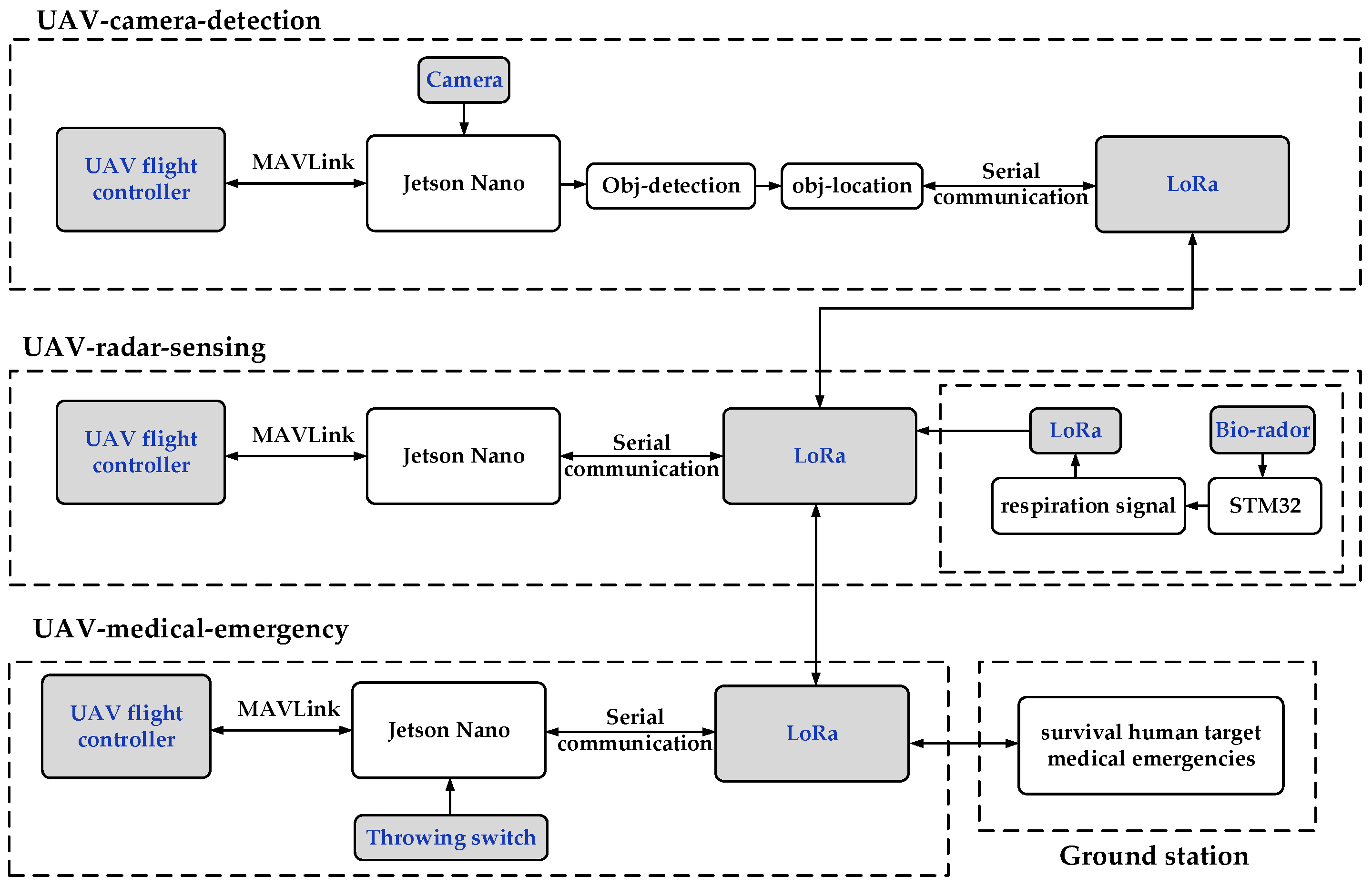

2. System Design

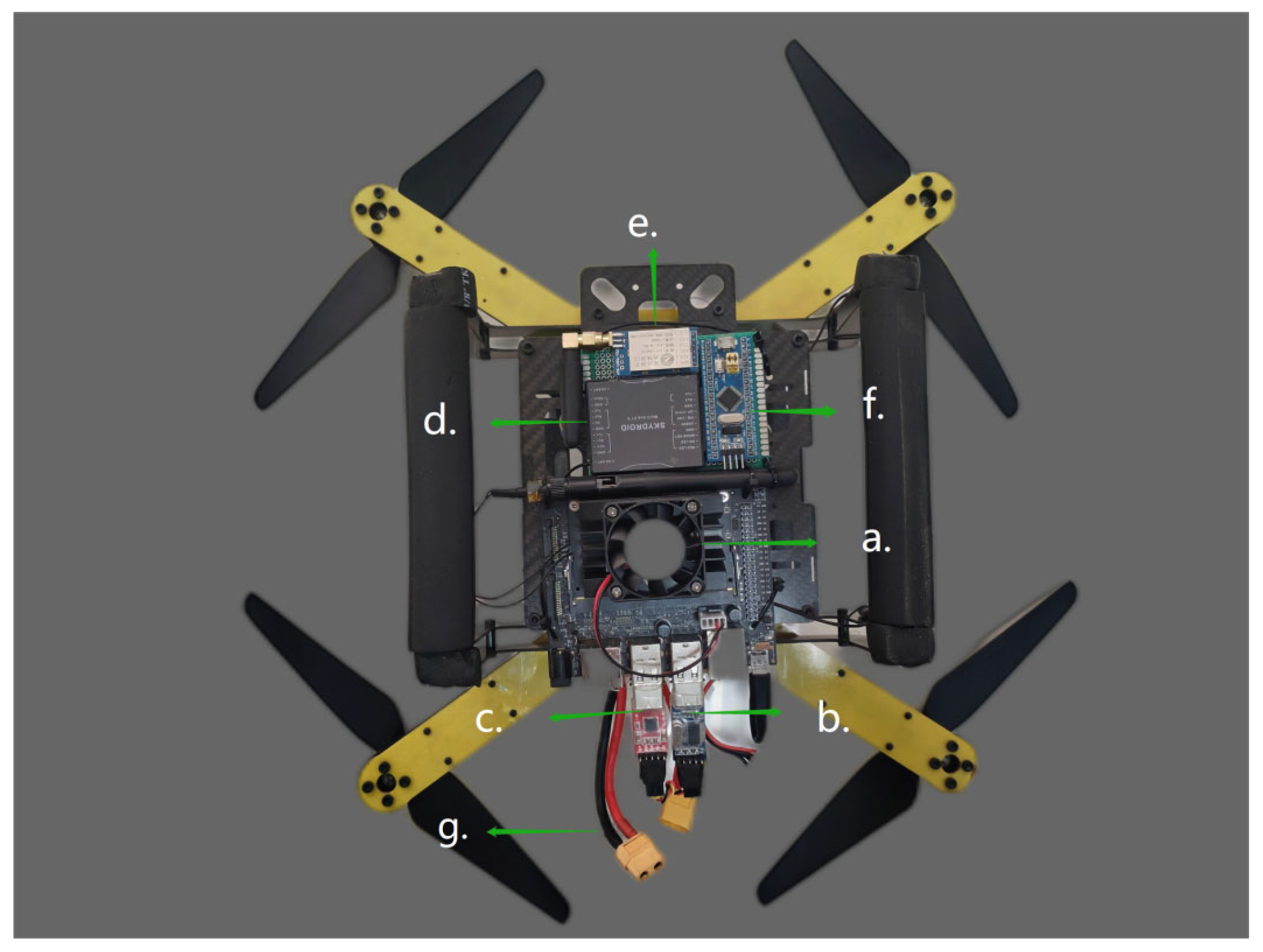

2.1. Main Hardware of the System

2.1.1. UAV Swarms

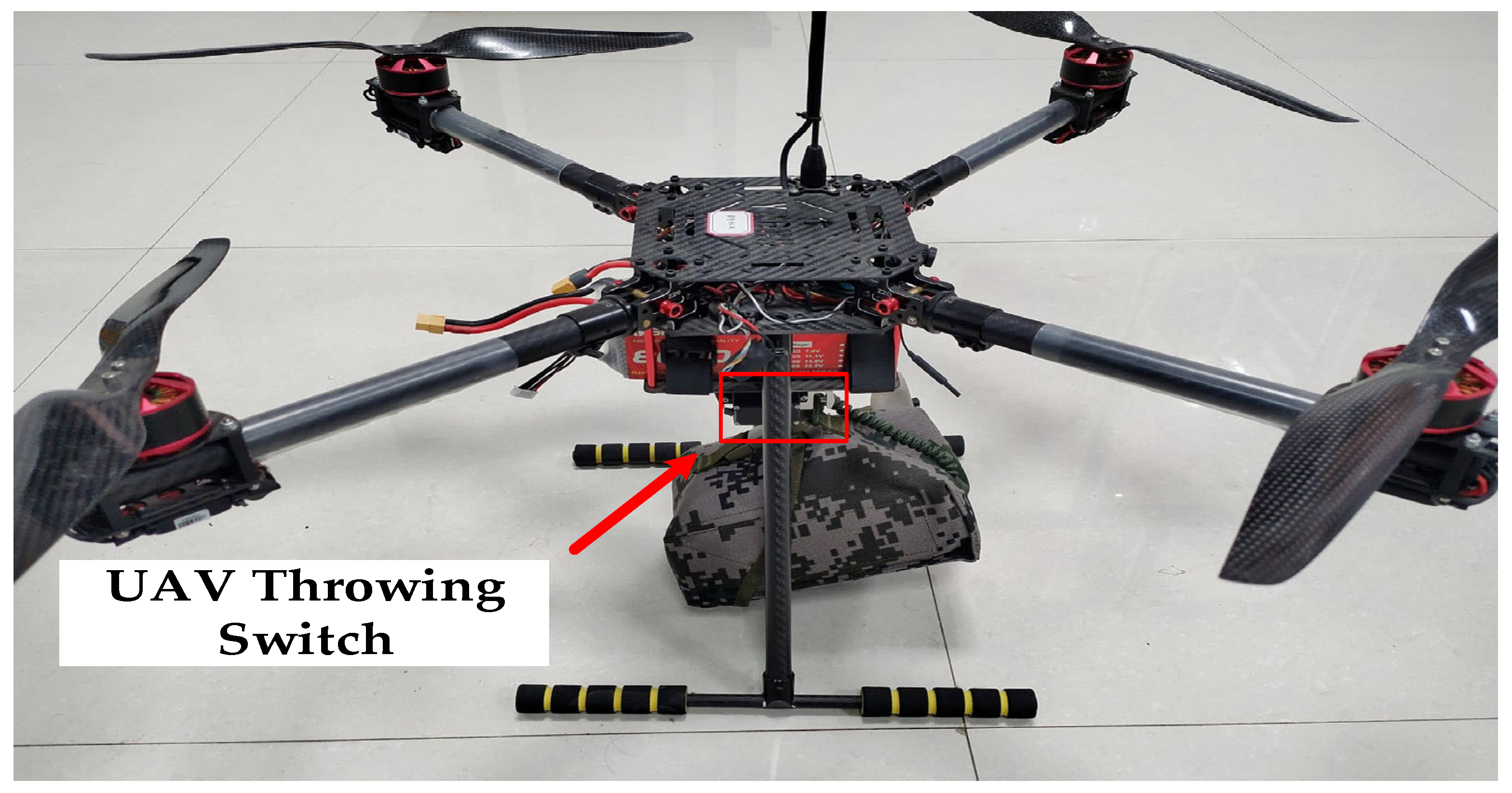

2.1.2. Bio-Radar Module and First Aid Kit

2.1.3. Control and Information Processing Center

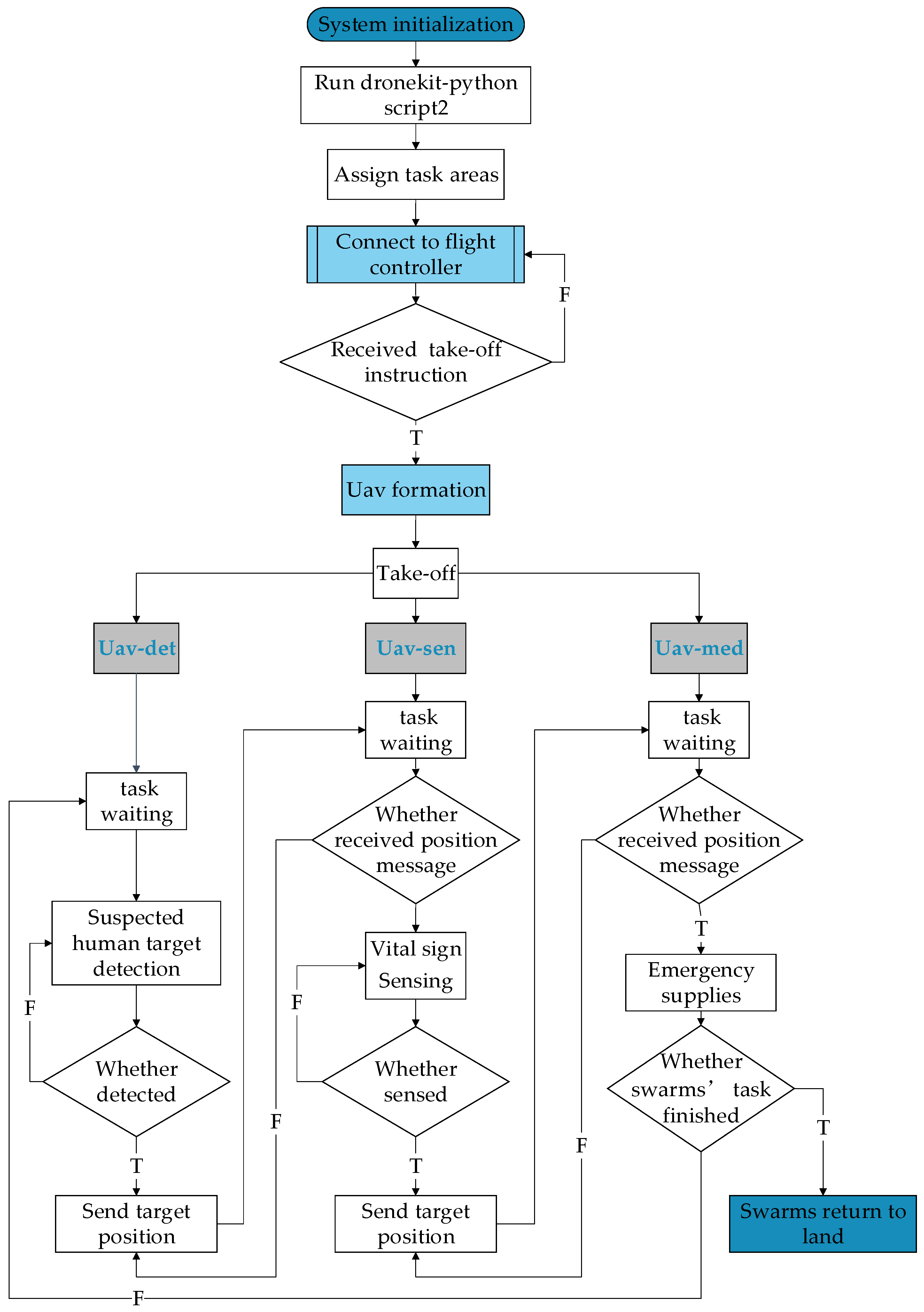

2.2. Mission Chain Driven UAV Swarms Cooperation Algorithm

- (1) UAV-camera-based suspected human target detection. After the UAVs receive the take-off command, they automatically fly in formation to the mission point and search the area in a “zigzag” pattern.

- (2) UAV-radar-based human target reconfirmation. We wrote a Python script that records the location of the UAV at the moment a target is detected and shares the location of the injured person with the sensing UAV over the self-organizing network. The sensing UAV then flies autonomously to the injured person and drops a sensing module nearby to acquire the target's breathing signal.

- (3) Medical emergencies through the emergency UAV. Once the target is confirmed to be alive, the emergency UAV delivers the support needed to keep the injured person alive.
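The “zigzag” search in step (1) can be illustrated with a short waypoint generator. This is only a minimal sketch under stated assumptions: the search area is taken to be an axis-aligned rectangle in local metric coordinates, and the function name `zigzag_waypoints` and the `lane_spacing` parameter are hypothetical, not taken from the authors' flight software.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float]  # (x, y) in local metres; an assumed convention


def zigzag_waypoints(x_min: float, y_min: float,
                     x_max: float, y_max: float,
                     lane_spacing: float) -> List[Waypoint]:
    """Return waypoints that sweep a rectangle in back-and-forth lanes."""
    if lane_spacing <= 0:
        raise ValueError("lane_spacing must be positive")
    if x_max < x_min or y_max < y_min:
        raise ValueError("rectangle bounds are inverted")

    waypoints: List[Waypoint] = []
    y = y_min
    left_to_right = True
    while True:
        # Fly one full lane, alternating direction on each pass.
        start_x, end_x = (x_min, x_max) if left_to_right else (x_max, x_min)
        waypoints.append((start_x, y))
        waypoints.append((end_x, y))
        if y >= y_max:
            break
        # Step to the next lane, clamping the last one to the far edge.
        y = min(y + lane_spacing, y_max)
        left_to_right = not left_to_right
    return waypoints


if __name__ == "__main__":
    for wp in zigzag_waypoints(0, 0, 10, 4, 2):
        print(wp)
```

In practice the lane spacing would be chosen from the camera footprint at the flight altitude, so that adjacent lanes overlap slightly.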

2.2.1. UAV-Camera-Based Suspected Human Target Detection

- (1) YOLOv4-Tiny

- (2) K-means clustering methods

- (3) TensorRT acceleration
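The list above names K-means clustering without further detail. With YOLO-family detectors, K-means is commonly used to fit anchor box sizes to the labelled boxes in the training set, and the sketch below assumes that usage. The function names, the `1 - IoU` style assignment, and the deterministic initialisation are illustrative choices, not the authors' implementation.

```python
from typing import List, Tuple

Box = Tuple[float, float]  # (width, height) of a labelled bounding box


def iou_wh(a: Box, b: Box) -> float:
    """IoU of two boxes aligned at a shared corner, so only size matters."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def kmeans_anchors(boxes: List[Box], k: int, iterations: int = 100) -> List[Box]:
    """Cluster box sizes into k anchors, assigning each box by highest IoU."""
    if not 0 < k <= len(boxes):
        raise ValueError("k must be between 1 and the number of boxes")

    # Deterministic start: k boxes evenly spaced through the area-sorted list.
    ordered = sorted(boxes, key=lambda b: b[0] * b[1])
    step = len(ordered) / k
    centroids = [ordered[int(i * step + step / 2)] for i in range(k)]

    for _ in range(iterations):
        clusters: List[List[Box]] = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda i: iou_wh(box, centroids[i]))
            clusters[best].append(box)

        new_centroids: List[Box] = []
        for i, members in enumerate(clusters):
            if members:
                mean_w = sum(m[0] for m in members) / len(members)
                mean_h = sum(m[1] for m in members) / len(members)
                new_centroids.append((mean_w, mean_h))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centre

        if new_centroids == centroids:
            break  # assignments have stabilised
        centroids = new_centroids

    return sorted(centroids, key=lambda b: b[0] * b[1])


if __name__ == "__main__":
    sample = [(10, 20), (12, 22), (11, 21), (100, 200), (110, 190), (105, 195)]
    print(kmeans_anchors(sample, k=2))
```

Using IoU rather than Euclidean distance keeps large boxes from dominating the clustering, which matters when aerial images contain mostly small human targets.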

2.2.2. UAV-Radar-Based Human Target Reconfirmation
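As a rough illustration of how a breathing rate might be read from the sensing module's output, the sketch below scans a breathing band for the strongest spectral component. It assumes the radar signal is already available as evenly sampled values; the function name, the 0.1 to 0.6 Hz band, and the frequency step are assumptions made for this example and do not describe the authors' signal processing.

```python
import math
from typing import List


def respiration_rate_bpm(samples: List[float], sample_rate_hz: float,
                         f_min: float = 0.1, f_max: float = 0.6,
                         f_step: float = 0.01) -> float:
    """Return the dominant frequency in the assumed breathing band, in breaths/min."""
    if not samples:
        raise ValueError("samples must not be empty")

    # Remove the constant offset so it does not leak into low frequencies.
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]

    best_freq, best_power = f_min, -1.0
    steps = int(round((f_max - f_min) / f_step))
    for i in range(steps + 1):
        freq = f_min + i * f_step
        omega = 2.0 * math.pi * freq / sample_rate_hz
        # Direct Fourier sum at this single candidate frequency.
        re = sum(x * math.cos(omega * n) for n, x in enumerate(centered))
        im = sum(x * math.sin(omega * n) for n, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0


if __name__ == "__main__":
    fs = 20.0  # assumed sampling rate in Hz
    # Synthetic test signal: 0.25 Hz breathing plus a weaker 1.2 Hz component.
    signal = [2.0 + math.sin(2 * math.pi * 0.25 * n / fs)
              + 0.1 * math.sin(2 * math.pi * 1.2 * n / fs)
              for n in range(int(40 * fs))]
    print(round(respiration_rate_bpm(signal, fs), 1))
```

A reconfirmation rule could then treat a clear peak inside the band as evidence of a living target, although the threshold for "clear" would need to be set from field data.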

3. Experiments and Discussion

- (1) Multi-UAV cooperation: We mainly tested the communication range of the ad hoc network and the task coordination among UAVs with different functions.

- (2) Human target detection: The YOLOv4-Tiny algorithm was tested for both detection accuracy and detection speed.

- (3) Human target reconfirmation: The accuracy with which the sensing device acquires the respiration signal of human targets was tested and analysed.

- (4) Medical emergencies through the emergency UAV

3.1. Multi-UAV Cooperation

3.2. Human Target Detection

3.2.1. Experimental Design and Configuration

3.2.2. Results and Analysis

3.3. Human Target Reconfirmation

3.4. Medical Emergencies through the Emergency UAV

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Definition | Acronym |
|---|---|
| Unmanned Aerial Vehicle | UAV |
| Deep Learning | DL |
| Convolutional Neural Networks | CNN |
| You Only Look Once | YOLO |
| Region-CNN | RCNN |
| Long-range Radio | LoRa |
| Graphics Processing Unit | GPU |
| Leaky Rectified Linear Unit | LeakyReLU |
| Feature Pyramid Network | FPN |
| Single Shot MultiBox Detector | SSD |
| Mean average precision | mAP |
| Frames per second | FPS |

| Parameter | Configuration |
|---|---|
| CPU | Intel i7-1180H |
| GPU | Nvidia RTX 3050 |
| System | Windows 10 / Ubuntu 20.04 |
| Acceleration environment | CUDA 11.4, cuDNN 8.2 |
| Training framework | Darknet |

| Model | mAP50 | FPS |
|---|---|---|
| YOLOv4-Tiny | 67.38% | 14 |
| YOLOv4-Tiny after clustering | 91.07% | 14 |
| YOLOv4-Tiny after clustering and TensorRT acceleration | 87.21% | 38 |
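The trade-off in the table can be made explicit with a small calculation. The snippet below only restates the reported mAP50 and FPS values and derives the differences between variants; the dictionary keys and the `compare` helper are labels chosen for this example.

```python
from typing import Dict, NamedTuple, Tuple


class Result(NamedTuple):
    map50: float  # mAP50 in percent, as reported in the table
    fps: float    # frames per second, as reported in the table


RESULTS: Dict[str, Result] = {
    "baseline": Result(67.38, 14),            # YOLOv4-Tiny
    "clustered": Result(91.07, 14),           # after K-means clustering
    "clustered_tensorrt": Result(87.21, 38),  # clustering plus TensorRT
}


def compare(baseline: Result, candidate: Result) -> Tuple[float, float]:
    """Return (mAP50 change in percentage points, FPS speed-up factor)."""
    return candidate.map50 - baseline.map50, candidate.fps / baseline.fps


if __name__ == "__main__":
    pairs = [("baseline", "clustered"), ("clustered", "clustered_tensorrt")]
    for old, new in pairs:
        delta, speedup = compare(RESULTS[old], RESULTS[new])
        print(f"{old} -> {new}: {delta:+.2f} points mAP50, {speedup:.2f}x FPS")
```

Read this way, clustering raises mAP50 by 23.69 points at unchanged speed, while TensorRT acceleration gives roughly a 2.7-fold increase in frame rate at a cost of 3.86 points of mAP50.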

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cao, Y.; Qi, F.; Jing, Y.; Zhu, M.; Lei, T.; Li, Z.; Xia, J.; Wang, J.; Lu, G. Mission Chain Driven Unmanned Aerial Vehicle Swarms Cooperation for the Search and Rescue of Outdoor Injured Human Targets. Drones 2022, 6, 138. https://doi.org/10.3390/drones6060138