A Synthetic Review of UAS-Based Facility Condition Monitoring

Abstract

1. Introduction

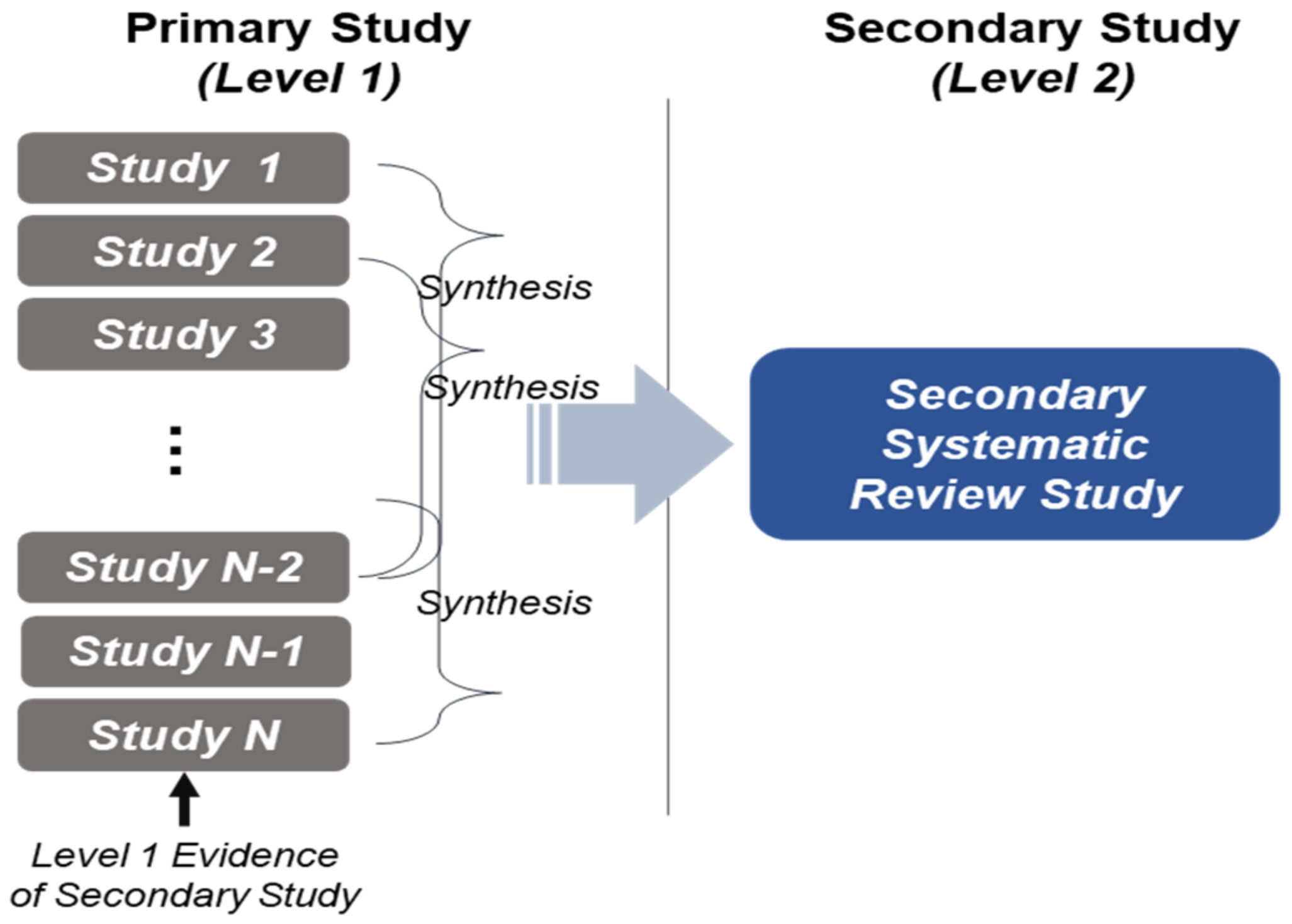

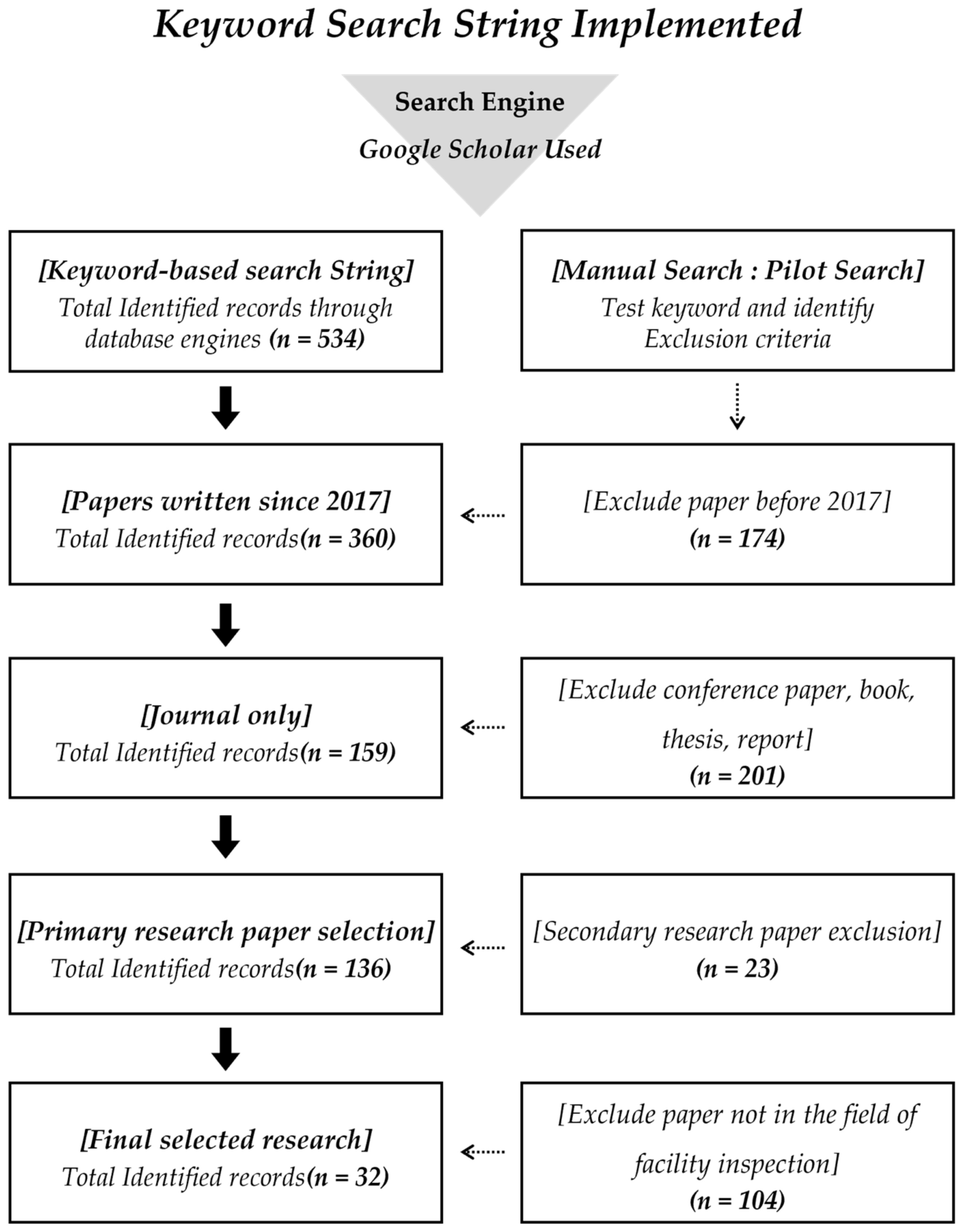

2. Facility Condition Monitoring

3. Research Methodology

4. Synthesis of Research in UAS-Based Facility Monitoring

4.1. Synthesis of Contributions of Current UAS-Based Facility Monitoring Studies

4.2. Synthesis of Limitations of Current UAS-Based Facility Monitoring Studies

4.3. Consolidation of Further Research Directions

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Lee, J.H. Need to Prepare in Advance for the Environment that has changed since COVID-19. In Construction Trend Briefing; CERIK: Seoul, Republic of Korea, 2020; Volume 759, p. 8.
2. Rajat, A.; Shankar, C.; Mukund, S. Imagining Construction’s Digital Future; McKinsey & Company: Tokyo, Japan, 2016.
3. Jonas, B.; Jose, L.B.; Jan, M.; Maria, J.R.; David, R.; Erik, J.; Gernot, S. How Construction Can Emerge Stronger after Coronavirus; McKinsey & Company: Tokyo, Japan, 2020.
4. Siyuan, C.; Debra, F.L.; Eleni, M.; SM Iman, Z.; Jonathan, B. UAV Bridge Inspection through Evaluated 3D Reconstructions. J. Bridge Eng. 2019, 24, 05019001.
5. Jang, J.H. BIM Based Infrastructure Maintenance. KSCE Mag. 2020, 68, 38–47.
6. Hanita, Y.; Mustaffa, A.A.; Aadam, M.T.A. Historical Building Inspection using the Unmanned Aerial Vehicle (UAV). IJSCET 2020, 11, 12–20.
7. Donghai, L.; Xietian, X.; Junjie, C.; Shuai, L. Integrating Building Information Model and Augmented Reality for Drone-based Building Inspection. J. Comput. Civ. Eng. 2021, 35, 04020073.
8. Kim, H.Y.; Choi, K.A.; Lee, I.P. Drone image-based facility inspection-focusing on automatic process using reference images. J. Korean Soc. Geospat. Inf. Sci. 2018, 26, 21–32.
9. Flyability. Available online: https://www.flyability.com/casestudies/indoor-drones-in-bridge-inspection-between-beams-and-inside-box-girder (accessed on 24 October 2022).
10. DRONEID. Available online: http://www.droneid.co.kr./usecase/01_view.php?type=&num=13 (accessed on 24 October 2022).
11. Lee, K.W.; Park, J.K. Modeling and Management of Bridge Structures Using Unmanned Aerial Vehicle in Korea. Sens. Mater. 2019, 31, 3765–3772.
12. Chen, K.; Reichard, G.; Akanmu, A.; Xu, X. Geo-registering UAV-captured close-range images to GIS-based spatial model for building facade inspections. Autom. Constr. 2021, 122, 103503.
13. Jeong, D.M.; Lee, J.H.; Lee, D.H.; Ju, Y.K. Rapid Structural Safety Evaluation Method of Buildings using Unmanned Aerial Vehicle (SMART SKY EYE). J. Archit. Inst. Korea Struct. Constr. 2019, 35, 3–11.
14. Kim, S.J.; Yu, G.; Javier, I. Framework for UAS-Integrated Airport Runway Design Code Compliance Using Incremental Mosaic Imagery. J. Comput. Civ. Eng. 2021, 35, 04020070.
15. Besada, J.A.; Bergesio, L.; Campana, I.; Vaquero-Melchor, D.; Lopez-Araquistain, J.; Bernardos, A.M.; Casar, J.R. Drone Mission Definition and Implementation for Automated Infrastructure Inspection using Airborne Sensors. Sensors 2018, 18, 1170.
16. Aliyari, M.; Ashrafi, B.; Ayele, Y.Z. Hazards identification and risk assessment for UAV-assisted bridge inspection. Struct. Infrastruct. Eng. 2021, 18, 412–428.
17. Han, D.Y.; Park, J.B.; Huh, J.W. Orientation Analysis between UAV Video and Photos for 3D Measurement of Bridges. J. Korean Soc. Surv. Geod. Photogramm. Cart. 2018, 36, 451–456.
18. Ruiz, R.D.B.; Junior, A.C.L.; Rocha, J.H.A. Inspection of facades with Unmanned Aerial Vehicles (UAV): An exploratory study. Rev. ALCONPAT 2021, 11, 88–104.
19. Akbar, M.A.; Qidwai, U.; Jahanshahi, M.R. An evaluation of image-based structural health monitoring using integrated unmanned aerial vehicle platform. Struct. Control Health Monit. 2019, 26, e2276.
20. Mattar, R.A.; Kalai, R. Development of a Wall-Sticking Drone for Non-Destructive Ultrasonic and Corrosion Testing. Drones 2018, 2, 8.
21. Peng, X.; Zhong, X.; Zhao, C.; Chen, A.; Zhang, T. A UAV-based machine vision method for bridge crack recognition and width quantification through hybrid feature learning. Constr. Build. Mater. 2021, 299, 123896.
22. Grosso, R.; Mecca, U.; Moglia, G.; Prizzon, F.; Rebaudengo, M. Collecting Built Environment Information Using UAVs: Time and Applicability in Building Inspection Activities. Sustainability 2020, 12, 4731.
23. Aliyari, M.; Ashrafi, B.; Ayele, A.Z. Drone-based bridge inspection in harsh operating environment: Risks and safeguards. Int. J. Transp. Dev. Integr. 2021, 5, 118–135.
24. Sauti, N.S.; Yusoff, N.M.; Abu Bakar, N.A.; Akbar, Z.A. Visual Inspection in Dilapidation Study of Heritage Structure Using Unmanned Aerial Vehicle (UAV): Case Study AS-Solihin Mosque, Melaka. Politek. Kolej Komuniti J. Life Long Learn. 2018, 2, 26+28–38.
25. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Syst. Rev. 2021, 10, 89.
26. Kung, R.Y.; Pan, N.H.; Wang, C.C.; Lee, P.C. Application of Deep Learning and Unmanned Aerial Vehicle on Building Maintenance. Adv. Civ. Eng. 2021, 2021, 5598690.
27. Ayele, Y.Z.; Aliyari, M.; Griffiths, D.; Droguett, E.L. Automatic Crack Segmentation for UAV-Assisted Bridge Inspection. Energies 2020, 13, 6250.
28. Cho, J.; Shin, H.; Ahn, Y.; Lee, S. Proposal of Regular Safety Inspection Process in the Apartment Housing Using a Drone. Korea Inst. Ecol. Archit. Environ. 2019, 19, 121–127.
29. Freimuth, H.; Konig, M. A Framework for Automated Acquisition and Processing of As-Built Data with Autonomous Unmanned Aerial Vehicles. Sensors 2019, 19, 4513.
30. Lee, K.; Hog, H.; Sael, L.; Kim, H.Y. MultiDefectNet: Multi-Class Defect Detection on Building Facade Based on Deep Convolutional Neural Network. Sustainability 2020, 12, 9785.
31. Yang, L.; Muqing, C.; Shenghai, Y.; Lihua, X. Vision Based Autonomous UAV Plane Estimation And Following for Building Inspection. arXiv 2021, arXiv:2102.01423.
32. Jeong, H.J.; Lee, S.H.; Chang, H.J. Research on the Safety Inspection Plan of Outdoor Advertising Safety Using Drone. J. Korea Inst. Inf. Electron. Commun. Technol. 2020, 13, 530–538.
33. Kim, Y.G.; Kwon, J.W. The Maintenance and Management Method of Deteriorated Facilities Using 4D map Based on UAV and 3D Point Cloud. J. Korea Inst. Build. Constr. 2019, 19, 239–246.
34. Falorca, J.F.; Miraldes, J.P.N.D.; Lanzinha, J.C.G. New trends in visual inspection of buildings and structures: Study for the use of drones. Open Eng. 2021, 11, 734–743.
35. Bolourian, N.; Hammad, A. LiDAR-equipped UAV path planning considering potential locations of defects for bridge inspection. Autom. Constr. 2020, 117, 103250.
36. Bae, J.H.; Lee, J.H.; Jang, A.; Ju, Y.G. SMART SKY EYE System for structural Safety Assessment Using Drones and Thermal Images. J. Korean Assoc. Spat. Struct. 2019, 19, 4–8.
37. Falorca, J.F.; Lanzinha, J.C.G. Facade inspections with drones-theoretical analysis and exploratory tests. Int. J. Build. Pathol. Adapt. 2020, 39, 235–258.
38. Kaiwen, C.; George, R.; Xin, X. Opportunities for Applying Camera-Equipped Drones towards Performance Inspections of Building Facades. Comput. Civ. Eng. 2021, 2019, 113–120.
39. Seo, J.W.; Duque, L.; Wacker, J.P. Field Application of UAS-Based Bridge Inspection. Transp. Res. Rec. 2018, 2672, 72–81.

| Criteria | Content |
|---|---|
| Inclusion Criteria | Papers drafted in the past 5 years |
| | Written in English |
| | Primary research paper |
| | Papers related to UAS-based facility inspections |
| Exclusion Criteria | Papers drafted prior to the past 5 years |
| | Conference, Book, Thesis, Report |
| | Secondary research paper |
| | Papers not related to facility inspections |
| | Papers on monitoring building energy and heat |
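The inclusion and exclusion criteria above can be read as a sequence of screening checks applied to each candidate record. The following is a minimal sketch of that idea, not the authors' actual screening procedure. The `Record` fields, the `screen` function, and the `earliest_year=2018` cutoff are all hypothetical; the cutoff is an assumed reading of "the past 5 years" for a review published in 2022.

```python
from dataclasses import dataclass

# Hypothetical record structure; the field names are assumptions for illustration.
@dataclass
class Record:
    title: str
    year: int
    language: str
    doc_type: str                    # e.g. "journal", "conference", "book", "thesis", "report"
    is_primary: bool                 # True for primary research, False for secondary
    uas_facility_inspection: bool    # related to UAS-based facility inspection
    energy_or_heat_monitoring: bool  # about monitoring building energy and heat

# Document types listed under the exclusion criteria.
EXCLUDED_TYPES = {"conference", "book", "thesis", "report"}


def screen(rec: Record, earliest_year: int = 2018) -> tuple[bool, str]:
    """Apply the criteria in order and return (included, reason).

    earliest_year is an assumed cutoff for "the past 5 years".
    """
    if rec.year < earliest_year:
        return (False, "drafted prior to the past 5 years")
    if rec.language != "English":
        return (False, "not written in English")
    if rec.doc_type in EXCLUDED_TYPES:
        return (False, "conference, book, thesis, or report")
    if not rec.is_primary:
        return (False, "secondary research paper")
    if not rec.uas_facility_inspection:
        return (False, "not related to facility inspections")
    if rec.energy_or_heat_monitoring:
        return (False, "monitoring building energy and heat")
    return (True, "included")


# Made-up records used only to exercise the checks.
candidates = [
    Record("Example bridge inspection study", 2019, "English", "journal", True, True, False),
    Record("Example thermal audit study", 2020, "English", "journal", True, True, True),
    Record("Example older study", 2015, "English", "journal", True, True, False),
]
decisions = [screen(r) for r in candidates]
```

Returning the reason alongside the decision keeps a record of why each paper was dropped, which is the kind of count a PRISMA-style flow diagram reports at each screening stage.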

| Identifier (Reference) | Type of Facilities: Bridge (BR), Building (BU), Other Facility ** (OT) | Type of Technologies *: Visual Identification (VI), 3D Modeling Technology (3D), Automatic Identification (AI), Unclassified *** (UN) |
|---|---|---|
| J01 (Liu et al., 2021) [7] | √ | √ |
| J02 (Sauti et al., 2018) [24] | √ | √ |
| J03 (Han et al., 2018) [17] | √ | √ |
| J04 (Lee et al., 2019) [11] | √ | √ |
| J05 (Grosso et al., 2020) [22] | √ | √ |
| J06 (Chen et al., 2021) [12] | √ | √ |
| J07 (Kung et al., 2021) [26] | √ | √ |
| J08 (Ayele et al., 2020) [27] | √ | √ |
| J09 (Kim et al., 2018) [8] | √ | √ |
| J10 (Cho et al., 2019) [28] | √ | √ |
| J11 (Freimuth et al., 2019) [29] | √ | √ |
| J12 (Jeong et al., 2019) [13] | √ | √ |
| J13 (Lee et al., 2019) [30] | √ | √ |
| J14 (Kim et al., 2021) [14] | √ | √ |
| J15 (Yang et al., 2021) [31] | √ | √ |
| J16 (Yusof et al., 2020) [6] | √ | √ |
| J17 (Jeong et al., 2020) [32] | √ | √ |
| J18 (Kim et al., 2019) [33] | √ | √ |
| J19 (Falorca et al., 2021) [34] | √ | √ |
| J20 (Akbar et al., 2018) [19] | √ | √ |
| J21 (Bolourian et al., 2020) [35] | √ | √ |
| J22 (Ruiz et al., 2021) [18] | √ | √ |
| J23 (Peng et al., 2021) [21] | √ | √ |
| J24 (Aliyari et al., 2021) [16] | √ | √ |
| J25 (Mattar et al., 2018) [20] | √ | √ |
| J26 (Bae et al., 2019) [36] | √ | √ |
| J27 (Aliyari et al., 2021) [23] | √ | √ |
| J28 (Falorca et al., 2021) [37] | √ | √ |
| J29 (Kaiwen et al., 2019) [38] | √ | √ |
| J30 (Seo et al., 2018) [39] | √ | √ |
| J31 (Siyuan et al., 2019) [4] | √ | √ |
| J32 (Besada et al., 2018) [15] | √ | √ |

| Technology | Current State of Knowledge for Facility Monitoring |
|---|---|
| VI | The studies commonly reported that UAS-based facility condition monitoring can collect image data of points of interest and help identify various defects with high efficiency, safety, and economic benefit. |
| 3D | The studies commonly developed a 3D point cloud-based crack detection and estimation framework for condition monitoring of constructed facilities. One paper proposed a system integrating UAS with BIM-based AR for facility condition monitoring. |
| AI | The selected articles developed frameworks that use deep learning-based object recognition models to detect facility defects. They commonly used existing datasets (e.g., the COCO dataset) for transfer learning and image segmentation. |

| Technology | BR and BU | OT |
|---|---|---|
| VI | Damage to facilities can be detected, but specific damage types such as cracks, efflorescence, and detachment cannot be pinpointed, nor can their severity be assessed. | |
| 3D | The limited accuracy of point cloud data collected through UAS makes it difficult to identify damage to facilities accurately. | |
| | The accuracy of point cloud data collected through UAS is insufficient. Current and previous studies focused on improving the accuracy of 3D modeling data rather than on identifying damage to facilities. | - |
| AI | The deep-learning models can identify only a single type of facility damage, and they require large amounts of data to generalize. | - |
| | The deep-learning models can identify only a single type of facility damage. Because little 3D modeling research has been performed, it is hard to identify where in the facility the damage occurred. | |
| UN | Process development and economic benefits were not examined through case studies with actual UAS flights. | |

| Technology | BR and BU | OT |
|---|---|---|
| VI | Technologies that automatically identify damage to facilities need to be developed. | |
| 3D | Data processing technologies need to be developed to improve data collection and accuracy so that high-quality point clouds can be acquired. Technologies that accurately identify damage to facilities through 3D modeling also need to be developed. | |
| AI | A deep-learning model that can identify several types of facility damage simultaneously needs to be developed, together with a system that can efficiently collect and manage large amounts of data. Technologies that improve the accuracy of the deep-learning model are needed, as are technologies that link detections with 3D modeling to show visually where the damage occurred. | |
| UN | The processes of the developed autonomous flight systems should be validated and verified through case studies. | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jeong, K.; Kwon, J.; Do, S.L.; Lee, D.; Kim, S. A Synthetic Review of UAS-Based Facility Condition Monitoring. Drones 2022, 6, 420. https://doi.org/10.3390/drones6120420
