Deep Complex-Valued Convolutional Neural Network for Drone Recognition Based on RF Fingerprinting †

Abstract

1. Introduction

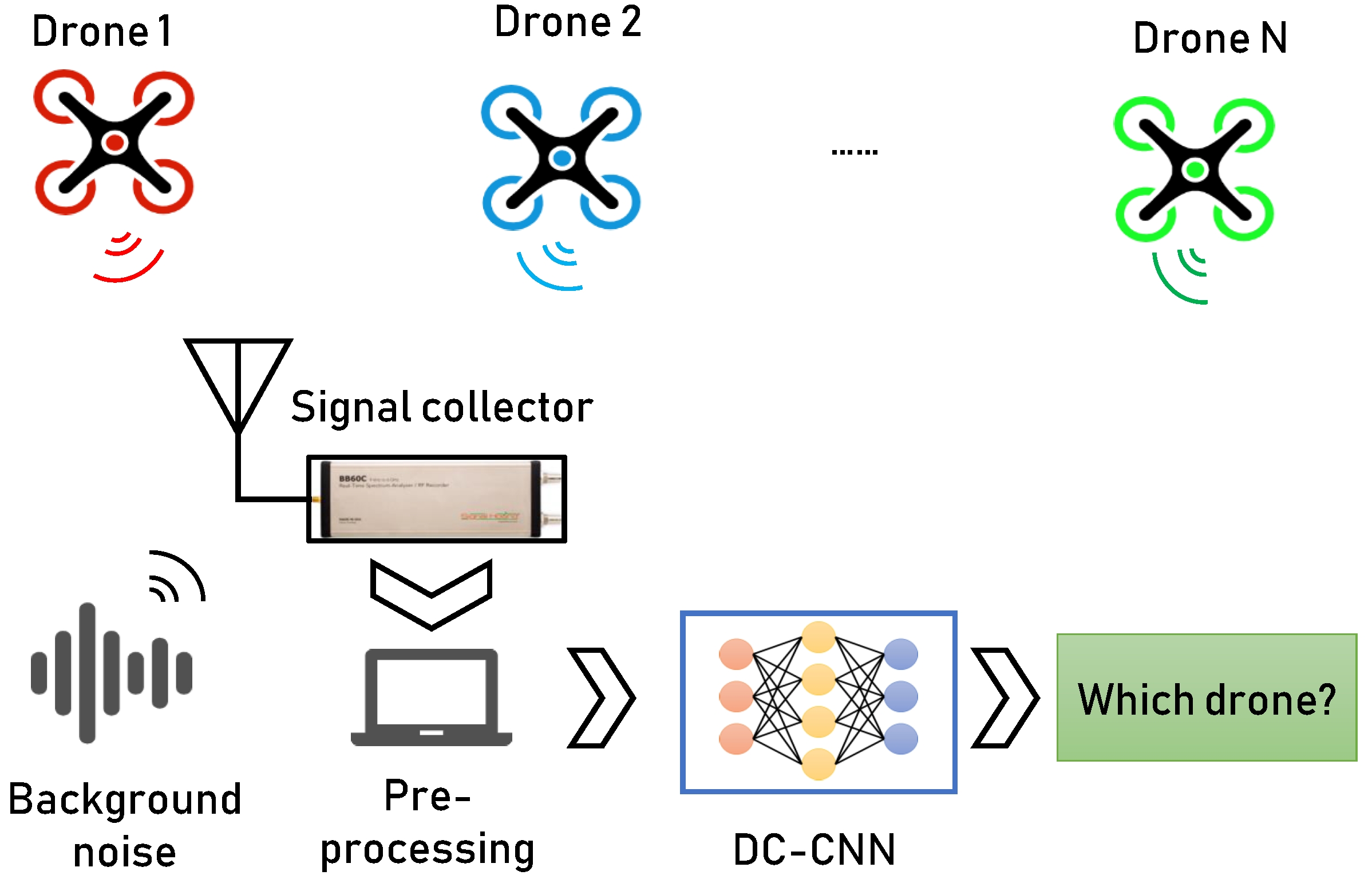

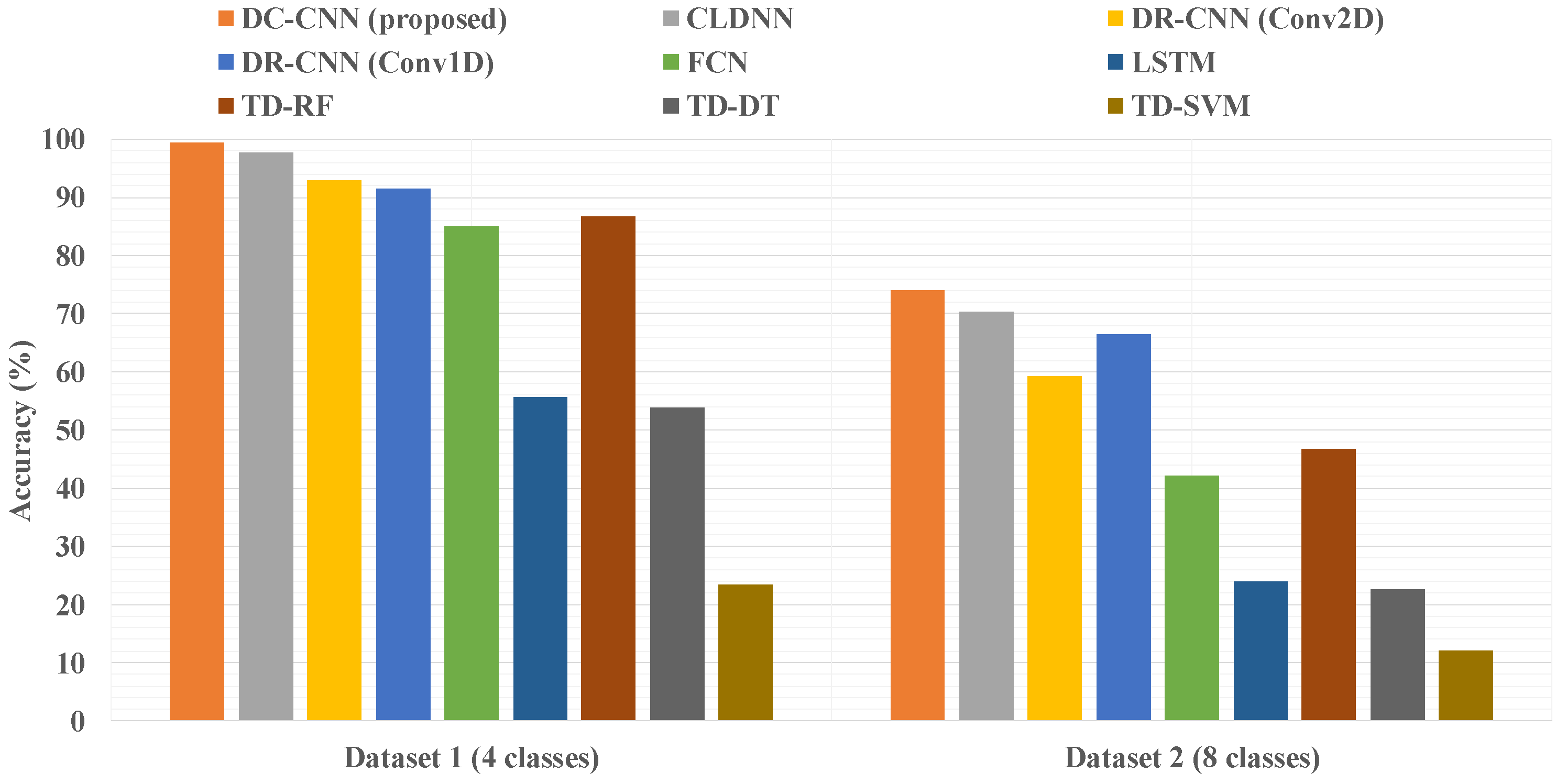

- We propose a drone recognition method based on a deep complex-valued convolutional neural network (DC-CNN) that improves classification performance on two independent drone signal datasets.

- Our study uses a recently published drone database [37], in which drone RF signals (measured under different operating modes) and background activity were captured in a laboratory at Qatar University. The data were recorded with two RF receivers, one capturing the low-frequency and the other the high-frequency part of the drone signal, and the full RF spectrum was obtained by applying a discrete Fourier transform (DFT) to these recordings.

- We compare the classification performance of nine different models. A comprehensive evaluation of each algorithm shows that the proposed DC-CNN model outperforms the other models.
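The spectrum construction described in the second contribution can be sketched as follows. This is a minimal illustration under stated assumptions: `dft_magnitude` and `full_band_spectrum` are hypothetical helper names, the DFT is computed naively in pure Python for readability (a real pipeline would use an FFT), and the two magnitude spectra are simply concatenated, without any segmentation, scaling, or bin selection the dataset authors may have applied.

```python
import cmath
import math


def dft_magnitude(segment):
    """Naive O(N^2) DFT of a real or complex segment; returns |X[k]| for k = 0..N-1."""
    n = len(segment)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(segment)))
        for k in range(n)
    ]


def full_band_spectrum(low_segment, high_segment):
    """Hypothetical helper: concatenate the magnitude spectra of a low-band and a
    high-band receiver segment into one feature vector (assumed layout, no scaling)."""
    return dft_magnitude(low_segment) + dft_magnitude(high_segment)


# Toy signals: a tone at bin 2 in the low band and a constant (DC) level in the high band.
low = [math.cos(2 * math.pi * 2 * t / 8) for t in range(8)]
high = [1.0] * 8
spectrum = full_band_spectrum(low, high)
print([round(v, 3) for v in spectrum])
```

In this toy case the tone appears at bins 2 and 6 (its mirror image for a real signal) with magnitude 4, and the DC level appears at index 8, i.e. bin 0 of the high-band half, with magnitude 8.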

2. Related Works

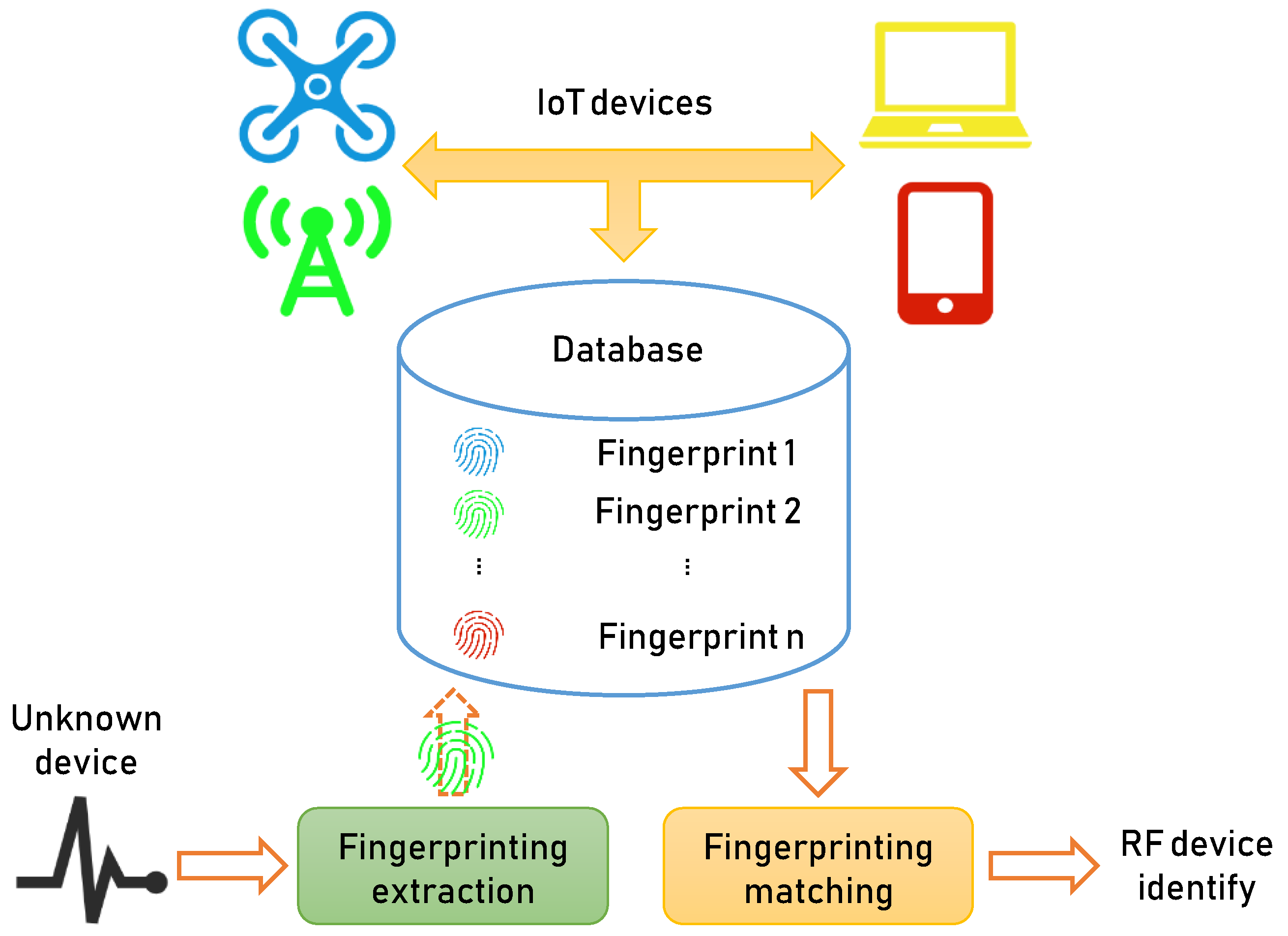

2.1. Traditional Transmitter Device Recognition Methods

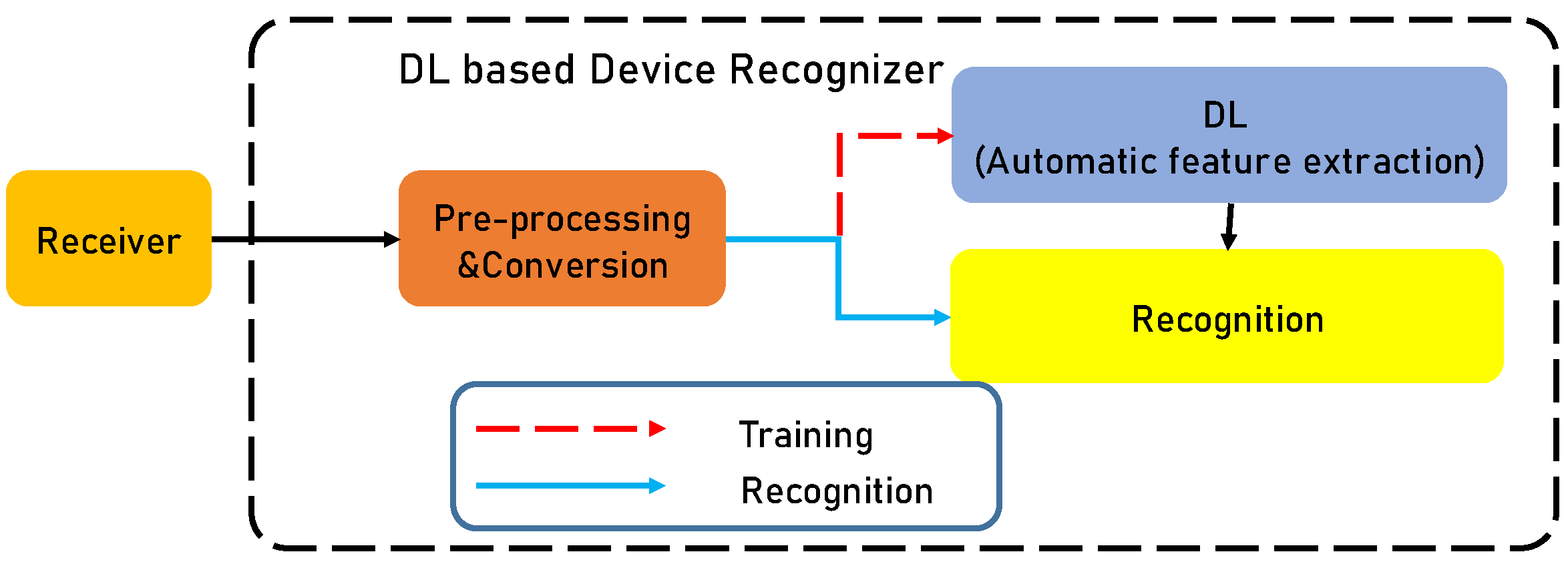

2.2. Automatic Feature Extraction-Based RF Fingerprinting Methods

3. System Design and Complex-Valued Network Theory

3.1. System Design

3.2. Deep Complex-Valued Network

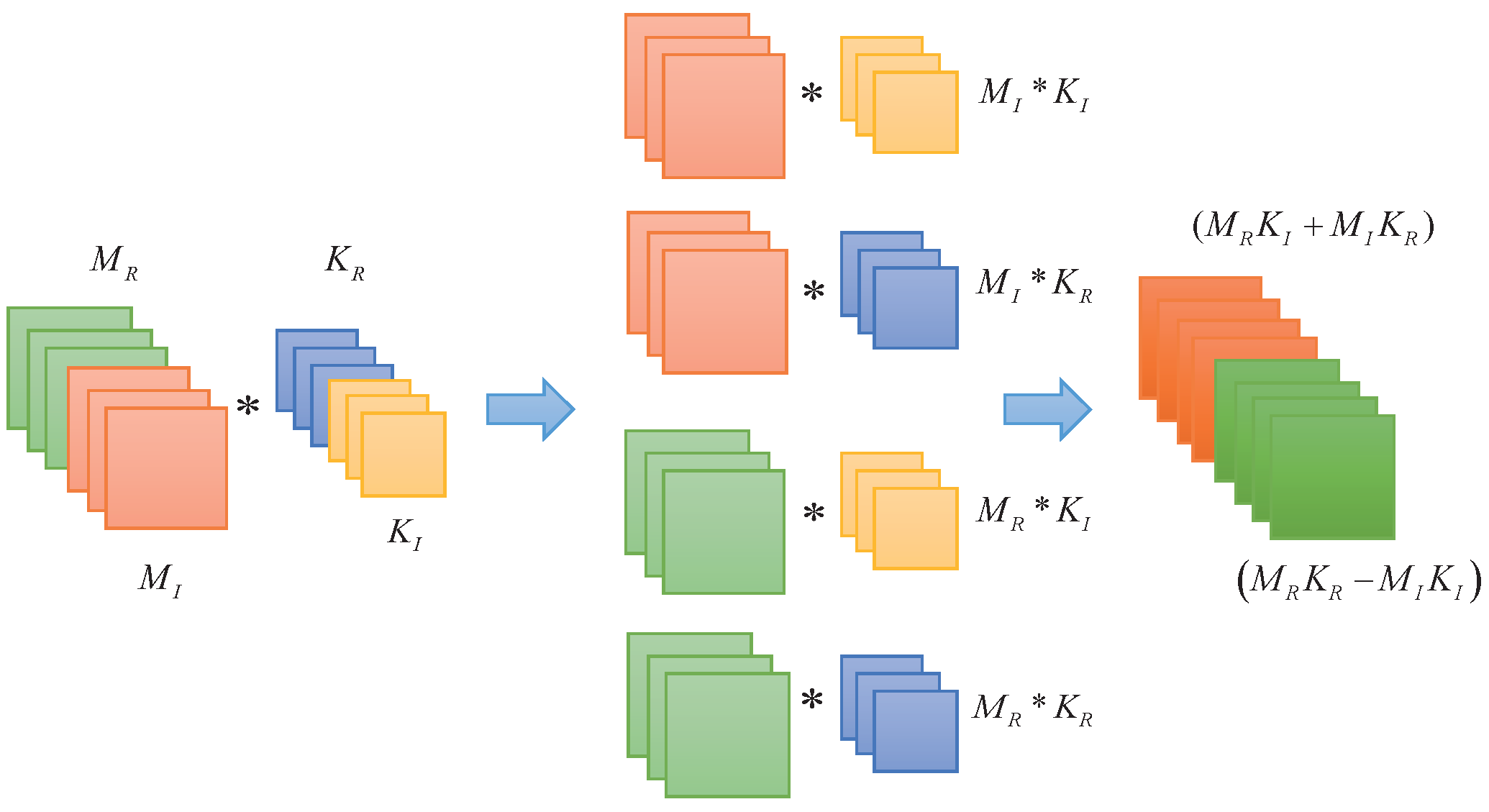

3.2.1. Complex-Valued Convolution Operation
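A complex-valued convolution can be carried out with real-valued arithmetic: for a complex kernel W = A + iB and a complex input h = x + iy, linearity gives W ∗ h = (A ∗ x − B ∗ y) + i(B ∗ x + A ∗ y). The pure-Python sketch below illustrates this standard identity on 1-D signals; the helper names are hypothetical, and it is not the authors' implementation, whose layer configuration is not reproduced here.

```python
def conv1d(x, w):
    """'Valid' 1-D cross-correlation, the operation CNN layers call convolution.
    Works for real sequences and for Python complex sequences."""
    k = len(w)
    return [sum(w[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]


def complex_conv1d(x_re, x_im, w_re, w_im):
    """Complex convolution of h = x + iy with W = A + iB using four real convolutions:
    real part = A*x - B*y, imaginary part = B*x + A*y."""
    ax = conv1d(x_re, w_re)
    by = conv1d(x_im, w_im)
    bx = conv1d(x_re, w_im)
    ay = conv1d(x_im, w_re)
    real = [p - q for p, q in zip(ax, by)]
    imag = [p + q for p, q in zip(bx, ay)]
    return real, imag


# Check the real-valued decomposition against direct complex arithmetic.
x = [1 + 2j, -1 + 0.5j, 3 - 1j, 1j]
w = [0.5 - 1j, 2 + 0.25j]
re, im = complex_conv1d([z.real for z in x], [z.imag for z in x],
                        [z.real for z in w], [z.imag for z in w])
direct = conv1d(x, w)
print(all(abs(d - complex(r, i)) < 1e-12 for d, r, i in zip(direct, re, im)))  # True
```

Splitting the operation this way is what typically allows complex-valued layers to run on frameworks that only provide real-valued convolution kernels.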

3.2.2. Complex-Valued Weight Initialization
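One widely used complex weight initialization, from Trabelsi et al.'s Deep Complex Networks, draws each weight's magnitude from a Rayleigh distribution with scale σ and its phase uniformly from [−π, π]. Because the phase is uniform, E[W] = 0 and Var(W) = E|W|² = 2σ², so σ = 1/√(fan_in + fan_out) meets the Glorot target 2/(fan_in + fan_out) and σ = 1/√fan_in meets the He target 2/fan_in. Whether the DC-CNN uses exactly this scheme is an assumption; the sketch only illustrates it, and `complex_rayleigh_init` is a hypothetical name.

```python
import cmath
import math
import random


def complex_rayleigh_init(fan_in, fan_out, n, criterion="glorot", rng=None):
    """Hypothetical helper: draw n complex weights with Rayleigh(sigma) magnitude
    and uniform phase in [-pi, pi] (Deep Complex Networks style)."""
    rng = rng or random.Random()
    if criterion == "glorot":
        sigma = 1.0 / math.sqrt(fan_in + fan_out)
    elif criterion == "he":
        sigma = 1.0 / math.sqrt(fan_in)
    else:
        raise ValueError("criterion must be 'glorot' or 'he'")
    weights = []
    for _ in range(n):
        # Inverse-CDF sampling of Rayleigh(sigma): |W| = sigma * sqrt(-2 ln(1 - U)), U in [0, 1)
        magnitude = sigma * math.sqrt(-2.0 * math.log(1.0 - rng.random()))
        phase = rng.uniform(-math.pi, math.pi)
        weights.append(cmath.rect(magnitude, phase))
    return weights


w = complex_rayleigh_init(fan_in=64, fan_out=64, n=20000, rng=random.Random(0))
print(sum(abs(z) ** 2 for z in w) / len(w), 2.0 / (64 + 64))  # empirical vs. target variance
```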

3.2.3. Complex-Valued Batch Normalization
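Complex batch normalization, as formulated in Deep Complex Networks, treats the real and imaginary parts as a 2-D vector, centres it, and multiplies it by the inverse square root of its 2 × 2 covariance matrix V, so the output has identity covariance rather than merely unit variance per part. For a 2 × 2 matrix this has a closed form: with s = √det V and t = √(tr V + 2s), V^(−1/2) = (1/(st)) [[V_ii + s, −V_ri], [−V_ri, V_rr + s]]. The sketch below covers only this whitening step; the learnable 2 × 2 scaling γ, the shift β, and running statistics for inference are omitted, the function name is hypothetical, and it is an assumption that the DC-CNN follows this formulation.

```python
import math


def complex_batch_whiten(zs, eps=1e-5):
    """Whitening step of complex batch normalization: centre the batch, then multiply
    each (Re, Im) pair by the inverse square root of the 2x2 covariance matrix."""
    n = len(zs)
    mean = sum(zs) / n
    centred = [z - mean for z in zs]
    v_rr = sum(z.real * z.real for z in centred) / n + eps
    v_ii = sum(z.imag * z.imag for z in centred) / n + eps
    v_ri = sum(z.real * z.imag for z in centred) / n
    # Closed-form inverse square root of [[v_rr, v_ri], [v_ri, v_ii]]
    s = math.sqrt(v_rr * v_ii - v_ri * v_ri)  # sqrt(det V)
    t = math.sqrt(v_rr + v_ii + 2.0 * s)      # sqrt(trace V + 2s)
    inv_st = 1.0 / (s * t)
    w_rr, w_ii, w_ri = (v_ii + s) * inv_st, (v_rr + s) * inv_st, -v_ri * inv_st
    return [complex(w_rr * z.real + w_ri * z.imag, w_ri * z.real + w_ii * z.imag)
            for z in centred]


# Strongly correlated real and imaginary parts before whitening.
batch = [complex(3 * math.sin(k) + 1, 2 * math.sin(k) + 0.5 * math.cos(3 * k) - 2)
         for k in range(1000)]
out = complex_batch_whiten(batch)
```

Whitening jointly, instead of standardizing the real and imaginary parts independently, also removes the correlation between them, which per-part normalization would leave in place.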

3.2.4. Complex-Valued Activation Function
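Common complex-valued activations in the literature include CReLU (ReLU applied to the real and imaginary parts separately), modReLU (thresholds the magnitude with a learnable bias b while keeping the phase), and zReLU (passes z only when its phase lies in the first quadrant). Which of these, if any, the DC-CNN adopts is an assumption left open here; the sketch simply shows each definition on scalar complex values.

```python
import cmath
import math


def crelu(z):
    """CReLU: ReLU applied separately to the real and imaginary parts."""
    return complex(max(z.real, 0.0), max(z.imag, 0.0))


def mod_relu(z, b):
    """modReLU: ReLU(|z| + b) * z / |z|, preserving the phase of z (b is a learnable bias)."""
    r = abs(z)
    if r == 0.0 or r + b <= 0.0:
        return 0j
    return (r + b) * z / r


def z_relu(z):
    """zReLU: pass z unchanged only if its phase lies in [0, pi/2]."""
    return z if 0.0 <= cmath.phase(z) <= math.pi / 2 else 0j


print(crelu(complex(-1, 2)), mod_relu(3 + 4j, -1.0), z_relu(-1 + 1j))
```

CReLU is a frequent default because it reduces to two ordinary ReLUs, whereas modReLU and zReLU act on the magnitude or phase directly.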

4. Algorithm Model and Implementation

4.1. Architecture of CLDNN

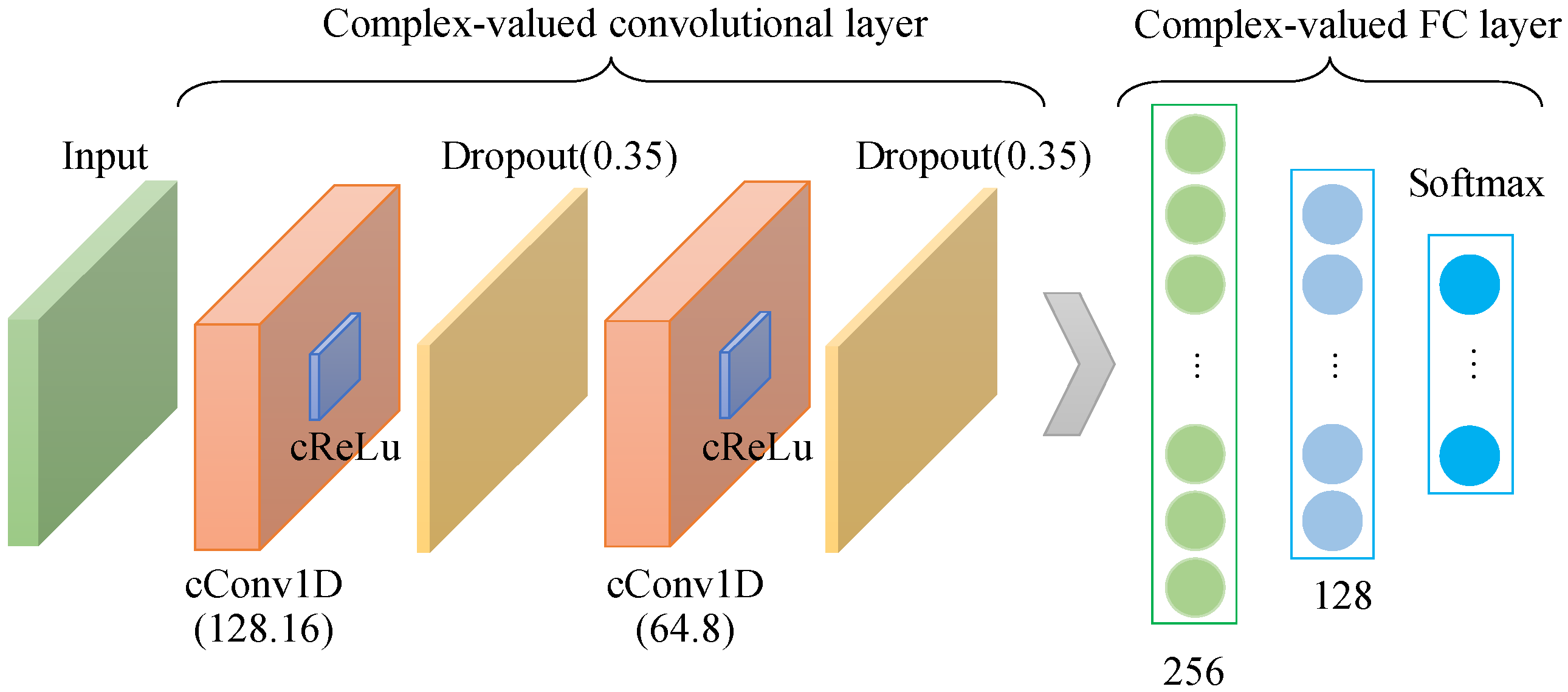

4.2. Architecture of DC-CNN

4.3. Architecture of Other DL Models

4.4. Training Process of DL-Based Drone Recognition Method

Algorithm 1: The proposed DL-based drone recognition method.

4.5. Comparison Method: TD Feature with ML Recognizers

4.5.1. Pre-Processing & Conversion

4.5.2. Feature Extraction

4.5.3. Recognition-Based on ML

5. Results and Discussion

5.1. Dataset Description and Experimental Setup

5.2. Accuracy of DL and Traditional Algorithm Methods within Two Datasets

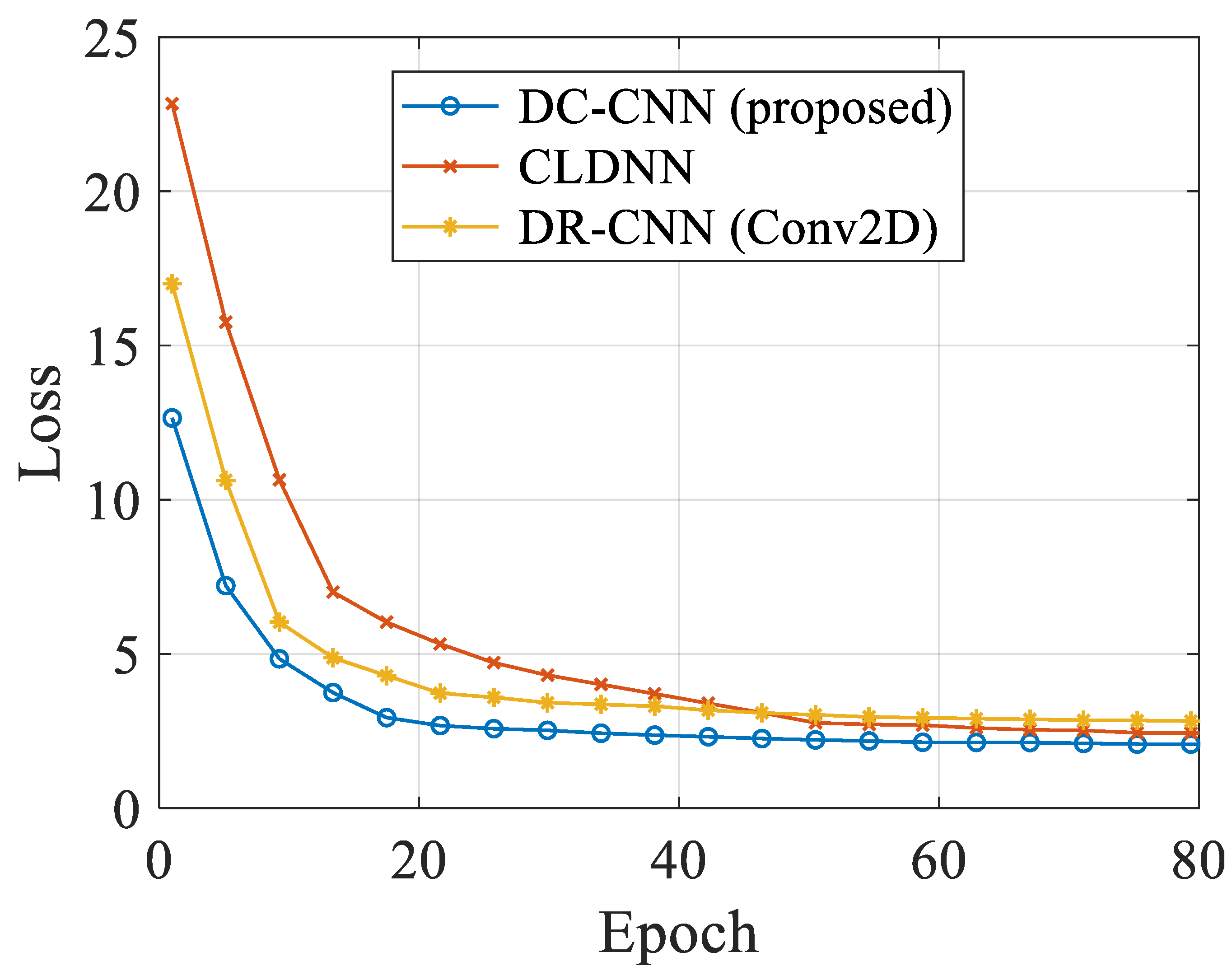

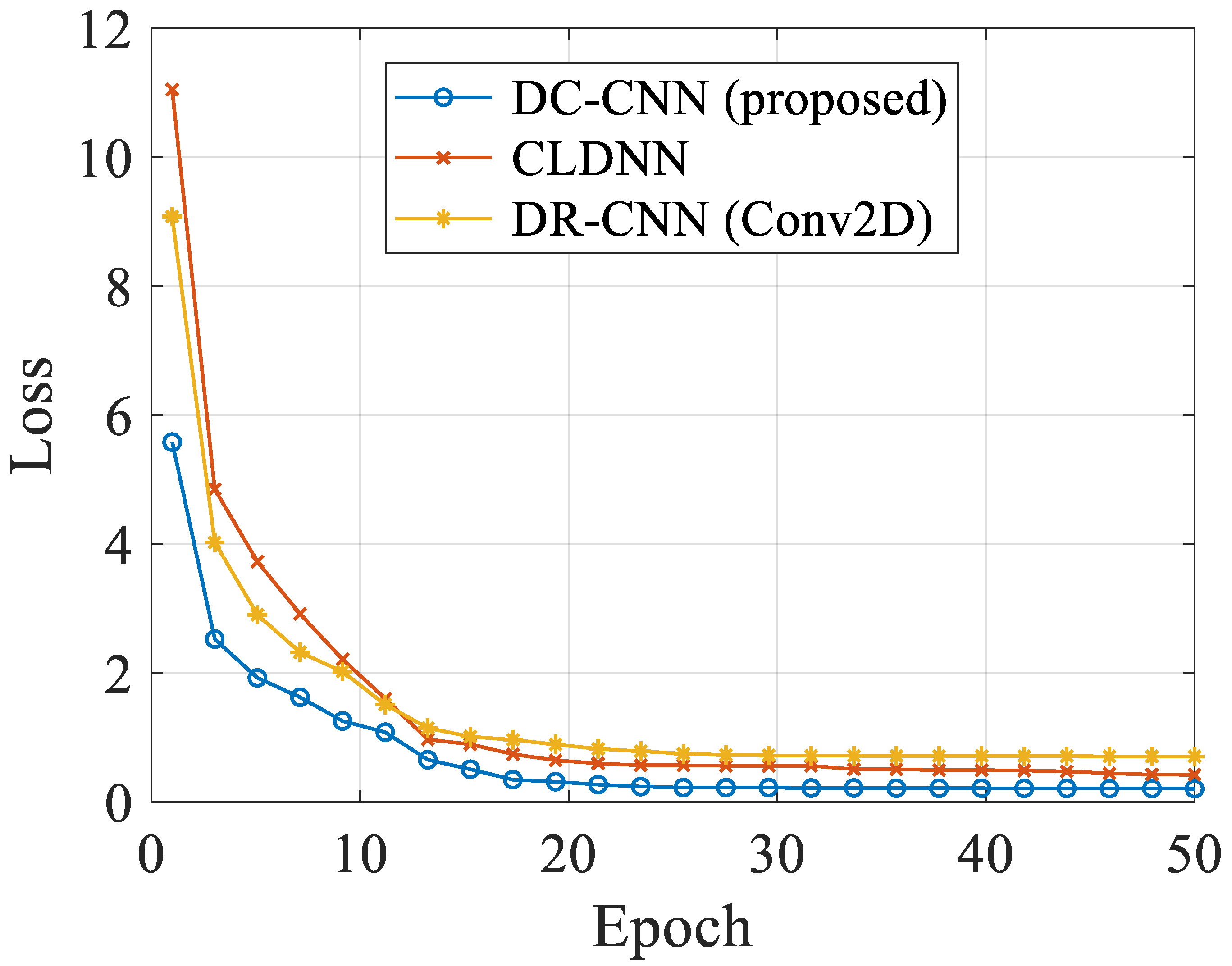

5.3. Learning Curves of Different DL Models in Different Datasets

5.4. Algorithm System Comparison

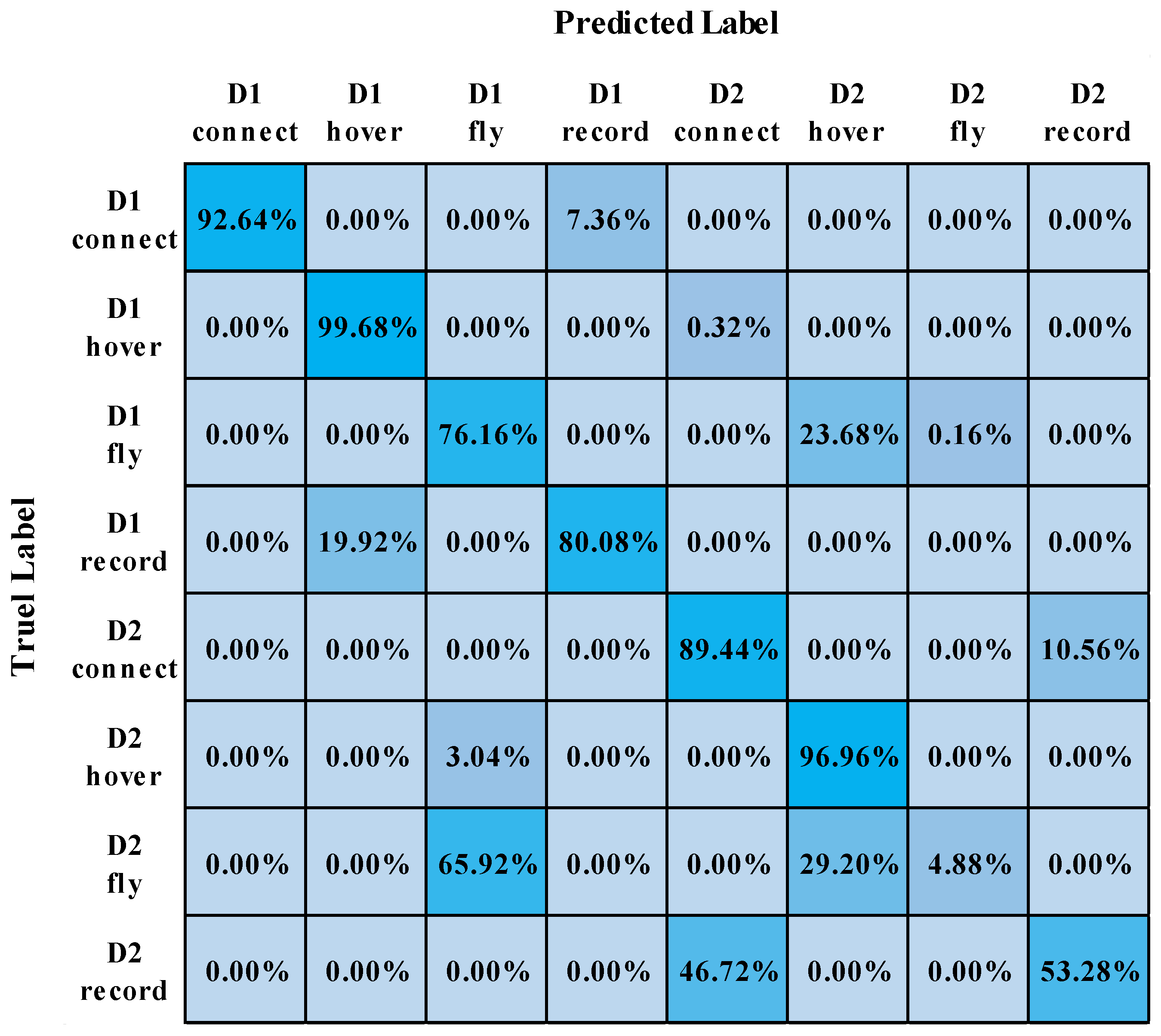

5.5. Confusion Matrix of the DC-CNN Model in Different Datasets

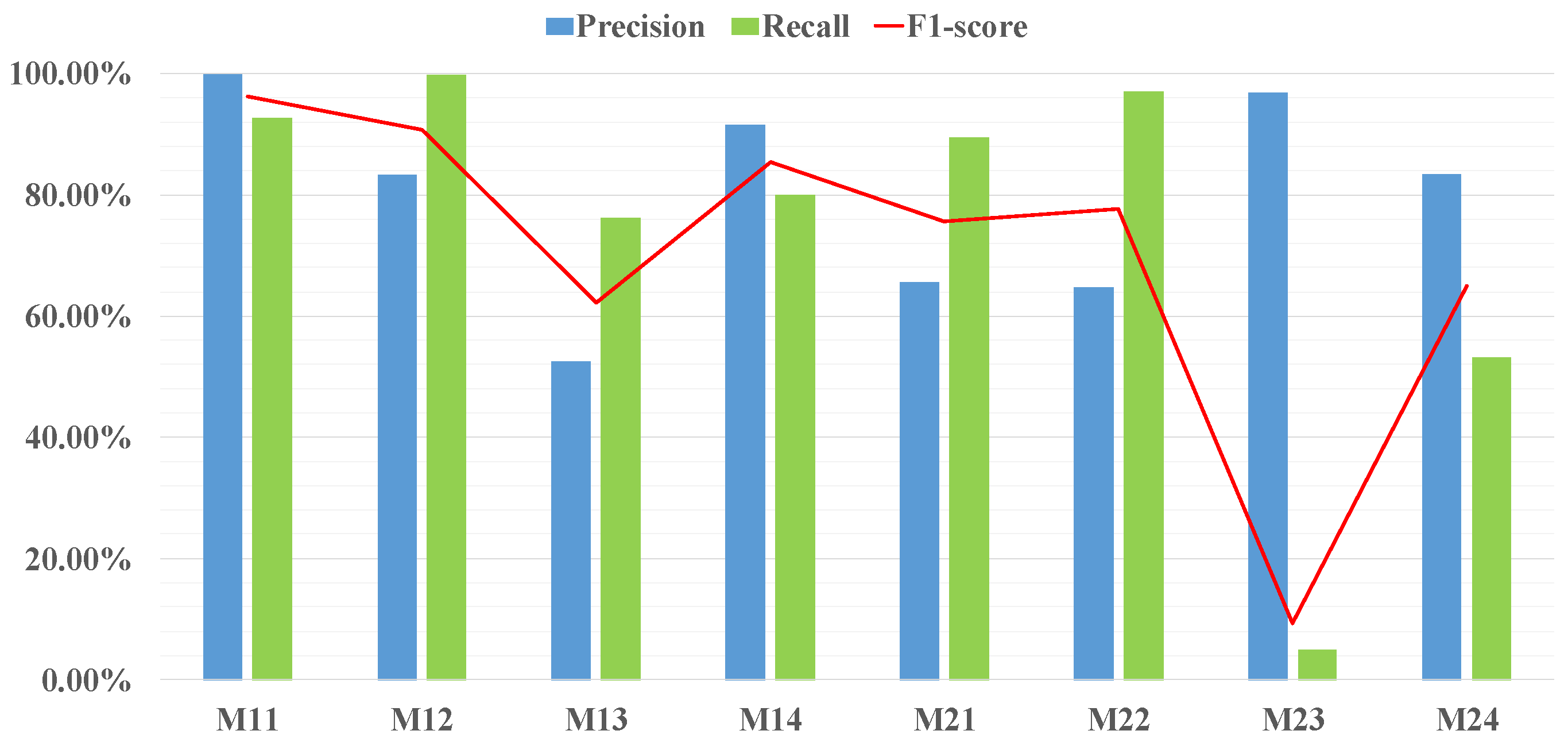

5.6. Additive Evaluation of DC-CNN Model in Different Datasets

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Liu, M.; Yang, J.; Gui, G. DSF-NOMA: UAV-assisted emergency communication technology in a heterogeneous internet of things. IEEE Internet Things J. 2019, 6, 5508–5519.

- Liu, M.; Tang, F.; Kato, N.; Adachi, F. 6G: Opening new horizons for integration of comfort, security and intelligence. IEEE Wirel. Commun. Mag. 2020, 27, 126–132.

- Mohanti, S.; Soltani, N.; Sankhe, K.; Jaisinghani, D.; Felice, M.D.; Chowdhury, K. AirID: Injecting a custom RF fingerprint for enhanced UAV identification using deep learning. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), Taipei, Taiwan, 7–11 December 2020; pp. 1–9.

- Jagannath, A.; Jithin, J.; Kumar, P. A comprehensive survey on radio frequency (rf) fingerprinting: Traditional approaches, deep learning, and open challenges. arXiv 2022, arXiv:2201.00680.

- Shoufan, A.; Al-Angari, H.M.; Sheikh, M.F.A.; Damiani, E. Drone pilot identification by classifying radio-control signals. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2439–2447.

- Al-Emadi, S.; Al-Ali, A.; Mohammad, A.; Al-Ali, A. Audio based drone detection and identification using deep learning. In Proceedings of the International Wireless Communications and Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 459–464.

- Gong, J.; Xu, X.; Lei, Y. Unsupervised specific emitter identification method using radio-frequency fingerprint embedded infoGAN. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2898–2913.

- Su, H.-R.; Chen, K.-Y.; Wong, W.J.; Lai, S.-H. A deep learning approach towards pore extraction for high-resolution fingerprint recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech, & Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 2057–2061.

- Roy, D.; Mukherjee, T.; Chatterjee, M.; Blasch, E.; Pasiliao, E. RFAL: Adversarial learning for RF transmitter identification and classification. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 783–801.

- Tian, X.; Wu, X.; Li, H.; Wang, X. RF fingerprints prediction for cellular network positioning: A subspace identification approach. IEEE Trans. Mob. Comput. 2020, 19, 450–465.

- Wang, Y.; Gui, G.; Gacanin, H.; Ohtsuki, T.; Dobre, O.A.; Poor, H.V. An efficient specific emitter identification method based on complex-valued neural networks and network compression. IEEE J. Sel. Areas Commun. 2021, 39, 2305–2317.

- Satyanarayana, K.; El-Hajjar, M.; Mourad, A.A.M.; Hanzo, L. Deep learning aided fingerprint-based beam alignment for mmWave vehicular communication. IEEE Trans. Veh. Technol. 2019, 68, 10858–10871.

- Peng, Y.; Liu, P.; Wang, Y.; Gui, G.; Adebisi, B.; Gacanin, H. Radio frequency fingerprint identification based on slice integration cooperation and heat constellation trace figure. IEEE Wirel. Commun. Lett. 2022, 11, 543–547.

- Lin, Y.; Zhu, X.; Zheng, Z.; Dou, Z.; Zhou, R. The individual identification method of wireless device based on dimensionality reduction and machine learning. J. Supercomput. 2019, 75, 3010–3027.

- Ezuma, M.; Erden, F.; Anjinappa, C.K.; Ozdemir, O.; Guvenc, I. Detection and classification of UAVs using RF fingerprints in the presence of Wi-Fi and bluetooth interference. IEEE Open J. Commun. Soc. 2020, 1, 60–76.

- Yang, K.; Kang, J.; Jang, J.; Lee, H.N. Multimodal sparse representation-based classification scheme for RF fingerprinting. IEEE Commun. Lett. 2019, 23, 867–870.

- Li, C. Dynamic offloading for multiuser muti-CAP MEC networks: A deep reinforcement learning approach. IEEE Trans. Veh. Technol. 2021, 70, 2922–2927.

- Zheng, Q.; Yang, M.; Yang, J.; Zhang, Q.; Zhang, X. Improvement of Generalization Ability of Deep CNN via Implicit Regularization in Two-Stage Training Process. IEEE Access 2018, 6, 15844–15869.

- Zhao, M.; Jha, A.; Liu, Q.; Millis, B.A.; Jansen, A.M.; Lu, L.; Landman, B.A.; Tyska, M.J.; Huo, Y. Faster Mean-shift: GPU-accelerated clustering for cosine embedding-based cell segmentation and tracking. Med. Image Anal. 2021, 71, 102048.

- Zhao, M.; Liu, Q.; Jha, A.; Deng, R. VoxelEmbed: 3D instance segmentation and tracking with voxel embedding based deep learning. In Proceedings of the International Workshop on Machine Learning in Medical Imaging, Strasbourg, France, 27 September 2021; Springer: Cham, Switzerland, 2021.

- Jin, B.; Cruz, L.; Gonçalves, N. Pseudo RGB-D Face Recognition. IEEE Sens. J. 2022, 22, 21780–21794.

- Wang, Z. An adaptive deep learning-based UAV receiver design for coded MIMO with correlated noise. Phys. Commun. 2021, 45, 101292.

- Huang, H.; Peng, Y.; Yang, J.; Xia, W.; Gui, G. Fast beamforming design via deep learning. IEEE Trans. Veh. Technol. 2020, 69, 1065–1069.

- He, K.; He, L.; Fan, L.; Deng, Y.; Karagiannidis, G.K.; Nallanathan, A. Learning based signal detection for MIMO systems with unknown noise statistics. IEEE Trans. Commun. 2021, 69, 3025–3038.

- Lai, S.; Zhao, R.; Tang, S.; Xia, J.; Zhou, F.; Fan, L. Intelligent secure mobile edge computing for beyond 5G wireless networks. Phys. Commun. 2021, 45, 101283.

- Gu, H.; Wang, Y.; Hong, S.; Gui, G. Blind channel identification aided generalized automatic modulation recognition based on deep learning. IEEE Access 2019, 7, 110722–110729.

- Sun, J.; Shi, W.; Han, Z.; Yangi, J. Behavioral modeling and linearization of wideband RF power amplifiers using BiLSTM networks for 5G wireless systems. IEEE Trans. Veh. Technol. 2019, 68, 10348–10356.

- Gacanin, H. Autonomous wireless systems with artificial intelligence: A knowledge management perspective. IEEE Veh. Technol. Mag. 2019, 14, 51–59.

- Guo, Y.; Zhao, Z.; He, K.; Lai, S.; Xia, J.; Fan, L. Efficient and flexible management for industrial internet of things: A federated learning approach. Comput. Netw. 2021, 192, 1–9.

- Shi, Z.; Gao, W.; Zhang, S.; Liu, J.; Kato, N. AI-enhanced cooperative spectrum sensing for non-orthogonal multiple access. IEEE Wirel. Commun. Mag. 2020, 27, 173–179.

- Tang, F.; Kawamoto, Y.; Kato, N.; Liu, J. Future intelligent and secure vehicular network towards 6G: Machine-learning approaches. Proc. IEEE 2020, 108, 292–307.

- Wang, Y.; Gui, J.; Yin, Y.; Wang, J.; Sun, J. Automatic modulation classification for MIMO systems via deep learning and zero-forcing equalization. IEEE Trans. Veh. Technol. 2020, 69, 5688–5692.

- Wang, Y.; Gui, G.; Gacanin, H.; Adebisi, B.; Sari, H.; Adachi, F. Federated learning for automatic modulation classification under class imbalance and varying noise condition. IEEE Trans. Cogn. Commun. Netw. 2022, 8, 86–96.

- Wang, Y.; Yang, J.; Liu, M.; Gui, G. LightAMC: Lightweight automatic modulation classification using deep learning and compressive sensing. IEEE Trans. Veh. Technol. 2020, 69, 3491–3495.

- Peng, L.; Zhang, J.; Liu, M.; Hu, A. Deep learning based RF fingerprint identification using differential constellation trace figure. IEEE Trans. Veh. Technol. 2020, 69, 1091–1095.

- Zhang, Z.; Wang, H.; Xu, F.; Jin, Y.Q. Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans. Geosci. Remote. Sens. 2017, 55, 7177–7188.

- Al-Sa’d, M.; Al-Ali, A.; Mohamed, A.; Khattab, T.; Erbad, A. RF-based drone detection and identification using deep learning approaches: An initiative towards a large open source drone database. Future Gener. Comput. Syst. 2019, 100, 86–97.

- Shaw, D.; Kinsner, W. Multifractal modelling of radio transmitter transients for classification. In Proceedings of the IEEE WESCANEX 97 Communications, Power and Computing. Conference Proceedings, Winnipeg, MB, Canada, 22–23 May 1997; pp. 306–312.

- Kennedy, I.O.; Scanlon, P.; Mullany, F.J.; Buddhikot, M.M.; Nolan, K.E.; Rondeau, T.W. Radio transmitter fingerprinting: A steady state frequency domain approach. In Proceedings of the IEEE 68th Vehicular Technology Conference (VTC2008-Fall), Calgary, AB, Canada, 21–24 September 2008; pp. 1–5.

- Merchant, K.; Revay, S.; Stantchev, G.; Nousain, B. Deep learning for RF device fingerprinting in cognitive communication networks. IEEE J. Sel. Top. Signal Process. 2018, 12, 160–167.

- Khatab, Z.E.; Hajihoseini, A.; Ghorashi, S.A. A fingerprint method for indoor localization using autoencoder based deep extreme learning machine. IEEE Sens. Lett. 2018, 2, 2057–2061.

- Li, Y.; Chen, X.; Lin, Y.; Srivastava, G.; Liu, S. Wireless transmitter identification based on device imperfections. IEEE Access 2020, 8, 59305–59314.

| Algorithm | Conv. Layer | LSTM Layer | FC Layer | Model Size |
|---|---|---|---|---|
| DR-CNN (Conv2D) | {128, 64} | / | {256, 128, 64, M} | 33,891,912 |
| DR-CNN (Conv1D) [40] | {128, 64} | / | {256, 128, 64, M} | 33,859,656 |
| FCN [37] | / | / | {512, 256, 128, M} | 2,267,400 |
| LSTM | / | {256, 128} | {256, 128, 64, M} | 2,665,160 |

| Category | Classes | Samples |
|---|---|---|
| Dataset 1 | | |
| background | background activities | 1100 |
| drone | drone {1, 2, 3} activities | 3300 |
| Dataset 2 | | |
| drone 1 | modes {connect, hover, fly, record} | 4400 |
| drone 2 | modes {connect, hover, fly, record} | 4400 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, J.; Gu, H.; Hu, C.; Zhang, X.; Gui, G.; Gacanin, H. Deep Complex-Valued Convolutional Neural Network for Drone Recognition Based on RF Fingerprinting. Drones 2022, 6, 374. https://doi.org/10.3390/drones6120374

Yang J, Gu H, Hu C, Zhang X, Gui G, Gacanin H. Deep Complex-Valued Convolutional Neural Network for Drone Recognition Based on RF Fingerprinting. Drones. 2022; 6(12):374. https://doi.org/10.3390/drones6120374

Chicago/Turabian Style: Yang, Jie, Hao Gu, Chenhan Hu, Xixi Zhang, Guan Gui, and Haris Gacanin. 2022. "Deep Complex-Valued Convolutional Neural Network for Drone Recognition Based on RF Fingerprinting" Drones 6, no. 12: 374. https://doi.org/10.3390/drones6120374

APA Style: Yang, J., Gu, H., Hu, C., Zhang, X., Gui, G., & Gacanin, H. (2022). Deep Complex-Valued Convolutional Neural Network for Drone Recognition Based on RF Fingerprinting. Drones, 6(12), 374. https://doi.org/10.3390/drones6120374