Simulating a Hybrid Acquisition System for UAV Platforms

Abstract

1. Introduction

Paper Aims


- a novel (simulated) low-cost, low-weight, multi-view hybrid acquisition system for UAV platforms (Sections 2.1, 2.2 and 2.3);


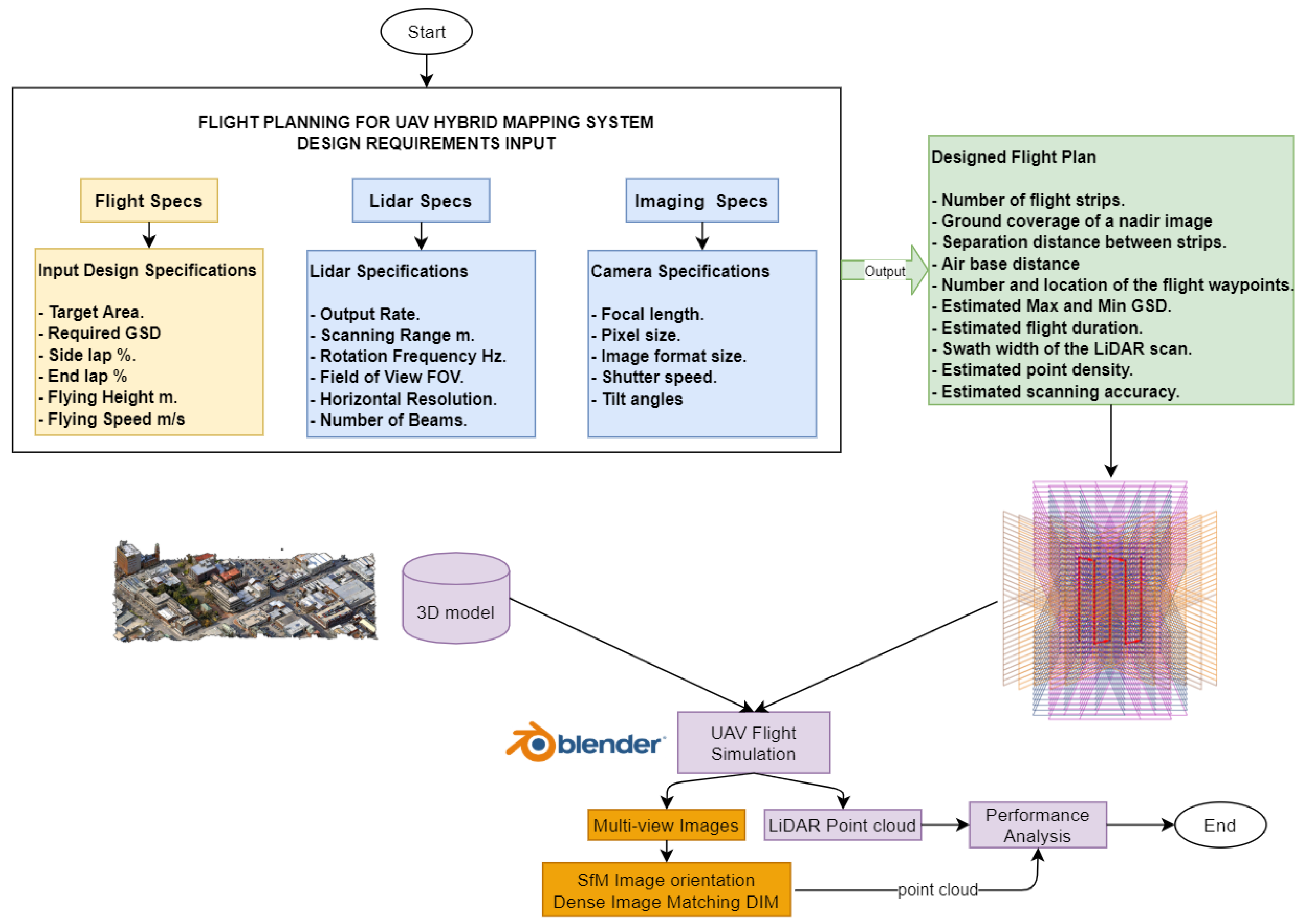

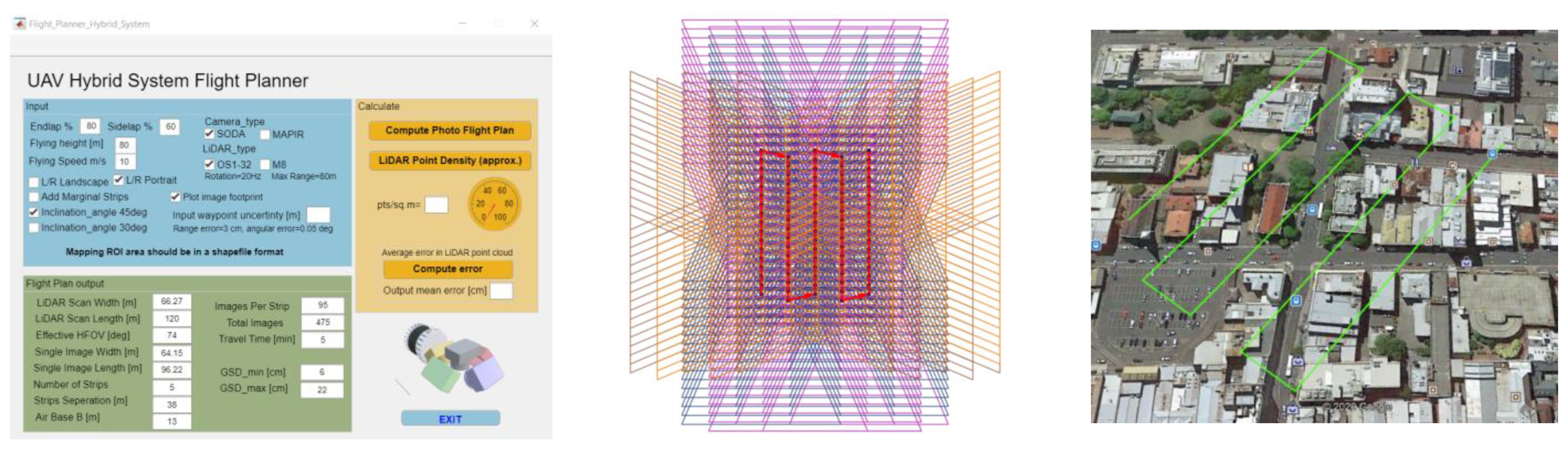

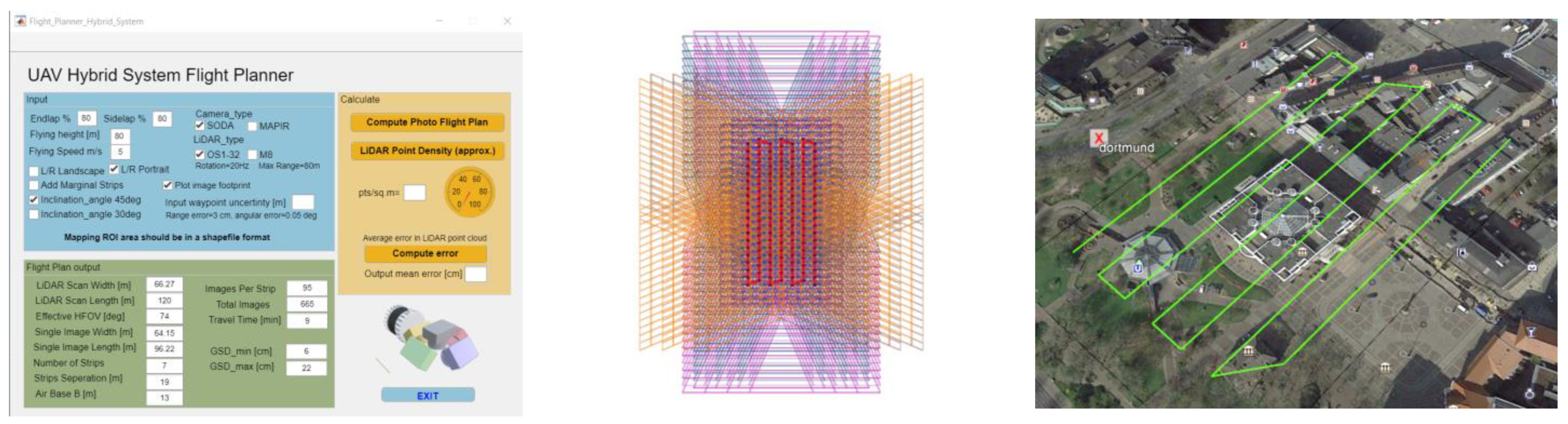

- dedicated software for UAV flight planning with a hybrid sensor (Section 2.4);


- experiments with simulated data on two large urban scenarios (Section 3).

2. Proposed Hybrid System for UAV Platforms

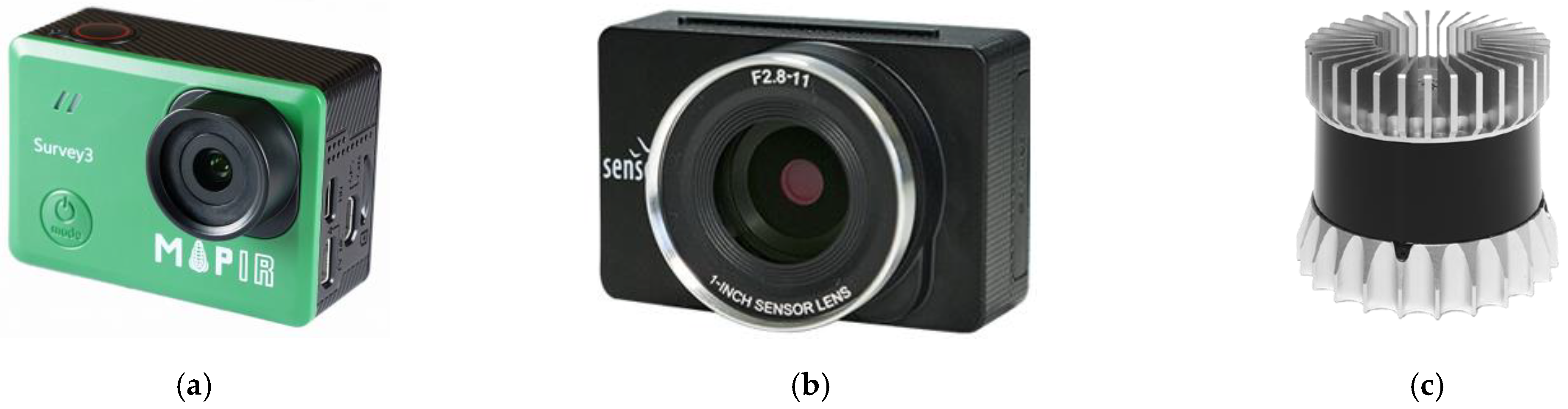

2.1. The Camera Components

2.2. The LiDAR Component

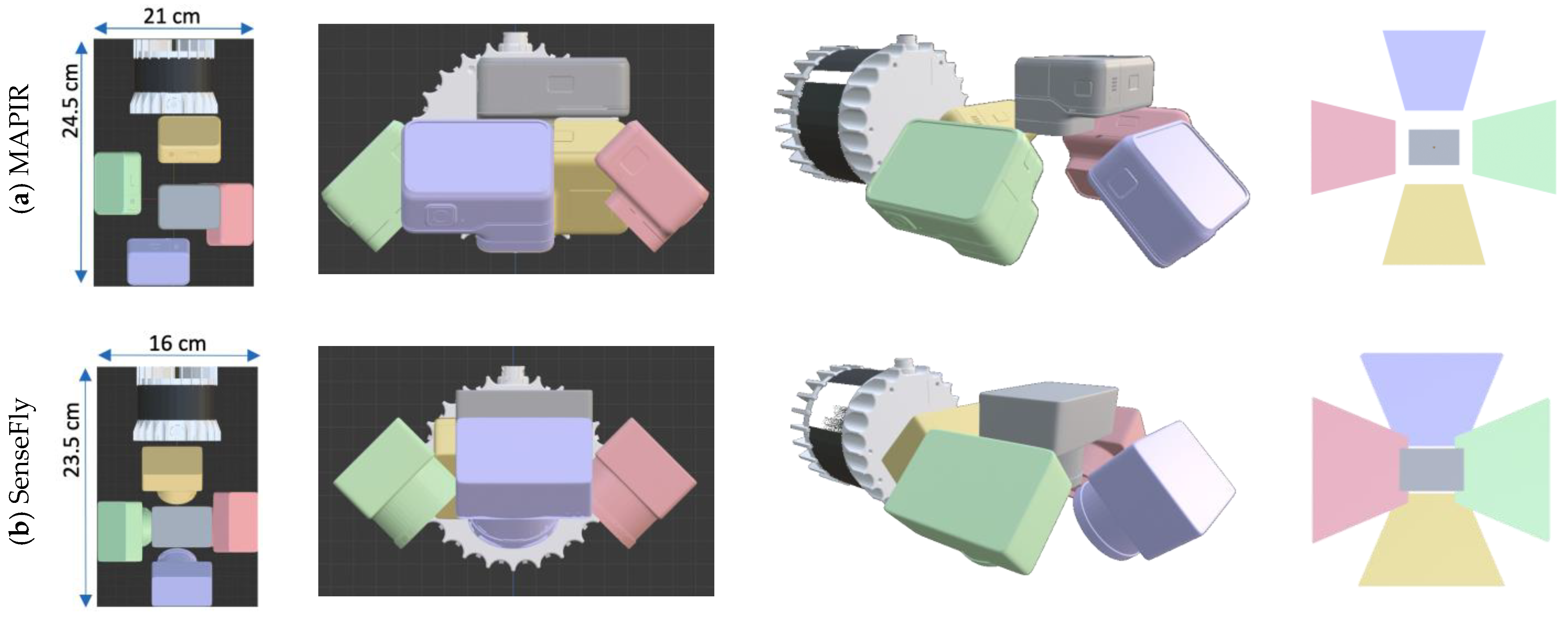

2.3. The Integrated Hybrid System

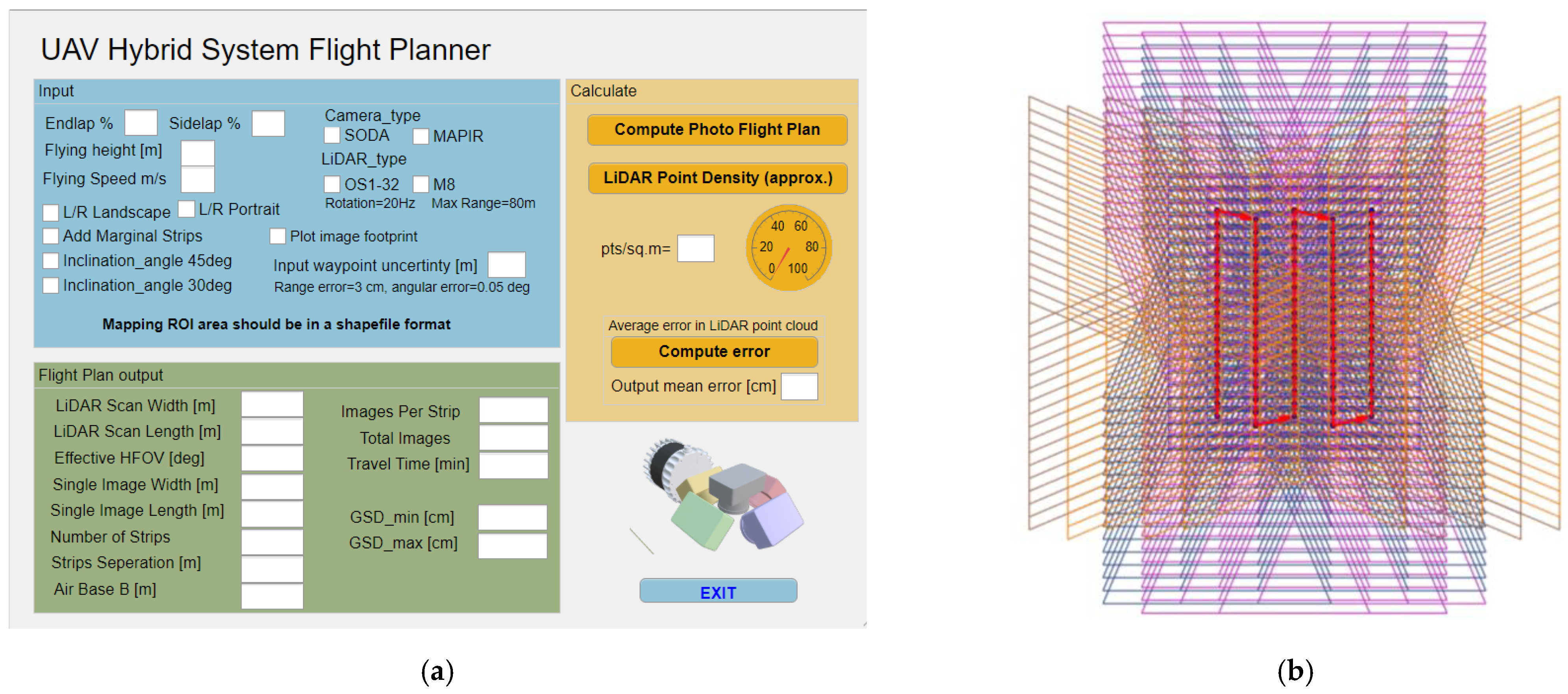

2.4. UAV Flight Planning Tool for Hybrid Systems

- The maximum and minimum image GSD;

- The ground coverage of the multi-view images (Figure 4b);

- The number of flight strips, waypoints per strip, and the total waypoints;

- The separation distance between the adjacent flight strips;

- The baseline between the two consecutive waypoints;

- The estimated travel time;

- The LiDAR scan footprint width and length;

- The LiDAR effective scanning angles.

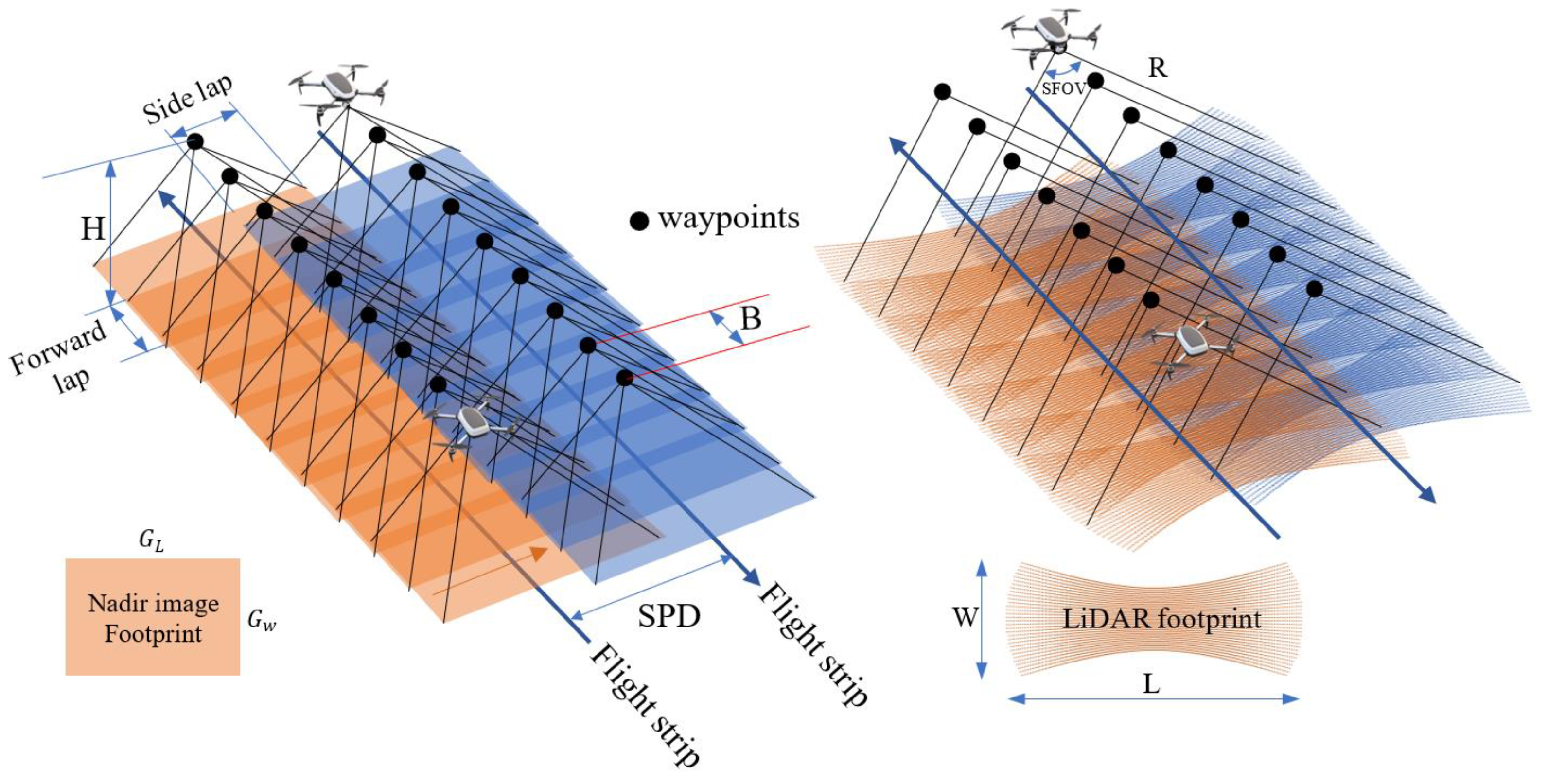

- f = focal length,

- H = flying height above the ground level,

- t = inclination angle of the camera,

- β = inclination angle to a certain image point.
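The GSD equation these symbols belong to is not reproduced in this version of the text. As a hedged sketch, the GSD of a tilted frame camera can be derived from the same quantities under simple assumptions: flat terrain, an ideal pinhole camera, and β measured from the optical axis within the tilt plane (positive towards the horizon), so that the ray reaches the ground at an angle t + β from the nadir. With a pixel size p (an extra input not defined above), differentiating the ground distance H·tan(t + β) with respect to the image coordinate f·tan β gives GSD_t = p·H·cos²β / (f·cos²(t + β)) along the tilt direction and GSD_⊥ = p·H·cos β / (f·cos(t + β)) perpendicular to it. These are textbook relations, not necessarily the authors' exact formulation. For t > 0 both grow monotonically with β, so the minimum and maximum GSD lie at the two image edges. The function names and the number of pixels in the tilt direction are illustrative assumptions.

```python
import math


def oblique_gsd(p, f, H, t_deg, beta_deg):
    """GSD (m) at one image point of a tilted frame camera over flat ground.

    p: pixel size (m); f: focal length (m); H: flying height (m);
    t_deg: camera inclination from the nadir (deg);
    beta_deg: angle between the optical axis and the image ray, measured
    in the tilt plane and positive towards the horizon (deg).
    Returns (gsd_tilt, gsd_perp): GSD along and across the tilt direction.
    """
    t, beta = math.radians(t_deg), math.radians(beta_deg)
    theta = t + beta  # angle of the ray from the nadir
    if abs(theta) >= math.pi / 2:
        raise ValueError("the ray does not intersect the ground")
    nadir_gsd = p * H / f
    gsd_tilt = nadir_gsd * math.cos(beta) ** 2 / math.cos(theta) ** 2
    gsd_perp = nadir_gsd * math.cos(beta) / math.cos(theta)
    return gsd_tilt, gsd_perp


def gsd_min_max(p, f, H, t_deg, n_px_tilt):
    """Min/max along-tilt GSD over an image with n_px_tilt pixels in the
    tilt direction (hypothetical helper). The GSD is monotonic in beta,
    so the extremes lie at the two image edges."""
    half_fov = math.degrees(math.atan(n_px_tilt * p / (2 * f)))
    edges = [oblique_gsd(p, f, H, t_deg, b)[0] for b in (-half_fov, half_fov)]
    return min(edges), max(edges)


# senseFly SODA values from the camera table, H = 80 m
print(round(oblique_gsd(2.33e-6, 10.6e-3, 80, 0, 0)[0] * 100, 2))  # 1.76 (cm)
# Hypothetical 45 deg oblique view with the 3648-pixel side in the tilt plane
print(gsd_min_max(2.33e-6, 10.6e-3, 80, 45, 3648))
```

With t = 0 both expressions reduce to p·H/f: about 1.76 cm for the senseFly SODA (p = 2.33 µm, f = 10.6 mm) and 1.50 cm for the MAPIR Survey3W (p = 1.55 µm, f = 8.25 mm) at H = 80 m, consistent with the nadir GSDs of 1.8 cm and 1.5 cm reported in the accuracy table.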

- : project area dimensions, defined as a rectangle with a length and a width.

- : nadir image coverage along track.

- : nadir image coverage across track.

- distance between two successive waypoints.

- : separation distance between the flight strips.

- : number of flight strips, rounded up to the next integer.

- number of waypoints per strip.

- scanning range of the LiDAR.

- : vertical field of view of the LiDAR.

- : along-track LiDAR scanning width.

- : across-track swath width of the LiDAR scanning.

- : effective scanning field of view.
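The equations that use these symbols are not reproduced in this version of the text. A minimal Python sketch of the same bookkeeping follows, using the standard relations for a nadir photogrammetric block (coverage = H × sensor dimension / f; baseline = along-track coverage × (1 − end lap); strip separation = across-track coverage × (1 − side lap)) and a simple footprint model for a spinning LiDAR. The following are assumptions, not the paper's definitions: strips run parallel to the project length, the waypoint count includes both strip ends, travel time ignores turns, and the LiDAR rotation axis is horizontal and parallel to the flight direction over flat terrain, so that a beam reaches the ground only up to the maximum range (effective field of view 2·arccos(H/range)). Function, field names and the 500 m × 300 m test area are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class HybridFlightPlan:
    cov_along: float      # nadir image coverage along track (m)
    cov_across: float     # nadir image coverage across track (m)
    baseline: float       # distance between successive waypoints (m)
    separation: float     # distance between adjacent strips (m)
    n_strips: int         # rounded up
    n_wp_per_strip: int
    travel_time: float    # s, straight legs only (turns ignored)
    lidar_eff_fov: float  # effective across-track scanning angle (deg)
    lidar_swath: float    # across-track LiDAR swath width (m)
    lidar_along: float    # along-track LiDAR width directly below the sensor (m)

    @property
    def n_wp_total(self) -> int:
        return self.n_strips * self.n_wp_per_strip


def plan_hybrid_flight(length, width, H, speed, f, pixel, n_along, n_across,
                       endlap, sidelap, lidar_range, lidar_vfov_deg):
    # Camera block geometry (nadir camera, flat terrain)
    cov_along = H * n_along * pixel / f
    cov_across = H * n_across * pixel / f
    baseline = cov_along * (1 - endlap)
    separation = cov_across * (1 - sidelap)
    n_strips = math.ceil(width / separation)
    n_wp = math.ceil(length / baseline) + 1  # include both strip ends
    path = n_strips * length + (n_strips - 1) * separation
    # LiDAR footprint: rotation axis assumed horizontal and along track
    if lidar_range <= H:
        raise ValueError("flying height must be below the LiDAR range")
    half_eff = math.acos(H / lidar_range)  # largest usable angle from nadir
    swath = 2 * math.sqrt(lidar_range ** 2 - H ** 2)
    along = 2 * H * math.tan(math.radians(lidar_vfov_deg) / 2)
    return HybridFlightPlan(cov_along, cov_across, baseline, separation,
                            n_strips, n_wp, path / speed,
                            math.degrees(2 * half_eff), swath, along)


# Hypothetical 500 m x 300 m area; senseFly SODA and Ouster OS1-32 values
# from the tables; Launceston settings (80 m, 10 m/s, 80% end lap, 60% side lap);
# short image side (3648 px) assumed along track.
fp = plan_hybrid_flight(500, 300, 80, 10, 10.6e-3, 2.33e-6, 3648, 5472,
                        0.8, 0.6, 120, 45)
print(fp.n_strips, fp.n_wp_per_strip, round(fp.baseline, 1))  # 8 40 12.8
```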

- R is the measured range distance from the sensor to the scanned point.

- is the measured azimuth angle of the laser beam.

- is the vertical angle of the laser beam, measured from the horizontal plane.

- , and are the coordinates of the LiDAR at time .

- The variance–covariance matrix of the observed range and angles.

- The variance–covariance matrix of the derived coordinates of point i where represent the variances of the coordinates, respectively.
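The observation equations and the propagation step are likewise not reproduced here. As a minimal sketch, the point coordinates and their variance–covariance matrix follow from a polar-to-Cartesian conversion and first-order error propagation, Σ_XYZ = J·Σ_obs·Jᵀ, where J is the Jacobian of (X, Y, Z) with respect to (R, azimuth, vertical angle). The sketch assumes a levelled sensor frame aligned with the mapping frame (boresight and IMU attitude ignored), the azimuth measured in the horizontal plane from the X axis, uncorrelated range and angle observations, and an error-free sensor position. σ_R = 3 cm is the typical range accuracy in the Ouster table; the 0.01° angular standard deviations and the function names are hypothetical.

```python
import math


def lidar_point(x0, y0, z0, r, az_deg, v_deg):
    """Point coordinates from a polar LiDAR observation; (x0, y0, z0) is the
    sensor position at the observation time. Attitude is assumed already removed."""
    a, v = math.radians(az_deg), math.radians(v_deg)
    return (x0 + r * math.cos(v) * math.cos(a),
            y0 + r * math.cos(v) * math.sin(a),
            z0 + r * math.sin(v))


def point_covariance(r, az_deg, v_deg, s_r, s_az_deg, s_v_deg):
    """3x3 coordinate covariance Sigma_xyz = J Sigma_obs J^T for uncorrelated
    range/angle observations (sensor position treated as error-free)."""
    a, v = math.radians(az_deg), math.radians(v_deg)
    ca, sa, cv, sv = math.cos(a), math.sin(a), math.cos(v), math.sin(v)
    # Jacobian rows: d(X, Y, Z) / d(R, azimuth, vertical angle)
    J = [[cv * ca, -r * cv * sa, -r * sv * ca],
         [cv * sa, r * cv * ca, -r * sv * sa],
         [sv, 0.0, r * cv]]
    var_obs = [s_r ** 2, math.radians(s_az_deg) ** 2, math.radians(s_v_deg) ** 2]
    # With a diagonal Sigma_obs: (J Sigma J^T)_ij = sum_k J_ik * var_k * J_jk
    return [[sum(J[i][k] * var_obs[k] * J[j][k] for k in range(3))
             for j in range(3)] for i in range(3)]


# Example: 100 m range, 30 deg azimuth, 45 deg below the horizon
cov = point_covariance(100.0, 30.0, -45.0, 0.03, 0.01, 0.01)
sx, sy, sz = (math.sqrt(cov[i][i]) for i in range(3))
```

The diagonal of the returned matrix holds the coordinate variances; at longer ranges the angular terms (scaled by R) dominate the 3 cm range term.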

3. Experiments and Analyses

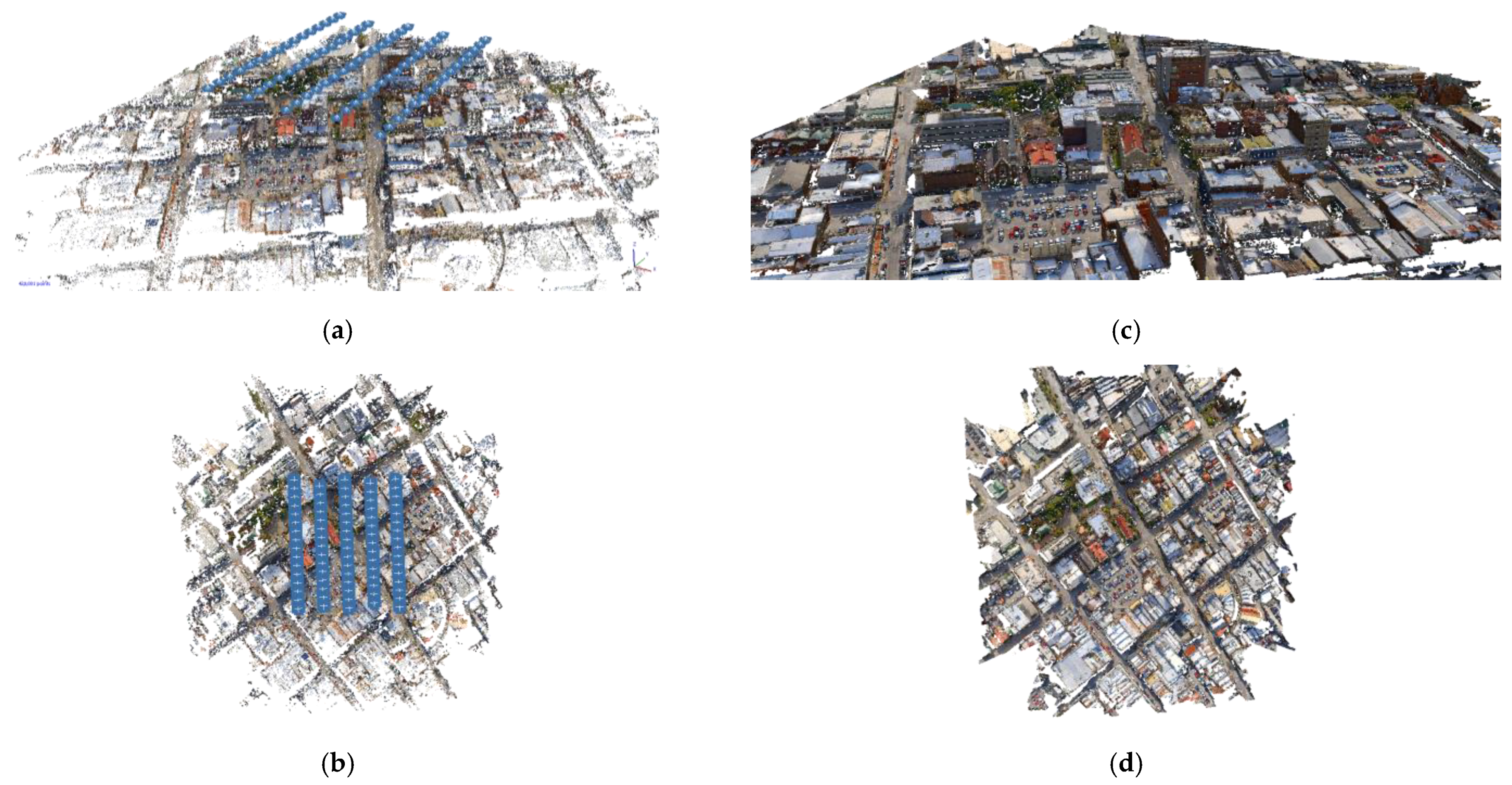

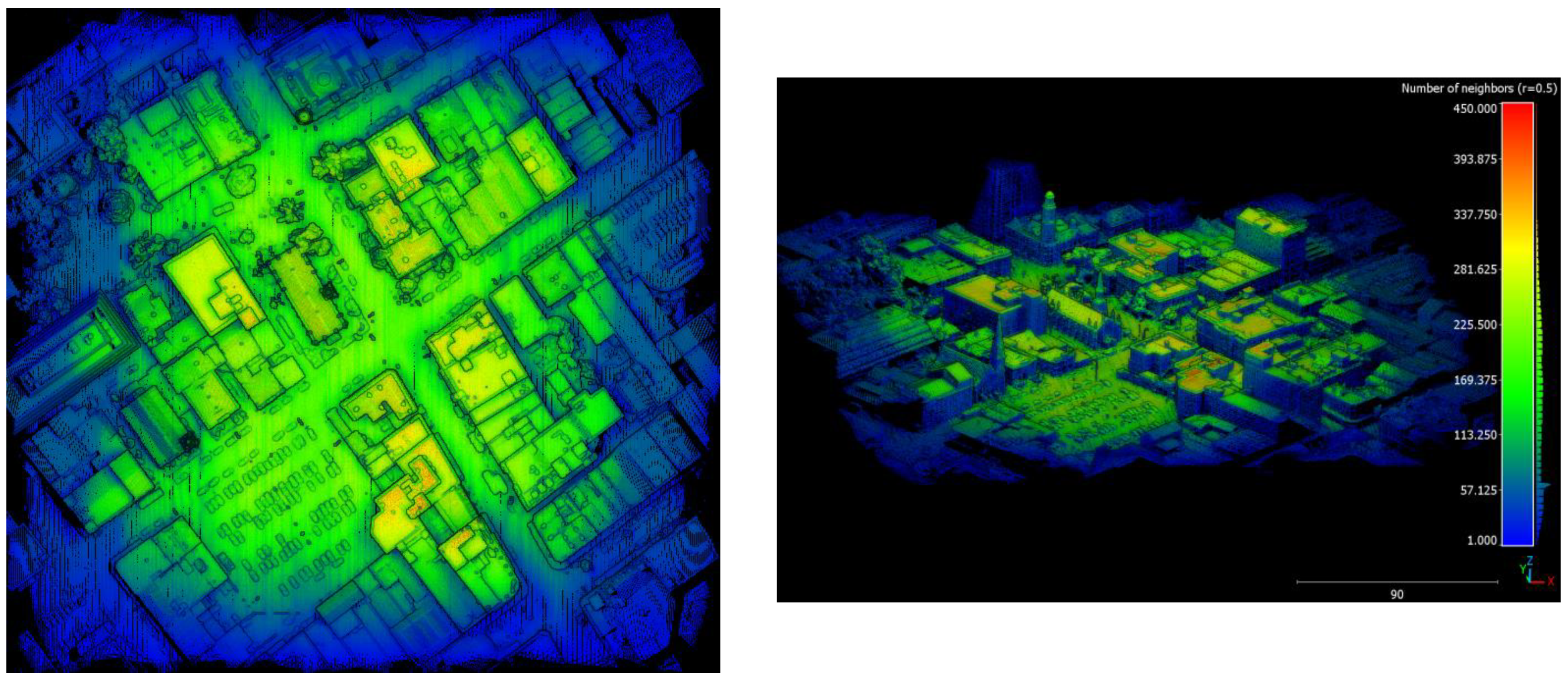

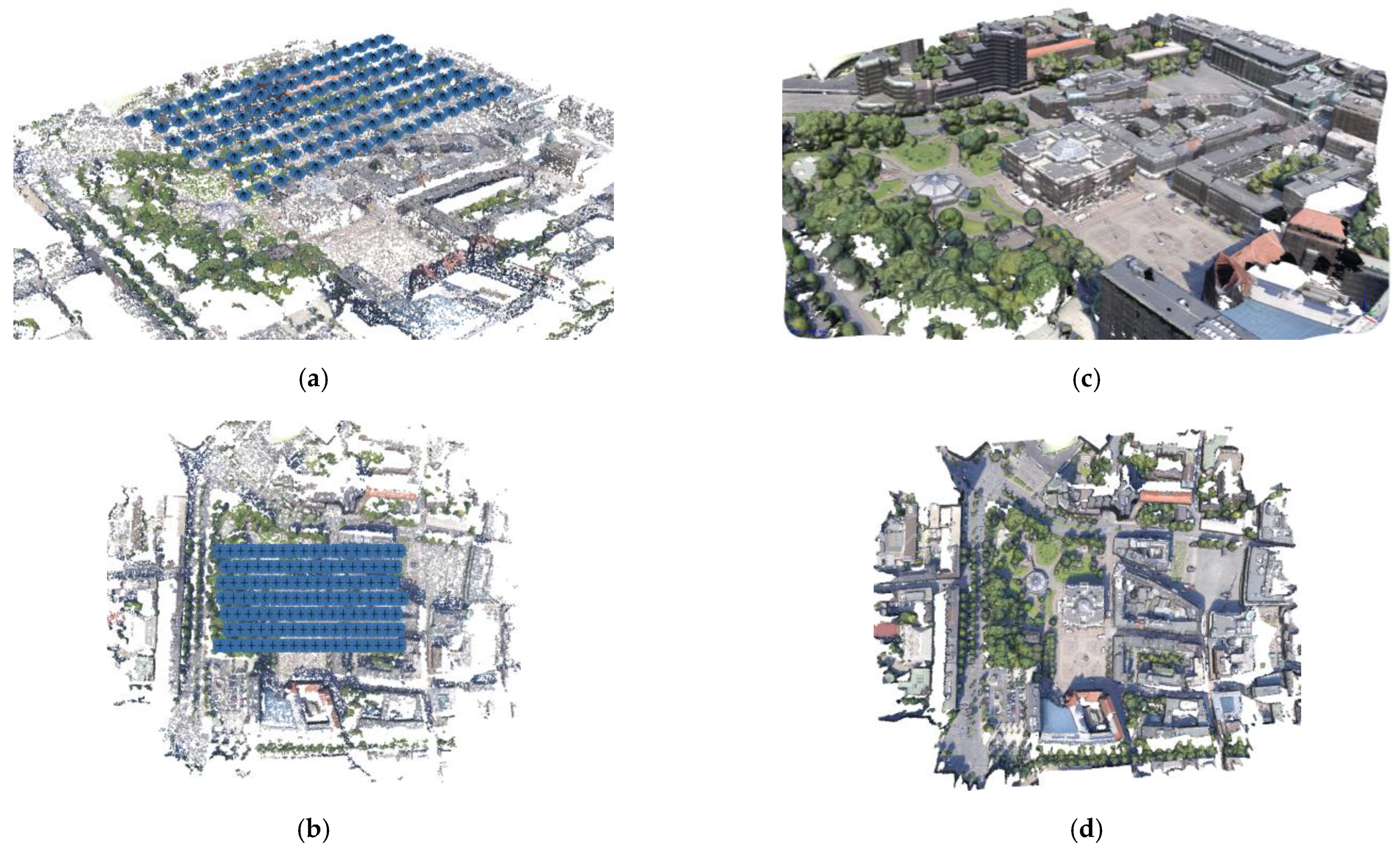

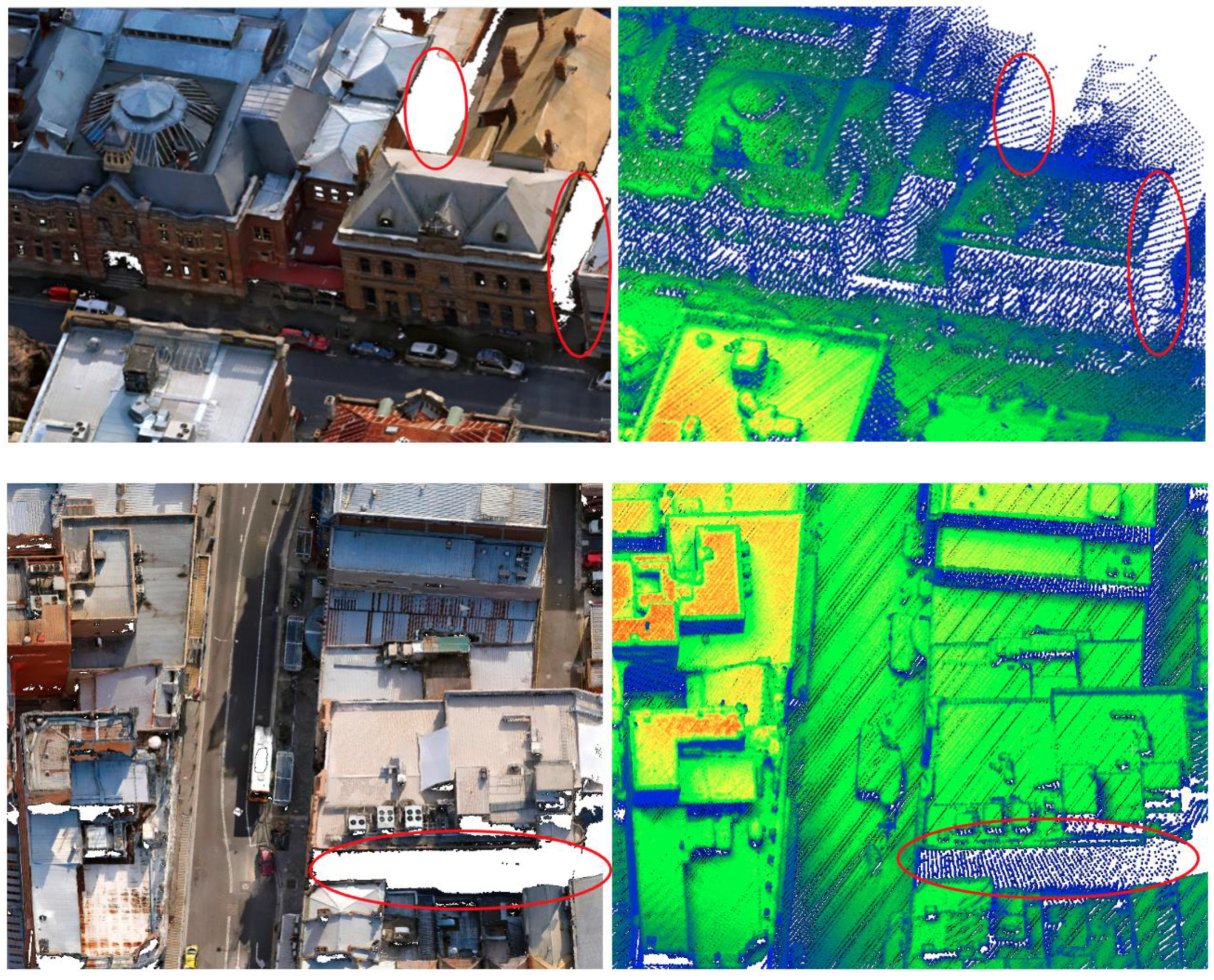

3.1. Use Case #1—Launceston

- flying height = 80 m

- flying speed = 10 m/s

- end lap = 80% and side lap = 60%

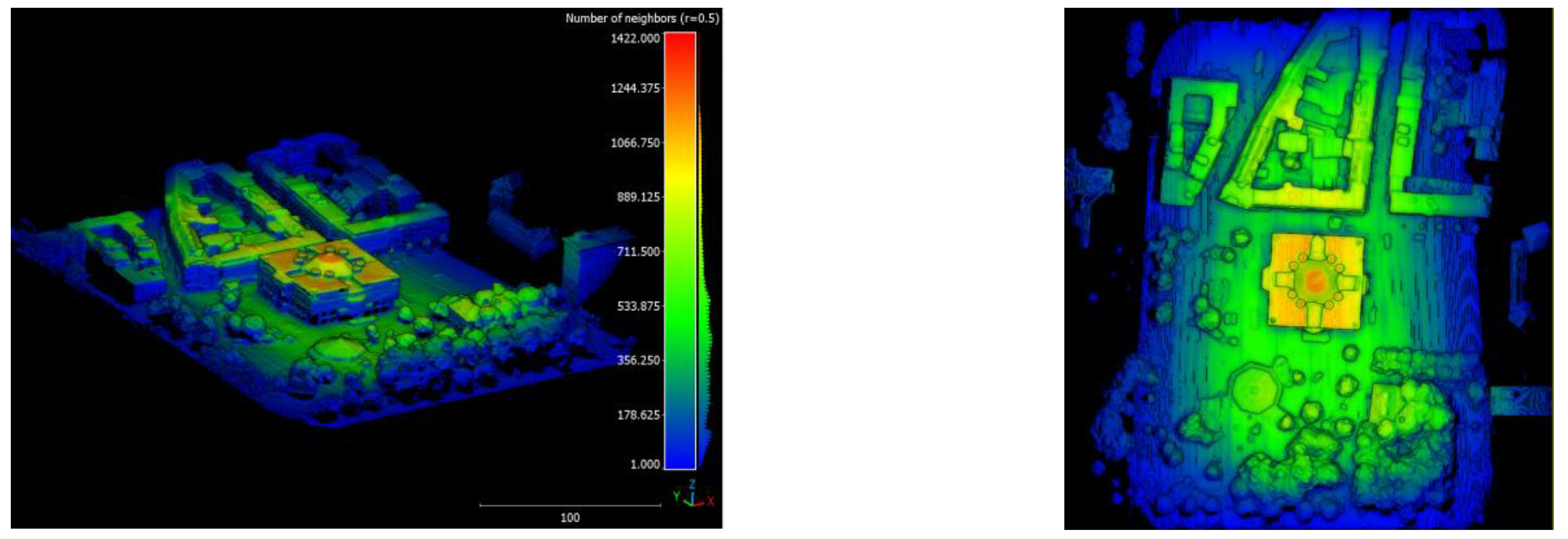

3.1.1. SenseFly-Based Hybrid UAV Surveying

3.1.2. MAPIR-Based Hybrid UAV Surveying

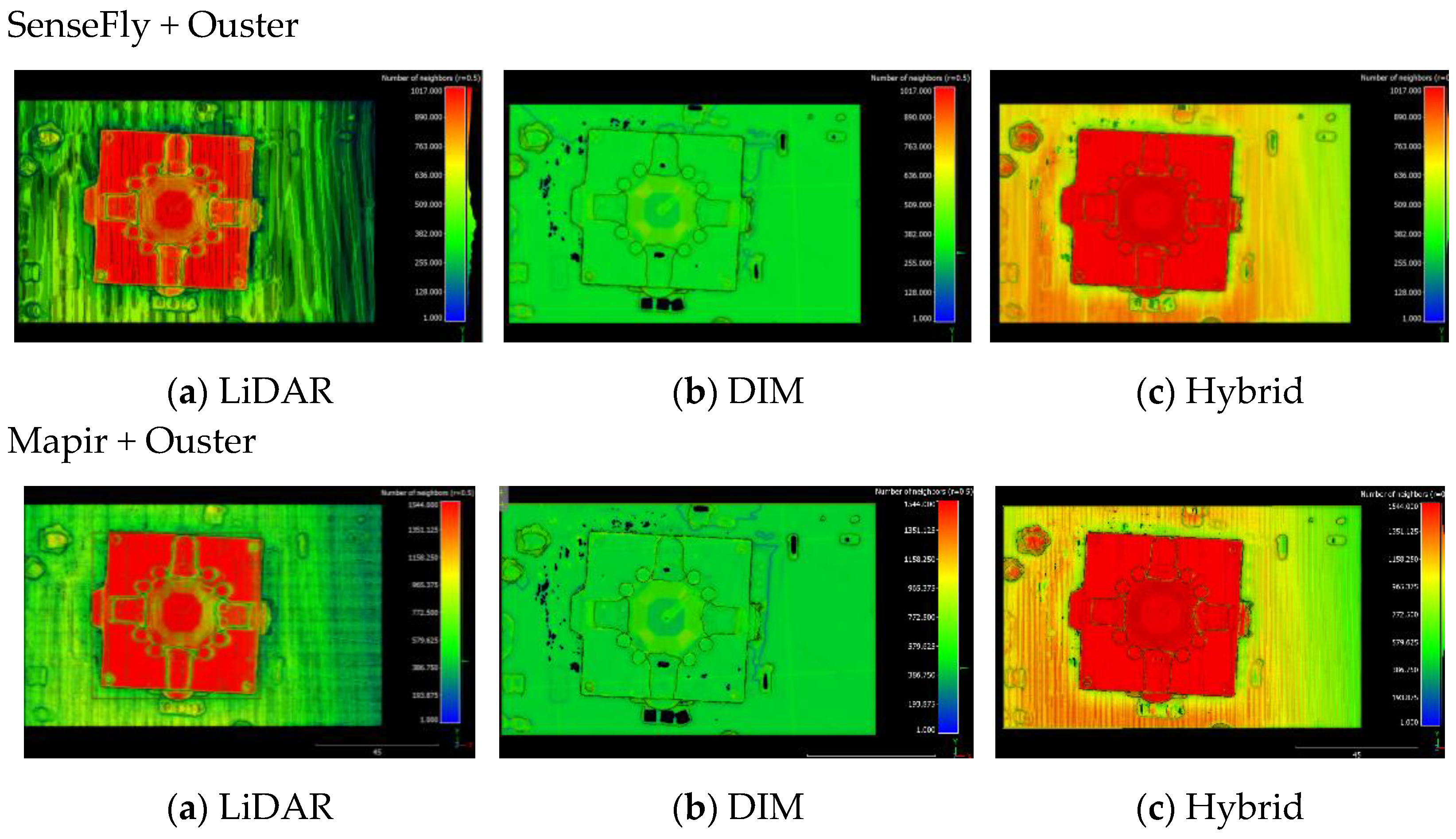

3.2. Use Case #2—Dortmund

- flying height = 80 m

- flying speed = 5 m/s

- end lap = 80% and side lap = 80%

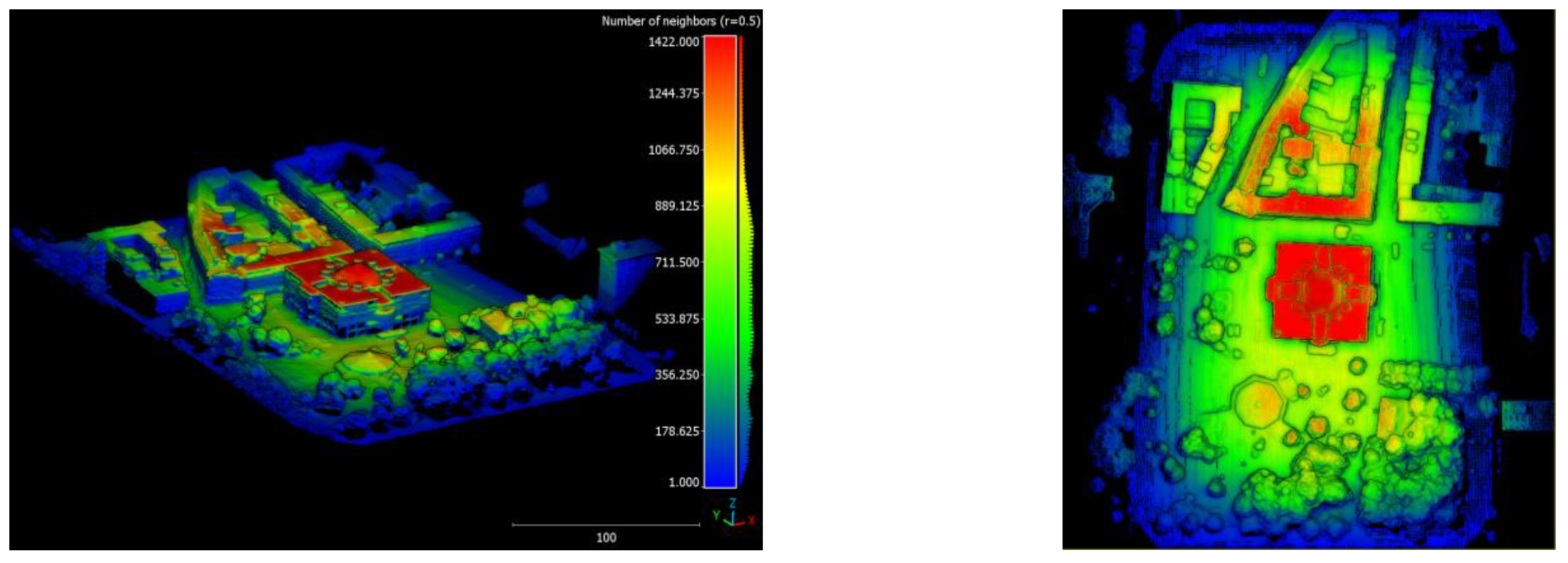

3.2.1. SenseFly-Based Hybrid UAV Surveying

3.2.2. MAPIR-Based Hybrid UAV Surveying

4. Discussions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| System | Resolution | Sensor | Size | Weight |
|---|---|---|---|---|
| Viewprouav VO305 | 305 MPx | Full frame | 20 m × 20 m × 112 mm | - |
| Share 303S Pro | 305 MPx | Full frame | 200 × 200 × 125 mm | 1.4 kg |
| DroneBase N5 | 120 MPx | APS-C | 160 × 160 × 85 mm | 730 g |
| ADTi Surveyor 5 PRO | 120 MPx | APS-C | 105 × 150 × 80 mm | 850 g |
| Quantum-System D2M | 130 MPx | APS-C | - | 830 g |
| JOUAV CA504R | 305 MPx | Full frame | 270 × 180 × 154 mm | 2.25 kg |

| Specification | MAPIR Survey3W | senseFly SODA |
|---|---|---|
| Focal length [mm] | 8.25 | 10.6 |
| Sensor [pixels] | Sony Exmor R IMX117, 4032 × 3024 | CMOS 1″, 5472 × 3648 |
| Pixel size [µm] | 1.55 | 2.33 |
| Field of view | 87° | 74° |
| Storage | Max. 128 GB | External memory |
| Data exchange format | PWM, USB, HDMI, and SD | PWM, USB, HDMI, and SD |
| Shutter speed | Global shutter, 1/2000 s to 1 min | Global shutter, 1/500–1/2000 s |
| Aperture | f/2.8 | f/2.8–f/11 |
| Dimensions | 59 × 41.5 × 36 mm | 75 × 48 × 33 mm |
| Weight | 75.4 g (with battery) | 85 g |
| Battery | 1200 mAh Li-Ion (150 min) | No internal battery, powered by the drone |
| Price | ca. EUR 400 | ca. EUR 1500 |

| LiDAR sensor/system | Ouster OS1-32 |
|---|---|
| Max. range | ≤120 m @ 80% reflectivity |
| Typical range accuracy (1σ) | ±3 cm |
| Beam divergence | 0.18° (3 mrad) |
| Beam footprint | 22 cm @ 100 m |
| Scanning channels/beams | 32 channels |
| Output rate [pts/s] | 655,360 |
| Laser returns | 1 per outgoing pulse |
| Vertical FOV | 45° (±22.5°) |
| Rotation rate | 10–20 Hz |
| Angular resolution | 0.35°–2.8° |
| LiDAR mechanism | Spinning |
| LiDAR type | Digital |
| Laser wavelength | 865 nm |
| Power consumption | 14–20 W |
| Weight | 455 g |
| Dimensions (diameter × height) | 85 × 73.5 mm |
| Operating temperature | −20 °C to +50 °C |
| Initial price | ca. EUR 8000 |

| Specification | Integrated hybrid system |
|---|---|
| LiDAR | Ouster OS1-32 |
| Number of cameras | 4 oblique + 1 nadir |
| Dimensions | 24.6 cm × 21 cm × 8 cm (MAPIR config.); 23.4 cm × 15.8 cm × 8 cm (SODA config.) |
| Focal length | 10.6 mm (SODA config.); 8.25 mm (MAPIR config.) |
| Megapixels | 12 MPx (MAPIR config.); 20 MPx (SODA config.) |
| Camera tilting | 45° (or 30°) |
| Data exchange format | USB memory stick |
| Expected operation time per charge | >1 h |
| Operational temperature range | 0 °C to 40 °C |
| Mass | <1 kg, with battery |
| Price | < EUR 10,000 |


| Points | RMSE XY (mm) | RMSE Z (mm) | Total RMSE (mm) |
|---|---|---|---|
| senseFly-based hybrid system (nadir GSD: 1.8 cm) | | | |
| GCPs (5) | 4.1 | 1.3 | 4.3 |
| CPs (7) | 4.3 | 1.8 | 4.6 |
| MAPIR-based hybrid system (nadir GSD: 1.5 cm) | | | |
| GCPs (5) | 4.4 | 1.0 | 4.5 |
| CPs (7) | 3.7 | 1.2 | 3.8 |

| Points | RMSE XY (mm) | RMSE Z (mm) | Total RMSE (mm) |
|---|---|---|---|
| senseFly-based hybrid system | | | |
| GCPs (6) | 2.8 | 0.5 | 2.9 |
| CPs (9) | 3.7 | 1.8 | 4.1 |
| MAPIR-based hybrid system | | | |
| GCPs (6) | 2.3 | 2.4 | 3.3 |
| CPs (9) | 4.6 | 3.3 | 5.7 |

| | First test: senseFly + Ouster | First test: MAPIR + Ouster | Second test: senseFly + Ouster | Second test: MAPIR + Ouster |
|---|---|---|---|---|
| Total DIM points | 3,165,250 | 5,516,791 | 7,523,625 | 10,818,103 |
| Total LiDAR points | 1,100,675 | 1,692,890 | 9,593,969 | 14,289,084 |
| Total integrated points | 4,265,925 | 7,209,681 | 16,912,628 | 25,107,187 |
| DIM density (pts/m²) | 469 ± 81 | 804 ± 121 | 338 ± 77 | 496 ± 120 |
| LiDAR density (pts/m²) | 188 ± 67 | 296 ± 102 | 659 ± 340 | 1050 ± 544 |
| Integrated density (pts/m²) | 619 ± 120 | 1039 ± 178 | 877 ± 359 | 1329 ± 575 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alsadik, B.; Remondino, F.; Nex, F. Simulating a Hybrid Acquisition System for UAV Platforms. Drones 2022, 6, 314. https://doi.org/10.3390/drones6110314
