A Human-Detection Method Based on YOLOv5 and Transfer Learning Using Thermal Image Data from UAV Perspective for Surveillance System

Abstract

1. Introduction

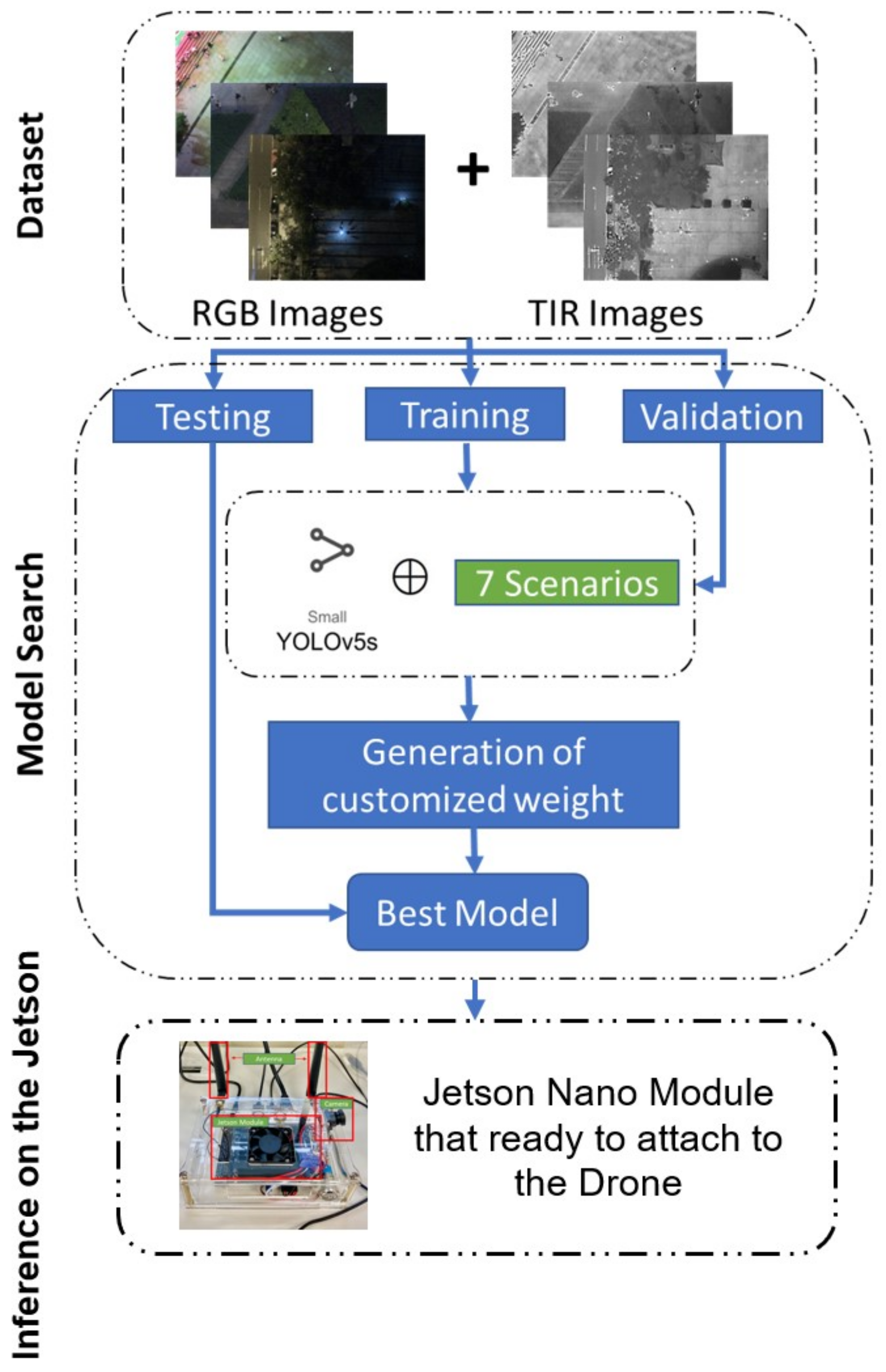

- Optimizing the YOLOv5s algorithm for a small human–object detection dataset via transfer learning.

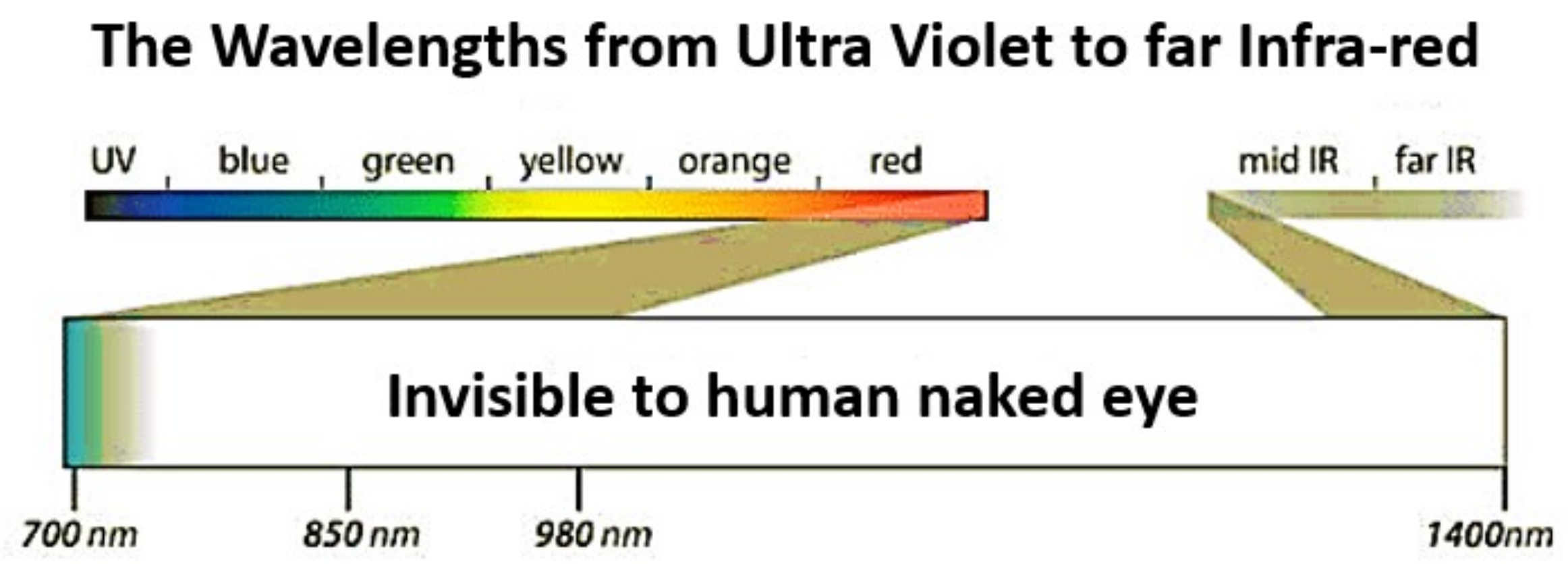

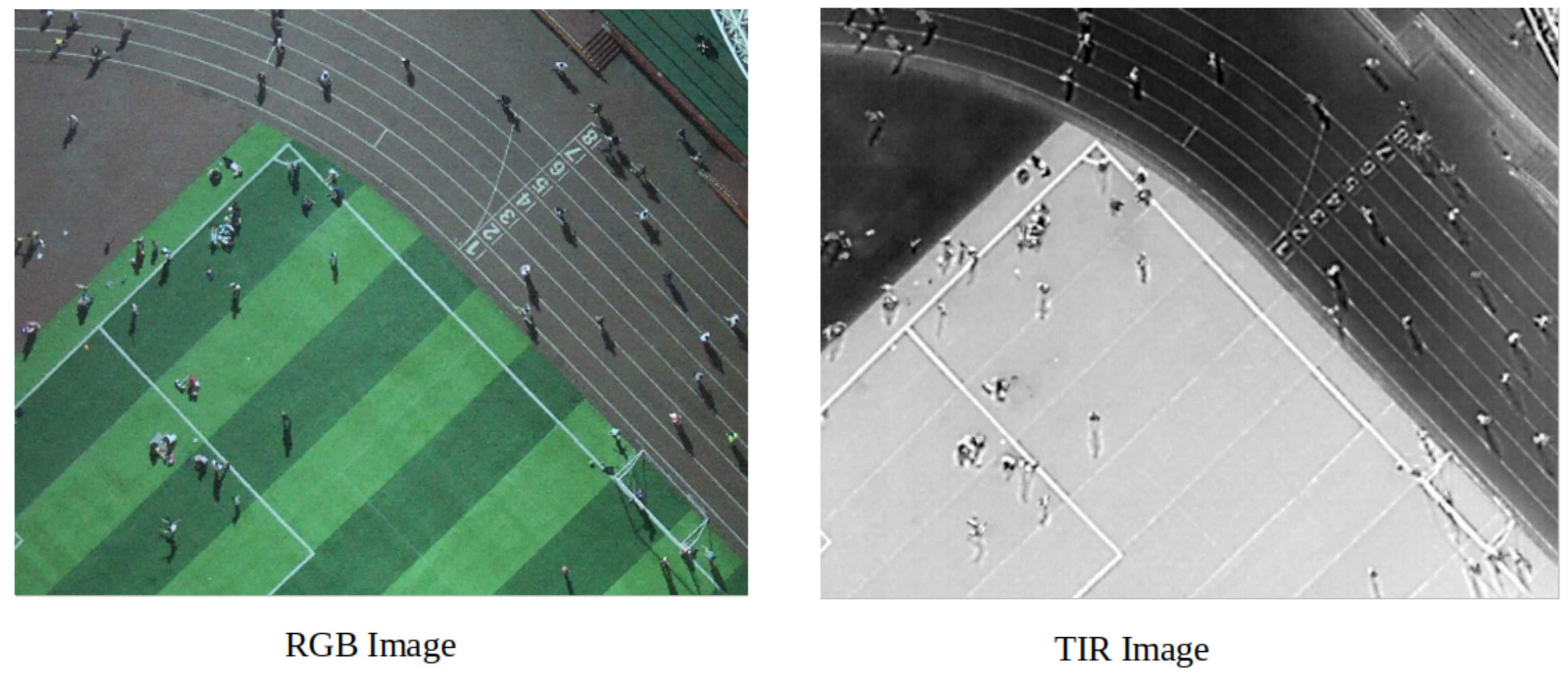

- Developing a method to handle environmental challenges, including illumination and mobility changes, by combining thermal infrared (TIR) images with RGB images (RGBT).

- Manually annotating the original dataset in a YOLO-compatible format; the annotations will be made publicly available.

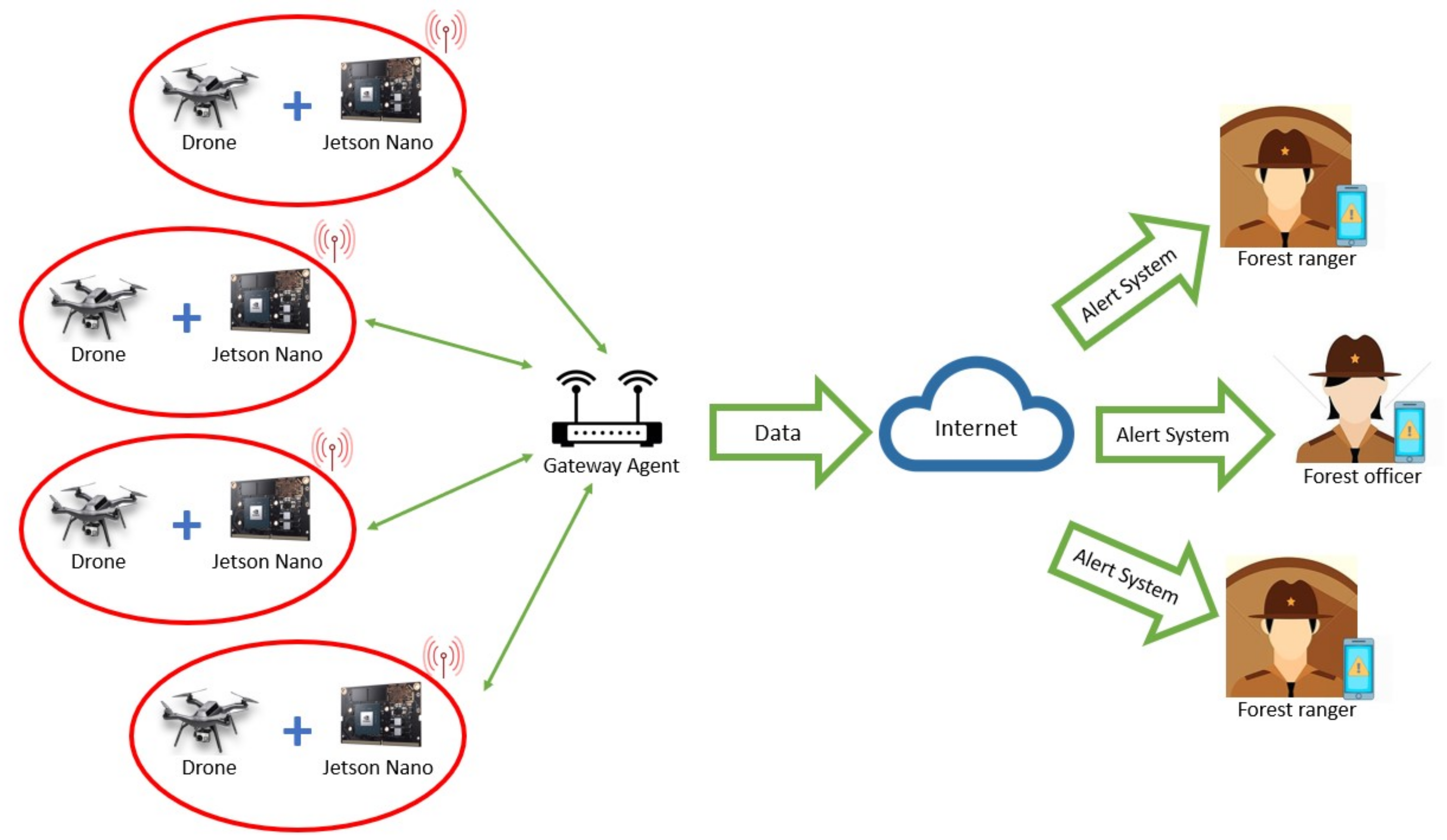

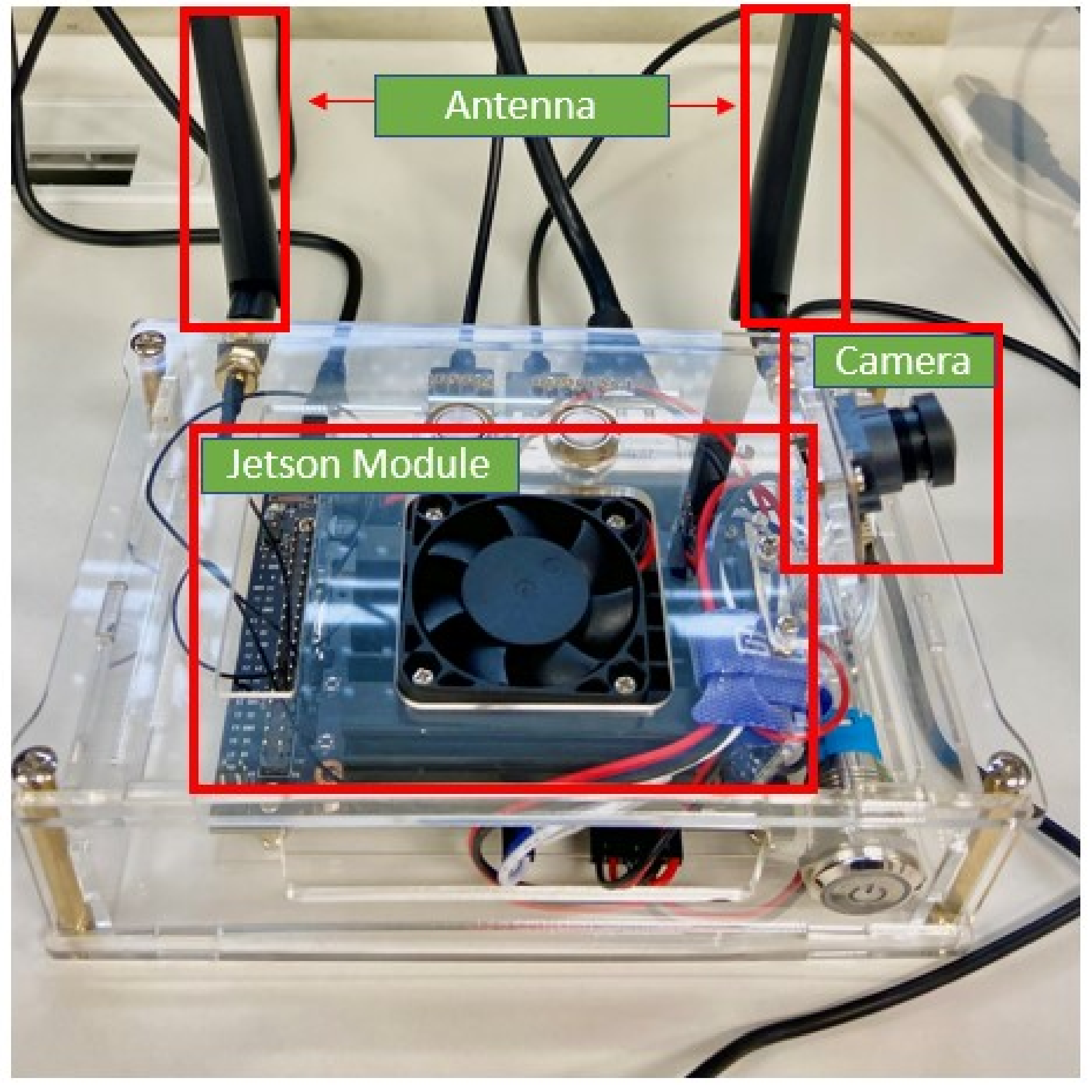

- Proposing a surveillance system for wildlife conservation using the NVIDIA Jetson Nano module.
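The YOLO annotation format mentioned among the contributions stores one text line per object: a zero-based class index followed by the box center x, center y, width and height, each normalized by the image width or height. The following is a minimal sketch of converting pixel-space boxes into such lines. It assumes that the source annotations give corner coordinates `(x_min, y_min, x_max, y_max)` (the original annotation format is not stated here) and that the single class "person" is mapped to index 0; `to_yolo_line` and `to_yolo_label_file` are hypothetical helper names.

```python
from typing import Iterable, Tuple

# Hypothetical input format: pixel-space corner coordinates (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]

PERSON_CLASS_ID = 0  # assumption: single-class dataset, "person" mapped to index 0


def _clip(value: float) -> float:
    """Keep a normalized value inside [0, 1]."""
    return min(max(value, 0.0), 1.0)


def to_yolo_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Convert one corner-format box into a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    x_c = _clip((x_min + x_max) / 2 / img_w)  # normalized box center x
    y_c = _clip((y_min + y_max) / 2 / img_h)  # normalized box center y
    w = _clip((x_max - x_min) / img_w)        # normalized box width
    h = _clip((y_max - y_min) / img_h)        # normalized box height
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def to_yolo_label_file(boxes: Iterable[Box], img_w: int, img_h: int) -> str:
    """Build the text of one label file: one line per annotated person."""
    lines = [to_yolo_line(PERSON_CLASS_ID, b, img_w, img_h) for b in boxes]
    return "\n".join(lines) + ("\n" if lines else "")


if __name__ == "__main__":
    print(to_yolo_label_file([(160, 120, 320, 360)], img_w=640, img_h=480), end="")
```

For a 640 × 480 image with one person boxed at (160, 120) to (320, 360), the script prints `0 0.375000 0.500000 0.250000 0.500000`. YOLOv5 expects each label file to share the base name of its image, with images and labels kept in parallel `images/` and `labels/` directories.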

2. Related Work

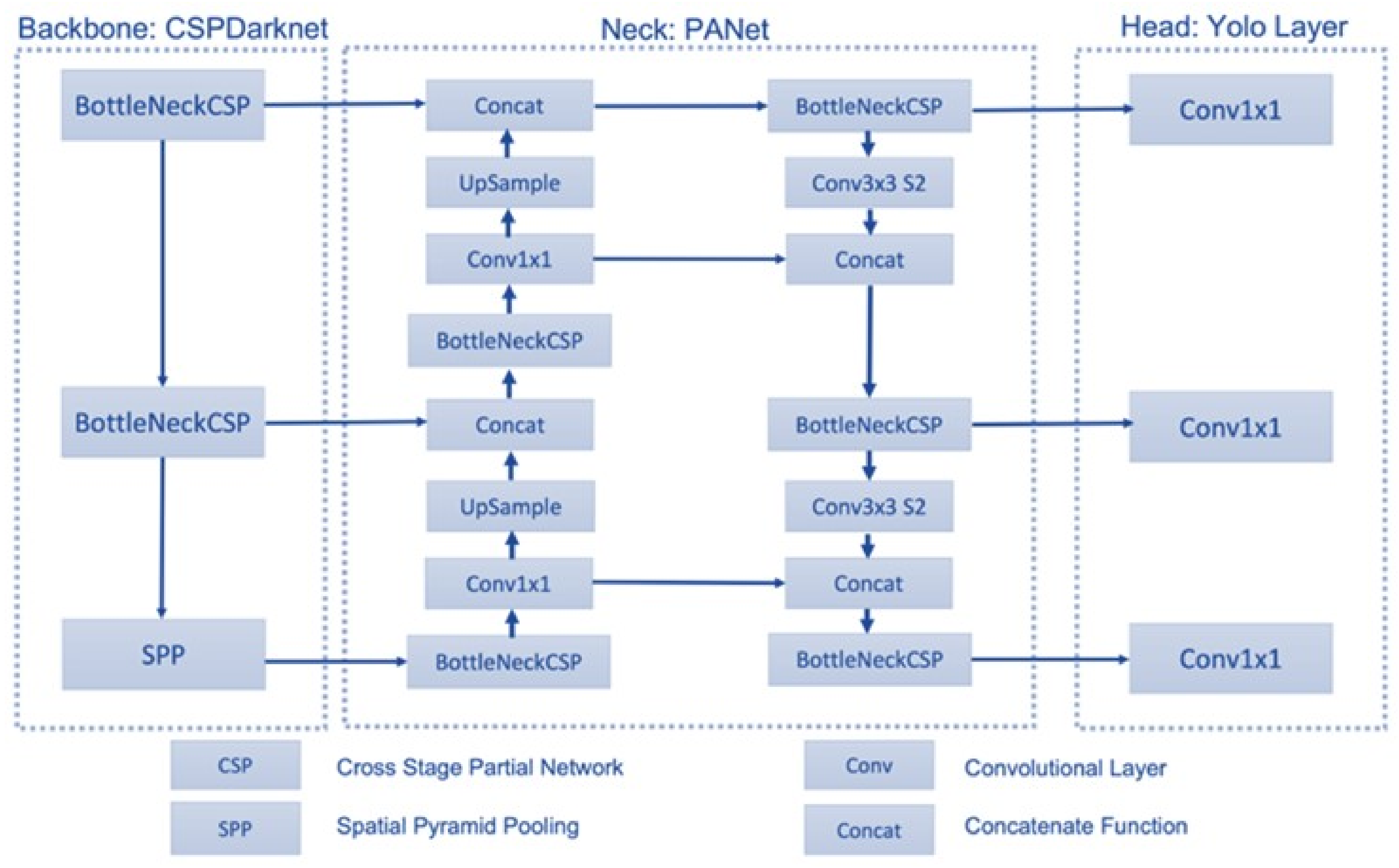

2.1. You Only Look Once (YOLO)

2.2. NVIDIA Jetson Modules

3. Methodology

3.1. Object Detector

3.2. Dataset

3.3. Experiment Setup

- Original YOLOv5 model (pretrained on the MS COCO RGB dataset);

- VisDrone RGB image data + transfer learning YOLOv5s model (YOLO-RGB-TL);

- VisDrone RGB image data (YOLO-RGB);

- VisDrone TIR image data + transfer learning YOLOv5s model (YOLO-TIR-TL);

- VisDrone TIR image data (YOLO-TIR);

- VisDrone RGB and TIR image data + transfer learning YOLOv5s model (YOLO-RGBT-TL);

- VisDrone RGB and TIR image data (YOLO-RGBT).
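The six trained configurations above form a 3 × 2 grid (RGB, TIR or RGBT data, each with and without transfer learning). The seventh entry, the original YOLOv5 model, is only evaluated. The following is a minimal sketch of enumerating that grid and building a training command for each cell with the Ultralytics YOLOv5 `train.py` script. It rests on several assumptions: "transfer learning" means starting from the pretrained `yolov5s.pt` checkpoint, the non-TL runs train the same architecture from scratch via `--weights '' --cfg yolov5s.yaml`, and the dataset YAML file names are hypothetical. Epochs and batch size follow the training-parameter table. The exact commands used in the study are not given here.

```python
import shlex
from dataclasses import dataclass
from typing import List

MODALITIES = ("RGB", "TIR", "RGBT")


@dataclass(frozen=True)
class Experiment:
    name: str
    modality: str            # "RGB", "TIR" or "RGBT"
    transfer_learning: bool


def build_experiments() -> List[Experiment]:
    """Enumerate the six trained configurations (the COCO baseline is not trained)."""
    experiments = []
    for modality in MODALITIES:
        for tl in (True, False):
            name = f"YOLO-{modality}-TL" if tl else f"YOLO-{modality}"
            experiments.append(Experiment(name, modality, tl))
    return experiments


def train_command(exp: Experiment, epochs: int = 100, batch_size: int = 16) -> List[str]:
    """Build an argument list for YOLOv5's train.py."""
    cmd = [
        "python", "train.py",
        "--data", f"visdrone_{exp.modality.lower()}.yaml",  # hypothetical file name
        "--epochs", str(epochs),
        "--batch-size", str(batch_size),
    ]
    if exp.transfer_learning:
        # Assumption: fine-tune from the COCO-pretrained YOLOv5s checkpoint.
        cmd += ["--weights", "yolov5s.pt"]
    else:
        # Assumption: train the YOLOv5s architecture from random initialization.
        cmd += ["--weights", "", "--cfg", "yolov5s.yaml"]
    return cmd


if __name__ == "__main__":
    for exp in build_experiments():
        print(f"{exp.name}: {shlex.join(train_command(exp))}")
```

The sketch only prints the commands. Running them requires a clone of the YOLOv5 repository and the prepared dataset YAML files.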

3.4. Evaluation

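- TP denotes the number of true positives (detections that match a ground-truth person);

- FP denotes the number of false positives (detections with no matching ground-truth person);

- FN denotes the number of false negatives (ground-truth persons that are not detected).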
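Using these counts, precision is TP / (TP + FP) and recall is TP / (TP + FN). AP summarizes the precision–recall curve obtained by sweeping the confidence threshold. The following is a minimal sketch of the whole chain for a single class. It assumes a detection counts as TP when its IoU with a not-yet-matched ground-truth box is at least 0.5; the threshold used in the study is not stated here. It also uses all-point interpolated AP, whereas YOLOv5's own validation code may use a different interpolation scheme, so results need not match its output exactly. All function names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(preds: Sequence[Tuple[float, Box]], gts: Sequence[Box],
          iou_thr: float = 0.5) -> List[bool]:
    """Greedily label each prediction TP (True) or FP (False), highest score first."""
    used = [False] * len(gts)
    flags = []
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not used[j] and overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0 and best_iou >= iou_thr:
            used[best_j] = True  # each ground-truth box can be matched only once
            flags.append(True)
        else:
            flags.append(False)
    return flags


def precision_recall_ap(flags: Sequence[bool], num_gt: int) -> Tuple[float, float, float]:
    """Precision and recall over all predictions, plus all-point interpolated AP."""
    if not flags:
        return 0.0, 0.0, 0.0
    tp = fp = 0
    precisions, recalls = [], []
    for is_tp in flags:  # flags are ordered by descending confidence
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt if num_gt else 0.0)  # FN = num_gt - tp
    # Interpolated precision: best precision at this recall level or beyond.
    interp = precisions[:]
    for i in range(len(interp) - 2, -1, -1):
        interp[i] = max(interp[i], interp[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, interp):
        ap += (r - prev_recall) * p  # area of each recall step
        prev_recall = r
    return precisions[-1], recalls[-1], ap
```

For example, with two ground-truth boxes and three detections labeled TP, FP, TP in order of confidence, precision ends at 2/3, recall at 1.0, and AP is 0.5 × 1 + 0.5 × 2/3 = 5/6.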

4. Experiments and Results

4.1. Model Search

4.2. Inference on the Jetson GPUs

4.3. The Limitation of This Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Technical Specification | NVIDIA Jetson Nano |
|---|---|
| AI performance | 472 GFLOPs |
| GPU | NVIDIA Maxwell architecture |
| CPU | Quad-core ARM Cortex-A57 MPCore processor |
| CUDA cores | 128 |
| Memory | 4 GB 64-bit LPDDR4, 25.6 GB/s |
| Power | 5 W / 10 W |
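The performance and bandwidth figures in the specification table can be reproduced with simple arithmetic. The following is a minimal sketch that relies on two values taken from NVIDIA's published Jetson Nano specifications rather than from the table: a maximum GPU clock of 921.6 MHz and an LPDDR4 data rate of 3200 MT/s (1600 MHz, double data rate). It also assumes that the 472 GFLOPs figure is FP16 throughput, where each CUDA core completes one fused multiply-add (2 FLOPs) on a packed pair of half-precision values per cycle.

```python
CUDA_CORES = 128         # from the table
GPU_CLOCK_HZ = 921.6e6   # assumption: Jetson Nano maximum GPU clock
FLOPS_PER_FMA = 2        # a fused multiply-add counts as two floating-point operations
FP16_LANES = 2           # assumption: packed FP16 processes two values per FMA

BUS_WIDTH_BITS = 64      # from the table
DATA_RATE_TPS = 3200e6   # assumption: LPDDR4 at 1600 MHz, two transfers per clock


def peak_gflops() -> float:
    """Peak FP16 throughput in GFLOPs."""
    return CUDA_CORES * GPU_CLOCK_HZ * FLOPS_PER_FMA * FP16_LANES / 1e9


def peak_bandwidth_gbs() -> float:
    """Peak memory bandwidth in GB/s (bytes per transfer times transfers per second)."""
    return BUS_WIDTH_BITS / 8 * DATA_RATE_TPS / 1e9


if __name__ == "__main__":
    print(f"{peak_gflops():.1f} GFLOPs, {peak_bandwidth_gbs():.1f} GB/s")
```

This prints `471.9 GFLOPs, 25.6 GB/s`, which rounds to the 472 GFLOPs and 25.6 GB/s listed above. These are theoretical peaks; measured inference throughput on the module is lower.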

| Training Parameter | Value |
|---|---|
| Number of classes | 1 |
| Batch size | 16 |
| Epochs | 100 |
| Learning rate | 1 × 10 |

| Model | Precision (%) | Recall (%) | AP (%) |
|---|---|---|---|
| YOLOv5 | 24.6 | 7.3 | 12.36 |
| YOLO-RGB-TL | 80.8 | 75.4 | 79.8 |
| YOLO-RGB | 71.5 | 68 | 70 |
| YOLO-TIR-TL | 12.2 | 13.3 | 4.94 |
| YOLO-TIR | 9.89 | 12 | 4.01 |
| YOLO-RGBT-TL | 80.1 | 75.1 | 79.1 |
| YOLO-RGBT | 76.5 | 66.8 | 71.4 |

| Model | Precision (%) | Recall (%) | AP (%) |
|---|---|---|---|
| YOLOv5 | 21.2 | 4.2 | 8.3 |
| YOLO-RGB-TL | 76.3 | 63.3 | 71.3 |
| YOLO-RGB | 66 | 61.4 | 64 |
| YOLO-TIR-TL | 86.6 | 84.2 | 88.8 |
| YOLO-TIR | 81.7 | 80.1 | 84.6 |
| YOLO-RGBT-TL | 86.3 | 83.9 | 88.8 |
| YOLO-RGBT | 82.5 | 81.5 | 85.7 |
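Both result tables can be read the same way: each transfer-learning model is paired with its counterpart trained on the same data without transfer learning. The following sketch computes the AP difference for each pair, using AP values copied from the two tables. The names `table_a` and `table_b` are illustrative labels for the first and second table, since the tables carry no captions here.

```python
from typing import Dict

# AP (%) values copied from the two result tables, in order of appearance.
table_a: Dict[str, float] = {
    "YOLO-RGB-TL": 79.8, "YOLO-RGB": 70.0,
    "YOLO-TIR-TL": 4.94, "YOLO-TIR": 4.01,
    "YOLO-RGBT-TL": 79.1, "YOLO-RGBT": 71.4,
}
table_b: Dict[str, float] = {
    "YOLO-RGB-TL": 71.3, "YOLO-RGB": 64.0,
    "YOLO-TIR-TL": 88.8, "YOLO-TIR": 84.6,
    "YOLO-RGBT-TL": 88.8, "YOLO-RGBT": 85.7,
}


def tl_gains(ap: Dict[str, float]) -> Dict[str, float]:
    """AP of each -TL model minus AP of the same configuration without TL."""
    gains = {}
    for name, value in ap.items():
        if name.endswith("-TL"):
            base = name[: -len("-TL")]
            gains[base] = round(value - ap[base], 2)  # round away float noise
    return gains


if __name__ == "__main__":
    for label, table in (("first table", table_a), ("second table", table_b)):
        print(label, tl_gains(table))
```

In both tables, every -TL model reaches a higher AP than its counterpart. The gain ranges from 0.93 points (TIR data, first table) to 9.8 points (RGB data, first table).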

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mantau, A.J.; Widayat, I.W.; Leu, J.-S.; Köppen, M. A Human-Detection Method Based on YOLOv5 and Transfer Learning Using Thermal Image Data from UAV Perspective for Surveillance System. Drones 2022, 6, 290. https://doi.org/10.3390/drones6100290
