Auto-Encoder Learning-Based UAV Communications for Livestock Management

Abstract

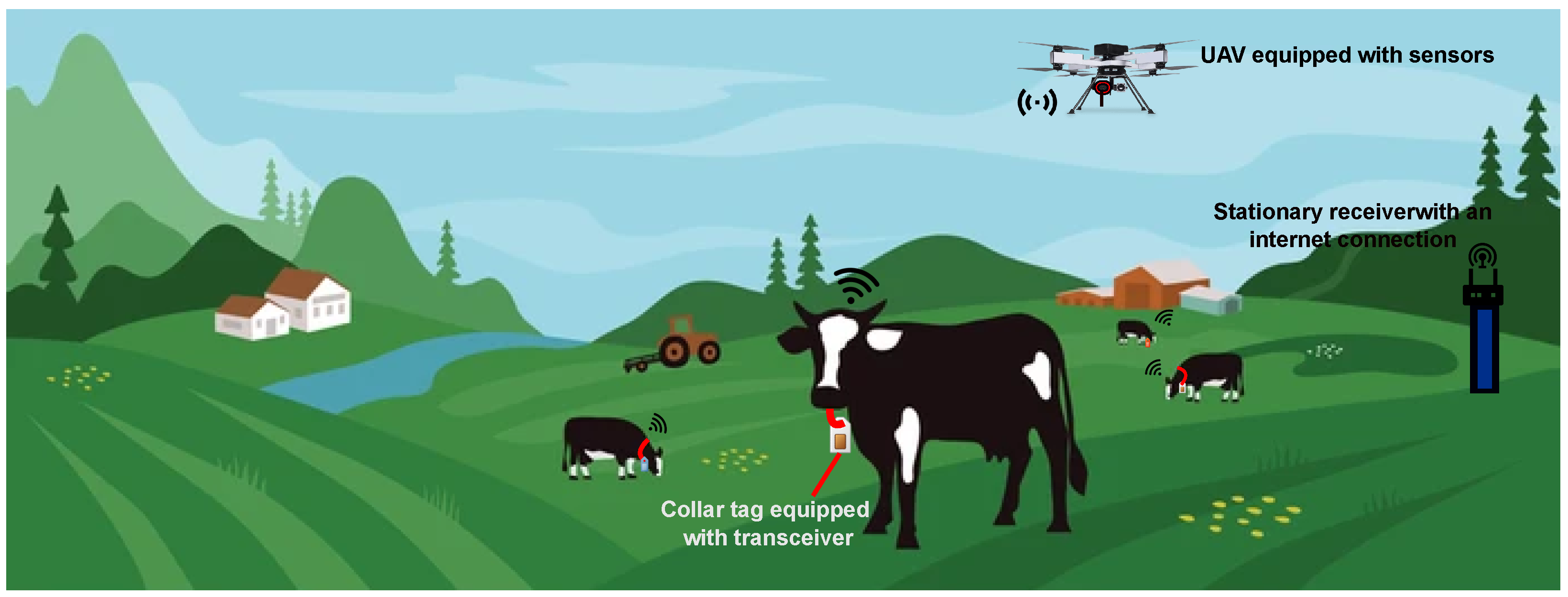

1. Introduction

1.1. Motivation

1.2. Related Works

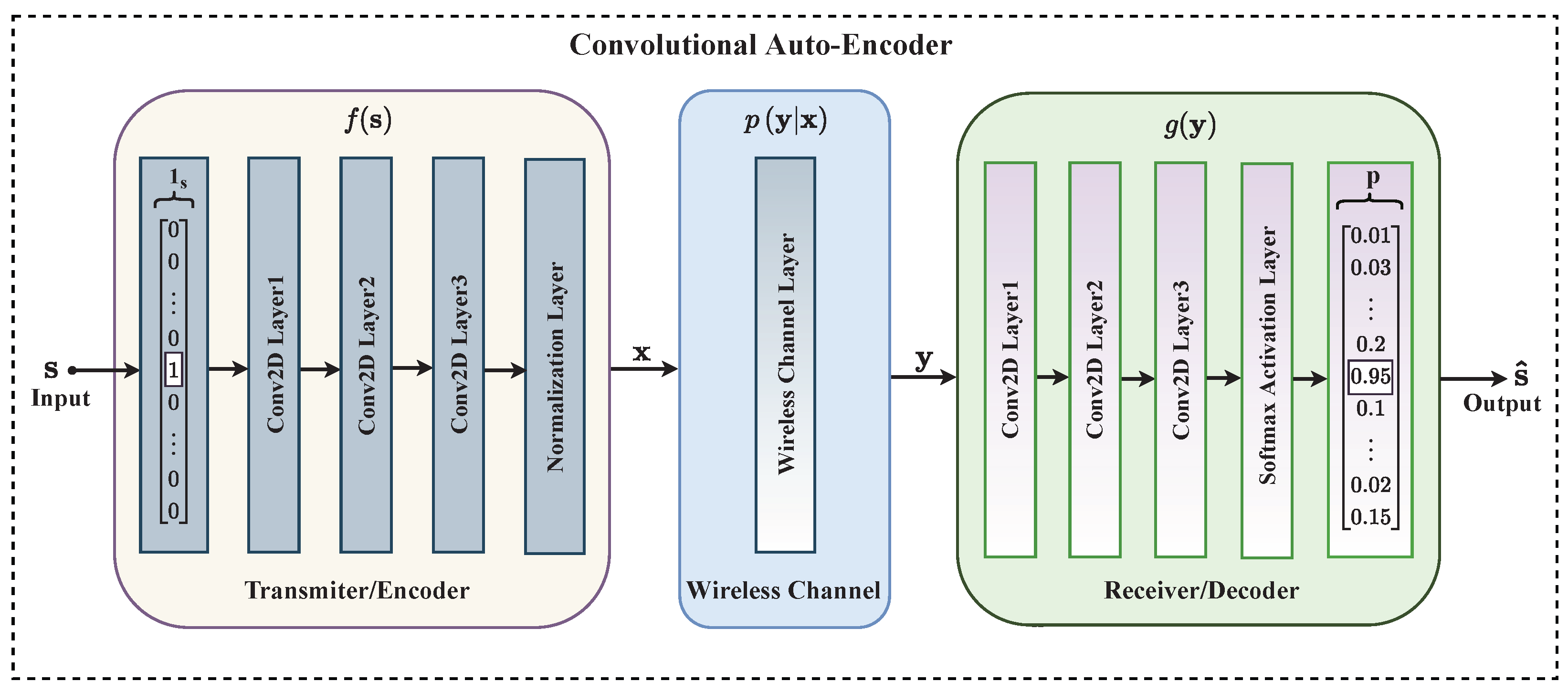

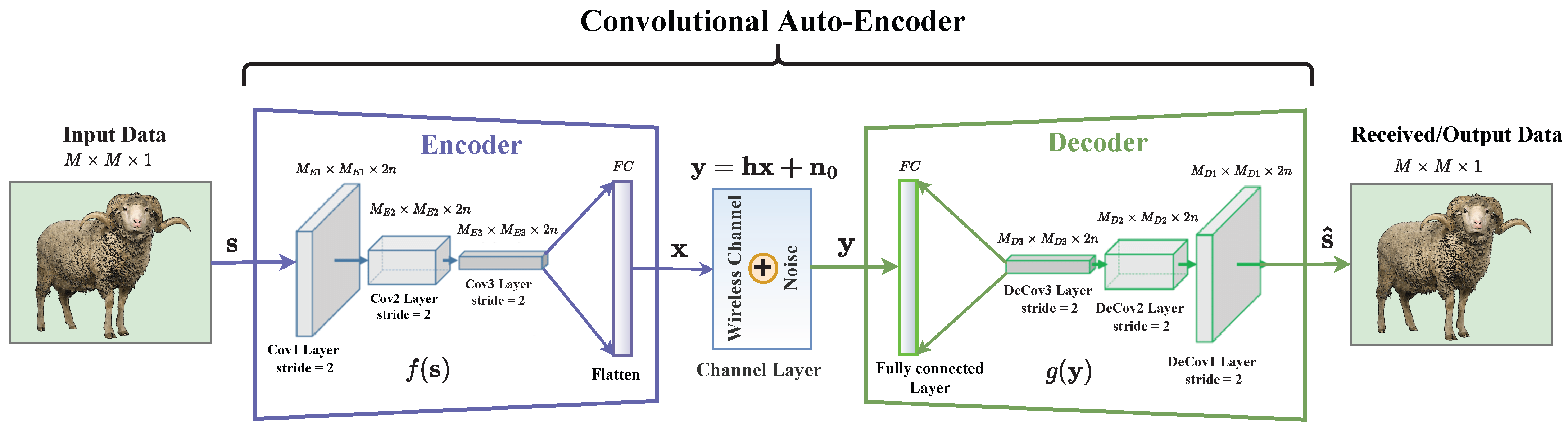

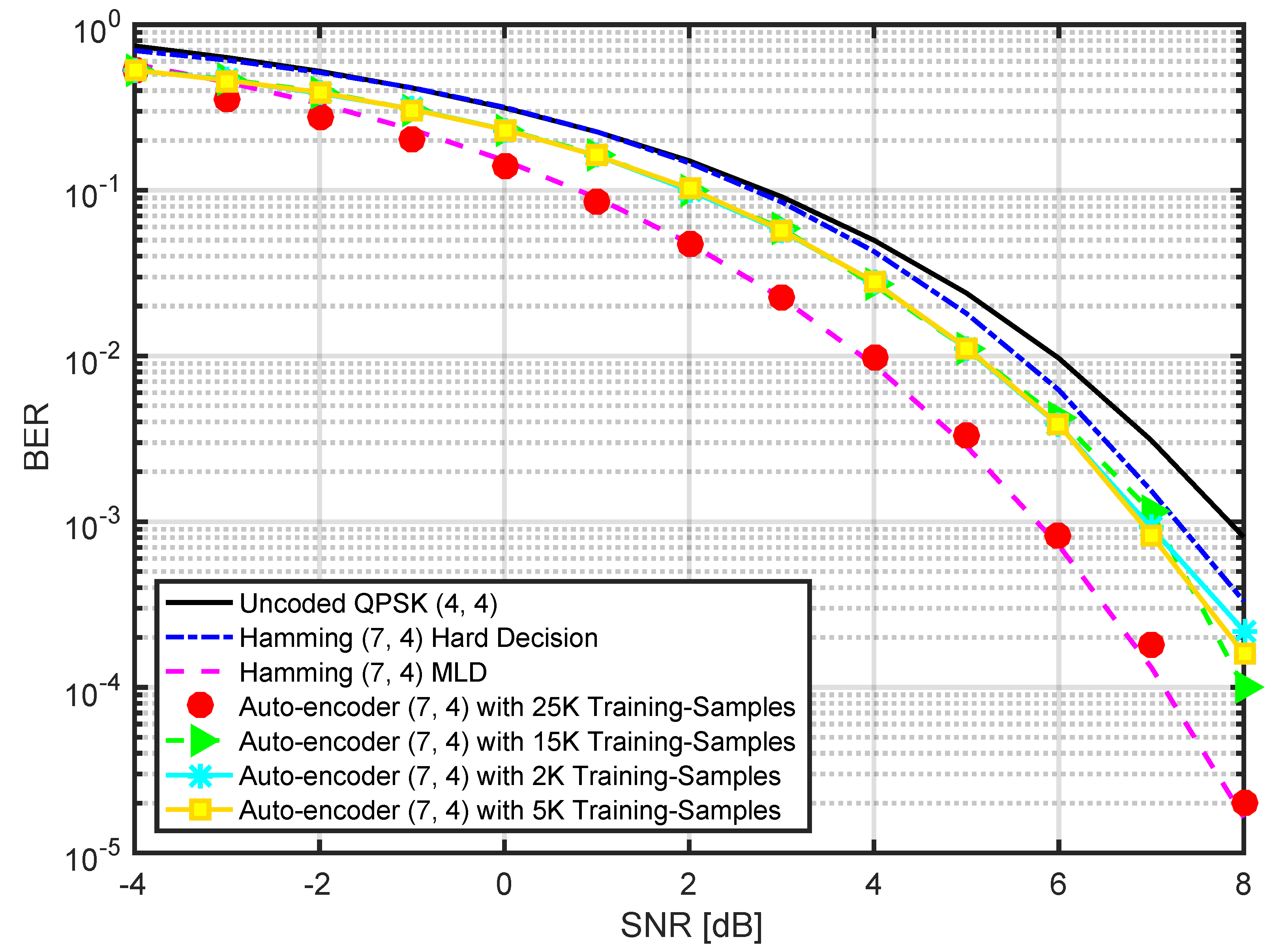

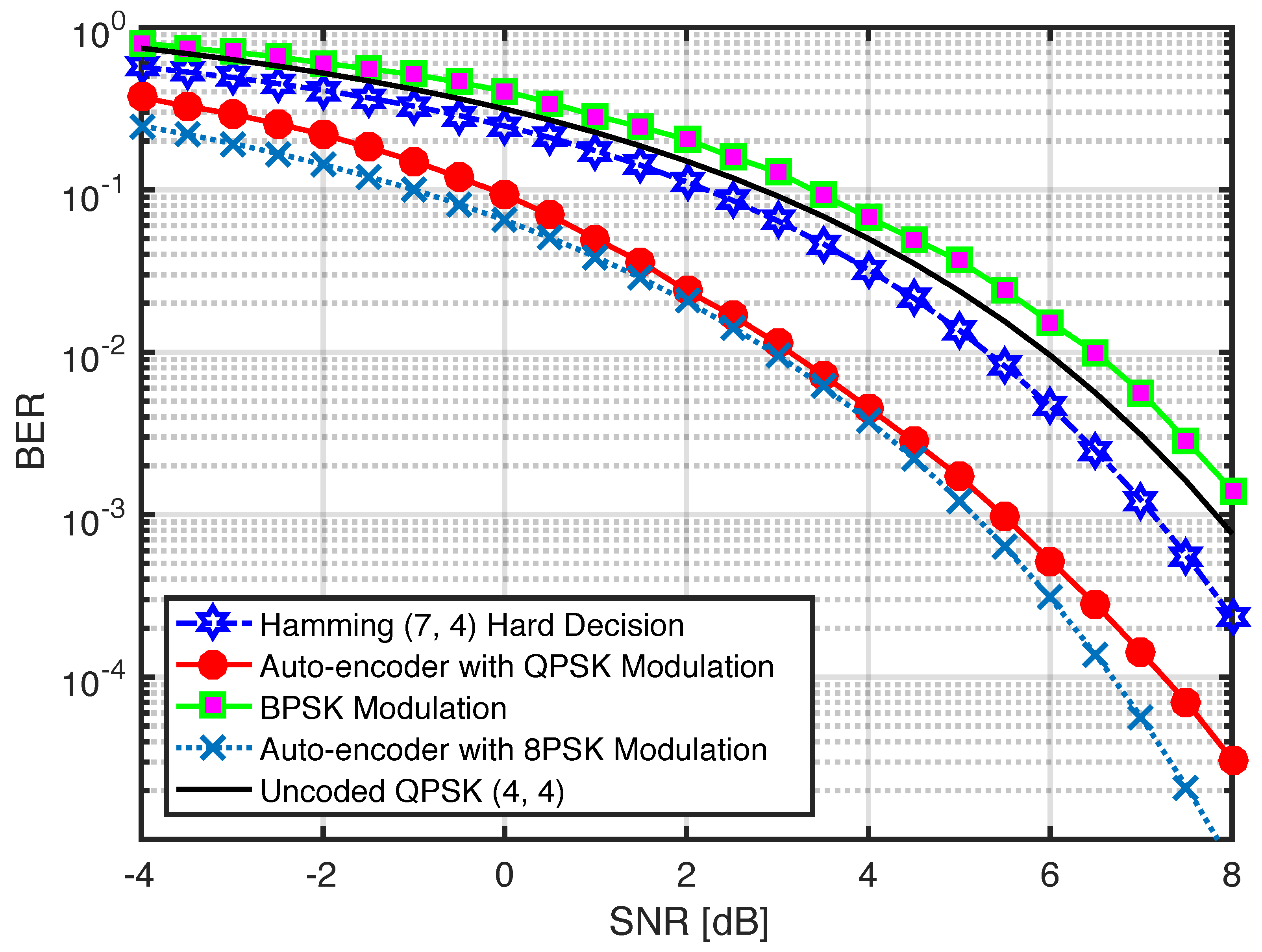

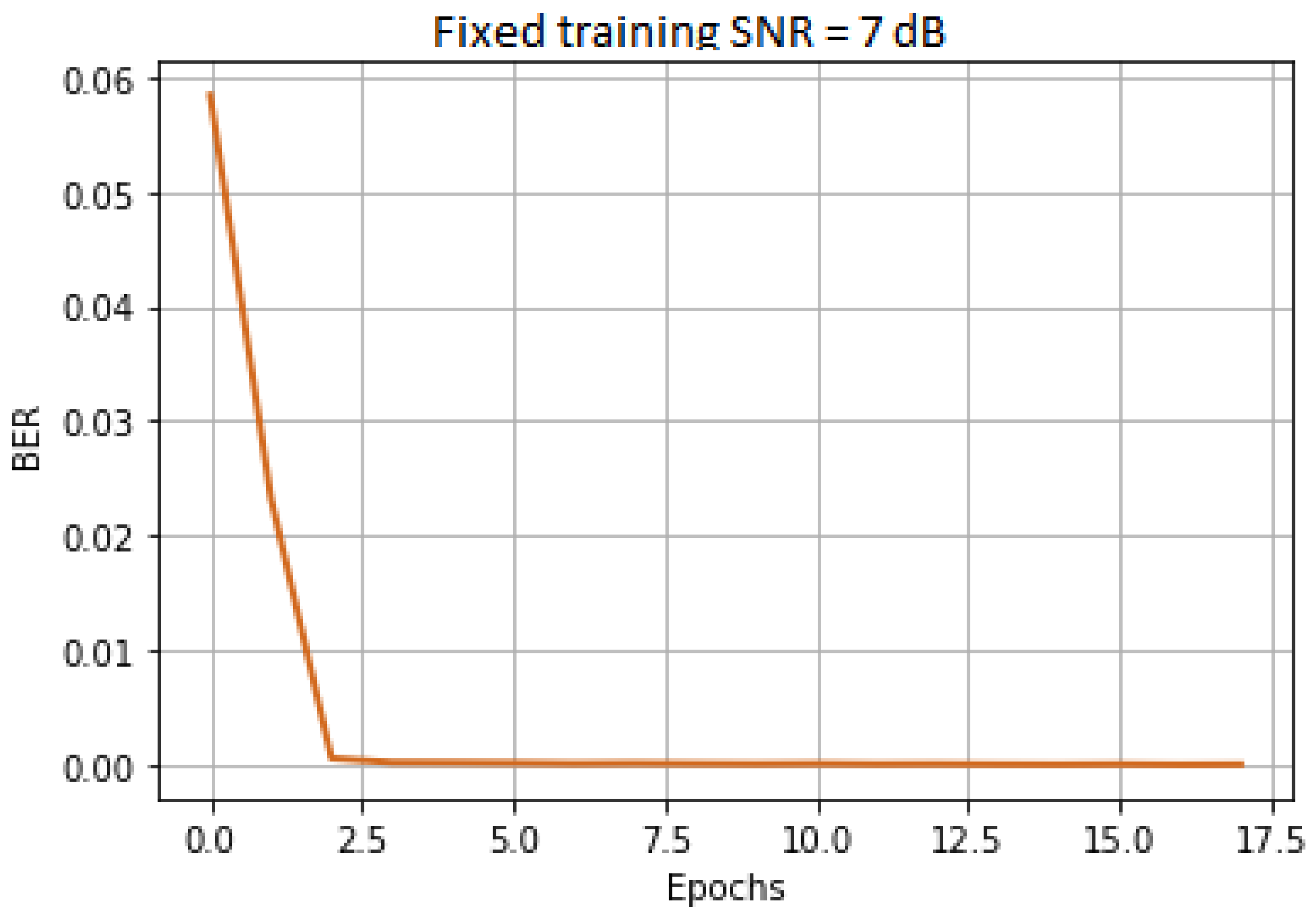

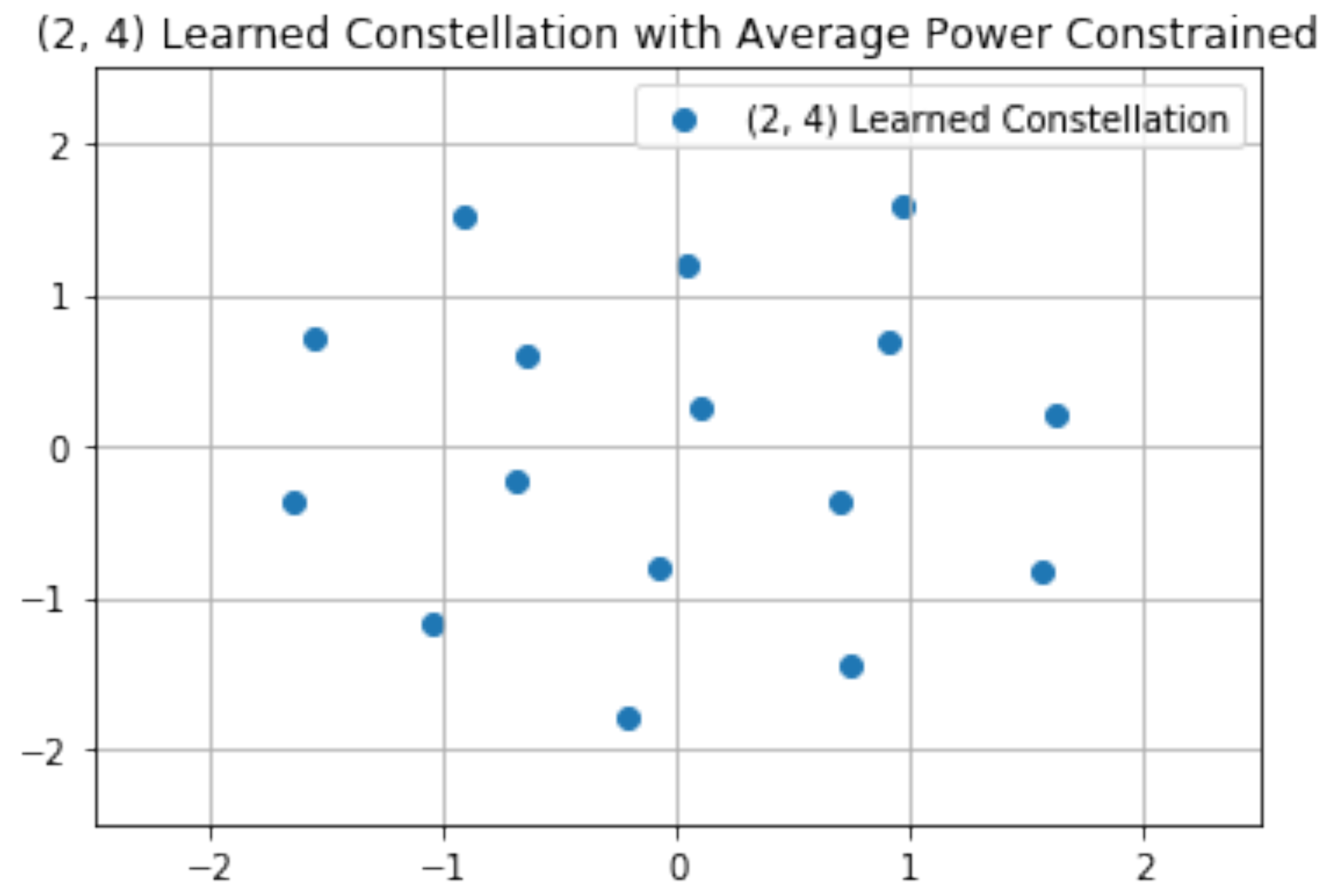

- We built an auto-encoder for end-to-end wireless communications in UAV-assisted livestock management systems. We showed that the entire transmitter (UAV) and receiver (GCS or UAV) implementations can be learned jointly for a given communication link by optimizing a chosen loss function (e.g., minimizing the BER). The basic idea is to describe the transmitter, channel, and receiver as a single deep CNN that is trained as an auto-encoder. This technique can also be used as a model approximator to approximate optimal solutions for systems with unknown channel models and loss functions.

- We simulated the communication links at several communication rates to learn various communication schemes, such as QPSK, 8PSK, and 16QAM.

- For a (7, 4) communication rate, the performance of the proposed auto-encoder matched that of the Hamming (7, 4) code with optimal maximum likelihood decoding.
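To make the (7, 4) baseline in the last contribution concrete, the following is a minimal sketch of Hamming (7, 4) encoding with soft-decision maximum likelihood decoding. It is not the authors' code. It assumes BPSK signalling over an AWGN channel, one common systematic generator matrix, and block error rate as the metric; the names `G`, `ml_decode`, and `simulate_bler` are illustrative.

```python
import itertools

import numpy as np

# One common systematic generator matrix of the Hamming (7, 4) code
# (an assumption; any equivalent generator matrix would serve).
G = np.array([
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
])

# All 16 four-bit messages and their seven-bit codewords.
MESSAGES = np.array(list(itertools.product([0, 1], repeat=4)))
CODEBOOK = (MESSAGES @ G) % 2
# BPSK mapping (assumed): bit 0 -> +1, bit 1 -> -1.
BPSK_CODEBOOK = 1.0 - 2.0 * CODEBOOK


def ml_decode(received):
    """Decode a (batch, 7) array of noisy BPSK symbols to (batch, 4) bits.

    Over AWGN, maximum likelihood decoding reduces to choosing the
    codeword with the smallest Euclidean distance to the received vector.
    """
    diff = received[:, None, :] - BPSK_CODEBOOK[None, :, :]
    dists = (diff ** 2).sum(axis=2)
    return MESSAGES[dists.argmin(axis=1)]


def simulate_bler(ebn0_db, num_blocks=20000, seed=0):
    """Estimate the block error rate at a given Eb/N0 in dB."""
    rng = np.random.default_rng(seed)
    rate = 4 / 7
    ebn0 = 10 ** (ebn0_db / 10)
    # Noise standard deviation per real dimension for unit-energy symbols.
    sigma = np.sqrt(1.0 / (2.0 * rate * ebn0))
    idx = rng.integers(0, 16, size=num_blocks)
    tx = BPSK_CODEBOOK[idx]
    rx = tx + sigma * rng.normal(size=tx.shape)
    decoded = ml_decode(rx)
    return float(np.mean(np.any(decoded != MESSAGES[idx], axis=1)))
```

Calling `simulate_bler` over a range of Eb/N0 values gives a reference curve against which a learned (7, 4) auto-encoder could be compared under the same noise model.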

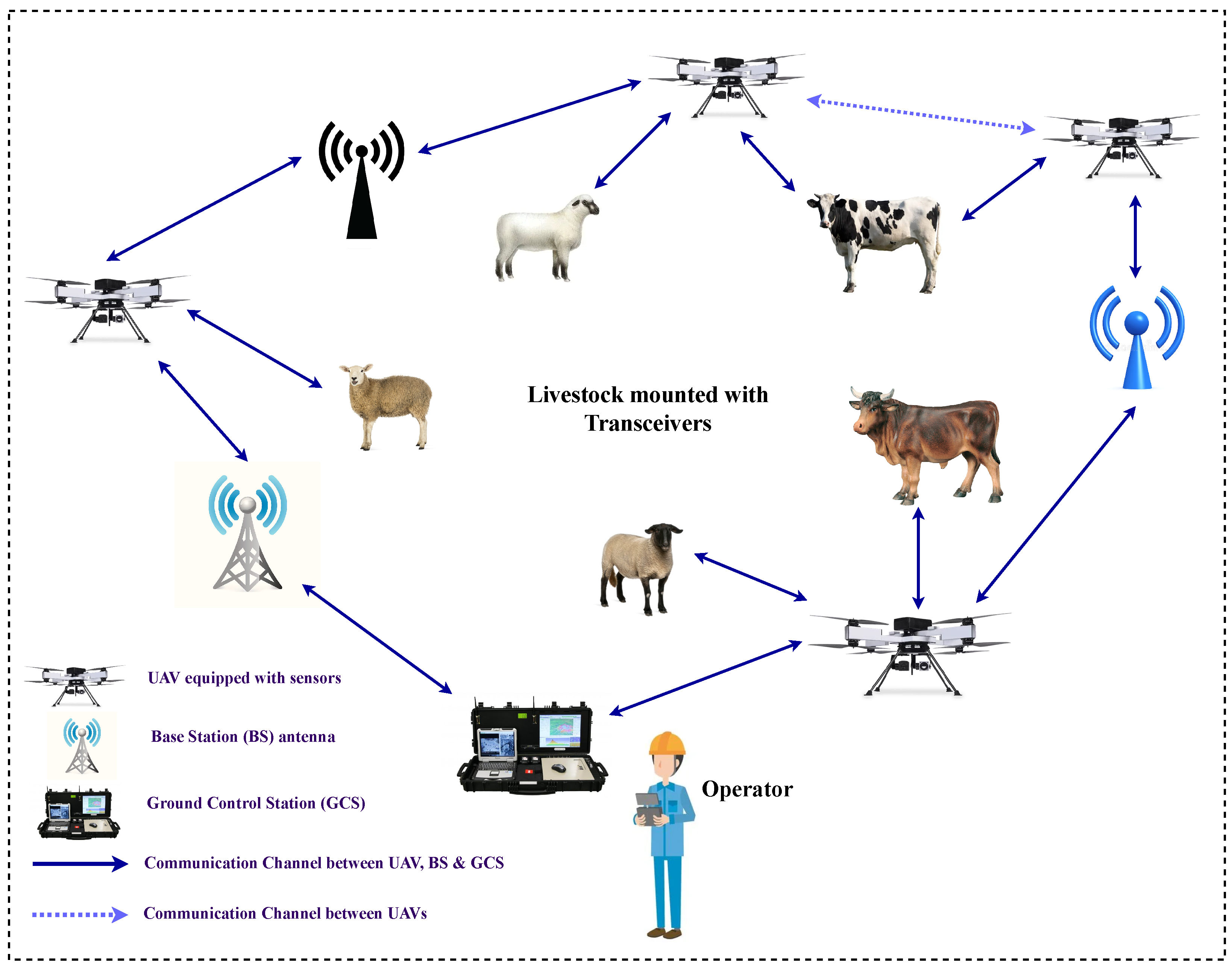

2. System Model and Problem Formulation

3. Proposed Methodology

Data Generation, Training and Inference

4. Results and Discussions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Layer | Output |
|---|---|
| Input | |
| 2D Convolution + ReLU | , |
| 2D Convolution + ReLU | , |
| 2D Convolution + ReLU | , |
| Flatten | |
| Normalization | |
| Wireless channel + Noise | |
| Fully Connected + ReLU | |
| 2D Convolution + ReLU | , |
| 2D Convolution + ReLU | , |
| 2D Convolution + ReLU | , |
| Fully Connected + softmax | |
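The two non-trainable rows of the table, "Normalization" and "Wireless channel + Noise", can be sketched as plain functions. This is a hedged illustration rather than the authors' implementation. It assumes an energy constraint of the form ||x||² = n on each encoded block and a real-valued AWGN channel parameterized by Eb/N0 and the rate k/n; the function names and the small `eps` guard are illustrative.

```python
import numpy as np


def energy_normalize(x, eps=1e-12):
    """Scale each row of x (batch, n) so that its squared norm equals n.

    This enforces unit average power per channel use (assumed constraint).
    """
    n = x.shape[1]
    norm = np.linalg.norm(x, axis=1, keepdims=True)
    # eps guards against division by zero for an all-zero row.
    return np.sqrt(n) * x / np.maximum(norm, eps)


def awgn_channel(x, ebn0_db, k, n, rng):
    """Add white Gaussian noise to x for a rate k/n code at a given Eb/N0 (dB)."""
    rate = k / n
    ebn0 = 10 ** (ebn0_db / 10)
    # Noise standard deviation per real dimension for unit-power symbols.
    sigma = np.sqrt(1.0 / (2.0 * rate * ebn0))
    return x + sigma * rng.normal(size=x.shape)


# Example: normalize a batch of hypothetical encoder outputs and pass
# them through the channel at 5 dB for a (7, 4) configuration.
rng = np.random.default_rng(0)
encoded = rng.normal(size=(8, 7))
transmitted = energy_normalize(encoded)
received = awgn_channel(transmitted, ebn0_db=5.0, k=4, n=7, rng=rng)
```

In a trainable model these two steps would sit between the encoder's flatten layer and the decoder's first fully connected layer, with the noise resampled for every training batch.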

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alanezi, M.A.; Mohammad, A.; Sha’aban, Y.A.; Bouchekara, H.R.E.H.; Shahriar, M.S. Auto-Encoder Learning-Based UAV Communications for Livestock Management. Drones 2022, 6, 276. https://doi.org/10.3390/drones6100276