1. Introduction

As small unmanned aerial system (sUAS) methods and technologies become increasingly affordable, they are well poised to shift the way land and wildlife managers conduct data acquisition [1,2,3]. In forestry applications, sUAS platforms present opportunities to reduce operating cost and increase data precision by substituting for more traditional methods [4,5,6,7]. With sUAS methods, personnel needs are lowered, larger datasets are obtained faster, and limiting factors such as harsh terrain are not as impactful when compared to ground survey approaches.

sUAS are particularly advantageous when assessing forest classes and structure in fine detail [5]. Resolutions finer than 1 cm are possible, depending on flight configurations. Temporal scale is also relatively unrestricted, because sUAS technologies can be deployed easily and quickly [8]. This makes sUAS approaches a relatively cost-effective and flexible data acquisition option when compared to alternatives such as manned flight or satellite remote sensing methods [7].

Decentralization of forest management from government to smaller communities is an important trend that works in the interest of forest conservation [9]. Numerous countries have adopted or are beginning to adopt this structure of forest governance because of the benefits of empowering community or municipal level forest managers [10,11,12]. Successful forest governance looms large in the climate change agenda, with deforestation and loss of biodiversity being factors to consider [13]. Affordable and accessible forest monitoring methods are an invaluable tool to these communities of forest managers, many of whom are already leveraging sUAS technologies to acquire data and perform analysis [14,15,16,17]. Opportunities to reinforce the capabilities of these technologies and expand on their applications will only continue to enable forest managers who conduct data acquisition with limited resources.

Standout methods for assessing vertical structure of forested areas include Structure from Motion (SfM) and LIDAR, or light detection and ranging, with multiple examples of comparisons in the literature [18,19,20,21]. SfM uses photogrammetry algorithms and numerous overlapping photos to model vegetation structure, whereas LIDAR sends and receives light pulses for measuring “ranges” in the study area. Both methods generate a 3-dimensional point cloud representing vegetation structure. This is then used to assess a variety of forest indices, such as tree height, stand inventorying, or biomass measurements [6,22,23]. While LIDAR has been shown to be an effective approach for penetrating past the upper canopy to evaluate vertical forest structure, it is a relatively expensive method when compared to SfM.

The use of low-cost sUAS technologies has already been leveraged in other successful studies where SfM served as a feasible method of point cloud generation [

21,

24,

25,

26,

27]. SfM was employed in New South Wales to highlight the benefits and capabilities of photogrammetric methods for detecting sirex-attacked trees, and monitoring forest health remotely [

25]. Color orthophotos proved to be an effective tool in this case, especially when paired with a near-infrared band. Information as discrete as tree species was identified in another study, where supervised classification using a Random Forests classifier was performed to identify various tree species along multiple phenological timelines [

26]. The Red-Green-Blue color bands, the same acquired by most consumer-grade cameras, proved to be more effective at species identification than near-infrared sensing in this study. Another study used multi-temporal crop surface models (CSMs) to derive barley plant height (PH), and later estimate crop biomass using PH and linear modeling [

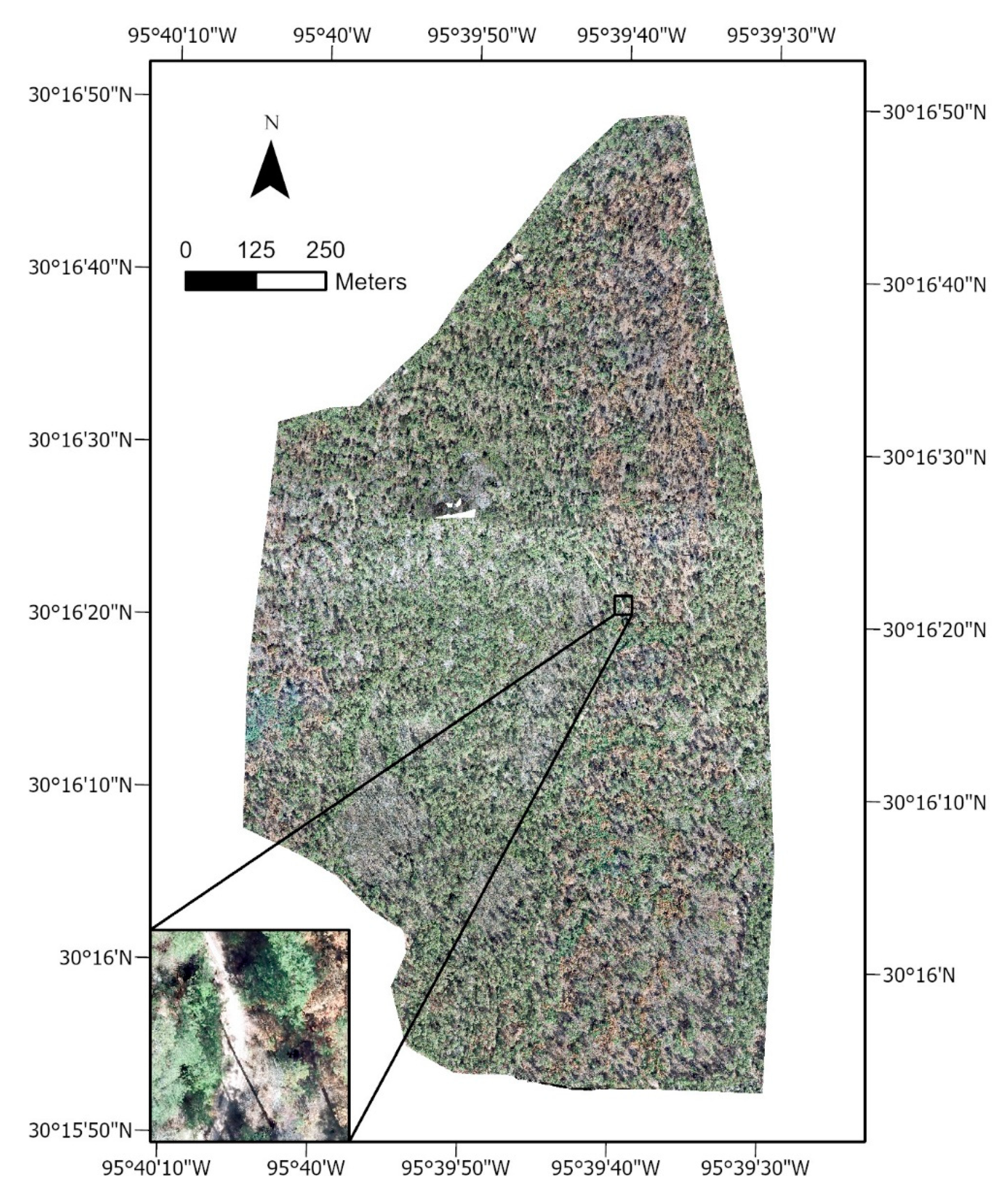

27]. These technologies are highly adaptable, and can be applied to a variety of research questions. In our case, we sought to use SfM combined with a consumer-grade sUAS to assess red-cockaded woodpecker habitat.

Red-cockaded woodpeckers (Dryobates borealis) are currently listed as an endangered species by the U.S. Fish and Wildlife Service, and are endemic to the Southeastern United States [28,29,30]. One of the primary characteristics defining high-quality red-cockaded woodpecker (RCW) habitat is old pine trees for roosting and nesting cavity excavation, preferably Longleaf Pine (Pinus palustris) or Shortleaf Pine (Pinus echinata), followed by Loblolly Pine (Pinus taeda) or Slash Pine (Pinus elliottii), although their presence, absence, and ratios can vary by region and management history [28,29,30,31,32]. Other factors determining habitat quality include herbaceous groundcover, intermediate pine density, and absence of midstory, all of which are positive contributors to RCW fitness [28,30,32]. These three habitat features are also positively correlated with the use of prescribed fire, particularly growing season fires, as a management tool [28,30,32]. Frances C. James et al. concluded that the density of larger trees (>35 cm diameter at breast height (dbh)) compared to smaller trees (15–25 cm dbh), and also the ratio of herbaceous ground cover to woody ground cover, are both major contributors to RCW fitness [33]. Another study by Frances C. James et al. suggests that, in addition to ground cover composition, the extent of natural pine regeneration was also significantly related to the birds’ success and therefore habitat quality [34].

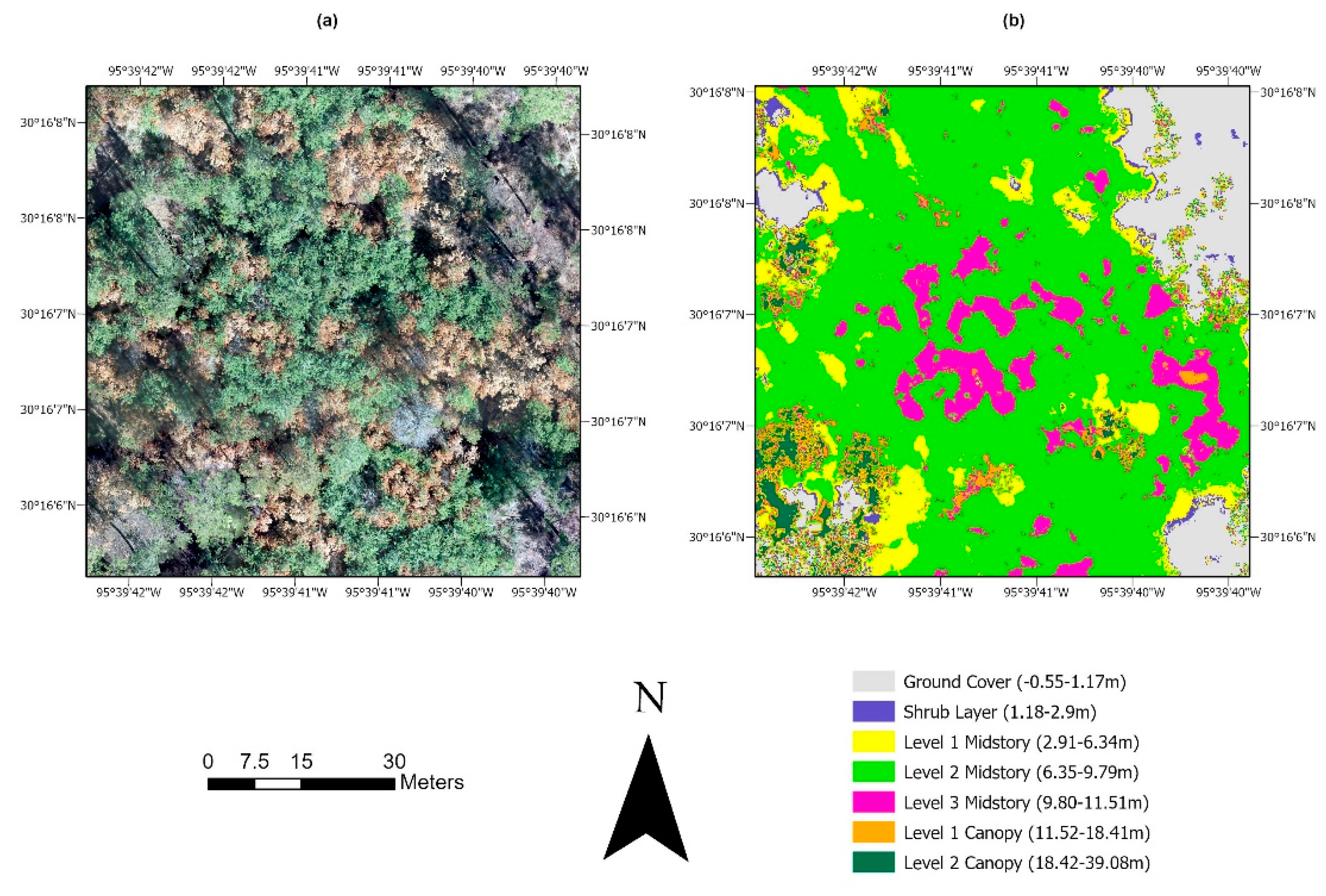

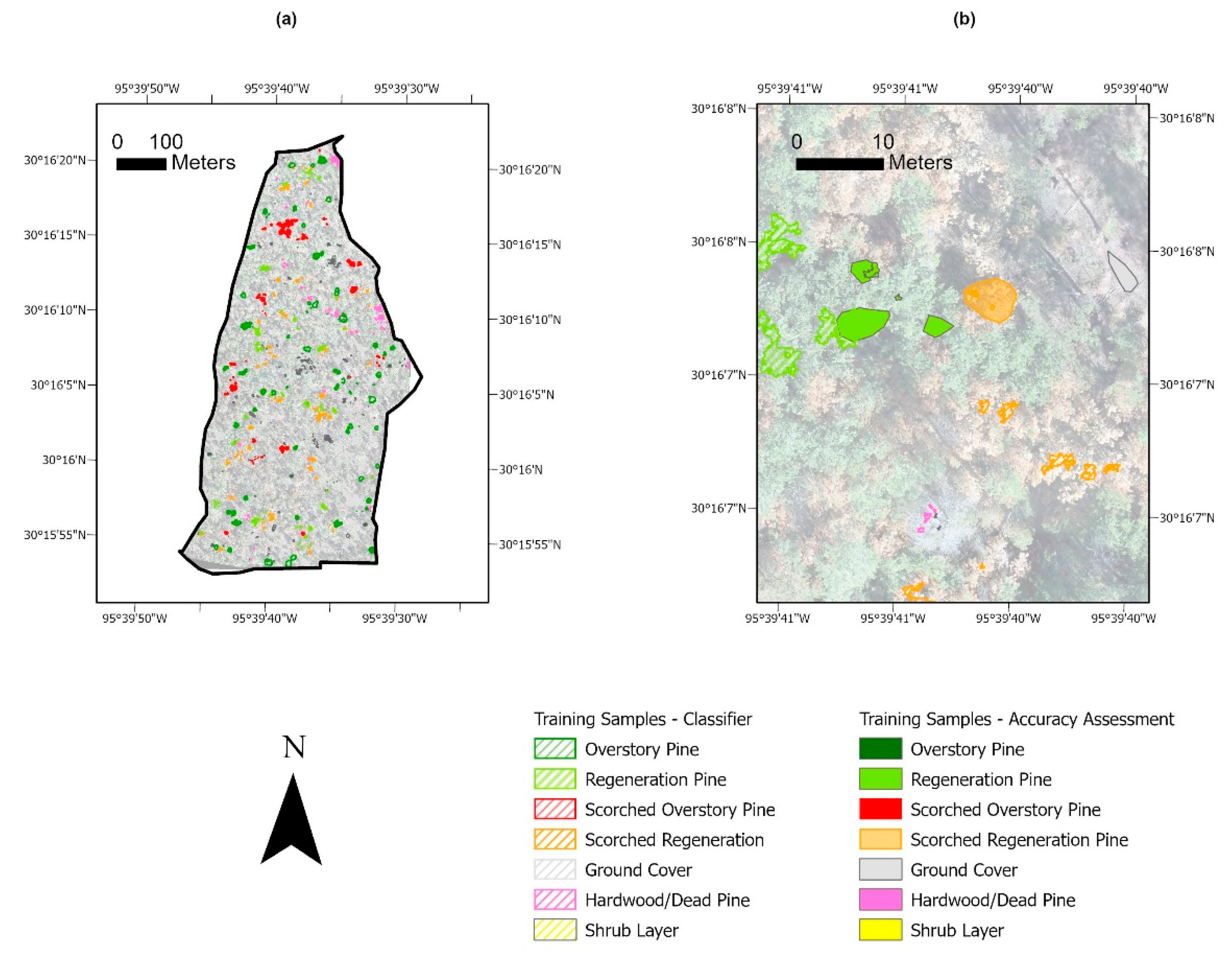

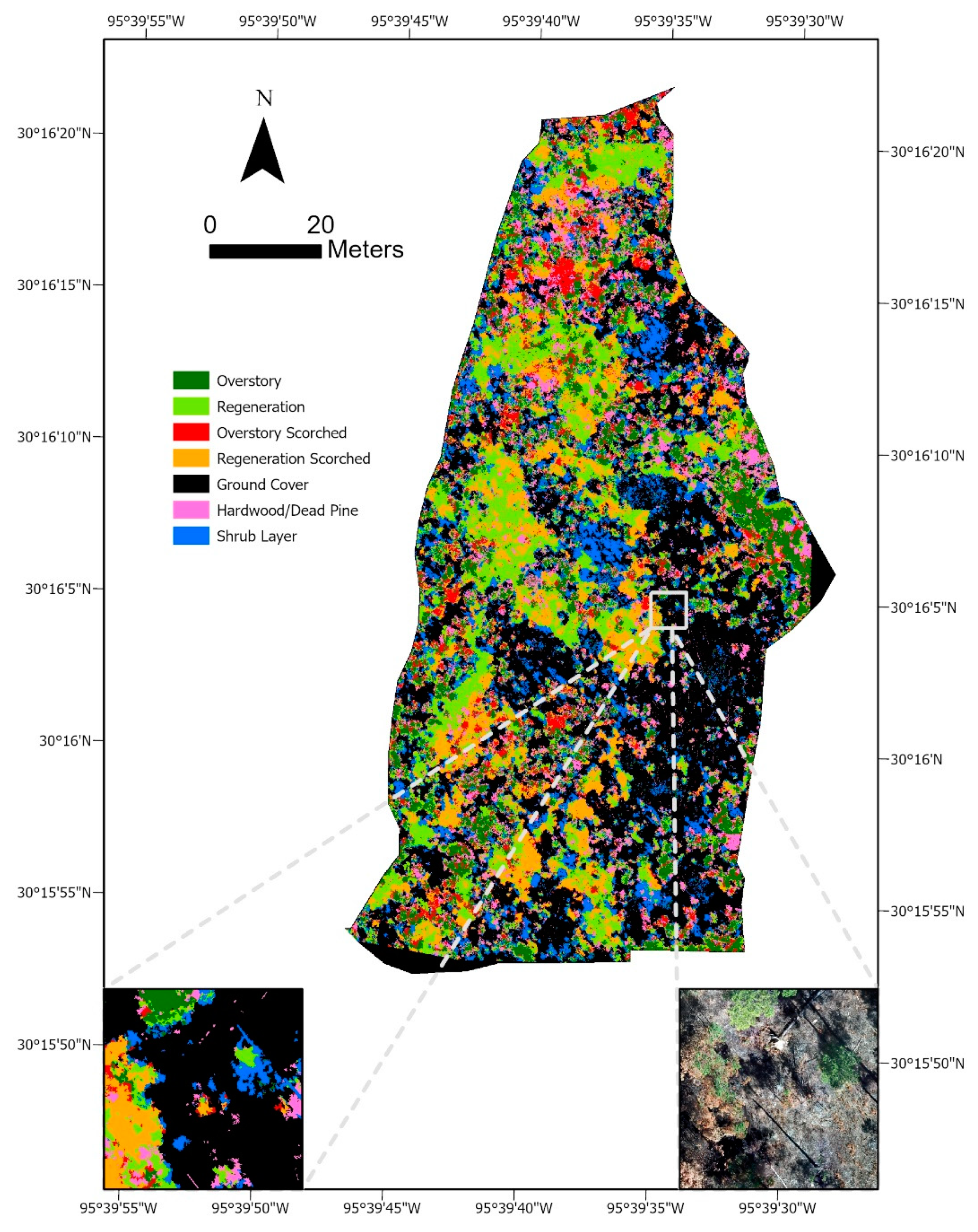

What previous studies have made clear is that the distribution of these various forest classes and their abundance are major indicators of RCW habitat quality. There are some examples where LIDAR technologies were used to assess the vegetative structure of RCW habitat or similar habitat [35,36]. In this case, SfM will be used as a low-cost, low-complexity alternative for RCW habitat assessment. Of particular interest are the habitat quality indicators mentioned above: herbaceous ground cover, the presence of woody midstory, the amount of pine regeneration, and the amount of mature or overstory pine.

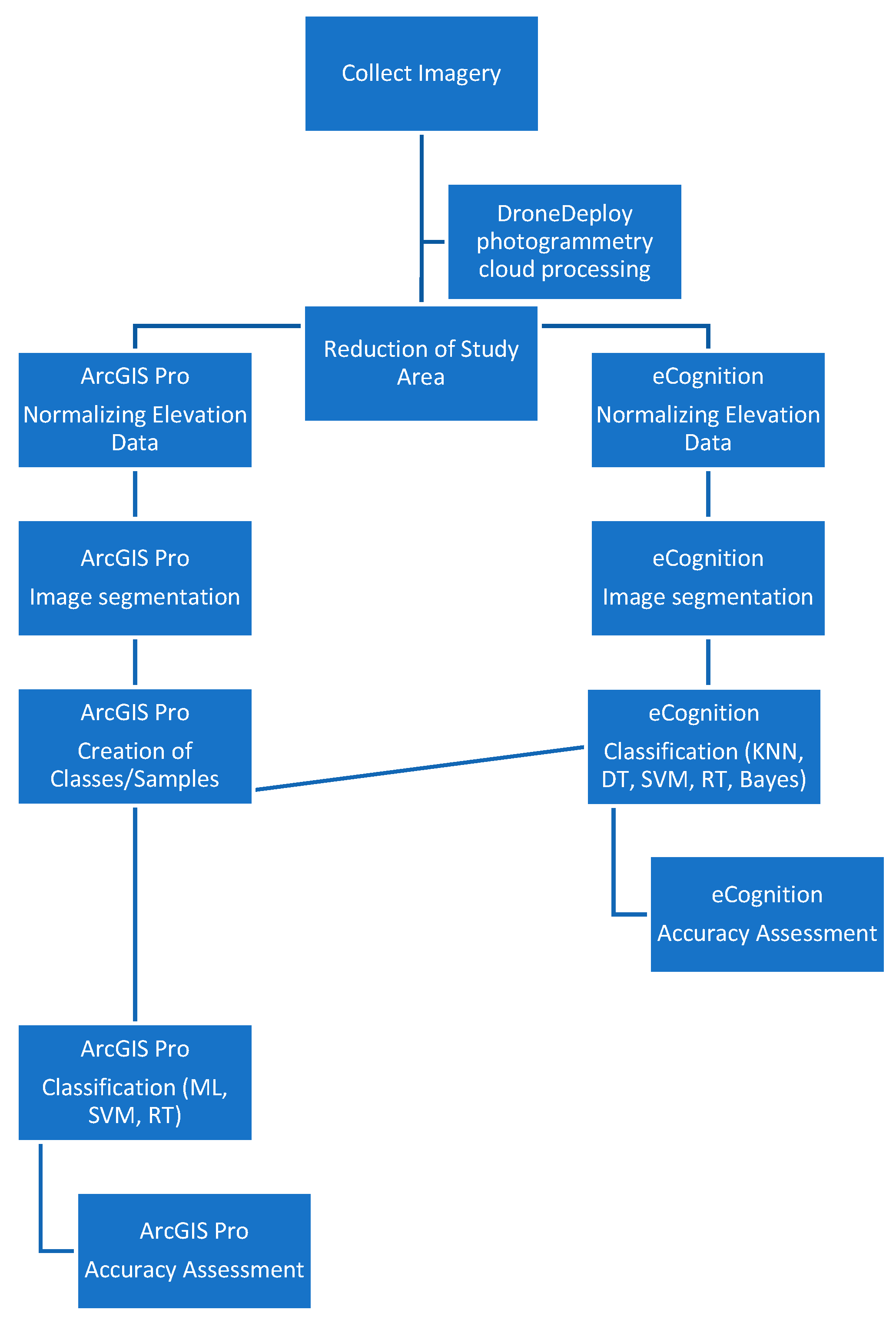

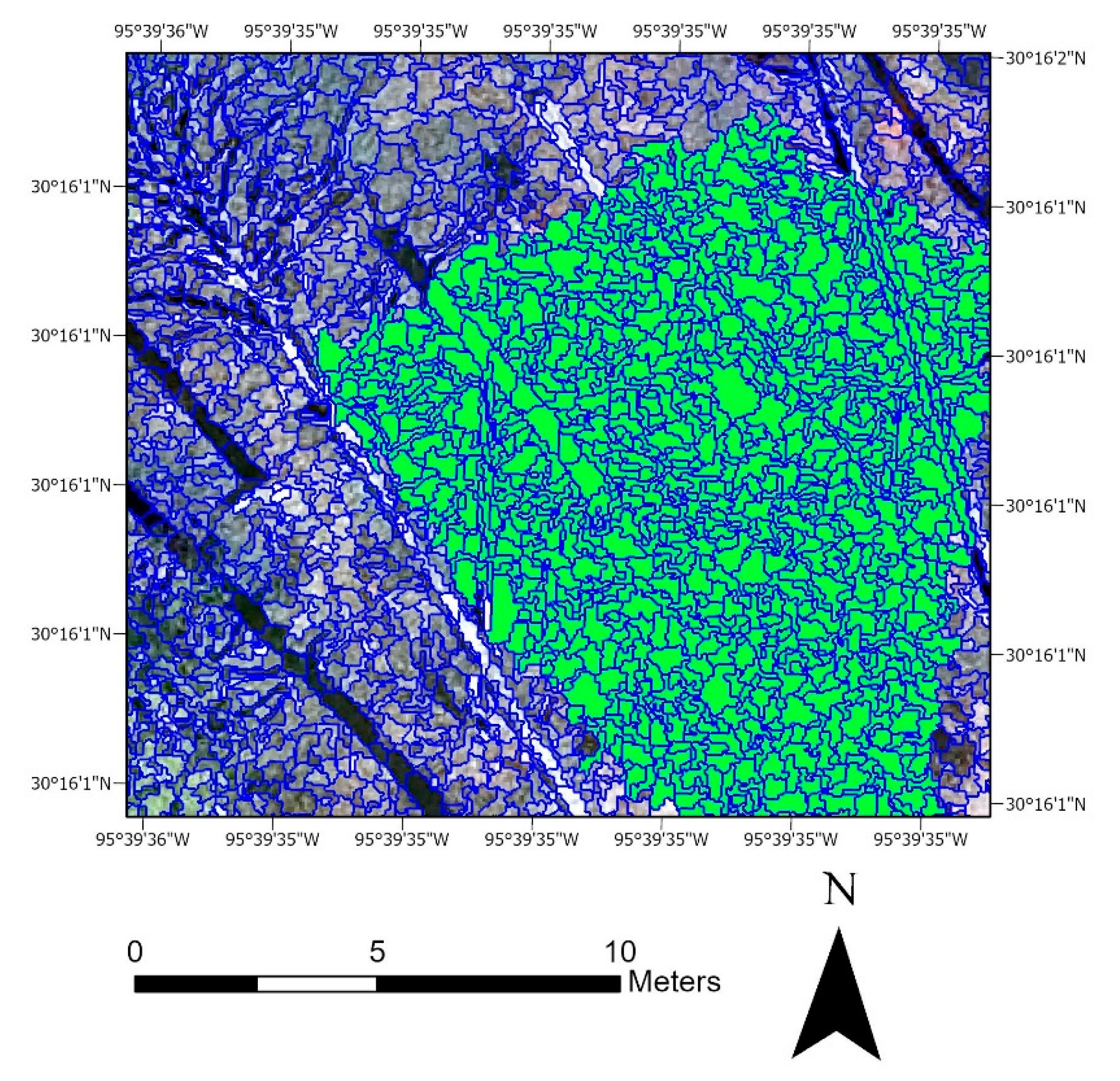

4. Discussion

Overall, accuracy assessments yielded positive classification results for almost all forest classes, although accuracy could be moderately to significantly affected by the classifier used. Support Vector Machine produced the best accuracy results in ArcGIS Pro, and K-Nearest Neighbor in the case of eCognition. Support Vector Machine performed the worst when used in eCognition, and by a wide margin at 12% overall accuracy. While the Hardwood/Dead Pine class was not a target group for this study, the classifiers were relatively inaccurate when classifying this group.

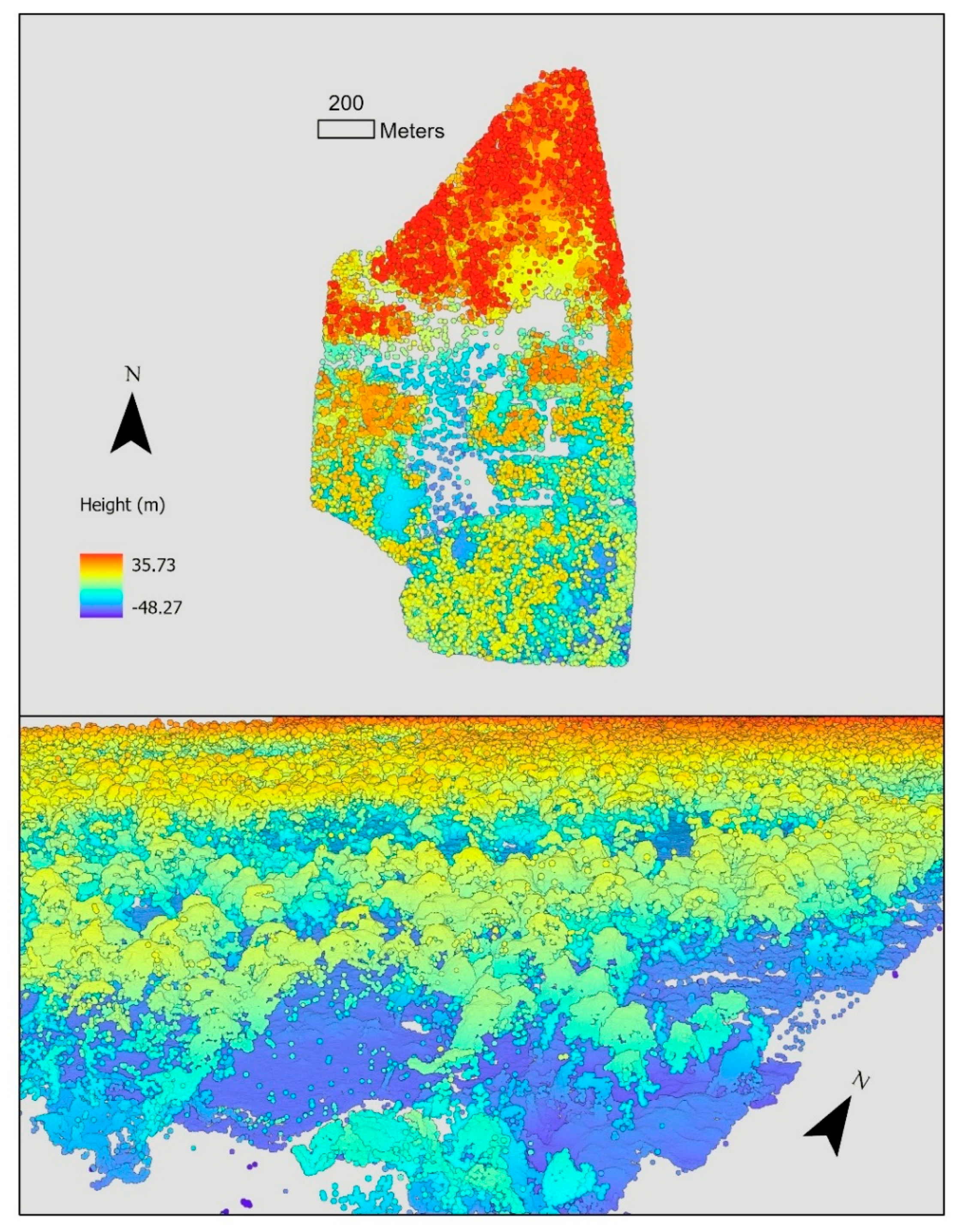

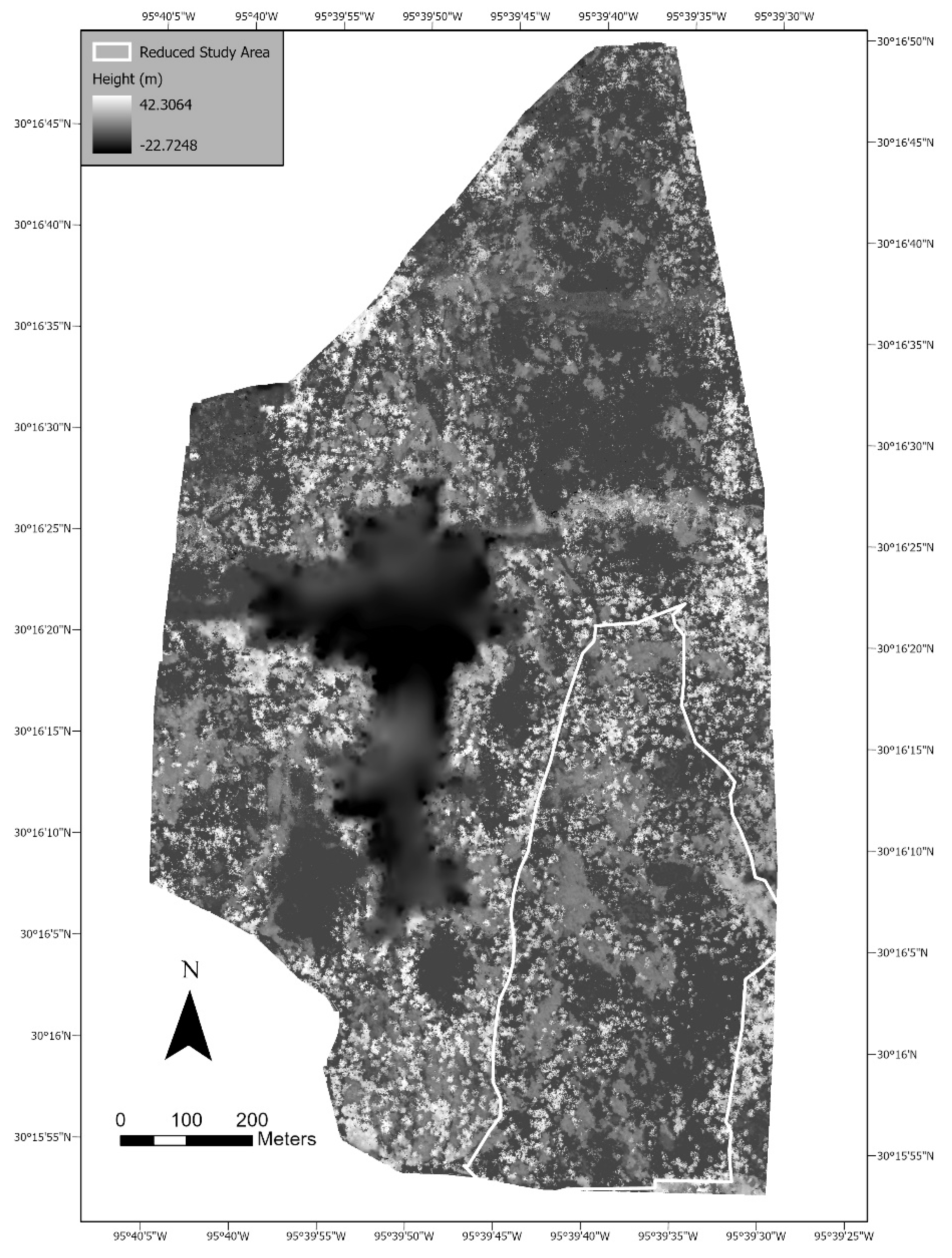

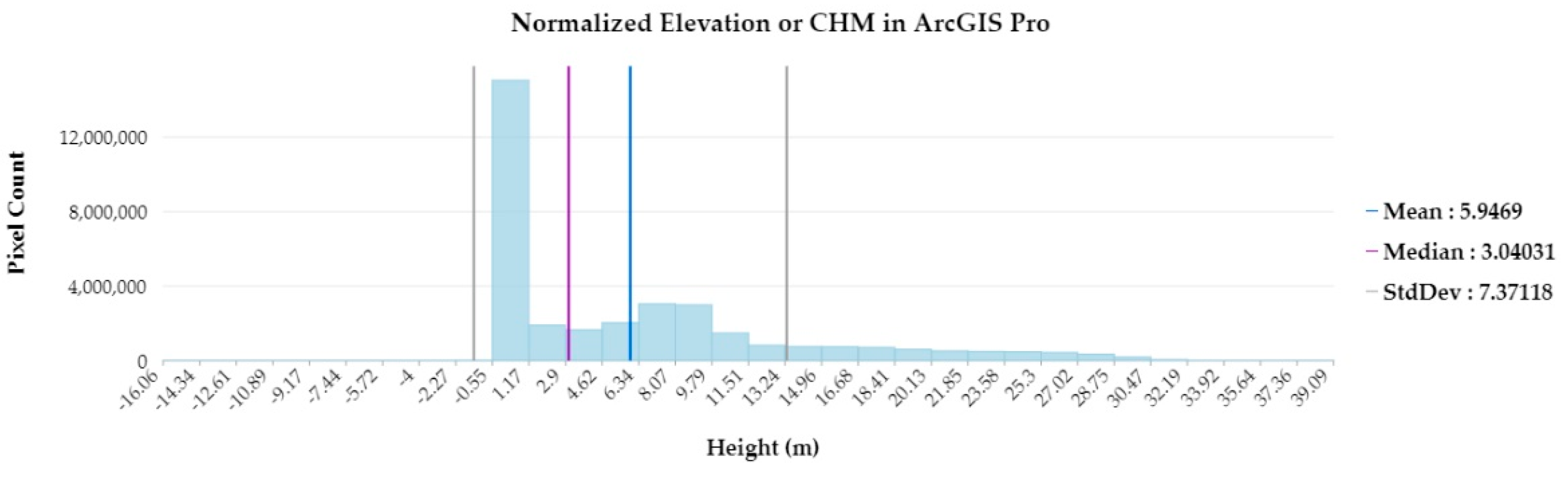

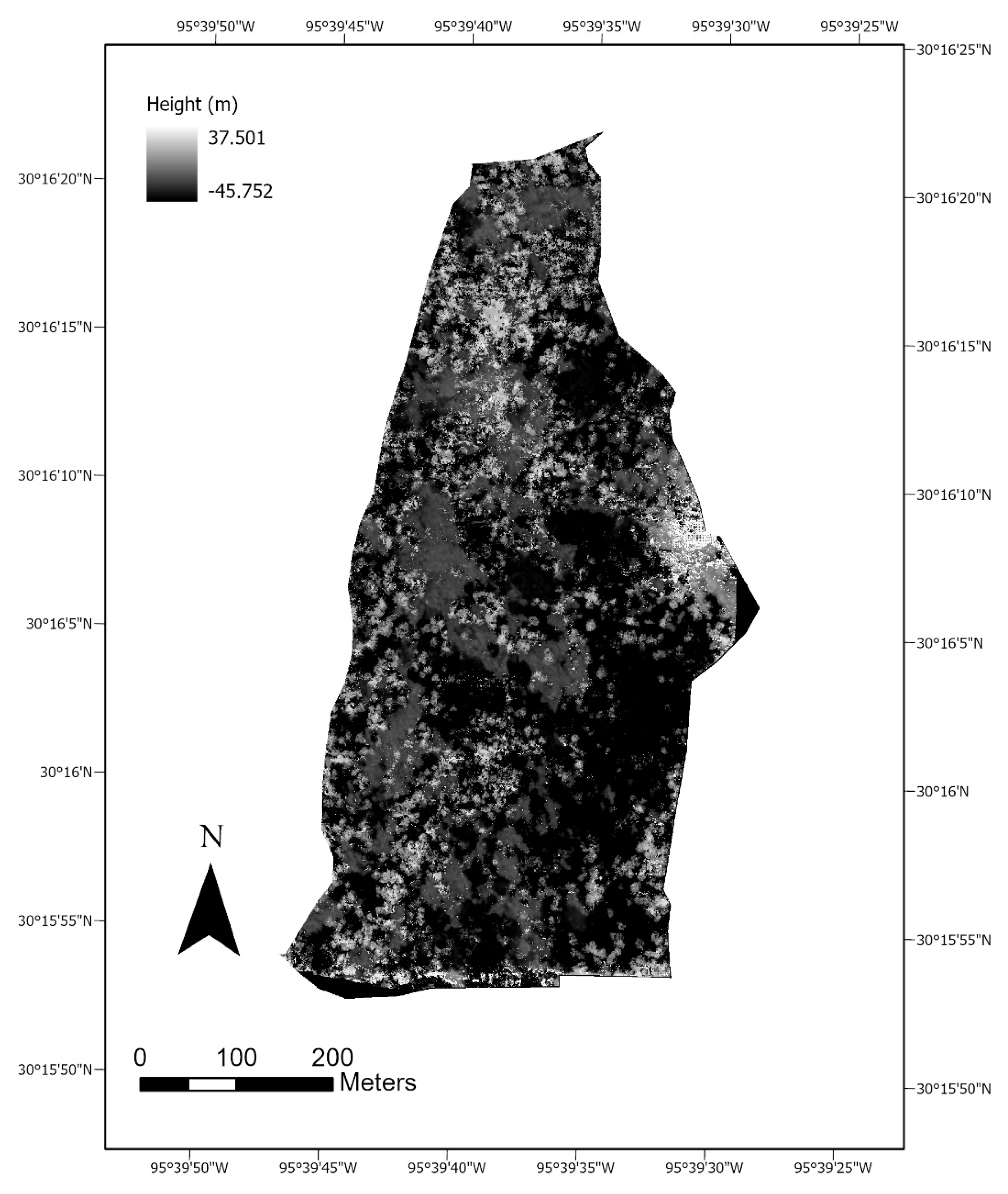

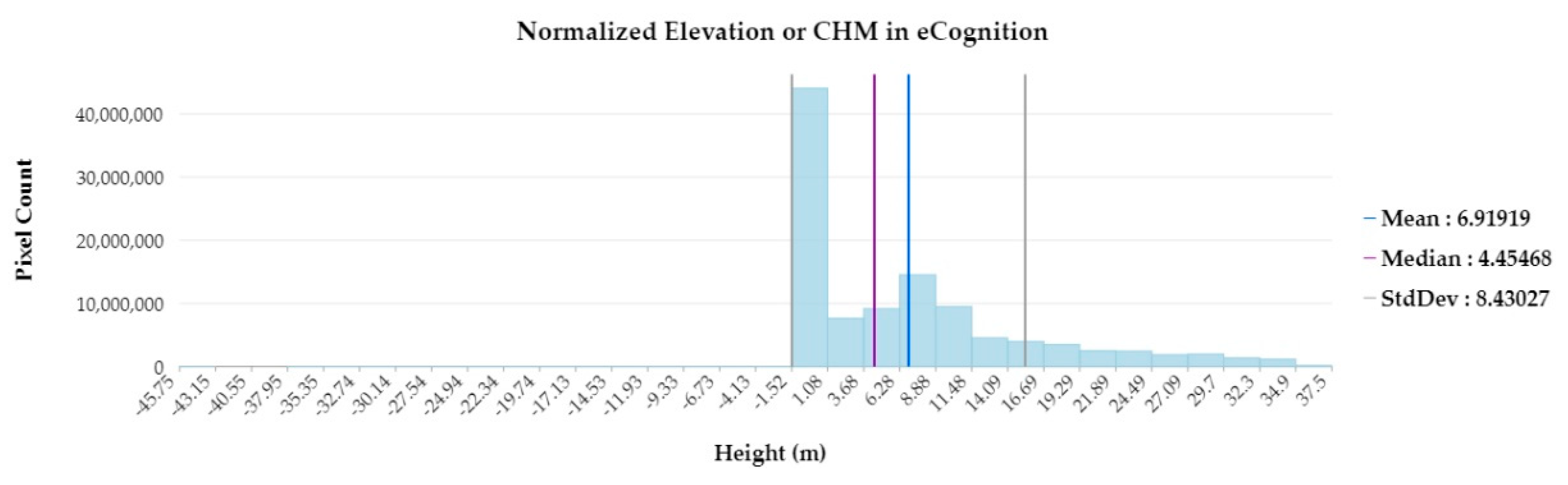

Reclassifying the CHM in ArcGIS Pro arguably had a positive impact on accuracy results and is possibly one explanation for its outperformance of eCognition. This extra step was the major difference between the two classification methodologies used in the software packages. Additionally, the image segmentation algorithms and CHM outputs were slightly different for eCognition and ArcGIS Pro. Outside of these exceptions, both software programs used the same orthoimage, DTM, point cloud, training samples, and accuracy assessment samples.
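As a rough illustration of what reclassifying a canopy height model (CHM) involves, the sketch below derives a CHM by subtracting the DTM from a surface model and bins the heights into integer classes. It is a minimal NumPy sketch, not the ArcGIS Pro workflow used in this study. The digital surface model input (`dsm`), the function names, the toy elevations, and the break values are all assumptions made for illustration; only the 2.7 m break echoes the Shrub Layer height discussed in this section.

```python
import numpy as np

def canopy_height_model(dsm, dtm):
    """Height above ground: surface elevation minus terrain elevation, floored at zero."""
    chm = np.asarray(dsm, dtype=float) - np.asarray(dtm, dtype=float)
    return np.clip(chm, 0.0, None)

def reclassify_chm(chm, breaks):
    """Assign each cell an integer height class.

    Class 0 is below breaks[0], class i covers breaks[i-1] <= h < breaks[i],
    and the last class holds everything at or above breaks[-1].
    """
    return np.digitize(chm, bins=breaks)

# Hypothetical break values in meters (assumed for this example, not taken from the study).
HEIGHT_BREAKS_M = [0.5, 2.7, 10.0]

# Toy 2 x 2 elevation grids in meters (illustrative values only).
dsm = np.array([[101.0, 103.0], [106.0, 125.0]])
dtm = np.array([[100.8, 101.0], [100.0, 100.0]])

chm = canopy_height_model(dsm, dtm)
classes = reclassify_chm(chm, HEIGHT_BREAKS_M)
print(classes)
```

Binning heights before classification gives the classifier a small set of discrete height groups rather than a continuous elevation band, which is one plausible reason such a step could help separate classes that look alike spectrally.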

Producer accuracy was consistently lower for overstory classes when compared to their regeneration counterparts. This is most likely because of the complex assortment of height classes found within an overstory pine’s canopy, and because fine resolution elevation data can penetrate past the upper-most canopy vegetation. Regeneration elevation data were typically more continuous, and not as interrupted by canopy openings large enough to create complex and variable elevation groupings. Therefore, training samples for regeneration classes were not capturing a variety of height classes, possibly resulting in higher producer accuracies. Random Trees in ArcGIS Pro was the most successful at reducing this error (Table A2).

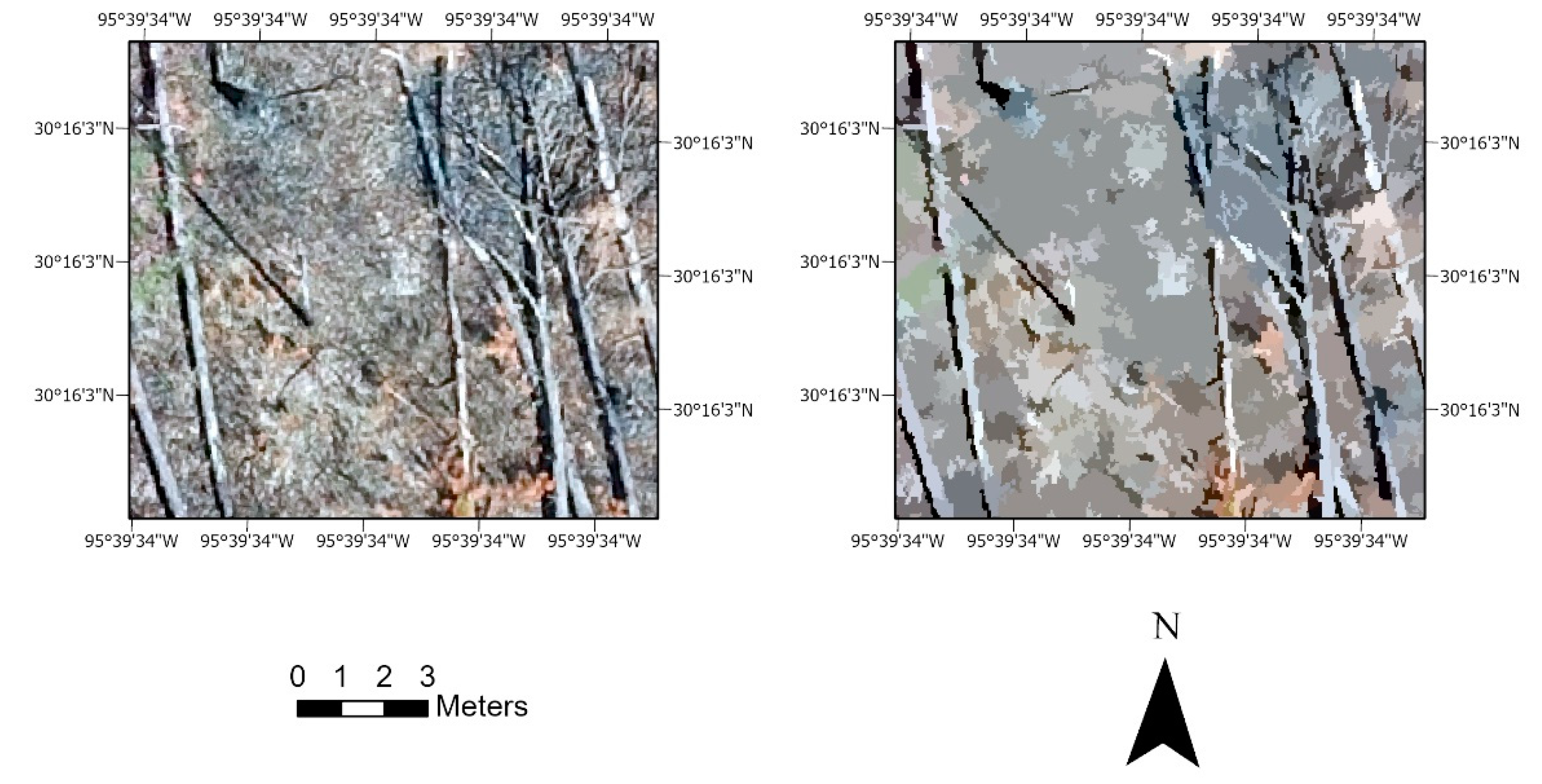

Low user accuracy for Hardwood/Dead Pine is consistent across all the ArcGIS Pro classifications, while producer accuracy is relatively high. This indicates that despite having well-referenced training samples, all three classification strategies struggled to accurately classify Hardwood/Dead Pine. A possible explanation is the spectral similarity between leaf-off hardwood and dead pine, and the frequent presence of dead, woody debris at ground level. This creates a situation where objects with similar spectral characteristics, such as color and texture, also possess an unpredictable range of height classifications. Confusion matrix results appear to support this idea, with all three ArcGIS Pro classifiers mistakenly classifying a significant amount of Ground Cover as Hardwood/Dead Pine.
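The pattern described here, high producer accuracy alongside low user accuracy, follows directly from how the two measures are computed from a confusion matrix. The sketch below shows that calculation with NumPy. The two-class matrix is invented purely for illustration and does not reproduce any results from this study; the function name and the row/column convention (rows are reference samples, columns are classified labels) are assumptions, and the code assumes every class appears at least once in both the reference and classified totals.

```python
import numpy as np

def accuracy_report(confusion):
    """Return overall, producer, and user accuracy.

    Assumed convention: rows are reference (ground truth) classes,
    columns are the classes assigned by the classifier.
    """
    cm = np.asarray(confusion, dtype=float)
    correct = np.diag(cm)
    overall = correct.sum() / cm.sum()
    producer = correct / cm.sum(axis=1)  # share of reference samples labeled correctly
    user = correct / cm.sum(axis=0)      # share of assigned labels that are correct
    return overall, producer, user

labels = ["Ground Cover", "Hardwood/Dead Pine"]

# Hypothetical counts: 20 Ground Cover reference samples are mislabeled
# as Hardwood/Dead Pine, while few Hardwood/Dead Pine samples are missed.
cm = [[30, 20],
      [2, 18]]

overall, producer, user = accuracy_report(cm)
for name, p, u in zip(labels, producer, user):
    print(f"{name}: producer={p:.2f}, user={u:.2f}")
print(f"overall={overall:.2f}")
```

In this toy matrix most Hardwood/Dead Pine reference samples are found (producer accuracy 0.90), but many cells labeled Hardwood/Dead Pine are really Ground Cover, so user accuracy for that class falls to roughly 0.47.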

The results provide a number of insights into the quality of red-cockaded woodpecker habitat based on standards created by previous studies. The RCW recovery plan recommends managing for 40% or more herbaceous groundcover, with group size and reproduction increasing at this threshold [28]. Our measurement of Ground Cover was just shy of this threshold, at 38.09%. Next, a measure of woody hardwood with a height of approximately 2.7 m or less is made with our Shrub Layer class, at 7.01–15.24% depending on the classifier. A reduction in this class has been shown to have a positive impact on RCW fitness, and here we successfully quantified and mapped its distribution. This information can help with vegetation management approaches such as mechanical removal or herbicidal treatments.

Comparisons can also be made between the amounts of Pine Overstory and Pine Regeneration, and between Ground Cover and Shrub Layer. Both of these ratios are considered strong indicators of RCW habitat quality based on one study [33]. Specifically, the indicators used were the difference between 15–25 cm dbh trees and >35 cm dbh trees, and also the difference in groundcover between wiregrass and woody-plug-palmetto vegetation. Frances C. James et al. performed their work on the Wakulla and Apalachicola Ranger Districts in Florida, a region of Longleaf Pine RCW habitat far separated from our own study area. Despite the differences in study area, vegetation, and other factors, the habitat indicators are similar in many regards.
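The comparisons described above reduce to simple arithmetic on a classified raster: percent cover per class, a check against the 40% groundcover recommendation, and ratios between class pairs. The sketch below shows one way this could be computed. The integer class codes, the function name, and the toy raster are assumptions for illustration and are not data from this study. Area-based class ratios are also only a proxy for the dbh-based tree densities used in [33].

```python
import numpy as np

# Class names follow this study; the integer codes are hypothetical.
CLASS_NAMES = {
    1: "Ground Cover",
    2: "Shrub Layer",
    3: "Pine Regeneration",
    4: "Pine Overstory",
    5: "Hardwood/Dead Pine",
}

GROUNDCOVER_TARGET_PCT = 40.0  # recovery plan recommendation cited in the text [28]

def percent_cover(classified, class_names):
    """Percent of raster cells assigned to each class."""
    classified = np.asarray(classified)
    total = classified.size
    return {
        name: 100.0 * np.count_nonzero(classified == code) / total
        for code, name in class_names.items()
    }

# Toy 4 x 5 classified raster (illustrative values only).
raster = np.array([
    [1, 1, 1, 1, 2],
    [1, 1, 1, 2, 3],
    [3, 3, 4, 4, 4],
    [4, 4, 5, 5, 1],
])

cover = percent_cover(raster, CLASS_NAMES)
meets_target = cover["Ground Cover"] >= GROUNDCOVER_TARGET_PCT
ground_to_shrub = cover["Ground Cover"] / cover["Shrub Layer"]
overstory_to_regen = cover["Pine Overstory"] / cover["Pine Regeneration"]

print(cover)
print(meets_target, ground_to_shrub, overstory_to_regen)
```

Because every cell in a classified orthoimage has the same ground area, cell counts convert directly to percent cover; a raster with a nodata value would need those cells excluded from the total first.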

Quantifying and mapping the Pine Regeneration class was an important goal of this study. Pine regeneration is among the RCW habitat quality indicators, and is of particular interest because of our goal of maintaining a long-term outlook in forest planning. Because Cook’s Branch Conservancy maintains an annual fire program to promote and improve RCW habitat, information about pine regeneration can help strategize where to burn and with what intensity. The seasonality of burning and particular burn conditions can help preserve or naturally thin pine regeneration, so this information informs forest managers of where to best apply fire.

Accurate and correctly reclassified elevation data were a key component of successful forest classification. For the sake of comparison, some iterations without elevation data were performed, along with unsupervised and pixel-based classification configurations. In all cases, classification accuracies were noticeably lower using the same training sample dataset. Most notably, the omission of elevation data resulted in significantly lower accuracy results, and so the inclusion of elevation data was not only necessary but confirmed the validity of Structure from Motion as a source of elevation data for our targeted classes.

A well-constructed training sample dataset was also important for accurate classification. This step, along with processing time during trial iterations, represents the most time-intensive portion of analysis. Once the parameters of a suitable training sample dataset are identified, they can be reproduced much more efficiently in future, similar classification efforts.

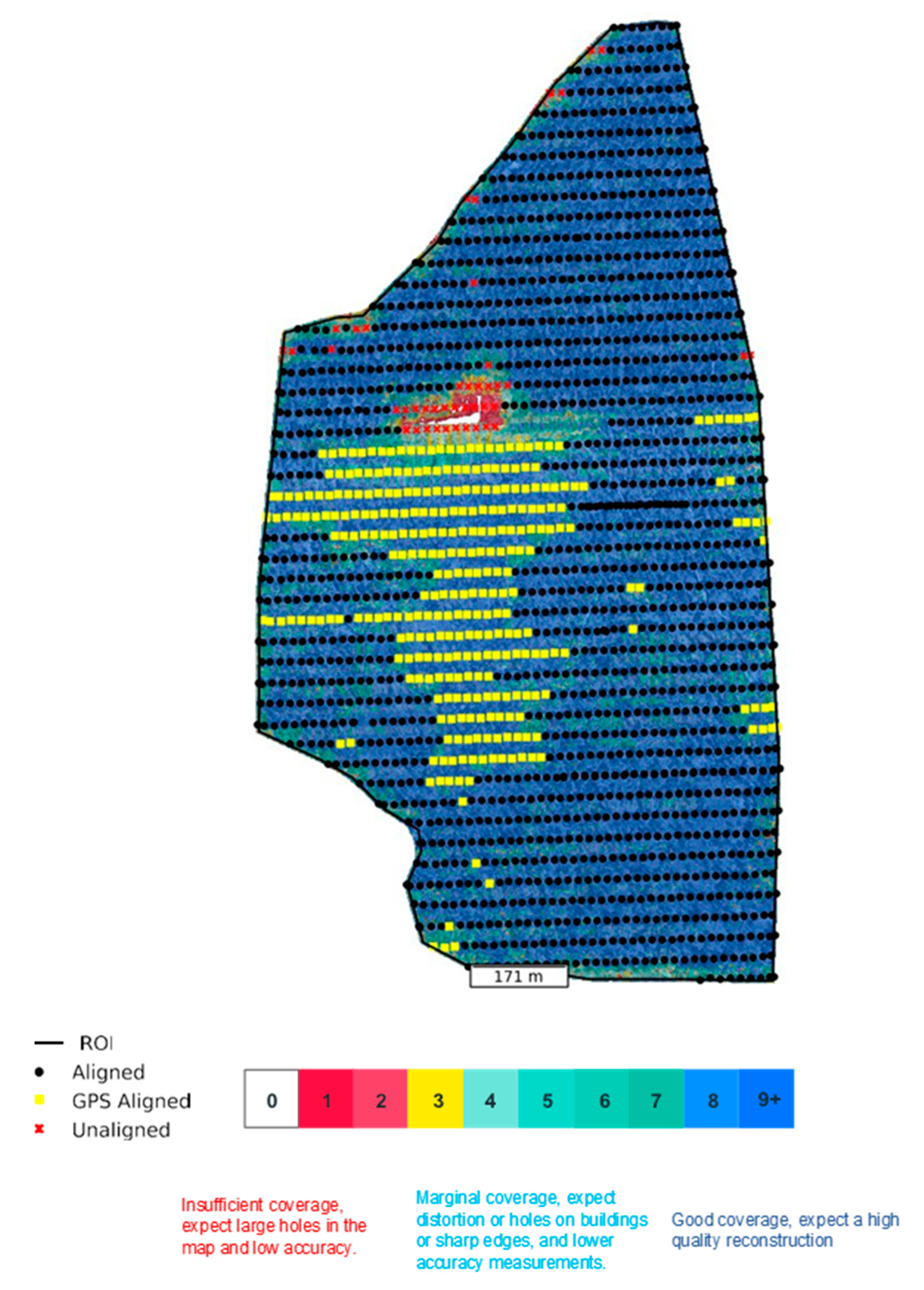

The results of this study indicate the functionality of software applications, such as DroneDeploy, for creating elevation models using Structure from Motion photogrammetric methods, and how they can be leveraged in specific forest management scenarios. In this case, the approach effectively mapped indicators of RCW habitat quality. The time and cost efficiency are substantial when compared to hand-crew methods and highlight the value of further incorporating sUAS technologies into land management practices. Cost savings benefit not only landowners, but conservation efforts overall. sUAS-gathered datasets will enable larger and more frequent coverage, along with potentially more detailed and accurate information on a comparable budget. Identifying where data acquisition is most necessary, and how to leverage it effectively, will likely be the challenge going forward.