UAV Obstacle Avoidance Algorithm to Navigate in Dynamic Building Environments

Abstract

1. Introduction

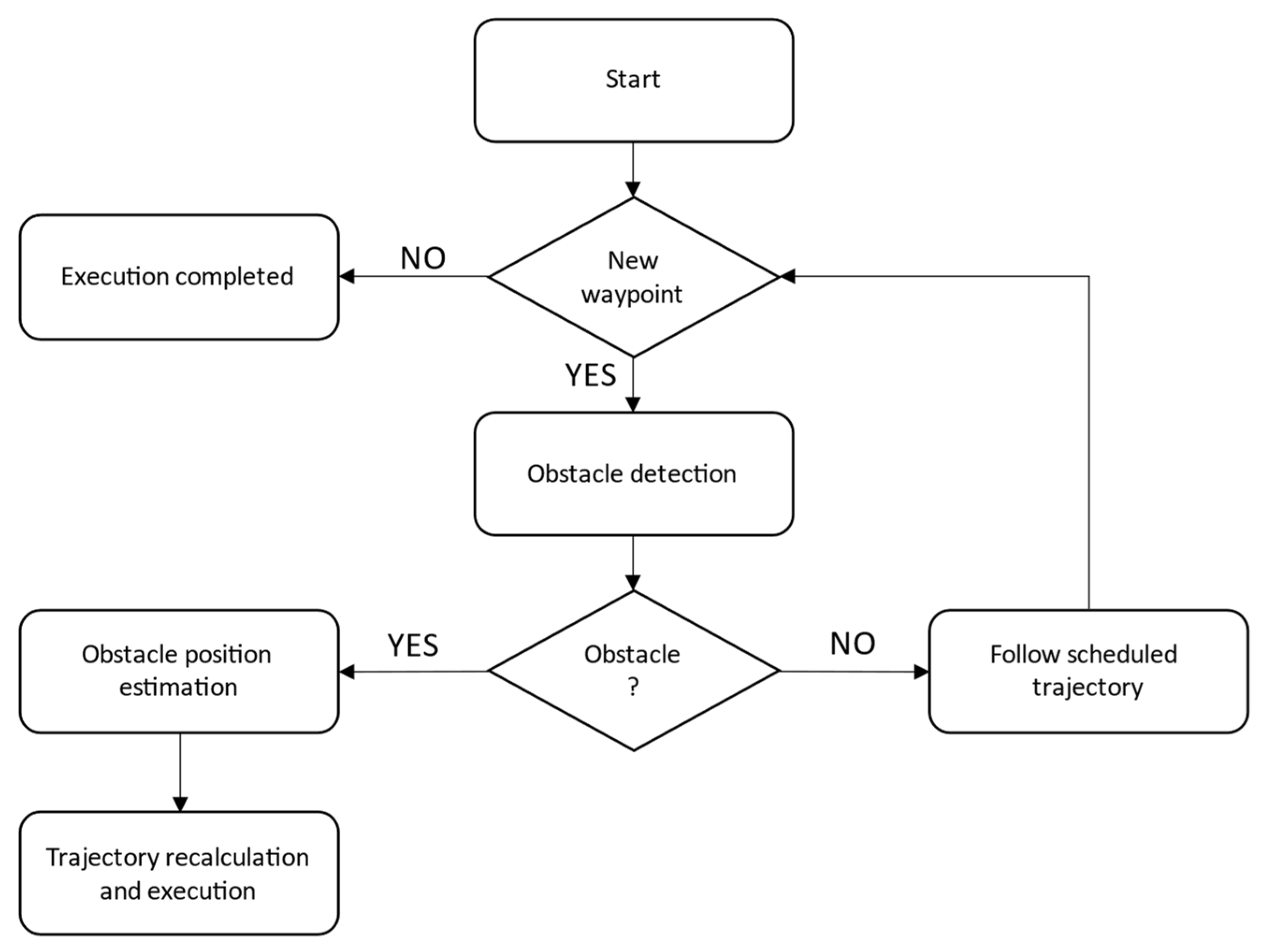

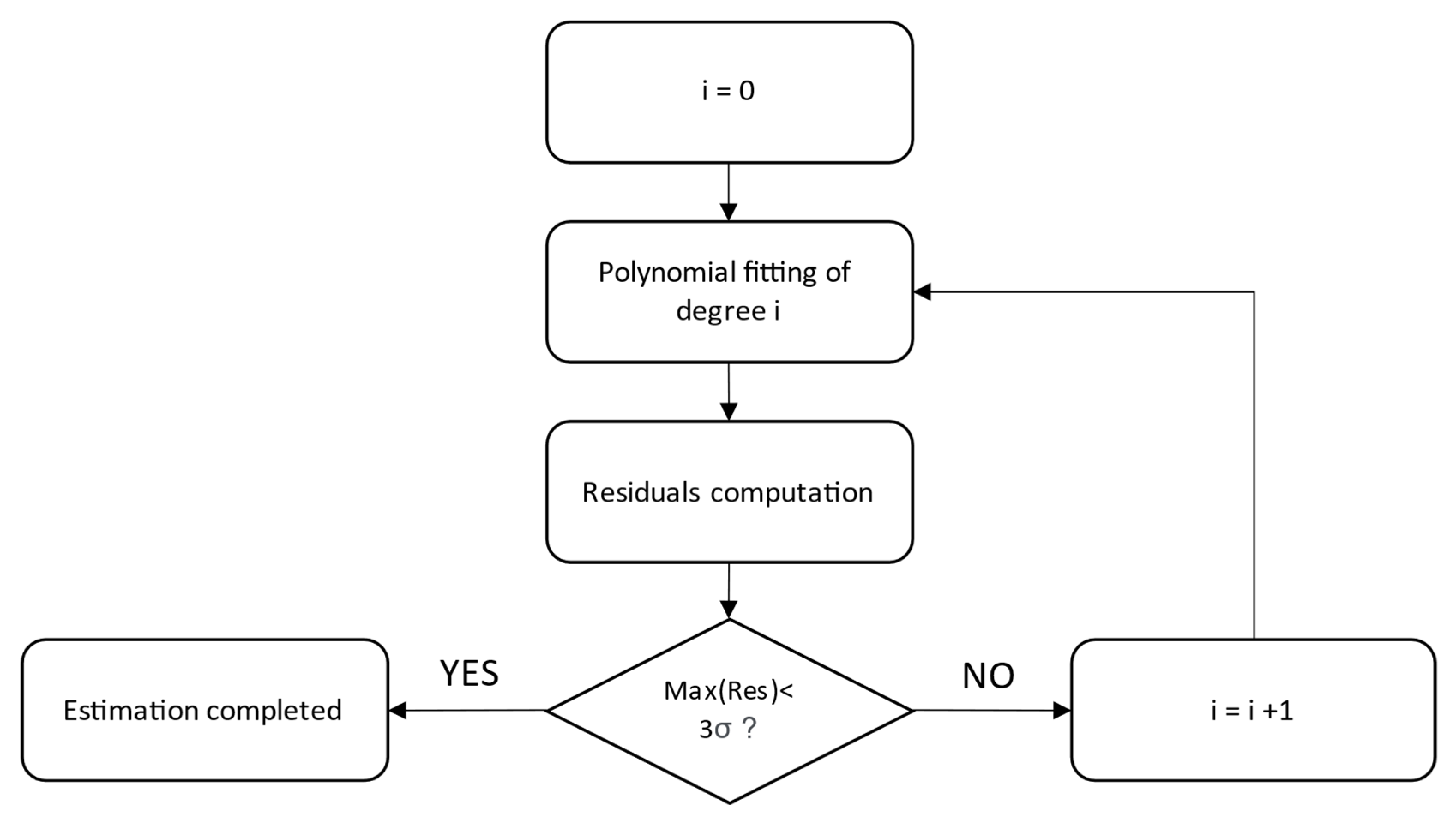

2. Methodology

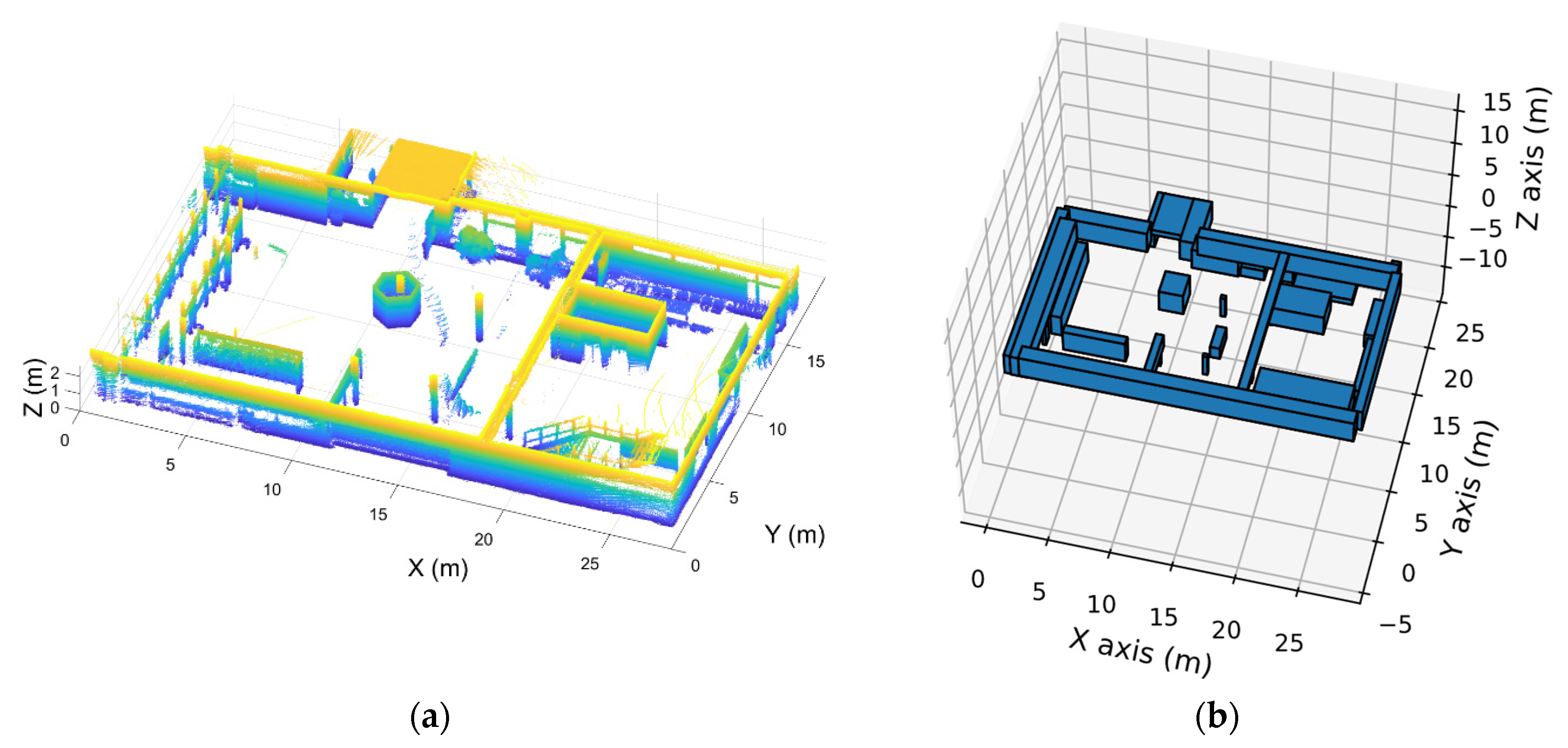

2.1. Scenario Discretization

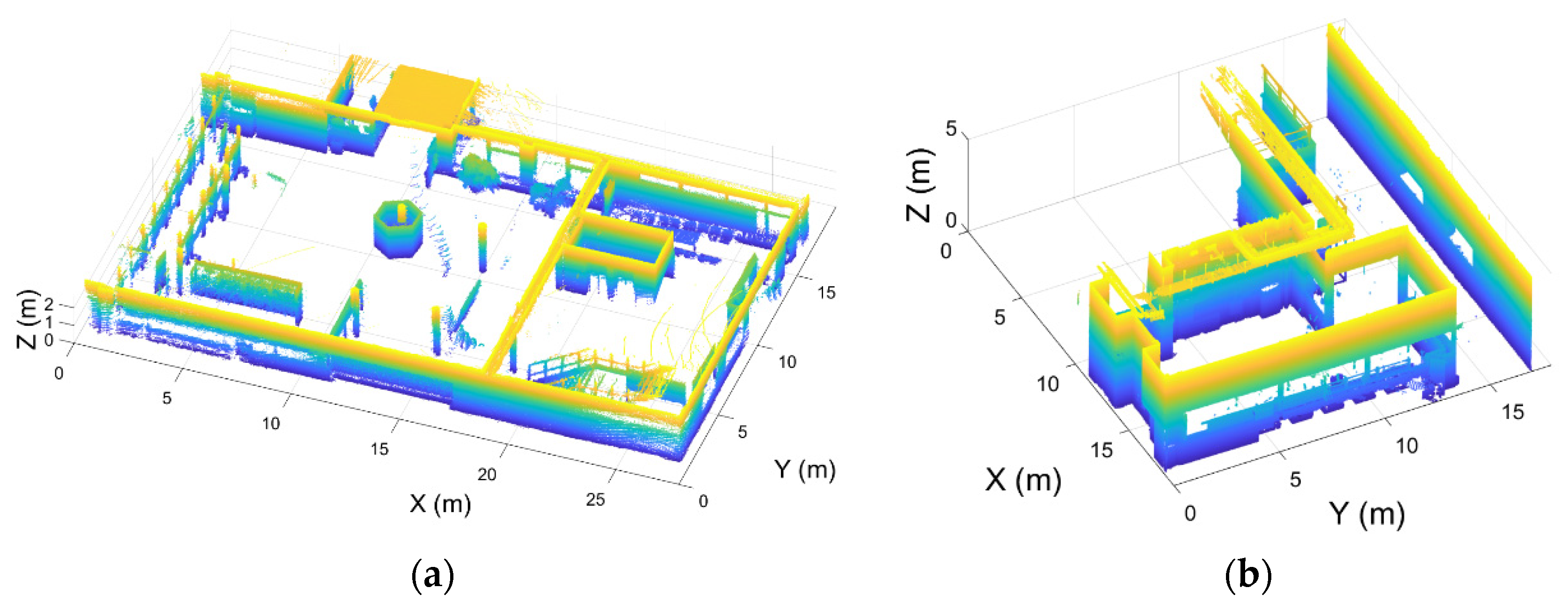

- MTI (Industrial Technology Module). It is an industrial warehouse of the University of Vigo, a much wider room with a high ceiling (approximately 5 m high). Flying over obstacles will be tested in this room (Figure 2b). This point cloud was acquired with the TLS Faro Focus 3D X330 laser scanner [44].

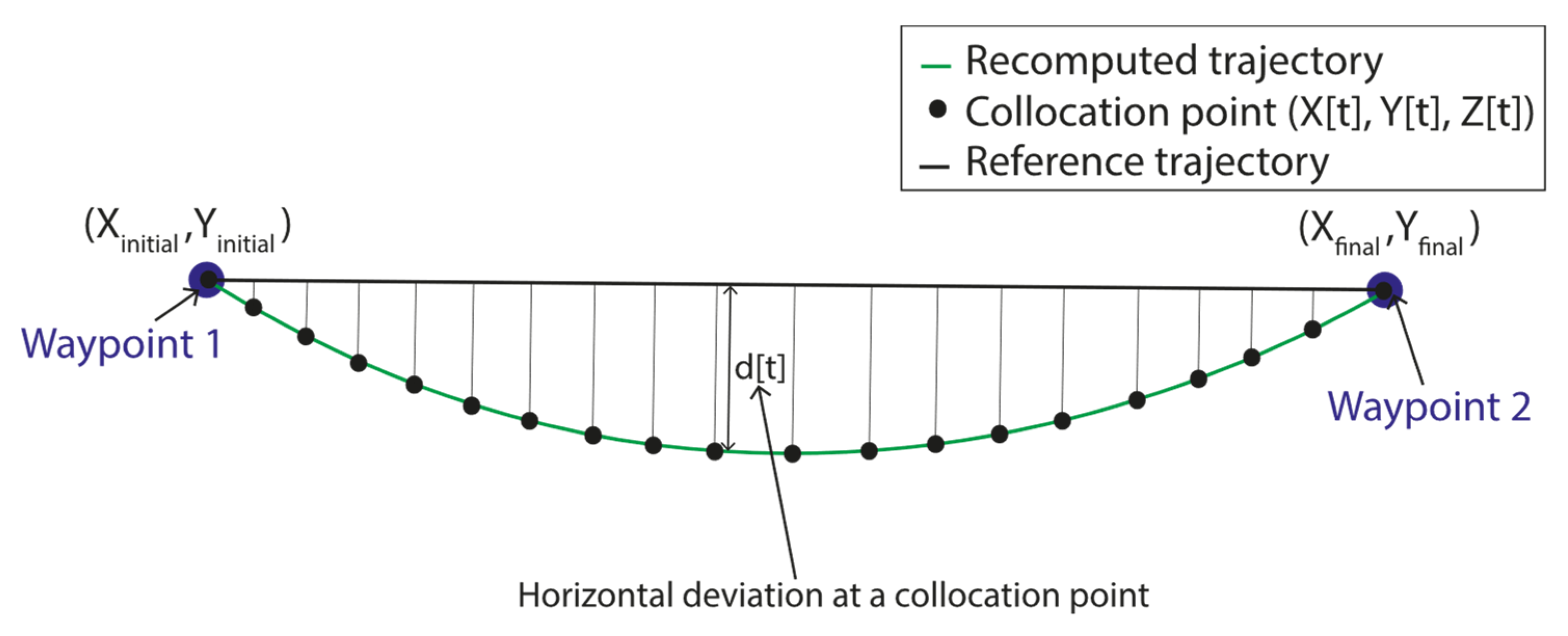

2.2. Optimal Control Problem Formulation

2.2.1. Dynamic Constraints of the Vehicle

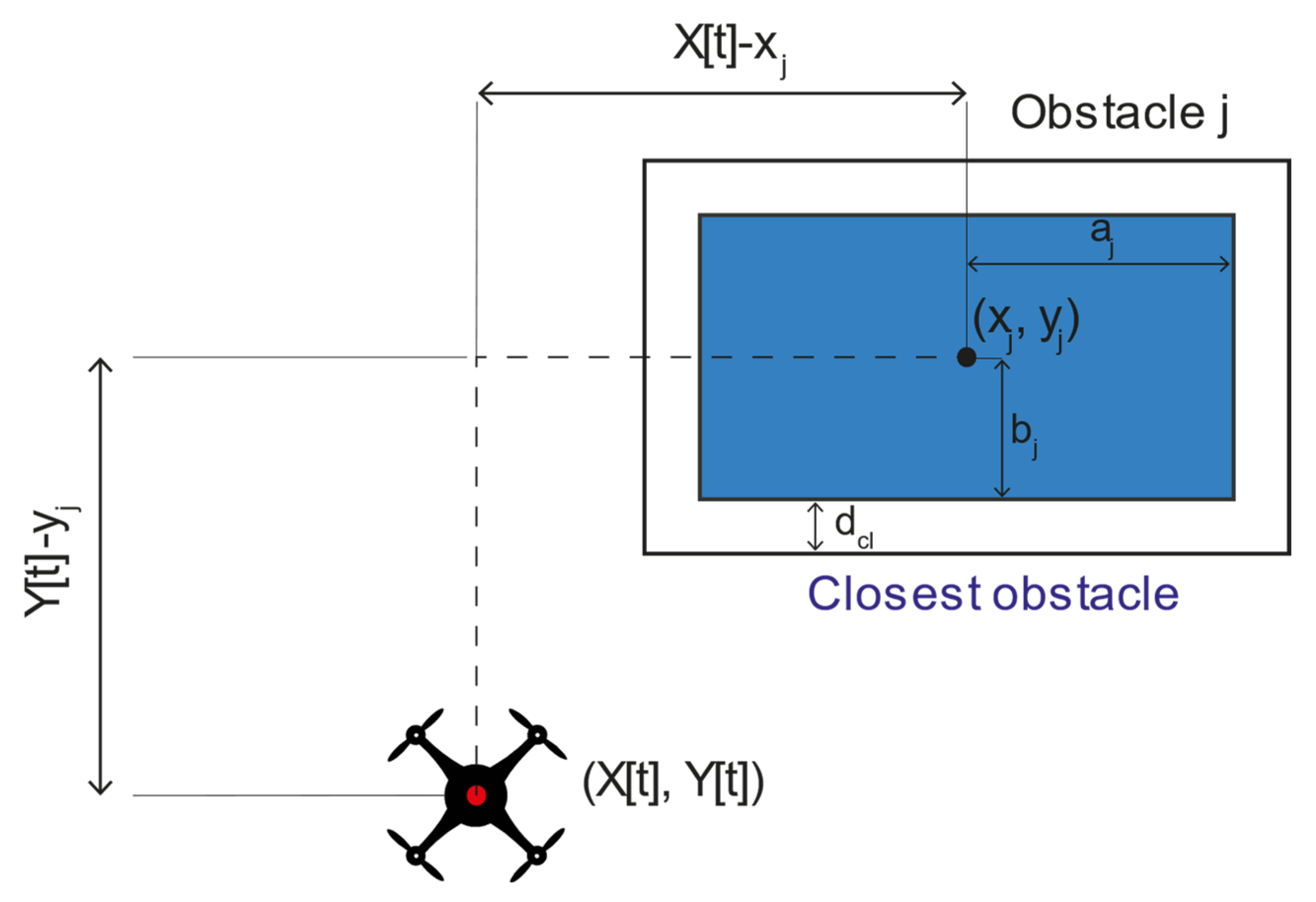

2.2.2. Algebraic Constraints

- Room scenario

- Unrecorded and moving obstacles

3. Results and Discussion

3.1. Study Cases

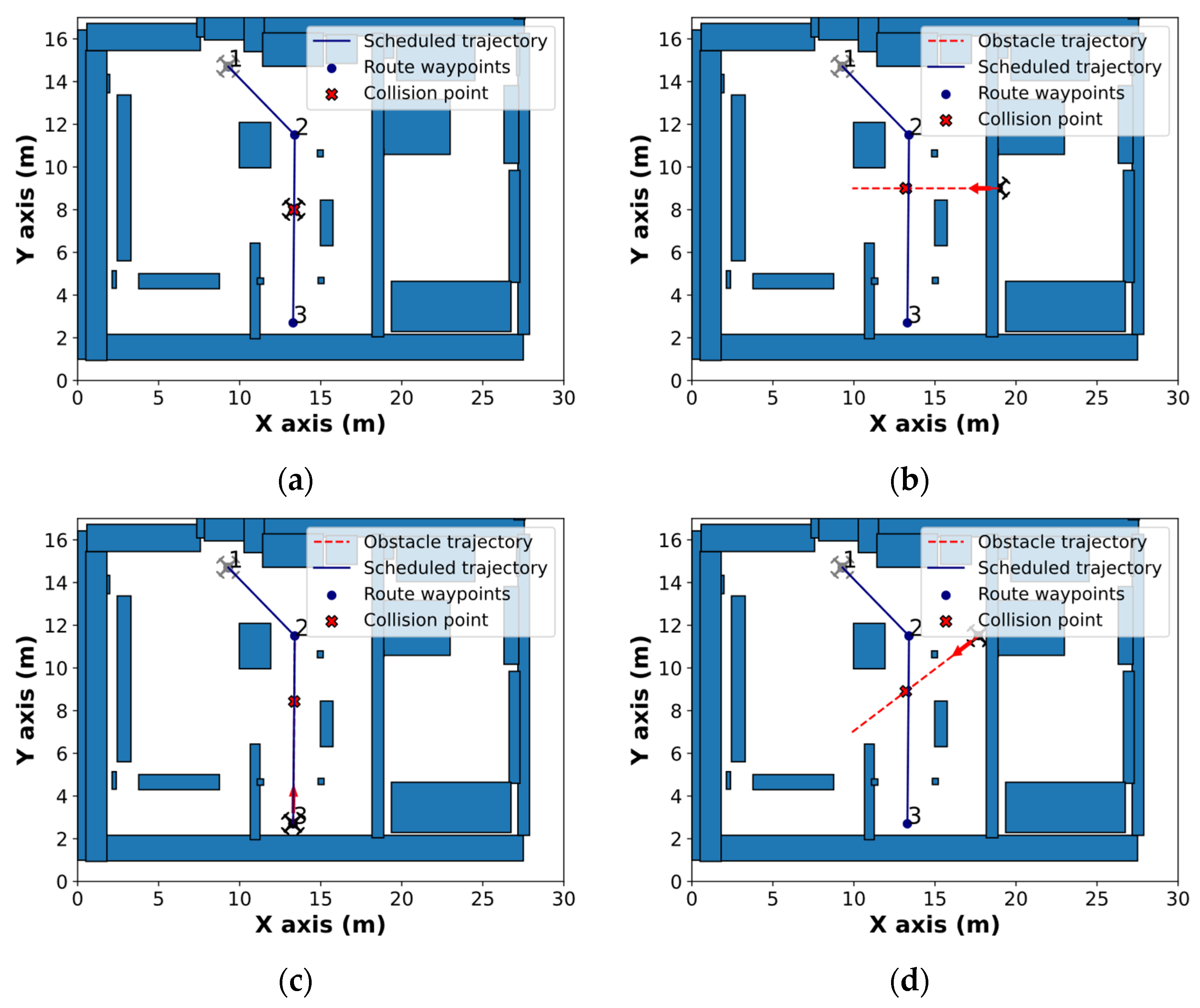

- Static obstacle: The first test case is a single static obstacle placed in the middle of the scheduled trajectory.

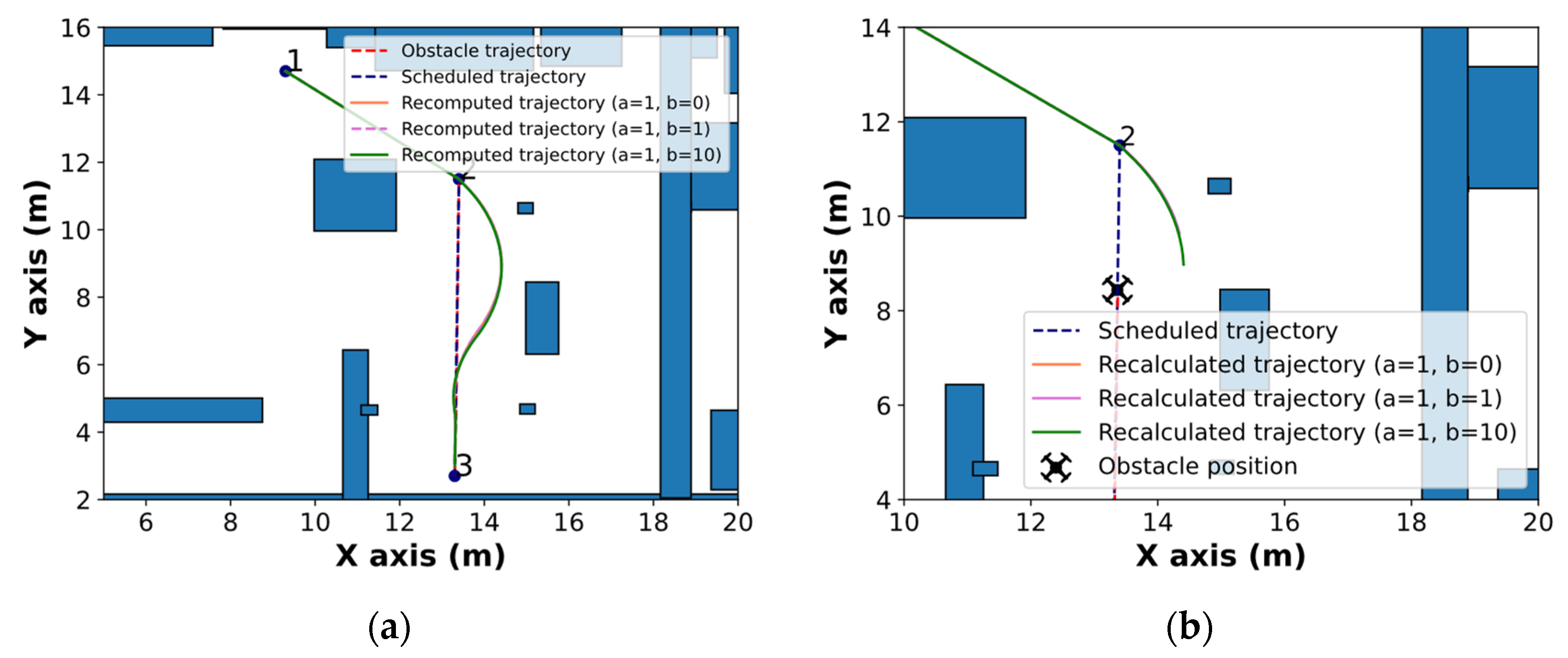

- Perpendicular moving obstacle: In this case, the obstacle moves perpendicularly to the UAV trajectory at a constant speed of 1 m/s, intercepting the UAV at a point of the trajectory.

- Colinear moving obstacle: The obstacle moves in the opposite direction to the UAV at a constant speed of 1 m/s.

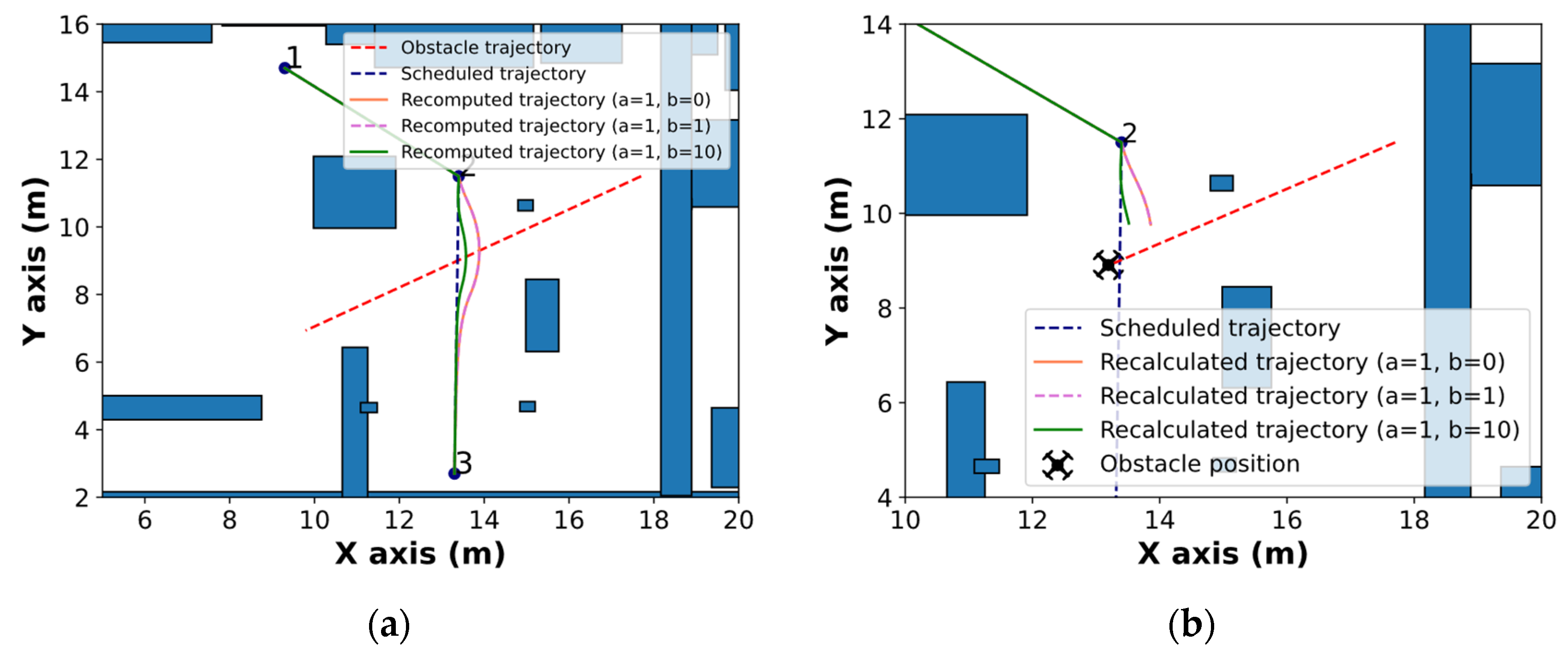

- Oblique moving obstacle: A scenario similar to the previous cases, but with a different incidence angle.

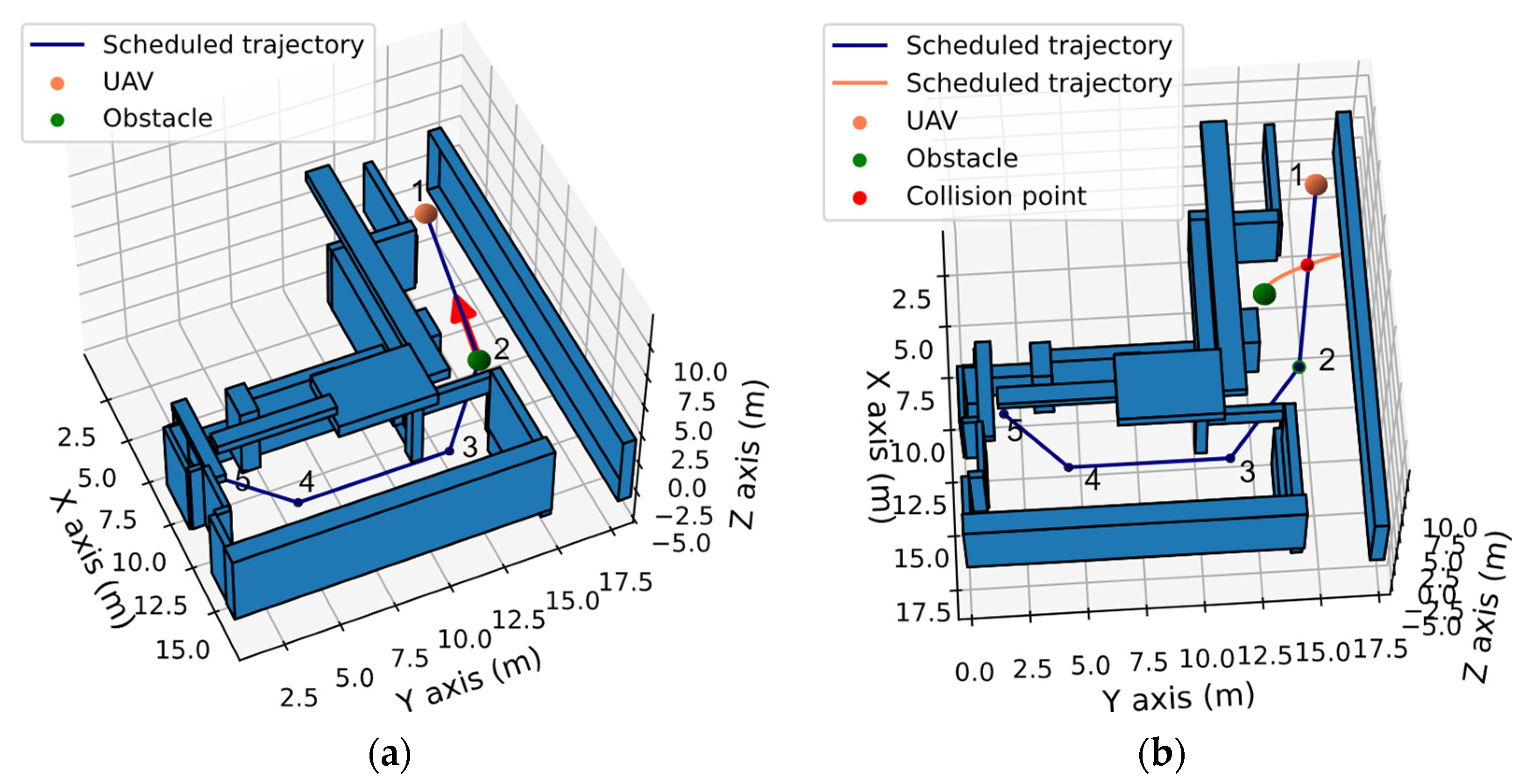

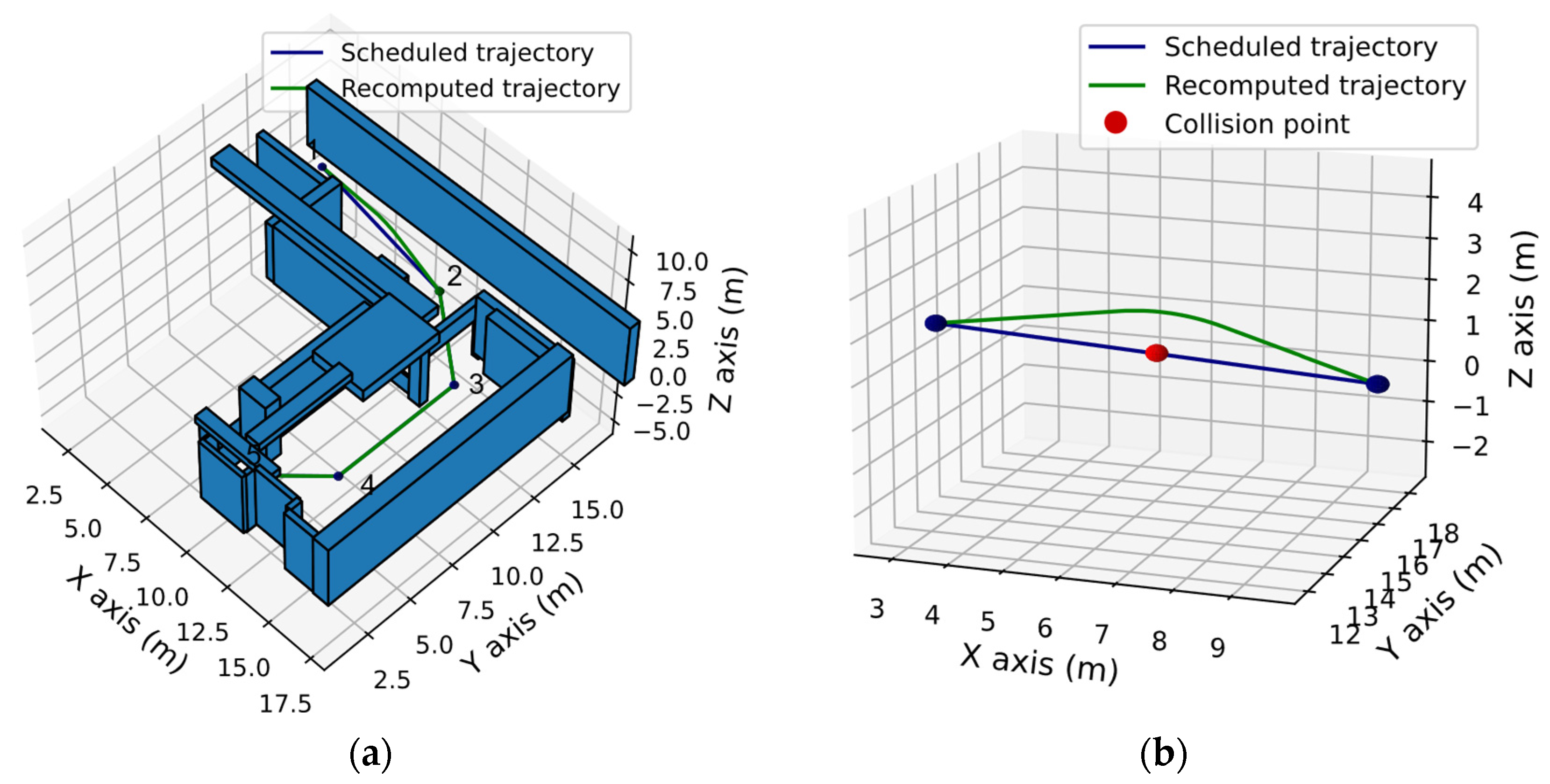

- Vertical avoidance: In this test case, a simple colinear obstacle is simulated. Vertical avoidance is a feasible maneuver as the height of the ceiling of the MTI building is around 5 m.

- Curved accelerated obstacle trajectory: The obstacle follows an accelerated parabolic trajectory, which demonstrates the capability of the proposed method to avoid obstacles with complex trajectories. The obstacle moves at a constant speed in the x direction and with a constant acceleration in the y direction.
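The obstacle motions listed in these study cases can be sketched with simple kinematic models. The following is a minimal illustration and not the authors' implementation: the function names, the initial positions, and the acceleration value are hypothetical. Only the constant speed of 1 m/s and the structure of the curved case (constant speed in x, constant acceleration in y) are taken from the descriptions above.

```python
import numpy as np


def constant_velocity_position(p0, v, t):
    """Positions of an obstacle starting at p0 and moving with constant velocity v."""
    p0 = np.asarray(p0, dtype=float)
    v = np.asarray(v, dtype=float)
    t = np.atleast_1d(np.asarray(t, dtype=float))
    # One row per time sample: p(t) = p0 + v * t
    return p0 + t[:, None] * v


def parabolic_position(p0, vx, ay, t):
    """Constant speed vx along x and constant acceleration ay along y.

    The obstacle is assumed (hypothetically) to start at rest in the y direction.
    """
    p0 = np.asarray(p0, dtype=float)
    t = np.atleast_1d(np.asarray(t, dtype=float))
    x = p0[0] + vx * t
    y = p0[1] + 0.5 * ay * t**2
    return np.stack([x, y], axis=1)


# Hypothetical sample times and initial conditions, for illustration only.
t = np.linspace(0.0, 4.0, 5)  # 0, 1, 2, 3, 4 s

# Colinear case: obstacle approaching along -x at 1 m/s.
colinear = constant_velocity_position([10.0, 0.0], [-1.0, 0.0], t)

# Curved accelerated case: 1 m/s in x, assumed 0.5 m/s^2 in y.
curved = parabolic_position([0.0, 0.0], vx=1.0, ay=0.5, t=t)
```

Perpendicular and oblique cases follow from `constant_velocity_position` by changing the direction of the velocity vector while keeping its norm at 1 m/s.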

3.2. Avoidance Maneuvers

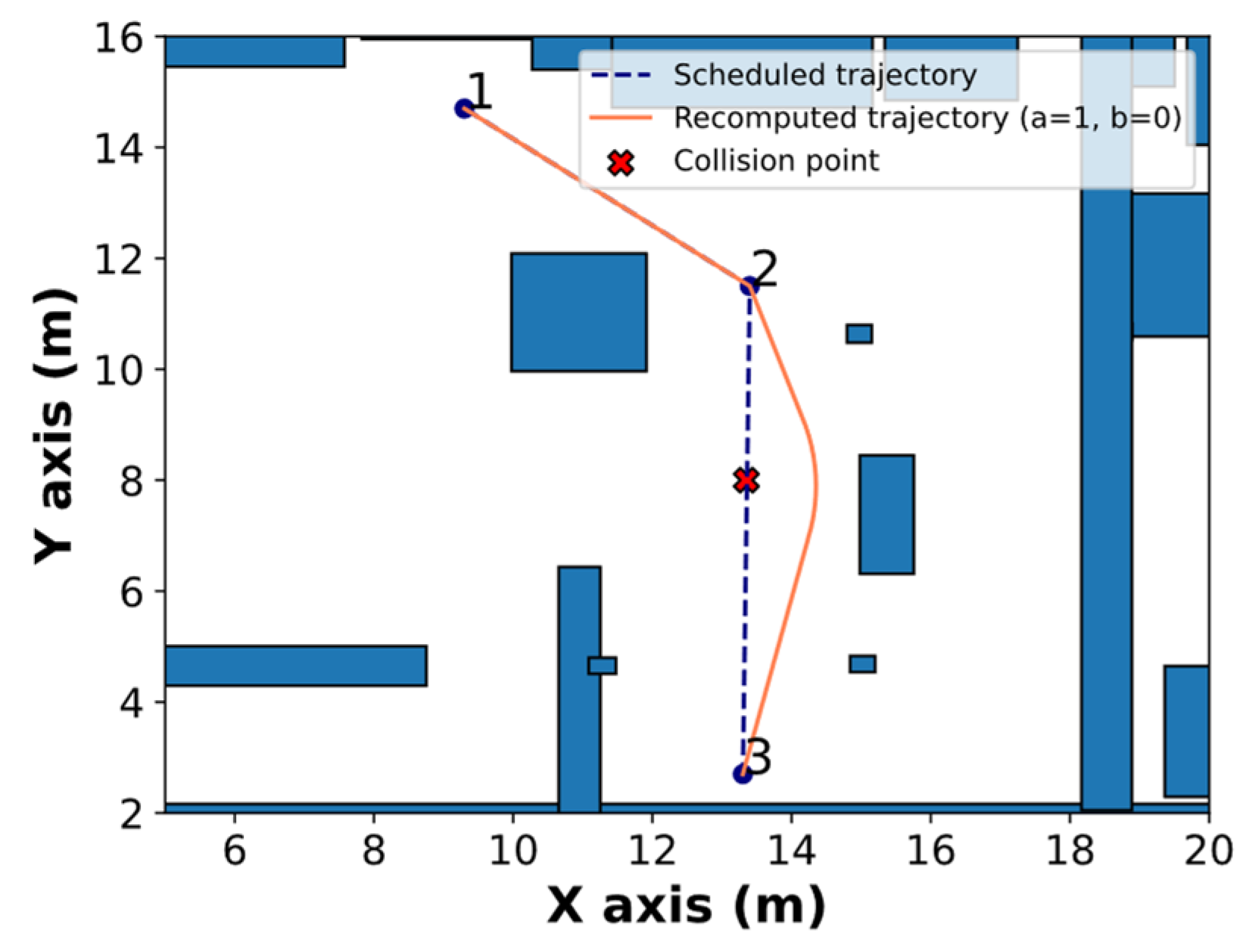

3.2.1. Static Obstacle

3.2.2. Obstacle Moving Perpendicularly toward the UAV

3.2.3. Obstacle following a Colinear Trajectory toward the UAV

3.2.4. Obstacle following an Oblique Trajectory toward the UAV

3.2.5. Vertical Avoidance

3.2.6. Curved Accelerated Trajectory

3.3. Computation Time

4. Conclusions

- The algorithm can avoid fixed and moving obstacles.

- The calculated trajectories are optimal in terms of deviation in time and position from the planned route.

- UAV performance limitations are considered in the obstacle avoidance protocols.

- The room model was successfully included in the avoidance algorithm.

- Computation times are affordable for real-time implementation.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- González-Jorge, H.; Martínez-Sánchez, J.; Bueno, M.; Arias, P. Unmanned aerial systems for civil applications: A review. Drones 2017, 1, 2.

- Sony, S.; Laventure, S.; Sadhu, A. A literature review of next-generation smart sensing technology in structural health monitoring. Struct. Control Health Monit. 2019, 26, e2321.

- Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.

- Freeman, M.R.; Kashani, M.M.; Vardanega, P.J. Aerial robotic technologies for civil engineering: Established and emerging practice. J. Unmanned Veh. Syst. 2021, 9, 75–91.

- Kim, K.; Kim, S.; Shchur, D. A UAS-based work zone safety monitoring system by integrating internal traffic control plan (ITCP) and automated object detection in game engine environment. Autom. Constr. 2021, 128, 103736.

- Boukoberine, M.N.; Zhou, Z.; Benbouzid, M. A critical review on unmanned aerial vehicles power supply and energy management: Solutions, strategies, and prospects. Appl. Energy 2019, 255, 113823.

- Elistair SAFE-T Tethered Drone Station. Available online: https://elistair.com/safe-t-tethered-drone-station/ (accessed on 5 October 2021).

- Rakha, T.; Gorodetsky, A. Review of Unmanned Aerial System (UAS) applications in the built environment: Towards automated building inspection procedures using drones. Autom. Constr. 2018, 93, 252–264.

- Ekanayake, B.; Wong, J.K.W.; Fini, A.A.F.; Smith, P. Computer vision-based interior construction progress monitoring: A literature review and future research directions. Autom. Constr. 2021, 127, 103705.

- Jiang, W.; Zhou, Y.; Ding, L.; Zhou, C.; Ning, X. UAV-based 3D reconstruction for hoist site mapping and layout planning in petrochemical construction. Autom. Constr. 2020, 113, 103137.

- Wu, J.; Peng, L.; Li, J.; Zhou, X.; Zhong, J.; Wang, C.; Sun, J. Rapid safety monitoring and analysis of foundation pit construction using unmanned aerial vehicle images. Autom. Constr. 2021, 128, 103706.

- Puri, N.; Turkan, Y. Bridge construction progress monitoring using lidar and 4D design models. Autom. Constr. 2020, 109, 102961.

- Rebolj, D.; Pučko, Z.; Babič, N.Č.; Bizjak, M.; Mongus, D. Point cloud quality requirements for Scan-vs-BIM based automated construction progress monitoring. Autom. Constr. 2017, 84, 323–334.

- Azimi, M.; Eslamlou, A.D.; Pekcan, G. Data-driven structural health monitoring and damage detection through deep learning: State-of-the-art review. Sensors 2020, 20, 2778.

- González-deSantos, L.M.; Martínez-Sánchez, J.; González-Jorge, H.; Navarro-Medina, F.; Arias, P. UAV payload with collision mitigation for contact inspection. Autom. Constr. 2020, 115, 103200.

- Trujillo, M.; Martínez-de Dios, J.; Martín, C.; Viguria, A.; Ollero, A. Novel Aerial Manipulator for Accurate and Robust Industrial NDT Contact Inspection: A New Tool for the Oil and Gas Inspection Industry. Sensors 2019, 19, 1305.

- Jeelani, I.; Gheisari, M. Safety challenges of UAV integration in construction: Conceptual analysis and future research roadmap. Saf. Sci. 2021, 144, 105473.

- Guo, J.; Liang, C.; Wang, K.; Sang, B.; Wu, Y. Three-Dimensional Autonomous Obstacle Avoidance Algorithm for UAV Based on Circular Arc Trajectory. Int. J. Aerosp. Eng. 2021, 2021, 1–13.

- Sasongko, R.A.; Rawikara, S.S.; Tampubolon, H.J. UAV Obstacle Avoidance Algorithm Based on Ellipsoid Geometry. J. Intell. Robot. Syst. Theory Appl. 2017, 88, 567–581.

- Singh, R.; Bera, T.K. Obstacle Avoidance of Mobile Robot using Fuzzy Logic and Hybrid Obstacle Avoidance Algorithm. In Proceedings of the IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 517.

- Khairudin, M.; Refalda, R.; Yatmono, S.; Pramono, H.S.; Triatmaja, A.K.; Shah, A. The mobile robot control in obstacle avoidance using fuzzy logic controller. Indones. J. Sci. Technol. 2020, 5, 334–351.

- Cetin, O.; Zagli, I.; Yilmaz, G. Establishing obstacle and collision free communication relay for UAVs with artificial potential fields. J. Intell. Robot. Syst. Theory Appl. 2013, 69, 361–372.

- Fan, X.; Guo, Y.; Liu, H.; Wei, B.; Lyu, W. Improved Artificial Potential Field Method Applied for AUV Path Planning. Math. Probl. Eng. 2020, 2020, 1–21.

- Zhang, T.; Kahn, G.; Levine, S.; Abbeel, P. Learning deep control policies for autonomous aerial vehicles with MPC-guided policy search. In Proceedings of the IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; Volume 2016.

- Han, X.; Wang, J.; Xue, J.; Zhang, Q. Intelligent Decision-Making for 3-Dimensional Dynamic Obstacle Avoidance of UAV Based on Deep Reinforcement Learning. In Proceedings of the 2019 11th International Conference on Wireless Communications and Signal Processing, WCSP 2019, Xi’an, China, 23–25 October 2019.

- Dai, X.; Mao, Y.; Huang, T.; Qin, N.; Huang, D.; Li, Y. Automatic obstacle avoidance of quadrotor UAV via CNN-based learning. Neurocomputing 2020, 402, 346–358.

- Xin, C.; Wu, G.; Zhang, C.; Chen, K.; Wang, J.; Wang, X. Research on indoor navigation system of UAV based on lidar. In Proceedings of the 2020 12th International Conference on Measuring Technology and Mechatronics Automation (ICMTMA), Phuket, Thailand, 28–29 February 2020; pp. 763–766.

- Ajay Kumar, G.; Patil, A.K.; Patil, R.; Park, S.S.; Chai, Y.H. A LiDAR and IMU integrated indoor navigation system for UAVs and its application in real-time pipeline classification. Sensors 2017, 17, 1268.

- Mane, S.B.; Vhanale, S. Real time obstacle detection for mobile robot navigation using stereo vision. In Proceedings of the International Conference on Computing, Analytics and Security Trends, CAST 2016, Pune, India, 19–21 December 2016.

- Di Lizia, P.; Armellin, R.; Bernelli-Zazzera, F.; Berz, M. High order optimal control of space trajectories with uncertain boundary conditions. Acta Astronaut. 2014, 93, 217–229.

- Chu, H.; Ma, L.; Wang, K.; Shao, Z.; Song, Z. Trajectory optimization for lunar soft landing with complex constraints. Adv. Space Res. 2017, 60, 2060–2076.

- Chai, R.; Savvaris, A.; Tsourdos, A.; Chai, S.; Xia, Y. Trajectory Optimization of Space Maneuver Vehicle Using a Hybrid Optimal Control Solver. IEEE Trans. Cybern. 2019, 49, 467–480.

- Olympio, J.T. A Continuous Implementation of a Second-Variation Optimal Control Method for Space Trajectory Problems. J. Optim. Theory Appl. 2013, 158, 687–716.

- Soler, M.; Kamgarpour, M.; Lloret, J.; Lygeros, J. A Hybrid Optimal Control Approach to Fuel-Efficient Aircraft Conflict Avoidance. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1826–1838.

- Cai, J.; Zhang, N. Mixed Integer Nonlinear Programming for Aircraft Conflict Avoidance by Applying Velocity and Altitude Changes. Arab. J. Sci. Eng. 2019, 44, 8893–8903.

- Cai, J.; Zhang, N. Mixed integer nonlinear programming for three-dimensional aircraft conflict avoidance. PeerJ 2018, 6, e27410v1.

- Petit, M.L. Dynamic optimization. The calculus of variations and optimal control in economics and management. Int. Rev. Econ. Financ. 1994, 3, 245–247.

- Augeraud-Véron, E.; Boucekkine, R.; Veliov, V.M. Distributed optimal control models in environmental economics: A review. Math. Model. Nat. Phenom. 2019, 14, 106.

- Karush, W. Minima of functions of several variables with inequalities as side conditions. In Traces and Emergence of Nonlinear Programming; Springer: New York, NY, USA, 2014.

- Wächter, A.; Biegler, L.T. On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Program. 2006, 106, 25–57.

- González-deSantos, L.M.; Frías, E.; Martínez-Sánchez, J.; González-Jorge, H. Indoor path-planning algorithm for UAV-based contact inspection. Sensors 2021, 21, 642.

- Hart, P.E.; Nilsson, N.J.; Raphael, B. A Formal Basis for the Heuristic Determination of Minimum Cost Paths. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 100–107.

- GeoSLAM Ltd. ZEB-REVO Laser Scanner. Available online: https://download.geoslam.com/docs/zeb-revo/ZEB-REVOUserGuideV3.0.0.pdf (accessed on 3 November 2020).

- FARO, Inc. Faro Focus 3D X330. Available online: https://downloads.faro.com/index.php/s/z6nEwtBPDpGPmYW?dir=undefined&openfile=42057 (accessed on 27 August 2021).

| Scenario | 25 Discretization Points | 50 Discretization Points | 100 Discretization Points |
|---|---|---|---|
| Fixed obstacle | 0.07 | 0.09 | 0.25 |
| Perpendicular obstacle | 0.08 | 0.13 | 0.42 |
| Colinear obstacle | 0.06 | 0.10 | 0.25 |
| Oblique obstacle | 0.08 | 0.11 | 0.38 |
| Vertical avoidance | 0.08 | 0.15 | 0.57 |
| Curved accelerated obstacle trajectory | 0.10 | 0.13 | 0.42 |
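How the tabulated computation time grows with the number of discretization points can be read off by taking ratios between columns. The short sketch below uses only the values in the table; the dictionary and variable names are illustrative, and no assumption is made about units beyond what the table states.

```python
# Tabulated computation times for 25, 50 and 100 discretization points.
times = {
    "Fixed obstacle": (0.07, 0.09, 0.25),
    "Perpendicular obstacle": (0.08, 0.13, 0.42),
    "Colinear obstacle": (0.06, 0.10, 0.25),
    "Oblique obstacle": (0.08, 0.11, 0.38),
    "Vertical avoidance": (0.08, 0.15, 0.57),
    "Curved accelerated obstacle trajectory": (0.10, 0.13, 0.42),
}

# Growth factor when going from 25 to 100 discretization points (4x the points).
growth = {name: t[2] / t[0] for name, t in times.items()}

# Scenario with the largest computation time at 100 discretization points.
slowest = max(times, key=lambda name: times[name][2])

for name, factor in growth.items():
    print(f"{name}: x{factor:.1f} from 25 to 100 points")
print(f"Slowest at 100 points: {slowest}")
```

A growth factor above 4 for a fourfold increase in points indicates faster-than-linear scaling for that scenario, and a factor below 4 indicates slower-than-linear scaling over this range.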

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aldao, E.; González-deSantos, L.M.; Michinel, H.; González-Jorge, H. UAV Obstacle Avoidance Algorithm to Navigate in Dynamic Building Environments. Drones 2022, 6, 16. https://doi.org/10.3390/drones6010016
