Energy-Efficient Inference on the Edge Exploiting TinyML Capabilities for UAVs

Abstract

1. Introduction

2. Energy-Efficient Machine Learning Approaches for UAVs

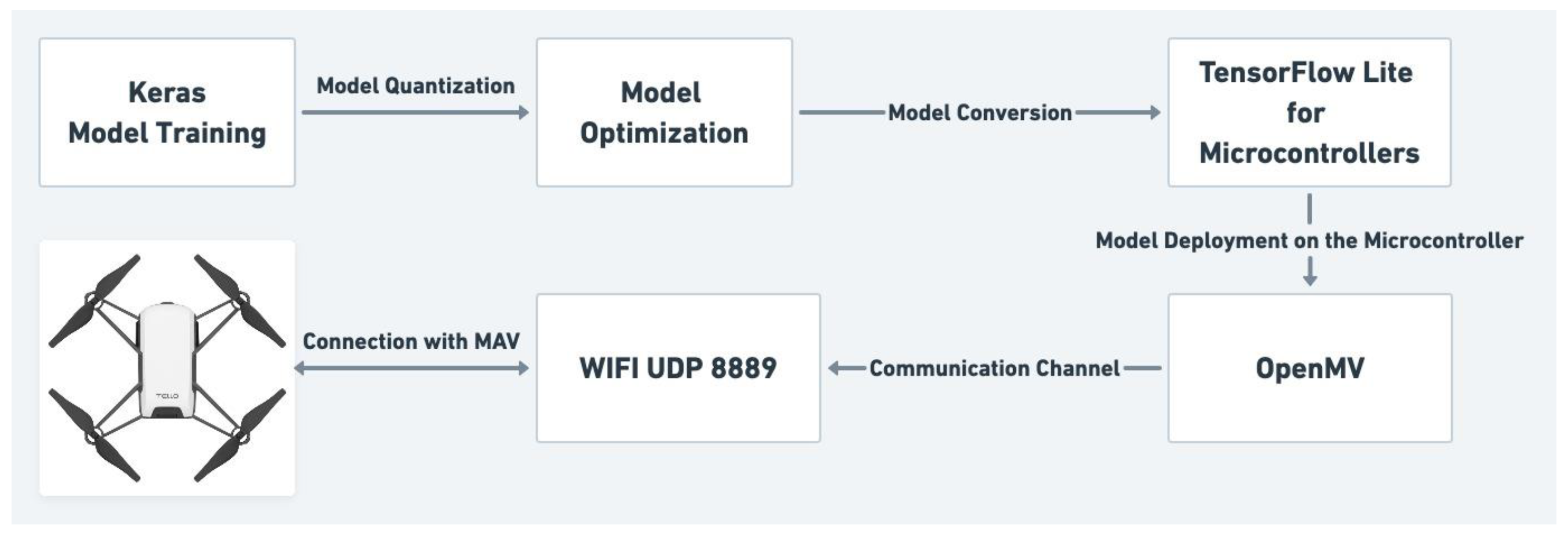

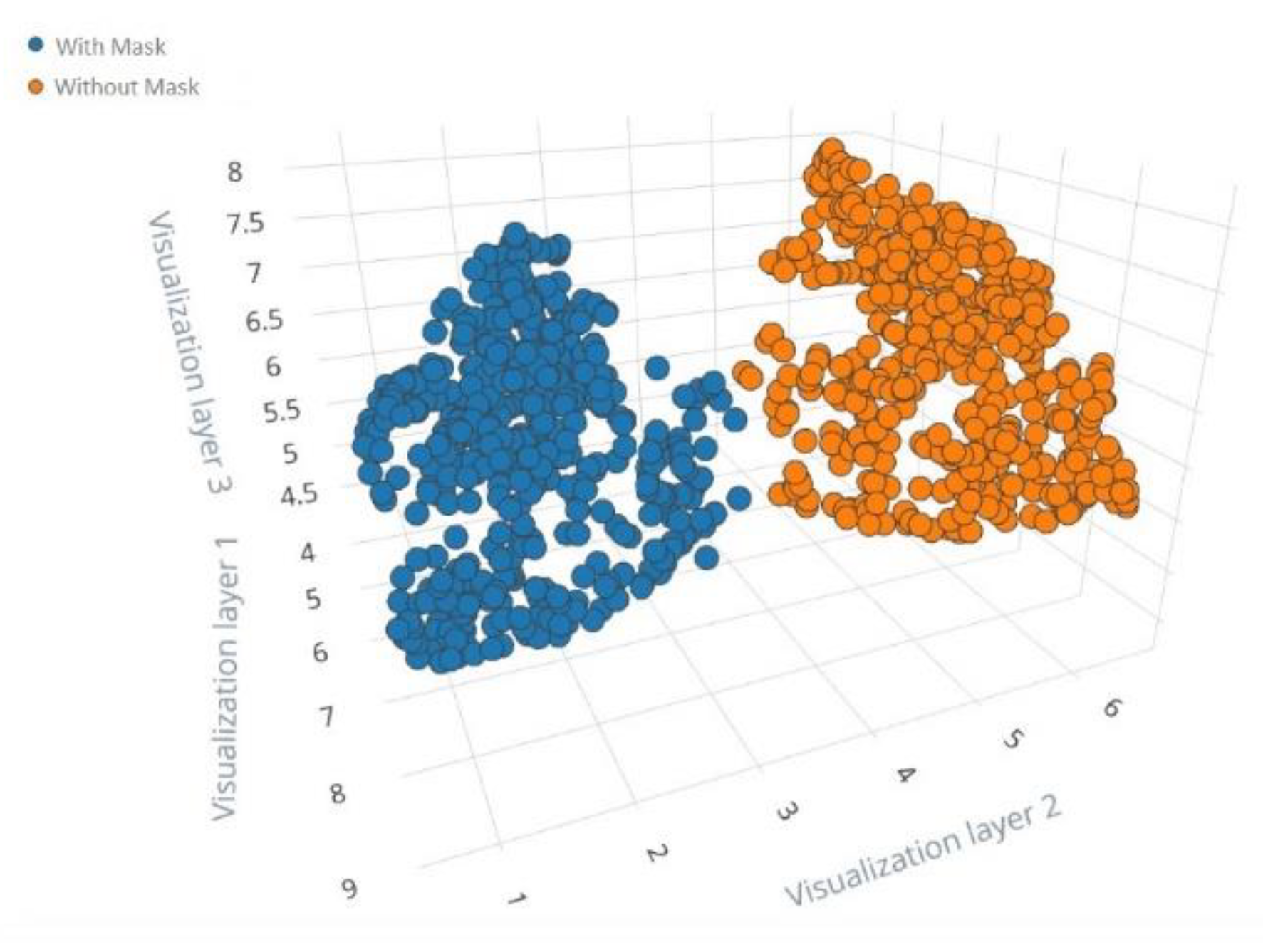

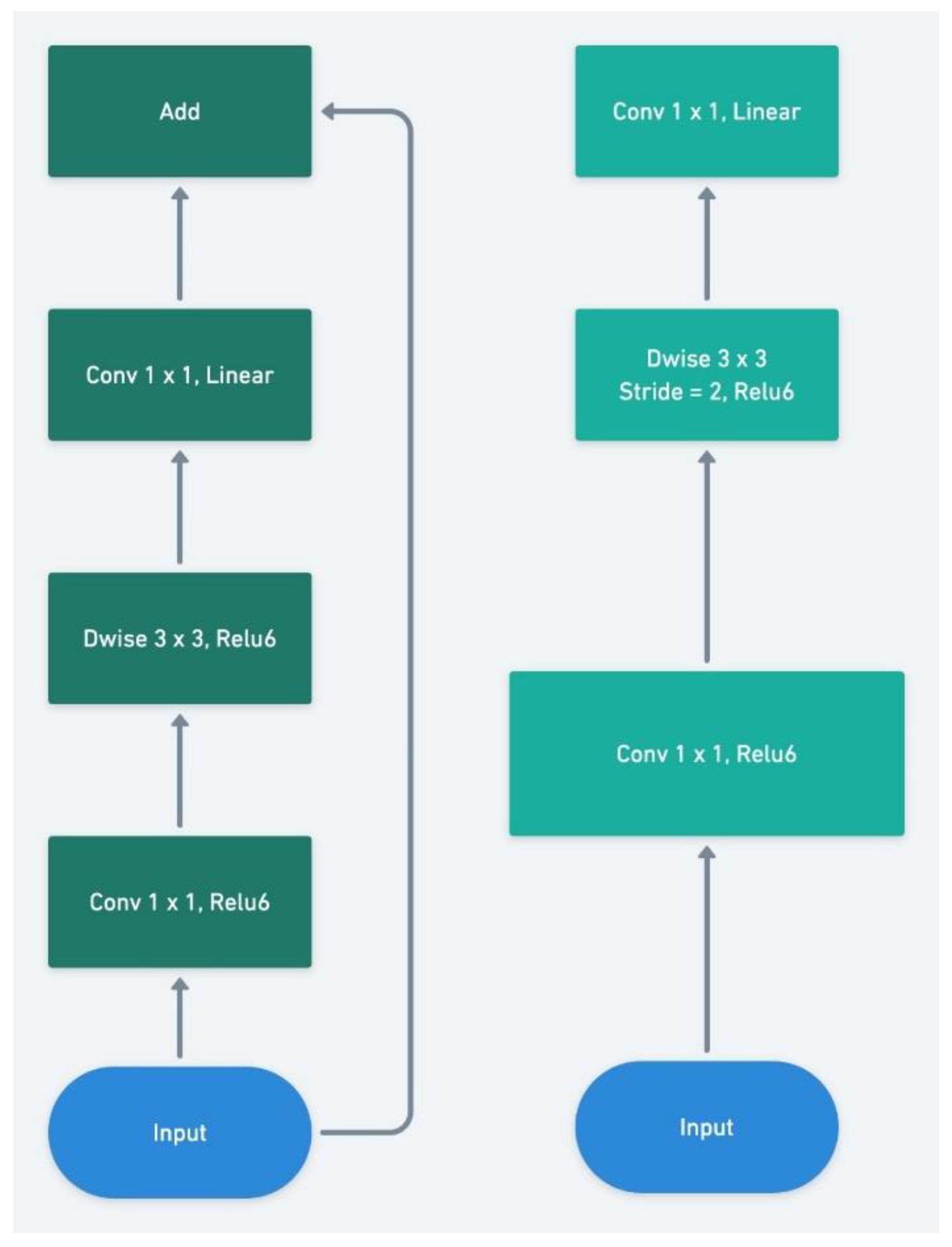

3. Proposed System

4. Experimental Validation

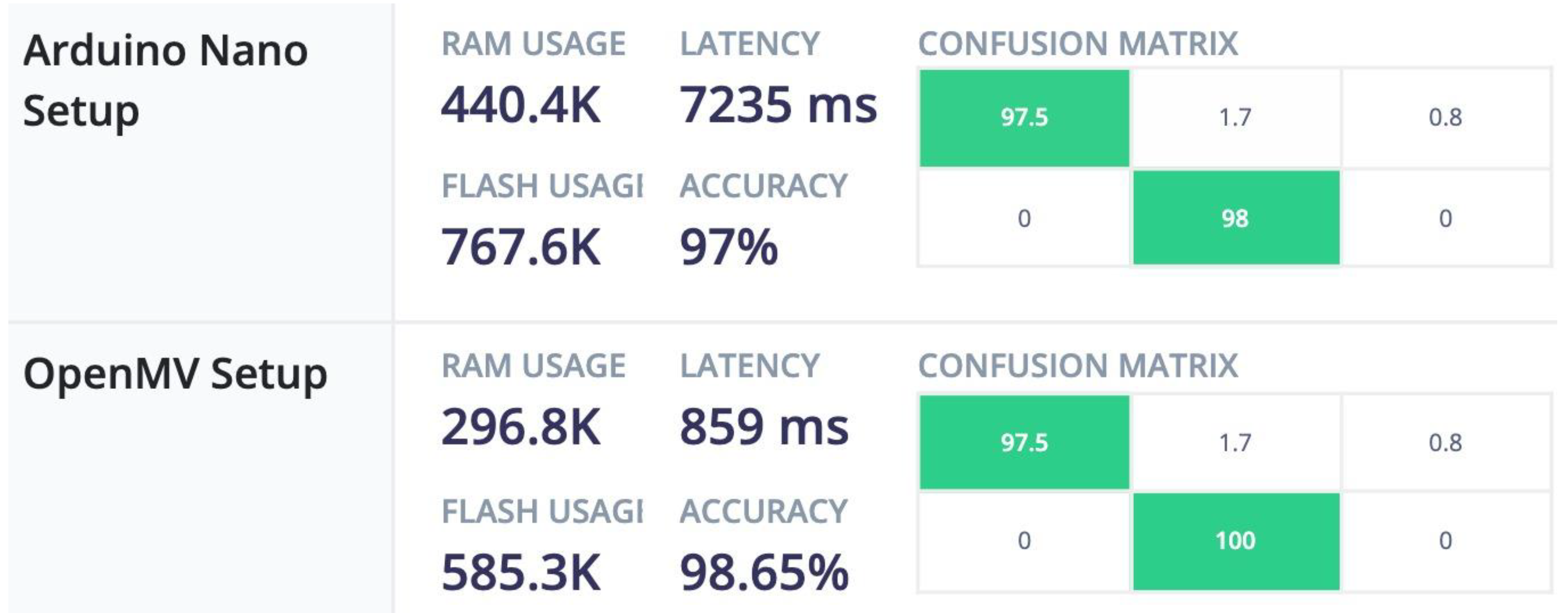

4.1. Experimental Setup

4.2. Experimental Results and Comparisons

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Atzori, L.; Iera, A.; Morabito, G. The internet of things: A survey. Comput. Netw. 2010, 54, 2787–2805.

2. Maddikunta, P.K.R.; Hakak, S.; Alazab, M.; Bhattacharya, S.; Gadekallu, T.R.; Khan, W.Z.; Pham, Q.V. Unmanned Aerial Vehicles in Smart Agriculture: Applications, Requirements, and Challenges. IEEE Sens. J. 2021, 21, 17608–17619.

3. Roberge, V.; Tarbouchi, M.; Labonté, G. Fast Genetic Algorithm Path Planner for Fixed-Wing Military UAV Using GPU. IEEE Trans. Aerosp. Electron. Syst. 2018, 54, 2105–2117.

4. Qu, T.; Zang, W.; Peng, Z.; Liu, J.; Li, W.; Zhu, Y.; Zhang, B.; Wang, Y. Construction Site Monitoring using UAV Oblique Photogrammetry and BIM Technologies. In Proceedings of the 22nd CAADRIA Conference, Suzhou, China, 5–8 April 2017.

5. Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the Use of Unmanned Aerial Systems for Environmental Monitoring. Remote Sens. 2018, 10, 641.

6. Boukoberine, M.N.; Zhou, Z.; Benbouzid, M. A critical review on unmanned aerial vehicles power supply and energy management: Solutions, strategies, and prospects. Appl. Energy 2019, 255, 113823.

7. Voghoei, S.; Tonekaboni, N.H.; Wallace, J.G.; Arabnia, H.R. Deep learning at the edge. In Proceedings of the 2018 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 12–14 December 2018.

8. Banbury, C.R.; Reddi, V.J.; Lam, M.; Fu, W.; Fazel, A.; Holleman, J.; Huang, X.; Hurtado, R.; Kanter, D.; Lokhmotov, A.; et al. Benchmarking TinyML systems: Challenges and direction. arXiv 2020, arXiv:2003.04821.

9. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016.

10. Ketkar, N. Introduction to Keras. In Deep Learning with Python; Apress: Berkeley, CA, USA, 2017; pp. 97–111.

11. Marchisio, A.; Hanif, M.A.; Khalid, F.; Plastiras, G.; Kyrkou, C.; Theocharides, T.; Shafique, M. Deep Learning for Edge Computing: Current Trends, Cross-Layer Optimizations, and Open Research Challenges. In Proceedings of the 2019 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), Miami, FL, USA, 15–17 July 2019; pp. 553–559.

12. Lu, L.; Hu, Y.; Zhang, Y.; Jia, G.; Nie, J.; Shikh-Bahaei, M. Machine Learning for Predictive Deployment of UAVs with Rate Splitting Multiple Access. In Proceedings of the 2020 IEEE Globecom Workshops, Taipei, Taiwan, 7–11 December 2020.

13. Hoseini, S.A.; Hassan, J.; Bokani, A.; Kanhere, S.S. Trajectory optimization of flying energy sources using Q-learning to recharge hotspot UAVs. In Proceedings of the IEEE INFOCOM 2020 - IEEE Conference on Computer Communications Workshops, Toronto, ON, Canada, 6–9 July 2020.

14. Wang, S.; Hosseinalipour, S.; Gorlatova, M.; Brinton, C.G.; Chiang, M. UAV-assisted Online Machine Learning over Multi-Tiered Networks: A Hierarchical Nested Personalized Federated Learning Approach. arXiv 2021, arXiv:2106.15734.

15. Pustokhina, I.V.; Pustokhin, D.A.; Kumar Pareek, P.; Gupta, D.; Khanna, A.; Shankar, K. Energy-efficient cluster-based unmanned aerial vehicle networks with deep learning-based scene classification model. Int. J. Commun. Syst. 2021, 34, e4786.

16. Qi, H.; Hu, Z.; Huang, H.; Wen, X.; Lu, Z. Energy efficient 3-D UAV control for persistent communication service and fairness: A deep reinforcement learning approach. IEEE Access 2020, 8, 53172–53184.

17. Ghavimi, F.; Riku, J. Energy-efficient UAV communications with interference management: Deep learning framework. In Proceedings of the 2020 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), Seoul, Korea, 6–9 April 2020.

18. Sanchez-Iborra, R.; Skarmeta, A.F. TinyML-enabled frugal smart objects: Challenges and opportunities. IEEE Circuits Syst. Mag. 2020, 20, 4–18.

19. RYZE. Tello. 2019. Available online: https://www.ryzerobotics.com/kr/tello (accessed on 13 March 2021).

20. Abdelkader, I.; El-Sonbaty, Y.; El-Habrouk, M. OpenMV: A Python powered, extensible machine vision camera. arXiv 2017, arXiv:1711.10464.

21. Krishnamoorthi, R. Quantizing deep convolutional networks for efficient inference: A whitepaper. arXiv 2018, arXiv:1806.08342.

22. David, R.; Duke, J.; Jain, A.; Reddi, V.J.; Jeffries, N.; Li, J.; Kreeger, N.; Nappier, I.; Natraj, M.; Regev, S.; et al. TensorFlow Lite Micro: Embedded machine learning on TinyML systems. arXiv 2020, arXiv:2010.08678.

23. Joyo, M.K.; Ahmed, S.F.; Bakar, M.I.A.; Ali, A. Horizontal Motion Control of Underactuated Quadrotor Under Disturbed and Noisy Circumstances. In Information and Communication Technology; Springer: Singapore, 2018; pp. 63–79.

24. Mohan, P.; Aditya, J.P.; Abhay, C. A tiny CNN architecture for medical face mask detection for resource-constrained endpoints. In Innovations in Electrical and Electronic Engineering; Springer: Singapore, 2021; pp. 657–670.

25. Available online: https://www.kaggle.com/prasoonkottarathil/face-mask-lite-dataset (accessed on 8 July 2021).

26. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

27. Warden, P.; Situnayake, D. TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers; O’Reilly Media: Newton, MA, USA, 2019.

28. Heim, L.; Biri, A.; Qu, Z.; Thiele, L. Measuring what Really Matters: Optimizing Neural Networks for TinyML. arXiv 2021, arXiv:2104.10645.

| Research Area | Objective | Outcome | Limitations | Refs. |
|---|---|---|---|---|
| Machine Learning Flying Base Station | The model uses back-propagation learning to predict future traffic | Simulation results show that power can be reduced by 24% | Every base station (BS) is assumed to have the same cellular traffic distribution | [12] |
| Q-Learning at a Recharge Hotspot | An optimized trajectory is proposed to solve the problem; a Markov decision process is used for wireless UAV hotspot services | The results confirm that the two benchmark strategies, random motion and static levitation, outperform SoA | Because a powerful EM wave is required, energy transfer is limited by distance | [13] |
| Distributed ML on UAV Networks for Geo-Distributed Device Clusters | A hierarchical nested personalized federated learning (HN-PFL) approach is proposed, which splits the drone-based model training problem into network-enabled macro-trajectory and learning-duration designs | The deep reinforcement learning approach reduces the percentage of energy consumption compared with greedy offloading | Local model training is performed by the UAVs, and data commonality is exploited only across the devices within the clusters | [14] |
| Cluster-Based UAV Networks with Deep Learning (DL) | Clustering with a parameter-tuned residual network (C-PTRN), which works in two main phases: clustering and scene classification | The results show that the T2FL-C technique achieves the lowest energy consumption | The T1FL-C method achieves poor results, with high energy consumption compared with related techniques | [15] |
| Deep Deterministic Policy Gradient (UC-DDPG) | 3D UAV control based on the DDPG algorithm, which considers residual energy, mobility power, circuit power, communication power, and hover power | Simulation results show that UC-DDPG, inspired by reinforcement learning, converges well | The RL model is not suitable for complex tasks or for continuous, high-dimensional spaces | [16] |
| Interference Management Deep Learning | Several key performance indicators (KPIs) are proposed to trade off energy efficiency against spectral efficiency | The approach demonstrates the advantages of intelligent energy-efficient systems | The optimization problem is highly complex and non-convex; due to the high mobility, computing the solution at every time instant is unrealistic | [17] |

| Energy consumption (kJ) | No payload | Arduino | OpenMV |
|---|---|---|---|
| Idle | 60.192 | - | - |
| Hovering | 77.976 | 96.307 | 116.280 |
| Maneuvering | 89.727 | 112.449 | 141.588 |

| Flight time (min:sec) | No payload | Arduino | OpenMV |
|---|---|---|---|
| Idle | 12:00 | - | - |
| Hovering | 09:23 | 07:50 | 06:20 |
| Maneuvering | 08:05 | 06:42 | 05:10 |
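
As a worked illustration, the payload tables above can be turned into relative overheads with respect to the no-payload baseline. The short Python sketch below assumes that each table entry is a single reported value per flight condition and that "overhead" means the percentage change against the no-payload column; the helper names `to_seconds` and `payload_overhead` are hypothetical and are not part of the original work.

```python
from typing import Tuple


# Hypothetical helper: converts the tables' "min:sec" strings to seconds.
def to_seconds(mmss: str) -> int:
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# Energy consumption (kJ) copied from the consumption table.
ENERGY_KJ = {
    "Hovering": {"No payload": 77.976, "Arduino": 96.307, "OpenMV": 116.280},
    "Maneuvering": {"No payload": 89.727, "Arduino": 112.449, "OpenMV": 141.588},
}

# Flight time (min:sec) copied from the flight-time table.
FLIGHT_TIME = {
    "Hovering": {"No payload": "09:23", "Arduino": "07:50", "OpenMV": "06:20"},
    "Maneuvering": {"No payload": "08:05", "Arduino": "06:42", "OpenMV": "05:10"},
}


def payload_overhead(state: str, payload: str) -> Tuple[float, float]:
    """Return (% extra energy, % flight time lost) of `payload` vs. no payload."""
    base_energy = ENERGY_KJ[state]["No payload"]
    base_time = to_seconds(FLIGHT_TIME[state]["No payload"])
    extra_energy = 100.0 * (ENERGY_KJ[state][payload] - base_energy) / base_energy
    time_lost = 100.0 * (base_time - to_seconds(FLIGHT_TIME[state][payload])) / base_time
    return extra_energy, time_lost


if __name__ == "__main__":
    for state in ("Hovering", "Maneuvering"):
        for payload in ("Arduino", "OpenMV"):
            energy, time_lost = payload_overhead(state, payload)
            print(f"{state:<12}{payload:<8} energy +{energy:.1f}%  flight time -{time_lost:.1f}%")
```

With these values, the OpenMV payload shortens flight time by about 32.5% when hovering (563 s to 380 s) and about 36.1% when maneuvering (485 s to 310 s), whereas the Arduino payload costs about 16.5% and 17.1%, respectively.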

| Energy consumption (kJ) | Distributed inference | OpenMV |
|---|---|---|
| Hovering | 86.320 | 116.280 |
| Maneuvering | 101.232 | 141.588 |

| Flight time (min:sec) | Distributed inference | OpenMV |
|---|---|---|
| Hovering | 08:36 | 06:20 |
| Maneuvering | 07:13 | 05:10 |
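
The comparison between distributed inference and on-board OpenMV inference can be quantified the same way. The Python sketch below is only an illustration under the assumption that the OpenMV column is the reference, so energy savings are expressed relative to OpenMV consumption and flight-time gains relative to OpenMV flight time; `to_seconds`, `RESULTS`, and `compare` are hypothetical names introduced here.

```python
from typing import Tuple


# Hypothetical helper: converts the tables' "min:sec" strings to seconds.
def to_seconds(mmss: str) -> int:
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# (energy in kJ, flight time in min:sec) copied from the two tables above.
RESULTS = {
    "Hovering": {"distributed": (86.320, "08:36"), "openmv": (116.280, "06:20")},
    "Maneuvering": {"distributed": (101.232, "07:13"), "openmv": (141.588, "05:10")},
}


def compare(state: str) -> Tuple[float, float]:
    """Return (% energy saved, % flight time gained) by distributed inference vs. OpenMV."""
    dist_energy, dist_time = RESULTS[state]["distributed"]
    omv_energy, omv_time = RESULTS[state]["openmv"]
    energy_saved = 100.0 * (omv_energy - dist_energy) / omv_energy
    time_gained = 100.0 * (to_seconds(dist_time) - to_seconds(omv_time)) / to_seconds(omv_time)
    return energy_saved, time_gained


if __name__ == "__main__":
    for state in RESULTS:
        saved, gained = compare(state)
        print(f"{state:<12} energy -{saved:.1f}%  flight time +{gained:.1f}%")
```

On the reported figures, distributed inference consumes about 25.8% less energy while hovering and about 28.5% less while maneuvering, and it extends flight time by about 35.8% (380 s to 516 s) and 39.7% (310 s to 433 s), respectively.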

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Raza, W.; Osman, A.; Ferrini, F.; Natale, F.D. Energy-Efficient Inference on the Edge Exploiting TinyML Capabilities for UAVs. Drones 2021, 5, 127. https://doi.org/10.3390/drones5040127
