Individual Tree Crown Delineation for the Species Classification and Assessment of Vital Status of Forest Stands from UAV Images

Abstract

1. Introduction

2. Materials and Methods

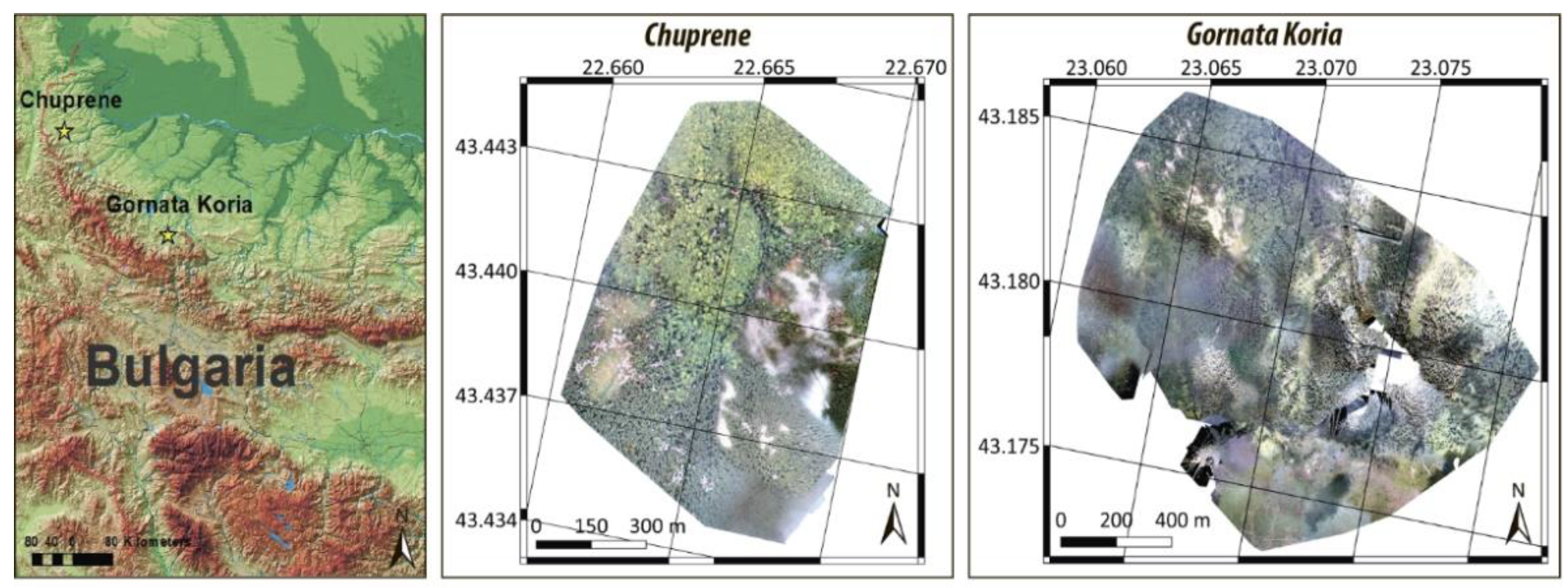

2.1. Study Area

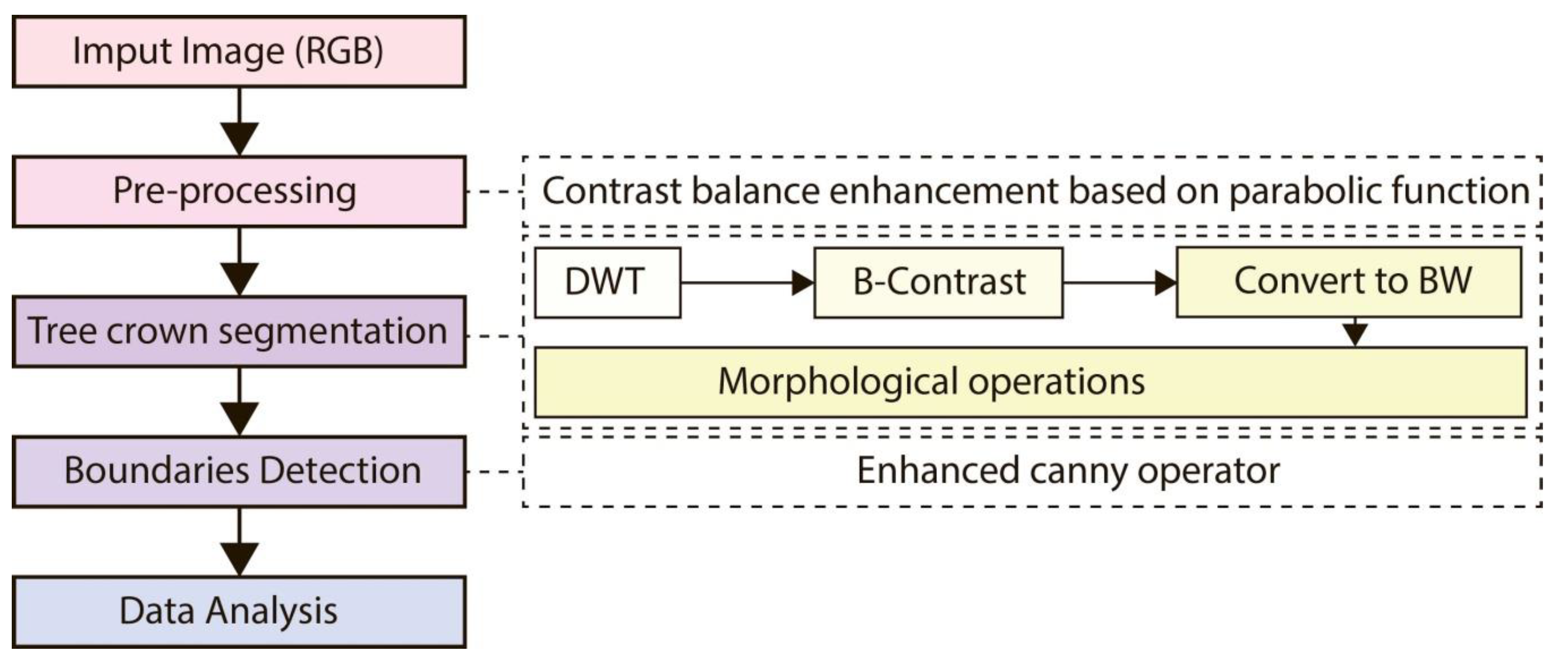

2.2. Individual Tree Crown Delineation Algorithm

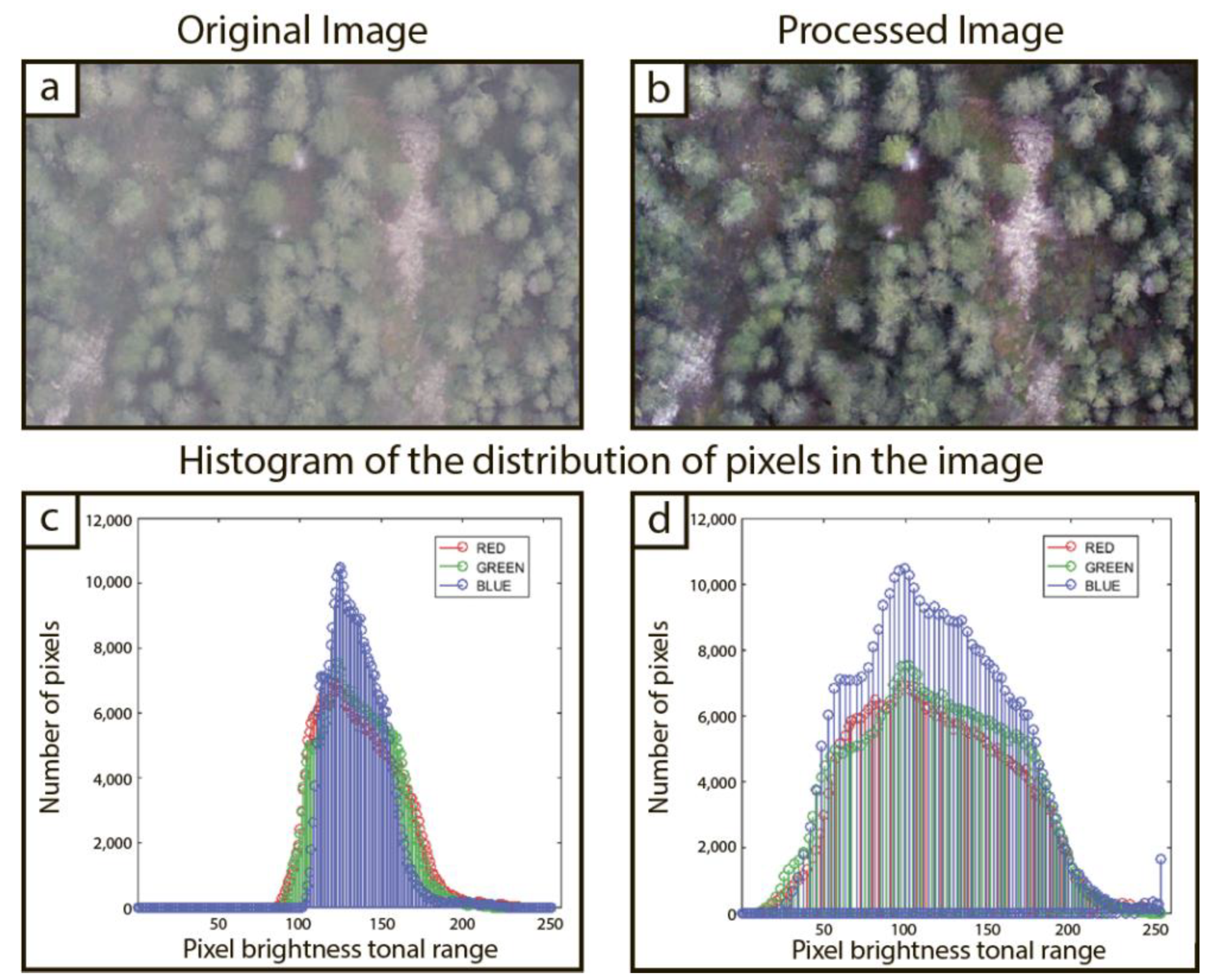

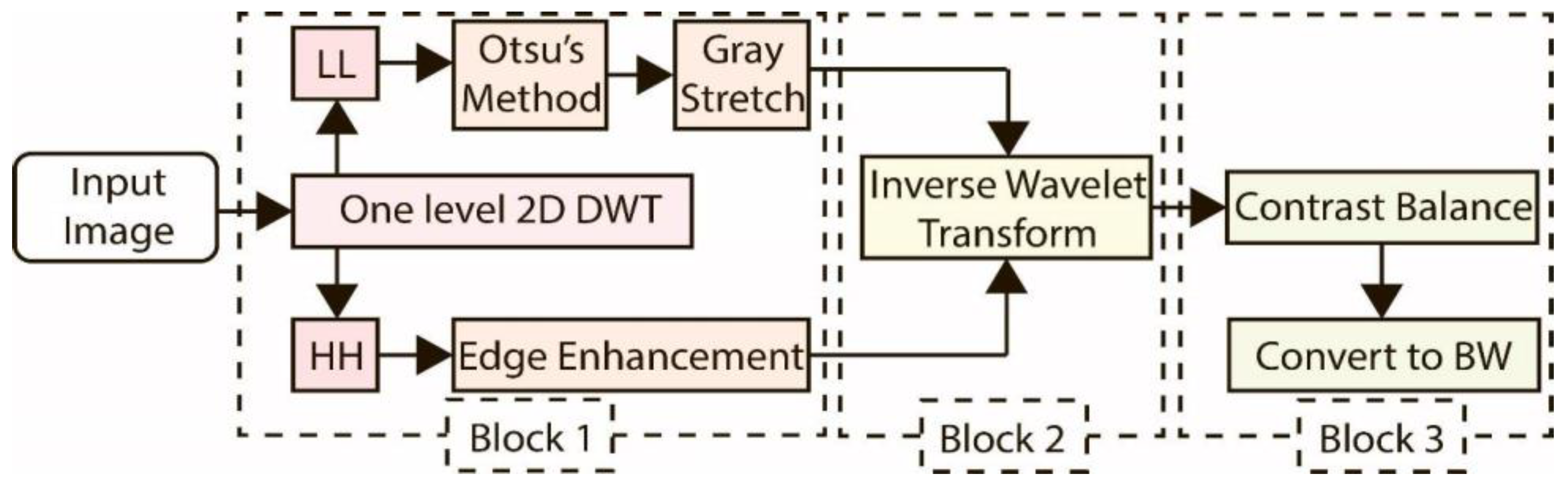

2.2.1. Preprocessing Stage

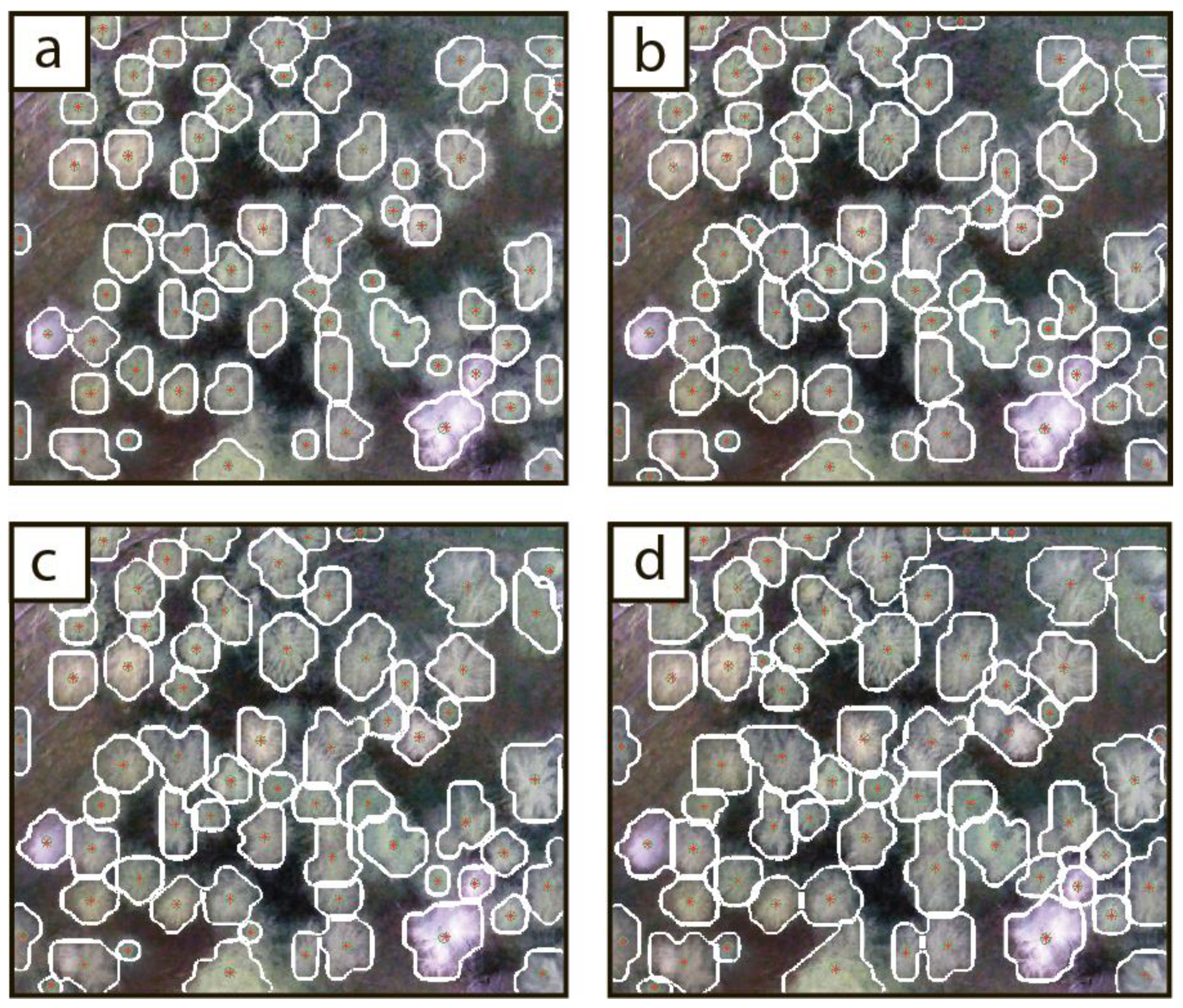

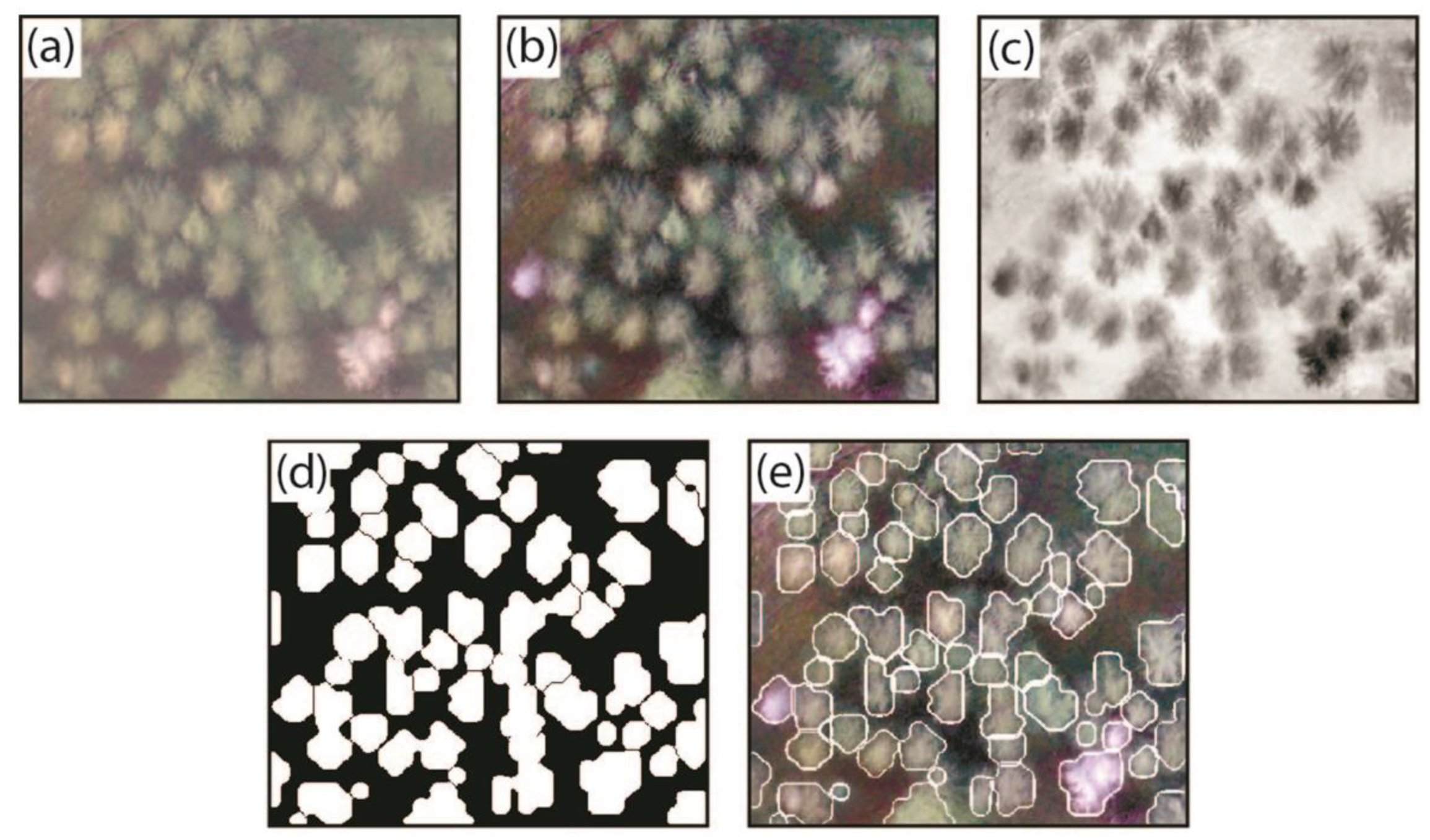

2.2.2. Tree Crown Segmentation Stage

2.2.3. Boundary Detection Stage

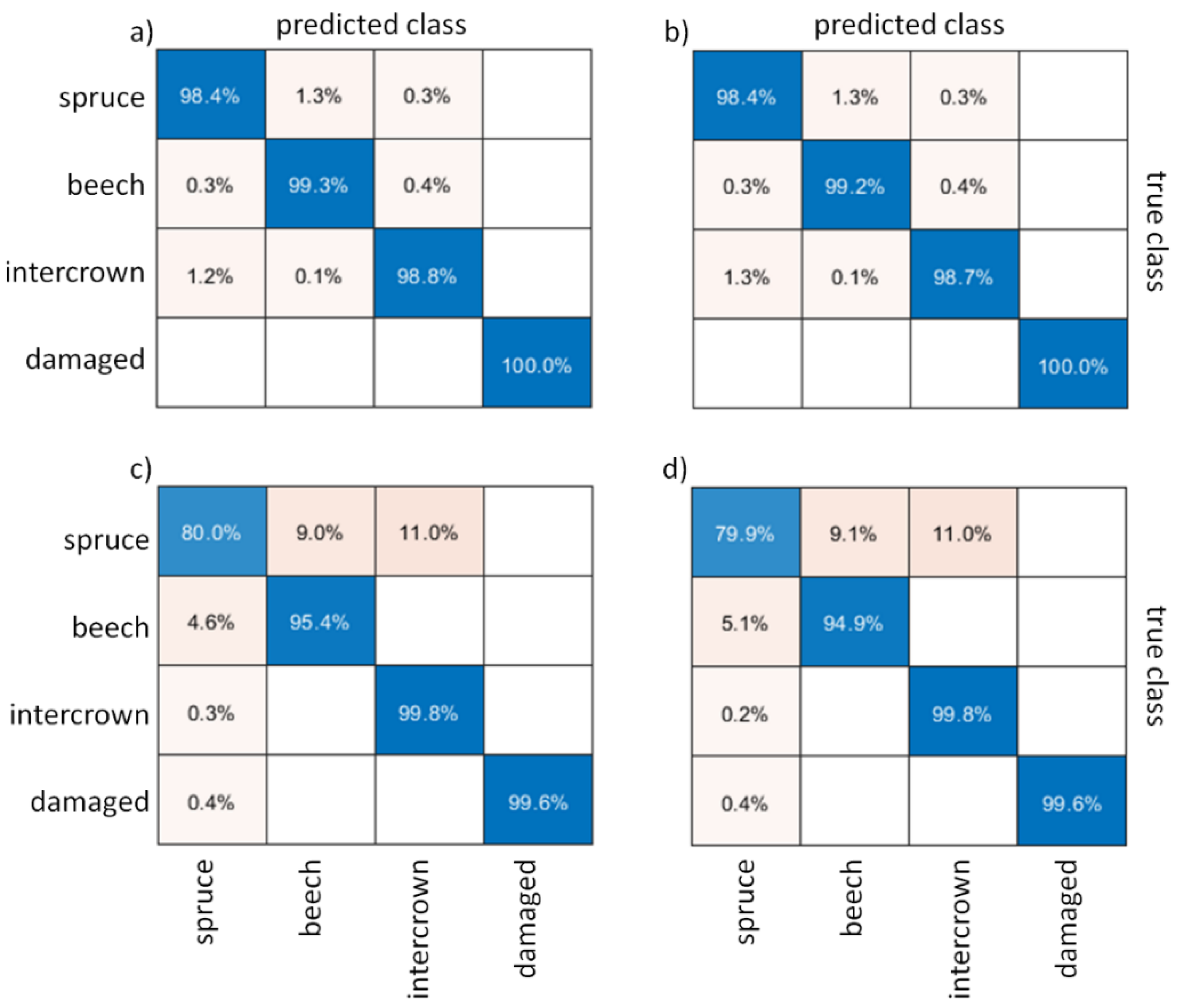

2.3. Species Classification and Vital State Assessment

2.4. Assessment Metrics

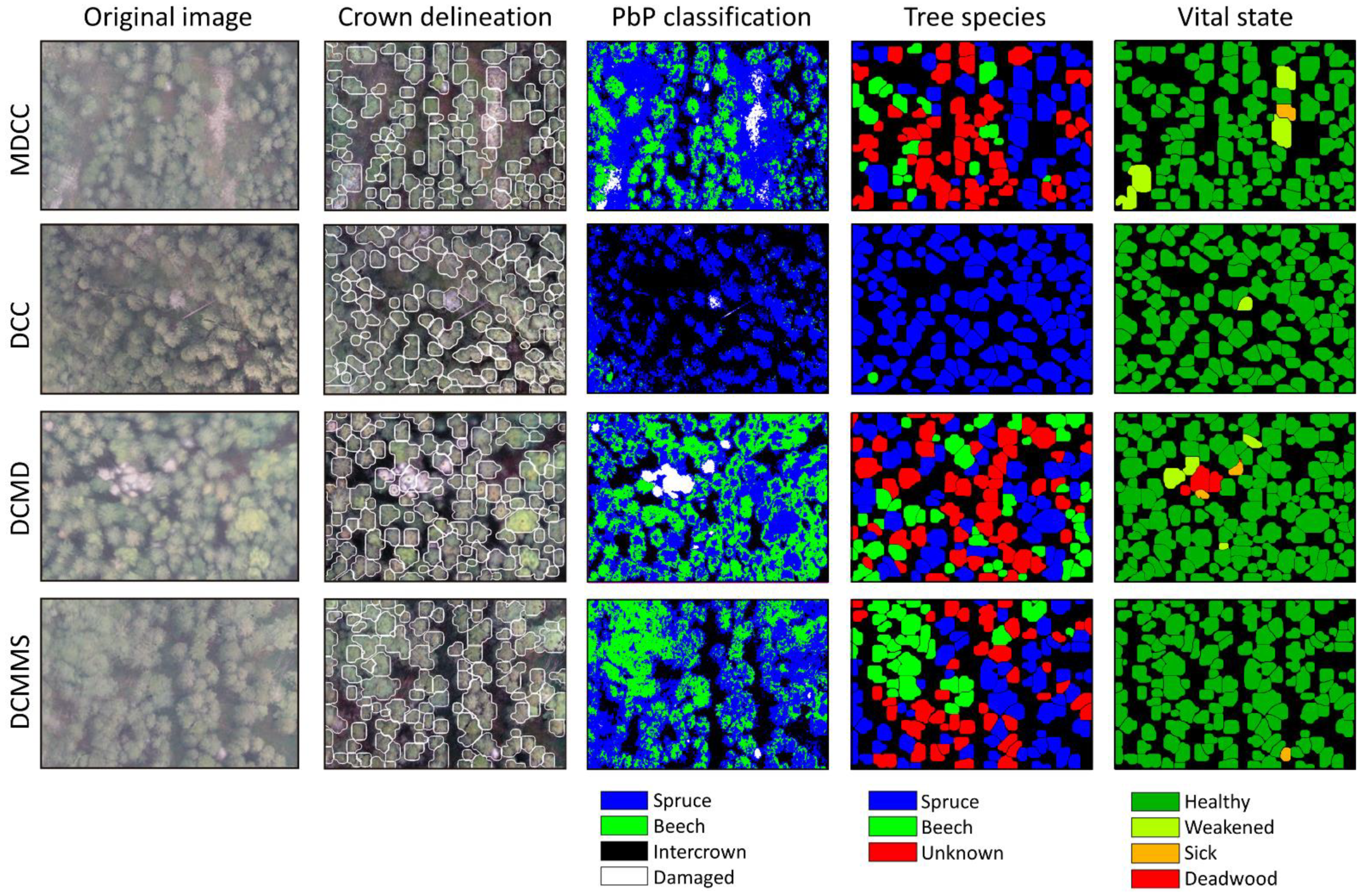

3. Experimental Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Explanation |
|---|---|
| BW | Black and White |
| DCC | Dense Canopy Coniferous |
| DCD | Dense Canopy Deciduous |
| DCDMS | Dense Canopy Deciduous Multi-Scale |
| DCMD | Dense Canopy Mixed Damaged |
| DCMMS | Dense Canopy Mixed Multi-Scale |
| DMDC | Damaged Medium Dense Canopy |
| DWT | Discrete Wavelet Transform |
| ECOC | Error Correcting Output Codes |
| ERS | Earth Remote Sensing |
| JSC | Jaccard Similarity Coefficient |
| HE | Histogram Equalization |
| HH | High-High |
| IoU | Intersection over Union |
| ITC | Individual Tree Crown |
| ITCD | Individual Tree Crown Delineation |
| LL | Low-Low |
| MDCC | Medium Dense Canopy Coniferous |
| MDC | Medium Dense Canopy |
| PbP | Pixel-by-Pixel |
| RGB | Red, Green, Blue |
| SVM | Support Vector Machine |
| UAV | Unmanned Aerial Vehicle |

References

1. Jing, L.; Hu, B.; Noland, T.; Li, J. An individual tree crown delineation method based on multi-scale segmentation of imagery. ISPRS J. Photogramm. Remote Sens. 2012, 70, 88–98.

2. Ma, Z.; Pang, Y.; Wang, D.; Liang, X.; Chen, B.; Lu, H.; Weinacker, H.; Koch, B. Individual tree crown segmentation of a larch plantation using airborne laser scanning data based on region growing and canopy morphology features. Remote Sens. 2020, 12, 1078.

3. Maschler, J.; Atzberger, C.; Immitzer, M. Individual tree crown segmentation and classification of 13 tree species using airborne hyperspectral data. Remote Sens. 2018, 10, 1218.

4. Safonova, A.; Tabik, S.; Alcaraz-Segura, D.; Rubtsov, A.; Maglinets, Y.; Herrera, F. Detection of fir trees (Abies sibirica) damaged by the bark beetle in unmanned aerial vehicle images with deep learning. Remote Sens. 2019, 11, 643.

5. Dmitriev, E.V.; Kozoderov, V.V.; Dementyev, A.O.; Safonova, A.N. Combining classifiers in the problem of thematic processing of hyperspectral aerospace images. Optoelectron. Instrum. Data Process. 2018, 54, 213–221.

6. Paris, C.; Valduga, D.; Bruzzone, L. A hierarchical approach to three-dimensional segmentation of LiDAR data at single-tree level in a multilayered forest. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4190–4203.

7. Shahzad, M.; Schmitt, M.; Zhu, X.X. Segmentation and crown parameter extraction of individual trees in an airborne TomoSAR point cloud. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2015, 40, 205–209.

8. Wagner, F.H.; Ferreira, M.P.; Sanchez, A.; Hirye, M.C.M.; Zortea, M.; Gloor, E.; Phillips, O.L.; de Souza Filho, C.R.; Shimabukuro, Y.E.; Aragão, L.E.O.C. Individual tree crown delineation in a highly diverse tropical forest using very high resolution satellite images. ISPRS J. Photogramm. Remote Sens. 2018, 145, 362–377.

9. Ferraz, A.; Saatchi, S.; Mallet, C.; Meyer, V. Lidar detection of individual tree size in tropical forests. Remote Sens. Environ. 2016, 183, 318–333.

10. Morsdorf, F.; Meier, E.; Kötz, B.; Itten, K.I.; Dobbertin, M.; Allgöwer, B. LIDAR-based geometric reconstruction of boreal type forest stands at single tree level for forest and wildland fire management. Remote Sens. Environ. 2004, 92, 353–362.

11. Vega, C.; Hamrouni, A.; Mokhtari, S.E.; Morel, J.; Bock, J.; Renaud, J.P.; Bouvier, M.; Durrieu, S. PTrees: A point-based approach to forest tree extraction from LiDAR data. Int. J. Appl. Earth Obs. Geoinf. 2014, 98.

12. Torresan, C.; Berton, A.; Carotenuto, F.; Gennaro, S.F.D.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

13. Chemura, A.; van Duren, I.C.; van Leeuwen, L.M. Determination of the age of oil palm from crown projection area detected from WorldView-2 multispectral remote sensing data: The case of Ejisu-Juaben District, Ghana. ISPRS J. Photogramm. Remote Sens. 2015, 100, 118–127.

14. Allouis, T.; Durrieu, S.; Véga, C.; Couteron, P. Stem volume and above-ground biomass estimation of individual pine trees from LiDAR data: Contribution of full-waveform signals. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 924–934.

15. Gupta, S.; Weinacker, H.; Koch, B. Comparative analysis of clustering-based approaches for 3-D single tree detection using airborne fullwave Lidar data. Remote Sens. 2010, 2, 968.

16. Yang, Q.; Su, Y.; Jin, S.; Kelly, M.; Hu, T.; Ma, Q.; Li, Y.; Song, S.; Zhang, J.; Xu, G.; et al. The influence of vegetation characteristics on individual tree segmentation methods with airborne LiDAR data. Remote Sens. 2019, 11, 2880.

17. Zhen, Z.; Quackenbush, L.J.; Zhang, L. Trends in automatic individual tree crown detection and delineation—Evolution of LiDAR data. Remote Sens. 2016, 8, 333.

18. Coomes, D.A.; Dalponte, M.; Jucker, T.; Asner, G.P.; Banin, L.F.; Burslem, D.F.R.P.; Lewis, S.L.; Nilus, R.; Phillips, O.L.; Phua, M.-H.; et al. Area-based vs tree-centric approaches to mapping forest carbon in Southeast Asian forests from airborne laser scanning data. Remote Sens. Environ. 2017, 194, 77–88.

19. Wermelinger, B. Ecology and management of the spruce bark beetle Ips typographus—A review of recent research. For. Ecol. Manag. 2004, 202, 67–82.

20. Michigan Nursery and Landscape Association. 3PM Report: European Spruce Bark Beetle. Available online: https://www.mnla.org/story/3pm_report_european_spruce_bark_beetle (accessed on 25 August 2020).

21. Eichhorn, J.; Roskams, P.; Potocic, N.; Timmermann, V.; Ferretti, M.; Mues, V.; Szepesi, A.; Durrant, D.; Seletkovic, I.; Schroeck, H.-W.; et al. Part IV: Visual Assessment of Crown Condition and Damaging Agents; Thünen Institute of Forest Ecosystems: Braunschweig, Germany, 2016; ISBN 978-3-86576-162-0.

22. Samanta, S.; Mukherjee, A.; Ashour, A.S.; Dey, N.; Tavares, J.M.R.S.; Abdessalem Karâa, W.B.; Taiar, R.; Azar, A.T.; Hassanien, A.E. Log transform based optimal image enhancement using firefly algorithm for autonomous mini unmanned aerial vehicle: An application of aerial photography. Int. J. Image Graph. 2018, 18, 1850019.

23. Haicheng, W.; Mingxia, X.; Ling, Z.; Miaojun, W.; Xing, W.; Xiuxia, Z.; Bai, Z. Study on Monitoring Technology of UAV Aerial Image Enhancement for Burning Straw. In Proceedings of the 2016 Chinese Control and Decision Conference (CCDC), Yinchuan, China, 28–30 May 2016; IEEE Industrial Electronics (IE) Chapter: Singapore, 2016; pp. 4321–4325.

24. Kim, B.H.; Kim, M.Y. Anti-Saturation and Contrast Enhancement Technique Using Interlaced Histogram Equalization (IHE) for Improving Target Detection Performance of EO/IR Images. In Proceedings of the 2017 17th International Conference on Control, Automation and Systems (ICCAS), Jeju, Korea, 18–21 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1692–1695.

25. Guo, L.J. Balance contrast enhancement technique and its application in image colour composition. Int. J. Remote Sens. 1991, 12, 2133–2151.

26. Archana; Verma, A.K.; Goel, S.; Kumar, N.S. Gray Level Enhancement to Emphasize Less Dynamic Region within Image Using Genetic Algorithm. In Proceedings of the 2013 3rd IEEE International Advance Computing Conference (IACC), Ghaziabad, India, 22–23 February 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1171–1176.

27. Tausif, M.; Khan, E.; Hasan, M.; Reisslein, M. SMFrWF: Segmented modified fractional wavelet filter: Fast low-memory discrete wavelet transform (DWT). IEEE Access 2019, 7, 84448–84467.

28. Pradham, P.; Younan, N.H.; King, R.L. Chapter 16—Concepts of Image Fusion in Remote Sensing Applications. In Image Fusion; Stathaki, T., Ed.; Academic Press: Oxford, UK, 2008; pp. 393–428. ISBN 978-0-12-372529-5.

29. Efford, N. Digital Image Processing: A Practical Introduction Using Java (with CD-ROM), 1st ed.; Addison-Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 2000; ISBN 978-0-201-59623-6.

30. Perera, R.; Premasiri, S. Hardware Implementation of Essential Pre-Processing Morphological Operations in Image Processing. In Proceedings of the 2017 6th National Conference on Technology and Management (NCTM), Malabe, Sri Lanka, 27 January 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 142–146.

31. Hamad, Y.A.; Safonova, A.N. A Program for Highlighting the Crown of a Tree Based on UAV Data. Certificate of State Registration of a Computer Program, 2020. [Хамад, Ю.А.; Сафонова, А.Н. Программа для выделения кроны дерева по данным БПЛА. Свидетельство о государственной регистрации программы для ЭВМ, 2020]. SibFU Scientific and Innovation Portal [Научно-Инновационный Портал СФУ]. Available online: http://research.sfu-kras.ru/publications/publication/44104840 (accessed on 3 August 2021).

32. Baştan, M.; Bukhari, S.S.; Breuel, T. Active canny: Edge detection and recovery with open active contour models. IET Image Process. 2017, 11, 1325–1332.

33. Ito, K.; Xiong, K. Gaussian filters for nonlinear filtering problems. IEEE Trans. Autom. Control. 2000, 45, 910–927.

34. Dietterich, T.G.; Bakiri, G. Solving multiclass learning problems via error-correcting output codes. J. Artif. Intell. Res. 1994, 2, 263–286.

35. Schölkopf, B.; Smola, A.J. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; The MIT Press: Cambridge, MA, USA; London, UK, 2002.

36. Escalera, S.; Pujol, O.; Radeva, P. Error-correcting output codes library. J. Mach. Learn. Res. 2010, 11, 661–664.

37. Arnaboldi, V.; Passarella, A.; Conti, M.; Dunbar, R.I.M. Chapter 5—Evolutionary Dynamics in Twitter Ego Networks. In Online Social Networks; Arnaboldi, V., Passarella, A., Conti, M., Dunbar, R.I.M., Eds.; Computer Science Reviews and Trends; Elsevier: Boston, MA, USA, 2015; pp. 75–92. ISBN 978-0-12-803023-3.

38. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection over Union: A Metric and A Loss for Bounding Box Regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; CVPR 2019 Open Access, Provided by the Computer Vision Foundation: Long Beach, CA, USA, 2019; pp. 658–666.

| Channel | Min Value | Max Value | Mean Value (Input Image) | Mean Value (Output Image) |
|---|---|---|---|---|
| Red | 0 | 255 | 40 | 115 |
| Green | 0 | 255 | 59.53 | 116 |
| Blue | 0 | 255 | 29.9 | 113 |
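Per-channel statistics of the kind tabulated above can be computed with a few lines of code. The following is a minimal sketch, assuming the image is available as a list of `(r, g, b)` tuples with 8-bit values; the function name `channel_stats` is hypothetical, and the enhancement step that turns the input image into the output image is not reproduced here.

```python
def channel_stats(pixels):
    """Return min, max, and mean for each RGB channel.

    `pixels` is assumed to be a non-empty list of (r, g, b) tuples
    with values in 0..255 (an assumption, not the authors' data format).
    """
    names = ("Red", "Green", "Blue")
    stats = {}
    for idx, name in enumerate(names):
        values = [p[idx] for p in pixels]
        stats[name] = {
            "min": min(values),
            "max": max(values),
            "mean": sum(values) / len(values),
        }
    return stats


# Usage on a tiny made-up image of three pixels.
sample = [(0, 10, 20), (255, 30, 40), (45, 50, 60)]
for channel, s in channel_stats(sample).items():
    print(channel, s["min"], s["max"], round(s["mean"], 2))
```

Running the same function on the image before and after enhancement would give the two mean columns; a higher output mean indicates a brighter channel on average.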

| Step | Pre-Processing Stages of the Segmented Image |
|---|---|
| 1 | A Gaussian filter (Ito and Xiong [33]) is used to remove noise from the image. |
| 2 | The image gradient is computed. |
| 3 | Non-maximum suppression is performed. This step removes pixels that are not part of an edge, so only thin lines of edge pixels remain. |
| 4 | Hysteresis thresholding is the last step, in which two thresholds, high and low, are used. |
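The four steps in the table above correspond to the classic Canny edge detector. The sketch below illustrates them in plain Python under stated assumptions: a 5×5 Gaussian kernel, Sobel operators for the gradient, the textbook gradient magnitude √(Gx² + Gy²) and direction atan2(Gy, Gx), and 8-connected hysteresis. The kernel size, the operators, the threshold values, and all function names are illustrative choices, not parameters taken from the source.

```python
import math


def convolve(img, kernel):
    """2-D correlation with edge replication; img and kernel are lists of lists."""
    h, w = len(img), len(img[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for j in range(kh):
                for i in range(kw):
                    yy = min(max(y + j - oy, 0), h - 1)
                    xx = min(max(x + i - ox, 0), w - 1)
                    acc += kernel[j][i] * img[yy][xx]
            out[y][x] = acc
    return out


def gaussian_kernel(size=5, sigma=1.0):
    """Normalized size x size Gaussian kernel."""
    c = size // 2
    k = [[math.exp(-((x - c) ** 2 + (y - c) ** 2) / (2 * sigma ** 2))
          for x in range(size)] for y in range(size)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def canny(img, low, high, sigma=1.0):
    """Return a boolean edge map for a grayscale image (list of lists)."""
    h, w = len(img), len(img[0])
    # Step 1: Gaussian smoothing to suppress noise.
    smooth = convolve(img, gaussian_kernel(5, sigma))
    # Step 2: gradient components and magnitude.
    gx = convolve(smooth, SOBEL_X)
    gy = convolve(smooth, SOBEL_Y)
    mag = [[math.hypot(gx[y][x], gy[y][x]) for x in range(w)] for y in range(h)]
    # Step 3: non-maximum suppression along the gradient direction.
    thin = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            angle = math.degrees(math.atan2(gy[y][x], gx[y][x])) % 180
            if angle < 22.5 or angle >= 157.5:
                dy, dx = 0, 1
            elif angle < 67.5:
                dy, dx = 1, 1
            elif angle < 112.5:
                dy, dx = 1, 0
            else:
                dy, dx = 1, -1
            m = mag[y][x]
            if m >= mag[y + dy][x + dx] and m >= mag[y - dy][x - dx]:
                thin[y][x] = m
    # Step 4: hysteresis; strong pixels seed edges, weak pixels join if connected.
    edges = [[False] * w for _ in range(h)]
    stack = [(y, x) for y in range(h) for x in range(w) if thin[y][x] >= high]
    for y, x in stack:
        edges[y][x] = True
    while stack:
        y, x = stack.pop()
        for ny in range(max(y - 1, 0), min(y + 2, h)):
            for nx in range(max(x - 1, 0), min(x + 2, w)):
                if not edges[ny][nx] and thin[ny][nx] >= low:
                    edges[ny][nx] = True
                    stack.append((ny, nx))
    return edges


# Usage: a synthetic 10x10 image with a vertical step between columns 4 and 5.
step_image = [[0.0] * 5 + [100.0] * 5 for _ in range(10)]
edge_map = canny(step_image, low=100.0, high=200.0)
```

The two thresholds trade sensitivity against noise: pixels above the high threshold always count as edges, while pixels between the two thresholds are kept only when they touch an already accepted edge pixel.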

| Data Set | Image Area | Area of Missing Trees, % | Mean Number of Crowns per Plot | Number of Delineated Trees | Number of Lost Trees | JSC, % | IoU, % |
|---|---|---|---|---|---|---|---|
| 1 | MDC | 33 | 130 | 124 | 6 | 95.38 | 92.72 |
| 1 | DCC | 26 | 171 | 159 | 12 | 92.98 | 94.50 |
| 1 | DCD | 36 | 77 | 74 | 3 | 96.1 | 93.89 |
| 1 | DCDMS | 19 | 340 | 310 | 30 | 91.18 | 90.67 |
| 1 | DMDC | 16 | 112 | 107 | 5 | 95.57 | 94.38 |
| 2 | MDCC | 38 | 118 | 113 | 5 | 95.76 | 96.64 |
| 2 | DCC | 34 | 127 | 124 | 3 | 97.64 | 95.44 |
| 2 | DCMD | 32 | 157 | 151 | 6 | 96.18 | 95.76 |
| 2 | DCDMS | 33 | 139 | 132 | 7 | 94.96 | 94.11 |
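To make the two accuracy columns easier to interpret, here is a minimal sketch of how such values could be computed. It assumes binary crown masks stored as lists of lists of 0/1 for the pixel-level IoU, and simple tree counts for a delineation rate. The function names are hypothetical. For the first row, 124 delineated crowns out of 130 gives about 95.38%, which agrees with the tabulated JSC; this is an observation about the numbers, and the exact metric definitions used for the table are not given in this section.

```python
def iou(mask_a, mask_b):
    """Intersection over Union of two equally sized binary masks (0/1 lists of lists)."""
    inter = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                inter += 1
            if a or b:
                union += 1
    # Two empty masks are treated as a perfect match (a convention chosen here).
    return inter / union if union else 1.0


def delineation_rate(n_delineated, n_total):
    """Percentage of reference crowns that were delineated."""
    return 100.0 * n_delineated / n_total


# Usage: a predicted crown mask against a reference mask on a 3x4 grid.
reference = [[0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
predicted = [[0, 1, 1, 1],
             [0, 1, 0, 0],
             [0, 0, 0, 0]]
print(round(iou(reference, predicted), 2))   # 3 shared pixels out of 5 in the union
print(round(delineation_rate(124, 130), 2))  # first row of the table
```

The number of lost trees in each row equals the mean number of crowns minus the number of delineated trees (for example, 130 − 124 = 6), so a count-based rate can be recomputed directly from the table.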

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Safonova, A.; Hamad, Y.; Dmitriev, E.; Georgiev, G.; Trenkin, V.; Georgieva, M.; Dimitrov, S.; Iliev, M. Individual Tree Crown Delineation for the Species Classification and Assessment of Vital Status of Forest Stands from UAV Images. Drones 2021, 5, 77. https://doi.org/10.3390/drones5030077
