SeeCucumbers: Using Deep Learning and Drone Imagery to Detect Sea Cucumbers on Coral Reef Flats

Abstract

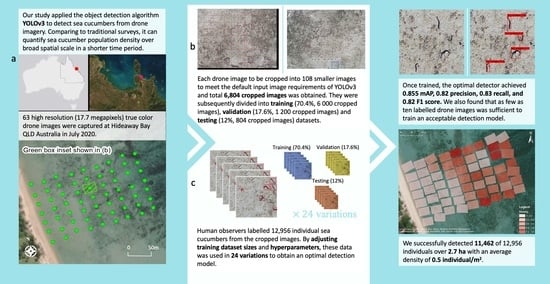

1. Introduction

2. Methods

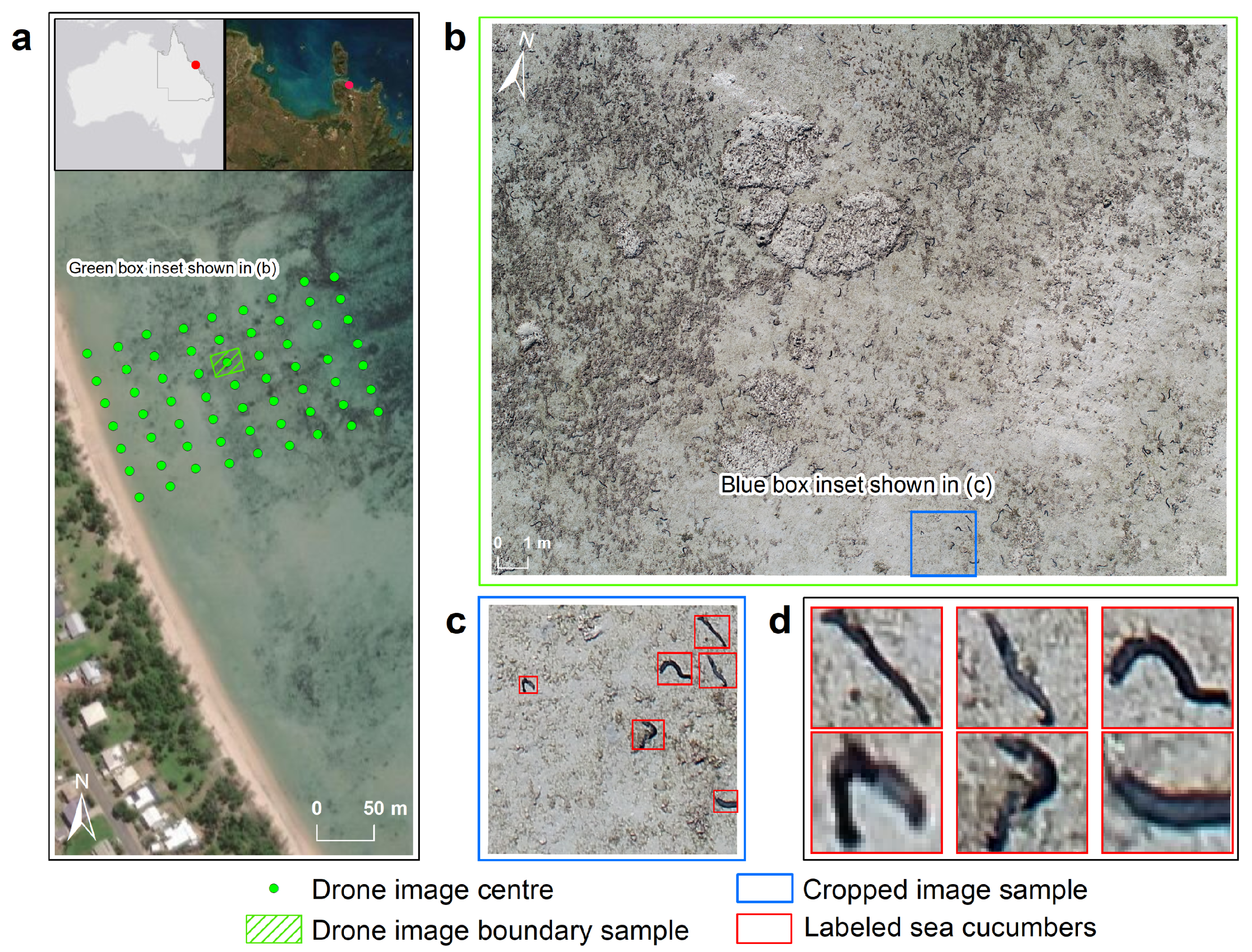

2.1. Study Site

2.2. Data Acquisition

2.3. Data Processing

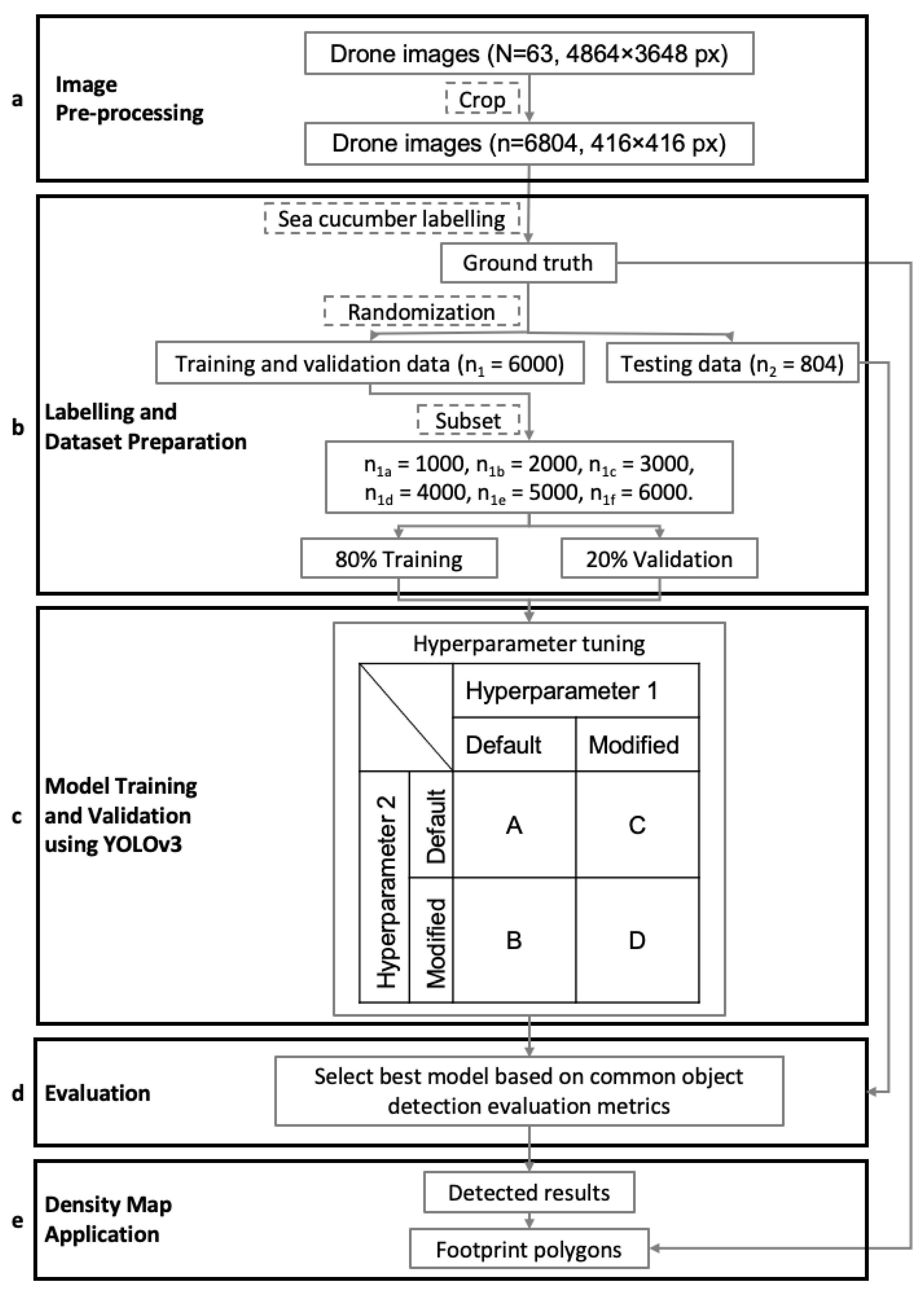

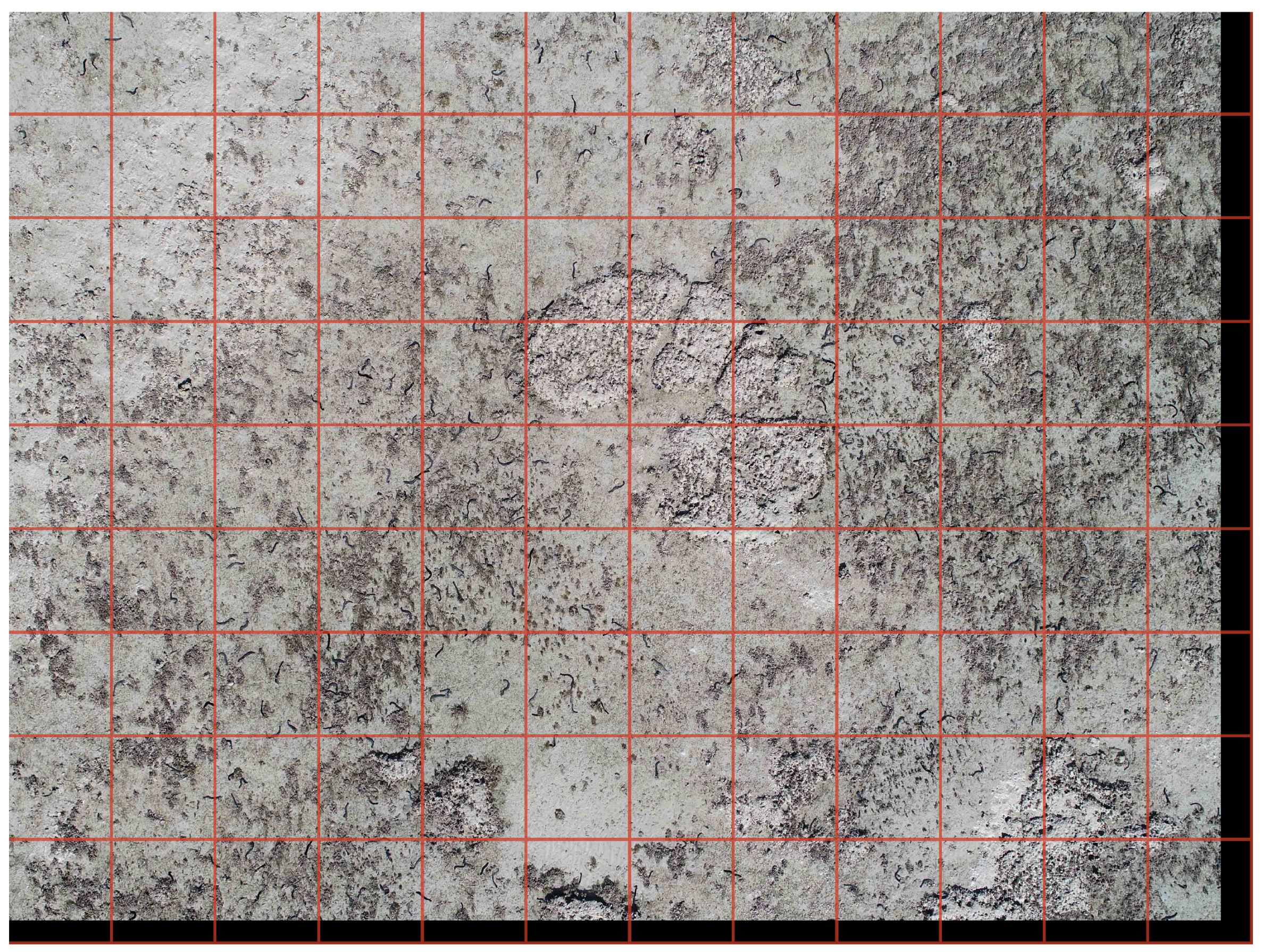

2.3.1. Image Pre-Processing

2.3.2. Labelling and Dataset Preparation

2.3.3. Model Training and Validation

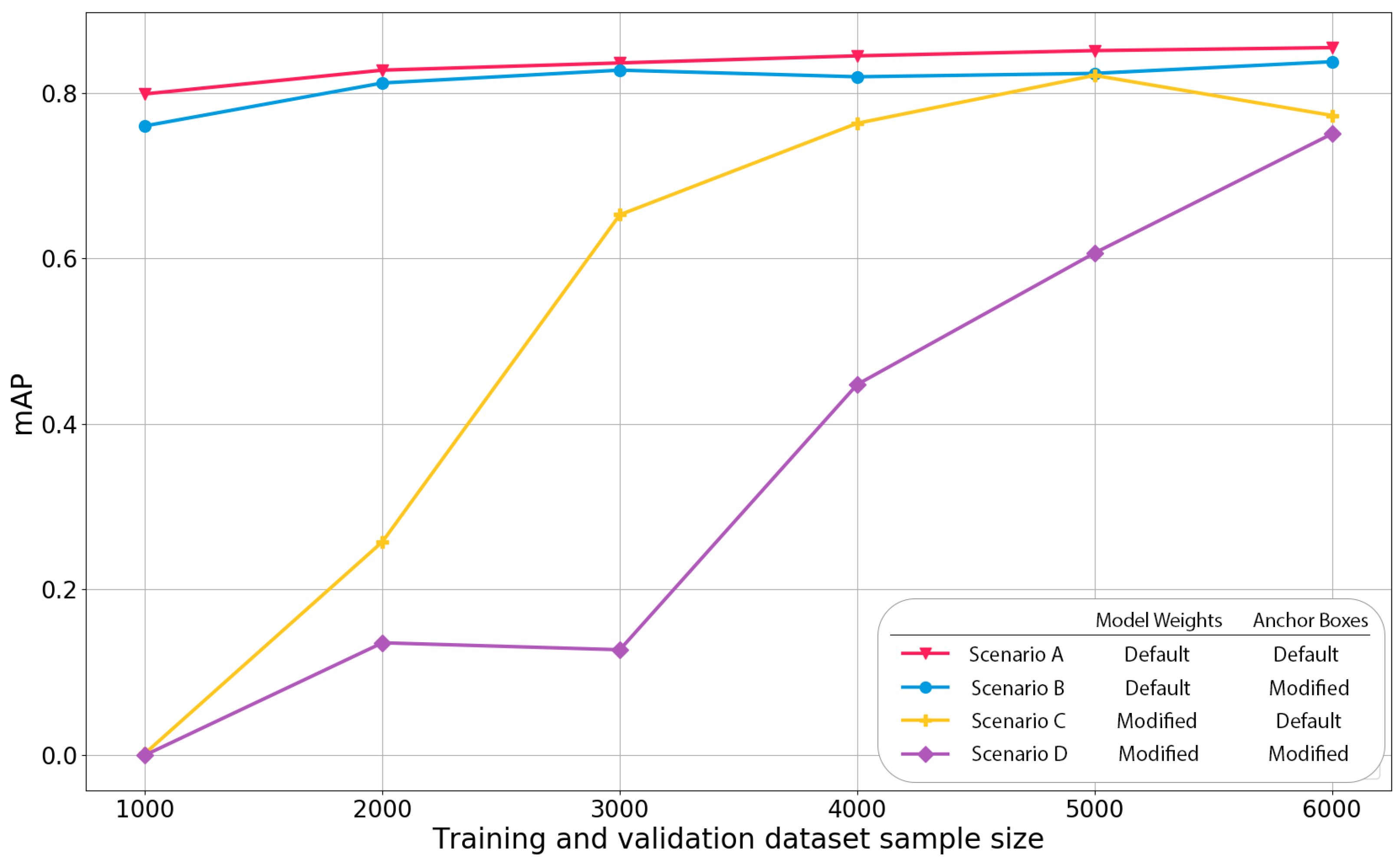

- Scenario A (no hyperparameters tuned): default pre-trained model weights and default anchor boxes.

- Scenario B (one hyperparameter tuned): default pre-trained model weights and modified anchor boxes.

- Scenario C (one hyperparameter tuned): modified pre-trained model weights and default anchor boxes.

- Scenario D (two hyperparameters tuned): modified pre-trained model weights and modified anchor boxes.
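The four scenarios, crossed with the six training dataset sizes reported in the results and appendix tables (1000 to 6000), give 24 training runs. A minimal sketch of that experiment grid follows; the names `SCENARIOS`, `TRAINING_SIZES`, and `build_runs` are hypothetical and only illustrate the bookkeeping, not the authors' training code.

```python
from itertools import product

# Training dataset sizes as listed in the results and appendix tables.
TRAINING_SIZES = [1000, 2000, 3000, 4000, 5000, 6000]

# Each scenario toggles two hyperparameters (illustrative flag names).
SCENARIOS = {
    "A": {"modified_weights": False, "modified_anchors": False},
    "B": {"modified_weights": False, "modified_anchors": True},
    "C": {"modified_weights": True, "modified_anchors": False},
    "D": {"modified_weights": True, "modified_anchors": True},
}


def build_runs():
    """Enumerate every (scenario, training size) combination."""
    runs = []
    for (name, flags), size in product(SCENARIOS.items(), TRAINING_SIZES):
        runs.append(
            {
                "scenario": name,
                "training_size": size,
                "hyperparameters_tuned": sum(flags.values()),
                **flags,
            }
        )
    return runs


if __name__ == "__main__":
    for run in build_runs():
        print(run)
```

The runs come out ordered by scenario and then by training size, which matches the row order of the results table.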

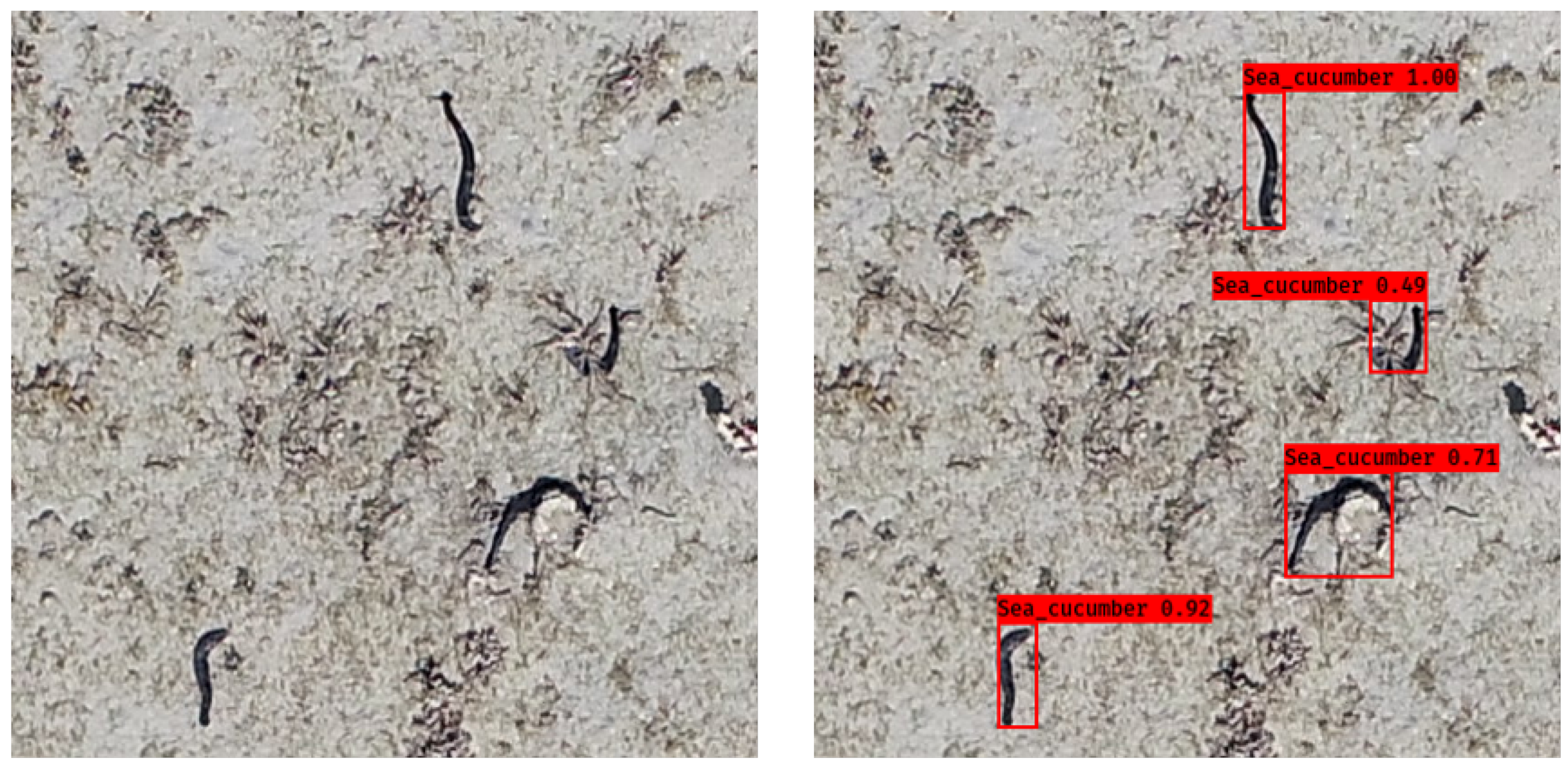

2.3.4. Sea Cucumber Detection Evaluation

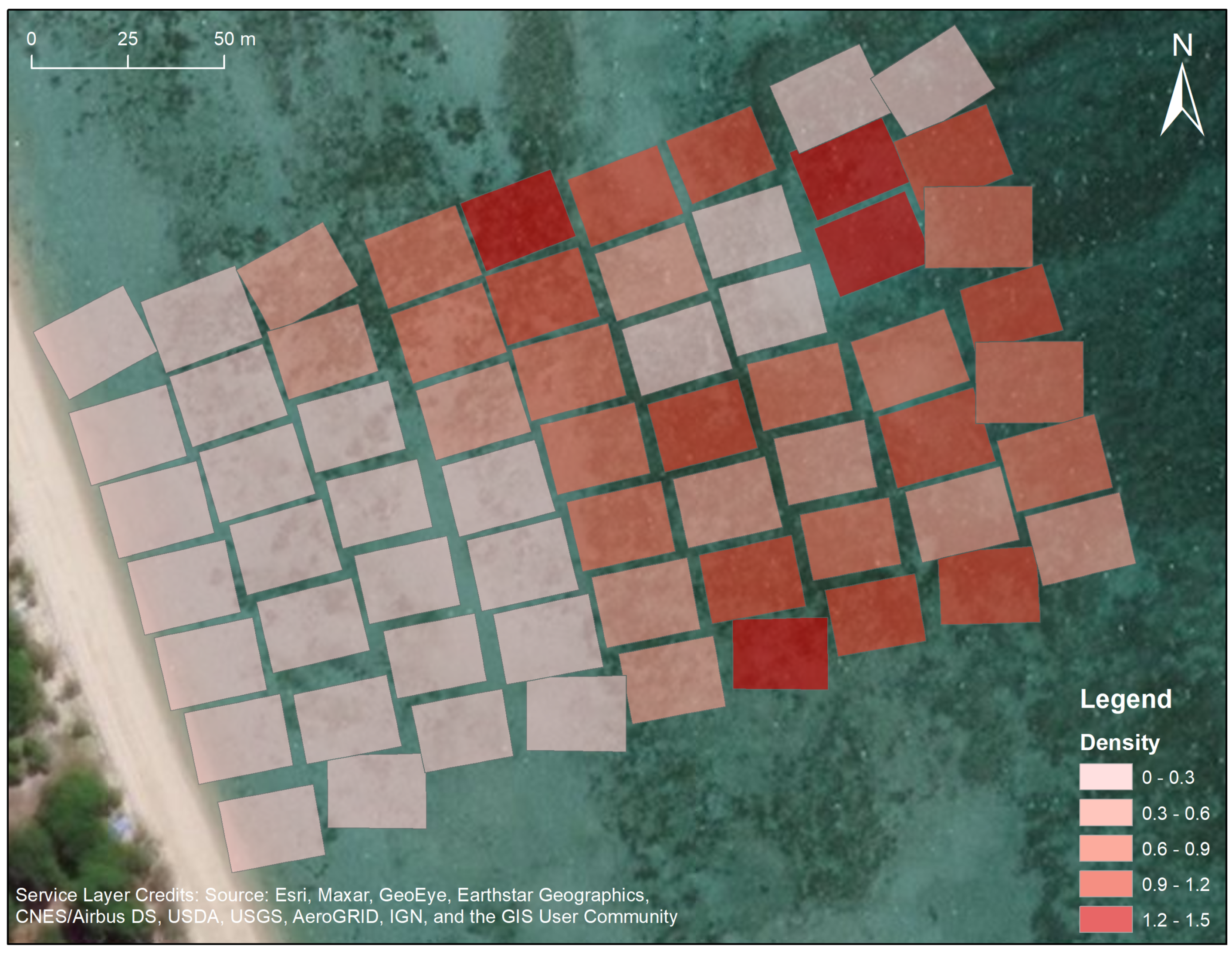

2.3.5. Mapping Sea Cucumber Density

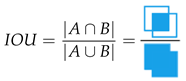

| Evaluation Metrics | Definitions | Interpretation and Relevance | |||

|---|---|---|---|---|---|

| Intersection over Union (IOU) |  | By using an IOU threshold of 0.5 to define true positive detections we required that at least 50% of the bounding box area identified by the ML approach overlapped with the area identified by the human observer. A higher IOU threshold would indicate a higher accuracy of the detection location within an image, and thus result in less true positive detections. In this study, a moderate IOU threshold (0.5) was chosen to compare with other object detection challenges (used for both COCO and PASCAL VOC object detection challenge) [49,51] and as the exact location of a sea cucumber individual was not the priority. | |||

| where A is the area of the detected bounding box and B is the area of the mannually labelled bounding box. | |||||

| Confusion/ Error matrix | Predicted by ML model | A bounding box is deemed a TP, TN, FN, or FP when the confidence score (in this case it was set to 0 to evaluate the performance) and IOU exceed the chosen threshold (in this case IOU ≥ 0.5). The numbers of the TP, TN, FN, and FP detected results alone do not indicate the performance quality of resulting model but are the basic values used to calculate other evaluation metrics. | |||

| Positive | Negative | ||||

| Ground Truth | Positive | True Positive (TP) | False Negative (FN) | ||

| Negative | False Positive (FP) | True Negative (TN) | |||

| Precision | Precision values range from 0 for very low precision to 1 for perfect precision. Higher precision means higher correct detection in all detected results, i.e., more detected sea cucumbers are actually sea cucumbers. High Precision value was preferred if the detected sea cucumber correctly in this study. | ||||

| where TP is the number of true positives and FP is the number of false positive detected results. | |||||

| Recall | Recall values range from 0 for poor recall to 1 for perfect recall. Higher recall means less incorrect detections, i.e., less detection of objects that are not sea cucumbers. | ||||

| where TP is the number of true positive and FN is the number of false negative detected results. | |||||

| F1 score | This is the harmonic mean of precision and recall. The closer the F1 score is to a value of 1 the better the performance of the model. Instead of choosing either the model with the best precision or the best recall, the highest F1 score balances the two values. It is useful when both high precision and high recall are desired. | ||||

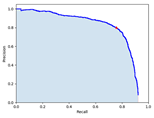

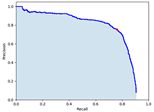

| mAP | This metric is similar to the F1 score, but with the benefit that it has the potential to measure multiple categories if required. | ||||

| where N is the number object classes being detected (in our case, N = 1 since we only detect se cucumbers), n is the number of recall levels (in an ascending order) at which the precision is first interpolated, r is recall, and p is precision [51,54]. | |||||
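The metrics in the table above can be sketched in a few lines of Python. This is an illustrative sketch rather than the evaluation code used in the study: boxes are assumed to be `(x_min, y_min, x_max, y_max)` tuples, the function names are hypothetical, and `average_precision` assumes the PASCAL VOC style of interpolation (precision made monotonically non-increasing before summing over recall steps), which may differ in detail from the authors' implementation.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed layout


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Area under the interpolated precision-recall curve.

    `recalls` must be in ascending order, paired with `precisions`.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Interpolate: each precision becomes the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangle areas between consecutive recall levels.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


if __name__ == "__main__":
    detected, labelled = (0, 0, 2, 2), (1, 0, 3, 2)
    print("IOU:", iou(detected, labelled))
    print("True positive at IOU >= 0.5:", iou(detected, labelled) >= 0.5)
    print("P, R, F1:", precision_recall_f1(tp=8, fp=2, fn=2))
```

With a single class (N = 1), mAP is simply the average precision of that class.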

3. Results and Discussion

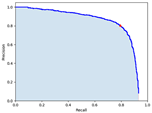

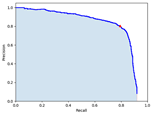

3.1. Model Performance Evaluation

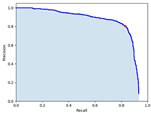

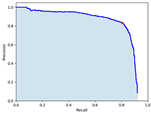

3.1.1. Influence of Training Dataset Size

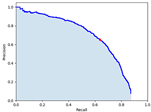

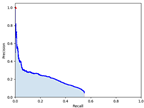

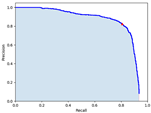

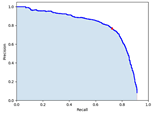

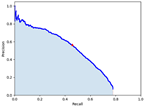

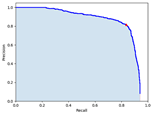

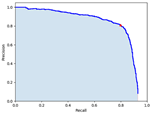

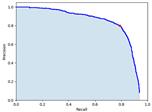

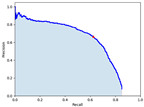

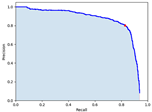

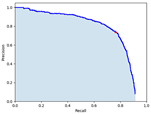

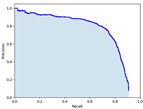

3.1.2. Influence of Hyperparameter Tuning

3.1.3. Comparison to Previous Studies

3.2. Mapping Sea Cucumber Density

3.3. Potential Future Applications

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| COCO | Common Objects in Context dataset |

| CNN | Convolutional Neural Networks |

| DL | Deep Learning |

| FN | False Negative |

| FOV | Field of View |

| FP | False Positive |

| GSD | Ground Sampling Distance |

| IOU | Intersection Over Union |

| mAP | mean Average Precision |

| ML | Machine Learning |

| R-CNN | Regions with CNN |

| RS | Remote Sensing |

| TP | True Positive |

| TN | True Negative |

| UAV | Unoccupied Aerial Vehicles |

| YOLOv3 | You Only Look Once version 3 |

Appendix A

| Training Dataset Size | Scenario A | Scenario B | Scenario C | Scenario D |

|---|---|---|---|---|

| 1000 |  |  |  |  |

| 2000 |  |  |  |  |

| 3000 |  |  |  |  |

| 4000 |  |  |  |  |

| 5000 |  |  |  |  |

| 6000 |  |  |  |  |

| Number | File Name | Image Area Size (m²) | Detected Density (ind/m²) | Detected Counts | Ground Truth | TP |

|---|---|---|---|---|---|---|

| 1 | DJI_0001 | 441.9 | 0.72 | 319 | 319 | 285 |

| 2 | DJI_0005 | 416.06 | 0.62 | 257 | 257 | 234 |

| 3 | DJI_0009 | 410.56 | 0.67 | 276 | 288 | 245 |

| 4 | DJI_0013 | 419.05 | 0.56 | 236 | 250 | 208 |

| 5 | DJI_0017 | 409.59 | 0.53 | 217 | 230 | 197 |

| 6 | DJI_0073 | 402.93 | 1.24 | 499 | 498 | 463 |

| 7 | DJI_0077 | 402.98 | 0.96 | 385 | 399 | 347 |

| 8 | DJI_0081 | 410.16 | 0.5 | 205 | 207 | 183 |

| 9 | DJI_0085 | 401.98 | 1 | 403 | 403 | 379 |

| 10 | DJI_0089 | 397.71 | 0.24 | 97 | 105 | 91 |

| 11 | DJI_0093 | 410.25 | 0.37 | 151 | 157 | 133 |

| 12 | DJI_0097 | 421.86 | 1.12 | 474 | 456 | 417 |

| 13 | DJI_0154 | 374.8 | 1.04 | 391 | 367 | 332 |

| 14 | DJI_0158 | 392.92 | 0.21 | 84 | 95 | 76 |

| 15 | DJI_0162 | 398.96 | 0.29 | 116 | 124 | 105 |

| 16 | DJI_0166 | 382.7 | 0.67 | 255 | 247 | 225 |

| 17 | DJI_0170 | 374.12 | 0.48 | 181 | 164 | 157 |

| 18 | DJI_0174 | 364.25 | 0.71 | 257 | 235 | 212 |

| 19 | DJI_0178 | 366.27 | 1.17 | 427 | 415 | 386 |

| 20 | DJI_0261 | 456.35 | 0 | 0 | 2 | 0 |

| 21 | DJI_0265 | 453.21 | 0.01 | 3 | 3 | 3 |

| 22 | DJI_0269 | 446.41 | 0.01 | 3 | 0 | 0 |

| 23 | DJI_0273 | 444.26 | 0 | 1 | 0 | 0 |

| 24 | DJI_0277 | 440.27 | 0.03 | 13 | 17 | 12 |

| 25 | DJI_0281 | 421.4 | 0.01 | 3 | 4 | 2 |

| 26 | DJI_0285 | 412.05 | 0 | 1 | 2 | 1 |

| 27 | DJI_0339 | 435.39 | 0.07 | 30 | 28 | 24 |

| 28 | DJI_0343 | 413.88 | 0.02 | 9 | 10 | 7 |

| 29 | DJI_0347 | 437.93 | 0.09 | 38 | 47 | 36 |

| 30 | DJI_0351 | 426.29 | 0.11 | 45 | 56 | 44 |

| 31 | DJI_0355 | 442.01 | 0.02 | 7 | 10 | 7 |

| 32 | DJI_0359 | 446.24 | 0.18 | 79 | 83 | 72 |

| 33 | DJI_0363 | 466.61 | 0.08 | 37 | 41 | 35 |

| 34 | DJI_0416 | 432.52 | 0.43 | 185 | 183 | 166 |

| 35 | DJI_0420 | 402.38 | 0.51 | 207 | 201 | 185 |

| 36 | DJI_0424 | 398.08 | 0.3 | 119 | 122 | 110 |

| 37 | DJI_0428 | 388.56 | 0.15 | 60 | 61 | 55 |

| 38 | DJI_0432 | 394.32 | 0.1 | 38 | 38 | 31 |

| 39 | DJI_0436 | 379.58 | 0.22 | 85 | 90 | 79 |

| 40 | DJI_0440 | 371.23 | 0.04 | 13 | 23 | 10 |

| 41 | DJI_0575 | 437.82 | 0.97 | 423 | 418 | 389 |

| 42 | DJI_0579 | 442.82 | 0.34 | 152 | 151 | 133 |

| 43 | DJI_0583 | 453.16 | 1.15 | 521 | 488 | 449 |

| 44 | DJI_0587 | 448.56 | 0.66 | 295 | 285 | 248 |

| 45 | DJI_0591 | 441.95 | 1.31 | 580 | 540 | 481 |

| 46 | DJI_0595 | 446.16 | 1.43 | 636 | 647 | 565 |

| 47 | DJI_0599 | 449.44 | 0.21 | 96 | 102 | 87 |

| 48 | DJI_0654 | 449.4 | 0.2 | 91 | 80 | 64 |

| 49 | DJI_0658 | 444.72 | 0.99 | 439 | 461 | 356 |

| 50 | DJI_0662 | 522.65 | 0.64 | 336 | 355 | 249 |

| 51 | DJI_0666 | 348.31 | 1.08 | 377 | 371 | 297 |

| 52 | DJI_0670 | 522.65 | 0.78 | 407 | 396 | 358 |

| 53 | DJI_0674 | 447.42 | 0.75 | 336 | 301 | 264 |

| 54 | DJI_0678 | 420.08 | 0.31 | 131 | 115 | 105 |

| 55 | DJI_0911 | 443.09 | 0.16 | 71 | 62 | 58 |

| 56 | DJI_0915 | 430.35 | 0.18 | 76 | 73 | 67 |

| 57 | DJI_0919 | 432.47 | 0.11 | 48 | 40 | 38 |

| 58 | DJI_0923 | 434.66 | 0.11 | 49 | 48 | 43 |

| 59 | DJI_0927 | 432.97 | 0.56 | 244 | 223 | 199 |

| 60 | DJI_0931 | 429.91 | 0.8 | 342 | 309 | 283 |

| 61 | DJI_0935 | 436.15 | 0.85 | 372 | 343 | 319 |

| 62 | DJI_0992 | 416.33 | 1.34 | 556 | 509 | 480 |

| 63 | DJI_0996 | 422.9 | 1.04 | 440 | 402 | 376 |

| Total | - | 26,662.02 | 0.50 | 13,224 | 12,956 | 11,462 |
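The totals row can be cross-checked with a short calculation. The sketch below recomputes the detected density (counts divided by image area) and derives precision and recall from the totals, assuming that every detection that is not a TP is a false positive and every ground-truth individual without a matching TP is a false negative. The function name `summarise` is hypothetical.

```python
def summarise(area_m2: float, detected: int, ground_truth: int, tp: int) -> dict:
    """Derive density and detection metrics from one row of the table."""
    fp = detected - tp      # assumption: detections that are not TP are FP
    fn = ground_truth - tp  # assumption: unmatched ground-truth individuals are FN
    return {
        "density_ind_per_m2": detected / area_m2 if area_m2 else 0.0,
        "precision": tp / detected if detected else 0.0,
        "recall": tp / ground_truth if ground_truth else 0.0,
        "fp": fp,
        "fn": fn,
    }


# Values copied from the "Total" row of the table above.
totals = summarise(area_m2=26662.02, detected=13224, ground_truth=12956, tp=11462)

# Values copied from row 1 (DJI_0001).
first_image = summarise(area_m2=441.9, detected=319, ground_truth=319, tp=285)

if __name__ == "__main__":
    for key, value in totals.items():
        print(f"{key}: {value:.4f}" if isinstance(value, float) else f"{key}: {value}")
```

Under these assumptions the totals correspond to a precision of about 0.87 and a recall of about 0.88, and the recomputed density rounds to the 0.50 ind/m² shown in the table.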

References

1. Han, Q.; Keesing, J.K.; Liu, D. A review of sea cucumber aquaculture, ranching, and stock enhancement in China. Rev. Fish. Sci. Aquac. 2016, 24, 326–341.

2. Purcell, S.W. Value, Market Preferences and Trade of Beche-De-Mer from Pacific Island Sea Cucumbers. PLoS ONE 2014, 9, e95075.

3. Purcell, S.W.; Mercier, A.; Conand, C.; Hamel, J.F.; Toral-Granda, M.V.; Lovatelli, A.; Uthicke, S. Sea cucumber fisheries: Global analysis of stocks, management measures and drivers of overfishing. Fish Fish. 2013, 14, 34–59.

4. Toral-Granda, V.; Lovatelli, A.; Vasconcellos, M. Sea cucumbers. Glob. Rev. Fish. Trade. Fao Fish. Aquac. Tech. Pap. 2008, 516, 317.

5. Purcell, S.W.; Conand, C.; Uthicke, S.; Byrne, M. Ecological Roles of Exploited Sea Cucumbers. Oceanogr. Mar. Biol. 2016, 54, 367–386.

6. Uthicke, S. Sediment bioturbation and impact of feeding activity of Holothuria (Halodeima) atra and Stichopus chloronotus, two sediment feeding holothurians, at Lizard Island, Great Barrier Reef. Bull. Mar. Sci. 1999, 64, 129–141.

7. Hammond, L. Patterns of feeding and activity in deposit-feeding holothurians and echinoids (Echinodermata) from a shallow back-reef lagoon, Discovery Bay, Jamaica. Bull. Mar. Sci. 1982, 32, 549–571.

8. Williamson, J.E.; Duce, S.; Joyce, K.E.; Raoult, V. Putting sea cucumbers on the map: Projected holothurian bioturbation rates on a coral reef scale. Coral Reefs 2021, 40, 559–569.

9. Shiell, G. Density of H. nobilis and distribution patterns of common holothurians on coral reefs of northwestern Australia. In Advances in Sea Cucumber Aquaculture and Management; Food and Agriculture Organization: Rome, Italy, 2004; pp. 231–238.

10. Tuya, F.; Hernández, J.C.; Clemente, S. Is there a link between the type of habitat and the patterns of abundance of holothurians in shallow rocky reefs? Hydrobiologia 2006, 571, 191–199.

11. Da Silva, J.; Cameron, J.L.; Fankboner, P.V. Movement and orientation patterns in the commercial sea cucumber Parastichopus californicus (Stimpson) (Holothuroidea: Aspidochirotida). Mar. Freshw. Behav. Physiol. 1986, 12, 133–147.

12. Graham, J.C.; Battaglene, S.C. Periodic movement and sheltering behaviour of Actinopyga mauritiana (Holothuroidea: Aspidochirotidae) in Solomon Islands. SPC Beche-de-Mer Inf. Bull. 2004, 19, 23–31.

13. Bonham, K.; Held, E.E. Ecological observations on the sea cucumbers Holothuria atra and H. leucospilota at Rongelap Atoll, Marshall Islands. Pac. Sci. 1963, 17, 305–314.

14. Jontila, J.B.S.; Balisco, R.A.T.; Matillano, J.A. The Sea cucumbers (Holothuroidea) of Palawan, Philippines. Aquac. Aquar. Conserv. Legis. 2014, 7, 194–206.

15. Uthicke, S.; Benzie, J. Effect of bêche-de-mer fishing on densities and size structure of Holothuria nobilis (Echinodermata: Holothuroidea) populations on the Great Barrier Reef. Coral Reefs 2001, 19, 271–276.

16. Kilfoil, J.P.; Rodriguez-Pinto, I.; Kiszka, J.J.; Heithaus, M.R.; Zhang, Y.; Roa, C.C.; Ailloud, L.E.; Campbell, M.D.; Wirsing, A.J. Using unmanned aerial vehicles and machine learning to improve sea cucumber density estimation in shallow habitats. ICES J. Mar. Sci. 2020, 77, 2882–2889.

17. Prescott, J.; Vogel, C.; Pollock, K.; Hyson, S.; Oktaviani, D.; Panggabean, A.S. Estimating sea cucumber abundance and exploitation rates using removal methods. Mar. Freshw. Res. 2013, 64, 599–608.

18. Murfitt, S.L.; Allan, B.M.; Bellgrove, A.; Rattray, A.; Young, M.A.; Ierodiaconou, D. Applications of unmanned aerial vehicles in intertidal reef monitoring. Sci. Rep. 2017, 7, 1–11.

19. Kachelriess, D.; Wegmann, M.; Gollock, M.; Pettorelli, N. The application of remote sensing for marine protected area management. Ecol. Indic. 2014, 36, 169–177.

20. Roughgarden, J.; Running, S.W.; Matson, P.A. What does remote sensing do for ecology? Ecology 1991, 72, 1918–1922.

21. Oleksyn, S.; Tosetto, L.; Raoult, V.; Joyce, K.E.; Williamson, J.E. Going Batty: The Challenges and Opportunities of Using Drones to Monitor the Behaviour and Habitat Use of Rays. Drones 2021, 5, 12.

22. Casella, E.; Collin, A.; Harris, D.; Ferse, S.; Bejarano, S.; Parravicini, V.; Hench, J.L.; Rovere, A. Mapping coral reefs using consumer-grade drones and structure from motion photogrammetry techniques. Coral Reefs 2017, 36, 269–275.

23. Fallati, L.; Saponari, L.; Savini, A.; Marchese, F.; Corselli, C.; Galli, P. Multi-Temporal UAV Data and Object-Based Image Analysis (OBIA) for Estimation of Substrate Changes in a Post-Bleaching Scenario on a Maldivian Reef. Remote Sens. 2020, 12, 2093.

24. Lowe, M.K.; Adnan, F.A.F.; Hamylton, S.M.; Carvalho, R.C.; Woodroffe, C.D. Assessing Reef-Island Shoreline Change Using UAV-Derived Orthomosaics and Digital Surface Models. Drones 2019, 3, 44.

25. Parsons, M.; Bratanov, D.; Gaston, K.J.; Gonzalez, F. UAVs, hyperspectral remote sensing, and machine learning revolutionizing reef monitoring. Sensors 2018, 18, 2026.

26. Hamylton, S.M.; Zhou, Z.; Wang, L. What Can Artificial Intelligence Offer Coral Reef Managers? Front. Mar. Sci. 2020.

27. Shihavuddin, A.S.M.; Gracias, N.; Garcia, R.; Gleason, A.; Gintert, B. Image-Based Coral Reef Classification and Thematic Mapping. Remote Sens. 2013, 5, 1809–1841.

28. Ventura, D.; Bonifazi, A.; Gravina, M.F.; Belluscio, A.; Ardizzone, G. Mapping and Classification of Ecologically Sensitive Marine Habitats Using Unmanned Aerial Vehicle (UAV) Imagery and Object-Based Image Analysis (OBIA). Remote Sens. 2018, 10, 1331.

29. Kim, K.S.; Park, J.H. A survey of applications of artificial intelligence algorithms in eco-environmental modelling. Environ. Eng. Res. 2009, 14, 102–110.

30. Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

31. Purcell, S.W.; Samyn, Y.; Conand, C. Commercially Important Sea Cucumbers of the World; Food and Agriculture Organization: Rome, Italy, 2012.

32. Gallacher, D.; Khafaga, M.T.; Ahmed, M.T.M.; Shabana, M.H.A. Plant species identification via drone images in an arid shrubland. In Proceedings of the 10th International Rangeland Congress, Saskatoon, SK, Canada, 17–22 July 2016; pp. 981–982.

33. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

34. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

35. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: tensorflow.org (accessed on 24 March 2021).

36. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Nice, France, 2019; pp. 8024–8035.

37. Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 24 March 2021).

38. Claesen, M.; Moor, B.D. Hyperparameter Search in Machine Learning. arXiv 2015, arXiv:1502.02127.

39. Hopley, D.; Smithers, S.G.; Parnell, K. The Geomorphology of the Great Barrier Reef: Development, Diversity and Change; Cambridge University Press: Cambridge, MA, USA, 2007.

40. Thompson, A.; Costello, P.; Davidson, J.; Logan, M.; Coleman, G. Marine Monitoring Program: Annual Report for Inshore Coral Reef Monitoring 2017-18; Great Barrier Reef Marine Park Authority: Townsville, Australia, 2019.

41. Albertz, J.; Wolf, B. Generating true orthoimages from urban areas without height information. In 1st EARSeL Workshop of the SIG Urban Remote Sensing; Citeseer: Forest Grove, OR, USA, 2006; pp. 2–3.

42. Joyce, K.; Duce, S.; Leahy, S.; Leon, J.; Maier, S. Principles and practice of acquiring drone-based image data in marine environments. Mar. Freshw. Res. 2019, 70, 952–963.

43. Hashemi, M. Enlarging smaller images before inputting into convolutional neural network: Zero-padding vs. interpolation. J. Big Data 2019, 6, 1–13.

44. Wada, K. LabelMe: Image Polygonal Annotation with Python. 2016. Available online: https://github.com/wkentaro/labelme (accessed on 24 March 2021).

45. GitHub. Qqwweee/Keras-Yolo3: A Keras Implementation of YOLOv3 (Tensorflow Backend); GitHub: San Francisco, CA, USA, 2020.

46. Torrey, L.; Shavlik, J. Transfer learning. In Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques; IGI Global: Hershey, PA, USA, 2010; pp. 242–264.

47. Zhong, Y.; Wang, J.; Peng, J.; Zhang, L. Anchor box optimization for object detection. In Proceedings of the IEEE Workshop on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 1286–1294.

48. Géron, A. Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems; O’Reilly Media: Sebastopol, CA, USA, 2019.

49. Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

50. COCO Common Objects in Context-Detection-Evaluate. 2020. Available online: https://cocodataset.org/#detection-eval (accessed on 10 December 2020).

51. Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

52. COCO Common Objects in Context-Detection-Leaderboard. 2020. Available online: https://cocodataset.org/#detection-leaderboard (accessed on 10 December 2020).

53. ESRI. ArcGIS Desktop: Release 10.1; ESRI (Environmental Systems Research Institute): Redlands, CA, USA, 2011.

54. Everingham, M.; Winn, J. The pascal visual object classes challenge 2012 (voc2012) development kit. Pattern Anal. Stat. Model. Comput. Learn. Tech. Rep 2011, 8, 4–32.

55. Beijbom, O.; Edmunds, P.J.; Roelfsema, C.; Smith, J.; Kline, D.I.; Neal, B.P.; Dunlap, M.J.; Moriarty, V.; Fan, T.Y.; Tan, C.J. Towards automated annotation of benthic survey images: Variability of human experts and operational modes of automation. PLoS ONE 2015, 10, e0130312.

56. Villon, S.; Chaumont, M.; Subsol, G.; Villéger, S.; Claverie, T.; Mouillot, D. Coral reef fish detection and recognition in underwater videos by supervised machine learning: Comparison between deep learning and HOG+SVM methods. Int. Conf. Adv. Concepts Intell. Vis. Syst. 2016, 10016, 160–171.

57. Tebbett, S.B.; Goatley, C.H.; Bellwood, D.R. Algal turf sediments across the Great Barrier Reef: Putting coastal reefs in perspective. Mar. Pollut. Bull. 2018, 137, 518–525.

| Number | mAP | Confidence Score Threshold | Precision | Recall | F1 Score | Training Dataset | Scenario * |

|---|---|---|---|---|---|---|---|

| 1 | 0.799 | 0.29 | 0.80 | 0.76 | 0.78 | 1000 | A |

| 2 | 0.827 | 0.26 | 0.80 | 0.79 | 0.80 | 2000 | A |

| 3 | 0.836 | 0.21 | 0.80 | 0.83 | 0.82 | 3000 | A |

| 4 | 0.845 | 0.30 | 0.83 | 0.81 | 0.82 | 4000 | A |

| 5 | 0.851 | 0.26 | 0.82 | 0.84 | 0.83 | 5000 | A |

| 6 | 0.855 | 0.27 | 0.82 | 0.83 | 0.82 | 6000 | A |

| 7 | 0.760 | 0.22 | 0.75 | 0.76 | 0.76 | 1000 | B |

| 8 | 0.812 | 0.26 | 0.80 | 0.79 | 0.80 | 2000 | B |

| 9 | 0.827 | 0.27 | 0.83 | 0.81 | 0.82 | 3000 | B |

| 10 | 0.819 | 0.29 | 0.81 | 0.80 | 0.80 | 4000 | B |

| 11 | 0.823 | 0.26 | 0.81 | 0.80 | 0.80 | 5000 | B |

| 12 | 0.838 | 0.24 | 0.80 | 0.83 | 0.82 | 6000 | B |

| 13 | 0.002 | 1.00 | 0.00 | 0.00 | 0.03 | 1000 | C |

| 14 | 0.258 | 0.07 | 0.33 | 0.38 | 0.35 | 2000 | C |

| 15 | 0.653 | 0.14 | 0.65 | 0.64 | 0.65 | 3000 | C |

| 16 | 0.753 | 0.24 | 0.77 | 0.73 | 0.75 | 4000 | C |

| 17 | 0.821 | 0.25 | 0.80 | 0.79 | 0.80 | 5000 | C |

| 18 | 0.773 | 0.21 | 0.74 | 0.76 | 0.75 | 6000 | C |

| 19 | 0.000 | 0.00 | 0.00 | 0.00 | 0.00 | 1000 | D |

| 20 | 0.136 | 0.18 | 0.94 | 0.01 | 0.25 | 2000 | D |

| 21 | 0.127 | 0.40 | 1.00 | 0.00 | 0.25 | 3000 | D |

| 22 | 0.448 | 0.12 | 0.57 | 0.46 | 0.51 | 4000 | D |

| 23 | 0.606 | 0.17 | 0.67 | 0.63 | 0.65 | 5000 | D |

| 24 | 0.750 | 0.23 | 0.76 | 0.73 | 0.75 | 6000 | D |
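To read the table above by scenario, it can help to pull out the training dataset size at which each scenario reached its highest mAP. The sketch below does this with the mAP column copied from the table; the names `MAP_BY_SCENARIO` and `best_run_per_scenario` are hypothetical, and the code only summarises the reported values without re-evaluating any model.

```python
TRAINING_SIZES = [1000, 2000, 3000, 4000, 5000, 6000]

# mAP values copied from the table, ordered by training dataset size.
MAP_BY_SCENARIO = {
    "A": [0.799, 0.827, 0.836, 0.845, 0.851, 0.855],
    "B": [0.760, 0.812, 0.827, 0.819, 0.823, 0.838],
    "C": [0.002, 0.258, 0.653, 0.753, 0.821, 0.773],
    "D": [0.000, 0.136, 0.127, 0.448, 0.606, 0.750],
}


def best_run_per_scenario(map_by_scenario, sizes):
    """Return {scenario: (training_size, mAP)} for the highest mAP in each scenario."""
    best = {}
    for scenario, values in map_by_scenario.items():
        size, score = max(zip(sizes, values), key=lambda pair: pair[1])
        best[scenario] = (size, score)
    return best


best = best_run_per_scenario(MAP_BY_SCENARIO, TRAINING_SIZES)

if __name__ == "__main__":
    for scenario, (size, score) in best.items():
        print(f"Scenario {scenario}: best mAP {score:.3f} at {size} training samples")
```

In the reported values, scenarios A, B, and D peak at the largest training dataset (6000), while scenario C peaks at 5000.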

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, J.Y.Q.; Duce, S.; Joyce, K.E.; Xiang, W. SeeCucumbers: Using Deep Learning and Drone Imagery to Detect Sea Cucumbers on Coral Reef Flats. Drones 2021, 5, 28. https://doi.org/10.3390/drones5020028