Deep Neural Networks and Transfer Learning for Food Crop Identification in UAV Images

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Related Works

1.3. Our Approach

2. Materials and Methods

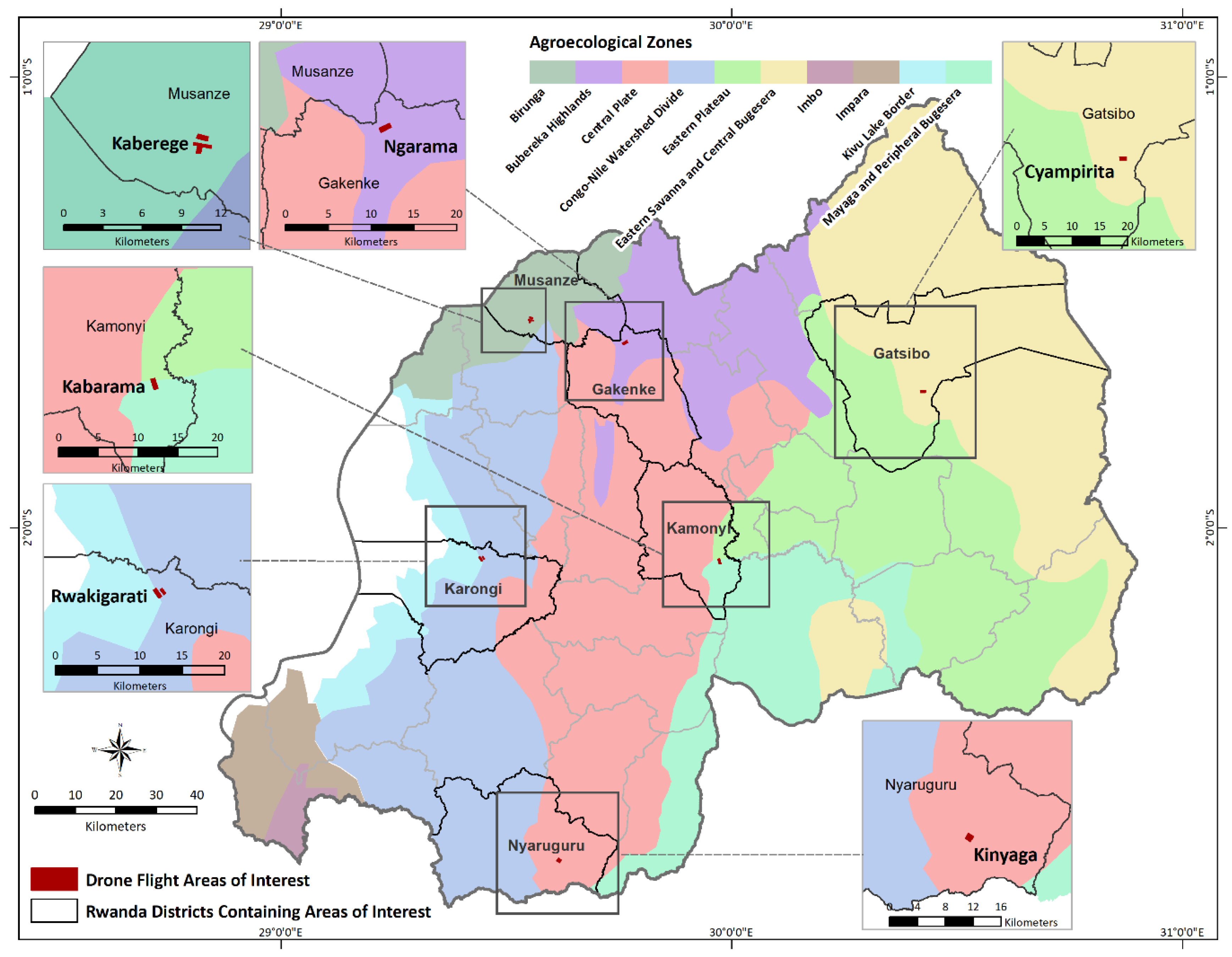

2.1. Study Area

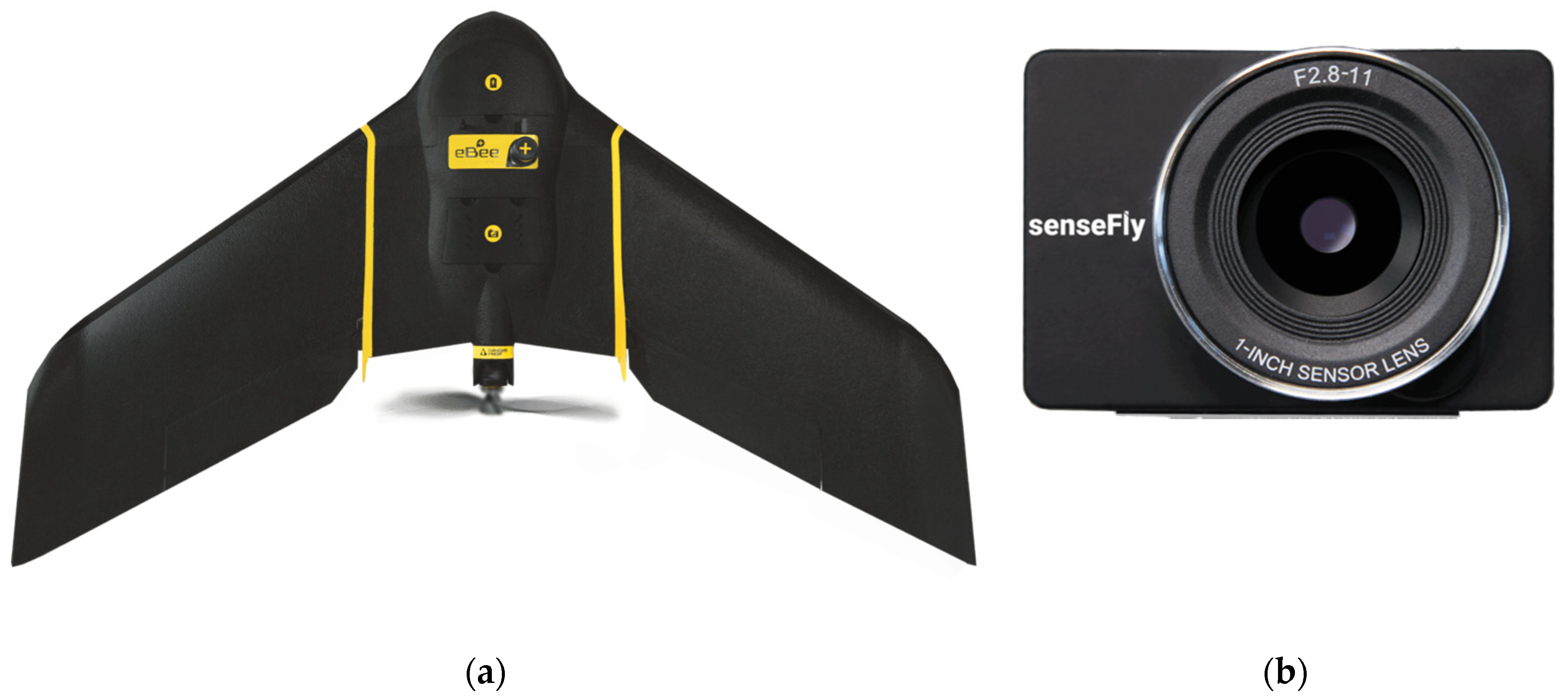

2.2. Data Collection

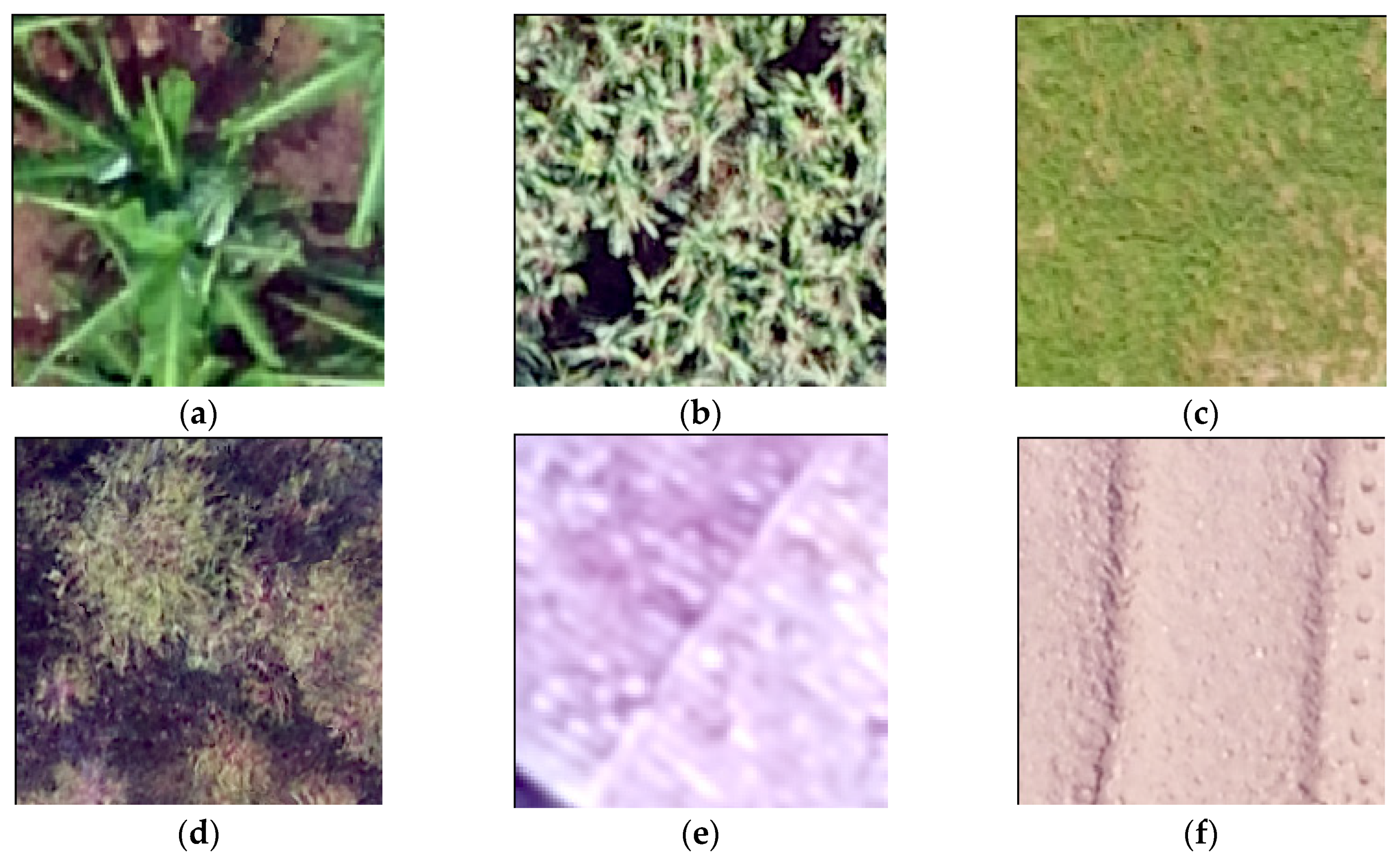

2.3. Data Labeling

2.4. Data Description

2.5. Agricultural Classification Model

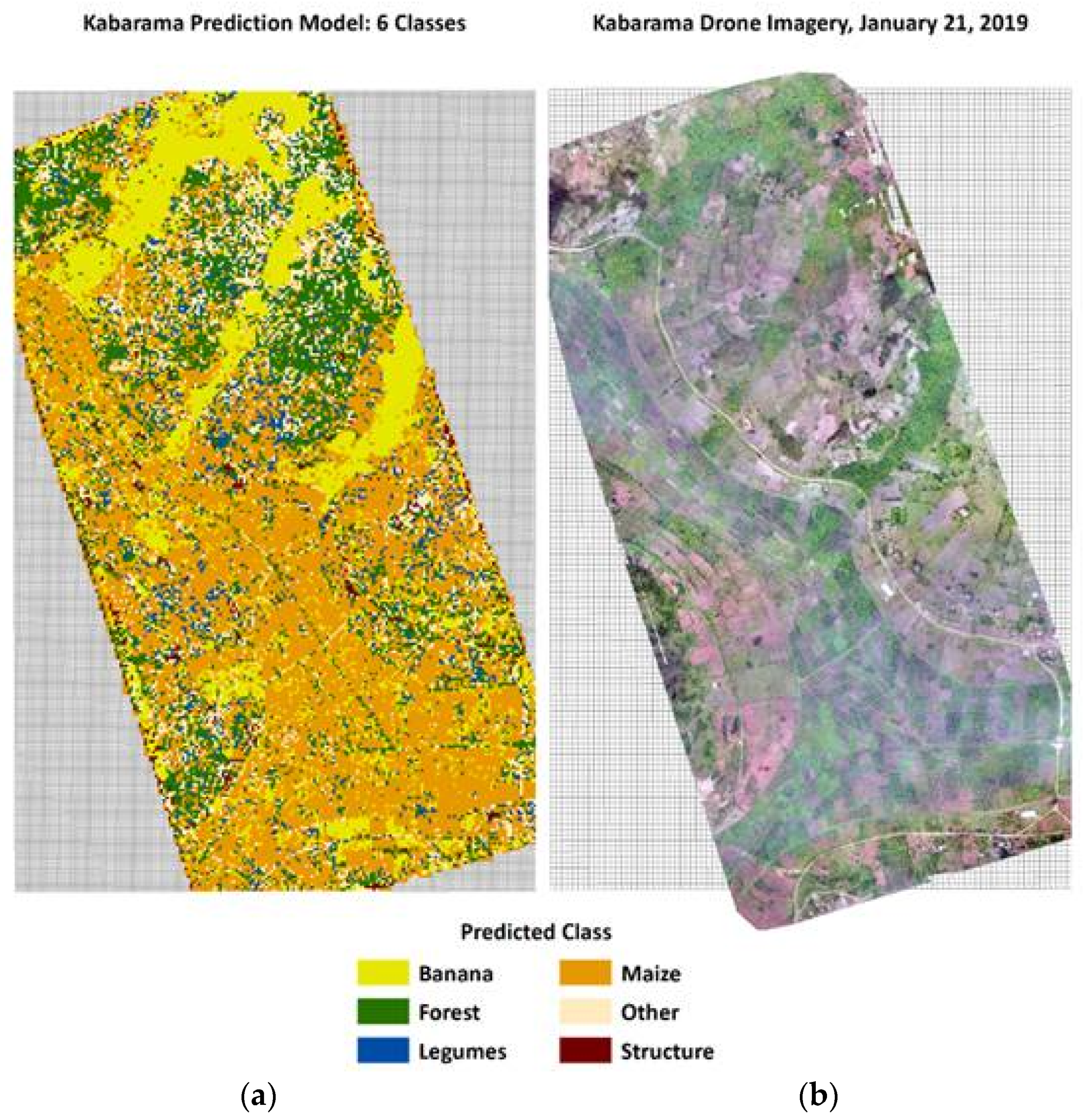

3. Results

4. Discussion

4.1. Study Limitations

4.2. Future Research

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Crop | Musanze (district) | Karongi (district) | Gakenke (district) | Kamonyi (district) | Nyaruguru (district) | Gatsibo (district) | Rwanda (country) |
|---|---|---|---|---|---|---|---|
| Maize | 27% | 13% | 18% | 11% | 11% | 23% | 16% |
| Beans | 5% | 28% | 28% | 24% | 11% | 25% | 19% |
| Bananas | 10% | 15% | 19% | 24% | 17% | 29% | 23% |

| Class | Training (n) | Test (n) |
|---|---|---|
| Maize | 1660 | 415 |
| Banana | 1329 | 332 |
| Forest | 1016 | 254 |
| Other | 600 | 150 |
| Legume | 290 | 73 |
| Structure | 265 | 66 |
| Total | 5160 | 1290 |
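The counts above are consistent with holding out roughly 20% of each class separately (a stratified 80/20 split): 1290 of 6450 samples overall, and round(0.2 × n) samples within every class. The sketch below checks that arithmetic. The 0.2 fraction, the per-class rounding rule, and the helper names `stratified_test_size` and `check_split` are assumptions inferred from the counts, not a description of the authors' actual splitting procedure.

```python
# Per-class (training, test) counts copied from the table above.
COUNTS = {
    "Maize": (1660, 415),
    "Banana": (1329, 332),
    "Forest": (1016, 254),
    "Other": (600, 150),
    "Legume": (290, 73),
    "Structure": (265, 66),
}
TEST_FRACTION = 0.2  # assumption: an 80/20 hold-out inferred from the counts


def stratified_test_size(n_class: int, frac: float = TEST_FRACTION) -> int:
    """Hypothetical rule: test samples for one class when each class is split on its own."""
    return round(n_class * frac)


def check_split(counts: dict) -> dict:
    """Map each class to (total samples, observed test count, predicted test count)."""
    return {
        name: (train + test, test, stratified_test_size(train + test))
        for name, (train, test) in counts.items()
    }


for name, (total, observed, predicted) in check_split(COUNTS).items():
    print(f"{name:<10} total={total:5d} test={observed:4d} predicted={predicted:4d}")

n_train = sum(train for train, _ in COUNTS.values())
n_test = sum(test for _, test in COUNTS.values())
print(f"Overall test share: {n_test / (n_train + n_test):.3f}")  # 1290 / 6450
```

In practice, a library routine such as scikit-learn's `train_test_split(..., test_size=0.2, stratify=labels)` performs this kind of per-class split; its internal rounding may differ from the simple rule here by a sample or so per class.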

| Class | F1 Score | Precision | Recall | Accuracy | Kappa |
|---|---|---|---|---|---|
| Banana | 0.96 | 0.97 | 0.95 | 0.98 | 0.95 |
| Forest | 0.89 | 0.88 | 0.90 | 0.96 | 0.86 |
| Legume | 0.49 | 0.57 | 0.42 | 0.95 | 0.46 |
| Maize | 0.90 | 0.87 | 0.93 | 0.93 | 0.85 |
| Other | 0.62 | 0.67 | 0.58 | 0.92 | 0.58 |
| Structure | 0.89 | 0.84 | 0.95 | 0.99 | 0.89 |
| Overall | 0.86 | 0.86 | 0.86 | 0.86 | 0.82 |
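Assuming the Kappa column reports Cohen's kappa, the Overall value of 0.82 can be checked by hand against the confusion-matrix counts reported in this section. Here $n_{ij}$ is the number of test samples of actual class $i$ predicted as class $j$, $N = 1290$ is the test-set size, and $n_{i+}$ and $n_{+i}$ are the row (actual) and column (predicted) totals:

```latex
\begin{aligned}
\kappa &= \frac{p_o - p_e}{1 - p_e}, \qquad
p_o = \frac{1}{N}\sum_{i} n_{ii}, \qquad
p_e = \frac{1}{N^2}\sum_{i} n_{i+}\, n_{+i} \\
p_o &= \frac{315 + 229 + 31 + 388 + 87 + 63}{1290} = \frac{1113}{1290} \approx 0.863 \\
p_e &= \frac{332 \cdot 324 + 254 \cdot 261 + 73 \cdot 54 + 415 \cdot 446 + 150 \cdot 130 + 66 \cdot 75}{1290^2}
     = \frac{387344}{1664100} \approx 0.233 \\
\kappa &\approx \frac{0.863 - 0.233}{1 - 0.233} \approx 0.821
\end{aligned}
```

The per-class Kappa values are consistent with applying the same formula to the 2 × 2 table of one class against all others combined.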

| Actual / Predicted | Banana | Forest | Legume | Maize | Other | Structure | Total |
|---|---|---|---|---|---|---|---|
| Banana | 315 | 3 | 0 | 9 | 5 | 0 | 332 |
| Forest | 0 | 229 | 4 | 6 | 12 | 3 | 254 |
| Legume | 1 | 5 | 31 | 20 | 15 | 1 | 73 |
| Maize | 3 | 6 | 9 | 388 | 9 | 0 | 415 |
| Other | 5 | 17 | 10 | 23 | 87 | 8 | 150 |
| Structure | 0 | 1 | 0 | 0 | 2 | 63 | 66 |
| Total | 324 | 261 | 54 | 446 | 130 | 75 | 1290 |
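As a consistency check, the per-class metrics table can be recomputed from this confusion matrix. The sketch assumes (neither point is stated in this section) that per-class Accuracy and Kappa are computed one-vs-rest, and that the Overall precision, recall, and F1 are averages weighted by each class's number of actual test samples. Under those assumptions it reproduces every reported value to two decimals. The names `CM`, `totals`, `per_class_metrics`, and `overall_metrics` are illustrative, not from the authors' code.

```python
# Confusion matrix copied from the table above: rows = actual, columns = predicted.
CLASSES = ["Banana", "Forest", "Legume", "Maize", "Other", "Structure"]
CM = [
    [315, 3, 0, 9, 5, 0],
    [0, 229, 4, 6, 12, 3],
    [1, 5, 31, 20, 15, 1],
    [3, 6, 9, 388, 9, 0],
    [5, 17, 10, 23, 87, 8],
    [0, 1, 0, 0, 2, 63],
]


def totals(cm):
    """Return (N, row totals = actual counts, column totals = predicted counts)."""
    k = len(cm)
    rows = [sum(r) for r in cm]
    cols = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    return sum(rows), rows, cols


def cohen_kappa(p_o, p_e):
    """Cohen's kappa from observed agreement p_o and chance agreement p_e."""
    return (p_o - p_e) / (1.0 - p_e)


def per_class_metrics(cm):
    """One-vs-rest precision, recall, F1, accuracy, and kappa for each class."""
    n, rows, cols = totals(cm)
    metrics = {}
    for j, name in enumerate(CLASSES):
        tp = cm[j][j]
        fp, fn = cols[j] - tp, rows[j] - tp
        tn = n - tp - fp - fn
        precision, recall = tp / cols[j], tp / rows[j]
        f1 = 2 * precision * recall / (precision + recall)
        accuracy = (tp + tn) / n
        # Chance agreement of the 2x2 "this class vs. the rest" table.
        p_e = (rows[j] * cols[j] + (n - rows[j]) * (n - cols[j])) / n**2
        metrics[name] = {"f1": f1, "precision": precision, "recall": recall,
                         "accuracy": accuracy, "kappa": cohen_kappa(accuracy, p_e),
                         "support": rows[j]}
    return metrics


def overall_metrics(cm):
    """Multiclass accuracy and kappa, plus support-weighted precision/recall/F1."""
    n, rows, cols = totals(cm)
    p_o = sum(cm[i][i] for i in range(len(cm))) / n
    p_e = sum(r * c for r, c in zip(rows, cols)) / n**2
    pcm = per_class_metrics(cm)

    def weighted(key):
        return sum(m[key] * m["support"] for m in pcm.values()) / n

    return {"f1": weighted("f1"), "precision": weighted("precision"),
            "recall": weighted("recall"), "accuracy": p_o,
            "kappa": cohen_kappa(p_o, p_e)}


for name, m in per_class_metrics(CM).items():
    print(name, {k: round(v, 2) for k, v in m.items() if k != "support"})
print("Overall", {k: round(v, 2) for k, v in overall_metrics(CM).items()})
```

Note that support-weighted recall always equals overall accuracy (both reduce to the diagonal sum divided by N), which is why the Overall row shows 0.86 for both.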

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chew, R.; Rineer, J.; Beach, R.; O’Neil, M.; Ujeneza, N.; Lapidus, D.; Miano, T.; Hegarty-Craver, M.; Polly, J.; Temple, D.S. Deep Neural Networks and Transfer Learning for Food Crop Identification in UAV Images. Drones 2020, 4, 7. https://doi.org/10.3390/drones4010007
