1. Introduction

Search and rescue (SAR) is a vital line of defence against unnecessary loss of life. SAR services are provided across governmental, private, or voluntary sectors, and teams operate globally in a variety of environments such as mountains, urban settings, general lowland areas, and coastal and maritime areas. Generally, SAR operations are conducted over large areas. As survival chances are negatively correlated with time to detection [1], people in need of rescue must be found as fast as possible.

Reconnaissance forms the foundation of SAR efforts. In the UK, standard practice guidelines take the form of Generic Risk Assessments (GRAs) prescribed by the governing body (the Chief Fire and Rescue Adviser) [2]. The GRAs strongly emphasise the requirement for reliable, up-to-date information about the working space that SAR crews are operating in [3,4,5]. In large, complex or dynamic environments, it is insufficient to rely upon information gathered only at the beginning of an operation, and continual reassessment is needed [3]. In general, the approach to an SAR operation is defined by the management of risk, both of action and of inaction [2].

To reduce risk, SAR services deploy a variety of vehicles to find missing people and to keep their own staff safe. Traditionally, these are ground vehicles such as off-road vehicles or tracked all-terrain carriers, a variety of sea vessels, or manned aircraft and, more recently, drones. Aerial vehicles have the advantage of being able to cover large areas quickly, easily access difficult or hazardous terrain, and give clearer lines of sight over uneven ground. However, manned aircraft SAR operations are very costly. For example, in 2016/17, the UK National Police Air Service (NPAS) logged 16,369 operational hours, and the cost per flying hour was estimated to be £2820 [6].

In addition to financial cost, there is considerable risk in using manned aircraft for certain SAR operations, and situations in which manned aircraft are constrained are common (e.g., fog, in which low flights are often considered too risky). There is clear demand for the cheaper and safer aerial support potentially offered by drones. In 2017, 28 of the 43 fire and rescue forces in England and Wales had purchased at least one drone, with a further nine considering the introduction of drones [6]. At the time, it was claimed that the introduction of drones led to a decrease in the requirement for NPAS support. However, none of the forces surveyed were able to produce analysis on the efficiency or effectiveness of drones [6].

Recently, drones have been proposed as a tool to support several aspects of disaster response [7,8], such as gathering information on the ground state, 3D mapping [9], situational awareness, logistics planning, damage assessment, communications relay points, and direct SAR missions. They have also been used to support SAR missions [10] through delivery of life-saving equipment such as life vests [11,12], or by allowing audible communication with people through speakers once they are located. Whilst several drone systems have been proposed for carrying out SAR directly by autonomously locating people in need of rescue [13,14,15,16,17,18], to date they have not been implemented as the leading method for finding casualties in an automatic or systematic way, or made widely available to SAR practitioners. This may be due to limitations of the drones themselves or of the RGB-based detection systems on board. Some groups highlight issues such as range, flight time, ability to avoid hazards (e.g., trees) and data transfer limitations [8,13,19].

Rapid and autonomous location and identification of humans in need of rescue via drones, as the leading SAR approach, could come through the addition of thermal infrared (TIR) cameras. TIR allows warm bodies to be seen distinctly from their surroundings. Humans (and other warm animals) appear as bright objects in thermal images, whereas in conventional visible spectrum (RGB) footage, brightness depends on reflected light, so people need not stand out from their surroundings [20]. This means that in many cases they can more easily be picked out by the naked eye in TIR images than in RGB images. Appearing visually distinct in TIR footage also means that there is great potential for using automatic computer-based systems to detect people in need of rescue more rapidly and reliably than would be possible by the naked eye [21]. Whilst human detection from ground-based platforms has been very successful, detecting and tracking humans from aerial platforms has been problematic, mainly due to the relatively small apparent size (number of pixels) of humans in these images and the lack of discernible textures or human body part shapes [22,23]. Previous studies have shown that man-made objects in a marine setting, such as boats, can be detected and identified using TIR [14]. However, detecting humans automatically from thermal-equipped drones tends to rely on finding hot-spots of a given size [24,25].

Machine learning (ML) is another potentially powerful tool for SAR applications when combined with drones and thermal sensors. A trained machine learning system can recognise humans from a variety of angles even if the human is partially obscured. However, computer-vision-based machine learning algorithms (those which are able to identify objects in images) require a large amount of training data to be able to recognise any specific object. The accuracy of these algorithms deteriorates rapidly if insufficient training data are available [26,27,28], and, in some cases, simpler statistical methods have been shown to perform better than deep learning algorithms for object recognition [29].

The limitations of small training data volumes in applying machine learning to SAR were described for detecting people partially buried in avalanches [15]. Very little aerial footage of this scenario is available, and even when additional data were gathered by simulating people in need of rescue, only ∼200 RGB images were obtained for training an ML algorithm. As a result, the algorithm produced high numbers of false positive and false negative detections, and a true positive rate that was insufficient for safety critical applications [15]. In the avalanche scenario, detecting humans also relied on the relatively uniform background of snow to perform image segmentation. A uniform background is rarely available in other scenarios, where scenes can be cluttered.

Humans were detected and classified in drone-based RGB data in urban settings [16] using a convolutional neural network (CNN) with a large training dataset. However, in this case, the drone was flown at 20 m above ground level (AGL) to acquire sufficiently detailed images for training the CNN. Flying this low is unrealistic for SAR applications, as the low height gives a limited field of view on the ground, meaning only a small area can be covered within the limited drone battery life. For built-up areas, 20 m AGL is also too low to avoid obstacles such as trees, buildings or birds (and it is illegal to fly an unmanned aerial vehicle this low in built-up areas in the UK, Europe, USA and multiple other countries).

In all cases where machine learning is applied to RGB drone images, processing times pose a challenge. Being able to classify only ∼1 frame per second [13,15,16] on the ground after the flight is of limited use when time is critical. Thus far, onboard processing has been challenging due to the limited computing power of devices small enough to operate on drones. Live data streaming from the drone is also problematic due to limited availability of mobile networks, distances between drones and ground station being too great to maintain a connection, or limited bandwidth for transferring large or very numerous images. TIR images typically have fewer pixels than RGB images, thus presenting the possibility of reduced processing times when applying ML and lower bandwidth requirements for live streaming. SAR scenarios are highly varied, and drone-based data of humans in different scenarios are very limited for both RGB and TIR cameras. Given this limitation, we examine whether a simple, non-machine learning algorithm, augmented with physical knowledge of the local environment and drone operating parameters, could be used to reliably detect humans.

Morecambe Bay is the largest expanse of intertidal mudflats and sand in the United Kingdom, covering a total area of 310 km². Bay Search and Rescue respond to 20–50 callouts per year, and logged 4737 operational hours in 2018. As the bay is a large area with some challenging terrain, such as marshland and quicksands, SAR operations are difficult to carry out on foot.

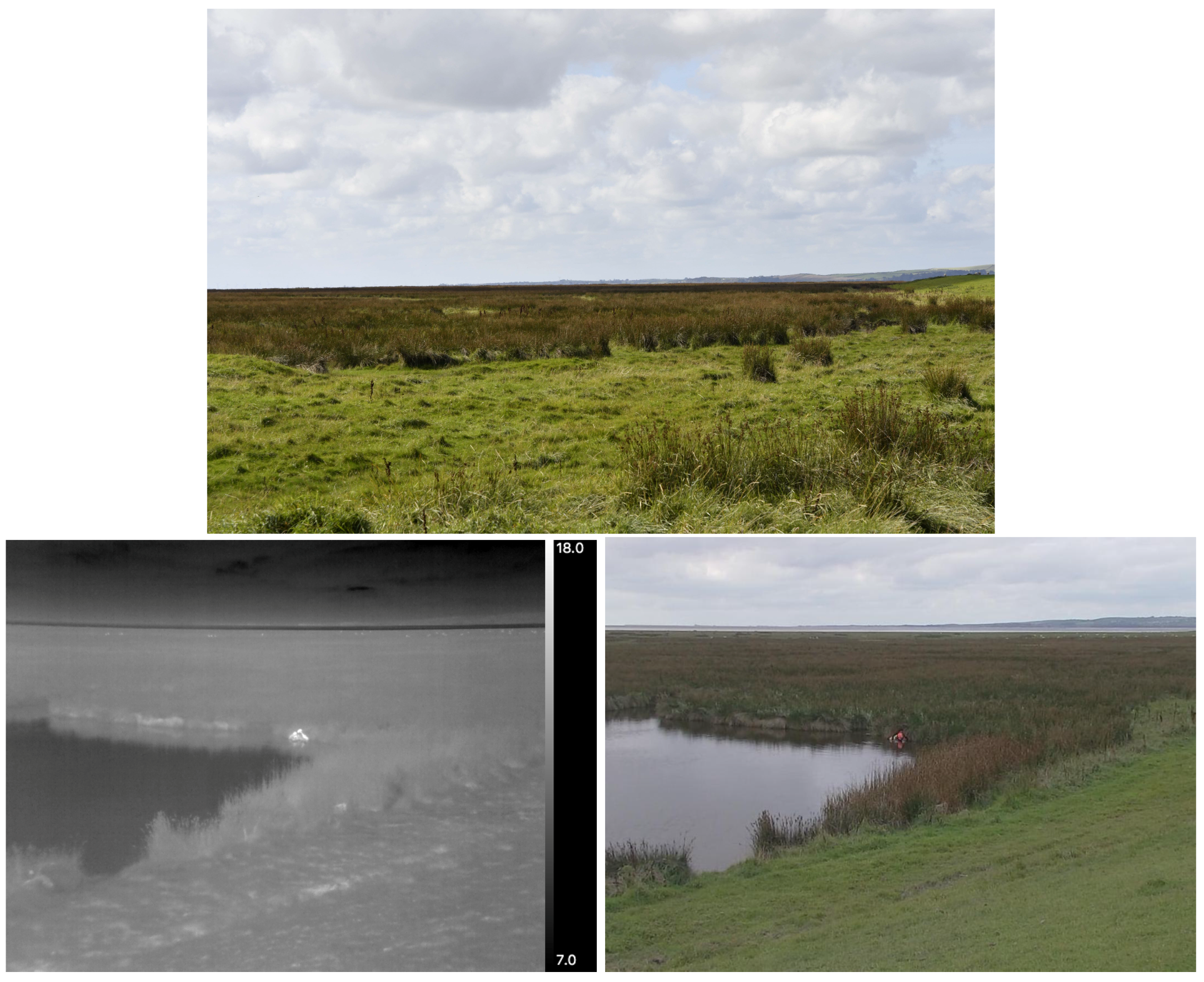

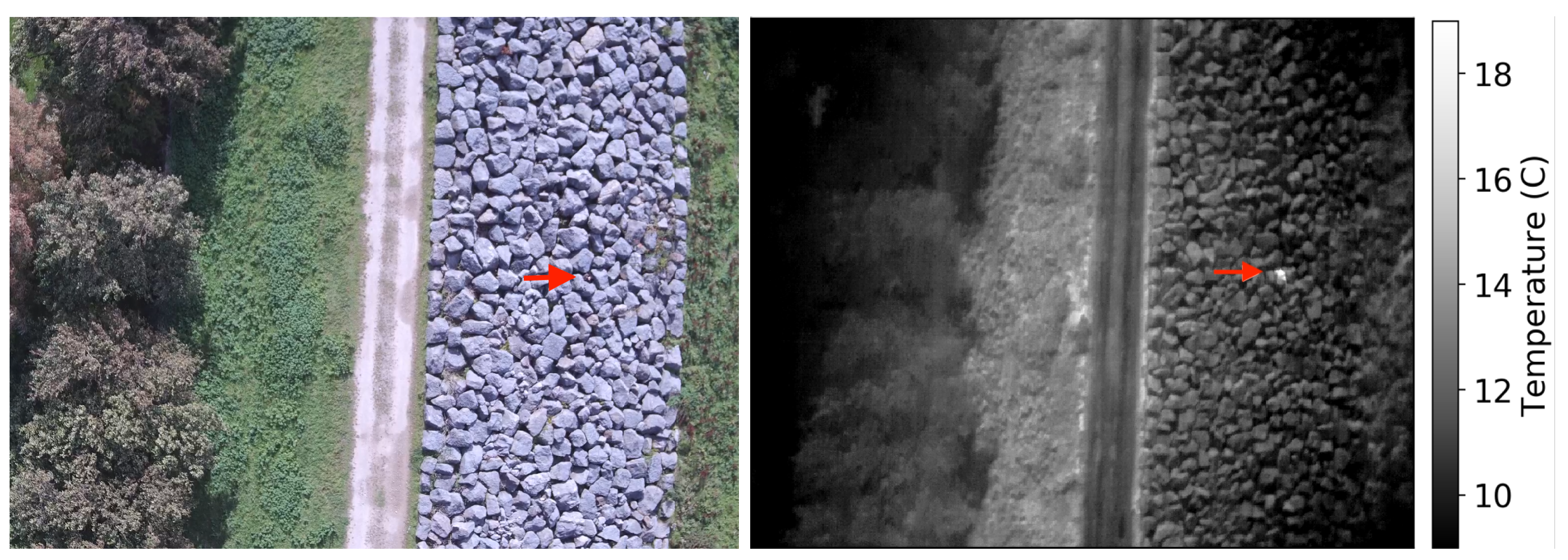

Figure 1 shows typical terrain in Morecambe Bay. The potential advantages of drones and automated detection of humans in SAR operations here are clear. In this paper, we describe a pilot study to test the effectiveness of thermal-equipped drones for locating humans in a variety of realistic search and rescue scenarios in a marine and coastal environment, both by the naked eye and using a rudimentary automated detection system. We outline the requirements and next steps to implementation of an autonomous drone-based system for SAR. This study was carried out in Morecambe Bay in partnership with Bay Search and Rescue.

2. Methods

To test the effectiveness of thermal-equipped drones for finding people in need of rescue, we performed a series of simulated SAR scenarios, all of which were realistic and had been encountered by the Morecambe Bay Search and Rescue team in the past. As part of their regular SAR training, volunteers from Bay Search and Rescue acted as the simulated survivors in need of rescue. This test was carried out near latitude 54.19284, longitude −2.99588 on 29 September 2018. There were four scenarios:

A person submerged in water up to shoulder height.

A person lying down in long marsh grass, in a position where they could not be seen from ground level.

A person lying down between the rocks that make up a sea wall, not visible from ground level.

People hidden in vegetation. Three separate tests were performed for this scenario: one person in a gully under mid to sparse vegetation cover; one person at the edge of a small wooded area; and two people in the middle of a small wooded area with full canopy cover.

We used a custom hexacopter drone based around a Tarot 680 airframe and Pixhawk 2.1 flight controller. The drone was equipped with a custom designed two-axis brushless gimbal mechanism to provide stabilisation of the camera in both roll and pitch. The gimbal also allowed independent and remote control of the camera’s pitch angle. The thermal camera used was a FLIR Duo Pro R. This consists of a Tau2 TIR detector ( pixels, 60 Hz) with a 13 mm lens ( field of view), and a 4K RGB camera (4000 × 3000 pixels, field of view) affixed side-by-side. The camera was interfaced to the flight controller to enable remote triggering and geotagging information to be recorded. Live video feedback was available in flight via a 5.8 GHz analogue video transmitter and receiver system.

For each scenario, we adopted an appropriate flight strategy, either a straight line fly-over or a grid search pattern. Data were subsequently examined by the naked eye after each flight. The drone pilot and those who examined the data were not aware of the locations of the volunteers being searched for beforehand, only the general area in which they were located.

Machine learning would be the ideal tool to carry out automated detection and identification of the volunteers in these experiments. However, we only have five experiments' worth of data to train with. Each flight lasted 5–10 min. Allowing one minute each for take-off and landing, with the 30 Hz camera used, this generates 5400–14,400 frames of data per flight. The number of these frames that contain humans was between 150 and 1500 per flight (or per flight height), depending on how the drone was moving. After splitting the data into training, testing and verification sets, this would leave a small number of meaningfully different images to train with (i.e., images not temporally adjacent in the footage, which would form essentially the same image). Previous work has shown that small training dataset sizes lead to dramatic drops in classification accuracy [26,27,28]. With a small number of experiments, a machine learning system would only recognise the specific humans in the specific scenarios we tested with, and it would be difficult to be certain that the reported performance would be meaningful in a wider range of real-world scenarios. Given the safety critical constraints of SAR requiring high accuracy, or at least low false positives, we decided to test an alternative detection method.
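The frame counts above follow from simple arithmetic. The minimal sketch below reproduces them, assuming a constant 30 Hz recording rate and one minute each excluded for take-off and landing, as stated in the text; the function name `frames_per_flight` is hypothetical.

```python
def frames_per_flight(flight_minutes: float,
                      overhead_minutes: float = 2.0,
                      frame_rate_hz: float = 30.0) -> int:
    """Number of frames recorded during the search portion of a flight.

    overhead_minutes: time excluded for take-off and landing
    (one minute each, as assumed in the text).
    frame_rate_hz: assumed constant recording rate.
    """
    search_seconds = (flight_minutes - overhead_minutes) * 60.0
    return round(search_seconds * frame_rate_hz)


for minutes in (5, 10):
    print(f"{minutes} min flight: {frames_per_flight(minutes)} frames")
```

This prints 5400 frames for a 5 min flight and 14400 frames for a 10 min flight, matching the range quoted above.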

A prototype automated detection system was applied to the data gathered after each flight. Automated detection is based on a threshold temperature and size expected for humans when viewed from the height of the drone. See [30] for a full description of the automated detection algorithm used. We calculated expected apparent sizes using the method outlined in [20] (http://www.astro.ljmu.ac.uk/~aricburk/uav_calc/). With the thermal camera used in this instance, at a height of 50 m above ground level (AGL), a prone human 1.7 m long would appear 25 pixels in length in the data obtained. A human viewed from above with head and shoulders 0.5 m wide would be nine pixels in width in the thermal data from 50 m AGL. At a drone height of 100 m AGL, these sizes will be 15 pixels (prone) and 4 pixels (upright), respectively.
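For intuition about how apparent size scales with height, a simple pinhole-geometry estimate can be sketched as below. This is not the calculator of [20]. The detector pixel count and field of view are not reproduced in the camera description above, so the 640 pixels and 45° used here are placeholders chosen purely for illustration, and the function names are hypothetical.

```python
import math


def ground_sample_distance(height_m: float, fov_deg: float, n_pixels: int) -> float:
    """Ground width (m) imaged by one pixel of a nadir-pointing camera."""
    swath_m = 2.0 * height_m * math.tan(math.radians(fov_deg) / 2.0)
    return swath_m / n_pixels


def apparent_size_px(object_m: float, height_m: float,
                     fov_deg: float, n_pixels: int) -> float:
    """Approximate object length in pixels (ignores lens distortion and blur)."""
    return object_m / ground_sample_distance(height_m, fov_deg, n_pixels)


# Placeholder camera parameters (assumed), NOT the specification of the camera used.
FOV_DEG = 45.0
N_PIXELS = 640

for height in (50.0, 100.0):
    prone = apparent_size_px(1.7, height, FOV_DEG, N_PIXELS)
    upright = apparent_size_px(0.5, height, FOV_DEG, N_PIXELS)
    print(f"{height:.0f} m AGL: prone {prone:.1f} px, upright {upright:.1f} px")
```

With these placeholder values the sketch gives roughly 26 and 8 pixels at 50 m AGL and roughly 13 and 4 pixels at 100 m AGL, of the same order as the figures quoted above; exact agreement should not be expected, since the real camera parameters and the method of [20] are not reproduced here.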

Human surface temperatures (which are what thermal cameras record, as opposed to internal core temperature) depend on several factors. Clothing reduces the heat emitted from the body, meaning humans will appear cooler in thermal footage. The homeostatic response to maintaining body temperature causes skin temperature to increase or decrease depending on the temperature of a person's surroundings: skin is warmer in a warm environment and cooler in a colder one [31]. The local ambient air temperature on the day of the data being recorded was 13–15 °C, and the volunteers we were searching for in this test were not overly warmly clothed.

Thermal contrast, defined as the difference in temperature between an object of interest and surrounding objects or ground, is a key factor in distinguishing and detecting people with TIR cameras. If the temperature of an object is the same as, or very close to, that of the ground, it will be difficult to distinguish from the ground in TIR data viewed from a drone. The temperature of the ground in any given area is more dependent on incoming solar radiation (sunlight) than on air temperature. The expected ground temperature for the region of the Bay where this study was performed was calculated following [20]. For late September, the land surface temperature is expected to be 11–16 °C during the day. Given this, we expect humans to be detected at high significance in the data. For example, the drone pilot was recorded during takeoff for the first flight as being 10–18 °C (depending on the point on the body measured), compared to the recorded ground temperature of 5–11 °C.

The weather was partly cloudy, cool and breezy. Using a handheld Kestrel Weather Meter on the ground, we measured the air temperature to be 13–15 °C, pressure 1026 hPa, humidity 56–68%, and wind speed 11–15 km/h. Whilst temperature, humidity, pressure and particularly wind do change with increasing altitude, the maximum drone height for the flights we conducted was 100 m AGL, and over this range of heights the only variable that will change significantly is wind speed.

Considering the variation of the ground temperature as it heats and cools throughout the day, and the regulation of body temperature by humans as a response, setting an absolute temperature threshold is not necessarily the best way to detect humans. For automated detection, we set the temperature threshold for identifying humans as the 99th percentile of warmest pixels, and the size threshold to be between 8–30 pixels at 50 m and 3–17 pixels at 100 m. All of the automated detections were subsequently inspected by the naked eye to check for true positives and false positives.
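The thresholding step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the algorithm of [30]: it assumes a single-band temperature frame held in a NumPy array, groups pixels at or above the 99th percentile using 4-connectivity, and interprets "size" as the longest side of each group's bounding box (an assumption, since the text does not say whether size refers to length or area). The function name `detect_warm_blobs` is hypothetical.

```python
from collections import deque

import numpy as np


def detect_warm_blobs(frame, min_px, max_px, percentile=99.0):
    """Candidate detections as (centre_row, centre_col, extent_px) tuples.

    Pixels at or above the given percentile of the frame are 'warm'.
    Warm pixels are grouped with 4-connectivity, and a group is kept if the
    longest side of its bounding box lies within [min_px, max_px].
    """
    frame = np.asarray(frame, dtype=float)
    warm = frame >= np.percentile(frame, percentile)
    seen = np.zeros(warm.shape, dtype=bool)
    n_rows, n_cols = warm.shape
    detections = []
    for r0 in range(n_rows):
        for c0 in range(n_cols):
            if not warm[r0, c0] or seen[r0, c0]:
                continue
            # Breadth-first flood fill of one connected warm region.
            queue = deque([(r0, c0)])
            seen[r0, c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < n_rows and 0 <= cc < n_cols
                            and warm[rr, cc] and not seen[rr, cc]):
                        seen[rr, cc] = True
                        queue.append((rr, cc))
            rows = [p[0] for p in pixels]
            cols = [p[1] for p in pixels]
            extent = max(max(rows) - min(rows), max(cols) - min(cols)) + 1
            if min_px <= extent <= max_px:
                detections.append((sum(rows) / len(rows),
                                   sum(cols) / len(cols), extent))
    return detections


# Usage: an 8 degC background with 1 degC noise and one warm,
# prone-sized (9 x 25 pixel) region at 16 degC.
rng = np.random.default_rng(0)
scene = rng.normal(8.0, 1.0, size=(256, 320))
scene[100:109, 150:175] = 16.0
print(detect_warm_blobs(scene, min_px=8, max_px=30))
```

Because the threshold is a percentile of each frame rather than a fixed temperature, roughly 1% of pixels are always flagged; the size window is what rejects the scattered single-pixel noise, and, as noted in the text, any remaining candidates still need visual inspection.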

3. Results

When examining the data by eye, the volunteers were easily distinguishable in all scenarios, although a little more difficult to distinguish in the woodland scenario. It could be argued that spotting the humans in the woods with the naked eye was partly due to knowing that the humans were in there somewhere, which may not be the case in a real-life search and rescue scenario. Further tests are required to investigate the detectability of humans in woodlands. No false positives were identified by eye.

The automated detection algorithm found the volunteers in all scenarios except in the woodland. False positives for the automated detection were warm vehicle engines, warm building roofs and reflective/warmed rocks. These were easily excluded as not humans by visual inspection. False positives are clearly less important than false negatives for search and rescue, and a few false positives can be tolerated so long as no actual people in need of rescue are missed. For a field-ready system, it is clear that the automated detection of survivors needs to be more intelligent than a simple temperature threshold and estimated size limits. This kind of intelligence could include, for example, a system that knows the height of the drone and how large to expect a human to appear in the FOV, or a map of the terrain with possible sources of false positives already highlighted.

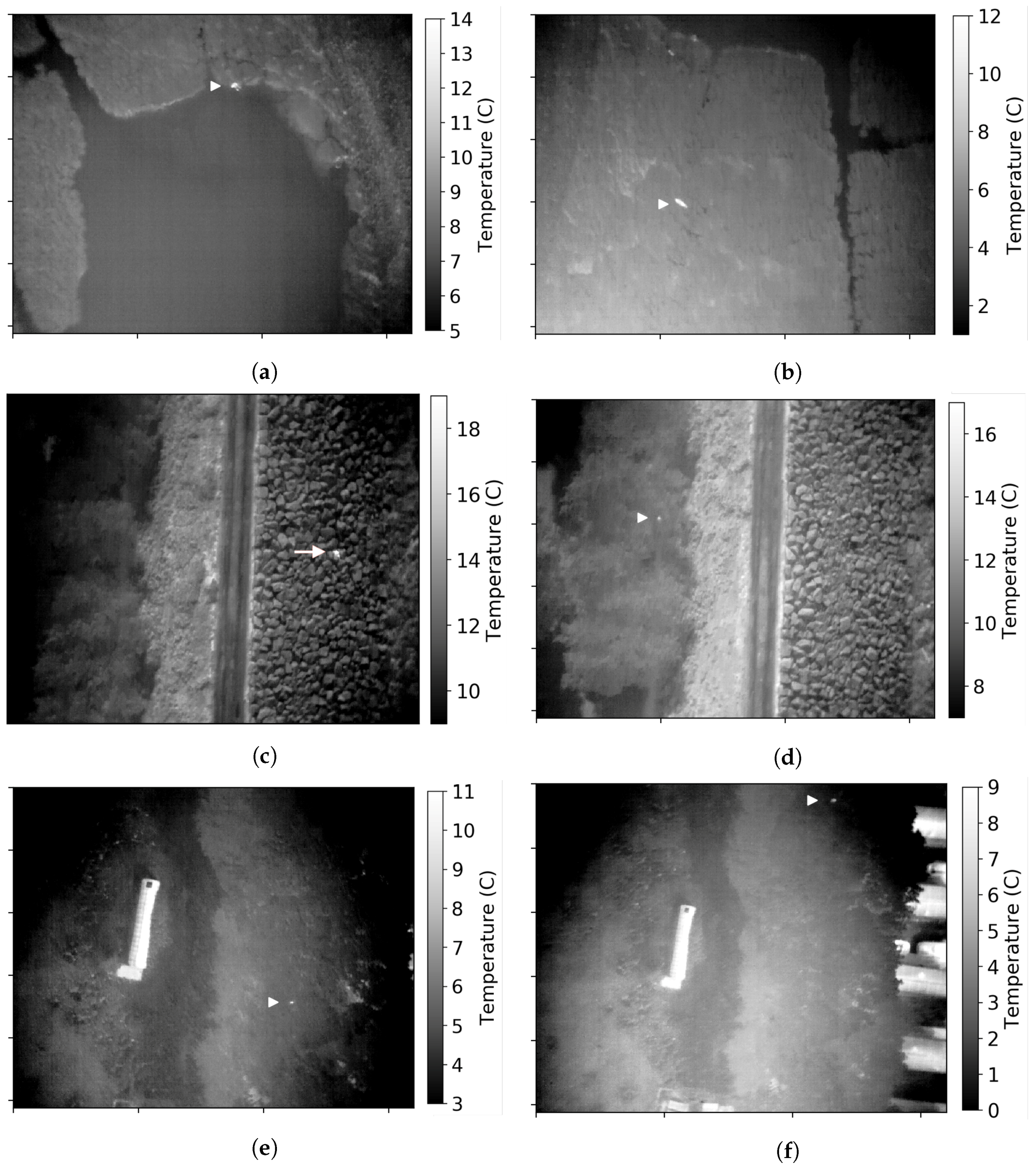

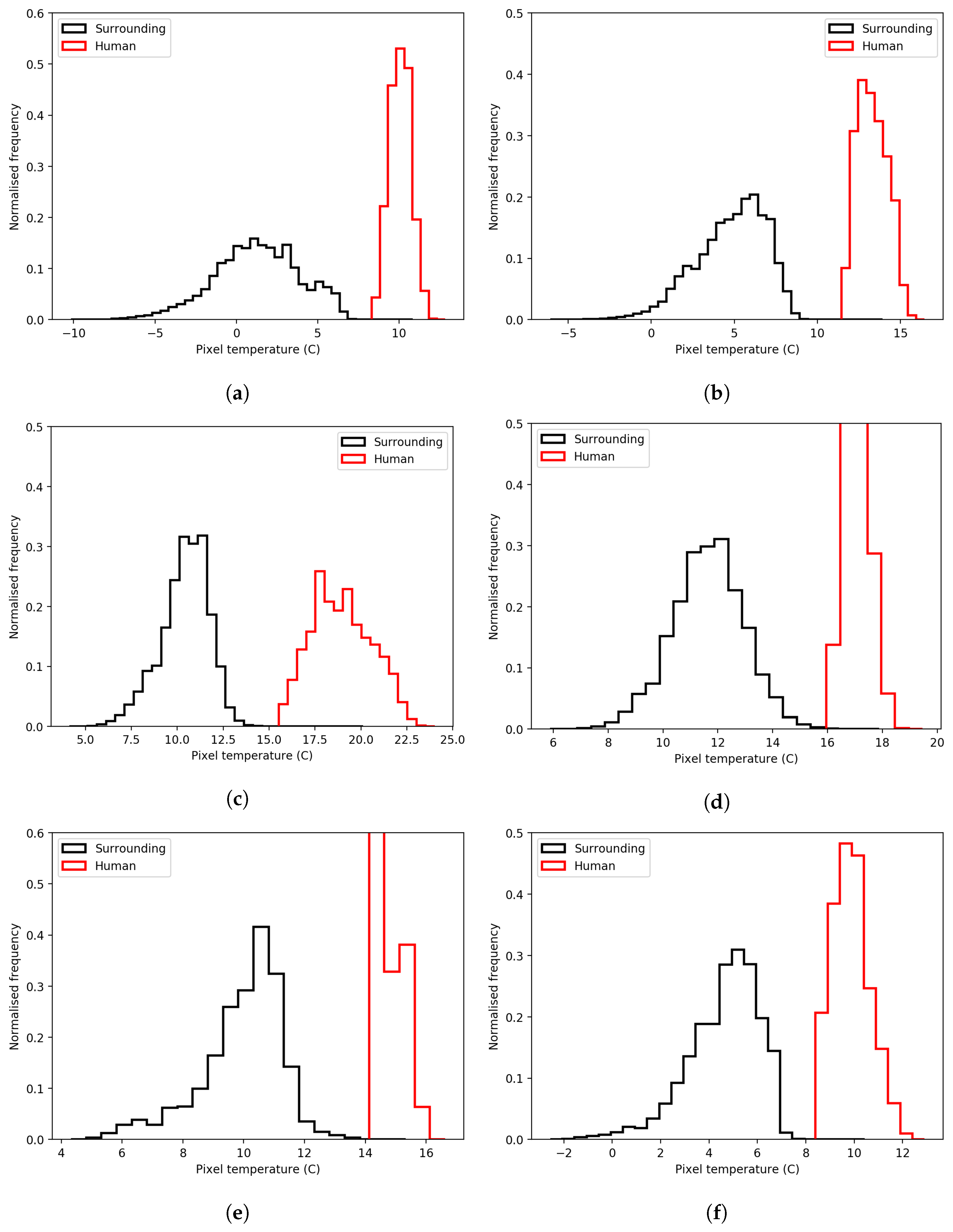

We examined the recorded temperature of the people in each scenario and compared it to the surrounding temperature to get an estimate of the detectability of people in each scenario (Figure 4 and Table 1). As environmental temperature varies with illumination, surface composition and time of day, so does human skin temperature. This is evident in the histograms (Figure 4) and Table 1. The largest temperature difference between the means of the ground and humans was ∼8 °C, and the smallest was ∼4 °C. Given these observations, we constructed some synthetic TIR data to estimate the limits on detectability of humans in these scenarios.

Detection Limits and Potential for Broader Application

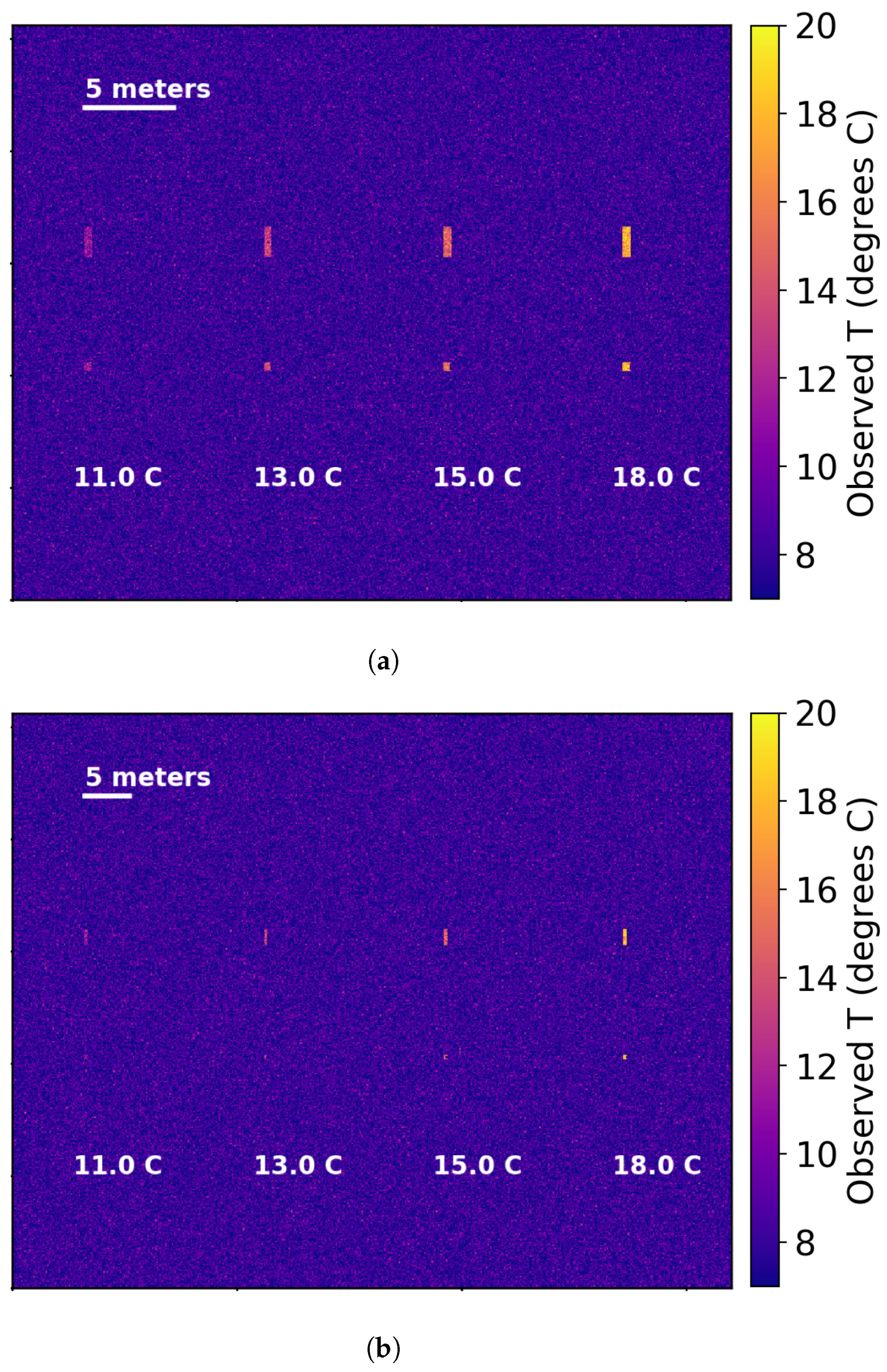

We constructed synthetic observations following the calculations described in [20,30] for the spot size effect and blending of temperatures within a single pixel. Briefly, when a pixel in a thermal camera contains more than one object, the temperature recorded within that pixel is a blend of the temperatures of those objects. We used the typical observed ground temperature from our TIR drone data of 8 °C, and human temperatures of 3, 5, 7 and 10 °C greater than this to simulate the range of observed temperatures. We simulate a human viewed from above as 0.5 × 0.5 m and a prone human as 1.7 × 0.5 m. We have assumed the same camera field of view and pixel count for these synthetic observations as for the real camera used in the observations above. We produce the same scene for drone heights of 50 and 100 m AGL. Examples of the synthetic data are shown in Figure 5. Following the observed variation in ground temperature recorded in Table 1 of around 2 °C, we added Gaussian distributed random noise to the data to simulate this variation.
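The pixel blending described above can be illustrated with a simple area-weighted mixing model: each pixel records the coverage-weighted average of person and ground temperature, plus Gaussian noise. This is a sketch under stated assumptions rather than the exact procedure of [20,30]: it ignores the camera's point spread, and it treats the quoted ~2 °C ground variation as the noise standard deviation. The pixel footprints in the example (0.065 m and 0.13 m, roughly what a hypothetical 640-pixel, 45° camera would give at 50 and 100 m AGL) and the function names are assumptions.

```python
import numpy as np


def overlap_1d(edges, lo, hi):
    """Overlap length between each interval [edges[i], edges[i+1]] and [lo, hi]."""
    left = np.maximum(edges[:-1], lo)
    right = np.minimum(edges[1:], hi)
    return np.clip(right - left, 0.0, None)


def synthetic_frame(shape, gsd_m, person_w_m, person_l_m,
                    t_background, t_person, noise_sigma, rng):
    """Thermal frame with one rectangular person centred in the scene.

    gsd_m is the ground width of one (square) pixel. Each pixel records the
    coverage-weighted mean of person and ground temperature, plus Gaussian
    noise with standard deviation noise_sigma.
    """
    n_rows, n_cols = shape
    y_edges = np.arange(n_rows + 1) * gsd_m
    x_edges = np.arange(n_cols + 1) * gsd_m
    cy, cx = n_rows * gsd_m / 2.0, n_cols * gsd_m / 2.0
    oy = overlap_1d(y_edges, cy - person_l_m / 2.0, cy + person_l_m / 2.0)
    ox = overlap_1d(x_edges, cx - person_w_m / 2.0, cx + person_w_m / 2.0)
    fill = np.outer(oy, ox) / gsd_m**2  # fraction of each pixel covered by the person
    frame = fill * t_person + (1.0 - fill) * t_background
    return frame + rng.normal(0.0, noise_sigma, size=shape)


rng = np.random.default_rng(1)
for gsd in (0.065, 0.13):
    # Person seen from above (0.5 x 0.5 m), 10 degC warmer than an 8 degC ground.
    frame = synthetic_frame((64, 64), gsd, 0.5, 0.5, 8.0, 18.0, 2.0, rng)
    print(f"pixel size {gsd} m: warmest pixel {frame.max():.1f} degC")
```

Setting `noise_sigma` to zero isolates the blending: once a pixel is larger than the person, no pixel reaches the person's true temperature, which is the cooling with height seen in the observations.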

Detectability is strongly dependent on the difference in temperature between the person and their surroundings. This in turn depends on their clothing, whether they are wet, how hidden they are and whether they are obscured from above. For example, in Figure 4c,d, the same human appears cooler when observed from a greater height AGL due to spot size blending. As was evident from our observations of humans in the woodland, obscuration by vegetation leads to reduced thermal contrast (a decrease in the recorded temperature difference), due to increased blending of tree branches and leaves with humans in each pixel.

Generally, to be able to identify a warm spot in TIR images as being a human, as distinct from an animal of similar size or other warm object, it must appear larger than 10 pixels in size [20,25]. If the only warm objects expected in an area are humans (if false positives don't matter), this might be pushed to five pixels. A single pixel detection is too small for any kind of confidence in identifying a warm spot as being a human.

Figure 6 shows the recorded temperature for 5 and 10 pixel-sized objects as the height of the drone increases (constructed following the same process as above), for a 0.5 × 0.5 m person viewed from above. When the measured temperature of an object drops to that of the background, it can no longer be distinguished from the ground and hence cannot be detected.

Figure 5 shows the extremes of human temperature difference from the background recorded in our observations, 3 °C and 10 °C, and the background temperature of 8 °C with a variation of 2 °C. The figures additionally show a slightly higher background variation of 3 °C.

Figure 6 shows that the measured temperature of the humans at first remains stable as height increases, until the effect of blending temperatures becomes apparent; then, the temperature drops off. For this figure, the background temperature variation is 2 °C. To distinguish a 0.5 × 0.5 m human as five pixels in TIR data, the maximum drone height for detection is 397 m AGL for humans 10 °C warmer than the background, and 119 m AGL for humans 3 °C warmer than the background. For cases where humans take up 10 pixels in the data, these heights AGL are 199 m and 60 m, respectively.

If the variation in ground temperature is up to 3 °C, then the 11 °C human (3 °C warmer than the background) is never detectable. In reality, background variation is not random; rather, it depends more on the terrain and vegetation type. At low height AGL, there will be a coherent human shape in the data even if their temperature is within the ground variation. As such, it may be possible to identify a human by the naked eye so long as they are warmer than the background on average, but, in this case, an automated algorithm based on a simple threshold may fail. However, it may be possible to discern humans using a machine learning system if sufficient training data are utilised.
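The qualitative behaviour described above (a flat measured temperature at low heights, then a fall-off once a person no longer fills a pixel) can be reproduced with a deliberately simple, best-case sketch. It assumes a 0.5 × 0.5 m person centred in a single pixel, a hypothetical camera with 640 pixels across a 45° field of view, and treats a person as detectable only if their warmest pixel exceeds the background by more than the background variation. This single-pixel criterion is an assumption and differs from the five- and ten-pixel criteria used above, so the heights it implies are not comparable with those figures; the function names are hypothetical.

```python
import math

# Hypothetical camera (assumed): 640 pixels across a 45 degree field of view.
FOV_DEG = 45.0
N_PIXELS = 640


def pixel_size_m(height_m):
    """Ground width of one pixel for a nadir-pointing camera at height_m."""
    return 2.0 * height_m * math.tan(math.radians(FOV_DEG) / 2.0) / N_PIXELS


def peak_excess(height_m, delta_t, person_m=0.5):
    """Best-case excess of the warmest pixel over the background (degC).

    The person is a square of side person_m centred in one pixel, so that
    pixel's covered fraction is min(1, person_m / pixel size) squared.
    """
    fill = min(1.0, person_m / pixel_size_m(height_m)) ** 2
    return delta_t * fill


def detectable(height_m, delta_t, background_var):
    """True if the warmest pixel stands out by more than the background variation."""
    return peak_excess(height_m, delta_t) > background_var


for h in (50, 100, 400, 800):
    print(h, round(peak_excess(h, 10.0), 2), round(peak_excess(h, 3.0), 2))
```

Even in this best case, `detectable(h, 3.0, 3.0)` is false at every height, consistent with the statement that a person only 3 °C warmer than ground varying by up to 3 °C cannot be picked out by a simple threshold.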

4. Discussion and Future Work

In this pilot study, we have shown that, in realistic search and rescue scenarios, humans can be detected in thermal infrared data obtained via a drone. We have also shown that it is possible to automatically detect humans in the data using a simplistic temperature and size thresholding method. However, this method would need to be improved upon and refined for real-life operations, and will also need to be able to run live as the drone flies.

In the case of a live human-detecting system, images of the humans detected and their locations would be relayed rapidly to the SAR team. Being in Northern England, Morecambe Bay has a temperate climate and is generally uninhabited by humans. As such, there are very few sources of false positives; most of these are expected to be sheep, dogs and car engines. Using a machine learning system, it may be possible to filter these out. However, in the absence of sufficient training data and since SAR is an emergency operation, it may also be advisable for a human to examine and interpret all possible detections. The potential disadvantage of human interpretation of the TIR images is that untrained observers may misidentify a human in need of rescue as a false positive object or vice versa. Well-trained machine learning systems have been shown to be more reliable at identifying objects in images than humans [32]. As such, a machine learning system that only reports human detections to SAR teams would need to be very robust, and a deep understanding of its reliability and detection completeness would be required. A system that sits somewhere between fully autonomous detection and fully human-inspected detection is also a possibility, and may be a requirement while an autonomous system is being trained. This would allow the system and users to evolve side-by-side, building reliability and trust as it does so.

TIR has several distinct advantages over RGB imaging or visual searches when it comes to some types of adverse weather conditions. Since TIR light has a longer wavelength than optical light, it is possible to see long distances through fog and mist, even if it is too dense to see through by the naked eye [20]. Snow can be optically challenging for search and rescue as it is highly reflective of visible light (it has a high albedo); the brightness of the snow makes detecting people or other objects very challenging in RGB images or by the naked eye. This is not an issue for TIR cameras as the snow is not reflective at this wavelength and instead appears dark due to its low temperature. However, drones are limited in their application in other varieties of adverse weather. Whilst fixed-wing drones can be made resistant to a certain amount of precipitation, heavy rain or snow and strong winds will likely prohibit any drone flying. Falling snow and rain are also strong absorbers of TIR light, acting to produce a similar obscuration effect as they do optically. These conditions are also challenging and often prohibitive for SAR operations as currently carried out on the ground.

Drones can be launched on a very short timescale, so there is potential for very rapid deployment of a drone SAR system soon after an alarm is raised. Typical flight times are 30 min for multi-rotor drones and over an hour for fixed-wing drones. Covering large search areas would therefore require longer battery life. If a fixed-wing drone needed to land, a battery changeover could be performed in minutes, and it could then quickly return to searching. Whilst regular returns to base may not be ideal, drones still offer an improvement in area covered in a given amount of time. For a fixed-wing drone, an off-the-shelf model may have a typical maximum speed of 30 m/s, and a custom-built system could potentially reach speeds of 50 m/s. If equipped with the TIR camera used in our experiments above, and flying at 100 m AGL, a fixed-wing drone could cover an area of 8.73 km²/h at 30 m/s or 14.54 km²/h at 50 m/s. Whilst this is clearly a larger area than a ground search team could cover in the same time, it would still require roughly 21–36 hours of flying to cover all of Morecambe Bay (310 km² in area). It is rare that an SAR team will need to search the whole bay, but large portions of it may need to be covered more regularly. Thus, to be truly effective as a search tool in these cases, the ability to fly at extended or beyond visual line of sight (EVLOS or BVLOS) of the pilot would be necessary. This is largely prohibited in the UK for safety reasons, but there is a precedent for emergency services undertaking life-saving activities to gain permission for BVLOS flight from the authorities [33].
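The coverage figures above amount to swath width multiplied by ground speed. The sketch below assumes straight passes with no turns, overlap or battery changes, and uses a swath of about 80.8 m, which is the width implied by the quoted 8.73 km² per hour at 30 m/s (8.73 × 10⁶ m² divided by 108,000 m flown per hour); the function names are hypothetical.

```python
def coverage_rate_km2_per_h(swath_m: float, speed_m_s: float) -> float:
    """Area imaged per hour (km^2/h) by straight passes of the given swath width."""
    return swath_m * speed_m_s * 3600.0 / 1e6


def hours_to_cover(area_km2: float, swath_m: float, speed_m_s: float) -> float:
    """Flying time (h) for one pass over the area, ignoring turns, overlap and battery changes."""
    return area_km2 / coverage_rate_km2_per_h(swath_m, speed_m_s)


# Assumed swath, back-calculated from the 8.73 km^2/h at 30 m/s quoted in the text.
SWATH_M = 80.8
BAY_AREA_KM2 = 310.0

for speed in (30.0, 50.0):
    rate = coverage_rate_km2_per_h(SWATH_M, speed)
    hours = hours_to_cover(BAY_AREA_KM2, SWATH_M, speed)
    print(f"{speed:.0f} m/s: {rate:.2f} km^2/h, {hours:.1f} h for the whole bay")
```

This prints 8.73 km²/h (35.5 h for the whole bay) at 30 m/s and 14.54 km²/h (21.3 h) at 50 m/s; real missions would take longer once turns, image overlap and battery swaps are included.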

Identifying the most efficient search patterns is also a consideration for a live detection system [8,19]. Possible search patterns might include those which are similar or complementary to ground search methods. For example, a drone could follow a ground SAR team, or fly a distance ahead and report back what it detects. In this case, the drone can also observe the ground teams and quickly report back if they encounter any trouble themselves, thus reducing the risk of SAR operations. Alternatively, a spiral outwards from the ground team, or a grid search pattern beginning at the start location of the ground team, may be more efficient for maximising area coverage.
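A grid search of the kind mentioned above is often flown as a back-and-forth ("lawnmower") pattern whose pass spacing is set by the camera swath. The sketch below generates such waypoints for a rectangular area in local metre coordinates. The function name, the optional overlap parameter and the choice of passes parallel to one axis are illustrative assumptions, and a real mission would convert these points to geographic coordinates for the flight controller.

```python
def lawnmower_waypoints(width_m, length_m, swath_m, overlap=0.0):
    """Waypoints (x, y) in metres for a back-and-forth grid search.

    Passes run parallel to the y axis across a width_m x length_m rectangle
    whose corner is at the origin. Adjacent passes are spaced by
    swath_m * (1 - overlap), so their camera footprints touch (overlap=0)
    or overlap by the given fraction.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    spacing = swath_m * (1.0 - overlap)
    waypoints = []
    x = swath_m / 2.0       # first pass half a swath in from the edge
    covered_to = 0.0        # x up to which the ground has been imaged
    heading_up = True
    while covered_to < width_m:
        start, end = (0.0, length_m) if heading_up else (length_m, 0.0)
        waypoints += [(x, start), (x, end)]
        covered_to = x + swath_m / 2.0
        heading_up = not heading_up
        x += spacing
    return waypoints


# Usage: a 400 m x 1000 m block with an assumed ~80.8 m swath at 100 m AGL.
for wp in lawnmower_waypoints(400.0, 1000.0, 80.8):
    print(wp)
```

For this block the sketch produces five passes (ten waypoints); adding overlap trades coverage rate for a lower chance of a person falling at the edge of a footprint.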

An off-the-shelf drone system capable of carrying a thermal camera and flying for 30 min to an hour (depending on platform style) costs £2000–£10,000. Off-the-shelf thermal cameras of sufficient resolution for SAR applications cost £2000–£6000. With allowances for additional parts, a reasonable estimation for the price of a complete thermal-equipped drone system would be up to £20,000. Given that manned aircraft operated by NPAS cost £2820 per flying hour [6], and come with a far higher initial equipment cost of around £3 million, drones represent a far cheaper method for aerial reconnaissance.
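As a rough comparison using only the figures above, the upper-estimate cost of a complete thermal-equipped drone system equals about seven hours of NPAS flying, and the quoted ~£3 million aircraft equipment cost would buy about 150 such drone systems. The sketch ignores drone running costs (batteries, maintenance, pilot time and training), which are not quantified here; the constant and function names are hypothetical.

```python
NPAS_COST_PER_FLYING_HOUR_GBP = 2820   # from [6]
DRONE_SYSTEM_COST_GBP = 20_000         # upper estimate given above
AIRCRAFT_EQUIPMENT_COST_GBP = 3_000_000


def equivalent_npas_hours(purchase_cost_gbp,
                          hourly_cost_gbp=NPAS_COST_PER_FLYING_HOUR_GBP):
    """Hours of manned-aircraft flying that cost the same as a one-off purchase."""
    return purchase_cost_gbp / hourly_cost_gbp


print(f"{equivalent_npas_hours(DRONE_SYSTEM_COST_GBP):.1f} h of NPAS flying")
print(f"{AIRCRAFT_EQUIPMENT_COST_GBP / DRONE_SYSTEM_COST_GBP:.0f} drone systems per aircraft")
```

This prints 7.1 h of NPAS flying and 150 drone systems per aircraft.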