UAS-GEOBIA Approach to Sapling Identification in Jack Pine Barrens after Fire

Abstract

1. Introduction

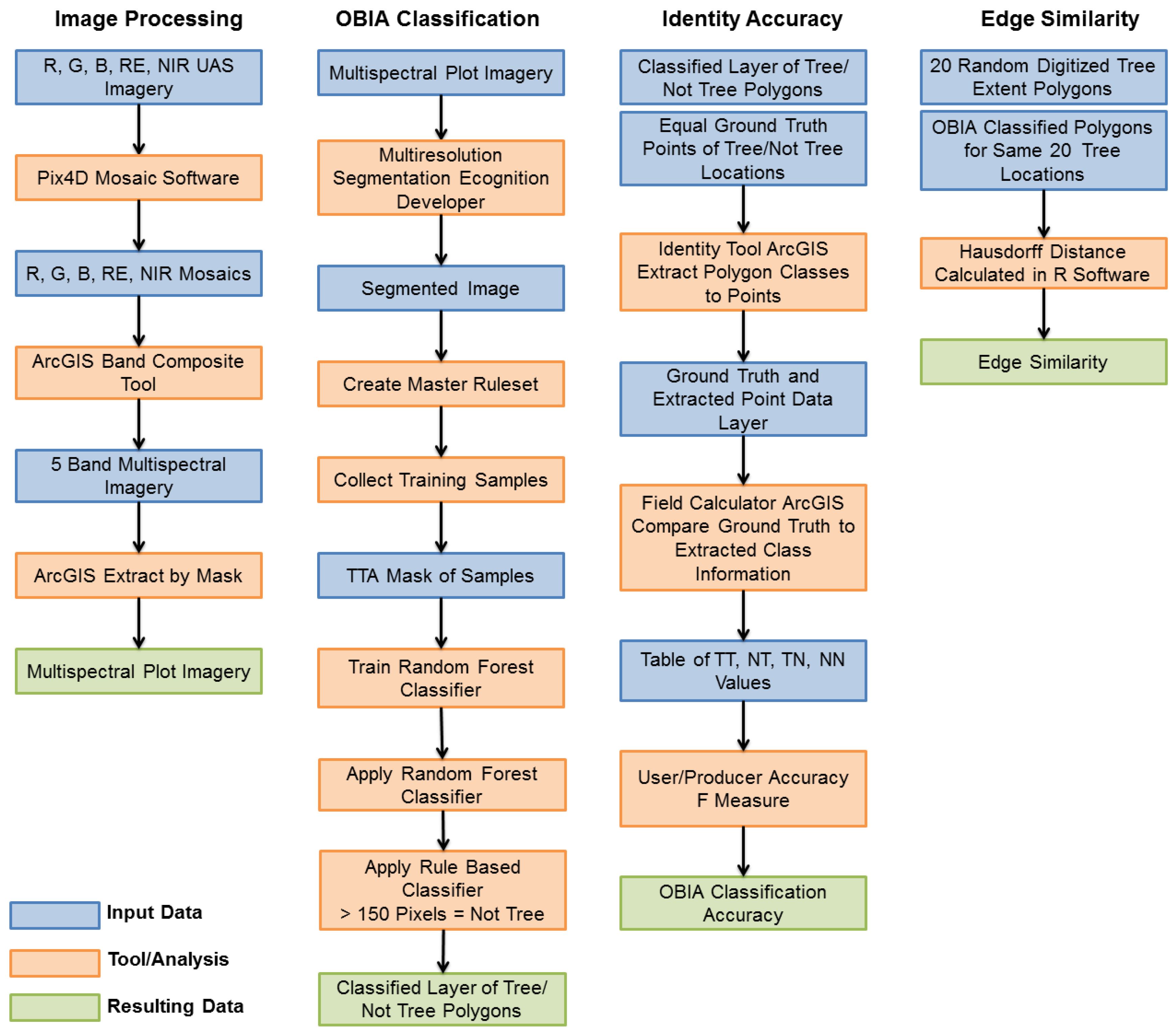

2. Methods and Materials

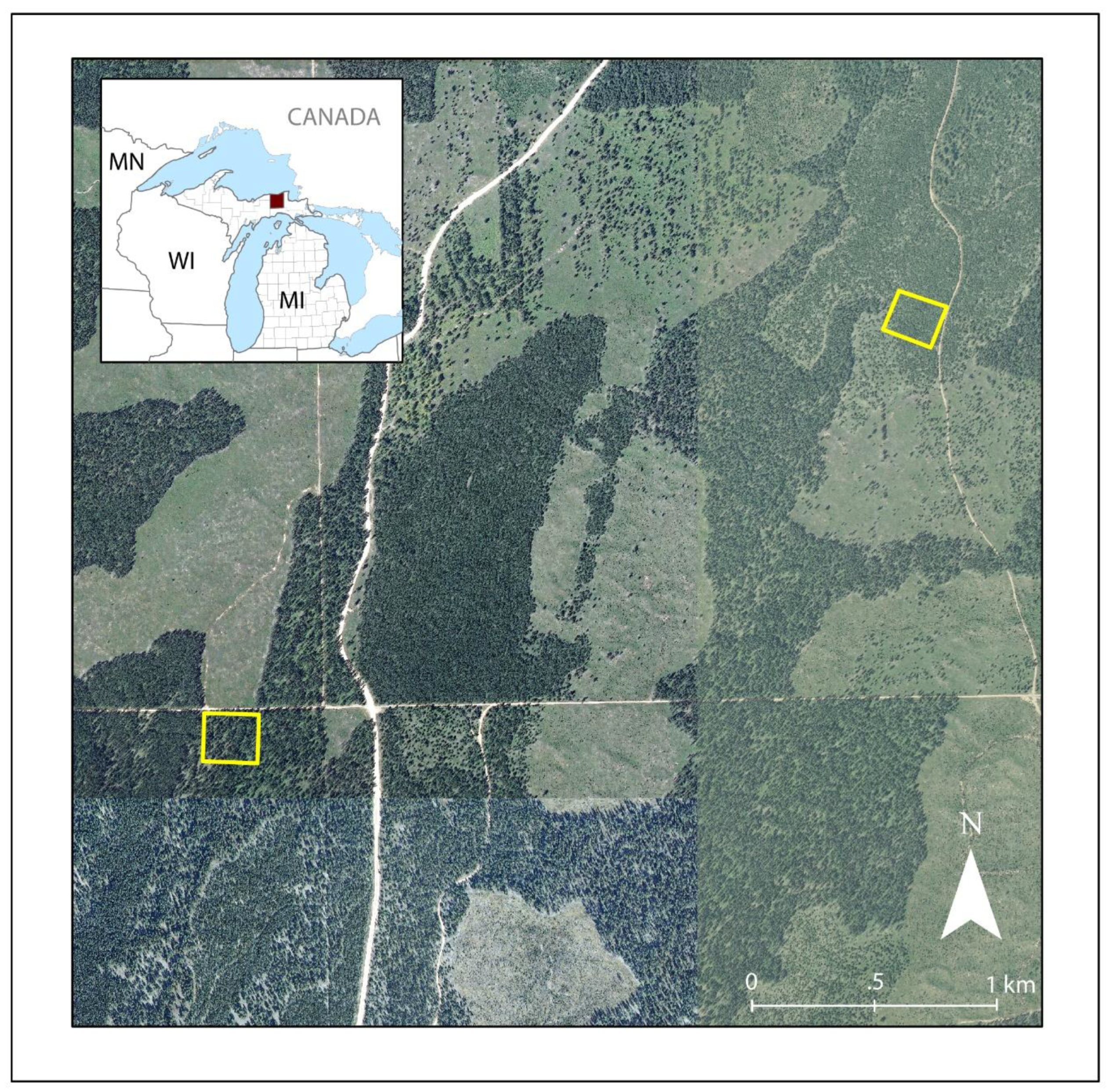

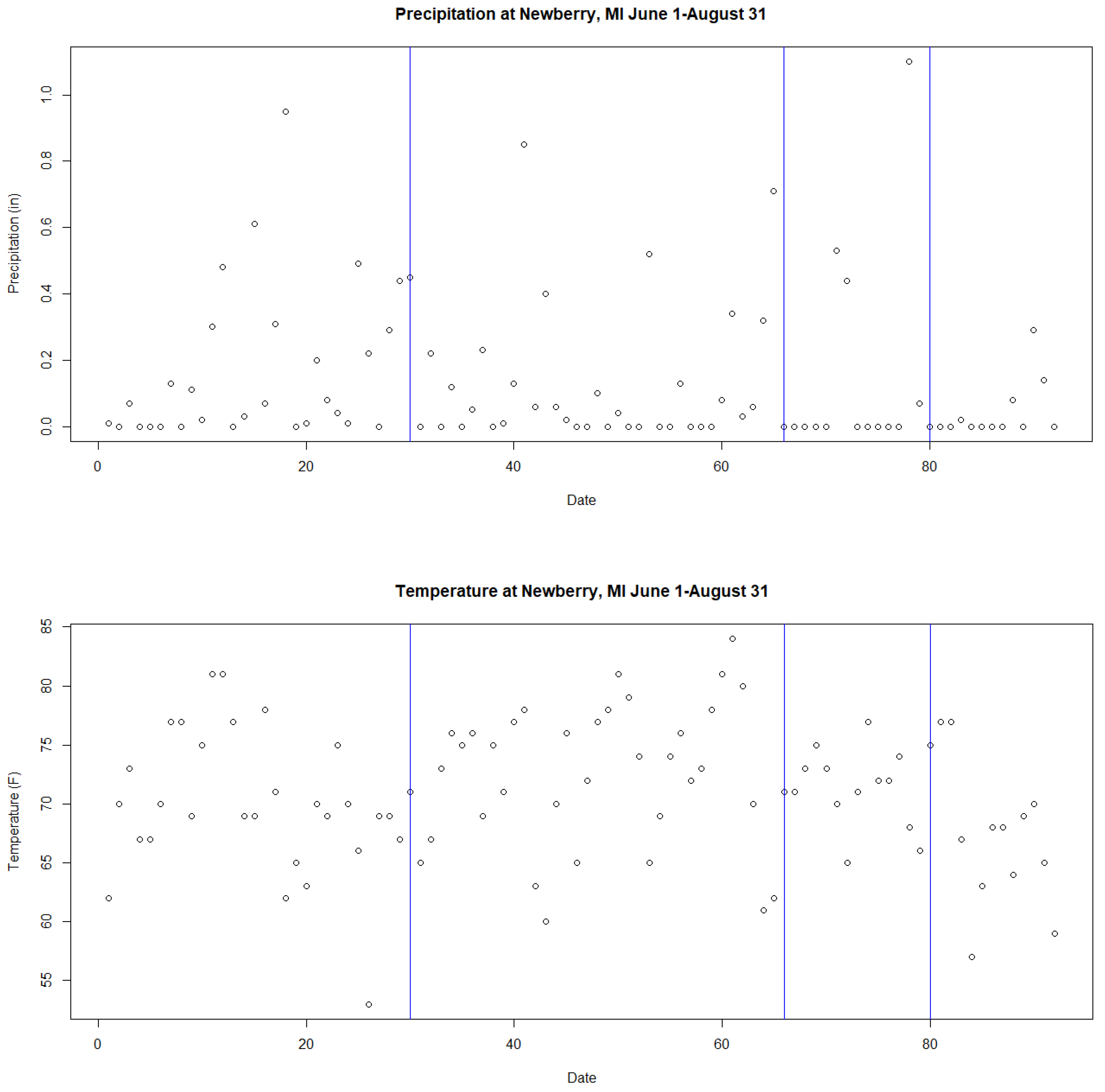

2.1. Study Area

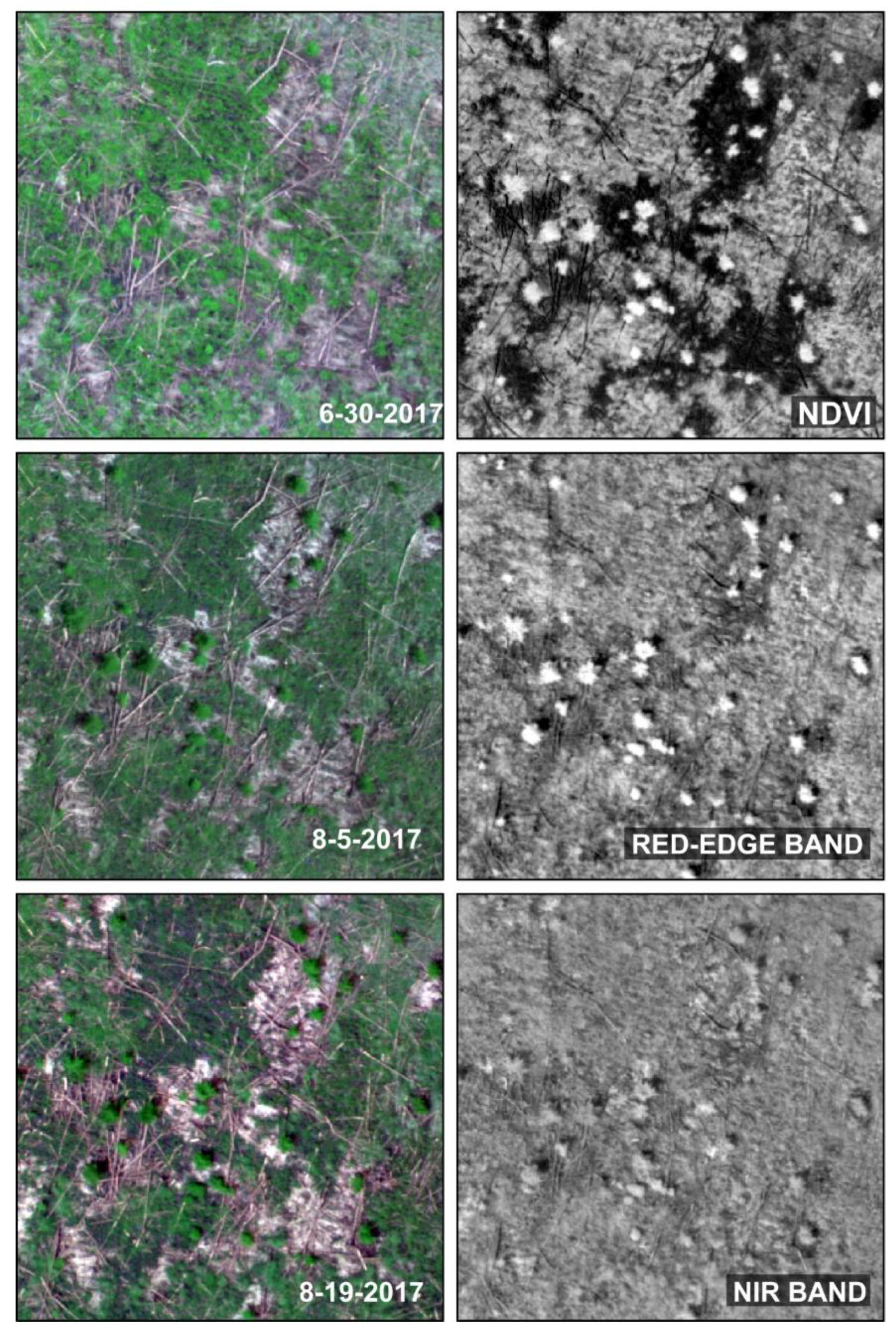

2.2. UAS Image Acquisition

2.3. Geographic Object-Based Image Analysis

2.3.1. Image Segmentation

2.3.2. Random Forest Classification

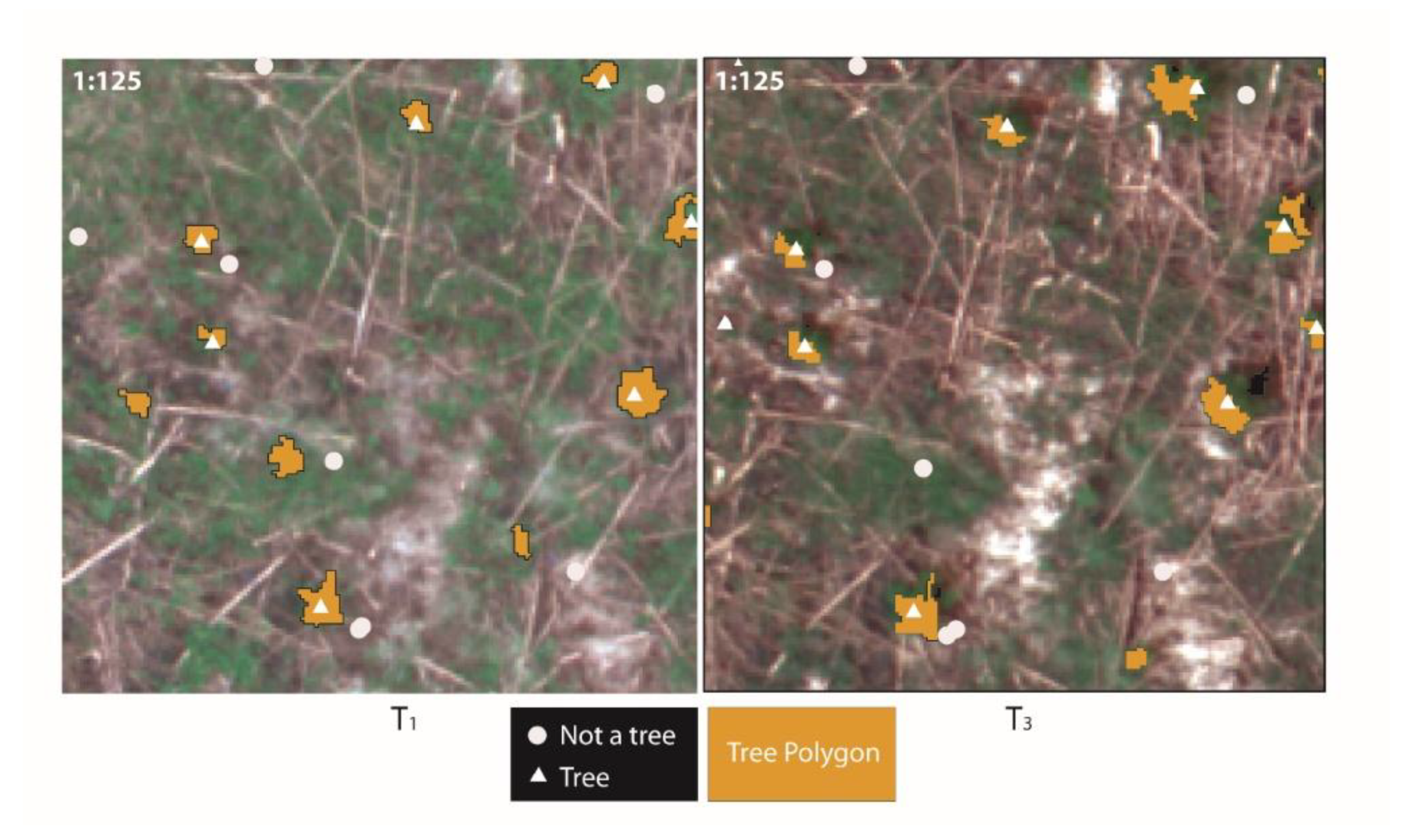

2.4. Accuracy Assessment

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References


| Specification | Value |
|---|---|
| Spectral Bands | Blue, Green, Red, Red Edge, NIR |
| Ground Sample Distance | 8.2 cm/pixel (per band) at 120 m above ground level (AGL) |
| Capture Speed | 1 capture per second (all bands) |
| Format | 12-bit camera RAW |
| Focal Length/Field of View | 5.5 mm/47.2° FOV |
| Image Resolution | 1280 × 960 pixels |

| Band Number | Band Name | Center Wavelength (nm) | Bandwidth FWHM (nm) |
|---|---|---|---|
| 1 | Blue | 475 | 20 |
| 2 | Green | 560 | 20 |
| 3 | Red | 668 | 10 |
| 5 | Red Edge | 717 | 10 |
| 4 | Near IR | 840 | 40 |

| Plot | Flight | Images | Altitude (m) | Ground Resolution (m/pixel) |
|---|---|---|---|---|
| A | T1 | 200 | 80 | 0.05505 |
| A | T2 | 200 | 80 | 0.05441 |
| A | T3 | 200 | 80 | 0.05513 |
| B | T3 | 190 | 80 | 0.05561 |
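As a rough consistency check on the flight table, ground sample distance for a fixed camera scales linearly with flying height. The sketch below assumes a nadir-pointing camera over flat terrain and scales the 8.2 cm/pixel at 120 m AGL given in the camera specification to the 80 m altitude flown here. The function name `scale_gsd` is illustrative and is not part of the study's processing chain.

```python
def scale_gsd(reference_gsd_m: float, reference_altitude_m: float, altitude_m: float) -> float:
    """Scale ground sample distance (GSD) linearly with flying height.

    Assumes a nadir-pointing pinhole camera over flat terrain, where
    GSD = pixel pitch * height / focal length, so GSD is proportional to height.
    """
    if reference_altitude_m <= 0 or altitude_m <= 0:
        raise ValueError("altitudes must be positive")
    return reference_gsd_m * altitude_m / reference_altitude_m


# 8.2 cm/pixel at 120 m AGL (camera specification), scaled to the 80 m flights
expected_gsd = scale_gsd(0.082, 120.0, 80.0)

# Ground resolutions reported for each flight (m/pixel, from the flight table)
reported = {"A-T1": 0.05505, "A-T2": 0.05441, "A-T3": 0.05513, "B-T3": 0.05561}

for flight, gsd in reported.items():
    diff_pct = 100.0 * (gsd - expected_gsd) / expected_gsd
    print(f"{flight}: reported {gsd:.5f} m/px, linear estimate {expected_gsd:.5f} m/px ({diff_pct:+.1f}%)")
```

All four reported values fall within about 2% of the linear estimate; small departures of this size can arise from terrain relief and from the actual flying height differing slightly from the planned altitude.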

| Plot | Date | Bands | UA | PA | F-Score | CA |
|---|---|---|---|---|---|---|
| A | T1 | RE + NIR | 0.46 | 1.0 | 0.63 | 0.72 |
| A | T1 | NIR | 0.57 | 0.98 | 0.72 | 0.78 |
| A | T1 | RE | 0.43 | 0.96 | 0.60 | 0.70 |
| A | T1 | NIR − R | 0.60 | 0.94 | 0.73 | 0.78 |
| A | T2 | RE + NIR | 0.50 | 1.0 | 0.66 | 0.75 |
| A | T2 | NIR | 0.50 | 1.0 | 0.66 | 0.75 |
| A | T2 | RE | 0.54 | 1.0 | 0.82 | 0.69 |
| A | T2 | NIR − R | 0.79 | 0.97 | 0.71 | 0.88 |
| A | T3 | RE + NIR | 0.64 | 0.96 | 0.78 | 0.81 |
| A | T3 | NIR | 0.65 | 0.97 | 0.78 | 0.81 |
| A | T3 | RE | 0.57 | 0.97 | 0.72 | 0.78 |
| A | T3 | NIR − R | 0.79 | 0.97 | 0.87 | 0.88 |
| B | T3 | RE + NIR | 1.0 | 0.86 | 0.91 | 0.93 |
| B | T3 | NIR | 1.0 | 0.86 | 0.91 | 0.93 |
| B | T3 | RE | 1.0 | 0.80 | 0.89 | 0.90 |
| B | T3 | NIR − R | 1.0 | 0.95 | 0.79 | 0.98 |
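The F-Score column combines the UA and PA columns. Reading UA and PA as user's and producer's accuracy (the usual remote-sensing abbreviations) and assuming the standard definition, the F-score is their harmonic mean, F = 2·UA·PA/(UA + PA). The sketch below recomputes it for a few Plot A rows of the accuracy table; the `f_score` helper and the choice of rows are illustrative only.

```python
def f_score(ua: float, pa: float) -> float:
    """Harmonic mean of user's accuracy (precision) and producer's accuracy (recall)."""
    if ua + pa == 0:
        return 0.0  # no correct detections at all
    return 2.0 * ua * pa / (ua + pa)


# (plot, date, bands, UA, PA, tabulated F-score) taken from the accuracy table
rows = [
    ("A", "T1", "RE + NIR", 0.46, 1.00, 0.63),
    ("A", "T1", "NIR - R", 0.60, 0.94, 0.73),
    ("A", "T3", "RE", 0.57, 0.97, 0.72),
    ("A", "T3", "NIR - R", 0.79, 0.97, 0.87),
]

for plot, date, bands, ua, pa, tabulated in rows:
    print(f"Plot {plot} {date} {bands}: recomputed F = {f_score(ua, pa):.2f} (table: {tabulated:.2f})")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a producer's accuracy near 1.0 does not by itself yield a high F-score; in the Plot A rows the lower user's accuracy dominates.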

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


White, R.A.; Bomber, M.; Hupy, J.P.; Shortridge, A. UAS-GEOBIA Approach to Sapling Identification in Jack Pine Barrens after Fire. Drones 2018, 2, 40. https://doi.org/10.3390/drones2040040
