Remote Sensing of Yields: Application of UAV Imagery-Derived NDVI for Estimating Maize Vigor and Yields in Complex Farming Systems in Sub-Saharan Africa

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Villages

2.2. Plot and Subplot Selection

2.3. In-Field Measurements

2.3.1. Chlorophyll Measurements

2.3.2. Crop Growth and Vigor Monitoring

2.3.3. Grain Yield Measurement
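The correlation tables report grain output per 16 m² subplot. This section does not state the unit of that output. As a minimal sketch, assume the grain is weighed in kilograms and that no moisture-content adjustment is applied. Subplot output then converts to a per-hectare yield by a fixed scale factor: 1 kg from 16 m² is 10,000 / 16 = 625 kg/ha, or 0.625 t/ha. The helper name below is hypothetical.

```python
# Hypothetical helper: scale the grain weighed from one subplot to a per-hectare yield.
# Assumes grain mass in kilograms; 16 m^2 is the subplot size reported in the tables.
SQ_M_PER_HECTARE = 10_000
KG_PER_TONNE = 1_000


def subplot_to_t_per_ha(grain_kg: float, subplot_area_m2: float = 16.0) -> float:
    """Convert grain mass (kg) harvested from a subplot of the given area (m^2) to t/ha."""
    if subplot_area_m2 <= 0:
        raise ValueError("subplot area must be positive")
    kg_per_ha = grain_kg * SQ_M_PER_HECTARE / subplot_area_m2
    return kg_per_ha / KG_PER_TONNE


if __name__ == "__main__":
    # Illustrative input, not study data: 4 kg from a 16 m^2 subplot.
    print(subplot_to_t_per_ha(4.0))  # 2.5
```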

2.4. Remote Sensing of Yields

2.4.1. Unmanned Aerial Vehicle Platform and Sensors

2.4.2. Image Pre-processing and Analysis

2.4.3. GNDVI from UAV Imagery
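GNDVI, the green normalized difference vegetation index, is conventionally computed per pixel as GNDVI = (NIR − Green) / (NIR + Green). This is the NDVI formula with the green band substituted for the red band. The tables report a "Max" and a "Mean" GNDVI for each subplot. A plausible reading, assumed here rather than stated in this section, is that these are the maximum and the mean of the pixel values falling inside each subplot.

The plain-Python sketch below computes both. The function names and band values are hypothetical. It also assumes the NIR and green bands are co-registered and radiometrically comparable.

```python
from statistics import fmean


def gndvi(nir: float, green: float) -> float:
    """Green NDVI for one pixel: (NIR - Green) / (NIR + Green)."""
    total = nir + green
    if total == 0:
        raise ZeroDivisionError("NIR + Green is zero, GNDVI is undefined")
    return (nir - green) / total


def subplot_gndvi_summary(nir_pixels, green_pixels):
    """Return (max, mean) GNDVI over the pixels inside one subplot.

    Hypothetical helper: pixels whose NIR + Green sum is zero (for example,
    nodata fill) are skipped rather than treated as vegetation.
    """
    if len(nir_pixels) != len(green_pixels):
        raise ValueError("band pixel lists must have the same length")
    values = [gndvi(n, g) for n, g in zip(nir_pixels, green_pixels) if n + g != 0]
    if not values:
        raise ValueError("subplot contains no valid pixels")
    return max(values), fmean(values)


if __name__ == "__main__":
    # Made-up digital numbers for three pixels; the last one is nodata (0, 0).
    nir = [120, 90, 0]
    green = [60, 60, 0]
    print(subplot_gndvi_summary(nir, green))  # max = 1/3, mean = 4/15
```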

2.5. Data Analysis

3. Results

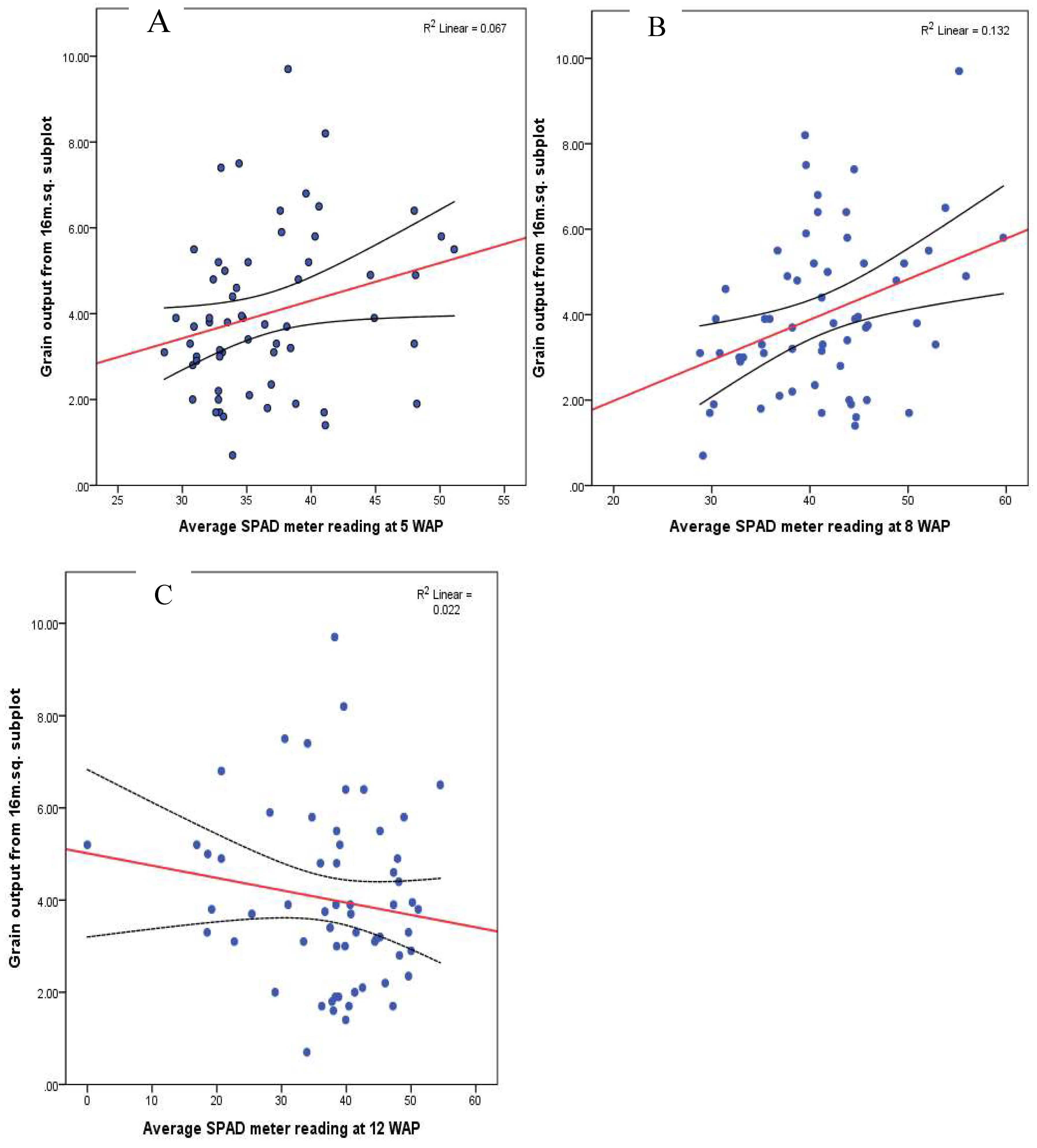

3.1. In-Field Measurements Results

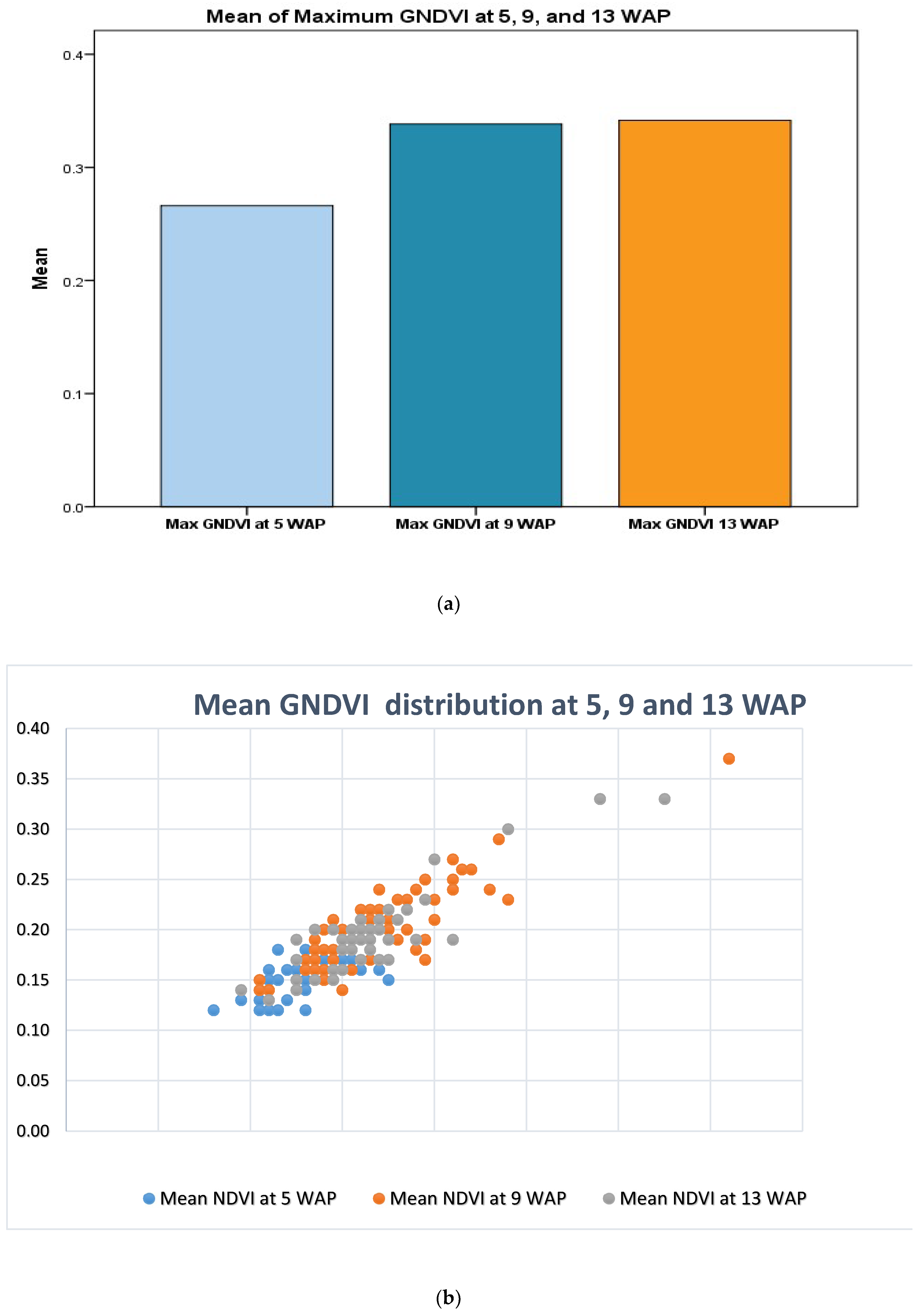

3.2. UAV GNDVI Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


SPAD Meter and Yields Correlations

| Variables | Statistic | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 1. Grain output from 16 m² subplot | Pearson correlation | 1 | | | |
| | Sig. (one-tailed) | | | | |
| | N | 60 | | | |
| 2. Average SPAD meter reading at 5 WAP | Pearson correlation | 0.259 * | 1 | | |
| | Sig. (one-tailed) | 0.023 | | | |
| | N | 60 | 87 | | |
| 3. Average SPAD meter reading at 9 WAP | Pearson correlation | 0.363 ** | 0.205 * | 1 | |
| | Sig. (one-tailed) | 0.002 | 0.028 | | |
| | N | 60 | 87 | 87 | |
| 4. Average SPAD meter reading at 13 WAP | Pearson correlation | −0.150 | −0.119 | −0.120 | 1 |
| | Sig. (one-tailed) | 0.127 | 0.137 | 0.134 | |
| | N | 60 | 87 | 87 | 87 |

Significance markers: * p < 0.05; ** p < 0.01 (one-tailed). WAP: weeks after planting.
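The Sig. values in these correlation tables are one-tailed. The usual test of zero correlation works as follows. A Pearson coefficient r computed from N pairs gives t = r·√((N − 2)/(1 − r²)) with N − 2 degrees of freedom. The one-tailed p-value is the upper-tail probability of |t|.

The standard-library sketch below computes r and that p-value. It uses hypothetical function names and is not necessarily the software the authors used. Instead of calling a statistics package, it integrates the Student t density with Simpson's rule. The tabulated r values are rounded to three decimals, so recomputed p-values may differ from the table in the last digit.

```python
import math


def pearson_r(x, y):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def one_tailed_p(r, n, steps=2000):
    """One-tailed p-value for H0: rho = 0, in the direction of the observed r."""
    if n < 3:
        raise ValueError("need at least 3 pairs")
    if abs(r) >= 1:
        return 0.0
    df = n - 2
    t = abs(r) * math.sqrt(df / (1 - r * r))
    # P(T > t) = 0.5 - integral of the density from 0 to t (Simpson's rule, even steps).
    h = t / steps
    area = t_pdf(0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - area * h / 3


if __name__ == "__main__":
    # Grain output vs SPAD at 5, 9 and 13 WAP (N = 60); compare with the
    # tabulated one-tailed Sig. values 0.023, 0.002 and 0.127.
    for r in (0.259, 0.363, -0.150):
        print(r, round(one_tailed_p(r, 60), 3))
```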

Bivariate Correlation Analyses between In-Field Measures

| Variables | Statistic | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 1. Grain output from 16 m² subplot | Pearson correlation | 1 | | | |
| | Sig. (one-tailed) | | | | |
| | N | 60 | | | |
| 2. Crop vigor score at 5 WAP | Pearson correlation | −0.140 | 1 | | |
| | Sig. (one-tailed) | 0.142 | | | |
| | N | 60 | 87 | | |
| 3. Crop vigor score at 9 WAP | Pearson correlation | 0.122 | 0.673 ** | 1 | |
| | Sig. (one-tailed) | 0.177 | 0.000 | | |
| | N | 60 | 87 | 87 | |
| 4. Crop vigor score at 13 WAP | Pearson correlation | 0.226 * | 0.444 ** | 0.516 ** | 1 |
| | Sig. (one-tailed) | 0.041 | 0.000 | 0.000 | |
| | N | 60 | 87 | 87 | 87 |

Significance markers: * p < 0.05; ** p < 0.01 (one-tailed).

Descriptive Statistics

| Variable | N | Range | Minimum | Maximum | Mean | Std. Deviation |
|---|---|---|---|---|---|---|
| Max GNDVI at 5 WAP | 58 | 0.19 | 0.16 | 0.35 | 0.2609 | 0.03845 |
| Max GNDVI at 9 WAP | 74 | 0.51 | 0.21 | 0.72 | 0.3354 | 0.07406 |
| Max GNDVI at 13 WAP | 44 | 0.46 | 0.19 | 0.65 | 0.3302 | 0.08236 |
| Mean GNDVI at 5 WAP | 58 | 0.09 | 0.12 | 0.21 | 0.1541 | 0.02009 |
| Mean GNDVI at 9 WAP | 74 | 0.24 | 0.13 | 0.37 | 0.2014 | 0.03801 |
| Mean GNDVI at 13 WAP | 44 | 0.20 | 0.13 | 0.33 | 0.1950 | 0.04332 |
| Valid N (listwise) | 18 | | | | | |
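The descriptive statistics give a separate N for each GNDVI variable but a listwise valid N of only 18. The correlation tables use yet another N for each pair of variables. These counts follow from how missing values are handled:

- Listwise deletion keeps only subplots observed on every variable.
- Pairwise deletion uses, for each pair of variables, every subplot observed on both.

The small sketch below shows how the three counts diverge. It uses made-up records and hypothetical variable names, with None marking a subplot that has no value on that date.

```python
from itertools import combinations

# Made-up subplot records with hypothetical keys; None = no value on that date.
VARIABLES = ["gndvi_5wap", "gndvi_9wap", "gndvi_13wap"]
records = [
    {"gndvi_5wap": 0.15, "gndvi_9wap": 0.20, "gndvi_13wap": None},
    {"gndvi_5wap": 0.17, "gndvi_9wap": None, "gndvi_13wap": 0.19},
    {"gndvi_5wap": 0.14, "gndvi_9wap": 0.22, "gndvi_13wap": 0.21},
    {"gndvi_5wap": None, "gndvi_9wap": 0.18, "gndvi_13wap": 0.20},
]


def n_observed(rows, var):
    """N for one variable: rows where it is not missing."""
    return sum(row[var] is not None for row in rows)


def listwise_n(rows, variables):
    """Listwise deletion: rows observed on every variable."""
    return sum(all(row[v] is not None for v in variables) for row in rows)


def pairwise_n(rows, variables):
    """Pairwise deletion: for each pair of variables, rows observed on both."""
    return {
        (a, b): sum(row[a] is not None and row[b] is not None for row in rows)
        for a, b in combinations(variables, 2)
    }


if __name__ == "__main__":
    print({v: n_observed(records, v) for v in VARIABLES})  # each variable: N = 3
    print(pairwise_n(records, VARIABLES))                  # each pair: N = 2
    print(listwise_n(records, VARIABLES))                  # listwise: N = 1
```

The same logic explains why the listwise N in the table is far smaller than any single-variable N. Any subplot lacking a value on even one of the six variables drops out of the listwise count.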

Bivariate Correlation Analysis between Yields and UAV-Derived GNDVI Measures

| Variables | Statistic | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| 1. Grain output from 16 m² subplot | Pearson correlation | 1 | | | | | | |
| | Sig. (one-tailed) | | | | | | | |
| | N | 60 | | | | | | |
| 2. Max GNDVI at 5 WAP | Pearson correlation | 0.393 ** | 1 | | | | | |
| | Sig. (one-tailed) | 0.006 | | | | | | |
| | N | 41 | 58 | | | | | |
| 3. Max GNDVI at 9 WAP | Pearson correlation | 0.131 | 0.342 ** | 1 | | | | |
| | Sig. (one-tailed) | 0.182 | 0.006 | | | | | |
| | N | 50 | 53 | 74 | | | | |
| 4. Max GNDVI at 13 WAP | Pearson correlation | 0.123 | 0.359 * | 0.046 | 1 | | | |
| | Sig. (one-tailed) | 0.267 | 0.046 | 0.390 | | | | |
| | N | 28 | 23 | 39 | 44 | | | |
| 5. Mean GNDVI at 5 WAP | Pearson correlation | 0.372 ** | 0.645 ** | 0.584 ** | 0.369 * | 1 | | |
| | Sig. (one-tailed) | 0.008 | 0.000 | 0.000 | 0.042 | | | |
| | N | 41 | 58 | 53 | 23 | 58 | | |
| 6. Mean GNDVI at 9 WAP | Pearson correlation | 0.041 | 0.393 ** | 0.865 ** | −0.033 | 0.545 ** | 1 | |
| | Sig. (one-tailed) | 0.389 | 0.002 | 0.000 | 0.421 | 0.000 | | |
| | N | 50 | 53 | 74 | 39 | 53 | 74 | |
| 7. Mean GNDVI at 13 WAP | Pearson correlation | 0.156 | 0.411 * | 0.076 | 0.893 ** | 0.401 * | 0.010 | 1 |
| | Sig. (one-tailed) | 0.213 | 0.026 | 0.323 | 0.000 | 0.029 | 0.476 | |
| | N | 28 | 23 | 39 | 44 | 23 | 39 | 44 |

Significance markers: * p < 0.05; ** p < 0.01 (one-tailed).

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wahab, I.; Hall, O.; Jirström, M. Remote Sensing of Yields: Application of UAV Imagery-Derived NDVI for Estimating Maize Vigor and Yields in Complex Farming Systems in Sub-Saharan Africa. Drones 2018, 2, 28. https://doi.org/10.3390/drones2030028